Abstract

Fall risk assessment needs contemporary approaches based on habitual data. Currently, inertial measurement unit (IMU)-based wearables are used to quantify free-living spatio-temporal gait characteristics to inform mobility assessment. Typically, fluctuation in those characteristics is taken to imply an increased fall risk. However, current approaches with IMUs alone remain limited, as there are no contextual data to comprehensively determine if underlying mechanistic (intrinsic) or environmental (extrinsic) factors impact mobility and, therefore, fall risk. Here, a case study is used to explore and discuss how contemporary video-based wearables could be used to supplement arising mobility-based IMU gait data to better inform habitual fall risk assessment. A single stroke survivor was recruited, and he conducted a series of mobility tasks in a lab and beyond while wearing video-based glasses and a single IMU. The latter generated topical gait characteristics that were discussed according to current research practices. Although current IMU-based approaches are beginning to provide habitual data, they remain limited. Given the plethora of extrinsic factors that may influence mobility-based gait, there is a need to corroborate IMUs with video data to comprehensively inform fall risk assessment. Use of artificial intelligence (AI)-based computer vision approaches could drastically aid the processing of video data in a timely and ethical manner. Many off-the-shelf AI tools exist to aid this current need and provide a means to automate contextual analysis to better inform mobility from IMU gait data for an individualized and contemporary approach to habitual fall risk assessment.

1. Introduction

Neurological conditions impair cognition and mobility, which can lead to an increased risk of falls. Approximately 60% of people with a neurological condition such as Parkinson’s disease report at least one fall per year, with 39% reporting recurrent falls [1,2]. Additionally, stroke survivors fall often in the early period after discharge, with >70% experiencing at least one fall within 6 months [3]. To date, a limitation of any fall risk assessment strategy is that it is not tracked in a real-world environment (i.e., remote/free-living/habitual), and there are no objective markers to understand this on an individual level. However, one contemporary mode of investigation is the use of wearable technologies to personalise fall risk assessment for use within habitual settings [4]. Current trends in wearable research align to the nuanced insights from a detailed mobility analysis to tailor fall prevention strategies to the individual [5].

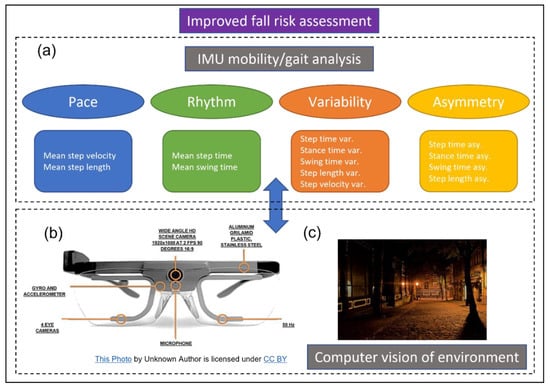

Specifically, wearable inertial measurement units (IMU) consist of an accelerometer and gyroscope capable of high resolution (e.g., 100 Hz) data capture and are at the forefront of capturing clinically relevant habitual mobility-based data to better inform fall risk assessment [6,7]. In addition to providing high-resolution data, these wearables are capable of big data capture due to the typical recording time periods (e.g., 7 days) and offer extreme portability (e.g., worn on the person, under clothing). Typically, wearable IMUs can robustly quantify spatio-temporal gait characteristics (Figure 1a), providing valuable mobility data that offer some insight into an individual’s habitual fall risk [8]. For example, unstable/unsafe alterations in mobility manifested through high/abnormal gait characteristics can be represented by an increased step time variability [9]. If that is quantified in a supervised setting (e.g., clinic/laboratory) under the examination of a trained clinician, well informed assumptions and suggestions can be made to help reduce gait variability, tempering unsafe mobility alterations to reduce fall risk [10]. Although the same mobility-based gait characteristic can be quantified beyond the lab, the current limitation is that there is no contextual information to derive an informed assumption and more appropriate suggestion(s) to help reduce habitual fall risk [11]. For example, IMU-based high step time variability captured beyond the lab in a stroke survivor could be due to an intrinsic (e.g., disruption of neural pathways in the motor cortex) or extrinsic factor (e.g., uneven terrain). There is a need to augment habitual wearable IMU-based mobility assessment to better inform free-living fall risk.

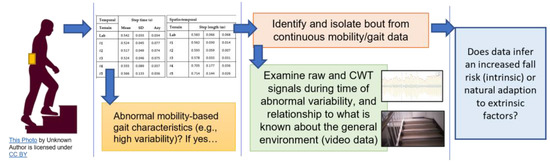

Figure 1.

The proposal for a more informed and improved fall risk assessment. (a) IMU mobility-based gait data are useful but lack contextual information. (b) As technology in wearable glasses becomes more advanced, they could be a viable option to provide more routine video capture to augment (and better inform) IMU data captured for mobility-based gait analysis. (c) Video data could provide absolute clarity on environment and why e.g., high (step time) variability may occur such as on uneven pavement in a poorly lit setting.

Studies have begun to address this limitation using wearable cameras [12]. To date, methodologies have included utilising generic commercial cameras (e.g., GoPro®, https://gopro.com/en/gb (accessed on 17 December 2022)) mounted at the waist and aimed downwards at the feet, which is not only less ergonomic and more obtrusive for the participant, but it also fails to provide wider environmental context. Other studies have utilised cameras mounted at the chest to provide wider context to the scenes (e.g., identification of upcoming hazards or obstacles); however, the limitation of having cumbersome and unnatural technology attached to a participant remains. Compounding the issues arising from the previous methodologies are the number of ethical concerns and general procedural problems given that a researcher is required to manually review the video data. Ethically, having a researcher review and label hours’ worth of video showing a participant’s general view is problematic, as it can lead to scenarios such as a participant not turning the camera off during a private/sensitive moment like going to the toilet or having faces captured within the video image without consent [13]. Additionally, the process of manually reviewing a video is cumbersome, with review of an hour-long video typically taking many minutes/hours longer than the actual recording time [14].

Those challenges create opportunities for automation [14], using computer vision-based artificial intelligence (AI) algorithms that can synchronise both IMU and wearable video data whilst also annotating/labelling the context of the environments (e.g., indoor/outdoor, type of terrain). Therefore, this case study explores and discusses how wearable video-based data capture can be used to better inform mobility-based fall risk within free-living environments. Additionally, this case study proposes a plausible computer vision process for use on wearable video data to enable an automated approach for better IMU-based mobility analysis, leading to improved fall risk assessment. Here, this case study:

- Uses a contemporary and pragmatic video-based wearable to describe how context can better inform data from a lower back mounted IMU in fall risk assessment,

- Presents a suggested AI-based approach to automatically contextualise video data.

2. Materials and Methods

This preliminary investigation presents a single participant report to explore the role of a wearable camera to supplement mobility-based gait data captured by an IMU from within and beyond the laboratory. Pragmatic wearable technologies were worn by a participant, providing a detailed single case study to explore why use of video data is important, i.e., to better inform free-living mobility and habitual fall risk assessment. Here, IMU and video data were continuously captured from scripted mobility tasks within a university setting.

2.1. Participant

Ethical consent was granted by the Northumbria University Research Ethics Committee (REF: 21603, approval date: 11 February 2020). The single subject participant gave informed written consent before participating in this study. Testing took place at the Clinical Gait Laboratory and wider Coach Lane Campus, Northumbria University, Newcastle upon Tyne. The participant tested was an older adult (male, 72 years) stroke survivor who presented with hemiplegia, i.e., slightly reduced clarity of movement on his left side.

2.2. Protocol

The participant conducted a series of (single and dual task) mobility tasks, consisting of both indoor and outdoor walking. The participant walked unaided around a range of different environments throughout the campus on a range of terrains. Firstly, the participant performed two 2 min walks around a circuit in a controlled gait lab, followed by a long continuous walk around the university campus with a researcher. During all walks, the participant wore head-mounted camera-based glasses and an IMU mounted on the lower back (5th lumbar vertebra, L5). For the purposes of this work, only 30 s periods of video and corresponding IMU data from walks on contrasting terrain types were used.

For lab-based dual tasking, the participant was tested at his maximum forward digit span, which was determined while sitting, and defined as the longest digit span the participant could successfully recall in two of three attempts. Digit strings consisted of pre-ordered numbers read aloud by a researcher. For the dual-task condition, the participant listened to digit strings while walking (for 2 min) and repeated them back.

2.3. Wearable Camera

Typically, wearable technologies are designed to integrate into the wearer’s daily life. That is routinely evidenced through wearable fitness trackers [15]. Yet, there are many sensing technologies that must be reimagined for routine adoption in daily life to overcome any stigma associated with their use [16]. One such technology is the camera, which could provide a range of useful real-world data pertaining to fall risk, e.g., absolute clarity on how terrain negatively impacts mobility. Contemporary options exist such that video-based sensing could be routinely integrated into everyday life by continuous use through camera-based glasses (Figure 1). One example is the Invisible device proposed by Pupil Labs (https://pupil-labs.com/products/invisible/ (accessed on 19 December 2022)). Here, this study utilises similar technology to propose a contemporary approach for wearable camera deployment.

The glasses used in this preliminary study were the Tobii Pro 2 (Tobii, https://corporate.tobii.com/ (accessed on 19 December 2022)), which are capable of capturing high definition video at 1920 × 1080 pixels (px) at 30 frames/second (fps). Specifically, the glasses comprise two cameras: one facing outward from the frame to capture the environment where the wearer is looking, as well as an inward-facing infrared camera (with accompanying infrared light emitting diodes) to capture eye position. Accordingly, these glasses provide an overlaid crosshair to display eye location on the resulting (outward facing) video. This resulting crosshair provides exact clarity regarding the location of the participant’s visual focus throughout the entire video, allowing for the correlation of events with the participant’s gaze at the time. For the purposes of this preliminary study, only data from the outward-facing camera will be explored (Figure 1).

2.4. Wearable IMU

The field of mobility-based gait analysis is readily adopting the use of IMUs due to their more affordable cost (compared to e.g., instrumented walkways) and unobtrusiveness while capturing data in a range of environments [17]. Currently, there are a plethora of IMU devices used for mobility-based gait analysis [18]. Here, the AX6 (Axivity, https://axivity.com/ (accessed on 19 December 2022)) was used and secured to the participant at L5 via a waist strap. Accelerometer signals were recorded at a sampling frequency of 100 Hertz (Hz), and the IMU was configured (16-bit resolution, ±8 g) prior to data collection. Data were downloaded to a laptop upon completion of recording and analysed via validated algorithms, described below.

2.5. IMU Algorithms and Mobility-Based Gait Characteristics

There are many IMU-based algorithms for mobility-based gait assessment specifically for use in neurological cohorts [19]. Generally, the field has aligned to the use of a single IMU on L5 and use of 14 spatio-temporal characteristics which map to four mobility-based gait domains [20], as shown in Figure 1. Many other characteristics can be generated, such as those from the frequency or time–frequency domain [11] but those remain limited for routine use in daily clinical practice due to inadequate clarity on how they could inform rehabilitation strategies [8].

Here, IMU data were segmented from the continuous stream of data captured during the duration of the mobility tasks by a previously described and validated approach [21]. In brief, the combined tri-axial inertial data and vertical acceleration (av) were used to identify possible moving and upright periods, respectively. Subsequently, those periods of interest were subjected to further investigation, whereby the identification of consecutive initial contact (IC) and final contact (FC) gait cycle events suggested periods of mobility-based gait activity and the required spatio-temporal characteristics, as shown in Figure 1. The following briefly describes IC/FC detection and mobility-based gait characteristics [22,23]:

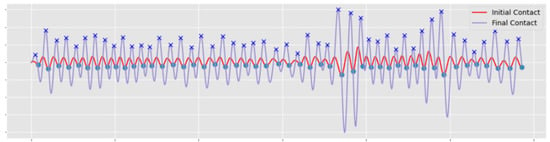

- A continuous wavelet transform (CWT) estimated IC and FC time events from av. First, av was integrated and then differentiated using a Gaussian CWT, and ICs were identified as the times of the minima. The differentiated signal underwent a further CWT differentiation, from which FCs were identified as the times of the maxima (Figure 2). From the sequence of IC/FC events produced within a gait cycle, temporal characteristics (e.g., step time) were generated.

Figure 2. Example wavelet outputs from the bouts of walking captured during level ground asphalt walking phase with minima and maxima (peaks i.e., ICs and FCs) identified from CWT-based signals.

- IC and FC values were used to calculate an array of times for: (i) step (time from ICRight to ICLeft to ICRight, etc.), (ii) stance (time between ICLeft and FCLeft, then ICRight and FCRight, etc.) and (iii) swing (time between FCLeft and ICLeft, then FCRight and ICRight, etc.) [24].

- The spatial characteristic of step length was estimated from the up/down movement of the participant’s centre of mass (CoM), close to L5. Movement in the vertical direction follows a circular trajectory and the changes in CoM height can be calculated (double integration of av) to produce step length from the inverted pendulum model [23]. A combination of step time and step length produces step velocity [24].
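The temporal and spatial steps listed above can be illustrated with a minimal sketch. It is not the validated algorithm of [22,23,24]: it assumes IC/FC event times have already been detected, that consecutive ICs alternate between feet, and that step length follows the commonly cited inverted pendulum form 2√(2lh − h²), where h is the vertical CoM excursion within a step and l is the pendulum length. The function names, event times and the values of h and l are hypothetical and chosen only for illustration.

```python
import math


def temporal_characteristics(ic, fc):
    """Step, stance and swing times (s) from ordered IC/FC event times.

    Assumes ic[k] < fc[k] < ic[k + 1], with consecutive ICs on opposite
    feet, so fc[k + 1] is the final contact of the foot that landed at ic[k].
    """
    n = min(len(ic) - 2, len(fc) - 1)  # number of complete strides
    # Step time: one initial contact to the next (opposite foot).
    step = [ic[k + 1] - ic[k] for k in range(len(ic) - 1)]
    # Stance time: initial contact to final contact of the same foot.
    stance = [fc[k + 1] - ic[k] for k in range(n)]
    # Swing time: final contact to the next initial contact of the same foot.
    swing = [ic[k + 2] - fc[k + 1] for k in range(n)]
    return step, stance, swing


def step_length(h, pendulum_length):
    """Inverted pendulum step length (m) from vertical CoM excursion h (m)."""
    return 2.0 * math.sqrt(2.0 * pendulum_length * h - h ** 2)


def step_velocity(length, time):
    """Step velocity (m/s) from step length (m) and step time (s)."""
    return length / time


# Illustrative (not measured) event times in seconds.
ic = [0.0, 0.5, 1.0, 1.5, 2.0]
fc = [0.1, 0.6, 1.1, 1.6]
step, stance, swing = temporal_characteristics(ic, fc)

# Hypothetical 3 cm CoM excursion and 0.9 m pendulum length.
length = step_length(h=0.03, pendulum_length=0.9)
velocity = step_velocity(length, step[0])
```

In this sketch each stance and swing pair sums to one stride, which is a quick sanity check on the event ordering assumption. The formula only holds for straight, level walking.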

2.6. Analysis

IMU-based data with bouts of walking such as navigating stairs or curbs were manually extracted and contrasted with other bouts by comparing the mobility-based gait characteristics. Firstly, the array of spatio-temporal characteristics were examined (Section 3.1). Then, to adopt a contemporary mobility assessment approach, the mean, variability and asymmetry of gait data were presented and discussed (Figure 1a). Variability was calculated as the standard deviation across all detected steps in every 30 s bout, while asymmetry was calculated as the absolute values of the difference between means of every other step (i.e., left, and right) within the same 30 s bout.
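As a hedged illustration of the two definitions above, the following sketch computes variability and asymmetry for one bout. It assumes the per-step values are ordered in time so that alternate entries belong to opposite feet, and it uses the sample standard deviation (the text does not state whether a sample or population estimate was used). The function names and step times are hypothetical.

```python
from statistics import mean, stdev


def variability(values):
    """Standard deviation across all detected steps in one bout."""
    return stdev(values)  # sample SD; an assumption, see lead-in


def asymmetry(values):
    """Absolute difference between the means of alternate steps."""
    first_foot = values[0::2]   # every other step, starting with the first
    second_foot = values[1::2]  # the interleaved steps of the opposite foot
    return abs(mean(first_foot) - mean(second_foot))


# Illustrative step times (s) for a short bout, alternating feet.
step_times = [0.50, 0.60, 0.50, 0.60, 0.50, 0.60]
bout_variability = variability(step_times)
bout_asymmetry = asymmetry(step_times)
```

Note that a consistent left/right difference, as in this toy bout, inflates the pooled standard deviation as well as the asymmetry value, so the two characteristics are not independent.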

3. Results and Discussion

The purpose of this case study was to propose and explore the approach of a contemporary wearable camera to supplement IMU-based mobility-based assessment to provide more insight for arising gait characteristics. It is proposed that the approach can provide a method for better free-living fall risk assessment.

Here, this paper presents a breakdown of extracted spatio-temporal mobility-based gait characteristics obtained from an IMU on the lower back, along with contextual environmental data obtained from concurrent videos gathered by wearable glasses. When analysed, it is proposed that video data can provide explanations to account for variations in the extracted mobility-based IMU gait characteristics, dependent on environment (indoor/outdoor) and the terrain the participant was navigating. Data are presented and discussed in the following sections by investigating mobility-based gait outcomes and CWT signals with contextualisation from use of video data.

3.1. IMU Mobility-Based Gait Data: Current Limitations and Future Considerations

Overall, when navigating level terrain (lab, #1 asphalt, #2 asphalt + paving and #3 paving alone), little to no observable difference can be identified across the range of extracted mobility-based temporal IMU gait characteristics within 30 s bouts (≈57 steps), as shown in Figure 3. Yet, some anomalies/peaks are observed in step length, as denoted by the red and black arrows in Figure 3. Current approaches of free-living mobility-based assessment based on IMU data alone fail to understand why those anomalies could exist and what causes the participant to have reduced and/or inflated step lengths. In short, there is a failure to comprehensively understand if (i) underlying intrinsic or (ii) the environment/terrains and associated extrinsic factors are influencing the participant’s mobility-based gait. The use of wearable video data enables a more insightful examination and understanding of the IMU-based characteristics. By augmenting mobility-based IMU gait characteristics with video data, the impact of extrinsic (and not necessarily intrinsic) factors on mobility emerge:

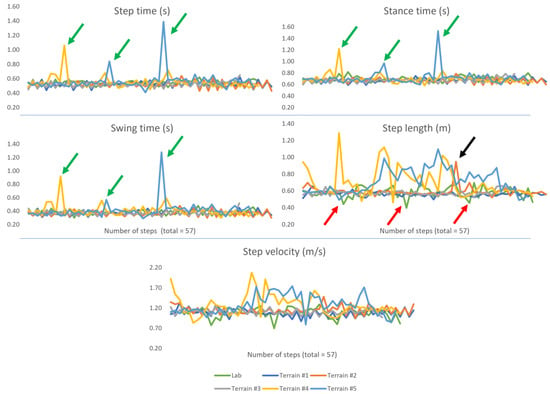

Figure 3.

Spatio-temporal mobility-based gait characteristics from 30 s (≈57 steps) bouts of walking across the numerous terrain types. Here, we observed the greatest anomalies in the characteristics on terrains #4 (stair ascent, yellow) and #5 (stair descent, light blue). Typically, the remaining terrains present characteristics in what may appear to be normal fluctuations. Of note, the step length and step velocity in the lab bout (green) show some altered fluctuations and may be attributed to the protocol i.e., a walk in a looped (non-linear) circuit.

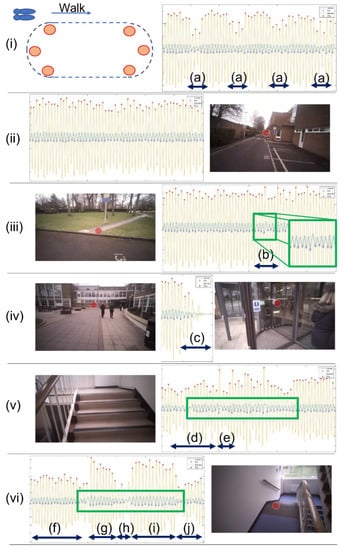

- The lab protocol consisted of a 2-min walk in a loop which, although it is a scripted task, is useful to examine. Step length characteristics show reduced measurements (Figure 3, red arrows) at periodical moments, which are explained by the reduced stepping distance as the participant rounded the ends of the curved path of the circuit (Figure 4i). Though the step length (inverted pendulum) algorithm is designed for straight level walking, it is sensitive enough to detect shorter step lengths during obtuse angled curved walking i.e., gradual turns compared to acute turns. Accordingly, the added context (video) is useful for understanding the reduced stepping length, which could help provide a better understanding of natural intrinsic considerations to extrinsic factors, such as purposefully adjusting direction of travel to round obstacles or other pedestrians. This is a simple example within the lab but the usefulness of examining step length is also evidenced beyond controlled conditions.

Figure 4. Walking bout IMU processed and video data. (i) 30 s (from continuous 2 min loop) within a lab, (a) denoting the turns at the end of the track, (ii) level walking on asphalt with no clear abnormality in the IMU data but walking was in a linear path (i.e., straight line), (iii) walk from asphalt to paving and a step identified by the participant (red dot), with a possible anomaly in IMU-based data from visual observation denoted by (b and green squares), which roughly equates to the time of a single step from asphalt to paving, (iv) walking on paving and (c) at the latter stages of the 30 s walk as the participant stops before entering a revolving door, (v) stair ascent, noticeable changes in the CWT-based data as the participant walks up steps (d) and turns left (e) on the landing, and (vi) noticeable changes in the CWT-based data from level walking (f), stair descent (g), short steps to turn to next flight (h), stair descent (i) and level walking (j). The green rectangle in (v,vi) highlighting the similar fluctuations, as observed in (iii), due to stepping.

- In Figure 3, step length is the one obvious anomaly from terrain #2 (black arrow), asphalt to paving (Figure 4iii). Interestingly, the increased step length (anomaly) occurred at the approximate time it was observed (from corresponding video), when the participant performed a step and transitioned from asphalt onto paving. This matches the corresponding CWT derived IMU-based signals of Figure 4iii, where there is a very subtle change in the 1st derivative of av (Figure 4iii-b). Generally, it could be that occasional anomalies in step length data equate to routine adjustments in the daily mobility of a participant undertaking a single step transitioning from one terrain to another. Equally, it could be a natural avoidance of an obstacle on the ground (i.e., stepping over or beyond) due to natural intrinsic/instinctive reactions.
Accordingly, it may be important to examine those step length anomalies arising from IMUs in accordance with video data to better understand the natural abilities of the participant to adjust and manage naturally occurring obstacles/hazards. The approach may help future areas of research aiming to refine IMU-based mobility-based gait data from continuous monitoring (e.g., 7-days) for targeted areas of investigation in fall risk assessment.

When more challenging terrains were identified (by video) and examined (i.e., #4 stair ascent and #5 descent) more significant fluctuations/changes are observed in all gait data, Figure 3. On those terrains, the (environmental) complexity produces extrinsic factors (e.g., numerous repeated steps in an upward or downward trajectory) resulting in some clear anomalies in temporal characteristics (step, stance, and swing times), Figure 3 (green arrows). Although the underlying CWT algorithm is not specifically designed to quantify mobility-based gait from stair ascent or descent, it generally appears to function no differently compared to temporal IMU data captured during level walking, giving the appearance that its end mobility-based gait characteristics are valid (ignoring the occasional anomaly). Perhaps this is due to its fundamental principle of identifying the low-frequency accelerations of the pelvic/L5 region, which may be similar during stair ambulation compared to level walking. Indeed, other research has shown that net moments of force (albeit) at the hip are the same for stair walking and level walking [25]. Additionally, Table 1 presents mean temporal data and there appears to be no major observable difference across all 5 terrains (other than swing time on terrain #2). Perhaps, the stairs (and possibly the use of visual cues such as black stripes to readily identify each step) may regulate/cue the participant, meaning temporal data on these terrains (#4 and #5) appear equal/similar to those obtained during level ground walking when, in fact, they should (fundamentally) not be treated as equal i.e., not included in the same analysis due to biomechanical differences needed for mobility on such contrasting terrains (i.e., level vs. stairs).

Table 1.

Initial assessment of mobility/gait characteristics and terrain types over different 30 s bouts, no context.

Discrepancies between level and stair terrains become more observable when utilising mean spatio-temporal as well as all variability and asymmetry characteristics, as shown in Table 1. Specifically, step length and velocity have more obvious anomalies on these terrains compared to others i.e., highly fluctuating characteristics compared to their equivalent data on level ground, as shown in Figure 3. Here, these CoM-derived characteristics are dependent on the inverted pendulum model, designed for use on linear and flat level walking only [23]. Accordingly, use of the model for step length and velocity characteristics is clearly not fit for purpose on terrains like stairs, and so their resulting values are (i) fundamentally incorrect and therefore (ii) misleading in terms of the participant’s true mobility-based gait. However, current approaches that do not use video data have no absolute clarity to determine why the discrepancy in spatio-temporal, variability and asymmetry mobility-based gait characteristics may occur from one mobility bout to another (i.e., from lab and terrains #1 to #3 compared to #4 and #5). Although, if the research focus is to broadly examine fall risk, then perhaps current IMU-based mobility-based gait data from L5 may remain a useful proxy (Section 3.3).

Increased variability within step-to-step events is often cited as the most impactful predictor of fall risk [26,27], and use of a single IMU on L5 is a common mechanism to quantify free-living gait. Table 1 shows variability characteristics, which display an interesting pattern. Although there may be no true IC/FC event from stair ambulation due to foot placement, the CWT algorithm produces (what seem like) plausible temporal characteristics due to the proxy nature of the algorithm (highlighted in previous section). Specifically, the algorithm infers times derived from underlying frequency variations due to IC/FC events [22] that are distal (feet) to the IMU site of attachment (L5) [6]. Regardless of the lack of fundamental accuracies of IC/FC in gait cycle, all quantified variability characteristics show changes from level to stair terrains. Broadly and perhaps expectedly, there seems to be a linear relationship between increasing terrain complexity and temporal gait characteristic variability (SD) from the lab to outdoor level terrain (asphalt, asphalt + paving and paving), to stairs (Table 1).

Interestingly, a linear relationship is not evident from variability and asymmetry spatio-temporal gait from the lab to outdoor level terrain (Table 1). It can be rationalised that the researcher impacted the participant’s normal walking style. This can be evidenced from video analysis where it was observed that the participant occasionally gazed at the researcher while engaging in conversation causing occasional moments of hesitancy and therefore, an altered stepping pattern. Here, the use of video data is particularly useful to help guide IMU characteristics in comparison to lab-based data. Additionally, variability greatly increased for stair ambulation (Table 1) due to the obvious biomechanical differences required and arising impact on the inverted pendulum model used to quantify step length and velocity. Again, use of video data clearly identified stair ambulation.

Although the use of the CWT and inverted pendulum algorithms may not be specifically designed for some terrains (Table 1) and are unlikely to produce valid data when considered within the gait cycle on those terrains, they seem to produce data that could be generally useful to broadly identify increased complexity within common mobility tasks. For example, the identification of time periods within continuous IMU data for when a participant data is abnormal could be useful to segment video data and determine if the cause is due to intrinsic or extrinsic factors, as shown in Figure 5. Accordingly, that approach may help streamline IMU and video processing while helping to better investigate fall risk that continues to be limited by assumptions needing to be made of intrinsic or extrinsic factors.
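One way the proposed streamlining could work is sketched below. It is only an illustration under stated assumptions, not a method used in this study: bouts are taken as consecutive 30 s windows, a bout is flagged when its step time variability exceeds a hypothetical multiple of a lab baseline, and IMU and video clocks are assumed to be synchronised up to a known offset. The 30 fps frame rate matches the glasses described in Section 2.3. The function names, threshold ratio and variability values are all hypothetical.

```python
def flag_bouts(bout_sd, baseline_sd, ratio=1.5):
    """Indices of bouts whose variability exceeds ratio x baseline.

    The ratio of 1.5 is an arbitrary illustrative threshold.
    """
    return [i for i, sd in enumerate(bout_sd) if sd > ratio * baseline_sd]


def bout_to_frames(bout_index, bout_len_s=30.0, fps=30, offset_s=0.0):
    """First and last video frame index covering one IMU bout.

    offset_s is the (assumed known) video start time relative to the IMU.
    """
    start_s = bout_index * bout_len_s - offset_s
    first = int(round(start_s * fps))
    last = int(round((start_s + bout_len_s) * fps)) - 1
    return first, last


# Illustrative step time SDs (s) for three consecutive 30 s bouts.
bout_sd = [0.020, 0.025, 0.080]
flagged = flag_bouts(bout_sd, baseline_sd=0.020)
frame_ranges = [bout_to_frames(i) for i in flagged]
```

Only the flagged frame ranges would then need to be reviewed, manually or by a computer vision model, to decide whether an intrinsic or extrinsic factor explains the abnormal IMU data.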

Figure 5.

Left to right, although common algorithms for spatio-temporal processing of data collected on L5 are not specifically designed for e.g., stair ambulation, they may still be useful tools to help inform fall risk when used with video data for a more rounded mobility assessment.

3.2. Other Observations

Based on current approaches (single IMU on L5), there is no absolute clarity to determine whether intrinsic or extrinsic factors are impacting mobility-based gait from the quantification of characteristics, as shown in Figure 4. Although data may remain somewhat useful to broadly infer assumptions on fall risk (e.g., higher gait variability in habitual settings compared to a lab), the continued use of a device at that anatomical location remains limited if the research focus is on the fundamentals of gait cycles alone, as no targeted interventions can be developed to suit the individual needs of a participant. Furthermore, there are many other naturally occurring extrinsic factors that impact mobility-based gait and increase fall risk. One example is pausing/stopping at a doorway (Figure 4iv), which has been shown to produce notable mechanistic impact on specific regions of the brain which may encode environmental cues to adapt mobility-based gait [28].

Doorways are well documented in Parkinson’s research, eliciting various stimuli that evoke abnormal gait characteristics, resulting in episodes of freezing [29]. In our example (Figure 4iv), the CWT signals begin to show a reduction in amplitude as the participant slows to firstly allow another pedestrian through the door before he advances. That routine event may occur often during a person’s community-based ambulation, but is important to isolate, to determine habitual mobility-based gait characteristics during these specific and challenging tasks which may seem to produce abnormal values indicative of increased falls risk. This is important, as arising characteristics are proposed as useful to explore underlying mechanisms to aid safe and effective mobility and therefore, reduce fall risk.

3.3. A Conceptual Model with Context

Although a general examination of mobility-based characteristics (Table 1) may be useful for an initial fall risk assessment with current IMU approaches, stratifying characteristics according to conceptual models (Figure 1) may be more helpful to better understand discrete cognitive functions [30] and the effectiveness of targeted interventions [31]. Table 2 stratifies all mobility-based gait characteristics according to a free-living conceptual model [20], the use of which may enable more sensitive methods to better identify the use of a targeted intervention [32], particularly when the associated environmental context is known.

Table 2.

Mobility/gait characteristics and terrain types over 30 s walk, with context.

Here, mobility-based gait characteristics are presented under single (quiet walking) and dual task (number recall) to compare with data from beyond the lab, where naturally occurring stimuli may often arise due to the uncontrolled environment, e.g., as noted, our participant engaged in conversation and glanced at the researcher. As might be expected from the lab data, in comparison to the single task, the dual task condition reduced the participant’s pace and increased his rhythm and variability, as shown in Table 2. No obvious pattern is evident when comparing lab-based single and dual task data to outdoor terrain. However, the video data show that terrains #1 to #3 were on level ground, with walks conducted in a linear manner (i.e., no curved deviations in comparison to the lab). In contrast, video data readily identified stairs (#4 and #5) with higher pace, rhythm and variability characteristics. Again, those data cannot be considered as valid (Section 3.1), but merely as a proxy to indicate a period of time within mobility-based IMU gait data that should be used in conjunction with video data to thoroughly assess fall risk.

3.4. Further Context and Next Steps

As broadly demonstrated, the use of wearable cameras could enable further insights beyond environment and terrain, capturing additional daily extrinsic factors, such as entering doorways, which may impact mobility-based gait and increase fall risk. Additionally, as described, our participant was accompanied by a researcher during his walk beyond the lab. The head-mounted video-based glasses showed that the participant turned his head many times during his walk to face the researcher while holding a conversation. If future studies are to continue using IMUs for mobility-based gait to inform fall risk, then accompanying contextual information also needs to be gathered, such as whether the participant is walking and talking with another person. Accordingly, due to any underlying functional physical limitation (e.g., hemiplegia) and an additional (distracting, dual tasking) cognitive task(s), the mobility-based IMU gait may be sufficiently altered to robustly inform fall risk. Indeed, the effect of distractions on situational awareness and the subsequent (negative) impact on gait is evidenced elsewhere [33,34,35], playing an important role in the control of mobility and, therefore, fall risk.

For this exploratory and preliminary case study examination, a manual approach was used to segment and assess video data. Here, this process was not overly time-consuming due to the scripted nature of the protocol and the use of validated IMU-based algorithms to segment associated mobility-based gait data. However, for pragmatic use of video data to augment IMU-based mobility data for better fall risk assessment, a more automated approach would be preferred. An automated, artificial intelligence (AI) approach would expedite the process while also potentially protecting the privacy of the participant. Next, one possible AI approach is presented.

3.5. Computer Vision-Based Algorithm

There are many approaches/algorithms that could be used to automatically analyse video arising from a wearable camera [36,37]. Here, the purpose is not to verify or validate any one algorithm, but rather to showcase the use of a computer vision approach to undertake the proposed work outlined. Typically, AI computer vision-based algorithms are needed to classify everyday environments, i.e., whether a participant is indoors or outdoors and on what terrain they are walking. Approaches typically involve convolutional neural networks (CNNs) developed using a Python-based deep learning library (e.g., PyTorch/TensorFlow) and either use mobile waist-mounted cameras aimed directly at a participant’s feet [38] or apply further pre-processing steps to the image stemming from head-mounted video glasses to extract and classify floor location [39]. For more nuanced classification of external factors affecting gait, object detection algorithms can be implemented.

Given the much wider application of generalised object detection algorithms, more off-the-shelf algorithms are widely available, such as the You Only Look Once (YOLO) series of algorithms [40,41,42] or generalised object segmentation algorithms [43,44]. Typically, these algorithms are available pretrained on widely available datasets such as ImageNet [45] or Common Objects in Context (COCO) [46], allowing for the detection of objects such as chairs, cars, people and other common obstacles. This allows obstacles within a participant’s path to be identified from field-of-view video data without the need for any large-scale model building or dataset labelling. One caveat to this approach is that, within fall risk assessment, areas of heightened interest would include instances in which a participant is navigating a curb or stairs or walking through a doorway, none of which can be detected using off-the-shelf algorithms trained on either ImageNet or COCO. To enable the algorithms to detect these instances, transfer learning may be the best approach: an off-the-shelf algorithm pretrained on either the ImageNet or COCO datasets is used, with the final classification layer mapping to the available classes replaced by a custom-defined layer mapping to the required classes [47,48,49] (curbs, doorways, people, stairs, etc.) that are identified as impactful in terms of their extrinsic effect on gait. While this would require more effort on the part of researchers, the outcomes would provide substantially more relevant context for the correlated signals stemming from the IMU devices.
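As a minimal sketch of how detections from a COCO-pretrained detector might be screened for obstacles in the walking path, the following Python example filters detections by class, confidence and overlap with a lower-central region of the frame. The `Detection` structure, the chosen obstacle classes, the region fractions and the confidence threshold are all illustrative assumptions, not part of the study protocol or of any specific detector's output format.

```python
from dataclasses import dataclass

# COCO class names treated here as potential obstacles (an illustrative choice).
OBSTACLE_CLASSES = {"person", "chair", "bench", "bicycle", "car", "dog"}


@dataclass
class Detection:
    label: str         # class name reported by the detector
    confidence: float  # detector score in [0, 1]
    box: tuple         # (x_min, y_min, x_max, y_max) in pixels


def path_region(frame_w, frame_h, width_frac=0.4, height_frac=0.5):
    """Return a box covering the lower-central part of the frame.

    Assumption: with a head-mounted camera, the walking path roughly
    occupies the lower-central field of view.
    """
    x_min = frame_w * (1 - width_frac) / 2
    x_max = frame_w * (1 + width_frac) / 2
    y_min = frame_h * (1 - height_frac)
    return (x_min, y_min, x_max, frame_h)


def boxes_overlap(a, b):
    """True if two (x_min, y_min, x_max, y_max) boxes intersect."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def obstacles_in_path(detections, frame_w, frame_h, min_conf=0.5):
    """Keep confident obstacle detections that overlap the path region."""
    region = path_region(frame_w, frame_h)
    return [
        d for d in detections
        if d.label in OBSTACLE_CLASSES
        and d.confidence >= min_conf
        and boxes_overlap(d.box, region)
    ]
```

Classes such as curbs, doorways and stairs would not appear in this filter, since, as noted above, they would first require a detector adapted through transfer learning.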

One key element of any contextual analysis identified from our preliminary examination was the distraction of the participant talking to the researcher while walking. That action caused the participant to turn his head and focus on the researcher, which may have been a cause of higher gait variability within the mobility-based IMU gait characteristics and, without context, could be misunderstood as an intrinsic factor. Using the aforementioned object detection AI algorithms, identification of people and, by extension, faces would allow this context to be linked to the signal and mobility-based gait characteristics, indicating that the participant was talking while walking. Furthermore, by identifying an individual in that context, the same AI could blur the face of the individual to protect their identity [50,51], addressing ethical concerns.
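To illustrate how video-derived context could be linked to IMU-derived walking bouts, the sketch below attaches the labels detected in video to each bout by time overlap. It assumes that the IMU and video streams share a synchronised clock and that bouts and detections are already available as simple tuples; the function name and data layout are hypothetical.

```python
def label_bouts_with_context(bouts, frame_events):
    """Attach video-derived context labels to IMU walking bouts.

    bouts: list of (start_s, end_s) tuples from an IMU bout-detection step.
    frame_events: list of (time_s, label) tuples, e.g. (12.4, "person").
    Returns one dict per bout with the sorted, de-duplicated labels
    observed between the bout's start and end times (inclusive).
    """
    annotated = []
    for start, end in bouts:
        labels = sorted(
            {label for t, label in frame_events if start <= t <= end}
        )
        annotated.append({"start_s": start, "end_s": end, "context": labels})
    return annotated


# Example: a person is visible during the first bout, stairs during the second.
bouts = [(0.0, 30.0), (40.0, 70.0)]
events = [(5.0, "person"), (10.0, "person"), (35.0, "doorway"), (45.0, "stairs")]
annotated_bouts = label_bouts_with_context(bouts, events)
```

A bout tagged with "person" could then be reviewed as a possible walking-and-talking period before its variability is attributed to an intrinsic factor.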

While the classification of currently navigated terrain is an important stepping stone to achieving complete environmental contextualisation, future work entails the implementation of further computer vision algorithms to extract potential hazards/obstacles within view, in conjunction with wearable eye-tracking devices, to better understand how participants navigate their environments. A specific focus will be detailing any obstacles discovered within a participant’s path and whether or not the participant had seen each obstacle, by correlating eye location with the obstacle’s position within the frame.
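One simple way to correlate eye location with an obstacle's position, sketched here under stated assumptions, is to test whether the gaze point falls inside (or near) the obstacle's bounding box for a minimum number of frames. The pixel margin, the frame threshold and the dictionary-based tracks are hypothetical choices, and gaze and box coordinates are assumed to be expressed in the same pixel frame.

```python
def gaze_hits_box(gaze_xy, box, margin_px=20):
    """True if the gaze point lies within the box, widened by a margin.

    gaze_xy: (x, y) gaze position in pixels.
    box: (x_min, y_min, x_max, y_max) obstacle bounding box in pixels.
    margin_px: tolerance for eye-tracker error (an assumed value).
    """
    x, y = gaze_xy
    x_min, y_min, x_max, y_max = box
    return (x_min - margin_px <= x <= x_max + margin_px
            and y_min - margin_px <= y <= y_max + margin_px)


def obstacle_was_seen(gaze_track, box_track, min_frames=3):
    """Decide whether an obstacle was looked at.

    gaze_track: {frame_index: (x, y)} gaze samples.
    box_track: {frame_index: box} positions of one tracked obstacle.
    The obstacle counts as seen if gaze hits its box in at least
    min_frames frames (the frames need not be consecutive).
    """
    hits = sum(
        1 for frame, box in box_track.items()
        if frame in gaze_track and gaze_hits_box(gaze_track[frame], box)
    )
    return hits >= min_frames
```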

3.6. Video-Based Challenges

A key challenge with the proposed computer vision approach is the quality of the camera provided by the wearable eye-tracking glasses. Given the small size required for the camera to fit ergonomically within the headset, the quality of the output video can be somewhat degraded. This is especially noticeable within poorly lit environments. However, camera quality can be expected to improve as hardware develops, allowing the same algorithm to be applied with more robust accuracy.

3.7. Ethical AI

Many ethical considerations arise when dealing with playback of wearable camera footage taken within free-living environments across a prolonged period. With the use of automated AI-based methods to handle processing of the video itself, many of the previous ethical concerns are alleviated. However, the ethics of the use and design of the AI itself must also be considered. Typically, ethical AI covers topics such as preventing bias in AI algorithms; however, within this study’s context, ethical AI represents removing sensitive information and scenarios from the viewable FPV-based scenes. This could be, for example, when a participant is viewing a bank statement while the cameras are active or during a sensitive event such as a participant using the toilet after forgetting to turn off the cameras. Future work within this area should involve the use of computer vision-based object detection algorithms to begin to remove any sensitive environments or information (including faces, above) from the view of any researchers reviewing the data.
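As a hedged illustration of removing sensitive content from view, the sketch below pixelates one bounding box (e.g., a detected face or document) in a grayscale frame. It uses plain nested lists so that it is self-contained; the function name, block size and frame representation are assumptions, and a practical pipeline would more likely rely on an image-processing library applied to colour video.

```python
def pixelate_region(frame, box, block=8):
    """Return a copy of a grayscale frame with one box pixelated.

    frame: list of rows, each a list of integer intensities.
    box: (x_min, y_min, x_max, y_max) in pixels, max values exclusive;
         assumed to lie within the frame.
    Each block x block tile inside the box is replaced by its
    integer mean, which removes fine detail within the box.
    """
    out = [row[:] for row in frame]  # leave the input frame untouched
    x_min, y_min, x_max, y_max = box
    for tile_y in range(y_min, y_max, block):
        for tile_x in range(x_min, x_max, block):
            ys = range(tile_y, min(tile_y + block, y_max))
            xs = range(tile_x, min(tile_x + block, x_max))
            total = sum(frame[y][x] for y in ys for x in xs)
            mean = total // (len(ys) * len(xs))
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out
```

Applying the function to every box returned by a face or document detector, before any frame is stored or displayed, would keep the unredacted footage away from reviewers.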

3.8. Enhanced Fall Risk Assessment

Falls can begin a cascade of financial, individual and social consequences [52]. At a time of an ageing population and increasing demands on health and social care providers, screening patients for fall risk and addressing risks has been shown to be cost-effective [52]. By providing automated context, the groundwork for the automated analysis of fall risk could be laid. Through use of an AI system, reliance on subjective opinions about whether areas of high gait variability are caused by intrinsic or extrinsic factors could be reduced by providing objective context on the terrain being traversed, along with whether the participant was, e.g., (i) within an indoor or outdoor environment, (ii) on a challenging terrain, (iii) with or without someone else or (iv) navigating a busy/complex environment. This offers the opportunity to better manage the risk of falling and to realise the benefits at an individual level.

4. Conclusions

This paper broadly discusses the current uses and limitations of a single IMU worn on the lower back, as used within the field to inform fall risk from mobility-based gait. Here, a single stroke survivor provided insightful data captured from various terrains within a lab and beyond. While some data derived from commonly used IMU-based algorithms seem somewhat useful for fall risk assessment, approaches remain limited due to a lack of comprehensive understanding of the context associated with mobility-based IMU gait characteristics. A thorough understanding of intrinsic and extrinsic factors may only be achieved by augmenting mobility-based IMU gait with a contemporary approach for video data capture, i.e., wearable video-based glasses. Many possible AI-based computer vision algorithms exist and should be investigated for their use to process contextual information ethically and efficiently from free-living environments to comprehensively inform fall risk assessment. The field of free-living fall risk assessment should consider the use of contemporary wearable video-based devices to better inform the current, limited approach of using IMU wearables only. Although the use of video raises notable ethical concerns, many current automated AI-based approaches using computer vision may uphold participant anonymity and privacy. By supplementing mobility-based IMU gait data with video, a more thorough understanding of fall risk in habitual/free-living environments can be obtained.

Author Contributions

Conceptualization, J.M. and A.G.; Methodology, J.M., Y.C. and A.G.; Formal analysis, J.M., Y.C. and A.G.; Investigation, J.M. and A.G.; Resources, J.M. and A.G.; Data curation, J.M. and Y.C.; Writing—original draft, J.M.; Writing—review & editing, J.M., S.S., P.M., Y.C., M.P., R.W. and A.G.; Supervision, S.S., R.W., P.M. and A.G.; Project administration, J.M., P.M., R.W., S.S. and A.G.; Funding acquisition, S.S., P.M. and A.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research is co-funded by a grant from the National Institute for Health Research (NIHR) Applied Research Collaboration (ARC) North-East and North Cumbria (NENC). This research is also co-funded as part of a PhD studentship (J.M.) by the Faculty of Engineering and Environment at Northumbria University. Additionally, work was supported in part by the Parkinson’s Foundation (PF-FBS-1898, PF-CRA-2073) (PI: S.S.).

Institutional Review Board Statement

Northumbria University Research Ethics Committee (Reference Number: 21603, approval date: 11 February 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

Data is available upon reasonable request.

Acknowledgments

The authors would like to thank the participant who took his time to complete this study.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Allen, N.E.; Schwarzel, A.K.; Canning, C.G. Recurrent falls in Parkinson’s disease: A systematic review. Park. Dis. 2013, 2013, 906274.

2. Pelicioni, P.H.; Menant, J.C.; Latt, M.D.; Lord, S.R. Falls in Parkinson’s disease subtypes: Risk factors, locations and circumstances. Int. J. Environ. Res. Public Health 2019, 16, 2216.

3. Goto, Y.; Otaka, Y.; Suzuki, K.; Inoue, S.; Kondo, K.; Shimizu, E. Incidence and circumstances of falls among community-dwelling ambulatory stroke survivors: A prospective study. Geriatr. Gerontol. Int. 2019, 19, 240–244.

4. Sejdić, E.; Godfrey, A.; McIlroy, W.; Montero-Odasso, M. Engineering human gait and the potential role of wearable sensors to monitor falls. In Falls and Cognition in Older Persons; Springer: Berlin/Heidelberg, Germany, 2020; pp. 401–426.

5. Danielsen, A.; Olofsen, H.; Bremdal, B.A. Increasing fall risk awareness using wearables: A fall risk awareness protocol. J. Biomed. Inform. 2016, 63, 184–194.

6. Godfrey, A.; Conway, R.; Meagher, D.; ÓLaighin, G. Direct measurement of human movement by accelerometry. Med. Eng. Phys. 2008, 30, 1364–1386.

7. Del Din, S.; Godfrey, A.; Mazzà, C.; Lord, S.; Rochester, L. Free-living monitoring of Parkinson’s disease: Lessons from the field. Mov. Disord. 2016, 31, 1293–1313.

8. Godfrey, A. Wearables for independent living in older adults: Gait and falls. Maturitas 2017, 100, 16–26.

9. Lord, S.; Galna, B.; Rochester, L. Moving forward on gait measurement: Toward a more refined approach. Mov. Disord. 2013, 28, 1534–1543.

10. Mactier, K.; Lord, S.; Godfrey, A.; Burn, D.; Rochester, L. The relationship between real world ambulatory activity and falls in incident Parkinson’s disease: Influence of classification scheme. Park. Relat. Disord. 2015, 21, 236–242.

11. Nouredanesh, M.; Godfrey, A.; Howcroft, J.; Lemaire, E.D.; Tung, J. Fall risk assessment in the wild: A critical examination of wearable sensor use in free-living conditions. Gait Posture 2021, 85, 178–190.

12. Nouredanesh, M.; Ojeda, L.; Alexander, N.B.; Godfrey, A.; Schwenk, M.; Melek, W.; Tung, J. Automated Detection of Older Adults’ Naturally-Occurring Compensatory Balance Reactions: Translation From Laboratory to Free-Living Conditions. IEEE J. Transl. Eng. Health Med. 2022, 10, 1–13.

13. Woolrych, R.; Zecevic, A.; Sixsmith, A.; Sims-Gould, J.; Feldman, F.; Chaudhury, H.; Symes, B.; Robinovitch, S.N. Using video capture to investigate the causes of falls in long-term care. Gerontologist 2015, 55, 483–494.

14. Bianco, S.; Ciocca, G.; Napoletano, P.; Schettini, R. An interactive tool for manual, semi-automatic and automatic video annotation. Comput. Vis. Image Underst. 2015, 131, 88–99.

15. Patel, J.; Lai, P.; Dormer, D.; Gullapelli, R.; Wu, H.; Jones, J.J. Comparison of Ease of Use and Comfort in Fitness Trackers for Participants Impaired by Parkinson’s Disease: An exploratory study. AMIA Summits Transl. Sci. Proc. 2021, 2021, 505.

16. Li, C.; Lee, C.-F.; Xu, S. Stigma Threat in Design for Older Adults: Exploring Design Factors that Induce Stigma Perception. Int. J. Des. 2020, 14, 51–64.

17. Celik, Y.; Stuart, S.; Woo, W.L.; Godfrey, A. Wearable Inertial Gait Algorithms: Impact of Wear Location and Environment in Healthy and Parkinson’s Populations. Sensors 2021, 21, 6476.

18. Vijayan, V.; Connolly, J.P.; Condell, J.; McKelvey, N.; Gardiner, P. Review of wearable devices and data collection considerations for connected health. Sensors 2021, 21, 5589.

19. Celik, Y.; Stuart, S.; Woo, W.L.; Godfrey, A. Gait analysis in neurological populations: Progression in the use of wearables. Med. Eng. Phys. 2021, 87, 9–29.

20. Morris, R.; Hickey, A.; Del Din, S.; Godfrey, A.; Lord, S.; Rochester, L. A model of free-living gait: A factor analysis in Parkinson’s disease. Gait Posture 2017, 52, 68–71.

21. Hickey, A.; Del Din, S.; Rochester, L.; Godfrey, A. Detecting free-living steps and walking bouts: Validating an algorithm for macro gait analysis. Physiol. Meas. 2016, 38, N1.

22. McCamley, J.; Donati, M.; Grimpampi, E.; Mazzà, C. An enhanced estimate of initial contact and final contact instants of time using lower trunk inertial sensor data. Gait Posture 2012, 36, 316–318.

23. Zijlstra, W.; Hof, A.L. Assessment of spatio-temporal gait parameters from trunk accelerations during human walking. Gait Posture 2003, 18, 1–10.

24. Godfrey, A.; Del Din, S.; Barry, G.; Mathers, J.; Rochester, L. Instrumenting gait with an accelerometer: A system and algorithm examination. Med. Eng. Phys. 2015, 37, 400–407.

25. Kirkwood, R.N.; Culham, E.G.; Costigan, P. Hip moments during level walking, stair climbing, and exercise in individuals aged 55 years or older. Phys. Ther. 1999, 79, 360–370.

26. Hausdorff, J.M.; Rios, D.A.; Edelberg, H.K. Gait variability and fall risk in community-living older adults: A 1-year prospective study. Arch. Phys. Med. Rehabil. 2001, 82, 1050–1056.

27. Moon, Y.; Wajda, D.A.; Motl, R.W.; Sosnoff, J.J. Stride-time variability and fall risk in persons with multiple sclerosis. Mult. Scler. Int. 2015, 2015, 964790.

28. Marchal, V.; Sellers, J.; Pélégrini-Issac, M.; Galléa, C.; Bertasi, E.; Valabrègue, R.; Lau, B.; Leboucher, P.; Bardinet, E.; Welter, M.-L. Deep brain activation patterns involved in virtual gait without and with a doorway: An fMRI study. PLoS ONE 2019, 14, e0223494.

29. Cowie, D.; Limousin, P.; Peters, A.; Day, B.L. Insights into the neural control of locomotion from walking through doorways in Parkinson’s disease. Neuropsychologia 2010, 48, 2750–2757.

30. Morris, R.; Lord, S.; Bunce, J.; Burn, D.; Rochester, L. Gait and cognition: Mapping the global and discrete relationships in ageing and neurodegenerative disease. Neurosci. Biobehav. Rev. 2016, 64, 326–345.

31. Veldema, J.; Gharabaghi, A. Non-invasive brain stimulation for improving gait, balance, and lower limbs motor function in stroke. J. NeuroEng. Rehabil. 2022, 19, 84.

32. Gonzalez-Hoelling, S.; Bertran-Noguer, C.; Reig-Garcia, G.; Suñer-Soler, R. Effects of a music-based rhythmic auditory stimulation on gait and balance in subacute stroke. Int. J. Environ. Res. Public Health 2021, 18, 2032.

33. Lim, J.; Amado, A.; Sheehan, L.; Van Emmerik, R.E. Dual task interference during walking: The effects of texting on situational awareness and gait stability. Gait Posture 2015, 42, 466–471.

34. Kressig, R.W.; Herrmann, F.R.; Grandjean, R.; Michel, J.-P.; Beauchet, O. Gait variability while dual-tasking: Fall predictor in older inpatients? Aging Clin. Exp. Res. 2008, 20, 123–130.

35. Plummer-D’Amato, P.; Altmann, L.J.; Saracino, D.; Fox, E.; Behrman, A.L.; Marsiske, M. Interactions between cognitive tasks and gait after stroke: A dual task study. Gait Posture 2008, 27, 683–688.

36. Pavlov, V.; Khryashchev, V.; Pavlov, E.; Shmaglit, L. Application for video analysis based on machine learning and computer vision algorithms. In Proceedings of the 14th Conference of Open Innovation Association FRUCT, Helsinki, Finland, 11–15 November 2013; IEEE: New York, NY, USA, 2013; pp. 90–100.

37. Nishani, E.; Çiço, B. Computer vision approaches based on deep learning and neural networks: Deep neural networks for video analysis of human pose estimation. In Proceedings of the 6th Mediterranean Conference on Embedded Computing (MECO), Bar, Montenegro, 11–15 June 2017; IEEE: New York, NY, USA, 2017; pp. 1–4.

38. Nouredanesh, M.; Godfrey, A.; Powell, D.; Tung, J. Egocentric vision-based detection of surfaces: Towards context-aware free-living digital biomarkers for gait and fall risk assessment. J. NeuroEng. Rehabil. 2022, 19, 79.

39. Moore, J.; Stuart, S.; Walker, R.; McMeekin, P.; Young, F.; Godfrey, A. Deep learning semantic segmentation for indoor terrain extraction: Toward better informing free-living wearable gait assessment. In Proceedings of the IEEE BHI-BSN Conference 2022, Ioannina, Greece, 27–30 September 2022.

40. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

41. Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

42. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

43. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

44. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

45. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: New York, NY, USA, 2009; pp. 248–255.

46. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

47. Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

48. Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 9.

49. Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

50. Zhou, J.; Pun, C.-M.; Tong, Y. Privacy-sensitive objects pixelation for live video streaming. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 3025–3033.

51. Zhou, J.; Pun, C.-M. Personal privacy protection via irrelevant faces tracking and pixelation in video live streaming. IEEE Trans. Inf. Secur. 2020, 16, 1088–1103.

52. Alipour, V.; Azami-Aghdash, S.; Rezapour, A.; Derakhshani, N.; Ghiasi, A.; Yusefzadeh, N.; Taghizade, S.; Amuzadeh, S. Cost-effectiveness of multifactorial interventions in preventing falls among elderly population: A systematic review. Bull. Emerg. Trauma 2021, 9, 159.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).