Hierarchical Vision Navigation System for Quadruped Robots with Foothold Adaptation Learning

Abstract

1. Introduction

- (1)

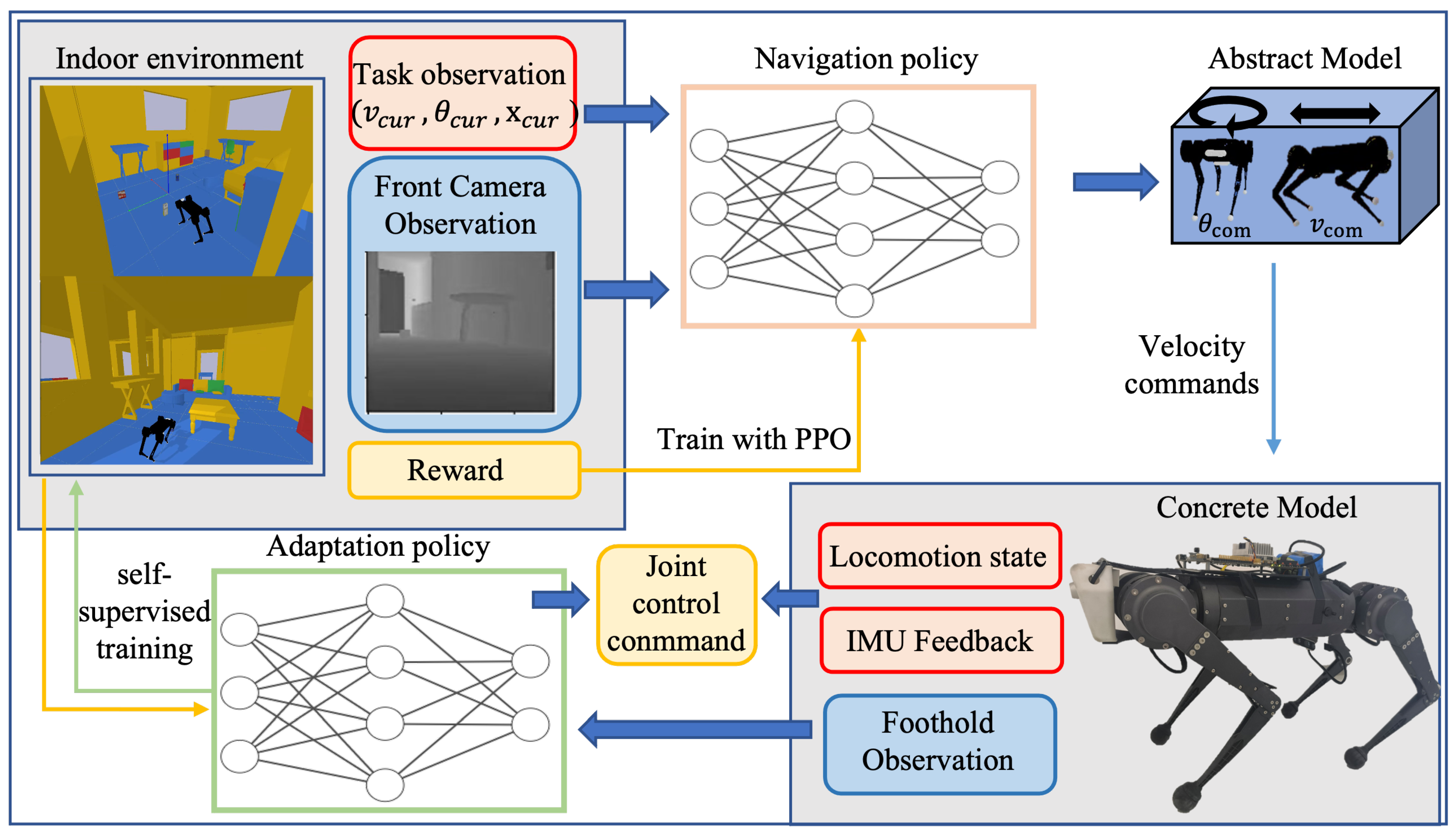

- The proposed hierarchical vision system combines high-level navigation policy with vision-based locomotion control, resulting in a data-efficient and concise embodied system with increased practical applicability for legged robots.

- (2)

- We design an auto-annotated supervised training approach for a foothold adaptation policy that optimizes a visual-based controller without complex reward designs or extensive training; the policy establishes a direct correlation between foot observation and successful obstacle avoidance.

2. Related Work

2.1. Vision Sensors for Robot Navigation

2.2. Quadruped Robots Navigation Policy

2.3. Quadruped Robots Foothold Adaptation

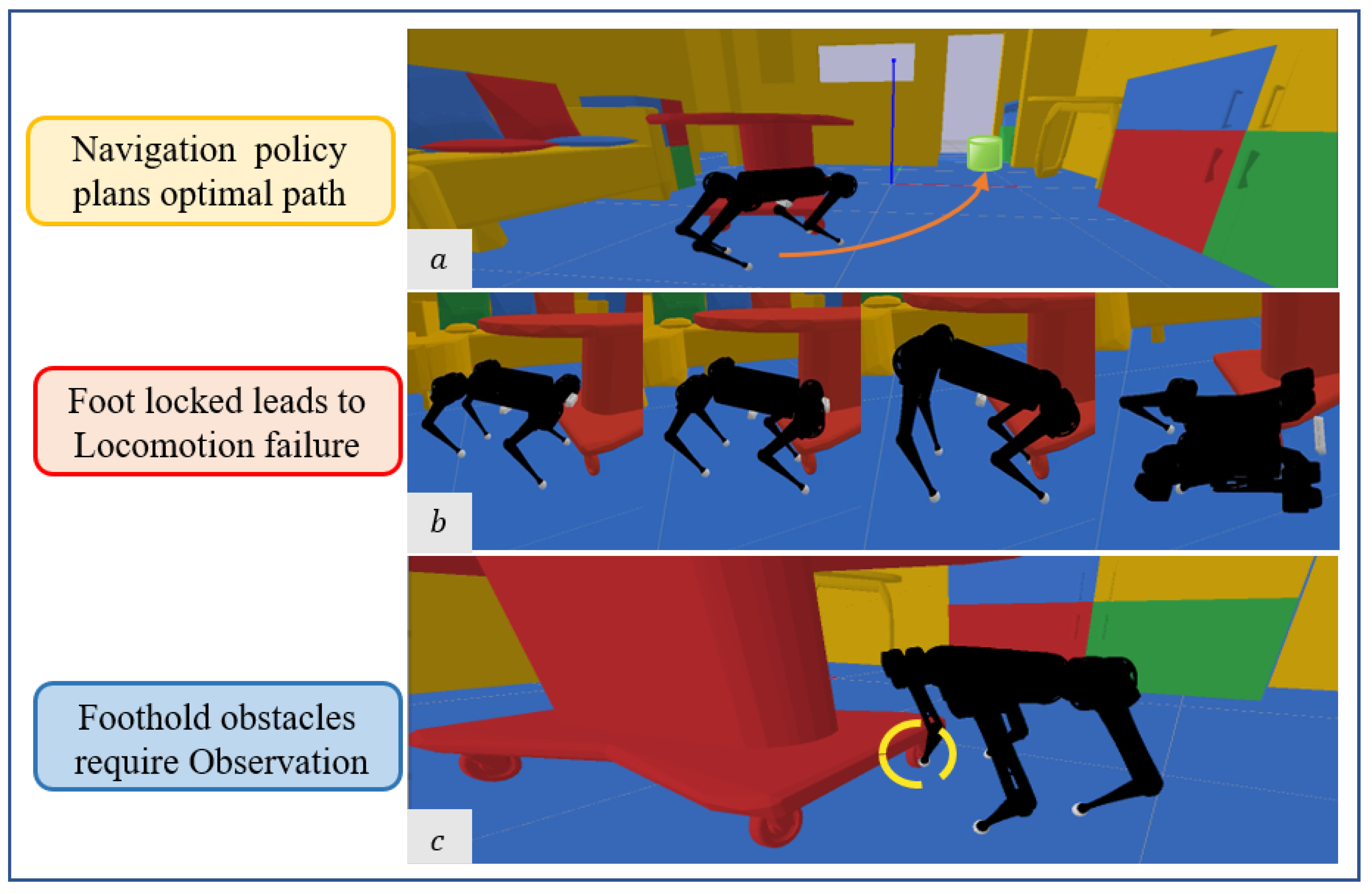

3. Hierarchical Vision Navigation System

3.1. Navigation Policy

3.2. Controller with Foothold Adaptation

4. Foothold Adaptation Learning

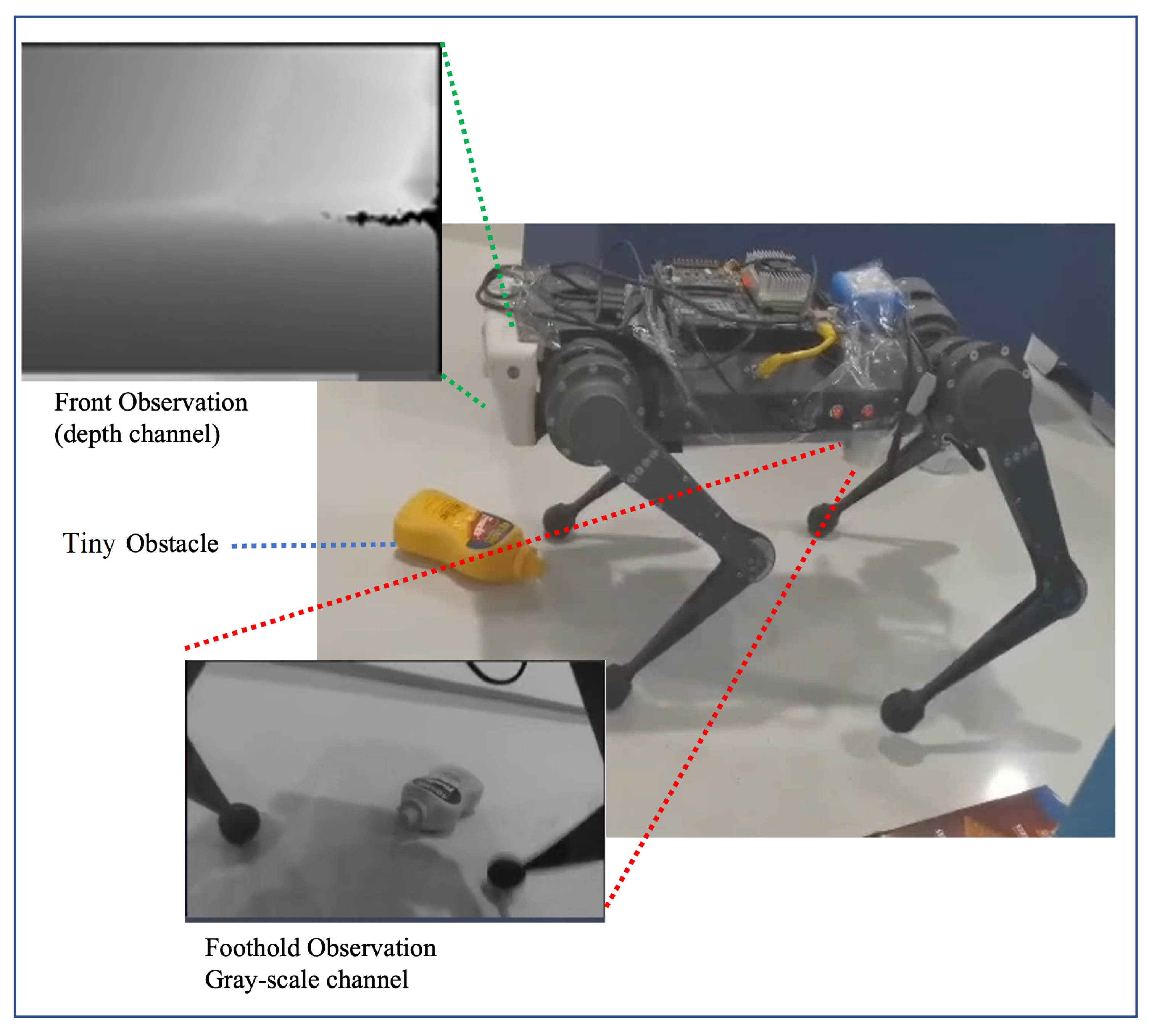

4.1. Observation System and Adaptation Action

4.2. Policy Learning

Algorithm 1 Adaptation Policy Routine

Require: adaptation action library, greedy factor
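The routine's inputs (an action library and a greedy factor) suggest a step that trades off greedy selection against exploration. The sketch below is one plausible reading, not the authors' algorithm: it assumes the greedy factor is the probability of taking the action that the current policy scores highest, with a uniformly random library action chosen otherwise. The function name `select_adaptation_action`, the `scores` input, and the example library entries are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Action = Tuple[float, float]  # hypothetical (dx, dy) foothold offset in metres


def select_adaptation_action(
    library: Sequence[Action],
    scores: List[float],
    greedy_factor: float,
    rng: random.Random,
) -> Action:
    """Pick one action from `library`.

    ASSUMPTION: with probability `greedy_factor` return the highest-scoring
    action; otherwise return a uniformly random one (exploration).
    """
    if len(library) != len(scores) or not library:
        raise ValueError("library and scores must be non-empty and aligned")
    if rng.random() < greedy_factor:
        best_index = max(range(len(scores)), key=scores.__getitem__)
        return library[best_index]
    return rng.choice(library)


# Hypothetical three-entry library and policy scores.
library = [(0.0, 0.0), (0.05, 0.0), (-0.05, 0.0)]
scores = [0.1, 0.7, 0.2]
rng = random.Random(0)
action = select_adaptation_action(library, scores, greedy_factor=0.75, rng=rng)
```

Passing the random generator explicitly keeps the selection reproducible, which is convenient when the chosen actions are later logged as training labels.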

4.3. Combination with Locomotion Controller

5. Experiment

5.1. Experimental Setup

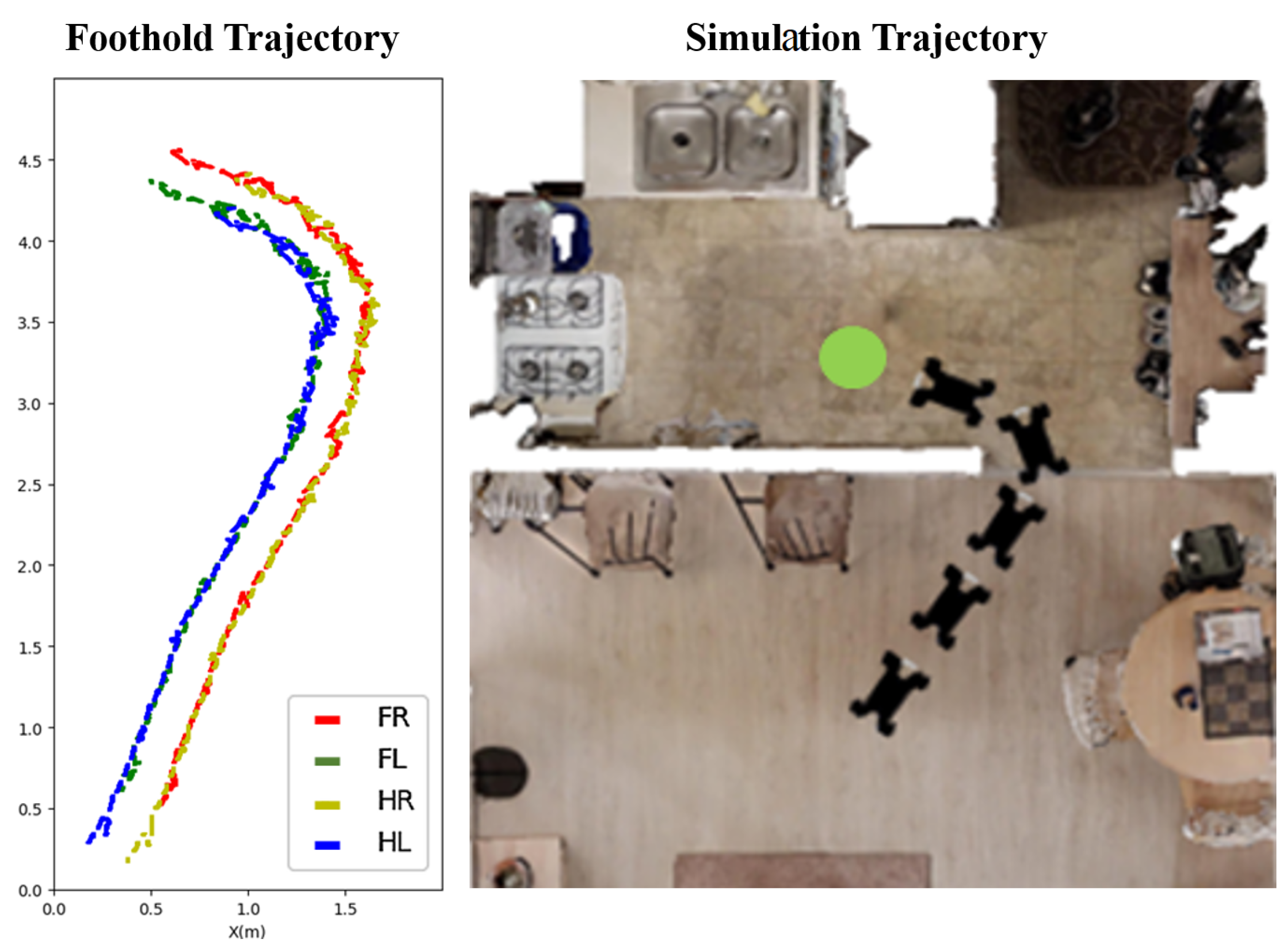

5.1.1. Simulation Setup

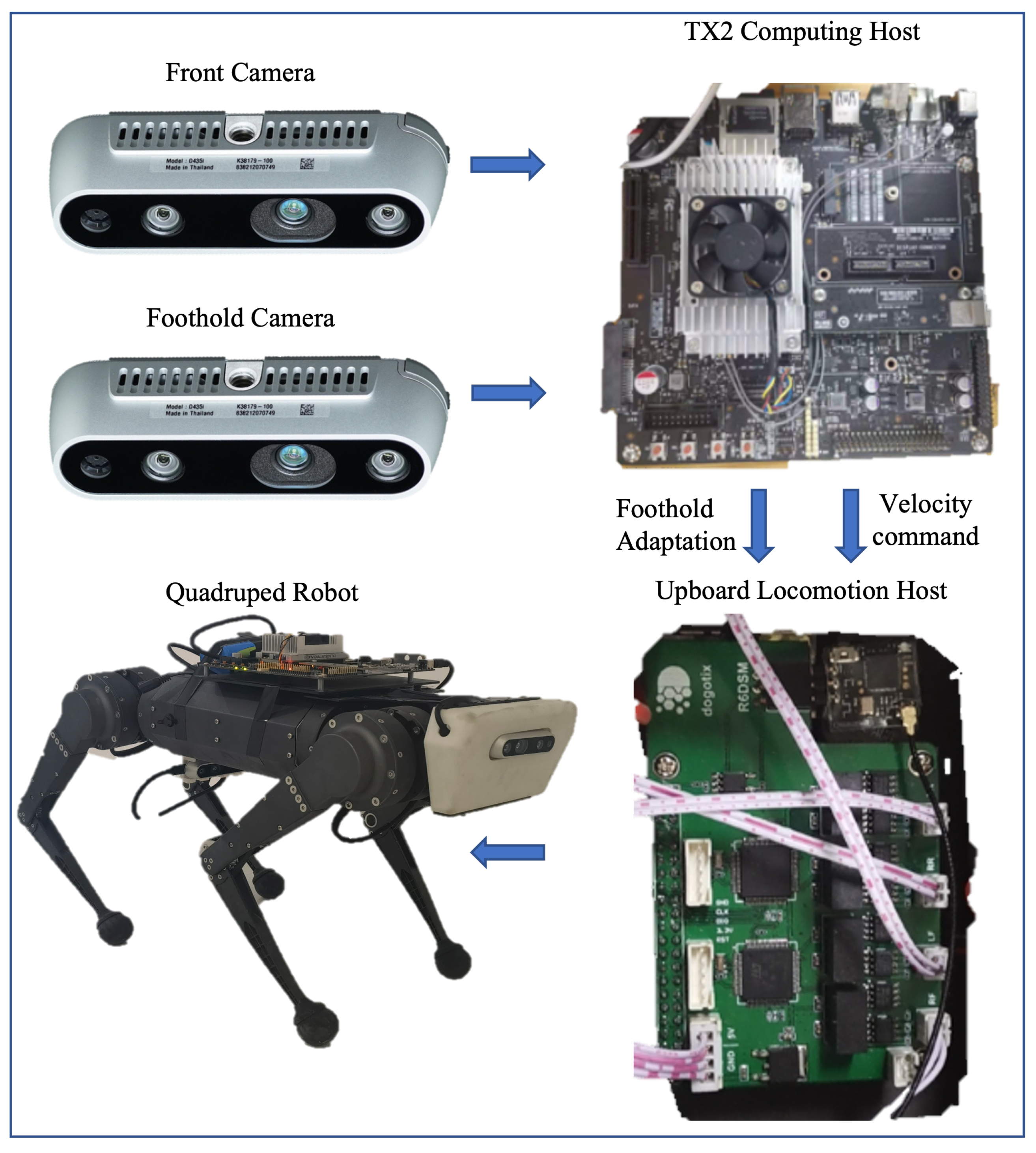

5.1.2. Physical Hardware

5.1.3. Baseline and Metrics

- (1) End-to-end navigation policy (Base-Nav) [46]: We deploy the navigation policy directly on the quadruped robot.

- (2) Heuristic foot placement policy (Heuristic) [36]: Among recent foothold adaptation methods that use potential search [16,36,37], we compare our method with [36] because it shares the same motion controllers as our approach. To implement this baseline, we construct a heightmap from the initial obstacle positions, and the robot autonomously chooses foothold positions with a height below 0.03 m.

- (3) RL-learned foothold adaptation policy (RL) [41]: We train a foothold adaptation policy using reinforcement learning as a baseline for comparison. Because our method also uses egocentric image input, we mainly replicate the RL method of [41] rather than [14]. In addition to the foothold observation, we incorporate the robot’s joint states, base velocity, and body height as inputs. For reward shaping, we incentivize speed following and penalize collisions with obstacles.
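As a rough illustration of the heuristic baseline, the following sketch picks, from a small grid heightmap, the cell nearest to a nominal foothold whose height is below the 0.03 m threshold quoted above. The grid layout, the function name `select_foothold`, and the search radius are illustrative assumptions, not details taken from [36].

```python
import math
from typing import List, Optional, Tuple

HEIGHT_THRESHOLD_M = 0.03  # threshold quoted in the text


def select_foothold(
    heightmap: List[List[float]],
    nominal: Tuple[int, int],
    max_radius: int = 3,
) -> Optional[Tuple[int, int]]:
    """Return the valid cell closest to `nominal`, or None if none is found.

    A cell is valid when its height is below HEIGHT_THRESHOLD_M.
    `heightmap[row][col]` holds heights in metres (assumed layout).
    """
    rows, cols = len(heightmap), len(heightmap[0])
    r0, c0 = nominal
    best, best_dist = None, math.inf
    for r in range(max(0, r0 - max_radius), min(rows, r0 + max_radius + 1)):
        for c in range(max(0, c0 - max_radius), min(cols, c0 + max_radius + 1)):
            if heightmap[r][c] >= HEIGHT_THRESHOLD_M:
                continue  # cell is occupied by an obstacle
            dist = math.hypot(r - r0, c - c0)
            if dist < best_dist:
                best, best_dist = (r, c), dist
    return best


# Toy heightmap: an obstacle 0.10 m tall occupies the centre cell.
toy_map = [
    [0.00, 0.00, 0.00],
    [0.00, 0.10, 0.00],
    [0.00, 0.00, 0.00],
]
chosen = select_foothold(toy_map, nominal=(1, 1))
```

Because the heightmap in this baseline is built from the initial obstacle positions, a search of this kind has no information about obstacles that move after the map is built.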

- (1) Success weighted by Path Length (SPL) [45], which evaluates the efficiency of the navigation (static target only).

- (2) Success Rate (Success), which indicates how reliably the proposed system completes the task. We consider a navigation successful when the robot reaches the target location within twice the agent’s width [45].

- (3) Crash Rate (Crashes), which represents the probability that the robot collides with the environment and the task fails as a result.

- (4) Average Collisions (Collisions), which is the average number of collision points between the robot and obstacles per step. This metric evaluates the obstacle avoidance capability of the navigation system.
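The four metrics can be computed from per-episode logs roughly as follows. SPL follows the definition in [45]: the mean over episodes of S_i · l_i / max(p_i, l_i), where S_i is the success indicator, l_i the shortest path length, and p_i the path length actually travelled. The `Episode` fields, the rule that a crashed episode cannot count as a success, and the pooling of collision points over all steps are assumptions made for this sketch.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Episode:
    final_dist_to_goal: float  # metres from robot to target at episode end
    shortest_path_len: float   # l_i: shortest start-to-goal path length
    actual_path_len: float     # p_i: path length the robot actually walked
    crashed: bool              # task failed because of a collision
    collision_points: int      # collision points summed over the episode
    steps: int                 # number of control steps in the episode


def is_success(ep: Episode, agent_width: float) -> bool:
    # Success radius of twice the agent's width, as stated in the text.
    # ASSUMPTION: a crashed episode never counts as a success.
    return (not ep.crashed) and ep.final_dist_to_goal <= 2.0 * agent_width


def evaluate(episodes: List[Episode], agent_width: float) -> Dict[str, float]:
    n = len(episodes)
    spl = 0.0
    for ep in episodes:
        if is_success(ep, agent_width):
            # SPL term: l_i / max(p_i, l_i), counted only on success.
            spl += ep.shortest_path_len / max(ep.actual_path_len, ep.shortest_path_len)
    total_steps = sum(ep.steps for ep in episodes)
    return {
        "SPL": spl / n,
        "Success": sum(is_success(ep, agent_width) for ep in episodes) / n,
        "Crashes": sum(ep.crashed for ep in episodes) / n,
        # ASSUMPTION: pooled over all steps of all episodes.
        "Collisions": sum(ep.collision_points for ep in episodes) / total_steps,
    }


# Two made-up episodes: one success on a detour, one crash.
episodes = [
    Episode(0.3, 5.0, 10.0, False, 10, 100),
    Episode(4.0, 5.0, 2.0, True, 30, 100),
]
metrics = evaluate(episodes, agent_width=0.3)
```

In this toy example the successful episode walks twice the shortest path, so it contributes 0.5 to the SPL sum, while the crashed episode contributes nothing.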

5.2. Implementation Details

5.2.1. Network Structure

5.2.2. Training Details

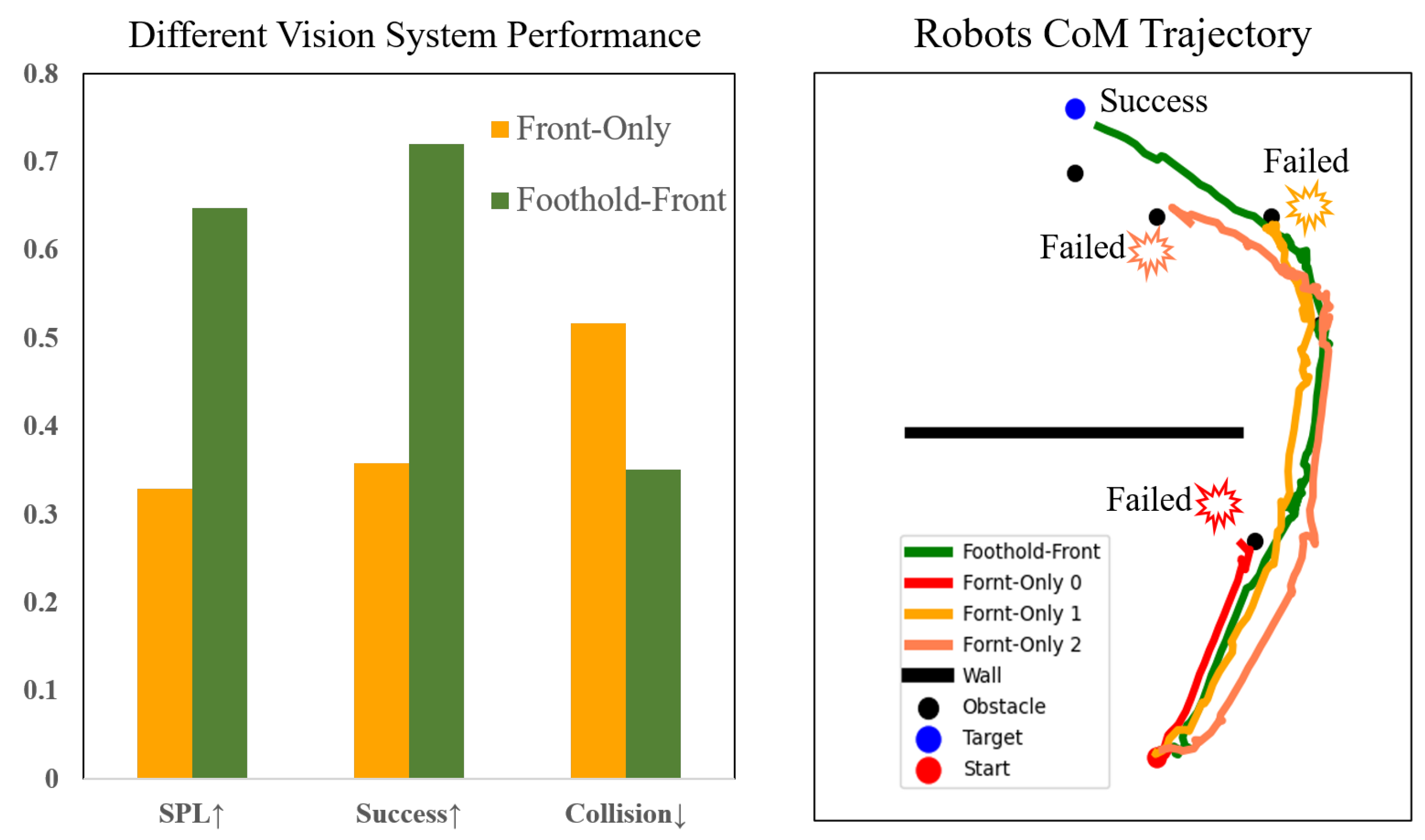

5.3. Simulation Experiments

5.3.1. Improvements with Hierarchical Vision System

5.3.2. Improvements with the Auto-Annotated Supervised Module

5.4. Real-World Experiments

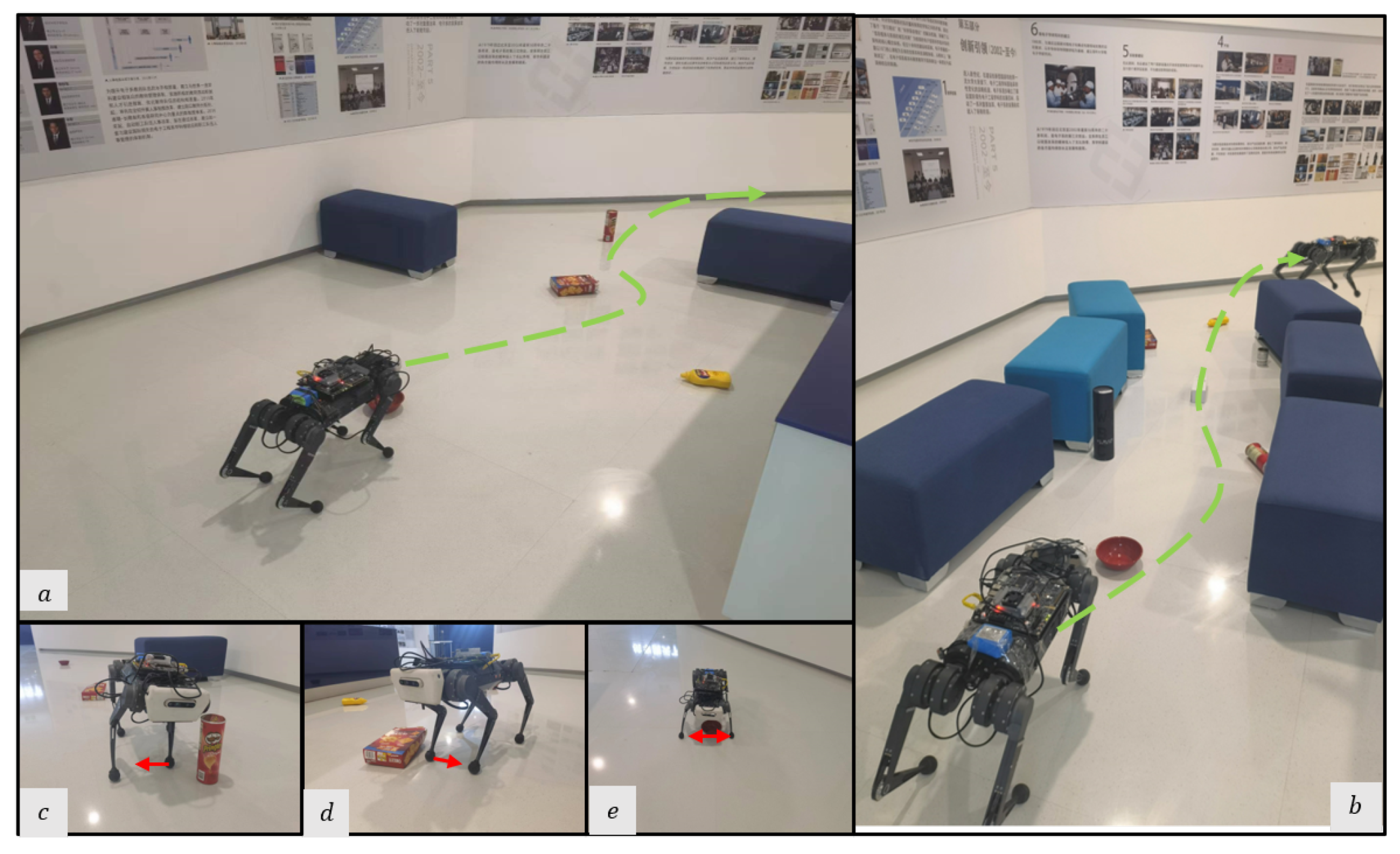

- (1)

- A cluttered exhibition hall (Figure 8) with static obstacles and target points [6 m, 2 m]. To mimic the obstacles in the simulation, we manually construct this scene in the real world. The obstacles include square sofas [0.8 m, 0.4 m, 0.4 m] and YCB objects with maximum size [0.25 m, 0.15 m, 0.1 m].

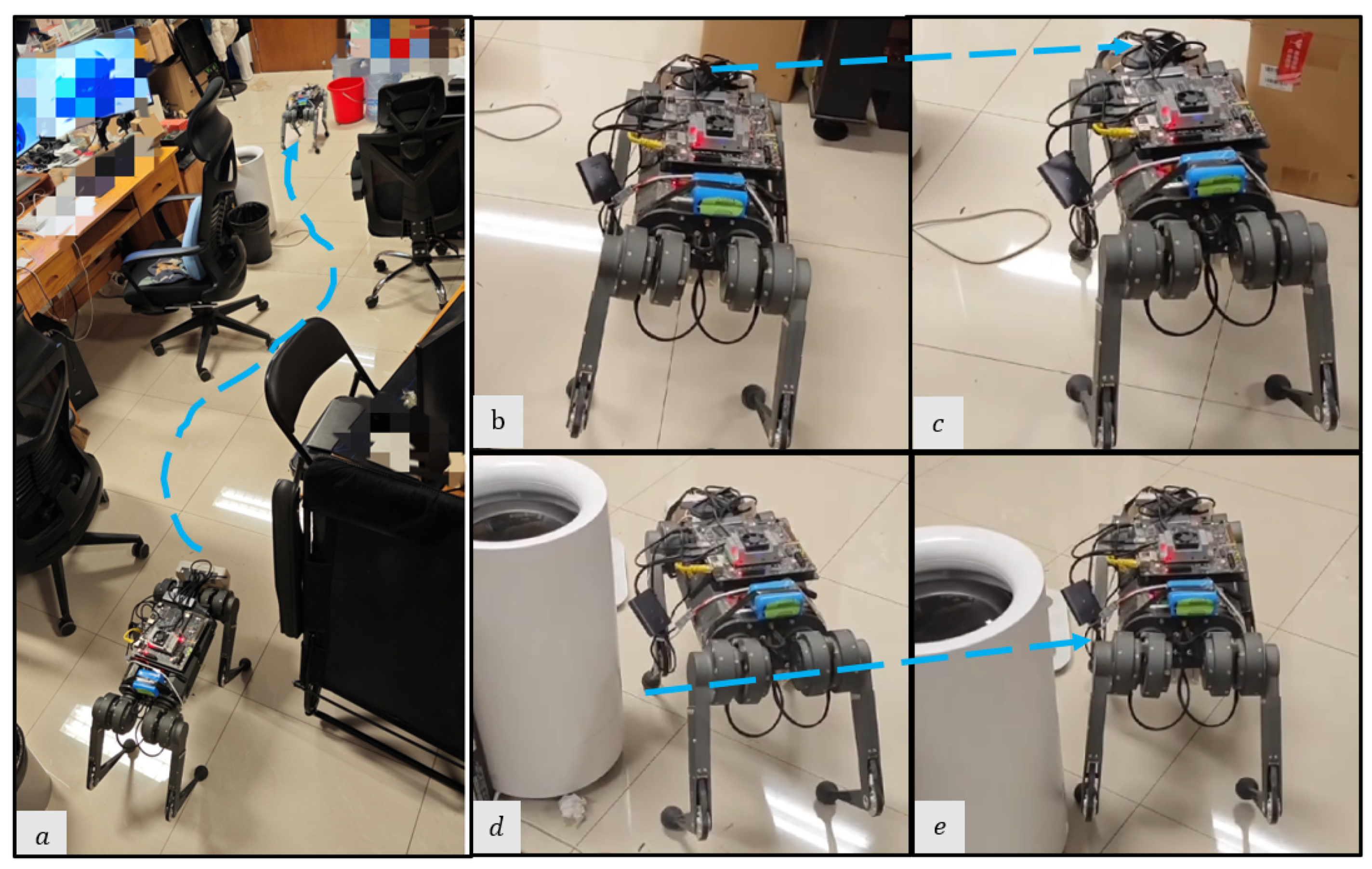

- (2)

- A static lab environment (Figure 9) with target point [6.5 m, 2 m]. The obstacles are common objects that are naturally placed in indoor scenes, including chairs, desks, the water dispenser, and so on.

- (3)

- A parlor (Figure 10) with target point [5 m, 3 m]. In addition to static obstacles (square sofas, chairs), we incorporate a dynamic obstacle, where a person walks from the side of the robot to its front at an approximate speed of 0.5 m/s. This setting allows us to evaluate the system’s performance in dynamic environments.

5.4.1. Sim-to-Real Transfer

5.4.2. Avoiding Static Obstacles

5.4.3. Avoiding Dynamic Obstacles

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Roscia, F.; Cumerlotti, A.; Del Prete, A.; Semini, C.; Focchi, M. Orientation Control System: Enhancing Aerial Maneuvers for Quadruped Robots. Sensors 2023, 23, 1234.

- [2] Semini, C. HyQ-Design and Development of a Hydraulically Actuated Quadruped Robot. Ph.D. Thesis, University of Genoa, Genoa, Italy, 2010.

- [3] Bonin-Font, F.; Ortiz, A.; Oliver, G. Visual navigation for mobile robots: A survey. J. Intell. Robot. Syst. 2008, 53, 263–296.

- [4] Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110.

- [5] Fuentes-Pacheco, J.; Ruiz-Ascencio, J.; Rendón-Mancha, J.M. Visual simultaneous localization and mapping: A survey. Artif. Intell. Rev. 2015, 43, 55–81.

- [6] Thrun, S. Probabilistic robotics. Commun. ACM 2002, 45, 52–57.

- [7] Bansal, S.; Tolani, V.; Gupta, S.; Malik, J.; Tomlin, C. Combining optimal control and learning for visual navigation in novel environments. In Proceedings of the Conference on Robot Learning, PMLR, Cambridge, MA, USA, 16–18 November 2020; pp. 420–429.

- [8] Truong, J.; Yarats, D.; Li, T.; Meier, F.; Chernova, S.; Batra, D.; Rai, A. Learning navigation skills for legged robots with learned robot embeddings. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 484–491.

- [9] Ravankar, A.; Ravankar, A.A.; Kobayashi, Y.; Hoshino, Y.; Peng, C.C. Path smoothing techniques in robot navigation: State-of-the-art, current and future challenges. Sensors 2018, 18, 3170.

- [10] Fahmi, S.; Mastalli, C.; Focchi, M.; Semini, C. Passive Whole-Body Control for Quadruped Robots: Experimental Validation Over Challenging Terrain. IEEE Robot. Autom. Lett. 2019, 4, 2553–2560.

- [11] Ding, Y.; Pandala, A.; Park, H.W. Real-time Model Predictive Control for Versatile Dynamic Motions in Quadrupedal Robots. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8484–8490.

- [12] Neunert, M.; Stäuble, M.; Giftthaler, M.; Bellicoso, C.D.; Carius, J.; Gehring, C.; Hutter, M.; Buchli, J. Whole-body nonlinear model predictive control through contacts for quadrupeds. IEEE Robot. Autom. Lett. 2018, 3, 1458–1465.

- [13] Miki, T.; Lee, J.; Hwangbo, J.; Wellhausen, L.; Koltun, V.; Hutter, M. Learning robust perceptive locomotion for quadrupedal robots in the wild. Sci. Robot. 2022, 7, eabk2822.

- [14] Gangapurwala, S.; Geisert, M.; Orsolino, R.; Fallon, M.; Havoutis, I. RLOC: Terrain-aware legged locomotion using reinforcement learning and optimal control. IEEE Trans. Robot. 2022, 38, 2908–2927.

- [15] Wellhausen, L.; Dosovitskiy, A.; Ranftl, R.; Walas, K.; Cadena, C.; Hutter, M. Where should I walk? Predicting terrain properties from images via self-supervised learning. IEEE Robot. Autom. Lett. 2019, 4, 1509–1516.

- [16] Fankhauser, P.; Bjelonic, M.; Bellicoso, C.D.; Miki, T.; Hutter, M. Robust rough-terrain locomotion with a quadrupedal robot. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 5761–5768.

- [17] Moreno, F.A.; Monroy, J.; Ruiz-Sarmiento, J.R.; Galindo, C.; Gonzalez-Jimenez, J. Automatic waypoint generation to improve robot navigation through narrow spaces. Sensors 2019, 20, 240.

- [18] Zhang, Y.; Zhou, Y.; Li, H.; Hao, H.; Chen, W.; Zhan, W. The Navigation System of a Logistics Inspection Robot Based on Multi-Sensor Fusion in a Complex Storage Environment. Sensors 2022, 22, 7794.

- [19] Li, Y.; Dai, S.; Shi, Y.; Zhao, L.; Ding, M. Navigation simulation of a Mecanum wheel mobile robot based on an improved A* algorithm in Unity3D. Sensors 2019, 19, 2976.

- [20] Ali, M.A.; Mailah, M. Path planning and control of mobile robot in road environments using sensor fusion and active force control. IEEE Trans. Veh. Technol. 2019, 68, 2176–2195.

- [21] Wang, S.; Zhang, H.; Wang, G. OMC-SLIO: Online Multiple Calibrations Spinning LiDAR Inertial Odometry. Sensors 2022, 23, 248.

- [22] Dudzik, T.; Chignoli, M.; Bledt, G.; Lim, B.; Miller, A.; Kim, D.; Kim, S. Robust autonomous navigation of a small-scale quadruped robot in real-world environments. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 3664–3671.

- [23] Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 834–849.

- [24] Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

- [25] Engel, J.; Koltun, V.; Cremers, D. Direct sparse odometry. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 611–625.

- [26] Díaz-Vilariño, L.; Khoshelham, K.; Martínez-Sánchez, J.; Arias, P. 3D modeling of building indoor spaces and closed doors from imagery and point clouds. Sensors 2015, 15, 3491–3512.

- [27] Pfeiffer, M.; Schaeuble, M.; Nieto, J.; Siegwart, R.; Cadena, C. From perception to decision: A data-driven approach to end-to-end motion planning for autonomous ground robots. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1527–1533.

- [28] Wijmans, E.; Kadian, A.; Morcos, A.; Lee, S.; Essa, I.; Parikh, D.; Savva, M.; Batra, D. DD-PPO: Learning near-perfect pointgoal navigators from 2.5 billion frames. arXiv 2019, arXiv:1911.00357.

- [29] Cetin, E.; Barrado, C.; Munoz, G.; Macias, M.; Pastor, E. Drone navigation and avoidance of obstacles through deep reinforcement learning. In Proceedings of the 2019 IEEE/AIAA 38th Digital Avionics Systems Conference (DASC), San Diego, CA, USA, 8–12 September 2019; pp. 1–7.

- [30] Pandey, A.; Pandey, S.; Parhi, D. Mobile robot navigation and obstacle avoidance techniques: A review. Int. Rob. Auto J. 2017, 2, 96–105.

- [31] Zhao, X.; Agrawal, H.; Batra, D.; Schwing, A.G. The surprising effectiveness of visual odometry techniques for embodied pointgoal navigation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 16127–16136.

- [32] Li, T.; Calandra, R.; Pathak, D.; Tian, Y.; Meier, F.; Rai, A. Planning in learned latent action spaces for generalizable legged locomotion. IEEE Robot. Autom. Lett. 2021, 6, 2682–2689.

- [33] Hoeller, D.; Wellhausen, L.; Farshidian, F.; Hutter, M. Learning a state representation and navigation in cluttered and dynamic environments. IEEE Robot. Autom. Lett. 2021, 6, 5081–5088.

- [34] Fu, Z.; Kumar, A.; Agarwal, A.; Qi, H.; Malik, J.; Pathak, D. Coupling vision and proprioception for navigation of legged robots. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17273–17283.

- [35] Kim, D.; Di Carlo, J.; Katz, B.; Bledt, G.; Kim, S. Highly dynamic quadruped locomotion via whole-body impulse control and model predictive control. arXiv 2019, arXiv:1909.06586.

- [36] Kim, D.; Carballo, D.; Di Carlo, J.; Katz, B.; Bledt, G.; Lim, B.; Kim, S. Vision Aided Dynamic Exploration of Unstructured Terrain with a Small-Scale Quadruped Robot. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 2464–2470.

- [37] Agrawal, A.; Chen, S.; Rai, A.; Sreenath, K. Vision-aided dynamic quadrupedal locomotion on discrete terrain using motion libraries. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 4708–4714.

- [38] Hwangbo, J.; Lee, J.; Dosovitskiy, A.; Bellicoso, D.; Tsounis, V.; Koltun, V.; Hutter, M. Learning agile and dynamic motor skills for legged robots. Sci. Robot. 2019, 4, eaau5872.

- [39] Yang, C.; Yuan, K.; Zhu, Q.; Yu, W.; Li, Z. Multi-expert learning of adaptive legged locomotion. Sci. Robot. 2020, 5, eabb2174.

- [40] Ji, G.; Mun, J.; Kim, H.; Hwangbo, J. Concurrent Training of a Control Policy and a State Estimator for Dynamic and Robust Legged Locomotion. IEEE Robot. Autom. Lett. 2022, 7, 4630–4637.

- [41] Yu, W.; Jain, D.; Escontrela, A.; Iscen, A.; Xu, P.; Coumans, E.; Ha, S.; Tan, J.; Zhang, T. Visual-locomotion: Learning to walk on complex terrains with vision. In Proceedings of the 5th Annual Conference on Robot Learning, London, UK, 8–11 November 2021.

- [42] Kumar, A.; Fu, Z.; Pathak, D.; Malik, J. RMA: Rapid motor adaptation for legged robots. arXiv 2021, arXiv:2107.04034.

- [43] Lee, J.; Hwangbo, J.; Wellhausen, L.; Koltun, V.; Hutter, M. Learning quadrupedal locomotion over challenging terrain. Sci. Robot. 2020, 5, eabc5986.

- [44] Peng, X.B.; Coumans, E.; Zhang, T.; Lee, T.W.; Tan, J.; Levine, S. Learning agile robotic locomotion skills by imitating animals. arXiv 2020, arXiv:2004.00784.

- [45] Anderson, P.; Chang, A.; Chaplot, D.S.; Dosovitskiy, A.; Gupta, S.; Koltun, V.; Kosecka, J.; Malik, J.; Mottaghi, R.; Savva, M.; et al. On evaluation of embodied navigation agents. arXiv 2018, arXiv:1807.06757.

- [46] Xia, F.; Shen, W.B.; Li, C.; Kasimbeg, P.; Tchapmi, M.E.; Toshev, A.; Martín-Martín, R.; Savarese, S. Interactive Gibson benchmark: A benchmark for interactive navigation in cluttered environments. IEEE Robot. Autom. Lett. 2020, 5, 713–720.

- [47] Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- [48] Liang, E.; Liaw, R.; Nishihara, R.; Moritz, P.; Fox, R.; Goldberg, K.; Gonzalez, J.; Jordan, M.; Stoica, I. RLlib: Abstractions for distributed reinforcement learning. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 3053–3062.

- [49] Villarreal, O.; Barasuol, V.; Camurri, M.; Focchi, M.; Franceschi, L.; Pontil, M.; Caldwell, D.; Semini, C. Fast and continuous foothold adaptation for dynamic locomotion through convolutional neural networks. IEEE Robot. Autom. Lett. 2019, 4, 2140–2147.

- [50] Coumans, E.; Bai, Y. PyBullet, a Python Module for Physics Simulation for Games, Robotics and Machine Learning. 2016. Available online: http://pybullet.org/ (accessed on 1 February 2023).

- [51] Xiang, Y.; Schmidt, T.; Narayanan, V.; Fox, D. PoseCNN: A Convolutional Neural Network for 6D Object Pose Estimation in Cluttered Scenes. arXiv 2017, arXiv:1711.00199.

- [52] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- [53] Bloesch, M.; Hutter, M.; Hoepflinger, M.A.; Leutenegger, S.; Gehring, C.; Remy, C.D.; Siegwart, R. State estimation for legged robots-consistent fusion of leg kinematics and IMU. Robotics 2013, 17, 17–24.

| Policy | Parameter | Value |
|---|---|---|
| High-Level Navigation | Discount factor | 0.99 |
| | Learning rate | 1 × 10⁻⁴ |
| | Batch size | 4096 |
| | GAE | 0.9 |
| | Max steps per iteration | 500 |
| Foothold Adaptation | Pretrained | True |
| | Greedy factor | 0.75 |
| | Learning rate | 1 × 10⁻⁴ |
| | Batch size | 32 |
| Motion Controller | Control frequency | 500 |
| | Sim [kp, kd] | [3, 1] |
| | Real [kp, kd] | [10, 0.2] |
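The table lists only the gain pairs for simulation and hardware; the control law itself is not given here. Assuming the conventional joint-space PD form, torque = kp · (q_des − q) + kd · (dq_des − dq), the sketch below shows how the two gain sets would act on the same tracking error. The function name `pd_torques` and the example joint values are hypothetical, and the actual controller may apply these gains differently (for example in Cartesian space or alongside a feedforward term).

```python
from typing import List, Sequence

SIM_GAINS = (3.0, 1.0)    # [kp, kd] listed for simulation
REAL_GAINS = (10.0, 0.2)  # [kp, kd] listed for the real robot


def pd_torques(
    q_des: Sequence[float],
    q: Sequence[float],
    dq_des: Sequence[float],
    dq: Sequence[float],
    kp: float,
    kd: float,
) -> List[float]:
    """Per-joint PD command (ASSUMED form, see lead-in)."""
    return [
        kp * (qd_i - q_i) + kd * (dqd_i - dq_i)
        for qd_i, q_i, dqd_i, dq_i in zip(q_des, q, dq_des, dq)
    ]


# One joint, 0.5 rad position error, moving at 0.1 rad/s toward the target.
sim_tau = pd_torques([0.5], [0.0], [0.0], [0.1], *SIM_GAINS)
real_tau = pd_torques([0.5], [0.0], [0.0], [0.1], *REAL_GAINS)
```

Under this assumed form, the hardware gains give a stiffer position response with lighter damping than the simulation gains.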

| Scene | Policy | SPL | Success | Collisions |
|---|---|---|---|---|
| Static | Base-Nav [46] | 0.27 | 0.29 | 0.63 |
| | Heuristic [36] | 0.64 | 0.71 | 0.39 |
| | RL [41] | 0.41 | 0.47 | 0.44 |
| | Ours | 0.65 | 0.72 | 0.35 |
| Dynamic | Base-Nav [46] | 0.26 | 0.28 | 0.63 |
| | Heuristic [36] | 0.45 | 0.50 | 0.51 |
| | RL [41] | 0.45 | 0.49 | 0.48 |
| | Ours | 0.57 | 0.65 | 0.39 |

| Obstacles | Policy | Success | Crashes | Collisions |
|---|---|---|---|---|
| Static | Base-Nav [46] | 0.25 | 0.57 | 0.63 |
| | Heuristic [36] | 0.59 | 0.21 | 0.41 |
| | RL [41] | 0.42 | 0.49 | 0.46 |
| | Ours | 0.62 | 0.20 | 0.38 |
| Dynamic | Base-Nav [46] | 0.29 | 0.56 | 0.6 |
| | Heuristic [36] | 0.45 | 0.39 | 0.46 |
| | RL [41] | 0.42 | 0.51 | 0.50 |
| | Ours | 0.54 | 0.34 | 0.41 |

| n-Step | Static: Success | Static: Collisions | Dynamic: Success | Dynamic: Collisions |
|---|---|---|---|---|
| 100 | 0.19 | 0.66 | 0.19 | 0.59 |
| 300 | 0.19 | 0.62 | 0.26 | 0.55 |
| 500 | 0.50 | 0.41 | 0.56 | 0.29 |
| 900 | 0.79 | 0.26 | 0.80 | 0.18 |
| 1500 | 0.75 | 0.27 | 0.79 | 0.21 |

| Policy | Exhibition Hall (Static) | Lab Environment (Static) | Parlor (Dynamic) |
|---|---|---|---|
| Base-Nav | 0.2 | 0.6 | 0 |
| Ours | 0.8 | 1 | 0.6 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ren, J.; Dai, Y.; Liu, B.; Xie, P.; Wang, G. Hierarchical Vision Navigation System for Quadruped Robots with Foothold Adaptation Learning. Sensors 2023, 23, 5194. https://doi.org/10.3390/s23115194
