An Experimental Analysis on Multicepstral Projection Representation Strategies for Dysphonia Detection

Abstract

1. Introduction

- A new framework for detecting dysphonia in voice signals;

- The formalization of a process involving the fusion and multiple projections of CCs to represent the voice signal;

- Extensive experiments on the Saarbruecken Voice Database, covering diverse configurations that combine various CC extraction techniques with other measurements of the speech signal, such as fundamental frequency measurements.

2. Related Works

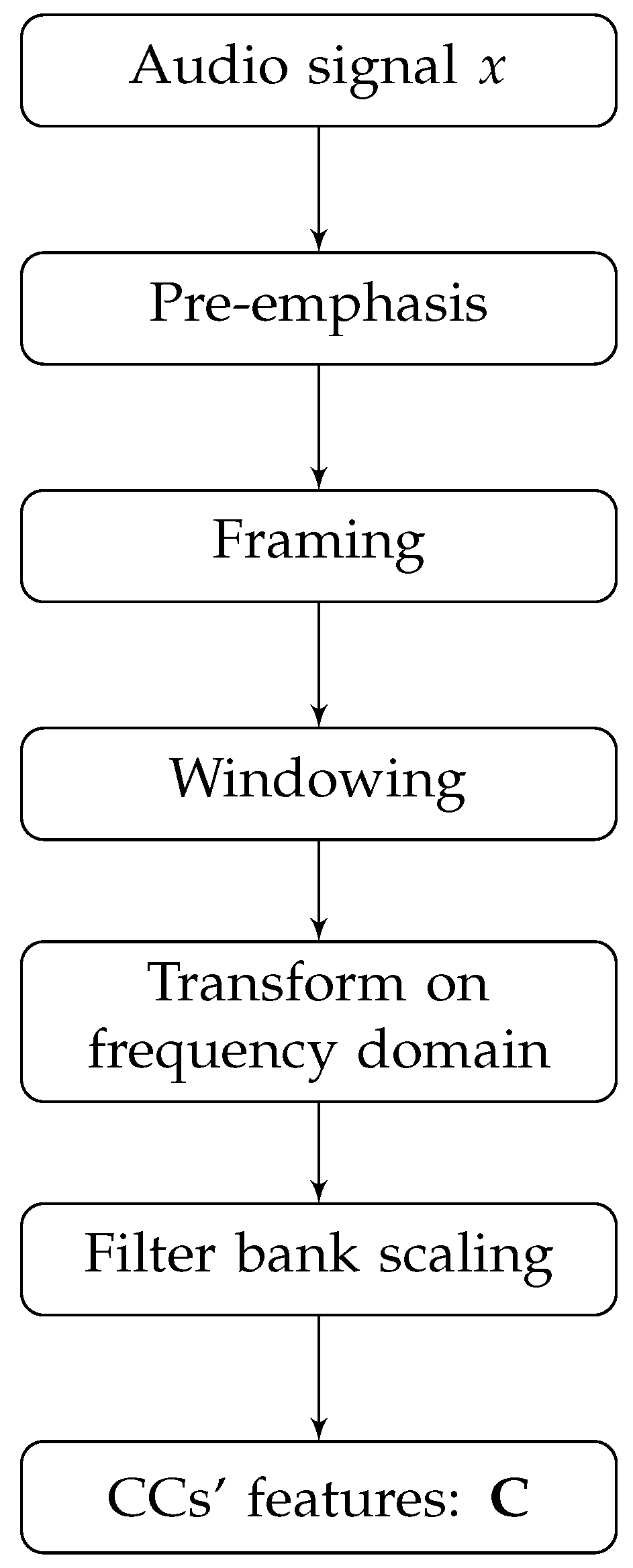

3. Cepstral Features Extraction Fundamentals

4. Methodology

- Problem domain definition: The input is a voice signal recorded with a microphone, which may come from an individual affected by dysphonia. The problem domain is therefore formed by voice signals that are usually defined in the time domain, that is, vectors in the space .

- Proposed method: As mentioned, the advances of this work concern dysphonia detection in a voice signal. Our contributions must therefore define specialized models that tell the VAS which type of voice signal was provided to the system. Two new techniques are proposed to perform this task:

  - Multiprojection of a cepstral feature set: This contribution is a mathematical formalization of the representation of a set of cepstral features defined by matrices. It generalizes the representation of a voice signal in a feature space and is therefore a handcrafted feature technique. On its own, it does not circumvent the VAS performance loss caused by the possible presence of dysphonia in the signal; it needs further operational steps, such as the use of a classifier.

  - Framework to increase the accuracy of dysphonia detection using machine learning: Unlike the first contribution, this one covers all dysphonia detection routines applied to the voice signal, since the use of a classifier is one of its steps. The framework is a proposal of the steps that a dysphonia detection solution must perform and, because it is defined in a generalized way, it can be configured in different ways.

- Method output: The developed tool must indicate whether a given voice signal was recorded from a healthy person or from a dysphonic user. The method therefore works as a binary classification routine, assigning one of two values to the input signal: “healthy voice” or “dysphonic voice”.

- Validation: To assess the effectiveness of the proposed material, experiments will be conducted on the most used benchmark in the area, the Saarbruecken Voice Database. This database, presented in more detail in the Experiments section, is formed by voice samples obtained from healthy individuals and from individuals affected by diseases that act directly on the human vocal tract. For this work, we consider voice samples from healthy individuals and from individuals classified as affected by “dysphonia”. In all test situations, success in the classification task is determined by performance measures associated with the accuracy of the model. Furthermore, because the proposed model is a generalization that allows different configurations, several specific instances of it are considered and compared in all test scenarios.

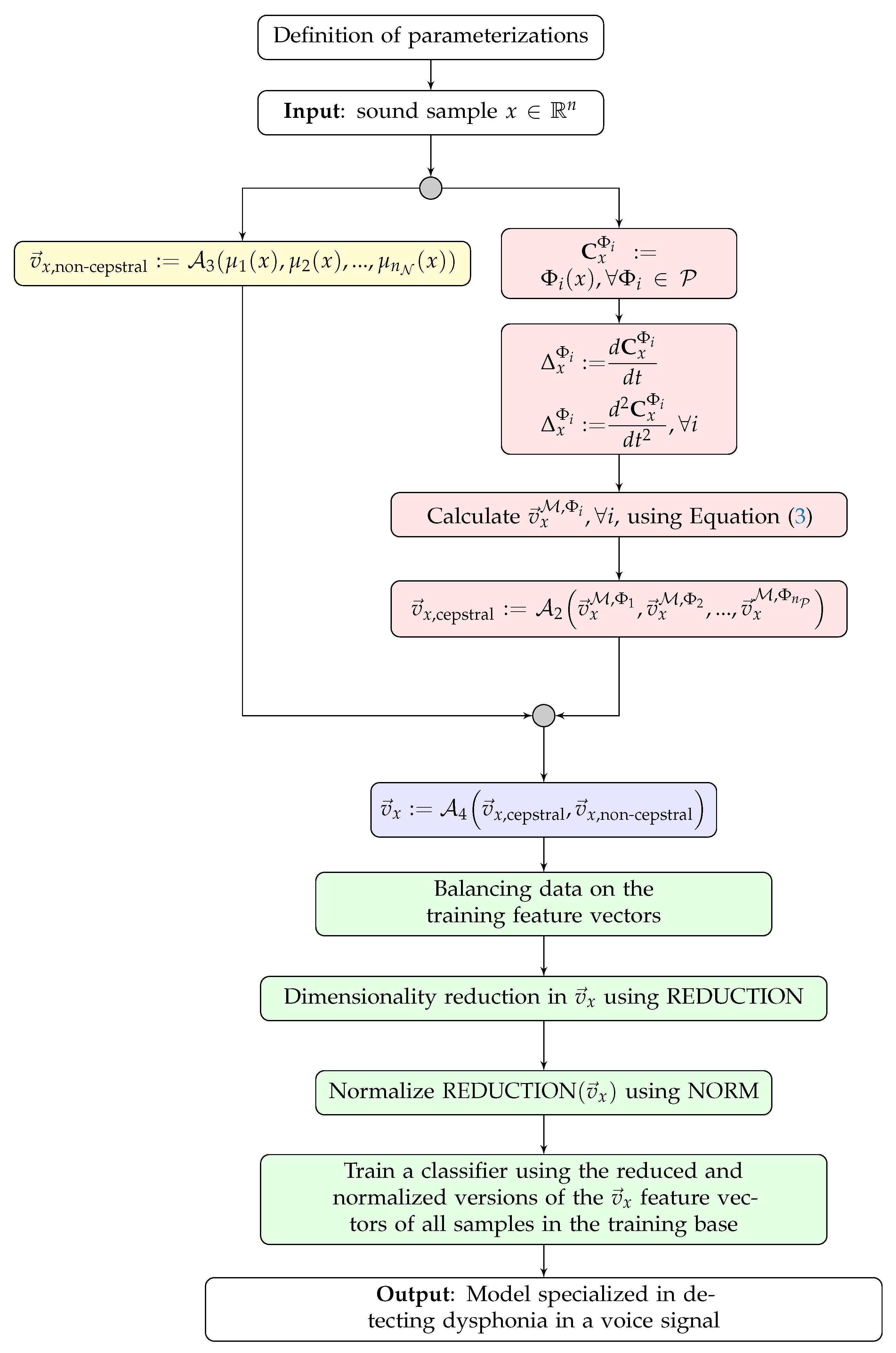

5. Proposed Multicepstral Framework Based on Multiprojection Strategies for Voice Dysphonia Detection

- A new framework for extracting and classifying the features of voice signals, with the aim of discriminating the samples into two distinct groups: the first group consists of speech samples from healthy individuals, while the second group contains speech samples from individuals suffering from dysphonia;

- An experimental analysis of several configurations of the proposed generalization is conducted in this work. Furthermore, an important contribution is a computational evaluation of the performance of the representation of several features based on CCs, projected by different types of mappings, to solve the voice dysphonia detection problem, using three different classifiers.

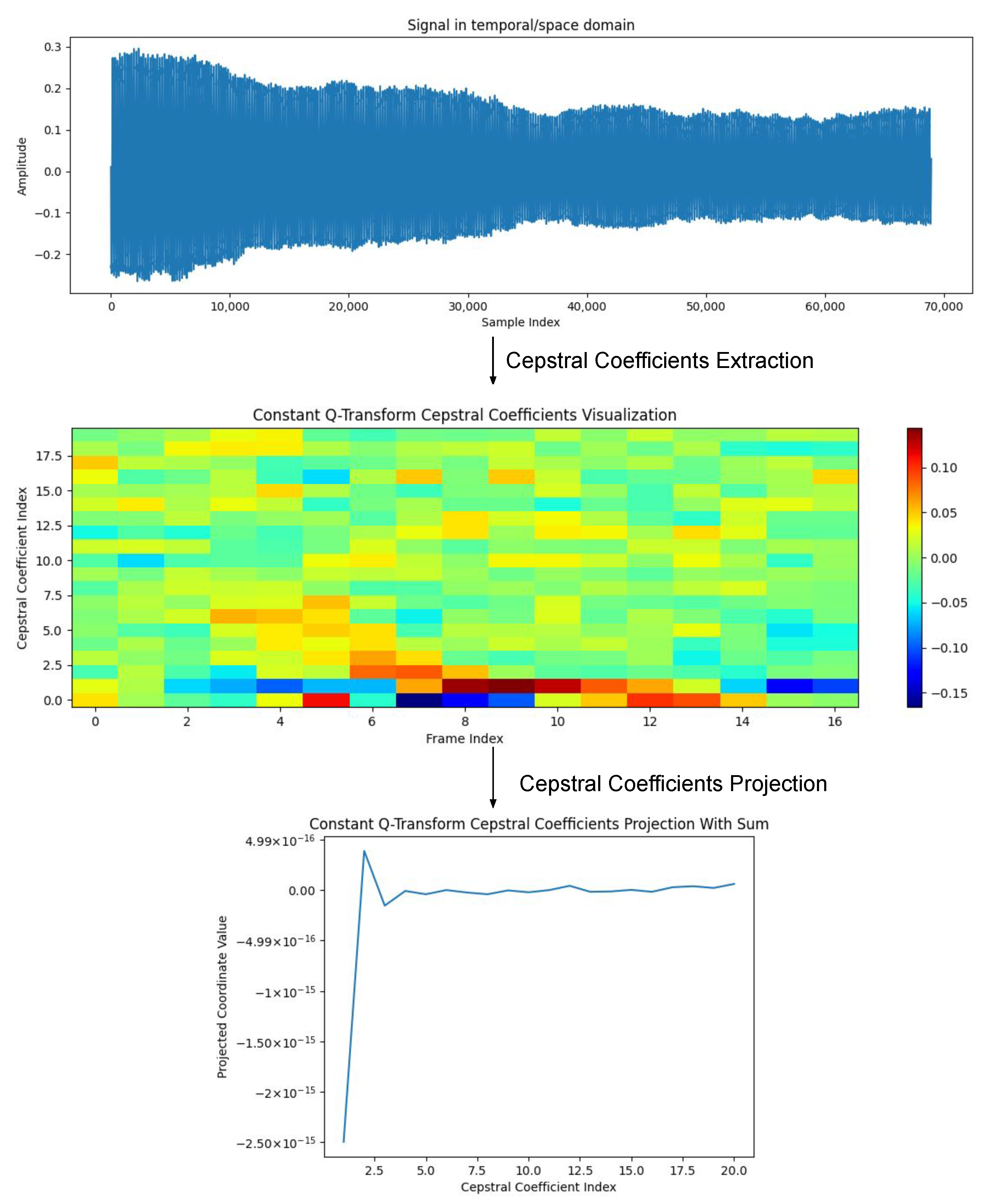

5.1. Cepstral Feature Multiprojection

5.2. Extraction of Cepstral Features Set

1. Calculate the CCs of x using the techniques of . That is, ;

2. Calculate the first- and second-order differentials of each CC defined in the previous step, these being represented, respectively, by and ;

3. Carry out the multiprojection of the calculated cepstral features using Equation (3), consequently defining vectors ;

4. Aggregate the vectors using the information fusion strategy according to Equation (5):
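The four steps above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the matrix layout (rows are frames, columns are coefficients), the use of `np.gradient` as a delta estimate, the three column statistics chosen as mappings, and all function names are assumptions made for illustration, and Equations (3) and (5) are approximated by "apply every mapping, then concatenate".

```python
import numpy as np

def deltas(cc: np.ndarray) -> np.ndarray:
    """Simple delta estimate along the frame axis (assumption, not the paper's formula)."""
    return np.gradient(cc, axis=0)

def skewness(m: np.ndarray) -> np.ndarray:
    """Column-wise skewness; constant columns map to 0."""
    centered = m - m.mean(axis=0)
    std = m.std(axis=0)
    safe = np.where(std > 0, std, 1.0)
    return (centered ** 3).mean(axis=0) / safe ** 3

# Hypothetical set of mappings: each turns a (frames x coefficients) matrix
# into one vector with one entry per coefficient.
MAPPINGS = {
    "sum": lambda m: m.sum(axis=0),
    "std": lambda m: m.std(axis=0),
    "skew": skewness,
}

def multiprojection(cc: np.ndarray, mappings=MAPPINGS) -> np.ndarray:
    """Project static, delta and delta-delta matrices with every mapping, then concatenate."""
    d1 = deltas(cc)
    d2 = deltas(d1)
    parts = [f(m) for m in (cc, d1, d2) for f in mappings.values()]
    return np.concatenate(parts)

rng = np.random.default_rng(0)
cc = rng.normal(size=(50, 20))   # stand-in for 50 frames of 20 CCs
vec = multiprojection(cc)
print(vec.shape)                 # 3 matrices x 3 mappings x 20 coefficients
```

With several CC extraction techniques, the same function would be applied to each technique's matrix and the resulting vectors concatenated again.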

5.3. Voice Signal Vector Representation

5.4. Dysphonia Detection Model Definition

| Algorithm 1. Classifier training stage and definition of the dysphonia detection model in the proposed framework. |

| Input: Training database with feature vectors; dimensionality reduction strategy; normalization function. |

| Output: The proposed dysphonia detection model. |

5.5. Proposed Algorithm

1. Establish the necessary parameters for the execution of the framework. For example, the mapping functions that will compose the set , the CC extraction techniques, and the classifier used, among other configurations.

2. Extract the CCs and their first- and second-order differentials from each available voice sample using all the techniques in the set.

3. Conduct the proposed multiprojection process to build the cepstral feature vector for each speech signal x.

4. Construct the vector of non-cepstral features for each voice sample.

5. Aggregate cepstral and non-cepstral information for each voice signal, building a feature vector database.

6. Balance the training feature vectors so that the number of vectors associated with pathological individuals equals the number associated with healthy individuals.

7. Reduce the dimensionality of the constructed feature vectors.

8. Normalize the reduced-dimensional feature vectors defined in the previous step.

9. Train a classifier on the normalized, reduced-dimensional feature vectors.

- The set of noncepstral features is represented by the following measures: energy, low-short time energy ratio, ZCR, Teager–Kaiser energy operator, entropy of energy, Hurst’s coefficient, , formants, jitter, shimmer, spectral centroid, spectral roll-off, spectral flux, spectral flatness, spectral entropy, spectral spread, linear prediction coefficients, HNR, power spectral density, and phonatory frequency range.

- The cepstral features are defined solely by the static CCs calculated by the MFCC technique. In other words, .

- The CCs are projected using the mean. Thus, .

- All the features are fused using concatenation. Thus, = CONCATENATION.

- No data balancing strategy is cited, so this procedure should be disregarded.

- Two dimensionality reduction strategies are considered: one based on feature selection by mutual information, and another based on the PCA.

- As no data normalization routine is mentioned, the NORM function must be the identity function. That is, $\mathrm{NORM}(y) = y$, for all y.

- Three classifiers are considered: SVM, RF, and KNN.

6. Parameters for the Proposed Method and Practical Instances

- Fusion strategies: As already mentioned in the description of the steps of the proposed method, all information fusion strategies will be defined as the concatenation of vectors. Therefore, .

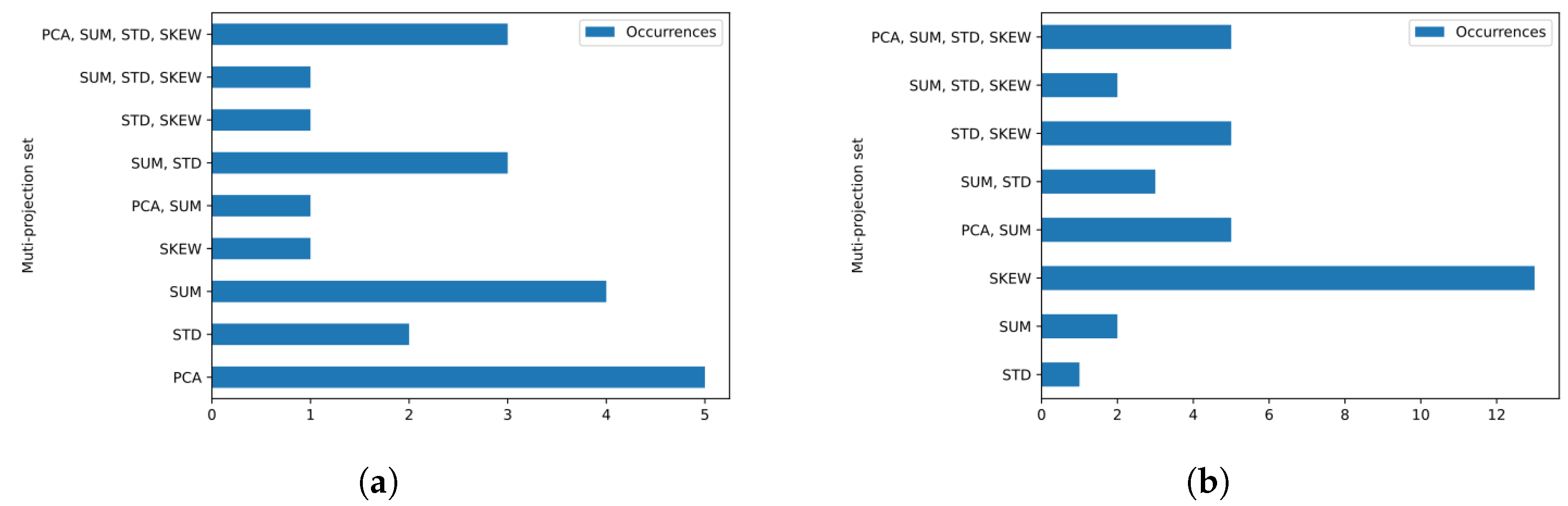

- Mapping functions: For our experiments, we will consider four matrix projection strategies, as defined in Equation (3): the projection of columns by PCA (); the sum of columns (); the standard deviation of columns (); and the skewness of the columns (). We will also consider some combinations of these mappings to employ the proposed multiprojection concept. Specifically, let us consider the sets of mappings defined in Table 2.

- Noncepstral features: As the noncepstral measures associated with the voice signal that must compose the set , the following will be considered [23,83,84,85,86,87,88,89]:

  - “Statistical moments of the fundamental frequency”: In this work, we use only the mean and standard deviation of the fundamental frequency.

  - “HNR”: As mentioned in Section 2, this is an acoustic measurement of the ratio between the energy of the harmonic and noise components in a speech signal. The HNR is generally used as an indicator of voice quality, with higher values indicating a clearer, less noisy voice.

  - “Local Jitter”: This is the time difference between the actual duration of each audio frame and the expected average duration of each frame.

  - “Local Absolute Jitter”: This is a measure of time variation similar to Local Jitter that is calculated from the absolute differences between the time intervals between consecutive voice frames and the average of these intervals.

  - “Relative Average Perturbation (RAP) Jitter”: This is a measure that describes the relative variation between the durations of the time intervals between consecutive speech signal cycles. In other words, RAP Jitter is calculated as the ratio between the standard deviation of the time intervals and the average of the time intervals.

  - “Local dB Shimmer”: This is used to assess variability in sound wave amplitude during speech. It is calculated as the local frame-by-frame variation in the amplitude of the sound wave, expressed in decibels (dB). Specifically, it is the difference between the maximum and minimum values of the sound wave amplitude in a specific voice frame, expressed in dB.

  - “Amplitude Perturbation Quotient 3 (APQ3) Shimmer”: This calculates the change in the amplitude of the sound wave during speech using the difference between the amplitude values at three equally spaced points in a speech cycle. The APQ3 Shimmer is calculated as the mean of the absolute differences between the amplitude values at three consecutive points divided by the mean amplitude value at those three points.

  - “APQ5 Shimmer”: This is similar to the APQ3 Shimmer, but uses five equally spaced points on a voice cycle to calculate the change in the amplitude of the sound wave.

  - “APQ11 Shimmer”: This is similar to the APQ3 and APQ5 Shimmer, but considers 11 equidistant points.

  - “DDA Shimmer”: This is calculated as the average of the absolute differences between the sound wave amplitude values in a voice cycle, divided by the number of samples in the cycle. The difference between the amplitude values is calculated as the absolute difference between the maximum and minimum amplitude values in the voice cycle.

  - “Jitter PCA projection”: A one-dimensional PCA projection of all Jitter-based features that were used.

  - “Shimmer PCA projection”: A one-dimensional PCA projection of all Shimmer-based features that were used.

  - “Fitch Virtual Tract Length (FVTL)”: This involves the analysis of the acoustic spectrum of the sound produced during speech and its comparison with mathematical models that relate the acoustic properties to the length of the vocal tract.

  - “Mean and median of the four formant frequencies”: The first four formants are the main formant frequencies measured and analyzed in speech processing. The first formant has the lowest frequency and is determined by the position of the jaw and tongue. The second formant is mainly influenced by the position of the tongue in the mouth. The third and fourth formants are mainly influenced by the opening of the lips and the position of the soft palate.

  - “Formant Dispersion”: One-third of the difference between the medians of the fourth and first formants.

  - “Arithmetic mean of formant frequencies”: Mean of the medians of the first four formant frequencies.

  - “Formant Position”: Standardized mean of the medians of the first four formant frequencies.

  - “Spacing between formant frequencies ()”: Estimation of the minimum spacing between formant frequencies using linear regression.

  - “VTL of ”: The spacing between any two consecutive formants in the frequency spectrum, which can be estimated by the speed of propagation of sound in air divided by twice the spacing between the formant frequencies.

  - “Mean formant frequency (MFF)”: Fourth root of the product of the medians of the first four formant frequencies.
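Several of the formant-based measures listed above reduce to simple arithmetic on the medians of the first four formants. The sketch below shows that arithmetic under stated assumptions: the function names and the toy formant tracks are hypothetical, and the speed of sound (35,000 cm/s) is a commonly used value that the text does not specify. Formant Position is omitted because it requires population statistics for the standardization.

```python
import math
from statistics import median

def formant_measures(tracks):
    """tracks: four lists of formant frequencies in Hz, ordered from first to fourth formant."""
    med = [median(t) for t in tracks]
    return {
        "medians": med,
        # One-third of the difference between the fourth and first medians.
        "formant_dispersion": (med[3] - med[0]) / 3.0,
        # Mean of the four medians.
        "arithmetic_mean": sum(med) / 4.0,
        # Fourth root of the product of the four medians (geometric mean).
        "mff": math.prod(med) ** 0.25,
    }

def vtl_from_spacing(spacing_hz, speed_of_sound_cm_s=35000.0):
    """Tract length in cm: speed of sound divided by twice the formant spacing."""
    return speed_of_sound_cm_s / (2.0 * spacing_hz)

# Toy tracks, not real measurements.
tracks = [
    [500, 520, 480],
    [1500, 1490, 1510],
    [2500, 2550, 2450],
    [3500, 3400, 3600],
]
m = formant_measures(tracks)
print(m["formant_dispersion"], m["arithmetic_mean"], m["mff"])
print(vtl_from_spacing(1000.0))
```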

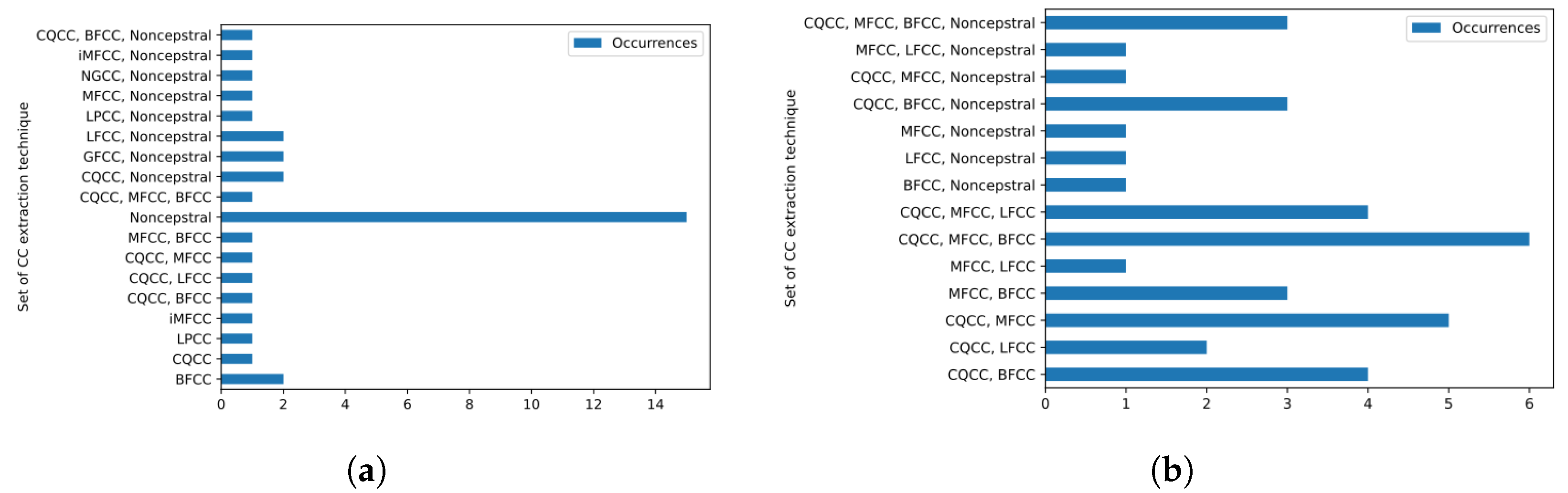

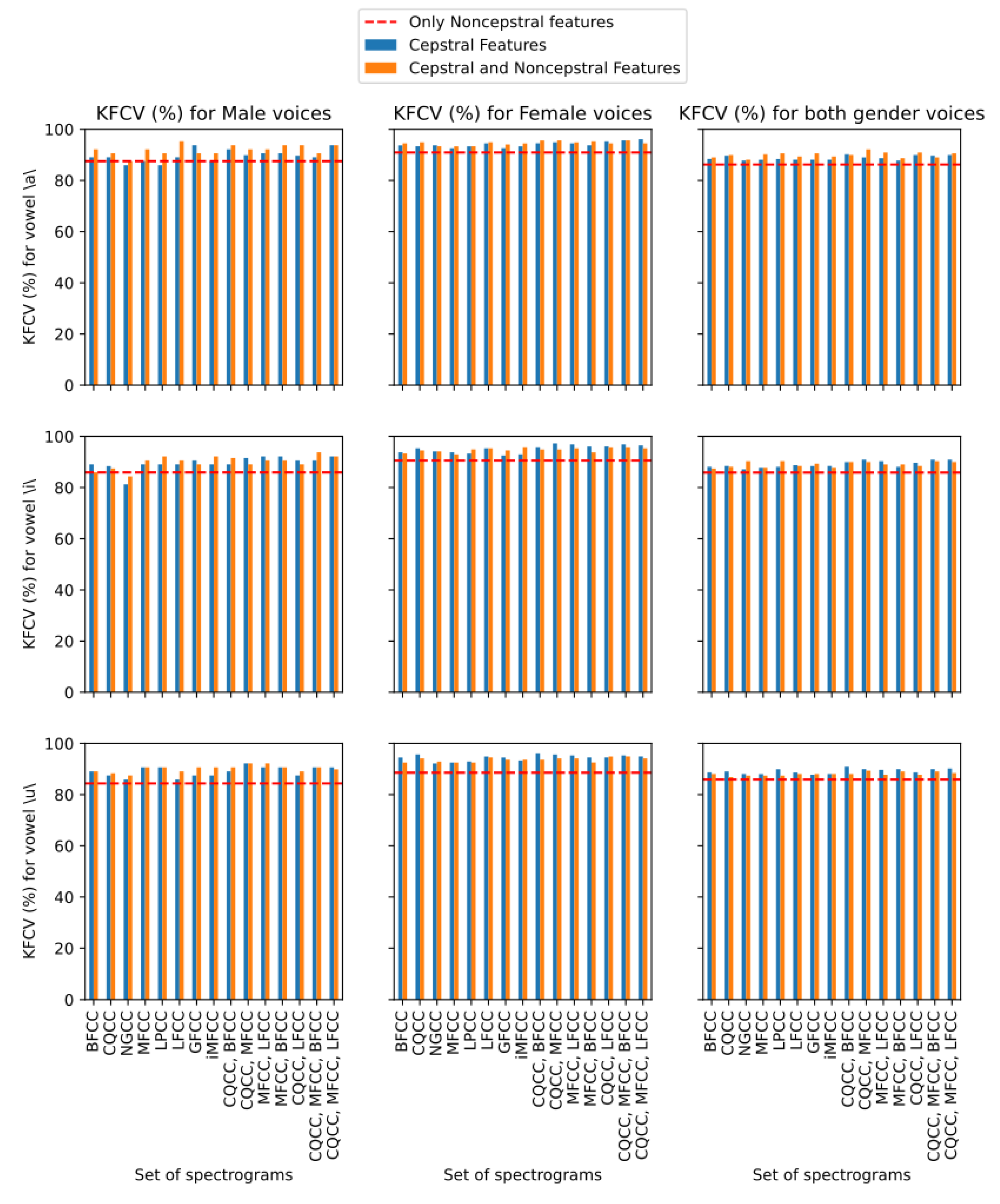

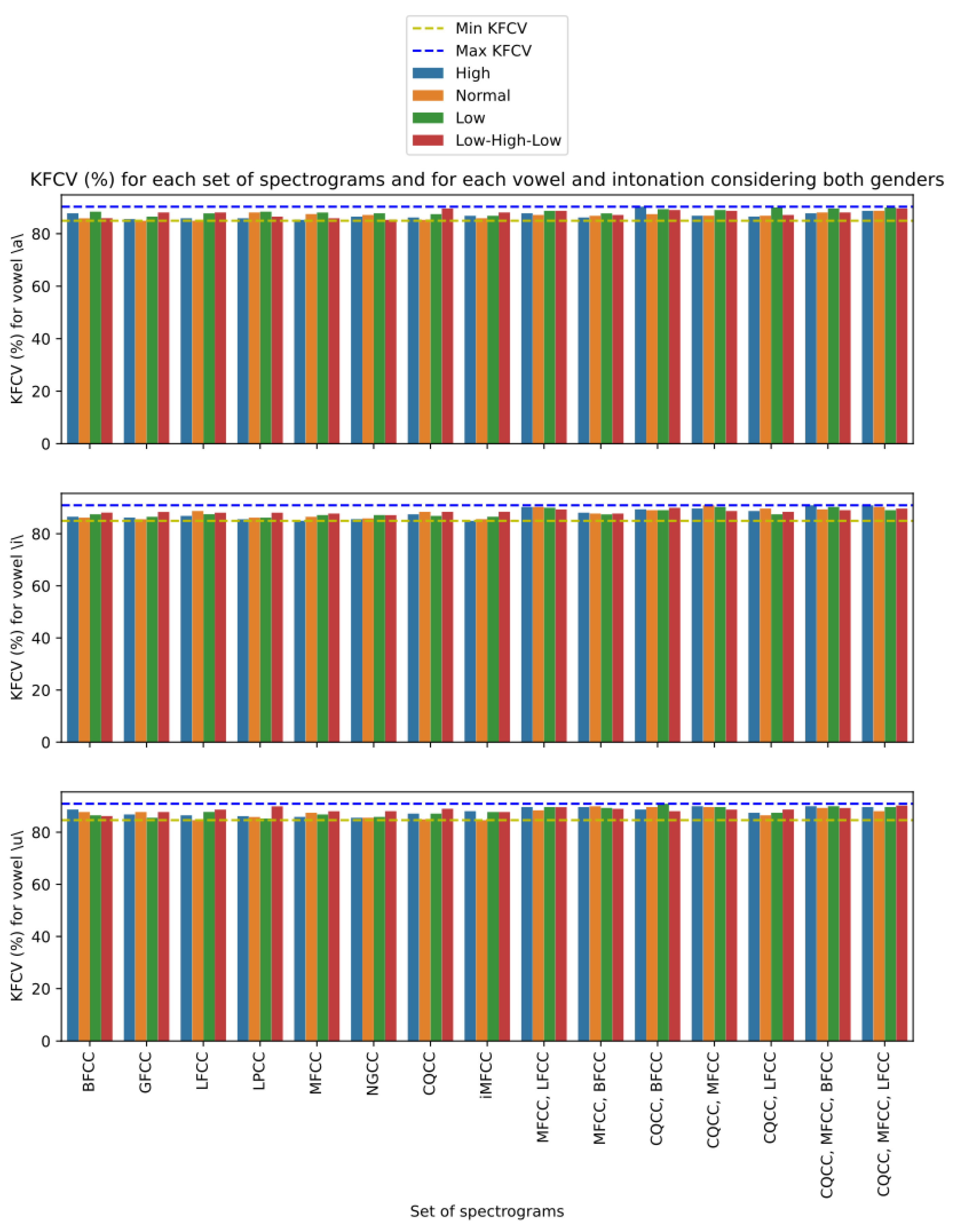

- Cepstral features: For CC extraction, the following techniques will be considered: CQCC, MFCC, inverse MFCC (iMFCC), linear-frequency cepstral coefficients (LFCC) [90], gammatone-frequency cepstral coefficients (GFCC) [91], bark-frequency cepstral coefficients (BFCC) [92], LPCC [93], and normalized gammachirp cepstral coefficients (NGCC) [94]. All of them are defined with static coefficients, 20 first-order dynamics () and 20 second-order dynamics (), normalized by mean and variance, which configures the cepstral mean and variance normalization (CMVN) strategy. These techniques are considered separately and in combination according to the sets defined in Table 3. Owing to space limitations, it was not possible to consider all existing combinations of the eight CC extraction techniques; however, the most important combinations were analyzed to evaluate the performance of the proposed material. We consider versions of to , in which each representation of has only one element. These versions allow us to evaluate how much the framework enhances the capacity of each technique to detect dysphonia in voice signals. The other versions serve to confirm the ability of the proposed material to represent different features of the sound sample, which should improve its ability to detect dysphonia in these samples.

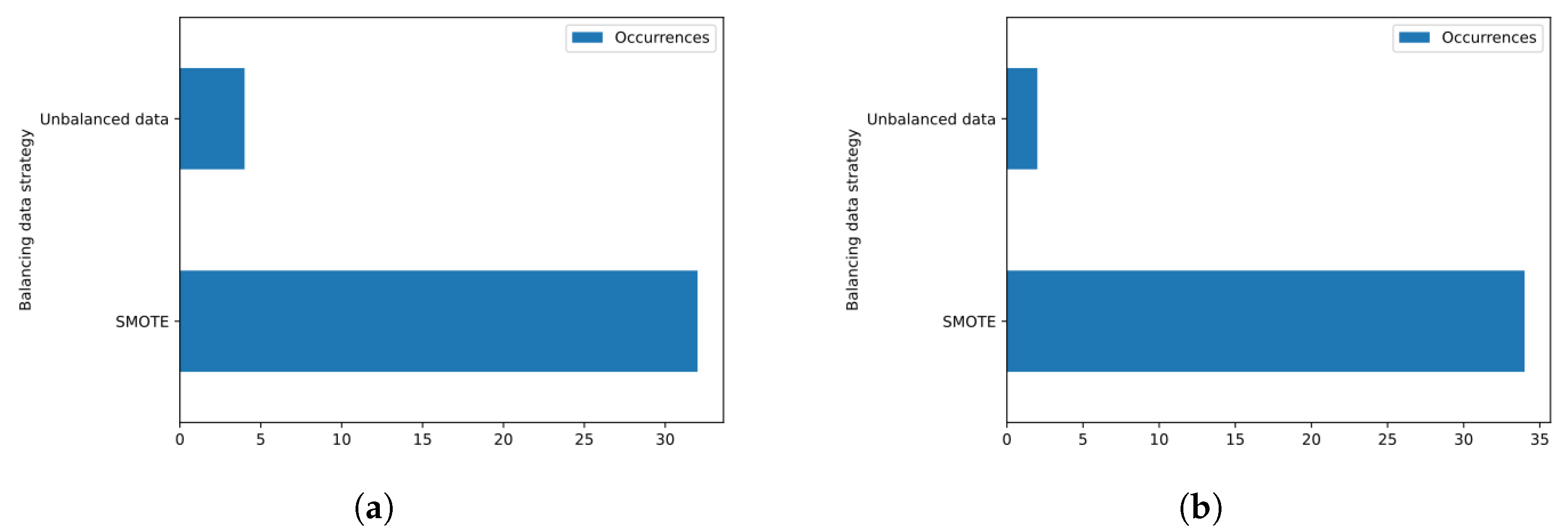

- Balancing data: As a data balancing technique, we chose to adopt an oversampling strategy on the minority set of samples in order to balance the number of representatives of each class. Specifically, we use one of the simplest techniques for this purpose: the synthetic minority oversampling technique (SMOTE).

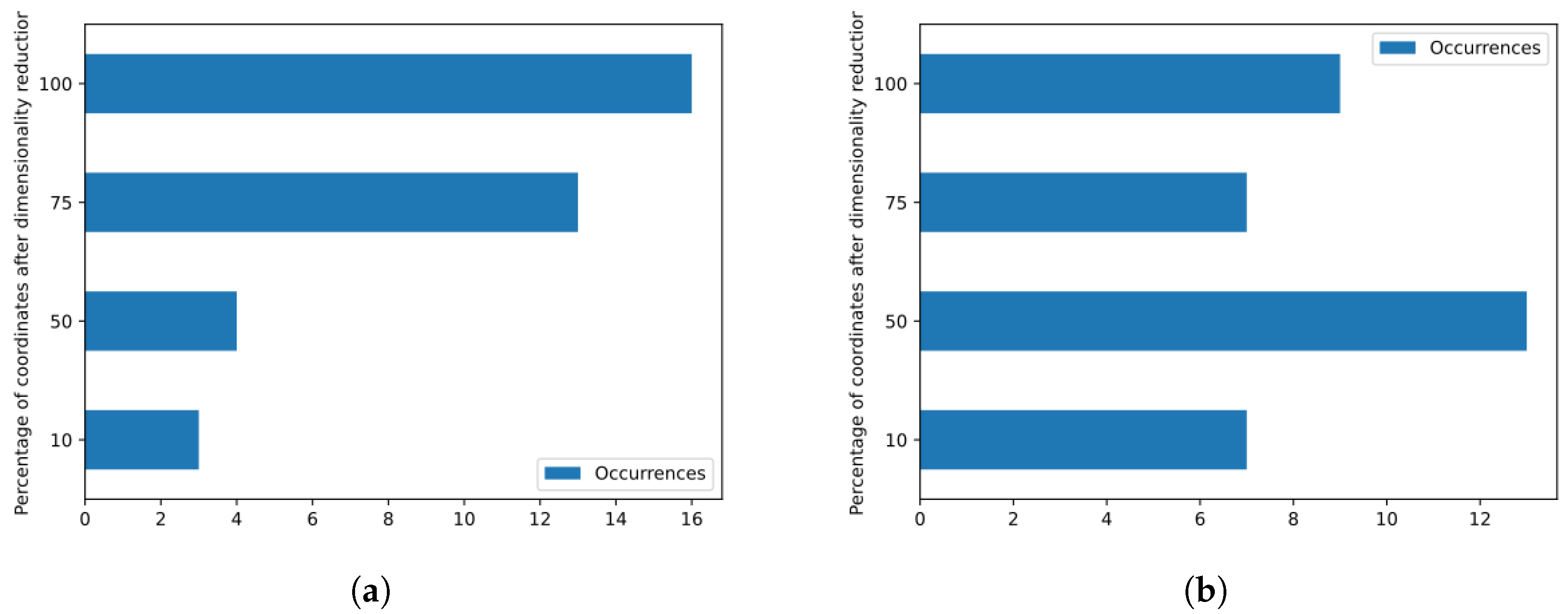
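The core idea of SMOTE, as used for the balancing step, is to create synthetic minority vectors by interpolating between a minority sample and one of its nearest minority neighbours. The following is a simplified NumPy sketch of that idea, not the reference implementation or the authors' code; the function name, the default `k`, and the toy data are assumptions.

```python
import numpy as np

def smote_sketch(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic rows by interpolating between a minority sample
    and one of its k nearest minority neighbours (needs at least two samples)."""
    rng = np.random.default_rng(seed)
    minority = np.asarray(minority, dtype=float)
    n = len(minority)
    k = min(k, n - 1)
    # Pairwise Euclidean distances within the minority class.
    dist = np.linalg.norm(minority[:, None, :] - minority[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)            # a point is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]
    base = rng.integers(0, n, size=n_new)     # starting sample for each new row
    pick = rng.integers(0, k, size=n_new)     # which neighbour to move towards
    other = neighbours[base, pick]
    gap = rng.random((n_new, 1))              # interpolation factor in [0, 1)
    return minority[base] + gap * (minority[other] - minority[base])

rng = np.random.default_rng(1)
minority = rng.normal(size=(10, 3))           # toy minority-class feature vectors
synthetic = smote_sketch(minority, n_new=7)
print(synthetic.shape)
```

In the framework, `n_new` would be the difference between the class counts in the training set, so that both classes end up with the same number of vectors.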

- Dimensionality reduction: The singular value decomposition [95] technique will be used as a function, as it is considered one of the simplest and most representative dimensionality reduction methods. This technique, which is very similar to the well-known PCA, represents the data in a new basis formed by the eigenvectors associated with the eigenvalues of largest magnitude, which represent the variances of the original data in the directions of these vectors. This reduced-dimensional representation tends to decrease the covariance, and consequently the redundancy [96,97], between the considered data, which can be beneficial because the feature fusion strategies we use are based on the concatenation of vectors. For each considered version, four dimensions will be evaluated to compose the reduced feature space: a version reduced to 10% of the coordinates, another reduced to half of the coordinates, another reduced to 75% of the coordinates, and a version without reduction. These dimensions represent different levels of dimensionality reduction, allowing us to evaluate how the reduction affects the technique’s ability to detect dysphonia in voice signals.

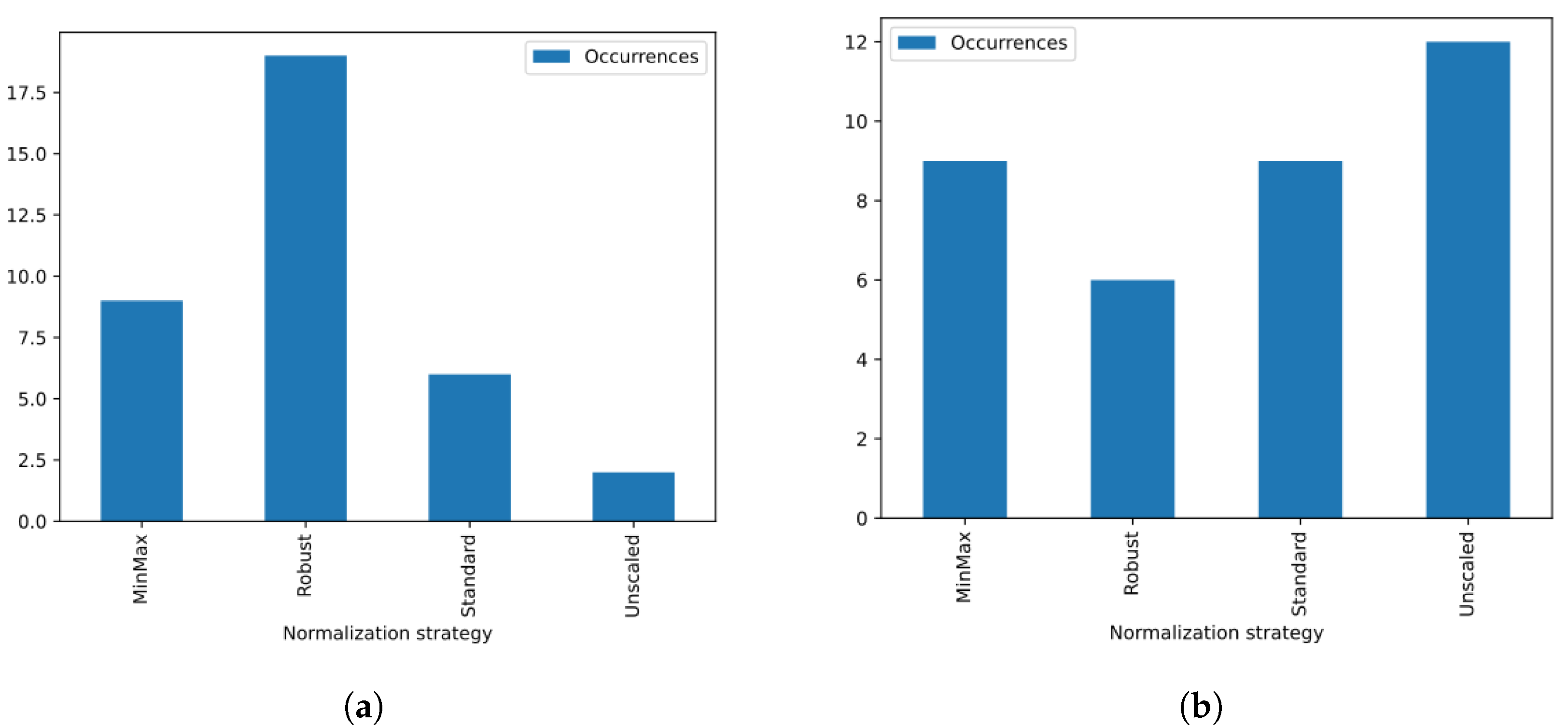
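A reduction of this kind can be sketched with `numpy.linalg.svd`. The snippet below is only an illustration: centring the data before the decomposition (which makes the projection PCA-like), the rounding rule for the number of kept coordinates, and the function name are assumptions. The "no reduction" setting simply skips this step.

```python
import numpy as np

def fit_svd_reducer(train, fraction):
    """Return a function that projects vectors onto the leading right-singular
    vectors of the centred training matrix, keeping `fraction` of the coordinates."""
    train = np.asarray(train, dtype=float)
    mean = train.mean(axis=0)
    _, _, vt = np.linalg.svd(train - mean, full_matrices=False)
    n_keep = max(1, int(round(fraction * train.shape[1])))
    n_keep = min(n_keep, vt.shape[0])
    basis = vt[:n_keep].T                     # columns are the kept directions
    return lambda x: (np.asarray(x, dtype=float) - mean) @ basis

rng = np.random.default_rng(0)
X = rng.normal(size=(40, 20))                 # toy training feature vectors
for fraction in (0.10, 0.50, 0.75):
    reducer = fit_svd_reducer(X, fraction)
    print(fraction, reducer(X).shape)
```

Because the kept directions are orthogonal singular vectors, the projected training coordinates are mutually uncorrelated, which is the redundancy reduction the paragraph above refers to.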

- Normalization: Four different strategies were used to normalize the feature vectors, considered the most common in the addressed problem. For each considered version, four different normalizations were evaluated: the Min–Max normalization (), standard normalization (), robust normalization () and nonuse of normalization (). Mathematically, the training basis of the feature vectors in the reduced space is represented by , where each vector . Specifically, the four considered normalization functions are:

1. Min–Max scale (MM):

2. Standard scale: where and

3. Robust scale (): Robust normalization is an adaptation of standard normalization that handles extreme values in the data more efficiently. This approach replaces the mean of the values () with the median, which is less sensitive to these extreme values, and the standard deviation () with the interquartile range, which is the difference between the third and the first quartile of the distribution. This makes normalization more resistant to outliers that can degrade model performance.

4. No scale: this is the strategy represented by the identity function. That is, .

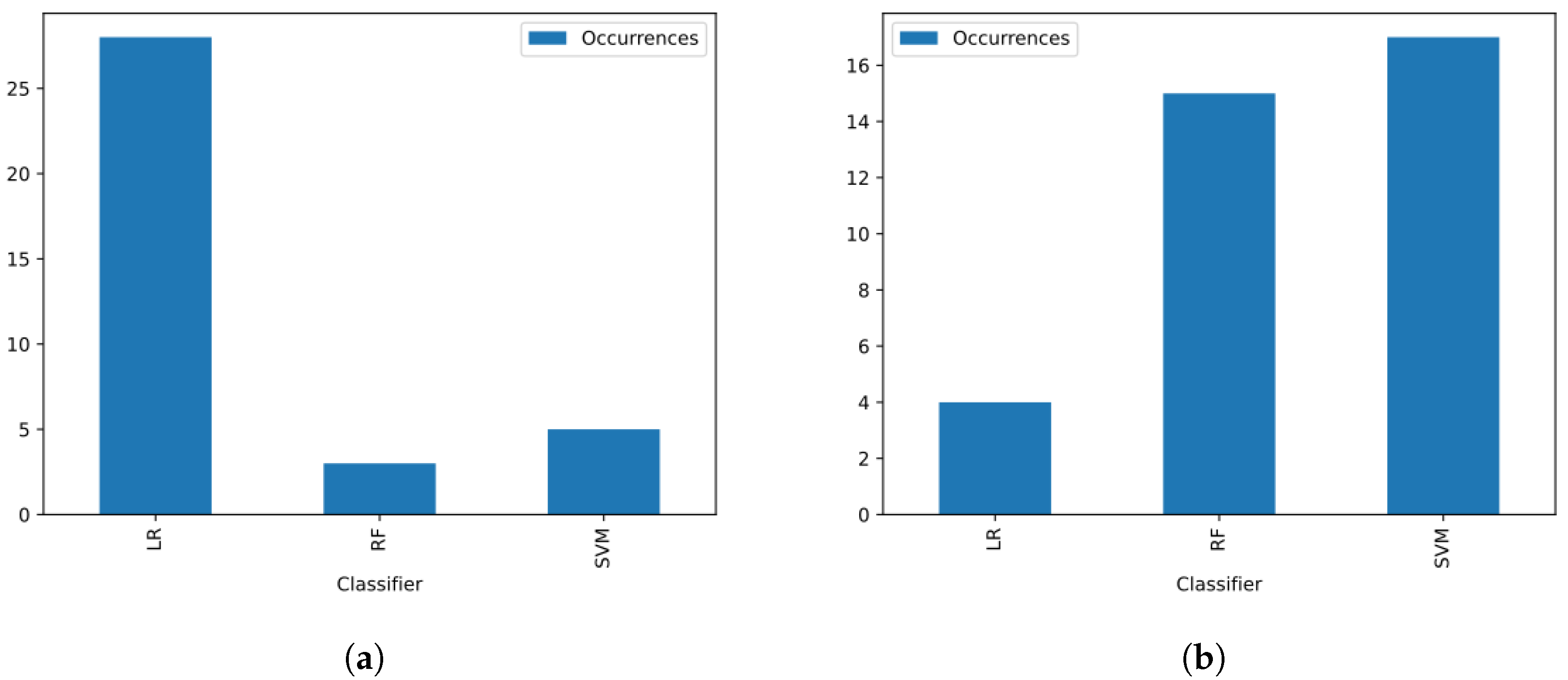
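The four normalization strategies can be sketched as functions fitted per coordinate on the training vectors and then applied to any vector. This is a minimal illustration in their usual textbook form, not the paper's exact formulas; the function names and the guard against zero-width ranges are assumptions.

```python
import numpy as np

def fit_min_max(train):
    """Min-Max scale: map each coordinate of the training range to [0, 1]."""
    lo, hi = train.min(axis=0), train.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)
    return lambda y: (y - lo) / span

def fit_standard(train):
    """Standard scale: subtract the mean, divide by the standard deviation."""
    mu, sigma = train.mean(axis=0), train.std(axis=0)
    sigma = np.where(sigma > 0, sigma, 1.0)
    return lambda y: (y - mu) / sigma

def fit_robust(train):
    """Robust scale: subtract the median, divide by the interquartile range."""
    q1, med, q3 = np.percentile(train, [25, 50, 75], axis=0)
    iqr = np.where(q3 > q1, q3 - q1, 1.0)
    return lambda y: (y - med) / iqr

def fit_identity(train):
    """No scale: the identity function."""
    return lambda y: y

train = np.array([[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]])
for fit in (fit_min_max, fit_standard, fit_robust, fit_identity):
    print(fit.__name__, fit(train)(train).tolist())
```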

- Classifiers: We will evaluate the three most used classifiers in the addressed problem: an SVM [98] with a Gaussian kernel, also known as a radial basis function (RBF) kernel; an RF [99]; and a linear logistic regression (LR) [100]. All of these classifiers allow a configuration that is resistant to unbalanced data. We use this configuration even in situations where SMOTE is not applied to the feature vector database.

7. Results and Experiments

- Error in Healthy (EH): represents the rate of healthy voice signals classified as pathological.

- Error in Pathological (EP): represents the percentage of pathological voice signals classified as healthy.

- Equal Error Rate (EER): the average between the false-positive and false-negative rates of the method. Mathematically, $\mathrm{EER} = (\mathrm{EH} + \mathrm{EP})/2$.

- Accuracy (ACC): the rate of correctly classified voice signals.

- F1-score: the harmonic mean between the precision and the sensitivity (recall) of the model.

- K-fold Cross-Validation Score (KFCV): Average accuracy score of a 5-fold cross-validation over the training dataset.
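The measures above can be computed directly from the predicted and true labels. The sketch below assumes the label encoding 0 for healthy and 1 for dysphonic, treats the dysphonic class as positive, and computes the F1-score in its standard form as the harmonic mean of precision and sensitivity; the function name and toy labels are illustrative only.

```python
def dysphonia_metrics(y_true, y_pred):
    """Labels: 0 = healthy, 1 = dysphonic (the positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)   # healthy flagged as dysphonic
    fn = sum(t == 1 and p == 0 for t, p in pairs)   # dysphonic flagged as healthy

    def ratio(num, den):
        return num / den if den else 0.0

    eh = ratio(fp, fp + tn)          # Error in Healthy
    ep = ratio(fn, fn + tp)          # Error in Pathological
    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)      # sensitivity
    return {
        "EH": eh,
        "EP": ep,
        "EER": (eh + ep) / 2.0,      # average of the two error rates
        "ACC": ratio(tp + tn, len(pairs)),
        "F1": ratio(2.0 * precision * recall, precision + recall),
    }

y_true = [0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 1, 1, 1, 0, 0]
scores = dysphonia_metrics(y_true, y_pred)
print(scores)
```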

7.1. Benchmark

- 384 recordings of healthy male subjects;

- 1524 recordings of healthy female subjects;

- 324 recordings of dysphonic male subjects;

- 492 recordings of dysphonic female subjects.

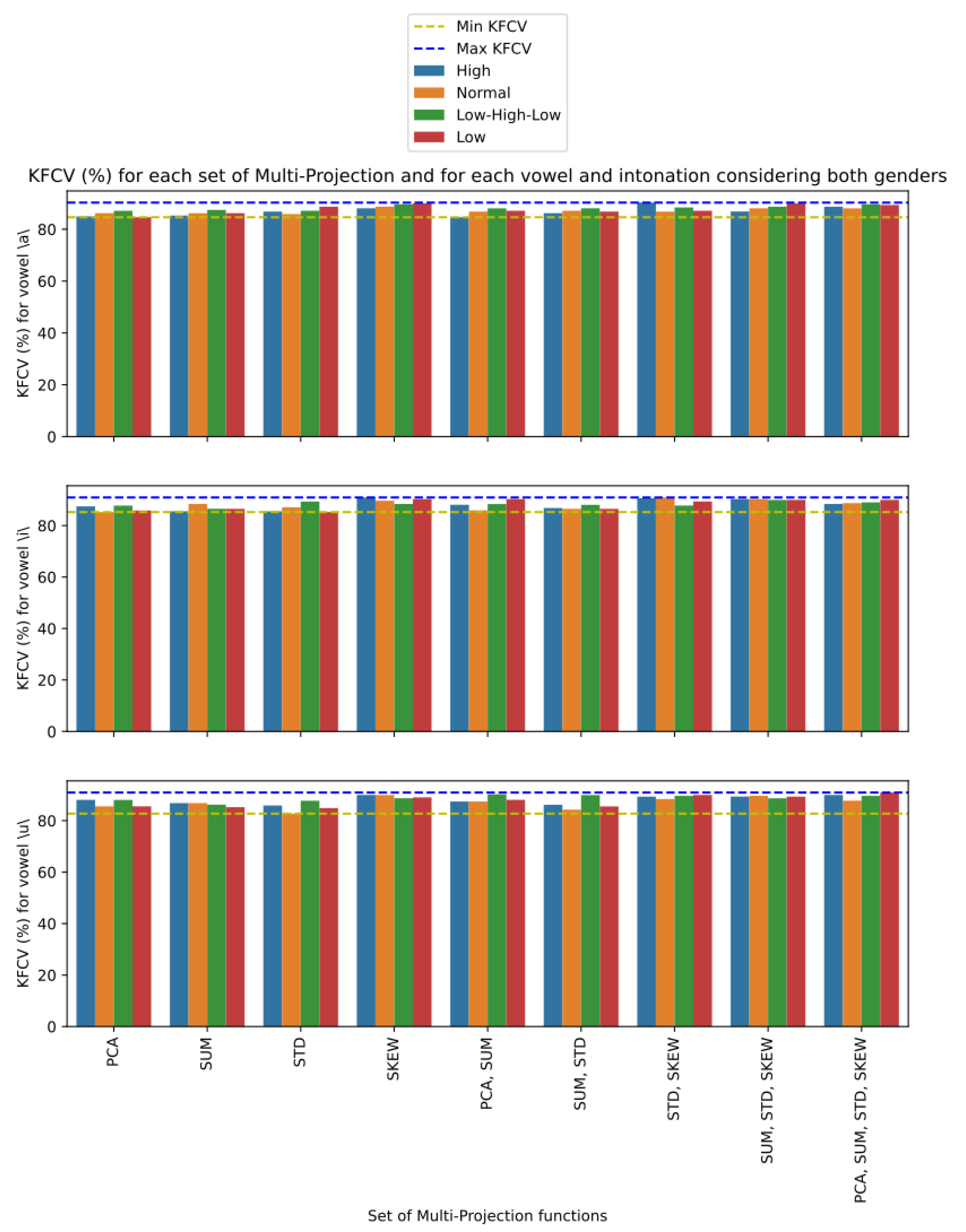

7.2. Performance Analysis

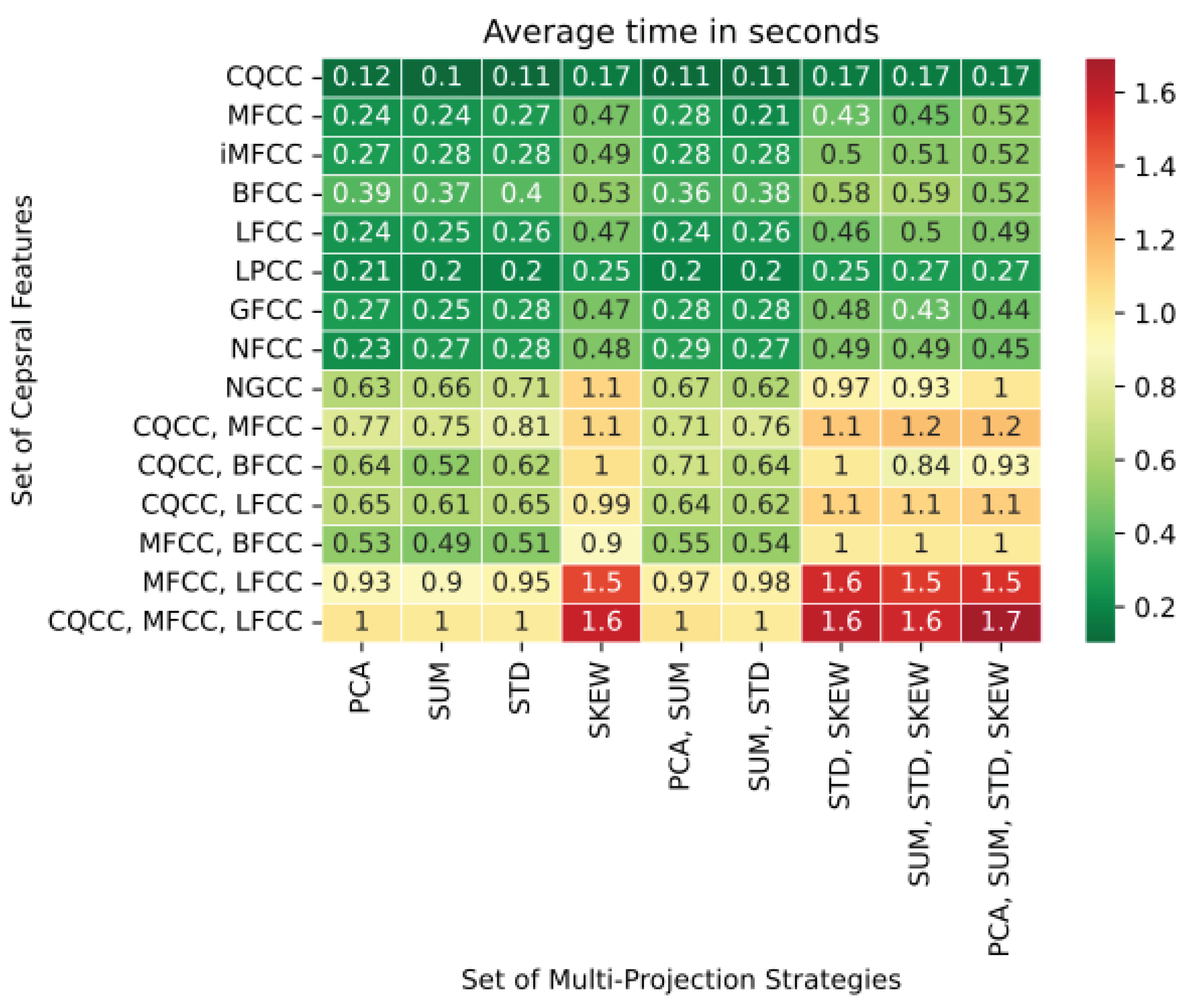

7.3. Complexity Analysis

7.4. Limitations

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| ACC | Accuracy |

| ANN | Artificial Neural Network |

| APQ | Amplitude Perturbation Quotient |

| AVPD | Arabic Voice Pathology Database |

| BFCC | Bark-Frequency Cepstral Coefficients |

| CC | Cepstral Coefficient |

| CNN | Convolutional Neural Network |

| CQCC | Constant Q Cepstral Coefficient |

| DFT | Discrete Fourier Transform |

| DNN | Deep Neural Network |

| DPV | Database of Pathological Voices |

| DTCWT | Dual-Tree Complex Wavelet Transform |

| DWT | Discrete Wavelet Transform |

| EER | Equal Error Rate |

| EH | Error in Healthy |

| EMD | Empirical Mode Decomposition |

| EP | Error in Pathological |

| ETO | Energy Teager Operator |

| F0 | Fundamental Frequency |

| F1 | F1-score |

| FFT | Fast Fourier Transform |

| FNN | Feedforward Neural Network |

| FVTL | Fitch Virtual Tract Length |

| GA | Genetic Algorithm |

| GFCC | Gammatone-Frequency Cepstral Coefficients |

| GMM | Gaussian Mixture Model |

| HNR | Harmonic-to-Noise Ratio |

| HOS | High-Order Statistics |

| ILPF | Inverse Linear Predictive Filter |

| iMFCC | Inverse Mel-Frequency Cepstral Coefficients |

| KFCV | K-Fold Cross Validation |

| KNN | k-Nearest Neighbors |

| LDA | Linear Discriminant Analysis |

| LFCC | Linear-Frequency Cepstral Coefficients |

| LPCC | Linear-Prediction Cepstral Coefficients |

| LR | Logistic Regression |

| LVQ | Learning Vector Quantization |

| MEEI | Massachusetts Eye and Ear Infirmary |

| MFCC | Mel-Frequency Cepstral Coefficients |

| MLP | Multilayer Perceptron |

| NB | Naive Bayes |

| NGCC | Normalized Gammachirp Cepstral Coefficients |

| NHR | Noise-to-Harmonic Ratio |

| PCA | Principal Component Analysis |

| PdA | Príncipe de Asturias Database |

| PDE | Paraconsistent Discrimination Engineering |

| RAP | Relative Average Perturbation |

| RF | Random Forest |

| SGD | Stochastic Gradient Descent |

| SMOTE | Synthetic Minority Oversampling Technique |

| STD | Standard Deviation |

| SVD | Saarbruecken Voice Database |

| SVM | Support Vector Machine |

| SWT | Stationary Wavelet Transform |

| UPM | Universidad Politécnica de Madrid |

| USPD | São Paulo University Voice Database |

| VTL | Virtual Tract Length |

| ZCR | Zero Crossing Rate |

References

- Rui, Z.; Yan, Z. A survey on biometric authentication: Toward secure and privacy-preserving identification. IEEE Access 2018, 7, 5994–6009. [Google Scholar] [CrossRef]

- Sarkar, A.; Singh, B.K. A review on performance, security and various biometric template protection schemes for biometric authentication systems. Multimed. Tools Appl. 2020, 79, 27721–27776. [Google Scholar] [CrossRef]

- Sharif, M.; Raza, M.; Shah, J.H.; Yasmin, M.; Fernandes, S.L. An overview of biometrics methods. In Handbook of Multimedia Information Security: Techniques and Applications; Srpinger: Berlin/Heidelberg, Germany, 2019; pp. 15–35. [Google Scholar]

- Yudin, O.; Ziubina, R.; Buchyk, S.; Bohuslavska, O.; Teliushchenko, V. Speaker’s Voice Recognition Methods in High-Level Interference Conditions. In Proceedings of the 2019 IEEE 2nd Ukraine Conference on Electrical and Computer Engineering (UKRCON), Lviv, Ukraine, 2–6 July 2019; pp. 851–854. [Google Scholar]

- Chandra, E.; Sunitha, C. A review on Speech and Speaker Authentication System using Voice Signal feature selection and extraction. In Proceedings of the 2009 IEEE International Advance Computing Conference, Patiala, India, 6–7 March 2009; pp. 1341–1346. [Google Scholar]

- Kersta, L.G. Voiceprint identification. J. Acoust. Soc. Am. 1962, 34, 725. [Google Scholar] [CrossRef]

- Senk, C.; Dotzler, F. Biometric authentication as a service for enterprise identity management deployment: A data protection perspective. In Proceedings of the 2011 Sixth International Conference on Availability, Reliability and Security, Vienna, Austria, 22–26 August 2011; pp. 43–50. [Google Scholar]

- Folorunso, C.; Asaolu, O.; Popoola, O. A review of voice-base person identification: State-of-the-art. Covenant J. Eng. Technol. 2019, 3, 38–60. [Google Scholar]

- Khoury, E.; El Shafey, L.; Marcel, S. Spear: An open source toolbox for speaker recognition based on Bob. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 1655–1659. [Google Scholar]

- Memon, Q.; AlKassim, Z.; AlHassan, E.; Omer, M.; Alsiddig, M. Audio-visual biometric authentication for secured access into personal devices. In Proceedings of the 6th International Conference on Bioinformatics and Biomedical Science, 22–24 June 2017; pp. 85–89. [Google Scholar]

- Tait, B.L. Applied Phon Curve Algorithm for Improved Voice Recognition and Authentication. In Global Security, Safety and Sustainability & e-Democracy; Springer: Berlin/Heidelberg, Germany, 2011; pp. 23–30. [Google Scholar]

- Osman, M.A.; Zawawi Talib, A.; Sanusi, Z.A.; Yen, T.S.; Alwi, A.S. An exploratory study on the trend of smartphone usage in a developing country. In Proceedings of the Digital Enterprise and Information Systems: International Conference, DEIS 2011, London, UK, 20–22 July 2011; pp. 387–396. [Google Scholar]

- Wang, S.; Liu, J. Biometrics on mobile phone. In Recent Application in Biometrics; IntechOpen: London, UK, 2011; pp. 3–22. [Google Scholar]

- Lopatovska, I.; Rink, K.; Knight, I.; Raines, K.; Cosenza, K.; Williams, H.; Sorsche, P.; Hirsch, D.; Li, Q.; Martinez, A. Talk to me: Exploring user interactions with the Amazon Alexa. J. Librariansh. Inf. Sci. 2019, 51, 984–997. [Google Scholar] [CrossRef]

- Li, B.; Sainath, T.N.; Narayanan, A.; Caroselli, J.; Bacchiani, M.; Misra, A.; Shafran, I.; Sak, H.; Pundak, G.; Chin, K.K.; et al. Acoustic Modeling for Google Home. In Proceedings of the Interspeech, Stockholm, Sweden, 20–24 August 2017; pp. 399–403. [Google Scholar]

- Assefi, M.; Liu, G.; Wittie, M.P.; Izurieta, C. An experimental evaluation of apple siri and google speech recognition. Proccedings of the 24th International Conference on Software Engineering and Data Engineering, San Diego, CA, USA, 12–14 October 2015; Volume 118. [Google Scholar]

- Kepuska, V.; Bohouta, G. Next-generation of virtual personal assistants (microsoft cortana, apple siri, amazon alexa and google home). In Proceedings of the 2018 IEEE 8th annual computing and communication workshop and conference (CCWC), Las Vegas, NV, USA, 8–10 January 2018; pp. 99–103. [Google Scholar]

- Mor, N.; Simonyan, K.; Blitzer, A. Central voice production and pathophysiology of spasmodic dysphonia. Laryngoscope 2018, 128, 177–183. [Google Scholar] [CrossRef]

- Van Houtte, E.; Van Lierde, K.; Claeys, S. Pathophysiology and treatment of muscle tension dysphonia: A review of the current knowledge. J. Voice 2011, 25, 202–207. [Google Scholar] [CrossRef]

- Jani, R.; Jaana, S.; Laura, L.; Jos, V. Systematic review of the treatment of functional dysphonia and prevention of voice disorders. Otolaryngol. Neck Surg. 2008, 138, 557–565. [Google Scholar] [CrossRef]

- Mohamed, E.E. Voice changes in patients with chronic obstructive pulmonary disease. Egypt. J. Chest Dis. Tuberc. 2014, 63, 561–567. [Google Scholar] [CrossRef]

- Ngo, Q.C.; Motin, M.A.; Pah, N.D.; Drotár, P.; Kempster, P.; Kumar, D. Computerized analysis of speech and voice for Parkinson’s disease: A systematic review. In Computer Methods and Programs in Biomedicine; Elsevier: Amsterdam, The Netherlands, 2022; p. 107133. [Google Scholar]

- Little, M.; McSharry, P.; Hunter, E.; Spielman, J.; Ramig, L. Suitability of dysphonia measurements for telemonitoring of Parkinson’s disease. Nat. Preced. 2008. [Google Scholar] [CrossRef]

- Agbavor, F.; Liang, H. Artificial Intelligence-Enabled End-To-End Detection and Assessment of Alzheimer’s Disease Using Voice. Brain Sci. 2023, 13, 28. [Google Scholar] [CrossRef] [PubMed]

- Hur, K.; Zhou, S.; Bertelsen, C.; Johns, M.M., III. Health disparities among adults with voice problems in the United States. Laryngoscope 2018, 128, 915–920. [Google Scholar] [CrossRef] [PubMed]

- Spina, A.L.; Crespo, A.N. Assessment of grade of dysphonia and correlation with quality of life protocol. J. Voice 2017, 31, 243.e21–243.e26. [Google Scholar] [CrossRef]

- Rohlfing, M.L.; Buckley, D.P.; Piraquive, J.; Stepp, C.E.; Tracy, L.F. Hey Siri: How effective are common voice recognition systems at recognizing dysphonic voices? Laryngoscope 2021, 131, 1599–1607. [Google Scholar] [CrossRef]

- Barche, P.; Gurugubelli, K.; Vuppala, A.K. Towards Automatic Assessment of Voice Disorders: A Clinical Approach. In Proceedings of the INTERSPEECH, Shanghai, China, 25–29 October 2020; pp. 2537–2541. [Google Scholar]

- Al-Hussain, G.; Shuweihdi, F.; Alali, H.; Househ, M.; Abd-Alrazaq, A. The Effectiveness of Supervised Machine Learning in Screening and Diagnosing Voice Disorders: Systematic Review and Meta-analysis. J. Med. Internet Res. 2022, 24, e38472. [Google Scholar] [CrossRef]

- Hegde, S.; Shetty, S.; Rai, S.; Dodderi, T. A Survey on Machine Learning Approaches for Automatic Detection of Voice Disorders. J. Voice 2019, 33, 947.e11–947.e33. [Google Scholar] [CrossRef] [PubMed]

- Shrivas, A.; Deshpande, S.; Gidaye, G.; Nirmal, J.; Ezzine, K.; Frikha, M.; Desai, K.; Shinde, S.; Oza, A.D.; Burduhos-Nergis, D.D.; et al. Employing Energy and Statistical Features for Automatic Diagnosis of Voice Disorders. Diagnostics 2022, 12, 2758. [Google Scholar] [CrossRef]

- Gidaye, G.; Nirmal, J.; Ezzine, K.; Frikha, M. Wavelet sub-band features for voice disorder detection and classification. Multimed. Tools Appl. 2020, 79, 28499–28523. [Google Scholar] [CrossRef]

- Verde, L.; De Pietro, G.; Sannino, G. Voice disorder identification by using machine learning techniques. IEEE Access 2018, 6, 16246–16255. [Google Scholar] [CrossRef]

- Dankovičová, Z.; Sovák, D.; Drotár, P.; Vokorokos, L. Machine Learning Approach to Dysphonia Detection. Appl. Sci. 2018, 8, 1927. [Google Scholar] [CrossRef]

- Reddy, M.K.; Alku, P. A comparison of cepstral features in the detection of pathological voices by varying the input and filterbank of the cepstrum computation. IEEE Access 2021, 9, 135953–135963. [Google Scholar] [CrossRef]

- Souissi, N.; Cherif, A. Dimensionality reduction for voice disorders identification system based on mel frequency cepstral coefficients and support vector machine. In Proceedings of the 2015 7th International Conference on Modelling, Identification and Control (ICMIC), Sousse, Tunisia, 18–20 December 2015; pp. 1–6. [Google Scholar]

- Lee, J.Y. Experimental evaluation of deep learning methods for an intelligent pathological voice detection system using the saarbruecken voice database. Appl. Sci. 2021, 11, 7149. [Google Scholar] [CrossRef]

- Castellana, A.; Carullo, A.; Corbellini, S.; Astolfi, A. Discriminating pathological voice from healthy voice using cepstral peak prominence smoothed distribution in sustained vowel. IEEE Trans. Instrum. Meas. 2018, 67, 646–654. [Google Scholar] [CrossRef]

- Castellana, A.; Carullo, A.; Astolfi, A.; Bisetti, M.S.; Colombini, J. Vocal health assessment by means of Cepstral Peak Prominence Smoothed distribution in continuous speech. In Proceedings of the 2018 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Rome, Italy, 11–13 June 2018; pp. 1–6. [Google Scholar]

- Woldert-Jokisz, B. Saarbruecken Voice Database. 2007. Available online: https://stimmdatenbank.coli.uni-saarland.de/help_en.php4 (accessed on 22 May 2023).

- Verde, L.; De Pietro, G.; Alrashoud, M.; Ghoneim, A.; Al-Mutib, K.N.; Sannino, G. Dysphonia detection index (DDI): A new multi-parametric marker to evaluate voice quality. IEEE Access 2019, 7, 55689–55697. [Google Scholar] [CrossRef]

- Sulica, L. Laryngoscopy, stroboscopy and other tools for the evaluation of voice disorders. Otolaryngol. Clin. N. Am. 2013, 46, 21–30. [Google Scholar] [CrossRef] [PubMed]

- Paul, B.C.; Chen, S.; Sridharan, S.; Fang, Y.; Amin, M.R.; Branski, R.C. Diagnostic accuracy of history, laryngoscopy, and stroboscopy. Laryngoscope 2013, 123, 215–219. [Google Scholar] [CrossRef] [PubMed]

- Akhlaghi, M.; Abedinzadeh, M.; Ahmadi, A.; Heidari, Z. Predicting difficult laryngoscopy and intubation with laryngoscopic exam test: A new method. Acta Med. Iran. 2017, 453–458. [Google Scholar]

- Maccarini, A.R.; Lucchini, E. La valutazione soggettiva ed oggettiva della disfonia. Il protocollo SIFEL. Acta Phoniatr. Lat. 2002, 24, 13–42. [Google Scholar]

- Brown, C.A.; Bacon, S.P. Fundamental frequency and speech intelligibility in background noise. Hear. Res. 2010, 266, 52–59. [Google Scholar] [CrossRef]

- Teixeira, J.P.; Gonçalves, A. Accuracy of jitter and shimmer measurements. Procedia Technol. 2014, 16, 1190–1199. [Google Scholar] [CrossRef]

- Fernandes, J.; Teixeira, F.; Guedes, V.; Junior, A.; Teixeira, J.P. Harmonic to noise ratio measurement-selection of window and length. Procedia Comput. Sci. 2018, 138, 280–285. [Google Scholar] [CrossRef]

- Lee, Y.; Park, H.; Bae, I.; Kim, G. The usefulness of multi voice evaluation: Development of a model for predicting a degree of dysphonia. J. Voice 2023, 37, 142.e5–142.e12.

- Duffy, J.R. Motor Speech Disorders E-Book: Substrates, Differential Diagnosis, and Management; Elsevier Health Sciences: Amsterdam, The Netherlands, 2019.

- Schenck, A.; Hilger, A.I.; Levant, S.; Kim, J.H.; Lester-Smith, R.A.; Larson, C. The effect of pitch and loudness auditory feedback perturbations on vocal quality during sustained phonation. J. Voice 2023, 37, 37–47.

- ElBouazzaoui, L.; Chebbi, S.; Idrissi, N.; Jebara, S.B. Relevant pitch features selection for voice disorders families classification. In Proceedings of the 2022 11th International Symposium on Signal, Image, Video and Communications (ISIVC), El Jadida, Morocco, 18–20 May 2022; pp. 1–7.

- Parsa, V.; Jamieson, D.G. Acoustic discrimination of pathological voice. J. Speech Lang. Hear. Res. 2001, 44, 327–339.

- Teixeira, J.P.; Fernandes, P.O.; Alves, N. Vocal acoustic analysis–classification of dysphonic voices with artificial neural networks. Procedia Comput. Sci. 2017, 121, 19–26.

- Fernandes, J.F.T.; Freitas, D.; Junior, A.C.; Teixeira, J.P. Determination of Harmonic Parameters in Pathological Voices—Efficient Algorithm. Appl. Sci. 2023, 13, 2333.

- Fonseca, E.S.; Guido, R.C.; Junior, S.B.; Dezani, H.; Gati, R.R.; Pereira, D.C.M. Acoustic investigation of speech pathologies based on the discriminative paraconsistent machine (DPM). Biomed. Signal Process. Control 2020, 55, 101615.

- Guido, R.C.; Pedroso, F.; Furlan, A.; Contreras, R.C.; Caobianco, L.G.; Neto, J.S. CWT × DWT × DTWT × SDTWT: Clarifying terminologies and roles of different types of wavelet transforms. Int. J. Wavelets Multiresolut. Inf. Process. 2020, 18, 2030001.

- Agbinya, J.I. Discrete wavelet transform techniques in speech processing. In Proceedings of the Digital Processing Applications (TENCON’96), Perth, Australia, 29–29 November 1996; Volume 2, pp. 514–519.

- Tsanas, A.; Little, M.A.; McSharry, P.E.; Spielman, J.; Ramig, L.O. Novel speech signal processing algorithms for high-accuracy classification of Parkinson’s disease. IEEE Trans. Biomed. Eng. 2012, 59, 1264–1271.

- Fonseca, E.S.; Pereira, D.C.M.; Maschi, L.F.C.; Guido, R.C.; Paulo, K.C.S. Linear prediction and discrete wavelet transform to identify pathology in voice signals. In Proceedings of the 2017 Signal Processing Symposium (SPSympo), Jachranka, Poland, 12–14 September 2017; pp. 1–4.

- Hammami, I.; Salhi, L.; Labidi, S. Voice pathologies classification and detection using EMD-DWT analysis based on higher order statistic features. IRBM 2020, 41, 161–171.

- Saeedi, N.E.; Almasganj, F. Wavelet adaptation for automatic voice disorders sorting. Comput. Biol. Med. 2013, 43, 699–704.

- Kassim, F.N.C.; Vijean, V.; Muthusamy, H.; Abdullah, Z.; Abdullah, R.; Palaniappan, R. DT-CWPT based Tsallis Entropy for Vocal Fold Pathology Detection. In Proceedings of the 2020 International Conference on Data Analytics for Business and Industry: Way Towards a Sustainable Economy (ICDABI), Sakheer, Bahrain, 26–27 October 2020; pp. 1–5.

- Chen, J.; Li, G. Tsallis wavelet entropy and its application in power signal analysis. Entropy 2014, 16, 3009–3025.

- Selesnick, I.W.; Baraniuk, R.G.; Kingsbury, N.C. The dual-tree complex wavelet transform. IEEE Signal Process. Mag. 2005, 22, 123–151.

- Prabakaran, D.; Shyamala, R. A review on performance of voice feature extraction techniques. In Proceedings of the 2019 3rd International Conference on Computing and Communications Technologies (ICCCT), Chennai, India, 21–22 February 2019; pp. 221–231.

- Martinez, C.; Rufiner, H. Acoustic analysis of speech for detection of laryngeal pathologies. In Proceedings of the 22nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (Cat. No. 00CH37143), Chicago, IL, USA, 23–28 July 2000; Volume 3, pp. 2369–2372.

- Abdul, Z.K.; Al-Talabani, A.K. Mel Frequency Cepstral Coefficient and its applications: A Review. IEEE Access 2022, 10, 122136–122158.

- Godino-Llorente, J.I.; Gómez-Vilda, P. Automatic detection of voice impairments by means of short-term cepstral parameters and neural network based detectors. IEEE Trans. Biomed. Eng. 2004, 51, 380–384.

- Arias-Londoño, J.D.; Godino-Llorente, J.I.; Markaki, M.; Stylianou, Y. On combining information from modulation spectra and mel-frequency cepstral coefficients for automatic detection of pathological voices. Logop. Phoniatr. Vocology 2011, 36, 60–69.

- Cordeiro, H.; Fonseca, J.; Guimarães, I.; Meneses, C. Hierarchical classification and system combination for automatically identifying physiological and neuromuscular laryngeal pathologies. J. Voice 2017, 31, 384.e9–384.e14.

- Zakariah, M.; Ajmi Alothaibi, Y.; Guo, Y.; Tran-Trung, K.; Elahi, M.M. An Analytical Study of Speech Pathology Detection Based on MFCC and Deep Neural Networks. Comput. Math. Methods Med. 2022, 2022, 7814952.

- Lee, J.N.; Lee, J.Y. An Efficient SMOTE-Based Deep Learning Model for Voice Pathology Detection. Appl. Sci. 2023, 13, 3571.

- Guido, R.C. A Tutorial on Signal Energy and its Applications. Neurocomputing 2016, 179, 264–282.

- Guido, R.C. A Tutorial-review on Entropy-based Handcrafted Feature Extraction for Information Fusion. Inf. Fusion 2018, 41, 161–175.

- Guido, R.C. ZCR-aided Neurocomputing: A study with applications. Knowl. Based Syst. 2016, 105, 248–269.

- Guido, R.C. Enhancing Teager Energy Operator Based on a Novel and Appealing Concept: Signal mass. J. Frankl. Inst. 2018, 356, 1341–1354.

- Alim, S.A.; Rashid, N.K.A. Some Commonly Used Speech Feature Extraction Algorithms; IntechOpen: London, UK, 2018.

- Contreras, R.C.; Nonato, L.G.; Boaventura, M.; Boaventura, I.A.G.; Coelho, B.G.; Viana, M.S. A New Multi-filter Framework with Statistical Dense SIFT Descriptor for Spoofing Detection in Fingerprint Authentication Systems. In Proceedings of the 20th International Conference on Artificial Intelligence and Soft Computing, Virtual, 21–23 June 2021; pp. 442–455.

- Liu, C.; Yuen, J.; Torralba, A. Sift flow: Dense correspondence across scenes and its applications. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 978–994.

- Contreras, R.C.; Nonato, L.G.; Boaventura, M.; Boaventura, I.A.G.; Santos, F.L.D.; Zanin, R.B.; Viana, M.S. A New Multi-Filter Framework for Texture Image Representation Improvement Using Set of Pattern Descriptors to Fingerprint Liveness Detection. IEEE Access 2022, 10, 117681–117706.

- Todisco, M.; Delgado, H.; Evans, N. Constant Q cepstral coefficients: A spoofing countermeasure for automatic speaker verification. Comput. Speech Lang. 2017, 45, 516–535.

- Ladefoged, P.; Johnson, K. A Course in Phonetics; Cengage Learning: Boston, MA, USA, 2014.

- Teixeira, J.P.; Fernandes, P.O. Jitter, shimmer and HNR classification within gender, tones and vowels in healthy voices. Procedia Technol. 2014, 16, 1228–1237.

- Yang, S.; Zheng, F.; Luo, X.; Cai, S.; Wu, Y.; Liu, K.; Wu, M.; Chen, J.; Krishnan, S. Effective dysphonia detection using feature dimension reduction and kernel density estimation for patients with Parkinson’s disease. PLoS ONE 2014, 9, e88825.

- Puts, D.A.; Apicella, C.L.; Cárdenas, R.A. Masculine voices signal men’s threat potential in forager and industrial societies. Proc. R. Soc. Biol. Sci. 2012, 279, 601–609.

- Pisanski, K.; Rendall, D. The prioritization of voice fundamental frequency or formants in listeners’ assessments of speaker size, masculinity, and attractiveness. J. Acoust. Soc. Am. 2011, 129, 2201–2212.

- Reby, D.; McComb, K. Anatomical constraints generate honesty: Acoustic cues to age and weight in the roars of red deer stags. Anim. Behav. 2003, 65, 519–530.

- Fitch, W.T. Vocal tract length and formant frequency dispersion correlate with body size in rhesus macaques. J. Acoust. Soc. Am. 1997, 102, 1213–1222.

- Sahidullah, M.; Kinnunen, T.; Hanilçi, C. A comparison of features for synthetic speech detection. In Proceedings of the 16th Annual Conference of the International Speech Communication Association (INTERSPEECH 2015), Dresden, Germany, 6–10 September 2015.

- Qi, J.; Wang, D.; Jiang, Y.; Liu, R. Auditory features based on gammatone filters for robust speech recognition. In Proceedings of the 2013 IEEE International Symposium on Circuits and Systems (ISCAS), Taipei, Taiwan, 19–24 April 2009; pp. 305–308.

- Herrera, A.; Del Rio, F. Frequency bark cepstral coefficients extraction for speech analysis by synthesis. J. Acoust. Soc. Am. 2010, 128, 2290.

- Rao, K.S.; Reddy, V.R.; Maity, S. Language Identification Using Spectral and Prosodic Features; Springer: Berlin/Heidelberg, Germany, 2015.

- Zouhir, Y.; Ouni, K. Feature Extraction Method for Improving Speech Recognition in Noisy Environments. J. Comput. Sci. 2016, 12, 56–61.

- Stewart, G.W. On the early history of the singular value decomposition. SIAM Rev. 1993, 35, 551–566.

- Ramachandran, R.; Ravichandran, G.; Raveendran, A. Evaluation of dimensionality reduction techniques for big data. In Proceedings of the 2020 Fourth International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 11–13 March 2020; pp. 226–231.

- Tanwar, S.; Ramani, T.; Tyagi, S. Dimensionality reduction using PCA and SVD in big data: A comparative case study. In Proceedings of the Future Internet Technologies and Trends: First International Conference, ICFITT 2017, Surat, India, 31 August–2 September 2017; pp. 116–125.

- Noble, W.S. What is a support vector machine? Nat. Biotechnol. 2006, 24, 1565–1567.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Wright, R.E. Logistic regression. In Reading and Understanding Multivariate Statistics; Grimm, L.G., Yarnold, P.R., Eds.; American Psychological Association: Washington, DC, USA, 1995; pp. 217–244.

- Handelman, G.S.; Kok, H.K.; Chandra, R.V.; Razavi, A.H.; Huang, S.; Brooks, M.; Lee, M.J.; Asadi, H. Peering into the black box of artificial intelligence: Evaluation metrics of machine learning methods. Am. J. Roentgenol. 2019, 212, 38–43.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Malek, A.; Titeux, H.; Borzi, S.; Nielsen, C.H.; Stoter, F.R.; Bredin, H.; Moerman, K.M. SuperKogito-Spafe: v0.3.2, 2023. Available online: https://doi.org/10.5281/zenodo.7686438 (accessed on 22 May 2023).

- Jadoul, Y.; Thompson, B.; De Boer, B. Introducing parselmouth: A python interface to praat. J. Phon. 2018, 71, 1–15.

- Contreras, R.C. Result Dataset for Our Experimental Analysis on Multi-Cepstral Projection Representation Strategies for Dysphonia Detection, 2023. Available online: https://doi.org/10.5281/zenodo.7897603 (accessed on 25 May 2023).

| Work | Features | Reduction | Classifier | Database | Accuracy (%) | Year |
|---|---|---|---|---|---|---|
| [53] | Jitter, shimmer, metrics | All possible combinations of features are considered | LDA | MEEI | 96.5 | 2001 |
| [69] | MFCC | - | MLP, LVQ | MEEI | 96 | 2004 |
| [70] | MFCC, modulation spectral features | Singular value decomposition | GMM, SVM | MEEI, UPM | 95.89 | 2011 |
| [62] | Energy-based features of GA-adaptive wavelet | - | SVM | MEEI | 100 | 2013 |
| [54] | Jitter, shimmer, HNR | PCA | ANN | SVD | 100 | 2017 |
| [60] | DWT, ILPF | - | - | USPD | 85.94 | 2017 |
| [71] | MFCCs, line spectral frequencies | - | GMM, SVM, discriminant analysis | MEEI | 98.7 | 2017 |
| [32] | SWT-based energy and statistical features | Information gain | SVM, SGD, ANN | tSVD, PdA, AVPD, MEEI | 99.99 | 2020 |
| [56] | Energy, entropy, ZCR | Paraconsistent engineering | Paraconsistent discrimination, SVM | SVD | 95 | 2020 |
| [61] | HOS, EMD-DWT | - | SVM | SVD | 99.26 | 2020 |
| [63] | DTCWT - Tsallis entropy | - | KNN, SVM | MEEI, SVD | 93.32 | 2020 |
| [37] | MFCC, LPCC, HOS | - | CNN, FNN | SVD | 82.69 | 2021 |
| [52] | Pitch-based | Fisher discriminant ratio, stepwise discriminant analysis, scatter measure, ANOVA statistical test, divergence measure, relief F-measure, run filtering | KNN, SVM, RF, NB | DPV | 91.5 | 2022 |
| [31] | SWT-based energy and statistical features | Probability density plots, information gain | SVM, SGD | tSVD, PdA, AVPD, MEEI | 99.99 | 2022 |
| [72] | MFCC, Mel-spectrogram, chroma | - | DNN | SVD | 96.77 | 2022 |
| [55] | Autocorrelation, HNR, NHR | - | - | SVD, USPD | - | 2023 |
| [73] | MFCC, LPCC | - | CNN, FNN | SVD | 98.89 | 2023 |
| Proposed | F0, HNR, jitters and shimmers variations, formant frequencies measures, CQCC, MFCC, iMFCC, BFCC, LFCC, LPCC, GFCC, NGCC | Singular value decomposition | SVM, RF, LR | SVD | 100 | 2023 |

| Set | Mapping Functions |
|---|---|
| , | |
| , | |
| , | |
| , , | |
| , , , |

| Set | CC Extraction Technique |
|---|---|
| CQCC | |
| MFCC | |
| iMFCC | |
| BFCC | |
| LFCC | |
| LPCC | |
| GFCC | |
| NGCC | |
| CQCC, MFCC | |
| CQCC, BFCC | |
| CQCC, LFCC | |
| MFCC, BFCC | |
| MFCC, LFCC | |
| CQCC, MFCC, LFCC | |
| CQCC, MFCC, BFCC |

| Metric | Gender | Vowel \ Pitch | High | Low | Low-High-Low | Normal |
|---|---|---|---|---|---|---|
| ACC (%) | Both | a | 91.23; nonceps; Robust; 23; LR; SMOTE | 92.98; nonceps; Standard; 31; LR; - | 96.49; nonceps; Standard; 31; LR; SMOTE | 94.74; nonceps; Standard; 31; LR; - |
| ACC (%) | Both | i | 85.96; MFCC, nonceps; Robust; 91; LR; -; STD | 91.23; NGCC, nonceps; Robust; 91; LR; SMOTE; STD | 85.96; BFCC; Standard; 120; SVM; SMOTE; SUM, STD | 87.72; GFCC, nonceps; Robust; 91; LR; -; STD |
| ACC (%) | Both | u | 85.96; LFCC, nonceps; Robust; 91; RF; SMOTE; PCA | 92.98; nonceps; Robust; 31; LR; SMOTE | 87.72; GFCC, nonceps; MinMax; 46; LR; -; STD | 89.47; iMFCC, nonceps; Standard; 91; LR; SMOTE; PCA |
| ACC (%) | Female | a | 97.67; iMFCC, nonceps; MinMax; 68; LR; -; PCA | 100.0; nonceps; Standard; 23; LR; SMOTE | 100.0; nonceps; Robust; 23; LR; SMOTE | 100.0; nonceps; Standard; 23; LR; SMOTE |
| ACC (%) | Female | i | 100.0; iMFCC, nonceps; Standard; 68; LR; SMOTE; SUM | 100.0; nonceps; MinMax; 23; SVM; SMOTE | 100.0; CQCC, nonceps; Robust; 68; LR; -; SUM | 100.0; LFCC, nonceps; Robust; 68; LR; SMOTE; SUM |
| ACC (%) | Female | u | 97.67; nonceps; Standard; 23; LR; - | 95.35; nonceps; MinMax; 23; SVM; - | 100.0; iMFCC, nonceps; MinMax; 68; LR; SMOTE; PCA | 100.0; nonceps; Standard; 23; LR; SMOTE |
| ACC (%) | Male | a | 100.0; LFCC, nonceps; MinMax; 15; SVM; SMOTE; SUM, STD | 100.0; nonceps; Standard; 31; LR; SMOTE | 100.0; nonceps; Robust; 31; LR; - | 100.0; nonceps; Standard; 16; LR; SMOTE |
| ACC (%) | Male | i | 100.0; CQCC, MFCC, BFCC; Robust; 720; LR; SMOTE; PCA, SUM, STD, SKEW | 100.0; MFCC, BFCC; Robust; 120; RF; SMOTE; STD | 100.0; CQCC, LFCC; Robust; 240; LR; SMOTE; STD, SKEW | 100.0; CQCC, BFCC; Unscaled; 180; RF; SMOTE; PCA, SUM |
| ACC (%) | Male | u | 100.0; CQCC, LFCC; Robust; 24; SVM; SMOTE; SUM, STD | 100.0; NGCC, nonceps; Unscaled; 46; RF; SMOTE; SKEW | 100.0; iMFCC; Robust; 30; LR; SMOTE; PCA | 100.0; CQCC, MFCC; Standard; 480; LR; SMOTE; PCA, SUM, STD, SKEW |
| KFCV | Both | a | 0.90; CQCC, BFCC; MinMax; 240; RF; SMOTE; STD, SKEW | 0.90; CQCC, LFCC; Standard; 12; RF; SMOTE; SKEW | 0.92; CQCC, MFCC, nonceps; Unscaled; 136; LR; SMOTE; PCA, SUM | 0.89; CQCC, MFCC, LFCC; Unscaled; 135; SVM; SMOTE; SKEW |
| KFCV | Both | i | 0.91; CQCC, MFCC, BFCC; Standard; 180; SVM; SMOTE; SKEW | 0.90; CQCC, MFCC, BFCC; Robust; 36; RF; SMOTE; PCA, SUM | 0.90; CQCC, BFCC; Robust; 36; RF; SMOTE; SUM, STD, SKEW | 0.91; CQCC, MFCC; Standard; 180; SVM; SMOTE; STD, SKEW |
| KFCV | Both | u | 0.90; CQCC, MFCC, BFCC; Unscaled; 90; SVM; SMOTE; SKEW | 0.91; CQCC, BFCC; Robust; 48; SVM; SMOTE; PCA, SUM, STD, SKEW | 0.90; CQCC, MFCC, LFCC; Unscaled; 180; RF; SMOTE; PCA, SUM | 0.90; CQCC, MFCC; Unscaled; 270; SVM; SMOTE; SUM, STD, SKEW |
| KFCV | Female | a | 0.96; CQCC, MFCC, LFCC; Robust; 270; SVM; SMOTE; STD, SKEW | 0.95; MFCC, BFCC, nonceps; Robust; 136; RF; SMOTE; PCA, SUM | 0.96; CQCC, MFCC, LFCC; Robust; 180; SVM; SMOTE; SKEW | 0.95; CQCC, MFCC; Robust; 120; SVM; SMOTE; SKEW |
| KFCV | Female | i | 0.97; CQCC, MFCC, BFCC; MinMax; 180; SVM; SMOTE; SKEW | 0.96; CQCC, MFCC, BFCC; Robust; 360; SVM; SMOTE; PCA, SUM, STD, SKEW | 0.96; CQCC, MFCC, LFCC; MinMax; 180; SVM; SMOTE; SKEW | 0.97; CQCC, MFCC; MinMax; 240; SVM; SMOTE; STD, SKEW |
| KFCV | Female | u | 0.95; CQCC, MFCC, BFCC; Standard; 360; SVM; SMOTE; PCA, SUM, STD, SKEW | 0.96; CQCC, BFCC; Standard; 60; SVM; SMOTE; SKEW | 0.96; CQCC, BFCC; Robust; 12; SVM; SMOTE; SKEW | 0.95; CQCC, MFCC, BFCC; Unscaled; 18; RF; SMOTE; SUM |
| KFCV | Male | a | 0.85; iMFCC, nonceps; Unscaled; 68; RF; -; PCA | 0.92; MFCC, LFCC, nonceps; Standard; 76; LR; SMOTE; STD | 0.95; LFCC, nonceps; Standard; 15; RF; SMOTE; STD, SKEW | 0.88; MFCC, BFCC, nonceps; Robust; 256; LR; SMOTE; PCA, SUM, STD, SKEW |
| KFCV | Male | i | 0.92; CQCC, BFCC, nonceps; Unscaled; 136; RF; -; SUM, STD | 0.88; MFCC, BFCC; Unscaled; 240; RF; SMOTE; PCA, SUM | 0.94; CQCC, MFCC, BFCC, nonceps; Unscaled; 39; RF; SMOTE; PCA, SUM | 0.84; CQCC, MFCC, LFCC, nonceps; Robust; 563; RF; SMOTE; PCA, SUM, STD, SKEW |
| KFCV | Male | u | 0.83; CQCC, nonceps; Standard; 91; RF; SMOTE; SUM | 0.89; MFCC, nonceps; Robust; 9; LR; SMOTE; SUM | 0.92; CQCC, MFCC, nonceps; Standard; 271; RF; SMOTE; SUM, STD | 0.86; CQCC, MFCC, BFCC; Unscaled; 270; RF; SMOTE; SUM, STD |
| F1 | Both | a | 0.94; nonceps; Robust; 23; LR; SMOTE | 0.95; nonceps; Standard; 31; LR; SMOTE | 0.98; nonceps; Standard; 31; LR; SMOTE | 0.96; nonceps; Standard; 31; LR; - |
| F1 | Both | i | 0.91; MFCC, nonceps; Robust; 91; LR; -; STD | 0.94; NGCC, nonceps; Robust; 91; LR; SMOTE; STD | 0.90; BFCC; Standard; 120; SVM; SMOTE; SUM, STD | 0.92; GFCC, nonceps; Robust; 91; LR; -; STD |
| F1 | Both | u | 0.91; LFCC, nonceps; Robust; 91; RF; SMOTE; PCA | 0.95; nonceps; Robust; 31; LR; SMOTE | 0.92; GFCC, nonceps; MinMax; 46; LR; -; STD | 0.93; iMFCC, nonceps; Standard; 91; LR; SMOTE; PCA |
| F1 | Female | a | 0.98; iMFCC, nonceps; MinMax; 68; LR; -; PCA | 1.00; nonceps; Standard; 23; LR; SMOTE | 1.00; nonceps; Robust; 23; LR; SMOTE | 1.00; nonceps; Standard; 23; LR; SMOTE |
| F1 | Female | i | 1.00; iMFCC, nonceps; Standard; 68; LR; SMOTE; SUM | 1.00; nonceps; MinMax; 23; SVM; SMOTE | 1.00; CQCC, nonceps; Robust; 68; LR; -; SUM | 1.00; LFCC, nonceps; Robust; 68; LR; SMOTE; SUM |
| F1 | Female | u | 0.98; nonceps; Standard; 23; LR; - | 0.97; nonceps; MinMax; 23; SVM; - | 1.00; iMFCC, nonceps; MinMax; 68; LR; SMOTE; PCA | 1.00; nonceps; Standard; 23; LR; SMOTE |
| F1 | Male | a | 1.00; LFCC, nonceps; MinMax; 15; SVM; SMOTE; SUM, STD | 1.00; nonceps; Standard; 31; LR; SMOTE | 1.00; nonceps; Robust; 31; LR; - | 1.00; nonceps; Standard; 16; LR; SMOTE |
| F1 | Male | i | 1.00; CQCC, MFCC, BFCC; Robust; 720; LR; SMOTE; PCA, SUM, STD, SKEW | 1.00; MFCC, BFCC; Robust; 120; RF; SMOTE; STD | 1.00; CQCC; Robust; 120; LR; SMOTE; STD, SKEW | 1.00; CQCC, BFCC; Unscaled; 180; RF; SMOTE; PCA, SUM |
| F1 | Male | u | 1.00; CQCC, LFCC; Robust; 24; SVM; SMOTE; SUM, STD | 1.00; NGCC, nonceps; Unscaled; 46; RF; SMOTE; SKEW | 1.00; iMFCC; Robust; 30; LR; SMOTE; PCA | 1.00; CQCC, MFCC; Standard; 480; LR; SMOTE; PCA, SUM, STD, SKEW |
| EER (%) | Both | a | 7.94; nonceps; Robust; 23; LR; SMOTE | 6.69; nonceps; Standard; 31; LR; SMOTE | 5.88; nonceps; Robust; 31; LR; SMOTE | 7.13; nonceps; Standard; 31; LR; - |
| EER (%) | Both | i | 18.82; BFCC; MinMax; 120; SVM; SMOTE; SUM, STD | 13.82; GFCC, nonceps; Robust; 91; LR; SMOTE; SUM | 14.19; LPCC; MinMax; 60; SVM; -; PCA | 18.82; LPCC, nonceps; Robust; 91; LR; SMOTE; PCA |
| EER (%) | Both | u | 19.26; BFCC; Standard; 180; LR; SMOTE; SUM, STD, SKEW | 11.76; nonceps; Robust; 31; LR; SMOTE | 16.76; CQCC, BFCC, nonceps; MinMax; 51; LR; SMOTE; PCA, SUM, STD, SKEW | 13.82; CQCC, nonceps; MinMax; 46; LR; SMOTE; PCA |
| EER (%) | Female | a | 1.56; nonceps; Robust; 23; LR; SMOTE | 0.00; nonceps; MinMax; 23; LR; SMOTE | 0.00; nonceps; Robust; 23; LR; SMOTE | 0.00; nonceps; MinMax; 23; LR; SMOTE |
| EER (%) | Female | i | 0.00; MFCC, nonceps; Standard; 68; LR; SMOTE; SUM | 0.00; nonceps; MinMax; 23; SVM; SMOTE | 0.00; CQCC, nonceps; Robust; 68; LR; -; SUM | 0.00; LFCC, nonceps; Robust; 68; LR; SMOTE; SUM |
| EER (%) | Female | u | 4.55; nonceps; Robust; 23; LR; - | 6.11; GFCC, nonceps; Robust; 68; LR; SMOTE; STD | 0.00; iMFCC, nonceps; MinMax; 68; LR; SMOTE; PCA | 0.00; nonceps; Robust; 23; LR; SMOTE |
| EER (%) | Male | a | 0.00; LFCC, nonceps; MinMax; 15; SVM; SMOTE; SUM, STD | 0.00; nonceps; Robust; 16; LR; SMOTE | 0.00; nonceps; Robust; 31; LR; - | 0.00; nonceps; Standard; 16; LR; SMOTE |
| EER (%) | Male | i | 0.00; CQCC, MFCC, BFCC; Robust; 720; LR; SMOTE; PCA, SUM, STD, SKEW | 0.00; MFCC, BFCC; Robust; 120; RF; SMOTE; STD | 0.00; CQCC, LFCC; Standard; 240; LR; SMOTE; STD, SKEW | 0.00; CQCC, BFCC; Unscaled; 180; RF; SMOTE; PCA, SUM |
| EER (%) | Male | u | 0.00; CQCC, LFCC; Robust; 24; SVM; SMOTE; SUM, STD | 0.00; NGCC, nonceps; Unscaled; 46; RF; SMOTE; SKEW | 0.00; iMFCC; Robust; 30; LR; SMOTE; PCA | 0.00; CQCC, MFCC; Standard; 480; LR; SMOTE; PCA, SUM, STD, SKEW |
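
Each cell of the results table packs a score together with the configuration that produced it. To read such cells programmatically, a minimal Python sketch is given below. The helper `parse_cell` and the field names (`score`, `features`, `scaler`, `dimension`, `classifier`, `oversampling`, `projections`) are assumptions inferred from the cell contents, not names defined by the authors; in particular, reading the integer field as the dimension of the final feature vector is an interpretation. The sketch accepts either `|` or `;` as the field delimiter.

```python
import re
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class CellConfig:
    """One results-table cell; all field names are hypothetical labels."""
    score: float                  # metric value (ACC, KFCV, F1 or EER)
    features: Tuple[str, ...]     # e.g. ("MFCC", "nonceps")
    scaler: str                   # "Robust", "Standard", "MinMax" or "Unscaled"
    dimension: int                # assumed: size of the final feature vector
    classifier: str               # "LR", "SVM" or "RF"
    oversampling: Optional[str]   # "SMOTE", or None when the cell shows "-"
    projections: Tuple[str, ...]  # e.g. ("PCA", "SUM"); empty when not listed


def _split_list(text: str) -> Tuple[str, ...]:
    """Split a comma-separated field into a tuple of trimmed, non-empty items."""
    return tuple(item.strip() for item in text.split(",") if item.strip())


def parse_cell(cell: str) -> CellConfig:
    """Parse one cell with 6 fields, or 7 when projections are listed."""
    parts = [p.strip() for p in re.split(r"[|;]", cell.strip())]
    if len(parts) not in (6, 7):
        raise ValueError(f"expected 6 or 7 fields, got {len(parts)}: {cell!r}")
    score, features, scaler, dimension, classifier, oversampling = parts[:6]
    projections = parts[6] if len(parts) == 7 else ""
    return CellConfig(
        score=float(score),
        features=_split_list(features),
        scaler=scaler,
        dimension=int(dimension),
        classifier=classifier,
        oversampling=None if oversampling == "-" else oversampling,
        projections=_split_list(projections),
    )


# Usage: a cell taken from the ACC block (Both genders, vowel i, high pitch).
example = parse_cell("85.96|MFCC, nonceps|Robust|91|LR|-|STD")
print(example)
```

Keeping the parsed record immutable (`frozen=True`) makes it easy to use configurations as dictionary keys, for instance when counting how often a given scaler or classifier appears among the best results.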

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Contreras, R.C.; Viana, M.S.; Fonseca, E.S.; dos Santos, F.L.; Zanin, R.B.; Guido, R.C. An Experimental Analysis on Multicepstral Projection Representation Strategies for Dysphonia Detection. Sensors 2023, 23, 5196. https://doi.org/10.3390/s23115196
