A Serious Game for the Assessment of Visuomotor Adaptation Capabilities during Locomotion Tasks Employing an Embodied Avatar in Virtual Reality

Abstract

1. Introduction

2. Materials and Methods

2.1. The Proposed Framework

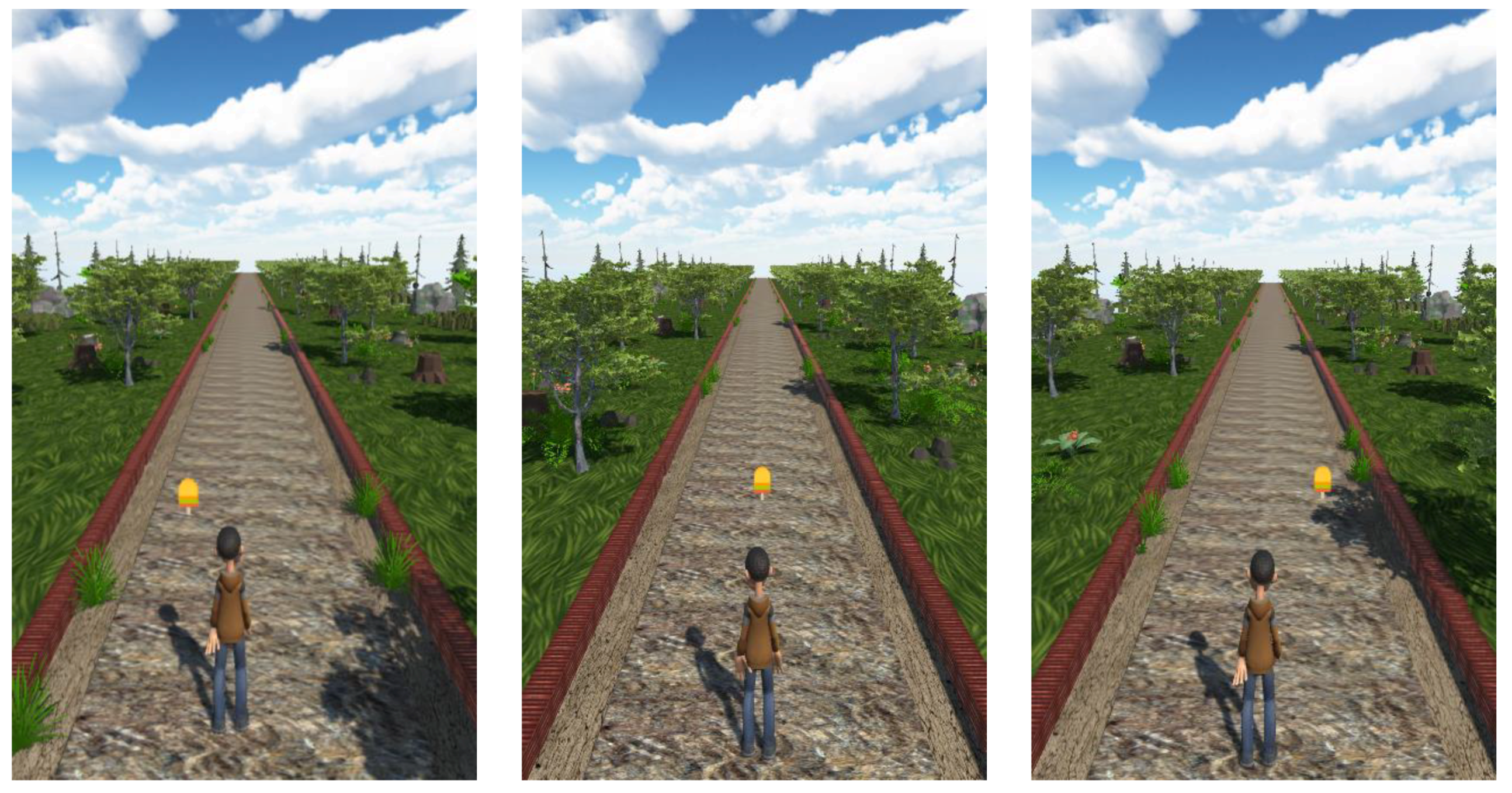

- The subject has to control the position of an embodied avatar in a custom virtual environment during a locomotion task;

- The subject has to collect objects that are placed pseudo-randomly on the virtual ground along a path; the avatar collects an object by hitting it;

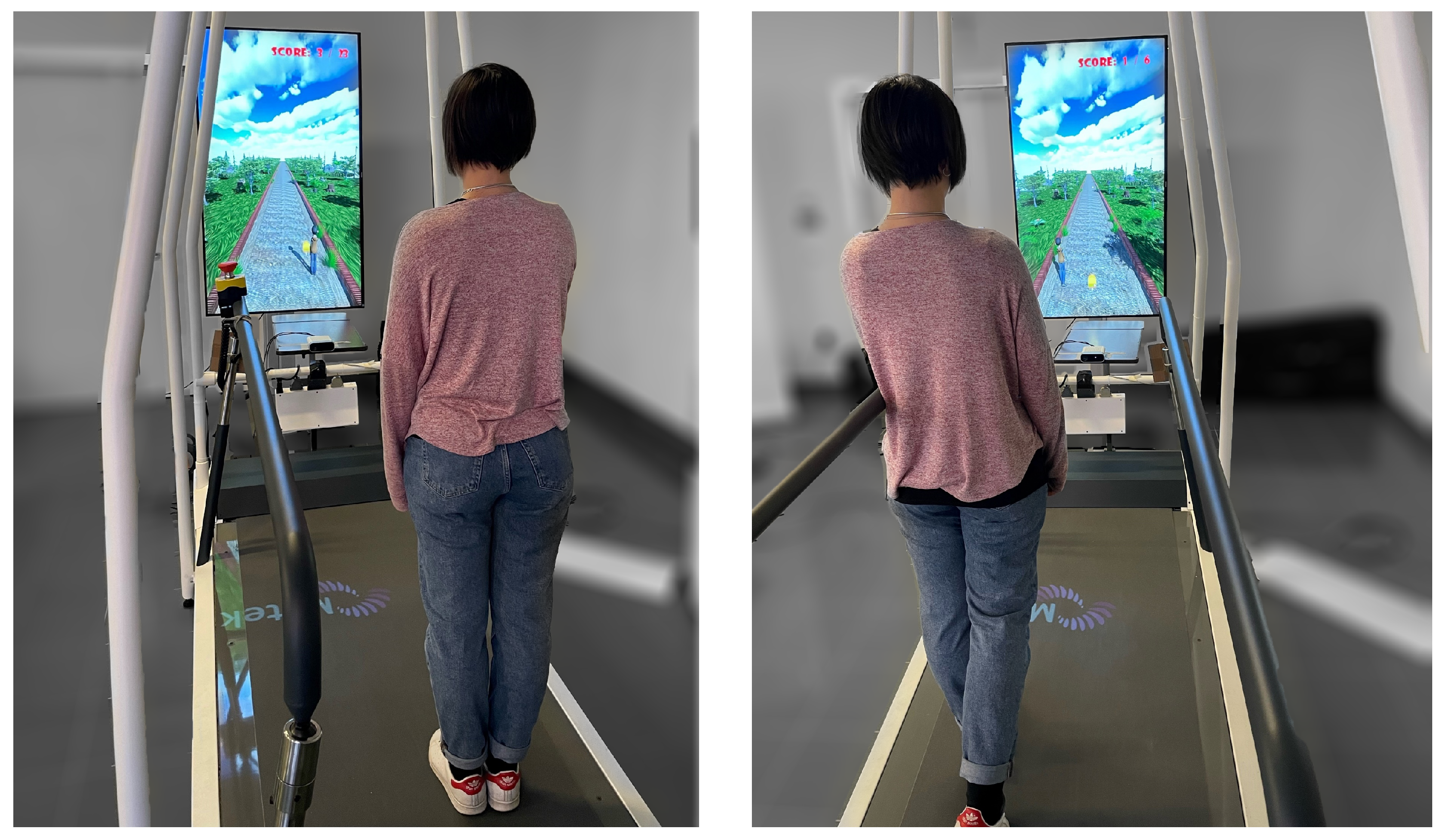

- The subject has to walk on a treadmill while collecting the virtual objects, and the treadmill must allow mediolateral (ML) movement of the entire body in order to pick up objects that are positioned on the sides of the path;

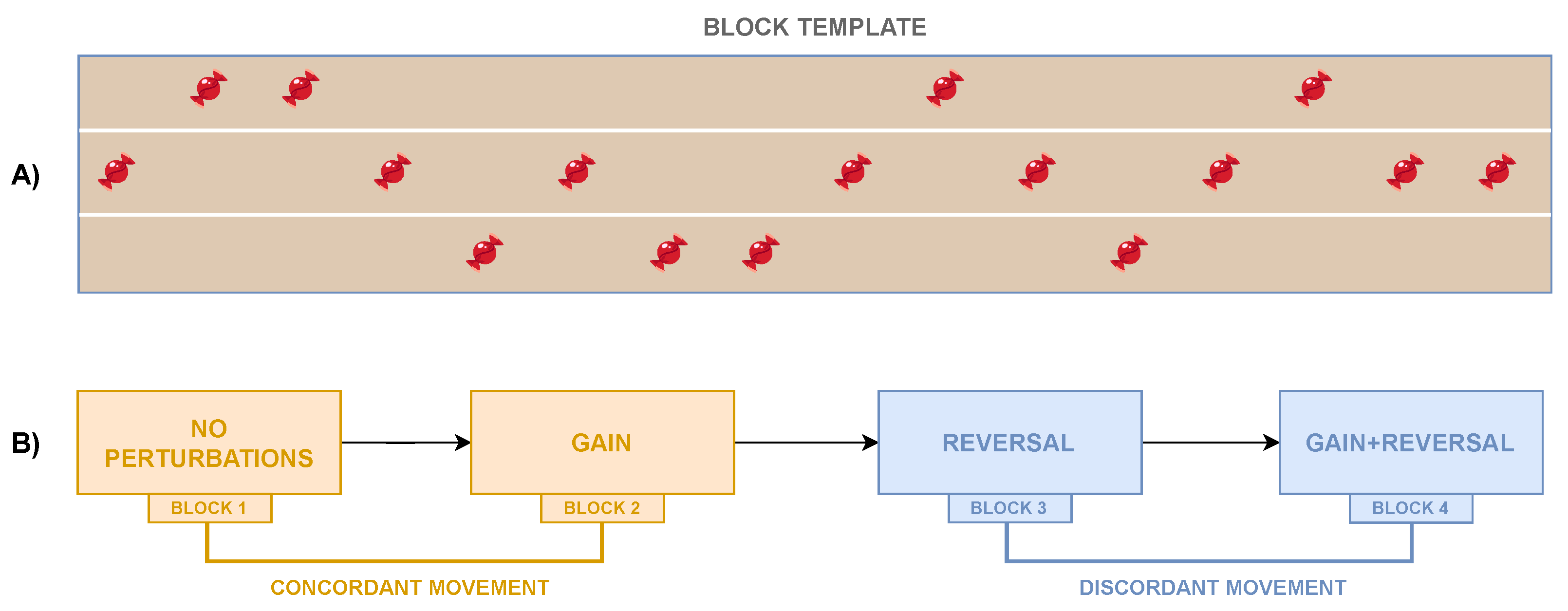

- The subject must also experience two kinds of perturbation that alter the mapping between the subject’s real ML position and the avatar’s ML position in the virtual environment (VE), i.e., GAIN and REVERSAL, which amplify and reverse the avatar’s movement, respectively;

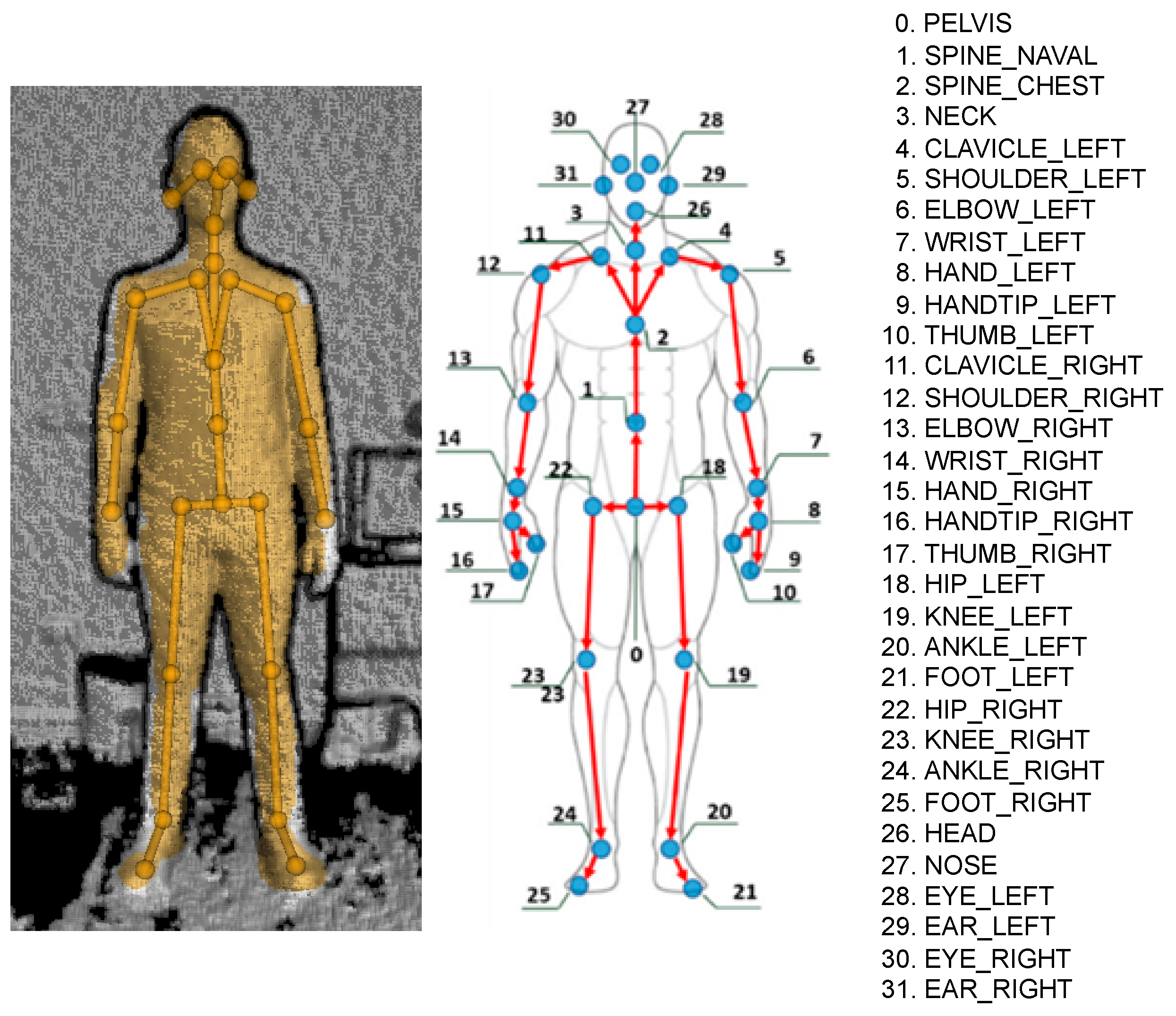

- A marker-less solution for tracking the subject’s skeleton is preferred in order to speed up the experimental setup phase;

- Motivational soundtracks should be used.

2.1.1. The Subject Skeleton Tracking System

2.1.2. The Treadmill

2.1.3. The Serious Game

- No Perturbations: the mapping is not altered, so the avatar moves as the subject does: when the subject reaches the side of the treadmill, the avatar is on the corresponding side of the virtual road;

- Gain: the mapping is altered: the avatar moves in the same direction as the subject, but its movements along the ML axis are amplified by a specific gain factor;

- Reversal: the mapping is altered: the avatar moves in the direction opposite to the subject’s movement (i.e., when the subject moves to the right, the avatar moves to the left, and vice versa), so that when the subject reaches the left side of the treadmill, the avatar is on the right side of the virtual road, and vice versa;

- Reversal + Gain: the mapping is altered: the two perturbations above are applied simultaneously, thus amplifying and reversing the avatar’s position at the same time.
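The four mapping conditions can be illustrated with a minimal sketch. It assumes that the subject’s and the avatar’s ML positions are expressed as offsets from the treadmill and road centers, respectively, and that the gain factor is a free parameter. The names `Mapping` and `avatar_ml_position`, the default gain of 2.0, and the absence of any clamping at the road edges are assumptions made for illustration, not details taken from the game.

```python
from enum import Enum


class Mapping(Enum):
    """The four mapping conditions listed above (names are illustrative)."""
    NO_PERTURBATION = "no_perturbation"
    GAIN = "gain"
    REVERSAL = "reversal"
    REVERSAL_GAIN = "reversal_gain"


def avatar_ml_position(subject_ml: float, mapping: Mapping, gain: float = 2.0) -> float:
    """Map the subject's ML offset to the avatar's ML offset.

    subject_ml: offset from the treadmill center (positive = right).
    gain: hypothetical amplification factor used by the Gain conditions.
    """
    # Reversal flips the sign of the movement; Gain scales its amplitude.
    sign = -1.0 if mapping in (Mapping.REVERSAL, Mapping.REVERSAL_GAIN) else 1.0
    scale = gain if mapping in (Mapping.GAIN, Mapping.REVERSAL_GAIN) else 1.0
    return sign * scale * subject_ml
```

For example, with a gain of 2.0, a subject offset of 0.5 to the right would place the avatar at 0.5, 1.0, -0.5, and -1.0 under the four conditions, in the order listed above.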

2.1.4. Initial Calibration Procedures

- Preferred walking speed (PWS): the treadmill speed is kept fixed at the PWS during the entire experimental session, and the same speed is used to translate the avatar’s center of mass along the locomotion direction; the PWS is found experimentally, with the help of the clinical staff, by gradually increasing the belt speed until the participant reports walking at their PWS [70,71];

- Mean step length: this parameter is used to define the distance between two subsequent objects to collect; in this specific work, the step length was automatically extracted by the C-Mill software (CueFors 2.2.3, Motek Medical B.V., Vleugelboot 14, 3991 CL Houten, Netherlands), although any other solution based on skeleton data processing could be used;

- Range of motion (RoM) of the subject’s pelvis on the treadmill along the mediolateral axis: this measure is used to map the subject’s real RoM onto the avatar’s RoM.
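One possible way to use the three calibration parameters is sketched below. It assumes a linear rescaling of the pelvis RoM onto the half-width of the virtual road, object spacing expressed as a whole number of mean steps, and forward translation at constant PWS. The function names and the parameters `road_half_width` and `steps_per_object` are hypothetical; the actual rules used by the game are not stated in this section.

```python
def to_avatar_ml(pelvis_ml: float, rom_min: float, rom_max: float,
                 road_half_width: float) -> float:
    """Linearly rescale a pelvis ML position within [rom_min, rom_max]
    to an avatar ML position within [-road_half_width, +road_half_width]."""
    if rom_max <= rom_min:
        raise ValueError("rom_max must be greater than rom_min")
    center = (rom_min + rom_max) / 2.0
    half_range = (rom_max - rom_min) / 2.0
    return (pelvis_ml - center) / half_range * road_half_width


def object_distances(n_objects: int, mean_step_length: float,
                     steps_per_object: int) -> list[float]:
    """Distances along the path of n_objects equally spaced objects.

    The spacing is assumed to be steps_per_object mean steps (hypothetical rule)."""
    spacing = mean_step_length * steps_per_object
    return [spacing * (i + 1) for i in range(n_objects)]


def avatar_forward_position(pws: float, elapsed_time: float) -> float:
    """Distance travelled by the avatar's center of mass at constant PWS."""
    return pws * elapsed_time
```

With a calibrated RoM of -0.3 m to 0.3 m and a road half-width of 2.0 units, a pelvis position at the right edge of the RoM would map to the right edge of the road.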

2.1.5. Performance Metrics

- Normalized Path Length (NPL): the length of the actual path divided by the length of the minimum length path (MLP), which is the straight line joining two 2D points, i.e., the start and end positions;

- Normalized Area (NA): the area between the actual path and the MLP divided by the MLP length;

- Initial Angle Error (IAE): the angle between the MLP and the segment joining the avatar’s initial position with the point of the actual path that corresponds to the first peak of the distance from the MLP.
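The three metrics can be computed from a sampled 2D path as in the following sketch. It assumes the path is a list of (x, y) points whose first and last samples are the start and end positions, approximates the area with a trapezoidal rule on the unsigned distance from the MLP, and takes the first local maximum of that distance as the "first peak". These numerical choices, and all function names, are assumptions for illustration rather than the procedure used in this work.

```python
import math


def _project_on_mlp(path):
    """Return the MLP length plus, for each sample, its coordinate along
    the MLP (s) and its signed perpendicular distance from the MLP (d)."""
    (x0, y0), (x1, y1) = path[0], path[-1]
    mlp_len = math.hypot(x1 - x0, y1 - y0)
    if mlp_len == 0.0:
        raise ValueError("start and end positions coincide")
    ux, uy = (x1 - x0) / mlp_len, (y1 - y0) / mlp_len
    s = [(x - x0) * ux + (y - y0) * uy for x, y in path]
    d = [(y - y0) * ux - (x - x0) * uy for x, y in path]
    return mlp_len, s, d


def normalized_path_length(path):
    """NPL: actual path length divided by the MLP length."""
    mlp_len, _, _ = _project_on_mlp(path)
    length = sum(math.hypot(bx - ax, by - ay)
                 for (ax, ay), (bx, by) in zip(path, path[1:]))
    return length / mlp_len


def normalized_area(path):
    """NA: area between the path and the MLP (trapezoidal approximation
    on the unsigned distance), divided by the MLP length."""
    mlp_len, s, d = _project_on_mlp(path)
    area = sum(0.5 * (abs(d[i]) + abs(d[i + 1])) * abs(s[i + 1] - s[i])
               for i in range(len(path) - 1))
    return area / mlp_len


def initial_angle_error(path):
    """IAE in degrees: angle between the MLP and the segment from the start
    to the first local maximum of the distance from the MLP (0 if none)."""
    _, s, d = _project_on_mlp(path)
    dist = [abs(v) for v in d]
    for i in range(1, len(dist) - 1):
        if dist[i] > dist[i - 1] and dist[i] >= dist[i + 1]:
            return math.degrees(math.atan2(dist[i], s[i]))
    return 0.0
```

For a triangular path through (0, 0), (1, 1), and (2, 0), the MLP has length 2, so the sketch gives an NPL of √2, an NA of 0.5, and an IAE of 45°; a perfectly straight path gives 1, 0, and 0, respectively.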

2.2. Framework Test

2.2.1. Participants

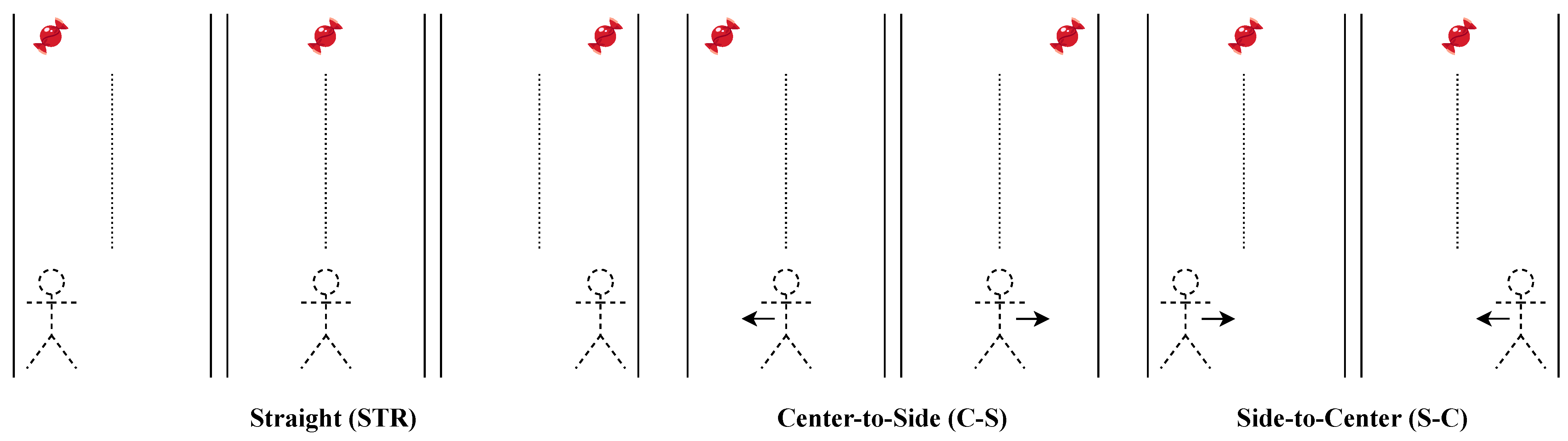

2.2.2. Experimental Protocol

2.2.3. Comparisons and Statistical Analysis

- Comparison 1—among mapping conditions;

- Comparison 2—among mapping conditions grouped by direction;

- Comparison 3—among directions grouped by mapping condition.
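The difference between the three comparisons lies in how the trials are partitioned before testing. The sketch below only shows that partitioning, under the assumption that each trial is stored as a record with a mapping condition, a direction, and a metric value. The helper `comparison_families`, the field names, the "LEFT" direction label, and the numbers in the example are made-up placeholders, not data or code from the study, and no statistical test is implied.

```python
from collections import defaultdict


def comparison_families(trials, compare, metric, within=None):
    """Partition metric values for a comparison.

    compare: field whose levels are compared against each other.
    within:  optional field used to group the trials first; one family of
             comparisons is built for each of its levels.
    Returns {within_level: {compare_level: [metric values]}}.
    """
    families = defaultdict(lambda: defaultdict(list))
    for trial in trials:
        family_key = trial[within] if within is not None else "all"
        families[family_key][trial[compare]].append(trial[metric])
    return {key: dict(groups) for key, groups in families.items()}


# Placeholder records with invented values, for illustration only.
trials = [
    {"mapping": "Gain", "direction": "STR", "NPL": 1.1},
    {"mapping": "Reversal", "direction": "STR", "NPL": 1.4},
    {"mapping": "Gain", "direction": "LEFT", "NPL": 1.3},
    {"mapping": "Reversal", "direction": "LEFT", "NPL": 1.9},
]

# Comparison 1: among mapping conditions, all directions pooled.
comparison_1 = comparison_families(trials, compare="mapping", metric="NPL")
# Comparison 2: among mapping conditions, separately for each direction.
comparison_2 = comparison_families(trials, compare="mapping", metric="NPL", within="direction")
# Comparison 3: among directions, separately for each mapping condition.
comparison_3 = comparison_families(trials, compare="direction", metric="NPL", within="mapping")
```

Comparisons 2 and 3 contain the same cells but organize them into different families, which is what determines the groups that are tested against each other.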

3. Results

3.1. Feasibility in a Clinical Context

3.2. Quantitative Metric Validation

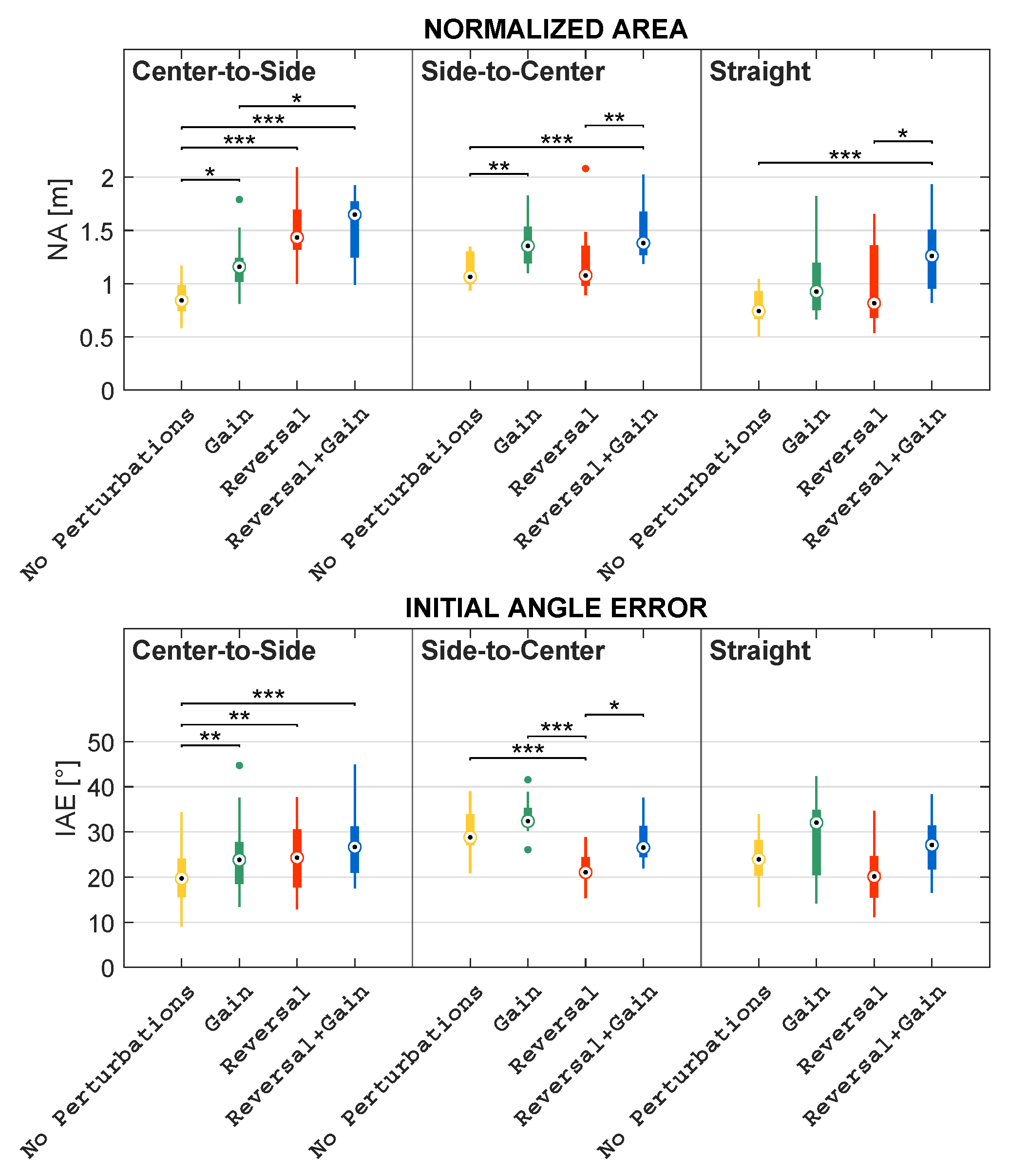

3.2.1. Differences among Mapping Conditions

3.2.2. Differences among Mapping Conditions Grouped by Directions

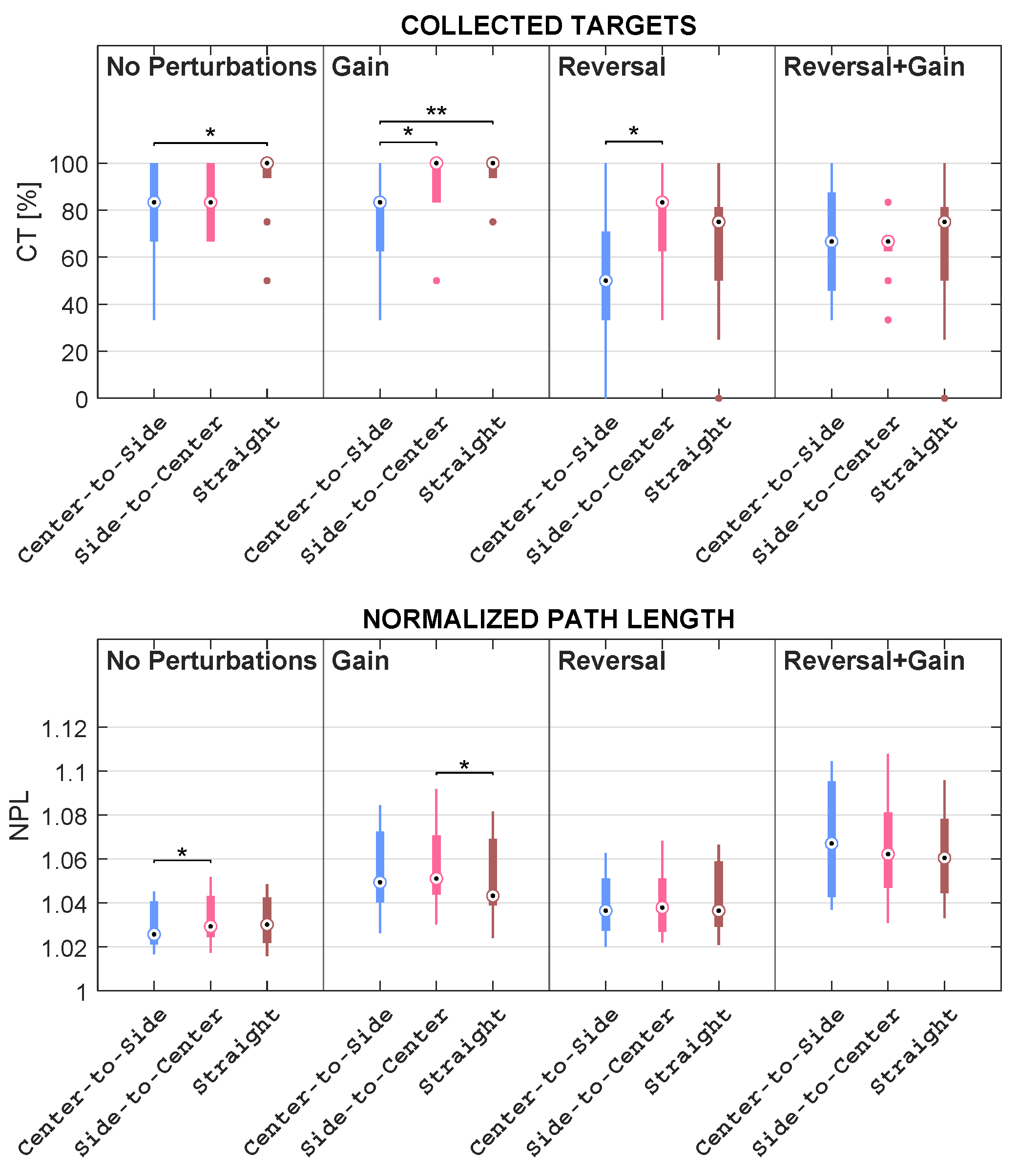

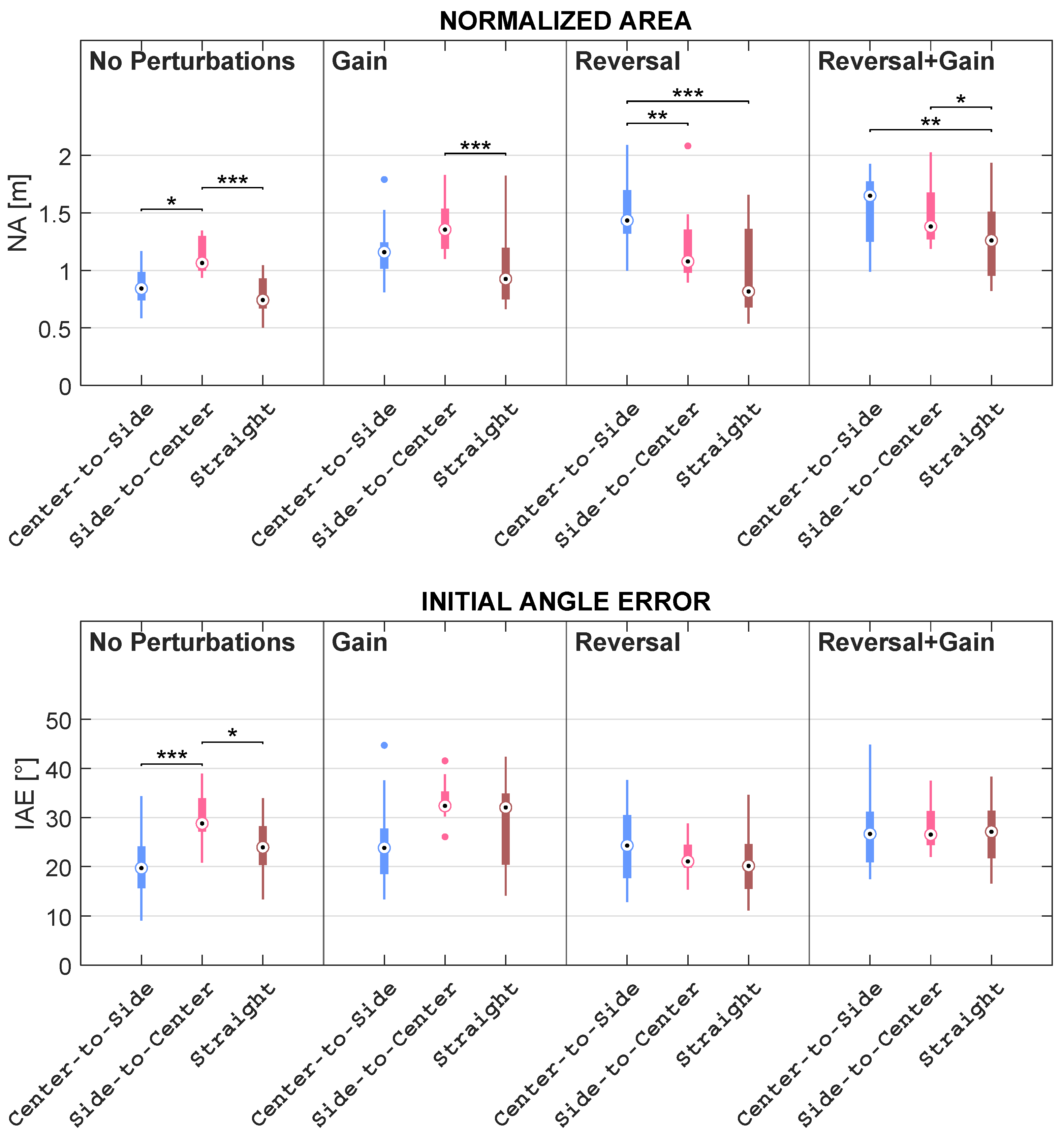

3.2.3. Differences among Directions Grouped by Mapping Conditions

4. Discussion

- Comparison 1—among mapping conditions;

- Comparison 2—among mapping conditions grouped by direction;

- Comparison 3—among directions grouped by mapping condition.

- Reversal is more challenging than Gain, and Reversal + Gain is more difficult than either Reversal or Gain alone;

- The targets positioned along the STR direction are easy to collect, since no movement along the ML axis is required if the previous object has been collected.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- The effects of brain lateralization on motor control and adaptation. J. Mot. Behav. 2012, 44, 455–469.

- Ferrel, C.; Bard, C.; Fleury, M. Coordination in childhood: Modifications of visuomotor representations in 6- to 11-year-old children. Exp. Brain Res. 2001, 138, 313–321.

- Ferrel-Chapus, C.; Hay, L.; Olivier, I.; Bard, C.; Fleury, M. Visuomanual coordination in childhood: Adaptation to visual distortion. Exp. Brain Res. 2002, 144, 506–517.

- Contreras-Vidal, J.L.; Bo, J.; Boudreau, J.P.; Clark, J.E. Development of visuomotor representations for hand movement in young children. Exp. Brain Res. 2005, 162, 155–164.

- Abeele, S.; Bock, O. Mechanisms for sensorimotor adaptation to rotated visual input. Exp. Brain Res. 2001, 139, 248–253.

- Abeele, S.; Bock, O. Sensorimotor adaptation to rotated visual input: Different mechanisms for small versus large rotations. Exp. Brain Res. 2001, 140, 407–410.

- Allen, J.L.; Franz, J.R. The motor repertoire of older adult fallers may constrain their response to balance perturbations. J. Neurophysiol. 2018, 120, 2368–2378.

- Franz, J.R.; Francis, C.A.; Allen, M.S.; Thelen, D.G. Visuomotor entrainment and the frequency-dependent response of walking balance to perturbations. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 25, 1135–1142.

- Thompson, J.D.; Franz, J.R. Do kinematic metrics of walking balance adapt to perturbed optical flow? Hum. Mov. Sci. 2017, 54, 34–40.

- Krakauer, J.W.; Pine, Z.M.; Ghilardi, M.F.; Ghez, C. “Learning of visuomotor transformations for vectorial planning of reaching trajectories”: Correction. J. Neurosci. 2001, 21, 1.

- Mazzoni, P.; Krakauer, J.W. An implicit plan overrides an explicit strategy during visuomotor adaptation. J. Neurosci. 2006, 26, 3642–3645.

- Taylor, J.A.; Krakauer, J.W.; Ivry, R.B. Explicit and implicit contributions to learning in a sensorimotor adaptation task. J. Neurosci. 2014, 34, 3023–3032.

- Haith, A.M.; Huberdeau, D.M.; Krakauer, J.W. The influence of movement preparation time on the expression of visuomotor learning and savings. J. Neurosci. 2015, 35, 5109–5117.

- Bagce, H.F.; Saleh, S.; Adamovich, S.V.; Krakauer, J.W.; Tunik, E. Corticospinal excitability is enhanced after visuomotor adaptation and depends on learning rather than performance or error. J. Neurophysiol. 2013, 109, 1097–1106.

- Huberdeau, D.M.; Haith, A.M.; Krakauer, J.W. Formation of a long-term memory for visuomotor adaptation following only a few trials of practice. J. Neurophysiol. 2015, 114, 969–977.

- Huberdeau, D.M.; Krakauer, J.W.; Haith, A.M. Practice induces a qualitative change in the memory representation for visuomotor learning. J. Neurophysiol. 2019, 122, 1050–1059.

- Xing, X.; Saunders, J.A. Different generalization of fast and slow visuomotor adaptation across locomotion and pointing tasks. Exp. Brain Res. 2021, 239, 2859–2871.

- Anguera, J.A.; Reuter-Lorenz, P.A.; Willingham, D.T.; Seidler, R.D. Contributions of Spatial Working Memory to Visuomotor Learning. J. Cogn. Neurosci. 2010, 22, 1917–1930.

- Lex, H.; Weigelt, M.; Knoblauch, A.; Schack, T. Functional relationship between cognitive representations of movement directions and visuomotor adaptation performance. Exp. Brain Res. 2012, 223, 457–467.

- Lex, H.; Weigelt, M.; Knoblauch, A.; Schack, T. The Functional Role of Cognitive Frameworks on Visuomotor Adaptation Performance. J. Mot. Behav. 2014, 46, 389–396.

- Anwar, M.N.; Navid, M.S.; Khan, M.; Kitajo, K. A possible correlation between performance IQ, visuomotor adaptation ability and mu suppression. Brain Res. 2015, 1603, 84–93.

- Christou, A.I.; Miall, R.C.; McNab, F.; Galea, J.M. Individual differences in explicit and implicit visuomotor learning and working memory capacity. Sci. Rep. 2016, 6, 36633.

- Schmitz, G. Interference between adaptation to double steps and adaptation to rotated feedback in spite of differences in directional selectivity. Exp. Brain Res. 2016, 234, 1491–1504.

- Schmitz, G.; Dierking, M.; Guenther, A. Correlations between executive functions and adaptation to incrementally increasing sensorimotor discordances. Exp. Brain Res. 2018, 236, 3417–3426.

- Kannape, O.; Schwabe, L.; Tadi, T.; Blanke, O. The limits of agency in walking humans. Neuropsychologia 2010, 48, 1628–1636.

- Kannape, O.A.; Barré, A.; Aminian, K.; Blanke, O. Cognitive Loading Affects Motor Awareness and Movement Kinematics but Not Locomotor Trajectories during Goal-Directed Walking in a Virtual Reality Environment. PLoS ONE 2014, 9, e85560.

- Osaba, M.Y.; Martelli, D.; Prado, A.; Agrawal, S.K.; Lalwani, A.K. Age-related differences in gait adaptations during overground walking with and without visual perturbations using a virtual reality headset. Sci. Rep. 2020, 10, 15376.

- Bock, O. Components of sensorimotor adaptation in young and elderly subjects. Exp. Brain Res. 2005, 160, 259–263.

- Anguera, J.A.; Reuter-Lorenz, P.A.; Willingham, D.T.; Seidler, R.D. Failure to Engage Spatial Working Memory Contributes to Age-related Declines in Visuomotor Learning. J. Cogn. Neurosci. 2011, 23, 11–25.

- Li, N.; Chen, G.; Xie, Y.; Chen, Z. Aging Effect on Visuomotor Adaptation: Mediated by Cognitive Decline. Front. Aging Neurosci. 2021, 13, 742928.

- Bock, O.; Girgenrath, M. Relationship between sensorimotor adaptation and cognitive functions in younger and older subjects. Exp. Brain Res. 2006, 169, 400–406.

- Simon, A.; Bock, O. Influence of divergent and convergent thinking on visuomotor adaptation in young and older adults. Hum. Mov. Sci. 2016, 46, 23–29.

- Wong, A.L.; Marvel, C.L.; Taylor, J.A.; Krakauer, J.W. Can patients with cerebellar disease switch learning mechanisms to reduce their adaptation deficits? Brain 2019, 142, 662–673.

- Lee, C.M.; Bo, J. Visuomotor adaptation and its relationship with motor ability in children with and without autism spectrum disorder. Hum. Mov. Sci. 2021, 78, 102826.

- Kagerer, F.; Contreras-Vidal, J.; Bo, J.; Clark, J. Abrupt, but not gradual visuomotor distortion facilitates adaptation in children with developmental coordination disorder. Hum. Mov. Sci. 2006, 25, 622–633.

- Cristella, G.; Allighieri, M.; Pasquini, G.; Simoni, L.; Antonetti, A.; Beni, C.; Macchi, C.; Ferrari, A. Evaluation of sense of position and agency in children with diplegic cerebral palsy: A pilot study. J. Pediatr. Rehabil. Med. 2022, 15, 181–191.

- Contreras-Vidal, J.L.; Buch, E.R. Effects of Parkinson’s disease on visuomotor adaptation. Exp. Brain Res. 2003, 150, 25–32.

- Semrau, J.A.; Perlmutter, J.S.; Thoroughman, K.A. Visuomotor adaptation in Parkinson’s disease: Effects of perturbation type and medication state. J. Neurophysiol. 2014, 111, 2675–2687.

- Cressman, E.K.; Salomonczyk, D.; Constantin, A.; Miyasaki, J.; Moro, E.; Chen, R.; Strafella, A.; Fox, S.; Lang, A.E.; Poizner, H.; et al. Proprioceptive recalibration following implicit visuomotor adaptation is preserved in Parkinson’s disease. Exp. Brain Res. 2021, 239, 1551–1565.

- Schaefer, S.Y.; Haaland, K.Y.; Sainburg, R.L. Dissociation of initial trajectory and final position errors during visuomotor adaptation following unilateral stroke. Brain Res. 2009, 1298, 78–91.

- Nguemeni, C.; Nakchbandi, L.; Homola, G.; Zeller, D. Impaired consolidation of visuomotor adaptation in patients with multiple sclerosis. Eur. J. Neurol. 2021, 28, 884–892.

- Sadnicka, A.; Stevenson, A.; Bhatia, K.P.; Rothwell, J.C.; Edwards, M.J.; Galea, J.M. High motor variability in DYT1 dystonia is associated with impaired visuomotor adaptation. Sci. Rep. 2018, 8, 3653.

- Kurdziel, L.B.F.; Dempsey, K.; Zahara, M.; Valera, E.; Spencer, R.M.C. Impaired visuomotor adaptation in adults with ADHD. Exp. Brain Res. 2015, 233, 1145–1153.

- Bindel, L.; Mühlberg, C.; Pfeiffer, V.; Nitschke, M.; Müller, A.; Wegscheider, M.; Rumpf, J.J.; Zeuner, K.E.; Becktepe, J.S.; Welzel, J.; et al. Visuomotor Adaptation Deficits in Patients with Essential Tremor. Cerebellum 2022, in press.

- Shadmehr, R.; Smith, M.A.; Krakauer, J.W. Error Correction, Sensory Prediction, and Adaptation in Motor Control. Annu. Rev. Neurosci. 2010, 33, 89–108.

- Berger, D.J.; Gentner, R.; Edmunds, T.; Pai, D.K.; d’Avella, A. Differences in Adaptation Rates after Virtual Surgeries Provide Direct Evidence for Modularity. J. Neurosci. 2013, 33, 12384–12394.

- Janeh, O.; Langbehn, E.; Steinicke, F.; Bruder, G.; Gulberti, A.; Poetter-Nerger, M. Walking in virtual reality: Effects of manipulated visual self-motion on walking biomechanics. ACM Trans. Appl. Percept. 2017, 14, 1–15.

- Martelli, D.; Xia, B.; Prado, A.; Agrawal, S.K. Gait adaptations during overground walking and multidirectional oscillations of the visual field in a virtual reality headset. Gait Posture 2019, 67, 251–256.

- Buongiorno, D.; Barone, F.; Solazzi, M.; Bevilacqua, V.; Frisoli, A. A Linear Optimization Procedure for an EMG-driven NeuroMusculoSkeletal Model Parameters Adjusting: Validation Through a Myoelectric Exoskeleton Control. In Haptics: Perception, Devices, Control, and Applications, Proceedings of the 10th International Conference, EuroHaptics 2016, London, UK, 4–7 July 2016; Bello, F., Kajimoto, H., Visell, Y., Eds.; Springer: Cham, Switzerland, 2016; pp. 218–227.

- Buongiorno, D.; Barone, F.; Berger, D.J.; Cesqui, B.; Bevilacqua, V.; d’Avella, A.; Frisoli, A. Evaluation of a Pose-Shared Synergy-Based Isometric Model for Hand Force Estimation: Towards Myocontrol. In Converging Clinical and Engineering Research on Neurorehabilitation II, Proceedings of the 3rd International Conference on NeuroRehabilitation (ICNR2016), Segovia, Spain, 18–21 October 2016; Ibáñez, J., González-Vargas, J., Azorín, J.M., Akay, M., Pons, J.L., Eds.; Springer: Cham, Switzerland, 2017; pp. 953–958.

- Buongiorno, D.; Camardella, C.; Cascarano, G.D.; Pelaez Murciego, L.; Barsotti, M.; De Feudis, I.; Frisoli, A.; Bevilacqua, V. An undercomplete autoencoder to extract muscle synergies for motor intention detection. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8.

- Schmitz, G. Enhanced cognitive performance after multiple adaptations to visuomotor transformations. PLoS ONE 2022, 17, e274759.

- Franklin, D.W.; Burdet, E.; Peng Tee, K.; Osu, R.; Chew, C.M.; Milner, T.E.; Kawato, M. CNS Learns Stable, Accurate, and Efficient Movements Using a Simple Algorithm. J. Neurosci. 2008, 28, 11165–11173.

- Buongiorno, D.; Cascarano, G.D.; Camardella, C.; De Feudis, I.; Frisoli, A.; Bevilacqua, V. Task-Oriented Muscle Synergy Extraction Using An Autoencoder-Based Neural Model. Information 2020, 11, 219.

- Camardella, C.; Barsotti, M.; Buongiorno, D.; Frisoli, A.; Bevilacqua, V. Towards online myoelectric control based on muscle synergies-to-force mapping for robotic applications. Neurocomputing 2021, 452, 768–778.

- Baraduc, P.; Wolpert, D.M. Adaptation to a Visuomotor Shift Depends on the Starting Posture. J. Neurophysiol. 2002, 88, 973–981.

- Hegele, M.; Heuer, H. Adaptation to a direction-dependent visuomotor gain in the young and elderly. Psychol. Res. 2008, 74, 21.

- Langan, J.; Seidler, R.D. Age differences in spatial working memory contributions to visuomotor adaptation and transfer. Behav. Brain Res. 2011, 225, 160–168.

- Michaud, B.; Cherni, Y.; Begon, M.; Girardin-Vignola, G.; Roussel, P. A serious game for gait rehabilitation with the Lokomat. In Proceedings of the 2017 IEEE International Conference on Virtual Rehabilitation (ICVR), Montreal, QC, Canada, 19–22 June 2017; pp. 1–2.

- Labruyère, R.; Gerber, C.N.; Birrer-Brütsch, K.; Meyer-Heim, A.; van Hedel, H.J. Requirements for and impact of a serious game for neuro-pediatric robot-assisted gait training. Res. Dev. Disabil. 2013, 34, 3906–3915.

- Zhang, J.; Huang, M.; Zhao, L.; Yang, R.; Liang, H.N.; Han, J.; Wang, L.; Sun, W. Influence of hand representation design on presence and embodiment in virtual environment. In Proceedings of the 2020 IEEE 13th International Symposium on Computational Intelligence and Design (ISCID), Hongzhou, China, 12–13 December 2020; pp. 364–367.

- Gonçalves, G.; Melo, M.; Barbosa, L.; Vasconcelos-Raposo, J.; Bessa, M. Evaluation of the impact of different levels of self-representation and body tracking on the sense of presence and embodiment in immersive VR. Virtual Real. 2022, 26, 1–14.

- Lin, J.; Zhu, Y.; Kubricht, J.; Zhu, S.C.; Lu, H. Visuomotor Adaptation and Sensory Recalibration in Reversed Hand Movement Task. In Proceedings of the CogSci, London, UK, 26–29 July 2017.

- Azure Kinect DK Hardware Specifications. Available online: https://learn.microsoft.com/it-it/azure/kinect-dk/hardware-specification (accessed on 25 January 2022).

- Manghisi, V.M.; Uva, A.E.; Fiorentino, M.; Bevilacqua, V.; Trotta, G.F.; Monno, G. Real time RULA assessment using Kinect v2 sensor. Appl. Ergon. 2017, 65, 481–491.

- Brunetti, A.; Buongiorno, D.; Trotta, G.F.; Bevilacqua, V. Computer vision and deep learning techniques for pedestrian detection and tracking: A survey. Neurocomputing 2018, 300, 17–33.

- Buongiorno, D.; Trotta, G.F.; Bortone, I.; Di Gioia, N.; Avitto, F.; Losavio, G.; Bevilacqua, V. Assessment and Rating of Movement Impairment in Parkinson’s Disease Using a Low-Cost Vision-Based System. In Intelligent Computing Methodologies, Proceedings of the 14th International Conference, ICIC 2018, Wuhan, China, 15–18 August 2018; Huang, D.S., Gromiha, M.M., Han, K., Hussain, A., Eds.; Springer: Cham, Switzerland, 2018; pp. 777–788.

- Bortone, I.; Buongiorno, D.; Lelli, G.; Di Candia, A.; Cascarano, G.D.; Trotta, G.F.; Fiore, P.; Bevilacqua, V. Gait Analysis and Parkinson’s Disease: Recent Trends on Main Applications in Healthcare. In Converging Clinical and Engineering Research on Neurorehabilitation III, Proceedings of the 4th International Conference on NeuroRehabilitation (ICNR2018), Pisa, Italy, 16–20 October 2018; Masia, L., Micera, S., Akay, M., Pons, J.L., Eds.; Springer: Cham, Switzerland, 2019; pp. 1121–1125.

- Azure-Kinect-Samples. Available online: https://github.com/microsoft/Azure-Kinect-Samples/tree/master/body-tracking-samples/sample_unity_bodytracking (accessed on 25 January 2022).

- Wang, Y.; Gao, L.; Yan, H.; Jin, Z.; Fang, J.; Qi, L.; Zhen, Q.; Liu, C.; Wang, P.; Liu, Y.; et al. Efficacy of C-Mill gait training for improving walking adaptability in early and middle stages of Parkinson’s disease. Gait Posture 2022, 91, 79–85.

- Determination of preferred walking speed on treadmill may lead to high oxygen cost on treadmill walking. Gait Posture 2010, 31, 366–369.

- ArUco: A Minimal Library for Augmented Reality Applications Based on OpenCV. Available online: https://www.uco.es/investiga/grupos/ava/portfolio/aruco/ (accessed on 25 January 2022).

- Watkins, M.W. Structure of the Wechsler Intelligence Scale for Children—Fourth Edition among a national sample of referred students. Psychol. Assess. 2010, 22, 782–787.

- Davis, J.L.; Matthews, R.N. NEPSY-II Review: Korkman, M., Kirk, U., & Kemp, S. (2007). NEPSY—Second Edition (NEPSY-II). San Antonio, TX: Harcourt Assessment. J. Psychoeduc. Assess. 2010, 28, 175–182.

- Ardito, C.; Caivano, D.; Colizzi, L.; Dimauro, G.; Verardi, L. Design and Execution of Integrated Clinical Pathway: A Simplified Meta-Model and Associated Methodology. Information 2020, 11, 362.

- Irshad, S.; Perkis, A.; Azam, W. Wayfinding in virtual reality serious game: An exploratory study in the context of user perceived experiences. Appl. Sci. 2021, 11, 7822.

- Mystakidis, S.; Besharat, J.; Papantzikos, G.; Christopoulos, A.; Stylios, C.; Agorgianitis, S.; Tselentis, D. Design, Development, and Evaluation of a Virtual Reality Serious Game for School Fire Preparedness Training. Educ. Sci. 2022, 12, 281.

- Hussain, S.M.; Brunetti, A.; Lucarelli, G.; Memeo, R.; Bevilacqua, V.; Buongiorno, D. Deep Learning Based Image Processing for Robot Assisted Surgery: A Systematic Literature Survey. IEEE Access 2022, 10, 122627–122657.

- Mao, R.Q.; Lan, L.; Kay, J.; Lohre, R.; Ayeni, O.R.; Goel, D.P.; de SA, D. Immersive Virtual Reality for Surgical Training: A Systematic Review. J. Surg. Res. 2021, 268, 40–58.

- Winter, C.; Kern, F.; Gall, D.; Latoschik, M.E.; Pauli, P.; Käthner, I. Immersive virtual reality during gait rehabilitation increases walking speed and motivation: A usability evaluation with healthy participants and patients with multiple sclerosis and stroke. J. Neuroeng. Rehabil. 2021, 18, 1–14.

- Serge, S.R.; Fragomeni, G. Assessing the Relationship Between Type of Head Movement and Simulator Sickness Using an Immersive Virtual Reality Head Mounted Display: A Pilot Study. In Virtual, Augmented and Mixed Reality, Proceedings of the 9th International Conference, VAMR 2017, Held as Part of HCI International 2017, Vancouver, BC, Canada, 9–14 July 2017; Lackey, S., Chen, J., Eds.; Springer: Cham, Switzerland, 2017; pp. 556–566.

- Robert, M.T.; Ballaz, L.; Lemay, M. The effect of viewing a virtual environment through a head-mounted display on balance. Gait Posture 2016, 48, 261–266.

- Kamikokuryo, K.; Haga, T.; Venture, G.; Hernandez, V. Adversarial Autoencoder and Multi-Armed Bandit for Dynamic Difficulty Adjustment in Immersive Virtual Reality for Rehabilitation: Application to Hand Movement. Sensors 2022, 22, 4499.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Suglia, V.; Brunetti, A.; Pasquini, G.; Caputo, M.; Marvulli, T.M.; Sibilano, E.; Della Bella, S.; Carrozza, P.; Beni, C.; Naso, D.; Monaco, V.; Cristella, G.; Bevilacqua, V.; Buongiorno, D. A Serious Game for the Assessment of Visuomotor Adaptation Capabilities during Locomotion Tasks Employing an Embodied Avatar in Virtual Reality. Sensors 2023, 23, 5017. https://doi.org/10.3390/s23115017