Digital Transformation in Smart Farm and Forest Operations Needs Human-Centered AI: Challenges and Future Directions

Abstract

1. Introduction

1.1. Why Both Agriculture and Forestry Are Important

1.2. Artificial Intelligence

1.3. Machine Learning—The Workhorse of AI

- Supervised learning includes algorithms that learn from human-labeled data, e.g., support vector machines (SVM), logistic regression, naive Bayes, random forests, and decision trees [30]. A typical example is a model that receives as input a set of images of crops and a set of images of weeds. Each image is labeled by a human, and the model's task is to learn the features that distinguish these two classes [31]. Nowadays, this task is often performed by neural networks (deep learning approaches), in some cases using semi-supervised learning, which also exploits unlabeled images [32]. Typically, modern neural networks decompose inputs into lower-level image concepts, such as lines and curves, and then assemble them into larger structures to internally represent what distinguishes the two (or more) types of images they encounter during learning [33]. A trained network that has performed well on one classification task is expected to classify similar inputs (new images of crops and weeds) with acceptable success; on the other hand, it is not expected to perform well on other tasks. This generalization problem shows the limits of the algorithm's capabilities.

- Unsupervised learning includes algorithms that do not require human labels. However, some assumptions are always made about the structure of the data, typically after a visualization process [34,35]. Clustering algorithms, which group data based on their intrinsic features, were among the first examples of unsupervised machine learning. More recent neural network architectures, called autoencoders, compute compact representations of the input data in a space of lower dimension than the input space. Some variants, such as variational autoencoders, can be used as generative models once training has converged, thereby demonstrating their generalization ability in a constructive way. Even if the human has no direct influence on these algorithms, there is interference in the form of configuring some meta-parameters (hyperparameters); even the choice of the appropriate unsupervised algorithm is made by the designer. Some basic knowledge of the data is required, and techniques such as cross-validation are used to find good parameters and avoid overfitting.
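To make the contrast between the two paradigms concrete, the following minimal Python sketch runs a supervised nearest-centroid classifier (which needs the human crop/weed labels) and unsupervised k-means (which only sees the feature vectors) on the same toy data. The two features per plant (a greenness index and a leaf width in cm) and all values are hypothetical, and nearest-centroid serves only as a simple stand-in for the SVMs or neural networks named above. Note that even k-means needs a designer-chosen meta-parameter: the number of clusters k.

```python
from math import dist  # Euclidean distance (Python 3.8+)

# Hypothetical toy features per plant: (greenness index, leaf width in cm).
POINTS = [(0.80, 2.0), (0.30, 0.5), (0.90, 2.2), (0.35, 0.6), (0.85, 1.9), (0.25, 0.4)]
LABELS = ["crop", "weed", "crop", "weed", "crop", "weed"]  # human-provided labels


def centroid(points):
    """Component-wise mean of a non-empty list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


# --- Supervised: learn one centroid per human-labeled class ---
def fit_nearest_centroid(points, labels):
    groups = {}
    for p, y in zip(points, labels):
        groups.setdefault(y, []).append(p)
    return {y: centroid(ps) for y, ps in groups.items()}


def predict(model, p):
    """Assign p to the class whose centroid is closest."""
    return min(model, key=lambda y: dist(p, model[y]))


# --- Unsupervised: k-means discovers groups without any labels ---
def kmeans(points, k, iterations=10):
    # Naive initialization with the first k points (an assumption; k-means++ is common).
    centroids = list(points[:k])
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: dist(p, centroids[j]))
            clusters[nearest].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [centroid(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return centroids, clusters


model = fit_nearest_centroid(POINTS, LABELS)
print(predict(model, (0.82, 2.1)))  # crop
centroids, clusters = kmeans(POINTS, k=2)
print([len(c) for c in clusters])  # [3, 3]
```

Here k-means recovers the same two groups without seeing a single label, but only because the designer chose k = 2 and the toy classes are well separated.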

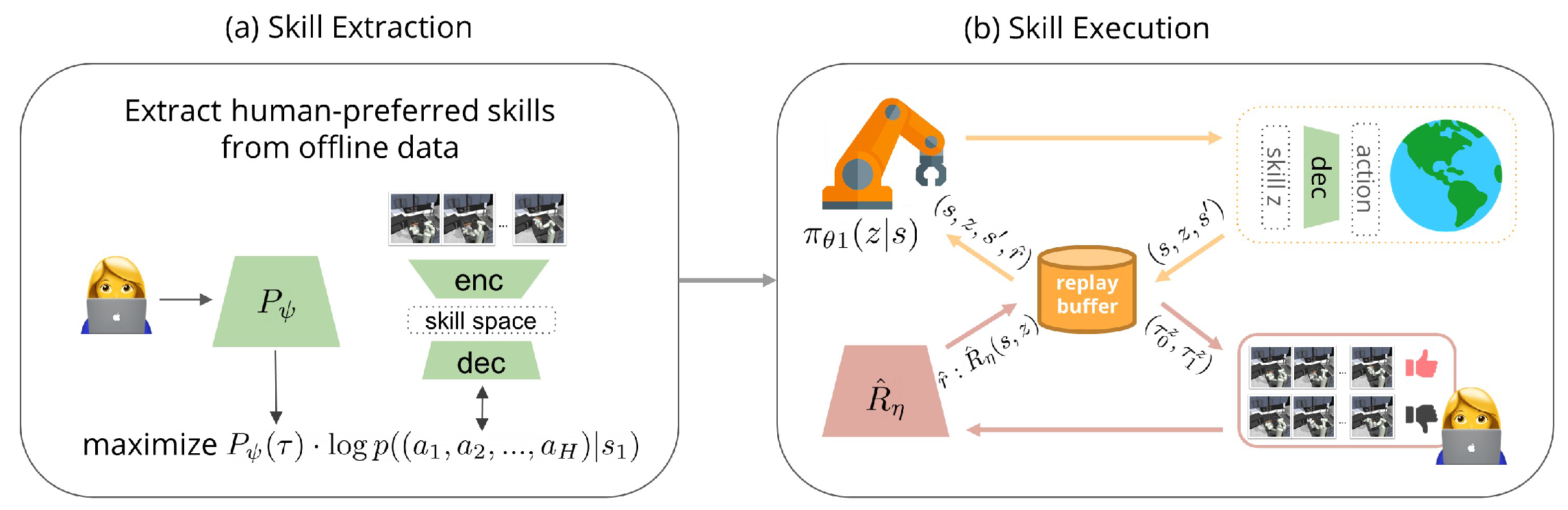

- Reinforcement learning (RL) follows a very different paradigm. The RL algorithm (here often called the agent) bases its decisions on both the data and human input, but the human input is not as direct as labeling [36,37]. To understand this, it is important to remember that the tasks performed by RL algorithms are not about categorizing input data and learning the meaning of each feature, but rather about learning how to efficiently navigate to a goal. The algorithm needs to learn a strategy (policy) for reaching the desired state from a starting point, guided not by a predefined plan but by a reward it receives when it reaches the end goal or completes key subtasks. Learning proceeds over many iterations (episodes): at the beginning, the agent explores its environment randomly, often ending up in unprofitable states (from the point of view of the reward), and learns from these mistakes. Over time, the agent gathers knowledge about which states are more successful and exploits them to obtain the greatest possible reward. Current state-of-the-art RL algorithms can find strategies in complex games that were previously unknown to humans [38], and multi-agent approaches, in which several agents communicate with each other, can enable even more efficient strategy discovery [39].
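The exploration and exploitation behavior described above can be illustrated with tabular Q-learning, one classic RL algorithm (not the deep RL methods behind [38,39]). In this minimal Python sketch, a hypothetical field robot moves along a one-dimensional row of six cells and receives a positive reward only when it reaches the last cell; the corridor, the small step penalty, and the learning parameters `alpha`, `gamma`, and `epsilon` are illustrative assumptions.

```python
import random

N_STATES = 6             # hypothetical corridor cells 0..5
GOAL = N_STATES - 1      # reaching the last cell ends an episode
ACTIONS = (-1, +1)       # move one cell left or right


def step(state, action):
    """Deterministic environment: clip to the corridor, reward only at the goal."""
    nxt = min(max(state + action, 0), GOAL)
    reward = 1.0 if nxt == GOAL else -0.01  # small cost per move (assumption)
    return nxt, reward, nxt == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore with probability epsilon, otherwise exploit.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            # Q-learning target: immediate reward plus discounted best next value.
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
    return q


def greedy_policy(q):
    """Best learned action for every non-goal state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}


q_table = q_learning()
print(greedy_policy(q_table))
```

Early episodes are essentially random walks that accumulate step penalties; as the Q-table fills in, the epsilon-greedy rule increasingly exploits the learned values, and the greedy policy ends up moving right from every cell.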

2. State-of-the-Art AI Technologies

2.1. Classification of AI Technologies

- Assisted AI systems that help humans perform repetitive routine tasks faster and better, both quantitatively and qualitatively, e.g., ambient assisted smart living [51] and weather forecasting.

- Augmenting AI systems that put a human in the loop, or at least enable a human to stay in control, in order to augment human intelligence with machine intelligence and vice versa. Examples range from simple, low-cost augmented reality applications [52] to augmented AI in agriculture [53] and interactive machine teaching concepts [54].

2.2. Autonomous AI Systems

2.2.1. Examples from Agriculture

2.2.2. Examples from Forestry

2.3. Automated AI Systems

2.3.1. Example from Agriculture

2.3.2. Examples from Forestry

2.4. Assisted AI Systems

2.4.1. Example from Agriculture

2.4.2. Examples from Forestry

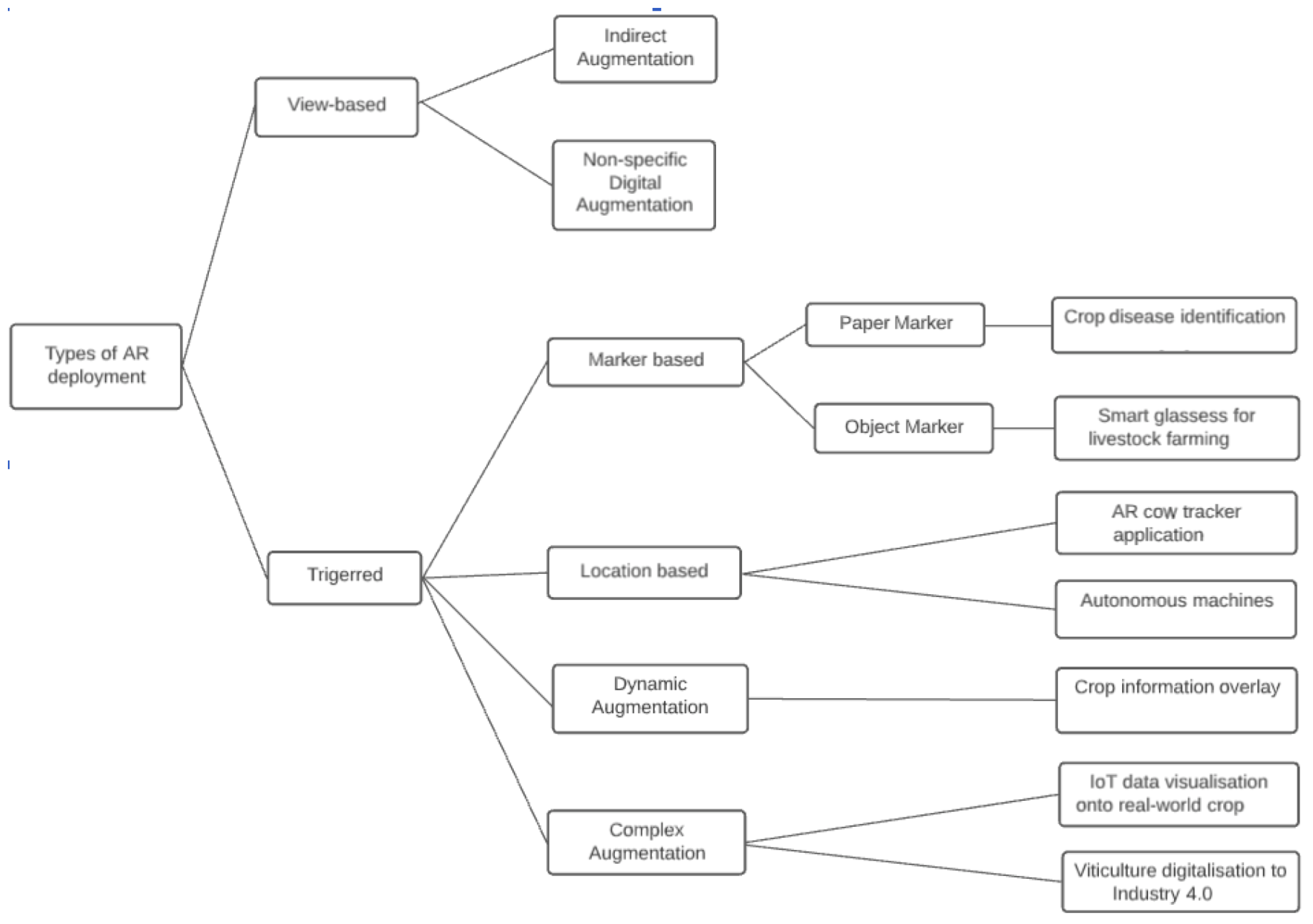

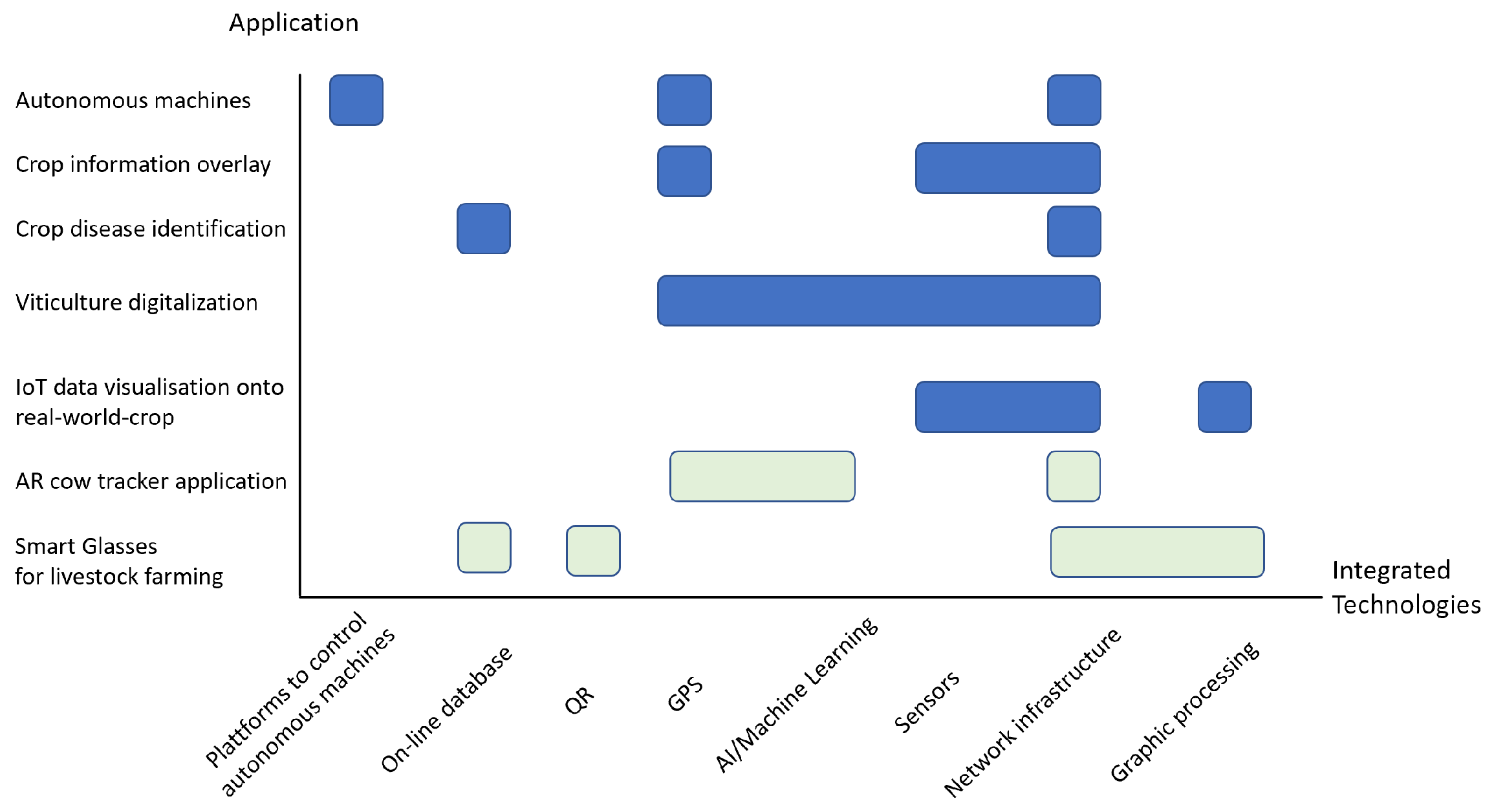

2.5. Augmenting AI Systems

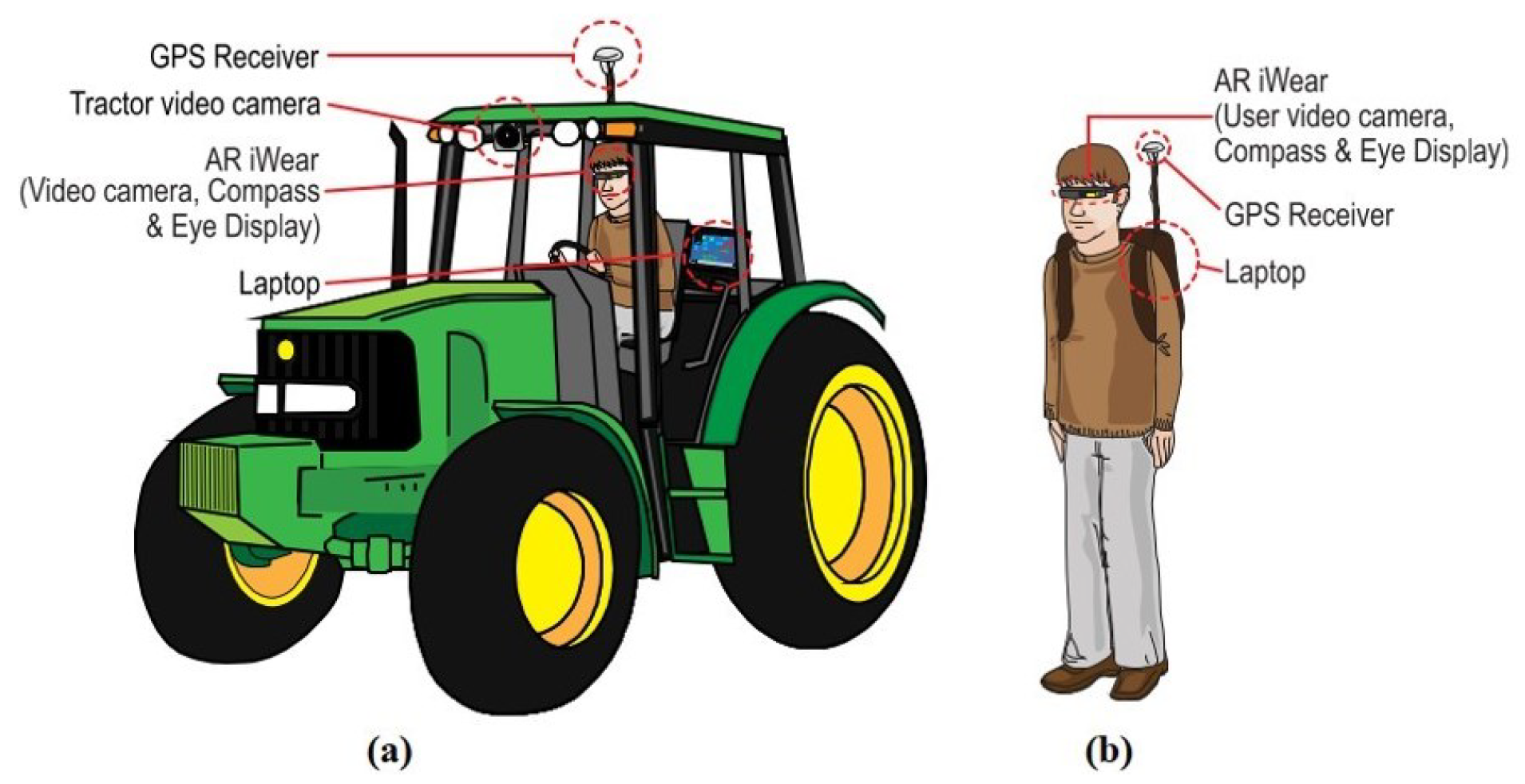

2.5.1. Example from Agriculture

2.5.2. Examples from Forestry

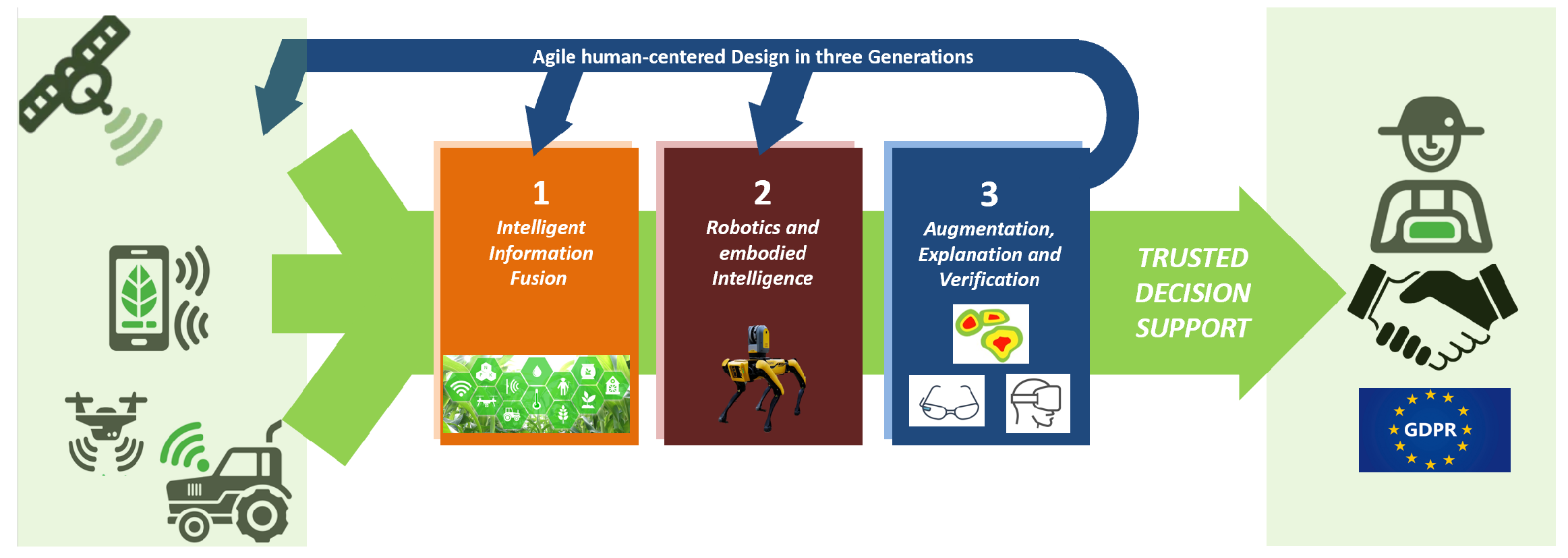

3. AI Branches of Future Interest: Frontier Research Areas

- Generation 1: Easily realizable applications through immediate deployment of existing technology, solvable at the “bachelor level”.

- Generation 2: Medium-term modification of existing technology, solvable at the “master level”.

- Generation 3: Advanced adaptation and evolution going beyond the state of the art, at the “doctoral level and beyond”.

3.1. Intelligent Sensor Information Fusion

- Open Challenge G1: “Garbage in, garbage out”: this motto of data scientists always reminds us of the fundamental property of information entropy, as defined by Claude Shannon [154,155]. Since lost information can never be fully and perfectly reconstructed (in the best case, it can only be approximated), one of the first and foremost goals is to ensure that sensor information is gathered with as few problems as possible. The sensors' ability to gather data in difficult environmental conditions, as well as the continuous (near-)real-time operation of both the sensors and the servers, needs to be ensured. The quality and sensitivity of a sensor are specified by its manufacturer but need to be verified in practice. Together with human experts, the gathered data (from both sensors and satellites) need to be analyzed, and their individual characteristics need to be specified.

- Open Challenge G2: Human experts can recognize abnormal behavior in sensor data with the help of adequate visualizations and statistics. Fault detection software, on the other hand, has to rely on anomalies in the data, which means that (1) outliers need to be (relatively) rare and (2) the values of different sensors need to be compared with each other. The behavior tracked by the vast majority of the sensors will roughly serve as the reference for describing the whole chain of data gathering, preprocessing, and transfer, and for detecting whether a fault affects a few sensors, all of them, or even the whole data transfer process.

- Open Challenge G3: Develop a reliable real-time data fusion system that is intelligent enough to know when it is advisable to fuse different sensor data. If data from the majority of the sensor sources are inconsistent, the fusion step should be skipped, since this indicates a fault in the data pipeline. Ensuring that the gathered data lie in an acceptable range, are consistent with each other, and roughly obey expected physical relationships (e.g., for radiance, or humidity with respect to temperature) can open the path to successful and insightful information fusion.
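Open Challenges G2 and G3 can be sketched together: a cross-sensor comparison flags readings that deviate strongly from what the majority reports, and fusion is skipped when most sources fail the checks. The minimal Python sketch below uses a range check plus the modified z-score (based on the median absolute deviation, MAD) and a plain average of the surviving readings. The sensor names, the valid range, the 3.5 cut-off, and the simple averaging are illustrative assumptions, and physical cross-checks such as humidity versus temperature are not covered.

```python
from statistics import median


def mad_outliers(readings, threshold=3.5):
    """Return sensor ids whose modified z-score exceeds the threshold.

    The modified z-score 0.6745 * |x - median| / MAD compares each sensor
    with the cross-sensor median, so a few faulty sensors cannot drag the
    reference value with them (the "rare outliers" assumption).
    """
    values = list(readings.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # At least half of the sensors report exactly the median: flag any deviation.
        return {sid for sid, v in readings.items() if v != med}
    return {sid for sid, v in readings.items() if 0.6745 * abs(v - med) / mad > threshold}


def gated_fusion(readings, valid_range, threshold=3.5):
    """Fuse readings only if a majority of sensors pass the plausibility checks.

    Returns (fused value or None, dict of readings that passed).
    """
    low, high = valid_range
    in_range = {sid: v for sid, v in readings.items() if low <= v <= high}
    outliers = mad_outliers(in_range, threshold) if in_range else set()
    passed = {sid: v for sid, v in in_range.items() if sid not in outliers}
    if len(passed) <= len(readings) / 2:
        return None, passed  # majority inconsistent: suspect the pipeline, skip fusion
    return sum(passed.values()) / len(passed), passed


# Hypothetical air-temperature readings (degrees C) from five field sensors.
temps = {"t1": 21.0, "t2": 21.4, "t3": 20.8, "t4": 21.2, "t5": 35.0}
fused, used = gated_fusion(temps, valid_range=(-40.0, 60.0))
print(fused, sorted(used))
```

The median and MAD are used instead of the mean and standard deviation because a single stuck sensor would otherwise inflate the very spread used to judge it.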

3.2. Robotics and Embodied Intelligence

- Open Challenge G1: Before on-site operations even start, several experiments with synthetic data under simulated conditions must be completed. The scope of the challenges needs to be documented, along with the basic elements of the RL problems that will be faced down the line. Initial considerations about the state and action spaces of the problems at hand, the reward strategy, the obstacles, and the limitations need to be examined. The feasibility of the solution, its scope, and the required computational and time resources need to be defined. The first simple laboratory prototypes that use deep RL need to work efficiently.

- Open Challenge G2: The next level must incorporate more rigorous model testing, in which the characteristics of both the agent and the environment are represented by state-of-the-art AI software. The robot's behavior has to account for the noisy data and edge cases it will encounter. Gradually, it has to move on from the more idealized case of Challenge G1 to real-world data that contain faults, exhibit drift, and represent a much more complex environment. This is planned as an incremental process that, step by step, makes the agent capable of dealing with real, on-site situations. The robot's decision-making process, when confronted with newer, more complex situations, must also be made transparent through XAI methods. In this way, human experts can check whether the robot follows plausible principles or relies on spurious “Clever Hans” correlations [176].

- Open Challenge G3: The milestone goal of embodied intelligence for agricultural applications is robots that are able to perform the required tasks in real conditions that are far more complex than those encountered in the simulations of Challenge G1. At this level, the robot is capable of recognizing when it can act autonomously, providing an understandable explanation for its decisions to the human with the help of XAI, learning from human feedback, and also enhancing the human's expert knowledge, since the robot might discover new solutions to the problems it encounters, as happened in the game of Go [38].
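Open Challenge G1 asks for the state space, action space, reward strategy, and obstacles to be written down before any on-site work. One way to make that concrete is a small simulated environment with a reset/step interface, loosely modeled on the convention popularized by RL toolkits such as Gym. In this minimal Python sketch, the grid size, obstacle cells, reward values, and the `sensor_noise` parameter (a hypothetical stand-in for the noisy data of Open Challenge G2) are all assumptions.

```python
import random

# Action space: four compass moves on the grid (an assumption for this sketch).
ACTIONS = {"N": (0, 1), "S": (0, -1), "E": (1, 0), "W": (-1, 0)}


class FieldGridEnv:
    """Hypothetical toy simulation of a field robot on a rectangular grid.

    State: the robot's (x, y) cell. Observation: that cell as reported by a
    localization sensor, optionally corrupted by Gaussian noise.
    Reward: -0.1 per move, -1.0 for hitting an obstacle or the boundary,
    +10.0 on reaching the goal (which ends the episode).
    """

    def __init__(self, width=5, height=5, goal=(4, 4),
                 obstacles=((2, 2), (3, 1)), sensor_noise=0.0, seed=0):
        self.width, self.height = width, height
        self.goal = goal
        self.obstacles = set(obstacles)
        self.sensor_noise = sensor_noise  # 0.0 = idealized G1 setting
        self.rng = random.Random(seed)
        self.pos = (0, 0)

    def reset(self):
        self.pos = (0, 0)
        return self.observe()

    def observe(self):
        x, y = self.pos
        if self.sensor_noise > 0:
            return (x + self.rng.gauss(0, self.sensor_noise),
                    y + self.rng.gauss(0, self.sensor_noise))
        return (float(x), float(y))

    def step(self, action):
        """Apply an action; return (observation, reward, done)."""
        dx, dy = ACTIONS[action]
        nxt = (self.pos[0] + dx, self.pos[1] + dy)
        inside = 0 <= nxt[0] < self.width and 0 <= nxt[1] < self.height
        if not inside or nxt in self.obstacles:
            return self.observe(), -1.0, False  # blocked: penalty, robot stays put
        self.pos = nxt
        if self.pos == self.goal:
            return self.observe(), 10.0, True
        return self.observe(), -0.1, False


env = FieldGridEnv()
env.reset()
for a in "EEEENNNN":  # a hand-written route along the field edge
    obs, reward, done = env.step(a)
print(obs, reward, done)  # (4.0, 4.0) 10.0 True
```

Raising `sensor_noise` above zero is one simple way to move the same agent from the idealized setting toward the imperfect observations it will face on site.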

3.3. Augmentation, Explanation, and Verification Technologies

- Open Challenge G1: Create the first visualizations for the multi-modal data gathered from the sensors. Concentrate only on presenting the data adequately to domain experts and consider some usability aspects, without yet considering data filtering or developing software for it. Incorporate principles from graphic design and use all the facilities that one can ideally have, such as large monitors, Google Glass, and augmented reality (AR) systems.

- Open Challenge G2: Work in collaboration with human experts (foresters and farmers) to identify their principal needs, requirements, and expectations of an AI solution. Define with their help what their priorities are and which characteristics of the data differentiate normal from abnormal behavior. Use the results of Challenge G1 to show them static visualizations of particular situations, and let them pinpoint, choose, and enhance those visualizations. Define use cases with them that take into account which information is most important for their decision-making process and how to prioritize and present it so that they are informed precisely and as fast as possible, but without being overwhelmed.

- Open Challenge G3: Develop a real-time fault detection AI solution that encompasses all parts of the pipeline: (1) data gathering, preprocessing, and fusion; (2) adequate visualization as implemented for Challenge G2, but now in real time; and (3) real-time fault detection using efficient and explainable AI solutions. This pipeline is not static, since neither the human nor the AI software is perfect, and neither can prepare for every possible fault and condition that might occur. Its components must adapt to new anomalies, user requirements, domain-expert knowledge, and the challenges that will arise from the use of more data, more sophisticated XAI methods, and quality management techniques.
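Part (3) of Open Challenge G3, real-time fault detection that can explain itself, can be approximated in its simplest form by a per-sensor streaming detector. The minimal Python sketch below keeps a running mean and variance with Welford's online algorithm and, for each flagged reading, returns a short human-readable explanation instead of a bare alarm. The warm-up length and the 4-standard-deviation threshold are illustrative assumptions, and a real system would also need to handle drift and seasonal patterns.

```python
import math


class OnlineZScoreDetector:
    """Streaming anomaly detector for one sensor (hypothetical sketch)."""

    def __init__(self, threshold=4.0, warmup=10):
        self.threshold = threshold  # flag readings beyond this many std devs
        self.warmup = warmup        # readings to observe before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations (Welford)

    def update(self, x):
        """Return an explanation string if x looks anomalous, else None.

        Anomalous readings are reported but not absorbed into the statistics,
        so a burst of faulty values does not silently become the new normal.
        """
        if self.n >= self.warmup:
            std = max(math.sqrt(self.m2 / (self.n - 1)), 1e-9)  # avoid division by zero
            z = (x - self.mean) / std
            if abs(z) > self.threshold:
                return (f"reading {x:.2f} is {z:+.1f} standard deviations from "
                        f"the running mean {self.mean:.2f} over {self.n} readings")
        # Welford's update of the running mean and sum of squared deviations.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return None


detector = OnlineZScoreDetector()
normal = [20.0 + 0.1 * (i % 5) for i in range(50)]  # hypothetical stable stream
alerts = [detector.update(v) for v in normal]
print(detector.update(35.0))
```

Welford's update needs constant memory per sensor and is numerically more stable than accumulating raw sums of squares, which matters for long-running field deployments.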

4. Human-Centered AI and the Human in the Loop

4.1. Interactive Machine Learning with the “Human in the Loop”

4.2. Human-Centered Design

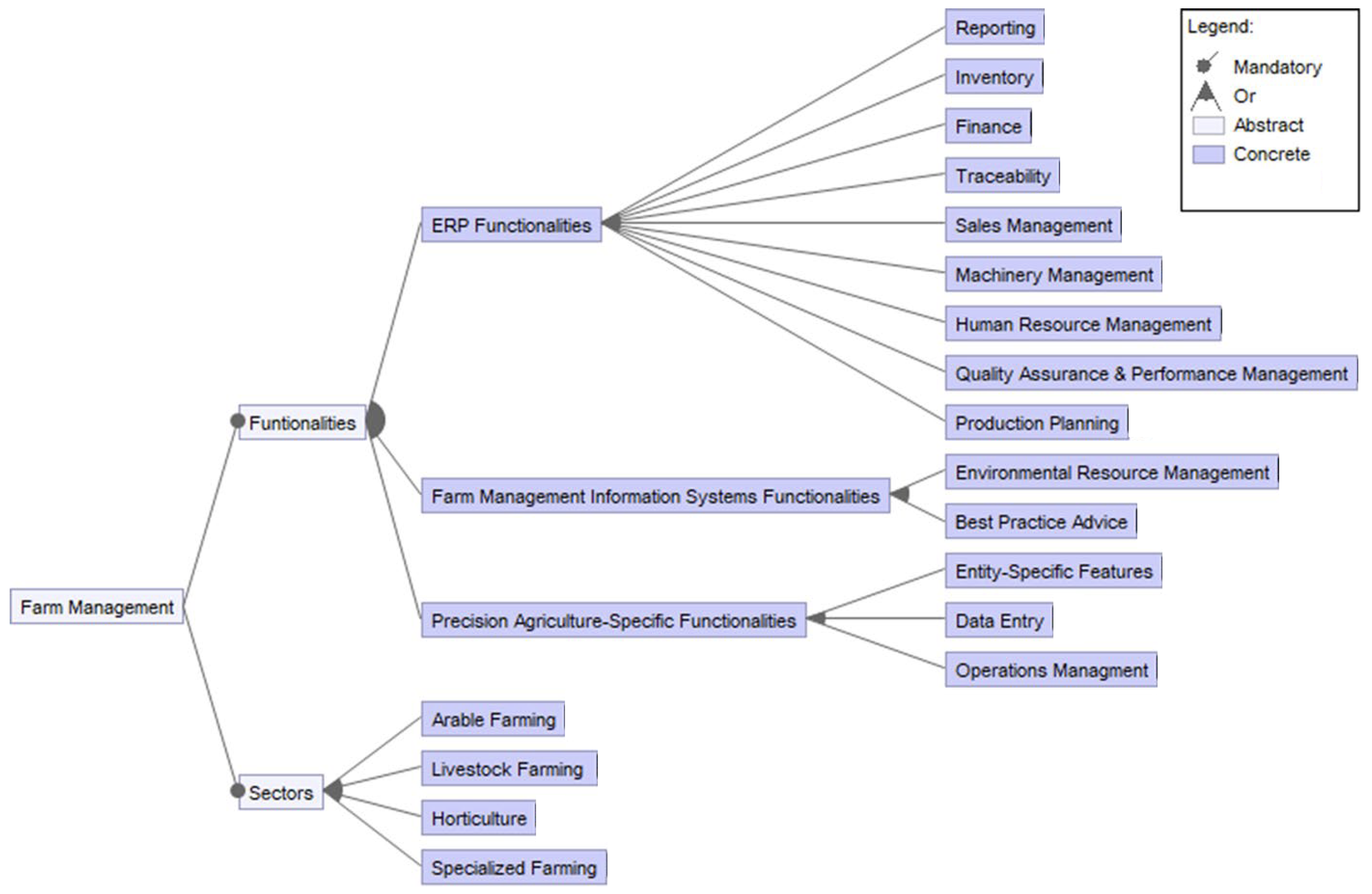

4.3. Farmer-in-the-Loop

- Open Challenge G1: Develop a requirements map and a technology overview. Create a toolbox of existing technologies for inexperienced farmers, with easy-to-use methods and cost-effective applications that create benefits in everyday life, following the concept of human-centered design (see Section 4.2).

- Open Challenge G2: Make data available online accessible and integrate the structure and computational operations of the above toolbox into AI solutions. Network, fuse, integrate, present, and visualize information from different sources and locations, following the information visualization mantra “overview first, zoom and filter, then details on demand” [224], to provide a snapshot across the entire value chain (“from seed to the consumer’s stomach”), thereby revealing insights and opportunities for further analysis of key indicators.

- Open Challenge G3: A key challenge is to gradually refine the tools of life cycle assessment (LCA), life cycle costing (LCC), and social life cycle assessment (SLCA).
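The information visualization mantra cited in Open Challenge G2 (“overview first, zoom and filter, then details on demand” [224]) also suggests how the underlying data access could be layered. The minimal Python sketch below works on a hypothetical flat list of value-chain records; the stage names, locations, indicator name, and numbers are made-up placeholders, not measurements, and a real system would query a database rather than an in-memory list.

```python
from collections import defaultdict

# Hypothetical value-chain records: (stage, location, indicator, value).
# All values are placeholders for illustration only.
RECORDS = [
    ("seed", "farm A", "water_m3", 2.0),
    ("cultivation", "farm A", "water_m3", 140.0),
    ("cultivation", "farm B", "water_m3", 95.0),
    ("processing", "mill 1", "water_m3", 60.0),
    ("retail", "store 3", "water_m3", 5.0),
]


def overview(records, indicator):
    """Overview first: one aggregate per value-chain stage."""
    totals = defaultdict(float)
    for stage, _location, ind, value in records:
        if ind == indicator:
            totals[stage] += value
    return dict(totals)


def zoom_and_filter(records, stage, indicator):
    """Zoom and filter: the locations contributing to one stage."""
    return [(loc, value) for st, loc, ind, value in records
            if st == stage and ind == indicator]


def details_on_demand(records, stage, location):
    """Details on demand: the raw records behind one location."""
    return [r for r in records if r[0] == stage and r[1] == location]


print(overview(RECORDS, "water_m3"))
print(zoom_and_filter(RECORDS, "cultivation", "water_m3"))
print(details_on_demand(RECORDS, "cultivation", "farm B"))
```

Each level returns strictly less data than the one before, which mirrors the goal of informing farmers quickly without overwhelming them.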

4.4. Forester-in-the-Loop

- Open Challenge G1: Because forestry in Austria is small-scale, many forest owners work in their own forests, and most of the work is therefore done manually with a chainsaw. The future task of the forester-in-the-loop approach is to develop digital and smart tools for this area as well. For example, individual tree information could be shown to forest owners via heads-up displays in their helmets or via VR glasses. It would also be conceivable to provide assistance with value-optimized bucking in order to increase efficiency and revenue. Conversely, the AI can continuously learn from the forestry worker's expertise and concrete actions during the work process.

- Open Challenge G2: In principle, a good first step towards autonomous practice would be to decouple, in time, the acquisition of environment data by a forest machine from its AI-controlled functions. For example, digital twins of the forest can be created with 3D scanners (which often happens as part of the forest inventory anyway), and autonomous, automated, or even augmented processes can then be integrated into the forest machine on the basis of these twins. This saves time-consuming on-the-fly environment mapping and navigation. Robots (e.g., [226]) offer a good opportunity to test such a process. Throughout the entire process, the forester should be actively involved and make the decisions.
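The idea of planning on a previously captured digital twin instead of mapping on the fly can be sketched as path planning on a precomputed occupancy grid. In the minimal Python sketch below, the grid is a hypothetical stand-in for a map derived from 3D scans (1 = tree or other obstacle, 0 = passable), and A* search with a Manhattan-distance heuristic finds a shortest 4-connected route. Real forest machines would additionally need continuous coordinates, terrain slope, and machine kinematics.

```python
import heapq

# Hypothetical occupancy grid derived from a forest scan: 1 = tree/obstacle, 0 = free.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]


def plan_path(grid, start, goal):
    """A* search on a 4-connected grid of (row, col) cells; returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates the number of 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from = {start: None}
    cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk predecessors back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nbr in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nbr
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = g + 1
                if new_cost < cost.get(nbr, float("inf")):
                    cost[nbr] = new_cost
                    came_from[nbr] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic(nbr), new_cost, nbr))
    return None  # goal unreachable


print(plan_path(GRID, (0, 0), (3, 3)))
```

Because the grid is computed once from the scan, the expensive perception step happens offline and the machine only has to follow, and if necessary re-plan, the route.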

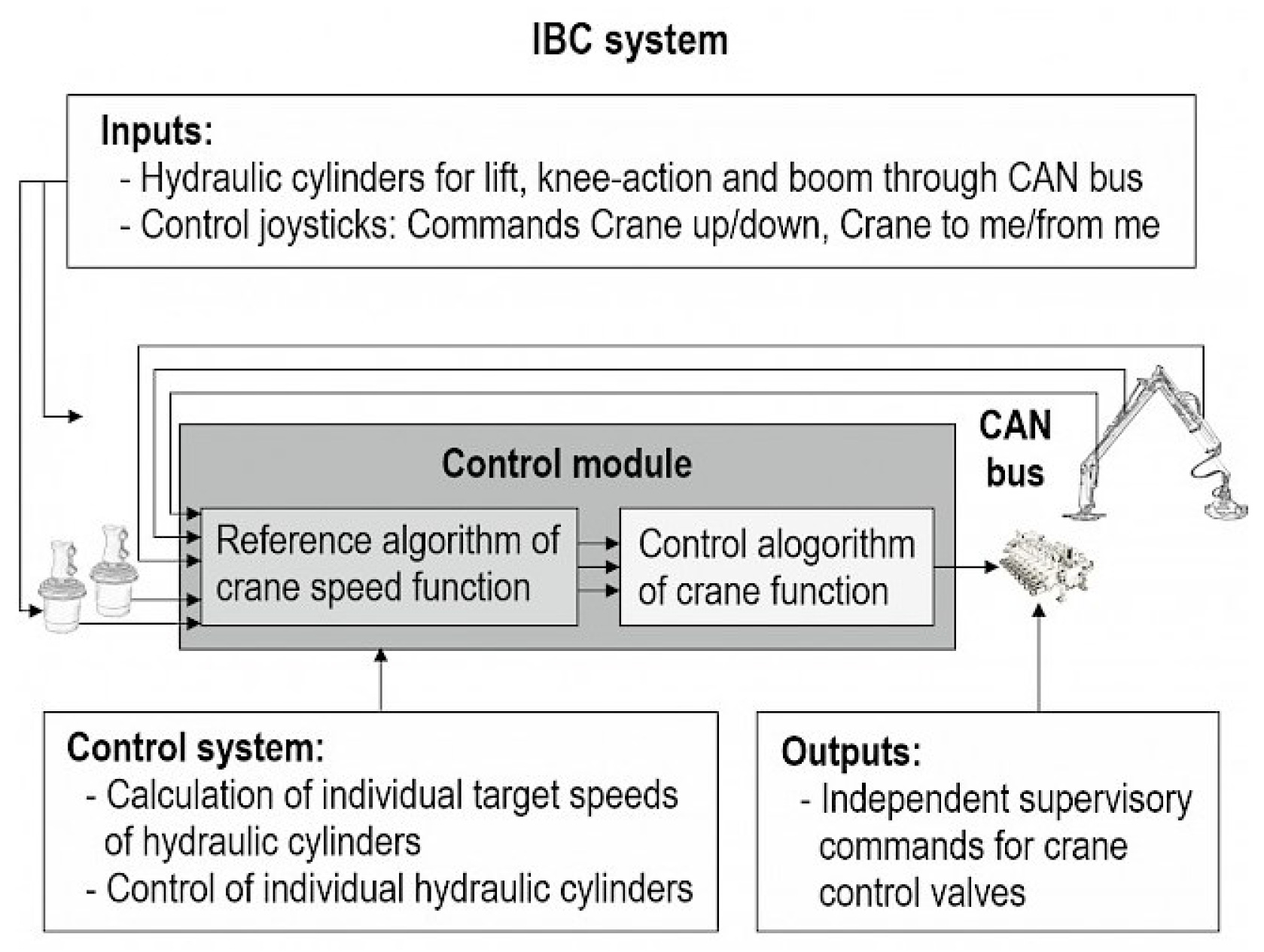

- Open Challenge G3: Building on Open Challenge G2, a direct navigation system for forestry machines should be developed that enables on-the-fly navigation. This applies not only to driving the machine but also to the autonomous control of attachments and aggregates (e.g., crane and harvester head). In this development step, it is also necessary to integrate further sensors (GNSS sensors, 3D and 2D laser scanners, stereo cameras, hydraulic sensors) and to process their data intelligently. In addition, the methods and approaches should be transferred from the small robot to a real forest machine. The expertise of the human operators should be incorporated in order to avoid environmental impacts and to work in a value-optimized manner. This step towards large-scale forestry will be taken in close cooperation with machine manufacturers, and large-scale practical studies will be carried out in the forest to test and continuously develop the systems.
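The value-optimized bucking assistance mentioned in Open Challenge G1 is, in its simplest textbook form, a one-dimensional cutting problem that dynamic programming solves exactly (the classic rod-cutting recursion). The minimal Python sketch below treats a stem as a straight length in decimeters and assumes fixed log lengths with fixed values; real bucking optimizers also account for taper, diameter classes, defects, and quality zones, so the assortment table here is purely hypothetical.

```python
# Hypothetical assortment table: log length in decimeters -> value per log
# (arbitrary units). Real prices depend on diameter and quality as well.
ASSORTMENTS = {30: 40.0, 40: 58.0, 50: 75.0}


def optimal_bucking(stem_length, assortments):
    """Return (best total value, list of log lengths) for a stem of given length.

    best[n] is the highest value obtainable from n decimeters of stem; a top
    piece shorter than every assortment is left as waste (value 0).
    """
    best = [0.0] * (stem_length + 1)
    first_cut = [None] * (stem_length + 1)  # log length chosen at each n
    for n in range(1, stem_length + 1):
        for log_length, value in assortments.items():
            if log_length <= n and best[n - log_length] + value > best[n]:
                best[n] = best[n - log_length] + value
                first_cut[n] = log_length
    # Walk back through the recorded choices to recover the cutting pattern.
    logs, remaining = [], stem_length
    while first_cut[remaining] is not None:
        logs.append(first_cut[remaining])
        remaining -= first_cut[remaining]
    return best[stem_length], logs


value, logs = optimal_bucking(130, ASSORTMENTS)
print(value, logs)  # 191.0 [40, 40, 50]
```

A forester-in-the-loop tool could present such a suggestion on a heads-up display and record when the worker deviates from it, which is exactly the kind of feedback the AI could learn from.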

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Vinuesa, R.; Azizpour, H.; Leite, I.; Balaam, M.; Dignum, V.; Domisch, S.; Felländer, A.; Langhans, S.D.; Tegmark, M.; Fuso Nerini, F. The role of artificial intelligence in achieving the Sustainable Development Goals. Nat. Commun. 2020, 11, 233.

- United Nations. UN Sustainable Development Goals. 2020. Available online: https://sdgs.un.org/goals (accessed on 14 March 2022).

- Barbier, E.B.; Burgess, J.C. The Sustainable Development Goals and the systems approach to sustainability. Economics 2017, 11.

- Regulation (EU) 2021/1119 of the European Parliament and of the Council of 30 June 2021. 2021. Available online: https://eur-lex.europa.eu/eli/reg/2021/1119/oj (accessed on 4 March 2022).

- Nature and Forest Strategy Factsheet. 2021. Available online: https://ec.europa.eu/commission/presscorner/detail/en/fs_21_3670 (accessed on 6 March 2022).

- Carbonell, I.M. The ethics of big data in big agriculture. Internet Policy Rev. 2016, 5, 1–13.

- McCulloch, W.S.; Pitts, W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biol. 1943, 5, 115–133.

- Bengio, Y.; LeCun, Y.; Hinton, G. Deep learning for AI. Commun. ACM 2021, 64, 58–65.

- Holzinger, A.; Weippl, E.; Tjoa, A.M.; Kieseberg, P. Digital Transformation for Sustainable Development Goals (SDGs)—A Security, Safety and Privacy Perspective on AI. In Springer Lecture Notes in Computer Science, LNCS 12844; Springer: Cham, Switzerland, 2021; pp. 1–20.

- Holzinger, A.; Kickmeier-Rust, M.; Müller, H. Kandinsky Patterns as IQ-Test for Machine Learning. In Lecture Notes in Computer Science LNCS 11713; Springer/Nature: Cham, Switzerland, 2019; pp. 1–14.

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach, 4th ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2020.

- Holzinger, A.; Dehmer, M.; Emmert-Streib, F.; Cucchiara, R.; Augenstein, I.; Del Ser, J.; Samek, W.; Jurisica, I.; Díaz-Rodríguez, N. Information fusion as an integrative cross-cutting enabler to achieve robust, explainable, and trustworthy medical artificial intelligence. Inf. Fusion 2022, 79, 263–278.

- Holzinger, A. The Next Frontier: AI We Can Really Trust. In Proceedings of the ECML PKDD 2021, CCIS 1524; Michael Kamp, E.A., Ed.; Springer: Cham, Switzerland, 2021; pp. 1–14.

- Shneiderman, B. Human-Centered AI; Oxford University Press: Oxford, UK, 2022.

- Holzinger, A.; Plass, M.; Kickmeier-Rust, M.; Holzinger, K.; Crişan, G.C.; Pintea, C.M.; Palade, V. Interactive machine learning: Experimental evidence for the human in the algorithmic loop. Appl. Intell. 2019, 49, 2401–2414.

- Dietterich, T.G.; Horvitz, E.J. Rise of concerns about AI: Reflections and directions. Commun. ACM 2015, 58, 38–40.

- Holzinger, A.; Malle, B.; Saranti, A.; Pfeifer, B. Towards Multi-Modal Causability with Graph Neural Networks enabling Information Fusion for explainable AI. Inf. Fusion 2021, 71, 28–37.

- Laplace, P.S. Mémoire sur les probabilités. Mémoires L’académie R. Des Sci. Paris 1781, 1778, 227–332.

- Bayes, T. An Essay towards solving a Problem in the Doctrine of Chances (communicated by Richard Price). Philos. Trans. 1763, 53, 370–418.

- Ghahramani, Z. Probabilistic machine learning and artificial intelligence. Nature 2015, 521, 452–459.

- Mandel, T.; Liu, Y.E.; Brunskill, E.; Popovic, Z. Where to add actions in human-in-the-loop reinforcement learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Holzinger, A.; Plass, M.; Holzinger, K.; Crisan, G.C.; Pintea, C.M.; Palade, V. A glass-box interactive machine learning approach for solving NP-hard problems with the human-in-the-loop. arXiv 2017, arXiv:1708.01104.

- Lage, I.; Ross, A.; Gershman, S.J.; Kim, B.; Doshi-Velez, F. Human-in-the-loop interpretability prior. In Proceedings of the Advances in Neural Information Processing Systems NeurIPS 2018, Montreal, QC, Canada, 3–8 December 2018; pp. 10159–10168.

- Wasserman, L. All of Statistics: A Concise Course in Statistical Inference; Springer: Berlin/Heidelberg, Germany; New York, NY, USA, 2004.

- Hensman, J.; Fusi, N.; Lawrence, N.D. Gaussian processes for big data. arXiv 2013, arXiv:1309.6835.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Jordan, M.I.; Mitchell, T.M. Machine learning: Trends, perspectives, and prospects. Science 2015, 349, 255–260.

- Holzinger, A. Introduction to Machine Learning and Knowledge Extraction (MAKE). Mach. Learn. Knowl. Extr. 2019, 1, 1–20.

- Caruana, R.; Niculescu-Mizil, A. An empirical comparison of supervised learning algorithms. In Proceedings of the 23rd international conference on Machine learning (ICML 2006), Pittsburgh, PA, USA, 25–29 June 2006; Association for Computing Machinery: New York, NY, USA, 2006.

- Ahmed, F.; Al-Mamun, H.A.; Bari, A.H.; Hossain, E.; Kwan, P. Classification of crops and weeds from digital images: A support vector machine approach. Crop. Prot. 2012, 40, 98–104.

- Shorewala, S.; Ashfaque, A.; Sidharth, R.; Verma, U. Weed density and distribution estimation for precision agriculture using semi-supervised learning. IEEE Access 2021, 9, 27971–27986.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1–9.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006.

- Murphy, K.P. Probabilistic Machine Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2022.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018.

- Graesser, L.; Keng, W.L. Foundations of Deep Reinforcement Learning: Theory and Practice in Python; Addison-Wesley Professional: Boston, MA, USA, 2019.

- Pumperla, M.; Ferguson, K. Deep Learning and the Game of Go; Manning Publications Company: Shelter Island, NY, USA, 2019; Volume 231.

- Hernandez-Leal, P.; Kartal, B.; Taylor, M.E. Is multiagent deep reinforcement learning the answer or the question? A brief survey. Learning 2018, 21, 22.

- Shahriari, B.; Swersky, K.; Wang, Z.; Adams, R.P.; de Freitas, N. Taking the human out of the loop: A review of Bayesian optimization. Proc. IEEE 2016, 104, 148–175.

- Sonnenburg, S.; Rätsch, G.; Schaefer, C.; Schölkopf, B. Large scale multiple kernel learning. J. Mach. Learn. Res. 2006, 7, 1531–1565.

- Mueller, H.; Mayrhofer, M.T.; Veen, E.B.V.; Holzinger, A. The Ten Commandments of Ethical Medical AI. IEEE Comput. 2021, 54, 119–123.

- Stoeger, K.; Schneeberger, D.; Holzinger, A. Medical Artificial Intelligence: The European Legal Perspective. Commun. ACM 2021, 64, 34–36.

- Wilson, A.G.; Dann, C.; Lucas, C.; Xing, E.P. The Human Kernel. In Advances in Neural Information Processing Systems, NIPS 2015; Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R., Eds.; NIPS Foundation: La Jolla, CA, USA, 2015.

- Holzinger, A. Interactive Machine Learning for Health Informatics: When do we need the human-in-the-loop? Brain Inform. 2016, 3, 119–131.

- Holzinger, A.; Plass, M.; Holzinger, K.; Crisan, G.C.; Pintea, C.M.; Palade, V. Towards interactive Machine Learning (iML): Applying Ant Colony Algorithms to solve the Traveling Salesman Problem with the Human-in-the-Loop approach. In Springer Lecture Notes in Computer Science LNCS 9817; Springer: Heidelberg/Berlin, Germany; New York, NY, USA, 2016; pp. 81–95.

- Levinson, J.; Askeland, J.; Becker, J.; Dolson, J.; Held, D.; Kammel, S.; Kolter, J.Z.; Langer, D.; Pink, O.; Pratt, V. Towards fully autonomous driving: Systems and algorithms. In Proceedings of the Intelligent Vehicles Symposium (IV 2011), Baden-Baden, Germany, 5–9 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 163–168.

- Floreano, D.; Wood, R.J. Science, technology and the future of small autonomous drones. Nature 2015, 521, 460–466.

- Van der Aalst, W.M.; Bichler, M.; Heinzl, A. Robotic process automation. Bus. Inf. Syst. Eng. 2018, 60, 269–272.

- Liu, T.; Sun, Y.; Wang, C.; Zhang, Y.; Qiu, Z.; Gong, W.; Lei, S.; Tong, X.; Duan, X. Unmanned aerial vehicle and artificial intelligence revolutionizing efficient and precision sustainable forest management. J. Clean. Prod. 2021, 311, 127546.

- Singh, D.; Merdivan, E.; Hanke, S.; Kropf, J.; Geist, M.; Holzinger, A. Convolutional and Recurrent Neural Networks for Activity Recognition in Smart Environment. In Towards Integrative Machine Learning and Knowledge Extraction: BIRS Workshop, Banff, AB, Canada, July 24–26, 2015, Revised Selected Papers; Holzinger, A., Goebel, R., Ferri, M., Palade, V., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 194–205.

- Nischelwitzer, A.; Lenz, F.J.; Searle, G.; Holzinger, A. Some Aspects of the Development of Low-Cost Augmented Reality Learning Environments as examples for Future Interfaces in Technology Enhanced Learning. In Universal Access to Applications and Services, Lecture Notes in Computer Science (LNCS 4556); Stephanidis, C., Ed.; Springer: Berlin/Heidelberg, Germany; New York, NY, USA, 2007; pp. 728–737.

- Silva, S.; Duarte, D.; Valente, A.; Soares, S.; Soares, J.; Pinto, F.C. Augmented Intelligent Distributed Sensing System Model for Precision Agriculture. In Proceedings of the 2021 Telecoms Conference (ConfTELE), Leiria, Portugal, 11–12 February 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–4.

- Ramos, G.; Meek, C.; Simard, P.; Suh, J.; Ghorashi, S. Interactive machine teaching: A human-centered approach to building machine-learned models. Hum.–Comput. Interact. 2020, 35, 413–451.

- Wang, D.; Cao, W.; Zhang, F.; Li, Z.; Xu, S.; Wu, X. A Review of Deep Learning in Multiscale Agricultural Sensing. Remote Sens. 2022, 14, 559.

- Zhang, J.; Huang, Y.; Pu, R.; Gonzalez-Moreno, P.; Yuan, L.; Wu, K.; Huang, W. Monitoring plant diseases and pests through remote sensing technology: A review. Comput. Electron. Agric. 2019, 165, 104943.

- Sishodia, R.P.; Ray, R.L.; Singh, S.K. Applications of remote sensing in precision agriculture: A review. Remote Sens. 2020, 12, 3136.

- Yao, H.; Qin, R.; Chen, X. Unmanned aerial vehicle for remote sensing applications—A review. Remote Sens. 2019, 11, 1443.

- Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

- Saiz-Rubio, V.; Rovira-Más, F. From smart farming towards agriculture 5.0: A review on crop data management. Agronomy 2020, 10, 207.

- Mendes, J.; Pinho, T.M.; Neves dos Santos, F.; Sousa, J.J.; Peres, E.; Boaventura-Cunha, J.; Cunha, M.; Morais, R. Smartphone applications targeting precision agriculture practices—A systematic review. Agronomy 2020, 10, 855.

- Sartori, D.; Brunelli, D. A smart sensor for precision agriculture powered by microbial fuel cells. In Proceedings of the 2016 IEEE Sensors Applications Symposium (SAS), Catania, Italy, 20–22 April 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–6.

- Elmeseiry, N.; Alshaer, N.; Ismail, T. A Detailed Survey and Future Directions of Unmanned Aerial Vehicles (UAVs) with Potential Applications. Aerospace 2021, 8, 363.

- Kalyani, Y.; Collier, R. A Systematic Survey on the Role of Cloud, Fog, and Edge Computing Combination in Smart Agriculture. Sensors 2021, 21, 5922.

- Jarial, S. Internet of Things application in Indian agriculture, challenges and effect on the extension advisory services—A review. J. Agribus. Dev. Emerg. Econ. 2022, ahead-of-print.

- Cockburn, M. Application and prospective discussion of machine learning for the management of dairy farms. Animals 2020, 10, 1690.

- Haxhibeqiri, J.; De Poorter, E.; Moerman, I.; Hoebeke, J. A survey of LoRaWAN for IoT: From technology to application. Sensors 2018, 18, 3995.

- Waldrop, M.M. Autonomous vehicles: No drivers required. Nat. News 2015, 518, 20–23.

- Hopkins, D.; Schwanen, T. Talking about automated vehicles: What do levels of automation do? Technol. Soc. 2021, 64, 101488.

- Monaco, T.; Grayson, A.; Sanders, D. Influence of four weed species on the growth, yield, and quality of direct-seeded tomatoes (Lycopersicon esculentum). Weed Sci. 1981, 29, 394–397.

- Roberts, H.; Hewson, R.; Ricketts, M.E. Weed competition in drilled summer lettuce. Hortic. Res. 1977, 17, 39–45.

- Slaughter, D.C.; Giles, D.; Downey, D. Autonomous robotic weed control systems: A review. Comput. Electron. Agric. 2008, 61, 63–78.

- Bechar, A.; Vigneault, C. Agricultural robots for field operations. Part 2: Operations and systems. Biosyst. Eng. 2017, 153, 110–128.

- Sabatini, R.; Moore, T.; Ramasamy, S. Global navigation satellite systems performance analysis and augmentation strategies in aviation. Prog. Aerosp. Sci. 2017, 95, 45–98.

- Lim, Y.; Pongsakornsathien, N.; Gardi, A.; Sabatini, R.; Kistan, T.; Ezer, N.; Bursch, D.J. Adaptive human–robot interactions for multiple unmanned aerial vehicles. Robotics 2021, 10, 12.

- Ehsani, M.R.; Sullivan, M.D.; Zimmerman, T.L.; Stombaugh, T. Evaluating the dynamic accuracy of low-cost GPS receivers. In Proceedings of the 2003 ASAE Annual Meeting. American Society of Agricultural and Biological Engineers, Las Vegas, NV, USA, 27–30 July 2003; p. 1.

- Åstrand, B.; Baerveldt, A.J. An agricultural mobile robot with vision-based perception for mechanical weed control. Auton. Robot. 2002, 13, 21–35.

- Chebrolu, N.; Lottes, P.; Schaefer, A.; Winterhalter, W.; Burgard, W.; Stachniss, C. Agricultural robot dataset for plant classification, localization and mapping on sugar beet fields. Int. J. Robot. Res. 2017, 36, 1045–1052.

- Scholz, C.; Moeller, K.; Ruckelshausen, A.; Hinck, S.; Göttinger, M. Automatic soil penetrometer measurements and GIS based documentation with the autonomous field robot platform bonirob. In Proceedings of the 12th International Conference of Precision Agriculture, Sacramento, CA, USA, 20–23 July 2014.

- Lamm, R.D.; Slaughter, D.C.; Giles, D.K. Precision weed control system for cotton. Trans. ASAE 2002, 45, 231.

- Blasco, J.; Aleixos, N.; Roger, J.; Rabatel, G.; Moltó, E. AE—Automation and emerging technologies: Robotic weed control using machine vision. Biosyst. Eng. 2002, 83, 149–157.

- Bawden, O.; Kulk, J.; Russell, R.; McCool, C.; English, A.; Dayoub, F.; Lehnert, C.; Perez, T. Robot for weed species plant-specific management. J. Field Robot. 2017, 34, 1179–1199.

- Ringdahl, O. Automation in Forestry: Development of Unmanned Forwarders. Ph.D. Thesis, Institutionen för Datavetenskap, Umeå Universitet, Umeå, Sweden, 2011.

- Parker, R.; Bayne, K.; Clinton, P.W. Robotics in forestry. N. Z. J. For. 2016, 60, 8–14.

- Rossmann, J.; Krahwinkler, P.; Schlette, C. Navigation of mobile robots in natural environments: Using sensor fusion in forestry. J. Syst. Cybern. Inform. 2010, 8, 67–71.

- Gollob, C.; Ritter, T.; Nothdurft, A. Forest inventory with long range and high-speed personal laser scanning (PLS) and simultaneous localization and mapping (SLAM) technology. Remote Sens. 2020, 12, 1509.

- Visser, R. Next Generation Timber Harvesting Systems: Opportunities for Remote Controlled and Autonomous Machinery; Project No: PRC437-1718; Forest & Wood Products Australia Limited: Melbourne, Australia, 2018.

- Visser, R.; Obi, O.F. Automation and robotics in forest harvesting operations: Identifying near-term opportunities. Croat. J. For. Eng. J. Theory Appl. For. Eng. 2021, 42, 13–24.

- Wells, L.A.; Chung, W. Evaluation of ground plane detection for estimating breast height in stereo images. For. Sci. 2020, 66, 612–622.

- Thomasson, J.A.; Baillie, C.P.; Antille, D.L.; McCarthy, C.L.; Lobsey, C.R. A review of the state of the art in agricultural automation. Part II: On-farm agricultural communications and connectivity. In Proceedings of the 2018 ASABE Annual International Meeting. American Society of Agricultural and Biological Engineers, Detroit, MI, USA, 29 July–1 August 2018; p. 1. [Google Scholar] [CrossRef]

- Vázquez-Arellano, M.; Griepentrog, H.W.; Reiser, D.; Paraforos, D.S. 3-D imaging systems for agricultural applications—A review. Sensors 2016, 16, 618. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Schueller, J.K. Engineering advancements. In Automation: The Future of Weed Control in Cropping Systems; Springer: Berlin/Heidelberg, Germany, 2014; pp. 35–49. [Google Scholar] [CrossRef]

- Claas Crop Sensor. 2022. Available online: https://www.claas.co.uk/products/easy-2018/precision-farming/crop-sensor-isaria (accessed on 6 March 2022).

- Goense, D.; Hofstee, J.; Van Bergeijk, J. An information model to describe systems for spatially variable field operations. Comput. Electron. Agric. 1996, 14, 197–214. [Google Scholar] [CrossRef]

- Gollob, C.; Ritter, T.; Wassermann, C.; Nothdurft, A. Influence of scanner position and plot size on the accuracy of tree detection and diameter estimation using terrestrial laser scanning on forest inventory plots. Remote Sens. 2019, 11, 1602. [Google Scholar] [CrossRef] [Green Version]

- Bont, L.G.; Maurer, S.; Breschan, J.R. Automated cable road layout and harvesting planning for multiple objectives in steep terrain. Forests 2019, 10, 687. [Google Scholar] [CrossRef] [Green Version]

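- Heinimann, H.R. Holzerntetechnik zur Sicherstellung einer minimalen Schutzwaldpflege: Bericht im Auftrag des Bundesamtes für Umwelt, Wald und Landschaft (BUWAL). [Timber Harvesting Technology to Ensure Minimal Protection Forest Management: Report Commissioned by the Federal Office for the Environment, Forests and Landscape (BUWAL)]. Interner Ber./ETH For. Eng. 2003, 12. [Google Scholar]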

- Dykstra, D.P.; Riggs, J.L. An application of facilities location theory to the design of forest harvesting areas. AIIE Trans. 1977, 9, 270–277. [Google Scholar] [CrossRef]

- Chung, W. Optimization of Cable Logging Layout Using a Heuristic Algorithm for Network Programming; Oregon State University: Corvallis, OR, USA, 2003. [Google Scholar]

- Epstein, R.; Weintraub, A.; Sapunar, P.; Nieto, E.; Sessions, J.B.; Sessions, J.; Bustamante, F.; Musante, H. A combinatorial heuristic approach for solving real-size machinery location and road design problems in forestry planning. Oper. Res. 2006, 54, 1017–1027. [Google Scholar] [CrossRef] [Green Version]

- Bont, L.; Heinimann, H.R.; Church, R.L. Optimizing cable harvesting layout when using variable-length cable roads in central Europe. Can. J. For. Res. 2014, 44, 949–960. [Google Scholar] [CrossRef]

- Pierzchała, M.; Kvaal, K.; Stampfer, K.; Talbot, B. Automatic recognition of work phases in cable yarding supported by sensor fusion. Int. J. For. Eng. 2018, 29, 12–20. [Google Scholar] [CrossRef]

- Abdullahi, H.S.; Mahieddine, F.; Sheriff, R.E. Technology impact on agricultural productivity: A review of precision agriculture using unmanned aerial vehicles. In International Conference on Wireless and Satellite Systems; Springer: Berlin/Heidelberg, Germany, 2015; pp. 388–400. [Google Scholar] [CrossRef]

- Chen, J.; Zhang, M.; Xu, B.; Sun, J.; Mujumdar, A.S. Artificial intelligence assisted technologies for controlling the drying of fruits and vegetables using physical fields: A review. Trends Food Sci. Technol. 2020, 105, 251–260. [Google Scholar] [CrossRef]

- Antille, D.L.; Lobsey, C.R.; McCarthy, C.L.; Thomasson, J.A.; Baillie, C.P. A review of the state of the art in agricultural automation. Part IV: Sensor-based nitrogen management technologies. In Proceedings of the 2018 ASABE Annual International Meeting. American Society of Agricultural and Biological Engineers, Detroit, MI, USA, 29 July–1 August 2018; p. 1. [Google Scholar] [CrossRef]

- Hague, T.; Tillett, N. Navigation and control of an autonomous horticultural robot. Mechatronics 1996, 6, 165–180. [Google Scholar] [CrossRef]

- Howard, C.N.; Kocher, M.F.; Hoy, R.M.; Blankenship, E.E. Testing the fuel efficiency of tractors with continuously variable and standard geared transmissions. Trans. ASABE 2013, 56, 869–879. [Google Scholar] [CrossRef] [Green Version]

- Ovaskainen, H.; Uusitalo, J.; Väätäinen, K. Characteristics and significance of a harvester operators’ working technique in thinnings. Int. J. For. Eng. 2004, 15, 67–77. [Google Scholar] [CrossRef]

- Dreger, F.A.; Rinkenauer, G. Cut to Length Harvester Operator Skill: How Human Planning and Motor Control Co-Evolve to Allow Expert Performance. Fruehjahrskongress 2020, Berlin: Digitaler Wandel, Digitale Arbeit, Digitaler Mensch? [Spring Congress 2020, Berlin: Digital Change, Digital Work, Digital Human?]. 2020. Available online: https://gfa2020.gesellschaft-fuer-arbeitswissenschaft.de/inhalt/D.1.3.pdf (accessed on 4 March 2022).

- Purfürst, F.T. Learning curves of harvester operators. Croat. J. For. Eng. J. Theory Appl. For. Eng. 2010, 31, 89–97. [Google Scholar]

- Intelligent Boom Control. 2022. Available online: https://www.deere.co.uk/en/forestry/ibc/ (accessed on 6 March 2022).

- Smart Crane. 2022. Available online: https://www.komatsuforest.com/explore/smart-crane-for-forwarders (accessed on 6 March 2022).

- Smart Control. 2022. Available online: https://www.palfingerepsilon.com/en/Epsolutions/Smart-Control (accessed on 4 March 2022).

- Manner, J.; Mörk, A.; Englund, M. Comparing forwarder boom-control systems based on an automatically recorded follow-up dataset. Silva Fenn. 2019, 53, 10161. [Google Scholar] [CrossRef] [Green Version]

- Englund, M.; Mörk, A.; Andersson, H.; Manner, J. Delautomation av Skotarkran–Utveckling och Utvärdering i Simulator. [Semi-Automated Forwarder Crane–Development and Evaluation in a Simulator]. 2017. Available online: https://www.skogforsk.se/cd_20190114162732/contentassets/e7e1a93a4ebd41c386b85dc3f566e5e8/delautomatiserad-skotarkran-utveckling-och-utvardering-i-simulator-arbetsrapport-932-2017.pdf (accessed on 3 March 2022).

- IBC: Operator’s Instructions 1WJ1110G004202-, 1WJ1210G002102-, 1WJ1510G003604-. 2022. Available online: https://www.johndeeretechinfo.com/search?p0=doc_type&p0_v=operators%20manuals&pattr=p0 (accessed on 7 March 2022).

- Hurst, W.; Mendoza, F.R.; Tekinerdogan, B. Augmented Reality in Precision Farming: Concepts and Applications. Smart Cities 2021, 4, 1454–1468. [Google Scholar] [CrossRef]

- Burdea, G.C.; Coiffet, P. Virtual Reality Technology; John Wiley & Sons: Hoboken, NJ, USA, 2003. [Google Scholar]

- Seth, A.; Vance, J.M.; Oliver, J.H. Virtual reality for assembly methods prototyping: A review. Virtual Real. 2011, 15, 5–20. [Google Scholar] [CrossRef] [Green Version]

- Schultheis, M.T.; Rizzo, A.A. The application of virtual reality technology in rehabilitation. Rehabil. Psychol. 2001, 46, 296. [Google Scholar] [CrossRef]

- Höllerer, T.; Feiner, S. Mobile augmented reality. In Telegeoinformatics: Location-Based Computing and Services; Routledge: London, UK, 2004; Volume 21. [Google Scholar]

- Lee, L.H.; Hui, P. Interaction methods for smart glasses: A survey. IEEE Access 2018, 6, 28712–28732. [Google Scholar] [CrossRef]

- Ponnusamy, V.; Natarajan, S.; Ramasamy, N.; Clement, C.; Rajalingam, P.; Mitsunori, M. An IoT-enabled augmented reality framework for plant disease detection. Revue D’Intell. Artif. 2021, 35, 185–192. [Google Scholar] [CrossRef]

- Caria, M.; Sara, G.; Todde, G.; Polese, M.; Pazzona, A. Exploring Smart Glasses for Augmented Reality: A Valuable and Integrative Tool in Precision Livestock Farming. Animals 2019, 9, 903. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Santana-Fernández, J.; Gómez-Gil, J.; del Pozo-San-Cirilo, L. Design and implementation of a GPS guidance system for agricultural tractors using augmented reality technology. Sensors 2010, 10, 10435–10447. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- De Castro Neto, M.; Cardoso, P. Augmented reality greenhouse. In Proceedings of the EFITA-WCCA-CIGR Conference “Sustainable Agriculture through ICT Innovation”, Turin, Italy, 24–27 June 2013; pp. 24–27. [Google Scholar]

- Vidal, N.R.; Vidal, R.A. Augmented reality systems for weed economic thresholds applications. Planta Daninha 2010, 28, 449–454. [Google Scholar] [CrossRef] [Green Version]

- Okayama, T.; Miyawaki, K. The “Smart Garden” system using augmented reality. IFAC Proc. Vol. 2013, 46, 307–310. [Google Scholar] [CrossRef]

- Sitompul, T.A.; Wallmyr, M. Using augmented reality to improve productivity and safety for heavy machinery operators: State of the art. In Proceedings of the 17th International Conference on Virtual-Reality Continuum and Its Applications in Industry, Brisbane, QLD, Australia, 14–16 November 2019; pp. 1–9. [Google Scholar] [CrossRef] [Green Version]

- Akyeampong, J.; Udoka, S.; Caruso, G.; Bordegoni, M. Evaluation of hydraulic excavator Human–Machine Interface concepts using NASA TLX. Int. J. Ind. Ergon. 2014, 44, 374–382. [Google Scholar] [CrossRef]

- Chen, Y.C.; Chi, H.L.; Kang, S.C.; Hsieh, S.H. A smart crane operations assistance system using augmented reality technology. In Proceedings of the 28th International Symposium on Automation and Robotics in Construction, ISARC 2011, Seoul, Korea, 29 June–2 July 2011; pp. 643–649. [Google Scholar]

- Kymäläinen, T.; Suominen, O.; Aromaa, S.; Goriachev, V. Science fiction prototypes illustrating future see-through digital structures in mobile work machines. In EAI International Conference on Technology, Innovation, Entrepreneurship and Education; Springer: Berlin/Heidelberg, Germany, 2017; pp. 179–193. [Google Scholar]

- Aromaa, S.; Goriachev, V.; Kymäläinen, T. Virtual prototyping in the design of see-through features in mobile machinery. Virtual Real. 2020, 24, 23–37. [Google Scholar] [CrossRef] [Green Version]

- Englund, M.; Lundström, H.; Brunberg, T.; Löfgren, B. Utvärdering av Head-Up Display för Visning av Apteringsinformation i Slutavverkning. [Evaluation of a Head-Up Display for Showing Bucking Information in Final Felling]; Technical Report; Skogforsk: Uppsala, Sweden, 2015. [Google Scholar]

- Fang, Y.; Cho, Y.K. Effectiveness analysis from a cognitive perspective for a real-time safety assistance system for mobile crane lifting operations. J. Constr. Eng. Manag. 2017, 143, 05016025. [Google Scholar] [CrossRef]

- HIAB HIVISION. 2022. Available online: https://www.hiab.com/en-us/digital-solutions/hivision (accessed on 6 March 2022).

- Virtual Drive. 2022. Available online: https://www.palfingerepsilon.com/en/Epsolutions/Virtual-Drive (accessed on 6 March 2022).

- Virtual Training for Ponsse. 2022. Available online: http://www.upknowledge.com/ponsse (accessed on 3 March 2022).

- Freund, E.; Krämer, M.; Rossmann, J. Towards realistic forest machine simulators. In Proceedings of the Modeling and Simulation Technologies Conference, Denver, CO, USA, 14–17 August 2000; p. 4095. [Google Scholar] [CrossRef]

- Pantazi, X.E.; Moshou, D.; Bochtis, D. Intelligent Data Mining and Fusion Systems in Agriculture; Academic Press: Cambridge, MA, USA, 2019. [Google Scholar]

- Boginski, V.L.; Commander, C.W.; Pardalos, P.M.; Ye, Y. Sensors: Theory, Algorithms, and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2011; Volume 61. [Google Scholar]

- Al Hage, J.; El Najjar, M.E.; Pomorski, D. Multi-sensor fusion approach with fault detection and exclusion based on the Kullback–Leibler Divergence: Application on collaborative multi-robot system. Inf. Fusion 2017, 37, 61–76. [Google Scholar] [CrossRef]

- Ghosh, N.; Paul, R.; Maity, S.; Maity, K.; Saha, S. Fault Matters: Sensor data fusion for detection of faults using Dempster–Shafer theory of evidence in IoT-based applications. Expert Syst. Appl. 2020, 162, 113887. [Google Scholar] [CrossRef]

- Saranti, A.; Taraghi, B.; Ebner, M.; Holzinger, A. Property-based testing for parameter learning of probabilistic graphical models. In International Cross-Domain Conference for Machine Learning and Knowledge Extraction; Springer: Berlin/Heidelberg, Germany, 2020; pp. 499–515. [Google Scholar] [CrossRef]

- Aggarwal, C.C. An introduction to outlier analysis. In Outlier Analysis; Springer: Berlin/Heidelberg, Germany, 2017; pp. 1–34. [Google Scholar]

- Moshou, D.; Bravo, C.; Oberti, R.; West, J.; Bodria, L.; McCartney, A.; Ramon, H. Plant disease detection based on data fusion of hyper-spectral and multi-spectral fluorescence imaging using Kohonen maps. Real-Time Imaging 2005, 11, 75–83. [Google Scholar] [CrossRef]

- Lee, W.S.; Alchanatis, V.; Yang, C.; Hirafuji, M.; Moshou, D.; Li, C. Sensing technologies for precision specialty crop production. Comput. Electron. Agric. 2010, 74, 2–33. [Google Scholar] [CrossRef]

- Moshou, D.; Kateris, D.; Gravalos, I.; Loutridis, S.; Sawalhi, N.; Gialamas, T.; Xyradakis, P.; Tsiropoulos, Z. Determination of fault topology in mechanical subsystems of agricultural machinery based on feature fusion and neural networks. In Proceedings of the Trends in Agricultural Engineering 2010, Prague, Czech Republic, 7–10 September 2010; p. 101. [Google Scholar]

- Géron, A. Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems; O’Reilly Media, Inc.: Newton, MA, USA, 2019. [Google Scholar]

- Zheng, A.; Casari, A. Feature Engineering for Machine Learning: Principles and Techniques for Data Scientists; O’Reilly Media, Inc.: Newton, MA, USA, 2018. [Google Scholar]

- Pantazi, X.E.; Moshou, D.; Mouazen, A.M.; Alexandridis, T.; Kuang, B. Data Fusion of Proximal Soil Sensing and Remote Crop Sensing for the Delineation of Management Zones in Arable Crop Precision Farming. In Proceedings of the HAICTA, Kavala, Greece, 17–20 September 2015; pp. 765–776. [Google Scholar]

- Yin, H.; Cao, Y.; Marelli, B.; Zeng, X.; Mason, A.J.; Cao, C. Soil Sensors and Plant Wearables for Smart and Precision Agriculture. Adv. Mater. 2021, 33, 2007764. [Google Scholar] [CrossRef] [PubMed]

- Liakos, K.G.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine learning in agriculture: A review. Sensors 2018, 18, 2674. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423. [Google Scholar] [CrossRef] [Green Version]

- MacKay, D.J. Information Theory, Inference and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602. [Google Scholar]

- Lapan, M. Deep Reinforcement Learning Hands-On: Apply Modern RL Methods, with Deep Q-Networks, Value Iteration, Policy Gradients, TRPO, AlphaGo Zero and More; Packt Publishing Ltd.: Birmingham, UK, 2018. [Google Scholar]

- Montavon, G.; Binder, A.; Lapuschkin, S.; Samek, W.; Müller, K.R. Layer-Wise Relevance Propagation: An Overview. In Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Springer: Berlin/Heidelberg, Germany, 2019; pp. 193–209. [Google Scholar]

- Wirth, C.; Akrour, R.; Neumann, G.; Fürnkranz, J. A survey of preference-based reinforcement learning methods. J. Mach. Learn. Res. 2017, 18, 1–46. [Google Scholar]

- Lee, K.; Smith, L.M.; Abbeel, P. PEBBLE: Feedback-Efficient Interactive Reinforcement Learning via Relabeling Experience and Unsupervised Pre-training. arXiv 2021, arXiv:2106.05091. [Google Scholar]

- Luo, F.; Yang, P.; Li, S.; Ren, X.; Sun, X. CAPT: Contrastive pre-training for learning denoised sequence representations. arXiv 2020, arXiv:2010.06351. [Google Scholar] [CrossRef]

- Srinivas, A.; Laskin, M.; Abbeel, P. CURL: Contrastive unsupervised representations for reinforcement learning. arXiv 2020, arXiv:2004.04136. [Google Scholar] [CrossRef]

- Liu, H.; Abbeel, P. Unsupervised Active Pre-Training for Reinforcement Learning. ICLR 2021 2020, unpublished paper.

- Xu, Z.; Wu, K.; Che, Z.; Tang, J.; Ye, J. Knowledge Transfer in Multi-Task Deep Reinforcement Learning for Continuous Control. arXiv 2020, arXiv:2010.07494. [Google Scholar]

- Lin, K.; Gong, L.; Li, X.; Sun, T.; Chen, B.; Liu, C.; Zhang, Z.; Pu, J.; Zhang, J. Exploration-efficient Deep Reinforcement Learning with Demonstration Guidance for Robot Control. arXiv 2020, arXiv:2002.12089. [Google Scholar] [CrossRef]

- Wang, X.; Lee, K.; Hakhamaneshi, K.; Abbeel, P.; Laskin, M. Skill preferences: Learning to extract and execute robotic skills from human feedback. In Proceedings of the 5th Conference on Robot Learning (CoRL 2021), London, UK, 8 November 2021; PMLR: New York, NY, USA, 2022. [Google Scholar]

- Ajay, A.; Kumar, A.; Agrawal, P.; Levine, S.; Nachum, O. OPAL: Offline primitive discovery for accelerating offline reinforcement learning. arXiv 2020, arXiv:2010.13611. [Google Scholar] [CrossRef]

- Fang, M.; Li, Y.; Cohn, T. Learning how to Active Learn: A Deep Reinforcement Learning Approach. arXiv 2017, arXiv:1708.02383. [Google Scholar]

- Rudovic, O.; Zhang, M.; Schuller, B.; Picard, R. Multi-modal active learning from human data: A deep reinforcement learning approach. In Proceedings of the 2019 International Conference on Multimodal Interaction, Suzhou, China, 14–18 October 2019; pp. 6–15. [Google Scholar] [CrossRef] [Green Version]

- Kassahun, A.; Bloo, R.; Catal, C.; Mishra, A. Dairy Farm Management Information Systems. Electronics 2022, 11, 239. [Google Scholar] [CrossRef]

- Wang, H.; Ren, Y.; Meng, Z. A Farm Management Information System for Semi-Supervised Path Planning and Autonomous Vehicle Control. Sustainability 2021, 13, 7497. [Google Scholar] [CrossRef]

- Groeneveld, D.; Tekinerdogan, B.; Garousi, V.; Catal, C. A domain-specific language framework for farm management information systems in precision agriculture. Precis. Agric. 2021, 22, 1067–1106. [Google Scholar] [CrossRef]

- Bechar, A.; Vigneault, C. Agricultural robots for field operations: Concepts and components. Biosyst. Eng. 2016, 149, 94–111. [Google Scholar] [CrossRef]

- Roshanianfard, A.; Noguchi, N.; Okamoto, H.; Ishii, K. A review of autonomous agricultural vehicles (The experience of Hokkaido University). J. Terramechanics 2020, 91, 155–183. [Google Scholar] [CrossRef]

- Bhatt, U.; Xiang, A.; Sharma, S.; Weller, A.; Taly, A.; Jia, Y.; Ghosh, J.; Puri, R.; Moura, J.M.F.; Eckersley, P. Explainable Machine Learning in Deployment. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, FAT* ’20, Barcelona, Spain, 27–30 January 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 648–657. [Google Scholar] [CrossRef]

- Lapuschkin, S.; Wäldchen, S.; Binder, A.; Montavon, G.; Samek, W.; Müller, K.R. Unmasking Clever Hans predictors and assessing what machines really learn. Nat. Commun. 2019, 10, 1–8. [Google Scholar] [CrossRef] [Green Version]

- Martins, N.C.; Marques, B.; Alves, J.; Araújo, T.; Dias, P.; Santos, B.S. Augmented reality situated visualization in decision-making. In Multimedia Tools and Applications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 1–24. [Google Scholar] [CrossRef]

- Kim, K.; Billinghurst, M.; Bruder, G.; Duh, H.B.L.; Welch, G.F. Revisiting trends in augmented reality research: A review of the 2nd decade of ISMAR (2008–2017). IEEE Trans. Vis. Comput. Graph. 2018, 24, 2947–2962. [Google Scholar] [CrossRef] [PubMed]

- Julier, S.; Lanzagorta, M.; Baillot, Y.; Rosenblum, L.; Feiner, S.; Hollerer, T.; Sestito, S. Information filtering for mobile augmented reality. In Proceedings of the IEEE and ACM International Symposium on Augmented Reality (ISAR 2000), Munich, Germany, 5–6 October 2000; pp. 3–11. [Google Scholar] [CrossRef] [Green Version]

- Xi, M.; Adcock, M.; McCulloch, J. Future Agriculture Farm Management using Augmented Reality. In Proceedings of the 2018 IEEE Workshop on Augmented and Virtual Realities for Good (VAR4Good), Reutlingen, Germany, 18 March 2018; pp. 1–3. [Google Scholar] [CrossRef]

- Rauschnabel, P.A.; Brem, A.; Ivens, B.S. Who will buy smart glasses? Empirical results of two pre-market-entry studies on the role of personality in individual awareness and intended adoption of Google Glass wearables. Comput. Hum. Behav. 2015, 49, 635–647. [Google Scholar] [CrossRef]

- Google. Google Meet on Glass. Available online: https://www.google.com/glass/meet-on-glass/ (accessed on 6 March 2022).

- Marques, B.; Santos, B.S.; Araújo, T.; Martins, N.C.; Alves, J.B.; Dias, P. Situated Visualization in the Decision Process Through Augmented Reality. In Proceedings of the 2019 23rd International Conference Information Visualisation (IV), Paris, France, 2–5 July 2019; pp. 13–18. [Google Scholar] [CrossRef]

- Ludeña-Choez, J.; Choquehuanca-Zevallos, J.J.; Mayhua-López, E. Sensor nodes fault detection for agricultural wireless sensor networks based on NMF. Comput. Electron. Agric. 2019, 161, 214–224. [Google Scholar] [CrossRef]

- Cecchini, M.; Piccioni, F.; Ferri, S.; Coltrinari, G.; Bianchini, L.; Colantoni, A. Preliminary investigation on systems for the preventive diagnosis of faults on agricultural operating machines. Sensors 2021, 21, 1547. [Google Scholar] [CrossRef]

- Holzinger, A. Human–Computer Interaction and Knowledge Discovery (HCI-KDD): What is the benefit of bringing those two fields to work together? In Multidisciplinary Research and Practice for Information Systems; Springer Lecture Notes in Computer Science LNCS 8127; Cuzzocrea, A., Kittl, C., Simos, D.E., Weippl, E., Xu, L., Eds.; Springer: Heidelberg/Berlin, Germany; New York, NY, USA, 2013; pp. 319–328. [Google Scholar] [CrossRef] [Green Version]

- Holzinger, A.; Kargl, M.; Kipperer, B.; Regitnig, P.; Plass, M.; Müller, H. Personas for Artificial Intelligence (AI): An Open Source Toolbox. IEEE Access 2022, 10, 23732–23747. [Google Scholar] [CrossRef]

- Hussain, Z.; Slany, W.; Holzinger, A. Current State of Agile User-Centered Design: A Survey. In HCI and Usability for e-Inclusion, USAB 2009; Lecture Notes in Computer Science, LNCS 5889; Springer: Berlin/Heidelberg, Germany, 2009; pp. 416–427. [Google Scholar] [CrossRef]

- Hussain, Z.; Slany, W.; Holzinger, A. Investigating Agile User-Centered Design in Practice: A Grounded Theory Perspective. In HCI and Usability for e-Inclusion; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5889, pp. 279–289. [Google Scholar] [CrossRef]

- Holzinger, A.; Malle, B.; Kieseberg, P.; Roth, P.M.; Müller, H.; Reihs, R.; Zatloukal, K. Towards the Augmented Pathologist: Challenges of Explainable-AI in Digital Pathology. arXiv 2017, arXiv:1712.06657. [Google Scholar]

- Sorantin, E.; Grasser, M.G.; Hemmelmayr, A.; Tschauner, S.; Hrzic, F.; Weiss, V.; Lacekova, J.; Holzinger, A. The augmented radiologist: Artificial intelligence in the practice of radiology. Pediatr. Radiol. 2021, 51, 1–13. [Google Scholar] [CrossRef]

- ISO 14044:2006; Environmental Management—Life Cycle Assessment—Requirements and Guidelines. ISO: Geneva, Switzerland, 2006; pp. 1–46.

- Swarr, T.E.; Hunkeler, D.; Klöpffer, W.; Pesonen, H.L.; Ciroth, A.; Brent, A.C.; Pagan, R. Environmental Life-Cycle Costing: A Code of Practice; Springer: Berlin/Heidelberg, Germany, 2011. [Google Scholar] [CrossRef]

- Ciroth, A.; Finkbeiner, M.; Traverso, M.; Hildenbrand, J.; Kloepffer, W.; Mazijn, B.; Prakash, S.; Sonnemann, G.; Valdivia, S.; Ugaya, C.M.L.; et al. Towards a life cycle sustainability assessment: Making informed choices on products. In Report of the UNEP/SETAC Life Cycle Initiative Programme; UNEP: Nairobi, Kenya, 2011. [Google Scholar]

- Lehmann, A.; Zschieschang, E.; Traverso, M.; Finkbeiner, M.; Schebek, L. Social aspects for sustainability assessment of technologies—Challenges for social life cycle assessment (SLCA). Int. J. Life Cycle Assess. 2013, 18, 1581–1592. [Google Scholar] [CrossRef]

- Zhang, Q. Control of Precision Agriculture Production. In Precision Agriculture Technology for Crop Farming; Washington State University Prosser: Prosser, WA, USA, 2015; pp. 103–132. [Google Scholar]

- Taylor, S.L.; Raun, W.R.; Solie, J.B.; Johnson, G.V.; Stone, M.L.; Whitney, R.W. Use of spectral radiance for correcting nitrogen deficiencies and estimating soil test variability in an established bermudagrass pasture. J. Plant Nutr. 1998, 21, 2287–2302. [Google Scholar] [CrossRef]

- Carr, P.; Carlson, G.; Jacobsen, J.; Nielsen, G.; Skogley, E. Farming soils, not fields: A strategy for increasing fertilizer profitability. J. Prod. Agric. 1991, 4, 57–61. [Google Scholar] [CrossRef]

- Li, A.; Duval, B.D.; Anex, R.; Scharf, P.; Ashtekar, J.M.; Owens, P.R.; Ellis, C. A case study of environmental benefits of sensor-based nitrogen application in corn. J. Environ. Qual. 2016, 45, 675–683. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Ehlert, D.; Schmerler, J.; Voelker, U. Variable rate nitrogen fertilisation of winter wheat based on a crop density sensor. Precis. Agric. 2004, 5, 263–273. [Google Scholar] [CrossRef]

- Meyer-Aurich, A.; Gandorfer, M.; Heißenhuber, A. Economic analysis of precision farming technologies at the farm level: Two German case studies. In Agricultural Systems: Economics, Technology, and Diversity; Nova Science Publishers: Hauppauge, NY, USA, 2008; pp. 67–76. [Google Scholar]

- Meyer-Aurich, A.; Griffin, T.W.; Herbst, R.; Giebel, A.; Muhammad, N. Spatial econometric analysis of a field-scale site-specific nitrogen fertilizer experiment on wheat (Triticum aestivum L.) yield and quality. Comput. Electron. Agric. 2010, 74, 73–79. [Google Scholar] [CrossRef]

- Nishant, R.; Kennedy, M.; Corbett, J. Artificial intelligence for sustainability: Challenges, opportunities, and a research agenda. Int. J. Inf. Manag. 2020, 53, 102104. [Google Scholar] [CrossRef]

- Gandorfer, M.; Schleicher, S.; Heuser, S.; Pfeiffer, J.; Demmel, M. Landwirtschaft 4.0–Digitalisierung und ihre Herausforderungen. [Agriculture 4.0: Digitalization and Its Challenges]. Ackerbau-Tech. Lösungen für die Zuk. 2017, 9, 9–19. [Google Scholar]

- Ciruela-Lorenzo, A.M.; Del-Aguila-Obra, A.R.; Padilla-Meléndez, A.; Plaza-Angulo, J.J. Digitalization of agri-cooperatives in the smart agriculture context. Proposal of a digital diagnosis tool. Sustainability 2020, 12, 1325. [Google Scholar] [CrossRef] [Green Version]

- Mazzetto, F.; Gallo, R.; Riedl, M.; Sacco, P. Proposal of an ontological approach to design and analyse farm information systems to support Precision Agriculture techniques. In IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2019; Volume 275, p. 012008. [Google Scholar] [CrossRef]

- Jin, X.B.; Yang, N.X.; Wang, X.Y.; Bai, Y.T.; Su, T.L.; Kong, J.L. Hybrid Deep Learning Predictor for Smart Agriculture Sensing Based on Empirical Mode Decomposition and Gated Recurrent Unit Group Model. Sensors 2020, 20, 1334. [Google Scholar] [CrossRef] [Green Version]

- Sundmaeker, H.; Verdouw, C.; Wolfert, S.; Freire, L.P.; Vermesan, O.; Friess, P. Internet of food and farm 2020. In Digitising the Industry-Internet of Things Connecting Physical, Digital and Virtual Worlds; River Publishers: Delft, The Netherlands, 2016; Volume 129, p. 4. [Google Scholar]

- Wolfert, S.; Ge, L.; Verdouw, C.; Bogaardt, M.J. Big data in smart farming—A review. Agric. Syst. 2017, 153, 69–80. [Google Scholar] [CrossRef]

- Steward, B.L.; Gai, J.; Tang, L. The use of agricultural robots in weed management and control. Robot. Autom. Improv. Agric. 2019, 44, 1–25. [Google Scholar] [CrossRef] [Green Version]

- Mahtani, A.; Sanchez, L.; Fernández, E.; Martinez, A. Effective Robotics Programming with ROS; Packt Publishing Ltd.: Birmingham, UK, 2016. [Google Scholar]

- Li, L.; Zhang, Q.; Huang, D. A review of imaging techniques for plant phenotyping. Sensors 2014, 14, 20078–20111. [Google Scholar] [CrossRef]

- Gongal, A.; Amatya, S.; Karkee, M.; Zhang, Q.; Lewis, K. Sensors and systems for fruit detection and localization: A review. Comput. Electron. Agric. 2015, 116, 8–19. [Google Scholar] [CrossRef]

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90. [Google Scholar] [CrossRef] [Green Version]

- Milioto, A.; Lottes, P.; Stachniss, C. Real-time semantic segmentation of crop and weed for precision agriculture robots leveraging background knowledge in CNNs. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 2229–2235. [Google Scholar] [CrossRef] [Green Version]

- Zheng, Y.Y.; Kong, J.L.; Jin, X.B.; Wang, X.Y.; Su, T.L.; Zuo, M. CropDeep: The crop vision dataset for deep-learning-based classification and detection in precision agriculture. Sensors 2019, 19, 1058. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Dos Santos Ferreira, A.; Freitas, D.M.; da Silva, G.G.; Pistori, H.; Folhes, M.T. Unsupervised deep learning and semi-automatic data labeling in weed discrimination. Comput. Electron. Agric. 2019, 165, 104963. [Google Scholar] [CrossRef]

- Ribes, M.; Russias, G.; Tregoat, D.; Fournier, A. Towards Low-Cost Hyperspectral Single-Pixel Imaging for Plant Phenotyping. Sensors 2020, 20, 1132. [Google Scholar] [CrossRef] [Green Version]

- Hashem, I.A.T.; Yaqoob, I.; Anuar, N.B.; Mokhtar, S.; Gani, A.; Khan, S.U. The rise of “big data” on cloud computing: Review and open research issues. Inf. Syst. 2015, 47, 98–115. [Google Scholar] [CrossRef]

- De Mauro, A.; Greco, M.; Grimaldi, M. A formal definition of Big Data based on its essential features. Libr. Rev. 2016, 65, 122–135. [Google Scholar] [CrossRef]

- Kshetri, N. The emerging role of Big Data in key development issues: Opportunities, challenges, and concerns. Big Data Soc. 2014, 1, 2053951714564227. [Google Scholar] [CrossRef] [Green Version]

- Tomkos, I.; Klonidis, D.; Pikasis, E.; Theodoridis, S. Toward the 6G network era: Opportunities and challenges. IT Prof. 2020, 22, 34–38. [Google Scholar] [CrossRef]

- Medici, M.; Mattetti, M.; Canavari, M. A conceptual framework for telemetry data use in agriculture. In Precision Agriculture ’21; Stafford, J.V., Ed.; Wageningen Academic Publishers: Wageningen, The Netherlands, 2021; pp. 935–940. [Google Scholar] [CrossRef]

- Shneiderman, B. The eyes have it: A task by data type taxonomy for information visualizations. In The Craft of Information Visualization; Elsevier: Amsterdam, The Netherlands, 2003; pp. 364–371. [Google Scholar]

- Courteau, J. Robotics in Forest Harvesting Machines; Paper-American Society of Agricultural Engineers (USA): St. Joseph Charter Township, MI, USA, 1989. [Google Scholar]

- Spot. 2022. Available online: https://www.bostondynamics.com/products/spot (accessed on 6 March 2022).


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Holzinger, A.; Saranti, A.; Angerschmid, A.; Retzlaff, C.O.; Gronauer, A.; Pejakovic, V.; Medel-Jimenez, F.; Krexner, T.; Gollob, C.; Stampfer, K. Digital Transformation in Smart Farm and Forest Operations Needs Human-Centered AI: Challenges and Future Directions. Sensors 2022, 22, 3043. https://doi.org/10.3390/s22083043
