EOG-Based Human–Computer Interface: 2000–2020 Review

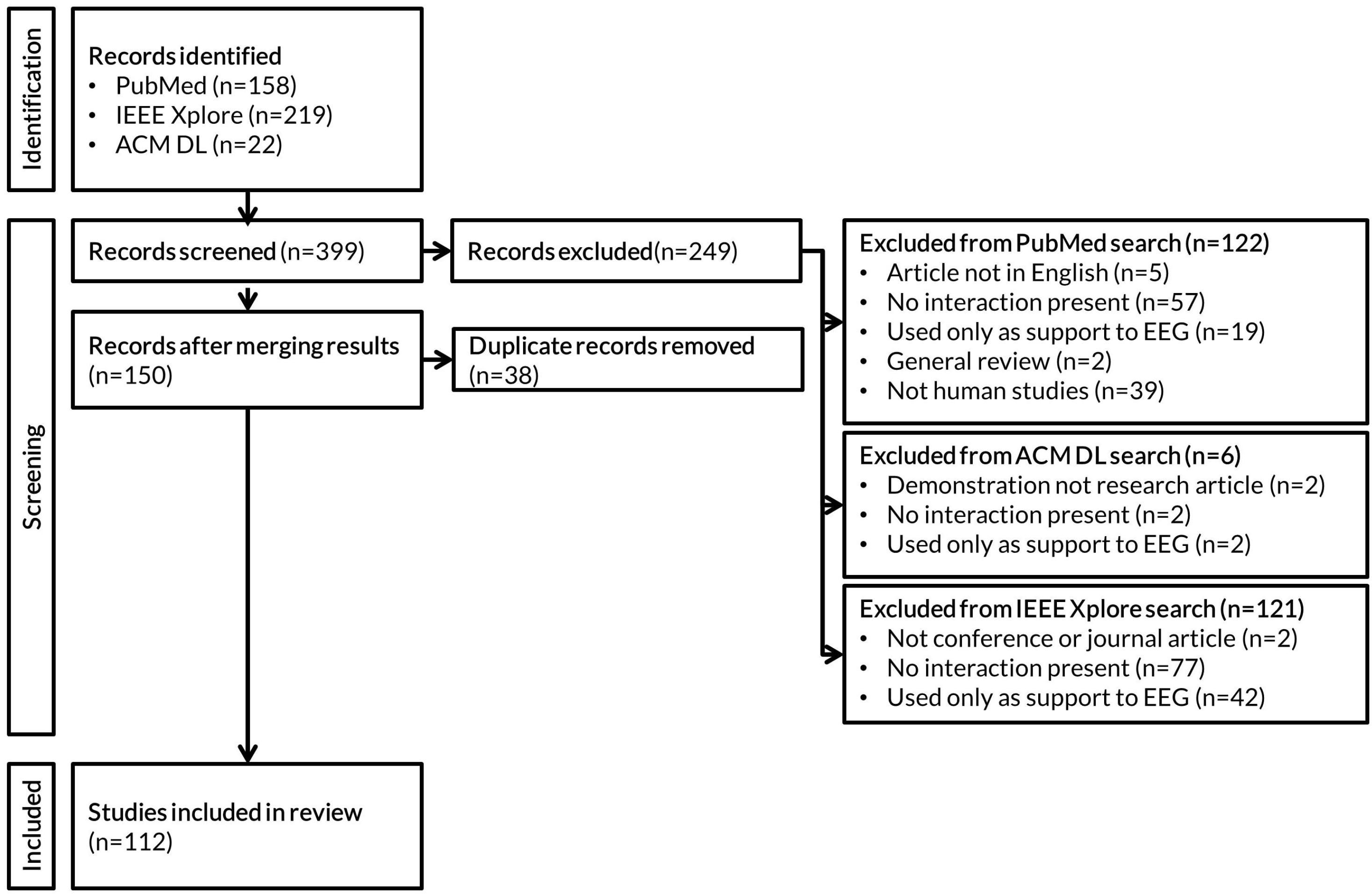

Abstract

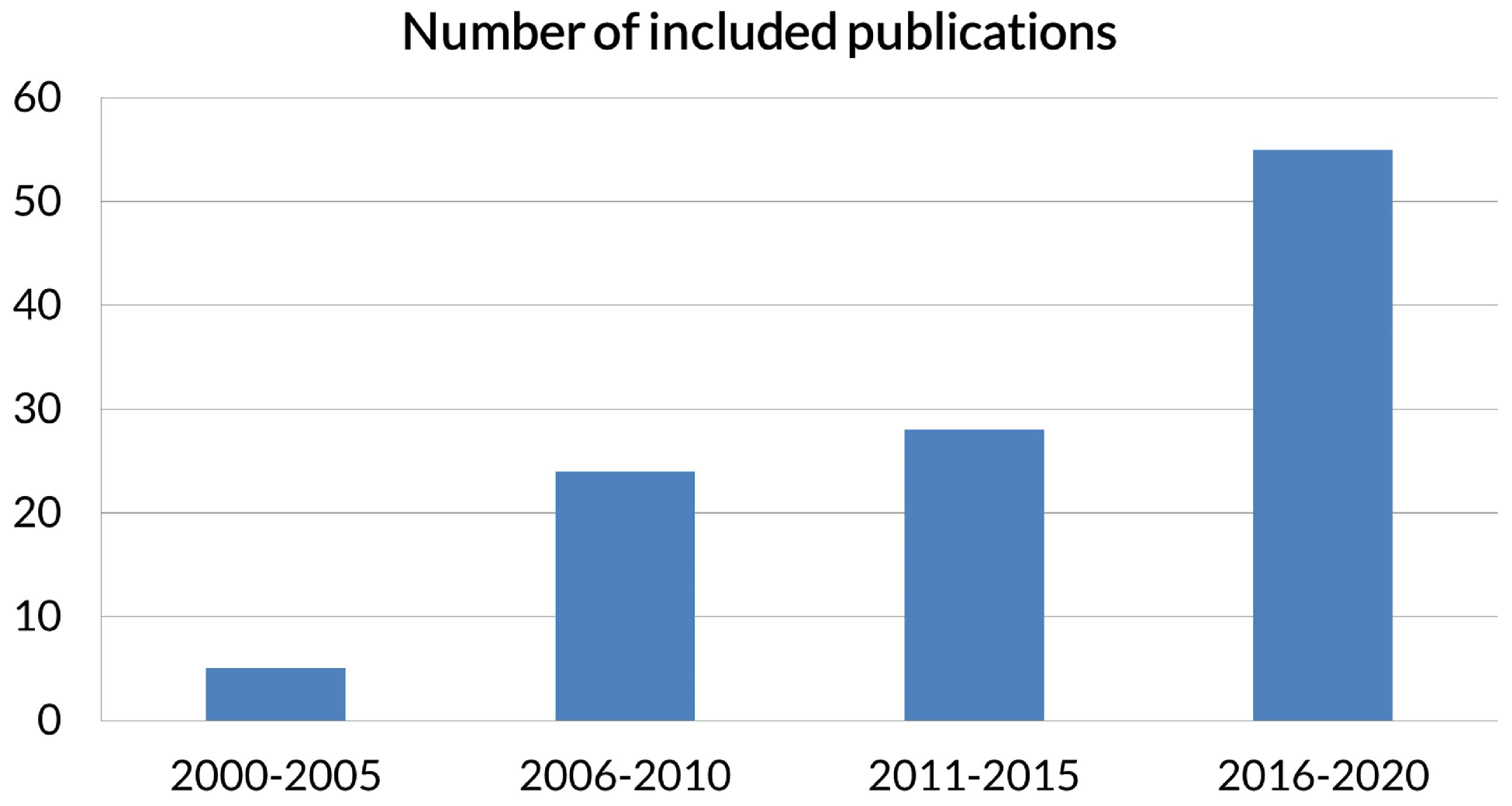

1. Introduction

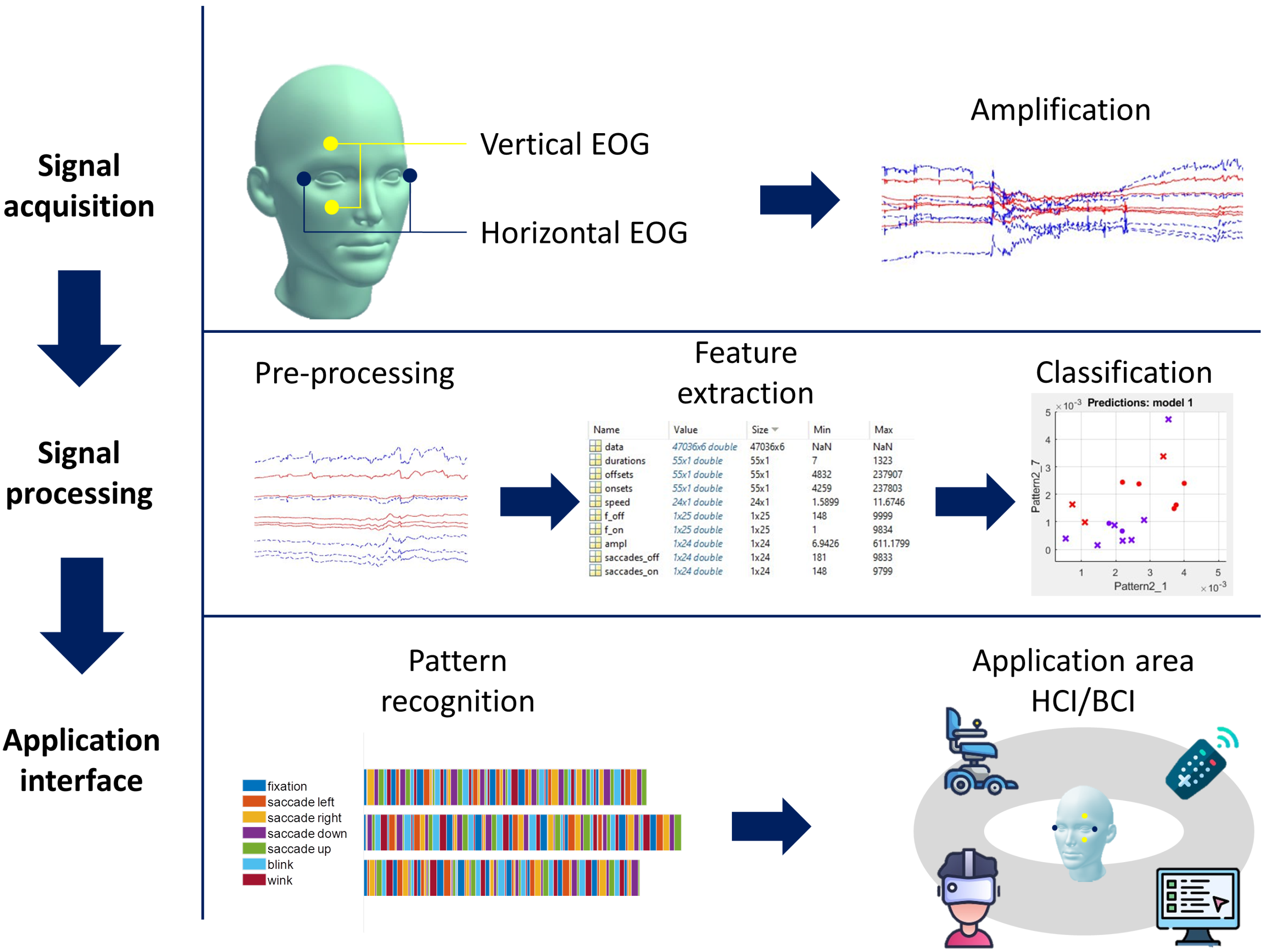

2. Acquiring EOG Signal

3. EOG Devices

- (i) The standard EOG montage places two electrodes at the left and right outer corners (canthi) of the participant’s eyes to measure horizontal movement, and two further electrodes above and below one eye to measure vertical movement. A ground electrode is positioned at AFz (as defined in the International 10/10 system), and the reference electrode is placed on the right or left earlobe [10].

- (ii) Alternative EOG montages place the surface electrodes in less common positions, such as around the ears. Manabe et al. [11] placed four electrodes on the head at the positions of ordinary headphone cushions. Ang et al. [12] used an alternative EOG system in which activity was recorded with a single-channel commercial headset, the NeuroSky MindWave Mobile (NeuroSky, San Jose, CA, USA). The headset uses a single dry stainless-steel electrode placed on the participant’s forehead to capture electrical signals produced by the brain and the muscles.

- (iii) The J!NS MEME glasses (JINS MEME ES Digital Innovation Lab, Fujitsu, Tokyo, Japan) employ three-point stainless-steel EOG electrodes on the nose bridge, with an inertial measurement unit (IMU), a battery, and a Bluetooth module housed in the temples. The J!NS MEME is not a general-purpose computing interface but a sensing device: it streams sensor data to smartphones or laptops via Bluetooth. The data comprise vertical and horizontal EOG signals together with accelerometer and gyroscope readings. With an operating time of 8 hours, the glasses allow long real-time streaming of eye and head movements. They are unobtrusive and look like ordinary glasses.

- (iv) The customized EOG device category comprises systems that the research groups built themselves to suit their objectives. Here we briefly present each customized device included in this review. Vehkaoja et al. [13] used a prototype wearable, wireless device designed to record EOG and facial electromyography (EMG) from the participant’s forehead; it was based on five easy-to-use textile electrodes inserted into a head cap. Bulling et al. [14] employed a wearable, standalone device consisting of dry electrodes integrated into goggles and a small pocket-worn component with a digital signal processor for real-time EOG processing. Bulling et al. [15] recorded EOG data with the commercial Mobi system from Twente Medical Systems International (TMSi); the four-channel EOG device was worn on a belt around the participant’s waist, and data were transmitted via Bluetooth. Kuo et al. [16] captured the horizontal gaze direction to control wheelchair driving, mounting left and right surface electrodes on a pair of eyeglasses as a compact module. Zhang et al. [17] developed a wireless, lightweight head-mounted system that measured both EOG and EEG for interaction in a virtual reality environment. They positioned six horizontal and vertical electrodes symmetrically around both eyes, and the device captured the azimuth, elevation, and vergence of gaze. Xiao et al. [18] proposed a single-channel EOG device that enables real-time interaction with a virtual reality environment. They designed a graphical user interface containing several buttons for the EOG-based BCI in virtual reality; the user blinked, and the algorithm identified the blinks to detect the user’s target button. Vidal et al. [19] measured the amplitude and duration of eye movements in response to a stimulus with an elaborate experimental system consisting of three devices: EOG electrodes recorded following the Mobile Brain/Body Imaging (MoBI) approach developed by Scott Makeig’s group, an infrared eye tracker from Ergoneers GmbH, and a cap with an inertial measurement unit to record head movements. Iáñez et al. [20] integrated five EOG electrodes into a pair of glasses, together with electronic and mechanical elements including a printed circuit board, batteries, a communication module, electrode holders, lenses, and a frame. English et al. [21] used four electrodes of the Emotiv EEG headset (San Francisco, CA, USA) to process both small- and large-amplitude EOG signals; their EyePhone system worked while the user was sitting, standing, and walking. Valeriani et al. [22] recorded EOG signals with two facial electrodes placed on the forehead; interaction relied on eye winks, detected by comparing peak amplitudes, and on an app installed on the smartphone. Kosmyna et al. [23] integrated EEG and EOG electrodes, an amplifier, a Bluetooth module, and a speaker for bone-conduction auditory feedback into a pair of glasses; alternatively, the wearer could receive feedback or nudges through a wireless vibrating brooch.

Despite the diversity of acquisition devices, all of these studies shared the next step: feature extraction.
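The standard montage in (i) yields two bipolar channels, one horizontal and one vertical. The minimal sketch below assumes that each electrode is digitized against the shared earlobe reference; the class `MonopolarEOG` and the function `bipolar_channels` are hypothetical names for illustration. It shows why the subtraction works: any potential common to both electrodes of a pair, including the reference, cancels. The AFz ground serves the amplifier and does not enter the arithmetic.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MonopolarEOG:
    """Electrode samples in microvolts, each recorded against a common
    earlobe reference (hypothetical input format for illustration)."""
    left_canthus: List[float]
    right_canthus: List[float]
    above_eye: List[float]
    below_eye: List[float]


def bipolar_channels(rec: MonopolarEOG) -> Tuple[List[float], List[float]]:
    """Return (horizontal, vertical) bipolar EOG channels.

    Subtracting two electrodes that share the same reference cancels the
    reference potential. Sign convention (a choice, not a standard):
    horizontal = left - right, vertical = above - below.
    """
    n = len(rec.left_canthus)
    others = (rec.right_canthus, rec.above_eye, rec.below_eye)
    if any(len(ch) != n for ch in others):
        raise ValueError("all electrode channels must have the same length")
    horizontal = [left - right for left, right in zip(rec.left_canthus, rec.right_canthus)]
    vertical = [up - down for up, down in zip(rec.above_eye, rec.below_eye)]
    return horizontal, vertical


# Hypothetical two-sample recording, in microvolts.
rec = MonopolarEOG(
    left_canthus=[10.0, 60.0],
    right_canthus=[12.0, -40.0],
    above_eye=[5.0, 5.0],
    below_eye=[4.0, 3.0],
)
h, v = bipolar_channels(rec)
print(h, v)  # [-2.0, 100.0] [1.0, 2.0]
```

Adding the same offset to every electrode, as a drifting reference would, leaves both bipolar channels unchanged.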
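Zhang et al. [17] report capturing the azimuth, elevation, and vergence of gaze from electrodes placed around both eyes, but the computation is not detailed here. The following is only a geometric sketch, not their method. It assumes that each eye’s horizontal and vertical EOG has already been isolated and converted to degrees with a hypothetical linear gain (`eog_to_degrees`). Azimuth and elevation are taken as the mean of the two eyes, and vergence as the left-minus-right horizontal angle. The `fixation_distance_m` helper additionally assumes a target straight ahead and an interpupillary distance of 63 mm.

```python
import math
from dataclasses import dataclass


@dataclass
class BinocularGaze:
    azimuth_deg: float    # mean horizontal angle of both eyes, positive = rightward
    elevation_deg: float  # mean vertical angle of both eyes, positive = upward
    vergence_deg: float   # left minus right horizontal angle, positive = converging


def eog_to_degrees(eog_uv: float, gain_uv_per_deg: float) -> float:
    """Linear calibration from microvolts to degrees (assumed per-eye gain)."""
    return eog_uv / gain_uv_per_deg


def binocular_gaze(left_h: float, right_h: float,
                   left_v: float, right_v: float) -> BinocularGaze:
    """Combine per-eye angles (degrees) into azimuth, elevation and vergence."""
    return BinocularGaze(
        azimuth_deg=(left_h + right_h) / 2.0,
        elevation_deg=(left_v + right_v) / 2.0,
        vergence_deg=left_h - right_h,
    )


def fixation_distance_m(vergence_deg: float, ipd_m: float = 0.063) -> float:
    """Distance (m) to a target straight ahead, from symmetric vergence.

    ipd_m is an assumed interpupillary distance; zero or negative vergence
    (parallel or diverging eyes) is treated as a target at infinity.
    """
    if vergence_deg <= 0.0:
        return math.inf
    return (ipd_m / 2.0) / math.tan(math.radians(vergence_deg) / 2.0)


# Hypothetical calibrated angles: each eye rotated 2 degrees toward the nose.
gaze = binocular_gaze(left_h=2.0, right_h=-2.0, left_v=0.0, right_v=0.0)
print(gaze, round(fixation_distance_m(gaze.vergence_deg), 3))
```

Because vergence is the small difference between two noisy angles, it is far more sensitive to calibration error than azimuth, which averages the two eyes.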
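For the wink-based interaction of Valeriani et al. [22], the description above states only that winks were detected by comparing peak amplitudes. One way such a comparison could work is sketched below; it is not the authors’ algorithm. It assumes two forehead channels, one above each eye, so that a wink deflects its own side more strongly. A window is labeled a blink when both channels respond similarly, and a wink when one channel’s peak is at least a hypothetical `dominance` ratio times the other’s. `threshold_uv` is likewise an illustrative value.

```python
from statistics import median
from typing import Sequence


def peak_amplitude(window: Sequence[float]) -> float:
    """Largest absolute deviation from the window median (crude baseline removal)."""
    baseline = median(window)
    return max(abs(x - baseline) for x in window)


def classify_window(left: Sequence[float], right: Sequence[float],
                    threshold_uv: float = 100.0, dominance: float = 2.0) -> str:
    """Label one window of two forehead channels.

    Returns "none", "blink", "left_wink" or "right_wink". The threshold and
    dominance ratio are hypothetical values that would need per-user tuning.
    """
    peak_left, peak_right = peak_amplitude(left), peak_amplitude(right)
    if max(peak_left, peak_right) < threshold_uv:
        return "none"          # no eyelid event strong enough
    if peak_left >= dominance * peak_right:
        return "left_wink"     # left channel clearly dominates
    if peak_right >= dominance * peak_left:
        return "right_wink"    # right channel clearly dominates
    return "blink"             # both channels respond similarly


print(classify_window([0, 0, 300, 0, 0], [0, 0, 20, 0, 0]))   # left_wink
print(classify_window([0, 0, 250, 0, 0], [0, 0, 230, 0, 0]))  # blink
```

A single-channel variant, closer to the blink-driven selection of Xiao et al. [18], would keep only the threshold test on one channel.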

4. Extracted Features

5. Classification Algorithms

| First Author | Year | Device: Custom | Device: J!NS MEME | Device: Standard EOG | Device: Alternative EOG | Features: Blinks | Features: Saccades | Features: Eye-Related | Features: Other | Algorithm: Linear | Algorithm: Network | Algorithm: NM | Active Interaction: “Keyboard” Input | Active Interaction: Navigation | Active Interaction: Conf./Select/Point | Active Interaction: Visually Guided? | Passive Interaction: Fatigue/Cognitive | Passive Interaction: Activity |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Chen [41] | 2000 | • | • | • | • | |||||||||||||

| Rosander [42] | 2000 | • | • | • | • | |||||||||||||

| Barea [43] | 2002 | • | • | • | • | |||||||||||||

| Ding [44] | 2005 | • | • | • | ||||||||||||||

| Vehkaoja [13] | 2005 | • | • | • | • | |||||||||||||

| Manabe [11] | 2006 | • | • | • | • | • | • | |||||||||||

| Ding [45] | 2006 | • | • | • | • | • | ||||||||||||

| Trejo [46] | 2006 | • | • | • | • | |||||||||||||

| Yamagishi [31] | 2006 | • | • | • | • | |||||||||||||

| Akan [47] | 2007 | • | • | • | • | |||||||||||||

| Bashashati [48] | 2007 | • | • | • | • | |||||||||||||

| Krueger [49] | 2007 | • | • | • | • | |||||||||||||

| Skotte [50] | 2007 | • | • | • | • | |||||||||||||

| Estrany [51] | 2008 | • | • | • | • | |||||||||||||

| Bulling [14] | 2008 | • | • | • | • | • | ||||||||||||

| Cheng [52] | 2008 | • | • | • | • | |||||||||||||

| Mühlberger [53] | 2008 | • | • | • | • | |||||||||||||

| Bulling [15] | 2009 | • | • | • | • | • | • | |||||||||||

| Estrany [54] | 2009 | • | • | • | • | |||||||||||||

| Bulling [55] | 2009 | • | • | • | • | • | ||||||||||||

| Kuo [16] | 2009 | • | • | • | • | |||||||||||||

| Zheng [56] | 2009 | • | • | • | • | • | ||||||||||||

| Keegan [57] | 2009 | • | • | • | • | |||||||||||||

| Usakli [58] | 2009 | • | • | • | • | • | ||||||||||||

| Yagi [59] | 2010 | • | • | • | • | |||||||||||||

| Usakli [60] | 2010 | • | • | • | • | |||||||||||||

| Belov [61] | 2010 | • | • | • | • | |||||||||||||

| Zhang [17] | 2010 | • | • | • | ||||||||||||||

| Punsawad [62] | 2010 | • | • | • | • | |||||||||||||

| Vidal [19] | 2011 | • | • | • | • | • | • | |||||||||||

| Bulling [28] | 2011 | • | • | • | • | • | ||||||||||||

| Li [63] | 2011 | • | • | • | ||||||||||||||

| Liu [64] | 2011 | • | • | • | • | |||||||||||||

| Banerjee [37] | 2012 | • | • | • | • | • | ||||||||||||

| Tangsuksant [65] | 2012 | • | • | • | • | • | • | |||||||||||

| Swami Nathan [66] | 2012 | • | • | • | • | • | ||||||||||||

| Iáñez [20] | 2013 | • | • | • | • | • | • | |||||||||||

| English [21] | 2013 | • | • | • | • | • | • | • | ||||||||||

| Ubeda [67] | 2013 | • | • | • | • | • | • | |||||||||||

| Li [68] | 2014 | • | • | • | • | • | • | |||||||||||

| Manabe [69] | 2014 | • | • | • | • | |||||||||||||

| Ishimaru [70] | 2014 | • | • | • | • | |||||||||||||

| Witkowski [71] | 2014 | • | • | • | • | |||||||||||||

| Ma [72] | 2014 | • | • | • | • | • | • | |||||||||||

| Jiang [73] | 2014 | • | • | • | • | • | ||||||||||||

| Wang [74] | 2014 | • | • | • | ||||||||||||||

| Aziz [75] | 2014 | • | • | • | • | |||||||||||||

| Hossain [76] | 2014 | • | • | • | • | • | ||||||||||||

| D’Souza [77] | 2014 | • | • | • | • | • | ||||||||||||

| Manmadhan [78] | 2014 | • | • | • | • | • | ||||||||||||

| OuYang [79] | 2015 | • | • | • | • | • | • | |||||||||||

| Manabe [80] | 2015 | • | • | • | • | |||||||||||||

| Ishimaru [81] | 2015 | • | • | • | • | • | • | • | ||||||||||

| Hossain [82] | 2015 | • | • | • | • | • | ||||||||||||

| Valeriani [22] | 2015 | • | • | • | • | • | ||||||||||||

| Banik [83] | 2015 | • | • | • | ||||||||||||||

| Ang [12] | 2015 | • | • | • | • | |||||||||||||

| Kumar [84] | 2016 | • | • | • | • | |||||||||||||

| Dhuliawala [85] | 2016 | • | • | • | • | |||||||||||||

| Shimizu [86] | 2016 | • | • | • | • | • | ||||||||||||

| Shimizu bis [87] | 2016 | • | • | • | • | • | ||||||||||||

| Bissoli [88] | 2016 | • | • | • | • | |||||||||||||

| Wilaiprasitporn [89] | 2016 | • | • | • | • | |||||||||||||

| Fang [90] | 2016 | • | • | • | • | • | ||||||||||||

| Tamura [91] | 2016 | • | • | • | • | • | • | • | ||||||||||

| Barbara [92] | 2016 | • | • | • | • | • | ||||||||||||

| Atique [93] | 2016 | • | • | • | • | • | ||||||||||||

| Naijian [94] | 2016 | • | • | • | ||||||||||||||

| Ogai [95] | 2017 | • | • | • | • | • | ||||||||||||

| Lee [96] | 2017 | • | • | • | • | |||||||||||||

| Robert [97] | 2017 | • | • | • | • | |||||||||||||

| Ishimaru [98] | 2017 | • | • | • | • | |||||||||||||

| Kise [99] | 2017 | • | • | • | • | |||||||||||||

| Augereau [100] | 2017 | • | • | • | • | |||||||||||||

| Tag [101] | 2017 | • | • | • | • | |||||||||||||

| Thakur [102] | 2017 | • | • | • | • | |||||||||||||

| Chang [103] | 2017 | • | • | • | • | |||||||||||||

| Huang [104] | 2017 | • | • | • | ||||||||||||||

| López [25] | 2017 | • | • | • | • | |||||||||||||

| Heo [105] | 2017 | • | • | • | • | • | • | • | ||||||||||

| He [106] | 2017 | • | • | • | • | |||||||||||||

| Lee [107] | 2017 | • | • | • | • | • | ||||||||||||

| Zheng [56] | 2017 | • | • | • | • | • | ||||||||||||

| Zhang [108] | 2017 | • | • | • | • | • | ||||||||||||

| Zhi-Hao [109] | 2017 | • | • | • | ||||||||||||||

| Hossain [27] | 2017 | • | • | • | • | |||||||||||||

| Soundariya [110] | 2017 | • | • | • | • | |||||||||||||

| O’Bard [111] | 2017 | • | • | • | • | • | ||||||||||||

| Perin [112] | 2017 | • | • | • | • | • | ||||||||||||

| Karagöz [113] | 2017 | • | • | • | • | |||||||||||||

| Crea [114] | 2018 | • | ||||||||||||||||

| Zhang [115] | 2018 | • | • | • | • | |||||||||||||

| Lee [107] | 2018 | • | • | • | • | |||||||||||||

| Kim [116] | 2018 | • | • | • | • | |||||||||||||

| Fang [117] | 2018 | • | • | • | • | • | ||||||||||||

| Bastes [118] | 2018 | • | • | • | • | • | • | |||||||||||

| Hou [119] | 2018 | • | • | • | • | • | ||||||||||||

| Jialu [120] | 2018 | • | • | • | • | • | ||||||||||||

| Sun [121] | 2018 | • | • | • | • | • | ||||||||||||

| Xiao [18] | 2019 | • | • | • | • | • | ||||||||||||

| Lu [122] | 2019 | • | • | • | • | |||||||||||||

| Garrote [123] | 2019 | • | • | • | • | • | ||||||||||||

| Tag [124] | 2019 | • | • | • | • | |||||||||||||

| Findling [125] | 2019 | • | • | • | • | |||||||||||||

| Rostaminia [126] | 2019 | • | • | • | • | |||||||||||||

| Kosmyna [23] | 2019 | • | • | • | • | • | ||||||||||||

| Badesa [127] | 2019 | • | • | • | • | |||||||||||||

| Zhang [128] | 2019 | • | • | • | • | |||||||||||||

| Wu [129] | 2019 | • | • | • | ||||||||||||||

| Mocny-Pachońska [130] | 2020 | • | • | • | • | • | • | • | ||||||||||

| He [131] | 2020 | • | • | • | • | |||||||||||||

| Huang [132] | 2020 | • | • | • | • | |||||||||||||

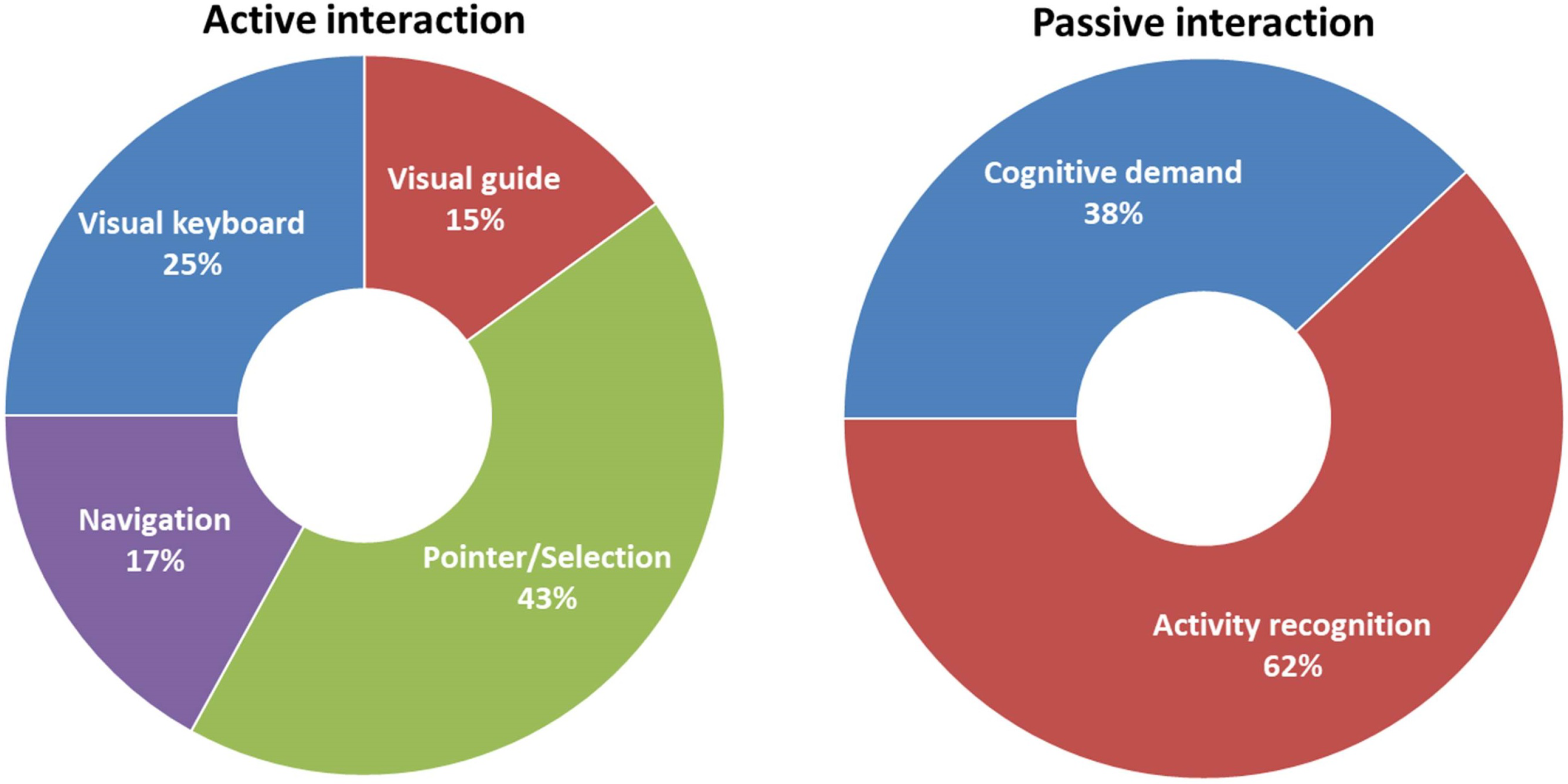

6. EOG-Based Interaction

6.1. Real Environment Interaction

6.2. Virtual Reality Interaction

6.3. Aircraft Pilot Interaction

7. EOG Limits

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Jacob, R.J.; Karn, K.S. Eye tracking in human-computer interaction and usability research: Ready to deliver the promises. In The Mind’s Eye; Elsevier: Amsterdam, The Netherlands, 2003; pp. 573–605.

2. Ottoson, D. Analysis of the electrical activity of the olfactory epithelium. Acta Physiol. Scand. 1956, 35, 122.

3. Belkhiria, C.; Peysakhovich, V. Electro-Encephalography and Electro-Oculography in Aeronautics: A Review Over the Last Decade (2010–2020). Front. Neuroergon. 2020, 3, 606719.

4. Di Flumeri, G.; Aricò, P.; Borghini, G.; Sciaraffa, N.; Di Florio, A.; Babiloni, F. The dry revolution: Evaluation of three different EEG dry electrode types in terms of signal spectral features, mental states classification and usability. Sensors 2019, 19, 1365.

5. Acar, G.; Ozturk, O.; Golparvar, A.J.; Elboshra, T.A.; Böhringer, K.; Yapici, M.K. Wearable and flexible textile electrodes for biopotential signal monitoring: A review. Electronics 2019, 8, 479.

6. Young, L.R.; Sheena, D. Survey of eye movement recording methods. Behav. Res. Methods Instrum. 1975, 7, 397–429.

7. Brown, M.; Marmor, M.; Zrenner, E.; Brigell, M.; Bach, M. ISCEV standard for clinical electro-oculography (EOG) 2006. Doc. Ophthalmol. 2006, 113, 205–212.

8. Chen, Y. Design and Evaluation of a Human-Computer Interface Based on Electrooculography. Ph.D. Thesis, Case Western Reserve University, Cleveland, OH, USA, 2003.

9. Singh, H.; Singh, J. Human eye tracking and related issues: A review. Int. J. Sci. Res. Publ. 2012, 2, 1–9.

10. Scherer, R.; Lee, F.; Schlogl, A.; Leeb, R.; Bischof, H.; Pfurtscheller, G. Toward self-paced brain–computer communication: Navigation through virtual worlds. IEEE Trans. Biomed. Eng. 2008, 55, 675–682.

11. Manabe, H.; Fukumoto, M. Full-Time Wearable Headphone-Type Gaze Detector; Association for Computing Machinery: New York, NY, USA, 2006.

12. Ang, A.M.S.; Zhang, Z.; Hung, Y.S.; Mak, J.N. A user-friendly wearable single-channel EOG-based human-computer interface for cursor control. In Proceedings of the 2015 7th International IEEE/EMBS Conference on Neural Engineering (NER), Montpellier, France, 22–24 April 2015; pp. 565–568.

13. Vehkaoja, A.T.; Verho, J.A.; Puurtinen, M.M.; Nojd, N.M.; Lekkala, J.O.; Hyttinen, J.A. Wireless head cap for EOG and facial EMG measurements. In Proceedings of the 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, Shanghai, China, 17–18 January 2006; pp. 5865–5868.

14. Bulling, A.; Roggen, D.; Tröster, G. It’s in Your Eyes: Towards Context-Awareness and Mobile HCI Using Wearable EOG Goggles; Association for Computing Machinery: New York, NY, USA, 2008.

15. Bulling, A.; Ward, J.A.; Gellersen, H.; Tröster, G. Eye movement analysis for activity recognition. In Proceedings of the 11th International Conference on Ubiquitous Computing, Orlando, FL, USA, 30 September–3 October 2009.

16. Kuo, F.Y.; Hsu, C.W.; Day, R.F. An exploratory study of cognitive effort involved in decision under Framing—An application of the eye-tracking technology. Decis. Support Syst. 2009, 48, 81–91.

17. Zhang, L.; Chi, Y.M.; Edelstein, E.; Schulze, J.; Gramann, K.; Velasquez, A.; Cauwenberghs, G.; Macagno, E. Wireless physiological monitoring and ocular tracking: 3D calibration in a fully-immersive virtual health care environment. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 4464–4467.

18. Xiao, J.; Qu, J.; Li, Y. An Electrooculogram-based interaction method and its music-on-demand application in a virtual reality environment. IEEE Access 2019, 7, 22059–22070.

19. Vidal, M.; Bulling, A.; Gellersen, H. Analysing EOG Signal Features for the Discrimination of Eye Movements with Wearable Devices; Association for Computing Machinery: New York, NY, USA, 2011.

20. Iáñez, E.; Azorin, J.M.; Perez-Vidal, C. Using eye movement to control a computer: A design for a lightweight electro-oculogram electrode array and computer interface. PLoS ONE 2013, 8, e67099.

21. English, E.; Hung, A.; Kesten, E.; Latulipe, D.; Jin, Z. EyePhone: A mobile EOG-based human-computer interface for assistive healthcare. In Proceedings of the 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER), San Diego, CA, USA, 6–8 November 2013; pp. 105–108.

22. Valeriani, D.; Matran-Fernandez, A. Towards a wearable device for controlling a smartphone with eye winks. In Proceedings of the 2015 7th Computer Science and Electronic Engineering Conference (CEEC), Colchester, UK, 24–25 September 2015; pp. 41–46.

23. Kosmyna, N.; Morris, C.; Nguyen, T.; Zepf, S.; Hernandez, J.; Maes, P. AttentivU: Designing EEG and EOG compatible glasses for physiological sensing and feedback in the car. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Leeds, UK, 9–14 September 2019; pp. 355–368.

24. Aungsakun, S.; Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Robust Eye Movement Recognition Using EOG Signal for Human-Computer Interface. In Proceedings of the International Conference on Software Engineering and Computer Systems, Pahang, Malaysia, 27–29 June 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 714–723.

25. López, A.; Ferrero, F.; Yangüela, D.; Álvarez, C.; Postolache, O. Development of a computer writing system based on EOG. Sensors 2017, 17, 1505.

26. Rajesh, A.N.; Chandralingam, S.; Anjaneyulu, T.; Satyanarayana, K. EOG controlled motorized wheelchair for disabled persons. Int. J. Med. Health Biomed. Bioeng. Pharm. Eng. 2014, 8, 302–305.

27. Hossain, Z.; Shuvo, M.M.H.; Sarker, P. Hardware and software implementation of real time electrooculogram (EOG) acquisition system to control computer cursor with eyeball movement. In Proceedings of the 2017 4th International Conference on Advances in Electrical Engineering (ICAEE), Dhaka, Bangladesh, 28–30 September 2017; pp. 132–137.

28. Bulling, A.; Roggen, D. Recognition of Visual Memory Recall Processes Using Eye Movement Analysis; Association for Computing Machinery: New York, NY, USA, 2011.

29. Goto, S.; Yano, O.; Matsuda, Y.; Sugi, T.; Egashira, N. Development of Hands-Free Remote Operation System for a Mobile Robot Using EOG and EMG. Electron. Commun. Jpn. 2017, 100, 38–47.

30. Barea, R.; Boquete, L.; Bergasa, L.M.; López, E.; Mazo, M. Electro-oculographic guidance of a wheelchair using eye movements codification. Int. J. Robot. Res. 2003, 22, 641–652.

31. Yamagishi, K.; Hori, J.; Miyakawa, M. Development of EOG-based communication system controlled by eight-directional eye movements. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 2574–2577.

32. Tsai, J.; Lee, C.; Wu, C.; Wu, J.; Kao, K. A feasibility study of an eye-writing system based on electro-oculography. J. Med. Biol. Eng. 2008, 28, 39.

33. Gandhi, T.; Trikha, M.; Santhosh, J.; Anand, S. Development of an expert multitask gadget controlled by voluntary eye movements. Expert Syst. Appl. 2010, 37, 4204–4211.

34. Aungsakul, S.; Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Evaluating feature extraction methods of electrooculography (EOG) signal for human-computer interface. Procedia Eng. 2012, 32, 246–252.

35. Pournamdar, V.; Vahdani-Manaf, N. Classification of eye movement signals using electrooculography in order to device controlling. In Proceedings of the 2017 IEEE 4th International Conference on Knowledge-Based Engineering and Innovation (KBEI), Tehran, Iran, 22 December 2017; pp. 339–342.

36. Widge, A.S.; Moritz, C.T.; Matsuoka, Y. Direct neural control of anatomically correct robotic hands. In Brain-Computer Interfaces; Springer: Berlin/Heidelberg, Germany, 2010; pp. 105–119.

37. Banerjee, A.; Datta, S.; Pal, M.; Konar, A.; Tibarewala, D.; Janarthanan, R. Classifying electrooculogram to detect directional eye movements. Procedia Technol. 2013, 10, 67–75.

38. Syal, P.; Kumari, P. Comparative Analysis of KNN, SVM, DT for EOG based Human Computer Interface. In Proceedings of the 2017 International Conference on Current Trends in Computer, Electrical, Electronics and Communication (CTCEEC), Mysore, India, 8–9 September 2017; pp. 1023–1028.

39. CR, H.; MP, P. Classification of eye movements using electrooculography and neural networks. Int. J. Hum. Comput. Interact. (IJHCI) 2014, 5, 51.

40. Mala, S.; Latha, K. Feature selection in classification of eye movements using electrooculography for activity recognition. Comput. Math. Methods Med. 2014, 2014, 713818.

41. Chen, S.C.; Tsai, T.T.; Luo, C.H. Portable and programmable clinical EOG diagnostic system. J. Med. Eng. Technol. 2000, 24, 154–162.

42. Rosander, K.; von Hofsten, C. Visual-vestibular interaction in early infancy. Exp. Brain Res. 2000, 133, 321–333.

43. Barea, R.; Boquete, L.; Mazo, M.; Lopez, E. System for assisted mobility using eye movements based on electrooculography. IEEE Trans. Neural Syst. Rehabil. Eng. 2002, 10, 209–218.

44. Ding, F.; Chen, T. Gradient based iterative algorithms for solving a class of matrix equations. IEEE Trans. Autom. Control 2005, 50, 1216–1221.

45. Ding, Q.; Tong, K.; Li, G. Development of an EOG (electro-oculography) based human-computer interface. In Proceedings of the 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, Shanghai, China, 17–18 January 2006; pp. 6829–6831.

46. Trejo, L.J.; Rosipal, R.; Matthews, B. Brain-computer interfaces for 1-D and 2-D cursor control: Designs using volitional control of the EEG spectrum or steady-state visual evoked potentials. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 225–229.

47. Akan, B.; Argunsah, A.O. A human-computer interface (HCI) based on electrooculogram (EOG) for handicapped. In Proceedings of the 2007 IEEE 15th Signal Processing and Communications Applications, Eskisehir, Turkey, 11–13 June 2007; pp. 1–3.

48. Bashashati, A.; Nouredin, B.; Ward, R.K.; Lawrence, P.; Birch, G.E. Effect of eye-blinks on a self-paced brain interface design. Clin. Neurophysiol. 2007, 118, 1639–1647.

49. Krueger, T.B.; Stieglitz, T. A Naïve and Fast Human Computer Interface Controllable for the Inexperienced-a Performance Study. In Proceedings of the 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Lyon, France, 22–26 August 2007; pp. 2508–2511.

50. Skotte, J.H.; Nøjgaard, J.K.; Jørgensen, L.V.; Christensen, K.B.; Sjøgaard, G. Eye blink frequency during different computer tasks quantified by electrooculography. Eur. J. Appl. Physiol. 2007, 99, 113–119.

51. Estrany, B.; Fuster, P.; Garcia, A.; Luo, Y. Human computer interface by EOG tracking. In Proceedings of the 1st International Conference on PErvasive Technologies Related to Assistive Environments, Athens, Greece, 16–18 July 2008; pp. 1–9.

52. Cheng, W.C. Interactive Techniques for Reducing Color Breakup; Association for Computing Machinery: New York, NY, USA, 2008.

53. Mühlberger, A.; Wieser, M.J.; Pauli, P. Visual attention during virtual social situations depends on social anxiety. CyberPsychol. Behav. 2008, 11, 425–430.

54. Estrany, B.; Fuster, P.; Garcia, A.; Luo, Y. EOG signal processing and analysis for controlling computer by eye movements. In Proceedings of the 2nd International Conference on Pervasive Technologies Related to Assistive Environments, Corfu, Greece, 9–13 June 2009; pp. 1–4.

55. Bulling, A.; Roggen, D.; Tröster, G. Wearable EOG Goggles: Eye-Based Interaction in Everyday Environments; Association for Computing Machinery: New York, NY, USA, 2009.

56. Zheng, W.L.; Lu, B.L. A multimodal approach to estimating vigilance using EEG and forehead EOG. J. Neural Eng. 2017, 14, 026017.

57. Keegan, J.; Burke, E.; Condron, J. An electrooculogram-based binary saccade sequence classification (BSSC) technique for augmentative communication and control. In Proceedings of the 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009; pp. 2604–2607.

58. Usakli, A.; Gurkan, S.; Aloise, F.; Vecchiato, G.; Babiloni, F. A hybrid platform based on EOG and EEG signals to restore communication for patients afflicted with progressive motor neuron diseases. In Proceedings of the 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009; pp. 543–546.

59. Yagi, T. Eye-gaze interfaces using electro-oculography (EOG). In Proceedings of the 2010 Workshop on Eye Gaze in Intelligent Human Machine Interaction, Hong Kong, China, 7 February 2010; pp. 28–32.

60. Usakli, A.B.; Gurkan, S.; Aloise, F.; Vecchiato, G.; Babiloni, F. On the use of electrooculogram for efficient human computer interfaces. Comput. Intell. Neurosci. 2010, 2010, 135629.

61. Belov, D.P.; Eram, S.Y.; Kolodyazhnyi, S.F.; Kanunikov, I.E.; Getmanenko, O.V. Electrooculogram detection of eye movements on gaze displacement. Neurosci. Behav. Physiol. 2010, 40, 583–591.

62. Punsawad, Y.; Wongsawat, Y.; Parnichkun, M. Hybrid EEG-EOG brain-computer interface system for practical machine control. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 1360–1363.

63. Li, Y.; Li, X.; Ratcliffe, M.; Liu, L.; Qi, Y.; Liu, Q. A Real-Time EEG-Based BCI System for Attention Recognition in Ubiquitous Environment; Association for Computing Machinery: New York, NY, USA, 2011.

64. Liu, Y.; Zhou, Z.; Hu, D. Gaze independent brain–computer speller with covert visual search tasks. Clin. Neurophysiol. 2011, 122, 1127–1136.

65. Tangsuksant, W.; Aekmunkhongpaisal, C.; Cambua, P.; Charoenpong, T.; Chanwimalueang, T. Directional eye movement detection system for virtual keyboard controller. In Proceedings of the 5th 2012 Biomedical Engineering International Conference, Muang, Thailand, 5–7 December 2012; pp. 1–5.

66. Nathan, D.S.; Vinod, A.P.; Thomas, K.P. An electrooculogram based assistive communication system with improved speed and accuracy using multi-directional eye movements. In Proceedings of the 2012 35th International Conference on Telecommunications and Signal Processing (TSP), Prague, Czech Republic, 3–4 July 2012; pp. 554–558.

67. Ubeda, A.; Ianez, E.; Azorin, J.M. An integrated electrooculography and desktop input bimodal interface to support robotic arm control. IEEE Trans. Hum.-Mach. Syst. 2013, 43, 338–342.

68. Li, L. The Research and Implementation of the HCI System Based on Bioelectricity. In Proceedings of the 2013 International Conference on Computer Sciences and Applications, Wuhan, China, 14–15 December 2013; pp. 414–417.

69. Manabe, H.; Yagi, T. EOG-Based Eye Gesture Input with Audio Staging; Association for Computing Machinery: New York, NY, USA, 2014.

70. Ishimaru, S.; Kunze, K.; Uema, Y.; Kise, K.; Inami, M.; Tanaka, K. Smarter Eyewear: Using Commercial EOG Glasses for Activity Recognition; Association for Computing Machinery: New York, NY, USA, 2014.

71. Witkowski, M.; Cortese, M.; Cempini, M.; Mellinger, J.; Vitiello, N.; Soekadar, S.R. Enhancing brain-machine interface (BMI) control of a hand exoskeleton using electrooculography (EOG). J. NeuroEng. Rehabil. 2014, 11, 165.

72. Ma, J.; Zhang, Y.; Cichocki, A.; Matsuno, F. A Novel EOG/EEG Hybrid Human–Machine Interface Adopting Eye Movements and ERPs: Application to Robot Control. IEEE Trans. Biomed. Eng. 2014, 62, 876–889.

73. Jiang, J.; Zhou, Z.; Yin, E.; Yu, Y.; Hu, D. Hybrid Brain-Computer Interface (BCI) based on the EEG and EOG signals. Bio-Med. Mater. Eng. 2014, 24, 2919–2925.

74. Wang, H.; Li, Y.; Long, J.; Yu, T.; Gu, Z. An asynchronous wheelchair control by hybrid EEG-EOG brain-computer interface. Cogn. Neurodyn. 2014, 8, 399–409.

- Aziz, F.; Arof, H.; Mokhtar, N.; Mubin, M. HMM based automated wheelchair navigation using EOG traces in EEG. J. Neural Eng. 2014, 11, 056018. [Google Scholar] [CrossRef]

- Hossain, M.S.; Huda, K.; Ahmad, M. Command the computer with your eye-An electrooculography based approach. In Proceedings of the 8th International Conference on Software, Knowledge, Information Management and Applications (SKIMA 2014), Dhaka, Bangladesh, 18–20 December 2014; pp. 1–6. [Google Scholar]

- D’Souza, S.; Natarajan, S. Recognition of EOG based reading task using AR features. In Proceedings of the International Conference on Circuits, Communication, Control and Computing, Bangalore, India, 21–22 November 2014; pp. 113–117. [Google Scholar]

- Manmadhan, S. Eye movement controlled portable human computer interface for the disabled. In Proceedings of the 2014 International Conference on Embedded Systems (ICES), Coimbatore, India, 3–5 July 2014; pp. 271–274. [Google Scholar]

- OuYang, R.; Lv, Z.; Wu, X. An algorithm for reading activity recognition based on electrooculogram. In Proceedings of the 2015 10th International Conference on Information, Communications and Signal Processing (ICICS), Singapore, 2–4 December 2015; pp. 1–5. [Google Scholar]

- Manabe, H.; Fukumoto, M.; Yagi, T. Direct gaze estimation based on nonlinearity of EOG. IEEE Trans. Biomed. Eng. 2015, 62, 1553–1562. [Google Scholar] [CrossRef]

- Ishimaru, S.; Kunze, K.; Tanaka, K.; Uema, Y.; Kise, K.; Inami, M. Smart Eyewear for Interaction and Activity Recognition; Association for Computing Machinery: New York, NY, USA, 2015. [Google Scholar] [CrossRef]

- Hossain, M.S.; Huda, K.; Rahman, S.S.; Ahmad, M. Implementation of an EOG based security system by analyzing eye movement patterns. In Proceedings of the 2015 International Conference on Advances in Electrical Engineering (ICAEE), Dhaka, Bangladesh, 17–19 December 2015; pp. 149–152. [Google Scholar]

- Banik, P.P.; Azam, M.K.; Mondal, C.; Rahman, M.A. Single channel electrooculography based human-computer interface for physically disabled persons. In Proceedings of the 2015 International Conference on Electrical Engineering and Information Communication Technology (ICEEICT), Savar, Bangladesh, 21–23 May 2015; pp. 1–6.

- Kumar, D.; Sharma, A. Electrooculogram-based virtual reality game control using blink detection and gaze calibration. In Proceedings of the 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Jaipur, India, 21–24 September 2016; pp. 2358–2362.

- Dhuliawala, M.; Lee, J.; Shimizu, J.; Bulling, A.; Kunze, K.; Starner, T.; Woo, W. Smooth Eye Movement Interaction Using EOG Glasses; Association for Computing Machinery: New York, NY, USA, 2016.

- Shimizu, J.; Chernyshov, G. Eye Movement Interactions in Google Cardboard Using a Low Cost EOG Setup; Association for Computing Machinery: New York, NY, USA, 2016.

- Shimizu, J.; Lee, J.; Dhuliawala, M.; Bulling, A.; Starner, T.; Woo, W.; Kunze, K. Solar System: Smooth Pursuit Interactions Using EOG Glasses; Association for Computing Machinery: New York, NY, USA, 2016.

- Bissoli, A.L.C.; Coelho, Y.L.; Bastos-Filho, T.F. A System for Multimodal Assistive Domotics and Augmentative and Alternative Communication; Association for Computing Machinery: New York, NY, USA, 2016.

- Wilaiprasitporn, T.; Yagi, T. Feasibility Study of Drowsiness Detection Using Hybrid Brain-Computer Interface. In Proceedings of the International Convention on Rehabilitation Engineering & Assistive Technology, Singapore, 25–28 July 2016; pp. 1–4.

- Fang, F.; Shinozaki, T.; Horiuchi, Y.; Kuroiwa, S.; Furui, S.; Musha, T. Improving Eye Motion Sequence Recognition Using Electrooculography Based on Context-Dependent HMM. Comput. Intell. Neurosci. 2016, 2016, 6898031.

- Tamura, H.; Yan, M.; Sakurai, K.; Tanno, K. EOG-sEMG Human Interface for Communication. Comput. Intell. Neurosci. 2016, 2016, 7354082.

- Barbara, N.; Camilleri, T.A. Interfacing with a speller using EOG glasses. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 001069–001074.

- Atique, M.M.U.; Rakib, S.H.; Siddique-e Rabbani, K. An electrooculogram based control system. In Proceedings of the 2016 5th International Conference on Informatics, Electronics and Vision (ICIEV), Dhaka, Bangladesh, 13–14 May 2016; pp. 809–812.

- Naijian, C.; Xiangdong, H.; Yantao, W.; Xinglai, C.; Hui, C. Coordination control strategy between human vision and wheelchair manipulator based on BCI. In Proceedings of the 2016 IEEE 11th Conference on Industrial Electronics and Applications (ICIEA), Hefei, China, 5–7 June 2016; pp. 1872–1875.

- Ogai, S.; Tanaka, T. A drag-and-drop type human computer interaction technique based on electrooculogram. In Proceedings of the 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; pp. 716–720.

- Lee, K.R.; Chang, W.D.; Kim, S.; Im, C.H. Real-Time “Eye-Writing” Recognition Using Electrooculogram. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 37–48.

- Robert, H.; Kise, K.; Augereau, O. Real-Time Wordometer Demonstration Using Commercial EoG Glasses; Association for Computing Machinery: New York, NY, USA, 2017.

- Ishimaru, S.; Hoshika, K.; Kunze, K.; Kise, K.; Dengel, A. Towards Reading Trackers in the Wild: Detecting Reading Activities by EOG Glasses and Deep Neural Networks; Association for Computing Machinery: New York, NY, USA, 2017.

- Kise, K.; Augereau, O.; Utsumi, Y.; Iwamura, M.; Kunze, K.; Ishimaru, S.; Dengel, A. Quantified Reading and Learning for Sharing Experiences; Association for Computing Machinery: New York, NY, USA, 2017.

- Augereau, O.; Sanches, C.L.; Kise, K.; Kunze, K. Wordometer Systems for Everyday Life. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 1, 1–21.

- Tag, B.; Mannschreck, R.; Sugiura, K.; Chernyshov, G.; Ohta, N.; Kunze, K. Facial Thermography for Attention Tracking on Smart Eyewear: An Initial Study; Association for Computing Machinery: New York, NY, USA, 2017.

- Thakur, B.; Syal, P.; Kumari, P. An Electrooculogram Signal Based Control System in Offline Environment; Association for Computing Machinery: New York, NY, USA, 2017.

- Chang, W.D.; Cha, H.S.; Kim, D.Y.; Kim, S.H.; Im, C.H. Development of an electrooculogram-based eye-computer interface for communication of individuals with amyotrophic lateral sclerosis. J. NeuroEng. Rehabil. 2017, 14, 89.

- Huang, Q.; He, S.; Wang, Q.; Gu, Z.; Peng, N.; Li, K.; Zhang, Y.; Shao, M.; Li, Y. An EOG-Based Human-Machine Interface for Wheelchair Control. IEEE Trans. Biomed. Eng. 2018, 65, 2023–2032.

- Heo, J.; Yoon, H.; Park, K.S. A Novel Wearable Forehead EOG Measurement System for Human Computer Interfaces. Sensors 2017, 17, 1485.

- He, S.; Li, Y. A Single-Channel EOG-Based Speller. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1978–1987.

- Lee, M.H.; Williamson, J.; Won, D.O.; Fazli, S.; Lee, S.W. A High Performance Spelling System based on EEG-EOG Signals with Visual Feedback. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1443–1459.

- Zhang, J.; Wang, B.; Zhang, C.; Hong, J. Volitional and Real-Time Control Cursor Based on Eye Movement Decoding Using a Linear Decoding Model. Comput. Intell. Neurosci. 2016, 2016, 4069790.

- Zhi-Hao, W.; Yu-Fan, K.; Chuan-Te, C.; Shi-Hao, L.; Gwo-Jia, J. Controlling DC motor using eye blink signals based on LabVIEW. In Proceedings of the 2017 5th International Conference on Electrical, Electronics and Information Engineering (ICEEIE), Malang, Indonesia, 6–8 October 2017; pp. 61–65.

- Soundariya, R.; Renuga, R. Eye movement based emotion recognition using electrooculography. In Proceedings of the 2017 Innovations in Power and Advanced Computing Technologies (i-PACT), Vellore, India, 21–22 April 2017; pp. 1–5.

- O’Bard, B.; Larson, A.; Herrera, J.; Nega, D.; George, K. Electrooculography based iOS controller for individuals with quadriplegia or neurodegenerative disease. In Proceedings of the 2017 IEEE International Conference on Healthcare Informatics (ICHI), Park City, UT, USA, 23–26 August 2017; pp. 101–106.

- Perin, M.; Porto, R.; Neto, A.Z.; Spindola, M.M. EOG analog front-end for human machine interface. In Proceedings of the 2017 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Turin, Italy, 22–25 May 2017; pp. 1–6.

- Karagöz, Y.; Gül, S.; Çetınel, G. An EOG based communication channel for paralyzed patients. In Proceedings of the 2017 25th Signal Processing and Communications Applications Conference (SIU), Antalya, Turkey, 15–18 May 2017; pp. 1–4.

- Crea, S.; Nann, M.; Trigili, E.; Cordella, F.; Baldoni, A.; Badesa, F.J.; Catalán, J.M.; Zollo, L.; Vitiello, N.; Aracil, N.G.; et al. Feasibility and safety of shared EEG/EOG and vision-guided autonomous whole-arm exoskeleton control to perform activities of daily living. Sci. Rep. 2018, 8.

- Zhang, R.; He, S.; Yang, X.; Wang, X.; Li, K.; Huang, Q.; Yu, Z.; Zhang, X.; Tang, D.; Li, Y. An EOG-based human–machine interface to control a smart home environment for patients with severe spinal cord injuries. IEEE Trans. Biomed. Eng. 2018, 66, 89–100.

- Kim, D.Y.; Han, C.H.; Im, C.H. Development of an electrooculogram-based human-computer interface using involuntary eye movement by spatially rotating sound for communication of locked-in patients. Sci. Rep. 2018, 8, 9505.

- Fang, F.; Shinozaki, T. Electrooculography-based continuous eye-writing recognition system for efficient assistive communication systems. PLoS ONE 2018, 13, e0192684.

- Bastes, A.; Alhat, S.; Panse, M. Speech Assistive Communication System Using EOG. In Proceedings of the 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 14–15 June 2018; pp. 504–510.

- Hou, H.K.; Smitha, K. Low-cost wireless electrooculography speller. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 123–128.

- Jialu, G.; Ramkumar, S.; Emayavaramban, G.; Thilagaraj, M.; Muneeswaran, V.; Rajasekaran, M.P.; Hussein, A.F. Offline analysis for designing electrooculogram based human computer interface control for paralyzed patients. IEEE Access 2018, 6, 79151–79161.

- Sun, L.; Wang, S.a.; Chen, H.; Chen, Y. A novel human computer interface based on electrooculogram signal for smart assistive robots. In Proceedings of the 2018 IEEE International Conference on Mechatronics and Automation (ICMA), Changchun, China, 5–8 August 2018; pp. 215–219.

- Lu, Y.Y.; Huang, Y.T. A method of personal computer operation using Electrooculography signal. In Proceedings of the 2019 IEEE Eurasia Conference on Biomedical Engineering, Healthcare and Sustainability (ECBIOS), Okinawa, Japan, 31 May–3 June 2019; pp. 76–78.

- Garrote, L.; Perdiz, J.; Pires, G.; Nunes, U.J. Reinforcement learning motion planning for an EOG-centered robot assisted navigation in a virtual environment. In Proceedings of the 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), New Delhi, India, 14–18 October 2019; pp. 1–7.

- Tag, B.; Vargo, A.W.; Gupta, A.; Chernyshov, G.; Kunze, K.; Dingler, T. Continuous Alertness Assessments: Using EOG Glasses to Unobtrusively Monitor Fatigue Levels In-The-Wild; Association for Computing Machinery: New York, NY, USA, 2019.

- Findling, R.D.; Quddus, T.; Sigg, S. Hide my Gaze with EOG! Towards Closed-Eye Gaze Gesture Passwords that Resist Observation-Attacks with Electrooculography in Smart Glasses; Association for Computing Machinery: New York, NY, USA, 2019.

- Rostaminia, S.; Lamson, A.; Maji, S.; Rahman, T.; Ganesan, D. W!NCE: Unobtrusive Sensing of Upper Facial Action Units with EOG-based Eyewear. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 3, 1–26.

- Badesa, F.J.; Diez, J.A.; Catalan, J.M.; Trigili, E.; Cordella, F.; Nann, M.; Crea, S.; Soekadar, S.R.; Zollo, L.; Vitiello, N.; et al. Physiological Responses During Hybrid BNCI Control of an Upper-Limb Exoskeleton. Sensors 2019, 19, 4931.

- Zhang, J.; Wang, B.; Zhang, C.; Xiao, Y.; Wang, M.Y. An EEG/EMG/EOG-based multimodal human-machine interface to real-time control of a soft robot hand. Front. Neurorobot. 2019, 13, 7.

- Wu, Y.; Kihara, K.; Takeda, Y.; Sato, T.; Akamatsu, M.; Kitazaki, S. Effects of scheduled manual driving on drowsiness and response to take over request: A simulator study towards understanding drivers in automated driving. Accid. Anal. Prev. 2019, 124, 202–209.

- Mocny-Pachońska, K.; Doniec, R.; Trzcionka, A.; Pachoński, M.; Piaseczna, N.; Sieciński, S.; Osadcha, O.; Łanowy, P.; Tanasiewicz, M. Evaluating the stress-response of dental students to the dental school environment. PeerJ 2020, 8.

- He, S.; Zhou, Y.; Yu, T.; Zhang, R.; Huang, Q.; Chuai, L.; Mustafa, M.U.; Gu, Z.; Yu, Z.L.; Tan, H.; et al. EEG- and EOG-Based Asynchronous Hybrid BCI: A System Integrating a Speller, a Web Browser, an E-Mail Client, and a File Explorer. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 28, 519–530.

- Huang, Q.; Zhang, Z.; Yu, T.; He, S.; Li, Y. An EEG-/EOG-based hybrid brain-computer interface: Application on controlling an integrated wheelchair robotic arm system. Front. Neurosci. 2019, 13, 1243.

- Zander, T.O.; Kothe, C.; Jatzev, S.; Gaertner, M. Enhancing human-computer interaction with input from active and passive brain-computer interfaces. In Brain-Computer Interfaces; Springer: Berlin/Heidelberg, Germany, 2010; pp. 181–199.

- Vidal, M.; Bulling, A.; Gellersen, H. Pursuits: Spontaneous interaction with displays based on smooth pursuit eye movement and moving targets. In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Zurich, Switzerland, 8–12 September 2013; pp. 439–448.

- Esteves, A.; Velloso, E.; Bulling, A.; Gellersen, H. Orbits: Gaze interaction for smart watches using smooth pursuit eye movements. In Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, Charlotte, NC, USA, 8–11 November 2015; pp. 457–466.

- Kangas, J.; Špakov, O.; Isokoski, P.; Akkil, D.; Rantala, J.; Raisamo, R. Feedback for smooth pursuit gaze tracking based control. In Proceedings of the 7th Augmented Human International Conference 2016, Geneva, Switzerland, 25–27 February 2016; pp. 1–8.

- Khamis, M.; Alt, F.; Bulling, A. A field study on spontaneous gaze-based interaction with a public display using pursuits. In Adjunct Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers, Umeda, Japan, 9–11 September 2015; pp. 863–872.

- Jalaliniya, S.; Mardanbegi, D. Eyegrip: Detecting targets in a series of uni-directional moving objects using optokinetic nystagmus eye movements. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 5801–5811.

- Schenk, S.; Tiefenbacher, P.; Rigoll, G.; Dorr, M. Spock: A smooth pursuit oculomotor control kit. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 2681–2687.

- Zao, J.K.; Jung, T.P.; Chang, H.M.; Gan, T.T.; Wang, Y.T.; Lin, Y.P.; Liu, W.H.; Zheng, G.Y.; Lin, C.K.; Lin, C.H. Augmenting VR/AR applications with EEG/EOG monitoring and oculo-vestibular recoupling. In International Conference on Augmented Cognition; Springer: Berlin/Heidelberg, Germany, 2016; pp. 121–131.

- Altobelli, F. ElectroOculoGraphy (EOG) Eye-Tracking for Virtual Reality. Master’s Thesis, Delft University of Technology, Delft, The Netherlands, 2019.

- Lupu, R.G.; Irimia, D.C.; Ungureanu, F.; Poboroniuc, M.S.; Moldoveanu, A. BCI and FES based therapy for stroke rehabilitation using VR facilities. Wirel. Commun. Mob. Comput. 2018, 2018, 4798359.

- Bachmann, D.; Weichert, F.; Rinkenauer, G. Review of three-dimensional human-computer interaction with focus on the leap motion controller. Sensors 2018, 18, 2194.

- Caldwell, R. Models of change agency: A fourfold classification. Br. J. Manag. 2003, 14, 131–142.

- Wilson, G.F.; Caldwell, J.A.; Russell, C.A. Performance and psychophysiological measures of fatigue effects on aviation related tasks of varying difficulty. Int. J. Aviat. Psychol. 2007, 17, 219–247.

- Sirevaag, E.J.; Stern, J.A. Ocular measures of fatigue and cognitive factors. In Engineering Psychophysiology: Issues and Applications; CRC Press: Boca Raton, FL, USA, 2000; pp. 269–287.

- Peysakhovich, V.; Vachon, F.; Dehais, F. The impact of luminance on tonic and phasic pupillary responses to sustained cognitive load. Int. J. Psychophysiol. 2017, 112, 40–45.

- Jia, Y.; Tyler, C.W. Measurement of saccadic eye movements by electrooculography for simultaneous EEG recording. Behav. Res. Methods 2019, 51, 2139–2151.

- Penzel, T.; Lo, C.C.; Ivanov, P.; Kesper, K.; Becker, H.; Vogelmeier, C. Analysis of sleep fragmentation and sleep structure in patients with sleep apnea and normal volunteers. In Proceedings of the 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, Shanghai, China, 17–18 January 2006; pp. 2591–2594.

- Borghetti, D.; Bruni, A.; Fabbrini, M.; Murri, L.; Sartucci, F. A low-cost interface for control of computer functions by means of eye movements. Comput. Biol. Med. 2007, 37, 1765–1770.

- Rosekind, M.R.; Gander, P.H.; Miller, D.L.; Gregory, K.B.; Smith, R.M.; Weldon, K.J.; Co, E.L.; McNally, K.L.; Lebacqz, J.V. Fatigue in operational settings: Examples from the aviation environment. Hum. Factors 1994, 36, 327–338.

- Dahlstrom, N.; Nahlinder, S. Mental workload in aircraft and simulator during basic civil aviation training. Int. J. Aviat. Psychol. 2009, 19, 309–325.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Belkhiria, C.; Boudir, A.; Hurter, C.; Peysakhovich, V. EOG-Based Human–Computer Interface: 2000–2020 Review. Sensors 2022, 22, 4914. https://doi.org/10.3390/s22134914

Belkhiria C, Boudir A, Hurter C, Peysakhovich V. EOG-Based Human–Computer Interface: 2000–2020 Review. Sensors. 2022; 22(13):4914. https://doi.org/10.3390/s22134914

Chicago/Turabian Style: Belkhiria, Chama, Atlal Boudir, Christophe Hurter, and Vsevolod Peysakhovich. 2022. "EOG-Based Human–Computer Interface: 2000–2020 Review" Sensors 22, no. 13: 4914. https://doi.org/10.3390/s22134914

APA Style: Belkhiria, C., Boudir, A., Hurter, C., & Peysakhovich, V. (2022). EOG-Based Human–Computer Interface: 2000–2020 Review. Sensors, 22(13), 4914. https://doi.org/10.3390/s22134914