Using Smartwatches to Detect Face Touching

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Study Procedure

2.3. Instrumentation

2.4. Problem Formulation and Data Processing

2.5. Model Training

3. Results

4. Discussion

4.1. Principal Results

4.2. Comparison with Prior Work

4.3. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Activity | Laboratory-Based Setting Description | Face Touching |
|---|---|---|
| Using mobile phone | Messaging using social media (no phone calls) | No |
| Lying flat on the back | Simulating sleeping or napping | No |
| Computer tasks | Typing a document and navigating websites | No |
| Writing | Writing on a piece of paper | No |
| Leisure walk | Walking at a leisurely pace | No |
| Moving items from one location to another | Moving folding chairs from one location to another | No |
| Repeated face touching | Wiping nose, gestures on the face | Yes |
| Eating and drinking | Eating a snack and drinking water | Yes |
| Simulated smoking | Simulating the act of smoking | Yes |
| Adjusting eyeglass | Adjusting eyeglasses placed on the face | Yes |

| Domain | Feature | Description |
|---|---|---|
| Time | Mean of vector magnitude and acceleration from 3 axes (mvm, mean_x, mean_y, and mean_z) | Sample mean of VM and acceleration from x, y, and z axes in the window |
| Time | SD of vector magnitude and acceleration from 3 axes (sdvm, sd_x, sd_y, and sd_z) | Sample standard deviation of VM and acceleration from x, y, and z axes in the window |
| Time | Coefficient of variation of vector magnitude and acceleration from 3 axes (cv_vm, cv_x, cv_y, and cv_z) | Standard deviation of VM and acceleration from x, y, and z axes in the window divided by the mean, multiplied by 100 |
| Time | Minimum value of vector magnitude and acceleration from 3 axes (min_vm, min_x, min_y, and min_z) | Minimum value of VM and acceleration from x, y, and z axes in the window |
| Time | Maximum value of vector magnitude and acceleration from 3 axes (max_vm, max_x, max_y, and max_z) | Maximum value of VM and acceleration from x, y, and z axes in the window |
| Time | Twenty-five percent quantile of vector magnitude and acceleration from 3 axes (lower_vm_25, lower_x_25, lower_y_25, and lower_z_25) | Lower 25% quantile of VM and acceleration from x, y, and z axes in the window |
| Time | Seventy-five percent quantile of vector magnitude and acceleration from 3 axes (upper_vm_75, upper_x_75, upper_y_75, and upper_z_75) | Upper 75% quantile of VM and acceleration from x, y, and z axes in the window |
| Time | Third moment of vector magnitude and acceleration from 3 axes (third_vm, third_x, third_y, and third_z) | Third moment of VM and acceleration from x, y, and z axes in the window |
| Time | Fourth moment of vector magnitude and acceleration from 3 axes (fourth_vm, fourth_x, fourth_y, and fourth_z) | Fourth moment of VM and acceleration from x, y, and z axes in the window |
| Time | Skewness of vector magnitude and acceleration from 3 axes (skewness_vm, skewness_x, skewness_y, and skewness_z) | Skewness of VM and acceleration from x, y, and z axes in the window |
| Time | Kurtosis of vector magnitude and acceleration from 3 axes (kurtosis_vm, kurtosis_x, kurtosis_y, and kurtosis_z) | Kurtosis of VM and acceleration from x, y, and z axes in the window |
| Time | Mean angle of acceleration relative to vertical on the device (mangle) | Sample mean of the angle between x axis and VM in the window |
| Time | SD of the angle of acceleration relative to vertical on the device (sdangle) | Sample standard deviation of the angles in the window |
| Frequency | Percentage of the power of the vm that is in 0.6–2.5 Hz (p625) | Sum of moduli corresponding to frequency in this range divided by sum of moduli of all frequencies |
| Frequency | Dominant frequency of vm (df) | Frequency corresponding to the largest modulus |
| Frequency | Fraction of power in vm at dominant frequency (fpdf) | Modulus of the dominant frequency divided by the sum of moduli at each frequency |
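The feature definitions in the table above can be illustrated with a minimal sketch for a single window. This is not the authors' code: the function name `window_features`, the sampling-rate parameter `fs`, the uniform key naming (for example `mean_vm` rather than `mvm`), the mean removal before the Fourier transform, and the `arccos(x / VM)` reading of the angle are all assumptions made for illustration. Moments, skewness, and kurtosis are omitted for brevity.

```python
import numpy as np

def window_features(x, y, z, fs):
    """Compute a subset of the tabulated features for one window.

    x, y, z: 1-D acceleration arrays for the window.
    fs: sampling rate in Hz (hypothetical parameter; not given in the tables).
    """
    x, y, z = (np.asarray(a, dtype=float) for a in (x, y, z))
    vm = np.sqrt(x**2 + y**2 + z**2)  # vector magnitude (VM)

    feats = {}
    # Time-domain statistics for VM and each axis.
    for name, sig in (("vm", vm), ("x", x), ("y", y), ("z", z)):
        mean = sig.mean()
        sd = sig.std(ddof=1)  # sample standard deviation
        feats[f"mean_{name}"] = mean
        feats[f"sd_{name}"] = sd
        # Coefficient of variation: SD divided by mean, multiplied by 100.
        feats[f"cv_{name}"] = 100.0 * sd / mean if mean != 0 else np.nan
        feats[f"min_{name}"] = sig.min()
        feats[f"max_{name}"] = sig.max()
        feats[f"lower_{name}_25"] = np.quantile(sig, 0.25)
        feats[f"upper_{name}_75"] = np.quantile(sig, 0.75)

    # Angle between the x axis and VM (assumed here to be arccos(x / VM), in degrees).
    ratio = np.divide(x, vm, out=np.zeros_like(vm), where=vm > 0)
    angle = np.degrees(np.arccos(np.clip(ratio, -1.0, 1.0)))
    feats["mangle"] = angle.mean()
    feats["sdangle"] = angle.std(ddof=1)

    # Frequency-domain features from the moduli of the Fourier transform of VM.
    # Assumption: the window mean is removed so the zero-frequency term does not dominate.
    spec = np.abs(np.fft.rfft(vm - vm.mean()))
    freqs = np.fft.rfftfreq(vm.size, d=1.0 / fs)
    total = spec.sum()
    if total > 0:
        band = (freqs >= 0.6) & (freqs <= 2.5)
        k = int(np.argmax(spec))
        feats["p625"] = spec[band].sum() / total  # share of moduli in 0.6–2.5 Hz
        feats["df"] = freqs[k]                    # dominant frequency
        feats["fpdf"] = spec[k] / total           # share of moduli at the dominant frequency
    else:
        feats["p625"] = feats["df"] = feats["fpdf"] = np.nan
    return feats
```

Calling `window_features` once per window and stacking the resulting dictionaries would give one feature row per window, which is the form a classifier expects.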

| Window Size | Number of Samples in Training Set | Number of Samples in Validation Set | Number of Samples in Testing Set |
|---|---|---|---|
| 2 s | 4716 | 2358 | 787 |
| 16 s | 588 | 294 | 98 |
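A 16 s window spans eight times as much signal as a 2 s window, which is consistent with the roughly eightfold drop in sample counts in the table above. The sketch below shows one way a recording could be cut into fixed-length windows. It assumes non-overlapping windows and a hypothetical sampling rate `fs`; neither is stated in the tables, and `segment_windows` is an illustrative name.

```python
import numpy as np

def segment_windows(signal, fs, window_s):
    """Split an (n_samples, n_axes) array into non-overlapping windows.

    fs: sampling rate in Hz (hypothetical; not given in the tables).
    window_s: window length in seconds, for example 2 or 16.
    Trailing samples that do not fill a whole window are dropped.
    """
    signal = np.asarray(signal)
    n = int(round(fs * window_s))      # samples per window
    n_windows = signal.shape[0] // n   # number of complete windows
    return signal[: n_windows * n].reshape(n_windows, n, signal.shape[1])
```

Each slice `windows[i]` then holds the x, y, and z samples for one window, from which the per-window features can be computed.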

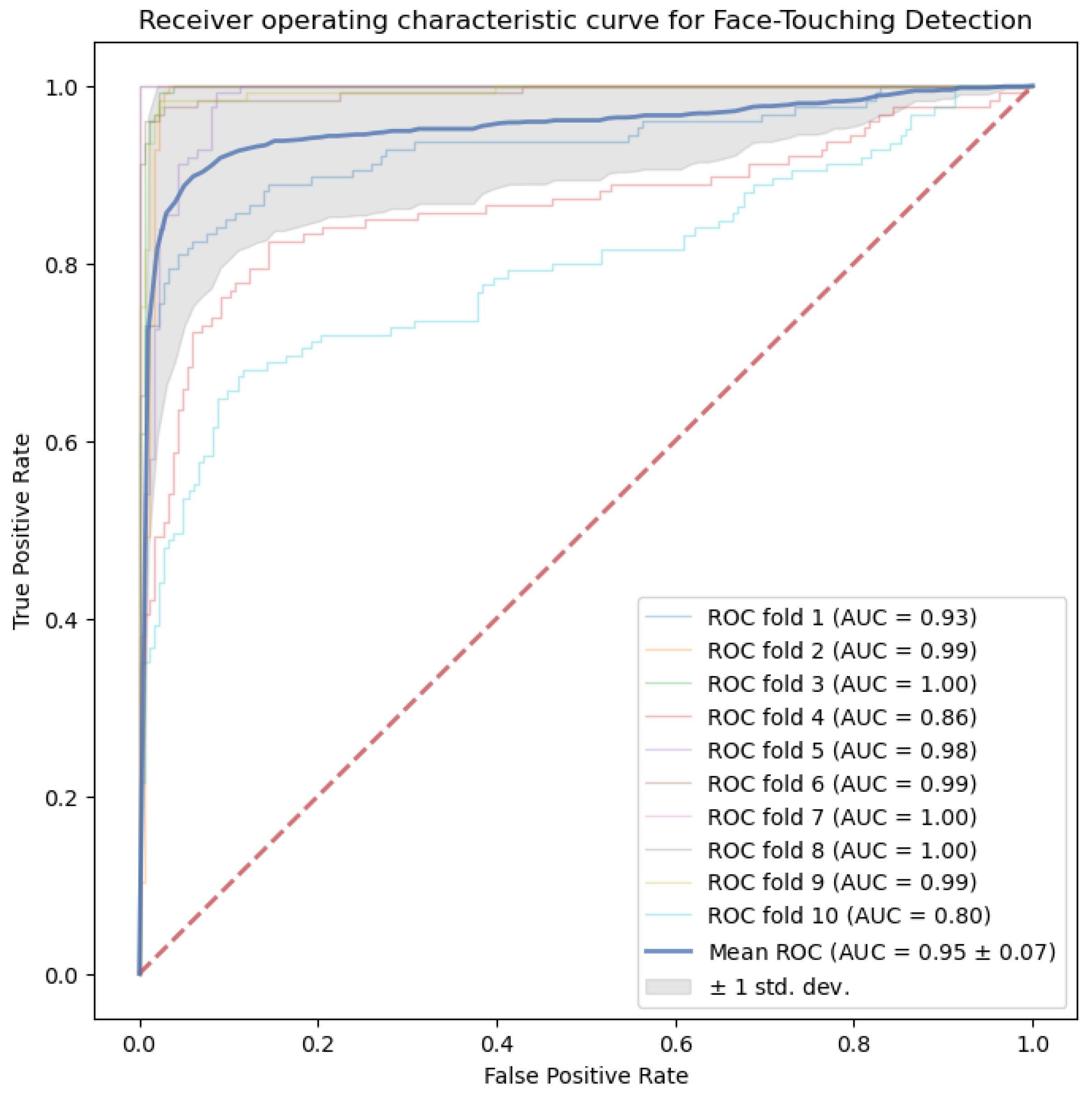

| Classifier | Accuracy | Recall | Precision | F1-Score | AUC |
|---|---|---|---|---|---|
| LR | 0.93 (0.08) | 0.89 (0.16) | 0.93 (0.08) | 0.90 (0.11) | 0.95 (0.07) |
| SVM | 0.89 (0.09) | 0.85 (0.15) | 0.89 (0.12) | 0.86 (0.12) | 0.92 (0.08) |
| Decision Tree | 0.88 (0.09) | 0.82 (0.18) | 0.87 (0.10) | 0.84 (0.13) | 0.89 (0.10) |
| Random Forest | 0.91 (0.10) | 0.86 (0.17) | 0.91 (0.12) | 0.88 (0.14) | 0.95 (0.08) |
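For reference, the accuracy, recall, precision, and F1-score columns follow the standard confusion-matrix definitions. The sketch below computes them for a binary labelling, assuming 1 marks a face-touching window and 0 any other window. The function name `binary_metrics` and the label encoding are illustrative assumptions; AUC is left out because it needs predicted scores rather than hard labels.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, recall, precision, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "recall": recall,
            "precision": precision, "f1": f1}
```

Recall is the share of true face-touching windows that are detected, and precision is the share of predicted face-touching windows that are correct.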

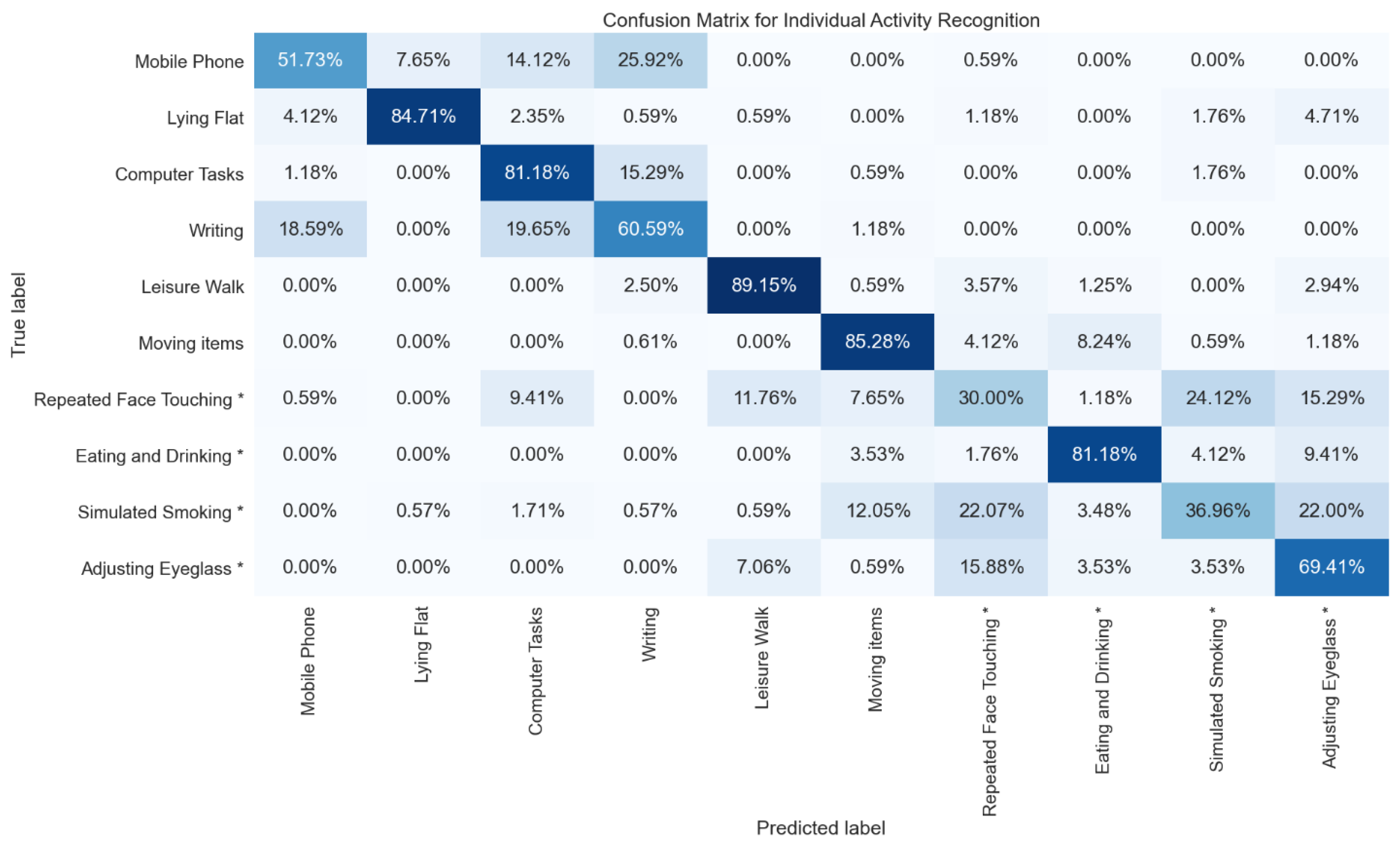

| Classifier | Accuracy | Recall | Precision | F1-Score |
|---|---|---|---|---|
| LR | 0.65 (0.10) | 0.66 (0.10) | 0.65 (0.12) | 0.62 (0.12) |
| SVM | 0.66 (0.13) | 0.66 (0.13) | 0.66 (0.14) | 0.64 (0.14) |
| Decision Tree | 0.59 (0.11) | 0.59 (0.11) | 0.61 (0.13) | 0.56 (0.12) |
| Random Forest | 0.70 (0.14) | 0.70 (0.14) | 0.70 (0.16) | 0.67 (0.15) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bai, C.; Chen, Y.-P.; Wolach, A.; Anthony, L.; Mardini, M.T. Using Smartwatches to Detect Face Touching. Sensors 2021, 21, 6528. https://doi.org/10.3390/s21196528
