1. Introduction

With advances in interactive display technology, such displays have become easily accessible, and, as a result, their use in a variety of domains has been investigated. In the educational domain, interactive displays have been increasingly employed to support informal learning [1] in museums and as public displays. Despite their increased use in informal learning scenarios, there is a lack of research on how to create and promote engaging, efficient and collaborative activities around interactive displays to foster learning. A few studies have related the use of interactive displays in informal learning contexts to the level of collaboration and cooperation [2]. To emphasize play, work and learning, the underlying interaction mechanisms should be adaptive to different contexts [1]. This is because a tabletop-like interactive display can be considered a ubiquitous form of learning tool [1]. There is increasing interest in investigating the role of interactive displays in improving collaborative processes in various educational contexts, which has resulted in a consolidation of knowledge and guidelines about the use of, and expectations from, interactive displays. For example, Higgins et al. [3,4] analyzed the pedagogic opportunities offered by the interactive features of such displays using a typology of features. On the other hand, Dillenbourg and Evans [1] pointed out the risks of over-generalization and over-expectation regarding interactive displays. However, past investigations are limited in scope, as they focused on enjoyment, fun and engagement-related experiences with interactive displays [2,5]. Such studies do not address the relation among collaboration around interactive displays, engagement and learning gain. One study that did examine these relations found no significant difference in learning gains between traditional learning methods and collaborative learning supported by interactive displays [6]. However, students who collaborated and interacted with the displays reported significantly higher levels of fun and engagement [6]. The present work is motivated by such contributions and proposes further research toward understanding and implementing better ways to engage and learn collaboratively with interactive displays.

Prior studies have shown the potential of collaborative eye-tracking (also known as dual eye-tracking, or DUET) for explaining the cognitive mechanisms responsible for the quality or outcome of collaboration. Previous DUET studies have shown that eye-tracking data can explain task-based performance [7], collaboration quality [8], expertise [9] and learning outcomes [10]. However, to the best of our knowledge, the present study is the first to use DUET in an informal learning scenario to study collaborative eye-tracking data while combining physical and digital collaborative spaces.

Moreover, there is a recent trend shown in various learning scenarios to use the physiological data sources to explain the learning processes or to study the relation between the different variables influencing the learning gains or experiences [

11,

12,

13,

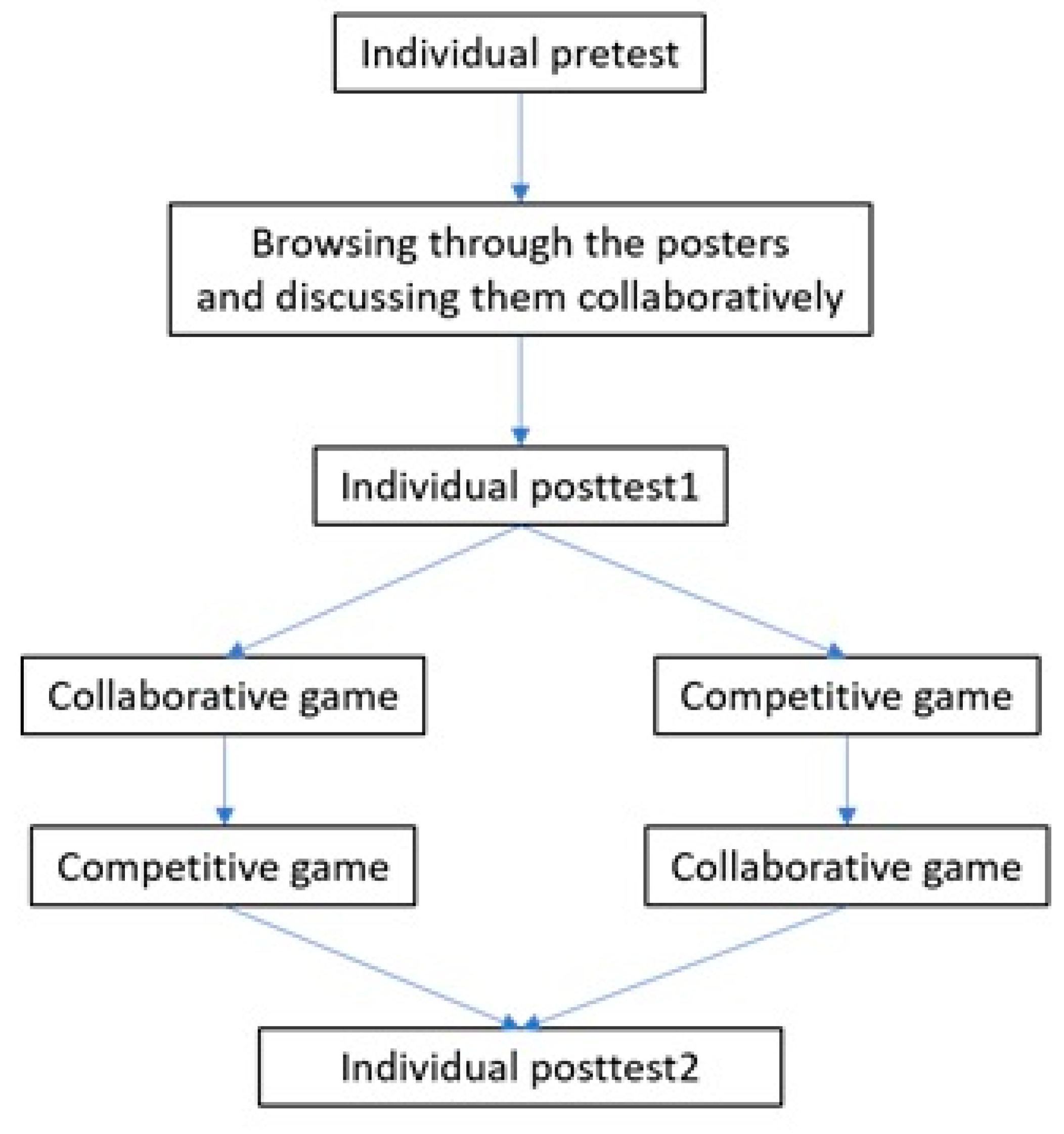

14]. In this contribution, we utilize DUET to understand how learners collaborate, engage with the content and learn with interactive displays in informal educational settings. To do so, we designed an application which enhances the capacities of the interactive display and then designed and conducted a two-staged within-group experiment. In the experiment, participants were asked to go through a set of posters and interact with the application (both in collaborative and competitive ways). We recorded the gaze of the participants while they were watching the posters and while they were interacting with the application; at the end of each session, we asked them to fill in some content specific questions and attitudinal questions. Utilizing the data from this study, we focus on how to explain the relation between the learning gains in an informal learning setting, engagement via game-play and collaboration via DUET data. More precisely, this paper contributes to the state-of-the-art in the following ways:

- We present the implications of the first (to the best of our knowledge) collaborative eye-tracking study in an informal learning setting.
- We present a method to analyze and compare the collaborative interaction of peers across different tasks (learning and gaming) and mediating interfaces (physical and digital).
- We provide an empirical extension of the theoretical framework of collaborative learning mechanisms (CLM).

2. Theoretical Background in Informal Learning and Collaborative Learning

Recent computer-supported collaborative learning (CSCL) research on informal learning has focused on the process of collaborative learning rather than on the outcome of the collaborative effort. For example, Tissenbaum et al. [15] proposed a framework to analyze the verbal and physical interaction of peers around an interactive tabletop environment and showed that a common learning goal is not a necessary condition for the success of collaborative learning sessions. Similarly, Shapiro et al. [16] proposed a framework to visualize and analyze the interaction and movement of participants during museum visits based on video coding. Davis et al. [17] analyzed the interaction between peers in a dyad to understand how next-step negotiation took place and how common understanding was attained. One common theme across all these studies is the qualitative nature of their analyses. In this paper, we take a more quantitative view of the problem at hand, namely, analyzing the collaborative learning process in informal learning contexts.

One of the theoretical frameworks used to analyze collaborative learning in informal settings is the collaborative learning mechanisms (CLM) framework [18]. The CLM framework is defined across two dimensions that explain the different mechanisms underlying collaborative learning in informal settings: collaborative discussion and coordination of collaboration. Collaborative discussion concerns moments of negotiation and suggestion, while coordination of collaboration concerns moments of joint attention and narration. In this way, the analysis of collaborative sessions covers both verbal and physical interactions [18]. CLM provides a way to analyze the process of collaborative learning, which has attracted considerable attention in recent CSCL research [19,20].

There have been other extensions of CLM that provide in-depth analysis mechanisms for studying informal collaborative learning processes, such as divergent CLM (DCLM, Tissenbaum et al. [15]). The DCLM framework does not necessarily assume that the goal of collaborative learning is to create a mutual understanding among the peers in a group (or dyad). However, for this contribution, we base our guiding principle on convergent conceptual change (CCC) [21]. The CCC theory suggests that, in a collaborative learning scenario, learners engage in collaborative (joint) tasks. These tasks help reduce the initial differences in the peers' understanding of the domain concepts. This reduction is due to the processes of argumentation, negotiation and acceptance of suggestions [22]. The convergence could also result from communication and the resulting socio-cognitive processes within the group that support a collective knowledge structure, or mutually shared cognition, through the formation of shared mental models [23].

One of the common factors that most of the studies in the field of CSCL and informal learning have focused on is the analyses methods. Most of the studies mentioned in the previous sections of the exemplar studies from the theoretical contributions focused on analyzing the dialogues and gestures (manually annotated) and video analyses. This is a significant lack of automatic analyses methods that can be used to further provide feedback to the learners about their individual and/or collaborative learning processes [

19]. CLM is partially based on CCC because one of the assumptions for the framework is that the main motivation of the collaborating peers is creating and sustaining mutual understanding [

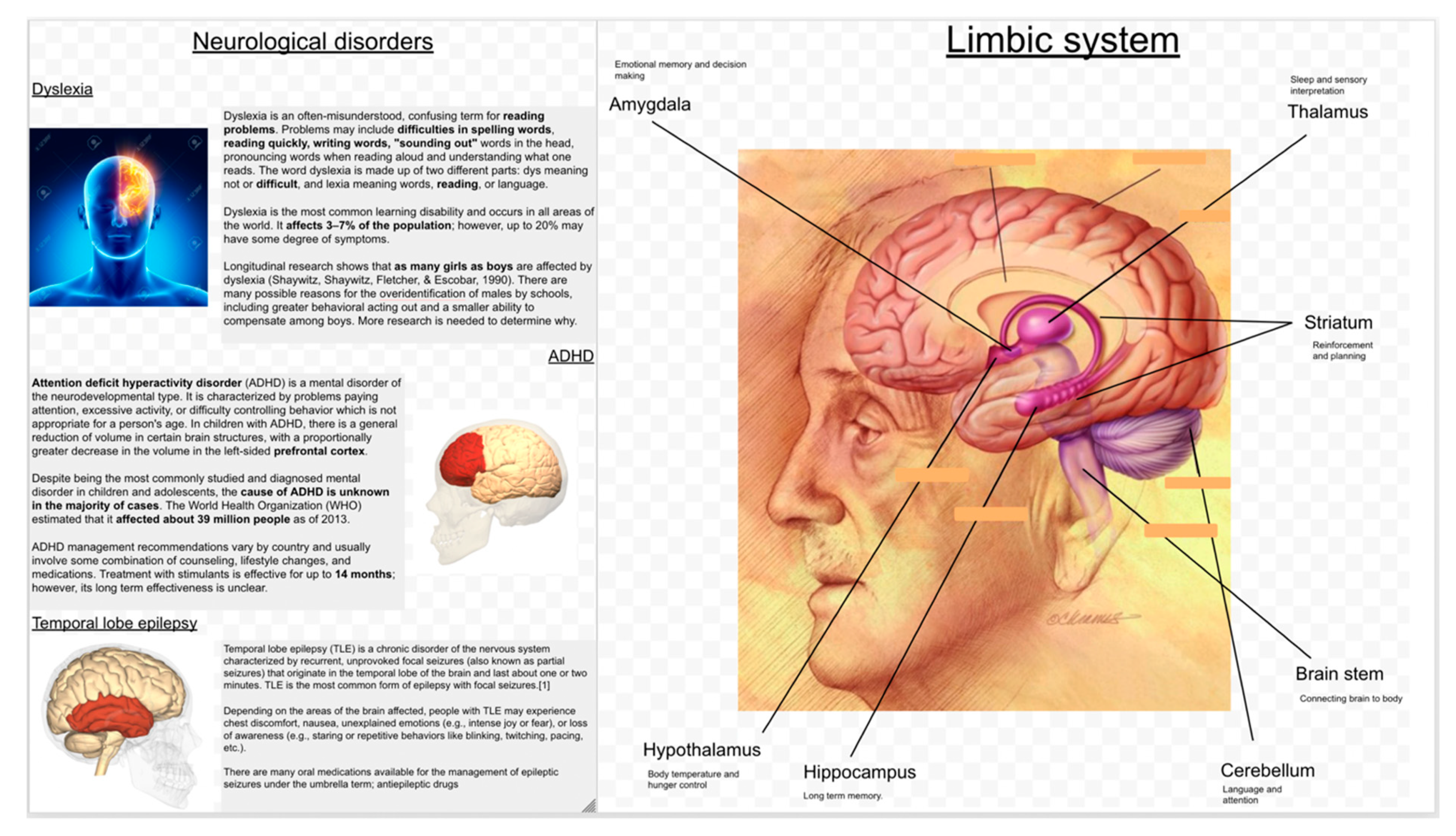

18]. This is quite like the scenario that we present via this contribution, where the participants must work in dyads to be able to understand a few basic neurological concepts (see Section “Research design”).

One might argue that museum visitors have diverse goals; for example, adults accompanying children might have different learning goals from those of the children themselves [24]. Thus, CCC might not be the best-suited theoretical model for such “free-choice” learning contexts. There are two counterarguments. First, according to Dillenbourg [25], such situations are still collaborative; second, the present contribution is not a typical “free-choice” environment. The main objective of the “museum-like” environment was to teach students about basic neurological concepts. Hence, there is an opportunity for convergent learning. Therefore, we argue that CCC, and by extension CLM, are still applicable in our case.

In this contribution, we extend the CLM framework by focusing on automatic analyses of collaborative learning in informal settings via multimodal data. We use DUET to measure joint attention among the collaborating partners and compare the gaze similarity (a measure that captures the convergence of the eye-tracking patterns) of pairs with different levels of performance and learning gains. We also use automatically extracted speech segments to compare joint attention and other eye-tracking measurements (see Section “Measurements”) while the peers engage in dialogue. By analyzing CSCL situations automatically, we can not only understand the process faster than with manual tagging of events, but might also be able to predict outcomes and experiences in real time or with fewer data points [20,26]. This opens new avenues for real-time analysis of multimodal data to support CSCL.

6. Discussion and Conclusions

We presented a dual eye-tracking study in the context of an informal collaborative setting. Dyads of participants were asked to explore and discuss the material provided in a “museum-like” setup. They were then required to play a gamified quiz. In this contribution, we used automatic gaze and speech analyses to explain the collaborative learning mechanisms (CLM) involved in the informal learning scenarios.

From the results of the present DUET study we can explain the following:

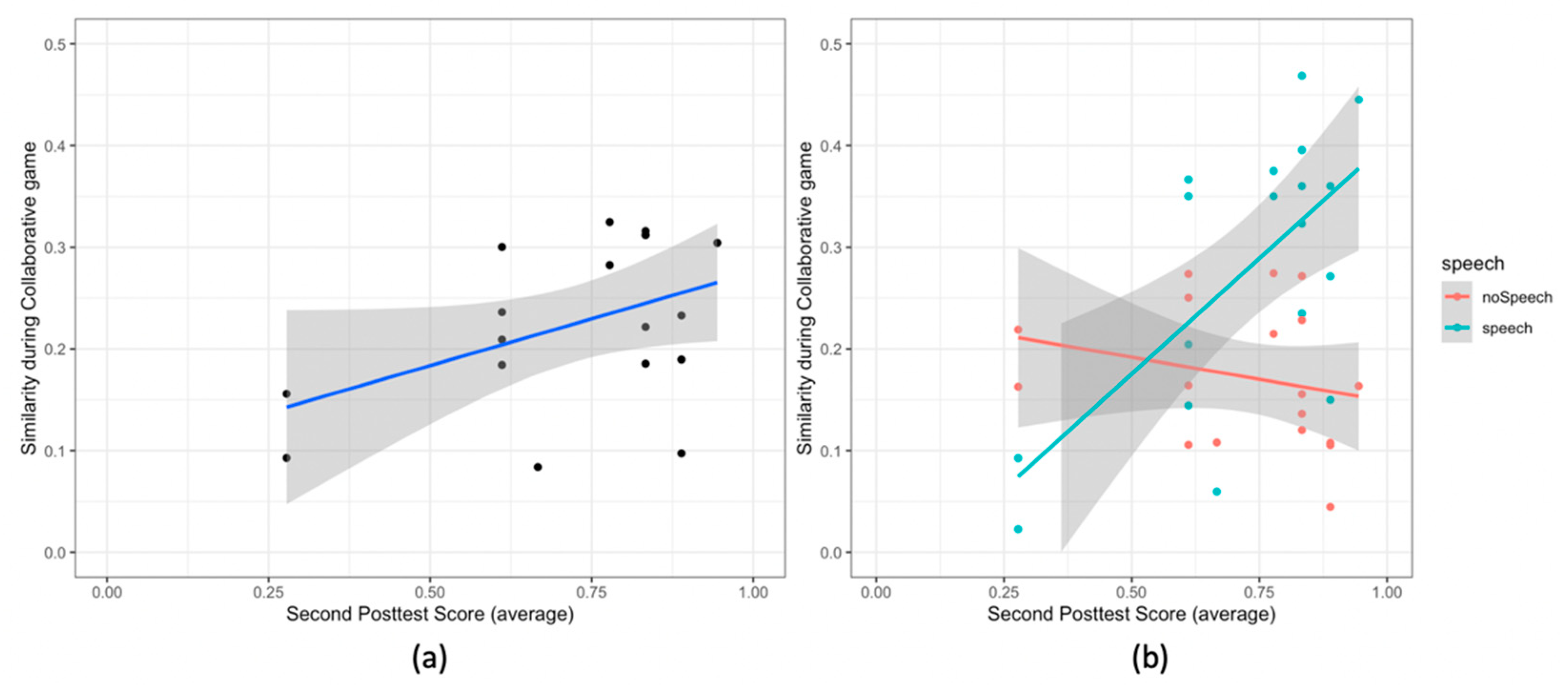

Using the DUET data one can understand both the individual and collaborative learning processes. For example, we observe that those individuals who understood the relation between the text and the visualizations correctly (high number of image to text and back transitions) had higher learning gains. Moreover, we also observe that those dyads who put efforts into establishing the common ground (looking at the same thing at the same time while talking about it) learned more (high posttest and game scores).

We also provided an analysis that combined different tasks and showed that it is important to consider the behavior in both the interaction media (physical posters and digital games) to understand the successful learning processes. For example, we observe that the gaze similarity in the poster and game phases, and the scores (posttests and game) are correlated to each other.

Finally, we empirically show how collaborative learning mechanisms could be understood using DUET data. For example, coordination of collaboration, joint attention and narration, can be captured using the overall gaze similarity and the similarity measures during speech episodes. Furthermore, collaborative discussion can be measured using the transition similarity during the speech episodes.

In this section, we elaborate on our contributions and provide detailed implications of our findings.

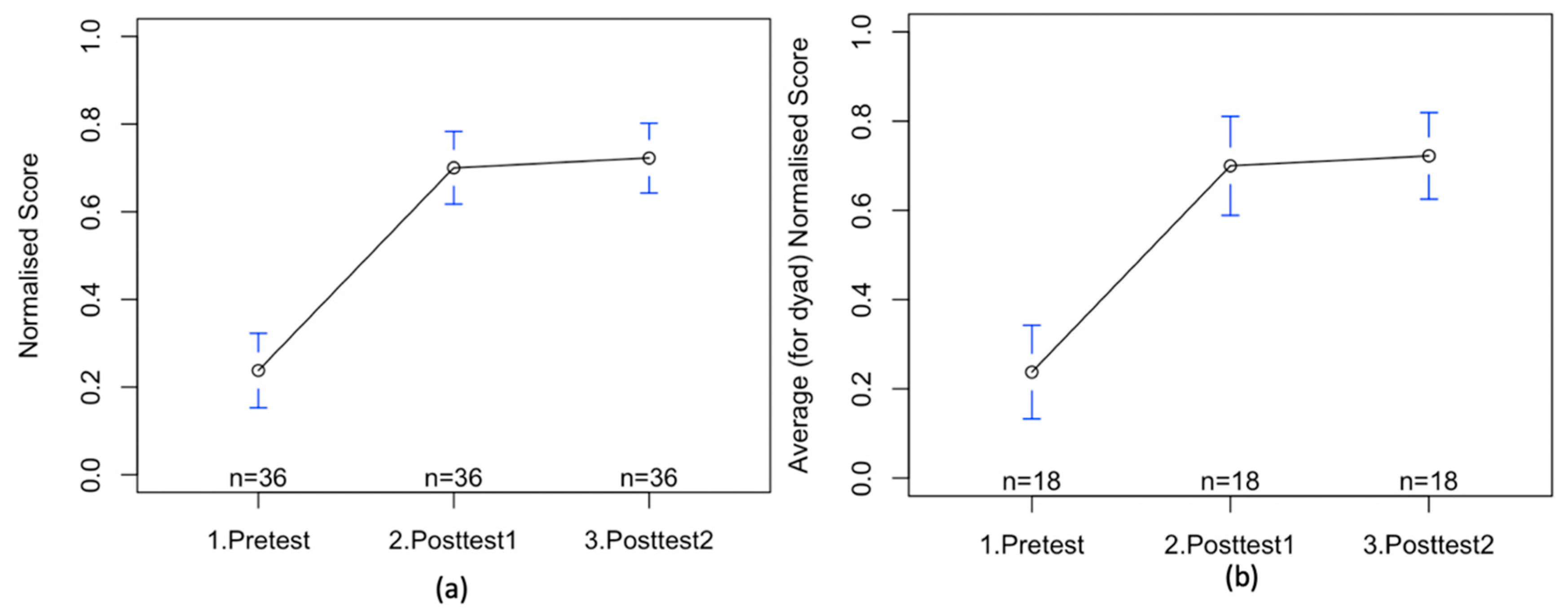

Regarding the game and test scores, the results show no significant improvement from the first to the second posttest score. Given the means and standard deviations of the first (mean = 0.70, sd = 0.24) and the second (mean = 0.72, sd = 0.23) posttest, one can reason that there was very little room for improvement in the second posttest. Nevertheless, there is a slight, nonsignificant improvement. The results also indicate a significant positive correlation between the two posttest scores, which shows that the interactive multi-touch display does not obstruct the learning process of the teams. This is similar to the results reported in prior work by Sluis et al. [37] and Zaharias et al. [6]. These two studies also report that, although interactive displays do not significantly increase learning gains, they do not hinder them either. Concerning the game score and the learning gain of students, the results show a positive correlation between the two quantities. Sangin et al. [75], in a collaborative concept-map task, also reported a strong, positive and significant correlation between learning gains and the task-based performance of a team.

The eye-tracking results presented in the previous section reveal two behavioral gaze patterns (individual AOI transitions and collaborative gaze/transition similarity), both of which are correlated with participants’ learning outcomes.

Concerning the individual gaze patterns reported in this study, gaze transitions between images and the corresponding text pieces play an important role in explaining the posttest scores. The results show that students with higher first posttest scores connect text and images/graphics better and more frequently than students with low first posttest scores. The complementarity of the information presented in textual form and in images is of utmost importance for understanding learning material (as suggested by Meyer [76]). By connecting the correct pieces of text to the appropriate image, students understand the content better and hence obtain a high first posttest score; students who fail to do so end up with a low first posttest score. In two separate eye-tracking studies, Sharma et al. [50] and Strobel et al. [77] showed that understanding the content through the complementary information presented in different formats was a key process when learning with video lectures [8,50] or printed content [77].
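The image-to-text transitions discussed above can be quantified from a fixation stream labelled with areas of interest (AOIs). The sketch below is illustrative only: the `"img"`/`"txt"` label prefixes and the function name `image_text_transition_ratio` are hypothetical, and the metric (the share of AOI-to-AOI transitions that connect an image with a text) is an assumed operationalization, not necessarily the exact measure used in this study. It also does not check whether a text piece actually matches the image it is linked to; that would require a study-specific mapping between AOIs.

```python
from typing import List, Sequence


def image_text_transition_ratio(aoi_sequence: Sequence[str]) -> float:
    """Return the share of AOI-to-AOI transitions that link an image AOI and a text AOI.

    Hypothetical labelling convention: image AOIs start with "img", text AOIs with "txt".
    Consecutive samples on the same AOI count as one visit, not as a transition.
    """
    # Collapse runs of identical labels into single visits.
    visits: List[str] = []
    for label in aoi_sequence:
        if not visits or visits[-1] != label:
            visits.append(label)

    transitions = list(zip(visits, visits[1:]))
    if not transitions:
        return 0.0

    # A transition is cross-modal if one end is an image and the other is a text piece.
    cross = sum(1 for a, b in transitions if {a[:3], b[:3]} == {"img", "txt"})
    return cross / len(transitions)


# Hypothetical stream: txt1 -> img1 -> txt1 -> txt2 -> img2 (3 of 4 transitions are cross-modal)
print(image_text_transition_ratio(["txt1", "txt1", "img1", "txt1", "txt2", "img2"]))  # 0.75
```

Computed per participant, such a ratio could then be compared between students with high and low first posttest scores.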

Concerning the collaborative gaze patterns reported in this study, the gaze similarity between the peers reflects the amount of time the partners spent looking together at similar sets of objects in their fields of view. This contribution measures the joint attention of the partners using gaze similarity. Joint attention is one of the key aspects of creating and sustaining high levels of mutual understanding. The results show a significant positive correlation between the knowledge obtained and the gaze similarity of the pair. One plausible reason for this correlation is that, while looking at similar objects, the peers might discuss and reflect upon the content of the posters, which in turn sustains a high level of mutual understanding within the team. Conversely, this process might be difficult if the peers are not looking at similar sets of objects together. Similar results have been reported in different educational contexts [5,10,50,51,53,59]. These contributions have shown that gaze cross-recurrence (or gaze similarity) is correlated with learning outcomes and/or task-based performance in collaborative settings such as collaborative programming [10,78], tangible interaction [5,51], concept maps [50] and listening comprehension [53,54]. We found the gaze similarity during the collaborative phase of the game to be significantly higher than during the competitive phase. This verifies and quantifies findings from relevant qualitative studies [63,64], which argue that collaboration promotes different behavior from competition, demonstrating a trait-like individual difference among students.
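The exact definition of gaze similarity is given in the Measurements section and is not reproduced here. As a minimal sketch, assuming each peer's gaze is available as a time-aligned stream of AOI labels (one per sample) and that similarity is the cosine similarity between the two peers' AOI dwell counts within fixed-size windows (an assumption, not necessarily the authors' formula), per-window gaze similarity could be computed as follows. The function names and the window size are hypothetical.

```python
from collections import Counter
from math import sqrt
from typing import List, Sequence


def cosine(c1: Counter, c2: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(c1[k] * c2[k] for k in c1.keys() & c2.keys())
    norm = sqrt(sum(v * v for v in c1.values()) * sum(v * v for v in c2.values()))
    return dot / norm if norm else 0.0


def windowed_gaze_similarity(gaze_a: Sequence[str], gaze_b: Sequence[str],
                             window: int) -> List[float]:
    """Per-window similarity of two peers' AOI dwell distributions.

    gaze_a and gaze_b are hypothetical, time-aligned streams with one AOI label per sample.
    """
    if len(gaze_a) != len(gaze_b):
        raise ValueError("gaze streams must be time-aligned and of equal length")
    if window <= 0:
        raise ValueError("window must be positive")
    sims = []
    for start in range(0, len(gaze_a), window):
        # Dwell counts: how many samples each peer spent on each AOI in this window.
        dwell_a = Counter(gaze_a[start:start + window])
        dwell_b = Counter(gaze_b[start:start + window])
        sims.append(cosine(dwell_a, dwell_b))
    return sims


# Peers share AOI p1 in the first window, then look at different AOIs.
print(windowed_gaze_similarity(["p1", "p1", "p2", "p2"], ["p1", "p1", "p3", "p3"], window=2))  # [1.0, 0.0]
```

Averaging the returned list gives one value per dyad and phase. Under this definition, two peers reading the same question at the same time in the competitive phase would still yield a non-zero similarity.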

We also compared the gaze similarity during the collaborative and the competitive phases of the game. The results show that the gaze similarity during the collaborative phase is significantly higher than during the competitive phase, whereas the scores in the two phases were not significantly different. The difference in collaborative gaze behavior between the two game phases is driven by the demands of each phase. For example, during the collaborative phase the peers needed to discuss the answer before answering the question, which would lead them to point to different parts of the question and to the multiple choices available to them. This would in turn increase the gaze similarity, since our results show that gaze similarity increases during speech episodes. During the competitive phase, in contrast, no such dialogue is necessary, and most peers answered the questions quietly, which could also explain the lower gaze similarity. One might argue that the gaze similarity should be zero in the competitive phase. However, this is not the case: the questions were shown to both peers at the same time, so they would read part (or all) of each question simultaneously. This results in a non-zero gaze similarity during the competitive phase, albeit one significantly lower than during the collaborative phase.
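The text does not state which paired test was used for the collaborative versus competitive comparison. One distribution-free option for such a within-dyad comparison is an exact sign-flip permutation test on the per-dyad differences; the sketch below illustrates that technique only and is not the authors' analysis. The function name `paired_sign_flip_test` is hypothetical, and its inputs are assumed to be one mean gaze-similarity value per dyad and phase.

```python
from itertools import product
from typing import Sequence


def paired_sign_flip_test(collab: Sequence[float], compet: Sequence[float]) -> float:
    """Exact two-sided sign-flip permutation test for paired samples.

    Under H0 the sign of each per-dyad difference is exchangeable, so the p-value is
    the share of all 2**n sign assignments whose |mean difference| is at least as
    large as the observed one. Full enumeration is only practical for small n (~20).
    """
    if len(collab) != len(compet) or not collab:
        raise ValueError("need two non-empty paired samples of equal length")
    diffs = [a - b for a, b in zip(collab, compet)]
    n = len(diffs)
    observed = abs(sum(diffs)) / n
    hits = 0
    for signs in product((1, -1), repeat=n):
        stat = abs(sum(s * d for s, d in zip(signs, diffs))) / n
        if stat >= observed - 1e-12:  # small tolerance for floating-point ties
            hits += 1
    return hits / 2 ** n


# Three hypothetical dyads, each more similar in the collaborative phase.
p = paired_sign_flip_test([0.5, 0.6, 0.7], [0.2, 0.3, 0.4])  # 0.25
```

With only three dyads, even a perfectly consistent difference cannot reach p < 0.25, which illustrates why the sample size discussed in the limitations matters for such comparisons.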

We also computed the transition similarity between the peers in a dyad. Transition similarity is an extension of gaze similarity. Gaze similarity captures whether the two peers are looking at similar sets of objects in their respective fields of view in a given time window, whereas transition similarity captures whether the peers also shift their attention among the different objects in a similar manner. In other words, gaze similarity is the similarity in attention distribution, while transition similarity is the similarity in attention changes. We found that transition similarity is higher for pairs with high posttest scores than for pairs with low posttest scores. This shows that the pairs with high posttest scores not only focused on similar objects but also shifted their focus in similar ways.
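The distinction between attention distribution and attention changes can be made concrete with a small sketch. Assuming, as a hypothetical operationalization rather than the study's exact definition, that transition similarity is the cosine similarity between the two peers' counts of (from AOI, to AOI) transitions within a window, two peers can spend identical time on each AOI and still have zero transition similarity if they move between the AOIs in opposite directions. The function names are illustrative.

```python
from collections import Counter
from math import sqrt
from typing import Sequence


def transition_counts(aois: Sequence[str]) -> Counter:
    """Count (from_aoi, to_aoi) transitions between consecutive, distinct AOI samples."""
    return Counter((a, b) for a, b in zip(aois, aois[1:]) if a != b)


def transition_similarity(gaze_a: Sequence[str], gaze_b: Sequence[str]) -> float:
    """Cosine similarity of the two peers' transition-count vectors within one window."""
    ta, tb = transition_counts(gaze_a), transition_counts(gaze_b)
    dot = sum(ta[k] * tb[k] for k in ta.keys() & tb.keys())
    norm = sqrt(sum(v * v for v in ta.values()) * sum(v * v for v in tb.values()))
    return dot / norm if norm else 0.0  # no transitions at all -> 0.0


# Same dwell time on p1 and p2, but opposite order: attention shifts do not match.
print(transition_similarity(["p1", "p1", "p2", "p2"], ["p2", "p2", "p1", "p1"]))  # 0.0
# Same path p1 -> p2 -> p3 at a slightly different pace: attention shifts match.
print(transition_similarity(["p1", "p2", "p3"], ["p1", "p1", "p2", "p3"]))  # 1.0
```

In this sketch the order of AOI visits matters, which is exactly what a dwell-based gaze similarity ignores.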

Furthermore, this paper presents the relationship between the collaborative gaze patterns in the two very different phases of the experiment: the poster phase, which was physical, and the game phase, which was digital. Another difference between the two phases was rooted in the experimental script. In the poster/physical phase, the students were not required or asked to collaborate; they were simply told to “watch the posters as if they were in a museum with their friend”. This made collaboration during the poster phase a voluntary effort. In contrast, during the game/digital phase, the participants collaborated as prescribed by the script. Despite these two major differences, the gaze similarities in the poster/physical and the game/digital phases were significantly and positively correlated. This result supports the interaction styles hypothesis of Sharma et al. [50], which states that in different collaborative settings, partners interact in two primary ways: “Looking AT” and “Looking THROUGH”. While Looking AT (teams with low gaze similarity in the poster and game phases), the partners use the content as the main interaction point, whereas while Looking THROUGH (teams with high gaze similarity in the poster and game phases), the partners use the content as a medium to communicate and ground the conversation.
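The between-phase relation above is a correlation across dyads between two per-dyad values (for example, mean gaze similarity in the poster phase and in the game phase). The text does not specify the coefficient used; as a minimal sketch assuming Pearson's r, it can be computed as follows. The function name `pearson_r` is illustrative; in Python 3.10 and later, `statistics.correlation` computes the same coefficient.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    # Co-deviation and deviations of each sample from its mean.
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


# Hypothetical per-dyad values, one entry per dyad in the same order in both lists.
poster_similarity = [0.2, 0.4, 0.6, 0.8]
game_similarity = [0.3, 0.5, 0.4, 0.9]
r = pearson_r(poster_similarity, game_similarity)
```

A positive r means that dyads with high gaze similarity in one phase tend to have high similarity in the other, which is the pattern the Looking AT / Looking THROUGH interpretation relies on.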

Finally, comparing the gaze patterns across speech segments, we observe that speech episodes have higher gaze and transition similarity than no-speech episodes, and that this difference is wider for participants with high posttest scores. This difference exists in both the poster and the game phases. This shows that a higher amount of joint attention (measured by gaze similarity) and of similar attention shifts (measured by transition similarity) accompanies the verbal actions of peers in a dyad. Additionally, gaze similarity is higher during the moments when participants were using the power-ups in the collaborative version of the game. This suggests that beneficial coordinated actions and joint attention episodes occur together.
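The speech versus no-speech comparison amounts to splitting per-window similarity values by a speech flag and comparing the two groups. The sketch below assumes, hypothetically, that each similarity window has already been flagged as containing speech or not (for example, by an automatic voice activity detector); the function name and inputs are illustrative, and the values can be either gaze or transition similarity.

```python
from statistics import mean
from typing import Sequence, Tuple


def speech_contrast(similarity: Sequence[float], speaking: Sequence[bool]) -> Tuple[float, float]:
    """Return (mean similarity in speech windows, mean similarity in no-speech windows).

    `speaking` is a hypothetical per-window flag aligned with `similarity`.
    """
    if len(similarity) != len(speaking):
        raise ValueError("one speech flag per similarity window is required")
    talk = [s for s, flag in zip(similarity, speaking) if flag]
    quiet = [s for s, flag in zip(similarity, speaking) if not flag]
    if not talk or not quiet:
        raise ValueError("need at least one speech and one no-speech window")
    return mean(talk), mean(quiet)


speech_mean, silence_mean = speech_contrast([0.8, 0.6, 0.2, 0.4], [True, True, False, False])
print(round(speech_mean - silence_mean, 2))  # 0.4
```

Computing this difference per dyad would allow checking whether the gap is wider for pairs with high posttest scores, as reported above.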

The correlation between the dyads' average posttest scores and the measures of their joint attention indicates a convergence of conceptual change. These results also show that CLM can be applied automatically, at least to some extent. For example, coordination of collaboration (joint attention and narration) can be captured using the overall gaze similarity and the similarity measures during speech episodes. The three-way relation among the posttest score, gaze similarity and the type of speech episode indicates a process responsible for creating and sustaining mutual understanding through references. Previous research has shown a close relation between mutual understanding and joint attention [10,79].

On the other hand, collaborative discussion can be measured using the transition similarity during speech episodes. Transition similarity indicates a deeper level of mutual understanding, as it captures not merely the similarity of attention but the similarity between the peers' shifts of attention. This might result from a more engaging dialogue process than simple deictic references, since high levels of transition similarity co-occur more with speech episodes than with no-speech episodes.

We used a simple script for the present study, which consisted of two phases based on the framework presented by Leftheriotis et al. [40]. In the first, exploration phase, the dyads were asked to go through the learning material in a “museum-like” setting; the second, application phase was the game. The application phase had two substages, a collaborative stage and a competitive stage. The competitive stage used the same game as the collaborative stage, but with the peers playing against each other. The results (learning outcomes, game performance) show no differences depending on which version of the game the team played first, which suggests that collaboration and competition have similar effects on user engagement. This is reflected in the high levels of fun (mean = 6.4, sd = 0.87), excitement (mean = 6.1, sd = 1.04) and enjoyment (mean = 6.2, sd = 0.98) that most users reported on 7-point Likert scales. The users also indicated that having a multi-touch display in such a setting is practical (mean = 6.0, sd = 0.97, 7-point Likert scale). While the pairs were going through the posters, we observed substantial discussion among the team members about the content of the posters. This might reflect the effectiveness of the simple script employed in the study, as previously suggested by Giannakos et al. [27].

In this contribution, we showed that there are individual and collaborative gaze patterns that can explain the learning outcomes and processes of participants in a collaborative informal educational setting, using automatic methods in a museum-like setting and through the use of interactive surfaces. These explanations are consistent with studies conducted in more formal educational settings and with other in-the-wild studies that employ interactive surfaces and collect data using other techniques (e.g., observations, interviews, movement tracking; [43,62]). However, using multimodal learning analytics (in our case, gaze and speech) to understand the learning processes and outcomes around an interactive surface, and comparing those processes with a typical informal learning setting, allows us to extract insights about students’ collaboration and learning in an automated manner. This contributes to the contemporary body of research on the use of interactive displays to support learning and collaboration [1,44] and opens new research avenues, for example, how to incorporate this automated information into the interface and functionalities of interactive displays. With further development, these methods might achieve the same rigor as qualitative analyses of videos and dialogues, which are time-consuming and cumbersome. Moreover, these results will also lead us to design more hands-on activities with interactive displays in informal settings to study their influence on learning outcomes.

The present study is one of the first to use eye-tracking data to examine the relation among gaze, collaboration and learning gain in an informal learning scenario. However, our study has some limitations. Mobile eye-tracking devices are fragile, need frequent recharging and require good calibration, which added time to the experiment; in addition, the equipment is expensive. Due to these limitations, the sample size was relatively small: there were 36 participants in total, most of them male. A small sample size entails lower statistical power. However, for most metrics, the differences we found are statistically significant.

Moreover, the participants were volunteers from our university, which may somewhat limit the generalizability of our results; other sampling methods could have been applied to obtain a more consistent sample in terms of age and reading skills. In addition, the experiment took place in a university lab rather than in a museum or a similar real-life setting. Finally, this study lacked structured qualitative data (e.g., observations and interviews), which would be a fruitful opportunity for further research.

Future studies could compare the role of gaze in alternative learning environments, as well as obtain deeper insights from the longitudinal collection of eye-tracking data. In addition, instead of posters with information, we are in discussions about running a similar study in a museum with real artefacts that participants could also interact with, in order to understand the role of gaze during collaborative interaction in a real museum setting.