Evaluation of Vineyard Cropping Systems Using On-Board RGB-Depth Perception

Abstract

1. Introduction

2. Materials and Methods

2.1. Site Location

2.2. Sampling System

2.3. Data Processing

2.4. Statistical Analysis

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Response | Regressor | Treatment | Estimate | Std. Error | p Value | Lower 95% CI | Upper 95% CI |

|---|---|---|---|---|---|---|---|

| Vineyard dry biomass | Kinect volume | c | 0.10 | 1.64 | 0.95 | −3.48 | 3.68 |

| d | 2.05 | 1.40 | 0.17 | −1.00 | 5.11 | ||

| f | 3.02 | 4.76 | 0.54 | −7.34 | 13.38 | ||

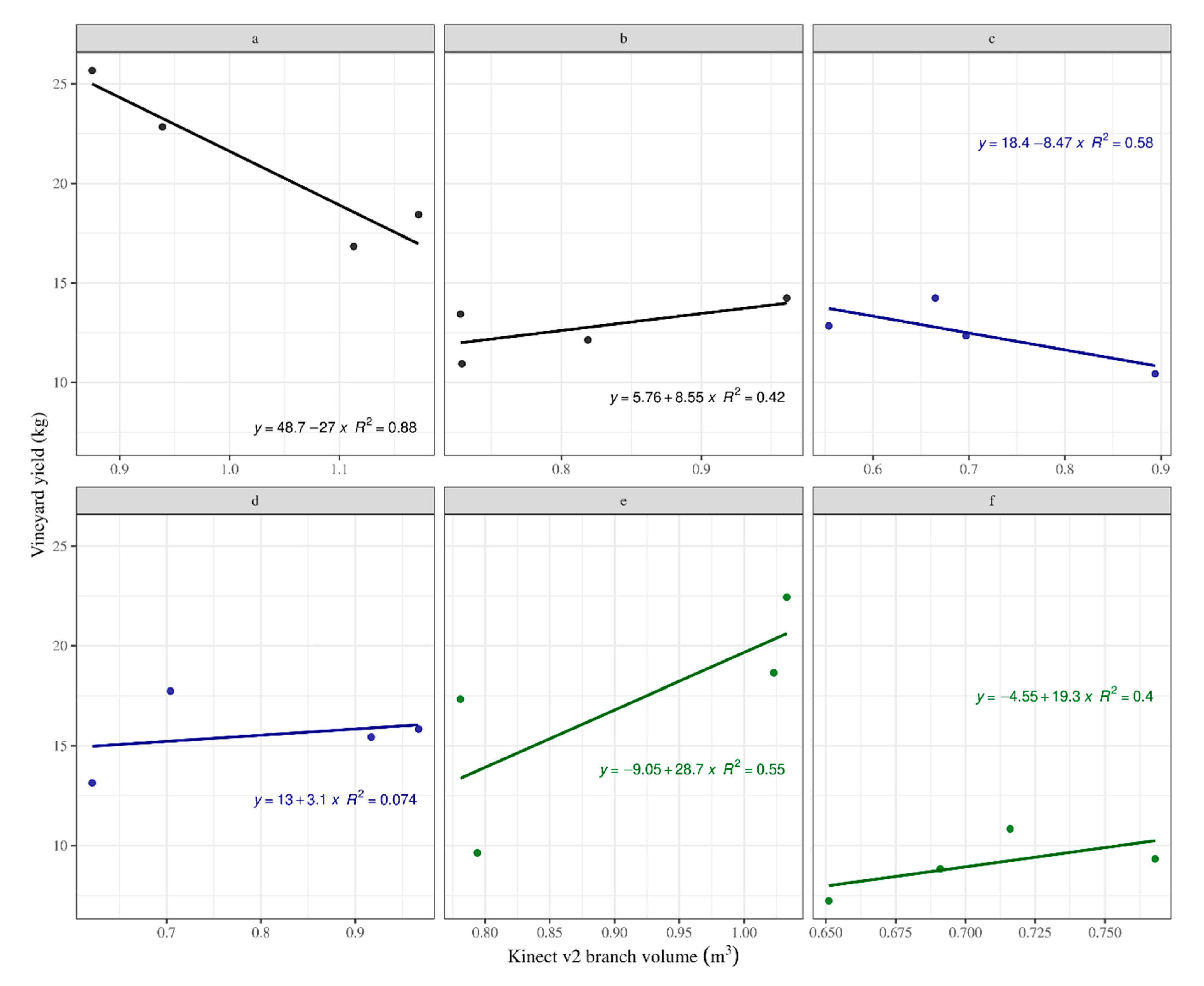

| Vineyard yield | Kinect volume | b | 8.55 | 12.26 | 0.50 | −18.15 | 35.26 |

| d | 3.10 | 8.10 | 0.71 | −14.54 | 20.75 | ||

| e | 28.71 | 9.68 | 0.01 | 7.62 | 49.80 | ||

| f | 19.26 | 27.48 | 0.50 | −40.61 | 79.13 | ||

| Vineyard dry biomass | Vineyard yield | a | 0.15 | 0.05 | 0.02 | 0.03 | 0.27 |

| b | 0.02 | 0.15 | 0.88 | −0.31 | 0.36 | ||

| c | 0.15 | 0.14 | 0.30 | −0.15 | 0.46 | ||

| d | 0.23 | 0.12 | 0.07 | −0.02 | 0.49 | ||

| f | 0.16 | 0.15 | 0.29 | −0.16 | 0.49 |
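The interval columns of the table can be cross-checked against the Estimate and Std. Error columns. The sketch below is not the authors' analysis code. It assumes each bound is Estimate minus/plus t × Std. Error, using a two-sided 95% Student's t critical value. The residual degrees of freedom are not reported here, so the value 2.179 (t for 12 df) is an assumption: it is chosen because it reproduces every reported bound to within about 0.02, a gap consistent with the two-decimal rounding of the table. The sketch also flags rows whose interval excludes zero. Under the same assumption, these rows should be exactly the ones with p < 0.05: treatment e for yield on Kinect volume, and treatment a for dry biomass on yield.

```python
# Minimal sketch (not the authors' analysis code): recompute the 95% CI
# bounds in the table as estimate -/+ t_crit * std_error.
# ASSUMPTION: t-based intervals with 12 residual degrees of freedom;
# the source does not state the df, 2.179 merely reproduces its bounds.
from typing import NamedTuple, Tuple

T_CRIT_95_DF12 = 2.179  # two-sided 95% critical value of Student's t, df = 12


class Row(NamedTuple):
    response: str
    regressor: str
    treatment: str
    estimate: float
    std_error: float
    p_value: float
    lower: float  # reported lower 95% bound
    upper: float  # reported upper 95% bound


# Values copied from the table above.
ROWS = [
    Row("Vineyard dry biomass", "Kinect volume", "c", 0.10, 1.64, 0.95, -3.48, 3.68),
    Row("Vineyard dry biomass", "Kinect volume", "d", 2.05, 1.40, 0.17, -1.00, 5.11),
    Row("Vineyard dry biomass", "Kinect volume", "f", 3.02, 4.76, 0.54, -7.34, 13.38),
    Row("Vineyard yield", "Kinect volume", "b", 8.55, 12.26, 0.50, -18.15, 35.26),
    Row("Vineyard yield", "Kinect volume", "d", 3.10, 8.10, 0.71, -14.54, 20.75),
    Row("Vineyard yield", "Kinect volume", "e", 28.71, 9.68, 0.01, 7.62, 49.80),
    Row("Vineyard yield", "Kinect volume", "f", 19.26, 27.48, 0.50, -40.61, 79.13),
    Row("Vineyard dry biomass", "Vineyard yield", "a", 0.15, 0.05, 0.02, 0.03, 0.27),
    Row("Vineyard dry biomass", "Vineyard yield", "b", 0.02, 0.15, 0.88, -0.31, 0.36),
    Row("Vineyard dry biomass", "Vineyard yield", "c", 0.15, 0.14, 0.30, -0.15, 0.46),
    Row("Vineyard dry biomass", "Vineyard yield", "d", 0.23, 0.12, 0.07, -0.02, 0.49),
    Row("Vineyard dry biomass", "Vineyard yield", "f", 0.16, 0.15, 0.29, -0.16, 0.49),
]


def ci95(estimate: float, std_error: float,
         t_crit: float = T_CRIT_95_DF12) -> Tuple[float, float]:
    """Return (lower, upper) = estimate -/+ t_crit * std_error."""
    half_width = t_crit * std_error
    return estimate - half_width, estimate + half_width


def excludes_zero(lower: float, upper: float) -> bool:
    """True when the whole interval lies on one side of zero."""
    return lower > 0 or upper < 0


if __name__ == "__main__":
    for r in ROWS:
        lo, hi = ci95(r.estimate, r.std_error)
        flag = "excludes 0" if excludes_zero(r.lower, r.upper) else "includes 0"
        print(f"{r.response} ~ {r.regressor} [{r.treatment}]: "
              f"computed [{lo:.2f}, {hi:.2f}], "
              f"reported [{r.lower:.2f}, {r.upper:.2f}], {flag}")
```

Small discrepancies in the second decimal are expected because the Estimate and Std. Error columns are themselves rounded. A larger mismatch in any row would suggest a different degrees-of-freedom value or a non-t interval for that fit.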

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Moreno, H.; Rueda-Ayala, V.; Ribeiro, A.; Bengochea-Guevara, J.; Lopez, J.; Peteinatos, G.; Valero, C.; Andújar, D. Evaluation of Vineyard Cropping Systems Using On-Board RGB-Depth Perception. Sensors 2020, 20, 6912. https://doi.org/10.3390/s20236912
