Automatic Classification of Squat Posture Using Inertial Sensors: Deep Learning Approach

Abstract

1. Introduction

2. Materials and Methods

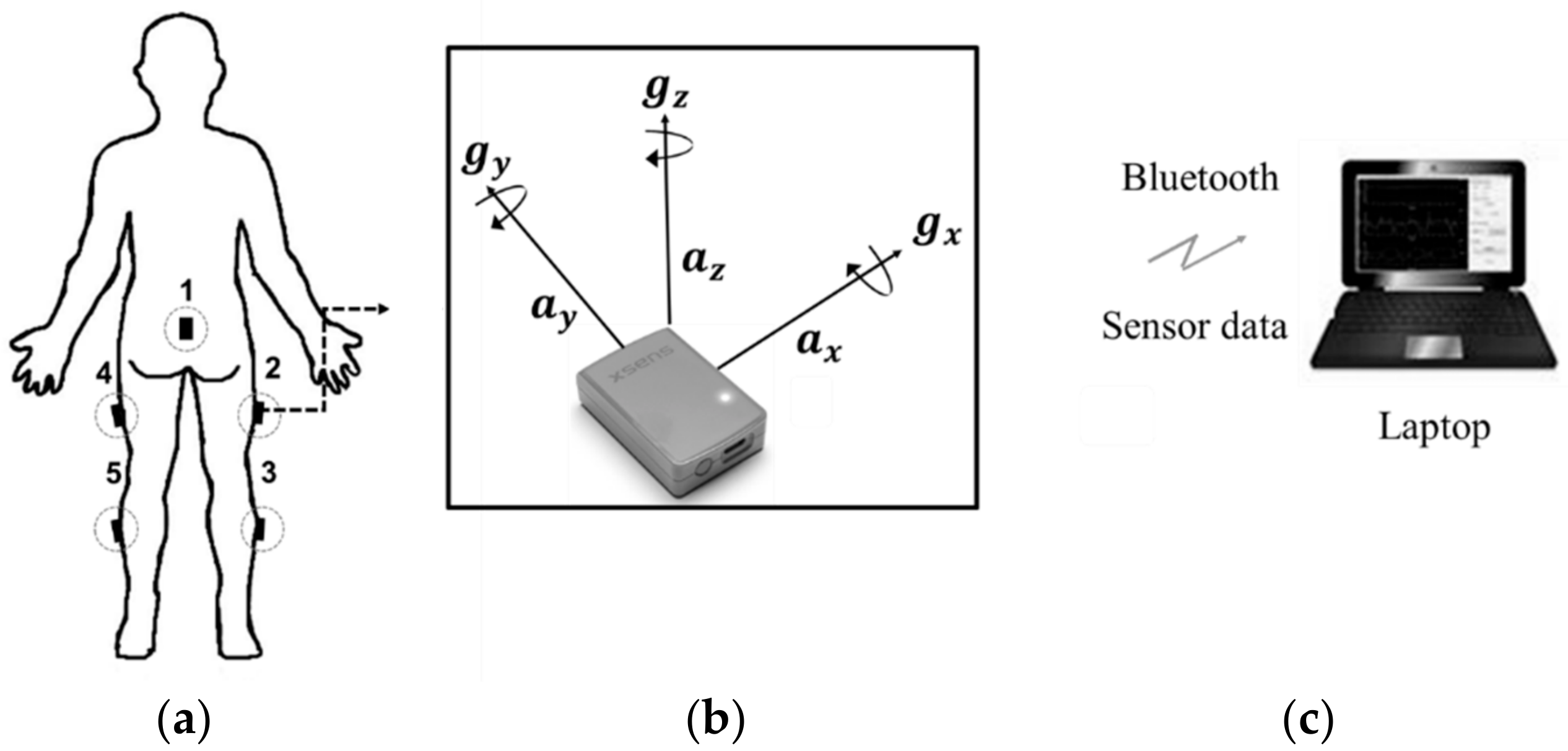

2.1. Measurement Settings and Experimental Protocol

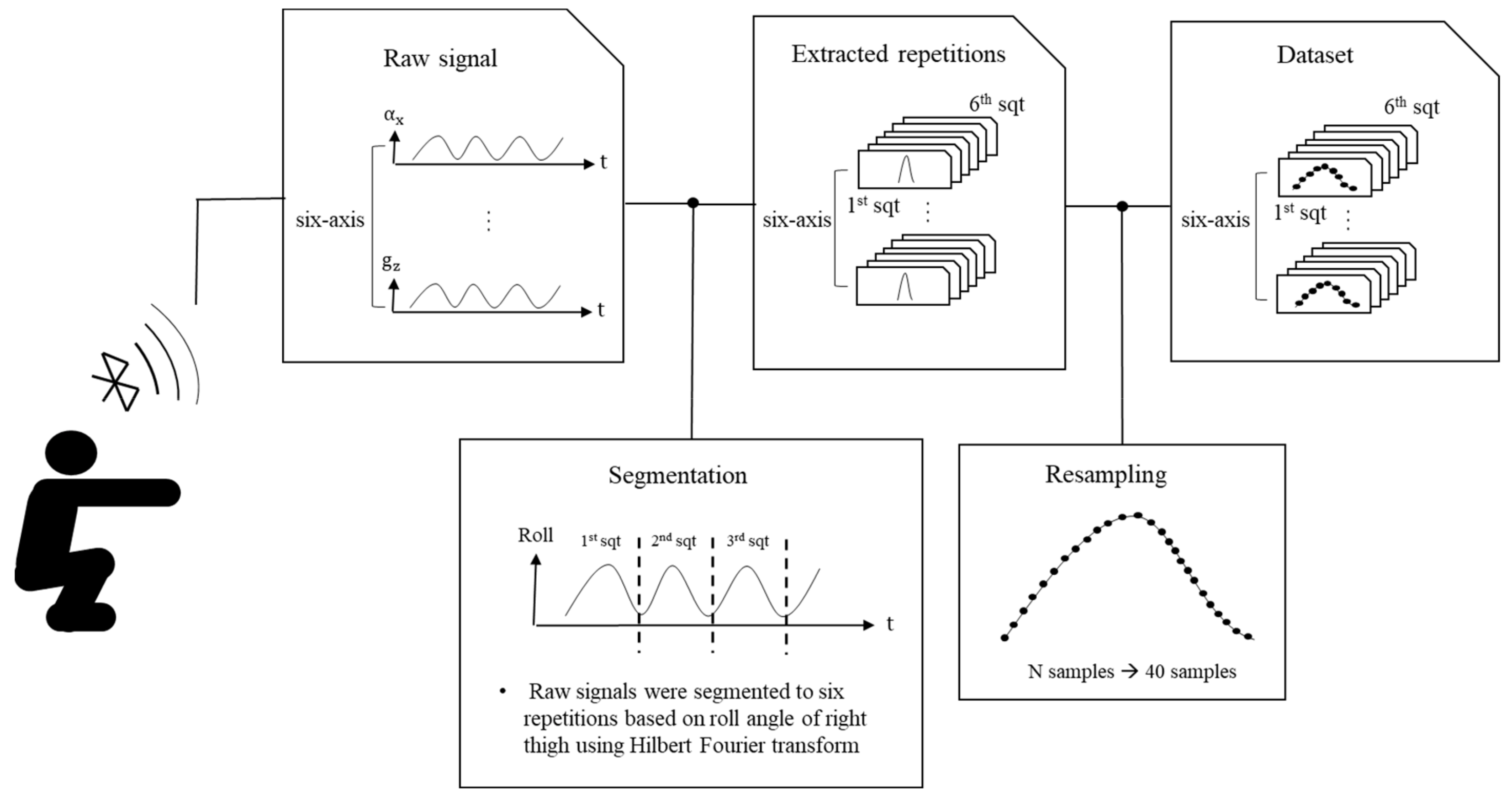

2.2. Preprocessing

2.3. Classification Algorithms

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Squat | Description |
|---|---|
| Acceptable (ACC) | Normal squat |
| Anterior knee (AK) | Knees ahead of toes during exercise |
| Knee valgus (KVG) | Both knees pointing inside during exercise |
| Knee varus (KVR) | Both knees pointing outside during exercise |
| Half squat (HS) | Insufficient squatting depth |
| Bent over (BO) | Excessive flexing of hip and torso |

| Number of IMUs | Placement of IMUs | Random Forest (CML): Accuracy | Random Forest (CML): Sensitivity | Random Forest (CML): Specificity | CNN–LSTM (DL): Accuracy | CNN–LSTM (DL): Sensitivity | CNN–LSTM (DL): Specificity |
|---|---|---|---|---|---|---|---|
| 5 IMUs | Right thigh, right calf, left thigh, left calf, and lumbar region | 75.4% | 78.6% | 90.3% | 91.7% | 90.9% | 94.6% |
| 2 IMUs | Right thigh and lumbar region | 63.2% | 64.6% | 87.6% | 83.9% | 85.6% | 90.4% |
| 2 IMUs | Right thigh and right calf | 73.9% | 76.8% | 89.5% | 88.7% | 90.5% | 95.7% |
| 2 IMUs | Right calf and lumbar region | 66.0% | 70.1% | 86.1% | 86.2% | 87.1% | 87.6% |
| 1 IMU | Right thigh | 58.7% | 66.7% | 88.9% | 80.9% | 80.0% | 93.1% |
| 1 IMU | Right calf | 57.6% | 62.7% | 82.2% | 76.1% | 78.9% | 92.8% |
| 1 IMU | Lumbar region | 34.6% | 38.6% | 68.1% | 46.1% | 50.3% | 79.0% |

(a) Right thigh with DL

| Actual (rows) / Predicted (columns) | ACC | AK | KVG | KVR | HS | BO |
|---|---|---|---|---|---|---|
| ACC | 114 | 29 | 43 | 18 | 0 | 30 |
| AK | 43 | 92 | 14 | 22 | 20 | 43 |
| KVG | 24 | 23 | 170 | 0 | 0 | 17 |
| KVR | 28 | 19 | 3 | 168 | 2 | 14 |
| HS | 0 | 13 | 0 | 4 | 188 | 29 |
| BO | 34 | 46 | 26 | 4 | 34 | 90 |

(b) Lumbar region with DL

| Actual (rows) / Predicted (columns) | ACC | AK | KVG | KVR | HS | BO |
|---|---|---|---|---|---|---|
| ACC | 80 | 21 | 59 | 49 | 13 | 12 |
| AK | 28 | 82 | 11 | 51 | 44 | 18 |
| KVG | 76 | 11 | 105 | 24 | 16 | 2 |
| KVR | 39 | 33 | 22 | 87 | 40 | 13 |
| HS | 6 | 32 | 13 | 19 | 149 | 15 |
| BO | 15 | 31 | 4 | 17 | 23 | 144 |

(c) Right thigh with CML

| Actual (rows) / Predicted (columns) | ACC | AK | KVG | KVR | HS | BO |
|---|---|---|---|---|---|---|
| ACC | 114 | 29 | 43 | 18 | 0 | 30 |
| AK | 43 | 92 | 14 | 22 | 20 | 43 |
| KVG | 24 | 23 | 170 | 0 | 0 | 17 |
| KVR | 28 | 19 | 3 | 168 | 2 | 14 |
| HS | 0 | 13 | 0 | 4 | 188 | 29 |
| BO | 34 | 46 | 26 | 4 | 34 | 90 |

(d) Lumbar region with CML

| Actual (rows) / Predicted (columns) | ACC | AK | KVG | KVR | HS | BO |
|---|---|---|---|---|---|---|
| ACC | 71 | 22 | 62 | 34 | 28 | 17 |
| AK | 33 | 56 | 18 | 46 | 31 | 50 |
| KVG | 62 | 18 | 87 | 17 | 21 | 29 |
| KVR | 41 | 45 | 38 | 52 | 43 | 15 |
| HS | 23 | 31 | 19 | 49 | 74 | 38 |
| BO | 15 | 31 | 20 | 8 | 16 | 144 |
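As a minimal sketch of how accuracy, sensitivity, and specificity can be read off a multi-class confusion matrix, the Python below uses the counts from panel (b), lumbar region with DL. The one-vs-rest definitions and the helper names `overall_accuracy` and `per_class_metrics` are assumptions made for illustration. This excerpt does not state how the reported sensitivity and specificity were aggregated, so the per-class values computed here need not match the summary table, although the overall accuracy does come out at 46.1%.

```python
# Confusion matrix copied from panel (b), lumbar region with DL.
# Rows are actual classes, columns are predicted classes.
LABELS = ["ACC", "AK", "KVG", "KVR", "HS", "BO"]
CM_LUMBAR_DL = [
    [80, 21, 59, 49, 13, 12],
    [28, 82, 11, 51, 44, 18],
    [76, 11, 105, 24, 16, 2],
    [39, 33, 22, 87, 40, 13],
    [6, 32, 13, 19, 149, 15],
    [15, 31, 4, 17, 23, 144],
]


def overall_accuracy(cm):
    """Fraction of samples on the diagonal (correctly classified)."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def per_class_metrics(cm, labels):
    """One-vs-rest sensitivity and specificity for each class (assumed definition)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    metrics = {}
    for k, label in enumerate(labels):
        tp = cm[k][k]                                # actual k, predicted k
        fn = sum(cm[k]) - tp                         # actual k, predicted other
        fp = sum(cm[i][k] for i in range(n)) - tp    # actual other, predicted k
        tn = total - tp - fn - fp                    # everything else
        metrics[label] = {
            "sensitivity": tp / (tp + fn),
            "specificity": tn / (tn + fp),
        }
    return metrics


accuracy = overall_accuracy(CM_LUMBAR_DL)
metrics = per_class_metrics(CM_LUMBAR_DL, LABELS)

print(f"accuracy: {accuracy:.1%}")
for label in LABELS:
    m = metrics[label]
    print(f"{label}: sensitivity={m['sensitivity']:.3f}, specificity={m['specificity']:.3f}")
```

Because every class has the same number of samples in this matrix (234 per row), the unweighted mean of the per-class sensitivities equals the overall accuracy under these definitions.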

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, J.; Joo, H.; Lee, J.; Chee, Y. Automatic Classification of Squat Posture Using Inertial Sensors: Deep Learning Approach. Sensors 2020, 20, 361. https://doi.org/10.3390/s20020361