Abstract

Infrared detectors suffer from severe non-uniform noise, which greatly reduces image resolution and the signal-to-noise ratio (SNR) of point targets. This noise prevents airborne point target detection systems from reaching the background limit. Existing methods are either not accurate enough or too complex for engineering applications. To improve the precision and reduce the algorithmic complexity of scene-based Non-Uniformity Correction (NUC) for airborne point target detection systems, a Median-Ratio Scene-based NUC (MRSBNUC) method is proposed. The method is based on the assumption that the median value of neighboring pixels is approximately constant. The NUC coefficients are calculated recursively by selecting the median ratio of adjacent pixels. Several experiments were designed and conducted. For both the clear sky scene and the scene with clouds, the non-uniformity is effectively reduced. Furthermore, targets were detected in outfield experiments. For Target 1 (48.36 km away) and Target 2 (50.53 km away), the target SNR obtained with MRSBNUC was 2.09 and 1.73 times, respectively, that obtained with Two-Point NUC. We conclude that the MRSBNUC method effectively reduces detector non-uniformity, which leads to a longer detection distance and fewer false alarms for airborne point target detection systems.

1. Introduction

Airborne infrared small target detection systems are widely used in the military because they provide night vision, anti-concealment capability, and the ability to penetrate mist. Targets usually need to be detected as far away as possible to give early warning, so they are tiny and appear as dim point targets on the focal plane.

For airborne detection systems, the complex aerial imaging environment seriously degrades imaging quality and reduces the detection rate. First, infrared radiation is easily attenuated by the atmosphere, through absorption by atmospheric gas molecules, scattering by suspended particles, and blocking under adverse meteorological conditions. Second, both the target and the detection system are exposed to the open air, so weather, season, time of day, and clouds all affect imaging quality. Third, the imaging equipment is installed on various unmanned aerial vehicles (UAVs) or manned aircraft, and vibration during in-flight imaging severely degrades imaging resolution. All these factors may cause the infrared radiation in some scenes or regions of the image to exceed that of the local area around a small target, so that the target is drowned in a complex background. As a result, detecting small targets against a complex background remains a challenging task [1,2,3].

Infrared detectors often suffer from severe non-uniform noise caused by factors such as semiconductor materials, fabrication processes, readout circuits, and amplifier circuits [4,5]. Non-uniform noise seriously degrades the imaging quality of the system and reduces both the system resolution and the point target Signal-to-Noise Ratio (SNR); it is the bottleneck that prevents infrared point target detection systems from reaching the background limit [6,7]. Reducing non-uniform noise is therefore an urgent problem for infrared point target detection systems [8].

To address the non-uniformity of infrared detectors, researchers worldwide have conducted relevant studies and made some progress. At present, NUC methods fall into two major categories [9]: calibration-based [10,11,12] and scene-based [13,14,15] methods. The most commonly used calibration-based method is two-point Non-Uniformity Correction (TPNUC). However, it cannot meet the high-precision requirements of airborne early warning systems for two reasons: (1) the radiation response of long-wave infrared detectors drifts slowly over time; and (2) complex airborne environments cannot be simulated effectively in the laboratory. To compensate for detector drift over time, an on-board embedded blackbody calibration method has been proposed in engineering practice [16]. Because of the platform's volume and weight limits, the blackbody can only be placed in front of the secondary mirror, so the primary mirror is excluded from the calibration and the results are not reliable. In addition, imaging must be stopped during calibration, which does not meet the efficiency requirement.

There are two types of scene-based NUC methods. (1) Statistics-based methods [13,17,18,19,20] rely on spatio-temporal statistical assumptions and perform NUC by adaptively adjusting the correction coefficients. Typical examples include the constant-statistics [13,17,18], neural network, and Kalman filter methods [19,20]. Their disadvantage is that the assumptions are difficult to satisfy in some application scenarios, and they are prone to ghosting artifacts. (2) Registration-based methods [21,22,23,24,25] assume that different pixels respond identically to the same scene point within a certain period of time. However, these methods require complex registration algorithms, and registration errors easily accumulate and propagate, which makes them challenging to implement in engineering.

This research aims to improve the precision and reduce the algorithmic complexity of scene-based NUC in airborne infrared early warning systems. A statistical scene-based NUC strategy and method, Median-Ratio Scene-Based NUC (MRSBNUC), is proposed. The method is based on the assumption that neighboring pixels of an infrared detector array respond similarly within the exposure time. Its effectiveness is then verified both in simulation and in practical application to a point target detection system.

The rest of the paper is organized as follows. Section 2 introduces the proposed method and the metric used to evaluate its effect, and describes the experiments for the proposed and comparison methods. Section 3 presents the experimental results. Section 4 discusses the method's design considerations, interprets the results, and outlines future research. Section 5 concludes the paper.

2. Materials and Methods

2.1. Bad Points Replacement

Bad (blind) pixels produce fixed white and black points when imaging with an infrared focal plane array, which seriously degrades the visual quality of the image. Therefore, bad pixels must be detected and compensated whenever infrared focal plane arrays are used.

2.1.1. Bad Pixels Detection Algorithm Based on the Sliding Window

The response of each pixel, $B_{i,j}$, is defined as the time-domain average of its gray value [26]:

$$B_{i,j} = \frac{1}{k}\sum_{n=1}^{k} x_{i,j}^{n} \tag{1}$$

where $x_{i,j}^{n}$ is the gray value of the (i,j)th pixel in the n-th frame and k is the number of frames used for the calculation; based on engineering experience, we set k = 10.

Within the 3 × 3 sliding window centered on pixel (i,j), find the maximum and minimum values of B, denoted $B_{\max}$ and $B_{\min}$. Discard these two values and average the remaining seven:

$$\bar{B}_{i,j} = \frac{1}{7}\left(\sum_{p=i-1}^{i+1}\sum_{q=j-1}^{j+1} B_{p,q} - B_{\max} - B_{\min}\right) \tag{2}$$

Then express the deviation of $B_{i,j}$ from $\bar{B}_{i,j}$ as a percentage of $\bar{B}_{i,j}$:

$$\delta_{i,j} = \frac{\left|B_{i,j} - \bar{B}_{i,j}\right|}{\bar{B}_{i,j}} \times 100\% \tag{3}$$

When $\delta_{i,j} \geq 10\%$, the pixel is considered a bad point. In a matrix of the same size as the infrared focal plane array, the element at each bad-point position (i,j) is set to 1 and all other elements are set to 0. This matrix is called the bad point matrix.
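The detection steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: images are plain nested lists, Equation (3) is taken as the relative deviation of the center pixel from the trimmed window mean, and border pixels without a full 3 × 3 window are simply left unflagged (an assumption; the text does not say how edges are handled). The function names are hypothetical.

```python
from statistics import mean


def response_map(frames):
    """Eq. (1): temporal mean B[i][j] of every pixel over the k frames."""
    k = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[i][j] for f in frames) / k for j in range(cols)]
            for i in range(rows)]


def detect_bad_pixels(frames, threshold=0.10):
    """Return the 0/1 bad point matrix (1 = bad) using a 3 x 3 sliding window.

    Assumption: border pixels without a full 3 x 3 window are left as 0.
    """
    B = response_map(frames)
    rows, cols = len(B), len(B[0])
    bad = [[0] * cols for _ in range(rows)]
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            window = sorted(B[p][q] for p in range(i - 1, i + 2)
                            for q in range(j - 1, j + 2))
            trimmed = mean(window[1:-1])  # Eq. (2): drop B_min and B_max, average the other 7
            # Eq. (3): relative deviation of the centre pixel from the trimmed mean
            if trimmed and abs(B[i][j] - trimmed) / trimmed >= threshold:
                bad[i][j] = 1
    return bad


# Usage: ten uniform 5 x 5 frames with one hot pixel at (2, 2)
frames = [[[100.0] * 5 for _ in range(5)] for _ in range(10)]
for f in frames:
    f[2][2] = 150.0
bad = detect_bad_pixels(frames)
```

Because the window's single maximum is discarded before averaging, the hot pixel does not inflate the reference value of its neighbors, so only the hot pixel itself is flagged.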

2.1.2. Bad Points Compensation: Neighborhood Substitution

Each bad pixel $P_{i,j}$ detected in the original image of the current frame is replaced by the mean value of its four adjacent pixels:

$$P_{i,j} = \frac{1}{4}\left(P_{i-1,j} + P_{i+1,j} + P_{i,j-1} + P_{i,j+1}\right) \tag{4}$$
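A sketch of the neighborhood substitution in Equation (4), again on nested lists. Two choices here are assumptions not stated in the text: neighbors that fall outside the array are skipped, and neighbors that are themselves flagged are still used, exactly as Equation (4) is written. The function name is hypothetical.

```python
def replace_bad_pixels(frame, bad):
    """Eq. (4): replace each flagged pixel by the mean of its 4-connected neighbours.

    Assumptions: neighbours outside the array are skipped, and flagged
    neighbours are used as-is, as Eq. (4) is written.
    """
    rows, cols = len(frame), len(frame[0])
    out = [row[:] for row in frame]  # copy, so every replacement reads original values
    for i in range(rows):
        for j in range(cols):
            if bad[i][j]:
                neighbours = [frame[p][q]
                              for p, q in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1))
                              if 0 <= p < rows and 0 <= q < cols]
                out[i][j] = sum(neighbours) / len(neighbours)
    return out


# Usage: a 3 x 3 frame whose centre pixel is bad
frame = [[0, 10, 0],
         [30, 500, 40],
         [0, 20, 0]]
bad = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
fixed = replace_bad_pixels(frame, bad)  # fixed[1][1] == 25.0
```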

2.2. The Observation Model of Median-Ratio Scene-Based NUC (MRSBNUC)

Based on observation, the calibration model can be approximated by a simple linear model:

$$y_{i,j}^{n} = v_{i,j}\,x_{i,j}^{n} + o_{i,j} \tag{5}$$

where $y_{i,j}^{n}$ and $x_{i,j}^{n}$ are the gray values of the (i,j)th pixel in the n-th frame after and before correction, respectively, and $v_{i,j}$ and $o_{i,j}$ are the gain and offset parameters, respectively. For an infrared Focal Plane Array (FPA) of M × N pixels, $i = 1, \ldots, M$ and $j = 1, \ldots, N$.

According to Equation (5), an NUC method must solve for two parameters per pixel. This is straightforward in laboratory correction, where two reference radiance levels are available, but not in scene-based NUC, where two effective constraints are difficult to find. The model is therefore simplified to a one-point model that keeps only the more dominant coefficient, the gain $v_{i,j}$:

$$y_{i,j}^{n} = v_{i,j}\,x_{i,j}^{n} \tag{6}$$

NUC then reduces to solving for the single coefficient $v_{i,j}$ of each pixel.

2.3. MRSBNUC Method

To counter detector drift over time, a method that does not rely on pre-stored coefficients is needed; this is essential for accurate in-flight correction. The MRSBNUC method proposed here is an in-flight correction method that can be performed at any time during flight or imaging without stopping the camera. It relies on the empirical observation that adjacent pixels of airborne infrared search and track (IRST) systems usually image nearly identical scene content [27]. We therefore assume that the median value of neighboring pixels is approximately constant.

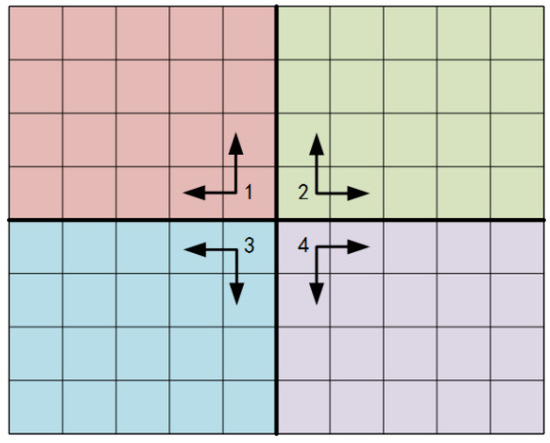

For a given pixel, the neighboring pixels are two adjacent pixels: one in the horizontal direction and one in the vertical direction. Taking the center of the FPA as the starting point, the FPA is divided into four separate sections (Figure 1). For Section 1, the starting point is at the bottom right corner, and the recursion proceeds upward and leftward. The other three sections are handled in the same way. Taking Section 2 as an example, this can be expressed as:

Figure 1.

Adjacent pixels schematic.

Substituting Equation (6) into Equation (7) gives:

Since the gain $v_{i,j}$ does not depend on the frame number n, Equation (8) can be rewritten as:

A sequence of camera frames is treated as a set of samples, one per frame. For each frame, the ratios between adjacent pixels are computed, and the median of these ratios over all frames is selected. The NUC coefficient can then be calculated with:

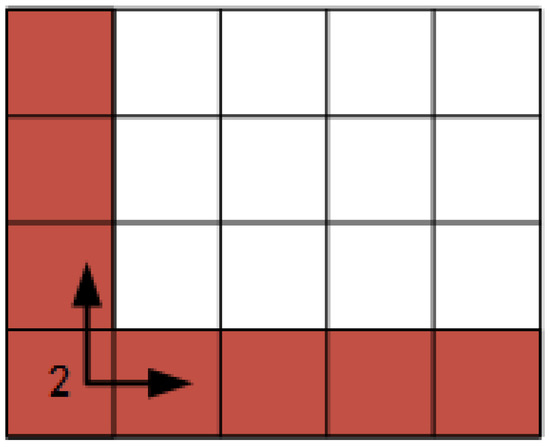

As Equation (10) shows, $v_{i,j}$ is solved recursively: calculating $v_{i,j}$ requires $v_{i-1,j}$ and $v_{i,j-1}$ to be known in advance. The recursion therefore leads back to the parameters $v_{i,1}$ and $v_{1,j}$, the NUC coefficients of the first row and first column (Figure 2, taking Section 2 as an example). Thus, solving NUC for a detector array reduces to solving NUC for two linear detectors.

Figure 2.

The problem is reduced to solving the Non-Uniformity Correction (NUC) for the first row and first column of the section.

For linear arrays, we apply the MRSBNUC method in the same way. The only difference from the area-array case is that each pixel has a single adjacent pixel, the one next to it along the line. Taking the first row (j = 1) as an example, we define:

$$y_{i,1}^{n} \approx y_{i-1,1}^{n}, \quad i = 2, \ldots, M \tag{11}$$

Accordingly, in the same way as Equation (9), we obtain:

$$v_{i,1}\,x_{i,1}^{n} = v_{i-1,1}\,x_{i-1,1}^{n}, \quad i = 2, \ldots, M \tag{12}$$

The ratio between adjacent pixels is computed by:

$$r_{i,1}^{n} = \frac{x_{i-1,1}^{n}}{x_{i,1}^{n}} \tag{13}$$

For the linear array, the median of $r_{i,1}^{n}$ over all frames is selected and denoted $\tilde{r}_{i,1}$. From Equations (12) and (13), it follows that:

$$v_{i,1} = v_{i-1,1}\,\tilde{r}_{i,1}, \quad \tilde{r}_{i,1} = \operatorname{median}_{n}\left(r_{i,1}^{n}\right) \tag{14}$$

Similarly, for the first column, the NUC coefficient can be calculated with:

$$v_{1,j} = v_{1,j-1}\,\tilde{r}_{1,j}, \quad \tilde{r}_{1,j} = \operatorname{median}_{n}\left(\frac{x_{1,j-1}^{n}}{x_{1,j}^{n}}\right), \quad j = 2, \ldots, N \tag{15}$$

Note that Equation (12) has M unknowns but only M − 1 independent equations, so its solution is not unique: multiplying every $v_{i,1}$ by the same constant still satisfies it. Because only the relative uniformity matters in this method, we set $v_{1,1} = 1$ to obtain a unique solution.
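The linear-array recursion of Equations (13) and (14) is short enough to sketch in full. This is an illustration under simple assumptions (nested lists of raw gray values, no zero-valued pixels); `linear_mrsbnuc` is a hypothetical name. The synthetic usage example gives each pixel a different responsivity and adds one frame in which a bright target falls on pixel 1; taking the median discards that frame's outlying ratio.

```python
from statistics import median


def linear_mrsbnuc(samples):
    """Gain coefficients for one line of pixels, Eqs. (13)-(14).

    samples[n][i] is the raw gray value of pixel i in frame n (non-zero).
    Returns v with v[0] = 1, i.e. the normalisation v_{1,1} = 1.
    """
    length = len(samples[0])
    v = [1.0]
    for i in range(1, length):
        # Eq. (13): ratio of the previous pixel to the current one, frame by frame
        ratios = [frame[i - 1] / frame[i] for frame in samples]
        # Eq. (14): propagate the gain with the median ratio
        v.append(v[-1] * median(ratios))
    return v


# Usage: pixel responsivities 1, 2, 0.5, 4; four clean frames plus one frame
# in which a bright target falls on pixel 1 (raw value 500 instead of 100).
samples = [[10.0, 20.0, 5.0, 40.0],
           [20.0, 40.0, 10.0, 80.0],
           [30.0, 60.0, 15.0, 120.0],
           [40.0, 80.0, 20.0, 160.0],
           [50.0, 500.0, 25.0, 200.0]]
v = linear_mrsbnuc(samples)  # [1.0, 0.5, 2.0, 0.25]
corrected = [[g * x for g, x in zip(v, frame)] for frame in samples]
```

A mean of the ratios would have been pulled toward the target frame; the median keeps the estimate at the value shared by the clean frames.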

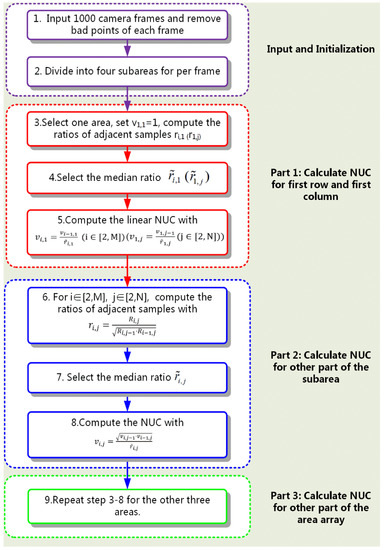

In summary, the procedure of the MRSBNUC method is shown in Figure 3. As Figure 3 shows, the method requires only 1000 camera frames and simple multiplication and division operations, and it estimates only one NUC parameter, the gain. Traditional scene-based methods [17,18,19,20,21,22,23,24,25] must estimate two parameters (the gain and the bias) and require 10,000 images or a complicated motion estimation or registration algorithm; by comparison, the MRSBNUC method significantly reduces the complexity of scene-based NUC.
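The procedure for one section can be sketched as follows. The first row and column use the linear recursion of Equations (14) and (15). For interior pixels, the sketch assumes that the two estimates propagated from the vertical and horizontal neighbors are simply averaged; this combination rule is an assumption and may differ from Equation (10). `split_quadrants` shows one way to cut the FPA into four sections so that each section's local (0, 0) is the pixel nearest the array center; the ordering of the sections is also an assumption, and both function names are hypothetical.

```python
from statistics import median


def split_quadrants(frame):
    """Cut a frame into four sections whose [0][0] pixel is nearest the FPA centre."""
    r, c = len(frame) // 2, len(frame[0]) // 2
    top = frame[:r][::-1]  # reversed so that row 0 borders the centre
    bottom = frame[r:]
    return [[row[:c][::-1] for row in top],     # upper left
            [row[c:] for row in top],           # upper right
            [row[:c][::-1] for row in bottom],  # lower left
            [row[c:] for row in bottom]]        # lower right


def section_mrsbnuc(frames):
    """Gain map for one section; frames[n][i][j] uses the section's local indices."""
    rows, cols = len(frames[0]), len(frames[0][0])

    def med_ratio(p, q, i, j):
        # median over all frames of x[p][q] / x[i][j]
        return median([f[p][q] / f[i][j] for f in frames])

    v = [[0.0] * cols for _ in range(rows)]
    v[0][0] = 1.0  # normalisation, v_{1,1} = 1
    for i in range(1, rows):  # first line along one axis, as in Eqs. (14)-(15)
        v[i][0] = v[i - 1][0] * med_ratio(i - 1, 0, i, 0)
    for j in range(1, cols):  # first line along the other axis
        v[0][j] = v[0][j - 1] * med_ratio(0, j - 1, 0, j)
    for i in range(1, rows):
        for j in range(1, cols):
            # ASSUMPTION: average the estimates propagated from the two neighbours
            via_i = v[i - 1][j] * med_ratio(i - 1, j, i, j)
            via_j = v[i][j - 1] * med_ratio(i, j - 1, i, j)
            v[i][j] = 0.5 * (via_i + via_j)
    return v


# Usage: a 2 x 3 section with known responsivities a, three scene levels
a = [[1.0, 2.0, 4.0], [0.5, 1.0, 2.0]]
frames = [[[a[i][j] * s for j in range(3)] for i in range(2)] for s in (10.0, 20.0, 30.0)]
v = section_mrsbnuc(frames)  # v[i][j] * a[i][j] is approximately 1 for every pixel
```

To process a full FPA, each frame would be split with `split_quadrants` and the k-th sections of all frames passed to `section_mrsbnuc`; the four gain maps are then mapped back by reversing the same slicing.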

Figure 3.

Procedure of MRSBNUC method.

2.4. Uniformity Evaluation

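Uniformity is usually characterized by the global standard deviation (STD) of the detector's gray values. However, for an IRST, and especially for point target detection, it is the target SNR that determines whether a target can be detected, and that SNR depends on the noise in the target's immediate neighborhood. Global uniformity is therefore less informative than local STD, so the local STD over a 5 × 5 window is used to evaluate uniformity. It is defined as:

$$\sigma_{i,j} = \sqrt{\frac{1}{25}\sum_{p=-2}^{2}\sum_{q=-2}^{2}\left(y_{i+p,j+q} - \mu\right)^{2}} \tag{16}$$

where μ is the arithmetic mean of the 5 × 5 pixels:

$$\mu = \frac{1}{25}\sum_{p=-2}^{2}\sum_{q=-2}^{2} y_{i+p,j+q} \tag{17}$$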
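The mean local STD reported in Section 3 can be computed along these lines. This sketch assumes the population form (dividing by 25) and evaluates only windows that lie entirely inside the image; the text does not specify either detail, and `local_std_mean` is a hypothetical name.

```python
from math import sqrt


def local_std_mean(img, half=2):
    """Mean of the local STD over (2*half+1) x (2*half+1) windows, Eqs. (16)-(17).

    half=2 gives the 5 x 5 window. Assumptions: population STD (divide by 25)
    and only windows that fit entirely inside the image are evaluated.
    """
    rows, cols = len(img), len(img[0])
    stds = []
    for i in range(half, rows - half):
        for j in range(half, cols - half):
            win = [img[p][q] for p in range(i - half, i + half + 1)
                   for q in range(j - half, j + half + 1)]
            mu = sum(win) / len(win)  # Eq. (17)
            stds.append(sqrt(sum((x - mu) ** 2 for x in win) / len(win)))  # Eq. (16)
    return sum(stds) / len(stds)


# Usage: a flat image has zero local STD; a 0/1 checkerboard does not.
flat = [[7.0] * 8 for _ in range(8)]
board = [[(i + j) % 2 for j in range(5)] for i in range(5)]
flat_std, board_std = local_std_mean(flat), local_std_mean(board)
```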

2.5. Experiments

To verify the effectiveness of the proposed NUC method, several experiments were designed. The two-point (TP) NUC method is commonly used in engineering, so a laboratory calibration was first conducted to provide a baseline for comparison.
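For reference, the textbook form of two-point correction, which maps each pixel's responses at two blackbody temperatures onto the frame means, can be sketched as below. The text does not give the exact TP NUC formulas used in the experiments, so treat this as the common formulation rather than the authors' code; the function names are hypothetical. In the synthetic usage example the detector is perfectly linear, so the corrected image is flat even at a radiance outside the calibration pair; real detectors deviate from this ideal.

```python
from statistics import mean, pstdev


def two_point_coefficients(r1, r2):
    """Per-pixel gain and offset mapping R1 and R2 onto their frame means."""
    m1 = mean(x for row in r1 for x in row)
    m2 = mean(x for row in r2 for x in row)
    gain = [[(m2 - m1) / (b - a) for a, b in zip(row1, row2)]
            for row1, row2 in zip(r1, r2)]
    offset = [[m1 - g * a for g, a in zip(grow, row1)]
              for grow, row1 in zip(gain, r1)]
    return gain, offset


def apply_two_point(r3, gain, offset):
    """Corrected image: gain * raw + offset, pixel by pixel."""
    return [[g * x + o for g, x, o in zip(grow, row, orow)]
            for grow, row, orow in zip(gain, r3, offset)]


def global_std(img):
    """Global STD of all pixels, as used for the laboratory comparison."""
    return pstdev([x for row in img for x in row])


# Usage: a perfectly linear 2 x 2 detector, raw = a * L + b for each pixel
a = [[1.0, 2.0], [0.5, 1.5]]
b = [[0.0, 10.0], [5.0, -5.0]]


def raw(radiance):
    return [[ai * radiance + bi for ai, bi in zip(ar, br)] for ar, br in zip(a, b)]


gain, offset = two_point_coefficients(raw(100.0), raw(200.0))
r3 = apply_two_point(raw(300.0), gain, offset)  # every pixel close to 377.5
```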

2.5.1. Laboratory Calibration

A FLIR HgCdTe Long-Wave Infrared (LWIR) detector was used in the experiments. The parameters of the IR system are listed in Table 1.

Table 1.

Parameters of the experimental IR system.

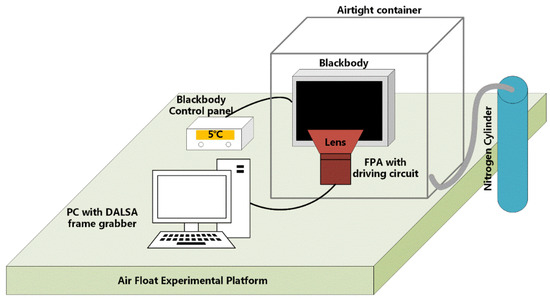

The schematic diagram of the laboratory calibration experiment is shown in Figure 4. All devices are placed on a vibration isolation platform. A CI blackbody with a controller is located directly in front of the IR system (infrared detector, optical lens, and driving circuits). The camera is linked to a DALSA frame grabber in a PC through a CameraLink cable.

Figure 4.

Schematic diagram of the laboratory calibration experiment.

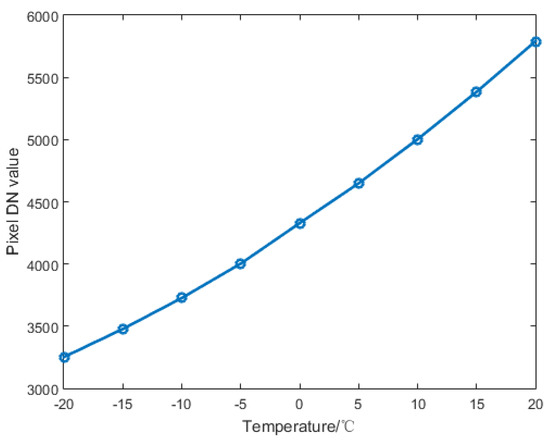

The integration time of the IR system is set to 300 μs. The blackbody temperature is varied from −20 to +20 °C in 5 °C steps to change the radiance incident on the IR detector. Image data for each temperature are stored on the PC through the frame grabber. The relationship between the average gray value of the image and the blackbody temperature is shown in Figure 5.

Figure 5.

Relationship between Digital Number (DN) value of the image and the black body temperature.

The TP NUC method was applied to the data collected in the laboratory. Because this was a laboratory test, the global STD could be used to indicate the uniformity of the IR system. The images used to calculate the NUC parameters are denoted R1 and R2, and the corresponding blackbody temperatures T1 and T2, respectively. The image to be corrected is denoted R3, and its temperature T3. Two different TP NUC experiments were conducted:

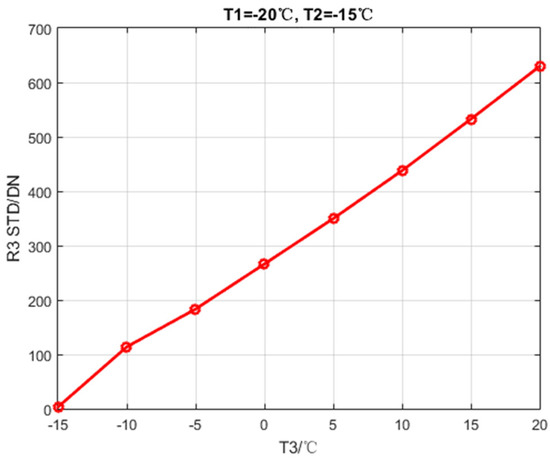

- Experiment 1: T3 Falls Outside the Range of T1 and T2

Set T1 to −20 °C and T2 to −15 °C, and increase T3 from −15 to 20 °C in 5 °C steps. Then calculate the global STD of R3. The calibration data are listed in Table 2. The global STD after NUC is shown in Figure 6.

Table 2.

Two-point Non-Uniformity Correction (TP NUC) statistics of experiment 1.

Figure 6.

Global STD after NUC changes with T3.

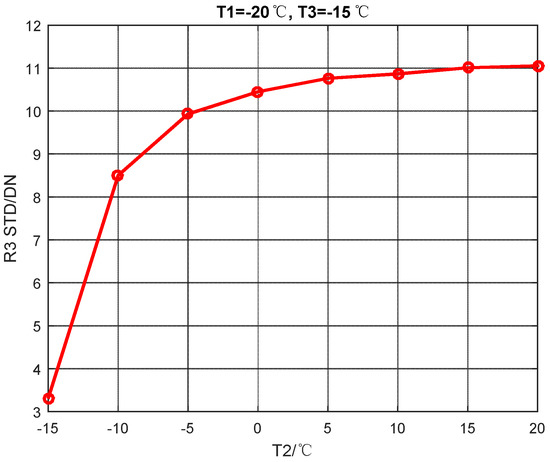

- Experiment 2: T3 Falls between T1 and T2

Set T1 to −20 °C and T3 to −15 °C, and increase T2 from −15 to 20 °C in 5 °C steps. Then calculate the global STD of R3. The calibration data are listed in Table 3. The global STD after NUC is shown in Figure 7.

Table 3.

TP NUC statistics of experiment 2.

Figure 7.

Global STD after NUC changes with T2.

2.5.2. Scene-Based Experiment

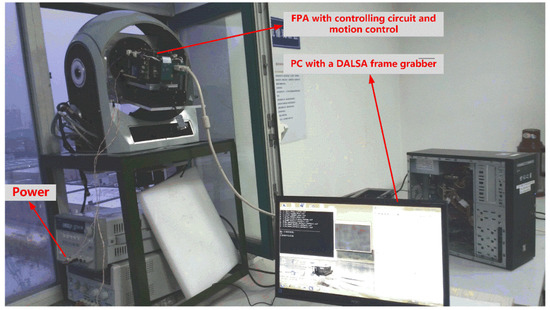

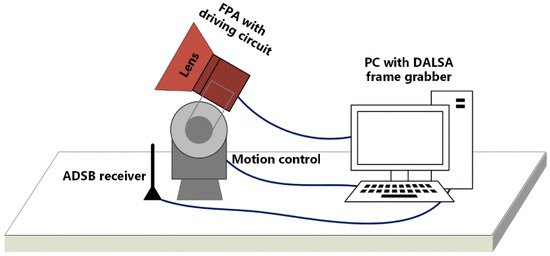

To verify the validity of the method, an optical imaging platform was set up that integrates the long-wave infrared camera with a turntable system. In addition to collecting image data, an industrial computer controlled the rotation speed and direction of the camera through an RS422 serial port to simulate the working mode of the detection system. The integration time was set to 300 μs, consistent with the laboratory calibration, to facilitate comparison of the two NUC methods. The actual experimental platform is shown in Figure 8.

Figure 8.

Experimental devices of scene-based NUC calibration.

The experimental process is as follows. First, adjust the camera height and elevation angle to align the camera with the scene outside the window. Second, rotate the camera in azimuth at a constant speed to simulate the sweeping mode of the airborne infrared point target detection system, and then start image acquisition. The scene is continuously captured through the CameraLink interface, and more than 1500 raw image frames are stored on the computer.

To exclude the influence of detector drift over time, weather, season, and other factors on the results, the laboratory calibration and the scene-based experiment were performed in the same week. Since the IRST is intended for air-to-air small target detection, the experiment focuses on sky backgrounds. Two sets of scene image data were stored: a clear sky scene and a sky scene with clouds. The collected scene data are corrected with both the TP method and the proposed MRSBNUC method, and the correction results are compared and analyzed.

2.5.3. Target Detection

To verify the effectiveness of the method in actual target detection, an experiment was designed to detect airliners departing from our local international airport. The schematic diagram of the experimental platform is shown in Figure 9. The IR imaging system was mounted on a motion control system so that the angle of the optical window could be adjusted as required. An ADSB receiver (GNS 5890) was placed as close as possible to the IR imaging system. The ADSB receiver helped us locate the airliners (actual targets) manually in the image sequence, and the distance between the imaging system and each target can also be calculated from the ADSB data. The imaging system, motion control system, and ADSB receiver are connected to a PC through CameraLink, Ethernet, and USB connections, respectively. All devices were placed on the roof of a building.

Figure 9.

Schematic diagram of the target detection experiment.

The experimental process is as follows. First, adjust the camera height and elevation angle to align the camera with the sky background and the area where targets are likely to pass. Second, set up the ADSB receiver and display all its data (location, velocity, identification of the airliners, etc.) in the ADSB Scope software on the PC screen. Third, rotate the camera in azimuth at a constant speed to simulate the sweeping mode of the airborne infrared point target detection system, and then start image acquisition. The scene is continuously captured through the CameraLink interface, and the raw image data are stored on the computer. Fourth, find the frames with airliners in the field of view (FOV). Fifth, calculate the target SNR after both TPNUC and MRSBNUC.

3. Results

3.1. Clear Sky Scene

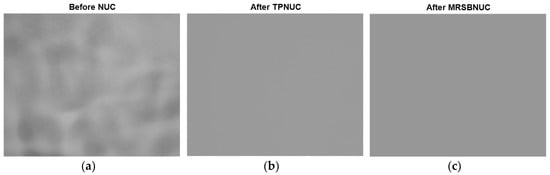

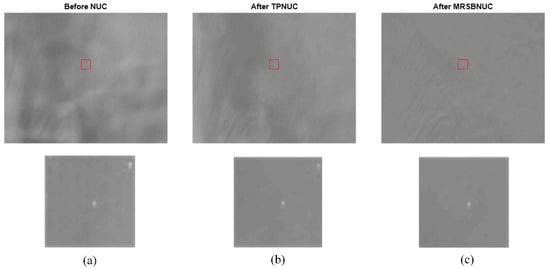

Taking one frame as an example, the original and corrected images are shown in Figure 10. As Figure 10a shows, the original image has strong non-uniformity, which makes it uneven. Both TPNUC and MRSBNUC improve the uniformity of the original image effectively, and no ghosting is visible in the corrected images. However, some noise remains in Figure 10b, and the correction in Figure 10c is better than that in Figure 10b.

Figure 10.

Clear sky scenes: (a) before NUC, (b) after TPNUC and (c) after MRSBNUC.

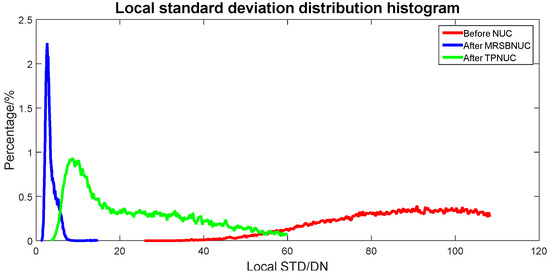

To evaluate the correction quantitatively, the local (5 × 5 pixels) STD distributions and cumulative distributions are calculated and shown in Figure 11 and Figure 12. The mean 5 × 5 local STDs of the scene before NUC, after TPNUC, and after MRSBNUC are 109.8, 39.9, and 5.2, respectively. This indicates that, for clear sky scenes, MRSBNUC performs much better than TPNUC.

Figure 11.

Local standard deviation distribution of clear sky scene: before NUC, after TPNUC, and after MRSBNUC.

Figure 12.

Local standard deviation cumulative distribution of clear sky scene: before NUC, after TPNUC, and after MRSBNUC.

3.2. Sky Scene with Clouds

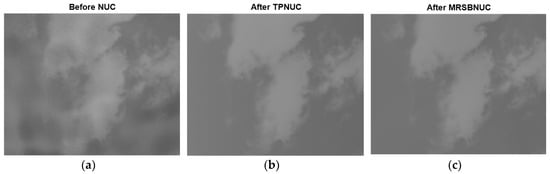

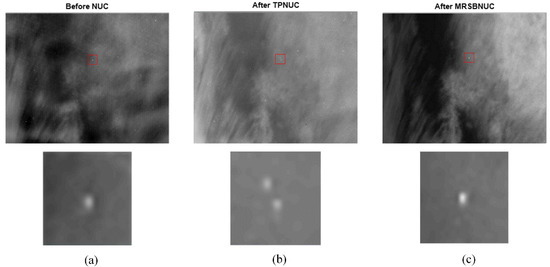

For the sky scene with clouds, again taking one frame as an example, the original and corrected images are shown in Figure 13. As in Figure 10, Figure 13a shows that the original image has strong non-uniformity, which makes it uneven. Both TPNUC and MRSBNUC improve the uniformity of the original image effectively, and no ghosting is visible in the corrected images. However, some noise remains in Figure 13b, and the correction in Figure 13c is better than that in Figure 13b.

Figure 13.

Sky scene with clouds: (a) before NUC, (b) after TPNUC and (c) after MRSBNUC.

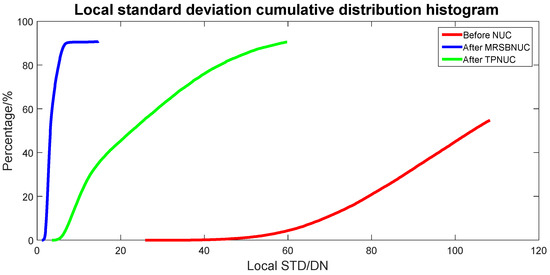

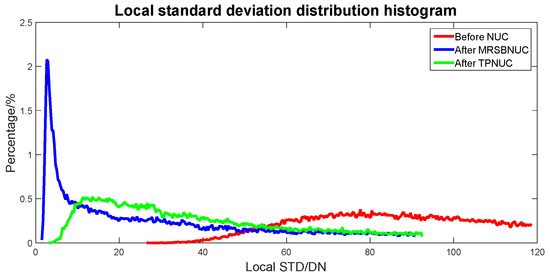

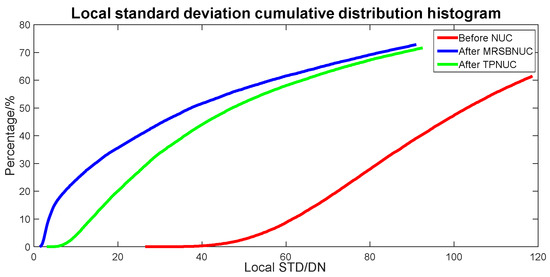

To evaluate the correction quantitatively, the local (5 × 5 pixels) STD distributions and cumulative distributions are calculated and shown in Figure 14 and Figure 15. The mean 5 × 5 local STDs of the scene before NUC, after TPNUC, and after MRSBNUC are 118, 75.4, and 62.3, respectively. This indicates that, for sky scenes with clouds, MRSBNUC also performs better than TPNUC.

Figure 14.

Local standard deviation distribution of sky scene with clouds: before NUC, after TPNUC, and after MRSBNUC.

Figure 15.

Local standard deviation cumulative distribution of sky scene with clouds: before NUC, after TPNUC, and after MRSBNUC.

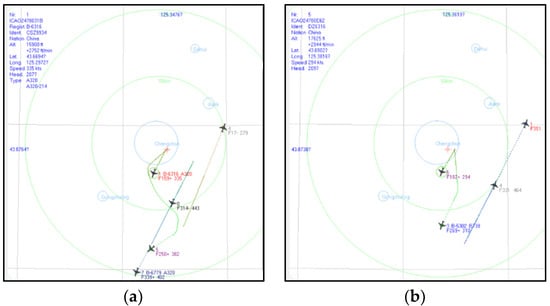

3.3. Target Detection

Figure 16 is a screenshot of the ADSB Scope software. Targets could be found manually based on the ADSB data, and the distance between a target and the IR system can be calculated from their geodetic coordinates. The experiment was repeated on different dates, and several targets were captured. To demonstrate the effectiveness of the algorithm, two representative targets in cloudy scenes were selected from these. In Figure 16a, Target 1, Airline 0 from Korea, was detected 48.36 km from the IR system. In Figure 16b, Target 2, Airline 4 from China, was detected 50.53 km from the IR system.

Figure 16.

ADSB receiver data shown on ADSB Scope Software: (a) Target 1 Airline 0 from Korea was detected; (b) Target 2 Airline 4 from China was detected.
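The target distances quoted above can be obtained by converting the geodetic coordinates of the IR system and of the aircraft to Earth-centered, Earth-fixed (ECEF) Cartesian coordinates on the WGS-84 ellipsoid and taking the straight-line distance. This is the standard conversion, not necessarily the exact procedure used with ADSB Scope. The sketch assumes latitude and longitude in degrees and heights in meters above the ellipsoid; ADSB altitudes are usually reported in feet and are typically barometric, so they would need converting first. The function names are hypothetical.

```python
from math import radians, sin, cos, sqrt

WGS84_A = 6378137.0                 # semi-major axis (m)
WGS84_F = 1 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2 - WGS84_F)  # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, h_m):
    """WGS-84 geodetic (deg, deg, m above ellipsoid) to ECEF (m)."""
    lat, lon = radians(lat_deg), radians(lon_deg)
    n = WGS84_A / sqrt(1 - WGS84_E2 * sin(lat) ** 2)  # prime vertical radius of curvature
    x = (n + h_m) * cos(lat) * cos(lon)
    y = (n + h_m) * cos(lat) * sin(lon)
    z = (n * (1 - WGS84_E2) + h_m) * sin(lat)
    return x, y, z


def slant_range_km(site, target):
    """Straight-line distance between two (lat, lon, height) points, in km."""
    p, q = geodetic_to_ecef(*site), geodetic_to_ecef(*target)
    return sqrt(sum((a - b) ** 2 for a, b in zip(p, q))) / 1000.0


# Usage: a point 1 km straight above another on the equator
d = slant_range_km((0.0, 0.0, 0.0), (0.0, 0.0, 1000.0))  # 1.0
```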

For scenes with a target in the FOV, again taking one frame as an example, the original and corrected images of Target 1 and Target 2 are shown in Figure 17 and Figure 18. In Figure 17, the mean 5 × 5 local STDs of the scene before NUC, after TPNUC, and after MRSBNUC are 118.2, 78.2, and 52.6, respectively; compared with TPNUC, MRSBNUC reduces the mean local STD by a factor of 1.49. In Figure 18, the corresponding values are 123.5, 80.1, and 52.4, a reduction by a factor of 1.53 relative to TPNUC.

Figure 17.

Target 1: (a) before NUC, (b) after TPNUC and (c) after MRSBNUC.

Figure 18.

Target 2: (a) before NUC, (b) after TPNUC and (c) after MRSBNUC.

The signal-to-noise ratio (SNR) is often used to evaluate detection results. The image SNR is defined as [28,29]:

$$\mathrm{SNR} = \frac{\mu_t - \mu_b}{\sigma_b} \tag{18}$$

where $\mu_t$ and $\mu_b$ are the gray values of the detection units corresponding to the target and the local background area, respectively, and $\sigma_b$ is the noise standard deviation of the local background. In decibels, it can be written as:

$$\mathrm{SNR}_{\mathrm{dB}} = 20\log_{10}\left(\frac{\mu_t - \mu_b}{\sigma_b}\right) \tag{19}$$

For an infrared point target, the SNR can be calculated with [28]:

$$\mathrm{SNR} = \frac{S - B}{\sigma} \tag{20}$$

where S is the gray value of the target, B is the mean gray value of the background area, and σ is the noise standard deviation.
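Equation (20) can be evaluated as below. The text does not specify the background region, so the sketch assumes a square ring around the target (pixels whose Chebyshev distance from the target is greater than `inner` and at most `outer`) and uses the peak pixel as S; these window sizes are hypothetical parameters, and the target is assumed to lie at least `outer` pixels from the image border. The decibel helper uses 20 log10, the usual convention for an amplitude ratio.

```python
from math import log10
from statistics import mean, pstdev


def point_target_snr(img, ti, tj, inner=1, outer=5):
    """Eq. (20): (S - B) / sigma for a point target at (ti, tj)."""
    # Hypothetical background: square ring with inner < Chebyshev distance <= outer
    background = [img[p][q]
                  for p in range(ti - outer, ti + outer + 1)
                  for q in range(tj - outer, tj + outer + 1)
                  if max(abs(p - ti), abs(q - tj)) > inner]
    s = img[ti][tj]                      # S: peak gray value of the target
    b = mean(background)                 # B: mean background gray value
    return (s - b) / pstdev(background)  # sigma: background noise STD


def snr_db(snr):
    """Amplitude-ratio convention: 20 log10(SNR)."""
    return 20 * log10(snr)


# Usage: 11 x 11 background alternating 100/102 (STD 1) with a target of 151
img = [[100 + 2 * ((p + q) % 2) for q in range(11)] for p in range(11)]
img[5][5] = 151
snr = point_target_snr(img, 5, 5)  # (151 - 101) / 1 = 50.0
```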

The target SNRs before NUC, after TPNUC, and after MRSBNUC for Target 1 and Target 2 are listed in Table 4. For Target 1, the SNR increases by factors of 1.88 and 3.93 after TPNUC and MRSBNUC, respectively; for Target 2, it increases by factors of 1.60 and 2.76. Compared with TPNUC, which is commonly used in engineering, MRSBNUC increases the target SNR by factors of 2.09 and 1.73 for Target 1 and Target 2, respectively. Therefore, MRSBNUC outperforms TPNUC in target detection.

Table 4.

SNR before NUC, after TPNUC and MRSBNUC for Target 1 and Target 2.

4. Discussion

4.1. Why Does the MRSBNUC Method Calculate from the Center of the Image (Divided into Four Regions)?

As Equation (10) shows, the method is recursive, so errors and uncertainty accumulate and propagate during the calculation. We start the NUC calculation from the center of the image to limit this accumulation. Even with this strategy, the estimated coefficients may drift slowly from the center toward the edges of the image because of statistical noise across thousands of samples. The NUC can therefore be accurate locally but inaccurate globally, depending on the data collected. Since this paper aims to improve the detection rate of point targets, accurate local NUC is sufficient and practical. For scenes that require high global uniformity, however, this method may not be effective.

4.2. Interpretation of Results

The range R at which a small target can be detected, based on the signal-to-noise ratio, is calculated with:

$$R = \left[\frac{A_t A_o D^{*} \tau_a \left(L_t - L_b\right)}{\mathrm{SNR}_{th}\sqrt{A_d \Delta f}}\right]^{1/2} \tag{21}$$

where $D^{*}$ is the specific detectivity of the detector; $A_t$, $A_o$, and $A_d$ are the areas of the target's radiating surface, the entrance pupil, and the detector pixel, respectively; $\Delta f$ is the effective noise bandwidth of the detector's amplifier; $\tau_a$ is the atmospheric spectral transmittance; $L_t$ and $L_b$ are the radiances of the target and the uniform background, respectively; and $\mathrm{SNR}_{th}$ is the minimum SNR required for a given detection probability.
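Equation (21) follows from setting the target signal power collected by the aperture, $A_o \tau_a A_t (L_t - L_b)/R^2$, equal to $\mathrm{SNR}_{th}$ times the detector's noise-equivalent power, $\sqrt{A_d \Delta f}/D^{*}$. The helper below simply evaluates it; all inputs must be in consistent units, the numbers in the usage lines are purely illustrative (not taken from the experiments), and the function name is hypothetical. The usage lines check the scaling implied by the square root: quadrupling the radiance contrast doubles the range.

```python
from math import sqrt


def detection_range(d_star, a_t, a_o, a_d, delta_f, tau_a, l_t, l_b, snr_th):
    """Eq. (21): range at which the point-target SNR drops to snr_th.

    Inputs must use consistent units (e.g. SI, with D* in m Hz^0.5 / W);
    no unit conversion is performed.
    """
    signal_term = a_t * a_o * d_star * tau_a * (l_t - l_b)
    noise_term = snr_th * sqrt(a_d * delta_f)
    return sqrt(signal_term / noise_term)


# Usage with purely illustrative numbers
base = dict(d_star=1e8, a_t=10.0, a_o=0.01, a_d=(30e-6) ** 2, delta_f=1e3,
            tau_a=0.5, l_t=2.0, l_b=1.0, snr_th=5.0)
r0 = detection_range(**base)
r1 = detection_range(**{**base, "l_t": 5.0})  # contrast 1 -> 4 doubles the range
```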

Section 3 shows that, for both the clear sky scene and the sky scene with clouds, MRSBNUC outperforms TPNUC, which is commonly used in engineering. For the clear sky scene, the mean uniformity (local STD) decreased from 39.9 to 5.2, a reduction by a factor of 7.7. As Table 2 and Table 3 show, the background limit is 3.3 (when both the calibration and correction temperatures are −15 °C). Note that after MRSBNUC, the uniformity of the image approaches its background limit. According to Equation (21), the SNR accordingly increases by a factor of 59.3 and the detection range by a factor of 7.7.

For cloudy scenes, the mean uniformity (local STD) decreased from 75.4 (TPNUC) to 62.3 (MRSBNUC), a reduction by a factor of 1.2. The corresponding SNR increases by a factor of 1.46, an improvement of nearly 50%, and according to Equation (21), the detection distance increases by 20%. Thus, for scenes with heavy clouds, although the NUC effect is not as good as for the clear sky scene, MRSBNUC still extends the target detection range and reduces the false alarm rate. In summary, the detection distance of the system can be increased by a factor of 1.2–7.7, depending on the sky background.

In the actual target detection experiment, the images in Figure 17 and Figure 18 look different because they were captured under different weather conditions and at different times. On close inspection, the small blob in the upper right corner of the inset in Figure 17a,b does not appear in Figure 17c, and Figure 18b contains an extra blob besides the target that does not appear in Figure 18a,c. These differences arise because TPNUC relies on laboratory calibration data: the detector response drifts over time, and sky background environments are hard to simulate precisely in the laboratory. Consequently, when TPNUC is applied to actual target detection images, some noise and non-uniformity may remain uncorrected, as in Figure 17b, or new noise may be introduced into the original image, as in Figure 18b. Neither effect appears in Figure 17c or Figure 18c, which further supports the conclusion that MRSBNUC performs better than TPNUC.

Furthermore, compared with TPNUC, the SNRs of the two representative targets after MRSBNUC increase by factors of 2.09 and 1.73, respectively, so the detection range for these two actual targets also increases. These results show that the proposed MRSBNUC method effectively improves the uniformity of IR images and extends the detection distance.

4.3. Future Research

Based on the results and discussion above, future research should address error propagation and the correction of heavy-cloud scenes. Finding a universal scene-based correction method suitable for various scenes is an important goal.

5. Conclusions

In this work, a Median-Ratio Scene-based NUC method was proposed for airborne infrared point target detection systems. The method is based on a simple assumption that is commonly accepted for digital images. It can be applied at any time during flight without interrupting the imaging process. Experiments showed that the proposed method effectively reduces the non-uniformity of target detection images, which leads to a higher target SNR and a longer detection range. Besides its better correction effect, the proposed method also significantly reduces algorithmic complexity in terms of the number of parameters, the algorithm process, and the amount of input data required, making it suitable for engineering applications.

Author Contributions

Conceptualization, T.Z.; Funding acquisition, D.W.; Methodology, D.W.; Software, S.D.; Validation, S.D. and D.W.; Writing—original draft, S.D.; Writing—review & editing, T.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 61675202.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Qi, S.X.; Ming, D.L.; Ma, J.; Sun, X.; Tian, J.W. Robust method for infrared small-target detection based on Boolean map visual theory. Appl. Opt. 2014, 53, 3929–3940. [Google Scholar] [CrossRef] [PubMed]

- Zhang, T.; Cui, C.; Fu, W.; Huang, H.; Cheng, H. Improved small moving target detection method in infrared sequences under a rotational background. Appl. Opt. 2018, 57, 9279–9286. [Google Scholar]

- Liu, R.; Wang, D.J.; Jia, P.; Sun, H. An omnidirectional morphological method for aerial point target detection based on infrared dual-band model. Remote Sens. 2018, 10, 1054. [Google Scholar] [CrossRef]

- Zhang, Q.; Qin, H.; Yan, X.; Yang, S.; Yang, T. Single infrared image-based stripe nonuniformity correction via a two-stage filtering method. Sensors 2018, 18, 4299. [Google Scholar] [CrossRef]

- Wu, Z.M.; Wang, X. Non-uniformity correction for medium wave infrared focal plane array-based compressive imaging. Opt. Express 2020, 28, 8541–8559. [Google Scholar] [CrossRef] [PubMed]

- Huo, L.; Zhou, D.; Wang, D.; Liu, R.; He, B. Staircase-scene-based nonuniformity correction in aerial point target detection systems. Appl. Opt. 2016, 55, 7149–7156. [Google Scholar] [CrossRef]

- Wang, E.D.; Jiang, P.; Li, X.P.; Cao, H. Infrared stripe correction algorithm based on wavelet decomposition and total variation-guided filtering J. Eur. Opt. Soc. Rapid Publ. 2020, 16, 1. [Google Scholar] [CrossRef]

- Zhou, D.; Wang, D.; Huo, L.; Liu, R.; Jia, P. Scene-based nonuniformity correction for airborne point target detection systems. Opt. Express 2017, 25, 14210–14226. [Google Scholar] [CrossRef]

- Chen, Q. The Status and Development Trend of Infrared Image Processing Technology. Infrared Technol. 2013, 35, 311–318. [Google Scholar]

- Dereniak, E.L. Linear theory of nonuniformity correction in infrared staring sensors. Opt. Eng. 1993, 32, 1854–1859.

- Wang, Y.; Chen, J.; Liu, Y.; Xue, Y. Study on two-point multi-section IRFPA nonuniformity correction algorithm. J. Infrared Millim. Waves 2003, 22, 415–418.

- Zhou, H.; Liu, S.; Lai, R.; Wang, D.; Cheng, Y. Solution for the nonuniformity correction of infrared focal plane arrays. Appl. Opt. 2005, 44, 2928–2932.

- Harris, J.G.; Chiang, Y.M. Nonuniformity correction of infrared image sequences using the constant-statistics constraint. IEEE Trans. Image Process. 1999, 8, 1148–1151.

- Scribner, D.A.; Sarkady, K.A.; Kruer, M.R.; Caulfield, J.T.; Hunt, J.D.; Herman, C. Adaptive nonuniformity correction for IR focal-plane arrays using neural networks. In Proceedings of the Infrared Sensors: Detectors, Electronics, and Signal Processing, San Diego, CA, USA, 24–26 July 1991; International Society for Optics and Photonics: San Diego, CA, USA, 1991; pp. 100–110.

- Torres, S.N.; Hayat, M.M. Kalman filtering for adaptive nonuniformity correction in infrared focal-plane arrays. J. Opt. Soc. Am. A 2003, 20, 470–480.

- Sun, Z.; Chang, S.; Zhu, W. Radiometric calibration method for large aperture infrared system with broad dynamic range. Appl. Opt. 2015, 54, 4659–4666.

- Lv, B.L.; Tong, S.F.; Liu, Q.Y.; Sun, H.J. Statistical scene-based non-uniformity correction method with interframe registration. Sensors 2019, 19, 5395.

- Qian, W.; Chen, Q.; Bai, J.; Gu, G. Adaptive convergence nonuniformity correction algorithm. Appl. Opt. 2011, 50, 1–10.

- Hardie, R.C.; Baxley, F.; Brys, B.; Hytla, P.C. Scene-based nonuniformity correction with reduced ghosting using a gated LMS algorithm. Opt. Express 2009, 17, 14918–14933.

- Leng, H.; Yi, B.; Xie, Q.; Tang, L.; Gong, Z. Adaptive nonuniformity correction for infrared images based on temporal moment matching. Acta Opt. Sin. 2015, 35, 0410003.

- Boutemedjet, A.; Deng, C.; Zhao, B. Robust approach for nonuniformity correction in infrared focal plane array. Sensors 2016, 16, 1890.

- Li, Y.; Jin, W.; Zhu, J.; Zhang, X.; Li, S. An adaptive deghosting method in neural network-based infrared detectors nonuniformity correction. Sensors 2018, 18, 211.

- Hardie, R.C.; Hayat, M.M.; Armstrong, E.; Yasuda, B. Scene-based nonuniformity correction with video sequences and registration. Appl. Opt. 2000, 39, 1241–1250.

- Zuo, C.; Chen, Q.; Gu, G.; Sui, X. Scene-based nonuniformity correction algorithm based on interframe registration. J. Opt. Soc. Am. A 2011, 28, 1164–1176.

- Zeng, J.; Sui, X.; Gao, H. Adaptive image-registration-based nonuniformity correction algorithm with ghost artifacts eliminating for infrared focal plane arrays. IEEE Photonics J. 2015, 7, 1–16.

- Dai, S.; Li, J.; Zhang, T.; Huang, J. Blind points detection and compensation. In Infrared Focal Plane Array Imaging and Its Non-Uniformity Correction Technology, 1st ed.; Yanfen, Z., Ed.; Science Press: Beijing, China, 2015; Volume 3, pp. 96–105. (In Chinese)

- Leathers, R.A.; Downes, T.V.; Priest, R.G. Scene-based nonuniformity corrections for optical and SWIR pushbroom sensors. Opt. Express 2005, 13, 5136–5150.

- Wang, X.; Wang, C.; Zhang, Y. Research on SNR of Point Target Image. Electron. Opt. Control 2010, 17, 18–21. (In Chinese)

- Liu, R.; Wang, D.; Zhang, L.; Zhou, D.; Jia, P.; Ding, P. Non-uniformity correction and point target detection based on gradient sky background. J. Jilin Univ. Eng. Technol. Ed. 2017, 47, 1625–1633. (In Chinese)

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).