In this section, a novel adaptive CKF is proposed to solve the estimation problem with uncertain noise covariance matrices. Without loss of generality, the method is introduced for the standard nonlinear model with nonlinear state and measurement functions.

3.1. Gaussian Kalman Filter and Cubature Kalman Filter

The Gaussian filter is the main approach to nonlinear estimation. It rests on two key assumptions: the one step predicted PDFs of the state and of the measurement are both Gaussian, i.e.,

where

and

denote the mean and variance of

.

where

and

denote the mean and variance of

.

Accordingly, the joint one step predicted PDF of the state and measurement

is also taken to be Gaussian, i.e.,

where

is the covariance of

and

. Based on (14) and (15) and the Bayesian theorem, the posterior PDF of

is also Gaussian, i.e.,

where

and

denote the mean and variance of

.

and

are derived as follows:

where

is the filter gain, and the other parameters are calculated as follows:

where

denotes the expectation operator.
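For orientation, the Gaussian filter recursion described above can be written out as a sketch. The symbols are assumptions, since the original notation is not visible in this text: $x_k$ is the state, $z_k$ the measurement, $f$ and $h$ the state and measurement functions, and $Q_{k-1}$, $R_k$ additive noise covariances. The forms below are the standard ones for a Gaussian filter with additive noise and may differ in detail from the numbered equations of this section.

```latex
% Sketch under assumed notation; additive process and measurement noise assumed.
\begin{align*}
\hat{x}_{k|k} &= \hat{x}_{k|k-1} + K_k\,(z_k - \hat{z}_{k|k-1}), \\
P_{k|k} &= P_{k|k-1} - K_k P_{zz,k|k-1} K_k^{T}, \\
K_k &= P_{xz,k|k-1}\, P_{zz,k|k-1}^{-1}, \\
\hat{x}_{k|k-1} &= \int f(x_{k-1})\, N(x_{k-1}; \hat{x}_{k-1|k-1}, P_{k-1|k-1})\, dx_{k-1}, \\
P_{k|k-1} &= \int f(x_{k-1}) f^{T}(x_{k-1})\, N(x_{k-1}; \hat{x}_{k-1|k-1}, P_{k-1|k-1})\, dx_{k-1}
             - \hat{x}_{k|k-1}\hat{x}_{k|k-1}^{T} + Q_{k-1}, \\
\hat{z}_{k|k-1} &= \int h(x_k)\, N(x_k; \hat{x}_{k|k-1}, P_{k|k-1})\, dx_k, \\
P_{zz,k|k-1} &= \int h(x_k) h^{T}(x_k)\, N(x_k; \hat{x}_{k|k-1}, P_{k|k-1})\, dx_k
             - \hat{z}_{k|k-1}\hat{z}_{k|k-1}^{T} + R_k, \\
P_{xz,k|k-1} &= \int x_k h^{T}(x_k)\, N(x_k; \hat{x}_{k|k-1}, P_{k|k-1})\, dx_k
             - \hat{x}_{k|k-1}\hat{z}_{k|k-1}^{T}.
\end{align*}
```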

Equations (20)–(24) establish the general framework of the Gaussian filter, whose core task is to calculate Gaussian weighted integrals. Due to the nonlinearity of

and

, it is difficult to evaluate (20)–(24) exactly, so a numerical approximation is necessary, i.e.,

where

and

are the sampling points and corresponding weights of

.

The CKF, a typical Gaussian filter, uses the third-degree spherical–radial cubature rule to obtain these weighted samples. In (20), the cubature points of

are selected based on

and

. These cubature points are defined as follows:

where

denotes the jth column of A. Propagating the cubature points of

by

, the state one step predicted mean

and covariance

can be obtained as follows based on (20) and (21):

Furthermore, the cubature points of

based on

and

are selected as follows:

Propagating the cubature points of

by

, the measurement one step predicted mean and covariance can be obtained as follows:

Filter gain

and measurement update are given as follows:
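The CKF recursion of this subsection can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation: it assumes additive noise, and the names `cubature_points`, `ckf_step`, `f`, `h`, `Q`, and `R` are illustrative choices that do not come from the paper.

```python
import numpy as np

def cubature_points(mean, cov):
    """Return the 2n third-degree cubature points of N(mean, cov), one per row."""
    n = mean.size
    S = np.linalg.cholesky(cov)                           # cov = S @ S.T
    xi = np.sqrt(n) * np.hstack([np.eye(n), -np.eye(n)])  # unit directions, n x 2n
    return (mean[:, None] + S @ xi).T                     # 2n x n

def ckf_step(x, P, z, f, h, Q, R):
    """One CKF time update and measurement update (additive noise assumed)."""
    n = x.size
    # Time update: propagate the cubature points through the state function.
    X = np.array([f(p) for p in cubature_points(x, P)])
    x_pred = X.mean(axis=0)                               # equal weights 1/(2n)
    dX = X - x_pred
    P_pred = dX.T @ dX / (2 * n) + Q
    # Measurement update: redraw the points from the predicted PDF.
    Xp = cubature_points(x_pred, P_pred)
    Z = np.array([h(p) for p in Xp])
    z_pred = Z.mean(axis=0)
    dZ = Z - z_pred
    P_zz = dZ.T @ dZ / (2 * n) + R                        # innovation covariance
    P_xz = (Xp - x_pred).T @ dZ / (2 * n)                 # cross covariance
    K = P_xz @ np.linalg.inv(P_zz)                        # filter gain
    x_new = x_pred + K @ (z - z_pred)
    P_new = P_pred - K @ P_zz @ K.T
    return x_new, P_new
```

For linear `f` and `h` the cubature rule integrates the first two moments exactly, so this step should reproduce the ordinary Kalman filter, which gives a convenient sanity check.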

3.2. The Proposed Adaptive Cubature Kalman Filter

When the state noise covariance and the measurement noise covariance are unknown or inaccurate, the estimation accuracy of the CKF may degrade and the filter may even diverge. The one step predicted state error covariance is affected by the inaccurate state noise covariance, but it is easier to estimate than the state noise covariance itself. Therefore, in this work, the state, the one step predicted state error covariance, and the measurement noise covariance are jointly estimated to improve the accuracy of the CKF with inaccurate noise statistics.

Within the framework of Bayesian probability theory, a conjugate prior distribution is selected so that the prior and posterior distributions share the same functional form. For the covariance matrix of a Gaussian distribution with known mean, the inverse Wishart (IW) PDF is the usual conjugate prior. The IW PDF is formulated as follows:

where

is positive definite random matrix,

is the inverse scale matrix,

is the degrees of freedom (dof) parameter, d is the dimension of

,

is the trace operation, and

is the d-variate Gamma function. When

, the mean of

is shown as follows:
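As a reference point, the standard inverse Wishart density and its mean can be written as below. The symbols $B$ (random matrix), $\lambda$ (dof parameter), and $\Psi$ (inverse scale matrix) are assumed names, since the paper's own symbols are not visible here; the form is the textbook one that matches the quantities listed above (trace, $d$-variate Gamma function, dimension $d$).

```latex
% Standard inverse Wishart PDF and mean; symbol names are assumptions.
\begin{align*}
\mathrm{IW}(B; \lambda, \Psi) &=
  \frac{|\Psi|^{\lambda/2}\, |B|^{-(\lambda + d + 1)/2}
        \exp\!\left\{-\tfrac{1}{2}\,\mathrm{tr}\!\left(\Psi B^{-1}\right)\right\}}
       {2^{d\lambda/2}\, \Gamma_d(\lambda/2)}, \\
\mathrm{E}[B] &= \frac{\Psi}{\lambda - d - 1}, \qquad \lambda > d + 1 .
\end{align*}
```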

Therefore, the prior distribution

and

are modeled as follows:

where

and

are dof parameters and

and

are inverse scale matrices.

The mean value of

is set as nominal

, determined by:

where

is the nominal state noise covariance matrix, i.e., a possibly inaccurate value.

Let:

and set

, where

is a tuning parameter. We can obtain:

According to the Bayesian theorem,

is formulated as:

where

is the posterior PDF of

. Because the posterior and prior PDFs of

have the same distribution type, the posterior PDF of

is also formulated as the inverse Wishart distribution, as follows:

Because the dynamic model of

is unknown, a forgetting factor

is used to spread the previous posterior into the current prior, and the prior parameters in (41) are written as follows:

The initial

is also assumed to follow an inverse Wishart PDF, i.e.,

, where the mean value of

is set as the initial nominal

:
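The prior construction described above can be illustrated with a short Python sketch. It assumes the forms commonly used in the variational Bayesian adaptive filtering literature: the dof of the covariance prior is the state dimension plus the tuning parameter plus one, the inverse scale matrix is chosen so that the IW mean equals the nominal covariance, and the forgetting factor rescales the previous posterior parameters while keeping its mean. The section's own equations are not visible here, so these forms, and the names `iw_mean`, `predicted_cov_prior`, and `spread_noise_prior`, are assumptions.

```python
import numpy as np

def iw_mean(dof, scale):
    """Mean of an inverse Wishart PDF; defined only when dof > d + 1."""
    d = scale.shape[0]
    if dof <= d + 1:
        raise ValueError("the IW mean requires dof > d + 1")
    return scale / (dof - d - 1)

def predicted_cov_prior(P_nominal, tau):
    """IW prior whose mean is the nominal predicted covariance (tau > 0)."""
    n = P_nominal.shape[0]
    t = n + tau + 1              # dof parameter
    T = tau * P_nominal          # inverse scale matrix, so that T / (t - n - 1) = P_nominal
    return t, T

def spread_noise_prior(u_prev, U_prev, rho):
    """Spread the previous IW posterior of the measurement noise covariance
    into the current prior with forgetting factor rho in (0, 1]."""
    m = U_prev.shape[0]
    u = rho * (u_prev - m - 1) + m + 1
    U = rho * U_prev             # the mean U / (u - m - 1) is unchanged
    return u, U
```

With these choices the forgetting factor leaves the prior mean untouched and only widens the prior when it is below one, which is consistent with the remark that a forgetting factor of one corresponds to a stationary measurement noise covariance.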

In order to estimate the state

, one step predicted state error covariance

and

, their joint posterior PDF

must be calculated. Because these quantities are coupled, no analytical solution can be obtained. Therefore, the variational Bayesian (VB) method is used to obtain an approximate solution of the coupled estimation problem.

are calculated by minimizing the Kullback–Leibler divergence (KLD):

The optimal solution of (51) is given by:

where

means the logarithmic function,

is an arbitrary element of

,

contains all elements in

except for

, and

denotes a constant with respect to

. According to the Bayesian theorem, the joint PDF

is factored as:

where the likelihood PDF

is assumed to be a normal distribution.
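The variational step described above can be summarized in a compact sketch. The notation is assumed rather than taken from the paper: $\Xi = \{x_k, P_{k|k-1}, R_k\}$ collects the unknowns, $q(\cdot)$ denotes an approximate posterior, and $\theta$ is any one element of $\Xi$. The expressions are the standard mean-field VB forms and may differ in detail from the numbered equations of this section.

```latex
% Mean-field VB sketch; notation assumed.
\begin{align*}
p(x_k, P_{k|k-1}, R_k \mid z_{1:k}) &\approx q(x_k)\, q(P_{k|k-1})\, q(R_k), \\
\{q(x_k), q(P_{k|k-1}), q(R_k)\} &= \arg\min\,
  \mathrm{KLD}\!\left(q(x_k)\, q(P_{k|k-1})\, q(R_k)\,\middle\|\,
  p(x_k, P_{k|k-1}, R_k \mid z_{1:k})\right), \\
\log q(\theta) &= \mathrm{E}_{\Xi^{(-\theta)}}\!\left[\log p(\Xi, z_{1:k})\right] + c_{\theta}, \\
p(\Xi, z_{1:k}) &= p(z_k \mid x_k, R_k)\, p(x_k \mid z_{1:k-1}, P_{k|k-1})\,
  p(P_{k|k-1} \mid z_{1:k-1})\, p(R_k \mid z_{1:k-1})\, p(z_{1:k-1}).
\end{align*}
```

Because each factor depends on expectations taken under the other two, the three factors are updated in turn by fixed point iteration.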

Substituting (13), (38), (41), and (55) into (54), we have:

Taking the logarithm of both sides of (56), the normal distribution

and IW distribution

are formulated as follows:

According to (57) and (58),

is formulated as:

Using (59) in (52) and letting

, we have:

where

is the approximation of PDF

at the iteration

, and

is given as follows:

is updated as an IW PDF with dof parameter

and inverse scale matrix

:

where:

Let

; we have:

where

is given by:

where

are cubature points based on

and

.

is updated as an IW PDF with dof parameter

and inverse scale matrix

:

where:

Let

; we have:

where:

The one step predicted PDF

and likelihood PDF

at iteration

are defined as follows:

where:

Employing (74)–(77) in (71), we have:

where the normalization constant

is given as:

is updated as the normal distribution with mean

and variance

:

where

and

at iteration

are calculated similarly to (31)–(37).

The cubature points of

based on

and modified

are given as:

After N fixed point iterations, we can obtain the approximate solutions of

,

and

:

When the measurement model is linear, such as the initial alignment measurement model in (12), a simplified algorithm can be obtained, in which (66) and (81)–(89) are formulated as follows:

The implementation pseudocode of the proposed adaptive cubature Kalman filter is shown in Algorithm 1.

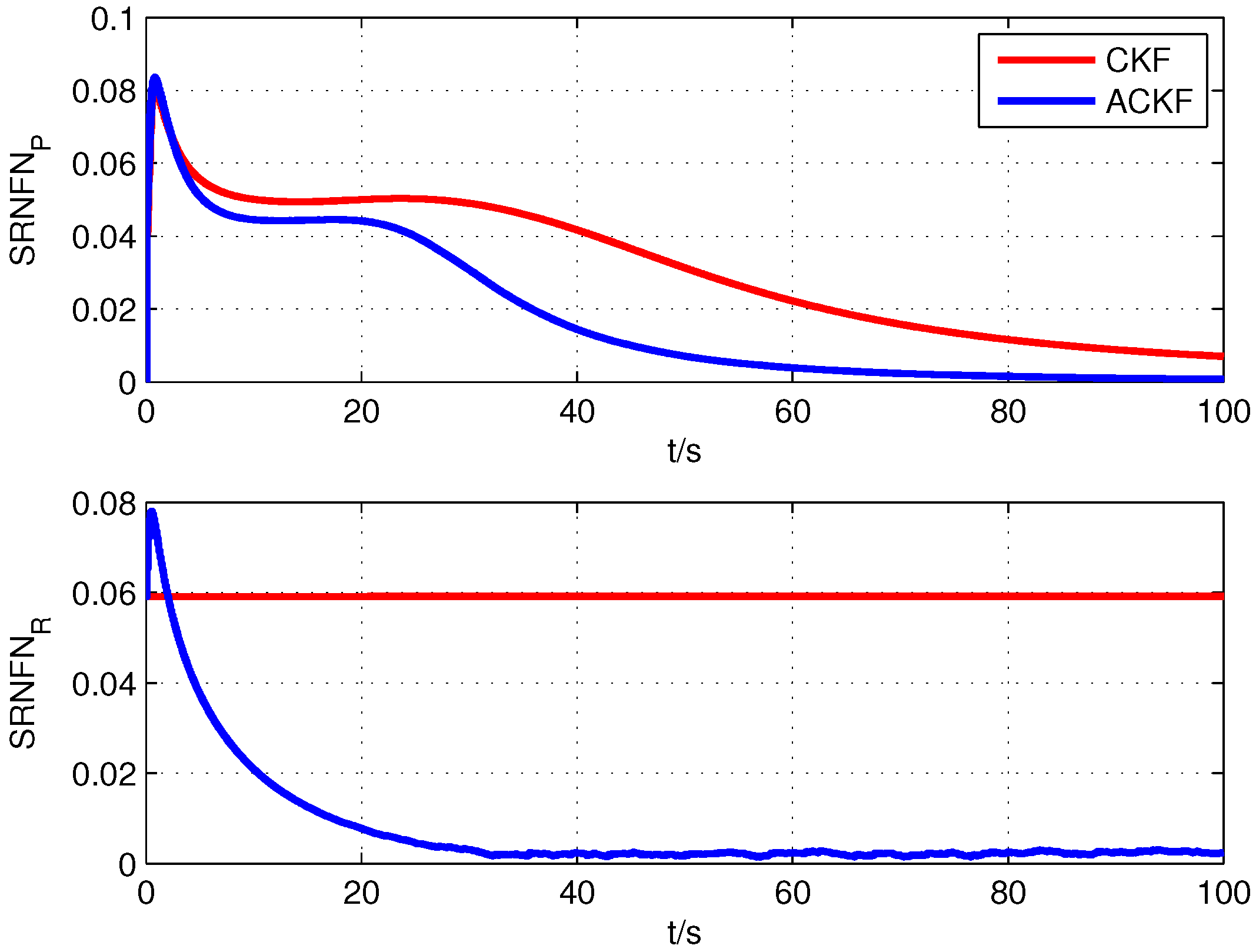

To implement the proposed ACKF method, we need to select the tuning parameter

, the forgetting factor

, and the iteration number N. The tuning parameter

can be seen as an adjustment parameter of

. If

is too large, the prior uncertainties induced by nominal

will influence the measurement update. If

is too small, the information of the process model will also be lost. According to the results of [27], the optimal range of the tuning parameter is

, which gives better estimation accuracy. The forgetting factor

also adjusts the influence of

. Note that

corresponds to a stationary measurement noise covariance. A larger iteration number N improves the estimation accuracy but also increases the computational cost. In our experience,

performs well in the alignment.

| Algorithm 1: One step of the proposed adaptive cubature Kalman filter. |

| Inputs: , , , , , , , , N. |

| Time update |

| 1. Calculate cubature points based on and . |

| 2. . |

| 3. . |

| 4. . |

| Iterated measurement update |

| 5. Initialization: , , ,, |

| , . |

| For |

| 6. Update , |

| , , where . |

| 7. Update , |

| , , where . |

| 8. Update , |

| , . |

| 9. Calculate the mean and variance of posterior PDF, |

| , |

| , |

| . |

| End for |

| 10. ,, , . |

| Outputs: , , , . |
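For the linear measurement case mentioned earlier, the iterated measurement update of Algorithm 1 can be sketched in Python as follows. This is a hedged illustration, not the authors' code: it assumes the predicted mean `x_pred` and the nominal predicted covariance `P_nom` come from a separate time update, that the plug-in covariances at each iteration are the IW means, and that the prior parameters take the forms common in the variational Bayesian adaptive filtering literature. The function name, argument names, and default values of `tau`, `rho`, and `N` are illustrative assumptions.

```python
import numpy as np

def vb_measurement_update(x_pred, P_nom, z, H, u_prev, U_prev,
                          tau=3.0, rho=0.99, N=10):
    """Iterated VB measurement update for a linear measurement z = H x + v."""
    n, m = x_pred.size, z.size
    # Priors: IW on the predicted covariance and on the measurement noise covariance.
    t0, T0 = n + tau + 1, tau * P_nom
    u0 = rho * (u_prev - m - 1) + m + 1
    U0 = rho * U_prev
    x, P = x_pred.copy(), P_nom.copy()          # initialization of the iteration
    for _ in range(N):
        # Update the IW posterior of the predicted covariance.
        dx = x - x_pred
        t, T = t0 + 1, T0 + P + np.outer(dx, dx)
        # Update the IW posterior of the measurement noise covariance.
        r = z - H @ x
        u, U = u0 + 1, U0 + np.outer(r, r) + H @ P @ H.T
        # Plug-in covariances taken as the IW means (an assumption of this sketch).
        P_hat = T / (t - n - 1)
        R_hat = U / (u - m - 1)
        # Kalman-type update of the state with the modified covariances.
        K = P_hat @ H.T @ np.linalg.inv(H @ P_hat @ H.T + R_hat)
        x = x_pred + K @ (z - H @ x_pred)
        P = P_hat - K @ H @ P_hat
    return x, P, u, U
```

The returned `u` and `U` would be passed to the next time step as `u_prev` and `U_prev`, so that information about the measurement noise covariance accumulates across steps while the forgetting factor discounts older data.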