Modeling and Analysis of a Direct Time-of-Flight Sensor Architecture for LiDAR Applications

Abstract

1. Introduction

- We address the challenge of imaging in high-background-noise scenarios, with particular emphasis on wide-dynamic-range targets, using coincidence detection and time-gating.

- We propose a coincidence-based shared-DTOF sensor architecture for operation in a Flash LiDAR scenario. The architecture is analyzed using a probabilistic model simulated in MATLAB.

- We outline a framework for the integrated implementation of the sensor based on simulations of the proposed architecture.
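The coincidence principle named above can be illustrated in a few lines. This is a minimal sketch, assuming that the pixels sharing one coincidence circuit deliver a list of photon timestamps per laser cycle; the function `coincidence_events` and its parameters `k` (coincidence depth) and `window_ps` (coincidence window) are hypothetical and are not taken from the proposed architecture.

```python
from typing import List


def coincidence_events(timestamps_ps: List[float], k: int, window_ps: float) -> List[float]:
    """Return one timestamp per detected coincidence (hypothetical helper).

    A coincidence is declared when at least k photon timestamps fall inside
    a window of length window_ps. The event is time-stamped at the k-th
    photon. Photons that form an event are consumed and the search resumes
    after them (a simplifying assumption; hardware may use a dead time).
    """
    ts = sorted(timestamps_ps)
    events: List[float] = []
    i = 0
    while i + k <= len(ts):
        # If any k photons fit in the window, the k consecutive sorted
        # photons starting at the earliest of them also fit.
        if ts[i + k - 1] - ts[i] <= window_ps:
            events.append(ts[i + k - 1])
            i += k
        else:
            i += 1
    return events
```

For example, `coincidence_events([100, 5000, 5100, 5150, 9000], k=2, window_ps=200)` returns `[5100]`: the isolated photons at 100 ps and 9000 ps, typical of uncorrelated background, are rejected, while the closely spaced pair is kept.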

2. Flash LiDAR with DTOF Sensors

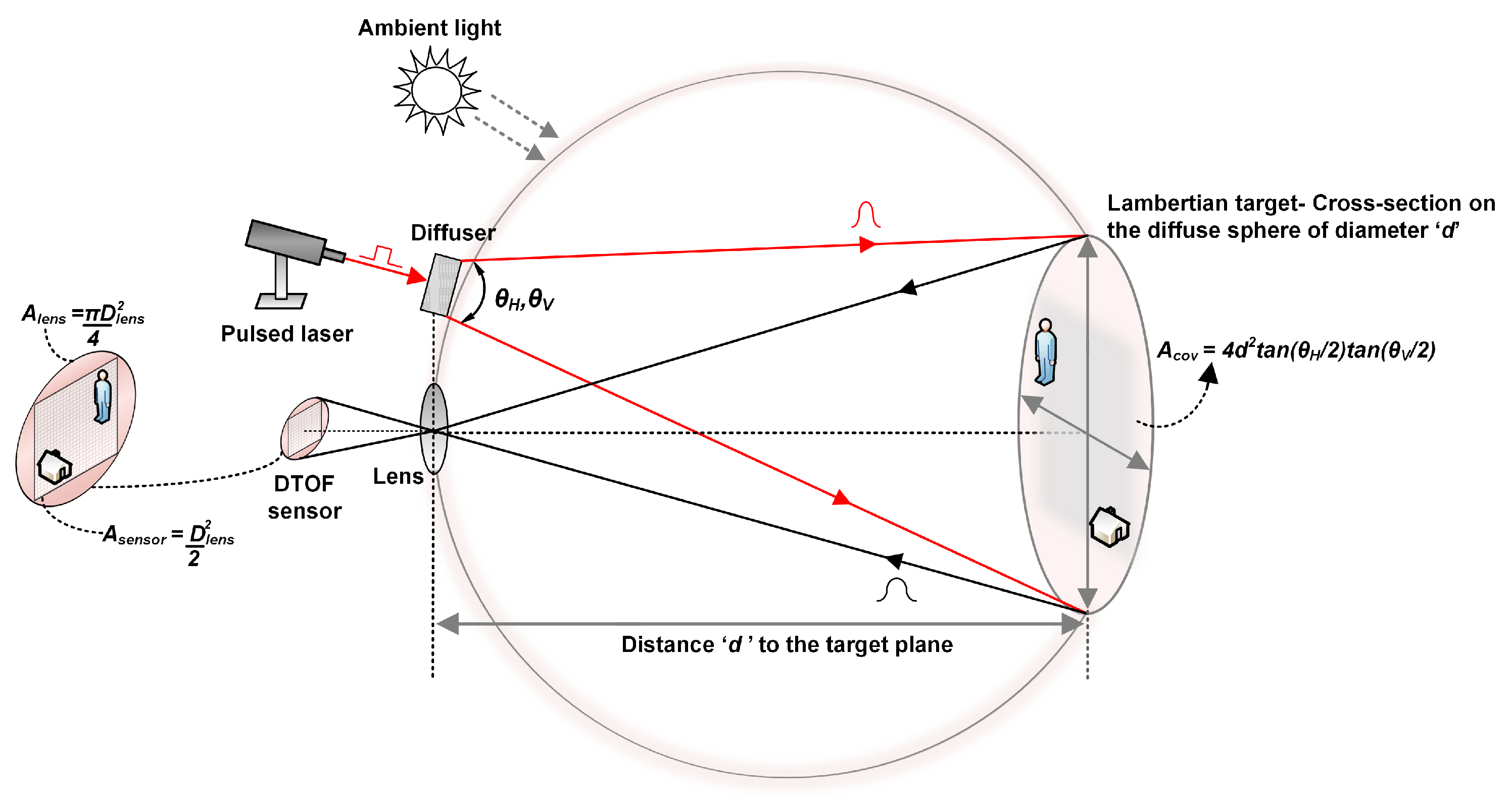

2.1. Flash LiDAR Model

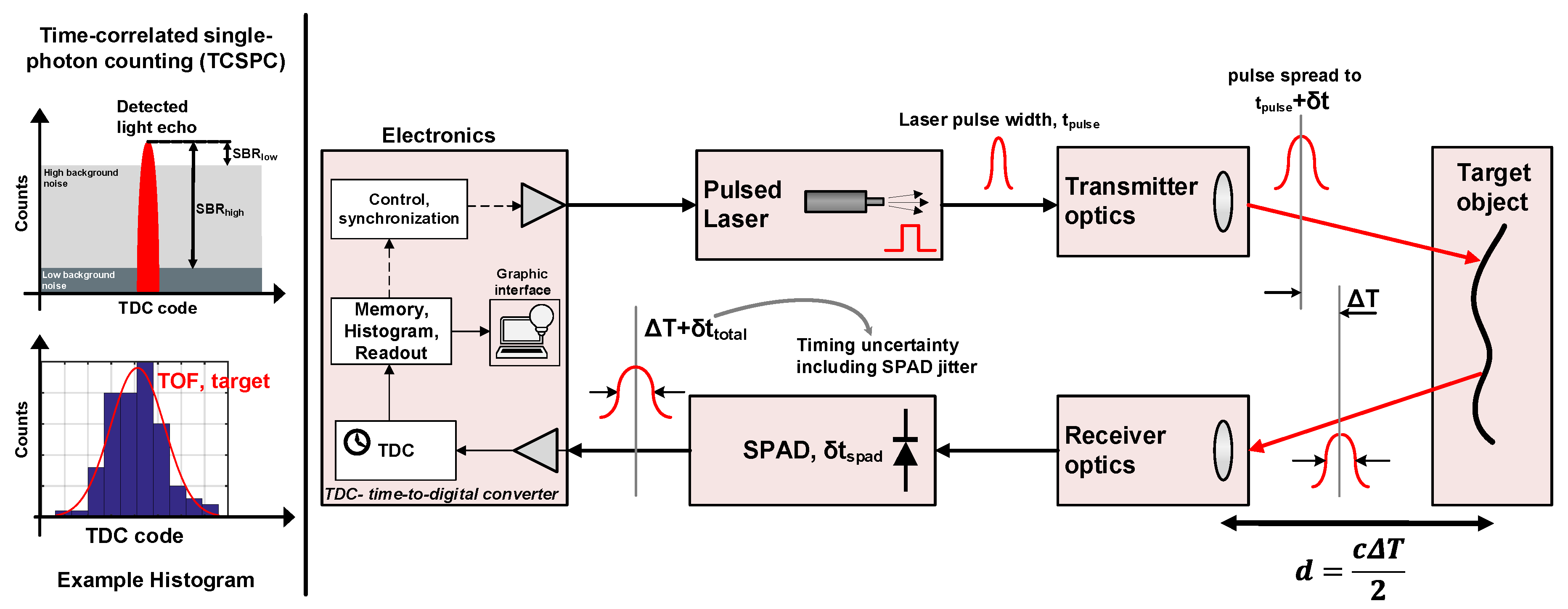

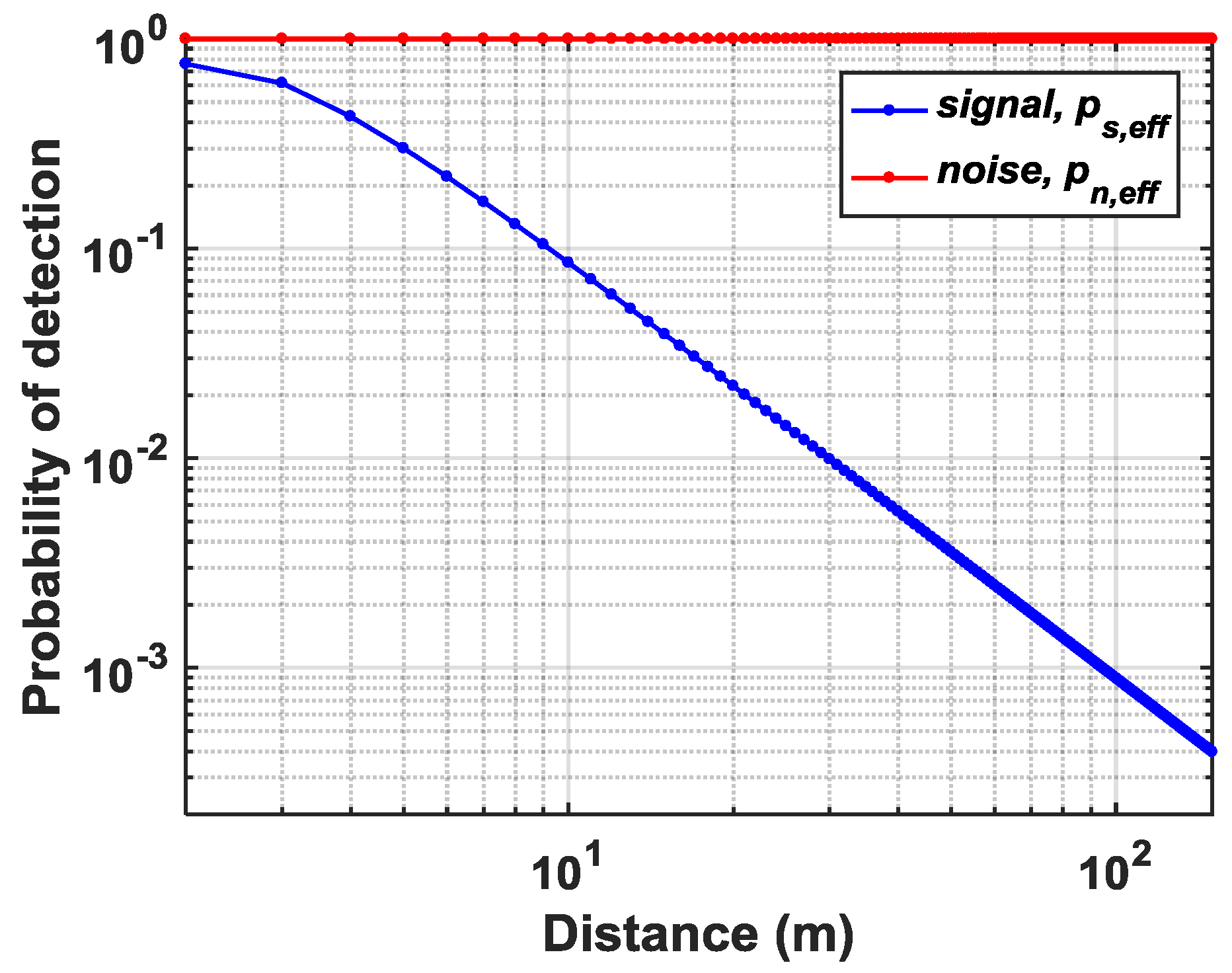
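A first-order Flash LiDAR link budget can be written from the quantities in the parameter table. The sketch below is an approximation under loudly stated assumptions, not the model used in this work: uniform flash illumination that exactly fills the sensor FOV, a Lambertian target covering the whole FOV at normal incidence, and no atmospheric loss, so that each pixel receives an equal share of the collected light. The function `signal_photons_per_pixel` is hypothetical; its default arguments are the table values.

```python
H = 6.62607015e-34  # Planck constant [J s]
C = 2.99792458e8    # speed of light [m/s]


def signal_photons_per_pixel(
    range_m: float,
    reflectivity: float,
    p_avg_w: float = 20e-3,         # average laser power
    f_rep_hz: float = 1e6,          # repetition rate
    wavelength_m: float = 780e-9,   # laser wavelength
    lens_diameter_m: float = 11e-3, # collecting lens diameter
    eta_lens: float = 0.8,          # lens efficiency
    eta_filter: float = 0.7,        # optical filter efficiency
    fill_factor: float = 0.5,       # pixel fill-factor
    pdp: float = 0.10,              # SPAD photon detection probability
    n_pixels: int = 32 * 32,        # sensor resolution
) -> float:
    """Expected detected signal photons per pixel per laser pulse (sketch)."""
    photons_per_pulse = p_avg_w / f_rep_hz / (H * C / wavelength_m)
    # Fraction of a Lambertian reflector's output caught by the lens:
    # (pi D^2 / 4) / (pi R^2) = D^2 / (4 R^2)
    collected = reflectivity * lens_diameter_m**2 / (4 * range_m**2)
    detected = photons_per_pulse * collected * eta_lens * eta_filter * fill_factor * pdp
    # Assumption: the returned light is shared evenly among all pixels.
    return detected / n_pixels
```

With the default values, `signal_photons_per_pixel(10.0, 0.6)` gives roughly 0.39 detected signal photons per pixel per pulse, and the result scales as 1/R² with distance.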

2.2. Analytical Model of a DTOF Sensor

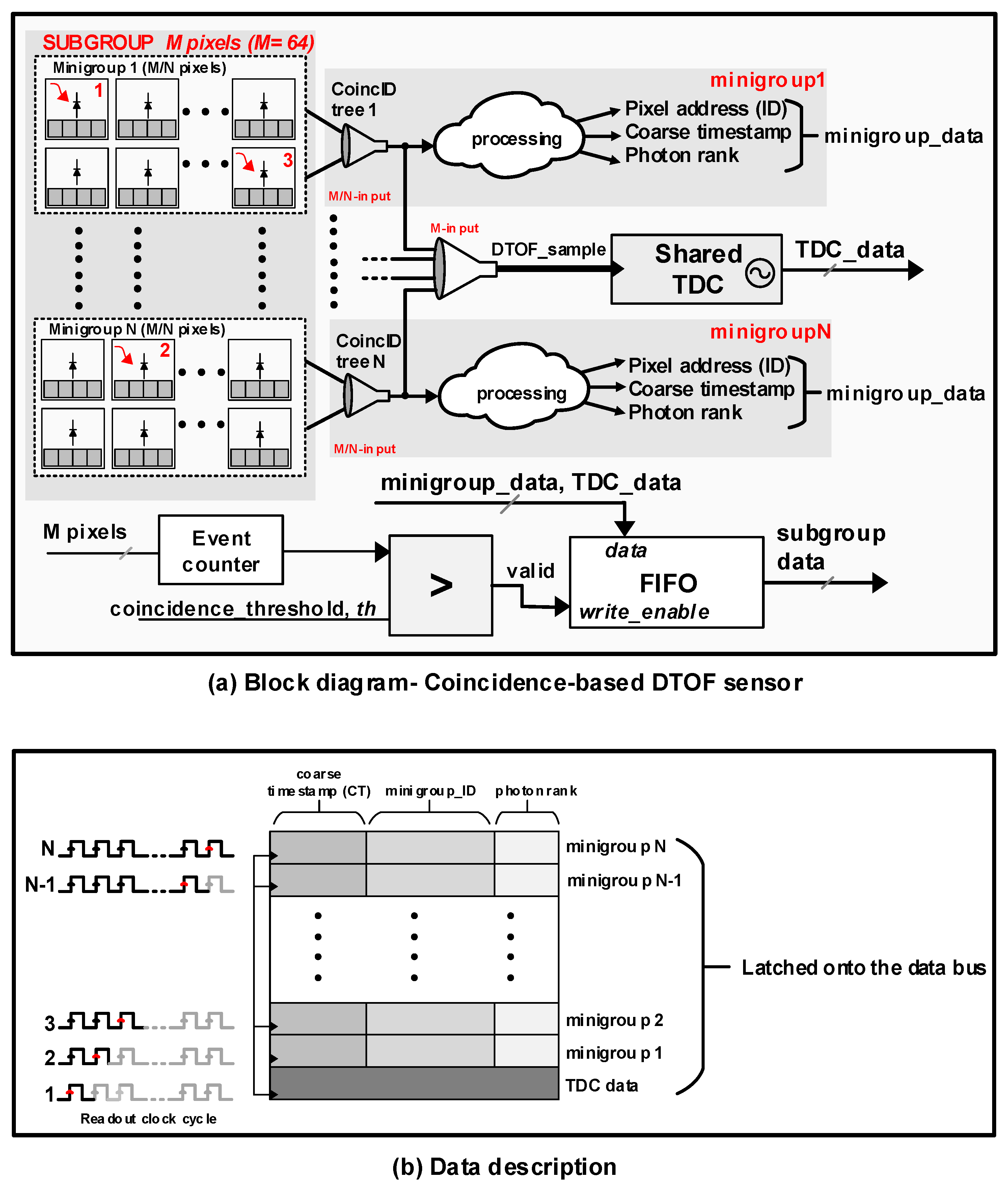

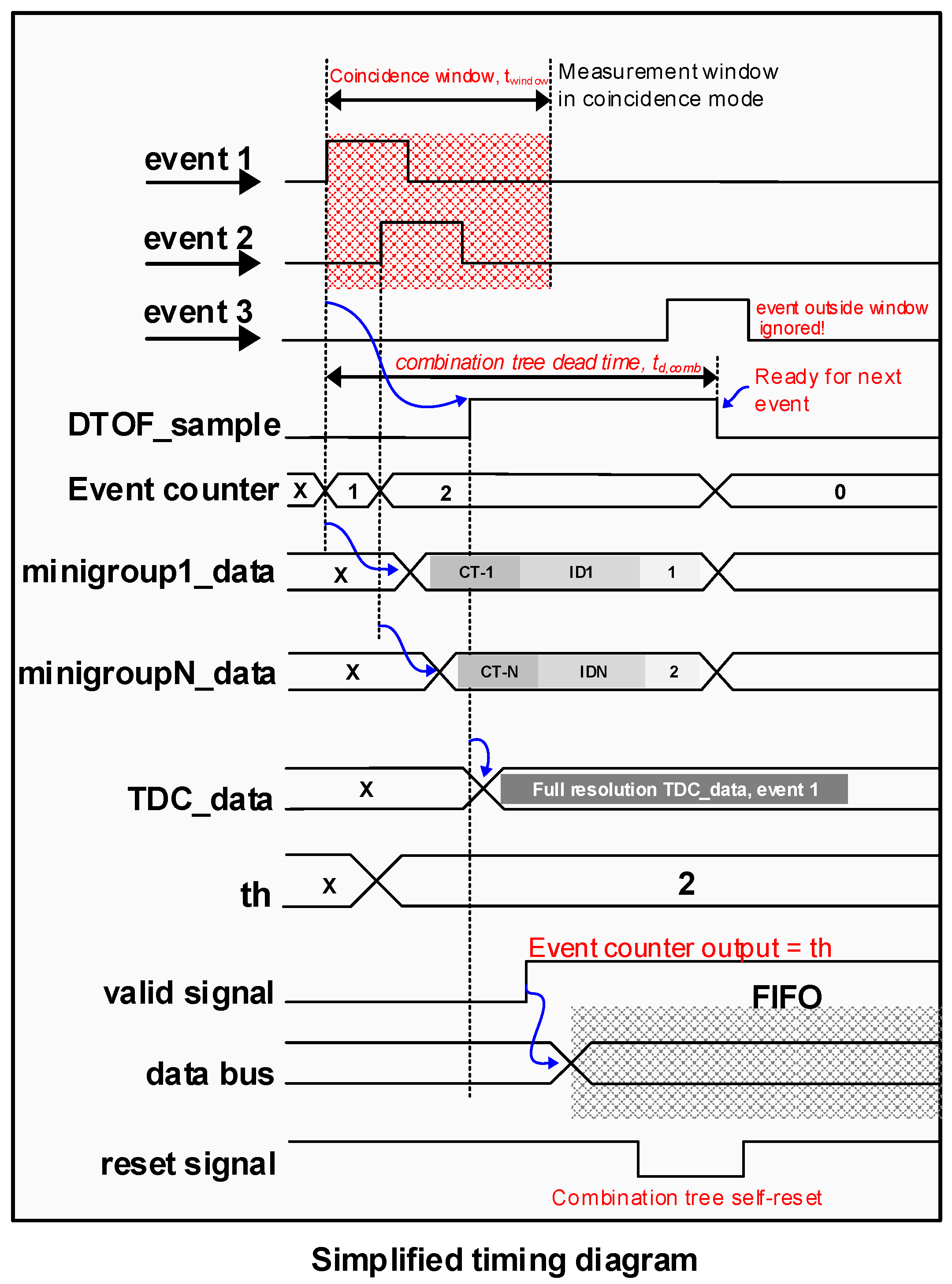
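A common starting point for analytical DTOF models is Poisson photon arrivals combined with a single-hit TDC that records only the first detection in each laser cycle, which produces the well-known pile-up distortion. If $\lambda_i$ is the expected number of detections in TDC bin $i$ (signal plus background), the probability that the first detection lands in bin $i$ is $P_i = e^{-\sum_{j<i}\lambda_j}\,(1 - e^{-\lambda_i})$. The sketch below evaluates this, assuming the $\lambda_i$ are known and the pixel is blind for the rest of the cycle after one detection; `first_photon_histogram` is a hypothetical helper and not necessarily the exact model derived in this section.

```python
import math
from typing import List


def first_photon_histogram(rates: List[float], n_cycles: int) -> List[float]:
    """Expected histogram of a single-hit (first-photon) DTOF pixel.

    rates[i] is the expected number of detections in bin i per cycle,
    assuming Poisson statistics. Only the first detection per cycle is
    recorded, so earlier bins pre-empt later ones (pile-up).
    """
    hist: List[float] = []
    cumulative = 0.0  # expected detections in all earlier bins
    for lam in rates:
        # P(no detection before bin i) * P(at least one detection in bin i)
        p_first = math.exp(-cumulative) * (1.0 - math.exp(-lam))
        hist.append(n_cycles * p_first)
        cumulative += lam
    return hist
```

For a flat background of 0.02 expected detections per bin over 100 bins, with 0.5 added at bin 40, the expected histogram peaks at bin 40, while the background bins decay with bin index because earlier photons pre-empt later ones.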

3. Proposed DTOF Sensor Model

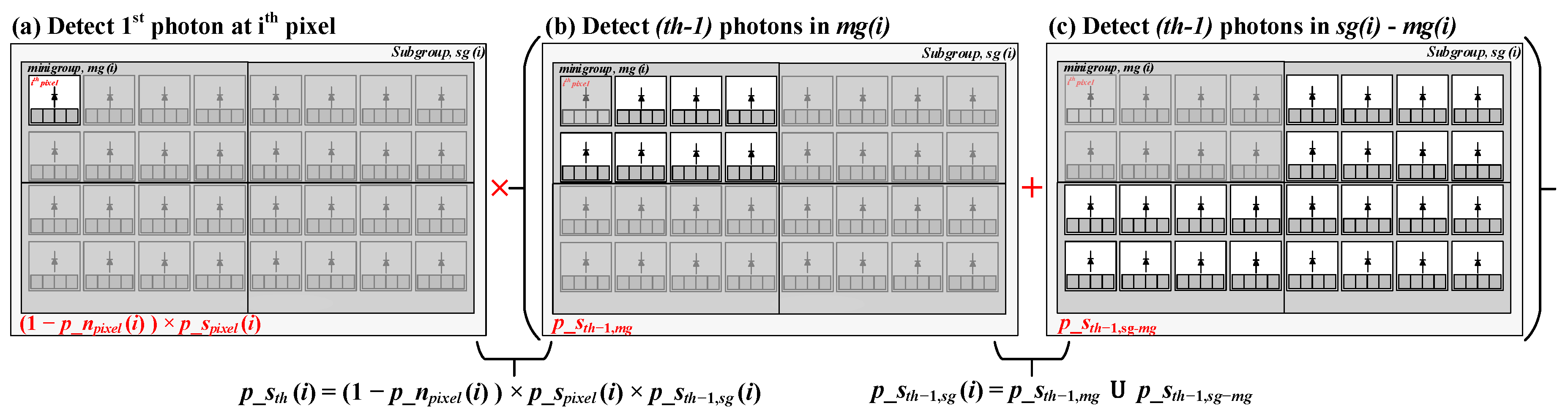

3.1. DTOF Sensor Adapted for Coincidence Detection

4. Simulation Results

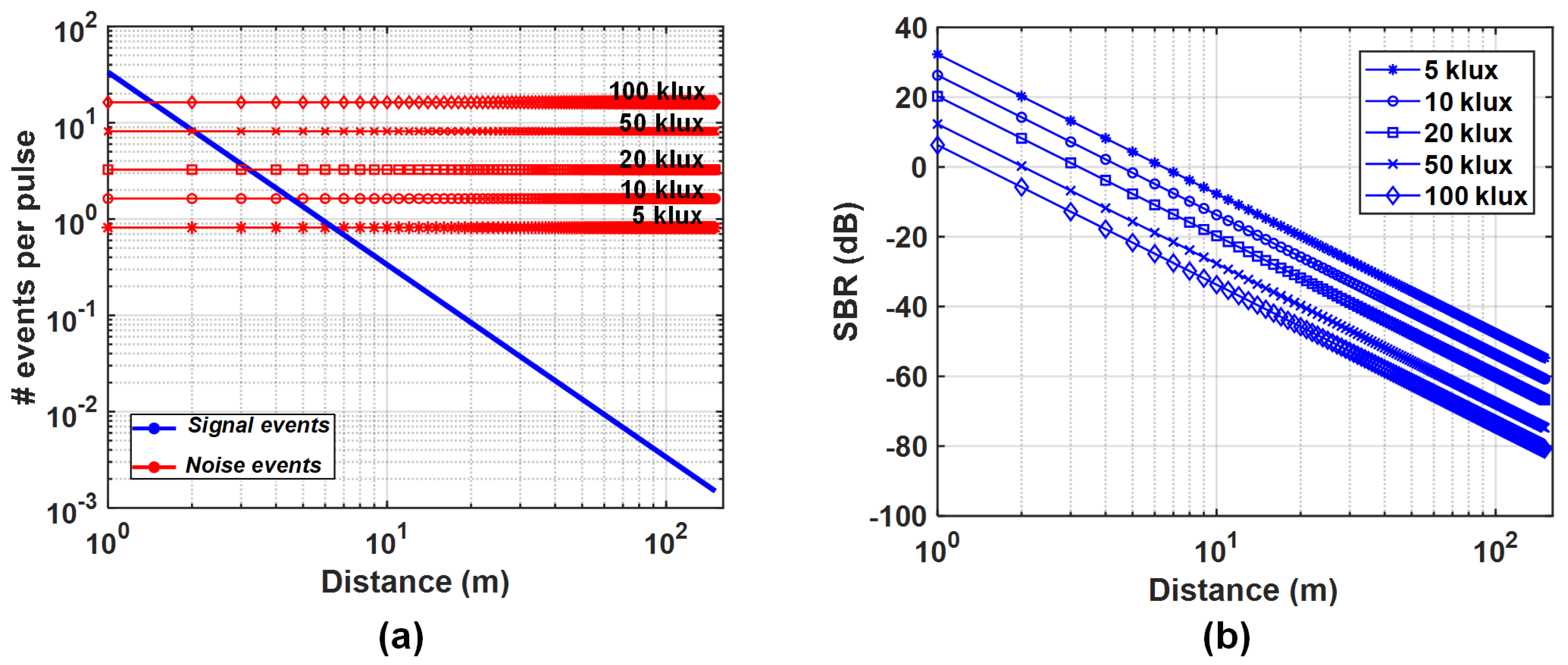

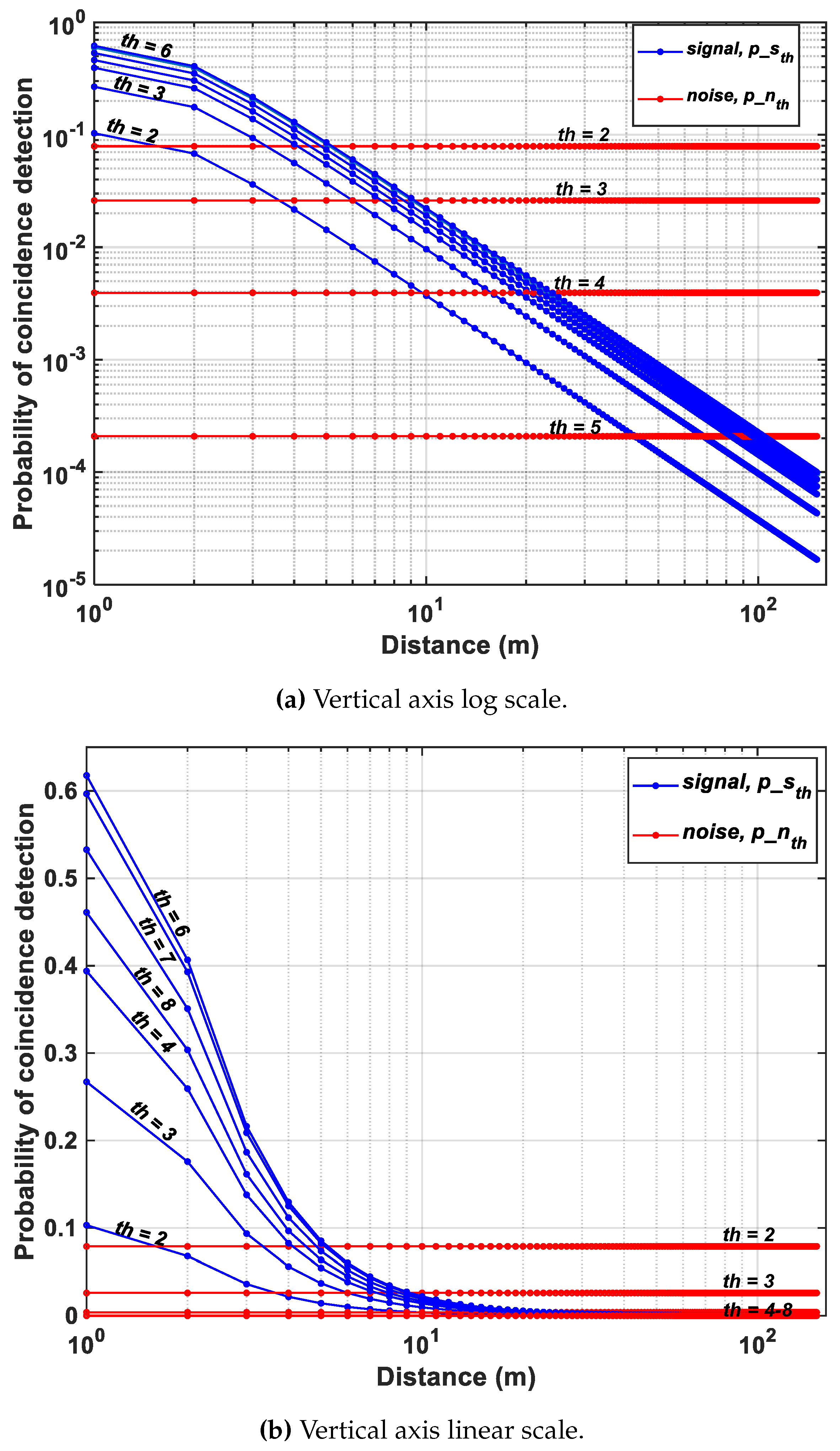

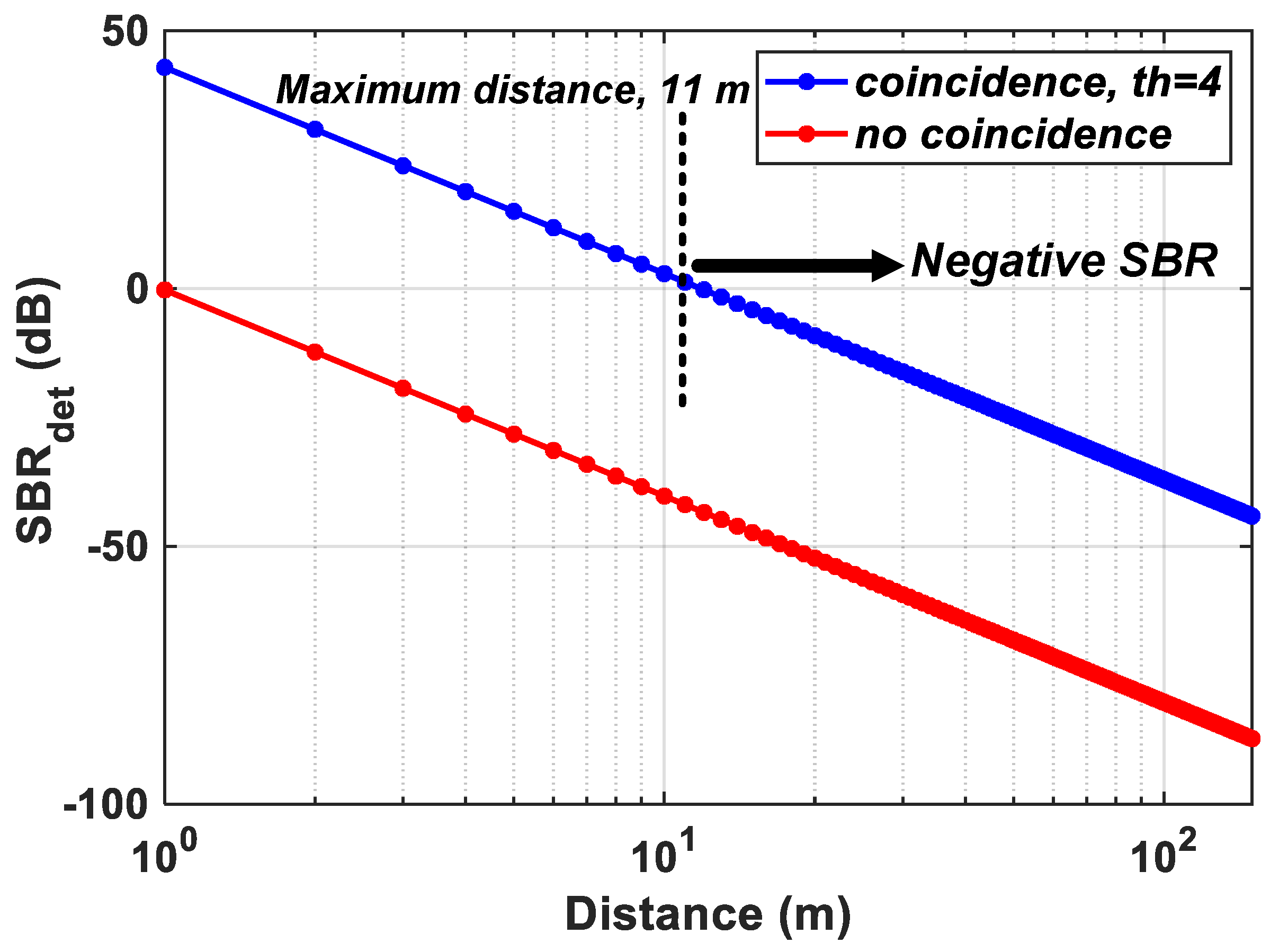

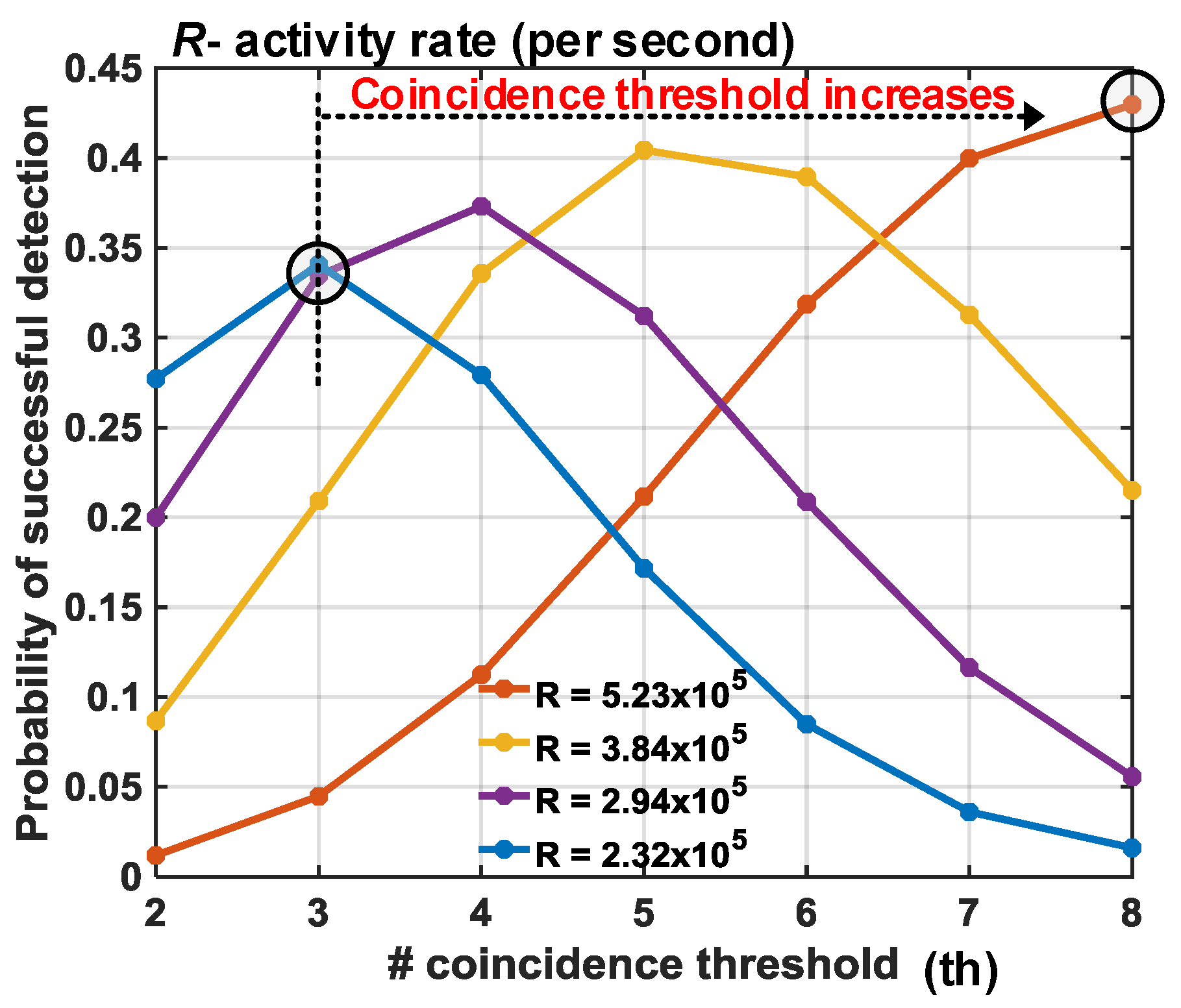

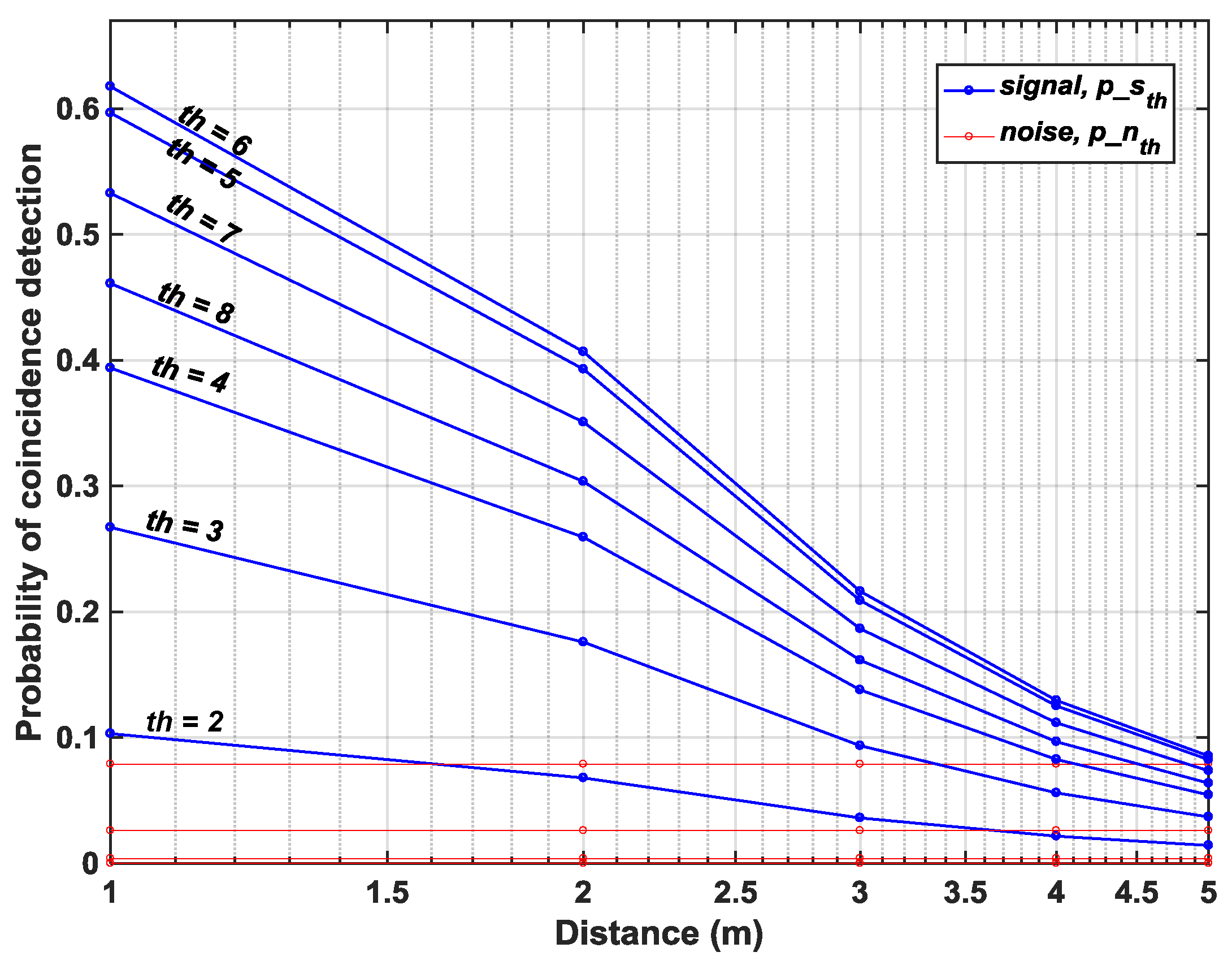

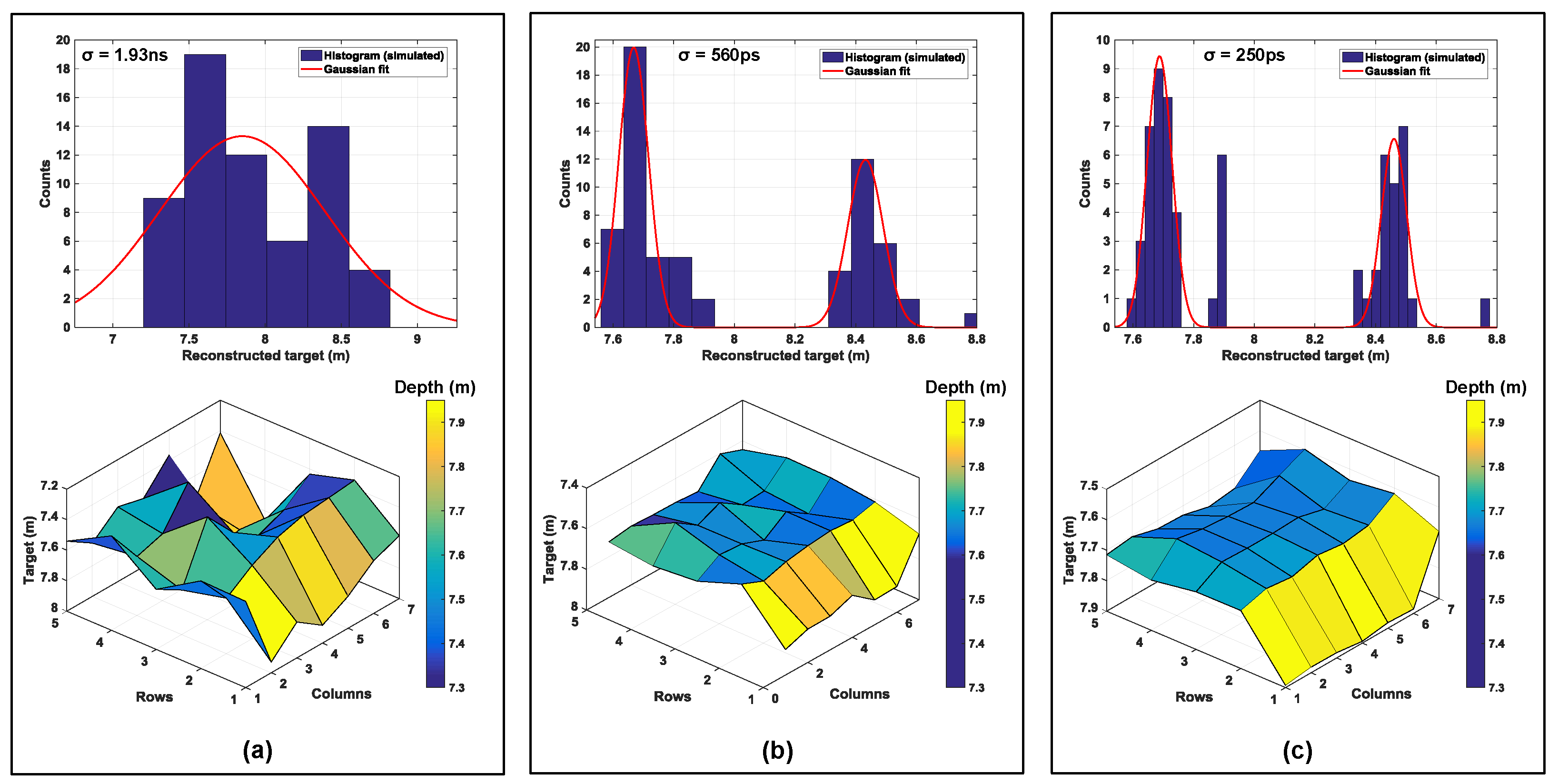

4.1. Single-Point Ranging

- Increasing the outgoing laser power without violating eye-safety regulations

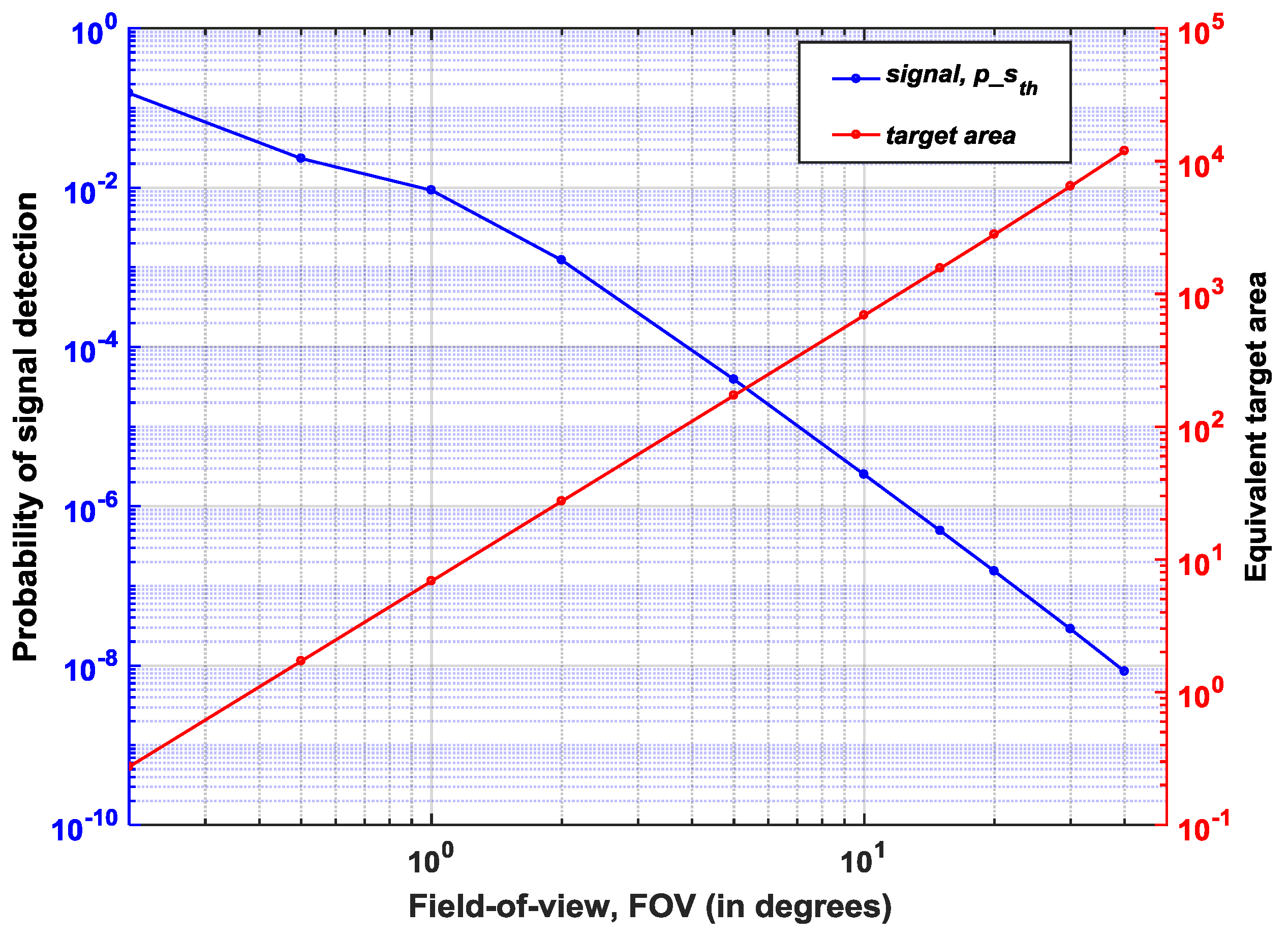

- Decreasing the FOV, since full-resolution sensing may not be required at longer distances

- Combining data from multiple pixels at the sensor level, at the expense of lower spatial resolution

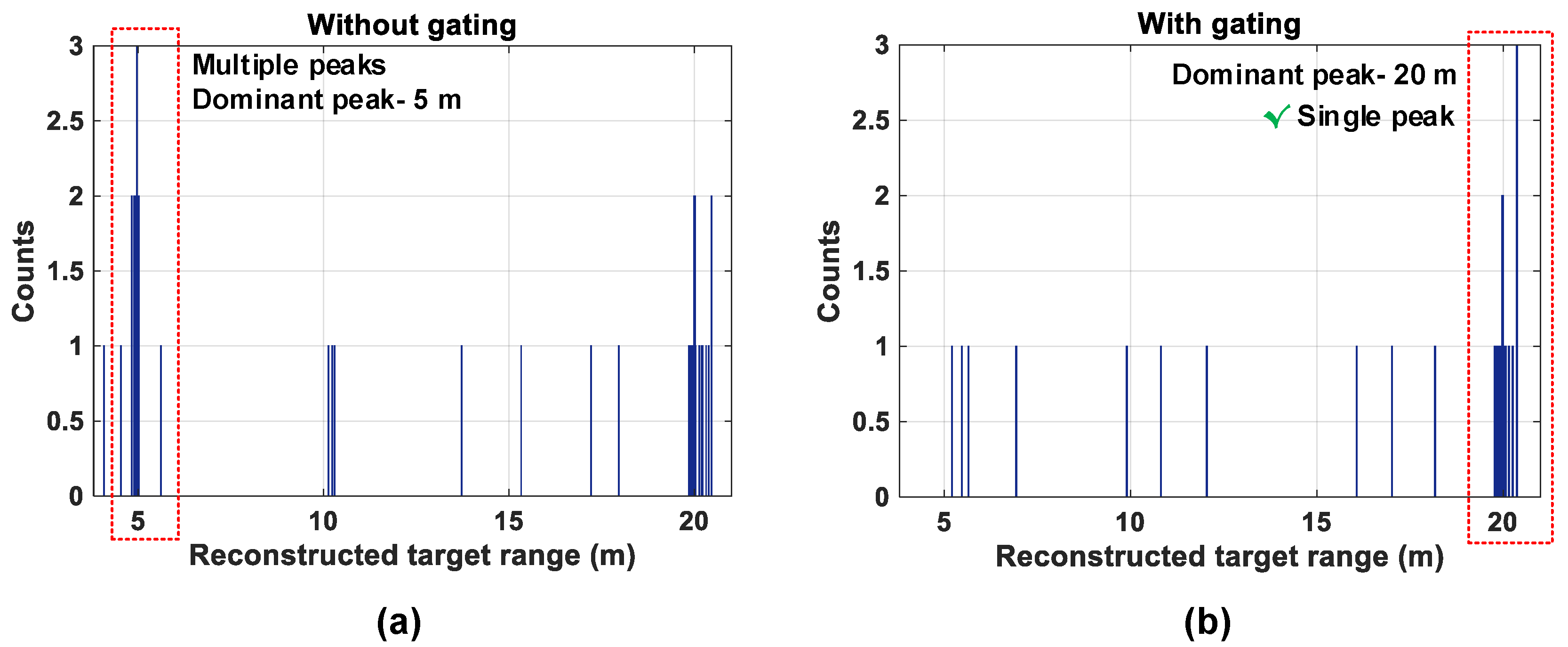

- Applying time-gating, as an alternative way to achieve target-dependent ranging at the sensor level (discussed in Section 4.4)
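Time-gating restricts photon acceptance to a time window matched to a distance range of interest, using the round-trip relation t = 2d/c. A minimal sketch, assuming ideal gate edges and timestamps referenced to laser emission; `gate_for_range` and `apply_gate` are hypothetical helpers. The filter only models which timestamps are accepted; in a hardware implementation the detector or TDC is typically disabled outside the gate, which additionally avoids dead time caused by out-of-gate photons.

```python
from typing import List, Tuple

C = 2.99792458e8  # speed of light [m/s]


def gate_for_range(d_min_m: float, d_max_m: float) -> Tuple[float, float]:
    """Gate open/close times [s] for a distance window (round trip t = 2d/c)."""
    return 2 * d_min_m / C, 2 * d_max_m / C


def apply_gate(timestamps_s: List[float], gate: Tuple[float, float]) -> List[float]:
    """Keep only timestamps that fall inside the gate."""
    t_open, t_close = gate
    return [t for t in timestamps_s if t_open <= t <= t_close]
```

For a 15 m to 30 m window the gate spans about 100 ns to 200 ns, so `apply_gate([50e-9, 120e-9, 250e-9], gate_for_range(15.0, 30.0))` keeps only the 120 ns timestamp.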

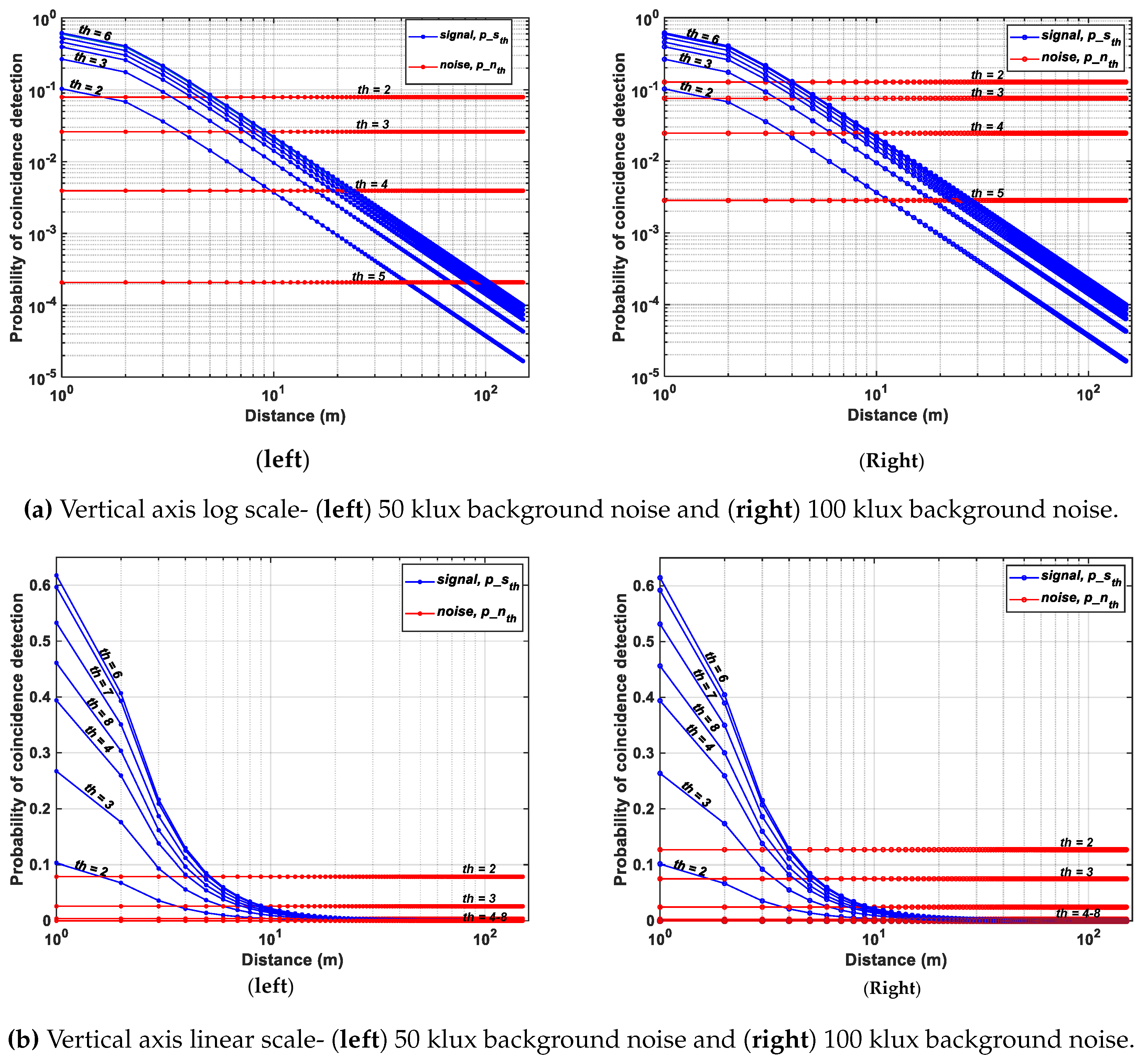

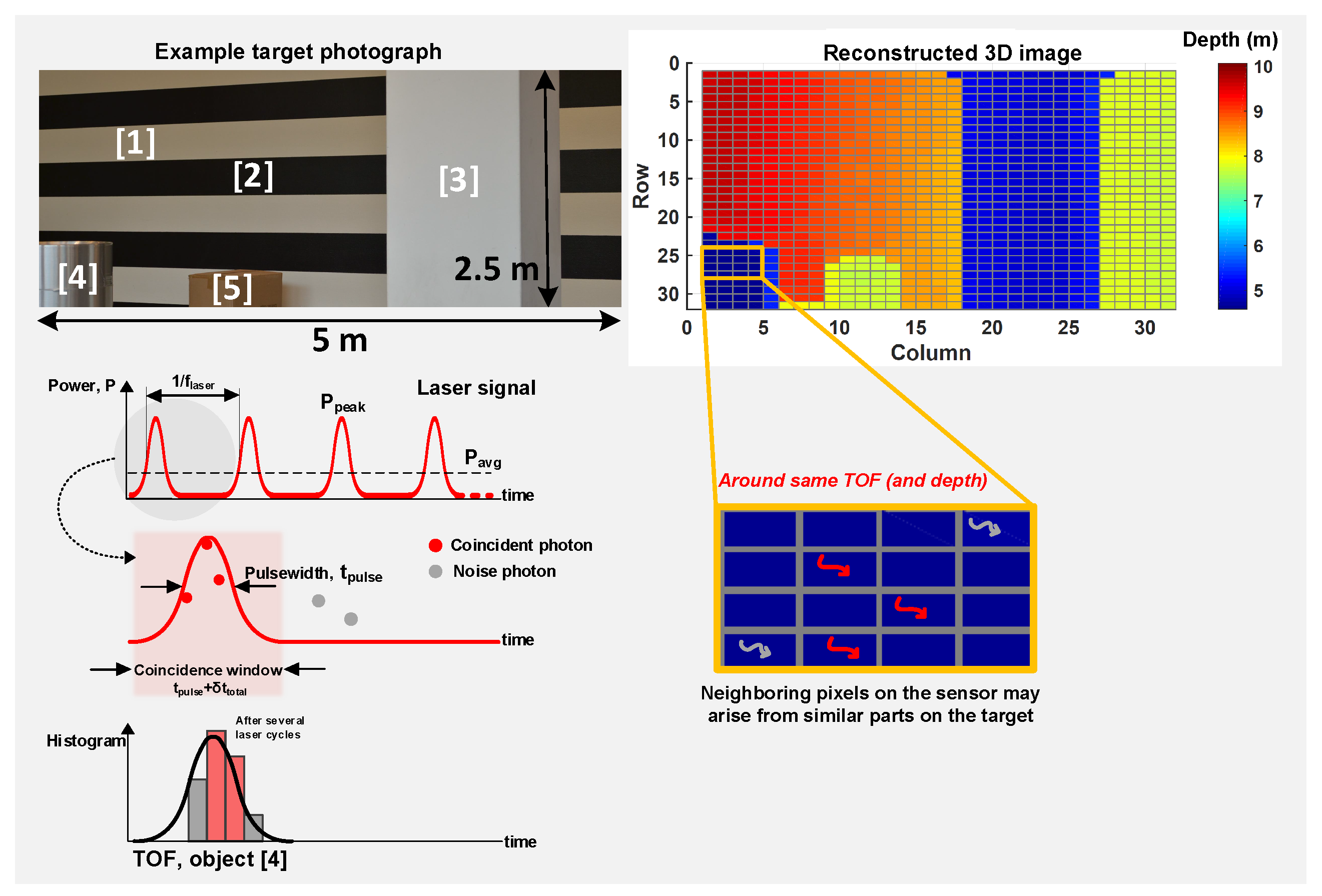

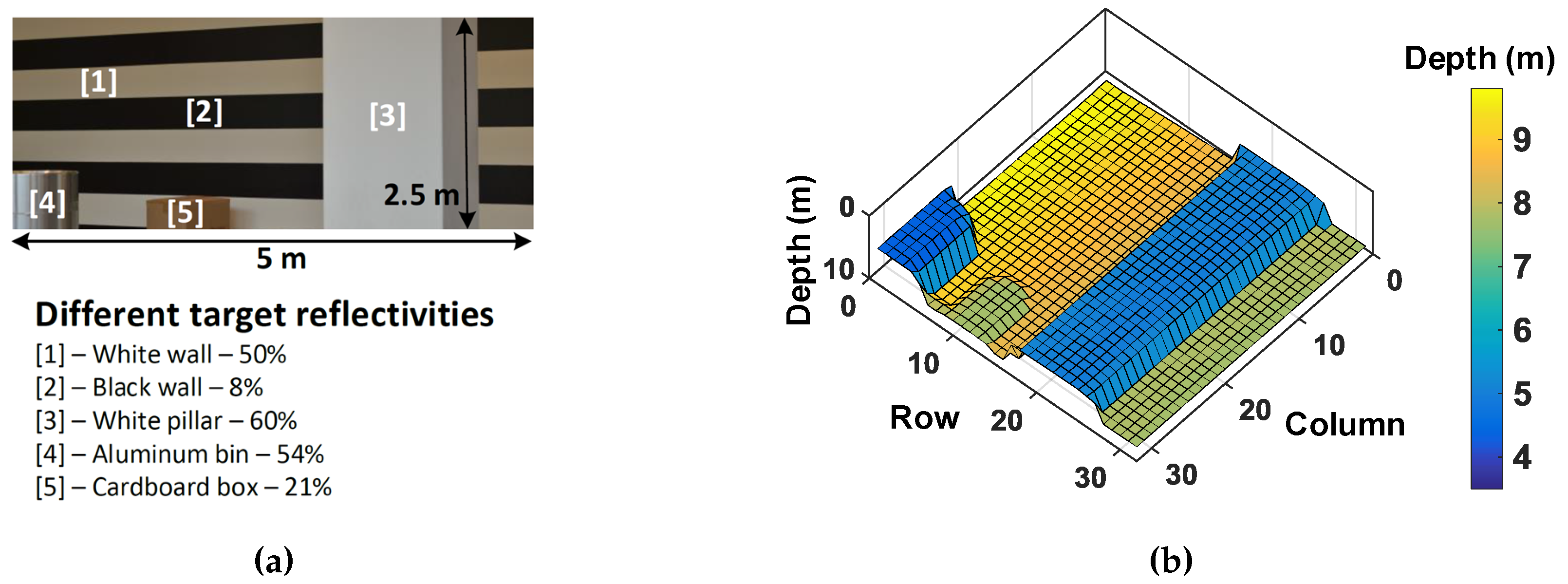

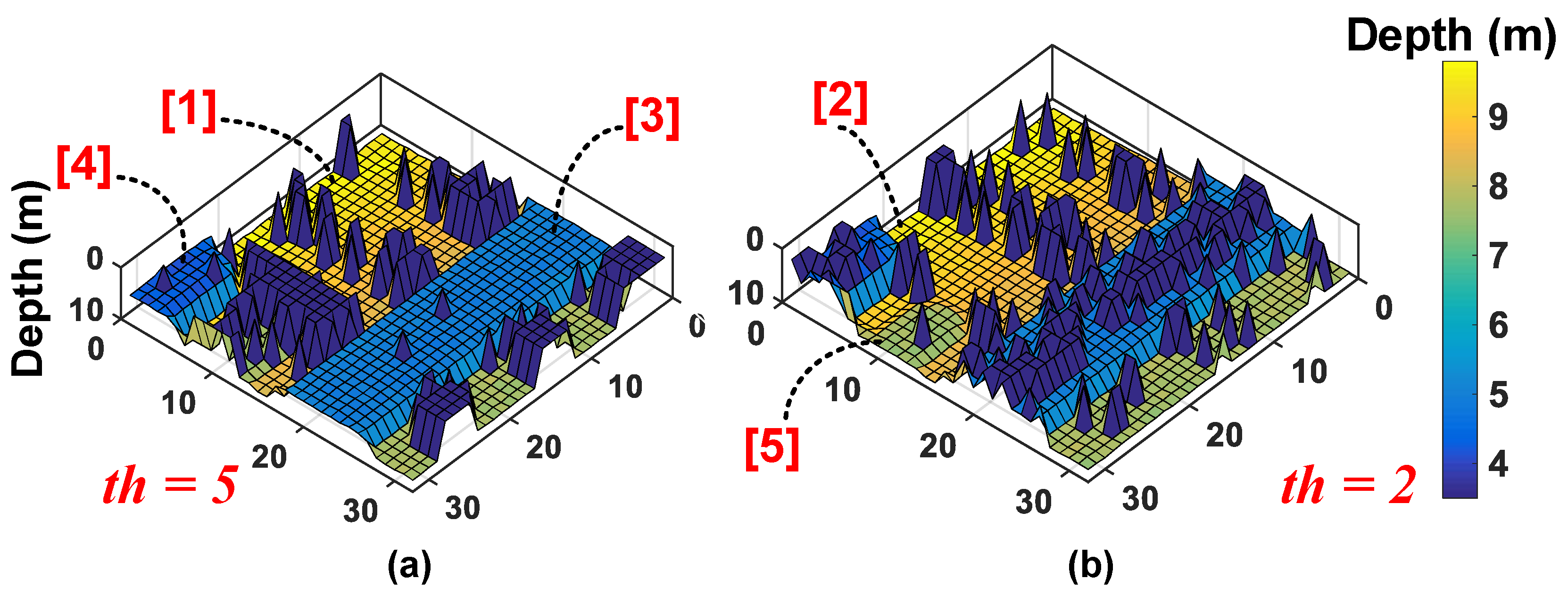

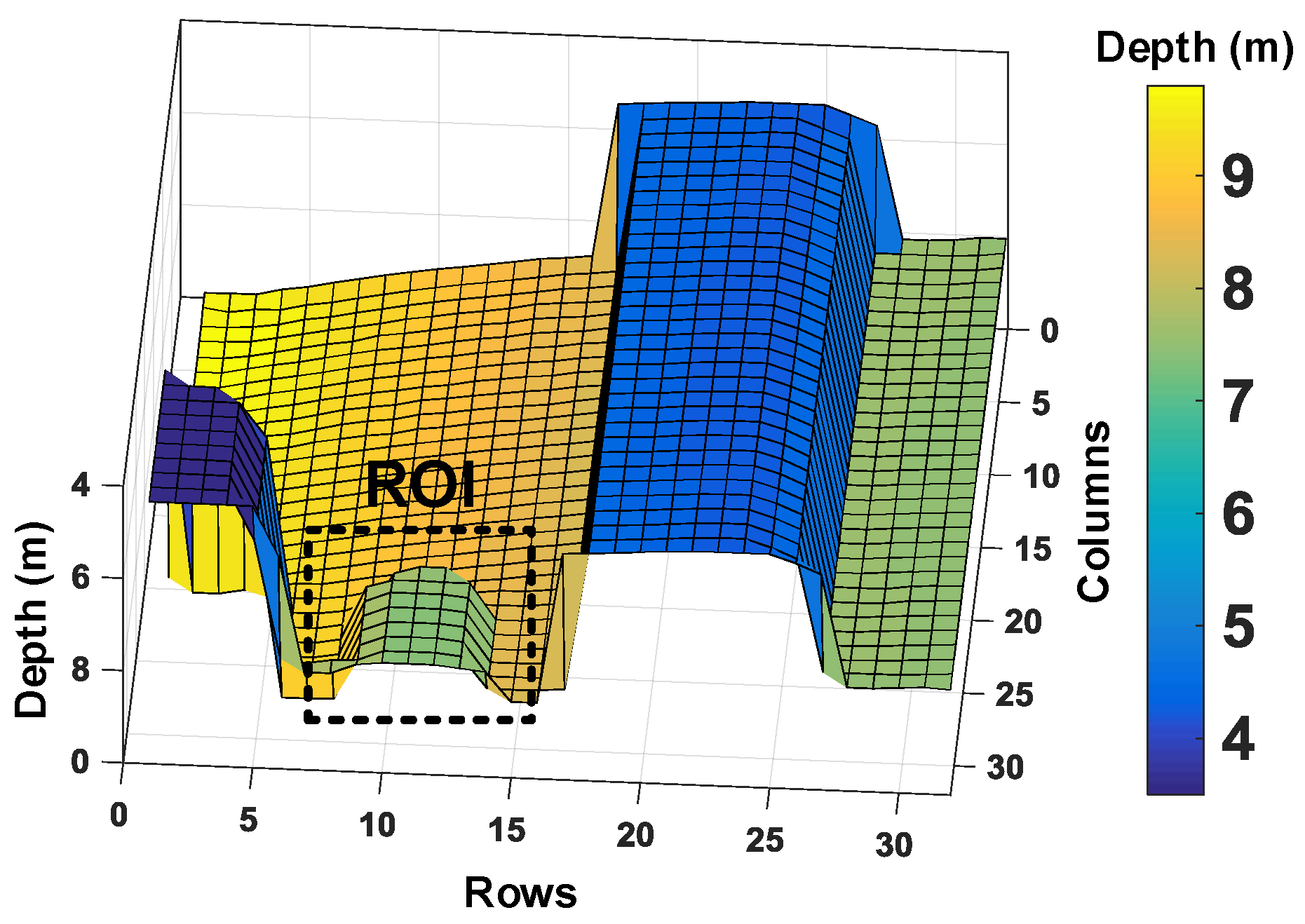

4.2. 3D Imaging with Wide Dynamic Range Targets

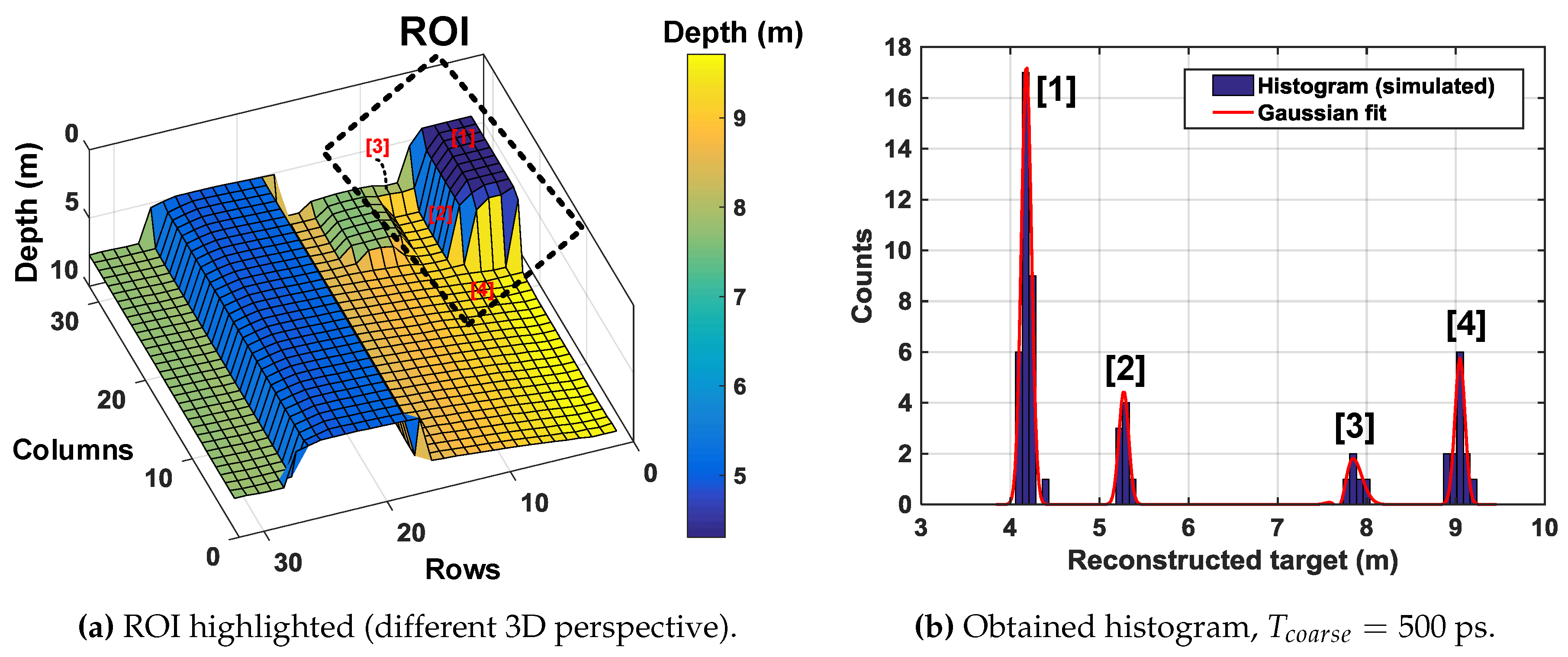

4.3. 3D Imaging and Multiple Timestamping

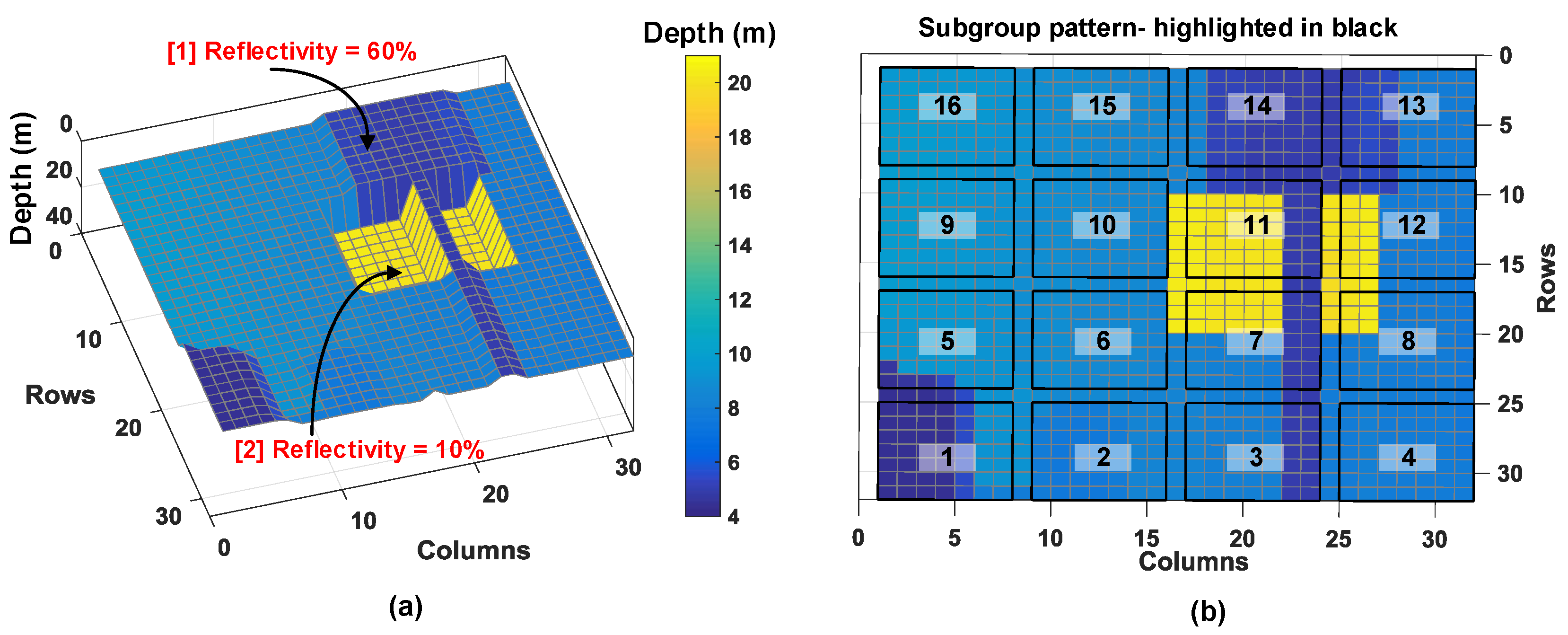

4.4. 3D Imaging and Time-Gating

5. Conclusions and Future Work

6. Patents

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| TOF | time-of-flight |
| LiDAR | light detection and ranging |
| DTOF | direct time-of-flight |
| ITOF | indirect time-of-flight |
| SPAD | single-photon avalanche diode |
| MEMS | micro-electro-mechanical systems |
| TCSPC | time-correlated single-photon counting |
| APDs | avalanche photodiodes |
| SiPM | silicon photomultipliers |
| TDCs | time-to-digital converters |
| SBR | signal-to-background noise ratio |
| FOV | field-of-view |
| VCSEL | vertical cavity surface-emitting laser |
| FWHM | full width at half maximum |
| ROI | region of interest |

Appendix A


| Parameter | Value |
|---|---|
| Average laser power | 20 mW |
| Laser wavelength | 780 nm |
| Repetition rate | 1 MHz |
| Total system FWHM | 530 ps |
| Target reflectivity, r | variable, 8–60% |
| Field-of-view, FOV | 15–40° |
| Background light | variable, 5–100 klux |
| Sensor resolution | 32 × 32 |
| SPAD detector PDP | 10% |
| Pixel fill-factor, FF | 50% |
| Diameter of collecting lens | 11 mm |
| f-number, f# | 1.4 |
| Focal length, f | 15 mm |
| Lens efficiency | 0.8 |
| Optical filter passband | 20 nm |
| Filter efficiency | 0.7 |
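Several derived quantities follow directly from the parameter table. The short script below computes them as a consistency check; it uses only the table values and the speed of light, and the variable names are illustrative.

```python
C = 2.99792458e8  # speed of light [m/s]

# Values from the parameter table
f_rep_hz = 1e6           # repetition rate
p_avg_w = 20e-3          # average laser power
fwhm_s = 530e-12         # total system FWHM
focal_length_mm = 15.0   # focal length, f
lens_diameter_mm = 11.0  # diameter of collecting lens

# Farthest range before an echo arrives after the next pulse is emitted
unambiguous_range_m = C / (2 * f_rep_hz)
# Energy carried by each laser pulse
pulse_energy_j = p_avg_w / f_rep_hz
# System FWHM expressed as a round-trip distance
fwhm_range_m = C * fwhm_s / 2
# f-number N = f / D
f_number = focal_length_mm / lens_diameter_mm

print(f"unambiguous range: {unambiguous_range_m:.1f} m")
print(f"pulse energy:      {pulse_energy_j * 1e9:.1f} nJ")
print(f"FWHM as distance:  {fwhm_range_m * 100:.1f} cm")
print(f"f / D:             {f_number:.2f}")
```

This prints an unambiguous range of 149.9 m, a pulse energy of 20.0 nJ, an FWHM-equivalent distance of 7.9 cm, and f/D = 1.36, which is consistent with the listed f-number of 1.4.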

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Padmanabhan, P.; Zhang, C.; Charbon, E. Modeling and Analysis of a Direct Time-of-Flight Sensor Architecture for LiDAR Applications. Sensors 2019, 19, 5464. https://doi.org/10.3390/s19245464
