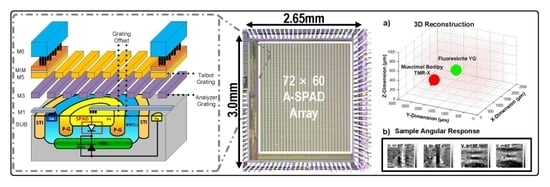

A 72 × 60 Angle-Sensitive SPAD Imaging Array for Lens-less FLIM

Abstract

1. Introduction

1.1. Overview

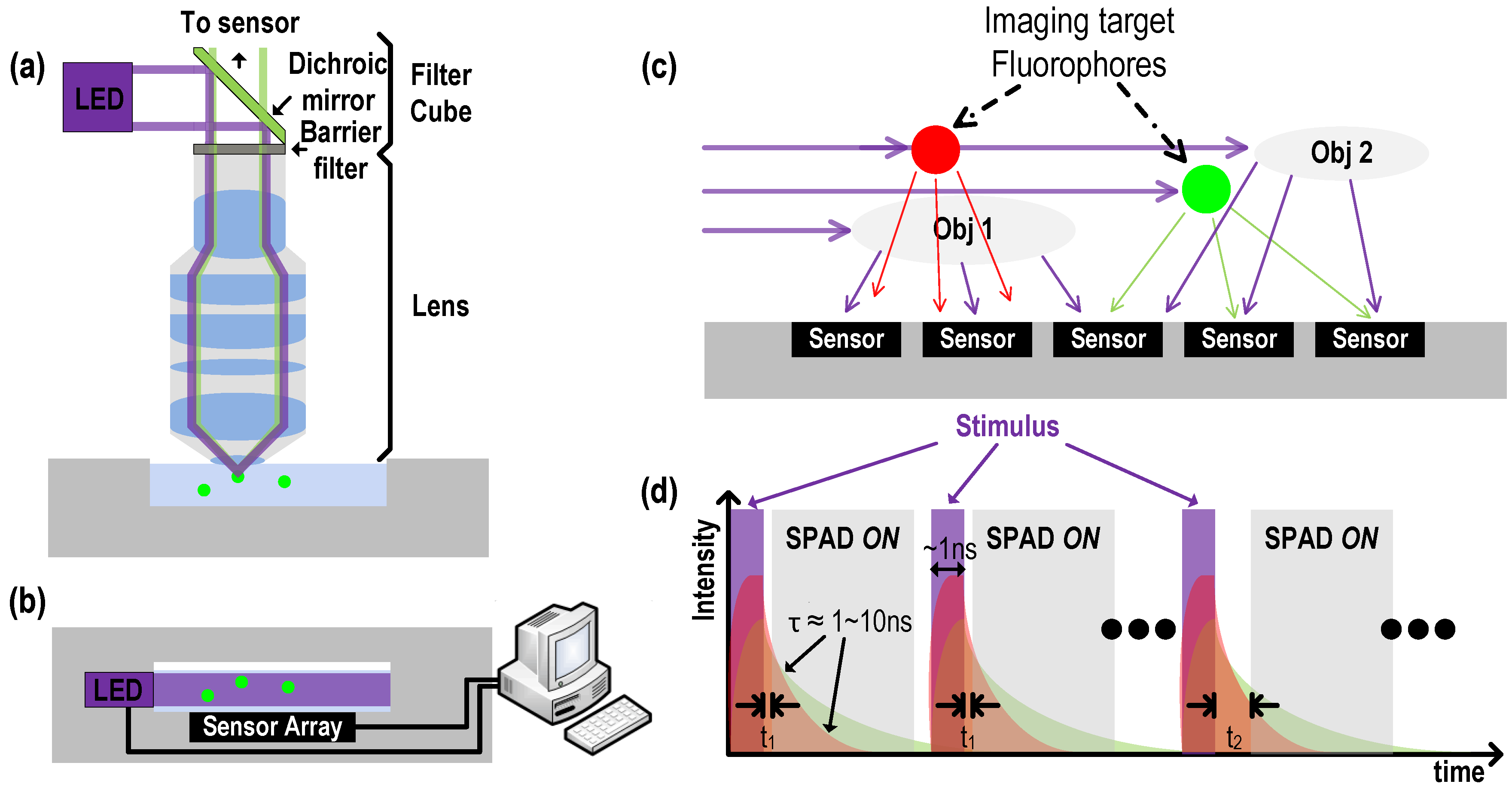

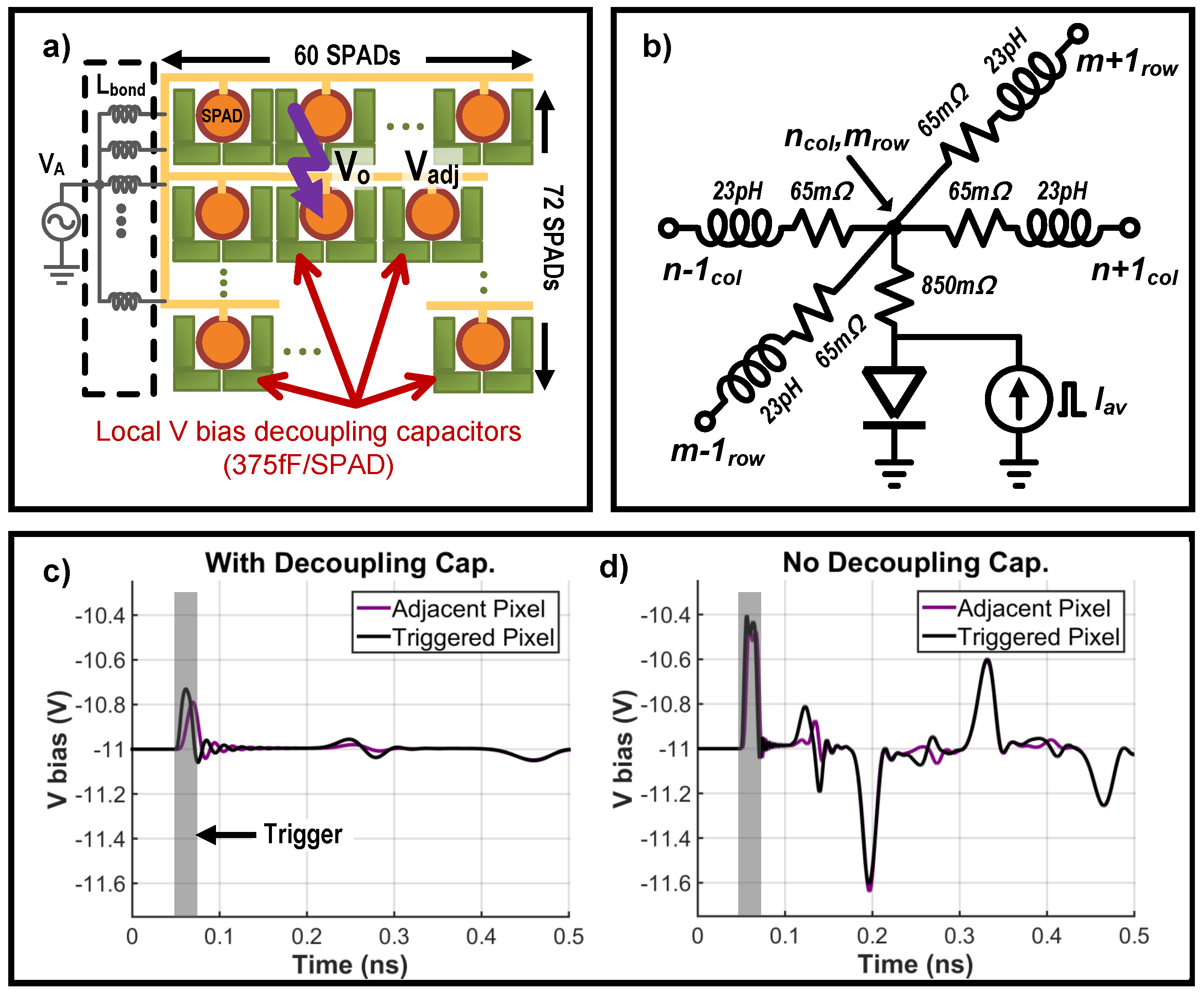

1.2. Replacing Filters: Time-Gated SPAD Drive

1.3. Fluorescence Lifetime Imaging

1.4. Replacing Lenses: Angle Sensitive Pixels

1.5. Angle Sensitive Time Resolved Fluorescence Lifetime Imaging

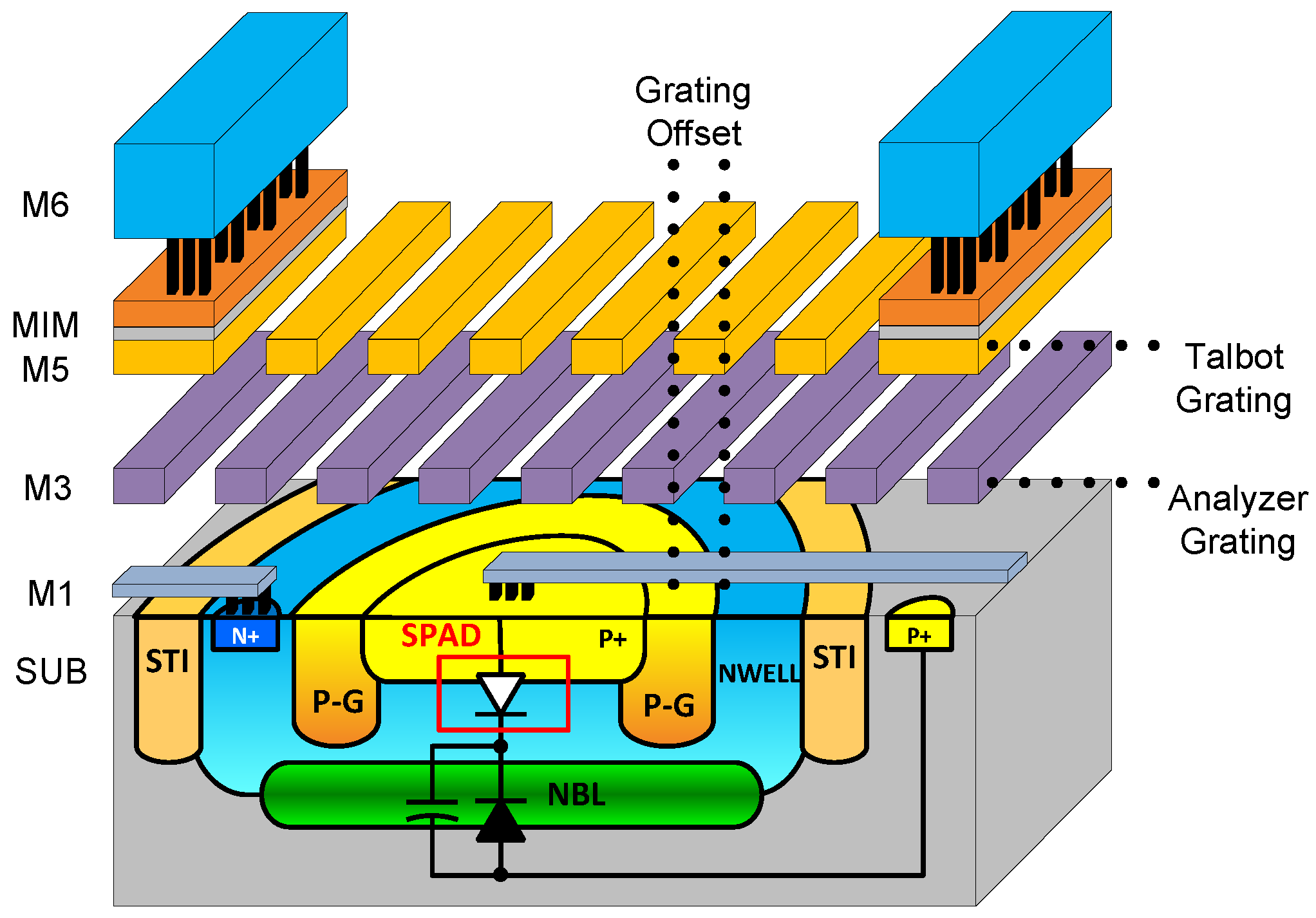

2. Angle-Sensitive Single Photon Avalanche Diode

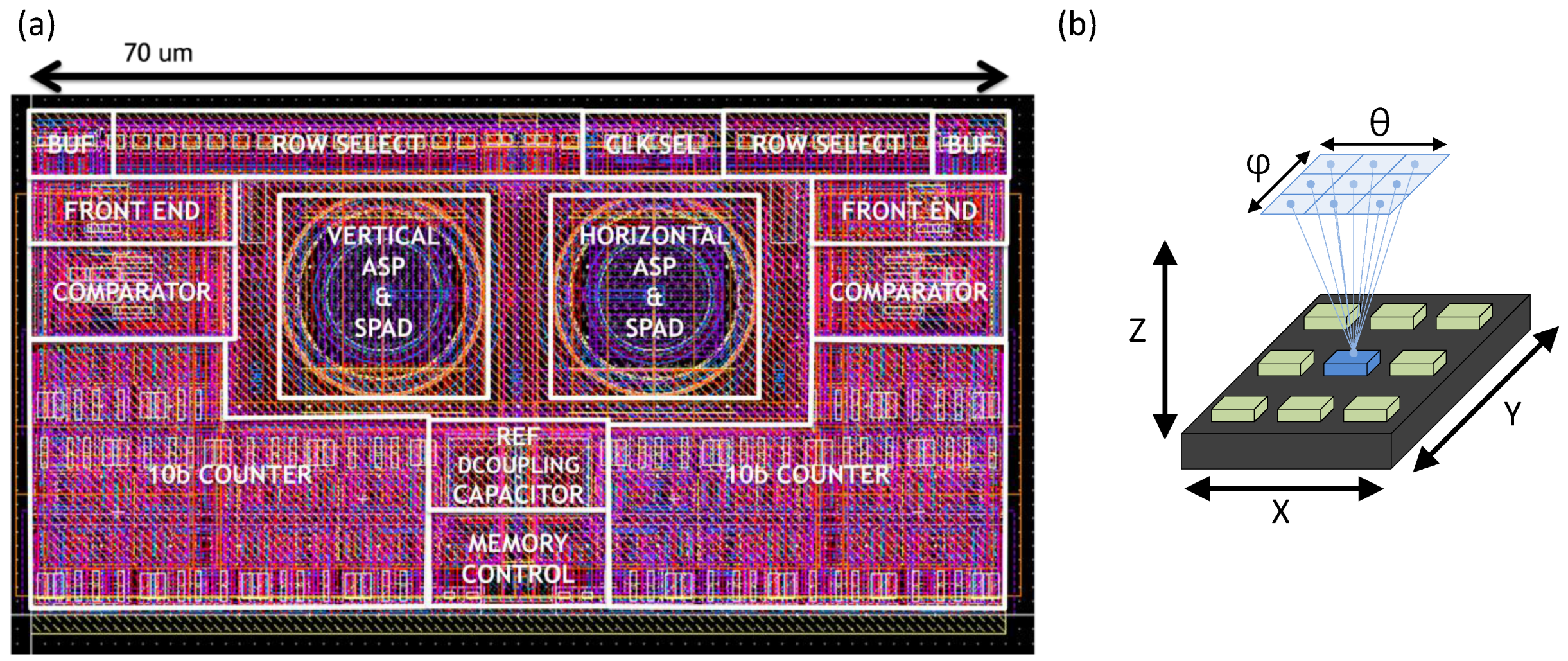

2.1. A-SPAD Pixel Structure and Circuitry

2.2. Readout Circuitry: Comparator and Counter

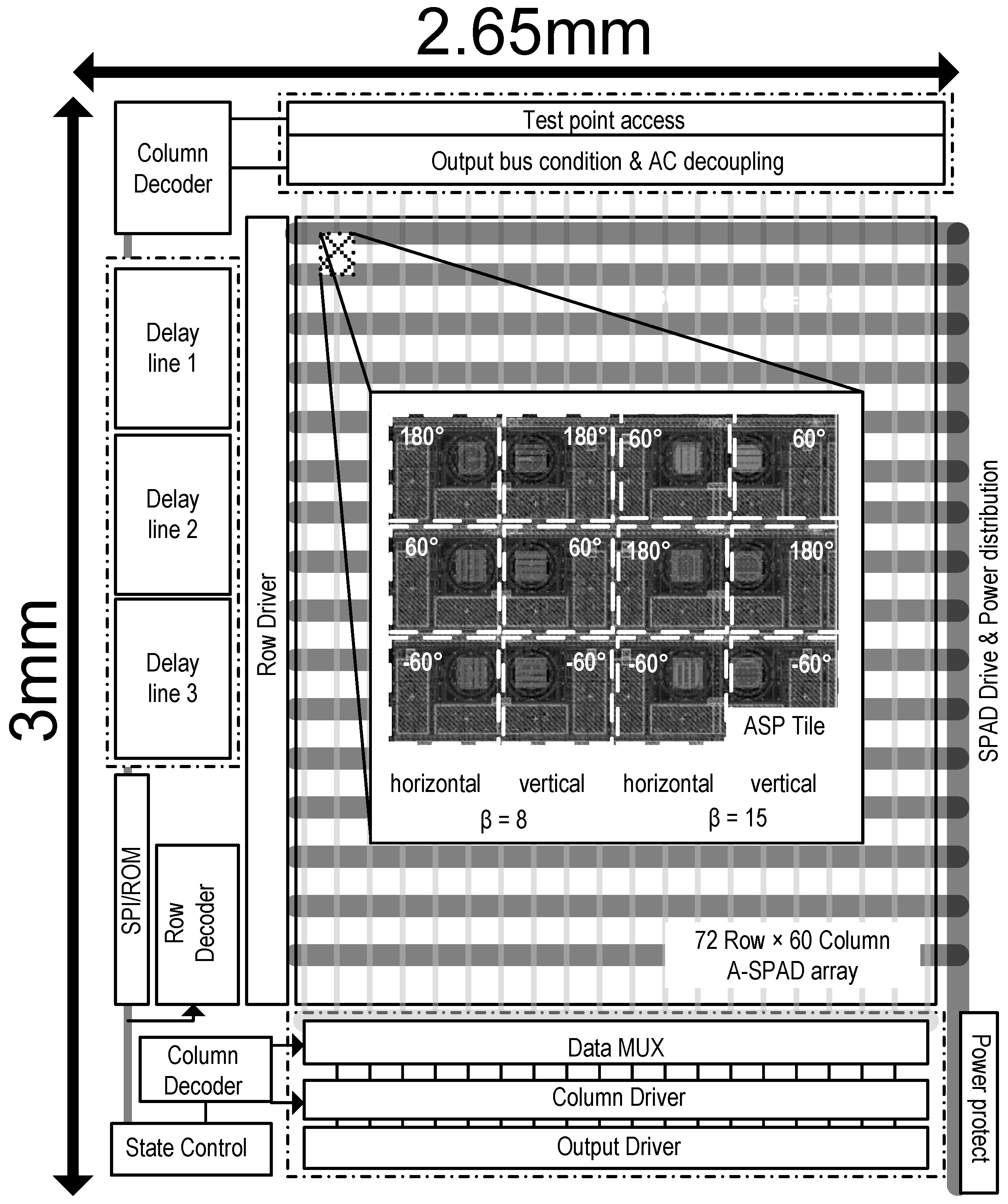

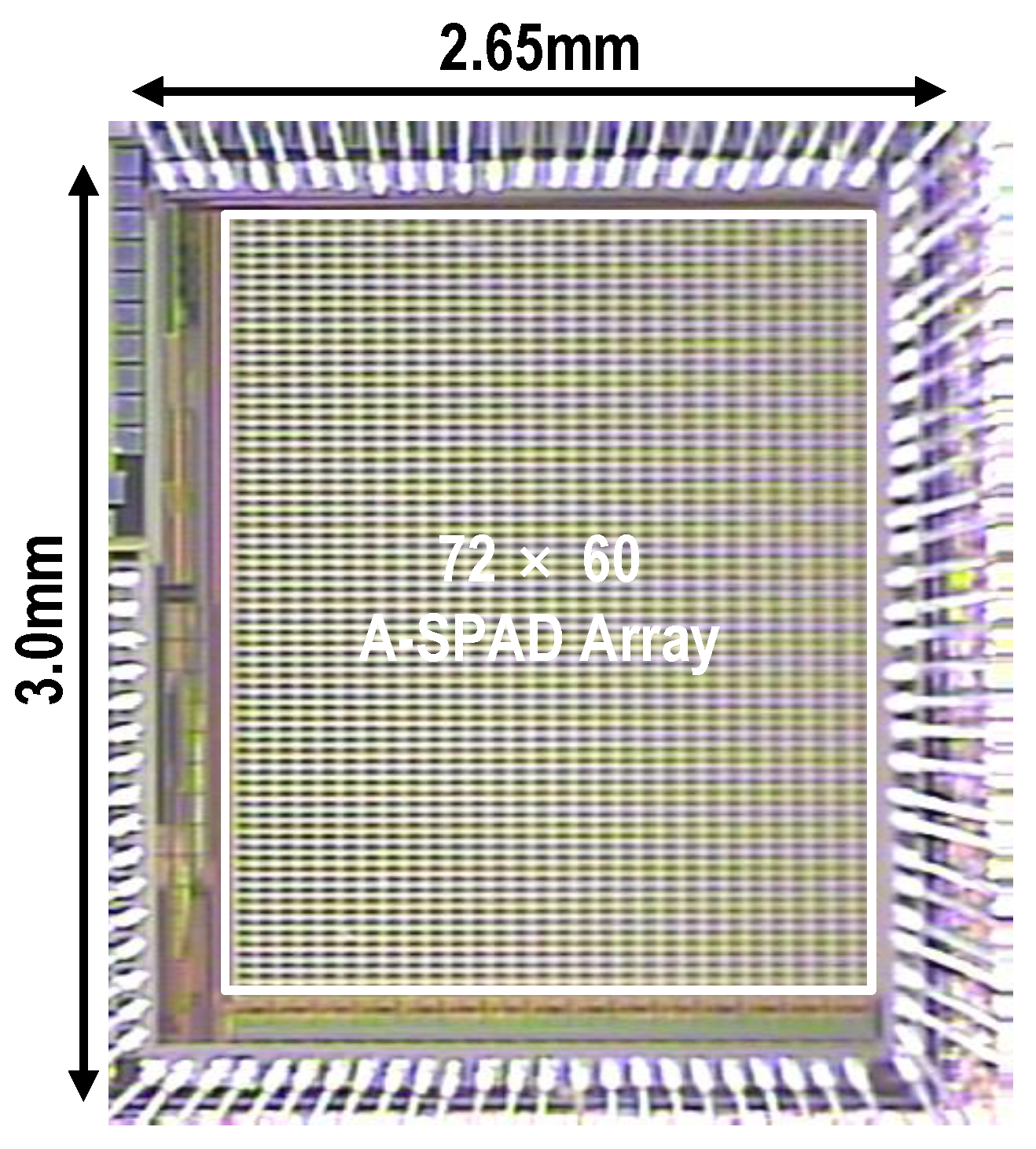

3. Large Scale A-SPAD Array Design

3.1. Power and Area Efficient Lifetime Estimation Approach

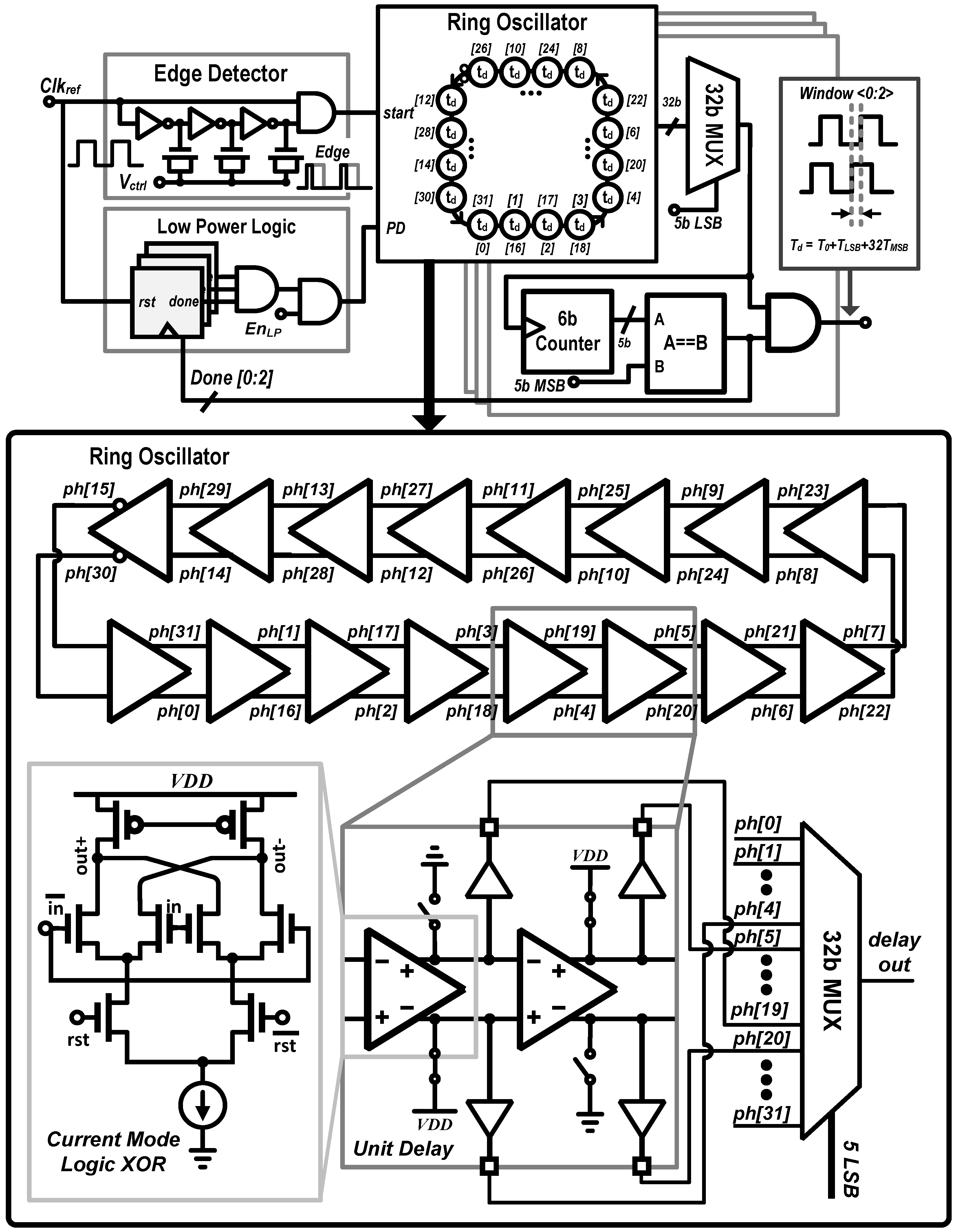

3.2. Digital-to-Time Converter (DTC)

3.3. System Architecture

4. Results

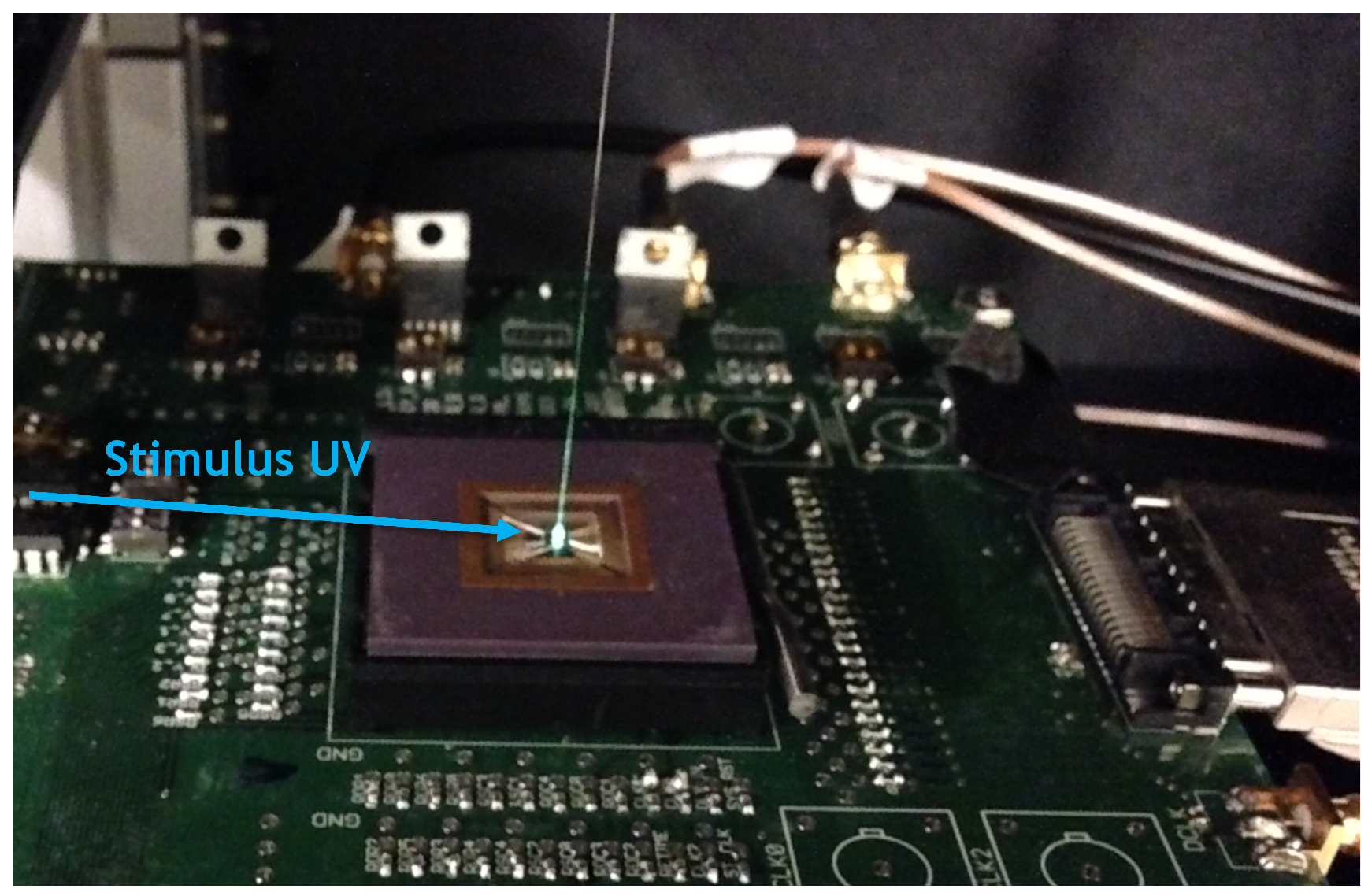

4.1. Measurement Setup

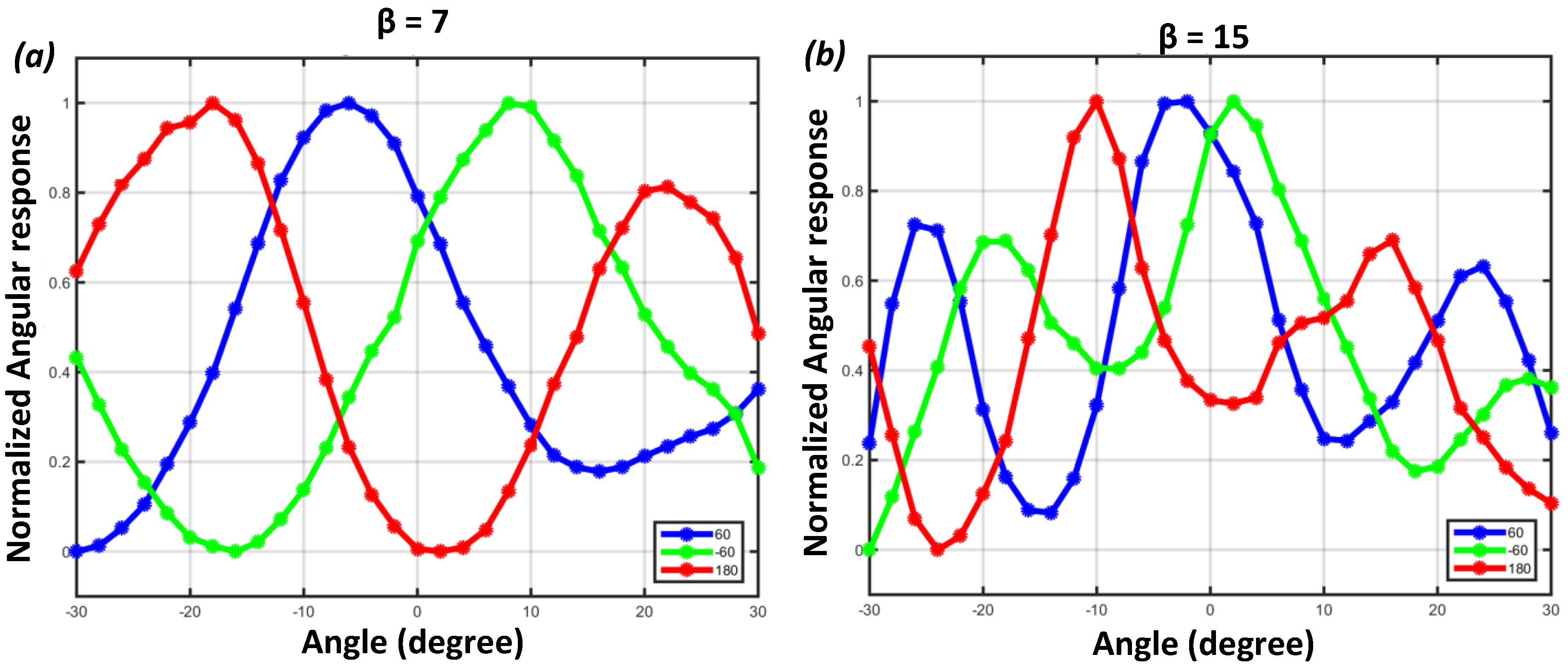

4.2. Angular Sensitivity Response

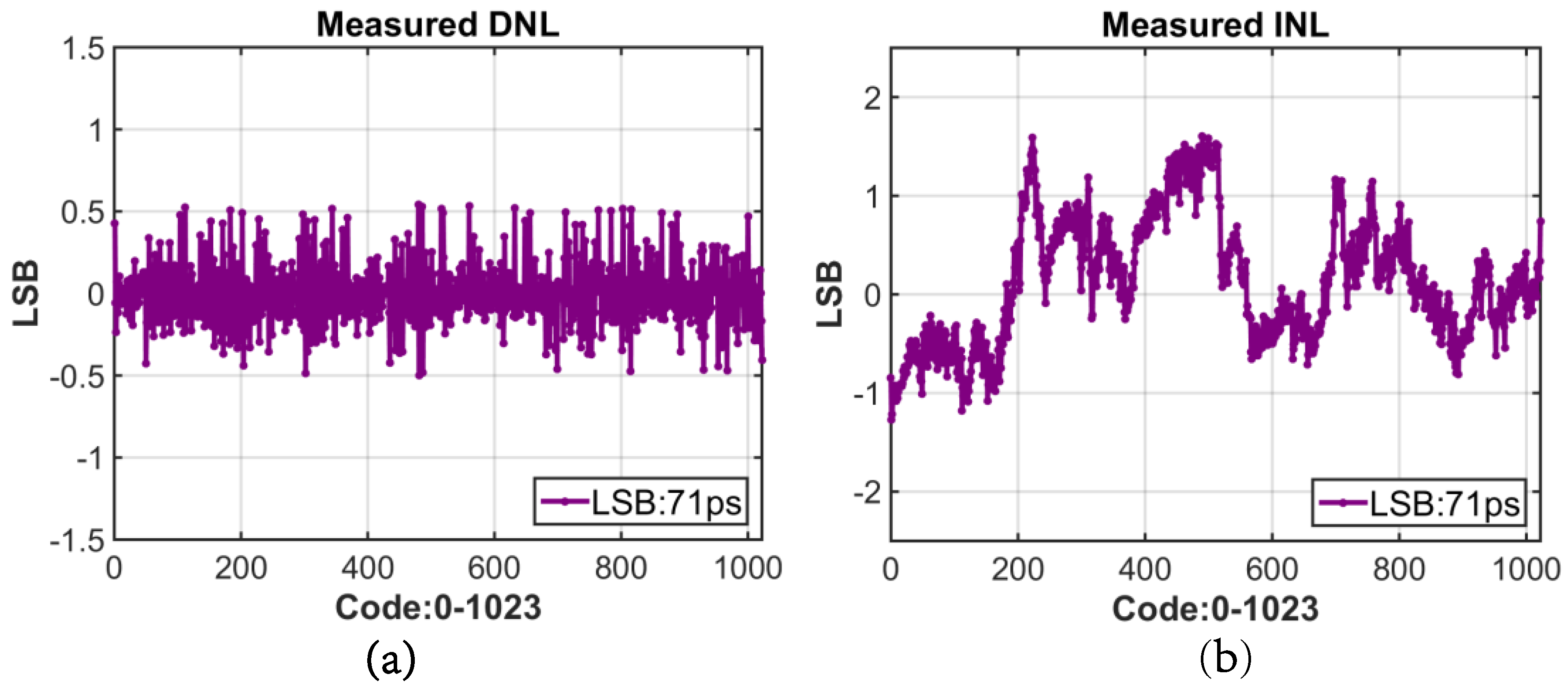

4.3. Digital-to-Time Converter (DTC) Performance

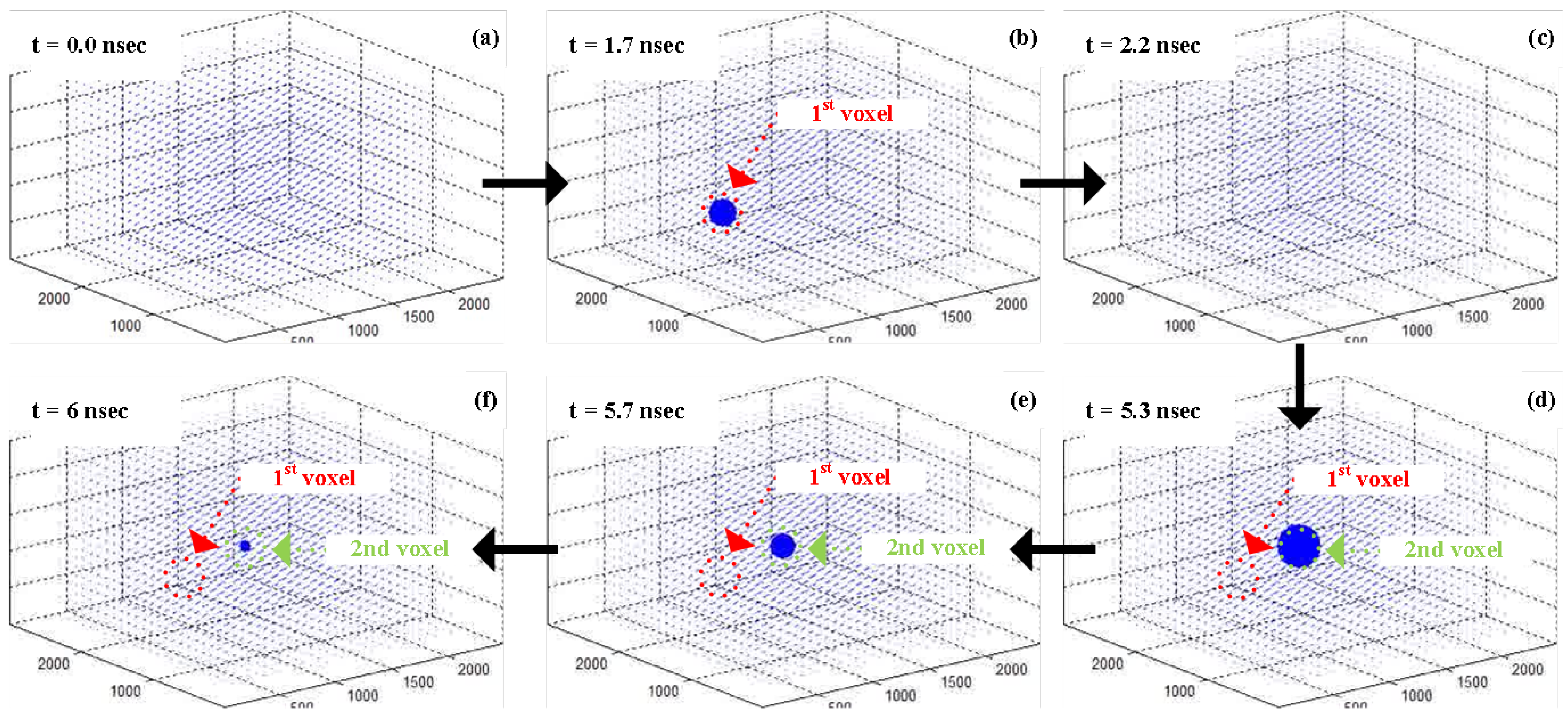

5. 3D Localization Using FLIM

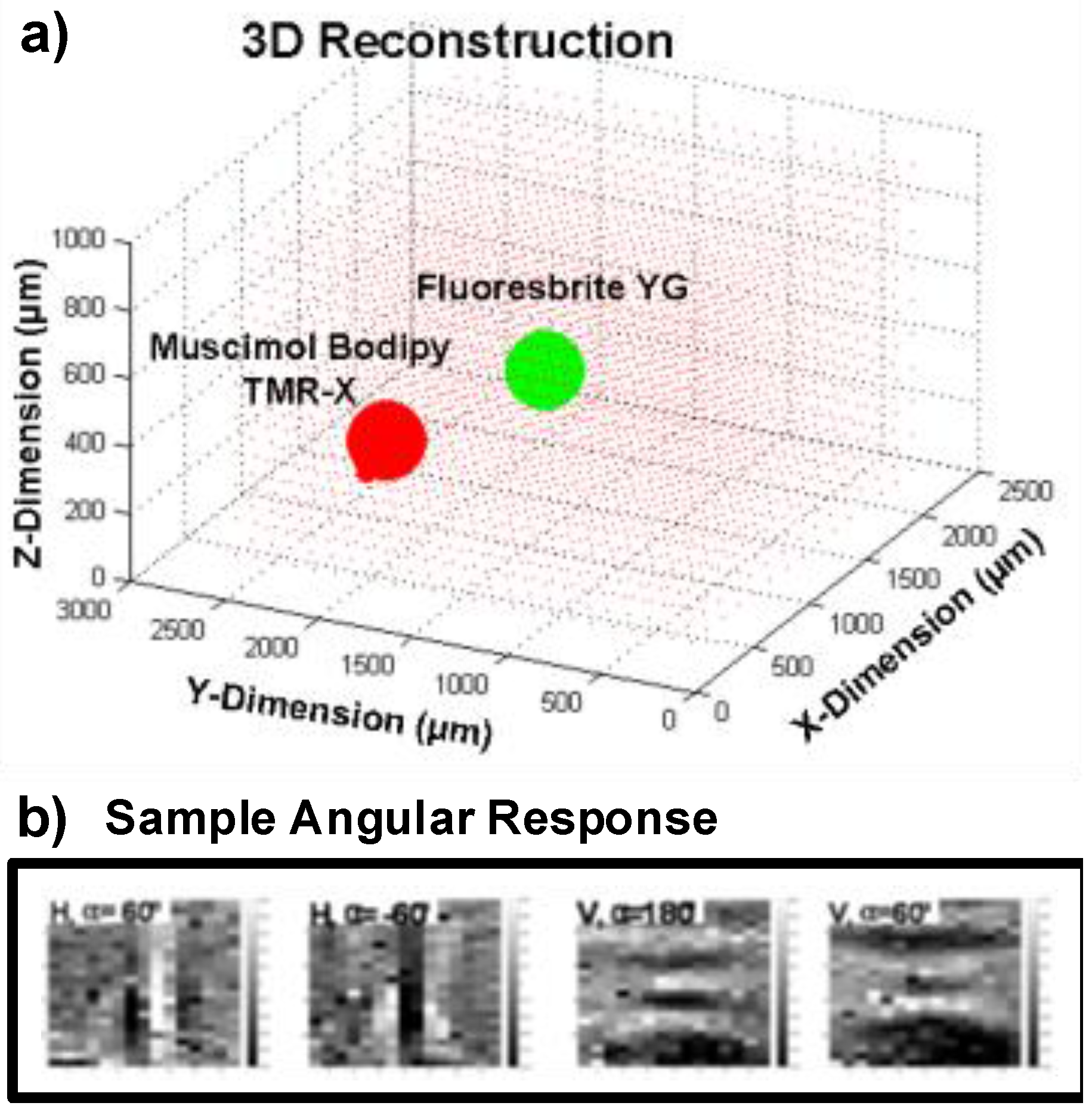

5.1. Measurement Setup

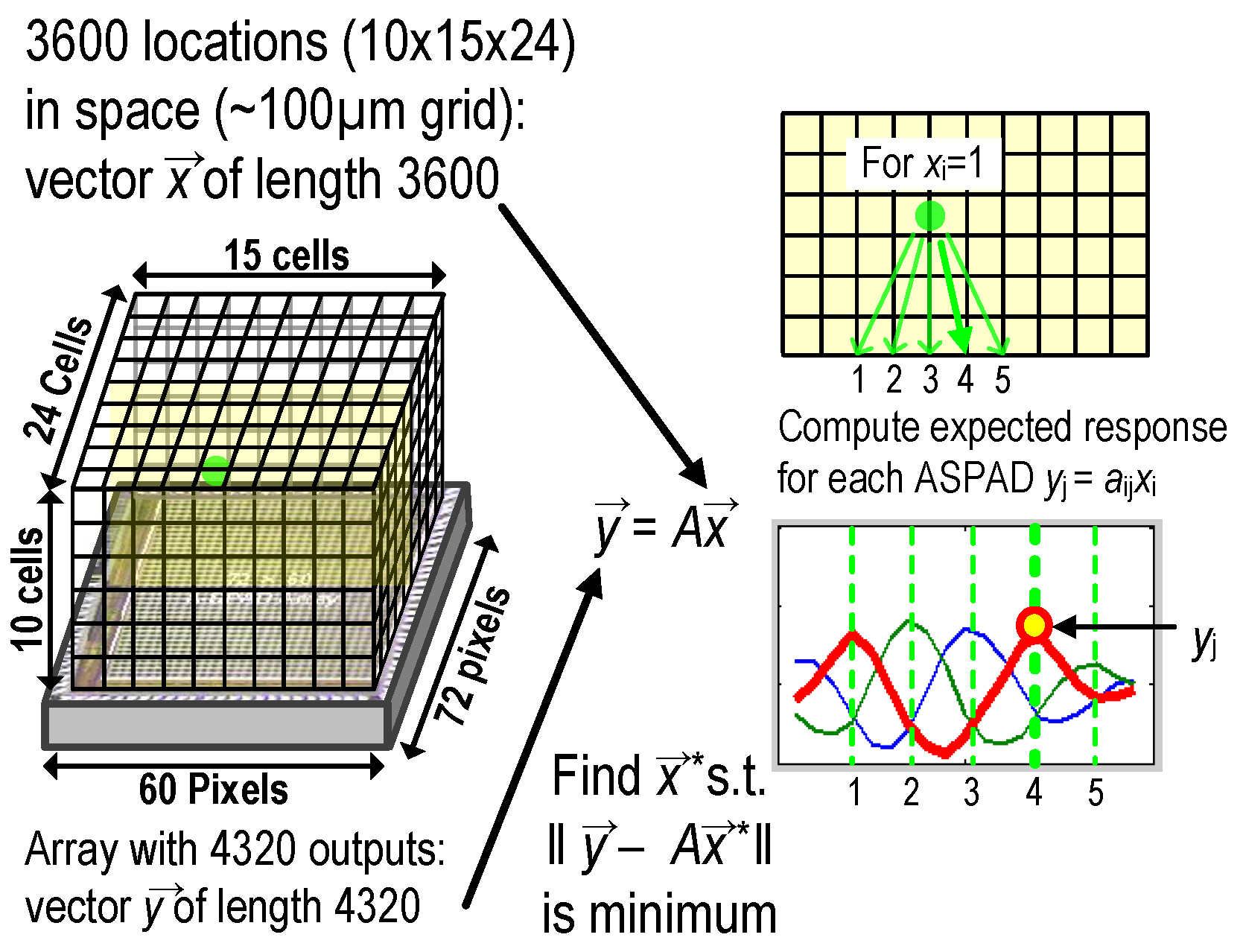

5.2. 3D Localization Based on Angle Sensitivity and Lifetime Information

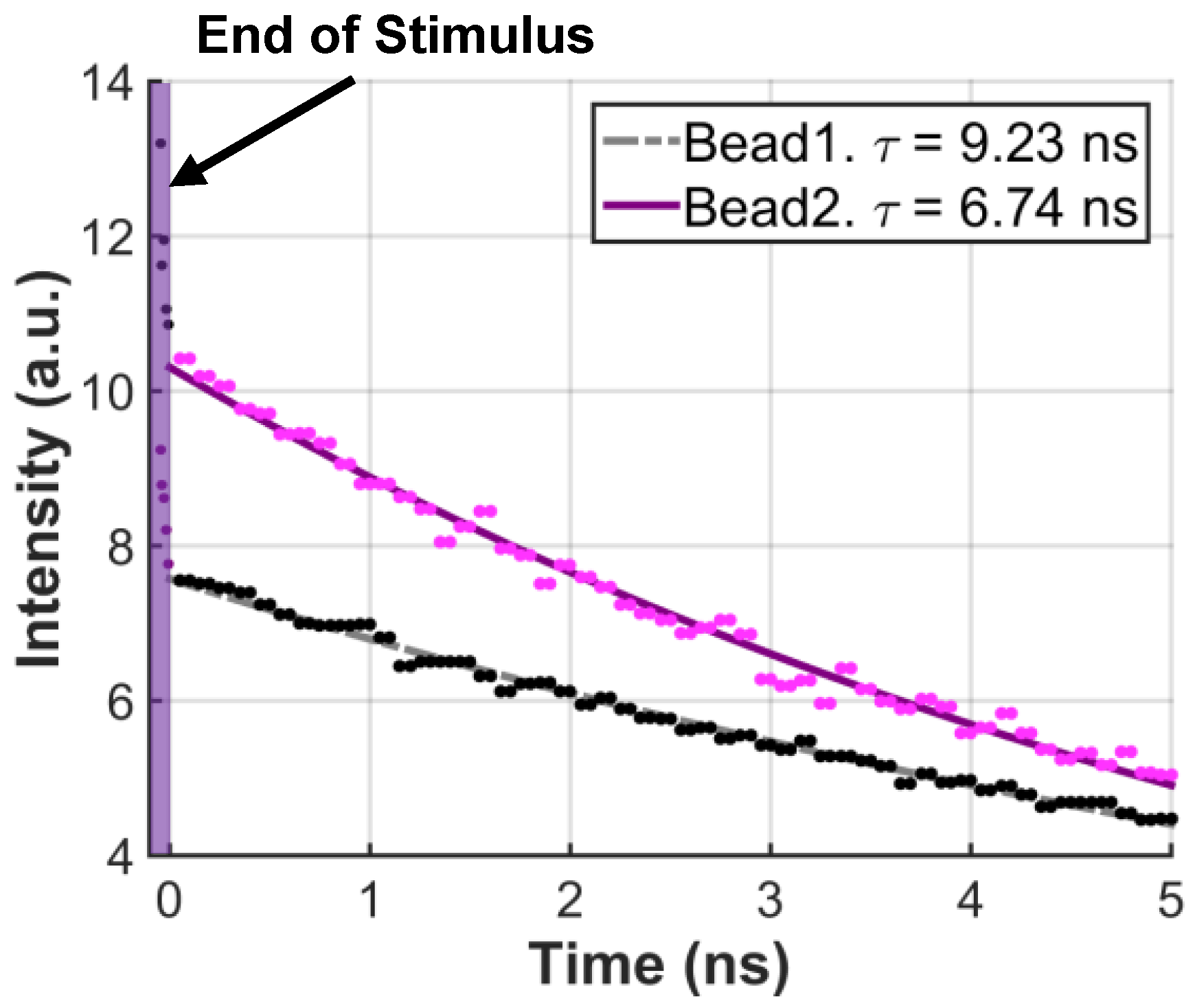

5.3. Fluorescence Lifetime Measurement

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SPAD | Single Photon Avalanche Diode |
| FLIM | Fluorescence Lifetime Imaging Microscopy |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| TCSPC | Time Correlated Single Photon Counting |
| SRAM | Static Random-Access Memory |

References

1. Ghosh, K.K.; Burns, L.D.; Cocker, E.D.; Nimmerjahn, A.; Ziv, Y.; Gamal, A.E.; Schnitzer, M.J. Miniaturized integration of a fluorescence microscope. Nat. Methods 2011, 8, 871–878.

2. Jin, L.; Han, Z.; Platisa, J.; Wooltorton, J.R.A.; Cohen, L.B.; Pieribone, V.A. Single action potentials and subthreshold electrical events imaged in neurons with a novel fluorescent protein voltage probe. Neuron 2012, 75, 779–785.

3. Johnson, B.; Peace, S.T.; Cleland, T.A.; Molnar, A. A 50 µm pitch, 1120-channel, 20 kHz frame rate microelectrode array for slice recording. In Proceedings of the 2013 IEEE Biomedical Circuits and Systems Conference (BioCAS), Rotterdam, The Netherlands, 31 October–2 November 2013; pp. 109–112.

4. Greenbaum, A.; Luo, W.; Su, T.W.; Gorocs, Z.; Xue, L.; Isikman, S.O.; Coskun, A.F.; Mudanyali, O.; Ozcan, A. Imaging without lenses: Achievements and remaining challenges of wide-field on-chip microscopy. Nat. Methods 2012, 9, 889–895.

5. Zheng, G.; Lee, S.A.; Antebi, Y.; Elowitz, M.B.; Yang, C. The ePetri dish, an on-chip cell imaging platform based on subpixel perspective sweeping microscopy (SPSM). Proc. Natl. Acad. Sci. USA 2011, 108, 16889–16894.

6. Monjur, M.; Spinoulas, L.; Gill, P.R.; Stork, D.G. Ultra-miniature, computationally efficient diffractive visual-bar-position sensor. In Proceedings of the 9th International Conference on Sensor Technologies and Applications (SENSORCOMM 2015), Venice, Italy, 24–29 August 2015; pp. 51–56.

7. Kim, T.i.; McCall, J.G.; Jung, Y.H.; Huang, X.; Siuda, E.R.; Li, Y.; Song, J.; Song, Y.M.; Pao, H.A.; Kim, R.H.; et al. Injectable, Cellular-Scale Optoelectronics with Applications for Wireless Optogenetics. Science 2013, 340, 211–216.

8. Zorzos, A.N.; Boyden, E.S.; Fonstad, C.G. Multiwaveguide implantable probe for light delivery to sets of distributed brain targets. Opt. Lett. 2010, 35, 4133–4135.

9. Coskun, A.F.; Sencan, I.; Su, T.W.; Ozcan, A. Lensfree Fluorescent On-Chip Imaging of Transgenic Caenorhabditis elegans Over an Ultra-Wide Field-of-View. PLoS ONE 2011, 6, e15955.

10. Szmacinski, H.; Lakowicz, J.R. Fluorescence lifetime-based sensing and imaging. Sens. Actuators B Chem. 1995, 29, 16–24.

11. Bastiaens, P.I.; Squire, A. Fluorescence lifetime imaging microscopy: Spatial resolution of biochemical processes in the cell. Trends Cell Biol. 1999, 9, 48–52.

12. Hum, J.M.; Siegel, A.P.; Pavalko, F.M.; Day, R.N. Monitoring Biosensor Activity in Living Cells with Fluorescence Lifetime Imaging Microscopy. Int. J. Mol. Sci. 2012, 13, 14385.

13. Tyndall, D.; Rae, B.; Li, D.; Richardson, J.; Arlt, J.; Henderson, R. A 100 Mphoton/s time-resolved mini-silicon photomultiplier with on-chip fluorescence lifetime estimation in 0.13 µm CMOS imaging technology. In Proceedings of the 2012 IEEE International Solid-State Circuits Conference (ISSCC), Digest of Technical Papers, San Francisco, CA, USA, 19–23 February 2012; pp. 122–124.

14. Gersbach, M.; Maruyama, Y.; Trimananda, R.; Fishburn, M.W.; Stoppa, D.; Richardson, J.A.; Walker, R.; Henderson, R.; Charbon, E. A Time-Resolved, Low-Noise Single-Photon Image Sensor Fabricated in Deep-Submicron CMOS Technology. IEEE J. Solid-State Circuits 2012, 47, 1394–1407.

15. Field, R.M.; Shepard, K. A 100-fps fluorescence lifetime imager in standard 0.13-µm CMOS. In Proceedings of the 2013 Symposium on VLSI Circuits (VLSIC), Kyoto, Japan, 11–14 June 2013; pp. C10–C11.

16. Bishara, W.; Sikora, U.; Mudanyali, O.; Su, T.W.; Yaglidere, O.; Luckhart, S.; Ozcan, A. Holographic pixel super-resolution in portable lensless on-chip microscopy using a fiber-optic array. Lab Chip 2011, 11, 1276–1279.

17. Wang, A.; Molnar, A. A Light-Field Image Sensor in 180 nm CMOS. IEEE J. Solid-State Circuits 2012, 47, 257–271.

18. Gill, P.R.; Lee, C.; Lee, D.G.; Wang, A.; Molnar, A. A microscale camera using direct Fourier-domain scene capture. Opt. Lett. 2011, 36, 2949–2951.

19. Wang, A.; Gill, P.; Molnar, A. Fluorescent imaging and localization with angle sensitive pixel arrays in standard CMOS. In Proceedings of the 2010 IEEE Sensors Conference, Waikoloa, HI, USA, 1–4 November 2010; pp. 1706–1709.

20. Levoy, M.; Ng, R.; Adams, A.; Footer, M.; Horowitz, M. Light Field Microscopy. ACM Trans. Graph. 2006, 25, 924–934.

21. Lee, C.; Molnar, A. Self-quenching, Forward-bias-reset for Single Photon Avalanche Detectors in 1.8 V, 0.18-µm process. In Proceedings of the 2011 IEEE International Symposium on Circuits and Systems (ISCAS), Rio de Janeiro, Brazil, 15–19 May 2011; pp. 2217–2220.

22. Lee, C.; Johnson, B.; Molnar, A. Angle sensitive single photon avalanche diode. Appl. Phys. Lett. 2015, 106, 231105.

23. Burri, S.; Maruyama, Y.; Michalet, X.; Regazzoni, F.; Bruschini, C.; Charbon, E. Architecture and applications of a high resolution gated SPAD image sensor. Opt. Express 2014, 22, 17573–17589.

24. Field, R.; Realov, S.; Shepard, K. A 100 fps, Time-Correlated Single-Photon-Counting-Based Fluorescence-Lifetime Imager in 130 nm CMOS. IEEE J. Solid-State Circuits 2014, 49, 867–880.

25. Veerappan, C.; Richardson, J.; Walker, R.; Li, D.U.; Fishburn, M.; Maruyama, Y.; Stoppa, D.; Borghetti, F.; Gersbach, M.; Henderson, R.; et al. A 160 × 128 single-photon image sensor with on-pixel 55 ps 10 b time-to-digital converter. In Proceedings of the 2011 IEEE International Solid-State Circuits Conference, Digest of Technical Papers (ISSCC), San Francisco, CA, USA, 20–24 February 2011; pp. 312–314.

26. Homulle, H.A.R.; Powolny, F.; Stegehuis, P.L.; Dijkstra, J.; Li, D.U.; Homicsko, K.; Rimoldi, D.; Muehlethaler, K.; Prior, J.O.; Sinisi, R.; et al. Compact solid-state CMOS single-photon detector array for in vivo NIR fluorescence lifetime oncology measurements. Biomed. Opt. Express 2016, 7, 1797–1814.

27. Dutton, N.A.W.; Gyongy, I.; Parmesan, L.; Henderson, R.K. Single Photon Counting Performance and Noise Analysis of CMOS SPAD-Based Image Sensors. Sensors 2016, 16, 1122.

28. Leonard, J.; Dumas, N.; Causse, J.P.; Maillot, S.; Giannakopoulou, N.; Barre, S.; Uhring, W. High-throughput time-correlated single photon counting. Lab Chip 2014, 14, 4338–4343.

29. Harris, C.; Selinger, B. Single-Photon Decay Spectroscopy. II. The Pile-up Problem. Aust. J. Chem. 1979, 32, 2111–2129.

30. Nie, K.; Wang, X.; Qiao, J.; Xu, J. A Full Parallel Event Driven Readout Technique for Area Array SPAD FLIM Image Sensors. Sensors 2016, 16, 160.

31. Malass, I.; Uhring, W.; Le Normand, J.P.; Dumas, N.; Dadouche, F. Efficiency improvement of high rate integrated time correlated single photon counting systems by incorporating an embedded FIFO. In Proceedings of the 13th International New Circuits and Systems Conference (NEWCAS), Grenoble, France, 7–10 June 2015; pp. 1–4.

32. Ballew, R.M.; Demas, J.N. An error analysis of the rapid lifetime determination method for the evaluation of single exponential decays. Anal. Chem. 1989, 61, 30–33.

33. Grant, D.M.; McGinty, J.; McGhee, E.J.; Bunney, T.D.; Owen, D.M.; Talbot, C.B.; Zhang, W.; Kumar, S.; Munro, I.; Lanigan, P.M.; et al. High speed optically sectioned fluorescence lifetime imaging permits study of live cell signaling events. Opt. Express 2007, 15, 15656–15673.

34. Chan, S.P.; Fuller, Z.J.; Demas, J.N.; DeGraff, B.A. Optimized Gating Scheme for Rapid Lifetime Determinations of Single-Exponential Luminescence Lifetimes. Anal. Chem. 2001, 73, 4486–4490.

35. Li, D.D.U.; Ameer-Beg, S.; Arlt, J.; Tyndall, D.; Walker, R.; Matthews, D.R.; Visitkul, V.; Richardson, J.; Henderson, R.K. Time-Domain Fluorescence Lifetime Imaging Techniques Suitable for Solid-State Imaging Sensor Arrays. Sensors 2012, 12, 5650–5669.

36. Villa, F.; Markovic, B.; Bellisai, S.; Bronzi, D.; Tosi, A.; Zappa, F.; Tisa, S.; Durini, D.; Weyers, S.; Paschen, U.; et al. SPAD Smart Pixel for Time-of-Flight and Time-Correlated Single-Photon Counting Measurements. IEEE Photonics J. 2012, 4, 795–804.

37. Maruyama, Y.; Blacksberg, J.; Charbon, E. A 1024 × 8, 700-ps Time-Gated SPAD Line Sensor for Planetary Surface Exploration with Laser Raman Spectroscopy and LIBS. IEEE J. Solid-State Circuits 2014, 49, 179–189.

38. De Grauw, C.J.; Gerritsen, H.C. Multiple Time-Gate Module for Fluorescence Lifetime Imaging. Appl. Spectrosc. 2001, 55, 670–678.

39. Webster, E.; Richardson, J.; Grant, L.; Renshaw, D.; Henderson, R. A Single-Photon Avalanche Diode in 90-nm CMOS Imaging Technology With 44% Photon Detection Efficiency at 690 nm. IEEE Electron Device Lett. 2012, 33, 694–696.

40. Mizuno, M.; Yamashina, M.; Furuta, K.; Igura, H.; Abiko, H.; Okabe, K.; Ono, A.; Yamada, H. A GHz MOS adaptive pipeline technique using MOS current-mode logic. IEEE J. Solid-State Circuits 1996, 31, 784–791.

41. Gill, P.R.; Wang, A.; Molnar, A. The In-Crowd Algorithm for Fast Basis Pursuit Denoising. IEEE Trans. Signal Process. 2011, 59, 4595–4605.

42. Landy, M.; Movshon, J.A. The Plenoptic Function and the Elements of Early Vision. In Computational Models of Visual Processing; MIT Press: Cambridge, MA, USA, 1991; pp. 3–20.

43. Maruyama, Y.; Charbon, E. An all-digital, time-gated 128 × 128 SPAD array for on-chip, filter-less fluorescence detection. In Proceedings of the 16th International Solid-State Sensors, Actuators and Microsystems Conference (TRANSDUCERS), Beijing, China, 5–9 June 2011; pp. 1180–1183.

44. Parmesan, L.; Dutton, N.; Calder, N.; Holmes, A.; Grant, L.; Henderson, R. A 9.8 µm sample and hold time to amplitude converter CMOS SPAD pixel. In Proceedings of the 44th European Solid State Device Research Conference (ESSDERC), Venice, Italy, 22–26 September 2014; pp. 290–293.

45. Vitali, M.; Bronzi, D.; Krmpot, A.; Nikolic, S.; Schmitt, F.J.; Junghans, C.; Tisa, S.; Friedrich, T.; Vukojevic, V.; Terenius, L.; et al. A Single-Photon Avalanche Camera for Fluorescence Lifetime Imaging Microscopy and Correlation Spectroscopy. IEEE J. Sel. Top. Quan. 2014, 20, 344–353.

| | This Work | [14] | [43] | [24] | [44] | [45] |
|---|---|---|---|---|---|---|
| PDP (Max %) @ VE | 2.72 † @ 1.2 VE | 25 @ 1 VE | 20 @ 3 VE | 30 @ 1.5 VE | - | 40 @ 5 VE |
| Pixel Size | 35 µm × 35 µm | 50 µm × 50 µm | 25 µm × 25 µm | 48 µm × 48 µm | 8 µm × 8 µm | 100 µm × 100 µm |
| Fill Factor | 14.4% | 2% | 4.5% | 0.77% | 19.63% | 3.14% |
| DCR (kHz) @ VE | 0.404 @ 1.2 VE | 0.1 @ 1 VE | 0.078 @ 3 VE | 0.54 @ 2.5 VE | 0.05 @ 2 VE | 4 @ 5 VE |
| Array Format | 72 × 60 | 32 × 32 | 128 × 128 | 64 × 64 | 256 × 256 | 32 × 32 |
| Chip Size | 2.64 mm × 3 mm | 4.8 mm × 3.2 mm | 25 mm × 25 mm | 9.1 mm × 4.2 mm | 3.5 mm × 3.1 mm | 3.5 mm × 3.5 mm |
| Power | 83.8 mW | 90 mW | 363 mW | 26.4 W | - | - |
| Process | 180 nm | CIS 130 nm | 350 nm | 130 nm | CIS 130 nm | 350 nm |
| Lifetime Method | Time-Gated (DTC) | TDC | Time-Gated | TDC | Time-Gated (TAC) | Time-Gated |
| Resolution (LSB) | <71 ps | 119 ps | 200 ps | 62.5 ps | 6.66 ps | - |
| Max Range | 72.7 ns | 100 ns | 9.6 ns | 64 ns | 50 ns | - |
| DNL (LSB) | 0.54 | 0.4 | - | 4 | 3.5 | - |
| INL (LSB) | 1.27 | 1.2 | - | 8 | - | - |
| Application | 3D FLIM | FLIM | FLIM | FLIM | FLIM | FLIM |
| Lens | No | Yes | Yes | - | Yes | Yes |
| Filter | No | Yes | No | Yes | - | Yes |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, C.; Johnson, B.; Jung, T.; Molnar, A. A 72 × 60 Angle-Sensitive SPAD Imaging Array for Lens-less FLIM. Sensors 2016, 16, 1422. https://doi.org/10.3390/s16091422
