Gait Characteristic Analysis and Identification Based on the iPhone’s Accelerometer and Gyrometer

Abstract

Gait identification is a valuable approach to identifying humans at a distance. In this paper, gait characteristics are analyzed based on an iPhone's accelerometer and gyrometer, and a new approach is proposed for gait identification. Specifically, gait datasets are collected by the triaxial accelerometer and gyrometer embedded in an iPhone. The datasets are then processed to extract gait characteristic parameters, which include gait frequency, symmetry coefficient, dynamic range and the similarity coefficient of characteristic curves. Finally, a weighted voting scheme based on these gait characteristic parameters is proposed for gait identification. Four experiments are conducted to validate the proposed scheme. The attitude and acceleration solutions are verified by simulation. The gait characteristics are then analyzed by comparing two sets of actual data, and the performance of the weighted voting identification scheme is verified with 40 datasets from 10 subjects.

1. Introduction

Gait refers to an idiosyncratic feature of a person that is determined by an individual's weight, limb length and posture combined with characteristic motion [1], among other factors. Gait may also reflect a person's health condition [2,3], such as symptoms of cerebral palsy and Parkinson's disease. Hence, gait can be used as a biometric measure to recognize known persons and classify unknown subjects [1]. Gait identification allows nonintrusive data collection from a distance. It can provide supplemental information to traditional approaches (e.g., fingerprint, iris, face, voice) in security and usability applications, thereby enhancing or complementing them.

At present, gait analysis approaches can be classified into two categories: non-wearable sensor (NWS) based and wearable sensor (WS) based [4]. NWS systems usually rely on optical sensors [5–8] or pressure sensors on the floor [9]; in optical systems, gait features are extracted by processing image or video data. In security surveillance, this approach can effectively narrow the range of candidate subjects in a gait database and therefore plays an important role. WS systems are based on motion-recording sensors attached to the moving subject [10–16]. These sensors (e.g., accelerometers, gyrometers, force sensors and bend sensors) are usually attached to parts of the body such as the ankle, hip and waist [12,16]. Gait signatures are then extracted by analyzing the recorded signals in both the time and frequency domains. With the development of Microelectromechanical Systems (MEMS), devices such as Inertial Measurement Units (IMU) are easily embedded in shoes, gloves, watches and other wearable attire. The prospects of applying wearable sensors to gait analysis are discussed in [12–15].

Smartphones, nowadays widely equipped with MEMS accelerometers and gyrometers to determine device orientation, provide developers and users with a rich and easily accessible platform for exploration. Many applications have been developed based on smartphone sensors. An iPhone is used as a wireless accelerometer for Parkinson's disease tremor analysis in [17], and the feasibility of gait identification using an iPhone's accelerometer is discussed in [18]. However, current studies of gait analysis based on fixed smartphone sensors mostly use data that include gravitational acceleration, without considering the effects of attitude change. Our goal is to analyze gait characteristics and perform identification with an unfixed iPhone in a pant pocket, instead of professional sensors under laboratory conditions. In this case, the attitude of the iPhone must be taken into account to minimize the effects of attitude change and gravitational acceleration. After extracting gait information, a voting scheme is investigated for gait identification.

This paper is organized as follows. In Section 2, pre-processing methods for iPhone inertial sensor data are introduced. In Section 3, gait characteristics are analyzed and methods are studied to extract gait parameters from accelerometer and gyrometer data. In Section 4, a weighted voting scheme based on gait signatures is proposed for gait identification. In Section 5, validation experiments are conducted for gait analysis and identification using both simulated data and actually collected data.

2. Pre-Processing of iPhone Measurement Data

An iPhone integrates several kinds of sensors, such as a GPS receiver, accelerometer, gyrometer and magnetometer. Theoretically, the motion of an iPhone (including its acceleration, velocity and position) can be solved from the sensor data. However, experiments show that the precision of iPhone sensors [19] can hardly support accurate solutions of device velocity and position, especially for short-distance measurements. According to the operating principles of iPhone accelerometers, the collected data are expressed in the device's own inertial coordinate system and include gravitational acceleration. Because the attitude of the iPhone keeps changing while an individual's gait data are being collected, processing the measured acceleration data directly is not accurate. In this section, a pre-processing approach is studied to obtain the linear acceleration, which excludes gravitational acceleration. This is achieved by defining a reference coordinate system whose Z-axis points opposite to the direction of gravity.

2.1. Inertial Data Calibration

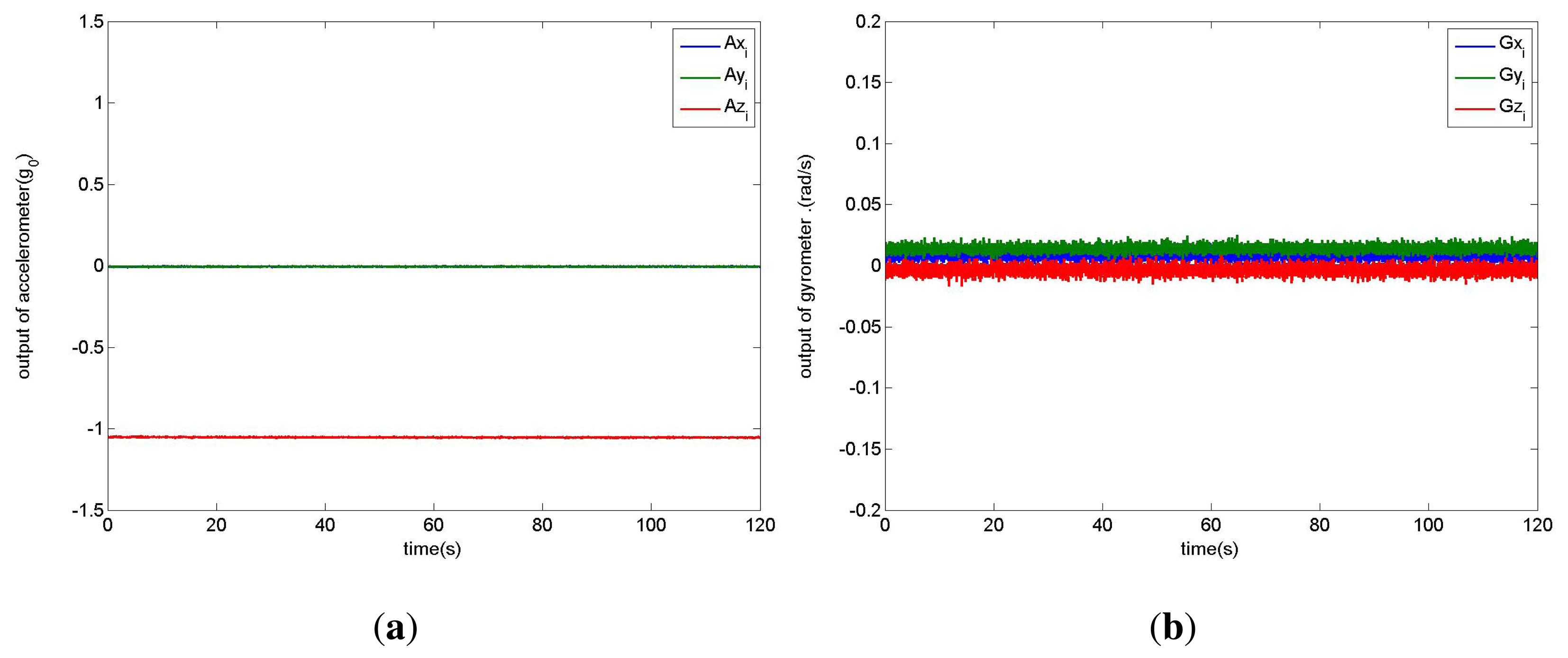

Although the inertial sensors are calibrated by the manufacturer, some systematic errors remain that matter in applications. Figure 1 illustrates the raw outputs of the triaxial accelerometer and gyrometer, collected while the iPhone was placed on a horizontal surface for 120 s. The z-axis acceleration clearly deviates from the theoretical value of −1.0 g0, where g0 = 9.81 m/s² is the gravitational acceleration constant. A similar situation occurs for the gyrometer, so calibration of the inertial data is needed.

2.1.1. Static State Judgement

To determine the initial attitude of an iPhone, an approximately static state is required. If the variance of the magnitude of the resultant acceleration stays below a threshold over a short period (e.g., 1 s), the iPhone can be regarded as static or in uniform straight-line motion. Because the body moves up and down during walking, uniform motion is almost impossible, so such a period can be treated as static. In a static state, the measured acceleration corresponds to gravity only.
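A minimal sketch of the static-state test, assuming the accelerometer samples are an (N, 3) NumPy array in units of g0. The 1 s window and the variance threshold of 1e-4 g0² are illustrative values, not taken from the paper, and the helper names `is_static` and `first_static_window` are hypothetical.

```python
import numpy as np

def is_static(acc_xyz, threshold=1e-4):
    """True if the resultant acceleration magnitude barely varies.

    acc_xyz: (N, 3) samples in units of g0 covering one short window.
    threshold: hypothetical variance limit in g0**2; tune per device.
    """
    magnitude = np.linalg.norm(np.asarray(acc_xyz, dtype=float), axis=1)
    return bool(np.var(magnitude) < threshold)

def first_static_window(acc_xyz, fs, window_s=1.0, threshold=1e-4):
    """Start index of the first non-overlapping static window, or None."""
    acc = np.asarray(acc_xyz, dtype=float)
    n = int(round(window_s * fs))
    for start in range(0, len(acc) - n + 1, n):
        if is_static(acc[start:start + n], threshold):
            return start
    return None
```

Comparing the magnitude rather than each axis makes the test independent of how the phone happens to be oriented in the pocket.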

2.1.2. Acceleration Bias

Accelerometers may have systematic errors because of device differences, device aging, or even occasional damage. The systematic error is coupled with motion error and gravitational acceleration, and leads to an acceleration bias in the measurements. The bias can be estimated by recording triaxial accelerations in a static state and calculating the deviation of their mean values from the theoretical static readings. In the processing of gait data, the bias is then eliminated by subtracting the estimated bias from the triaxial acceleration data. In Figure 1a, the main bias comes from the z-axis and is about −0.05 g0.
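The bias estimate can be sketched as follows, assuming the static record was taken with the phone lying flat and face up, so that the theoretical reading is (0, 0, −1) g0 as in Figure 1a. The function names are hypothetical.

```python
import numpy as np

def accel_bias(static_acc, expected=(0.0, 0.0, -1.0)):
    """Bias = mean static reading minus the theoretical static reading.

    static_acc: (N, 3) samples in units of g0, recorded while the phone lies
    flat and face up (assumption), so the expected reading is (0, 0, -1) g0.
    """
    mean_reading = np.mean(np.asarray(static_acc, dtype=float), axis=0)
    return mean_reading - np.asarray(expected, dtype=float)

def remove_bias(acc, bias):
    """Subtract the estimated bias from every triaxial sample."""
    return np.asarray(acc, dtype=float) - bias
```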

2.1.3. Gyrometer Bias and Drift

The theoretical outputs of the triaxial gyrometer are zero in a static state, so we take their mean values as the systematic biases and subtract these biases from the triaxial gyrometer data. Usually the biggest problem for a gyrometer is drift during long measurements. However, Figure 1b shows that the drift over 120 s is almost zero. Therefore the drift can be neglected in gait measurement, where each record usually lasts less than 20 s.
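A sketch of the gyrometer check, assuming a static record (such as the 120 s record of Figure 1b) is available as an (N, 3) array in rad/s. The mean gives the bias, and the slope of a per-axis straight-line fit with `numpy.polyfit` serves as a simple drift indicator; using a linear fit as the drift measure is our assumption, since the paper judges drift from Figure 1b.

```python
import numpy as np

def gyro_bias_and_drift(static_gyro, fs):
    """Return (bias, drift_slope) per axis from a static gyrometer record.

    static_gyro: (N, 3) angular rates in rad/s, sampled at fs Hz.
    bias: mean rate per axis (rad/s), subtracted from later gait data.
    drift_slope: linear trend per axis (rad/s per s); near zero means drift
    can be neglected over short gait records.
    """
    g = np.asarray(static_gyro, dtype=float)
    t = np.arange(len(g)) / fs
    slope, _intercept = np.polyfit(t, g, 1)   # polyfit fits each column separately
    bias = g.mean(axis=0)
    return bias, slope
```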

2.2. Attitude during Motion

To study the attitude of the iPhone sensor during motion, a reference coordinate system is defined based on gravity and the initial attitude of the iPhone sensor. In this paper, attitude is described by a quaternion, which is calculated by combining acceleration and gyrometer data. The coordinate transformation matrix is then solved. The specific process is as follows:

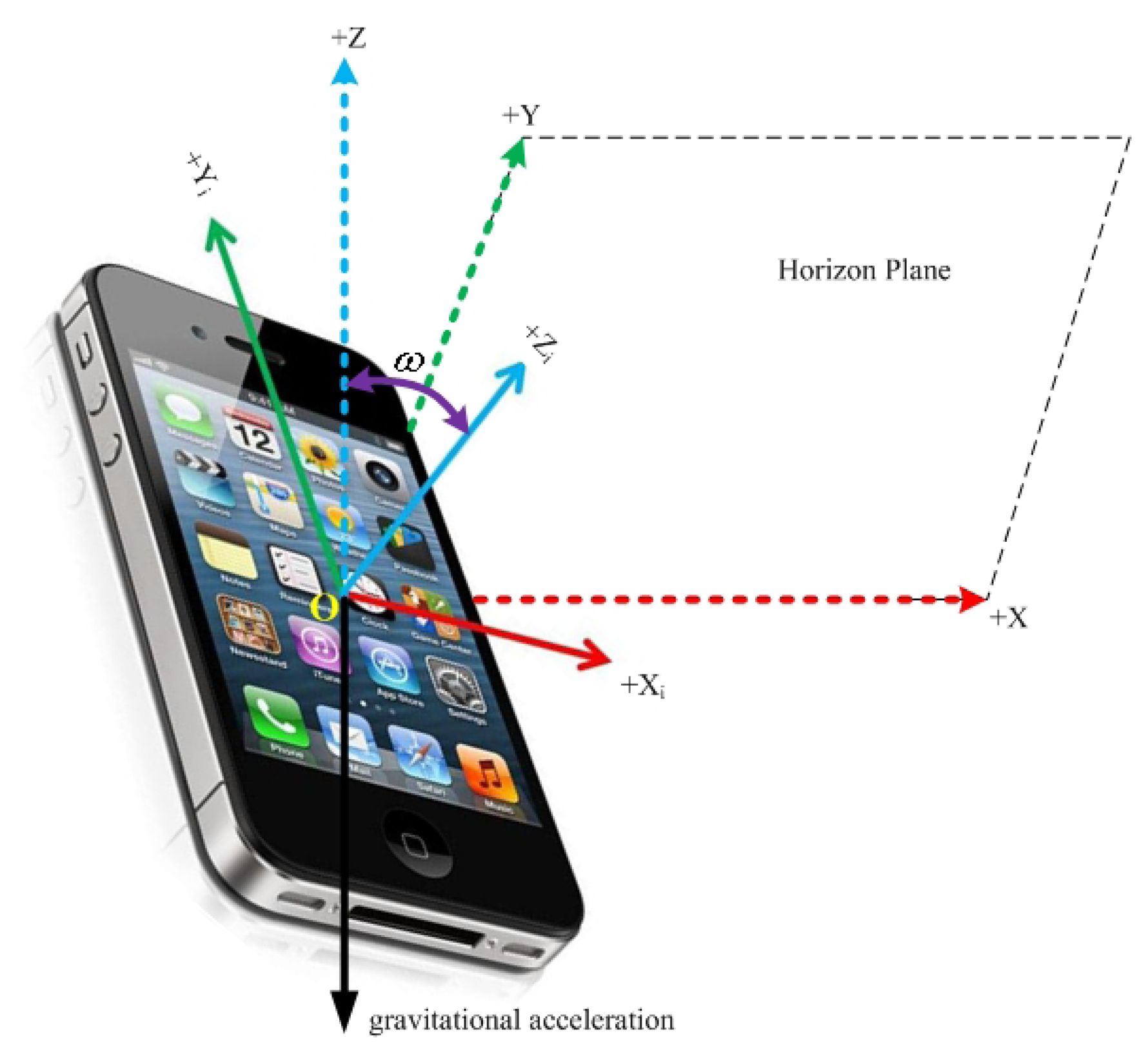

- (1)

To define the reference coordinate system:

The iPhone sensor has its own inertial coordinate system [O, Xi, Yi, Zi], as shown in Figure 2. Define the Z-axis of the reference coordinate system as opposite to the gravity vector, ω as the angle between the Zi-axis and the Z-axis, and C = (c1, c2, c3) as the unit rotation axis perpendicular to both the Zi- and Z-axes. For the initial attitude of the iPhone sensor, let the triaxial acceleration be $\mathbf{A}_0 = (a_{x0}, a_{y0}, a_{z0})^T$ in the iPhone inertial coordinate system and the gravitational acceleration be $\mathbf{B}_0 = (0, 0, -g_0)^T$ in the reference coordinate system. Then ω and C can be obtained as

$$\omega = \arccos\frac{\mathbf{A}_0 \cdot \mathbf{B}_0}{\lVert\mathbf{A}_0\rVert\,\lVert\mathbf{B}_0\rVert}, \qquad C = \frac{\mathbf{A}_0 \times \mathbf{B}_0}{\lVert\mathbf{A}_0 \times \mathbf{B}_0\rVert}$$

After a rotation by ω around C, the Zi-axis is rotated onto the Z-axis, and the Xi- and Yi-axes are rotated into the horizontal plane, where they are defined as the X- and Y-axes. [O, X, Y, Z] is the reference coordinate system used in the further analysis.

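Following the representation of rotations by quaternions [20,21], the initial quaternion $Q_0 = [w_0, x_0, y_0, z_0]^T$ is

$$Q_0 = \left[\cos\frac{\omega}{2},\; c_1\sin\frac{\omega}{2},\; c_2\sin\frac{\omega}{2},\; c_3\sin\frac{\omega}{2}\right]^T$$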

That is, a single rotation by ω about the unit vector C = (c1, c2, c3) transforms the iPhone inertial coordinate system into the defined reference coordinate system.

The three Euler angles of the iPhone could also be derived from the quaternion; however, their values depend on the chosen definition and rotation sequence [22]. This paper therefore uses the quaternion to represent the iPhone's attitude.
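The following sketch computes ω, C and Q0 from a static reading A0 and lets one check that the rotation maps A0 onto the direction of B0. It assumes quaternions stored as [w, x, y, z], the Hamilton product, and the active-rotation convention v' = Q ⋆ (0, v) ⋆ Q*; the fallback axis used when the phone lies exactly flat (or face down) is our own choice, not from the paper.

```python
import numpy as np

def quat_mul(p, q):
    """Hamilton product of quaternions stored as [w, x, y, z]."""
    w1, x1, y1, z1 = p
    w2, x2, y2, z2 = q
    return np.array([
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    ])

def rotate(q, v):
    """Rotate 3-vector v by unit quaternion q: q * (0, v) * conj(q)."""
    q_conj = np.asarray(q, dtype=float) * np.array([1.0, -1.0, -1.0, -1.0])
    return quat_mul(quat_mul(q, np.concatenate(([0.0], v))), q_conj)[1:]

def initial_quaternion(a0, g0=9.81):
    """Q0 = [cos(w/2), C*sin(w/2)] rotating the static reading A0 onto B0."""
    a0 = np.asarray(a0, dtype=float)
    b0 = np.array([0.0, 0.0, -g0])
    cos_w = np.dot(a0, b0) / (np.linalg.norm(a0) * np.linalg.norm(b0))
    omega = np.arccos(np.clip(cos_w, -1.0, 1.0))   # angle between A0 and B0
    axis = np.cross(a0, b0)
    n = np.linalg.norm(axis)
    # A0 (anti)parallel to B0: omega is 0 or pi and any horizontal axis works
    c = axis / n if n > 1e-12 else np.array([1.0, 0.0, 0.0])
    return np.concatenate(([np.cos(omega / 2)], c * np.sin(omega / 2)))
```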

- (2)

Update quaternion:

To update the quaternion Qi = [wi, xi, yi, zi]T with the combined acceleration and gyrometer data using the fourth-order Runge-Kutta algorithm [23], the specific process is as follows:

where Qi ⋆ gi denotes the quaternion product.

- (3)

Calculate coordinate transformation matrix:

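The coordinate transformation matrix at t = i is obtained from the real-time quaternion $Q_i = [w_i, x_i, y_i, z_i]^T$ as [22]

$$T_i = \begin{bmatrix} 1-2(y_i^2+z_i^2) & 2(x_iy_i-w_iz_i) & 2(x_iz_i+w_iy_i)\\ 2(x_iy_i+w_iz_i) & 1-2(x_i^2+z_i^2) & 2(y_iz_i-w_ix_i)\\ 2(x_iz_i-w_iy_i) & 2(y_iz_i+w_ix_i) & 1-2(x_i^2+y_i^2) \end{bmatrix}$$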
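The quaternion update of step (2) can be sketched with the standard quaternion kinematic equation dQ/dt = ½ Q ⋆ (0, ω), where ω is the body-frame angular rate from the gyrometer in rad/s. This is an assumption about the exact form: the sketch integrates gyrometer rates only, approximates the rate at the half step by averaging neighbouring samples, and renormalizes after each step. How the paper blends accelerometer data into this update cannot be recovered from the text, and all names are hypothetical.

```python
import numpy as np

def quat_mul(p, q):
    """Hamilton product of quaternions stored as [w, x, y, z]."""
    w1, x1, y1, z1 = p
    w2, x2, y2, z2 = q
    return np.array([
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    ])

def quat_derivative(q, omega):
    """dQ/dt = 0.5 * Q * (0, omega), omega = body-frame rate in rad/s."""
    return 0.5 * quat_mul(q, np.concatenate(([0.0], omega)))

def rk4_step(q, omega_i, omega_next, h):
    """One fourth-order Runge-Kutta step of length h seconds."""
    omega_i = np.asarray(omega_i, dtype=float)
    omega_next = np.asarray(omega_next, dtype=float)
    omega_mid = 0.5 * (omega_i + omega_next)   # assumed linear interpolation
    k1 = quat_derivative(q, omega_i)
    k2 = quat_derivative(q + 0.5 * h * k1, omega_mid)
    k3 = quat_derivative(q + 0.5 * h * k2, omega_mid)
    k4 = quat_derivative(q + h * k3, omega_next)
    q_next = q + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
    return q_next / np.linalg.norm(q_next)     # keep unit length

def integrate(q0, gyro, fs):
    """Attitude quaternion for every gyrometer sample, starting from Q0."""
    q = np.asarray(q0, dtype=float)
    out = [q]
    for i in range(len(gyro) - 1):
        q = rk4_step(q, gyro[i], gyro[i + 1], 1.0 / fs)
        out.append(q)
    return np.array(out)
```

For example, a constant rate of 1 rad/s about the z-axis for 1 s should give Q ≈ [cos 0.5, 0, 0, sin 0.5].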

2.3. Linear Acceleration Solution

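Gravitational acceleration can be eliminated with the coordinate transformation matrix to obtain the linear acceleration:

$$\mathbf{a}^{L}_i = T_i\,\mathbf{A}_i - \mathbf{B}_0 \tag{12}$$

where $\mathbf{A}_i$ is the calibrated triaxial acceleration at t = i in the iPhone inertial coordinate system, $T_i$ is the coordinate transformation matrix at t = i, and $\mathbf{B}_0 = (0, 0, -g_0)^T$ is the gravitational acceleration in the reference coordinate system.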

The first term on the right-hand side of Equation (12) is the total acceleration in the reference coordinate system; subtracting the gravitational acceleration, expressed in the same coordinate system, yields the linear acceleration. Since the X and Y components of the linear acceleration correspond to horizontal directions of motion, the following gait characteristic analysis is based mainly on its Z (vertical) component.
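A sketch of the transformation matrix and Equation (12), assuming the [w, x, y, z] quaternion convention in which the matrix maps vectors from the iPhone inertial coordinate system into the reference coordinate system, and B0 = (0, 0, −g0)^T. The function names are hypothetical; the Z component of the returned vector is the one used in Section 3.

```python
import numpy as np

def transformation_matrix(q):
    """Rotation matrix of unit quaternion q = [w, x, y, z] (device -> reference)."""
    w, x, y, z = q
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def linear_acceleration(q, acc_device, g0=9.81):
    """Equation (12): total acceleration in the reference frame minus B0."""
    b0 = np.array([0.0, 0.0, -g0])
    return transformation_matrix(q) @ np.asarray(acc_device, dtype=float) - b0
```

A device at rest, whose reading is pure gravity, therefore yields zero linear acceleration regardless of its attitude.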

3. Gait Characteristic Analysis

In this section, we discuss four gait characteristic parameters: gait frequency, symmetry coefficient, dynamic range and the similarity degree between characteristic curves. Position-related parameters are not considered, because integrating acceleration produces accumulated errors.

3.1. Gait Frequency

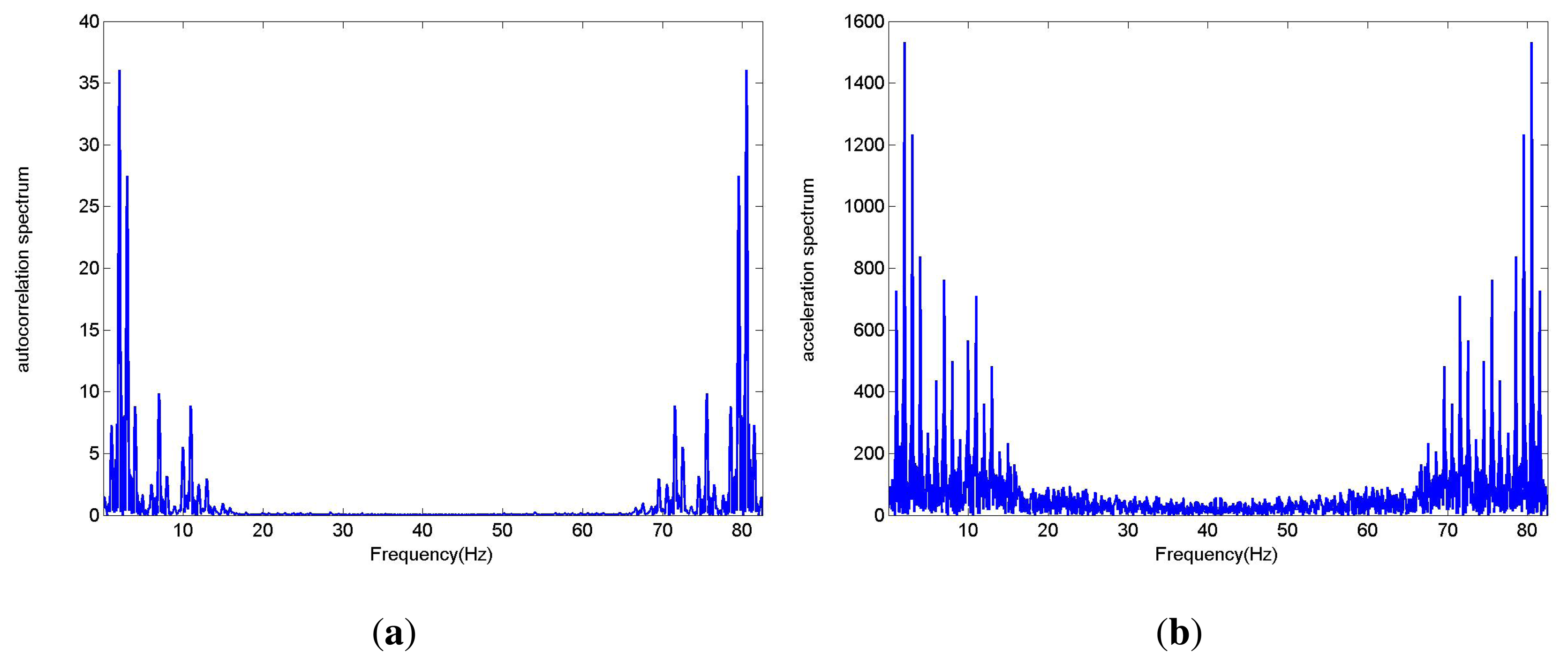

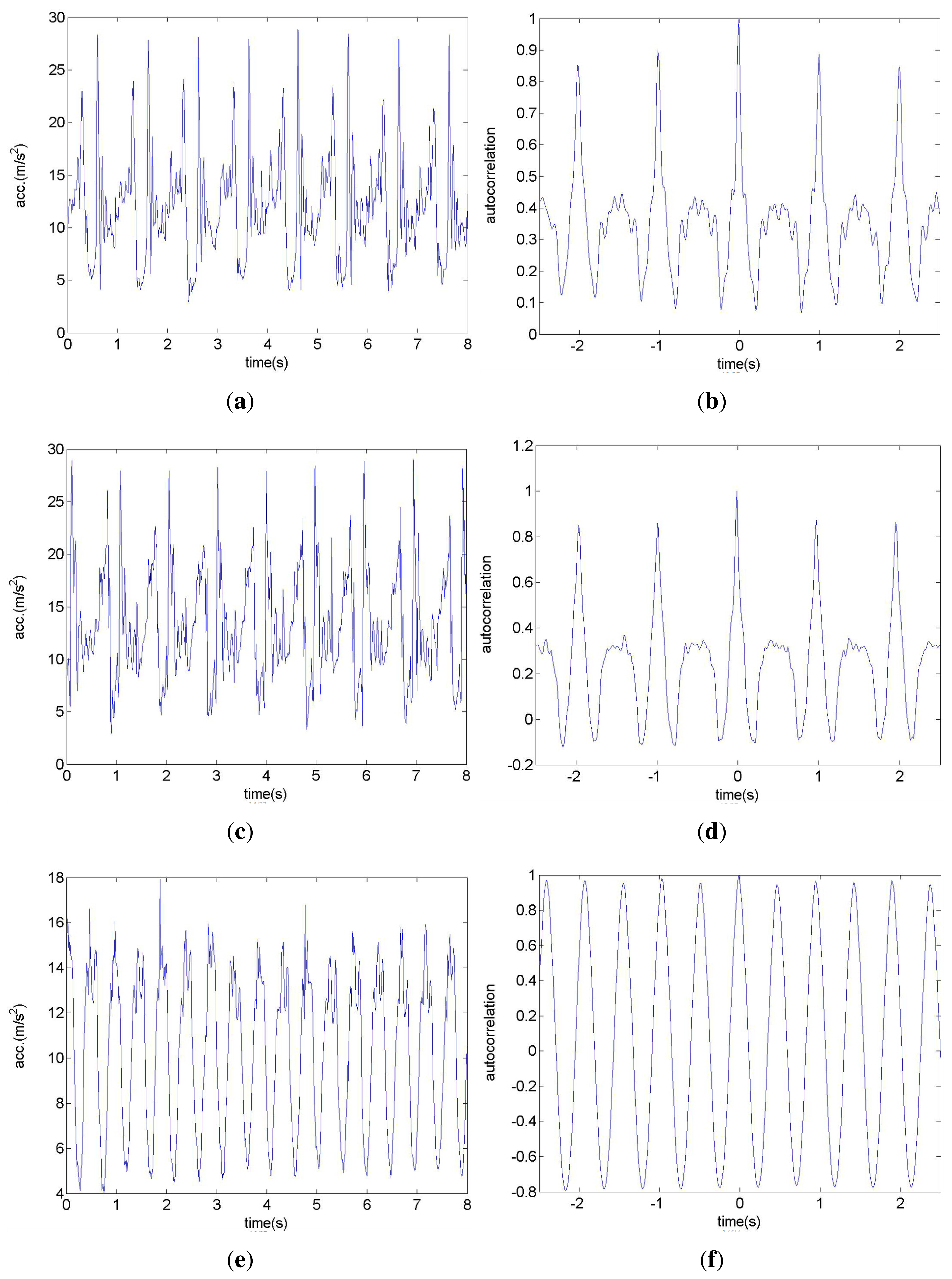

Gait frequency is the most basic and important parameter in gait characteristic analysis. Because paces are quasi-periodic during walking, gait frequency can be extracted from the acceleration signal in the frequency domain. However, after an FFT of the acceleration signal, the frequency of the largest spectral peak (excluding the DC component) is not always the gait frequency. An improved method is therefore presented in this paper: the autocorrelation of the acceleration signal is calculated and transformed to the frequency domain, and the frequency of its largest peak, excluding the zero-frequency component, is taken as the gait frequency. Figure 3 shows the spectra of the acceleration signals and of their autocorrelations. Interpolation during the correlation calculation can greatly improve the accuracy of the estimated gait frequency.

According to Figure 3, the spectral lines of the autocorrelation function are more concentrated than those of the acceleration. The autocorrelation function is therefore less affected by harmonic components, which makes the gait frequency easier to extract.
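A minimal sketch of the autocorrelation-based estimate, assuming a 1-D linear acceleration signal sampled at `fs` Hz. Zero-padding the FFT stands in for the interpolation step (the paper does not specify its interpolation method), and the padding factor of 16 is an arbitrary choice. The magnitude spectrum of the full autocorrelation equals the power spectrum |X(f)|², so the dominant line is emphasized relative to weaker harmonics, which matches the more concentrated lines described above.

```python
import numpy as np

def gait_frequency(acc, fs, pad_factor=16):
    """Gait frequency (Hz) from the spectrum of the signal's autocorrelation."""
    x = np.asarray(acc, dtype=float)
    x = x - x.mean()                          # remove the DC offset
    r = np.correlate(x, x, mode="full")       # autocorrelation, length 2N - 1
    n_fft = pad_factor * len(r)               # zero-padding interpolates the spectrum
    spectrum = np.abs(np.fft.rfft(r, n=n_fft))
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
    spectrum[0] = 0.0                         # exclude the zero-frequency component
    return float(freqs[np.argmax(spectrum)])
```

For a 10 s record at 100 Hz, the padded grid spacing is about 0.003 Hz, finer than the 0.1 Hz resolution of a plain FFT of the record.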

3.2. Symmetry Coefficient

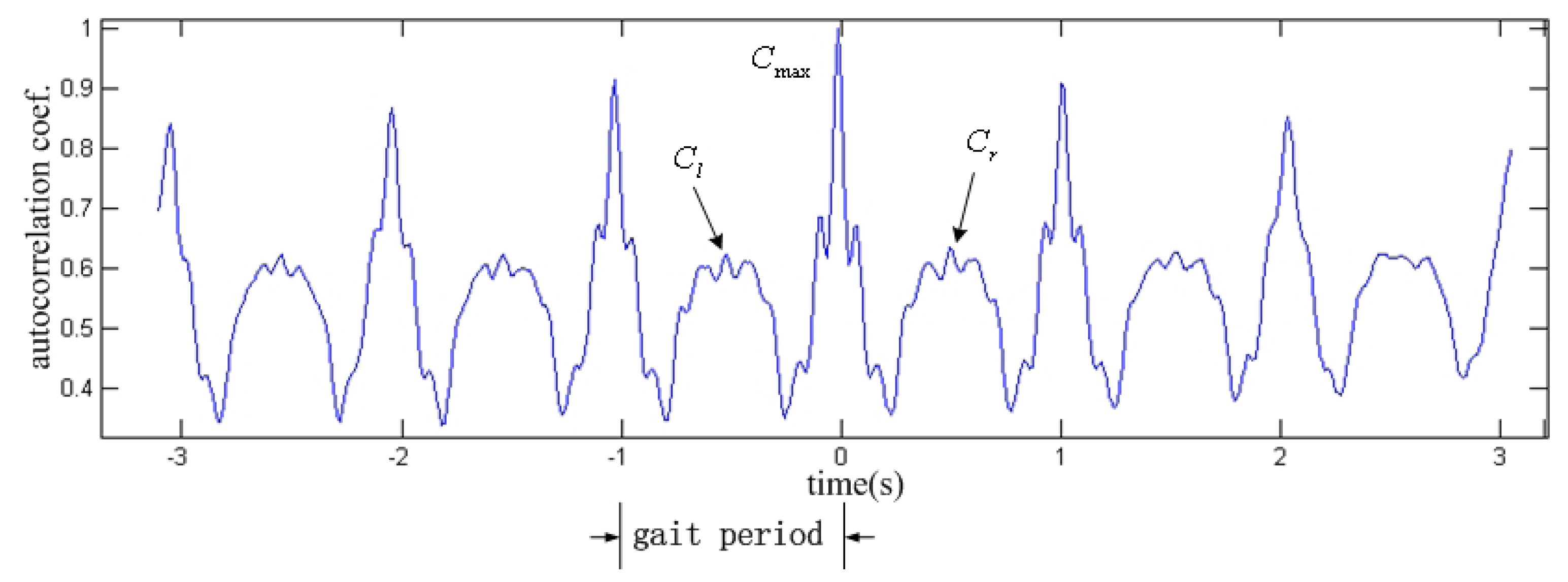

Several studies have defined gait symmetry for their own datasets [24]. In this paper, we define the symmetry coefficient Si of gait from the autocorrelation of the acceleration signal, as shown in Figure 4.

Two possible causes may lead to asymmetry of the acceleration signal: (1) inconsistent gait, such as the gait of patients with unilateral motor problems or other impairments; (2) a sensor that is not placed at the center of the body. The first case is not considered in this paper, and all subsequent tests are based on nearly symmetrical gait. The effect of the sensor's position is discussed in Section 5.
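The exact formula for Si is given in Figure 4 and is not reproduced in the text, so the sketch below uses a hypothetical definition, chosen only to match the behaviour reported in Section 5 (values above 0.9 for the center-back placement, lower for pocket placements): the ratio of the autocorrelation at a lag of one step (half a stride) to that at one full stride. The stride period is assumed to be known, e.g. from the gait frequency.

```python
import numpy as np

def symmetry_coefficient(acc, fs, stride_period):
    """HYPOTHETICAL symmetry measure: R(half stride) / R(full stride).

    acc: 1-D linear acceleration; fs: sampling rate (Hz);
    stride_period: duration of one full gait cycle (s), assumed known.
    """
    x = np.asarray(acc, dtype=float)
    x = x - x.mean()

    def autocorr(lag):
        # unbiased autocorrelation estimate at a positive integer lag
        return np.dot(x[:-lag], x[lag:]) / (len(x) - lag)

    half_lag = int(round(0.5 * stride_period * fs))
    full_lag = int(round(stride_period * fs))
    return float(autocorr(half_lag) / autocorr(full_lag))
```

When left and right steps look alike, the signal nearly repeats after half a stride and the ratio approaches 1; a pocket placement that sees the two legs differently lowers it.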

3.3. Dynamic Range

The dynamic range of acceleration reflects some aspects of gait. In this paper, the difference between the maximum and minimum values of the Z-axis linear acceleration is used as the dynamic range estimate.

Several factors, including walking speed, pace length and noise, affect the dynamic range. It is therefore reasonable to give the dynamic range a smaller weight in gait identification.

3.4. Similarity Degree between Characteristic Curves

For typical gait acceleration, a characteristic curve, i.e., a time-amplitude sequence, can be extracted according to its fluctuation characteristics. This sequence serves as an important basis for subsequent gait matching and identification. When the characteristic curve is compared with each characteristic curve in the database, a high similarity degree indicates that the two curves belong to the same person with high probability, which helps gait identification.

To estimate the similarity degree between two characteristic curves accurately, scaling and shifting of the curves must be taken into account: even for the same subject, walking speed and amplitude may vary slightly under different test conditions. Hence the discrete acceleration data cannot be used directly for similarity comparison. Although a one-dimensional invariant matrix method can be used in theory, its experimental performance is not ideal. In this paper, the similarity degree between characteristic curves is calculated as follows:

(1) Apply interpolation according to the gait frequencies to eliminate the effects of scale differences.

(2) Use the peak positions of the two curves as a reference to roughly align the vectors. The aligned acceleration characteristic curves are denoted l1 and l2.

(3) Calculate the similarity coefficient, which describes the similarity degree, as the normalized dot product of the two vectors. To reduce the effect of noise in the characteristic curves, one vector is shifted step by step within one pace period of N samples, the normalized dot product is calculated for each shift, and the maximum value is selected as the similarity coefficient C.
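The three steps above can be sketched as follows. Assumptions: both curves are 1-D arrays sampled at `fs` Hz with known gait frequencies; linear interpolation (`numpy.interp`) is used for step (1); step (2) aligns each curve at its maximum within the first period; and step (3) shifts the second curve by 0 to N − 1 samples, which for quasi-periodic curves also covers small backward offsets. All names are hypothetical.

```python
import numpy as np

def normalize_period(curve, fs, gait_freq, n_per_period):
    """Step (1): resample so that one gait period spans n_per_period samples."""
    curve = np.asarray(curve, dtype=float)
    t = np.arange(len(curve)) / fs                              # original time axis (s)
    t_new = np.arange(0.0, t[-1], 1.0 / (gait_freq * n_per_period))
    return np.interp(t_new, t, curve)

def similarity_coefficient(l1, l2, n_per_period):
    """Steps (2) and (3): peak alignment, then max normalized dot product."""
    a = np.asarray(l1, dtype=float)
    b = np.asarray(l2, dtype=float)
    # rough alignment: start each curve at its peak inside the first period
    a = a[int(np.argmax(a[:n_per_period])):]
    b = b[int(np.argmax(b[:n_per_period])):]
    length = min(len(a), len(b) - n_per_period)
    if length <= 0:
        raise ValueError("curves must be longer than one period after alignment")
    a = a[:length]
    best = -1.0
    for shift in range(n_per_period):           # slide l2 within one pace period
        seg = b[shift:shift + length]
        c = np.dot(a, seg) / (np.linalg.norm(a) * np.linalg.norm(seg))
        best = max(best, float(c))
    return best
```

Two recordings of the same waveform at different walking speeds then give C close to 1, while a different waveform gives a clearly lower value.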

4. Gait Identification

In the previous section, several gait characteristic parameters including gait frequency, symmetry coefficient, dynamic range and similarity coefficient are discussed. Based on these typical parameters, a weighted voting scheme is proposed for gait identification. The specific scheme is as follows:

(1) Calculate the gait frequency Fi, symmetry coefficient Si and dynamic range Di for each characteristic curve in the database. Suppose the database contains M curves, i = 1, 2, …, M.

(2) Calculate the gait frequency F, symmetry coefficient S and dynamic range D of the linear acceleration from the measured data.

(3) Calculate the similarity coefficient Ci between the characteristic curve of the measured linear acceleration and each sample in the database.

(4) Perform the weighted voting as follows:

- (a)

Sort the absolute errors |F − Fi| in increasing order and assign 1 vote to the first-ranked sample, 2 votes to the second, and so on. The resulting votes for gait frequency are denoted $\mathbf{V}_F$.

- (b)

Sort the absolute errors |S − Si| in increasing order and assign 1 vote to the first-ranked sample, 2 votes to the second, and so on. The resulting votes for the symmetry coefficient are denoted $\mathbf{V}_S$.

- (c)

Sort the absolute errors |D − Di| in increasing order and assign 1 vote to the first-ranked sample, 2 votes to the second, and so on. The resulting votes for the dynamic range are denoted $\mathbf{V}_D$.

- (d)

Sort Ci in decreasing order and assign 1 vote to the first-ranked sample, 2 votes to the second, and so on. The resulting votes for the similarity coefficient are denoted $\mathbf{V}_C$.

- (e)

Sum the weighted votes for each sample in the database:

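$$\mathbf{V} = w_1\mathbf{V}_F + w_2\mathbf{V}_S + w_3\mathbf{V}_D + w_4\mathbf{V}_C \tag{16}$$

where $\mathbf{V}_F$, $\mathbf{V}_S$, $\mathbf{V}_D$ and $\mathbf{V}_C$ are the votes from steps (a) to (d) and W = [w1, w2, w3, w4] is the weighting coefficient vector.

- (f)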

Vote judgment: the database sample with the minimum weighted vote sum is taken as the match for the current measured data, which accomplishes gait identification within the given database.

The weighting coefficients can be tuned with further experimental data. The similarity coefficient of characteristic curves is the most important for gait identification, followed by gait frequency. The symmetry coefficient depends closely on the iPhone's placement, and the dynamic range is strongly affected by noise. Therefore, the weighting coefficients are set to W = [2, 2, 1, w4] in this paper, where w4 is given by Equation (17); for the database used in Section 5, w4 = 4.
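The weighted voting of steps (a) to (f) can be sketched as below. Ranks start at 1 for the best match, and ties are broken by database order (an assumption, since the paper does not specify tie handling). Applied to the M1 example of Section 5, with the Table 1 values and W = [2, 2, 1, 4], it reproduces the vote sums reported there. The function names are hypothetical.

```python
import numpy as np

def rank_votes(errors):
    """1 vote for the smallest value, 2 for the next, and so on."""
    order = np.argsort(errors, kind="stable")
    votes = np.empty(len(errors), dtype=int)
    votes[order] = np.arange(1, len(errors) + 1)
    return votes

def weighted_votes(F, S, D, C, db_F, db_S, db_D, W):
    """Equation (16): weighted vote sum for every database sample."""
    v_f = rank_votes(np.abs(np.asarray(db_F) - F))
    v_s = rank_votes(np.abs(np.asarray(db_S) - S))
    v_d = rank_votes(np.abs(np.asarray(db_D) - D))
    v_c = rank_votes(-np.asarray(C))             # larger similarity -> fewer votes
    return W[0] * v_f + W[1] * v_s + W[2] * v_d + W[3] * v_c

# Database parameters D1..D10 (Table 1) and the M1 measurement (Section 5)
db_F = [1.036, 1.041, 0.957, 1.009, 0.939, 0.845, 1.002, 0.945, 0.987, 1.019]
db_S = [0.534, 0.563, 0.571, 0.404, 0.669, 0.525, 0.623, 0.597, 0.576, 0.914]
db_D = [26.480, 29.301, 25.300, 22.379, 24.891, 25.618, 27.051, 20.674, 21.567, 14.736]
C_m1 = [0.876, 0.773, 0.667, 0.762, 0.793, 0.498, 0.614, 0.735, 0.794, 0.575]

votes = weighted_votes(1.039, 0.554, 25.87, C_m1, db_F, db_S, db_D, (2, 2, 1, 4))
print(votes.tolist())               # [17, 26, 48, 53, 50, 71, 61, 61, 36, 72]
print(int(np.argmin(votes)) + 1)    # 1, i.e. sample D1
```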

5. Experiments

Four experiments are conducted to validate the methods proposed in this paper: (1) simulation experiment to verify the solutions of attitude and linear acceleration; (2) comparison experiment of the same subject walking on cement pavement and on grass; (3) comparison experiment of three different placements of iPhones; (4) gait identification experiment with 10 subjects and 40 sets of data.

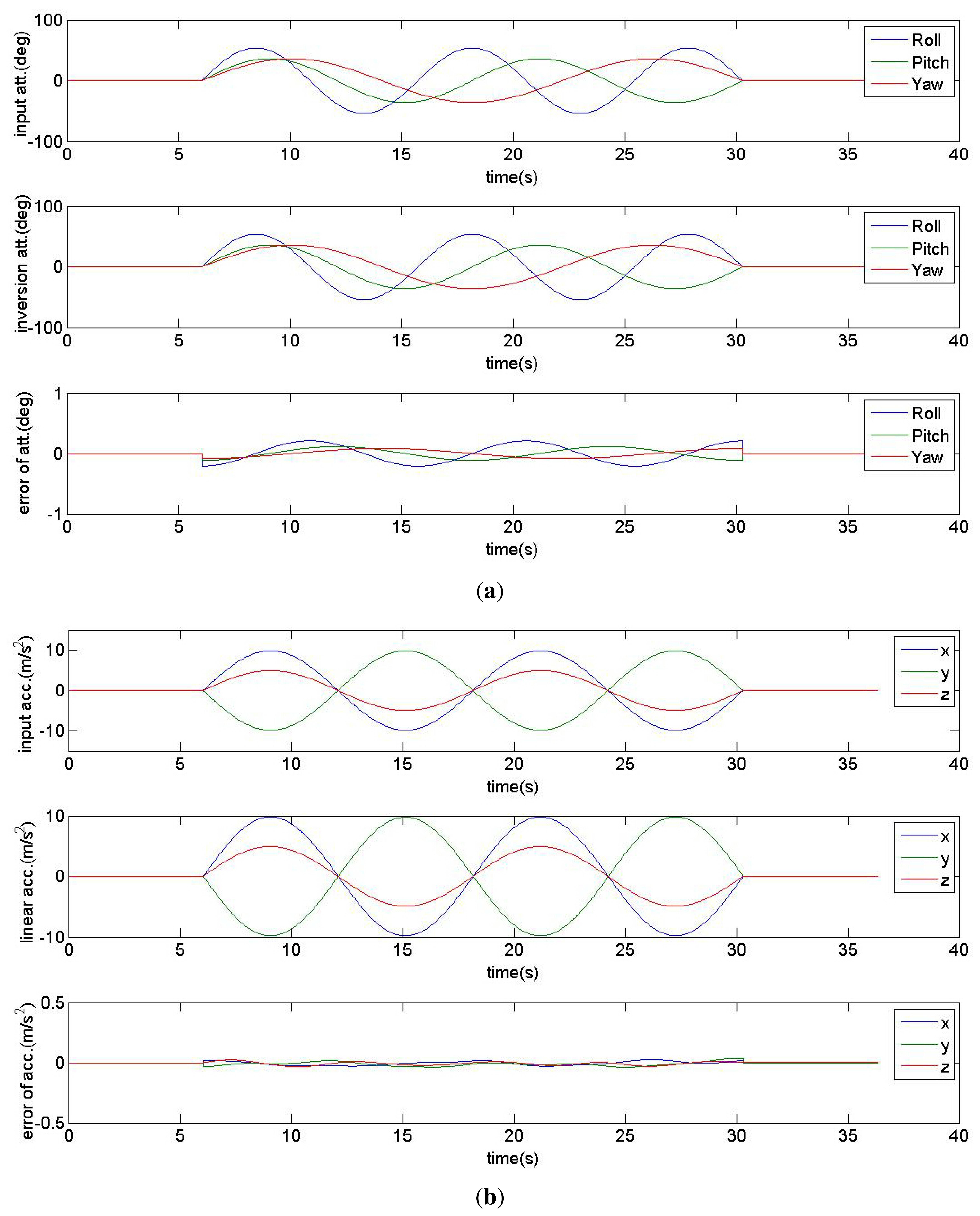

In the first experiment, sinusoidal linear acceleration and attitude data are simulated. The attitude angles (yaw, pitch and roll) are defined using the Tait-Bryan angle formalism with the z-y-x rotation sequence [25]. The triaxial accelerometer and gyrometer data in the iPhone's inertial coordinate system are then generated as input to the method proposed in Section 2. The recovered linear acceleration and attitude, together with their errors, are shown in Figure 5.

According to the simulation results, the attitude errors are less than 1° and the linear acceleration errors are less than 0.1 m/s².

In the second experiment, the subject walked on cement pavement and grass, respectively, with the same iPhone placed in the right pant pocket. The characteristic curves of recorded acceleration data are shown in Figure 6.

At least two differences between the two datasets can be seen in Figure 6.

(1) Within the same 10 s, the record on cement pavement contains more than 9 gait cycles, while the record on grass contains fewer than 9. This agrees with the gait periods obtained by gait frequency analysis, 1.070 s and 1.174 s respectively, i.e., about 9.3 and 8.5 cycles in 10 s. The longer period on grass reflects that walking on a soft surface is more difficult than walking on cement pavement.

(2) The dynamic range of the linear acceleration recorded on cement pavement is slightly larger than that on grass, which can be explained by the cushioning effect of the grass.

In the third experiment, three iPhones are placed in three different positions: the left pant pocket, the right pant pocket and the center of the back waist area of the subject. The calculated linear accelerations and autocorrelation curves are shown in Figure 7.

According to Figure 7a,c,e, the linear acceleration signals show good quasi-periodicity. The curves in Figure 7a,c are very similar and differ mainly by a time shift of half a gait period. The third signal shows good symmetry between individual steps, and its frequency is twice that of the first two curves. According to Figure 7b,d, the accelerations corresponding to the left and right paces are asymmetrical, evidently because of the off-center placement, and the symmetry coefficients are only about 0.4. Figure 7f describes the third case, in which the iPhone was placed at the center of the back waist: the left and right paces cause similar linear accelerations, and the symmetry coefficient is above 0.9. This supports the definition of the symmetry coefficient. Moreover, the dynamic ranges in Figure 7a,c are greater than that in Figure 7e, because the pocket placements capture the up-and-down motion of the legs, whereas the back-waist placement captures the motion of the body within the same pace period.

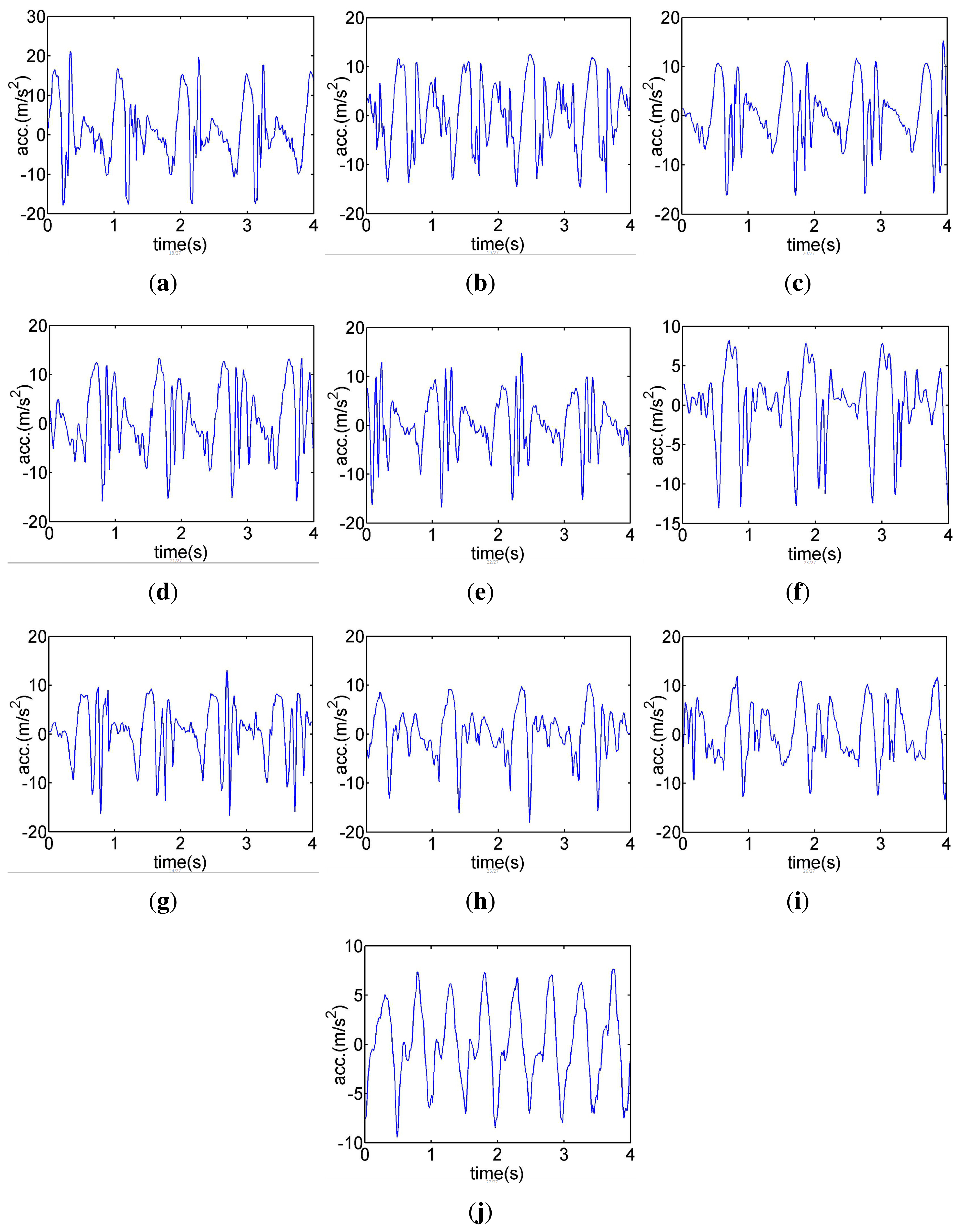

In the fourth experiment, a single iPhone is used to record 4 sets of gait data for each of the 10 subjects: the phone is placed twice in the left pant pocket and twice in the right pant pocket. One set of data per subject is then used to build the gait database by calculating the characteristic parameters and extracting the characteristic curves, as shown in Table 1 and Figure 8.

The remaining 30 sets of recorded data are used as measured data and compared with the 10 samples in the database. The voting results for 10 groups of measured data are listed in Table 2. We calculate the weighted votes for measured data M1 to M10 according to the method described in Section 4. For example, data M1 yields gait frequency F = 1.039 Hz, symmetry coefficient S = 0.554 and dynamic range D = 25.87 m/s². The similarity coefficients between M1 and D1 to D10 are [C1, C2, C3, C4, C5, C6, C7, C8, C9, C10] = [0.876, 0.773, 0.667, 0.762, 0.793, 0.498, 0.614, 0.735, 0.794, 0.575]. Comparing these parameters with Table 1, and sorting the absolute errors in increasing order and the similarity coefficients in decreasing order, gives the votes of gait frequency V_F = [2, 1, 7, 4, 9, 10, 5, 8, 6, 3], the votes of symmetry coefficient V_S = [3, 1, 2, 9, 8, 5, 7, 6, 4, 10], the votes of dynamic range V_D = [3, 6, 2, 7, 4, 1, 5, 9, 8, 10], and the votes of similarity coefficient V_C = [1, 4, 7, 5, 3, 10, 8, 6, 2, 9]. Weighting these votes by W = [2, 2, 1, w4], where w4 = 4 is calculated using Equation (17), and summing them according to Equation (16) gives [17, 26, 48, 53, 50, 71, 61, 61, 36, 72], which is the first row of Table 2. The minimum of this row, 17, corresponds to sample D1. Because D1 and M1 were collected from the same person at different times, the identification is correct.

According to Table 2, the weighted voting scheme performs well in the identification experiments. The remaining 20 sets of recorded data were also tested, and all were correctly identified.

As a further step, the weighting coefficient vector is set to W = [0, 0, 0, 1], W = [1, 1, 1, 1], W = [1, 1, 1, 2], W = [2, 3, 1, w4] and W = [2, 2, 1, w4], respectively, and the numbers of correctly identified datasets are 23, 26, 27, 27 and 30. The weighting coefficients thus strongly affect identification performance. Among them, the similarity degree of characteristic curves has the largest effect, but identification cannot rely on this coefficient alone. For the experiments in this paper, the weighting coefficient vector W = [2, 2, 1, w4] was adopted, and all 30 test datasets were correctly identified.

6. Conclusions

The accelerometers and gyrometers integrated in smartphones provide users with a convenient platform for gait analysis. In this paper, a new method for gait analysis based on an iPhone is proposed. The fourth-order Runge-Kutta algorithm and quaternions are applied to combine the inertial data, solve for the linear acceleration, and eliminate the errors caused by attitude change and gravitational acceleration. The gait characteristic parameters and characteristic curves are analyzed, and a weighted voting scheme is then adopted for gait identification. Simulation and experimental results demonstrate the good performance of the proposed scheme. In future work, the gait database will be extended to support gait identification in different scenarios, e.g., different motion states of the subjects.

Acknowledgments

The authors would like to thank Ko, Young-woo for providing free iPhone application Sensor Monitor and the volunteers for providing their gait data.

Author Contributions

Bing Sun defined the methodology of the research and the structure of the paper and supervised the entire writing process. Yang Wang performed the main research. Jacob Banda performed the main writing of the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- [1] Lee, L.; Grimson, W.E.L. Gait analysis for recognition and classification. Proceedings of Fifth IEEE International Conference on Automatic Face and Gesture Recognition, Washington, DC, USA, 20–21 May 2002; pp. 148–155.

- [2] Pogorelc, B.; Bosnić, Z.; Gams, M. Automatic recognition of gait-related health problems in the elderly using machine learning. Multimed. Tools Appl. 2012, 58, 333–354.

- [3] Azevedo Coste, C.; Sijobert, B.; Pissard-Gibollet, R.; Pasquier, M.; Espiau, B.; Geny, C. Detection of Freezing of Gait in Parkinson Disease: Preliminary Results. Sensors 2014, 14, 6819–6827.

- [4] Muro-de-la Herran, A.; Garcia-Zapirain, B.; Mendez-Zorrilla, A. Gait Analysis Methods: An Overview of Wearable and Non-Wearable Systems, Highlighting Clinical Applications. Sensors 2014, 14, 3362–3394.

- [5] Kale, A.; Sundaresan, A.; Rajagopalan, A.; Cuntoor, N.P.; Roy-Chowdhury, A.K.; Kruger, V.; Chellappa, R. Identification of humans using gait. IEEE Trans. Image Process. 2004, 13, 1163–1173.

- [6] Kale, A.; Cuntoor, N.; Yegnanarayana, B.; Rajagopalan, A.; Chellappa, R. Gait Analysis for Human Identification. In Audio- and Video-Based Biometric Person Authentication; Springer: Berlin Heidelberg, Germany, 2003; pp. 706–714.

- [7] Tang, J.; Luo, J.; Tjahjadi, T.; Gao, Y. 2.5D Multi-View Gait Recognition Based on Point Cloud Registration. Sensors 2014, 14, 6124–6143.

- [8] Yoo, J.H.; Hwang, D.; Moon, K.Y.; Nixon, M.S. Automated human recognition by gait using neural network. Proceedings of First Workshops on Image Processing Theory, Tools and Applications, Sousse, Tunisia, 23–26 November 2008; pp. 1–6.

- [9] Middleton, L.; Buss, A.A.; Bazin, A.; Nixon, M.S. A floor sensor system for gait recognition. Proceedings of Fourth IEEE Workshop on Automatic Identification Advanced Technologies, Buffalo, NY, USA, 17–18 October 2005; pp. 171–176.

- [10] Popovic, M.R.; Keller, T.; Ibrahim, S.; Bueren, G.V.; Morari, M. Gait identification and recognition sensor. Proceedings of 6th Vienna International Workshop in Functional Electrostimulation.

- [11] Sprager, S.; Zazula, D. Gait identification using cumulants of accelerometer data. Proceedings of the 2nd WSEAS International Conference on Sensors, and Signals and Visualization, Imaging and Simulation and Materials Science, Baltimore, MD, USA, 7–9 November 2009; pp. 94–99.

- [12] Gafurov, D.; Snekkenes, E. Gait recognition using wearable motion recording sensors. EURASIP J. Adv. Signal Process. 2009, 2009, 1–16.

- [13] Bamberg, S.J.M.; Benbasat, A.Y.; Scarborough, D.M.; Krebs, D.E.; Paradiso, J.A. Gait analysis using a shoe-integrated wireless sensor system. IEEE Trans. Inf. Technol. Biomed. 2008, 12, 413–423.

- [14] Pan, G.; Zhang, Y.; Wu, Z. Accelerometer-based gait recognition via voting by signature points. Electron. Lett. 2009, 45, 1116–1118.

- [15] Avvenuti, M.; Casella, A.; Cesarini, D. Using gait symmetry to virtually align a triaxial accelerometer during running and walking. Electron. Lett. 2013, 49, 120–121.

- [16] Gafurov, D.; Snekkenes, E. Arm swing as a weak biometric for unobtrusive user authentication. Proceedings of IEEE International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Harbin, China, 15–17 October 2008; pp. 1080–1087.

- [17] LeMoyne, R.; Mastroianni, T.; Cozza, M.; Coroian, C.; Grundfest, W. Implementation of an iPhone for characterizing Parkinson's disease tremor through a wireless accelerometer application. Proceedings of 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Buenos Aires, Argentina, 31 August–4 September 2010; pp. 4954–4958.

- [18] Chan, H.K.; Zheng, H.; Wang, H.; Gawley, R.; Yang, M.; Sterritt, R. Feasibility study on iPhone accelerometer for gait detection. Proceedings of 2011 5th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth), Dublin, Ireland, 23–26 May 2011; pp. 184–187.

- [19] Zandbergen, P.A. Accuracy of iPhone locations: A comparison of assisted GPS, WiFi and cellular positioning. Trans. GIS 2009, 13, 5–25.

- [20] Shoemake, K. Quaternions, 1994. Available online: http://www.cs.ucr.edu/~vbz/resources/quatut.pdf (accessed on 11 September 2014).

- [21] Haug, E. Computer Aided Analysis and Optimization of Mechanical System Dynamics; NATO ASI Series; Springer-Verlag: Berlin Heidelberg, Germany, 1984.

- [22] Working, R. Euler Angles, Quaternions, and Transformation Matrices, 1977. Available online: http://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19770024290.pdf (accessed on 11 September 2014).

- [23] Zhang, R.; Jia, H.; Chen, T.; Zhang, Y. Attitude solution for strapdown inertial navigation system based on quaternion algorithm. Opt. Precis. Eng. 2008, 16, 1963–1970.

- [24] Sadeghi, H.; Allard, P.; Prince, F.; Labelle, H. Symmetry and limb dominance in able-bodied gait: A review. Gait Posture 2000, 12, 34–45.

- [25] Diebel, J. Representing attitude: Euler angles, unit quaternions, and rotation vectors. Matrix 2006, 58, 15–16.

Table 1. Gait characteristic parameters of the database samples D1 to D10.

| Parameter | D1 | D2 | D3 | D4 | D5 | D6 | D7 | D8 | D9 | D10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Gait Frequency (Hz) | 1.036 | 1.041 | 0.957 | 1.009 | 0.939 | 0.845 | 1.002 | 0.945 | 0.987 | 1.019 |
| Symmetry Coef. | 0.534 | 0.563 | 0.571 | 0.404 | 0.669 | 0.525 | 0.623 | 0.597 | 0.576 | 0.914 |
| Dynamic Range (m/s²) | 26.480 | 29.301 | 25.300 | 22.379 | 24.891 | 25.618 | 27.051 | 20.674 | 21.567 | 14.736 |

Table 2. Weighted vote sums of the measured data M1 to M10 against the database samples D1 to D10.

| Data Name | D1 | D2 | D3 | D4 | D5 | D6 | D7 | D8 | D9 | D10 | True/False |
|---|---|---|---|---|---|---|---|---|---|---|---|
| M1 | 17 | 26 | 48 | 53 | 50 | 71 | 61 | 61 | 36 | 72 | True |
| M2 | 28 |  | 29 | 47 | 52 | 48 | 39 | 45 | 30 | 50 | True |
| M3 | 50 | 65 |  | 71 | 45 | 68 | 55 | 31 | 45 | 93 | True |
| M4 | 40 | 66 | 50 |  | 73 | 72 | 45 | 52 | 54 | 82 | True |
| M5 | 51 | 70 | 54 | 57 |  | 77 | 46 | 39 | 43 | 96 | True |
| M6 | 45 | 46 | 29 | 49 | 51 |  | 48 | 43 | 41 | 76 | True |
| M7 | 51 | 58 | 65 | 44 | 65 | 87 |  | 50 | 36 | 72 | True |
| M8 | 46 | 58 | 24 | 73 | 68 | 76 | 46 |  | 45 | 94 | True |
| M9 | 48 | 45 | 53 | 45 | 79 | 72 | 69 | 48 |  | 69 | True |
| M10 | 76 | 61 | 48 | 62 | 53 | 90 | 66 | 44 | 28 |  | True |

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license ( http://creativecommons.org/licenses/by/3.0/).


Sun, B.; Wang, Y.; Banda, J. Gait Characteristic Analysis and Identification Based on the iPhone’s Accelerometer and Gyrometer. Sensors 2014, 14, 17037-17054. https://doi.org/10.3390/s140917037
