Abstract

In device-independent (DI) quantum protocols, security statements are agnostic to the internal workings of the quantum devices—they rely solely on classical interactions with the devices and specific assumptions. Traditionally, such protocols are set in a non-local scenario, where two non-communicating devices exhibit Bell inequality violations. Recently, a new class of DI protocols has emerged that requires only a single device. In this setting, the assumption of no communication is replaced by a computational one: the device cannot solve certain post-quantum cryptographic problems. Protocols developed in this single-device computational setting—such as for randomness certification—have relied on ad hoc techniques, making their guarantees difficult to compare and generalize. In this work, we introduce a modular proof framework inspired by techniques from the non-local DI literature. Our approach combines tools from quantum information theory, including entropic uncertainty relations and the entropy accumulation theorem, to yield both conceptual clarity and quantitative security guarantees. This framework provides a foundation for systematically analyzing DI protocols in the single-device setting under computational assumptions. It enables the design and security proof of future protocols for DI randomness generation, expansion, amplification, and key distribution, grounded in post-quantum cryptographic hardness.

1. Introduction

The fields of quantum and post-quantum cryptography are rapidly evolving. In particular, the device-independent (DI) approach for quantum cryptography is being investigated in different setups and for various protocols. Consider a cryptographic protocol and a physical device that is being used to implement the protocol. The DI paradigm treats the device as untrusted and possibly adversarial. This means that in proving security, one must assume the device may have been prepared by an adversary. Only limited, well-defined assumptions are made regarding the inner workings of the device. In such protocols, the honest party, called the verifier here, interacts with the untrusted device in a black-box manner, using classical communication. The security proofs are then based on properties of the transcript of the interaction, i.e., the classical data collected during the execution of the protocol, and the underlying assumptions.

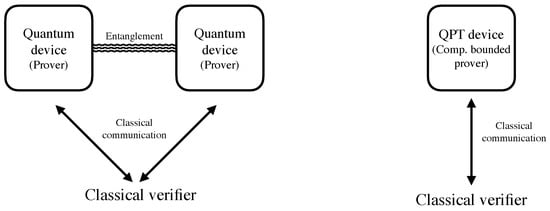

The most well-studied DI setup is the so-called “non-local setting” [1,2]. There, the protocols are implemented using (at least) two untrusted devices, and the assumption made is that the devices cannot communicate with each other during the execution of the protocol (or parts of it). In recent years, another variant has been introduced: instead of working with two devices, the protocol requires only a single device and the no-communication assumption is replaced by an assumption regarding the computational power of the device. More specifically, one assumes that during the execution of the protocol, the device is unable to solve certain computational problems, such as Learning With Errors (LWE) [3], which are believed to be hard for a quantum computer. (The exact setup and assumptions are explained in Section 3.) Both models are DI, in the sense that the actions of the quantum devices are uncharacterized. Figure 1 schematically presents the two scenarios.

Figure 1.

Two setups for device-independent protocols. On the left, a classical verifier is interacting classically with two non-communicating but otherwise all powerful quantum devices (also called provers) that can share entanglement. On the right, the verifier is interacting with a single polynomial-time quantum computer.

Because it may be challenging in practice to ensure that two quantum devices do not communicate, as required in the non-local setting, there is a strong incentive to study what can be achieved using only a single device. Indeed, after the novel proposals made in [4,5] for DI protocols for the verification of computation and randomness certification, many more protocols for various tasks and computational assumptions were investigated; see, e.g., [6,7,8,9,10,11,12,13,14,15,16]. Experimental works also followed, aiming at verifying quantum computation in the model of a single computationally restricted device [17,18]. With this research agenda advancing, more theoretical and experimental progress is needed before one can estimate whether this avenue is relevant for future quantum technologies or of purely theoretical interest.

In this work we are interested in the task of generating randomness. (Unless otherwise stated, when we discuss the generation of randomness, we refer to a broad family of tasks: randomness certification, expansion, and amplification, as well as, potentially, quantum key distribution. In the context of the current work, the differences are minor.) We consider a situation in which the device is prepared by a quantum adversary and then given to the verifier (the end user or customer). The verifier wishes to use the device in order to produce a sequence of random bits. In particular, the bits should be random also from the perspective of the quantum adversary. The protocol defines how the verifier interacts with the device (also called the prover). It should be constructed to guarantee that the verifier aborts with high probability if the device is not trustworthy. Otherwise, it must certify that the bits produced by the device are random and unknown to the adversary.

Let us slightly formalize the above. The story begins with the adversary preparing a quantum state ; the marginal is the initial state of the device given to the verifier while the adversary keeps the quantum register E for herself. In addition to the initial state , the device is described by the quantum operations, e.g., measurements, that it performs during the execution of the protocol. We assume that the device is a quantum polynomial time (QPT) device and thus can only perform efficient operations. Namely, the initial state is of polynomial size and the operations can be described by a polynomial size quantum circuit; these are formally defined in Section 2. The verifier can perform only (efficient) classical operations. Together with the verifier, the initial state of all parties is denoted by . (In a simple scenario, one can consider , i.e., the verifier is initially decoupled from the device and the adversary. This is, however, not necessarily the case in, for example, randomness amplification protocols [19]. We therefore allow for this flexibility with the above more general notation.) The verifier then executes the considered protocol using the device.

All protocols consist of what we call “test rounds” and “generation rounds”. The goal of a test round is to allow the verifier to check that the device is performing the operations that it is asked to apply by making it pass a test that only certain quantum devices can pass. The test and its correctness are based on the chosen computational assumption. (In the non-local setting, the test is based on the violation of a Bell inequality, or winning a non-local game with sufficiently high winning probability. Here the computational assumption “replaces” the Bell inequality.) For example, in [5], the cryptographic scheme being used is “Trapdoor Claw-Free Functions” (TCF)—a family of pairs of injective functions . Informally speaking, it is assumed that, for every image y, (a) given a “trapdoor” one could classically and efficiently compute two pre-images such that ; (b) without a trapdoor, even a quantum computer cannot come up with such that (with high probability). While there does not exist an efficient quantum algorithm that can compute both pre-images for a given image y without a trapdoor, a quantum device can nonetheless hold a superposition of the pre-images by computing the function over a uniform superposition of all inputs to receive . These insights (and more—see Section 2.3 for details) allow one to define a test based on TCF such that a quantum device that creates can win while other devices that do not hold a trapdoor cannot. Moreover, the verifier, holding the trapdoor, will be able to check classically that the device indeed passes the test.

Let us move on to the generation rounds. In these rounds, the device produces the output bits O, which are supposed to be random. During the execution of the protocol, some additional information, such as a chosen public key for example (or whatever is determined by the protocol), may be publicly announced or leaked; we denote this side information by S.

After executing all the test and generation rounds, the verifier checks if the average winning probability in the test rounds is higher than some pre-determined threshold probability . If it is, then the protocol continues and otherwise aborts. Let the final state of the entire system, conditioned on not aborting the protocol, be . To show that randomness has been produced, one needs to lower bound the conditional smooth min-entropy [20] (the formal definitions are given in Section 2). Indeed, this is the quantity that tightly describes the amount of information-theoretically uniform bits that can be extracted from the output O, given S and E, using a quantum-proof randomness extractor [21,22]. The focus of our work is to supply explicit lower bounds on in a general and modular way.
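As a minimal sketch of the accept/abort decision just described, the following Python snippet computes the empirical winning frequency over the test rounds and compares it with the threshold. The function name `verifier_decision`, the `(is_test, passed)` encoding of a round, and the convention of aborting when no test round occurred are illustrative assumptions and are not part of the protocol specification.

```python
from typing import List, Optional, Tuple

Round = Tuple[bool, Optional[bool]]  # (is_test, passed); passed is ignored in generation rounds


def verifier_decision(rounds: List[Round], threshold: float) -> str:
    """Return "continue" if the test-round win frequency exceeds the threshold, else "abort"."""
    test_results = [bool(passed) for is_test, passed in rounds if is_test]
    if not test_results:
        # Assumption of this sketch: without any test round nothing can be certified.
        return "abort"
    win_frequency = sum(test_results) / len(test_results)
    return "continue" if win_frequency > threshold else "abort"


# Hypothetical transcript: three test rounds (two won) and two generation rounds.
transcript = [(True, True), (False, None), (True, True), (False, None), (True, False)]
decision = verifier_decision(transcript, threshold=0.6)
```

Only the classical transcript enters the decision, in line with the black-box interaction between the verifier and the device.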

An important remark is in order before continuing. In our setting, the device is computationally bounded, but the output bits O must be secure against an unbounded adversary. This means that the adversary may retain her system E and later perform arbitrary operations on it, possibly using the side information S. Proving security in this setting implies that the computational assumption needs to hold only during the execution of the protocol. Once the output is generated, the security guarantee remains valid even if the computational assumption (such as LWE) is later broken. This property, known as “security lifting”, is a fundamental feature of all DI protocols based on computational assumptions.

1.1. Motivation of the Current Work

The setup of two non-communicating devices has naturally emerged from the study of quantum key distribution (QKD) protocols and non-local games (or Bell inequalities). As such, the quantum information theoretic toolkit for proving the security of protocols such as DIQKD, DI randomness certification, and the like was developed over many years and used for the analysis of numerous quantum protocols (see, for example, the survey [23]). The well-established toolkit includes powerful techniques that allow bounding the conditional smooth min-entropy mentioned above. Examples of such tools are the entropic uncertainty relations [24], the entropy accumulation theorem [25,26], and more. The usage of these tools allows one to derive quantitatively strong lower bounds on , and to handle realistically noisy quantum devices.

Unlike the protocols in the non-local setup, the newly developed protocols, for example, for randomness certification with a single device restricted by its computational power, are each analyzed using ad hoc proof techniques. On the qualitative side, such proofs make it harder to separate the wheat from the chaff, resulting in less modular and insightful claims. Quantitatively, the strength of the achieved statements is hard to judge—they are most likely not strong enough to lead to practical applications, and it is unknown whether this is due to a fundamental difficulty or a result of the proof technique. As a consequence, it is unclear whether such protocols are of relevance for future technology.

In this work, we show how to combine the information theoretic toolkit with assumptions regarding the computational power of the device. More specifically, we prove the lower bounds on the quantity by exploiting post-quantum cryptographic assumptions and quantum information-theoretic techniques, in particular the entropic uncertainty relation and the entropy accumulation theorem. Prior to our work, it was believed that such an approach cannot be taken in the computational setting (see the discussion in [5]). Once a bound on is proven, the security of the considered protocols then follows from our bounds using standard tools. The developed framework is general and modular. We use the original work of [5] as an explicit example; the same steps can be easily applied to, for example, the protocols studied in the recent works [14,15].

1.2. Main Ideas and Results

The main tool which is used to lower bound the conditional smooth min-entropy in DI protocols in the non-local setting is the entropy accumulation theorem (EAT) [25,27]. The EAT deals with sequential protocols, namely, protocols that proceed in rounds, one after the other, and in each round, some bits are being output. Roughly speaking, the theorem allows us to relate the total amount of entropy that accumulates throughout the execution of the protocol to, in some sense, an “average worst-case entropy of a single round” (see Section 2 for the formal statements). It was previously unclear how to use the EAT in the context of computational assumptions, and so [5] used an ad hoc proof to bound the total entropy.

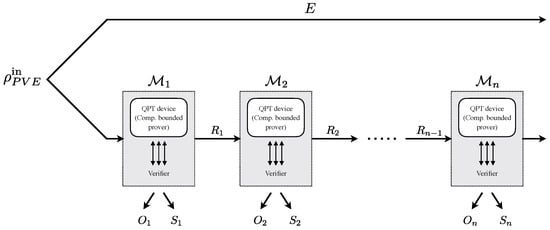

The general structure of a protocol that we consider is shown in Figure 2. The initial state of the system is . The protocol, as mentioned, proceeds in rounds. Each round includes interaction between the verifier and the prover and, overall, can be described by an efficient quantum channel for round . The channels output some outcomes and side information . In addition, the device (as well as the verifier) may keep the quantum and classical memory from previous rounds—this is denoted in the figure by the registers . We remark that there is only one device and the figure merely describes the way that the protocol proceeds. That is, in each round , the combination of the actions of the device and the verifier, together, defines the maps . The adversary’s system E is untouched by the protocol. One could also consider more complex protocols in which the adversary’s information does change in some ways. This can be covered using the generalized EAT [26]. We do not do so in the current manuscript since all protocols so far fall in the above description, but the results can be extended to the setup of [26].

Figure 2.

The general structure of a protocol that we consider. The initial state of the entire system is . The protocol proceeds in rounds: Each round includes an interaction between the verifier and the prover, as shown by the gray boxes in the figure, and can be described by an efficient quantum channel for every round . The channels output outcomes and side information . The device may keep the quantum memory from previous rounds using the registers . The adversary’s system E is untouched by the protocol. This structure fits the setup of the entropy accumulation theorem [25] and can easily be extended to that of the generalized entropy accumulation [26].

We are interested in bounding the entropy that accumulates by the end of the protocol: , where and similarly . The EAT tells us that, under certain conditions, this quantity is lower bounded, to the first order in n, by with t of the form

Here, denotes the quantum channel that describes the action of the i-th round of the protocol on the input state , including both the verifier’s interaction and the device’s response. The entropy is then computed with respect to the output distribution induced by this channel on the input state . The value is the average winning probability of the device in the test rounds, and is the set of all (including inefficiently constructed) states of polynomial size that achieve the winning probability .

To lower bound the entropy appearing in Equation (1), one needs to use the computational assumption being made. This can be performed in various ways. In the current work, we show how statements about anti-commuting operators, such as those proven in [5,14,15], can be brought together with the entropic uncertainty relation [24], another tool frequently used in quantum information theory, to obtain the desired bound. In combination with our usage of the EAT, the bound on follows.

The described techniques are made formal in the rest of the manuscript. We use [5] as an explicit example, also deriving quantitative bounds. We discuss the implications of the quantitative results in Section 4 and their importance for future works. Furthermore, our results can be used directly to prove the full security of the DIQKD protocol of [9].

The core technical result of this work is formalized in Theorem 2. This theorem provides a concrete, non-asymptotic lower bound on the conditional smooth min-entropy of the protocol output, conditioned on all side information including the adversary’s system. It gives a usable, round-scalable guarantee that applies to protocols based on noisy trapdoor claw-free functions, assuming only computational limitations on the device.

Informally, Theorem 2 states the following. If the device passes the test rounds with a sufficiently high success probability, then the output bits collected in the generation rounds contain min-entropy linear in the number of rounds, even conditioned on all classical and quantum side information.

We add that on top of the generality and modularity of our technique, its simplicity contributes to a better understanding of the usage of post-quantum cryptographic assumptions in randomness generation protocols. Thus, apart from being a tool for the analysis of protocols, we also shed light on what is required for a protocol to be strong and useful. For example, we can see from the explanation given in this section that the computational assumptions enter in three forms in Equation (1):

1. The channels must be efficient.

2. The states must be of polynomial size.

3. Due to the minimization, the states that we need to consider may also be inefficient to construct, even though the device is efficient.

Points 1 and 2 are the basis when considering the computational assumption and constructing the test; in particular, they allow one to bound , up to a negligible function , where is a security parameter defined by the computational assumption.

Point 3 is slightly different. The set over which the minimization is taken determines the strength of the computational assumption that one needs to consider. For example, it indicates that the protocol in [5] requires that the LWE assumption is hard even with a potentially inefficient polynomial-size advice state. This is a (potentially) stronger assumption than the “standard” formulation of LWE. Note that the stronger assumption is required even though the initial state of the device, , is efficient to prepare (i.e., it does not act as an advice state in this case). In [5], this delicate issue arises only when analyzing everything that can happen to the initial state throughout the entire protocol and conditioning upon not aborting. In the current work, we directly see and deal with the need for allowing advice states from the minimization in Equation (1).

We conclude our work with a discussion of the information that we allow the protocol to leak to the adversary via the side information register . One obvious part of the side information is the cryptographic key , which is used to initiate the protocol, together with the type of challenge (as considered in [5]). Here we consider leakage of additional information to the adversary and its effect on the smooth min-entropy . Regarding the constituents of the side information, it is important to note that even leakage of the trapdoor is allowed. Naively, this should render the cryptographic primitive useless by undermining the computational assumption. It does not, because we only demand that the computational assumption holds with respect to the device we are interacting with, and only during the execution of the protocol. Moreover, since the adversary E is unbounded and has access to the cryptographic key , we can assume that she computes the trapdoor herself.

1.3. Related Works

- This work builds on [5] as a case study and draws on several of its results. We make use of the cryptographic primitive introduced in [5] (Section 3), as well as lemmas from [5] (Sections 6 and 7), which together define the core of the computational assumption underlying randomness generation.

  Our main advancement over this prior work is the ability to construct a von Neumann entropy bound for a single protocol round. This enables us to entirely replace the protocol-specific analysis in [5] (Section 8), a task that was conjectured to be beyond the reach of entropy accumulation techniques, by adapting the EAT framework of [28] to the computational setting.

  In Section 8 of [5], the authors develop a two-step analysis: they define an idealized device equipped with a fictitious projective measurement used to identify when the device lies in a “good subspace”, and then argue that the real protocol approximates the behavior of this idealized version. The entropy bound is derived manually, using a custom score function and round-by-round reductions that are specific to their protocol. While this approach is rigorous, it is mathematically involved and lacks generality.

  In contrast, our framework defines a per-round min-trade-off function and uses a general entropy accumulation argument to bound the total output entropy. This substitution yields a conceptually cleaner and modular proof, and allows us to derive concrete, non-asymptotic entropy bounds. This stands in contrast to the asymptotic nature of the results in [5], which do not quantify the amount of certified entropy for any finite choice of parameters.

  The absence of explicit bounds in [5] was later addressed by the same authors in [29], where a protocol variant generates n bits of randomness in a single round but without the ability to accumulate entropy across multiple rounds. As a result, achieving meaningful entropy guarantees in that setting requires choosing security parameters that are unreasonably large. This mirrors the trade-off in our work, where a large number of rounds is similarly required to achieve practically significant entropy rates.

- In [16], the authors investigate the certification of randomness on “NISQ” (Noisy Intermediate Scale Quantum) devices using the entropy accumulation theorem. The verification scheme proposed in that work is computationally hard. As a result, there is a tension between ensuring security and maintaining practicality: the security parameter must be large enough to make the underlying problem hard, yet small enough to keep verification feasible. Perhaps as a consequence, the computational assumptions described in that work are somewhat amorphous.

2. Preliminaries

Throughout this work, we use the symbol as the identity operator and as the characteristic function interchangeably; the usage is clear due to context. We denote when x is sampled uniformly from the set X or when x is sampled according to a distribution . The Bernoulli distribution with is denoted as The Pauli operators are denoted by . The set is denoted as .

2.1. Mathematical Background

Definition 1 (Negligible function).

A function $\eta(n)$ is said to be negligible if for every $c \in \mathbb{N}$ there exists $N \in \mathbb{N}$ such that for every $n > N$, $\eta(n) < n^{-c}$.
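To make the definition concrete, the sketch below checks numerically that $2^{-n}$ eventually falls below $n^{-c}$ for a few values of c, using the standard reading of negligibility ($\eta(n) < n^{-c}$ for all sufficiently large n). The helper name `last_violation` and the finite search horizon are assumptions made for illustration; a finite search can only suggest, not prove, that a function is negligible.

```python
def last_violation(c: int, horizon: int = 1000) -> int:
    """Largest n <= horizon with 2**(-n) >= n**(-c), or 0 if no such n exists.

    The comparison 2**(-n) >= n**(-c) is rewritten as n**c >= 2**n so that it
    can be evaluated exactly with Python integers.
    """
    worst = 0
    for n in range(1, horizon + 1):
        if n ** c >= 2 ** n:
            worst = n
    return worst


# For each exponent c, every n above the reported value satisfies
# 2**(-n) < n**(-c) within the search horizon.
thresholds = {c: last_violation(c) for c in (1, 2, 3)}
```

The threshold grows with c, which is exactly what the quantifier order in the definition permits: N may depend on c.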

Lemma 1.

Let be a negligible function. The function is also negligible.

Proof.

Assume, without loss of generality, the monotonicity and positivity of some negligible function . Given , we wish to find such that , . We denote the following function:

This means

yielding

By the negligibility property of , the function diverges to infinity. Therefore, such that

and for all

□

Corollary 1.

Let η be a negligible function and let be the binary entropy function. There exists a negligible function ξ for which the following holds:
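Reading the corollary as the statement that the binary entropy of a negligible quantity is itself bounded by a negligible function, a quick numerical illustration is given below. The choice $\eta(n) = 2^{-n}$, the comparison against $n^{-3}$, and the function name `binary_entropy` are assumptions of this sketch, which illustrates the claim for one example and does not replace the proof.

```python
import math


def binary_entropy(p: float) -> float:
    """Binary entropy h(p) in bits, with the convention h(0) = h(1) = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    # log1p keeps the (1 - p) term accurate when p is extremely small.
    return -p * math.log2(p) - (1.0 - p) * math.log1p(-p) / math.log(2.0)


# h(2**-n) behaves roughly like n * 2**-n for large n, so it still
# decays faster than any fixed inverse polynomial in n.
samples = [(n, binary_entropy(2.0 ** -n)) for n in (20, 40, 80)]
```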

Definition 2 (Hellinger distance).

Given two probability distributions and , the Hellinger distance between P and Q is defined as
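The following sketch computes the Hellinger distance for finite distributions, assuming the squared convention $H^2(P,Q) = 1 - \sum_x \sqrt{P(x)Q(x)}$ that is common in the NTCF literature; other texts use a different normalization. The dictionary representation of a distribution and the name `hellinger_sq` are illustrative choices.

```python
import math
from typing import Dict, Hashable

Distribution = Dict[Hashable, float]


def hellinger_sq(p: Distribution, q: Distribution) -> float:
    """Squared Hellinger distance 1 - sum_x sqrt(p(x) q(x)) (assumed convention)."""
    support = set(p) | set(q)
    overlap = sum(math.sqrt(p.get(x, 0.0) * q.get(x, 0.0)) for x in support)
    return 1.0 - overlap


uniform = {0: 0.5, 1: 0.5}
biased = {0: 0.9, 1: 0.1}
distance = hellinger_sq(uniform, biased)
```

With this convention the quantity is 0 for identical distributions and 1 for distributions with disjoint supports.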

Lemma 2 (Jordan’s lemma extension).

Let be two self-adjoint unitary operators acting on a Hilbert space of a countable dimension. Let L be a normal operator acting on the same space such that . There exists a decomposition of the Hilbert space into a direct sum of orthogonal subspaces such that for all α, and given , all three operators satisfy .

Jordan’s lemma appears, among other places, in [2] (Appendix G.4). The sole change in the proof of the extension is in the choice of diagonalizing basis of the unitary operator . The chosen basis is now a mutual diagonalizing basis of and L, which exists due to commutative relations.

Lemma 3.

Let be Hermitian projections acting on a Hilbert space of countable dimension such that . There exists a decomposition of the Hilbert space into a direct sum of orthogonal subspaces such that and K are 2 × 2 block diagonal. In addition, in subspaces of dimension 2, Π and M take the forms

where for some .

Proof.

Given a Hermitian projection , consider the unitary operator , satisfying . Therefore, the operators satisfy the conditions for Lemma 2 and there exists the decomposition of to a direct sum of orthogonal subspaces such that in these subspaces,

One can recognize the operators as and , respectively, for some angle . Therefore, there exists a basis of such that the operators are and , respectively, for some angle . Consequently, in this subspace, the projections take the form

with . □

Lemma 4 (Jensen’s inequality extension).

Let be a convex function over a convex set such that for every there exists a subgradient. Let be a sequence of random variables with support over U. Then, .

2.2. Tools in Quantum Information Theory

We state here the main quantum information theoretic definitions and techniques appearing in previous work, on which we build in the current manuscript.

Definition 3 (von Neumann entropy).

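Let $\rho_A$ be a density operator over the Hilbert space $\mathcal{H}_A$. The von Neumann entropy of $\rho_A$ is defined as

$$H(A)_\rho = -\mathrm{Tr}\left(\rho_A \log \rho_A\right).$$

The conditional von Neumann entropy of A given B is defined to be

$$H(A|B)_\rho = H(AB)_\rho - H(B)_\rho.$$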
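As a small worked instance of the von Neumann entropy, $-\mathrm{Tr}(\rho \log \rho)$, the sketch below evaluates it in bits for a single-qubit density matrix using the closed-form eigenvalues of a 2 × 2 Hermitian matrix. The function name `qubit_entropy` and the nested-tuple matrix representation are assumptions made for illustration; larger systems would need a general eigenvalue routine.

```python
import math


def qubit_entropy(rho) -> float:
    """Von Neumann entropy (in bits) of a 2x2 density matrix ((a, b), (c, d))."""
    (a, b), (c, d) = rho
    trace = (a + d).real
    det = (a * d - b * c).real
    # Eigenvalues of a 2x2 Hermitian matrix: (trace +/- sqrt(trace^2 - 4 det)) / 2.
    disc = math.sqrt(max(0.0, trace * trace - 4.0 * det))
    eigenvalues = [(trace + disc) / 2.0, (trace - disc) / 2.0]
    # Convention 0 * log 0 = 0: skip numerically vanishing eigenvalues.
    return sum(-lam * math.log2(lam) for lam in eigenvalues if lam > 1e-15)


maximally_mixed = ((0.5, 0.0), (0.0, 0.5))
plus_state = ((0.5, 0.5), (0.5, 0.5))  # projector onto (|0> + |1>)/sqrt(2)
entropies = (qubit_entropy(maximally_mixed), qubit_entropy(plus_state))
```

The maximally mixed state carries one bit of entropy and a pure state carries none. The conditional entropy of A given B is then obtained as the difference between the joint entropy and the entropy of B.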

Definition 4 (Square overlap [24]).

Let Π and M be two observables, described by orthonormal bases and on a d-dimensional Hilbert space . The measurement processes are then described by the completely positive maps

The square overlap of Π and M is then defined as

Definition 5.

Let M be an observable on a d-dimensional Hilbert space and let be its corresponding map as appearing in Equation (3). Given a state , we define the conditional entropy of the measurement M given the side information B as

Lemma 5 (Entropic uncertainty principle [24] (Supplementary, Corollary 2)).

Let Π and M be two observables on a Hilbert space and let c be their square overlap. For any density operator ,

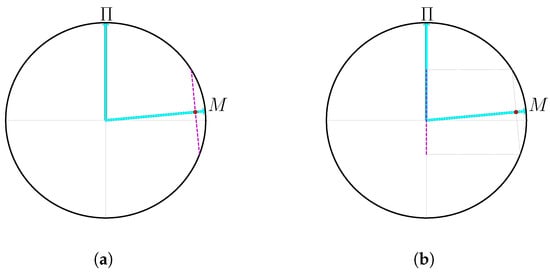

In order to provide a clear understanding of the quantum uncertainty that arises from two measurements, as in Lemma 5, it is beneficial to examine the square overlap between those measurements (Definition 4). The Bloch sphere representation, which pertains to Hilbert spaces of two dimensions, offers a lucid illustration of this concept as shown in Figure 3.

Figure 3.

A Bloch sphere representation of the uncertainty relations. A small square overlap between and M corresponds to a larger angle between the two. Each point on the arrow M corresponds to a probability of some unknown state that must lie on the corresponding magenta dashed line in (a). All the states described in (a) allow distributions of that correspond to the points on the dashed magenta line described in (b). A small square overlap and high values of mean that the allowed distributions of are close to uniform. (a) All possible states with on the magenta dashed line. (b) All possible distributions of on the magenta dashed line.
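The relation between the angle separating two qubit measurements and their square overlap can be sketched numerically. The snippet assumes the usual definition of the square overlap as the largest squared inner product between basis vectors, $c = \max_{i,j} |\langle \pi_i | m_j \rangle|^2$, and the Maassen-Uffink-type quantity $\log_2(1/c)$ as the lower bound in the entropic uncertainty relation. It is restricted to real two-dimensional bases, and all function names are illustrative.

```python
import math
from typing import List, Tuple

Vector = Tuple[float, float]


def rotated_basis(theta: float) -> List[Vector]:
    """Real orthonormal qubit basis obtained by rotating the computational basis by theta."""
    return [(math.cos(theta), math.sin(theta)), (-math.sin(theta), math.cos(theta))]


def square_overlap(basis_a: List[Vector], basis_b: List[Vector]) -> float:
    """Largest squared inner product between vectors of the two (real) bases."""
    return max((u[0] * v[0] + u[1] * v[1]) ** 2 for u in basis_a for v in basis_b)


def uncertainty_bound_bits(c: float) -> float:
    """Assumed form of the lower bound, log2(1/c), in bits."""
    return math.log2(1.0 / c)


z_basis = rotated_basis(0.0)
x_basis = rotated_basis(math.pi / 4)  # a state-space angle pi/4 is a Bloch angle pi/2
c = square_overlap(z_basis, x_basis)
bound = uncertainty_bound_bits(c)
```

Mutually unbiased bases give the smallest possible overlap for a qubit, 1/2, and therefore the largest bound, one bit. Identical bases give an overlap of 1 and a trivial bound.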

Lemma 6.

Given two non-trivial Hermitian projections

acting on a two-dimensional Hilbert space , the eigenvalues of the operator are and . In addition, the square overlap of the operators in Equation (5) is .

Lemma 7 (Continuity of conditional entropies [30] (Lemma 2)).

For states ρ and σ on a Hilbert space , if , then

Lemma 8 (Good-subspace projection [5] (Lemma 7.2)).

Let Π and M be two Hermitian projections on and ϕ a state on . Let and

Let . Let be the orthogonal projection on the j-th block as given in Lemma 3 and denote as the square overlap of Π and M in the corresponding block. Define Γ to be the orthogonal projection on all blocks such that the square overlap is bounded by c:

Then,

The above lemma was proven in [5]. Since it plays a crucial part in the work, we include the proof for completeness.

Proof.

Using Jordan’s lemma we find a basis of , in which

where , , for some angles . Let be the orthogonal projection on those two-dimensional blocks such that . Note the following:

- commutes with both M and , but not necessarily with .

- is the projection on blocks where the eigenvalues of the operator are in the range as a consequence of Lemma 6.

Assume that . This implies that is supported on the range of M. For any block j, let be the projection on the block and . It follows from the decomposition of M and to two-dimensional blocks and the definition of that (this is easily seen in the Bloch sphere representation):

Using that for j with such that and the fact that the polynomial is strictly increasing in the regime , we get

From Equation (10) and , it follows that

Consequently,

This concludes the case where .

Consider and , as otherwise the lemma is trivial. Let . By the gentle measurement lemma [31] (Lemma 9.4.1),

Using the definition of , it follows that

A main tool that we use is the entropy accumulation theorem (EAT) [25,32]. For our quantitative results, we use the version appearing in [25]; any other version of the EAT, e.g., [32] or the generalization in [26], can be used instead in order to optimize the randomness rates. We do not explain the EAT in detail; the interested reader is directed to [28] for a pedagogical explanation.

Definition 6 (EAT channels).

Quantum channels are said to be EAT channels if the following requirements hold:

1. are finite-dimensional quantum systems of dimension , and are finite-dimensional classical systems (RV). are arbitrary quantum systems.

2. For any and any input state , the output state has the property that the classical value can be measured from the marginal without changing the state. That is, for the map describing the process of deriving from and , it holds that .

3. For any initial state , the final state fulfills the Markov chain conditions .

Definition 7 (Min-trade-off function).

Let be a family of EAT channels and denote the common alphabet of . A differentiable and convex function from the set of probability distributions p over to the real numbers is called a min-trade-off function for if it satisfies

for all , where the infimum is taken over all purifications of input states of for which the marginal on of the output state is the probability distribution p.

Definition 8.

Given an alphabet , for any , we define the probability distribution over such that for any ,

Theorem 1 (Entropy Accumulation Theorem (EAT)).

Let for be EAT channels, let ρ be the final state, Ω an event defined over , the probability of Ω in ρ and the final state conditioned on Ω. Let . For convex, is a min-trade-off function for , and any such that for any ,

where

2.3. Post-Quantum Cryptography

Definition 9 (Trapdoor claw-free function family).

For every security parameter , let and be finite sets of inputs, outputs, and keys, respectively. A family of injective functions

is said to be trapdoor claw-free (TCF) family if the following holds:

- Efficient Function Generation: There exists a PPT algorithm which takes the security parameter and outputs a key and a trapdoor t.

- Trapdoor: For all keys , there exists an efficient deterministic algorithm such that, given t, for all and , .

- Claw-Free: For every QPT algorithm receiving as input and outputting a pair , the probability to find a claw is negligible, i.e., there exists a negligible function η for which the following holds:

The noisy trapdoor claw-free (NTCF) family used in this work is a generalization of the standard TCF definition presented above. The NTCF primitive was introduced in [5] as part of the construction of cryptographic protocols secure against quantum adversaries. Although the cryptographic primitive underlying Protocol 1 is an NTCF, it is often more intuitive to reason about the simpler TCF structure from Definition 9, which captures the essential properties in the noise-free setting.
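To make the noise-free TCF structure concrete, the following is a minimal toy sketch in Python. It only illustrates the three ingredients of Definition 9 (key generation, trapdoor inversion, and the notion of a claw); it is not the LWE-based construction of [5] and it is not secure, since the key here reveals the trapdoor. The names `gen`, `f`, `inv` and the modulus `Q` are hypothetical and chosen for illustration only.

```python
import secrets

Q = 101  # small prime modulus; toy size, chosen only for illustration


def gen():
    """Toy key generation: returns (key, trapdoor). Hypothetical and NOT secure."""
    a = 1 + secrets.randbelow(Q - 1)  # invertible multiplier in Z_Q
    s = 1 + secrets.randbelow(Q - 1)  # nonzero secret shift
    key = (a, (a * s) % Q)            # key = (a, u) with u = a*s mod Q
    trapdoor = (pow(a, -1, Q), s)     # modular inverse needs Python 3.8+
    return key, trapdoor


def f(key, b, x):
    """f_{k,b}(x) = a*x + b*u mod Q, injective in x for each fixed b."""
    a, u = key
    return (a * x + b * u) % Q


def inv(trapdoor, b, y):
    """Trapdoor inversion: the unique x with f_{k,b}(x) = y."""
    a_inv, s = trapdoor
    return (a_inv * y - b * s) % Q


if __name__ == "__main__":
    key, td = gen()
    y = f(key, 0, 7)
    x0, x1 = inv(td, 0, y), inv(td, 1, y)
    # (x0, x1) is a claw: both branches map to the same image y.
    print(x0, x1, f(key, 0, x0) == f(key, 1, x1) == y)
```

In this toy, every image y has exactly one pre-image under each branch, and the two pre-images differ by the secret shift s. A secure family must hide this relation from any QPT algorithm that only sees the key, which the toy does not do.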

Definition 10

(NTCF family [5]). For every security parameter , let and be finite sets of inputs, outputs, and keys, respectively, and the set of distributions over . A family of functions

is said to be a noisy trapdoor claw-free (NTCF) family if the following conditions hold:

- Efficient Function Generation: There exists a probabilistic polynomial time (PPT) algorithm which takes the security parameter and outputs a key and a trapdoor t.

- Trapdoor Injective Pair: For all keys , the following two conditions are satisfied:

  1. Trapdoor: For all and . In addition, there exists an efficient deterministic algorithm such that for all and , .

  2. Injective Pair: There exists a perfect matching relation such that if and only if .

- Efficient Range Superposition: For every function in the family , there exists a function (not necessarily a member of ) such that the following hold:

  1. For all and , and .

  2. There exists an efficient deterministic algorithm (not requiring a trapdoor) such that

  3. There exists some negligible function η such that

     where is the Hellinger distance for distributions defined in Definition 2.

  4. There exists a quantum polynomial time (QPT) algorithm that prepares the quantum state

- Adaptive Hardcore Bit: For all keys , the following holds. For some integer w that is a polynomially bounded function of λ, we have the following:

  1. For all and , there exists a set such that for some negligible function η. In addition, there exists a PPT algorithm that checks for membership in given and the trapdoor t.

  2. Let

     then, for any QPT algorithm and any polynomial-size (potentially inefficient to prepare) advice state ϕ independent of the key k, there exists a negligible function for which the following holds (we remark that the same computational assumption is used in [5], even though the advice state is not included explicitly in the definition therein):

Lemma 9

(Informal: existence of NTCF family [5] (Section 4)). There exists an LWE-based construction of an NTCF family as in Definition 10. (As previously noted, [5] assumes that the LWE problem is hard also when given an advice state. Thus, though not explicitly stated, the construction in [5] (Section 4) is secure with respect to an advice state.)

Protocol 1 (Entropy accumulation protocol). High-level description: in each round, the verifier interacts with an untrusted device by sending a challenge based on a noisy trapdoor claw-free function and receiving a response. The round is designated as either a test or generation round. In test rounds, the verifier checks whether the device behaves consistently with the structure of the function (either via a pre-image or equation check). In generation rounds, the verifier collects output bits intended to serve as randomness. The variable encodes the outcome of the round in a unified way: it stores a candidate output bit if the associated check passes, and a placeholder value (2) otherwise. This allows both test and generation rounds to be treated uniformly in the later entropy analysis.

3. Randomness Certification

Our main goal in this section is to provide a framework for lower bounding the entropy accumulated during the execution of a protocol involving a single quantum device. Protocol 1 specifies the randomness generation protocol used in our setting, which consists of n rounds of classical interaction between a verifier and a quantum device. The security of the protocol is based on the existence of a noisy trapdoor claw-free (NTCF) family , constructed under the LWE assumption [3], following [5]. The protocol makes use of the keys sampled from and a security parameter , with all definitions stated implicitly in terms of these.

In each round, the verifier samples a bit to determine whether the round is a test round () or a generation round (). When , the device produces a pair in both types of rounds. The difference lies in how the verifier handles this response: In test rounds, the verifier uses the trapdoor to check whether the response is consistent with the challenge, contributing to a success/failure count. In generation rounds, no check is performed, and the output bit is retained as part of the protocol’s output. Correctness in generation rounds is inferred from the device’s performance in the test rounds.

We denote by a unified variable that stores either the output bit (when a check is passed or skipped) or a placeholder value otherwise. The final quantitative result of this section is a lower bound on the smooth min-entropy of the output bits , conditioned on the adversary’s information and public transcript.
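The round structure described above can be sketched as a small bookkeeping loop. This is only a schematic illustration under stated assumptions: the test-round probability `gamma`, the abort `threshold`, and the mock `device` interface (a callable returning a bit and a pass flag) are hypothetical, and the two test types (pre-image and equation) are collapsed into a single pass/fail flag.

```python
import random

PLACEHOLDER = 2  # value recorded when a check fails, as in the protocol description


def run_rounds(n, gamma, device, threshold, rng):
    """Sketch of the verifier's bookkeeping over n rounds.

    gamma     -- probability that a round is a test round (assumed parameter)
    device    -- callable rng -> (bit, passed); a mock stand-in for the real device
    threshold -- minimum fraction of passed test rounds (assumed abort rule)
    """
    record, tests, passes = [], 0, 0
    for _ in range(n):
        is_test = rng.random() < gamma
        bit, passed = device(rng)
        if is_test:
            tests += 1
            passes += int(passed)
            record.append(bit if passed else PLACEHOLDER)
        else:
            record.append(bit)  # generation round: no check is performed
    accepted = tests > 0 and passes / tests >= threshold
    return record, accepted


if __name__ == "__main__":
    # Mock device: outputs a fair bit and passes a check with probability 0.99.
    mock = lambda rng: (rng.randrange(2), rng.random() < 0.99)
    record, accepted = run_rounds(1000, 0.1, mock, 0.95, random.Random(0))
    print(len(record), accepted)
```

The point of the unified record is that test and generation rounds produce values over the same alphabet, so a single per-round random variable can be fed into the later entropy analysis.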

3.1. Single-Round Entropy

In this section, we analyze a single round of Protocol 1, presented as Protocol 2.

Protocol 2 (Single-round protocol).

3.1.1. One Round Protocols and Devices

Protocol 2, roughly, describes a single round of Protocol 1. The goal of Protocol 2 can be clarified by thinking about an interaction between a verifier and a quantum prover in an independent and identically distributed (IID) scenario, in which the device repeats the same actions in each round. Upon multiple rounds of interaction between the two, the verifier is convinced that the winning rate provided by the prover is as high as expected and can therefore continue with the protocol. We will, of course, use the protocol later on without assuming that the device behaves in an IID manner throughout the multiple rounds of interaction.

We begin by formally defining the most general device that can be used to execute the considered single round Protocol 2.

Definition 11

(General device [5]). A general device is a tuple that receives as input and is specified by the following:

1. A normalized density matrix .

   - is a polynomial (in λ) space, private to the device.

   - is a space private to the device whose dimension is the same as the cardinality of .

   - For every , is a sub-normalized state such that

2. For every , a projective measurement on , with outcomes . (The value w is determined by the NTCF construction. The string d is used in equation-test rounds as a witness that the verifier can check using the trapdoor. It does not play a direct role in the entropy analysis.)

3. For every , a projective measurement on , with outcomes .

Definition 12 (Valid response).

Let be a challenge derived from the NTCF family associated with key k.

1. A pair is called a valid response to a pre-image test if . There are exactly two such valid responses for each challenge y, and .

2. A pair is called a valid response to an equation test if for both . Intuitively, this means that if holds, then the response is valid (up to some choices of d that the verifier always rejects, such as ).

We denote these sets of valid responses as and , for the pre-image and equation tests, respectively. These conditions define the responses that the verifier accepts during test rounds of Protocol 1.

Definition 13 (Efficient device).

We say that a device is efficient if the following holds:

1. The state ϕ is a polynomial-size (in λ) “advice state” that is independent of the chosen keys . The state might not be producible using a polynomial-time quantum circuit (we say that the state can be inefficient).

2. The measurements Π and M can be implemented by polynomial-size quantum circuits.

The above definitions do not specify an honest strategy. Rather, they provide a formal abstraction that allows us to analyze the entropy generated by the device in a single round. This abstraction is crucial for our proof technique: it isolates a simplified description of the device in terms of a fixed state and two projective measurements and M, which correspond to the two possible test types in the protocol. By reducing to this formal model, we are able to apply tools such as the entropic uncertainty relation and construct a min-trade-off function compatible with the entropy accumulation theorem.

Importantly, this abstraction is not purely theoretical—it is designed to faithfully capture the observable behavior of real quantum devices, whether honest or adversarial, while making the analysis tractable. In particular, an honest device fits naturally within this formalism. For example, it may prepare a state of the form , where , and perform either a computational basis measurement (yielding ) or a Hadamard measurement (yielding ), depending on the challenge. Such a device is efficient according to Definition 13 and behaves in a way that satisfies the test criteria of the protocol with probability 1.
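The behavior of such an honest device under the Hadamard measurement can be checked numerically. The sketch below assumes the form of the honest state and of the equation test that is commonly stated in the NTCF literature: the state is (|0⟩|x0⟩ + |1⟩|x1⟩)/√2 on 1 + w qubits, and a Hadamard-basis outcome (u, d) satisfies u = d·(x0 ⊕ x1) mod 2. The bit-string encoding of the pre-images and all function names are hypothetical simplifications.

```python
import math


def hadamard_all(amplitudes):
    """Apply H to every qubit of a state vector (fast Walsh-Hadamard transform)."""
    v = list(amplitudes)
    n = len(v)
    h = 1
    while h < n:
        for start in range(0, n, 2 * h):
            for i in range(start, start + h):
                a, b = v[i], v[i + h]
                v[i], v[i + h] = a + b, a - b
        h *= 2
    norm = math.sqrt(n)
    return [a / norm for a in v]


def honest_state(x0, x1, w):
    """(|0>|x0> + |1>|x1>)/sqrt(2) on 1 + w qubits; the branch bit is the top bit."""
    v = [0.0] * (2 ** (w + 1))
    v[x0] = 1 / math.sqrt(2)
    v[(1 << w) | x1] = 1 / math.sqrt(2)
    return v


def parity(z):
    """Parity of the number of set bits in z."""
    return bin(z).count("1") % 2


def hadamard_outcomes(x0, x1, w):
    """All outcomes (u, d) that occur with non-zero probability."""
    out = hadamard_all(honest_state(x0, x1, w))
    mask = (1 << w) - 1
    return [(k >> w, k & mask) for k, amp in enumerate(out) if abs(amp) > 1e-12]


if __name__ == "__main__":
    x0, x1, w = 0b101, 0b011, 3
    for u, d in hadamard_outcomes(x0, x1, w):
        print(u, format(d, "03b"), parity(d & (x0 ^ x1)) == u)
```

Every outcome that occurs satisfies the equation, while a computational-basis measurement of the same state returns (0, x0) or (1, x1) with equal probability. The outcome d = 0 also appears here; it carries no information and is among the choices of d that a verifier would reject.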

To ensure that the analysis remains sound even for dishonest or arbitrarily structured devices, we impose computational constraints on the device model. These constraints limit both its time and memory resources, and are essential for the validity of the cryptographic assumptions used in the protocol.

We emphasize that the device D is computationally bounded in both time and memory. This prevents pre-processing schemes in which the device goes over all keys and pre-images and stores a table of answers to all possible challenges, as such schemes demand memory that is exponential in λ.

The cryptographic assumption made on the device is the following. Intuitively, the lemma states that due to the hardcore bit property in Equation (15), the device cannot pass both pre-image and equation tests. Once it passes the pre-image test, trying to pass also the equation test results in two computationally indistinguishable states—one in which the device also passes the equation test and one in which it does not.

Lemma 10

(Computational indistinguishability [5] (Lemma 7.1)). Let be an efficient general device as in Definitions 11 and 13. Define a sub-normalized density matrix

Let

where is the set of valid responses to the equation test challenge y as defined in Definition 12. Then, and are computationally indistinguishable.

The proof is given in [5] (Lemma 7.1). Note that even though the proof in [5] does not address the potentially inefficient advice state explicitly, it holds due to the non-explicit definition of their computational assumption.

We proceed with a reduction of Protocol 2 to a simplified one, Protocol 3. As the name suggests, it will be easier to work with the simplified protocol and devices when bounding the produced entropy. We remark that a similar reduction is used in [5]; the main difference is that we are using the reduction on the level of a single round, in contrast to the way it is used in [5] (Section 8) when dealing with the full multi-round protocol. Using the reduction in the single-round protocol instead of the full protocol helps in disentangling the various challenges that arise in the analysis of the entropy.

Protocol 3 (Simplified single-round protocol).

Definition 14

(Simplified device [5] (Definition 6.4)). A simplified device is a tuple that receives as input and is specified by the following:

1. is a family of positive semidefinite operators on an arbitrary space such that ;

2. and are defined as the sets , respectively, such that for each , the operators and are projective measurements on .

Definition 15

(Simplified device construction [5]). Given a general device as in Definition 11, we construct a simplified device in the following manner:

- The device measures like the general device D would.

- The measurement is defined as follows:

  - Perform the measurement for an outcome .

  - If , the constructed device returns b, corresponding to the projection .

  - If , the constructed device returns 2, corresponding to the projection .

- The measurement is defined as follows:

  where is the set of valid answers for the equation test, meaning that the outcome corresponds to a valid response in the equation test.
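The coarse-graining step in this construction can be sketched in a few lines of Python. The function name and the representation of the valid pre-image responses as a Python set are hypothetical; the sketch only mirrors the rule that a valid response (b, x) is mapped to b and anything else to the third outcome 2.

```python
INVALID = 2  # third outcome, returned when the pre-image check fails


def simplified_preimage_outcome(b, x, valid_preimages):
    """Map a general-device outcome (b, x) to b if it is a valid response, else 2."""
    return b if (b, x) in valid_preimages else INVALID


if __name__ == "__main__":
    valid = {(0, 5), (1, 9)}  # toy set of the two valid responses for one challenge
    print(simplified_preimage_outcome(0, 5, valid))  # valid response on branch 0
    print(simplified_preimage_outcome(1, 5, valid))  # wrong pre-image for branch 1
```

Only the branch bit b survives the coarse-graining, which is why the simplified measurement has three outcomes rather than one per pair (b, x).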

The above construction of a simplified device maintains important properties of the general device. Firstly, the simplified device fulfills the same cryptographic assumption as the general one. This is stated in the following corollary.

Corollary 2.

Given a general efficient device , a simplified device constructed according to Definition 15 is also efficient. Hence, the cryptographic assumption described in Lemma 10 also holds for .

Secondly, the entropy produced by the simplified device in the simplified single-round protocol, Protocol 3, is identical to that produced by the general device in the single-round protocol, Protocol 2. A general device executing Protocol 2 defines a probability distribution of over . Using the same general device to construct a simplified one, via Definition 15, leads to the same distribution for when executing Protocol 3. This results in the following corollary.

Corollary 3.

Given a general efficient device and a simplified device constructed according to Definition 15, we have for all k,

where is the entropy produced by Protocol 2 using the general device D, and is the entropy produced in Protocol 3 using the simplified device . Both entropies are evaluated on the purification of the state ϕ.

The above corollary tells us that we can reduce the analysis of the entropy created by the general device to that of the simplified one, hence justifying its construction and the following sections.

3.1.2. Reduction to Qubits

In order to provide a clear understanding of the quantum uncertainty that arises from two measurements, it is beneficial to examine the square overlap between those measurements (Definition 4). The Bloch sphere representation, which pertains to Hilbert spaces of two dimensions, offers a lucid illustration of this concept.

Throughout this section, it is demonstrated that the devices under investigation, under specific conditions, can be expressed as a convex combination of devices, each operating on a single qubit. Working in a qubit subspace then allows one to make definitive statements regarding the entropy of the measurement outcomes produced by the device. We remark that this is in complete analogy with the proof techniques used when studying DI protocols in the non-local setting, in which one reduces the analysis to that of two single qubit devices [33] (Lemma 1).

Lemma 11.

Let be a simplified device (Definition 14), acting within a Hilbert space of countable dimension, with the additional assumption that consists of only two outcomes, and let Γ be a Hermitian projection that commutes with both and . Given an operator constructed from some non-commutative polynomial of two variables f, let be the expectation value of F.

Then, there exists a set of Hermitian projections , acting within the same Hilbert space , satisfying the following conditions

such that

where , and is the expectation value of F given the state , corresponding to the simplified device . That is, .

Proof.

As an immediate result of Lemma 3, there exists a basis in which and are block diagonal. In this basis, we take the projection on every block j as . This satisfies Conditions (16). Furthermore,

□

Note that the simplified device yields the same expectation values as those of the simplified device . The resulting operation is therefore, effectively, performed in a space of a single qubit. In addition, due to the symmetry of and in this proof, the lemma also holds for an observable constructed from and instead of and , i.e., .

The simplified protocol permits the use of the uncertainty principle, appearing in Lemma 5, in a vivid way since it has a geometrical interpretation on the Bloch sphere; recall Figure 3. Under the assumption that has two outcomes (this has yet to be justified), we can represent and as two Bloch vectors with some angle between them that corresponds to their square overlap: the smaller the square overlap, the closer the angle is to π/2. In the ideal case, the square overlap is 1/2, which means that in some basis, the two measurements are the standard and the Hadamard measurements. If one is able to confirm that , the only possible distribution on the outcomes of is a uniform one, which has the maximal entropy.
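The geometric picture can be made quantitative with a short Python sketch. It assumes the standard definition of the square overlap for two rank-one qubit measurements (the largest squared inner product between their basis vectors) and a Maassen-Uffink-type bound of log2(1/c) bits; whether Definition 4 and Lemma 5 take exactly this form is an assumption here, and the function names are hypothetical.

```python
import math


def square_overlap(theta):
    """Square overlap c of two rank-one qubit measurements whose Bloch axes differ by theta."""
    return max(math.cos(theta / 2) ** 2, math.sin(theta / 2) ** 2)


def uncertainty_bound_bits(theta):
    """Lower bound log2(1/c), in bits, of Maassen-Uffink type."""
    return -math.log2(square_overlap(theta))


if __name__ == "__main__":
    for theta in (0.0, math.pi / 4, math.pi / 2):
        print(round(theta, 3), round(square_overlap(theta), 3),
              round(uncertainty_bound_bits(theta), 3))
```

At an angle of π/2 the overlap reaches its minimum value 1/2 and the bound equals one full bit, matching the standard and Hadamard measurements. At angle 0 the two measurements coincide, the overlap is 1, and the bound is trivial.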

, however, has three outcomes and not two, rendering the reduction to qubits unjustified. We can nonetheless argue that the state being used in the protocol is very close to some other state which produces only the first two outcomes. The entropy of both states can then be related using Equation (6). The ideas described here are combined and explained thoroughly in the main proof in the following subsection.

3.1.3. Conditional Entropy Bound

The main proof of this subsection is performed with respect to a simplified device constructed from a general one using Definition 15.

Before proceeding with the proof, we define the winning probability in both challenges.

Definition 16.

Given a simplified device (with implicit key k) , for a given , we define the and winning probabilities, respectively, as

Likewise, the winning probabilities of Π and M as

Recall that are sub-normalized.

We are now ready to prove our main technical lemma.

Lemma 12.

Let be a simplified device as in Definition 14, constructed from an efficient general device in the manner depicted in Definition 15. Let be a purification of (respecting the sub-normalization of ) and . For all and some negligible function , the following inequality holds:

where is the binary entropy function and

Proof.

For every , we introduce the state

in order to reduce the problem to a convex combination of two-dimensional ones as described in Section 3.1.2. We provide a lower bound for the entropy and by the continuity of entropies, a lower bound for is then derived. The proof proceeds in steps.

- Let

  Using Jordan’s lemma (Lemma 2), there exists an orthonormal basis in which the operators are -block diagonal. Let be the Hermitian projection on the blocks where the square overlap of and is bounded by c (good blocks). Note that also commutes with both unitaries.

- Using Lemma 8, we bound the probability of being in a subspace where the square overlap of and M is larger than c (bad blocks):

  where is given by Equation (18) and

  Due to Lemma 10 and Corollary 2, is negligible in the security parameter λ. Thus, for some negligible function ,

- Note that is the state of the device (and the adversary), not . Hence, we cannot, a priori, relate to the winning probabilities of the device. We therefore want to translate Equation (20) to the quantities observed in the application of Protocol 3 when using the simplified device . That is, we would like to use the values given in Definition 16 in our equations:

  where in the last inequality, we use the gentle measurement lemma [31] (Lemma 9.4.1). The last equation, combined with Equation (20), immediately yields

- In a similar manner, for later use, we must find a lower bound on using quantities that can be observed from the simplified protocol. To that end, we again use the gentle measurement lemma:

  Using the definitions of ,

- We proceed by providing a bound on the conditional entropy, given that , using the uncertainty principle in Lemma 5. Conditioned on being in a good subspace (which happens with probability ), the square overlap of and is upper bounded by c. We can then bound the entropy of using the entropy of :

  Since we have a lower bound on , we proceed by working in the regime where the binary entropy function is strictly decreasing. To that end, it is henceforth assumed that the argument of the binary entropy function, and all of its subsequent lower bounds, are larger than 1/2. By using the inequality

  we obtain

  Therefore,

- We now want to bound the value , i.e., without conditioning on the event . We write,

- Now, taking the expectation over y on both sides of the inequality and using Lemma 4:

- Using the continuity bound in Equation (7) with yields

□

For brevity, denote the bound in Equation (17) as

Seeing that this inequality holds for all values , for each winning probability pair we can pick an optimal value of c to maximize the inequality. We do this implicitly and rewrite the bound as

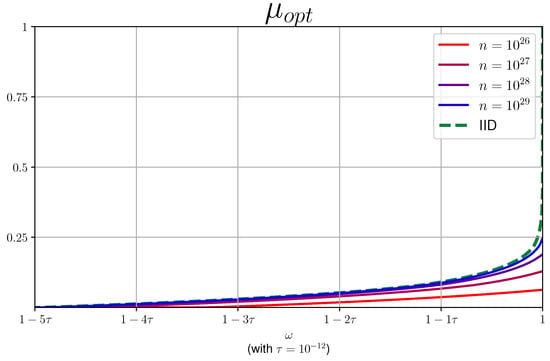

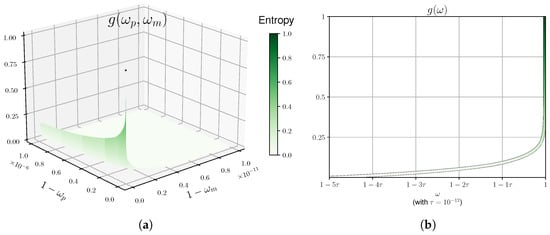

Doing so yields the graph in Figure 4a.

Figure 4.

The entropy as a function of the winning probabilities as given by Equations (28) and (29). In the left plot, the differential at diverges, and therefore one does not see the entropy grow to its optimal value (the dark green point); this is only a visual effect. This entropy and the divergence are seen more clearly on a slice of this graph, appearing in the right plot. (a) Plot of the function given in Equation (28). (b) Plot of the function appearing in Equation (29) with . The width of the curve is added for clarity.

In the protocol, later on, the verifier chooses whether to abort or not, depending on the overall winning probability :

We therefore define for every , another bound on the entropy, that depends only on (assuming ) as

3.2. Entropy Accumulation

Combining Lemma 12 with Corollary 3, we now have a lower bound for the von Neumann entropy of Protocol 3: we can connect any winning probability to a lower bound on the entropy of the pre-image test. This allows us to proceed with the task of entropy accumulation. To lower bound the total amount of smooth min-entropy accumulated throughout the entire execution of Protocol 1, we use the entropy accumulation theorem (EAT), stated as Theorem 1.

To use the EAT, we need to first define the channels corresponding to Protocol 1 followed by a proof that they are in fact EAT channels. In the notation of Definition 6, we make the following choice of channels:

and set .

Lemma 13.

The channels defined by the CPTP map describing the i-th round of Protocol 1 as implemented by the computationally bounded untrusted device and the verifier are EAT channels according to Definition 6.

Proof.

To prove that the constructed channels are EAT channels, we need to show that the conditions are fulfilled:

- , and are all finite-dimensional classical systems. are arbitrary quantum systems. Finally, we have

- For any and any input state , is a function of the classical values . Hence, the marginal is unchanged when deriving from it.

- For any initial state and the resulting final state , the Markov chain conditions

  trivially hold for all , as and are chosen independently from everything else.

□

Lemma 14.

Let and be the function in Equation (29). Let p be a probability distribution over such that and . Define . Then, there exists a negligible function such that

In particular, this implies that for every , the function

satisfies Definition 7 and is therefore a min-trade-off function.

Proof.

Due to Lemma 12 and the consequent Equation (29), the following holds for any polynomial-sized state (not necessarily efficient):

For the last equality, note that the device only knows which test to perform while being unaware if it is for a generation round or not. Therefore, once T is given, G does not provide any additional information. □

Using Theorem 1, we can bound the smooth min-entropy resulting in the application of Protocol 1:

where is given by Equation (31). We simplify the right-hand side in Equation (32) to a single entropy accumulation rate , and a negligible reduction :

Note that the differential of is unbounded. This prevents us from using the EAT due to Equation (14). This issue is addressed by defining a new min-trade-off function such that

We provide a number of plots for as a function of for various values of n in Figure 5. We remark that we did not fully optimize the code to derive the plots, and one can probably derive tighter plots.
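One common way to tame an unbounded derivative of a convex trade-off function is to follow the function up to a cut point and continue along its tangent line beyond it. Because the function is convex, the tangent lies below it, so the modified function is still a valid lower bound, now with a bounded slope. The Python sketch below illustrates this idea with a stand-in function, 1 minus the binary entropy on [1/2, 1], whose slope also diverges at the endpoint. The stand-in, the cut point, and all names are assumptions for illustration; they are not the min-trade-off function or the modification used in this work.

```python
import math


def g(p):
    """Stand-in convex function (1 minus binary entropy) with a diverging slope at p = 1."""
    if p <= 0.0 or p >= 1.0:
        return 1.0
    return 1.0 + p * math.log2(p) + (1 - p) * math.log2(1 - p)


def dg(p):
    """Derivative of g for 0 < p < 1."""
    return math.log2(p / (1 - p))


def cut_and_glue(p, p_cut):
    """Follow g up to p_cut, then continue along the tangent line at p_cut."""
    if p <= p_cut:
        return g(p)
    return g(p_cut) + dg(p_cut) * (p - p_cut)


if __name__ == "__main__":
    p_cut = 0.9
    for p in (0.8, 0.9, 0.95, 0.99):
        print(p, round(g(p), 4), round(cut_and_glue(p, p_cut), 4))
```

Moving the cut point closer to the endpoint gives a tighter bound near the optimum at the price of a larger maximal slope, which enters the second-order term of the EAT. The cut point is therefore a parameter to optimize for each number of rounds.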

3.3. Randomness Rates

In the previous subsection, we derived a lower bound on . However, our ultimate goal is to provide a bound on the designated source of randomness, namely . The following result, Theorem 2, formalizes this guarantee and constitutes the main theorem of this work. It gives a concrete lower bound on the min-entropy of the protocol’s output, conditioned on the adversary’s information, under a computational assumption on the device. While Theorem 1 provides the general entropy accumulation framework applicable to our setting, the core technical difficulty lies in establishing the single-round von Neumann entropy bound proved in Lemma 12. This bound captures the contribution of the cryptographic structure and enables the application of Theorem 1, ultimately leading to the main result in Theorem 2.

Theorem 2.

Let D be a device executing Protocol 1, and let ρ denote the joint quantum state generated at the end of the protocol. Let be a fixed threshold specifying the minimum fraction of successfully passed equation test rounds required for the verifier not to abort. Let Ω denote the event that the device achieves a test score of at least ω on the test rounds, and define . (This dependence on reflects a known limitation of the entropy accumulation theorem: the bound applies only conditionally, and the probability of passing the test (i.e., ) is not provided by the theorem itself. However, in practice, can be estimated or lower bounded using concentration inequalities.) Let denote the state ρ conditioned on the event Ω, and let be a smoothness parameter. Then, the following bound holds:

Proof.

We begin with the entropy chain rule [34] (Theorem 6.1)

The first term on the right-hand side is given in Equation (33); it remains to find an upper bound for the second term. Let us start from

We then use the EAT again in order to bound . We identify the EAT channels with and . The Markov conditions then trivially hold, and the max-trade-off function reads

Since the following distributions are satisfied for all

the max-trade-off function is simply (thus ). We therefore get

□
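The remark in Theorem 2 that the probability of Ω can be lower bounded using concentration inequalities can be illustrated for the simplest case. The Python sketch below assumes an honest device that behaves in an IID manner and passes each test round independently with probability p above the threshold ω; Hoeffding's inequality then lower bounds the probability of not aborting. The IID assumption and the function name are hypothetical simplifications and do not apply to a general adversarial device.

```python
import math


def pass_probability_lower_bound(m, p, omega):
    """Hoeffding lower bound on Pr[pass fraction >= omega] over m IID test rounds."""
    if p <= omega:
        return 0.0  # the bound is vacuous when the device is not above threshold
    return max(0.0, 1.0 - math.exp(-2.0 * m * (p - omega) ** 2))


if __name__ == "__main__":
    for m in (100, 1000, 10000):
        print(m, pass_probability_lower_bound(m, 0.99, 0.95))
```

The bound approaches 1 exponentially fast in the number of test rounds, so for an honest IID device the conditioning on Ω costs little in the final entropy bound once the number of test rounds is large.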

We wish to make a final remark. One could repeat the above analysis under the assumption that Y and X are leaked, to obtain a stronger security statement (this is, however, not performed in previous works). In this case, and should be part of the output of the channel. Then, the dimension of scales exponentially with , which worsens the accumulation rate since this results in exponential factors in Equation (13). In that case, becomes a monotonically decreasing function of and we obtain a certain trade-off between the two elements in the following expression:

This means that there is an optimal value of that maximizes the entropy and to find it, one requires an explicit bound on .

3.4. Generalization to Computational CHSH

It is worth considering a couple of recent works in which the proof techniques, introduced in the current one, may be generalized. In [14] a test of quantumness is developed for a family of protocols with a concrete example in the form of the KCVY Protocol [11]. In [15], explicit bounds are provided on compiled non-local games and more specifically, a compiled CHSH game where a classical verifier acts both as a verifier and one of the players in a standard CHSH game while the second player is a quantum device.

Both of these works use computational problems which bear a strong resemblance to the standard non-local CHSH game. Furthermore, ref [15] (Lemma 34) and ref [14] (Theorem 4.7) provide an upper bound on the anti-commutation relations of the challenges the prover receives as a function of the winning probability in the protocol. This potentially allows the use of the entropic uncertainty principle, Lemma 5. As an example, at the optimal winning probability, the anti-commutator is negligibly close to zero (a similar condition must also be met in the standard non-local CHSH game).

Similarly to the upper bound on the projection on “bad blocks” in Equation (19), one could use the lemma to provide a similar bound using a Markov inequality. Assuming now that the subspaces we are working with have measurements whose square overlap is bounded by c, one may use the statistics of each measurement, geometrical arguments (along with rigidity), and entropic uncertainty relations to provide a lower bound on the entropies of each measurement. It is worth noting that even with an honest prover, the von Neumann entropy will be . This could potentially be remedied by introducing a third measurement; regardless, once a lower bound on the von Neumann entropy is found, steps similar to those of Section 3.2 can be followed.

4. Conclusions and Outlook

By utilizing a combination of results from quantum information theory and post-quantum cryptography, we have shown that entropy accumulation is feasible when interacting with a single device. While this was previously performed in [5] using ad hoc techniques, we provide a flexible framework that builds on well-studied tools and follows similar steps to those used in DI protocols based on the violation of Bell inequalities using two devices [27]. Prior to our work, it was believed that such an approach cannot be taken (see the discussion in [5]). We remark that while we focused on randomness certification in the current manuscript, one could now easily extend the analysis to randomness expansion, amplification and key distribution using the same standard techniques applied when working with two devices [19,27].

Furthermore, even though we carried out the proof here specifically for the computational challenge derived from an NTCF, the methods that we establish are modular and can be generalized to other protocols with different cryptographic assumptions. For example, in two recent works [14,15], the winning probability in various “computational games” is tied to the anti-commutator of the measurements used by the device that plays the game (see [15] (Lemma 38) and [14] (Theorem 4.7) in particular). Thus, their results can be used to derive a bound on the conditional von Neumann entropy as we do in Lemma 12. From there onward, the final bound on the accumulated smooth min-entropy is derived exactly as in our work.

Apart from the theoretical contribution, the new proof method allows us to derive explicit bounds for a finite number of rounds of the protocol, in contrast to asymptotic statements. Thus, one can use the bounds to study the practicality of DI randomness certification protocols based on computational assumptions.

For the current protocol, in order to obtain a positive rate, the number of repetitions n required, as seen in Figure 5, is too demanding for actual implementation. In addition, the necessary observed winning probability is extremely high. We pinpoint the “source of the problem” to the min-trade-off function presented in Figure 4 and provide below a number of suggestions as to how one might improve the derived bounds. In general, however, we expect that for other protocols (e.g., those suggested in [14,15]), one could derive better min-trade-off functions that will bring us closer to the regime of experimentally relevant protocols. In fact, our framework allows us to compare different protocols via their min-trade-off functions and thus can be used as a tool for benchmarking new protocols.

Beyond these directions, the suggestions raised in the review point toward further refinements that may help reduce the round complexity. In particular, one could explore variants of the entropy accumulation framework that allow for memory-aware entropy tracking or dynamically weighted contributions across rounds. Another possibility is to structure the protocol in layers, separating fast verification from more costly entropy collection. While we do not pursue these directions here, our framework is compatible with such modifications and could serve as a foundation for their analysis.

We conclude with several open questions.

- In both the original analysis performed in [5] and our work, the cryptographic assumption needs to hold even when the (efficient) device has an (inefficient) “advice state”. Including the advice states is necessary when using entropy accumulation in its current form, due to the usage of a min-trade-off function (see Equation (30)). One fundamental question is therefore whether this is necessary in all DI protocols based on post-quantum computational assumptions.

- As mentioned above, what makes the protocol considered here potentially infeasible for experimental implementation is its min-trade-off function. Ideally, one would like to decrease both the winning probability needed to certify entropy and the derivative of the function, which is currently too large. The derivative impacts the second-order term of the accumulated entropy, which is why we observe the need for a large number of rounds in the protocol, many orders of magnitude more than in the DI setup with two devices. Any improvement of Lemma 12 may be useful; when looking into the details of the proof, there is indeed some room for it.

- Once one is interested in non-asymptotic statements and actual implementations, the unknown negligible function needs to be better understood. The assumption is that this function vanishes as the security parameter tends to infinity, but in any given execution, one does fix a finite security parameter. Some more explicit statements should then be made regarding the negligible function and incorporated into the final bound.

- In the current manuscript, we worked with the EAT presented in [25]. A generalized version, which allows for potentially more complex protocols, appears in [26]. All of our lemmas and theorems can also be derived using [26] without any modifications. An interesting question is whether there are DI protocols with a single device that can exploit the more general structure of [26].
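To make the role of the derivative of the min-trade-off function in the second open question more concrete, the following Python sketch evaluates a bound of the shape n·h − v·√n, with a second-order coefficient v modelled on the form of the EAT statement in [25], v = 2(log2(1 + 2d_A) + ⌈‖∇f_min‖∞⌉)·√(1 − 2 log2(ε·p)). This is a hedged illustration only: the finite-size expression actually used in this work may differ in its constants, and the function names and all numerical values below are hypothetical placeholders rather than parameters of the protocol.

```python
import math


def second_order_coefficient(grad_norm, d_a, eps_smooth, p_accept):
    """Coefficient v of the sqrt(n) penalty, modelled on the EAT of [25].

    grad_norm  : sup-norm of the gradient of the min-trade-off function
    d_a        : dimension of the per-round output register (assumed value)
    eps_smooth : smoothing parameter
    p_accept   : probability that the protocol does not abort
    """
    return 2 * (math.log2(1 + 2 * d_a) + math.ceil(grad_norm)) * math.sqrt(
        1 - 2 * math.log2(eps_smooth * p_accept))


def accumulated_entropy(n, rate, v):
    """Lower bound n*rate - v*sqrt(n) on the smooth min-entropy, in bits."""
    return n * rate - v * math.sqrt(n)


def min_rounds_for_positive_rate(rate, v):
    """Smallest integer n with n*rate - v*sqrt(n) > 0, i.e. n > (v/rate)^2."""
    return math.floor((v / rate) ** 2) + 1


if __name__ == "__main__":
    # Hypothetical inputs: same single-round rate, increasingly steep function.
    for grad in (1, 10, 100, 1000):
        v = second_order_coefficient(grad, d_a=2, eps_smooth=1e-5, p_accept=1e-5)
        print(grad, min_rounds_for_positive_rate(rate=0.1, v=v))
```

Because the threshold number of rounds scales as (v/h)², it grows roughly quadratically with the gradient norm once the gradient dominates v. This is one way to see why a steep min-trade-off function with a small single-round rate h demands so many rounds.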

Author Contributions

Conceptualization, R.A.; methodology, I.M.; formal analysis, I.M.; investigation, I.M.; validation, R.A.; writing—original draft preparation, I.M.; writing—review and editing, I.M. and R.A.; visualization, I.M.; supervision, R.A.; funding acquisition, R.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was generously supported by the Peter and Patricia Gruber Award, the Daniel E. Koshland Career Development Chair, the Koshland Research Fund, the Karen Siem Fellowship for Women in Science and the Israel Science Foundation (ISF) and the Directorate for Defense Research and Development (DDR&D), grant No. 3426/21.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to thank Zvika Brakerski, Tony Metger, Thomas Vidick and Tina Zhang for useful discussions.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| DI | Device Independent |
| LWE | Learning With Errors |
| QPT | Quantum Polynomial Time |
| TCF | Trapdoor Claw-free Function |
| NTCF | Noisy Trapdoor Claw-free Function |
| QKD | Quantum Key Distribution |
| EAT | Entropy Accumulation Theorem |
| NISQ | Noisy Intermediate Scale Quantum |

References

- Brunner, N.; Cavalcanti, D.; Pironio, S.; Scarani, V.; Wehner, S. Bell nonlocality. Rev. Mod. Phys. 2014, 86, 419.

- Scarani, V. Bell Nonlocality; Oxford University Press: Oxford, UK, 2019.

- Regev, O. On lattices, learning with errors, random linear codes, and cryptography. J. ACM 2009, 56, 1–40.

- Mahadev, U. Classical Verification of Quantum Computations. In Proceedings of the 2018 IEEE 59th Annual Symposium on Foundations of Computer Science (FOCS), Paris, France, 7–9 October 2018; pp. 259–267.

- Brakerski, Z.; Christiano, P.; Mahadev, U.; Vazirani, U.; Vidick, T. A Cryptographic Test of Quantumness and Certifiable Randomness from a Single Quantum Device. J. ACM 2021, 68, 1–47.

- Gheorghiu, A.; Vidick, T. Computationally-secure and composable remote state preparation. In Proceedings of the 2019 IEEE 60th Annual Symposium on Foundations of Computer Science (FOCS), Baltimore, MD, USA, 9–12 November 2019; pp. 1024–1033.

- Brakerski, Z.; Koppula, V.; Vazirani, U.; Vidick, T. Simpler Proofs of Quantumness. In Proceedings of the 15th Conference on the Theory of Quantum Computation, Communication and Cryptography (TQC 2020), Riga, Latvia, 9–12 June 2020; Schloss Dagstuhl – Leibniz-Zentrum für Informatik: Wadern, Germany, 2020; Volume 158, pp. 8:1–8:14.

- Metger, T.; Vidick, T. Self-testing of a single quantum device under computational assumptions. Quantum 2021, 5, 544.

- Metger, T.; Dulek, Y.; Coladangelo, A.; Arnon-Friedman, R. Device-independent quantum key distribution from computational assumptions. New J. Phys. 2021, 23, 123021.

- Vidick, T.; Zhang, T. Classical proofs of quantum knowledge. In Proceedings of the 40th Annual International Conference on the Theory and Applications of Cryptographic Techniques (EUROCRYPT 2021), Zagreb, Croatia, 17–21 October 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 630–660.

- Kahanamoku-Meyer, G.D.; Choi, S.; Vazirani, U.V.; Yao, N.Y. Classically verifiable quantum advantage from a computational Bell test. Nat. Phys. 2022, 18, 918–924.

- Liu, Z.; Gheorghiu, A. Depth-efficient proofs of quantumness. Quantum 2022, 6, 807.

- Gheorghiu, A.; Metger, T.; Poremba, A. Quantum Cryptography with Classical Communication: Parallel Remote State Preparation for Copy-Protection, Verification, and More. In Proceedings of the 50th International Colloquium on Automata, Languages, and Programming (ICALP 2023), Paderborn, Germany, 10–14 July 2023; Schloss Dagstuhl – Leibniz-Zentrum für Informatik: Wadern, Germany, 2023; Volume 261, pp. 67:1–67:17.

- Brakerski, Z.; Gheorghiu, A.; Kahanamoku-Meyer, G.D.; Porat, E.; Vidick, T. Simple Tests of Quantumness Also Certify Qubits. In Proceedings of the 43rd Annual International Cryptology Conference (CRYPTO 2023), Santa Barbara, CA, USA, 20–24 August 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 162–191.

- Natarajan, A.; Zhang, T. Bounding the Quantum Value of Compiled Nonlocal Games: From CHSH to BQP Verification. In Proceedings of the 2023 IEEE 64th Annual Symposium on Foundations of Computer Science (FOCS), Santa Cruz, CA, USA, 6–9 November 2023; pp. 1342–1348.

- Aaronson, S.; Hung, S.H. Certified Randomness from Quantum Supremacy. In Proceedings of the 55th Annual ACM Symposium on Theory of Computing (STOC 2023), Orlando, FL, USA, 20–23 June 2023; pp. 933–944.

- Zhu, D.; Kahanamoku-Meyer, G.D.; Lewis, L.; Noel, C.; Katz, O.; Harraz, B.; Wang, Q.; Risinger, A.; Feng, L.; Biswas, D.; et al. Interactive cryptographic proofs of quantumness using mid-circuit measurements. Nat. Phys. 2023, 19, 1725–1731.

- Stricker, R.; Carrasco, J.; Ringbauer, M.; Postler, L.; Meth, M.; Edmunds, C.; Schindler, P.; Blatt, R.; Zoller, P.; Kraus, B.; et al. Towards experimental classical verification of quantum computation. arXiv 2022.

- Kessler, M.; Arnon-Friedman, R. Device-independent randomness amplification and privatization. IEEE J. Sel. Areas Inf. Theory 2020, 1, 568–584.

- Tomamichel, M.; Colbeck, R.; Renner, R. Duality between smooth min- and max-entropies. IEEE Trans. Inf. Theory 2010, 56, 4674–4681.

- Renner, R.; König, R. Universally composable privacy amplification against quantum adversaries. In Proceedings of the Theory of Cryptography, Second Theory of Cryptography Conference (TCC 2005), Cambridge, MA, USA, 10–12 February 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 407–425.

- De, A.; Portmann, C.; Vidick, T.; Renner, R. Trevisan’s extractor in the presence of quantum side information. SIAM J. Comput. 2012, 41, 915–940.

- Primaatmaja, I.W.; Goh, K.T.; Tan, E.Y.Z.; Khoo, J.T.F.; Ghorai, S.; Lim, C.C.W. Security of device-independent quantum key distribution protocols: A review. Quantum 2023, 7, 932.

- Berta, M.; Christandl, M.; Colbeck, R.; Renes, J.M.; Renner, R. The uncertainty principle in the presence of quantum memory. Nat. Phys. 2010, 6, 659–662.

- Dupuis, F.; Fawzi, O.; Renner, R. Entropy accumulation. Commun. Math. Phys. 2020, 379, 867–913.

- Metger, T.; Fawzi, O.; Sutter, D.; Renner, R. Generalised entropy accumulation. In Proceedings of the 2022 IEEE 63rd Annual Symposium on Foundations of Computer Science (FOCS), Denver, CO, USA, 31 October–3 November 2022; pp. 844–850.

- Arnon-Friedman, R.; Renner, R.; Vidick, T. Simple and tight device-independent security proofs. SIAM J. Comput. 2019, 48, 181–225.

- Arnon-Friedman, R. Device-Independent Quantum Information Processing: A Simplified Analysis; Springer: Berlin/Heidelberg, Germany, 2020.

- Mahadev, U.; Vazirani, U.; Vidick, T. Efficient Certifiable Randomness from a Single Quantum Device. arXiv 2022.

- Winter, A. Tight Uniform Continuity Bounds for Quantum Entropies: Conditional Entropy, Relative Entropy Distance and Energy Constraints. Commun. Math. Phys. 2016, 347, 291–313.

- Wilde, M.M. Quantum Information Theory; Cambridge University Press: Cambridge, UK, 2013.

- Dupuis, F.; Fawzi, O. Entropy accumulation with improved second-order term. IEEE Trans. Inf. Theory 2019, 65, 7596–7612.

- Pironio, S.; Acín, A.; Brunner, N.; Gisin, N.; Massar, S.; Scarani, V. Device-independent quantum key distribution secure against collective attacks. New J. Phys. 2009, 11, 045021.

- Tomamichel, M. Quantum Information Processing with Finite Resources; Springer: Berlin/Heidelberg, Germany, 2016.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).