- Article

Drawing and Soccer Tactical Memorization: An Eye-Tracking Investigation of the Moderating Role of Visuospatial Abilities and Expertise

Sabrine Tlili, Hatem Ben Mahfoudh and Bachir Zoudji

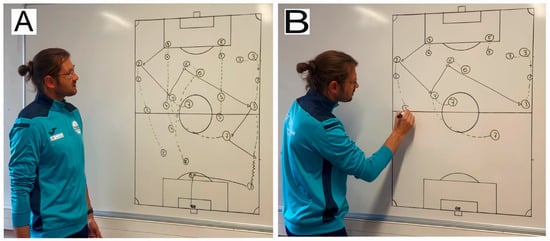

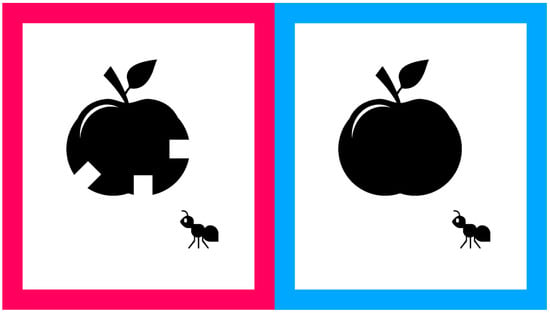

Dynamic drawing has emerged as a strategy for communicating tactical diagrams, yet its effectiveness remains uncertain and appears to depend on individual differences. This study investigated how drawing format (static vs. dynamic drawing) influences the memorization and visual processing of tactical soccer scenes, and whether this influence is moderated by visuospatial abilities (VSA) and expertise. Expert (N = 57) and novice (N = 54) participants were randomly assigned to one of two conditions. In the static drawing condition, participants viewed a pre-drawn, completed tactical diagram accompanied by an oral explanation. In the dynamic drawing condition, they watched the coach draw the diagram in real time while delivering the same explanation. VSA was first assessed using a control test. Then, in the main test, participants memorized and reproduced the tactical scene while their eye movements were recorded with an eye tracker. The key findings revealed a three-way interaction indicating an expertise reversal effect: high-VSA novices performed better with dynamic drawing, whereas low-VSA experts benefited more from static drawing, and the groups showed distinct visual processing patterns. Overall, the results highlight the need to tailor drawing strategies to individual characteristics, particularly VSA and expertise, in order to optimize visual attention and tactical memorization.

1 January 2026