Automatic 3D Building Reconstruction from OpenStreetMap and LiDAR Using Convolutional Neural Networks

Abstract

1. Introduction

1.1. LiDAR

1.2. Convolutional Neural Networks

1.3. OpenStreetMap

1.4. Hypothesis

- Whether it is possible to combine OSM data with LiDAR data;

- Whether it is possible to improve the accuracy of 3D urban environments generated with OSM data through LiDAR data analysis;

- Whether it is possible to extract features from LiDAR data in order to generate complete OSM data via LiDAR image analysis by creating models based on a convolutional neural network;

- Whether a model based on a convolutional neural network trained with LiDAR data on a particular region can then extrapolate from those data to analyze new regions and complete the OSM data on these new regions.

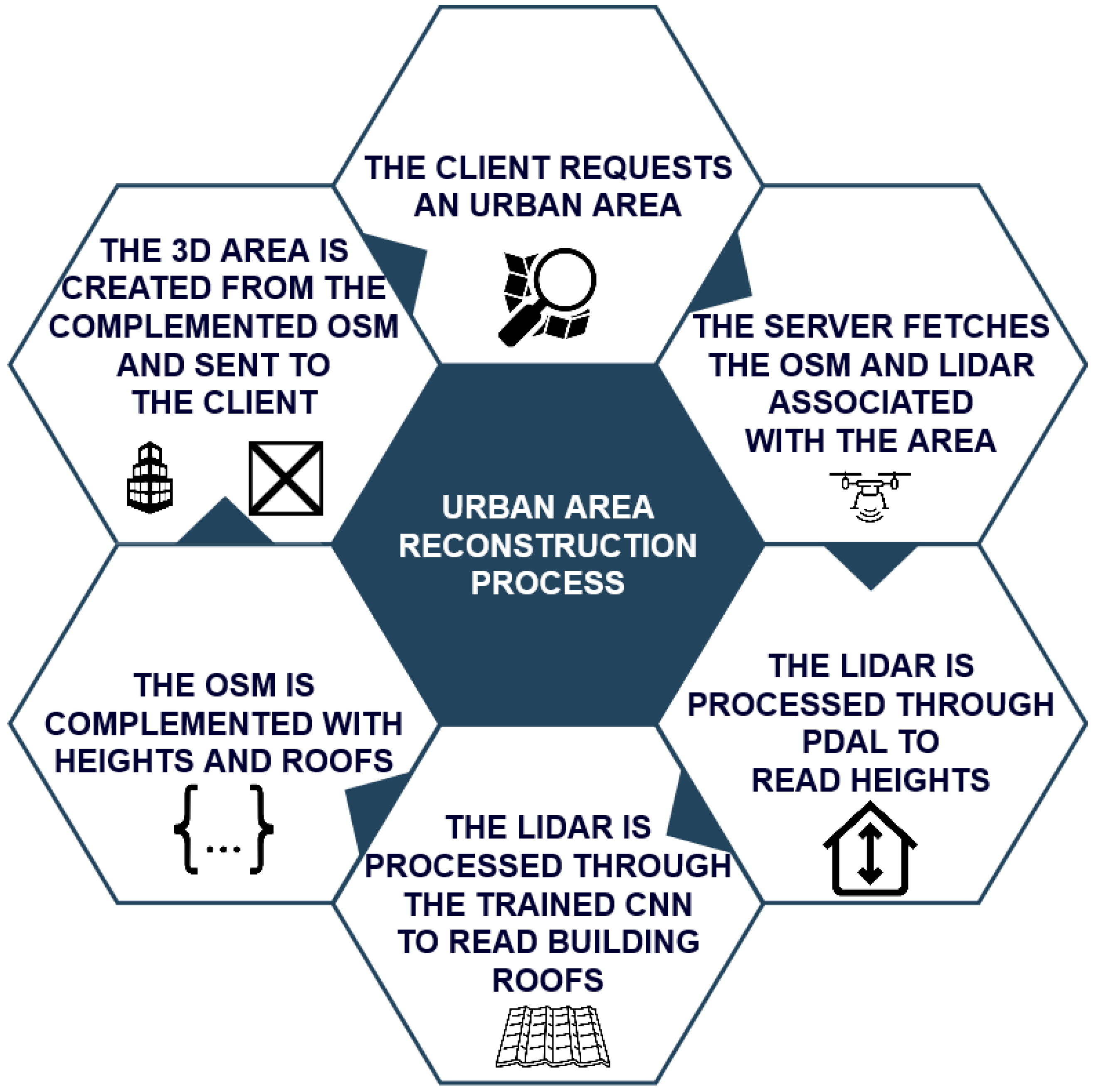

2. Materials and Methods

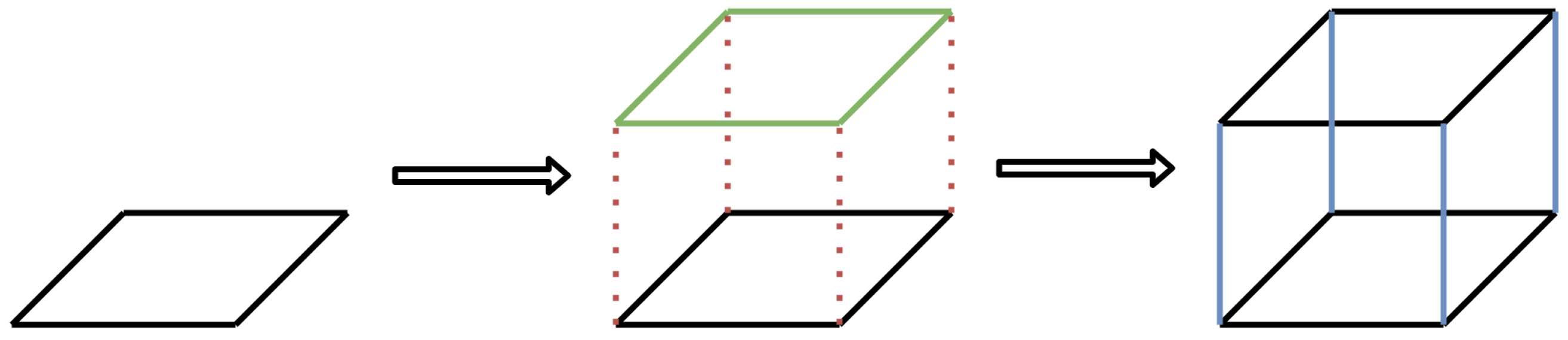

2.1. Generating 3D Models of Buildings and Inferring Heights

- The latitude and longitude of all vertices of the polygon representing the building are obtained.

- The minimum and maximum latitude and longitude of the polygon vertices are obtained, generating the bounding box.

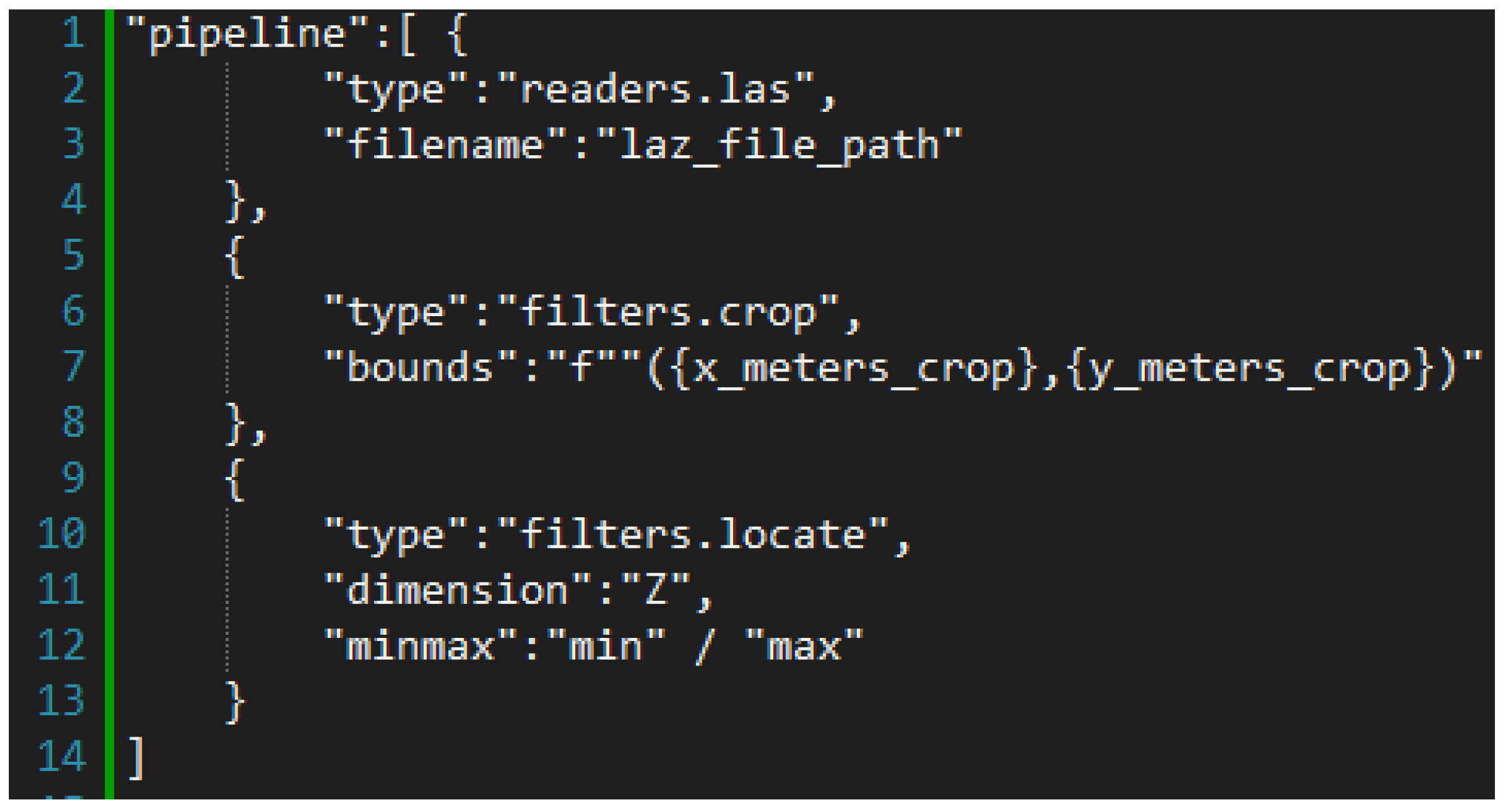

- The metadata of the LAZ file is obtained using pdal info, including its projection and its bounding box expressed both in an EPSG projection (latitude and longitude) and in native coordinates (in meters).

- The ranges of the X and Y coordinates and of the latitude and longitude of the LAZ file bounding box are extracted. The building bounding box is then mapped onto the LAZ file bounding box, which converts it to meters.

- A script is executed with the building bounding box (in meters); it crops the LAZ file with PDAL and reads the minimum (ground) and maximum (roof) heights of the building area.

- A sensitivity parameter allows the bounding box of the building area to be enlarged or reduced, which is useful when the building is not fully contained in the LAZ file or when buildings are very close together.

- The minimum and maximum heights obtained are stored in an array, which is then used to update the OSM data with the height values of each building.
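The steps listed above can be illustrated with a minimal Python sketch. It is not the authors' script: the function names, the dictionary layout, and the interpretation of the sensitivity parameter as a scale factor about the box center are all assumptions made for illustration. The mapping from latitude and longitude to native meters is done by plain linear interpolation between the two LAZ bounding boxes, which is only an approximation for small tiles; a reprojection library would be more rigorous. The sketch builds a PDAL pipeline description using the `filters.crop` stage but does not run PDAL itself.

```python
import json
from typing import Dict, List, Tuple

Box = Dict[str, float]


def building_bbox(vertices: List[Tuple[float, float]]) -> Box:
    """Bounding box of a building polygon given as (lat, lon) vertices."""
    lats = [lat for lat, _ in vertices]
    lons = [lon for _, lon in vertices]
    return {"min_lat": min(lats), "max_lat": max(lats),
            "min_lon": min(lons), "max_lon": max(lons)}


def apply_sensitivity(box: Box, factor: float) -> Box:
    """Scale the box about its center (assumed meaning of 'sensitivity')."""
    c_lat = (box["min_lat"] + box["max_lat"]) / 2
    c_lon = (box["min_lon"] + box["max_lon"]) / 2
    h_lat = (box["max_lat"] - box["min_lat"]) / 2 * factor
    h_lon = (box["max_lon"] - box["min_lon"]) / 2 * factor
    return {"min_lat": c_lat - h_lat, "max_lat": c_lat + h_lat,
            "min_lon": c_lon - h_lon, "max_lon": c_lon + h_lon}


def to_native(box: Box, laz_geo: Box, laz_native: Box) -> Box:
    """Map a lat/lon box into the LAZ native X/Y range (meters).

    Assumption: linear interpolation between the geographic and native
    bounding boxes of the LAZ file is accurate enough for one tile.
    """
    def interp(v: float, g0: float, g1: float, n0: float, n1: float) -> float:
        return n0 + (v - g0) / (g1 - g0) * (n1 - n0)

    def x(lon: float) -> float:
        return interp(lon, laz_geo["min_lon"], laz_geo["max_lon"],
                      laz_native["min_x"], laz_native["max_x"])

    def y(lat: float) -> float:
        return interp(lat, laz_geo["min_lat"], laz_geo["max_lat"],
                      laz_native["min_y"], laz_native["max_y"])

    return {"min_x": x(box["min_lon"]), "max_x": x(box["max_lon"]),
            "min_y": y(box["min_lat"]), "max_y": y(box["max_lat"])}


def crop_pipeline(laz_path: str, native_box: Box) -> dict:
    """PDAL pipeline description that crops the file to the building area."""
    bounds = "([{min_x}, {max_x}], [{min_y}, {max_y}])".format(**native_box)
    return {"pipeline": [laz_path, {"type": "filters.crop", "bounds": bounds}]}


def building_height(min_z: float, max_z: float) -> float:
    """Height inferred from the ground (min) and roof (max) elevations."""
    return max_z - min_z
```

The dictionary returned by `crop_pipeline` can be serialized with `json.dumps` and passed to PDAL; the minimum and maximum Z values of the cropped points then feed `building_height`, whose result would be written to the building's height tag in the OSM data.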

2.2. Using CNN to Add Inferred LiDAR Data to OSM

2.2.1. LiDAR Data Pre-Processing and Image Set Construction

2.2.2. Training the YOLOv7 CNN

- epochs: the number of complete passes performed over the image set. Training started from a pre-trained model, on which approximately 3000 epochs of transfer learning were initially run. This produced a new neural network model with weights updated from the roof images used for training. Because the neural network keeps incorporating features or patterns as it is trained, the updated-weights model was then fed back into the training process to continue the transfer learning. A total of approximately 9000 additional epochs were tested, obtaining acceptable results for the final model generated using the constructed image set.

- batchsize 2: the number of images loaded into memory per iteration within an epoch; the weights of the CNN are updated after each such batch.

- img 2000 2000: the size to which the input images are resized. Because all input images already measured 2000 × 2000, no resizing was performed for this training.

- weights 'model.pt': the location of the initial weights. Note that model.pt changes as training progresses over a given number of epochs, because the best model obtained so far is always kept.
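As a rough illustration of how the parameters above fit together, the following Python sketch assembles a training command line for the two stages described. It is a sketch under assumptions: the flag spellings (`--epochs`, `--batch-size`, `--img`, `--weights`) and the script name `train.py` are taken to match the YOLOv7 repository, the dataset and configuration arguments are omitted because the text does not give them, and the path of the second-stage weights is hypothetical.

```python
from typing import List


def build_train_command(epochs: int, weights: str) -> List[str]:
    """Assemble the argument list for one training stage.

    Batch size and image size are fixed to the values reported in the
    text (2 images per batch, 2000 x 2000 pixels).
    """
    return [
        "python", "train.py",
        "--epochs", str(epochs),
        "--batch-size", "2",
        "--img", "2000", "2000",
        "--weights", weights,
    ]


# Stage 1: transfer learning from the pre-trained weights.
stage_1 = build_train_command(3000, "model.pt")

# Stage 2: continue from the best weights of stage 1.
# The path below is a hypothetical example of where those weights live.
stage_2 = build_train_command(9000, "runs/train/exp/weights/best.pt")
```

Each list could be handed to `subprocess.run` from the repository directory; keeping the stages as data makes it easy to repeat the second stage with a different epoch count.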

- The OSM polygon representing the building is obtained.

- The bounding box and center in terms of the latitude and longitude of the polygon are extracted.

- The LiDAR area, transformed into an image with LAStools, is processed by the neural network. The result is a label file that gives the position of each detected roof in the image in pixel coordinates.

- The labels of the image are mapped to the latitude and longitude coordinates of the building using the bounding box of the building and its center. A sensitivity parameter allows the size of the bounding box of the building to be increased or decreased, which is useful in cases where the building is not fully contained in the LiDAR area or when there are buildings very close together.

- The roof is written to the resulting OSM file.

- The 3D generator receives the resulting OSM and generates the 3D model.
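The label-mapping step in the procedure above can be sketched in Python as follows. This is an illustrative reading, not the authors' implementation: it assumes a north-up 2000 × 2000 image whose edges coincide with the geographic bounds of the LiDAR area, a linear pixel-to-coordinate mapping, detections given as a class name plus the pixel center of the detected roof, and a sensitivity parameter that scales the building bounding box about its center. The class names used in the example are placeholders.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class GeoBox:
    min_lat: float
    min_lon: float
    max_lat: float
    max_lon: float

    def center(self) -> Tuple[float, float]:
        return ((self.min_lat + self.max_lat) / 2,
                (self.min_lon + self.max_lon) / 2)

    def scaled(self, factor: float) -> "GeoBox":
        """Grow or shrink the box about its center (assumed 'sensitivity')."""
        c_lat, c_lon = self.center()
        h_lat = (self.max_lat - self.min_lat) / 2 * factor
        h_lon = (self.max_lon - self.min_lon) / 2 * factor
        return GeoBox(c_lat - h_lat, c_lon - h_lon, c_lat + h_lat, c_lon + h_lon)

    def contains(self, lat: float, lon: float) -> bool:
        return (self.min_lat <= lat <= self.max_lat
                and self.min_lon <= lon <= self.max_lon)


def pixel_to_latlon(px: float, py: float, tile: GeoBox,
                    size: int = 2000) -> Tuple[float, float]:
    """Convert image pixels to (lat, lon); pixel y grows southwards."""
    lon = tile.min_lon + px / size * (tile.max_lon - tile.min_lon)
    lat = tile.max_lat - py / size * (tile.max_lat - tile.min_lat)
    return lat, lon


def match_roof(detections: List[Tuple[str, float, float]], building: GeoBox,
               tile: GeoBox, sensitivity: float = 1.0) -> Optional[str]:
    """Return the roof class detected closest to the building center.

    Only detections whose center falls inside the (scaled) building
    bounding box are considered; None means no roof was found.
    """
    search = building.scaled(sensitivity)
    c_lat, c_lon = building.center()
    best, best_dist = None, float("inf")
    for label, px, py in detections:
        lat, lon = pixel_to_latlon(px, py, tile)
        if not search.contains(lat, lon):
            continue
        dist = (lat - c_lat) ** 2 + (lon - c_lon) ** 2
        if dist < best_dist:
            best, best_dist = label, dist
    return best
```

A returned class name would then be written to the building in the resulting OSM file, while a result of `None` leaves the building without a roof. Increasing `sensitivity` widens the search area, which helps with partially covered buildings but raises the chance of picking up a neighboring roof.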

3. Results

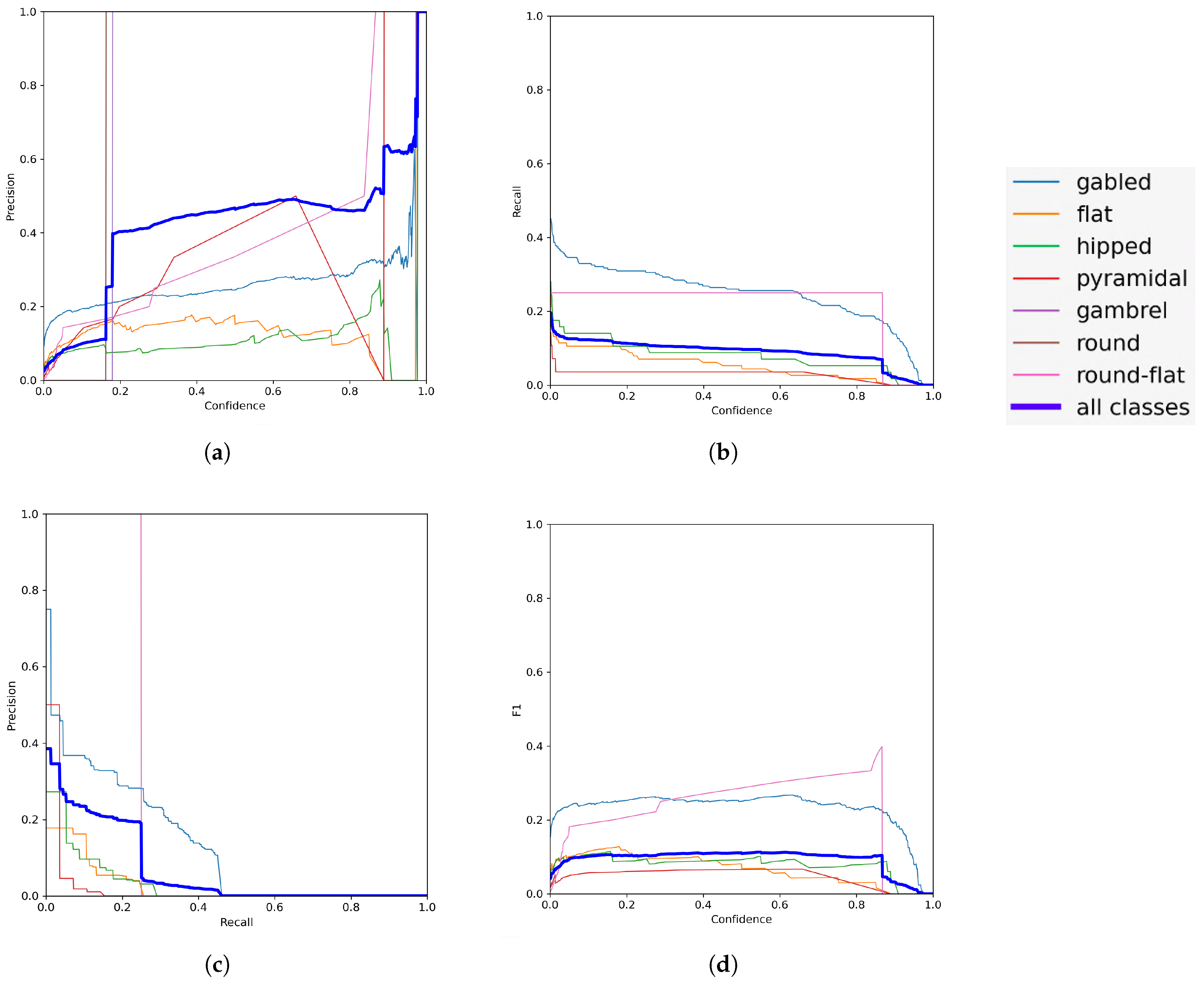

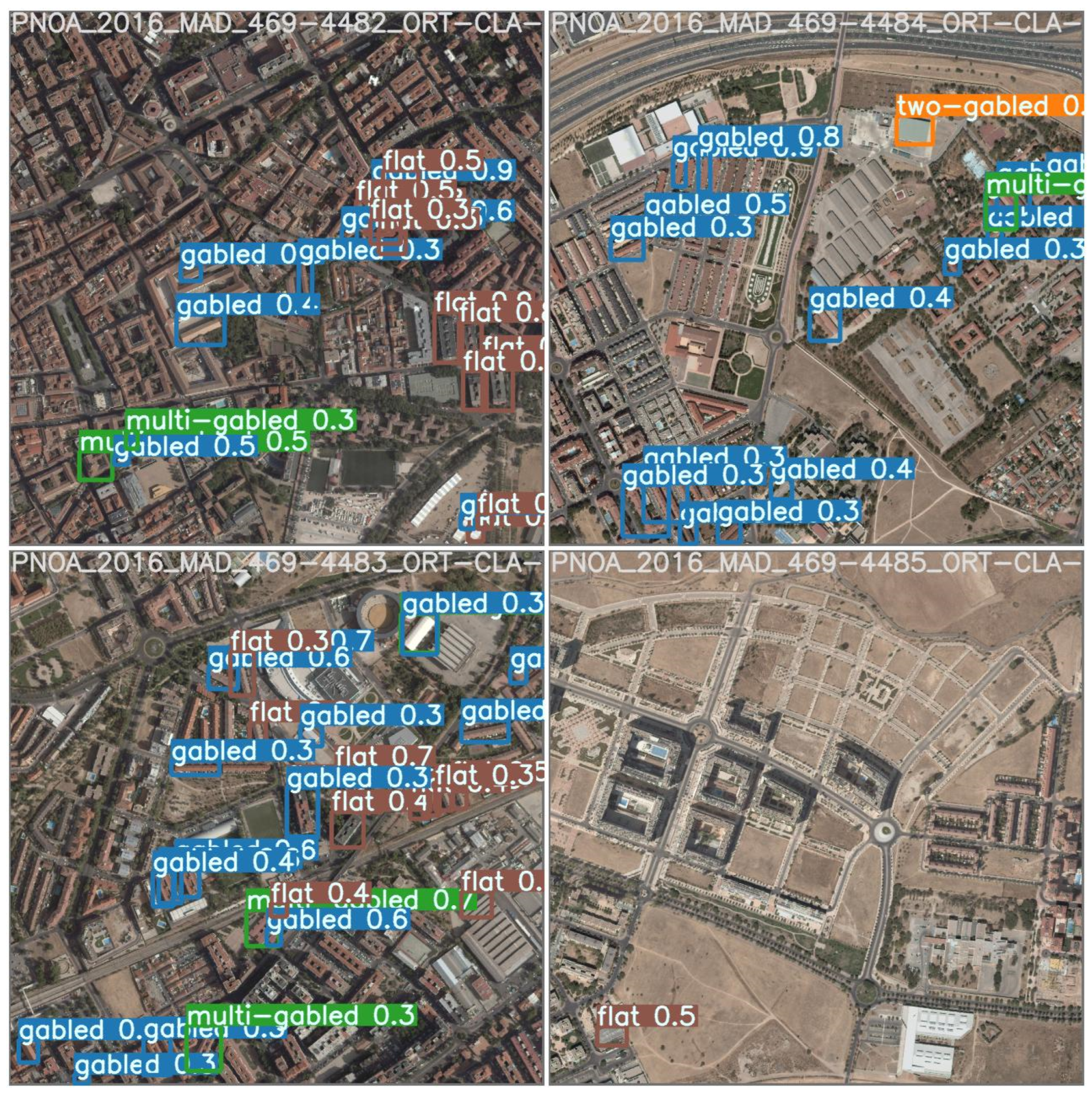

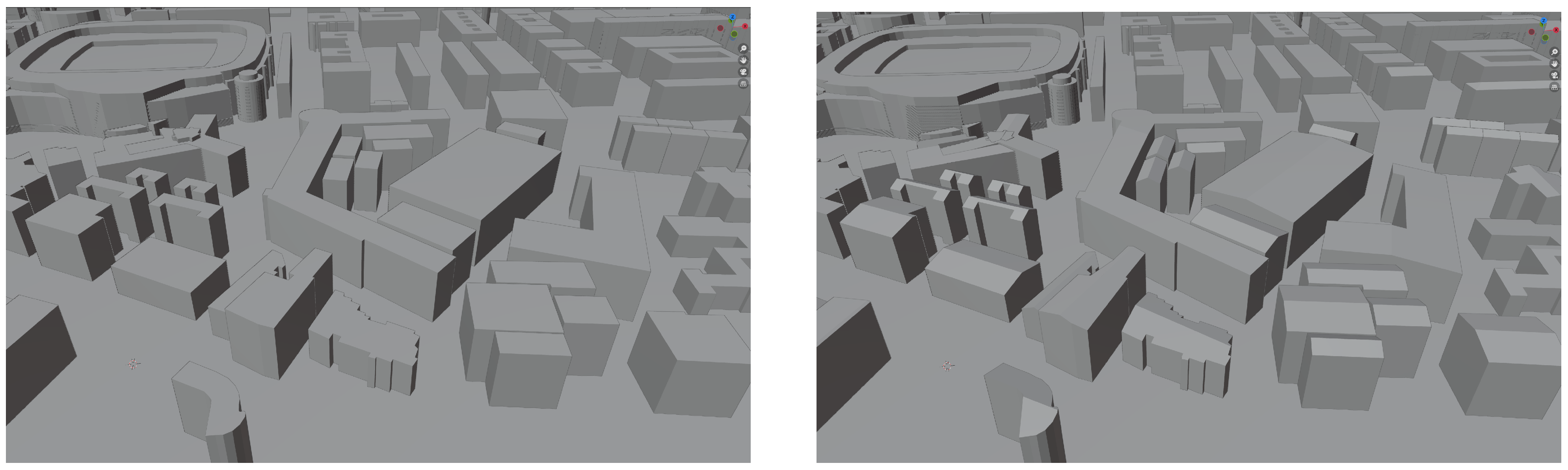

Results Obtained Using CNN for 3D Reconstruction of Building Roofs

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| OSM | OpenStreetMap |
| CNN | Convolutional Neural Network |
| DEM | Digital Elevation Model |
| DFM | Digital Feature Model |
| DTM | Digital Terrain Model |
| DSM | Digital Surface Model |
| DCNN | Dynamic Convolutional Neural Network |
| HTTPS | Hypertext Transfer Protocol Secure |
| HTTP | Hypertext Transfer Protocol |
| JSON | JavaScript Object Notation |
| CSG | Constructive Solid Geometry |
| GLTF | GL Transmission Format |
| DGTOE | Direction General of Teledetection and Earth Observation |
| IGN | National Geographic Institute |
| GPU | Graphics Processing Unit |
| RGB | Red, Green, Blue |


| Process | Tool/Filters | Comments |
|---|---|---|
| Download | Web browser | Download LiDAR data in .laz format |
| Decompression | laszip | Generate point cloud in .las format |
| Cleanup | blast2dem/drop_class | Overlap and vegetation points are removed |
| Generation | blast2dem | Images are generated in PNG format |
| Labeling | Image Annotation Lab | Mark coordinates of rooftops |

| | Alcalá | Luxembourg | Córdoba | Madrid | Zaragoza | Barcelona |
|---|---|---|---|---|---|---|
| Number of buildings | 28 | 288 | 537 | 543 | 2035 | 7455 |
| Original heights | 1 | 1 | 27 | 200 | 17 | 7238 |
| Added heights | 27 | 287 | 533 | 336 | 2016 | 133 |
| Buildings left without height | 0 | 0 | 4 | 7 | 2 | 84 |
| % of added heights | 96.42% | 99.65% | 94.67% | 61.87% | 99.06% | 1.78% |

| | Alcalá | Luxembourg | Córdoba | Madrid | Zaragoza | Barcelona |
|---|---|---|---|---|---|---|
| Number of buildings | 28 | 288 | 537 | 543 | 2035 | 7455 |
| Original roofs | 0 | 0 | 0 | 4 | 0 | 174 |
| Added roofs | 17 | 160 | 148 | 226 | 392 | 2331 |
| Buildings left without roof | 12 | 128 | 389 | 334 | 1643 | 5124 |
| % of added roofs | 60.71% | 55.55% | 27.56% | 40.88% | 19.26% | 28.93% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Barranquero, M.; Olmedo, A.; Gómez, J.; Tayebi, A.; Hellín, C.J.; Saez de Adana, F. Automatic 3D Building Reconstruction from OpenStreetMap and LiDAR Using Convolutional Neural Networks. Sensors 2023, 23, 2444. https://doi.org/10.3390/s23052444
