Continuous Improvement of an Exit Exam Tool for the Effective Assessment of Student Learning in Engineering Education

Abstract

1. Introduction

1.1. Background

1.2. Research Significance

2. Materials and Methods

2.1. Exit Exam Description

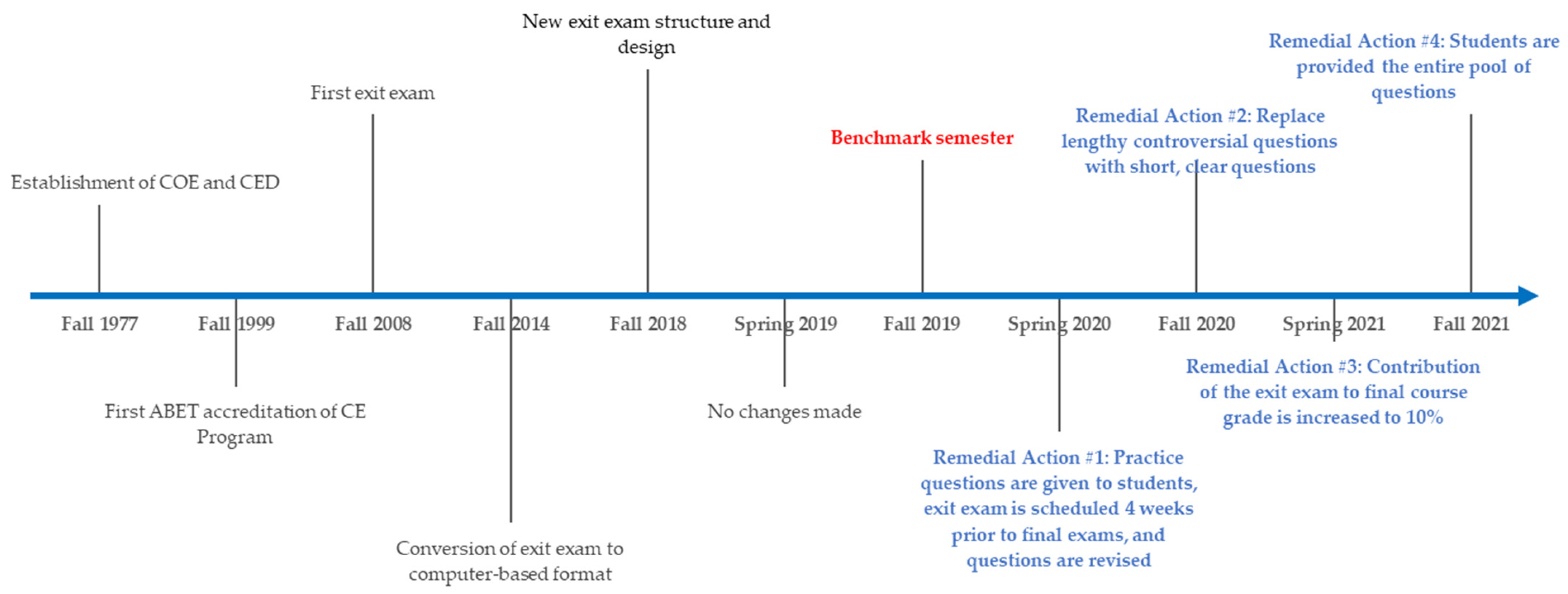

2.2. Remedial Actions

2.3. Participants

2.4. Statistical Data Analysis

3. Results

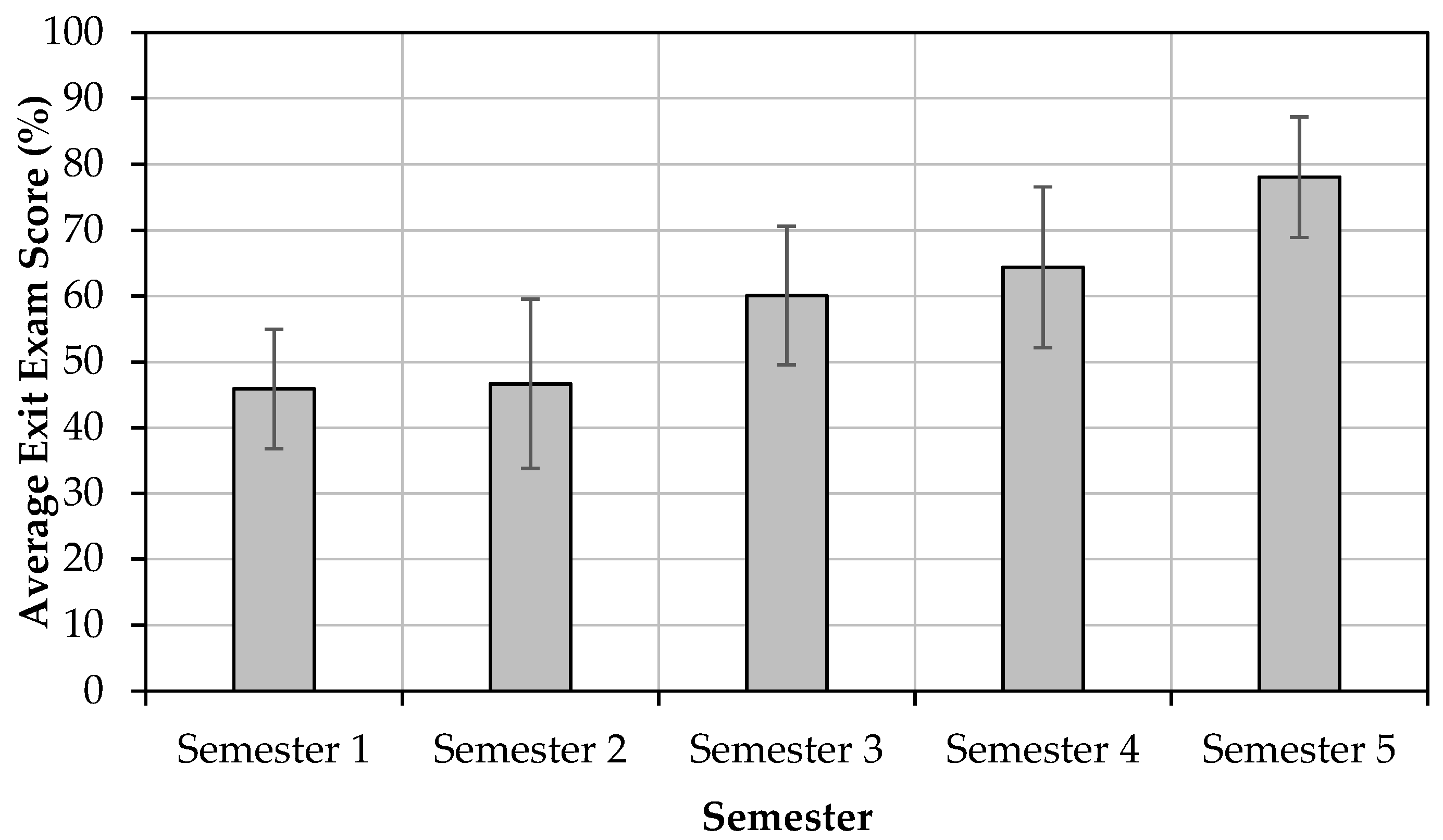

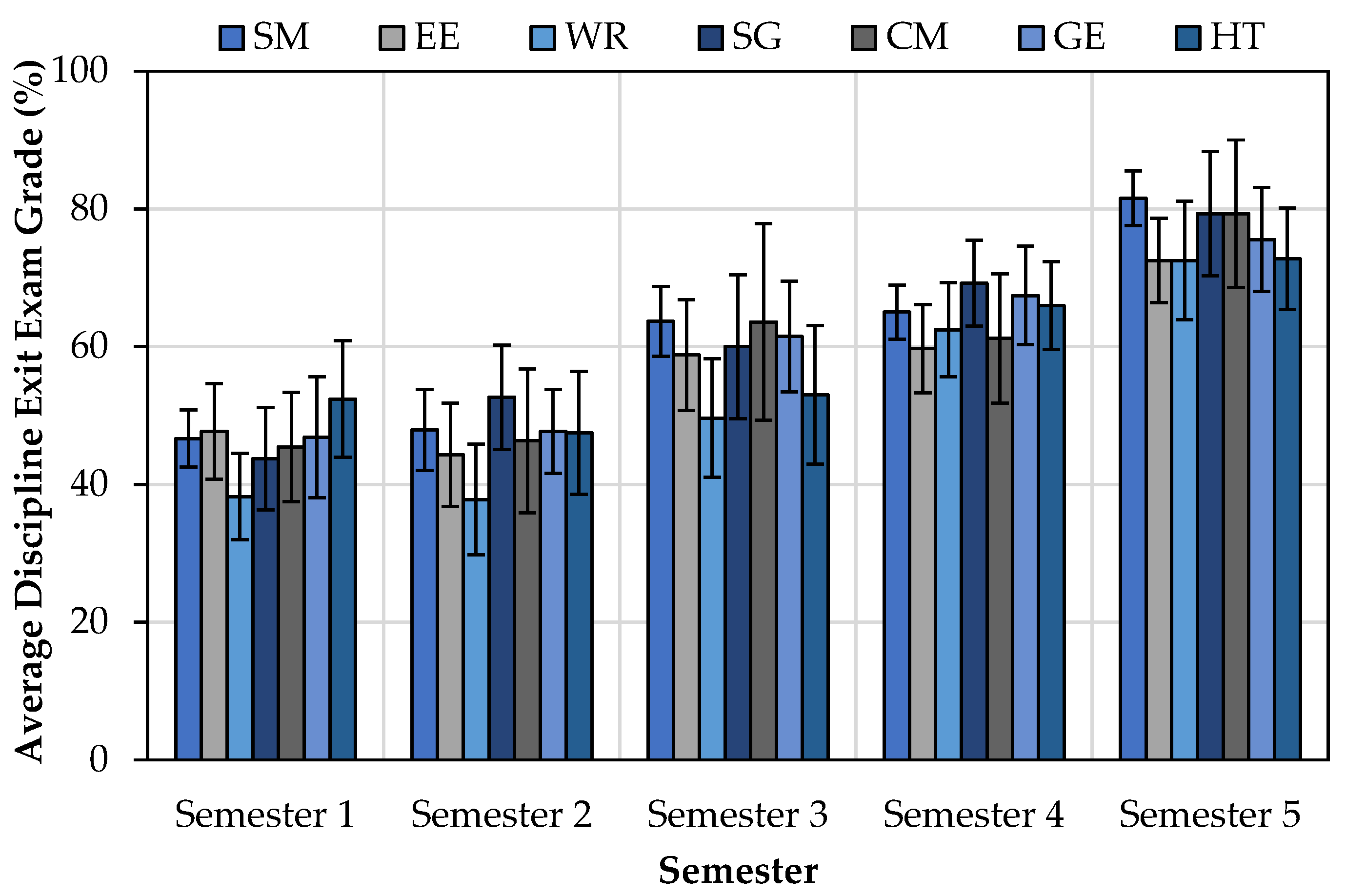

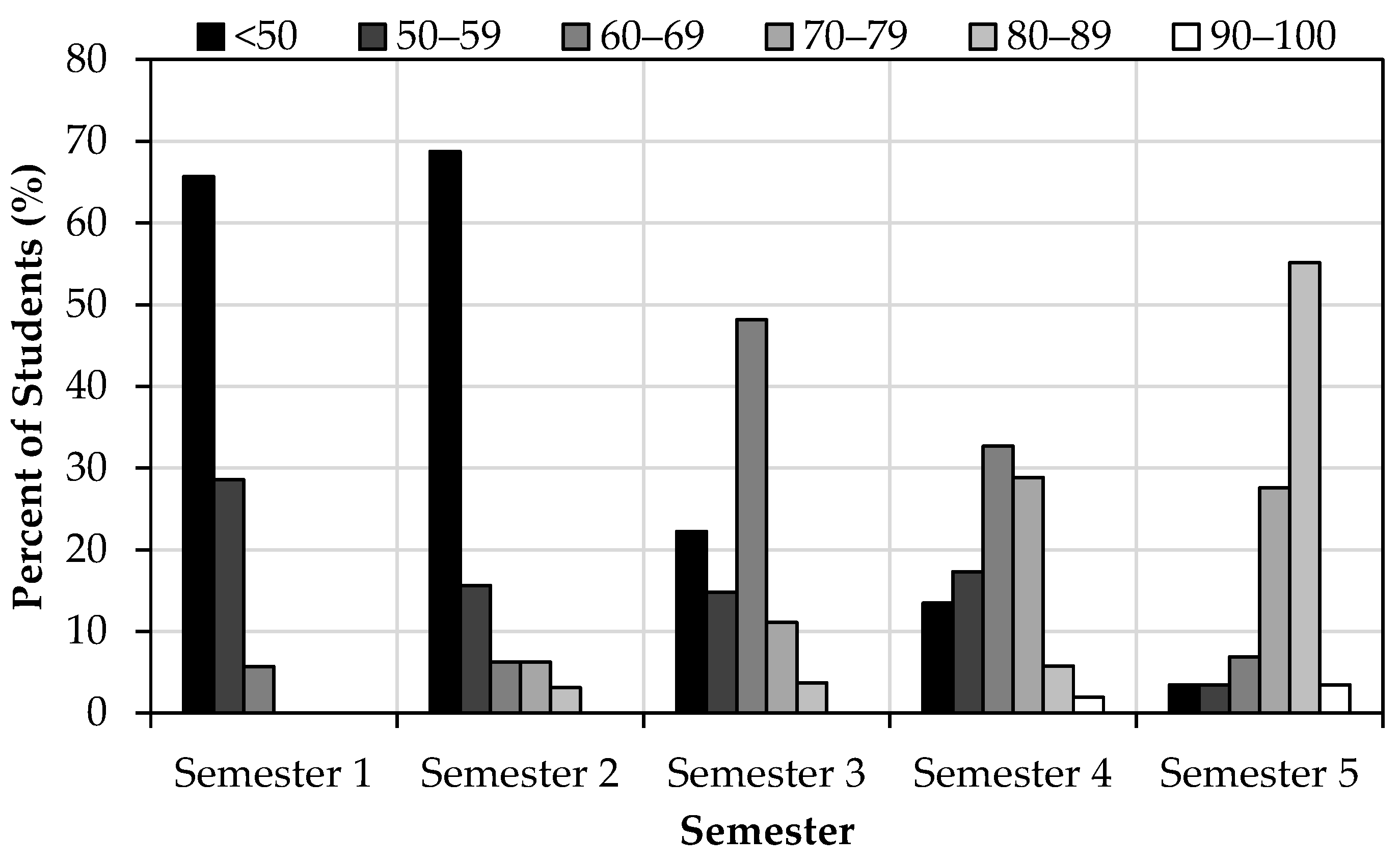

3.1. Exit Exam Grades

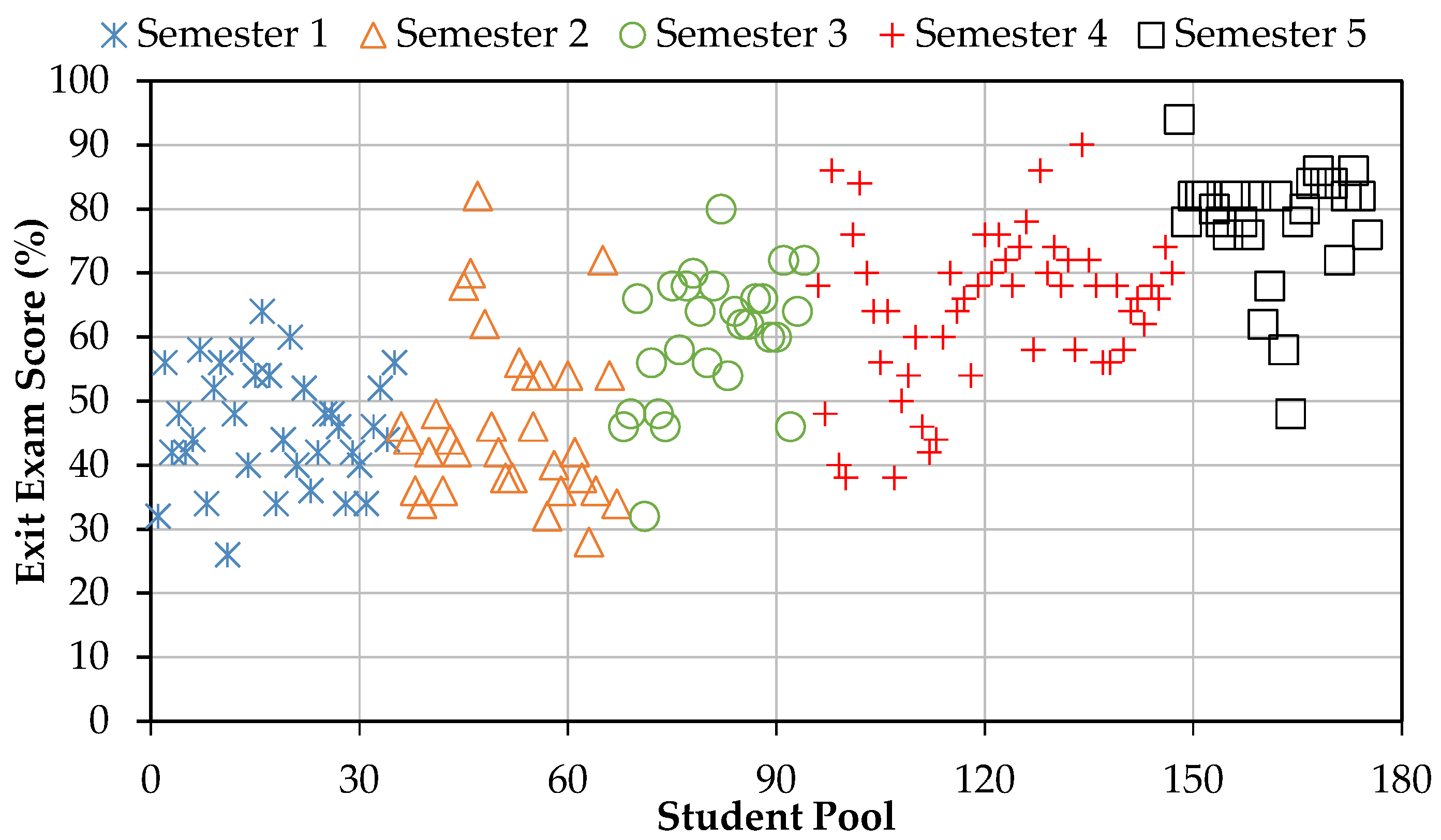

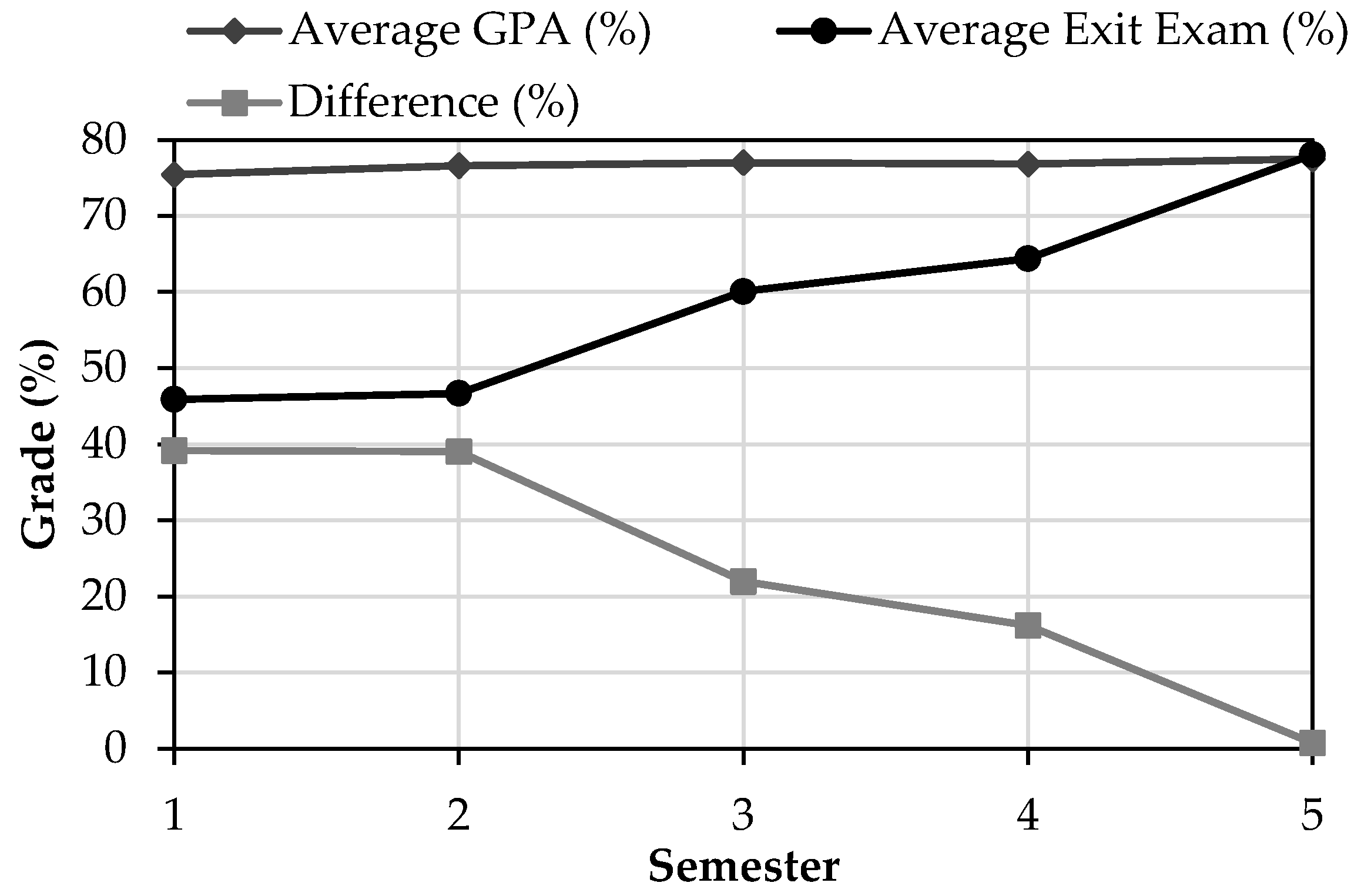

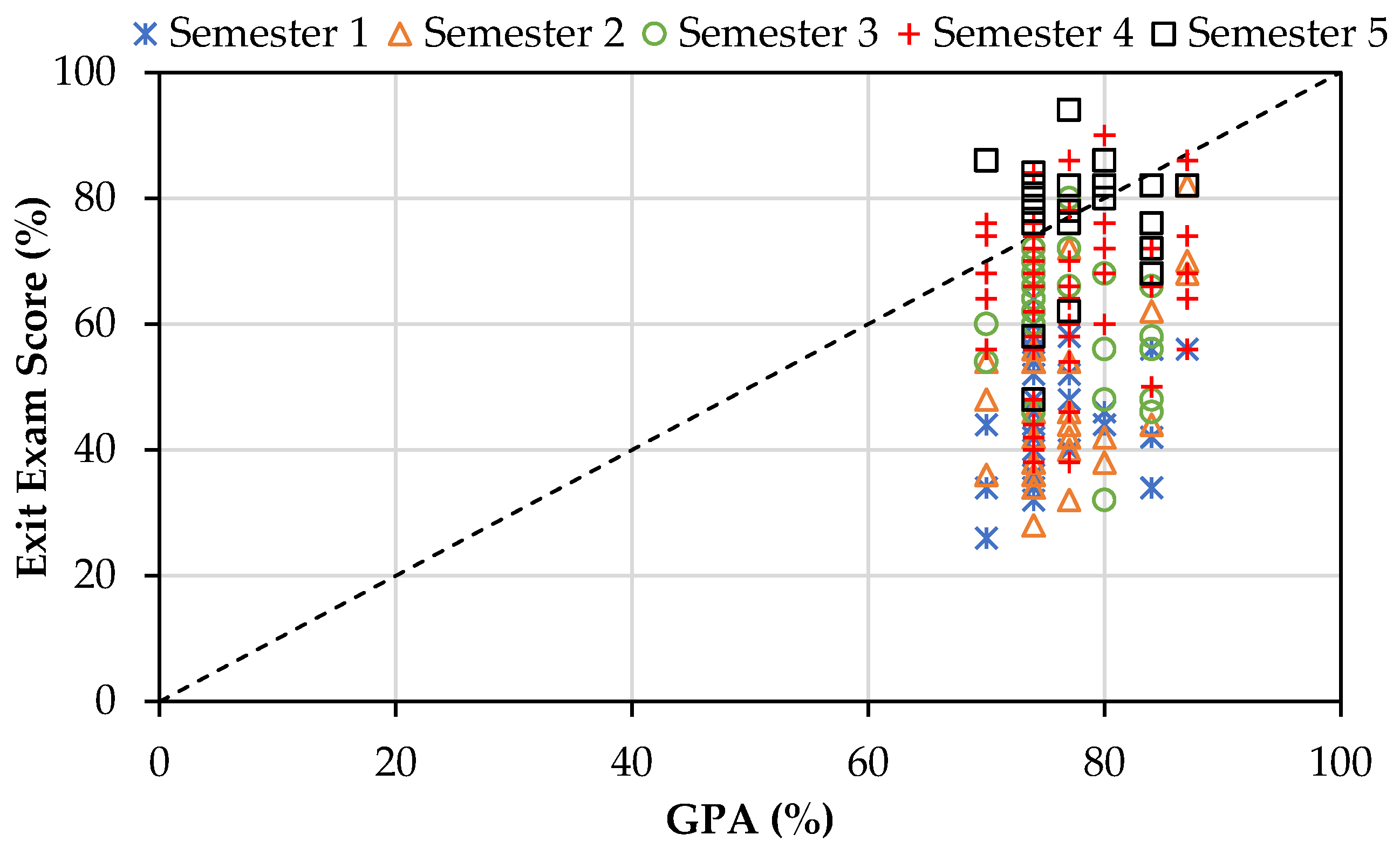

3.2. Relationship between GPA and Exit Exam Score

3.2.1. Overview

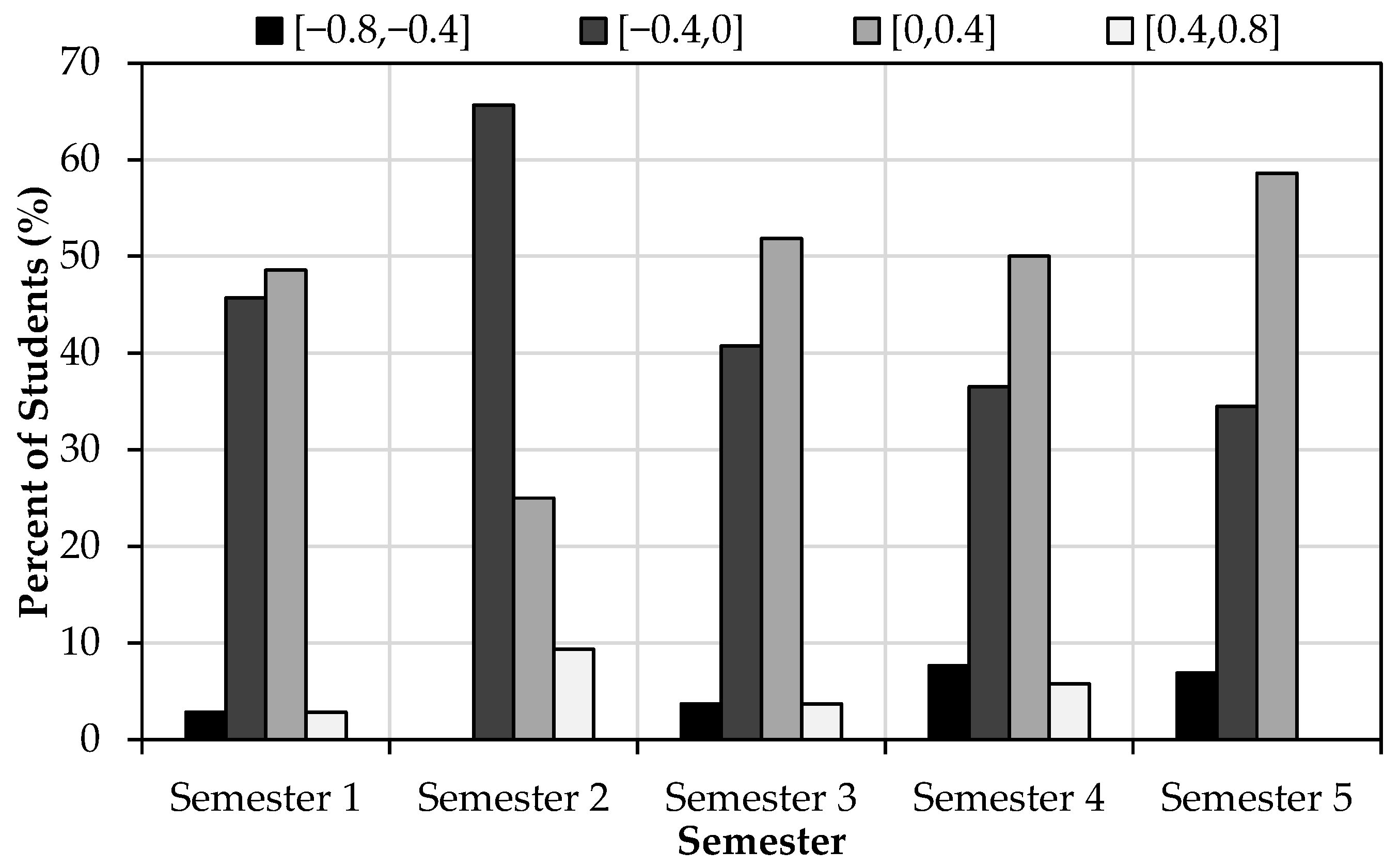

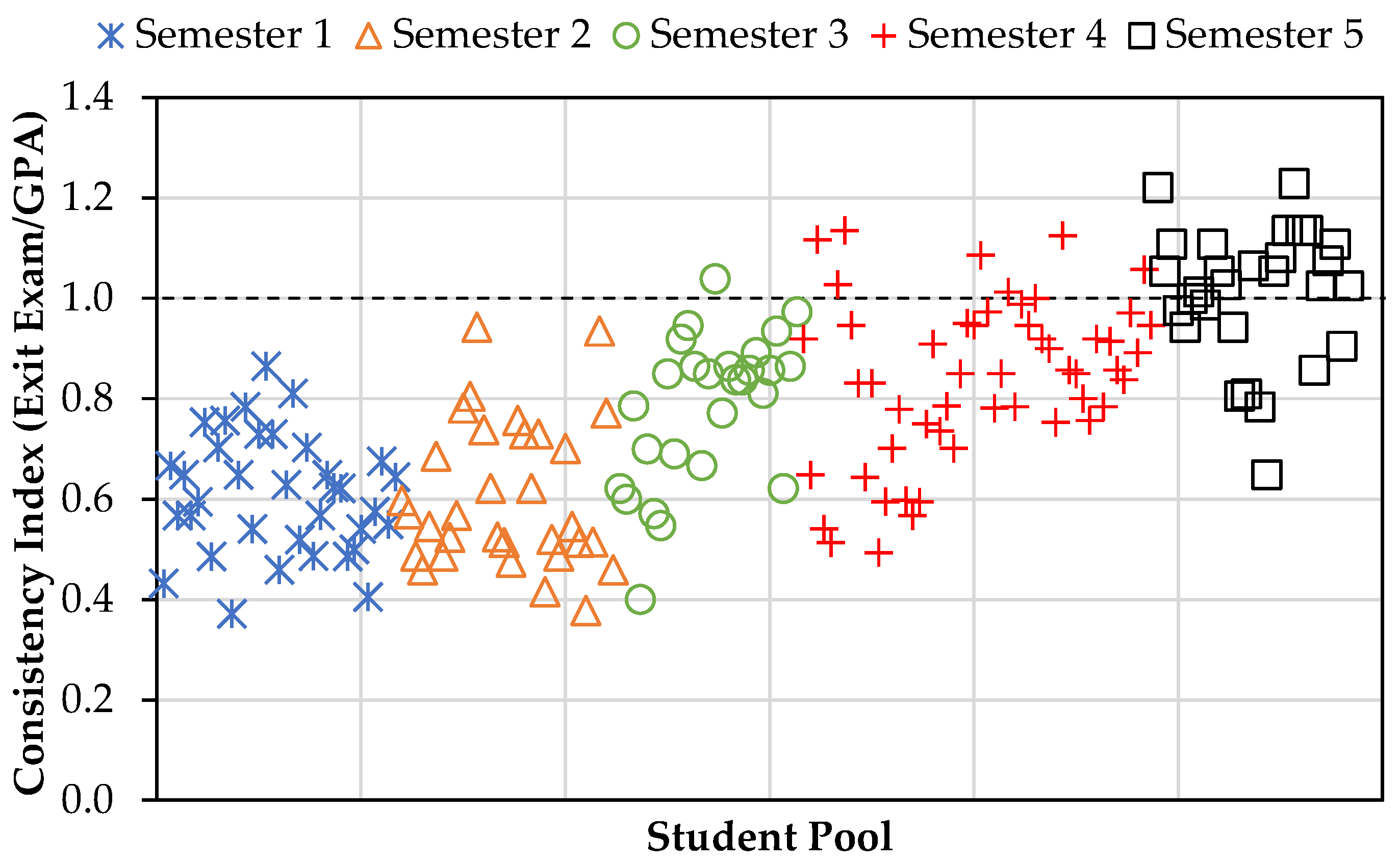

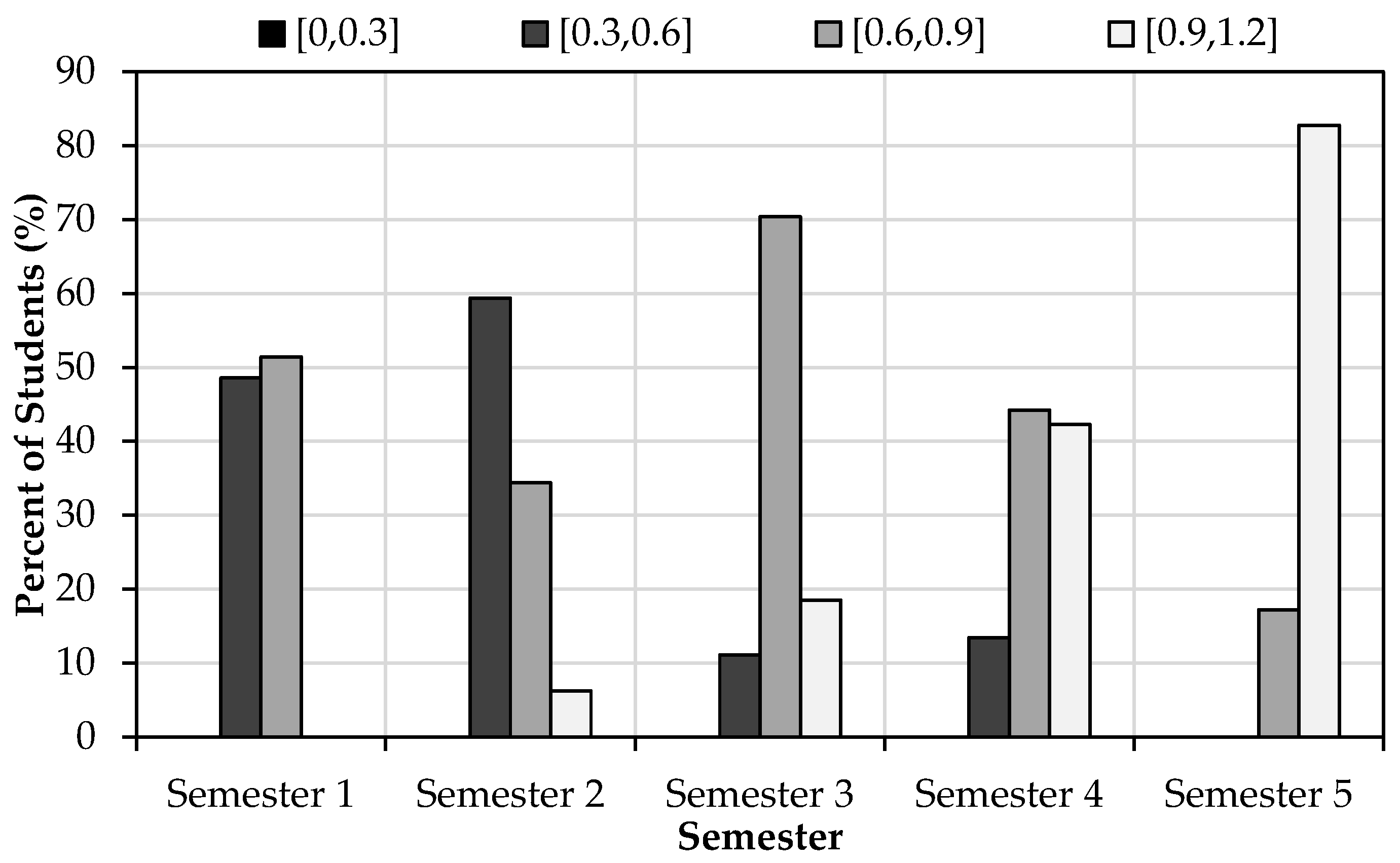

3.2.2. Consistency Index

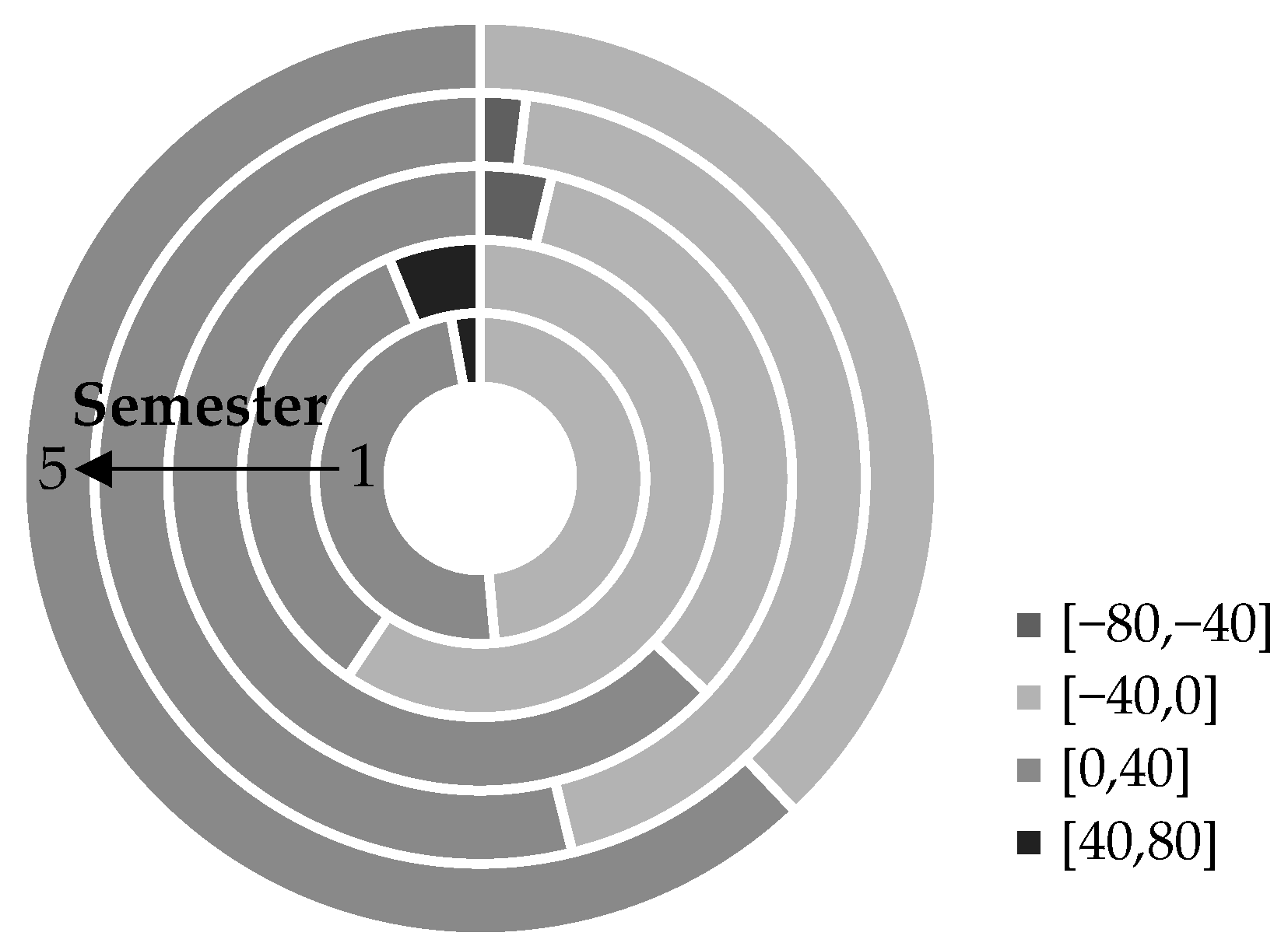

3.2.3. Performance Score

3.3. Exit Exam–GPA Correlation Testing

4. Discussion

4.1. Impact of Remedial Actions

4.2. Quality Assurance and Quality Enhancement

4.3. Limitations and Future Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Semester No. | Semester Designation | Total Number of Students | Students with GPA <70% | Students with GPA 70–79% | Students with GPA 80–89% | Students with GPA >90% | Average GPA (%) |
|---|---|---|---|---|---|---|---|
| 1 | Fall 2019 | 35 | 0 | 29 | 6 | 0 | 75.5 ± 4.1 |
| 2 | Spring 2020 | 32 | 0 | 25 | 7 | 0 | 76.6 ± 4.5 |
| 3 | Fall 2020 | 27 | 0 | 17 | 10 | 0 | 77.0 ± 4.9 |
| 4 | Spring 2021 | 52 | 0 | 38 | 14 | 0 | 76.8 ± 5.2 |
| 5 | Fall 2021 | 29 | 0 | 19 | 10 | 0 | 77.5 ± 4.1 |
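
The participant counts in the table above can be cross-checked with a short script. The following is a minimal sketch, not part of the original study: it transcribes the table rows, confirms that the four GPA-band counts add up to each semester's total, and estimates an enrollment-weighted mean GPA across the five semesters. The names `SEMESTERS`, `bands_match_totals`, and `pooled_mean_gpa` are hypothetical, and the pooled figure is only approximate because it is built from the rounded per-semester means.

```python
# Rows transcribed from the semester table above.
# Each entry: (semester, total students, counts per GPA band, mean GPA in %).
# GPA bands, in order: <70%, 70-79%, 80-89%, >90%.
SEMESTERS = [
    ("Fall 2019", 35, (0, 29, 6, 0), 75.5),
    ("Spring 2020", 32, (0, 25, 7, 0), 76.6),
    ("Fall 2020", 27, (0, 17, 10, 0), 77.0),
    ("Spring 2021", 52, (0, 38, 14, 0), 76.8),
    ("Fall 2021", 29, (0, 19, 10, 0), 77.5),
]


def bands_match_totals(rows):
    """Return True when the GPA-band counts sum to the reported total in every row."""
    return all(sum(bands) == total for _, total, bands, _ in rows)


def pooled_mean_gpa(rows):
    """Enrollment-weighted mean of the (rounded) per-semester GPA means."""
    n_students = sum(total for _, total, _, _ in rows)
    return sum(total * gpa for _, total, _, gpa in rows) / n_students


if __name__ == "__main__":
    print("Band counts match totals:", bands_match_totals(SEMESTERS))
    print("Approximate pooled mean GPA (%):", round(pooled_mean_gpa(SEMESTERS), 2))
```

Weighting by enrollment keeps the larger Spring 2021 cohort (52 students) from being counted the same as the smaller Fall 2020 cohort (27 students).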

| Semester | Number of Students | Benchmark Score * (%) | Average Exit Exam (%) | Confidence Interval | t-Value | Null Hypothesis |
|---|---|---|---|---|---|---|
| 2 | 32 | 45.9 | 46.7 | [−6.19, 4.58] | −0.29 | Cannot be Rejected |
| 3 | 27 | 45.9 | 60.1 | [−19.17, −9.21] | −5.58 | Rejected |
| 4 | 52 | 45.9 | 64.4 | [−22.98, −14.02] | −8.09 | Rejected |
| 5 | 29 | 45.9 | 78.1 | [−36.68, −27.69] | −14.04 | Rejected |
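
The decisions in the benchmark comparison above follow the usual confidence-interval reading of a t-test: the null hypothesis of no difference is rejected when the interval excludes zero. The sketch below replays that rule on the tabulated values only, since the raw scores are not available here. It assumes the intervals describe the difference "benchmark minus semester average", a sign convention inferred from the table rather than stated in it. The names `decision_from_ci` and `check_rows` are hypothetical.

```python
BENCHMARK = 45.9  # benchmark score (%) as listed in the table

# Rows transcribed from the table: (semester, mean exit exam %, CI low, CI high, reported decision)
ROWS = [
    (2, 46.7, -6.19, 4.58, "Cannot be Rejected"),
    (3, 60.1, -19.17, -9.21, "Rejected"),
    (4, 64.4, -22.98, -14.02, "Rejected"),
    (5, 78.1, -36.68, -27.69, "Rejected"),
]


def decision_from_ci(low, high):
    """Reject the null of zero mean difference when the interval excludes 0."""
    return "Cannot be Rejected" if low <= 0.0 <= high else "Rejected"


def check_rows(rows, benchmark=BENCHMARK, tol=0.1):
    """For each row, compare the CI midpoint and CI-based decision with the table.

    Assumption: the interval is for (benchmark - semester mean); tol absorbs
    rounding of the published values to one or two decimals.
    """
    results = []
    for semester, mean, low, high, reported in rows:
        diff = benchmark - mean
        midpoint = (low + high) / 2
        results.append({
            "semester": semester,
            "difference": round(diff, 2),
            "midpoint_ok": abs(diff - midpoint) <= tol,
            "decision_ok": decision_from_ci(low, high) == reported,
        })
    return results


if __name__ == "__main__":
    for row in check_rows(ROWS):
        print(row)
```

Under that assumed sign convention, each interval is centred on the tabulated mean difference, and only the Semester 2 interval spans zero.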

| Semester | Number of Students | Average GPA (%) | Average Exit Exam (%) | Confidence Interval | t-Value | Null Hypothesis |
|---|---|---|---|---|---|---|
| 1 | 35 | 75.5 | 45.9 | [26.3, 32.9] | 17.54 | Rejected |
| 2 | 32 | 76.6 | 46.7 | [25.2, 34.6] | 12.40 | Rejected |
| 3 | 27 | 77.0 | 60.1 | [12.6, 21.2] | 7.70 | Rejected |
| 4 | 52 | 76.8 | 64.4 | [8.9, 16.0] | 6.83 | Rejected |
| 5 | 29 | 77.5 | 78.1 | [−4.4, 3.2] | −0.30 | Cannot be Rejected |
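
The tabulated GPA and exit exam comparison can also be checked for internal consistency. Because a t-value is the mean difference divided by its standard error, the table implies a standard error for each semester, and dividing the interval half-width by that standard error gives the critical multiplier used to build the interval. The sketch below performs this back-calculation. It is an illustration on the published summary values, not the authors' procedure; the confidence level is not stated in this excerpt, and `implied_stats` is a hypothetical helper.

```python
# Rows transcribed from the table:
# (semester, mean GPA %, mean exit exam %, CI low, CI high, reported t-value)
ROWS = [
    (1, 75.5, 45.9, 26.3, 32.9, 17.54),
    (2, 76.6, 46.7, 25.2, 34.6, 12.40),
    (3, 77.0, 60.1, 12.6, 21.2, 7.70),
    (4, 76.8, 64.4, 8.9, 16.0, 6.83),
    (5, 77.5, 78.1, -4.4, 3.2, -0.30),
]


def implied_stats(gpa, exam, low, high, t_value):
    """Back out the standard error and critical multiplier implied by one row.

    diff       = mean GPA - mean exit exam
    std_error  = diff / t            (from t = diff / std_error)
    multiplier = half-width / std_error
    """
    diff = gpa - exam
    std_error = diff / t_value
    half_width = (high - low) / 2
    return diff, std_error, half_width / std_error


if __name__ == "__main__":
    for semester, gpa, exam, low, high, t_value in ROWS:
        diff, std_error, multiplier = implied_stats(gpa, exam, low, high, t_value)
        print(f"Semester {semester}: diff={diff:.1f}, SE={std_error:.2f}, multiplier={multiplier:.2f}")
```

For every semester the implied multiplier comes out close to 2, which would be consistent with intervals at roughly the 95% level. The Semester 5 value is the least precise because its t-value is small and rounded to two decimals.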

| Semester No. | Semester Designation | Exit Exam | PLO1 | PLO2 | PLO4 | PLO6 |
|---|---|---|---|---|---|---|
| 1 | Fall 2019 | 6% | 6% | 22% | 25% | 19% |
| 2 | Spring 2020 | 15% | 16% | 16% | 16% | 18% |
| 3 | Fall 2020 | 63% | 56% | 56% | 56% | 33% |
| 4 | Spring 2021 | 69% | 73% | 52% | 65% | 46% |
| 5 | Fall 2021 | 93% | 93% | 93% | 69% | 66% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

El-Hassan, H.; Issa, A.; Hamouda, M.A.; Maraqa, M.A.; El-Maaddawy, T. Continuous Improvement of an Exit Exam Tool for the Effective Assessment of Student Learning in Engineering Education. Trends High. Educ. 2024, 3, 560-577. https://doi.org/10.3390/higheredu3030033
