Inter-Subject EEG Synchronization during a Cooperative Motor Task in a Shared Mixed-Reality Environment

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Experimental Procedures

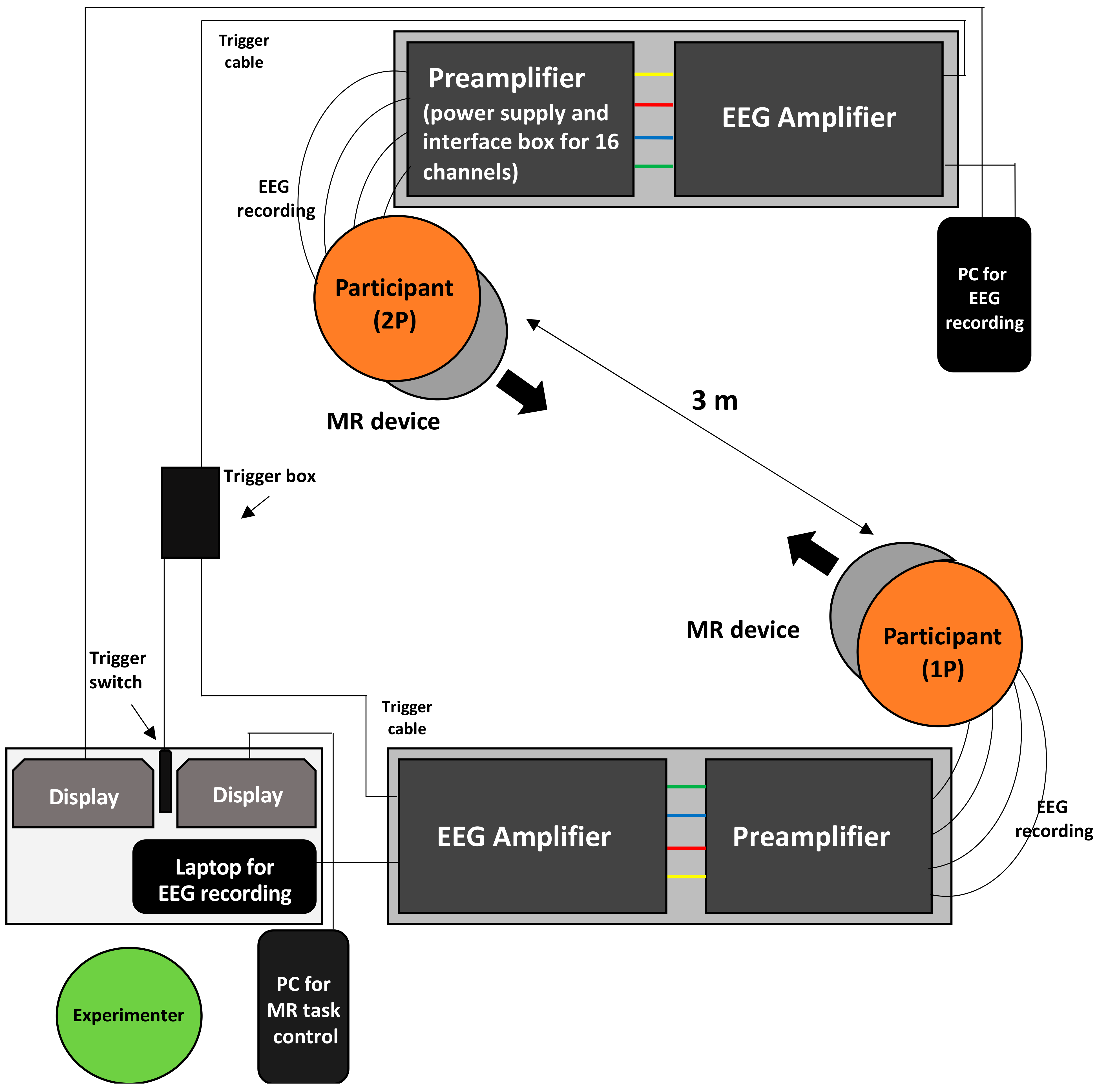

2.3. EEG Recordings

2.4. Data Analysis

2.4.1. Subjective Ratings

2.4.2. EEG Data

3. Results

3.1. Subjective Reports

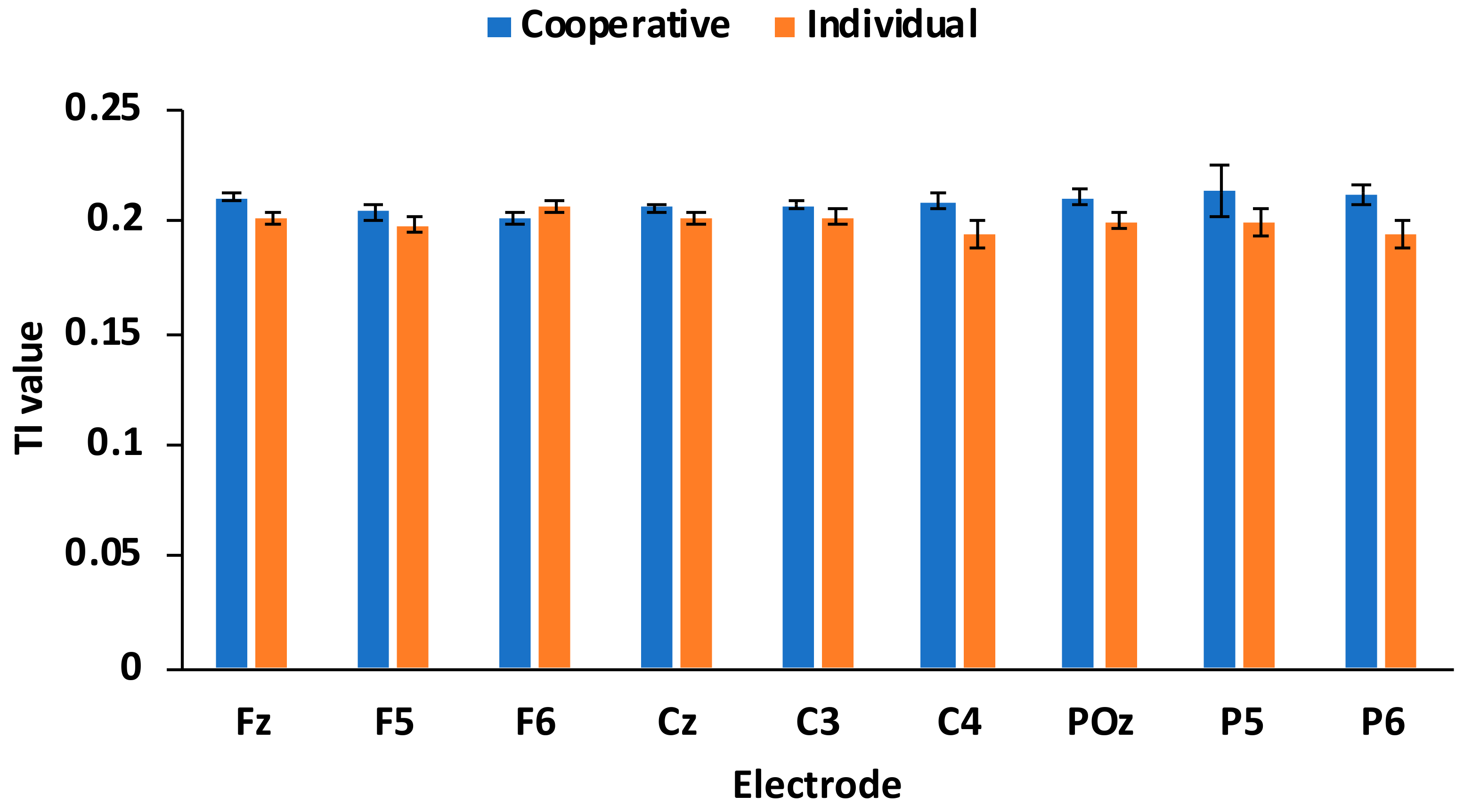

3.2. Inter-Brain Synchronization

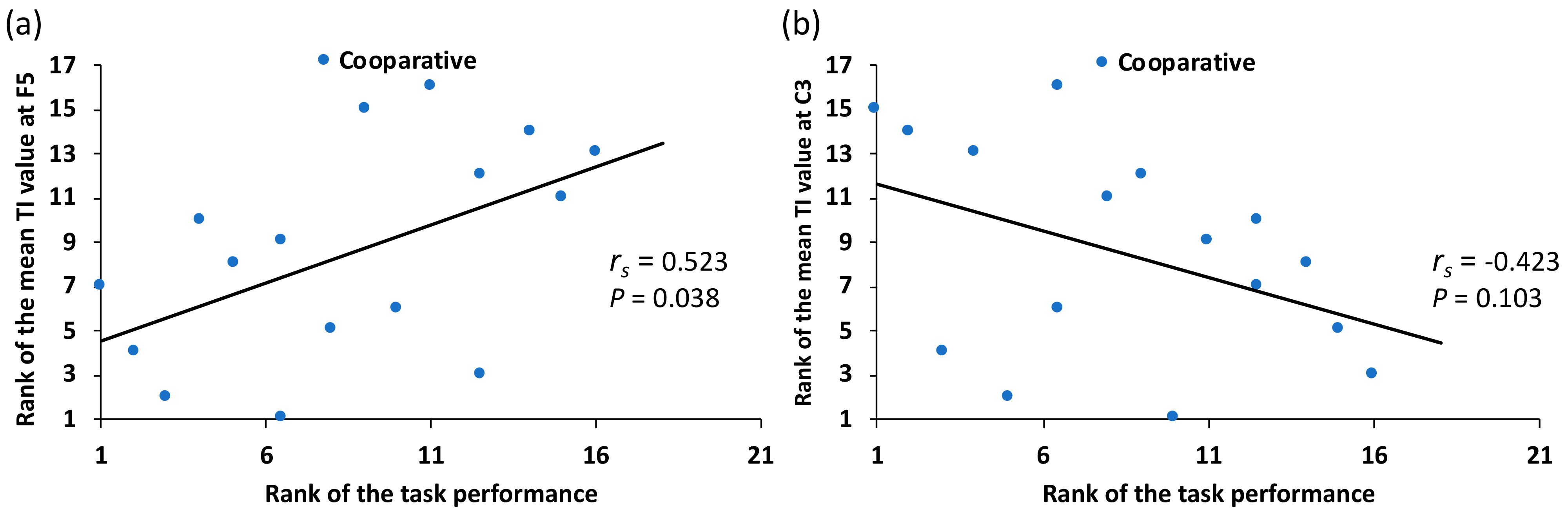

3.3. Correlation between Subjective Reports and Inter-Brain Synchronization

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ens, B.; Lanir, J.; Tang, A.; Bateman, S.; Lee, G.; Piumsomboon, T.; Billinghurst, M. Revisiting collaboration through mixed reality: The evolution of groupware. Int. J. Hum. Comput. Stud. 2019, 131, 81–98.

- Rokhsaritalemi, S.; Sadeghi-Niaraki, A.; Choi, S.-M. A review on mixed reality: Current trends, challenges and prospects. Appl. Sci. 2020, 10, 636.

- Milgram, P.; Kishino, F. A taxonomy of mixed reality visual displays. IEICE Trans. Inf. Syst. 1994, E77D, 1321–1329.

- Lindgren, R.; Tscholl, M.; Wang, S.; Johnson, E. Enhancing learning and engagement through embodied interaction within a mixed reality simulation. Comput. Educ. 2016, 95, 174–187.

- Aguayo, C.; Danobeitia, C.; Cochrane, T.; Aiello, S.; Cook, S.; Cuevas, A. Embodied reports in paramedicine mixed reality learning. Res. Learn. Technol. 2018, 26, 2150.

- Leonard, S.N.; Fitzgerald, R.N. Holographic learning: A mixed reality trial of Microsoft HoloLens in an Australian secondary school. Res. Learn. Technol. 2018, 26, 2160.

- Ali, A.A.; Dafoulas, G.A.; Augusto, J.C. Collaborative Educational Environments Incorporating Mixed Reality Technologies: A Systematic Mapping Study. IEEE Trans. Learn. Technol. 2019, 12, 321–332.

- Piumsomboon, T.; Dey, A.; Ens, B.; Lee, G.; Billinghurst, M. The effects of sharing awareness cues in collaborative mixed reality. Front. Robot. AI 2019, 6, 5.

- Ask, T.F.; Kullman, K.; Sutterlin, S.; Knox, B.J.; Engel, D.; Lugo, R.G. A 3D mixed reality visualization of network topology and activity results in better dyadic cyber team communication and cyber situational awareness. Front. Big Data 2023, 6, 1042783.

- Dumas, G.; Nadel, J.; Soussignan, R.; Martinerie, J.; Garnero, L. Inter-brain synchronization during social interaction. PLoS ONE 2010, 5, e12166.

- Hari, R.; Himberg, T.; Nummenmaa, L.; Hamalainen, M.; Parkkonen, L. Synchrony of brains and bodies during implicit interpersonal interaction. Trends Cogn. Sci. 2013, 17, 105–106.

- Schilbach, L.; Timmermans, B.; Reddy, V.; Costall, A.; Bente, G.; Schlicht, T.; Vogeley, K. Toward a second-person neuroscience. Behav. Brain Sci. 2013, 36, 393–462.

- Babiloni, F.; Astolfi, L. Social neuroscience and hyperscanning techniques: Past, present and future. Neurosci. Biobehav. Rev. 2014, 44, 76–93.

- Koike, T.; Tanabe, H.C.; Sadato, N. Hyperscanning neuroimaging technique to reveal the “two-in-one” system in social interactions. Neurosci. Res. 2015, 90, 25–32.

- Yun, K.; Watanabe, K.; Shimojo, S. Interpersonal body and neural synchronization as a marker of implicit social interaction. Sci. Rep. 2012, 2, 959.

- Lindenberger, U.; Li, S.C.; Gruber, W.; Muller, V. Brains swinging in concert: Cortical phase synchronization while playing guitar. BMC Neurosci. 2009, 10, 22.

- Muller, V.; Saenger, J.; Lindenberger, U. Hyperbrain network properties of guitarists playing in quartet. Ann. N. Y. Acad. Sci. 2018, 1423, 198–210.

- Kawasaki, M.; Yamada, Y.; Ushiku, Y.; Miyauchi, E.; Yamaguchi, Y. Inter-brain synchronization during coordination of speech rhythm in human-to-human social interaction. Sci. Rep. 2013, 3, 1692.

- Saito, D.N.; Tanabe, H.C.; Izuma, K.; Hayashi, M.J.; Morito, Y.; Komeda, H.; Uchiyama, H.; Kosaka, H.; Okazawa, H.; Fujibayashi, Y.; et al. “Stay tuned”: Inter-individual neural synchronization during mutual gaze and joint attention. Front. Integr. Neurosci. 2010, 4, 127.

- Cui, X.; Bryant, D.M.; Reiss, A.L. NIRS-based hyperscanning reveals increased interpersonal coherence in superior frontal cortex during cooperation. NeuroImage 2012, 59, 2430–2437.

- Jiang, J.; Dai, B.H.; Peng, D.L.; Zhu, C.Z.; Liu, L.; Lu, C.M. Neural Synchronization during Face-to-Face Communication. J. Neurosci. 2012, 32, 16064–16069.

- Tanabe, H.C.; Kosaka, H.; Saito, D.N.; Koike, T.; Hayashi, M.J.; Izuma, K.; Komeda, H.; Ishitobi, M.; Omori, M.; Munesue, T.; et al. Hard to “tune in”: Neural mechanisms of live face-to-face interaction with high-functioning autistic spectrum disorder. Front. Hum. Neurosci. 2012, 6, 268.

- Liu, N.; Mok, C.; Witt, E.E.; Pradhan, A.H.; Chen, J.E.; Reiss, A.L. NIRS-Based Hyperscanning Reveals Inter-brain Neural Synchronization during Cooperative Jenga Game with Face-to-Face Communication. Front. Hum. Neurosci. 2016, 10, 82.

- Hirsch, J.; Zhang, X.; Noah, J.A.; Ono, Y. Frontal temporal and parietal systems synchronize within and across brains during live eye-to-eye contact. NeuroImage 2017, 157, 314–330.

- Jahng, J.; Kralik, J.D.; Hwang, D.-U.; Jeong, J. Neural dynamics of two players when using nonverbal cues to gauge intentions to cooperate during the Prisoner’s Dilemma Game. NeuroImage 2017, 157, 263–274.

- Dikker, S.; Wan, L.; Davidesco, I.; Kaggen, L.; Oostrik, M.; McClintock, J.; Rowland, J.; Michalareas, G.; Van Bavel, J.J.; Ding, M.Z.; et al. Brain-to-Brain Synchrony Tracks Real-World Dynamic Group Interactions in the Classroom. Curr. Biol. 2017, 27, 1375–1380.

- Barraza, P.; Dumas, G.; Liu, H.; Blanco-Gomez, G.; Van Den Heuvel, M.I.; Baart, M.; Pérez, A. Implementing EEG hyperscanning setups. MethodsX 2019, 6, 428–436.

- Delorme, A.; Makeig, S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 2004, 134, 9–21.

- Picton, T.W.; van Roon, P.; Armilio, M.L.; Berg, P.; Ille, N.; Scherg, M. The correction of ocular artifacts: A topographic perspective. Clin. Neurophysiol. 2000, 111, 53–65.

- van der Helden, J.; Boksem, M.A.S.; Blom, J.H.G. The Importance of Failure: Feedback-Related Negativity Predicts Motor Learning Efficiency. Cereb. Cortex 2010, 20, 1596–1603.

- Burgess, A.P. On the interpretation of synchronization in EEG hyperscanning studies: A cautionary note. Front. Hum. Neurosci. 2013, 7, 881.

- Tenke, C.E.; Kayser, J.; Alvarenga, J.E.; Abraham, K.S.; Warner, V.; Talati, A.; Weissman, M.M.; Bruder, G.E. Temporal stability of posterior EEG alpha over twelve years. Clin. Neurophysiol. 2018, 129, 1410–1417.

- Bevilacqua, D.; Davidesco, I.; Wan, L.; Chaloner, K.; Rowland, J.; Ding, M.Z.; Poeppel, D.; Dikker, S. Brain-to-Brain Synchrony and Learning Outcomes Vary by Student-Teacher Dynamics: Evidence from a Real-world Classroom Electroencephalography Study. J. Cogn. Neurosci. 2019, 31, 401–411.

- Wen, X.T.; Mo, J.; Ding, M.Z. Exploring resting-state functional connectivity with total interdependence. NeuroImage 2012, 60, 1587–1595.

- Dmochowski, J.P.; Sajda, P.; Dias, J.; Parra, L.C. Correlated components of ongoing EEG point to emotionally laden attention: A possible marker of engagement? Front. Hum. Neurosci. 2012, 6, 112.

- Shimada, S.; Matsumoto, M.; Takahashi, H.; Yomogida, Y.; Matsumoto, K. Coordinated activation of premotor and ventromedial prefrontal cortices during vicarious reward. Soc. Cogn. Affect. Neurosci. 2016, 11, 508–511.

- Koide, T.; Shimada, S. Cheering enhances inter-brain synchronization between sensorimotor areas of player and observer. Jpn. Psychol. Res. 2018, 60, 265–275.

- Gallotti, M.; Frith, C. Social cognition in the we-mode. Trends Cogn. Sci. 2013, 17, 160–165.

- Funane, T.; Kiguchi, M.; Atsumori, H.; Sato, H.; Kubota, K.; Koizumi, H. Synchronous activity of two people’s prefrontal cortices during a cooperative task measured by simultaneous near-infrared spectroscopy. J. Biomed. Opt. 2011, 16, 077011.

- Balconi, M.; Vanutelli, M.E. EEG hyperscanning and behavioral synchronization during a joint action. Neuropsychol. Trends 2018, 24, 23–47.

- Dai, R.N.; Liu, R.; Liu, T.; Zhang, Z.; Xiao, X.; Sun, P.P.; Yu, X.T.; Wang, D.H.; Zhu, C.Z. Holistic cognitive and neural processes: A fNIRS-hyperscanning study on interpersonal sensorimotor synchronization. Soc. Cogn. Affect. Neurosci. 2018, 13, 1141–1154.

- Osaka, N.; Minamoto, T.; Yaoi, K.; Azuma, M.; Shimada, Y.M.; Osaka, M. How Two Brains Make One Synchronized Mind in the Inferior Frontal Cortex: fNIRS-Based Hyperscanning During Cooperative Singing. Front. Psychol. 2015, 6, 1811.

- Pan, Y.; Dikker, S.; Goldstein, P.; Zhu, Y.; Yang, C.; Hu, Y. Instructor-learner brain coupling discriminates between instructional approaches and predicts learning. NeuroImage 2020, 211, 116657.

- Sun, B.; Xiao, W.; Feng, X.; Shao, Y.; Zhang, W.; Li, W. Behavioral and brain synchronization differences between expert and novice teachers when collaborating with students. Brain Cogn. 2020, 139, 105513.

- Xue, H.; Lu, K.; Hao, N. Cooperation makes two less-creative individuals turn into a highly-creative pair. NeuroImage 2018, 172, 527–537.

- Kringelbach, M.L.; Rolls, E.T. The functional neuroanatomy of the human orbitofrontal cortex: Evidence from neuroimaging and neuropsychology. Prog. Neurobiol. 2004, 72, 341–372.

- Rushworth, M.F.S.; Noonan, M.P.; Boorman, E.D.; Walton, M.E.; Behrens, T.E. Frontal Cortex and Reward-Guided Learning and Decision-Making. Neuron 2011, 70, 1054–1069.

- Kawasaki, M.; Kitajo, K.; Fukao, K.; Murai, T.; Yamaguchi, Y.; Funabiki, Y. Frontal theta activation during motor synchronization in autism. Sci. Rep. 2017, 7, 15034.

- Gallagher, H.L.; Happe, F.; Brunswick, N.; Fletcher, P.C.; Frith, U.; Frith, C.D. Reading the mind in cartoons and stories: An fMRI study of ‘theory of mind’ in verbal and nonverbal tasks. Neuropsychologia 2000, 38, 11–21.

- Rilling, J.K.; Sanfey, A.G.; Aronson, J.A.; Nystrom, L.E.; Cohen, J.D. The neural correlates of theory of mind within interpersonal interactions. NeuroImage 2004, 22, 1694–1703.

- Carrington, S.J.; Bailey, A.J. Are There Theory of Mind Regions in the Brain? A Review of the Neuroimaging Literature. Hum. Brain Mapp. 2009, 30, 2313–2335.

- McCabe, K.; Houser, D.; Ryan, L.; Smith, V.; Trouard, T. A functional imaging study of cooperation in two-person reciprocal exchange. Proc. Natl. Acad. Sci. USA 2001, 98, 11832–11835.

- Gallagher, H.L.; Jack, A.I.; Roepstorff, A.; Frith, C.D. Imaging the intentional stance in a competitive game. NeuroImage 2002, 16, 814–821.

- Gallese, V. The manifold nature of interpersonal relations: The quest for a common mechanism. Philos. Trans. R. Soc. B Biol. Sci. 2003, 358, 517–528.

- Iacoboni, M.; Molnar-Szakacs, I.; Gallese, V.; Buccino, G.; Mazziotta, J.C.; Rizzolatti, G. Grasping the intentions of others with one’s own mirror neuron system. PLoS Biol. 2005, 3, 529–535.

- Rizzolatti, G.; Cattaneo, L.; Fabbri-Destro, M.; Rozzi, S. Cortical mechanisms underlying the organization of goal-directed actions and mirror neuron-based action understanding. Physiol. Rev. 2014, 94, 655–706.

- Sadiq, M.T.; Yu, X.; Yuan, Z.; Aziz, M.Z. Identification of motor and mental imagery EEG in two and multiclass subject-dependent tasks using successive decomposition index. Sensors 2020, 20, 5283.

- Sadiq, M.T.; Yu, X.; Yuan, Z.; Fan, Z.; Rehman, A.U.; Li, G.; Xiao, G. Motor imagery EEG signals classification based on mode amplitude and frequency components using empirical wavelet transform. IEEE Access 2019, 7, 127678–127692.

- Mayseless, N.; Hawthorne, G.; Reiss, A.L. Real-life creative problem solving in teams: fNIRS based hyperscanning study. NeuroImage 2019, 203, 116161.

- Suh, H. Collaborative Learning Models and Support Technologies in the Future Classroom. Int. J. Educ. Media Technol. 2011, 5, 50–61.

| Region | Electrode | Condition | TI Mean | TI SD | Perm. Test p-Value | Coop. vs. Indiv. t-Value | Coop. vs. Indiv. p-Value | Effect Size (Cohen’s d) |
|---|---|---|---|---|---|---|---|---|
| Frontal | Fz | coop | 0.211 | 0.008 | 0.014 * | 2.551 | 0.022 * | 1.035 |
| | | indiv | 0.201 | 0.010 | 0.072 | | | |
| | F5 | coop | 0.205 | 0.013 | 0.053 | 1.103 | 0.287 | 0.437 |
| | | indiv | 0.199 | 0.014 | 0.081 | | | |
| | F6 | coop | 0.202 | 0.009 | 0.06 | −1.224 | 0.240 | −0.455 |
| | | indiv | 0.206 | 0.012 | 0.106 | | | |
| Central | Cz | coop | 0.206 | 0.007 | 0.029 * | 1.248 | 0.231 | 0.444 |
| | | indiv | 0.202 | 0.011 | 0.116 | | | |
| | C3 | coop | 0.208 | 0.008 | 0.036 * | 1.299 | 0.214 | 0.506 |
| | | indiv | 0.203 | 0.012 | 0.096 | | | |
| | C4 | coop | 0.210 | 0.012 | 0.021 * | 2.248 | 0.040 * | 0.827 |
| | | indiv | 0.194 | 0.024 | 0.109 | | | |
| Posterior | POz | coop | 0.211 | 0.017 | 0.015 * | 1.855 | 0.083 | 0.717 |
| | | indiv | 0.201 | 0.012 | 0.085 | | | |
| | P5 | coop | 0.214 | 0.047 | 0.043 * | 1.005 | 0.331 | 0.374 |
| | | indiv | 0.200 | 0.023 | 0.124 | | | |
| | P6 | coop | 0.213 | 0.018 | 0.035 * | 1.841 | 0.085 | 0.814 |
| | | indiv | 0.195 | 0.024 | 0.082 | | | |
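
The TI values, permutation p-values, and condition contrasts in the table above are reported here without their computation. The following Python sketch illustrates one way such numbers can be obtained; it is not the authors' code. It assumes total interdependence is defined as in Wen, Mo and Ding (2012), TI = −(1/2π) ∫ ln(1 − C²(ω)) dω over [−π, π], where C² is the magnitude-squared coherence, discretized here from a Welch estimate via `scipy.signal.coherence`. All function names are hypothetical, and the frequency band, `nperseg`, the re-pairing scheme in `surrogate_pair_p`, and the use of Cohen's d_z are assumptions rather than the study's settings.

```python
import numpy as np
from scipy.signal import coherence
from scipy.stats import ttest_rel


def total_interdependence(x, y, fs, band=(1.0, 40.0), nperseg=256):
    """Total interdependence (TI) between two EEG channels (hypothetical helper).

    Discrete form of Wen et al. (2012):
        TI = -(2 / fs) * sum_f ln(1 - C2(f)) * df
    where C2 is the Welch magnitude-squared coherence. The band and nperseg
    defaults are illustrative assumptions, not the study's settings.
    """
    f, c2 = coherence(x, y, fs=fs, nperseg=nperseg)
    df = f[1] - f[0]                          # frequency resolution in Hz
    in_band = (f >= band[0]) & (f <= band[1])
    # Keep C2 strictly below 1 so that ln(1 - C2) stays finite.
    c2 = np.clip(c2[in_band], 0.0, 1.0 - 1e-12)
    return -(2.0 / fs) * np.sum(np.log1p(-c2)) * df


def surrogate_pair_p(ti_matrix, n_perm=5000, seed=0):
    """Hypothetical permutation test against randomly re-paired partners.

    ti_matrix[i, j] holds TI between member A of dyad i and member B of
    dyad j, so the diagonal holds the real dyads. Returns a one-sided
    p-value for "real-dyad mean TI exceeds re-paired mean TI".
    """
    rng = np.random.default_rng(seed)
    n = ti_matrix.shape[0]
    rows = np.arange(n)
    observed = ti_matrix[rows, rows].mean()
    null = np.array([ti_matrix[rows, rng.permutation(n)].mean()
                     for _ in range(n_perm)])
    # +1 correction: the observed pairing counts as one permutation.
    return (np.sum(null >= observed) + 1) / (n_perm + 1)


def compare_conditions(ti_coop, ti_indiv):
    """Paired t-test (coop vs. indiv) plus Cohen's d_z = mean(diff) / SD(diff)."""
    ti_coop = np.asarray(ti_coop, dtype=float)
    ti_indiv = np.asarray(ti_indiv, dtype=float)
    res = ttest_rel(ti_coop, ti_indiv)
    diff = ti_coop - ti_indiv
    d_z = diff.mean() / diff.std(ddof=1)
    return res.statistic, res.pvalue, d_z
```

Given one TI value per dyad and condition, `compare_conditions(ti_coop, ti_indiv)` returns (t, p, d_z). Because d_z = t/√n by construction, it need not match an effect size based on pooled standard deviations, so the tabulated d may have been computed differently. The surrogate test draws unrestricted permutations, which occasionally keep some real pairs together; restricting the draws to derangements would be a stricter variant.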

| Region | Electrode | Condition | rs (TI vs. Cooperation Score) | p-Value | rs (TI vs. Sharing Space Score) | p-Value | rs (TI vs. Sharing Objects Score) | p-Value | rs (TI vs. Task Performance) | p-Value |
|---|---|---|---|---|---|---|---|---|---|---|
| Frontal | Fz | Coop | 0.005 | 0.980 | 0.308 | 0.087 | 0.373 | 0.036 * | −0.225 | 0.401 |
| | | Indiv | −0.254 | 0.161 | −0.091 | 0.621 | −0.133 | 0.468 | 0.158 | 0.558 |
| | F5 | Coop | 0.152 | 0.407 | −0.137 | 0.455 | 0.006 | 0.972 | 0.523 | 0.038 * |
| | | Indiv | 0.055 | 0.765 | 0.237 | 0.191 | 0.288 | 0.110 | 0.029 | 0.914 |
| | F6 | Coop | 0.119 | 0.516 | 0.032 | 0.862 | 0.039 | 0.831 | −0.228 | 0.395 |
| | | Indiv | −0.062 | 0.736 | −0.021 | 0.909 | 0.008 | 0.967 | −0.245 | 0.360 |
| Central | Cz | Coop | −0.009 | 0.960 | −0.039 | 0.834 | 0.031 | 0.866 | −0.293 | 0.271 |
| | | Indiv | −0.023 | 0.902 | 0.197 | 0.280 | 0.130 | 0.480 | −0.032 | 0.906 |
| | C3 | Coop | 0.128 | 0.484 | 0.240 | 0.186 | 0.202 | 0.268 | −0.423 | 0.103 |
| | | Indiv | −0.048 | 0.794 | 0.154 | 0.400 | 0.035 | 0.850 | −0.068 | 0.803 |
| | C4 | Coop | −0.157 | 0.392 | −0.222 | 0.223 | 0.022 | 0.904 | −0.049 | 0.858 |
| | | Indiv | 0.003 | 0.989 | 0.172 | 0.346 | 0.114 | 0.533 | 0.068 | 0.802 |
| Posterior | POz | Coop | 0.052 | 0.777 | −0.219 | 0.229 | −0.288 | 0.110 | −0.240 | 0.370 |
| | | Indiv | −0.108 | 0.558 | 0.192 | 0.291 | 0.182 | 0.319 | 0.123 | 0.651 |
| | P5 | Coop | 0.170 | 0.351 | −0.185 | 0.312 | −0.178 | 0.331 | 0.004 | 0.987 |
| | | Indiv | −0.250 | 0.167 | −0.019 | 0.918 | −0.101 | 0.584 | 0.073 | 0.787 |
| | P6 | Coop | −0.060 | 0.745 | −0.275 | 0.128 | −0.271 | 0.134 | 0.119 | 0.660 |
| | | Indiv | −0.052 | 0.779 | 0.195 | 0.286 | 0.064 | 0.728 | −0.014 | 0.959 |
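
The rs columns above are Spearman rank correlations between TI and each behavioral measure. Below is a minimal Python sketch of that statistic. It assumes the common t approximation for the p-value, since the authors' exact procedure is not described in this section. Both variables are ranked with ties averaged, and the Pearson correlation of the ranks is computed. The p-value then comes from t = rs·√((n − 2)/(1 − rs²)) with n − 2 degrees of freedom. The function names are hypothetical.

```python
import numpy as np
from scipy.stats import rankdata
from scipy.stats import t as t_dist


def p_from_rs(rs, n):
    """Two-sided p-value for Spearman's r_s via the t approximation (hypothetical helper).

    t = r_s * sqrt((n - 2) / (1 - r_s**2)) with n - 2 degrees of freedom.
    """
    if abs(rs) >= 1.0:
        return 0.0
    t_stat = rs * np.sqrt((n - 2) / (1.0 - rs ** 2))
    return float(2.0 * t_dist.sf(abs(t_stat), df=n - 2))


def spearman_with_p(x, y):
    """Spearman's r_s (Pearson correlation of ranks, ties averaged) and its p-value."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    rx, ry = rankdata(x), rankdata(y)        # average ranks for tied values
    # Clip guards against floating-point values marginally outside [-1, 1].
    rs = float(np.clip(np.corrcoef(rx, ry)[0, 1], -1.0, 1.0))
    return rs, p_from_rs(rs, len(x))
```

For one electrode and condition, `spearman_with_p(ti_values, scores)` expects one TI value and one matching score per observation. The sketch applies no correction for the many electrode-by-condition-by-measure tests summarized in the table.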

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ogawa, Y.; Shimada, S. Inter-Subject EEG Synchronization during a Cooperative Motor Task in a Shared Mixed-Reality Environment. Virtual Worlds 2023, 2, 129-143. https://doi.org/10.3390/virtualworlds2020008

Ogawa Y, Shimada S. Inter-Subject EEG Synchronization during a Cooperative Motor Task in a Shared Mixed-Reality Environment. Virtual Worlds. 2023; 2(2):129-143. https://doi.org/10.3390/virtualworlds2020008

Chicago/Turabian Style: Ogawa, Yutaro, and Sotaro Shimada. 2023. "Inter-Subject EEG Synchronization during a Cooperative Motor Task in a Shared Mixed-Reality Environment" Virtual Worlds 2, no. 2: 129-143. https://doi.org/10.3390/virtualworlds2020008

APA Style: Ogawa, Y., & Shimada, S. (2023). Inter-Subject EEG Synchronization during a Cooperative Motor Task in a Shared Mixed-Reality Environment. Virtual Worlds, 2(2), 129-143. https://doi.org/10.3390/virtualworlds2020008