Abstract

The COVID-19 pandemic exposed the urgent need for scalable, reliable telemedicine tools to manage mild cases remotely and avoid overburdening healthcare systems. This study evaluates StepCare, a remote monitoring medical device, during the first pandemic wave at a single center in Spain. Among 35 patients monitored, StepCare showed high clinical reliability, aligning with physician assessments in 90.4% of cases. Patients and clinicians reported excellent usability and satisfaction. The system improved workflow efficiency, reducing triage time by 25% and associated costs by 84%. These results highlight StepCare’s value as a scalable, patient-centered solution for remote care during health crises.

1. Introduction

The increasing demand for efficient, scalable, and patient-centered healthcare solutions has highlighted the need for digital tools that support clinical decision making and improve care delivery [1,2,3,4]. StepCare (version 1.0) is a digital platform designed to enhance remote monitoring and triage by combining automated risk scoring with clinician oversight. Developed in response to growing challenges in patient management and healthcare resource allocation [5], StepCare offers a structured, data-driven approach to prioritizing care needs while maintaining safety and clinical relevance.

This study evaluates StepCare’s performance across several dimensions, including clinical impact, time and cost efficiency [6], usability [7], and satisfaction. By integrating real-time patient data with intelligent triage algorithms, StepCare aims to optimize clinician workload, reduce unnecessary healthcare utilization, and empower patients to take a more active role in managing their health. Through a two-phase implementation involving continuous clinical use, we assessed how familiarity with the platform affects perceptions, workflow, and clinical outcomes, offering valuable insights into its potential for long-term integration into routine practice.

2. Materials and Methods

A prospective, single-center pilot study was conducted at the Osakidetza OSI Bidasoa center to assess the clinical reliability, usability, and efficiency of StepCare, a software-based remote patient monitoring tool for managing symptomatic COVID-19 patients during the first pandemic wave. The study included 35 adult patients (51% men and 49% women, mean age 34.5 years) who were monitored remotely during their home isolation, with an average follow-up of 7.5 days. Patients with diagnosed cognitive impairment were excluded.

Usability studies frequently rely on small samples, as testing with 5–10 users can reveal up to 80% of usability issues [8,9,10]. In our study, 35 users participated in the evaluation, which exceeds the typical size used in formative usability testing and strengthens the reliability of the findings [9,11]. This sample size aligns with established practices and allowed efficient identification of key usability and reliability issues under real-world conditions.
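The 5–10-user heuristic cited above comes from the problem-discovery model of Nielsen and Landauer [8,9], in which the expected share of usability problems found by n testers is 1 − (1 − L)^n, where L is the probability that a single tester reveals a given problem. The sketch below evaluates that formula. It assumes L = 0.31, the cross-project average Nielsen reported; no detection rate was estimated for StepCare, so the numbers illustrate the model rather than results of this study.

```python
def proportion_found(n_users: int, detection_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n_users testers.

    Nielsen-Landauer model: found(n) = 1 - (1 - L)**n, where L is the
    probability that one tester reveals a given problem. The default
    L = 0.31 is an assumption (Nielsen's reported average), not a value
    estimated in the StepCare study.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must lie in [0, 1]")
    return 1.0 - (1.0 - detection_rate) ** n_users


if __name__ == "__main__":
    # Typical formative-test sizes, the two phase sizes, and the full cohort.
    for n in (5, 10, 15, 20, 35):
        print(f"{n:>2} users: {proportion_found(n):.1%}")
```

Under this assumption, five testers are expected to uncover roughly 84% of problems, and the curve is nearly flat well before 35 users.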

The study was divided into two phases. Phase I (April 2021) involved 15 patients and focused on evaluating system usability. Phase II (June 2021) included 20 patients and aimed to assess StepCare’s ability to support clinical decision making. Each patient was monitored for a maximum of 15–20 days.

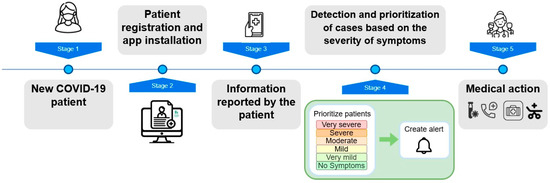

The StepCare system operates through a structured process designed to optimize patient management and healthcare resource allocation (Figure 1). Upon diagnosis or identification of COVID-19 symptoms, patients completed an initial risk-profile questionnaire via a hospital-provided web application. This profile enabled StepCare to personalize symptom monitoring questions according to the patient’s characteristics.

Figure 1. StepCare remote monitoring workflow.

Patients submitted daily updates by responding to a minimal set of questions (one or two per day) via the StepCare web application. These responses fed into the automated risk assessment system, which classified symptom severity in real time.

Based on reported symptoms and the patient’s risk profile, StepCare automatically categorized patients by severity level. This process allowed the system to alert healthcare personnel when a patient exceeded acceptable symptom thresholds, facilitating early intervention.
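Because StepCare’s decision rules are proprietary, the following is only a hypothetical sketch of how a rule-based categorizer of this kind might combine daily symptom reports with the risk profile and raise alerts. The symptom names, weights, cut-offs, and the one-level escalation for high-risk profiles are all invented for illustration; the escalation merely mirrors the conservative behavior described in the Results, where patients with higher-risk profiles were rated more severe even when their symptoms appeared mild.

```python
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MODERATE = 2
    HIGH = 3


# Hypothetical weights and thresholds; NOT StepCare's proprietary rules.
SYMPTOM_WEIGHTS = {"fever": 1, "cough": 1, "fatigue": 1, "dyspnea": 3, "chest_pain": 3}
HIGH_CUTOFF = 3
ALERT_LEVEL = Severity.HIGH


def categorize(symptoms: set[str], high_risk_profile: bool) -> Severity:
    """Map reported symptoms plus the risk profile to a severity level."""
    score = sum(SYMPTOM_WEIGHTS.get(s, 0) for s in symptoms)
    if score >= HIGH_CUTOFF:
        level = Severity.HIGH
    elif score > 0:
        level = Severity.MODERATE
    else:
        level = Severity.LOW
    # Conservative escalation: a high-risk profile raises the level one step, capped at HIGH.
    if high_risk_profile and level < Severity.HIGH:
        level = Severity(level + 1)
    return level


def needs_alert(level: Severity) -> bool:
    """Notify clinicians when the level reaches the (hypothetical) alert threshold."""
    return level >= ALERT_LEVEL
```

For example, a single mild symptom in a high-risk patient, `categorize({"cough"}, high_risk_profile=True)`, is escalated to `Severity.HIGH` and therefore triggers `needs_alert`.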

Healthcare professionals accessed an interface that visualized patient data and severity rankings. This dashboard helped clinicians prioritize cases efficiently and minimized unnecessary contacts with low-risk patients.
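A severity-ranked dashboard essentially sorts the patient list by each patient’s current level. The sketch below is a hypothetical illustration: the field names and the tie-break (within a level, the patient whose last report is oldest comes first) are assumptions, not documented StepCare behavior.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class PatientRow:
    patient_id: str        # hypothetical identifier field
    severity: int          # ordinal level, higher = more severe
    last_report: datetime  # time of the patient's most recent report


def triage_order(rows: list[PatientRow]) -> list[PatientRow]:
    """Most severe first; within a level, oldest last report first (assumed tie-break)."""
    return sorted(rows, key=lambda r: (-r.severity, r.last_report))
```

Sorting on a composite key keeps the ranking deterministic, so two clinicians opening the dashboard at the same moment see the same order.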

All patient-reported data were standardized, anonymized, and securely stored in compliance with the GDPR. StepCare also integrated hospital electronic health record (EHR) data when available. The system’s proprietary algorithm supported early detection of deterioration and generated actionable insights to inform care protocols and workflow optimization.

Clinical safety was assessed by comparing StepCare’s symptom severity categorizations with clinical team evaluations across 228 patient assessments. Usability and satisfaction were measured through app usage data and post-study surveys completed by both patients and healthcare professionals.

Economic impact analysis focused on the reduction in clinical management time per patient achieved with StepCare compared to standard care. The time saved was translated into cost savings by multiplying the average minutes saved per patient by the average cost per minute of healthcare professionals. Operational costs of the system were also considered to estimate the total cost reduction.
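The calculation described above can be written out directly. In this minimal sketch, net savings are the minutes saved per patient multiplied by the cost per minute and the number of patients, minus the system’s operational cost. Treating operational cost as a simple subtraction is our reading of the text, and all inputs are placeholders rather than figures from the study.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction, in percent, from a positive baseline value."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (before - after) / before


def net_savings(minutes_saved_per_patient: float,
                cost_per_minute: float,
                n_patients: int,
                operational_cost: float = 0.0) -> float:
    """Staff time saved, converted to money, minus the system's running cost."""
    gross = minutes_saved_per_patient * cost_per_minute * n_patients
    return gross - operational_cost
```

For example, `percent_reduction(400, 300)` reproduces the 25% triage-time reduction reported for the cohort in the Results; the corresponding 100 minutes over 35 patients is about 2.86 minutes per patient, which could be passed to `net_savings` together with a locally sourced cost per minute.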

Descriptive statistics were used to summarize outcomes such as clinical reliability, patient risk assessment, usability and satisfaction, and impact on time and cost.

Clinical reliability was measured through two separate validation procedures. First, the system’s risk prioritization algorithm was evaluated by comparing the automated classification (generated from a structured patient profile questionnaire) with the clinical risk assessment performed manually by healthcare professionals. The agreement between these two assessments was quantified using standard classification metrics, including true-positive rate (TPR), false-positive rate (FPR), true-negative rate (TNR), and false-negative rate (FNR), across multiple predefined risk categories. Second, the system’s categorization of patient severity was assessed by comparing it to independent severity ratings assigned by the clinical team, who were blinded to the system’s output. The concordance between the two assessments was used to evaluate consistency and potential conservativeness in clinical judgment.
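The four rates named above follow from a 2×2 confusion table per risk category, with the clinicians’ assessment as the reference. The sketch below assumes each predefined category is recorded as a yes/no flag per patient (the text does not state the encoding) and returns NaN when a rate’s denominator is zero.

```python
import math


def confusion_rates(system: list[bool], clinician: list[bool]) -> dict[str, float]:
    """TPR, FNR, FPR, TNR for one risk category; clinician flags are the reference."""
    if len(system) != len(clinician):
        raise ValueError("both lists must describe the same patients")
    pairs = list(zip(system, clinician))
    tp = sum(1 for s, c in pairs if s and c)
    fp = sum(1 for s, c in pairs if s and not c)
    fn = sum(1 for s, c in pairs if not s and c)
    tn = sum(1 for s, c in pairs if not s and not c)
    pos, neg = tp + fn, fp + tn  # clinician-positive and clinician-negative counts

    def ratio(num: int, den: int) -> float:
        return num / den if den else math.nan

    return {
        "TPR": ratio(tp, pos),  # sensitivity
        "FNR": ratio(fn, pos),  # 1 - TPR
        "FPR": ratio(fp, neg),  # 1 - TNR
        "TNR": ratio(tn, neg),  # specificity
    }
```

Returning NaN rather than 0 avoids reporting a misleading rate for a category that no clinician, or every clinician, assigned.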

Usability was assessed from both patient and clinical staff perspectives using a combination of task-based interaction analysis and structured usability surveys. Task efficiency was evaluated by recording the time required to complete core interactions with the system. For patients, this included measuring the time to select a symptom and report it; for clinical staff, the time to access a patient profile was recorded. These time-based measurements were used to construct learning curves and evaluate intuitiveness and ease of use. In addition, a standardized usability questionnaire was administered, covering aspects such as system content, navigation, appearance, clarity of instructions, and ease of use. Each item was rated on a Likert-type scale ranging from 0 (lowest) to 10 (highest), and general usability scores were calculated accordingly.
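The two kinds of usability data described above can be summarized as follows. This is a minimal sketch under two assumptions not stated in the text: the overall score is the mean of the per-item means, and the learning curve is the median task time per session.

```python
from statistics import mean, median


def usability_summary(responses: list[dict[str, float]]) -> tuple[dict[str, float], float]:
    """Per-item mean of 0-10 ratings and an overall score (assumed: mean of item means)."""
    items = sorted({item for r in responses for item in r})
    per_item: dict[str, float] = {}
    for item in items:
        scores = [r[item] for r in responses if item in r]
        if any(not 0 <= s <= 10 for s in scores):
            raise ValueError(f"rating outside the 0-10 range for {item!r}")
        per_item[item] = mean(scores)
    if not per_item:
        raise ValueError("no responses")
    return per_item, mean(list(per_item.values()))


def learning_curve(task_times: dict[int, list[float]]) -> list[tuple[int, float]]:
    """Median completion time (seconds) per session index, in session order."""
    return [(session, median(times))
            for session, times in sorted(task_times.items()) if times]
```

The median is used for task times because a single interrupted session would otherwise dominate a mean.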

Satisfaction and perceived utility were evaluated through structured self-administered surveys targeting both patients and healthcare professionals. These surveys explored multiple dimensions, including perceived usefulness of the system in symptom monitoring, confidence and safety while using the system, communication effectiveness with care teams, and overall experience. Participants rated each item on a numeric scale from 0 to 10. Additional indicators related to system adherence, cost, and time efficiency were collected through usage logs and administrative data. This multifaceted approach was designed to capture both subjective experiences and objective indicators relevant to clinical integration.

3. Results

3.1. Clinical Reliability

This analysis assesses the concordance between StepCare’s automated severity categorization and the clinical team’s independent assessments of patient condition. The primary objective was to determine whether StepCare reliably identifies patients requiring higher-priority clinical attention.

In 228 instances, healthcare professionals independently assigned a severity level based on patients’ reported symptoms, without access to StepCare’s categorizations. In 167 cases (73.2%), StepCare and the clinical team assigned the same severity. In the remaining 61 cases (26.8%), discrepancies were analyzed and grouped into four main categories:

- StepCare classified patients as more severe when their profile indicated increased clinical risk, even if symptoms appeared mild;

- StepCare’s classification was based on symptom data from the previous 24 h, while clinicians occasionally focused on shorter time frames (e.g., same-day symptoms), leading StepCare to adopt a more conservative stance;

- In some cases, clinicians factored in information beyond the 24 h window (e.g., weekend reports), while StepCare maintained strict 24 h limits;

- The absence of reported symptoms was sometimes interpreted by clinicians as “no symptoms”, whereas StepCare categorized such instances as “no information”, erring on the side of caution.
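Two of the sources of discrepancy listed above concern only how reports are windowed. The sketch below, a hypothetical illustration rather than StepCare’s code, applies a strict 24 h window and keeps “no information” (no report in the window) distinct from “no symptoms” (a report with nothing selected).

```python
from datetime import datetime, timedelta
from typing import Optional

WINDOW = timedelta(hours=24)  # strict look-back period, as described in the text


def symptoms_in_window(reports: list[tuple[datetime, set[str]]],
                       now: datetime,
                       window: timedelta = WINDOW) -> Optional[set[str]]:
    """Union of symptoms reported in (now - window, now].

    Returns None for "no information" (no report in the window) and an
    empty set for "reported, no symptoms"; the two are deliberately distinct.
    Reports older than the window (e.g. weekend reports) are ignored.
    """
    in_window = [symptoms for t, symptoms in reports if now - window < t <= now]
    if not in_window:
        return None
    return set().union(*in_window)
```

A caller can then treat `None` conservatively, for instance by keeping the patient’s previous level, while an empty set can legitimately lower the level.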

Importantly, StepCare never assigned a lower severity level than the clinical team: in every discordant case, StepCare’s rating was the higher of the two, reflecting a clinically safe, risk-averse design. These discrepancies were mainly due to StepCare’s prioritization of risk factors and temporal data consistency.
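Both the agreement rate and the direction of disagreement can be read off paired ratings on a shared ordinal scale. The sketch below assumes integer severity levels where higher means more severe; that encoding is an assumption.

```python
from typing import NamedTuple


class Concordance(NamedTuple):
    n: int              # number of paired assessments
    agreement: float    # share of assessments with identical levels
    system_higher: int  # discordant cases where StepCare rated higher
    system_lower: int   # discordant cases where StepCare rated lower


def concordance(pairs: list[tuple[int, int]]) -> Concordance:
    """pairs holds (stepcare_level, clinician_level) for each assessment."""
    if not pairs:
        raise ValueError("no assessments")
    same = sum(1 for s, c in pairs if s == c)
    higher = sum(1 for s, c in pairs if s > c)
    lower = len(pairs) - same - higher
    return Concordance(len(pairs), same / len(pairs), higher, lower)
```

With the counts reported above, 167 agreements out of 228 assessments gives 167/228 ≈ 0.732, and the risk-averse design corresponds to `system_lower == 0`.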

3.2. Patient Risk Assessment

This subsection evaluates concordance between StepCare’s risk categorization (based on patient profile questionnaires) and the clinical team’s risk assessments. Key performance metrics—including true-positive rate (TPR), false-positive rate (FPR), true-negative rate (TNR), and false-negative rate (FNR)—are summarized in Table 1.

Table 1. StepCare vs. clinical team risk categorization performance.

StepCare consistently demonstrated higher sensitivity in identifying at-risk patients across all categories. A key reason for this was the system’s inclusion of tobacco use and other lifestyle factors not always considered by clinicians. These results suggest that StepCare’s automatic risk scoring can support clinicians by reducing the chance that high-risk patients are missed.

3.3. Usability and Satisfaction

3.3.1. Healthcare Professionals

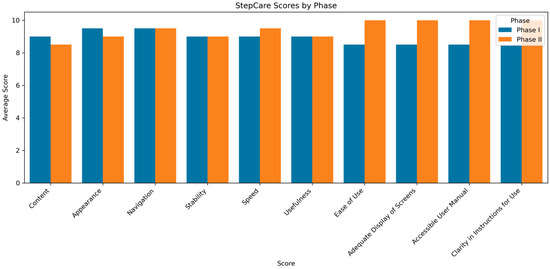

Usability and satisfaction among healthcare professionals were assessed using in-app analytics and post-study surveys, with the same clinical team involved throughout the evaluation period. This continuity allowed for a detailed analysis of how user experience and perceptions evolved over time (Figure 2).

Figure 2. Comparison of average usability scores between Phase I and Phase II for StepCare, as evaluated by healthcare professionals.

- Learning curve and efficiency gains. During the initial phase, clinicians accessed more severe patient cases more rapidly, reflecting intuitive prioritization. By the second phase, once severity assignment was clinician-driven, the median time to access a patient profile dropped to just 3 s, indicating a rapid learning curve and growing familiarity with the system;

- Overall system rating. StepCare received an average usability score of 9.45 out of 10, reflecting high satisfaction across dimensions such as clarity of instructions, accessibility of the user manual, ease of navigation, system speed, stability, and overall usefulness;

- Perceived benefits. Clinicians highlighted several advantages, including time and resource optimization, enhanced communication with patients, improved symptom management, and proactive identification of high-risk cases. The reported benefits became more evident during the second phase of the study, suggesting that continued use of StepCare led to greater recognition and appreciation of its value;

- Workload management. All clinicians (100%) indicated that StepCare made it easier to manage their daily tasks. There were no reports of increased burden, and the system was consistently seen as helpful and non-disruptive to their routine;

- Ease of use. Clinicians experienced minimal difficulty performing core tasks such as accessing patient data, registering or editing events, and navigating the interface. The features were seen as intuitive, appropriately detailed, and informative, particularly in terms of patient prioritization and clinical alerts;

- Satisfaction and recommendation. StepCare’s features received consistently high ratings. Following the study, 100% of clinicians expressed willingness to continue using the system and to recommend it to colleagues, reflecting strong endorsement and sustained user trust.

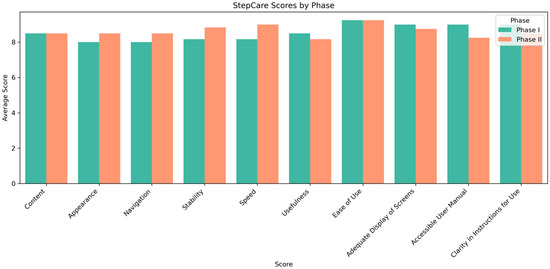

3.3.2. Patients

Patient usability and satisfaction were also evaluated through app usage data and post-study surveys.

- Learning curve. Patients adapted to StepCare quickly. Core actions such as symptom selection and reporting were completed efficiently (on average in 18.3 s and 11.4 s, respectively), with many users improving over time;

- Overall app experience. Patients consistently rated the app highly across multiple aspects (see Figure 3). The content was perceived as clear and informative, and the visual design was particularly appreciated by older users for its simplicity and readability. Minimal technical issues were reported, and the app’s responsiveness contributed to a positive overall experience;

Figure 3. Comparison of average usability scores between Phase I and Phase II for StepCare, as evaluated by patients.

- Perceived benefits. Patients reported substantial improvements in their health management. Key impacts included better quality of life (7/10), increased confidence in managing their condition (8/10), improved communication with healthcare providers (8.5/10), and greater security in knowing their health was being monitored (8/10). Symptom management also received a strong rating (8/10), as the app made tracking and reporting more straightforward;

- Ease of use. All patients (100%) reported no difficulty using the system on their devices. The in-app questionnaires were described as clear and easy to understand, with no issues selecting or interpreting responses;

- Satisfaction with clinical response. Patients gave high marks to the clinical team’s response to their reports—9.12/10 for adequacy, usefulness, and safety, 8.12/10 for timeliness, and 8.25/10 for helpfulness in managing symptoms;

- Recommendation and continued use. A total of 83% of patients said they would recommend StepCare, and 50% expressed interest in continuing its use beyond the study period.

3.4. Cost and Time Efficiency

A comparative analysis between usual clinical practice and the use of StepCare showed substantial improvements in both time management and cost savings.

- Time reduction. The time needed for patient triage was reduced by 25%, decreasing from 400 min to 300 min for the full patient cohort. This improvement reflects a more streamlined and efficient workflow for healthcare professionals;

- Cost savings. Direct healthcare costs were reduced by 84%, primarily due to the elimination of home visits, fewer calls initiated by patients, and a reduction in follow-up calls from healthcare staff;

- Healthcare utilization improvements. StepCare eliminated home visits and patient-initiated calls entirely (Table 2). The number of follow-up calls by the clinical team also decreased, suggesting that the system’s remote monitoring capabilities reduced the need for direct interactions.

Table 2. Reduction in patient management costs using StepCare.

StepCare not only enhanced patient monitoring and safety but also substantially improved the efficiency of clinical workflows in this cohort. These time and cost reductions support the system’s role in delivering more sustainable, patient-centered care.

4. Limitations

This study has several limitations that should be considered when interpreting the findings. First, due to the emergency conditions during the first wave of the COVID-19 pandemic, the evaluation was designed as a real-world feasibility study without a control group. As a result, we cannot definitively attribute observed improvements in workflow efficiency or patient outcomes solely to the use of StepCare. Future controlled studies are needed to isolate the system’s specific contributions.

Second, the algorithm underpinning StepCare is proprietary, and while we have provided a high-level description of its logic, full disclosure of its decision rules is not possible in the current format. This limits complete transparency and reproducibility, although a clinical team independent of the system’s developers conducted an external validation, contributing to objectivity.

Third, the study relied primarily on descriptive and aggregated analyses due to the urgency of implementation and limited availability of repeated individual-level data. This constrained the use of inferential statistics and may affect the generalizability of the results.

Finally, no long-term follow-up was conducted to assess sustained engagement or outcomes beyond the acute period. These aspects are planned to be explored in future evaluations.

5. Discussion

StepCare illustrates telemedicine’s role in disaster medicine: it supported remote monitoring of COVID-19 patients, informed clinical decisions, and helped optimize resource allocation while maintaining patient safety. Its high usability and satisfaction among both patients and clinicians suggest that it could be a scalable solution for health emergencies. The study supports its reliability, ease of use, and efficiency relative to standard care.

StepCare proved to be a reliable tool for patient prioritization and categorization. Where the algorithm and clinicians disagreed, the tool was consistently the more conservative of the two, minimizing patient risk. These cases are under review to refine the algorithm and bring the tool into closer alignment with clinical practice.

Digital tools also make it possible to collect patient information that is not routinely considered in clinical practice, such as consumption preferences and habits like alcohol and tobacco use. This offers a new perspective on clinical practice: better knowledge of the patient’s status can improve the capacity to react and broaden the set of factors considered when assessing patient risk.

For digital tools to be used effectively, they must offer high usability, which supports adherence and continued use. Developing easy-to-use, intuitive, and useful tools is therefore key to sustaining their use and improving clinical practice.

The improvements in clinical practice and hospital operating costs are reflected in the reduction in home visits, calls made by patients, and calls made by clinical staff. This suggests that continuous monitoring can improve not only patients’ quality of life but also hospital management and the efficiency of healthcare systems.

Author Contributions

Conceptualization, M.M.P.G., A.B.A., A.M.O., I.L.V., F.D.T., M.S., A.B., A.A., M.A. and M.M.S.; methodology, M.M.P.G., A.B.A., A.M.O., I.L.V., F.D.T., M.S., A.B., A.A. and M.A.; software, M.S., A.B., A.A. and M.A.; validation, M.M.P.G., A.B.A., A.M.O., I.L.V., F.D.T., M.S., A.B., A.A., M.A. and M.M.S.; formal analysis, M.S., A.B., A.A. and M.A.; investigation, M.M.P.G., A.B.A., A.M.O., I.L.V., F.D.T., M.S., A.B., A.A., D.C., M.A. and M.M.S.; writing—original draft preparation, M.S., A.B., A.A., D.C. and M.A.; writing—review and editing, M.M.P.G., A.B.A., A.M.O., I.L.V., F.D.T., M.S., A.B., A.A., D.C., M.A. and M.M.S.; supervision, M.A. and M.M.S.; project administration, A.A. and M.A.; funding acquisition, A.A. and M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Fundación Biozientziak Gipuzkoa Fundazioa, grant for “aid within the framework of the extraordinary call for R&D&I projects that contribute to the prevention of contagion, better diagnosis, treatment, and monitoring of patients infected with SARS-CoV-2”.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of the Ethics Committee for Research with Medicines of Euskadi (CEIm-E) (protocol code STEP-CARE-01-2020, approved on 13 January 2021, as recorded in Acta 01/2021).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The datasets generated and analyzed during this study are not publicly available to ensure participant privacy. Deidentified outcome datasets can be obtained from the corresponding author upon reasonable request.

Acknowledgments

The authors thank all participants who volunteered their time for this usability test.

Conflicts of Interest

M.A. owns stock in Naru.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| RWE | Real-world evidence |
| EHR | Electronic health record |
| TPR | True-positive rate |
| FPR | False-positive rate |
| TNR | True-negative rate |
| FNR | False-negative rate |

References

- Jackman, L.; Kamran, R. Transforming Patient-Reported Outcome Measurement with Digital Health Technology. J. Eval. Clin. Pract. 2025, 31, e70107.

- Khalil, H.; Ameen, M.; Davies, C.; Liu, C. Implementing value-based healthcare: A scoping review of key elements, outcomes, and challenges for sustainable healthcare systems. Front. Public Health 2025, 13, 1514098.

- Leclercq, V.; Saesen, R.; Schmitt, T.; Habimana, K.; Habl, C.; Gottlob, A.; Bulcke, M.V.D.; Delnord, M. How to scale up telemedicine for cancer prevention and care? Recommendations for sustainably implementing telemedicine services within EU health systems. J. Cancer Policy 2025, 44, 100593.

- Pfeifer, B.; Neururer, S.B.; Hackl, W.O. Advancing Clinical Information Systems: Harnessing Telemedicine, Data Science, and AI for Enhanced and More Precise Healthcare Delivery. Yearb. Med. Inform. 2024, 33, 115–122.

- Heo, J.; Park, J.A.; Han, D.; Kim, H.-J.; Ahn, D.; Ha, B.; Seog, W.; Park, Y.R. COVID-19 Outcome Prediction and Monitoring Solution for Military Hospitals in South Korea: Development and Evaluation of an Application. J. Med. Internet Res. 2020, 22, e22131.

- Lowe, C.; Atherton, L.; Lloyd, P.; Waters, A.; Morrissey, D. Improving safety, efficiency, cost, and satisfaction across a musculoskeletal pathway using the Digital Assessment Routing tool for triage: A quality improvement study. J. Med. Internet Res. 2025, 27, e67269.

- Chyzhyk, D.; Arregi, M.; Errazquin, M.; Ariceta, A.; Sevilla, M.; Álvarez, R.; Inchausti, M.A. Exploring Usability of a Clinical Decision Support System for Cancer Care: A User-Centered Study. Cancer Rep. 2025, 8, e70173.

- Nielsen, J.; Landauer, T.K. Mathematical model of the finding of usability problems. In Proceedings of the INTERACT ’93 and CHI ’93 Conference on Human Factors in Computing Systems, Amsterdam, The Netherlands, 24–29 April 1993; pp. 206–213.

- Nielsen, J. Why You Only Need to Test with 5 Users. 2000. Available online: https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/ (accessed on 3 February 2021).

- Lattie, E.G.; Bass, M.; Garcia, S.F.; Phillips, S.M.; Moreno, P.I.; Flores, A.M.; Smith, J.; Scholtens, D.; Barnard, C.; Penedo, F.J.; et al. Optimizing Health Information Technologies for Symptom Management in Cancer Patients and Survivors: Usability Evaluation. JMIR Form. Res. 2020, 4, e18412.

- Dittrich, F.; Albrecht, U.-V.; Scherer, J.; Becker, S.L.; Landgraeber, S.; Back, D.A.; Fessmann, K.; Haversath, M.; Beck, S.; Abbara-Czardybon, M.; et al. Development of Open Backend Structures for Health Care Professionals to Improve Participation in App Developments: Pilot Usability Study of a Medical App. JMIR Form. Res. 2023, 7, e42224.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).