Metaverse and Digital Twins in the Age of AI and Extended Reality

Abstract

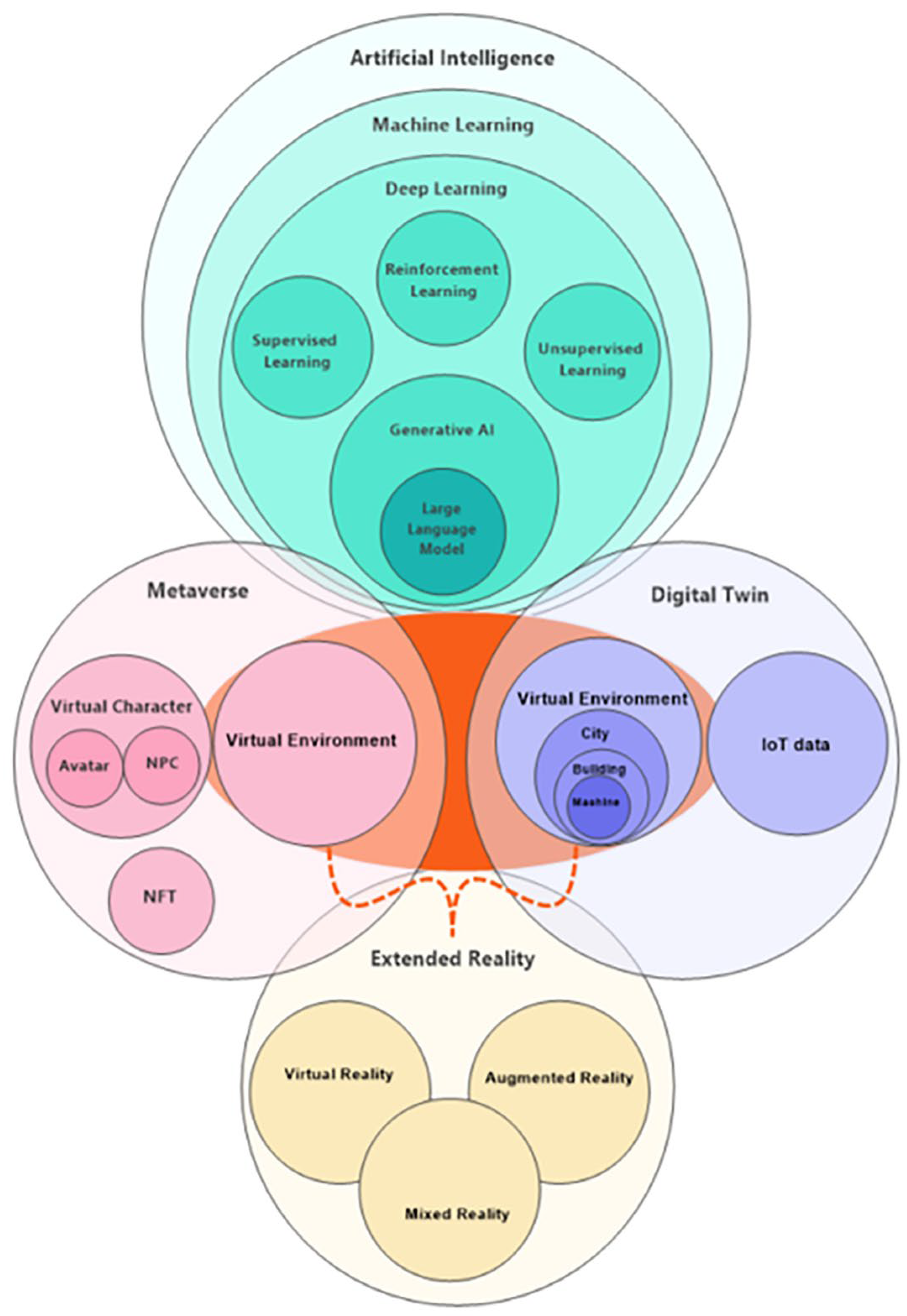

1. Introduction: Digital Twins (DTs) and Metaverse

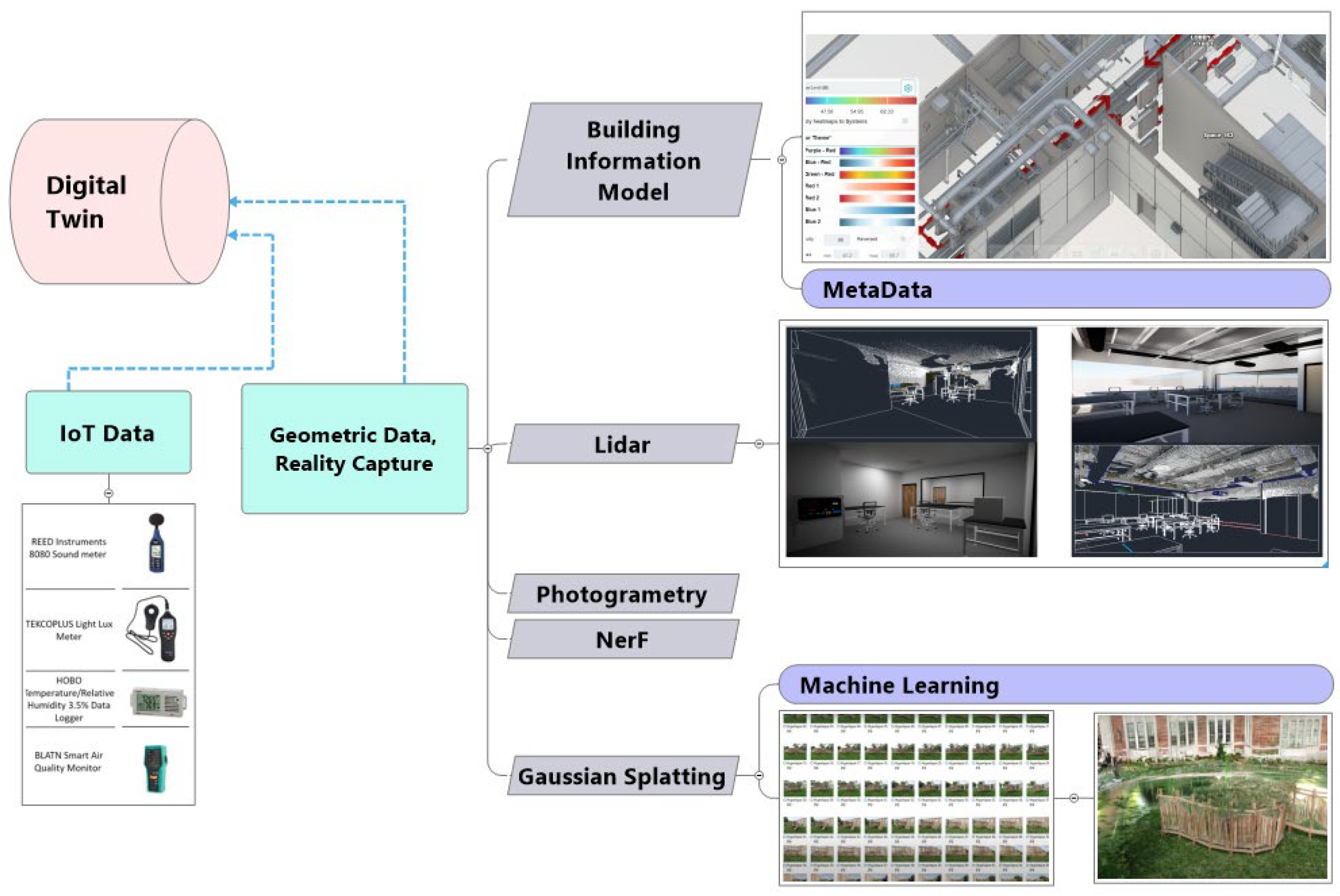

1.1. DTs as Mirrored Reality

1.2. Metaverse as a Fictional World

2. Divergence of Metaverse and Digital Twin Approach

2.1. DT Applications

2.2. Metaverse Applications

3. Interaction of AI with DT and Metaverse

3.1. AI for Content Creation

3.1.1. Traditional vs. AI-Driven DT Workflows

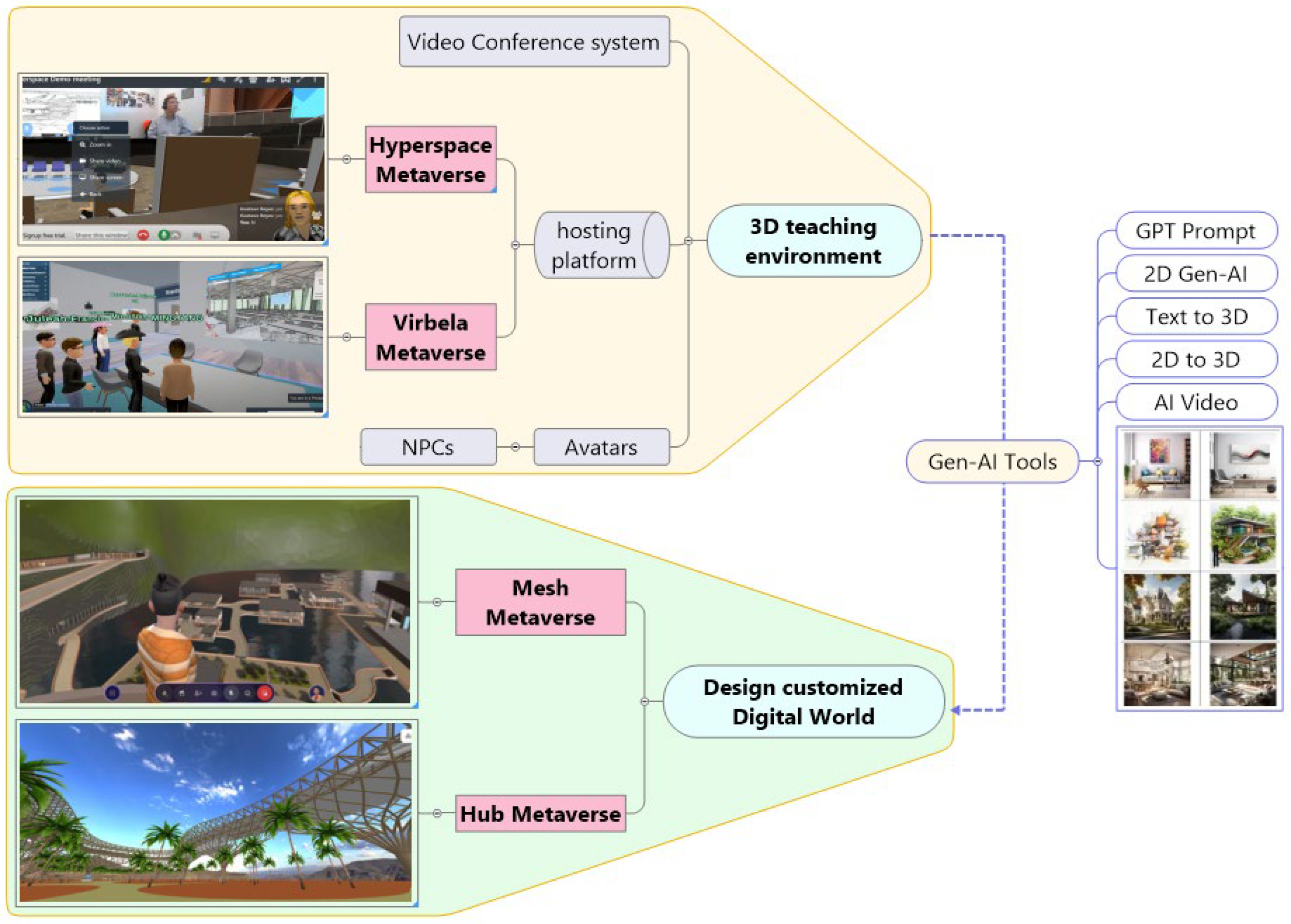

3.1.2. Metaverse Creation with Generative AI

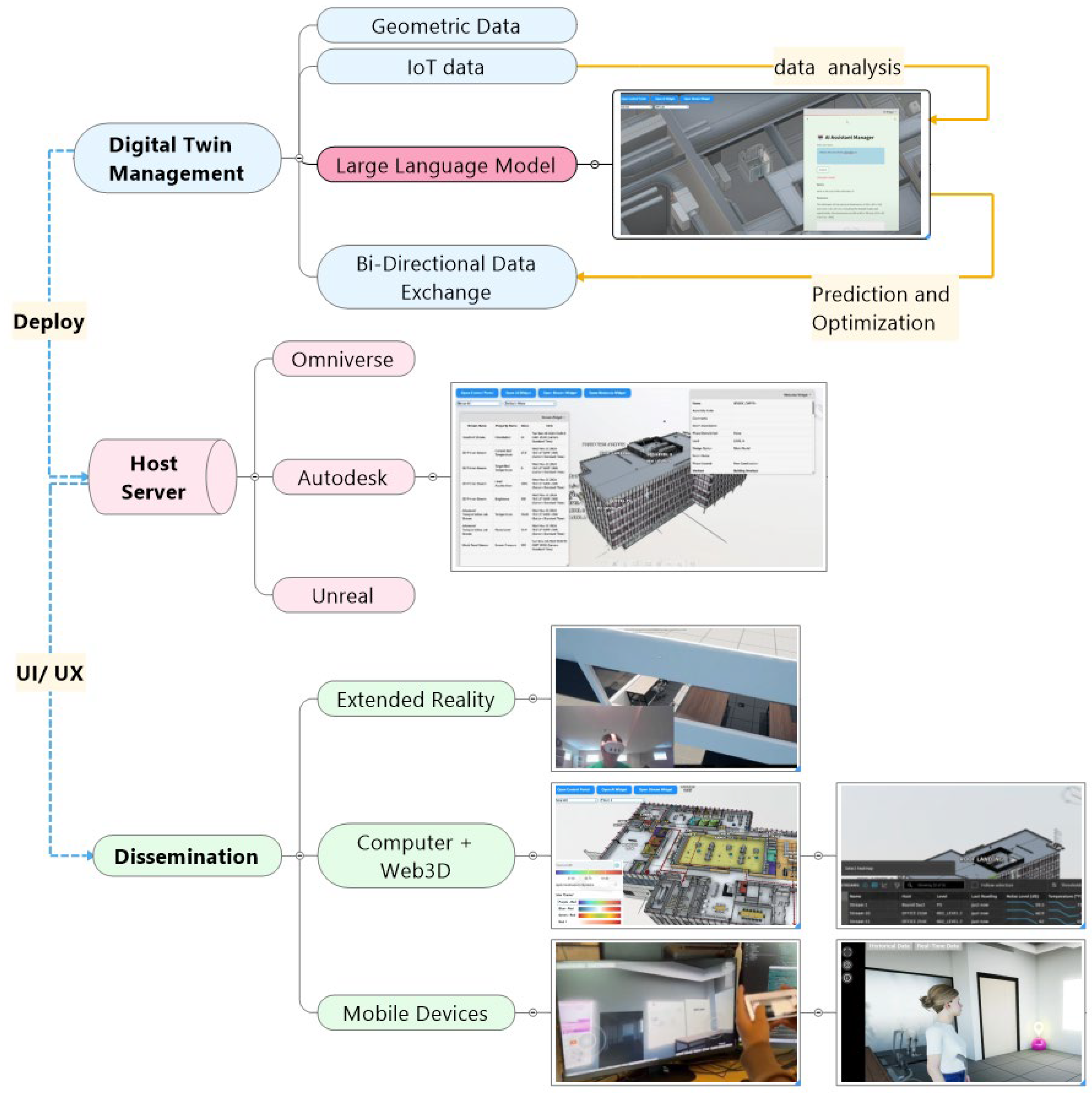

3.2. AI for Management

3.2.1. LLMs for DT Management

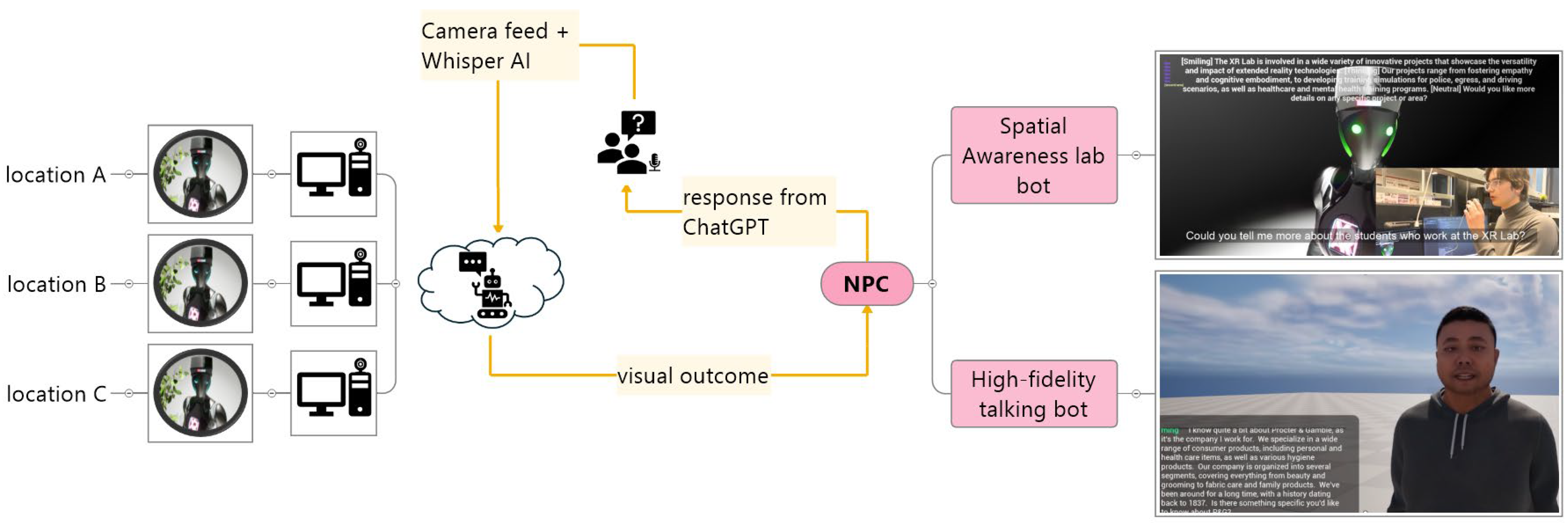

3.2.2. LLM for Metaverse

4. Extended Reality

4.1. Virtual Reality for Digital Twins

4.2. Augmented Reality for Digital Twins

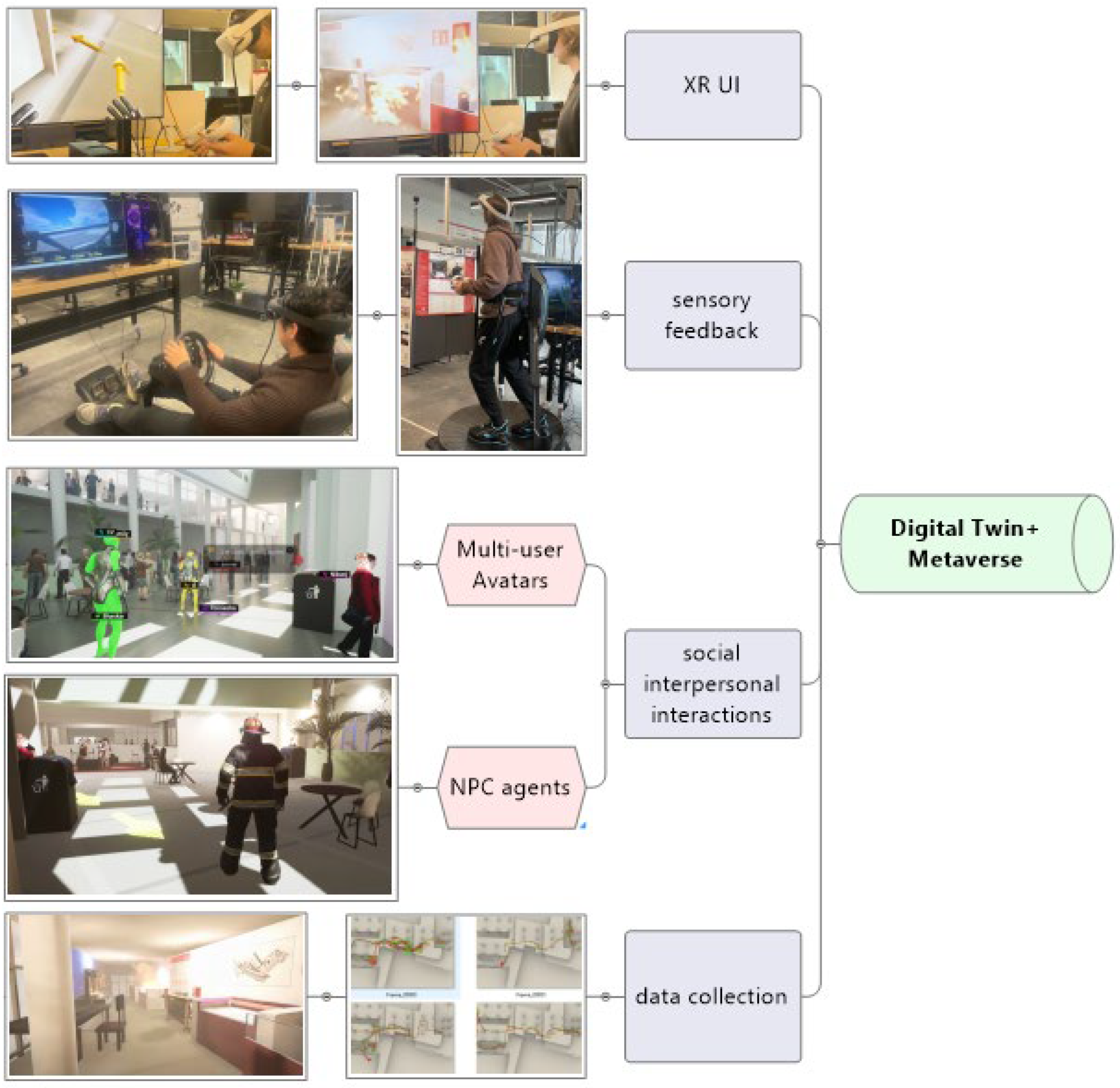

4.3. Virtual Reality for Metaverse

4.4. Augmented Reality for Metaverse

5. Conclusions: Convergence of DT and Metaverse

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Feature | Autodesk Tandem | NVIDIA Omniverse | Unreal Engine |
|---|---|---|---|
| BIM integration | Native, with metadata | Convert to USD | Datasmith |
| IoT integration | Native, with AWS and Azure | Python | Python |
| Bi-directional data exchange | Customized API | Customized API | Customized API |
| XR support | Limited | VR | VR, AR |
| LLM integration | Limited, through widgets | NVIDIA ACE | Plugins |
| Visualization | Web3D rendering | High-fidelity | High-fidelity |

| Category | 2D Image Generation Tools | 3D Gen-AI Tools | Gaussian Splatting Tools |
|---|---|---|---|
| Tools | Stable Diffusion, Midjourney, DALL·E, Adobe Firefly, Hunyuan, PromeAI, Krea, Vizcom, etc. | Rodin, Meshy, Tripo, DreamGaussian, TRELLIS | VastGaussian, CityGaussian, OccluGaussian |
| Features | Text prompts, image inpainting, upscaling, and stylized output | Text-to-3D and image-to-3D generation of 3D mesh models for rapid prototyping | 3D volumetric models |
| UI | Discord-based, web UI, ComfyUI, ControlNet, LoRA | Web UI, ComfyUI | Standalone ML training software |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, M.; Nikolaenko, M.; Alrefai, A.; Kumar, A. Metaverse and Digital Twins in the Age of AI and Extended Reality. Architecture 2025, 5, 36. https://doi.org/10.3390/architecture5020036
