Eye-Tracking Applications in Architecture and Design

Definition

1. History of Eye Tracking

2. Eye Tracking and AI-Simulated Eye Tracking: Applications and Findings in Architecture and Design

2.1. What Determines First Fixations

2.2. Importance

2.3. Relevance for Architecture and Design

2.4. Eye Tracking and Stimulus Valence

2.5. Using Virtual Reality Environments

3. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ross, W.D. The Works of Aristotle; Clarendon: Oxford, UK, 1927; Volume 7.

- Lejeune, A. L’Optique de Claude Ptolémée dans la Version Latine d’après l’Arabe de l’Émir Eugène de Sicile; Université de Louvain: Louvain, Belgium, 1956.

- Sabra, A.I. The Optics of Ibn Al-Haytham; The Warburg Institute: London, UK, 1989.

- Wells, W.C. An Essay upon Single Vision with Two Eyes: Together with Experiments and Observations on Several Other Subjects in Optics; Cadell: London, UK, 1792.

- Wade, N.J. Pioneers of eye movement research. Iperception 2010, 1, 33–68.

- Jacob, R.J.K.; Karn, K.S. Eye tracking in Human-Computer Interaction and Usability Research: Ready to Deliver the Promises. In The Mind’s Eye: Cognitive and Applied Aspects of Eye Movement Research; Hyönä, J., Radach, R., Deubel, H., Eds.; Elsevier Science: Amsterdam, The Netherlands, 2003; Volume 2, pp. 573–605.

- Javal, E. Essai sur la physiologie de la lecture. Ann. D’Oculistique 1878, 80, 97–117.

- Dodge, R.; Cline, T.S. The angle velocity of eye movements. Psychol. Rev. 1901, 8, 145–157.

- Judd, C.H.; McAllister, C.N.; Steel, W.M. General introduction to a series of studies of eye movements by means of kinetoscopic photographs. In Psychological Review, Monograph Supplements; Baldwin, J.M., Warren, H.C., Judd, C.H., Eds.; The Review Publishing Company: Baltimore, MD, USA, 1905; Volume 7, pp. 1–16.

- Huey, E.B. The Psychology and Pedagogy of Reading, with a Review of the History of Reading and Writing and of Methods, Texts, and Hygiene in Reading; Macmillan: New York, NY, USA, 1908; p. xvi+469.

- Mackworth, J.F.; Mackworth, N.H. Eye fixations recorded on changing visual scenes by the television eye-marker. J. Opt. Soc. Am. 1958, 48, 439–445.

- Fitts, P.M.; Jones, R.E.; Milton, J.L. Eye movements of aircraft pilots during instrument-landing approaches. Aeronaut. Eng. Rev. 1950, 9, 56.

- Creel, D.J. The electrooculogram. In Handbook of Clinical Neurology; Levin, K.H., Chauvel, P., Eds.; Elsevier: Amsterdam, The Netherlands, 2019; Volume 160, pp. 495–499.

- Yarbus, A.L. Eye Movements and Vision; Haigh, B., Translator; Plenum Press: New York, NY, USA, 1967.

- Tatler, B.W.; Wade, N.J.; Kwan, H.; Findlay, J.M.; Velichkovsky, B.M. Yarbus, eye movements, and vision. Iperception 2010, 1, 7–27.

- Buswell, G.T. How People Look at Pictures: A Study of the Psychology and Perception in Art; University of Chicago Press: Chicago, IL, USA, 1935.

- Aalto, P.; Steinert, M. Emergence of eye-tracking in architectural research: A review of studies 1976–2021. Archit. Sci. Rev. 2024, 1, 1–11.

- Itti, L.; Koch, C. Computational modelling of visual attention. Nat. Rev. Neurosci. 2001, 2, 194–203.

- Töllner, T.; Zehetleitner, M.; Gramann, K.; Müller, H.J. Stimulus saliency modulates pre-attentive processing speed in human visual cortex. PLoS ONE 2011, 6, e16276.

- Banich, M.T.; Compton, R.J. Cognitive Neuroscience; Cambridge University Press: Cambridge, UK, 2018.

- McFadyen, J. Investigating the Subcortical Route to the Amygdala Across Species and in Disordered Fear Responses. J. Exp. Neurosci. 2019, 13, 1179069519846445.

- Van Le, Q.; Isbell, L.A.; Matsumoto, J.; Nguyen, M.; Hori, E.; Maior, R.S.; Tomaz, C.; Tran, A.H.; Ono, T.; Nishijo, H. Pulvinar neurons reveal neurobiological evidence of past selection for rapid detection of snakes. Proc. Natl. Acad. Sci. USA 2013, 110, 19000–19005.

- Alexander, C.; Ishikawa, S.; Silverstein, M.; Jacobson, M.; Fiksdahl King, I.; Angel, S. A Pattern Language; Oxford University Press: New York, NY, USA, 1977.

- Taylor, R.P. Reduction of Physiological Stress Using Fractal Art and Architecture. Leonardo 2006, 39, 245–251.

- Taylor, R.P.; Spehar, B.; Van Donkelaar, P.; Hagerhall, C.M. Perceptual and Physiological Responses to Jackson Pollock’s Fractals. Front. Hum. Neurosci. 2011, 5, 60.

- Fischmeister, F.P.; Martins, M.J.D.; Beisteiner, R.; Fitch, W.T. Self-similarity and recursion as default modes in human cognition. Cortex 2017, 97, 183–201.

- Martins, M.J.; Fischmeister, F.P.; Puig-Waldmüller, E.; Oh, J.; Geissler, A.; Robinson, S.; Fitch, W.T.; Beisteiner, R. Fractal image perception provides novel insights into hierarchical cognition. Neuroimage 2014, 96, 300–308.

- Hägerhäll, C.; Laike, T.; Taylor, R.; Küller, M.; Küller, R.; Martin, T. Investigations of Human EEG Response to Viewing Fractal Patterns. Perception 2008, 37, 1488–1494.

- Lavdas, A.A.; Salingaros, N.A. Architectural Beauty: Developing a Measurable and Objective Scale. Challenges 2022, 13, 56.

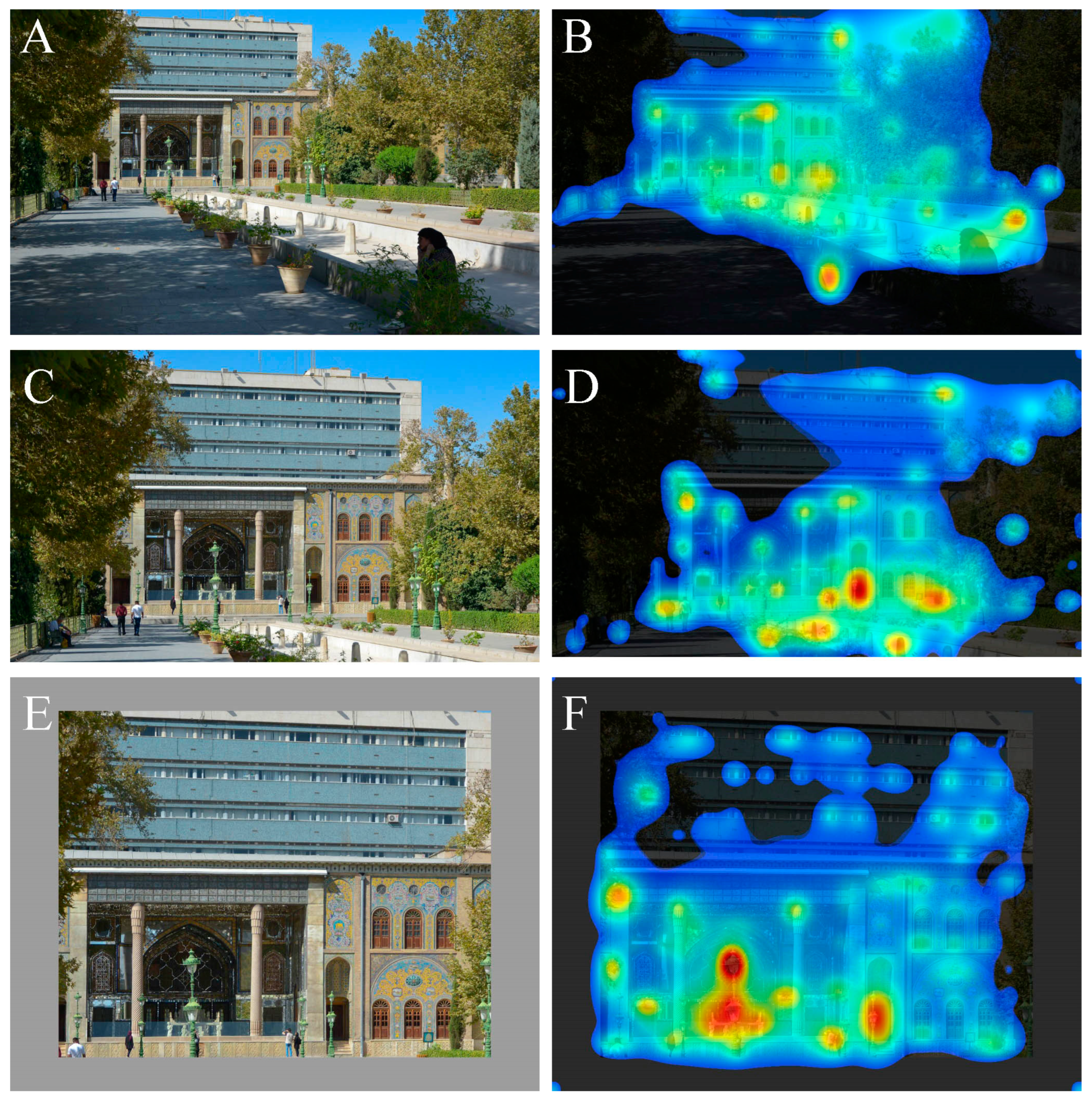

- Lavdas, A.A.; Salingaros, N.A.; Sussman, A. Visual Attention Software: A New Tool for Understanding the “Subliminal” Experience of the Built Environment. Appl. Sci. 2021, 11, 6197.

- Rosas, H.J.; Sussman, A.; Sekely, A.C.; Lavdas, A.A. Using Eye Tracking to Reveal Responses to the Built Environment and Its Constituents. Appl. Sci. 2023, 13, 12071.

- Frumkin, H. Beyond toxicity: Human health and the natural environment. Am. J. Prev. Med. 2001, 20, 234–240.

- Joye, Y. Fractal Architecture Could Be Good for You. Nexus Netw. J. 2007, 9, 311–320.

- Salingaros, N.A. The laws of architecture from a physicist’s perspective. Phys. Essays 1995, 8, 638–643.

- Salingaros, N.A.; Mehaffy, M.W. A Theory of Architecture; Umbau-Verlag: Solingen, Germany, 2006.

- Salingaros, N.A. Unified Architectural Theory: Form, Language, Complexity: A Companion to Christopher Alexander’s “The Phenomenon of Life: The Nature of Order, Book 1”; Sustasis Foundation: Portland, OR, USA, 2013.

- Zeki, S. Beauty in Architecture: Not a Luxury-Only a Necessity. Archit. Des. 2019, 89, 14–19.

- Ellard, C. Places of the Heart: The Psychogeography of Everyday Life; Perseus Books, LLC: New York, NY, USA, 2015.

- Merrifield, C.; Danckert, J. Characterizing the psychophysiological signature of boredom. Exp. Brain Res. 2014, 232, 481–491.

- Penacchio, O.; Otazu, X.; Wilkins, A.J.; Haigh, S.M. A mechanistic account of visual discomfort. Front. Neurosci. 2023, 17, 1200661.

- Mauss, I.B.; Robinson, M.D. Measures of emotion: A review. Cogn. Emot. 2009, 23, 209–237.

- Zou, Z.; Ergan, S. Where do we look? An eye-tracking study of architectural features in building design. In Advances in Informatics and Computing in Civil and Construction Engineering; Mutis, I., Hartmann, T., Eds.; Springer: Cham, Switzerland, 2018.

- Afrooz, A.; White, D.; Neuman, M. Which visual cues are important in way-finding? Measuring the influence of travel mode on visual memory for built environments. Assist. Technol. Res. Ser. 2014, 35, 394–403.

- Hollander, J.; Purdy, A.; Wiley, A.; Foster, V.; Jacob, R.; Taylor, H.; Brunyé, T. Seeing the city: Using eye-tracking technology to explore cognitive responses to the built environment. J. Urban. Int. Res. Placemaking Urban Sustain. 2018, 12, 156–171.

- Lu, Z.; Pesarakli, H. Seeing Is Believing: Using Eye-Tracking Devices in Environmental Research. HERD Health Environ. Res. Des. J. 2023, 16, 15–52.

- Tobii. Available online: https://www.tobii.com/products/eye-trackers (accessed on 14 June 2024).

- PupilLabs. Available online: https://pupil-labs.com/products/neon (accessed on 14 June 2024).

- iMotions. Available online: https://imotions.com/ (accessed on 21 October 2023).

- Biopac. Available online: https://www.biopac.com/product/eye-tracking-etv (accessed on 14 June 2024).

- 3M. Visual Attention Software. Available online: https://www.3m.com/3M/en_US/visual-attention-software-us/ (accessed on 16 May 2021).

- Eyequant. Available online: https://www.eyequant.com (accessed on 1 December 2023).

- Attention Insight. Available online: https://attentioninsight.com/ (accessed on 1 December 2023).

- Neurons. Available online: www.neuronsinc.com (accessed on 1 December 2023).

- Expoze. Available online: https://www.expoze.io (accessed on 1 December 2023).

- Sussman, A.; Ward, J. Game-Changing Eye-Tracking Studies Reveal How We Actually See Architecture. Available online: https://commonedge.org/game-changing-eye-tracking-studies-reveal-how-we-actually-see-architecture/ (accessed on 8 November 2023).

- Sussman, A.; Ward, J. Eye-tracking Boston City Hall to better understand human perception and the architectural experience. New Des. Ideas 2019, 3, 53–59.

- Lisińska-Kuśnierz, M.; Krupa, M. Suitability of Eye Tracking in Assessing the Visual Perception of Architecture-A Case Study Concerning Selected Projects Located in Cologne. Buildings 2020, 10, 20.

- Suárez, L. Subjective Experience and Visual Attention to a Historic Building. Front. Archit. Res. 2020, 9, 774–804.

- Salingaros, N.A.; Sussman, A. Biometric Pilot-Studies Reveal the Arrangement and Shape of Windows on a Traditional Façade to be Implicitly “Engaging”, Whereas Contemporary Façades Are Not. Urban Sci. 2020, 4, 26.

- Hollander, J.; Sussman, A.; Lowitt, P.; Angus, N.; Situ, M. Eye-tracking emulation software: A promising urban design tool. Archit. Sci. Rev. 2021, 64, 383–393.

- Brielmann, A.A.; Buras, N.H.; Salingaros, N.A.; Taylor, R.P. What Happens in Your Brain When You Walk Down the Street? Implications of Architectural Proportions, Biophilia, and Fractal Geometry for Urban Science. Urban Sci. 2021, 6, 3.

- NCAS. Americans’ Preferred Architecture for Federal Buildings. Available online: https://www.civicart.org/americans-preferred-architecture-for-federal-buildings (accessed on 1 December 2023).

- Slater, A.; Von der Schulenburg, C.; Brown, E.; Badenoch, M. Newborn infants prefer attractive faces. Infant Behav. Dev. 1998, 21, 345–354.

- Langlois, J.H.; Ritter, J.M.; Roggman, L.A.; Vaughn, L.S. Facial diversity and infant preferences for attractive faces. Dev. Psychol. 1991, 27, 79–84.

- Benedek, M.; Kaernbach, C. A continuous measure of phasic electrodermal activity. J. Neurosci. Methods 2010, 190, 80–91.

- Shi, Y.; Ruiz, N.; Taib, R.; Choi, E.; Chen, F. Galvanic Skin Response (GSR) as an Index of Cognitive Load. In Proceedings of the Conference on Human Factors in Computing Systems, CHI 2007, San Jose, CA, USA, 28 April–3 May 2007; pp. 2651–2656.

- Bakker, J.; Pechenizkiy, M.; Sidorova, N. What’s Your Current Stress Level? Detection of Stress Patterns from GSR Sensor Data. In Proceedings of the 2011 IEEE 11th International Conference on Data Mining Workshops, Vancouver, BC, Canada, 11 December 2011; pp. 573–580.

- Ergan, S.; Radwan, A.; Zou, Z.; Tseng, H.-A.; Han, X. Quantifying Human Experience in Architectural Spaces with Integrated Virtual Reality and Body Sensor Networks. J. Comput. Civ. Eng. 2019, 33, 04018062.

- Read, G.L. Facial Electromyography (EMG). In The International Encyclopedia of Communication Research Methods; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2015; pp. 1–10.

- Dimberg, U. Facial electromyography and emotional reactions. Psychophysiology 1990, 27, 481–494.

- Van Boxtel, A. Facial EMG as a Tool for Inferring Affective States. In Proceedings of the Measuring Behavior 2010, Eindhoven, The Netherlands, 24–27 August 2010.

- Chang, C.-Y.; Chen, P.-K. Human Response to Window Views and Indoor Plants in the Workplace. HortScience Publ. Am. Soc. Hortic. Sci. 2005, 40, 1354–1359.

- Balakrishnan, B.; Sundar, S.S. Capturing Affect in Architectural Visualization: A Case for integrating 3-dimensional visualization and psychophysiology. In Proceedings of the Communicating Space(s) 24th eCAADe Conference Proceedings, Volos, Greece, 6–9 September 2006; pp. 664–669, ISBN 0-9541183-5-9.

- Ekman, P.; Friesen, W.V. Measuring facial movement. Environ. Psychol. Nonverbal Behav. 1976, 1, 56–75.

- Ekman, P.; Friesen, W.V. Facial Action Coding System: A Technique for the Measurement of Facial Movement; Consulting Psychologists Press: Palo Alto, CA, USA, 1978.

- Ekman, P.; Rosenberg, E. (Eds.) What the Face Reveals: Basic and Applied Studies of Spontaneous Expression Using the Facial Action Coding System (FACS); Oxford University Press: New York, NY, USA, 2005.

- Valstar, M.; Mehu, M.; Jiang, B.; Pantic, M.; Scherer, K. Meta-Analysis of the First Facial Expression Recognition Challenge. In IEEE Transactions on Systems, Man, and Cybernetics. Part B, Cybernetics: A Publication of the IEEE Systems, Man, and Cybernetics Society; IEEE: Piscataway, NJ, USA, 2012; Volume 42.

- Lewinski, P.; Den Uyl, T.; Butler, C. Automated Facial Coding: Validation of Basic Emotions and FACS AUs in FaceReader. J. Neurosci. Psychol. Econ. 2014, 7, 227–236.

- Elver Boz, T.; Demirkan, H.; Urgen, B. Visual perception of the built environment in virtual reality: A systematic characterization of human aesthetic experience in spaces with curved boundaries. Psychol. Aesthet. Creat. Arts 2022. Advance online publication.

- Coburn, A.; Vartanian, O.; Kenett, Y.; Nadal, M.; Hartung, F.; Hayn-Leichsenring, G.; Navarrete, G.; González-Mora, J.; Chatterjee, A. Psychological and neural responses to architectural interiors. Cortex 2020, 126, 217–241.

- Lee, K.; Park, C.-H.; Kim, J.H. Examination of User Emotions and Task Performance in Indoor Space Design Using Mixed-Reality. Buildings 2023, 13, 1483.

- Tawil, N.; Ascone, L.; Kühn, S. The contour effect: Differences in the aesthetic preference and stress response to photo-realistic living environments. Front. Psychol. 2022, 13, 933344.

- Zou, Z.; Yu, X.; Ergan, S. Integrating Biometric Sensors, VR, and Machine Learning to Classify EEG Signals in Alternative Architecture Designs. In Computing in Civil Engineering 2019; ASCE: Reston, VA, USA, 2019.

- Banaei, M.; Ahmadi, A.; Gramann, K.; Hatami, J. Emotional evaluation of architectural interior forms based on personality differences using virtual reality. Front. Archit. Res. 2019, 9, 138–147.

- Mostajeran, F.; Steinicke, F.; Reinhart, S.; Stuerzlinger, W.; Riecke, B.; Kühn, S. Adding virtual plants leads to higher cognitive performance and psychological well-being in virtual reality. Sci. Rep. 2023, 13, 8053.

- Fich, L.B.J.; Jönsson, P.; Kirkegaard, P.H.; Wallergård, M.; Garde, A.H.; Hansen, Å. Can architectural design alter the physiological reaction to psychosocial stress? A virtual TSST experiment. Physiol. Behav. 2014, 135, 91–97.

- Vecchiato, G.; Tieri, G.; Jelic, A.; De Matteis, F.; Maglione, A.; Babiloni, F. Electroencephalographic Correlates of Sensorimotor Integration and Embodiment during the Appreciation of Virtual Architectural Environments. Front. Psychol. 2015, 6, 1944.

- Higuera Trujillo, J.L.; Llinares, C.; Montañana, A.; Rojas, J.-C. Multisensory stress reduction: A neuro-architecture study of paediatric waiting rooms. Build. Res. Inf. 2019, 48, 269–285.

- Valentine, C. Health Implications of Virtual Architecture: An Interdisciplinary Exploration of the Transferability of Findings from Neuroarchitecture. Int. J. Environ. Res. Public Health 2023, 20, 2735.

- Kalantari, S.; Neo, J.R.J. Virtual Environments for Design Research: Lessons Learned From Use of Fully Immersive Virtual Reality in Interior Design Research. J. Inter. Des. 2020, 45, 27–42.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lavdas, A.A. Eye-Tracking Applications in Architecture and Design. Encyclopedia 2024, 4, 1312-1323. https://doi.org/10.3390/encyclopedia4030086