1. Introduction

Rapid urbanization and increased vehicle ownership present unprecedented challenges for transportation systems. Traffic congestion, unpredictable travel times, and elevated emissions adversely affect the efficiency of transportation systems and, in turn, hinder economic productivity and the quality of life in urban environments [1,2]. In particular, persistent traffic congestion constitutes a critical challenge for metropolitan areas, leading to economic inefficiencies, excessive fuel consumption, and increased greenhouse gas emissions that deteriorate both environmental quality and public health standards [3,4]. Traditional traffic management systems, predominantly reliant on static signal timings or reactive interventions, are increasingly inadequate for addressing the nonlinear and highly dynamic characteristics of modern traffic flows [5].

The emergence of smart city paradigms, enabled by advances in sensor technologies, Internet of Things (IoT) infrastructure, and artificial intelligence (AI), has created opportunities for the development of adaptive and intelligent traffic control strategies [6,7]. Deep learning has, in particular, proven to be a transformative approach [8], demonstrating significant potential to model complex spatiotemporal dependencies and to extract latent patterns from high-dimensional traffic datasets [9,10].

Accurate short-term traffic flow forecasting extends beyond the immediate needs of intelligent transportation systems; it directly informs critical aspects of urban planning and sustainable city design. Reliable predictions can guide dynamic road capacity allocation, such as adaptive signal timing or reversible lane strategies, which enable transportation networks to respond flexibly to fluctuating demand. Forecasting also provides an evidence base for infrastructure investment planning, allowing urban planners to identify persistent congestion hotspots and prioritize road expansions or new public transit corridors where they will yield the greatest long-term benefit.

Equally important, predictive traffic analytics play a pivotal role in advancing sustainability goals. By mitigating congestion, optimizing routing, and reducing idling, forecasting models support the reduction of vehicle emissions and improvements in energy efficiency. These outcomes are directly aligned with the United Nations Sustainable Development Goal (SDG) 11 (Sustainable Cities and Communities) [11], which emphasizes the need for resilient, efficient, and environmentally responsible urban transport systems. Thus, the proposed methodological contributions aim both to enhance computational performance and to provide tangible tools for planners and policy makers to design safer, more livable, and sustainable cities.

Accurate and timely traffic flow prediction is the cornerstone of intelligent transportation systems (ITS). Reliable forecasts support applications such as dynamic signal control, congestion mitigation, proactive route planning, and efficient emergency response allocation [12,13]. Recent research has confirmed the effectiveness of recurrent neural networks (RNNs), particularly Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) architectures, in capturing sequential dependencies in traffic data [14,15]. However, standalone models often struggle to balance predictive accuracy with computational efficiency, especially under real-time constraints [16].

To overcome these limitations, this study proposes a hybrid deep learning architecture that integrates GRU and LSTM units, augmented by a temporal attention mechanism. This design enhances the model’s ability to capture both long-term dependencies and contextually relevant temporal features, thereby advancing predictive accuracy and operational efficiency in traffic flow forecasting. The key contributions of this work are as follows:

A novel hybrid deep learning architecture is introduced, combining GRU and LSTM units with an attention mechanism to improve the extraction of complex temporal patterns inherent in traffic data.

Comprehensive evaluation on the publicly available PeMS-03, PeMS-04, PeMS-07, and PeMS-08 datasets [17] demonstrates that the proposed model outperforms established baselines and recent deep learning methods, achieving measurable error reductions.

Extensive ablation studies are conducted to assess the contribution of each architectural component and to verify the practical feasibility of real-world deployment.

By jointly addressing predictive performance and computational efficiency, this research delivers a robust, scalable, and efficient solution for urban traffic forecasting. The proposed model can serve as a foundational component of intelligent traffic management systems, thereby supporting the broader vision of sustainable, efficient, and adaptive smart cities.

The remainder of this paper is organized as follows: Section 2 reviews related works. Section 3 details the proposed architecture. Section 4 presents the experimental setup, results, and comparative analysis. Finally, Section 5 concludes the paper and outlines directions for future research.

2. Literature Review

Traffic forecasting has undergone a remarkable transformation over the last decade, evolving from statistical modeling to advanced deep learning solutions. Early predictive models were grounded in classical time-series algorithms, such as ARIMA and Kalman filters [18,19], which offer simplicity and interpretability but are limited in handling the nonlinear spatial–temporal dependencies inherent in complex road networks.

Razali et al. [20] review traffic flow prediction using machine learning and deep learning, highlighting existing gaps, techniques, and evaluation methods. They analyze various predictive models, discussing their strengths and limitations in handling traffic data complexity and variability. The study emphasizes the need for more robust and adaptive prediction frameworks to improve traffic management and decision-making in dynamic urban environments.

As data availability increased, machine learning approaches, including Support Vector Regression (SVR), Random Forests (RF), and k-Nearest Neighbors (k-NN), were shown to surpass classical methods in forecasting traffic speed and volume, particularly under nonlinear and dynamic traffic conditions [21,22]. Although these methods improved regression accuracy, they often fell short in modeling dependencies that extend across both space and time. Moreover, they required careful feature engineering and struggled to scale to large, heterogeneous datasets.

The maturation of deep learning introduced hybrid architectures combining Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) [23], particularly LSTMs. CNNs offer powerful spatial feature extraction from structured sensor arrays, while LSTMs capture temporal sequence dynamics, a combination that has demonstrated superior performance in traffic forecasting tasks [24,25]. Combining these two components improved short-term predictions and paved the way for real-time traffic forecasting applications.

Recent advancements in Graph Neural Networks (GNNs) have significantly improved the accuracy and robustness of traffic flow prediction by explicitly modeling the spatial structure of road networks. Traditional temporal models often fail to capture complex inter-sensor dependencies, whereas GNN-based models integrate topological and temporal features in a unified framework.

Theodoropoulos et al. [26] proposed the WEST GCN-LSTM architecture, which introduces weighted stacked spatiotemporal graph convolutions combined with LSTM units. This model incorporates domain-specific policies, such as shared borders and adjustable hop distances, to better capture regional traffic patterns. Similarly, Jin et al. [27] introduced the STGNPP model, a spatiotemporal graph neural point process framework designed specifically for predicting traffic congestion events, modeling them as stochastic processes over time and graph space. The GSTPRN framework [28] offers a promising integration of spatial–temporal modeling components; however, computational efficiency and scalability to larger networks are essential, and its reliance on self-attention and personalized propagation introduces significant overhead in real-time deployment scenarios.

Advancements in transformer and attention-based models have further revolutionized traffic forecasting by tailoring self-attention mechanisms to spatial and temporal dynamics. Prabowo et al. [29] introduced Graph Self-attention WaveNet (G-SWaN), which adapts self-attention across sensor pairs by learning unique dynamics for each sensor and sensor pair, yielding superior performance on multiple traffic datasets. Liu et al. [30] proposed STAEformer, a vanilla transformer integrated with Spatio-Temporal Adaptive Embedding, which captures intrinsic traffic patterns and achieves state-of-the-art results on five real-world datasets. Li et al. [31] enhanced existing deep forecasting frameworks with a Dynamic Regression module, explicitly modeling structured residual noise across sensors and time, thereby improving both interpretability and robustness. Together, these models demonstrate the transformative potential of attention-based transformer architectures in capturing complex spatiotemporal dependencies for intelligent transportation systems.

Moreover, federated learning paradigms have increasingly emerged in traffic-forecasting applications, offering promising solutions for privacy-preserving, decentralized model training across distributed traffic sensors. Liu et al. [32] proposed FedOSTC, an online spatiotemporal federated learning framework that combines local GRU encoders with graph-attention-based spatial aggregation, enabling privacy-preserving, adaptive forecasting across distributed sensors. Similarly, Alqubaysi et al. [33] introduced a federated graph neural network approach for urban traffic prediction that improves model generalization while safeguarding data privacy. More recently, Wang et al. [34] developed an adaptive federated learning strategy that dynamically adjusts client contributions to enhance the forecasting accuracy in heterogeneous traffic networks.

Hong et al. [35] propose a resilience recovery method for complex traffic networks using trend forecasting. Their approach predicts network stress trends to guide adaptive recovery strategies, improving both robustness and post-disruption recovery efficiency. Simulation results demonstrate its effectiveness over conventional methods in maintaining traffic network stability.

Moreover, Abduljabbar et al. [36] surveyed machine learning models for sustainable traffic prediction, highlighting the trade-offs between accuracy and resource consumption. Likewise, Mystakidis et al.’s systematic review [13] emphasized the challenge of balancing predictive power with model compactness when applied to urban environments.

Chen et al. [37] proposed an attention-augmented LSTM framework that selectively emphasizes critical time steps, resulting in enhanced prediction accuracy during peak hours. Similarly, Qaffas [38] demonstrated the effectiveness of transformer-based attention modules in capturing dynamic traffic correlations across sensors in an IoT-enabled environment.

Despite these advancements, several challenges persist. Most current models are limited in their ability to generalize across different urban settings due to overfitting on location-specific datasets [39,40]. In light of these findings, the proposed research builds on recent hybrid architectures by incorporating both GRU and LSTM layers, enhanced with an attention mechanism. This design aims to improve temporal learning efficiency and adaptability under dynamic traffic scenarios, contributing to the ongoing effort to make ITS infrastructures more intelligent, responsive, and sustainable.

3. Materials and Methods

This section outlines the proposed deep learning methodology for intelligent traffic flow prediction and optimization. First, the dataset used in this study is described. Then, the preprocessing steps applied to ensure the quality and suitability of the data are presented. After that, the hybrid model architecture is detailed, followed by a description of the training and evaluation procedures.

3.1. Dataset Description

The publicly available PeMS-03, PeMS-04, PeMS-07, and PeMS-08 datasets [17] are selected for this research due to their comprehensive coverage and widespread use in traffic forecasting studies. Collected by the California Department of Transportation (Caltrans) through the Performance Measurement System (PeMS), these datasets offer high-resolution real-time traffic information from multiple detectors deployed across the freeway system.

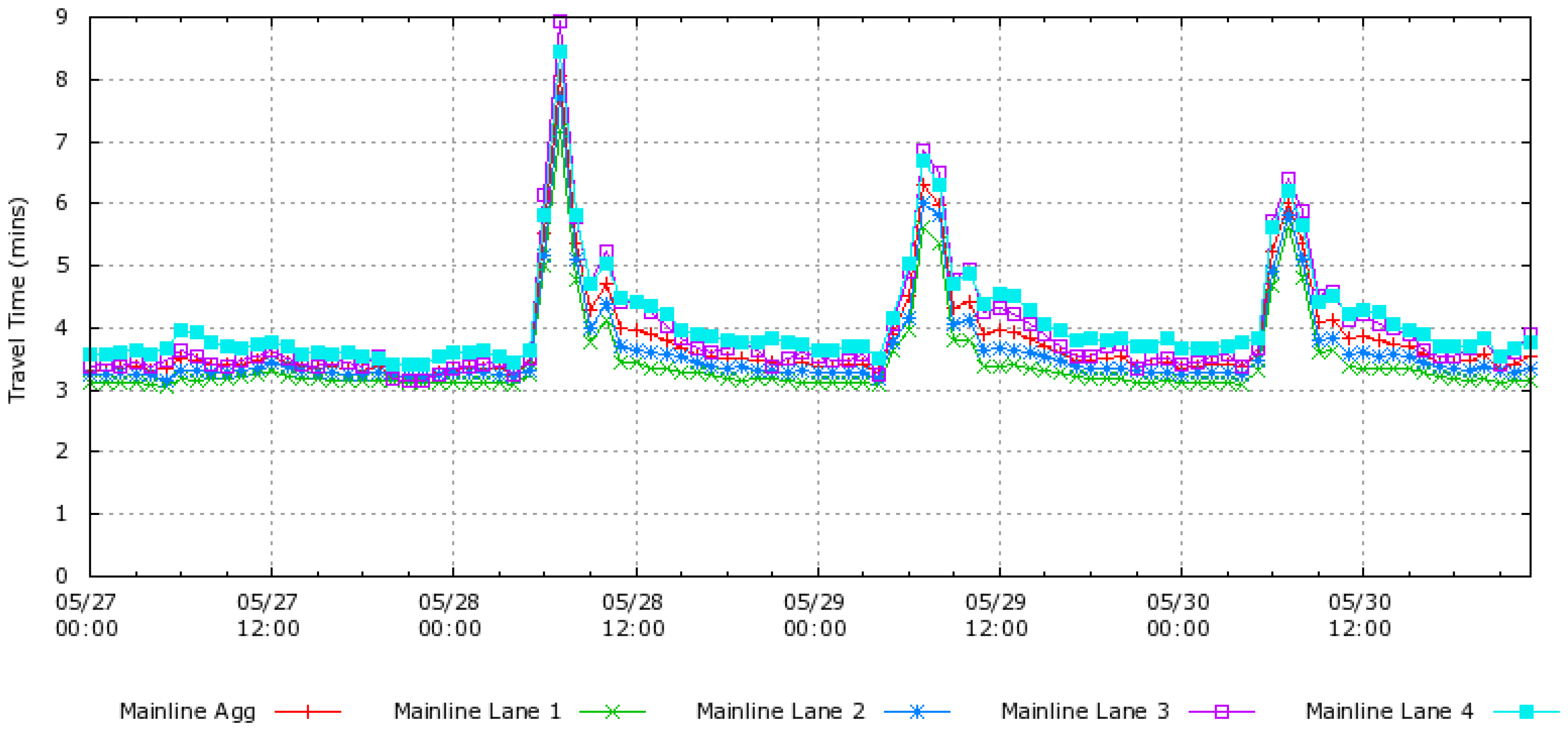

The datasets encompass comprehensive traffic information, including performance metrics, vehicle volume, and recorded crash incidents. For example, Figure 1 presents a comparative analysis of travel times at different hours of three days for Mainline Lanes, effectively highlighting peak hour congestion patterns.

To ensure reproducibility and transparency, a detailed summary of the datasets employed in this study is provided in Table 1. Four widely used benchmark subsets from the Caltrans Performance Measurement System (PeMS), namely PeMS-03, PeMS-04, PeMS-07, and PeMS-08, were selected due to their extensive coverage of freeway networks and prior use in the traffic-forecasting literature. Each dataset consists of high-resolution measurements collected at five-minute intervals, including traffic speed, flow, and occupancy from fixed loop detectors.

The table reports the number of sensors deployed in each district, the temporal coverage of the datasets, the proportion of missing values, and the variables used in this study. To facilitate a fair evaluation, the datasets were partitioned into training, validation, and testing sets following community practice, with exact date ranges specified rather than approximate percentage splits. This explicit reporting allows for direct comparison with prior works and eliminates ambiguity regarding data usage.

Normalization was performed using the training set only, and the resulting parameters were applied consistently to the validation and testing sets. This procedure ensures that no information leakage occurred across partitions. By providing detailed dataset statistics, including missing rates and split protocols, we aim to support reproducibility and enable other researchers to replicate our experiments under identical conditions.

3.2. Data Preprocessing

Preprocessing plays a critical role in preparing raw traffic data for deep learning applications. The following steps were undertaken to enhance the data quality and ensure optimal model performance:

Missing Value Imputation: Real-world traffic datasets often contain missing values due to sensor outages, data corruption, or transmission errors. To ensure data integrity and consistency, two imputation strategies were employed based on the size of the missing segment. For short-term missing values (typically spanning a few time steps), linear interpolation is applied. Given two observed values, $x_{t_1}$ and $x_{t_2}$, at time steps $t_1$ and $t_2$, the missing value $x_t$ for $t_1 < t < t_2$ is computed as follows:

$$x_t = x_{t_1} + \frac{t - t_1}{t_2 - t_1}\left(x_{t_2} - x_{t_1}\right) \tag{1}$$

This method ensures temporal smoothness and continuity in the time series data.

Moreover, for longer gaps, a spatial nearest-neighbor imputation strategy was used. Let $x_{i,t}$ denote the missing value at detector $i$ and time $t$. The imputed value is estimated using the values from spatially adjacent detectors $\mathcal{N}(i)$ as follows:

$$\hat{x}_{i,t} = \frac{1}{\left|\mathcal{N}(i)\right|}\sum_{j \in \mathcal{N}(i)} x_{j,t} \tag{2}$$

where $\mathcal{N}(i)$ is defined as the set of detectors that are physically adjacent to sensor $i$ along the freeway network, based on the official Caltrans deployment maps. In practice, we adopt a fixed one-hop radius, which corresponds to the immediate upstream and downstream detectors. This design strikes a balance between locality and sensor coverage, ensuring that missing values are replaced using contextually relevant neighbors without introducing noise from distant detectors.
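The two imputation strategies can be sketched in plain Python as follows. This is a minimal illustration rather than the authors' implementation: the `max_gap` threshold separating short from long gaps, the use of `None` to mark missing readings, and the detector names in the usage note are all assumptions made for the example.

```python
from typing import Dict, List, Optional, Sequence

Series = List[Optional[float]]  # None marks a missing reading (assumed encoding)


def interpolate_short_gaps(series: Sequence[Optional[float]], max_gap: int = 3) -> Series:
    """Fill runs of at most `max_gap` missing values by linear interpolation.

    `max_gap` is a hypothetical threshold; the text only says "a few time steps".
    """
    out = list(series)
    n = len(out)
    t = 0
    while t < n:
        if out[t] is not None:
            t += 1
            continue
        start = t                          # first missing index of this run
        while t < n and out[t] is None:
            t += 1
        end = t                            # first observed index after the run, or n
        t1, t2 = start - 1, end
        # Interpolate only short gaps that are bounded by observations on both sides.
        if t1 >= 0 and t2 < n and (end - start) <= max_gap:
            x1, x2 = out[t1], out[t2]
            for k in range(start, end):
                out[k] = x1 + (k - t1) * (x2 - x1) / (t2 - t1)
    return out


def fill_from_neighbors(readings: Dict[str, Series],
                        neighbors: Dict[str, List[str]]) -> Dict[str, Series]:
    """Replace remaining gaps with the mean of adjacent detectors at the same time step."""
    filled = {det: list(series) for det, series in readings.items()}
    for det, series in readings.items():
        for t, value in enumerate(series):
            if value is not None:
                continue
            # Only original (non-imputed) neighbor observations are averaged.
            observed = [readings[j][t] for j in neighbors.get(det, [])
                        if readings[j][t] is not None]
            if observed:
                filled[det][t] = sum(observed) / len(observed)
    return filled
```

For instance, `interpolate_short_gaps([10.0, None, None, 16.0])` fills the two missing steps on the straight line between 10 and 16, while a gap longer than `max_gap` is left untouched so that `fill_from_neighbors` can handle it with a one-hop neighbor map such as `{"mid": ["up", "down"]}`.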

Normalization: To eliminate feature dominance caused by differing scales, all traffic variables (speed, volume, and occupancy) were normalized using the Min–Max scaling method. This transformation bounds the data within the range $[0, 1]$, which accelerates convergence and enhances training stability. The scaling formula is defined as follows:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of the variable.

It is important to note that normalization parameters were computed exclusively on the training set and subsequently applied to the validation and test sets, thereby preventing any information leakage across data partitions.
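A minimal sketch of leakage-free Min–Max scaling is given below, assuming each variable is handled as a flat list of values. The function names are illustrative and not taken from the study. The scaling parameters are fitted on the training partition only and then reused unchanged for the other partitions.

```python
from typing import List, Sequence, Tuple


def fit_min_max(train: Sequence[float]) -> Tuple[float, float]:
    """Compute scaling parameters from the training partition only."""
    return min(train), max(train)


def apply_min_max(values: Sequence[float], x_min: float, x_max: float) -> List[float]:
    """Scale values with previously fitted parameters."""
    span = x_max - x_min
    if span == 0:
        # Constant training series: map everything to 0 to avoid division by zero.
        return [0.0 for _ in values]
    return [(x - x_min) / span for x in values]
```

One consequence of this protocol is that validation or test values lying outside the training range map outside $[0, 1]$; for example, with training values between 20 and 60, a test value of 70 scales to 1.25.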

Sequence Construction: Since recurrent neural networks, such as GRU and LSTM, require sequential inputs, the time-series data was reshaped into a supervised learning format. With five-minute sampling, a sliding window of 12 time steps (equivalent to one hour) was used to predict the subsequent 3 time steps (15 min ahead). This format captures both short-term and emerging patterns in traffic dynamics.
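The sliding-window reshaping can be illustrated with a short sketch. With the five-minute sampling interval of the PeMS data, a one-hour look-back corresponds to 12 steps and a 15 min horizon to 3 steps; the function name and the single-sensor, single-variable input are simplifying assumptions.

```python
from typing import List, Sequence, Tuple


def make_windows(series: Sequence[float],
                 look_back: int = 12,
                 horizon: int = 3) -> Tuple[List[List[float]], List[List[float]]]:
    """Turn one time series into (input window, target window) pairs."""
    inputs: List[List[float]] = []
    targets: List[List[float]] = []
    last_start = len(series) - look_back - horizon
    for s in range(last_start + 1):
        inputs.append(list(series[s:s + look_back]))
        targets.append(list(series[s + look_back:s + look_back + horizon]))
    return inputs, targets
```

A series of length 20 therefore yields six samples, the first pairing steps 0 to 11 with steps 12 to 14; a series shorter than `look_back + horizon` yields none.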

Data Splitting: To evaluate the model performance reliably, the dataset was partitioned into training (70%), validation (15%), and testing (15%) sets. Temporal order was preserved during the split to avoid data leakage and ensure consistency with real-world deployment scenarios.
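A chronological split of this kind can be sketched as follows, assuming the samples are already ordered by time. The helper name and the rounding rule for partition boundaries are illustrative choices.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def chronological_split(samples: Sequence[T],
                        train_frac: float = 0.70,
                        val_frac: float = 0.15) -> Tuple[List[T], List[T], List[T]]:
    """Split time-ordered samples into train/validation/test without shuffling."""
    n = len(samples)
    train_end = round(n * train_frac)
    val_end = train_end + round(n * val_frac)
    # Slicing preserves temporal order, so every test sample is later than
    # every validation sample, which in turn is later than every training sample.
    return list(samples[:train_end]), list(samples[train_end:val_end]), list(samples[val_end:])
```

Because no shuffling takes place, the model is always evaluated on data that lies strictly after the period it was trained on.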

These preprocessing steps not only enhance data integrity but also optimize the learning conditions for the hybrid GRU-LSTM model with attention mechanism, which is detailed in the following section.

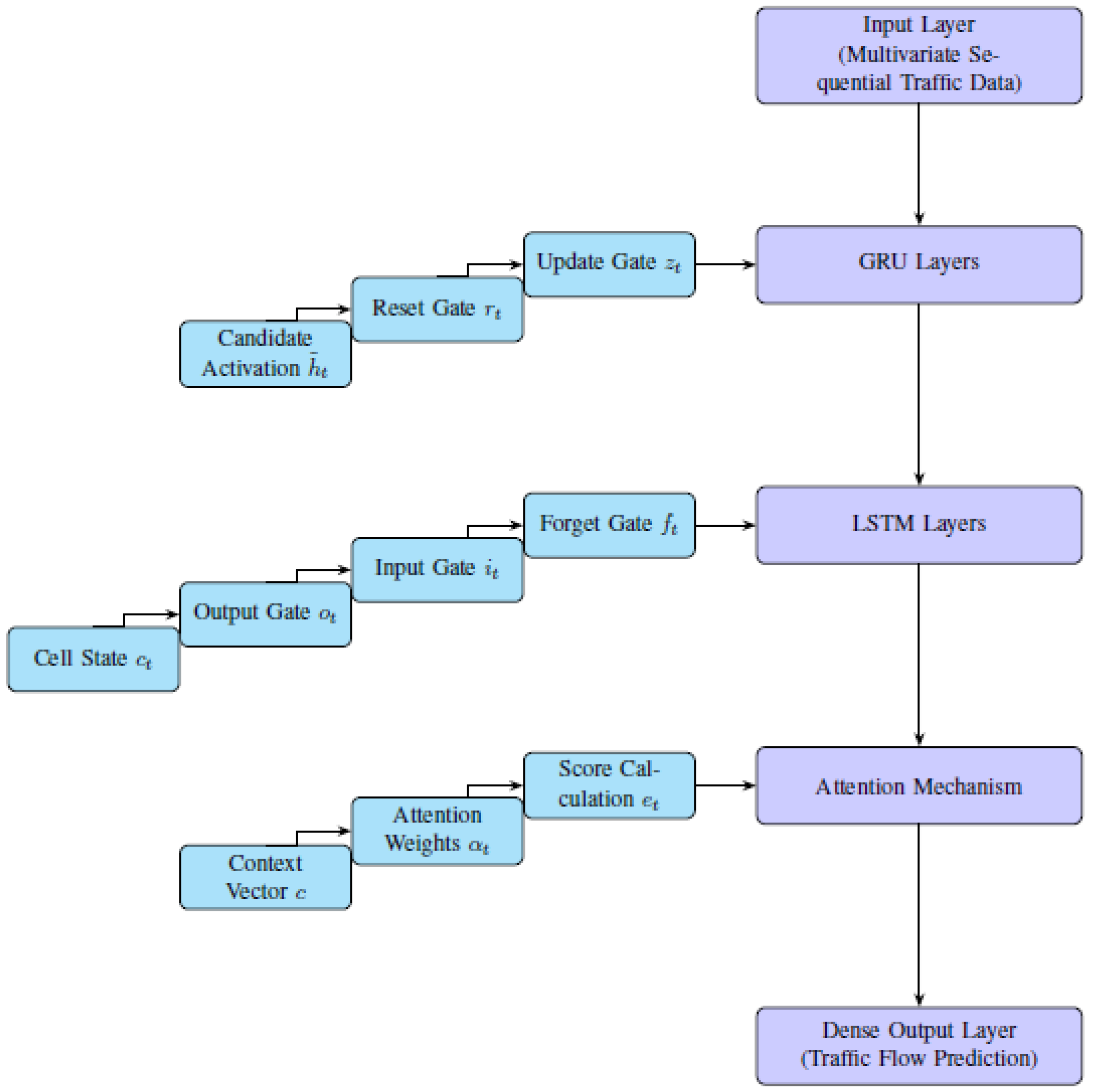

3.3. Hybrid GRU-LSTM Model with Attention Mechanism

The proposed model integrates the complementary strengths of Gated Recurrent Units (GRU) and Long Short-Term Memory (LSTM) networks, augmented by an attention mechanism, to effectively capture complex spatiotemporal dependencies in traffic flow data. This architecture exploits the computational efficiency of GRU for sequence processing alongside the robust long-term memory capabilities of LSTM. The attention mechanism enables dynamic emphasis on the most relevant historical information, enhancing prediction accuracy. The proposed model is structured into five key components, as illustrated in Figure 2.

Input Layer: Receives preprocessed sequential traffic data (e.g., traffic speed, volume, and occupancy) collected from multiple sensors over a defined look-back window.

GRU Layers: An initial stack of GRU layers processes the input sequences. GRU cells are selected for their computational efficiency and effectiveness in capturing short-term dependencies. These layers reduce dimensionality and extract salient features from raw input.

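The GRU unit at time step $t$ is defined as follows:

$$
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right),\\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right),\\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h\left(r_t \odot h_{t-1}\right) + b_h\right),\\
h_t &= \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t,
\end{aligned}
$$

where $x_t$ is the input vector at time step $t$, $h_{t-1}$ is the previous hidden state, $z_t$ is the update gate, $r_t$ is the reset gate, $\tilde{h}_t$ is the candidate hidden state, $\odot$ denotes element-wise multiplication, $\sigma$ is the sigmoid function, $\tanh$ is the hyperbolic tangent function, and the weight matrices $W$ and $U$ and bias vectors $b$ (with gate-specific subscripts) are learnable parameters.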

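LSTM Layers: Subsequent LSTM layers model long-term dependencies and mitigate the vanishing-gradient problem, essential for capturing extended temporal patterns. The LSTM cell operations at time step $t$ are the following:

$$
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right),\\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right),\\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right),\\
\tilde{C}_t &= \tanh\left(W_C x_t + U_C h_{t-1} + b_C\right),\\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t,\\
h_t &= o_t \odot \tanh\left(C_t\right),
\end{aligned}
$$

where $f_t$, $i_t$, and $o_t$ are the forget, input, and output gates, respectively; $\tilde{C}_t$ is the candidate cell state; $C_t$ is the cell state; and $h_t$ is the hidden state.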

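Attention Mechanism: An additive attention mechanism is applied to the LSTM outputs to selectively focus on important time steps. For each hidden state $h_t$, the attention score $e_t$ is computed as follows:

$$e_t = \tanh\left(W_a h_t\right)$$

where $W_a$ is a learnable parameter. The attention weights $\alpha_t$ are obtained via softmax:

$$\alpha_t = \frac{\exp\left(e_t\right)}{\sum_{k=1}^{T} \exp\left(e_k\right)}$$

where $T$ is the number of time steps in the look-back window. The context vector $c$ is the weighted sum of hidden states:

$$c = \sum_{t=1}^{T} \alpha_t h_t$$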

Dense Output Layer: The context vector c is passed through one or more fully connected (Dense) layers to generate the final traffic flow predictions over the future time horizon.

The overall model architecture can be illustrated as a sequential pipeline starting with an Input Layer that ingests multivariate time-series traffic data from multiple sensors. The data flows into stacked GRU Layers which compress and extract short-term features. Outputs are then fed into LSTM Layers that model long-range dependencies. The resulting hidden states pass through an Attention Module that computes weighted contextual information emphasizing critical time steps. Finally, the context vector is transformed via Dense Layers, producing multi-step traffic flow forecasts. This modular design enables both interpretability and scalability for real-world intelligent transportation systems.
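The attention step of this pipeline, which converts per-time-step scores into weights and then pools the LSTM hidden states into a context vector, can be sketched in plain Python. The sketch takes the scores as given rather than assuming a particular scoring function, and the function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of attention scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]   # subtracting the max avoids overflow
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states: Sequence[Sequence[float]],
                   scores: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Return (attention weights, context vector) for one input sequence.

    hidden_states[t] is the hidden vector at time step t; scores[t] is its score.
    """
    weights = softmax(scores)
    dim = len(hidden_states[0])
    context = [sum(w * h[d] for w, h in zip(weights, hidden_states))
               for d in range(dim)]
    return weights, context
```

When all scores are equal the weights are uniform and the context vector reduces to the plain average of the hidden states; unequal scores shift the context toward the time steps the model considers most relevant.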

3.4. Training and Evaluation

The experiments were conducted using the MATLAB R2024a framework. Training was performed with the Adam optimizer, and the Mean Absolute Error (MAE) was employed as the primary loss function. All experiments were executed on a high-performance computing environment equipped with AMD CPUs, Intel processors, and NVIDIA Tesla T4 GPUs. The system comprises 24 processing cores and 384 GB of memory, providing ample computational capacity to efficiently train and evaluate deep learning models on large-scale traffic datasets.

To mitigate overfitting across all experimental models, an early stopping strategy was implemented—monitoring the validation loss and halting training when no improvement was observed over a predefined number of epochs. The optimal batch size and number of training epochs were determined through a combination of preliminary experimentation and ablation studies.
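The early stopping rule can be sketched as a small function over a sequence of per-epoch validation losses. The `patience` and `min_delta` values are placeholders, since the text does not state the predefined number of epochs, and the sketch is framework-independent rather than a reproduction of the MATLAB training configuration.

```python
from typing import Sequence, Tuple


def early_stopping_epoch(val_losses: Sequence[float],
                         patience: int = 10,
                         min_delta: float = 0.0) -> Tuple[int, int]:
    """Return (epoch at which training stops, epoch with the best validation loss).

    Epochs are zero-indexed. `patience` and `min_delta` are hypothetical settings.
    """
    best = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, best_epoch, waited = loss, epoch, 0   # improvement: reset counter
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch                # no improvement for `patience` epochs
    return len(val_losses) - 1, best_epoch              # ran through every epoch
```

In practice the weights from `best_epoch` would be the ones retained for evaluation.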

Hyperparameter selection—including learning rate, batch size, and dropout rate—was performed through a combination of systematic grid search on a data subset and iterative validation-based tuning. Specifically, an initial grid search was used to identify promising ranges for key hyperparameters, followed by fine-tuning based on validation set performance. Although K-fold cross-validation was considered for more robust evaluation, it was ultimately excluded due to the sequential nature of time-series data and the associated computational overhead. Instead, a fixed train–validation–test split with temporal ordering was adopted to preserve the data integrity and better simulate real-world deployment scenarios.

The model performance was evaluated using several widely accepted metrics in the domain of traffic flow prediction:

Mean Absolute Error (MAE): Measures the average magnitude of errors between predicted and actual values, irrespective of direction. A lower MAE indicates greater prediction accuracy.

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

Root Mean Squared Error (RMSE): Reflects the square root of the average of squared differences between predictions and actual values. This metric penalizes larger errors more severely, making it particularly useful when such deviations are costly.

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

Mean Absolute Percentage Error (MAPE): Represents the prediction error as a percentage of the actual value, providing a normalized measure of accuracy.

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$$

Here, $y_i$ and $\hat{y}_i$ denote the actual and predicted values, respectively, and $n$ is the total number of observations.
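For concreteness, the three metrics can be computed as in the following sketch, which uses their standard definitions. How zero-valued observations are treated in MAPE is not stated in the text; the sketch simply assumes that all actual values are nonzero.

```python
import math
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent (assumes no actual value is zero)."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(y_true, y_pred)) / len(y_true)
```

With actual flows of 100, 200, and 400 and predictions of 110, 190, and 400, the absolute errors are 10, 10, and 0, so the MAE is about 6.67, the RMSE about 8.16, and the MAPE 5%. The RMSE exceeds the MAE because squaring gives more weight to the larger deviations.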

Beyond predictive accuracy, the inference time of the model is also evaluated to assess its feasibility for real-time traffic management applications. Comparative analysis with state-of-the-art models highlighted the practical advantages of the proposed approach in terms of computational efficiency.
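One simple way to obtain a per-sample inference time is sketched below. The measurement protocol used in the study is not described, so the warm-up count, the number of repeats, and the `predict` callable are assumptions made for illustration.

```python
import time
from typing import Callable, Sequence


def mean_latency_ms(predict: Callable[[Sequence[float]], object],
                    samples: Sequence[Sequence[float]],
                    warmup: int = 5,
                    repeats: int = 20) -> float:
    """Average wall-clock prediction time per sample, in milliseconds."""
    for s in samples[:warmup]:
        predict(s)                      # warm-up calls are excluded from timing
    start = time.perf_counter()
    for _ in range(repeats):
        for s in samples:
            predict(s)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / (repeats * len(samples))
```

Averaging over repeated passes reduces the influence of timer resolution and transient system load on the reported figure.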

4. Experimentation Results and Discussion

This section presents the experimental findings of the proposed deep learning model for traffic flow prediction.

4.1. Ablation Study for Hyperparameter Tuning

In a comprehensive research setting, ablation studies are essential for isolating the contributions of individual architectural components and hyperparameters. For the proposed hybrid GRU-LSTM model with an attention mechanism, such analysis involves varying the parameters shown in Table 2.

4.1.1. Impact of Number of GRU/LSTM Layers

This experiment investigates how varying the number of stacked GRU and LSTM layers affects the model’s ability to capture complex patterns in traffic data. As presented in Table 3, increasing the depth from a single-layer configuration (1–1) to a two-layer configuration (2–2) significantly improves the prediction accuracy, whereas further increasing the number of layers (e.g., 3–3 and 4–4) results in marginal gains or even a slight degradation in performance metrics. This trend suggests diminishing returns due to potential overfitting and increased training complexity. The 2–2 configuration thus offers an optimal trade-off between model expressiveness and generalization and is adopted as the baseline in this study.

4.1.2. Impact of Number of Units per Layer

This experiment evaluates the effect of varying the number of hidden units (neurons) in each recurrent layer on the model’s performance. As shown in Table 4, increasing the number of units initially leads to improved performance, with the lowest MAE and RMSE achieved at 64 units per layer, establishing this configuration as the optimal baseline. A further increase to 128 units yields at most marginal improvement while introducing a higher computational cost. Notably, increasing to 256 units results in a slight degradation in accuracy metrics (MAE, RMSE, and MAPE), indicating potential overfitting due to model over-parameterization. These findings underscore the importance of balancing model complexity with generalization when designing deep recurrent architectures for traffic forecasting tasks.

4.1.3. Impact of Attention Mechanism

This experiment investigates the effectiveness of incorporating an attention mechanism into the hybrid GRU-LSTM architecture. The attention module is designed to dynamically weigh temporal features, allowing the model to focus on the most relevant time steps during prediction. As presented in Table 5, the model augmented with attention significantly outperforms its counterpart without attention. Specifically, the attention-enhanced configuration achieves lower MAE, RMSE, and MAPE values, indicating improved accuracy and robustness. These results highlight the value of attention in enhancing temporal relevance modeling, especially in complex, non-stationary traffic scenarios.

4.1.4. Impact of Look-Back Window Size

The look-back window size defines the number of past time steps utilized as input to predict future traffic conditions. Selecting an appropriate window size is critical, as it determines the extent of historical context the model can learn from. As shown in Table 6, a shorter window (e.g., 6 steps or 30 min) provides insufficient temporal context, leading to suboptimal performance. Conversely, excessively long windows (e.g., 24 or 36 steps) may introduce redundant or noisy information, negatively affecting the model’s accuracy and increasing computational complexity. The results indicate that a window size of 12 steps achieves the best trade-off, capturing enough historical information for accurate forecasting while maintaining computational efficiency. This configuration is therefore adopted as the baseline in the proposed framework.

4.1.5. Impact of Imputation

To further assess the role of imputation, we performed an ablation study comparing three scenarios: (i) no imputation, (ii) temporal-only linear interpolation, and (iii) spatial neighbor averaging as described in Equation (2). As shown in Table 7, temporal interpolation is effective for short gaps, while the combination of temporal and spatial information provides superior robustness when longer outages occur. Specifically, spatial neighbor averaging improves MAE by approximately 3% compared to temporal-only interpolation, highlighting the benefit of exploiting local spatial context during imputation.

The results from these ablation studies demonstrate the critical importance of hyperparameter tuning and architectural design in developing an effective traffic flow prediction model. The selected configuration—2 GRU layers, 2 LSTM layers, 64 units per layer, a 12-step look-back window, and the inclusion of an attention mechanism—achieves a well-rounded balance between accuracy, generalization, and computational efficiency.

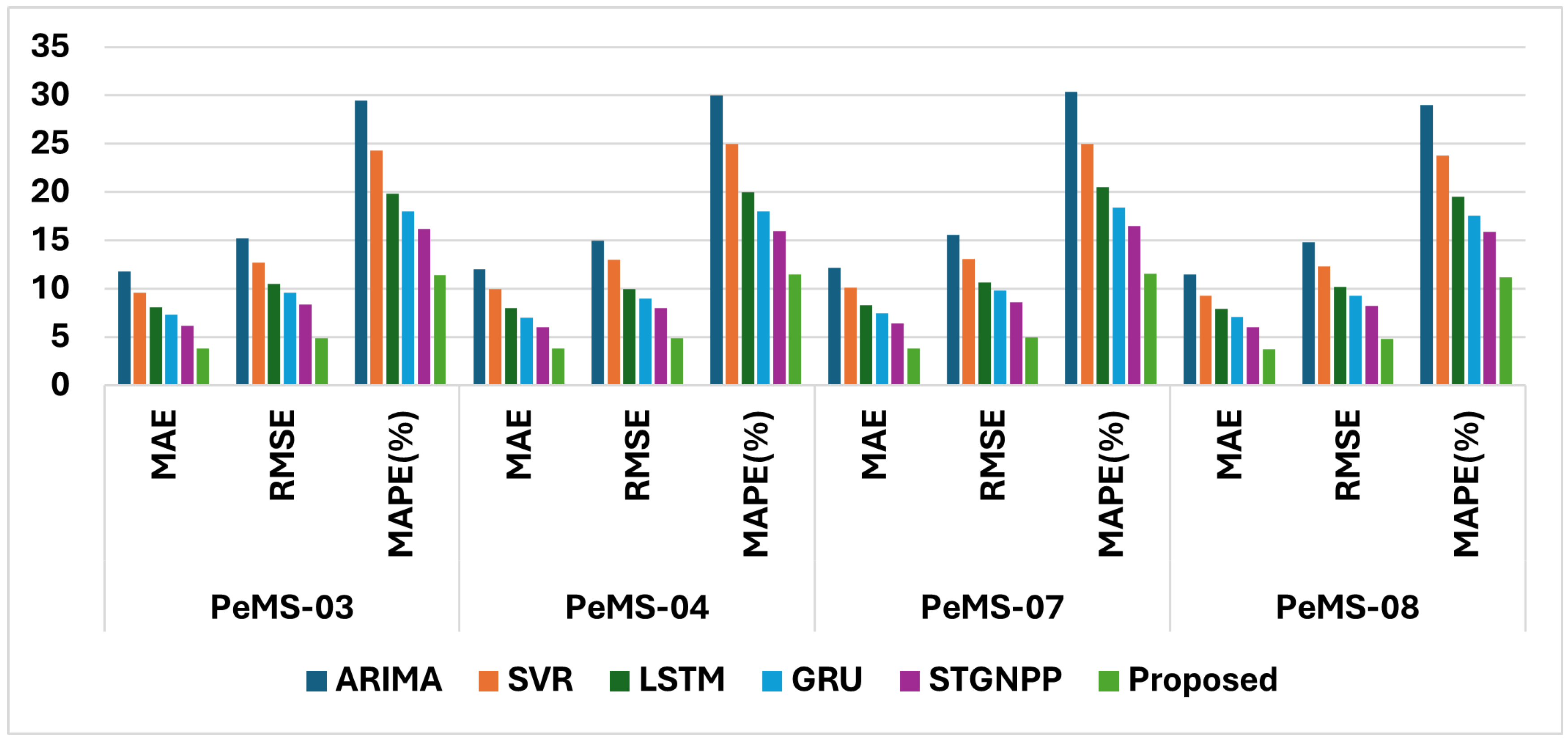

4.2. Comparison with State-of-the-Art Methods

To evaluate the effectiveness of the proposed hybrid GRU-LSTM model, a comprehensive comparison was conducted against a range of state-of-the-art traffic prediction techniques, including statistical methods, classical machine learning algorithms, and advanced deep learning architectures. Figure 3 summarizes the results in terms of key performance metrics: MAE, RMSE, and MAPE. As observed, traditional models, such as ARIMA [18] and SVR [22], exhibit relatively high prediction errors despite their low computational demands, making them less suitable for complex traffic dynamics. Standard deep learning models like LSTM [37] and GRU [39] show improved accuracy but still fall short in capturing intricate temporal dependencies effectively. More sophisticated approaches like STGNPP [27] offer further accuracy gains, albeit with significantly higher inference times. In contrast, the proposed hybrid model achieves the best overall performance across all metrics, delivering lower error rates while maintaining competitive inference speed. This balance of predictive precision and computational efficiency demonstrates the model’s suitability for real-time deployment in dynamic and resource-constrained urban environments.

The inference time, measured in milliseconds per sample and shown in Figure 4, is a critical performance indicator for real-time traffic-forecasting applications. In smart city environments, traffic management systems must respond to changing conditions rapidly to optimize signal control, reroute traffic, or issue congestion alerts. Thus, a model’s prediction accuracy must be balanced with its computational efficiency.

While deep learning models, such as LSTM and GRU, offer improved accuracy, their higher computational complexity may render them unsuitable for latency-sensitive deployments if not properly optimized. Models like STGNPP, though accurate, suffer from high inference latency due to complex graph-based spatial encoding. The proposed hybrid GRU-LSTM model with attention achieves a favorable trade-off, delivering state-of-the-art accuracy (MAE: 3.85, RMSE: 4.90, MAPE: 11.5%) while maintaining moderate inference latency (10–15 ms/sample). This balance supports its applicability in real-time systems where both precision and responsiveness are essential.

To further validate the robustness of the proposed hybrid GRU–LSTM model with attention, we conducted a paired t-test analysis against the strongest baseline model (STGNPP [27]). The statistical tests were performed across all four PeMS datasets (PeMS-03, PeMS-04, PeMS-07, and PeMS-08), using prediction horizons of 12 steps (1 h ahead). Table 8 summarizes the average errors (MAE, RMSE, and MAPE) for both models, along with the corresponding p-values obtained from the paired t-tests.

As shown, the proposed model consistently outperforms STGNPP across all metrics, and the improvements are statistically significant at the 95% confidence level ($p < 0.05$). These results provide strong evidence that the observed gains are not due to random variation but rather stem from the architectural advantages of the hybrid GRU–LSTM with attention.
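The mechanics of a paired t-test can be sketched as follows. The text does not specify what constitutes a pair, so the example assumes one error value per dataset for each model, and the numbers in the usage note are invented purely for illustration rather than taken from Table 8.

```python
import math
from typing import Sequence, Tuple


def paired_t_statistic(errors_a: Sequence[float],
                       errors_b: Sequence[float]) -> Tuple[float, int]:
    """Return the paired t statistic and degrees of freedom for matched error values.

    Assumes at least two pairs and non-identical differences (non-zero variance).
    """
    diffs = [a - b for a, b in zip(errors_a, errors_b)]
    n = len(diffs)
    mean_d = sum(diffs) / n
    var_d = sum((d - mean_d) ** 2 for d in diffs) / (n - 1)   # sample variance
    t_stat = mean_d / math.sqrt(var_d / n)
    return t_stat, n - 1
```

For hypothetical baseline errors `[4.0, 4.2, 4.1, 4.3]` and proposed-model errors `[3.8, 3.9, 3.9, 4.0]`, the statistic is about 8.66 with 3 degrees of freedom, which exceeds the two-sided 5% critical value of 3.182 for that many degrees of freedom. A library routine such as `scipy.stats.ttest_rel` would return the corresponding p-value directly.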

Table 9 reports the per-dataset performance of the proposed hybrid GRU–LSTM model with attention on PeMS-03, PeMS-04, PeMS-07, and PeMS-08. Results are presented as mean values with 95% confidence intervals computed across five independent runs. The model consistently achieves strong predictive accuracy across all datasets, with MAE values ranging from 3.78 to 3.95 and RMSE values between 4.85 and 5.05.
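A confidence interval over five runs can be obtained as in the sketch below, which assumes a Student-t interval with four degrees of freedom (critical value 2.776). The text does not state which interval construction was used, and the run values in the usage note are hypothetical.

```python
import math
from typing import Sequence, Tuple

T_CRIT_95_DF4 = 2.776  # two-sided 95% Student-t critical value for 4 degrees of freedom


def mean_ci95_five_runs(values: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, half-width of the 95% confidence interval) for exactly five runs."""
    n = len(values)
    if n != 5:
        raise ValueError("the hard-coded critical value is only valid for five runs")
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))  # sample std. dev.
    return mean, T_CRIT_95_DF4 * sd / math.sqrt(n)
```

For five hypothetical MAE values `[3.80, 3.85, 3.90, 3.85, 3.85]`, this gives a mean of 3.85 with a half-width of roughly 0.044, i.e., an interval of about 3.81 to 3.89.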

Among the datasets, PeMS-08 exhibits the lowest error rates, which can be attributed to its relatively smaller sensor network and more homogeneous traffic patterns. By contrast, PeMS-04 shows slightly higher errors, reflecting the greater variability and complexity of traffic dynamics in that district. The narrow confidence intervals across all datasets highlight the stability and reproducibility of the proposed approach. These results demonstrate that the model generalizes well across diverse traffic conditions, providing a reliable basis for deployment in real-world ITS applications.

4.3. Qualitative Analysis

Qualitative evaluation involved visual inspection of predicted versus actual traffic flow patterns. Figure 5 illustrates close alignment between predicted and observed values, capturing key trends such as rush-hour peaks and low-traffic troughs. This visual coherence highlights the model’s generalization capability.

4.4. Discussion

The results obtained from the proposed model provide evidence supporting the efficacy of deep learning approaches for traffic flow prediction. The observed MAE, RMSE, and MAPE values indicate the model’s capability to capture complex temporal dependencies and produce reasonably accurate forecasts. Qualitative analysis, demonstrated via sample prediction plots, further confirms the close correspondence between predicted and actual traffic flow values. This foundational performance underscores the model’s potential applicability to real-world datasets, such as PeMS-03, PeMS-04, PeMS-07, and PeMS-08.

The hybrid GRU-LSTM architecture augmented with an attention mechanism represents a key strength of this approach. GRU layers contribute computational efficiency and effectively model short-term dependencies, while LSTM layers excel at capturing long-term memory necessary for understanding recurrent traffic patterns over extended periods. The attention mechanism enhances this by dynamically weighting the importance of different historical time steps, enabling the model to prioritize critical events, such as sudden congestion or rapid flow changes. Such an adaptive focus is particularly advantageous in dynamic urban traffic environments characterized by rapid and unpredictable fluctuations.
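The weighting of historical time steps described above can be illustrated with a generic dot-product attention over a sequence of hidden states. This is a minimal sketch of the general technique and is not necessarily the attention formulation used in the proposed model; the hidden states, the query vector, and the function names are hypothetical and introduced for illustration only.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(hidden_states, query):
    """Weight each time step by its dot-product similarity to the query."""
    scores = [sum(h_d * q_d for h_d, q_d in zip(h, query))
              for h in hidden_states]
    weights = softmax(scores)
    # The context vector is the attention-weighted sum of the hidden states.
    context = [sum(w * h[d] for w, h in zip(weights, hidden_states))
               for d in range(len(query))]
    return weights, context


# Hypothetical hidden states for four time steps (2 dimensions each);
# the third step stands in for a sudden congestion event.
hypothetical_states = [[0.1, 0.0], [0.2, 0.1], [1.5, 1.2], [0.3, 0.2]]
hypothetical_query = [1.0, 1.0]

weights, context = attend(hypothetical_states, hypothetical_query)
print([round(w, 3) for w in weights])
```

In this toy input, the third time step receives the largest weight, so it dominates the context vector passed on to the prediction layer.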

5. Conclusions

This study demonstrates the potential of deep learning, specifically a hybrid GRU–LSTM architecture enhanced with an attention mechanism, for intelligent traffic flow prediction and optimization within the context of smart cities. The proposed methodology addresses the pressing need for accurate and efficient traffic forecasting, which is essential for mitigating urban congestion and improving the operational efficiency of transportation systems. The experimental results confirm the capability of the architecture to learn and generalize complex spatiotemporal patterns inherent in traffic data. They also indicate that deep learning-based traffic forecasting can serve as a foundational enabler for sustainable urban planning. Beyond achieving state-of-the-art predictive accuracy, the proposed hybrid GRU–LSTM model with attention supports broader planning objectives such as reducing congestion, lowering greenhouse gas emissions, and improving the reliability of urban mobility systems. These benefits translate into tangible contributions to environmental sustainability, energy efficiency, and the creation of safer and more livable urban spaces. By bridging the gap between intelligent transportation research and practical planning applications, this study advances the integration of predictive analytics into the design and management of smart, sustainable cities.

Future research will focus on extending the proposed framework to integrate multimodal data sources within the larger smart city ecosystem. In particular, incorporating weather and environmental conditions will allow the model to capture external factors that strongly influence traffic dynamics. Similarly, the inclusion of public transit and mobility-as-a-service (MaaS) data will support a more holistic modeling of urban mobility patterns, allowing for coordination between multiple modes of transportation. Finally, integrating data related to events and infrastructure, such as roadworks, accidents, and large-scale gatherings, will improve the adaptability of the framework to real-world disruptions. Collectively, these extensions will enable the model to evolve from a road-traffic-forecasting tool to a comprehensive decision support system for sustainable and integrated smart city planning.