1. Introduction

Advances in medicine, rising living standards, and longer life expectancy are increasing the population of older people. However, as Terence’s maxim “Senectus ipsa est morbus” (old age is itself a disease) recalls, the number of people suffering from chronic diseases, including disabling ones, rises with age, as does multimorbidity, i.e., the coexistence of multiple pathologies. Basically, living longer means greater exposure to physical, psychological, and emotional changes that can increase the risk of mild cognitive impairment (MCI). The Global Burden of Disease study [1], which analyses diseases by assessing their prevalence, incidence, and the years affected, has found that the so-called YLDs (years lived with disability) have inevitably increased. In the top ten are MCI, hearing loss, neck pain, musculoskeletal disorders, headache, anxiety, diabetes, and depression. An aging population leads to an increase in public health expenditure. The use of technology to support medicine can lead to a reduction in social and healthcare costs.

The developments made in the medical field have been possible thanks to digital technological evolution, from the creation of devices, software platforms, and advanced tools to IoT, Artificial Intelligence, robotics, Cloud Computing, smart wearables, and intelligent analytics aimed at improving the quality and duration of life. For example, Artificial Intelligence has proven to be effective in many areas: improving image-based diagnostics [2], analyzing biological signals [3], recognizing human activities through accelerometric signals [4], guiding navigation for people with cognitive impairments, designing neuro-integrated prosthetic systems and organ tissues compatible for transplantation, supporting surgery, predicting behavior and nerve responses to stimuli [5], and recognizing emotional states through facial expressions [6], to name just a few of the many applications that exist. All this was possible thanks to the acquisition of vast volumes of digitized data and to machine learning techniques. In the surgical field, so-called Extended Reality (XR) has also been applied [7]; XR is an all-encompassing term that combines the experiences of augmented reality, virtual reality, and mixed reality. Robotics is also present in surgery, both to perform minimally invasive procedures and for training and professional updating. In addition, telepresence robots have been designed to help socially isolated people [8]. Robot-assisted rehabilitation is also used. The biomedical applications of IoT [9,10] are now present in remote patient management, the monitoring of Parkinson’s and Alzheimer’s patients, vital data monitoring, depression monitoring via smartwatch, glucose monitoring, and efficient drug management.

The term dementia refers to the loss of memory and other cognitive functions with consequences so severe as to prevent those affected from being able to carry out most of their daily activities independently [11].

Activities of daily living (ADLs) are the activities that an adult performs independently, without assistance, to survive and take care of themselves. The first ADL evaluation scale for determining the functional autonomy of older people was proposed in 1950 by Sidney Katz. It refers to activities closely connected with primary needs, such as personal hygiene, using the bathroom correctly, dressing and feeding oneself, and walking independently. In addition to ADLs, it is necessary to evaluate IADLs (Instrumental ADLs), activities that are not essential for survival but allow people to live independently at home. Performing daily activities, such as preparing a meal, keeping the house tidy, remembering where objects have been placed, remembering appointments, getting medicines without help, socializing, and managing expenses, is difficult or even impossible for patients with dementia. As a result, their lives become highly dependent on the people who assist them. The pattern of behavior in these activities is assessed with the IADL (Instrumental Activities of Daily Living) index: on a scale from a minimum to a maximum value, the lower the final score, the greater the impairment of the subject’s autonomy. The reliable identification of such deficits is needed, as MCI patients with IADL deficits appear to have a higher risk of conversion to dementia than MCI patients without them [12]. Other researchers [13] explored the relationship between executive functions (EFs) and ADLs in cognitive disorders, considering the triple classification of daily functioning into basic (b-ADL), instrumental (i-ADL), and advanced (a-ADL). Studies have shown that EFs are poorly correlated with b-ADLs, whereas they correlate significantly with i- and a-ADLs and contribute the same amount of variance to limitations in both. The analyses conducted also demonstrated the effectiveness of the TMT (Trail-Making Test), CDT (Clock Drawing Test), and AFT (Animal Fluency Test) as diagnostic tools for the assessment of cognitive deficits.

The most common form of dementia is Alzheimer’s disease (AD) [14]. The precise causes of dementia are not fully known; genetic factors and environmental influences are considered possible causes. The disease initially manifests itself with minor disorders and then progresses over time. The phase between the cognitive decline of normal aging and the more severe decline of dementia is known as Mild Cognitive Impairment (MCI). It is characterized by problems with memory, language, and behavior [15]. Patients lose their long- and short-term memory abilities. Those affected show great difficulty in spatial and temporal orientation, with evident problems moving in familiar environments. Lack of spatial recognition results in the inability to connect landmarks and paths with places in the environment. These difficulties manifest in various behaviors, such as hesitating in choosing a path and struggling to identify the initial position, to distinguish relevant from irrelevant information, and to remember why it is necessary to reach a specific destination [16]. As a result, the patient is likely to run into danger by wandering without awareness of the surrounding environmental conditions or of the reason for wandering. Indoor navigation requires certain motor and perceptual functions as well as cognitive ones. People with MCI have trouble moving freely around the home because of difficulties acquiring topographic representations of the environment in which they live [17]. Therefore, an assistive technology, such as a navigation system, can help overcome difficulties with spatial and temporal orientation. Assistive technology helps improve the quality of life of patients and caregivers [18].

The term assistive technology generally refers to any system that can help a sick person maintain or improve their independence and security. These systems monitor a wide variety of activities and situations via intelligent sensors and are used to track lifestyle, provide alerts to assistants, trigger events on compatible smart home devices, or warn support services depending on the severity detected.

Above all, patients who take a more active role in managing their health can experience a better quality of life. Several factors can influence the self-management and independence of patients, including the severity of the disease, other pathologies, complications, the duration of the disease, and the patient’s age. Other decisive factors are psychological aspects and the support of family members, caregivers, general medical personnel, and the social context. Physical and psychological barriers are the first obstacles to the cultural shift toward self-management, in which patients must accept reduced support from caregivers. The experience of recent years has shown that involving patients and caregivers in the design of innovative technological healthcare systems improves performance and makes the proposed technological solution acceptable [19]. The commonly used methodology is the motivational interview, which helps to better understand the patient’s needs and presents intelligent solutions that promote independence through simple and engaging examples. During the implementation phase, checkpoints should be scheduled to verify that the adopted solution matches the patients’ expectations, to measure the efficiency of care, and to promote acceptance of the cultural change toward self-management [20].

Impact on Public Health

Cognitive decline has a devastating impact not only on the individual but also on the family and the community, and requires targeted interventions in health, social, and public health services to foster the necessary care and support. Public policies should address the factors that influence mental health by devising a strategy that identifies positive steps to maintain and improve cognitive health. It is crucial to create an environment conducive to mental health and ensure that everyone has the opportunity for a peaceful and healthy life. In this regard, the World Health Organization (WHO) has prepared guidelines for preventing neurodegenerative diseases. Since no effective drugs exist to treat these diseases, the guidelines suggest proactive and preventive interventions to slow down cognitive degeneration. In particular, they suggest acting on the cognitive reserve, which represents the brain’s ability to compensate for or cope with neuropathological damage. There are essentially two suggested actions: cognitive stimulation and cognitive training. The first is based on activities that improve cognitive and social functioning; the second consists of practical exercises with specific tools designed to improve particular cognitive functions. These actions contribute to the reduction in risk factors and the maintenance of global well-being.

These actions improve the living conditions of the people involved and their caregivers. Training support and psychological support for caregivers must be added to these neuropsychological rehabilitation actions.

The aim of the study was to verify the possibility of applying the technology normally used for video games for the development of an indoor navigation system. There is no evidence of this in the literature. We have developed an easy-to-use solution that can be extended to patients with MCI, easing the workload of caregivers and improving patient safety.

The article is structured as follows:

Section 2, “Navigation”, discusses the characteristic aspects of a navigation system and the available technologies;

Section 3 describes the “State of the art”;

Section 4, “Methodological approach evaluation”, examines some aspects of the design;

Section 5, “Materials and Methods”, after a brief introduction to AR, describes the technology platforms used;

Section 6, “Methodology”, illustrates the methodology and application modules;

Section 7 presents the results of this study;

Section 8, “Discussion”, after the presentation of the methodological approach used, deals with the results in the context of the existing literature; and

Section 9 summarizes the results obtained and presents the development of the model based on the results obtained.

2. Navigation

In this section, after a brief digression on the digital solutions available to assist older adults with MCI, we examine the issues of indoor navigation and the available technologies.

Developments in Information Technology have enabled the creation of innovative solutions for the care and monitoring of patients or to support older people in their daily activities. For example, Internet of Things (IoT) technology is used to communicate with and monitor dementia patients [21]. Virtual and augmented reality can help with specific tasks [22].

Considering that the number of people with dementia will increase over time and, consequently, the costs of the care to be provided will increase, information technologies represent a reasonable economic solution for containing health care costs. The benefits are not only economic but also social. For example, having people with dementia perform tasks independently improves their living conditions and relieves caregivers. Some assistive devices for spatial cognitive impairment have been developed to reduce caregivers’ workload or improve care.

Liu et al. [23] created a Personal Digital Assistant (PDA) to guide patients using text, image, or voice cues.

Researchers at the University of Missouri and Baylor University have developed a smartphone personal assistant app to send reminders about upcoming events and tasks [24]. A mobile tele-coach button has also been developed that lets older people with cognitive disabilities communicate directly with family members or caregivers. GPS technology also supports safety: the tendency to leave the house, which often manifests itself in these individuals, justifies its use for monitoring and localization. For example, GPS devices worn by patients send their location to a server via a wireless link, and the data are visible in real time to healthcare professionals or family members. An alternative solution is to use wristwatches or shoes equipped with GPS components.
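As an illustration of the monitoring idea described above, the following minimal sketch checks whether a received GPS fix lies outside a circular safe zone around the home. It is not taken from any of the cited systems: the function names, the 100 m radius, and the coordinates are assumptions chosen only for the example.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def outside_safe_zone(home, fix, radius_m=100.0):
    """True if the GPS fix is farther from home than radius_m (hypothetical threshold)."""
    return haversine_m(home[0], home[1], fix[0], fix[1]) > radius_m


# Hypothetical coordinates: the second fix is about 111 m north of home.
home = (45.0, 9.0)
print(outside_safe_zone(home, (45.0005, 9.0)))  # about 56 m away
print(outside_safe_zone(home, (45.001, 9.0)))   # about 111 m away
```

In a real deployment the server would run a check of this kind on each incoming fix and notify the caregiver when it returns true; the radius would need to be tuned to GPS accuracy near buildings.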

Hamilton et al. [25] have developed an augmented reality system called My Daily Routine (MDR), which can significantly help people with dementia and their care partners by facilitating their independence. The MDR system comprises a website and a HoloLens Augmented Reality (AR) application. The caregiver can view and customize the content of the reminders using the website. When wearing a Microsoft HoloLens AR device with MDR, a person with dementia can receive personalized reminders via text, images, videos, three-dimensional models, and voice prompts.

However, researchers must not overlook the resistance that older people show to the use of these technologies. They therefore have to design an easy-to-use tool that does not require reading informational material, allows quick visual access, and minimizes decision-making. Psychological support should also be provided to facilitate acceptance.

2.2. Available Technologies

Indoor navigation systems provide users with the spatial context of a venue and intuitive directions. These can be directional arrows or labels for room identification.

Users can access an indoor navigation system using maps from a website or mobile application. They can select or search for any point of interest on a 3D digital map and receive directions to the desired destination. Positioning, localization, and navigation technologies have been widely researched in various applications. The technologies commonly used are Microelectromechanical Systems (MEMS), Artificial Intelligence (AI), Wi-Fi, Bluetooth, RFID, NFC, VLC, and GPS [28]. In contrast to its extensive use in outdoor navigation, GPS does not work satisfactorily in indoor environments, because numerous obstacles hinder the propagation of electromagnetic waves.

MEMS systems, consisting of small inertial sensors such as accelerometers, gyroscopes, and magnetometers installed in smartphones, ensure good navigation accuracy and have low costs. However, they are subject to noise in the measurement, and the calculated position is subject to drift depending on the characteristics of the accelerometer and gyroscope.
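The drift mentioned above can be made concrete with a small numerical sketch. It assumes a stationary device whose accelerometer reports a small constant bias (0.05 m/s², a hypothetical value) and integrates the readings twice to obtain position, as an inertial navigation module would. The function name and sampling interval are likewise assumptions for illustration.

```python
def integrate_position(accel_samples, dt):
    """Integrate acceleration twice (simple Euler steps) to get 1D displacement in metres."""
    velocity = 0.0
    position = 0.0
    for a in accel_samples:
        velocity += a * dt         # first integration: acceleration -> velocity
        position += velocity * dt  # second integration: velocity -> position
    return position


BIAS = 0.05  # m/s^2, hypothetical constant accelerometer bias
DT = 0.01    # s, hypothetical 100 Hz sampling interval

# The device is not moving, so any computed displacement is pure drift.
drift_10s = integrate_position([BIAS] * 1000, DT)
drift_60s = integrate_position([BIAS] * 6000, DT)
print(f"drift after 10 s: {drift_10s:.2f} m")
print(f"drift after 60 s: {drift_60s:.2f} m")
```

Because the error grows roughly with the square of time, a six-fold increase in duration produces a drift about thirty-six times larger, which is why inertial positioning is usually corrected periodically by an external reference such as a tag, beacon, or visual marker.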

Radio Frequency Identification (RFID) systems use RFID tags installed in buildings. The signals emitted by these devices are collected by readers and sent to a server, where the coordinates of the subjects moving inside the buildings are processed and determined. These systems can collect and analyze large volumes of data. They are low-cost but have a complex structure and are vulnerable from a privacy and security perspective.

NFC (Near Field Communication)-based systems are an alternative to systems with wireless connectivity. They use mobile devices, such as smartphones or tablets, to read the labels in an environment. By reading the labels, the device identifies the user’s location. A map showing the route to reach a goal of interest can be transferred to the mobile device. Their limitation is their short range.

Wi-Fi-based positioning technology is a good alternative, as Wi-Fi access points (APs) are commonly installed in buildings. Their signals can be used to calculate the current position if the mobile device is equipped with a Wi-Fi interface. However, Wi-Fi signals are attenuated by reflection or diffraction at obstacles: accuracy is affected by reflections in corridors and by shielding from walls and ceilings. The accuracy varies from 5 to 15 m.

An alternative technology uses Bluetooth beacons [29]. Beacons cannot receive signals; they can only transmit a unique signal, which the receiving device interprets to localize the target. However, this solution is expensive and requires the installation of additional hardware. Beacons have a range of 50 m, but the best results are obtained at shorter ranges. They are widely applied in buildings with complex architecture.

Another applicable technology is VLC (Visible Light Communication), which uses the camera of a smartphone to detect the light emitted by a fluorescent lamp or LEDs. A unique ID is associated with the emitted light for each emission source. The smartphone can determine the position of the source on a stored map through the ID, enabling this method to determine the user’s position. This technology has the advantage of using a wide range of lamps and, above all, benefiting from the energy efficiency of LEDs.

These applications make use of precalculated routes and static background maps. They also require users to know how maps and the signals generated for routing work. Moving forward, they still need to provide the desired levels of accuracy, which are a determining element in indoor navigation. Smartphones equipped with high-performance cameras and computing capabilities allow computer vision systems, through marker-based algorithms, to estimate the user’s position. Another applicable technology is the augmented reality. It can help the user to reach the chosen destination by visually assisting him [

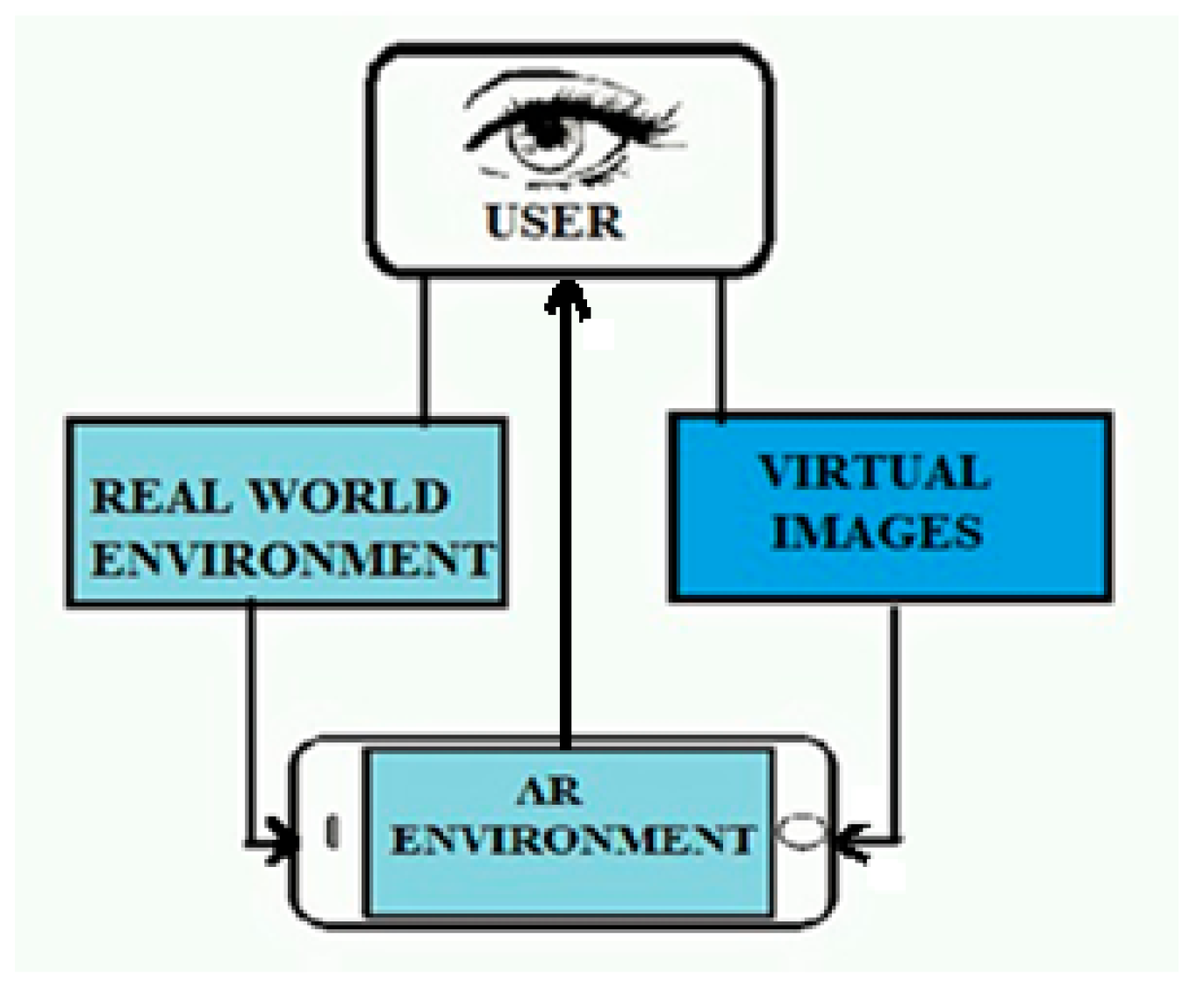

30]. Augmented reality (AR) is a technology that overlaps computer-generated images in a real-world environment. In this way, the virtual and real worlds, in combination with each other, offer the user a unique view and the accurate 3D identification of virtual and real objects. In practice, augmented reality adds details to the field of view perceived by the user, projecting images only in the restricted area in front of their eyes or showing additional information on the smartphone or tablet’s display AR (

Figure 1). It is well known how useful AR has proved to be in navigation applications, by helping to find better and shorter routes. It also provides real-time data on traffic jams and accidents on the route associated with the location, suggesting alternative routes and saving time.

Augmented reality, in indoor navigation, can work without a real-time online data connection for these reasons:

Local data storage: Indoor navigation applications can download building, floor, and route data in advance. These data are then stored locally on the user’s device. When the user enters a building or indoor environment, the application uses this local information to guide them.

Built-in sensors: Many modern devices, such as smartphones, are equipped with accelerometers, gyroscopes, and magnetometers. These sensors can detect the device’s movement and orientation within a building. Combined with locally stored data, the application can calculate the user’s location without depending on online data.

Location technologies: Some indoor location technologies, such as Bluetooth Low Energy (BLE) or ultrasound, can be used to determine the user’s location within a building. These technologies do not require an online data connection and can also work offline.

AR is also used in other sectors, such as education [31], healthcare [32], public safety [33], tourism [34], and gas and oil [35].

Table 1 shows the characteristics of the commonly used technologies.

This study aimed to create an easy-to-use indoor navigation system based on augmented reality technology. A video game platform has been tested to verify its adaptability to make an application in the medical field. In view of the problems faced by MCI patients, a system has been hypothesized to help them move independently and safely in their homes, especially in nursing homes.

The reasons that justify the implementation of this system are essentially as follows:

Improved quality of life.

Reduction in anxiety: through clear indications on the path to take, stress and confusion are reduced.

Independence: this solution reduces the need for other people to assist with navigation.

Personalized itineraries: personalized itineraries are provided according to the patient’s needs.

Involvement: the mode of use actively involves patients.

Reduction in public assistance costs.

The system is based on a solution that does not require the installation of technological infrastructure. It uses a smartphone on which an APK (Android Application Package), developed on the Unity Vuforia platform, is installed. It differs from AR applications on smartphones, which usually make use of the ARKit or ARCore SDKs (Software Development Kits).

Commonly used platforms for indoor navigation applications are ARCore, ARKit, and Vuforia. They are all valid and can be integrated with Unity. They differ in the following respects:

ARCore is specifically designed for Android devices; it does not work on iOS devices. It works in combination with positioning systems to improve accuracy.

ARKit is specifically designed for iOS devices; it is a proprietary platform and does not work on Android devices. It makes use of the LiDAR scanner.

Vuforia is integrated directly into Unity, making it easy for Unity developers to use. It supports a wide range of devices, including iOS, Android, and some specialized AR devices. Compared with the two previous platforms, it has some more advanced features for object recognition. It easily integrates with other application technologies such as Matterport, Area Target, and NavMesh.


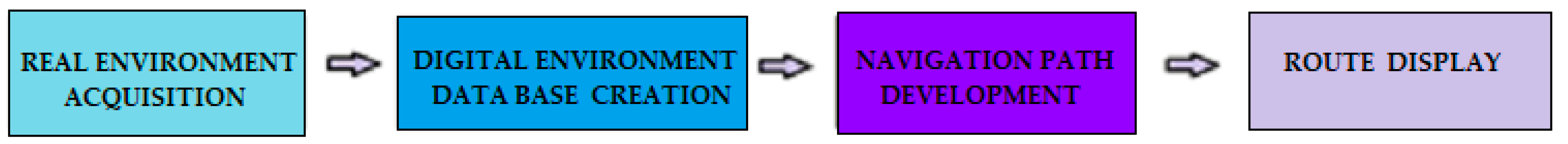

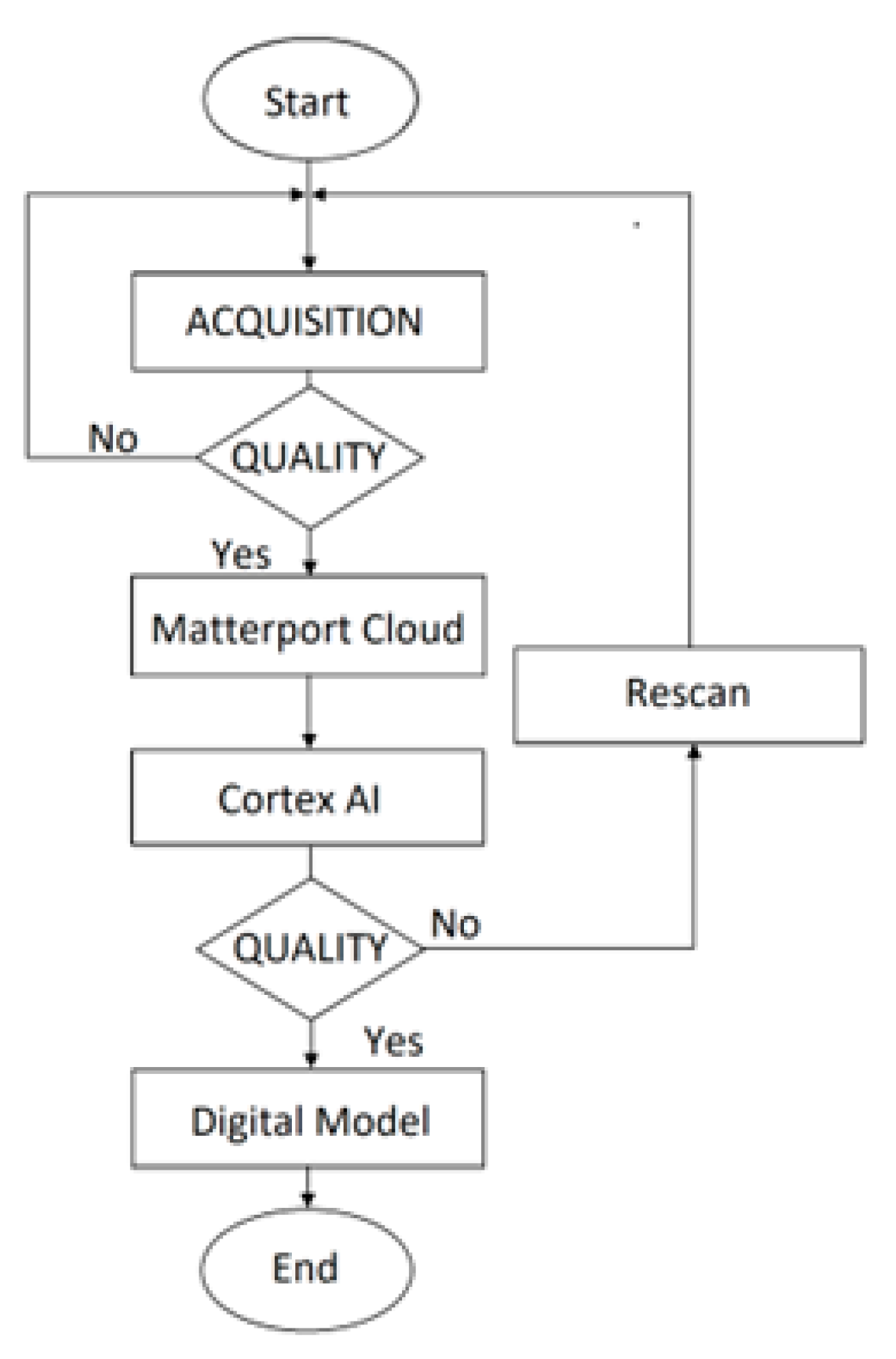

Figure 2 shows a schematic description of the development phases of the application, ranging from the acquisition of the real environment to the determination of the recommended route. The individual steps are explained in the Methodology section.

3. Related Works

Below are several indoor navigation applications that, while designed for purposes other than assistive technology, can be adapted to support MCI patients [36].

For example, Abuhan et al. [37] have developed an assistive technology application called Alzheimer’s Real Time Location System (ARTLS) that tracks patients’ wandering movements in real time, ensuring their safety. It represents a solution to reduce the burden on caregivers. The tracking system does not interfere with daily routine and privacy. The authors adopted an active Radio Frequency Localization system (ARLS). The tags used as transponders are active RFID tags with an operating radio frequency of 5.8 GHz and an accuracy of 1 to 3 m. They are used to locate patients both inside and outside the residence. The receivers are equipped with two antennas operating at 5.8 GHz, a frequency used for ultra-wideband (UWB) RTLS applications. The communication network used is a wired LAN. From the data collected, the caregiver knows which areas a resident visits most frequently. Since the resident may have serious health problems, the caregiver can improve the quality of care by paying greater attention to those areas. In this way, caregivers can carefully analyze the behavior of residents and, through continuous planning, ensure high-quality care management.

Biswas et al. [38], in turn, have designed an indoor navigation application for mobile devices, aimed at patients with dementia and guests in a hospital. Hospital care for these patients is costly, and it is difficult to provide them with one-on-one caregivers for navigation support. A system that helps patients move within hospitals avoids interrupting the medical staff to whom patients would otherwise turn for guidance, making it an effective tool that reduces healthcare costs. The mobile application was developed on an Internet of Things (IoT) system in which a handheld device communicates over a wireless interface with beacon sensors fixed to the walls. Once the patient is connected to the hospital network, the mobile application produces indoor navigation maps of the hospital using Augmented Reality (AR) technology. The algorithm determines the required destination and translates it into a colored path trace, which is superimposed on the displayed map and indicates the user’s current position and destination. The patient’s position is identified at the time of connection and sent to the Cloud, to which the healthcare professional can connect to see all the movements made. The authors also implemented the indoor navigation in an Android app aimed at patient care, health updates, health tips, and recommendations.

Ozdenizci et al. [39] have designed an indoor navigation system based on Near Field Communication (NFC), which allows users to navigate a building using location updates from NFC tags distributed inside the building and orients them toward the desired destination. NFC is a two-way, short-range, wireless communication technology [40]; communication takes place between two NFC devices only if they are a few centimeters apart. To activate the system when entering the building, the user loads a map of the building onto their mobile phone by touching a map tag at the entrance that contains the download link. The NFC Internal application calculates the optimal route to the destination based on the request received. While navigating inside the building, the user taps location tags to update their location and then receives instructions from the application to reorient the path to the destination. The components of the NFC Internal system are map tags, location tags, and the NFC Internal application, which is developed for smartphones.

The current position is estimated starting from the last position acquired, i.e., the last tag touched, and applying the dead reckoning (DR) method until a new tag is touched. Dead reckoning calculates the estimated position from the last fixed or estimated position, incorporating estimates of speed, direction, and route over the time elapsed since that position was determined [41].
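The dead reckoning update described above can be sketched in a few lines. This is a generic illustration rather than the implementation used in the cited system: it assumes a flat 2D floor plan in metres, a heading measured clockwise from north (the positive y axis), and a constant speed over the elapsed interval. The function name and the example values are hypothetical.

```python
import math


def dead_reckon(last_fix, speed_mps, heading_deg, elapsed_s):
    """Estimate the current (x, y) position from the last known fix.

    last_fix    -- (x, y) in metres, e.g. the position of the last tag touched
    speed_mps   -- estimated walking speed in metres per second
    heading_deg -- heading in degrees, clockwise from north (the +y axis)
    elapsed_s   -- seconds elapsed since the last fix
    """
    x, y = last_fix
    distance = speed_mps * elapsed_s
    theta = math.radians(heading_deg)
    return (x + distance * math.sin(theta), y + distance * math.cos(theta))


# Hypothetical example: walking east at 1 m/s for 5 s from the tag at (2, 3).
print(dead_reckon((2.0, 3.0), 1.0, 90.0, 5.0))
```

Each estimate becomes the starting point for the next one, so errors in speed and heading accumulate until a new tag resets the position.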

Pokale et al. [42] have developed an indoor navigation application for a university campus. The application helps locate and guide visitors across campus using mobile devices that capture signals from beacons placed at different locations within the campus. The Bluetooth Low Energy signals of the beacons are the basis of the indoor navigation system. Beacons constantly transmit a signal with a unique identifier to nearby mobile devices, which determine their distance from the beacon based on the strength of the received signal. The authors developed an API to continuously determine the user’s location and calculate the optimal path from that location to the desired destination. Since the application uses Bluetooth Low Energy signals, it consumes less battery.
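One common way to turn received signal strength into a distance estimate is the log-distance path loss model, sketched below. The source does not state which model the authors used, so this is an assumption for illustration only; the reference RSSI at 1 m (-59 dBm), the path loss exponent (2.0, free space), and the beacon names are likewise hypothetical.

```python
def rssi_to_distance(rssi_dbm, rssi_at_1m=-59.0, path_loss_exponent=2.0):
    """Estimate the distance in metres from an RSSI reading.

    Log-distance path loss model: rssi = rssi_at_1m - 10 * n * log10(d),
    solved here for d. Both default parameters are hypothetical and would
    need to be calibrated for each beacon and building.
    """
    return 10 ** ((rssi_at_1m - rssi_dbm) / (10.0 * path_loss_exponent))


def nearest_beacon(readings):
    """Return the identifier of the beacon with the smallest estimated distance."""
    return min(readings, key=lambda beacon_id: rssi_to_distance(readings[beacon_id]))


# Hypothetical scan result: beacon identifier -> RSSI in dBm.
scan = {"lobby": -70.0, "lab": -62.0, "library": -85.0}
for beacon_id, rssi in scan.items():
    print(beacon_id, round(rssi_to_distance(rssi), 1), "m")
print("nearest:", nearest_beacon(scan))
```

Indoors, the path loss exponent is typically higher than in free space and RSSI fluctuates considerably, so readings are usually averaged or filtered before being converted.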

Parulian et al. [43] have proposed an indoor navigation assistance project that guides the user from their position to the desired destination by indicating the compass direction to follow and the number of steps to take. The route is calculated using sensors available in mobile devices: a pedometer and a magnetometer. Assistance is provided as a guide, with sequential instructions based on the route identified. The authors used a list in which the positions of the reference nodes for each room and corridor are given as longitude and latitude data. Two successive nodes define a path segment. Once the starting point and destination have been selected, the algorithm checks each segment that can connect them. Guidance instructions are determined by processing the obtained direction, converting the length of each segment into a number of steps, and moving the position indicator from one segment to the next. The pedometer and magnetometer are used to acquire the number of steps taken and the direction indicated by the device as the user moves. The tests showed that the user’s height influences the system, number of steps, and sensor threshold value.
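The conversion of a path segment into a compass direction and a step count can be sketched as follows. This is an illustrative reconstruction, not the authors' algorithm: it assumes nodes given as (latitude, longitude) pairs, a local flat-Earth approximation that is adequate over building-scale distances, and a fixed step length of 0.7 m, which is a hypothetical value that in practice depends on the user's height.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def segment_instruction(node_a, node_b, step_length_m=0.7):
    """Return (bearing_deg, steps) for walking from node_a to node_b.

    Nodes are (latitude, longitude) in degrees. The bearing is measured
    clockwise from north; step_length_m is a hypothetical average stride.
    """
    lat1, lon1 = math.radians(node_a[0]), math.radians(node_a[1])
    lat2, lon2 = math.radians(node_b[0]), math.radians(node_b[1])
    # Local flat-Earth approximation of the north and east offsets in metres.
    north = (lat2 - lat1) * EARTH_RADIUS_M
    east = (lon2 - lon1) * math.cos((lat1 + lat2) / 2) * EARTH_RADIUS_M
    length = math.hypot(north, east)
    bearing = math.degrees(math.atan2(east, north)) % 360
    return bearing, round(length / step_length_m)


# Hypothetical nodes: a corridor node about 11 m north of a room node.
bearing, steps = segment_instruction((45.0, 9.0), (45.0001, 9.0))
print(f"Head {bearing:.0f} degrees and walk {steps} steps")
```

A route is then a sequence of such instructions, one per segment, with the pedometer count and magnetometer heading used to decide when to advance to the next one.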

Huang et al. [44] proposed an indoor navigation solution based on augmented reality with 3D spatial recognition, in which the characteristics of the entire space are collected, in contrast to solutions based on 2D objects. A route indicator for reaching the destination is displayed directly on the smartphone screen so that the user does not have to decide which route to take near intersections. Google ARCore was used to create the 3D AR models, and information from the smartphone’s IMU sensors was used to position them. Bluetooth Low Energy (BLE) beacons were installed at each intersection or point of interest (POI) within the areas to be traveled to obtain information on the current position. Upon receipt of a broadcast signal sent by a beacon, the smartphone, on which the positioning module based on received signal strength is installed, determines the new route to the next waypoint and highlights a route indicator on the screen. An RSSI (received signal strength indicator) distance model was provided for each beacon. The proposed solution was tested in large buildings and proved practical thanks to the directional antenna in the beacons, which regulates the transmission power and the width of the coverage range of the navigation areas. The accuracy obtained was in the range of 3–5 m.

Finally, Tadepalli et al. [45] developed an indoor navigation application with augmented reality. The application was developed in Unity using Google ARCore, and Blender was used to create interactive 3D applications and virtual reality. QR codes had to be installed at all possible destinations in the building, on the assumption that any location could be the starting point. Using QR codes, the navigation map identifies the user’s location and places a 3D object on the smartphone screen. These objects are arrows that indicate the direction to the next point. Once the user scans any QR code, the system determines the current location and asks the user to select the destination. The shortest route to the chosen destination is found using the A* algorithm, and directions are displayed on the user’s camera screen using augmented reality. Through Simultaneous Localization and Mapping (SLAM), the application builds or updates a map of the environment while keeping track of the user’s position within it in real time. The AR view shows the path to the destination and an indicator when the destination has been reached.
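For readers unfamiliar with A*, the following minimal sketch finds the shortest route between two waypoints of a small floor plan. It is a generic textbook version, not the cited implementation: the waypoint names, their coordinates in metres, and the connectivity are hypothetical, and the straight-line distance to the goal is used as the heuristic.

```python
import heapq
import math


def a_star(nodes, edges, start, goal):
    """Shortest path on a waypoint graph using A* with a straight-line heuristic.

    nodes -- dict: waypoint name -> (x, y) position in metres
    edges -- dict: waypoint name -> list of directly reachable waypoint names
    Returns (path, cost), or (None, inf) if the goal cannot be reached.
    """
    def dist(a, b):
        return math.dist(nodes[a], nodes[b])

    # Heap entries: (estimated total cost, cost so far, waypoint, path taken).
    open_heap = [(dist(start, goal), 0.0, start, [start])]
    best_cost = {start: 0.0}
    while open_heap:
        _, cost, current, path = heapq.heappop(open_heap)
        if current == goal:
            return path, cost
        if cost > best_cost.get(current, math.inf):
            continue  # stale entry: a cheaper way to this waypoint was found
        for nxt in edges.get(current, []):
            new_cost = cost + dist(current, nxt)
            if new_cost < best_cost.get(nxt, math.inf):
                best_cost[nxt] = new_cost
                estimate = new_cost + dist(nxt, goal)
                heapq.heappush(open_heap, (estimate, new_cost, nxt, path + [nxt]))
    return None, math.inf


# Hypothetical floor plan: QR-code locations as waypoints.
nodes = {"entrance": (0, 0), "hall": (4, 0), "corridor": (0, 5),
         "stairs": (4, 3), "office": (8, 3)}
edges = {"entrance": ["hall", "corridor"], "hall": ["entrance", "stairs"],
         "corridor": ["entrance", "stairs"],
         "stairs": ["hall", "corridor", "office"], "office": ["stairs"]}
print(a_star(nodes, edges, "entrance", "office"))
```

Because the straight-line distance never overestimates the remaining walking distance, the route returned is the shortest one in the graph.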

An interesting approach is the one proposed by Rubio-Sandoval et al. [

46], in which an indoor navigation system is based on the integration of Augmented Reality (AR) and Semantic Web technologies. The aim is to present navigation instructions and contextual information about the environment. Combining both technologies for indoor navigation would improve the user experience by linking the information available about indoor locations to navigation paths, the navigation environment, and points of interest.

An ontology that supports a knowledge base (KB) with the information of academic or administrative staff (associated with points of interest) has been defined and implemented.

The AR Foundation framework, offered by the Unity3D engine, was used to implement the positioning function. QR codes were used to store building and location information in the Unity3D coordinate system. The QR code must be scanned if the user’s location is lost (recalibration/repositioning) so that navigation is automatically resumed.

The model was tested in academic environments, modeling the structure, pathways, and locations of two buildings of independent institutions; based on the Likert scale, the result was moderate agreement. The accuracy obtained was 1.23 m.

The technical solution, developed by Pradeep Kumar et al. [

47], was an indoor navigation system that uses an environment tracking technology and augmented reality instructions. The authors developed the application using the ARCore SDK that provides an environment in which to build AR applications for the Android platform. The system uses a pre-scanned 3D map to track the environmental characteristics. The scanned environment that consists of visual features (3D point clouds) is stored as anchors. In a database, these anchors are associated with their corresponding locations and navigation-related information, superimposed on the visual feed during the navigation. The user’s current position and orientation are determined based on the anchors detected by the camera feed.

The route is then calculated and stored with directional instructions.

Visual arrows appear as information superimposed on the camera feed and shown to the user on the device’s display.

For the calculation of the shortest route, the authors used the A* algorithm.

SQLite was used to store routing information, and Firebase was used to store cloud anchors.
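As an illustration of how routing information might be kept in SQLite, the sketch below stores an ordered list of instructions per destination and reads it back. The table layout, column names, anchor identifiers, and instructions are all assumptions made for this example; the schema actually used by the authors is not described.

```python
import sqlite3


def create_route_store() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical route table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE route_steps (
            destination TEXT    NOT NULL,
            step_index  INTEGER NOT NULL,
            anchor_id   TEXT    NOT NULL,
            instruction TEXT    NOT NULL,
            PRIMARY KEY (destination, step_index)
        )
        """
    )
    return conn


def save_route(conn, destination, steps):
    """Store (anchor_id, instruction) pairs in walking order."""
    conn.executemany(
        "INSERT INTO route_steps VALUES (?, ?, ?, ?)",
        [(destination, i, anchor, text) for i, (anchor, text) in enumerate(steps)],
    )
    conn.commit()


def load_route(conn, destination):
    """Return the stored steps for a destination, ordered by step index."""
    return conn.execute(
        "SELECT anchor_id, instruction FROM route_steps "
        "WHERE destination = ? ORDER BY step_index",
        (destination,),
    ).fetchall()


conn = create_route_store()
save_route(conn, "library", [
    ("anchor-01", "Go straight"),
    ("anchor-02", "Turn left"),
    ("anchor-03", "Destination reached"),
])
for anchor_id, instruction in load_route(conn, "library"):
    print(anchor_id, instruction)
```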

A recent work was presented by Bhattacharyya et al. [

48], in which they developed an AR indoor navigation system using environment monitoring and investigated its usability. The system provides guided navigation to direct workers to specific workplaces, promoting their independence. In fact, the system provides information on activities, schedules and the locations of activities to allow them to carry out their tasks without depending heavily on supervisors or collaborators. To build the system, the authors used an environmental tracking approach, instead of image markers, offered by Vuforia SDK’s Area Targets and the Unity Game Engine. Area Targets allow for the precise tracking and enhancement of specific regions and environments by utilizing 3D scans to generate a detailed representation of the space. The camera detects the environment. The scans are augmented and mapped to the real world with good accuracy. The authors utilized the iPhone 14 Pro’s LIDAR scanner to capture the 3D scans and used the Area Target Creator app for the iPhone to generate the Area Targets. Localization is performed in relation to the scans. This solves the localization problem, as it is carried out directly by the system instead of the users who are constantly looking for image markers for their localization. Once the environment is tracked, a line renderer guides the user from their location to their destination.

The authors evaluated the perceived usability and workload (physical and mental) offered to users by the system with a small pilot study. The system achieved a System Usability Scale (SUS) score of 73.06, indicating acceptable usability. Participants also expressed some concerns about the user interface, but were impressed by the system’s ability to locate them.
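For readers unfamiliar with the SUS, the score is obtained from ten items rated from 1 to 5 using the standard scoring rule: odd-numbered items contribute the rating minus 1, even-numbered items contribute 5 minus the rating, and the sum is multiplied by 2.5. The sketch below applies that rule; the questionnaire responses are hypothetical and are not data from the cited study.

```python
def sus_score(responses):
    """Compute the SUS score (0-100) from ten ratings on a 1-5 scale."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly ten ratings between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1      # positively worded (odd) items
        else:
            total += 5 - rating      # negatively worded (even) items
    return total * 2.5


# Hypothetical answers of a single participant.
print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))
```

The score of a study is normally reported as the mean of the individual participants’ scores.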

Below is a comparative table (

Table 2) of the solutions analyzed.

Different platforms, infrastructures, and functionalities have been applied, but the standardization of a process for implementing these systems has yet to be achieved.

While addressing the same issue, the diversity of approaches used is evident. To highlight this aspect, the authors provide a summary of how they approached indoor navigation.

Mohd Fadhil Abuhan et al. developed the Alzheimer’s Real-Time Location System (ARTLS). This system allows all patients to be tracked in real time and their spatial movement to be analyzed to improve care management. With real-time tracking, caregivers can monitor patients’ locations and respond promptly to any needs.

Biswas M. et al. developed a mobile application (mHealth) that communicates with Bluetooth beacon sensors and provides step-by-step instructions to reach a specific destination. If a patient needs medical assistance, the system can report the situation to caregivers or doctors and help prevent situations of loss. It has been designed for complex facilities such as hospitals.

Ozdenizci B. et al. presented a user-friendly, low-cost indoor navigation system based on Near Field Communication (NFC) technology. By tapping on NFC tags placed at various points in the building, the app updates the user’s location and provides directions to the desired destination.

Pokale et al. tackled indoor navigation with a system based on beacons that, strategically placed inside a building, provide location information to users via dedicated apps. People with MCI can benefit from this technology to move independently within large buildings.

Parulian et al. proposed a solution based on mobile technologies (smartphones or tablets) that use accelerometers, compasses, barometers, magnetometers, and detailed maps of indoor environments to determine the user’s location and provide precise directions.

Huang et al. developed ARBIN (Augmented Reality Based Indoor Navigation System), an app that uses augmented reality (AR) to overlay digital information on top of the real world. It uses visual landmarks within the building to guide people.

Tadepalli et al. developed an app that uses augmented reality to display directions to the destination on the user’s camera screen. They have installed QR codes in all possible destinations within a building. Users need to scan a QR code to select a destination.

Rubio-Sandoval et al. developed a navigation system in which augmented reality is combined with semantic information to connect points of interest (such as rooms or objects) to information such as descriptions and instructions. These help users to better understand the environment and the instructions.

Pradeep Kumar et al. developed the application using the ARCore SDK, providing an environment to build AR applications on the Android platform. The system uses a pre-scanned 3D map to track the environmental characteristics. The scanned environment that consists of visual features (3D point clouds) is stored as anchors. In a database, these anchors are associated with their corresponding locations and navigation-related information, superimposed on the visual feed during the navigation.

Bhattacharyya et al. developed an augmented reality application based on environment tracking rather than image markers. The user can frame the route with the camera and receive directions on how to reach a point of interest (PoI).

Bibbò et al. developed an indoor navigation application using augmented reality and predefined routes, which can be selected from a menu on the smartphone and are displayed superimposed on the real environment.

Surprisingly, no author has addressed the issue of acceptability and usability, with the exception of Bhattacharyya et al.

5. Materials and Methods

Despite the enormous developments in digital technology, the problem of localization and indoor navigation within healthcare systems still needs to be solved in technical and economic aspects [

50]. The solutions adopted refer to sensors and additional hardware to acquire environmental information and use them for the localization of subjects. With the generation of new smartphones and with the help of augmented reality, localization and navigation can be facilitated by assisting the user in reaching the desired destination. The augmented reality system has features that make it preferred in applications such as assisted driving, pedestrian driving, and indoor navigation. It reduces navigation errors, enriches navigation with contextual information, and creates realistic and interactive conditions.

There are two indoor navigation technologies based on AR: marker-based and markerless. Marker-based systems use target images to indicate things in a specific space. These indicators determine where the AR application places digital 3D content within the user’s field of view to activate augmented reality.

Conversely, the markerless method uses a real-world object as a marker. Tracking combines the object’s natural characteristics with the target object’s texture.

The ARKit and ARCore SDKs have made AR navigation available on smart devices.

ARKit and ARCore are software development kits (SDKs): the first was released by Apple for iPhone and iPad applications starting from iOS 11, and the second by Google [

51]. They allow developers to create augmented reality apps tailored to currently available smartphones. Both interact with the components of the smartphone (camera and motion sensors) to provide the movement data of the device in space, using a technology called Visual Inertial Odometry (VIO), and to insert virtual objects into the scene. ARKit is preferred by developers because it is considered more reliable, as it runs on hardware and software produced directly by Apple. For ARCore, the variety of hardware and software found on Android smartphones makes it difficult for Google to provide a single reliable platform for every device. In addition, the presence of the LiDAR sensor in the iPhone makes navigation easier by taking better advantage of depth detection. During environment detection, characteristic points in the scene are captured and then used to detect device movement through image analysis. Points are placed on the floor to create a map of the space, which is used to determine whether the user and a given point are in the same position.

The proposed solution, which requires neither wireless technology nor hardware installation, is an indoor navigation system based on augmented reality (AR) that reduces the time needed to find a particular place inside an apartment. Using an annotated map, the system routes the user and involves them during navigation, reducing their cognitive load. The solution incorporates AR technology and shows the directions on the screen, superimposed on the real environment seen through the camera of a mobile device, such as a smartphone or tablet. In this way, the user can easily navigate environments without consulting a map or other references.

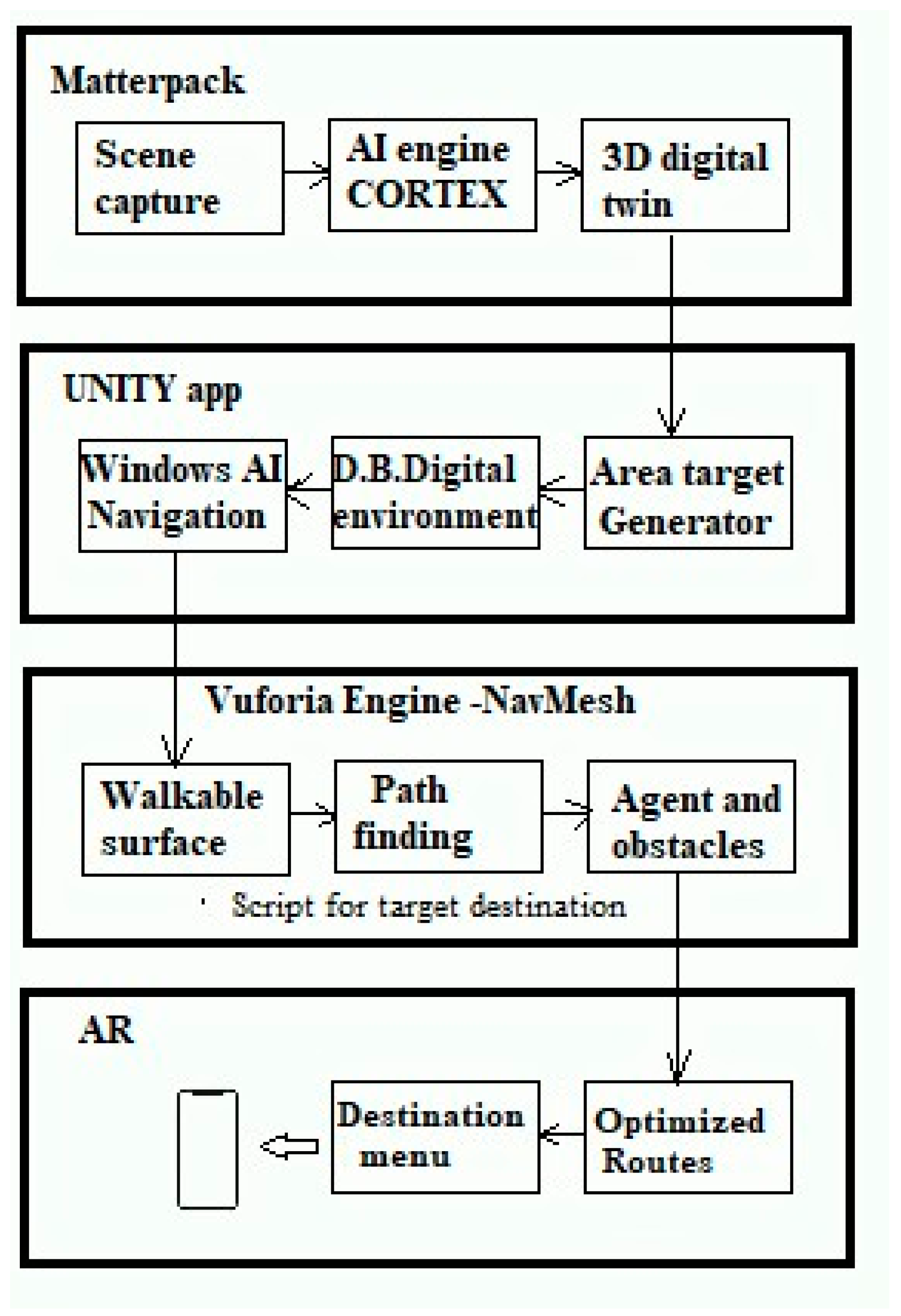

For the realization of the system, different technological environments have been used, such as:

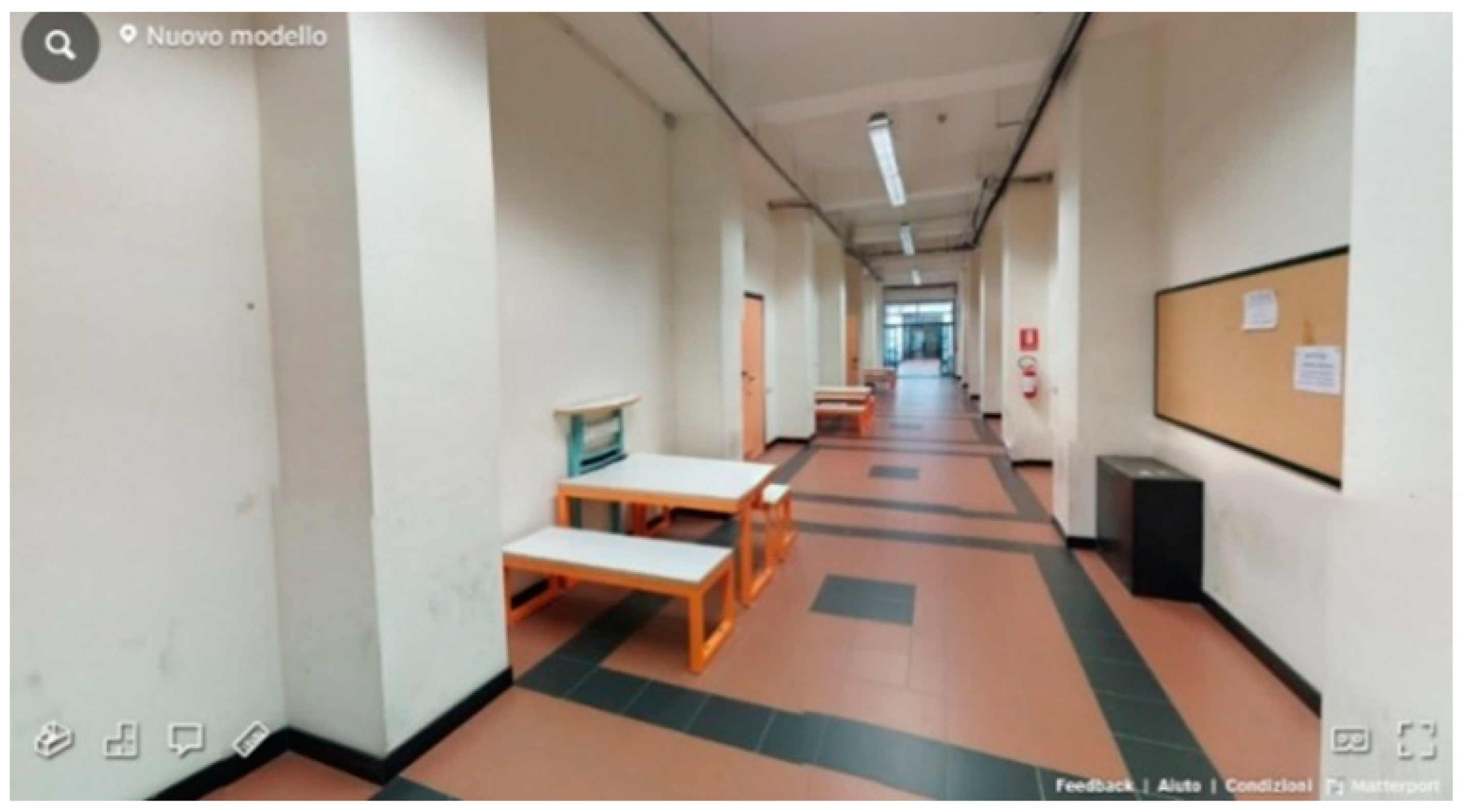

The Matterport Capture software (version 2.31.3) is the module that allows users to generate and customize an immersive digital twin environment. It can make a 3D capture of any space, floor, or building using a smartphone camera. The AI engine, called Cortex, a deep learning neural network, accurately predicts 3D building geometry using a smartphone camera. Its functional characteristics are high resolution, accuracy, quality, and acquisition speed. It also has several features to improve the quality of the scanned model.

It is used as the first step of the development.

Unity is a platform that allows users to develop 2D and 3D games and experiences. The engine offers a primary scripting API in C# and is used to develop virtual reality, augmented reality, and simulation models. During app development, the digital model is imported into it to create the navigation module.

Vuforia is a platform that allows for the development of augmented reality applications for mobile devices. It applies computer vision technology to recognize and track 3D objects and planar images in real time. It can position and orient virtual objects with respect to real-world objects when viewed through a mobile device’s camera. The virtual object tracks the position and orientation of the image in real time so that the observer’s perspective of the object matches the perspective of the target. In this way, the virtual object becomes part of the real world. With a specific tool called Area Target Generator (ATG), it is possible to transform a physical space scanned in 3D into a digital model. Vuforia and Unity can coexist on a single platform.

It is used in the third step to create the path to follow.

Figure 3 shows the flow chart of the application development phases with indications of the relevant modules used. The Development Environment section contains detailed guidelines for installing the modules and a description of each module’s design to facilitate its use for those wishing to repeat the experience.

The smartphone used was Xiaomi’s Redmi Note 11 Pro 5G model. This smartphone is equipped with a large 6.7-inch screen with Super AMOLED technology, a resolution of 2400 × 1080 pixels, and a refresh rate of 120 Hz. It features a Qualcomm Snapdragon 695 5G system chip, accompanied by an Adreno 619 GPU and 6 GB of RAM. The internal memory is 64 GB, expandable via microSDXC up to 1024 GB. The device uses the Android 11 operating system. It has three rear cameras: the main 108 MP camera, a second 8 MP ultra-wide camera, and a third 2 MP macro camera. Video recording is possible up to 3840 × 2160 (4K UHD) (30 fps). The front camera, on the other hand, is 16 MP.

Connectivity options include Bluetooth 5.1, dual-band Wi-Fi, reversible USB Type-C, and location via GPS, A-GPS, Glonass, Galileo, BeiDou, Cell ID, and Wi-Fi location. Other sensors include accelerometer, gyroscope, and compass. Finally, the device is equipped with NFC and infrared.

Figure 4 shows the components of the application.

7. Results

The algorithm used in NavMesh for calculating the route is based on the Dijkstra algorithm [

52] and on the Artificial Intelligence with which the optimal route is determined, avoiding obstacles. The algorithm works on a graph of connected nodes, starting from the node closest to the beginning of the path and following the connected nodes until the destination is reached. Since the navigation mesh is represented by a mesh of polygons, a point placed on a polygon vertex represents the position of each node, and the algorithm calculates the shortest path between the selected nodes.
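The graph search described above can be sketched with a plain Dijkstra implementation. This is an illustrative example rather than Unity’s internal NavMesh code: the node names stand in for navigation-mesh polygons, and the edge weights are hypothetical walking distances in metres.

```python
import heapq


def dijkstra(graph, start, goal):
    """Shortest path on a weighted graph.

    graph: node -> {neighbour: non-negative edge cost}.
    Returns (path, cost), or (None, inf) if the goal is unreachable.
    """
    dist = {start: 0.0}
    prev = {}
    visited = set()
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # already settled with a shorter distance
        visited.add(node)
        if node == goal:
            break
        for nxt, weight in graph.get(node, {}).items():
            new_dist = d + weight
            if new_dist < dist.get(nxt, float("inf")):
                dist[nxt] = new_dist
                prev[nxt] = node
                heapq.heappush(heap, (new_dist, nxt))
    if goal not in dist:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1], dist[goal]


# Hypothetical apartment graph: nodes stand in for navmesh polygons.
apartment = {
    "corridor_start": {"corridor_mid": 2.0, "kitchen": 5.0},
    "corridor_mid": {"corridor_start": 2.0, "kitchen": 1.5, "living_room": 3.0},
    "kitchen": {"corridor_start": 5.0, "corridor_mid": 1.5, "living_room": 4.0},
    "living_room": {"corridor_mid": 3.0, "kitchen": 4.0},
}
route, length = dijkstra(apartment, "corridor_start", "living_room")
print(route, length)
```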

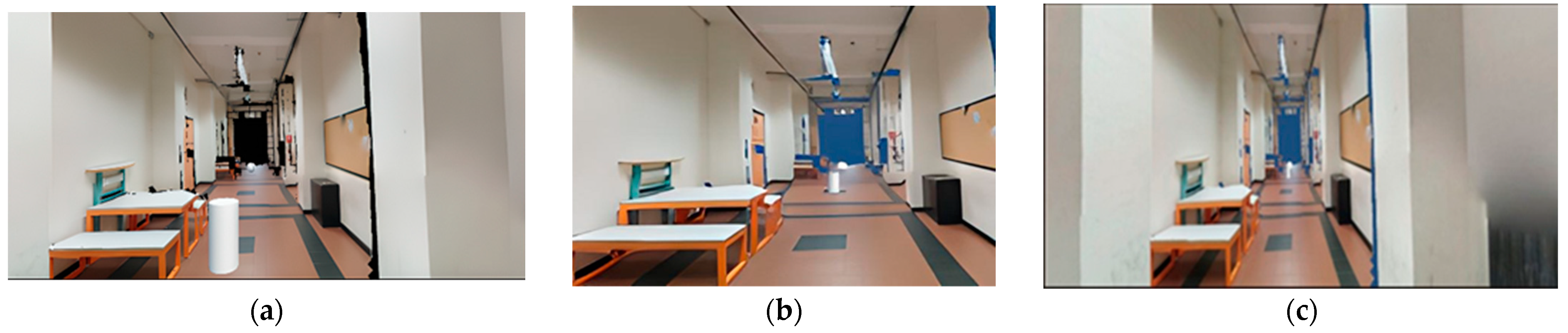

In the project, the route is applied from a starting point to the destination point. The system determines the route, and the user, on starting the application, sees on their smartphone a simulation of the path they must take to reach the target destination. Three preloaded paths have been defined in which, through scripts, the chosen targets are set and represented by spheres in the simulated path. Once the point of arrival is known, the app simulates the animated route to be taken from the point of departure to the point of arrival. The images below show the different moments of the route. The path’s starting point is the beginning of the corridor, where a cylinder representing the user appears. The point of arrival is the living room, selected from the menu, which is located at the end of the corridor and represented by a sphere.

Figure 11a represents the initial instant;

Figure 11b shows an intermediate moment of the path;

Figure 11c, instead, highlights the achievement of the desired destination.

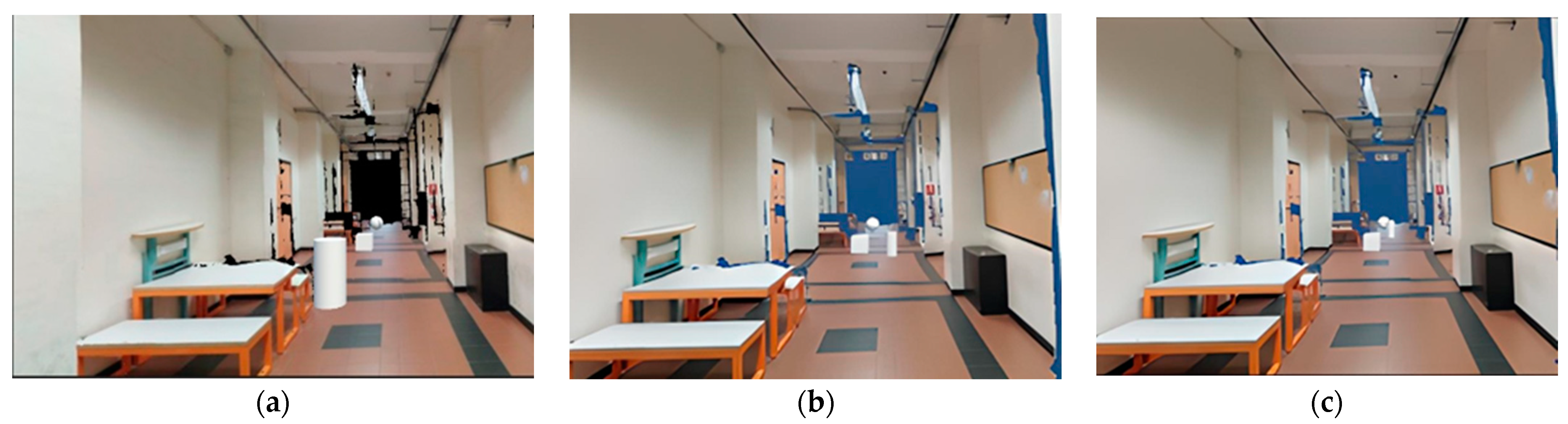

The system allows the user to check for any obstacles on the navigation path. In the following images, the obstacle is represented by a box, but it can be any obstacle. For example, if the obstacle completely blocks the path, the system can provide a path that can bypass it or find an alternative route. The figures below show the three different variations of the route in the presence of obstacles.

Figure 12a represents the initial instant;

Figure 12b shows an intermediate variation of the path, in which the agent deviates from its original direction to pass by the obstacle, represented by the cube, and then resumes its normal course;

Figure 12c, instead, highlights the achievement of the desired destination.
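The rerouting behaviour shown in these figures can be illustrated with a simplified stand-in: a breadth-first search on a small occupancy grid, where `#` marks a cell blocked by an obstacle. The grid layout and coordinates are assumptions for this sketch; the application itself relies on the navigation mesh rather than on a grid.

```python
from collections import deque


def shortest_grid_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid.

    grid: list of equal-length strings, '#' = blocked cell, '.' = free cell.
    Returns the list of (row, col) cells from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # the obstacle leaves no free route


free_corridor = [".....", ".....", "....."]
with_box = [".....", "..#..", "....."]       # a box in the middle of the corridor
fully_blocked = ["..#..", "..#..", "..#.."]  # the corridor is closed off

start, goal = (1, 0), (1, 4)
print(len(shortest_grid_path(free_corridor, start, goal)) - 1, "steps without obstacle")
print(len(shortest_grid_path(with_box, start, goal)) - 1, "steps around the box")
print(shortest_grid_path(fully_blocked, start, goal))
```

When the obstacle only partially blocks the corridor, the search returns a slightly longer route that passes beside it; when no free cell connects start and goal, no route is returned and an alternative destination or path must be offered.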

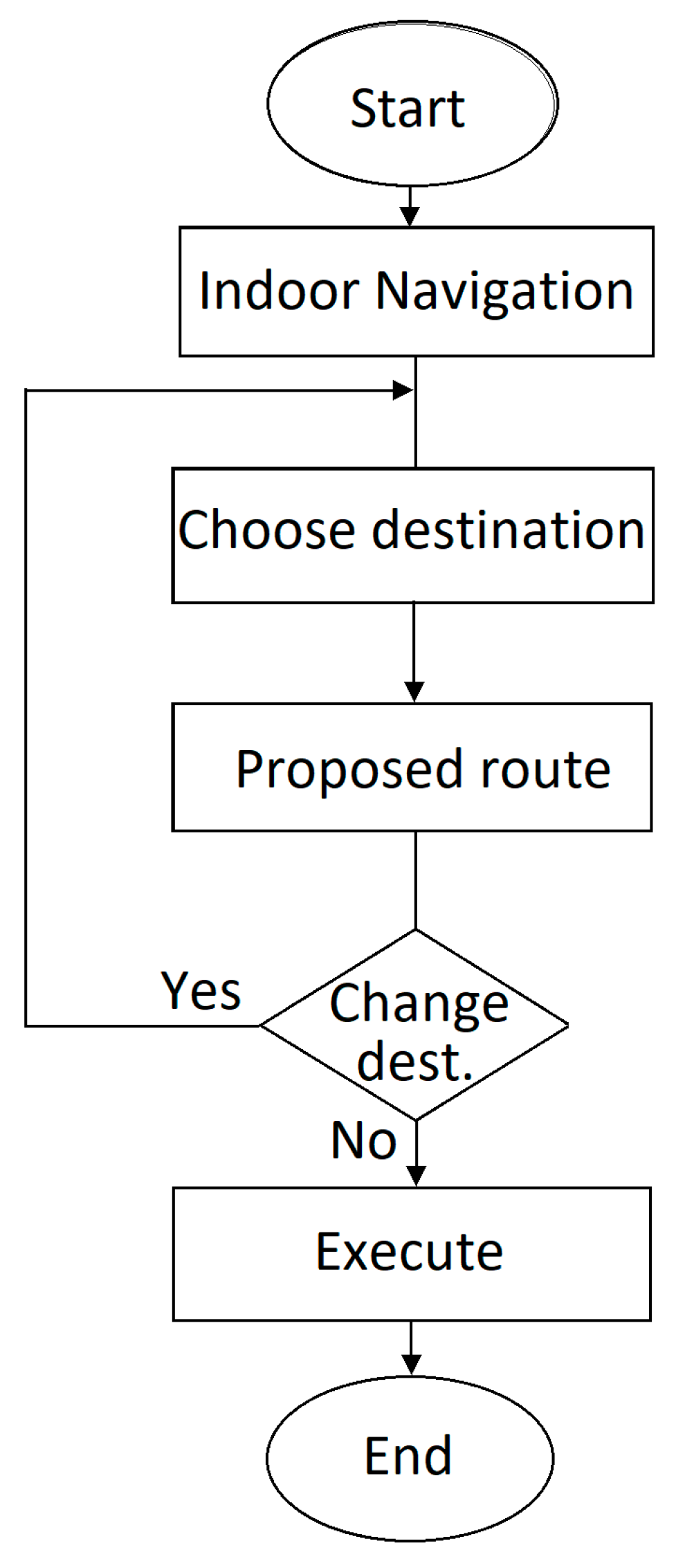

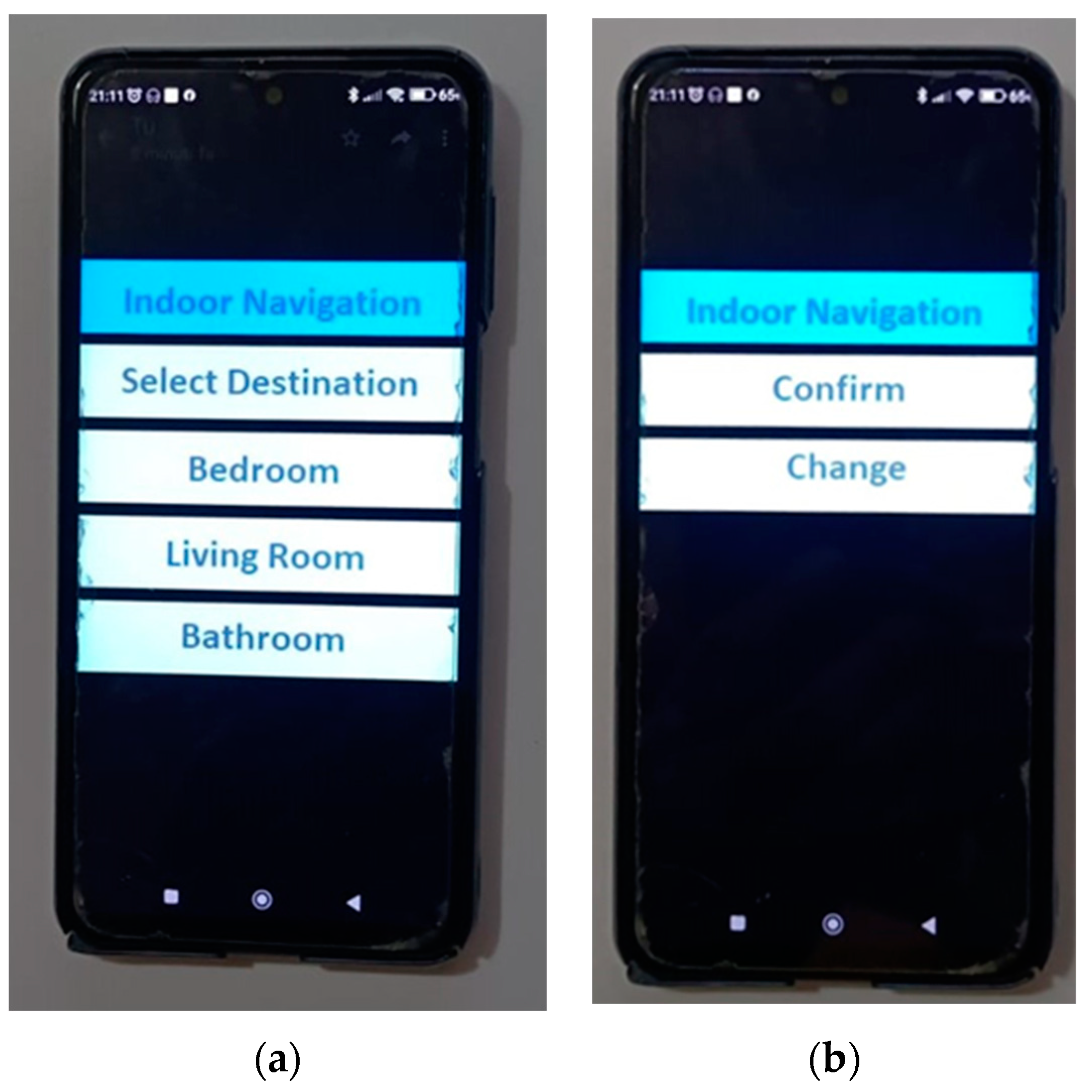

The application, created with the Android Build Support module of the Unity Editor, was exported and installed on the smartphone as an APK. APK files are simply ZIP archives named with an .apk extension instead of the traditional .zip. From a functional point of view, the app is activated through the menu shown in

Figure 13.
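The remark that an APK is a ZIP archive can be checked with a few lines of Python. The sketch below builds a tiny stand-in archive in memory (it is not a real, installable APK, and its contents are placeholders) and verifies the ZIP signature and the presence of `AndroidManifest.xml`, which every APK contains.

```python
import io
import zipfile


def looks_like_apk(data: bytes) -> bool:
    """Return True if the bytes are a ZIP archive containing AndroidManifest.xml."""
    if not zipfile.is_zipfile(io.BytesIO(data)):
        return False
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        return "AndroidManifest.xml" in archive.namelist()


# Build a minimal stand-in archive in memory (placeholder contents only).
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("AndroidManifest.xml", "<manifest/>")
    archive.writestr("classes.dex", b"")
fake_apk = buffer.getvalue()

print(fake_apk[:2])              # ZIP files start with the signature bytes "PK"
print(looks_like_apk(fake_apk))
```

The same check can be applied to an exported build by reading the file’s bytes and passing them to `looks_like_apk`.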

The destinations within the structure are displayed when accessing the application, and the user can choose their preferred destination. By selecting the destination, assistance is provided by visualizing the animated route to direct the user to the destination. If the user wishes to change their destination, they can do so by returning to the main menu, and the list of destinations will be displayed again, from which they can choose and start the navigation.

The application does not allow the user to interactively choose a destination other than those provided. Having found that people with AD, living in nursing homes, have difficulty finding their own room, bathroom, and dining room [

53], which are essential to meet their sleep, food and socialization needs, our idea focused on a simple wayfinding solution dedicated to these destinations.

Tests have been carried out to ensure the system works properly and provides users with timely information. The tests were carried out under the following conditions:

Positioning the user in different locations within the environment.

Verification of different selected routes.

Repeating the routes numerous times.

Verifying the correctness and repeatability of the results provided.

Test of paths with obstacles.

Evaluation of the operation of the system under these conditions.

Evaluation of the system’s response time to the choices made.

Verification of the quality of guidance to the desired destination.

Measurement of the time taken to reach the desired destination.

Collecting user feedback.

The application was tested in the University of Reggio Calabria laboratories with seven staff members. They replicated the search for the intended targets in the model 20 times. The destinations were successfully reached, with an error of 2%. The results obtained regarding precision align with those found in the literature. The model’s error in determining the final destination was on the order of ten centimeters.

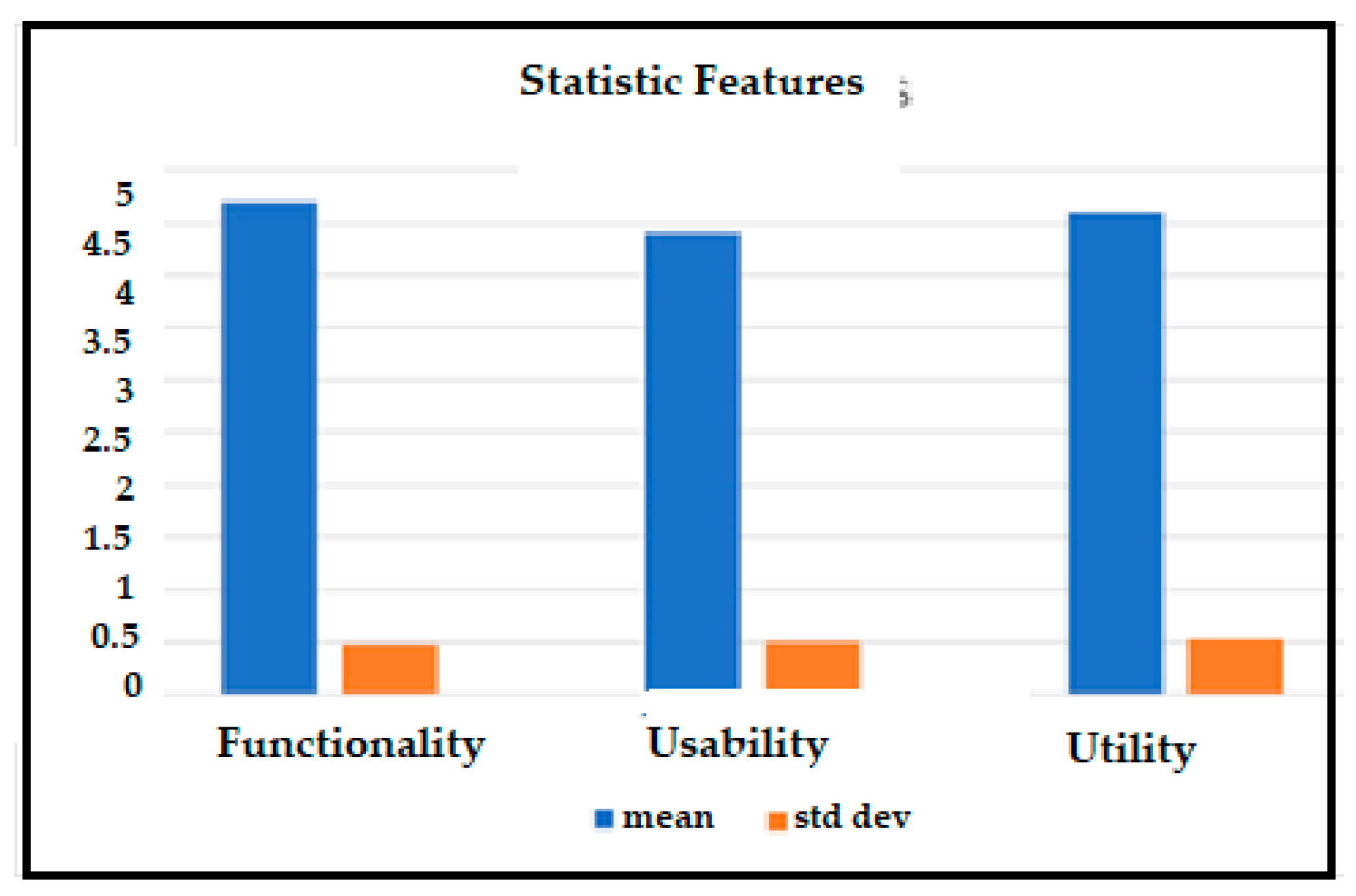

At the same time as the validity and accuracy test, the use of the model was assessed against a set of evaluation parameters. In particular, the testers were asked to give a grade from 1 to 5 on the following parameters:

Functionality: this parameter represents the speed of the model’s responses during use.

Usability: the ease of use of the model.

Utility: this parameter evaluates the ability of the model to make the user independent.

The scale used in

Table 3 is a rating scale in which the values shown take on this meaning:

| Value | Description |
|-------|-------------|
| 1 | Unsatisfactory |
| 2 | Below average |
| 3 | Average |
| 4 | Above average |
| 5 | Excellent |

Unsatisfactory: this is the lowest score and indicates that the evaluated item did not meet expectations.

Below average: this score indicates that the evaluated item only partially met expectations.

Average: this score indicates that the item being assessed met expectations adequately.

Above average: this score indicates that the evaluated item exceeded expectations.

Excellent: this is the highest score and indicates that the evaluated item significantly exceeded expectations.

The evaluations obtained are shown in

Table 3.

Figure 14 shows the values of the mean and standard deviation of the three parameters examined.
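The mean and standard deviation of such ratings can be computed as in the following sketch. The ratings listed here are hypothetical placeholders, not the values reported in Table 3, and the sample standard deviation is assumed because the text does not state which variant was used.

```python
from statistics import mean, stdev


def summarize(ratings):
    """Return {parameter: (mean, sample standard deviation)} for 1-5 ratings."""
    return {name: (mean(values), stdev(values)) for name, values in ratings.items()}


# Hypothetical grades from seven evaluators (placeholders, not the study data).
example_ratings = {
    "Functionality": [4, 5, 4, 4, 5, 4, 5],
    "Usability": [5, 5, 4, 5, 4, 5, 5],
    "Utility": [4, 4, 5, 4, 4, 5, 4],
}
for name, (average, deviation) in summarize(example_ratings).items():
    print(f"{name}: mean = {average:.2f}, standard deviation = {deviation:.2f}")
```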

The experience was then replicated at the University of Jaipur (India), confirming the results obtained.

In light of the results, the model appeared effective and easy to use, with a simple user interface. The current limitation of the study is that the solution has been used only in a laboratory environment and with healthy subjects. We intend to extend the application to real-world environments and to people with MCI to attest to the validity of the model, which is currently considered a potential tool.

This activity has been postponed to a second phase as it requires a careful and methodical approach that involves a long time for the definition and articulation of some activities, such as:

- The prior approval of the Ethics Committee.
- Identification of MCI patients and caregivers available for testing.
- Obtaining their written consent.
- Identification of specialists in the field.
- Definition of diagnostic criteria: according to the DSM-V (Diagnostic and Statistical Manual of Mental Disorders), the diagnostic criteria for MCI include evidence of modest cognitive impairment in one or more cognitive domains.
- Neuropsychological assessment tests for the determination of their cognitive abilities.
- Preparation of a protocol.
- Appropriate training for patients and their caregivers.
- The test.
- Acquisition and processing of results. Feedback on ratings regarding the interface, ease of use, and hassle-free navigation.
- Revision of the model according to the feedback obtained.
- Monitoring of the application’s use to verify the effectiveness of the navigation model.

A further limitation is the size of the navigation area. With future implementation, it will be extended to multi-story buildings.

8. Discussion

The goal of this work is not to test the application through a statistical analysis of the orientation skills of MCI patients in terms of speed of reaching the destination or errors of orientation in undertaking the path, nor to evaluate the anxiety that may arise in some patients. Such a test must be carried out within a nursing home with the help of specialists in the sector. Instead, the potential of AR in addressing the problem of indoor navigation by applying tools usually used in video games was verified. No such experiences were found in the literature.

The methodological approach followed was first to examine the problems that afflict older adults with MCI. It emerged that being able to move with complete autonomy, relieving the burden on caregivers, is one of the most keenly felt problems. The characteristics that an indoor navigation system must possess and the technologies that can support it were then analyzed. Experiences in the field were explored to obtain a broad view of how research has tackled the problem. The literature offers little evidence of navigation systems designed to support patients with MCI.

In this section, the implications associated with existing applications are highlighted. Several indoor navigation solutions for different application sectors were identified during the research. The currently available systems use different technologies, infrastructures, and functionalities and manage the contextual information of places in proprietary formats. Instead, it would be desirable to use a platform-independent model, allowing for the standardized management of indoor navigation systems. Regardless of the model, the researchers used different technologies to detect the user’s location and movement, such as GPS, beacons, sensor chips, Wi-Fi, Bluetooth, or fingerprinting. In addition, indoor routes must be mapped and stored in a database to be used as a reference for adjusting the user’s position. In other cases, the authors used computer vision technologies or physical references such as QR codes to detect non-progressive locations. Each technique has its advantages and disadvantages. GPS, a standard for outdoor navigation, cannot be used for indoor localization, and indoor navigation systems are commonly prone to errors due to the difficulty of accurately representing the multi-level structures of buildings on a navigation map. While reading markers ensures accurate positioning, it also involves constant user interaction. With visual elements, such as labels to indicate rooms and directional arrows to help orientation, guidance is effective only if these indicators are easily spotted. Machine vision-based systems are independent of external sensors but have high power consumption.

Bluetooth technology is inexpensive but has limited range and accuracy. Bluetooth hotspots must be set up throughout the building for effective location tracking and navigation. The same goes for other technologies like Wi-Fi, RFID, and sensor chips. The cost of implementation and maintenance is not negligible for any of these methods; a change in weather conditions can affect the signal strength, and a failed device interrupts the coverage of its hotspot. These systems require additional infrastructure for their proper functioning. The analysis showed that AR navigation systems use an overlay approach to present users with information about the environment via handheld devices or head-mounted displays (HMDs). These systems are based on three technological components. The first is spatial mapping, which allows the users’ positions to be tracked and produces a detailed 3D representation of the environment within the system used. The second component is the path generation system: Dijkstra or A* determines an optimal route from the user’s current location to the intended destination. The third component is the display of navigation aids: visual or auditory cues, or a combination of maps, turn signals, and points of interest (POIs), may be presented. An essential element to highlight is the ability of the systems to perform complex and accurate calculations to determine routing paths before starting navigation. In addition, users need general map-reading skills and an understanding of how the systems work; these aspects should be addressed as a priority to make the model simple and user-friendly. The state-of-the-art analysis showed that there is still no standard solution for indoor navigation, nor a methodological approach for its development. However, it has allowed us to identify augmented reality and the use of smartphones as the technologies to be examined when designing a navigation system.

The model, designed to provide a guided path within a predefined choice, has the following characteristics: good accuracy, low cost, and a simple user interface that requires no cognitive effort. The guiding principle was to use the potential of video game design tools to simulate paths within the premises. Its realization requires a mid-to-high-end smartphone, without additional hardware or wearable sensors that would incur additional installation and maintenance costs. The choice of the smartphone as the core element of the application favors the acceptance of the technological tool by older people. Although it is a widely used device, its use needs to be improved through the digital literacy of older people, with practical interventions and the help of clinicians. In addition, equipping smartphones with easy-to-use interfaces helps persuade older people to use them as cognitive support. Research suggests that the smartphone is among the tools to be used to cope with cognitive and memory problems [

54]. However, its use is controversial. Some researchers [

55] believe that the electromagnetic waves produced by mobile phones may slow cognitive impairment by reducing beta-amyloid deposits in the brain through an antiplatelet action and an increase in temperature in the brain during use. Others [

56], on the contrary, believe that electromagnetic waves activate voltage-gated calcium channels (VGCCs), producing rapid increases in intracellular calcium levels. This phenomenon would lead to an earlier onset of the disease.

The scientific community has yet to determine a precise position on the effects of electromagnetic waves.

In developing care tools for patients with MCI, the research is geared towards developing IoT-based devices aimed at reducing the burden on healthcare professionals and improving care services [

57]. Integrating IoT-based wearables with AI applications will improve the efficiency of these devices and contribute significantly to the reduction in home care costs. On the other hand, more research needs to be carried out on the specific applications of indoor navigation for patients with MCI.

While based on the interaction of the natural environment with virtual objects, the proposed solution differs from the others using the same technology in that the model does not use arrows, QR codes, or labels to identify the desired destination. Nor does the user have to interact with a 3D map on which to select the destination and receive responses, which would require cognitive effort. The route is not plotted based on points of interest or visual indicators. The usual routes are predefined and placed in a choice menu. The menu is simple and essential and lets the user select one of the three options. Depending on the choice, the animated route appears on the screen at the touch of a button, superimposed on the preloaded map. The interface is such that it does not require any cognitive effort. The application allows the user to reach the chosen destination with an error of a few centimeters.

Other applications also provide indoor navigation solutions, but these are actually solutions within integrated patient care systems. They are characterized by application and operational complexities and require specific hardware. Obviously, these elements increase costs.

On the other hand, our solution, which was developed to provide only a guided path as part of a predefined choice, is easy to use, reasonably well accepted, and low in cost.

9. Conclusions

In this paper, the authors showed how, with the help of a smartphone and AR technology, an application developed and tested in scientific laboratories could be extended to real environments and in particular help MCI patients to face, among the many problems that afflict them, the difficulty of moving around in environments. The patient can select, on the smartphone, the desired location within the structure, and the application then displays, using augmented reality, the path ahead. We will consider, in the future, experimenting with a study protocol on the impact of the proposed solution on the ability to find the desired way within a residential home for the elderly, with the full involvement of caregivers. This phase requires the implementation of the activities highlighted in the Results section.

It is believed that with the experimentation in a nursing home, it will be possible to verify how the patient reacts with the system concerning:

- The ability to follow directions.
- Using the system autonomously.
- The presence of moments of confusion or bewilderment.
- Any benefits brought by the application.

This phase will have to be followed by feedback and adaptation. Based on the feedback from patients and caregivers, the system will be adapted according to the needs that have emerged and the analyzed capacities of the patients.

Adding voice systems and other ways to improve usability may also be evaluated.

Once the validity of the hypothesized interventions has been ascertained, the extension of the application in clinical contexts can be evaluated.

It will also be useful to have a follow-up to improve the performance of the application. Patients with MCI need continuous supervision, which forces the caregiver into stressful work rhythms that they cannot step away from, because the patient could move inside the home or care home without a predefined destination, get lost, or even leave the home and incur some danger. An assisted indoor navigation system helps the patient by improving their quality of life and relieves the caregivers’ workload. Finally, augmented reality technology emerged as a powerful tool to support patients in moving safely and autonomously indoors. It is important to note that the use of AR in navigation is heavily dependent on advances in the field of augmented reality. The evolution of navigation technology will make it increasingly precise and readily accepted by users in the future.

With this in mind, a prototype based on using a smartphone with an easy-to-use interface was suggested, representing a solution that overcomes typical patient resistance and reduces healthcare costs. It can contribute, among other things, to improving comfort, functionality, and quality of life.

The proposed solution is suitable for small navigation areas. In the future, we plan to extend the system to an entire multi-story building and, in addition, to add audio assistance to help the patient in difficult or even dangerous situations.