1. Introduction

Ensuring driver readiness and fitness to operate a vehicle is a cornerstone of modern road safety. Mental states such as fatigue, alcohol-induced impairment, or cognitive overload significantly compromise reaction times, decision-making accuracy, and attention span, all of which are critical for safe driving. Traditional assessment techniques rely heavily on breathalyzers, blood testing, or observational screening, which are often intrusive, retrospective, and impractical in proactive or simulated environments. This gap calls for novel digital tools that can rapidly and objectively evaluate a driver’s cognitive and motor performance in real time, particularly in research and educational settings that use simulation-based testing.

The relationship between mental impairment and driving performance has long been a focus of traffic safety research. Numerous studies have demonstrated that cognitive fatigue, alcohol intoxication, drug use, and neurological conditions such as concussion significantly impair a driver’s ability to operate a vehicle safely [1,2]. In a driving simulator study, researchers found that increasing blood alcohol concentration (BAC) from 0.00% to 0.09% led to higher average speed, greater speed variability, and greater lane-position variability [3]. Even at 0.03% BAC, participants reported reduced vigilance and impaired judgment, and accident rates rose from 1.5% when sober to 8.7% at 0.09% BAC. A systematic review likewise found that even moderate BACs slow reaction times, reduce contrast sensitivity and divided attention, and increase speed and lane deviations, leading to more errors and crashes [4]. While habitual drinkers may develop some tolerance, they still experience performance declines. Traditional detection methods, such as field sobriety tests, reaction-time hardware, or clinical neuropsychological assessments, often lack scalability, objectivity, or applicability outside controlled environments.

To address these limitations, several mobile or tablet-based tools have emerged that assess mental impairment through short, repeatable cognitive-motor tasks. Among them, the Druid App (developed by Impairment Science, Inc.) has gained recognition for its ability to detect a wide range of impairments, including those caused by alcohol, cannabis, fatigue, and concussion [5,6]. Druid employs a series of divided-attention and balance tasks and produces an impairment score that can be compared with an individual’s unimpaired baseline. Validation studies conducted at Johns Hopkins University confirmed Druid’s sensitivity and reliability, showing that it outperformed common field sobriety tests in detecting cannabis-related cognitive impairment [7].

Additional research has supported the use of app-based testing to evaluate workplace readiness. A notable case study involved public safety officers using Druid before and after shifts under different scheduling regimes and revealed strong correlations between cognitive test scores and fatigue levels [8]. These findings suggest that lightweight, smartphone-compatible cognitive testing can serve as an effective proxy for broader mental state assessments.

However, despite their promise, existing tools such as Druid are not tailored for pre-simulation use, nor do they typically support real-time integration with simulation platforms or administrative dashboards for group-based studies. Moreover, baseline calibration requirements and single-user workflows can limit their use in larger research environments where bulk data collection, participant management, and visualization are critical.

To fill this gap, we developed the Driver Status Test App (DSTA), which builds on the scientific foundation laid by tools such as Druid while expanding functionality to support simulator integration, group testing, and customizable test design. By embedding both cognitive screening and data management within a single system, DSTA aims to make mental state evaluation practical and scalable for researchers, educators, and policy analysts focused on human factors in driving. DSTA combines a mobile testing interface with a web-based administrative dashboard, enabling researchers to collect, analyze, and export test data in real time. This paper presents the technical structure and workflow of the DSTA system and describes the cognitive and motor tasks implemented for impairment detection.

2. Materials and Methods

The DSTA is a two-part software system developed to evaluate driver cognitive states through structured testing and data analysis. The system comprises a mobile application for test administration and data collection, and a web application for data visualization and export. The modular architecture and real-time backend integration facilitate scalable and reliable cognitive state assessments in experimental and simulation environments.

2.1. Mobile Application

The mobile application is built using React Native (version 0.72.6) and Expo (~49.0.15) and implemented in TypeScript. It uses NativeWind for styling and is integrated with Firebase for backend services including authentication and data storage. The routing and screen navigation are managed via Expo Router (v2.0.0).

The structure of the application is organized into:

app/: Primary routing folder, containing screens for group management, participant management, and test modules.

components/: Reusable UI components such as headers, introductory screens, and completion screens.

firebase/: Contains service modules for handling backend operations such as group, participant, and test result management.

config/: Holds the configuration file testConfig.ts, which defines parameters for test modules including screen layout, timing, and constants.
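The paper does not list the contents of testConfig.ts. As a rough sketch only, a typed configuration module of this kind might look as follows; every field name and value is a hypothetical placeholder except the 10 s balance duration, which is taken from the Results section.

```typescript
// Hypothetical sketch of config/testConfig.ts. Field names and values are
// illustrative assumptions; only the 10 s balance duration comes from the paper.

export interface TrackingConfig {
  durationMs: number;       // how long the moving stimulus is shown
  stimulusRadiusPx: number; // size of the target the finger must follow
  distractorCount: number;  // distractors the participant must count
}

export interface PrecisionConfig {
  targetCount: number;    // number of randomly placed targets
  targetRadiusPx: number; // target size; precision is scored against the center
}

export interface BalanceConfig {
  durationMs: number;       // fixed single-leg stance duration
  sampleIntervalMs: number; // requested inertial sensor sampling interval
}

export interface ChoiceReactionConfig {
  trialsPerPhase: number;    // trials in each of the two phases
  responseTimeoutMs: number; // a trial with no response counts as a miss
  shapes: readonly string[]; // stimulus shapes used by the response rules
}

export interface TestConfig {
  tracking: TrackingConfig;
  precision: PrecisionConfig;
  balance: BalanceConfig;
  choiceReaction: ChoiceReactionConfig;
}

export const testConfig: TestConfig = {
  tracking: { durationMs: 30_000, stimulusRadiusPx: 24, distractorCount: 5 },
  precision: { targetCount: 20, targetRadiusPx: 30 },
  balance: { durationMs: 10_000, sampleIntervalMs: 20 },
  choiceReaction: { trialsPerPhase: 20, responseTimeoutMs: 2_000, shapes: ["circle", "square"] },
};
```

Keeping all timing and layout constants in one typed object lets each test screen read its parameters from a single place, which is consistent with the role the paper assigns to testConfig.ts.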

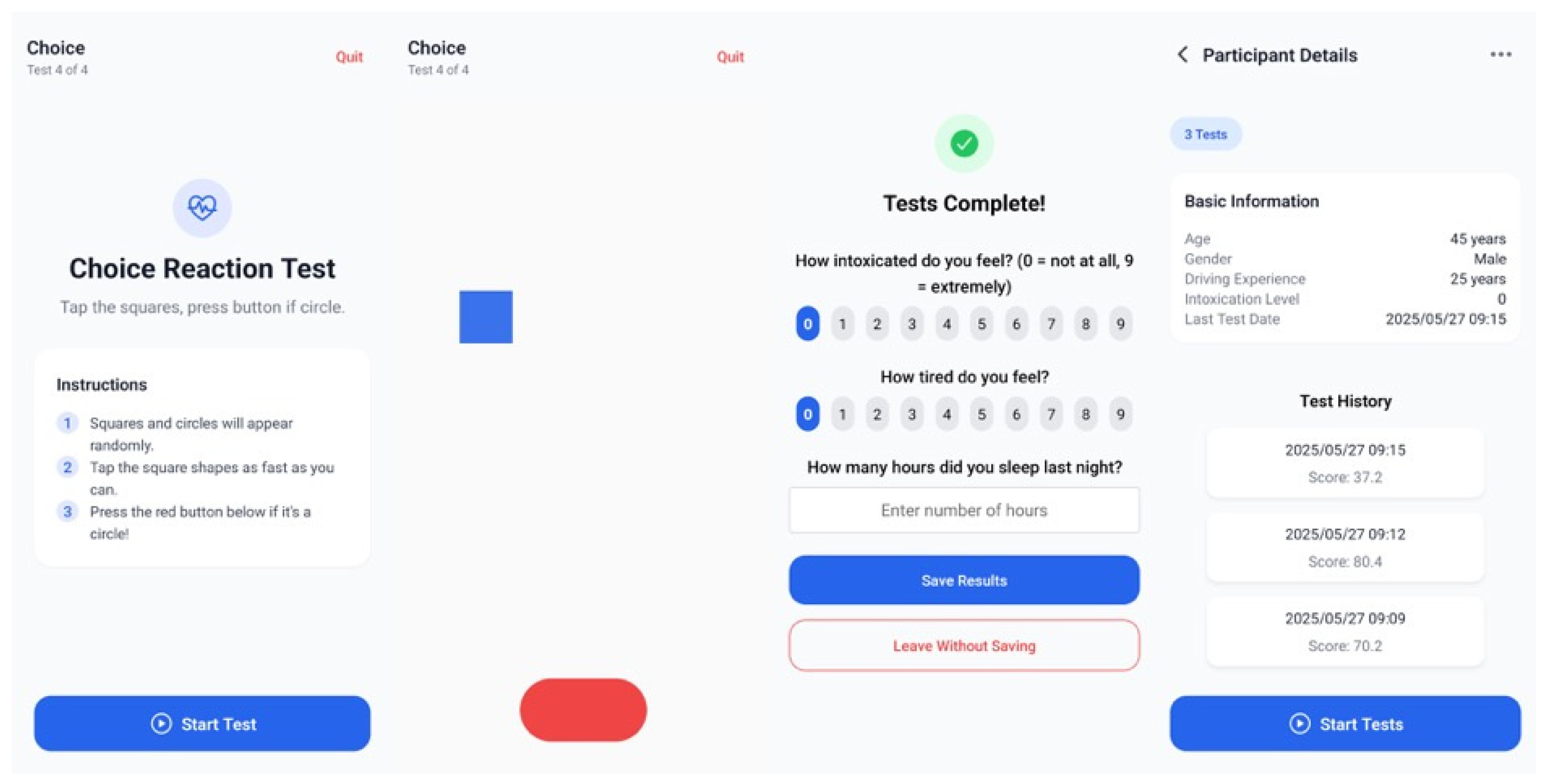

All test modules are preceded by an instructional screen and followed by automatic navigation to the next module. Strict navigation constraints are implemented to maintain data integrity: participants may exit the test flow only via a controlled “Quit” action, which is accompanied by a confirmation dialog and state management logic.
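These navigation rules (instructions before each module, automatic advance, and quitting only through a confirmed action) can be modeled as a small state machine. The sketch below is a hypothetical illustration in plain TypeScript, independent of Expo Router; the module list, screen names, and event names are our assumptions.

```typescript
// Hypothetical sketch of guarded test navigation; module order follows the
// Results section, and all state/event names are illustrative assumptions.
const MODULES = ["tracking", "precision", "balance", "choiceReaction1", "choiceReaction2"] as const;

// States in which a module is active (its instructions shown, or the test running).
type ActiveState =
  | { screen: "instructions"; index: number }
  | { screen: "test"; index: number };

type FlowState =
  | ActiveState
  | { screen: "quitDialog"; resume: ActiveState } // confirmation dialog is open
  | { screen: "finished" }
  | { screen: "aborted" };

type FlowEvent = "begin" | "moduleDone" | "quit" | "cancelQuit" | "confirmQuit";

// Pure transition function: there is no "back" event, so earlier modules cannot be revisited.
function nextState(state: FlowState, event: FlowEvent): FlowState {
  switch (state.screen) {
    case "instructions":
      if (event === "begin") return { screen: "test", index: state.index };
      if (event === "quit") return { screen: "quitDialog", resume: state };
      return state;
    case "test":
      if (event === "moduleDone") {
        const next = state.index + 1;
        // Advance automatically to the next module's instructions, or finish.
        return next < MODULES.length ? { screen: "instructions", index: next } : { screen: "finished" };
      }
      if (event === "quit") return { screen: "quitDialog", resume: state };
      return state;
    case "quitDialog":
      if (event === "cancelQuit") return state.resume; // resume exactly where the participant was
      if (event === "confirmQuit") return { screen: "aborted" };
      return state;
    default:
      return state; // "finished" and "aborted" are terminal
  }
}
```

Because every transition passes through one pure function, there is no path back to an earlier module, and an accidental tap on Quit can be canceled without losing the current position.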

2.2. Web Application

The web application serves as an administrative interface to manage data collected from the mobile app. It is built with React (v18.2.0), TypeScript (v5.0.2), and TailwindCSS (v3.3.3), and also integrates Firebase for backend operations. Key functionalities include:

Browsing and managing groups and participants;

Visualizing individual and comparative test results;

Exporting datasets for further analysis.

The application supports real-time synchronization with the database, enabling researchers to monitor participant progress and retrieve test results dynamically.

2.3. Backend Services and Data Model

All backend operations are implemented via Firebase services, with Firestore providing structured data storage and Firebase Authentication handling user authentication. The DSTA’s data structure is defined using TypeScript interfaces to ensure type safety and consistency throughout the application. Three primary interfaces represent core entities: Group, Participant, and TestResults.

The Group interface contains three properties: a unique identifier (id), the group’s name (name), and a timestamp (createdAt) marking the creation date.

The Participant interface stores individual-level metadata including a unique id, birth year (birthYear), and the associated group identifier (groupId). It also includes a Boolean flag (hasCompletedTest) to track whether the test sequence has been finished, and an optional lastTestDate to indicate the most recent test completion timestamp.

The TestResults interface links results to a specific participant via participantId, logs the test completion time in timestamp, and stores the outcomes of the four cognitive tests (Tracking, Precision, Balance, and Choice Reaction) in the tests object, each represented by its corresponding result structure (TrackingTestResults, PrecisionTestResults, etc.).
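A minimal sketch of these interfaces follows. Group, Participant, and TestResults mirror the properties named above; the fields inside the four per-test result types are hypothetical placeholders, since the paper does not list them, and Date stands in for whatever timestamp type the app actually stores. The groupOfResult helper is likewise illustrative.

```typescript
// Sketch of the DSTA data model. Group, Participant, and TestResults follow the
// properties named in the text; the per-test result fields are hypothetical.

interface Group {
  id: string;
  name: string;
  createdAt: Date; // creation timestamp
}

interface Participant {
  id: string;
  birthYear: number;
  groupId: string;           // references Group.id
  hasCompletedTest: boolean; // true once the full test sequence is finished
  lastTestDate?: Date;       // most recent completion; absent before the first test
}

// Placeholder result shapes (field names are assumptions, not from the paper).
interface TrackingTestResults { meanDeviationPx: number; distractorCountError: number }
interface PrecisionTestResults { meanOffsetPx: number; meanTapIntervalMs: number }
interface BalanceTestResults { swayRms: number }
interface ChoiceReactionTestResults { meanReactionTimeMs: number; accuracyPercent: number }

interface TestResults {
  participantId: string; // references Participant.id
  timestamp: Date;       // test completion time
  tests: {
    tracking: TrackingTestResults;
    precision: PrecisionTestResults;
    balance: BalanceTestResults;
    choiceReaction: ChoiceReactionTestResults;
  };
}

// Illustrative helper: resolve which group a result belongs to via its participant.
function groupOfResult(
  result: TestResults,
  participants: readonly Participant[],
  groups: readonly Group[],
): Group | undefined {
  const participant = participants.find((p) => p.id === result.participantId);
  return participant === undefined ? undefined : groups.find((g) => g.id === participant.groupId);
}
```

Linking results to groups only through the participant record keeps each fact stored once, which suits a document database where results may be queried separately from participants.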

This structured schema supports scalable data management, ensuring that all collected results are associated with the correct participant and testing context while maintaining compatibility with Firebase’s NoSQL data model.

3. Results

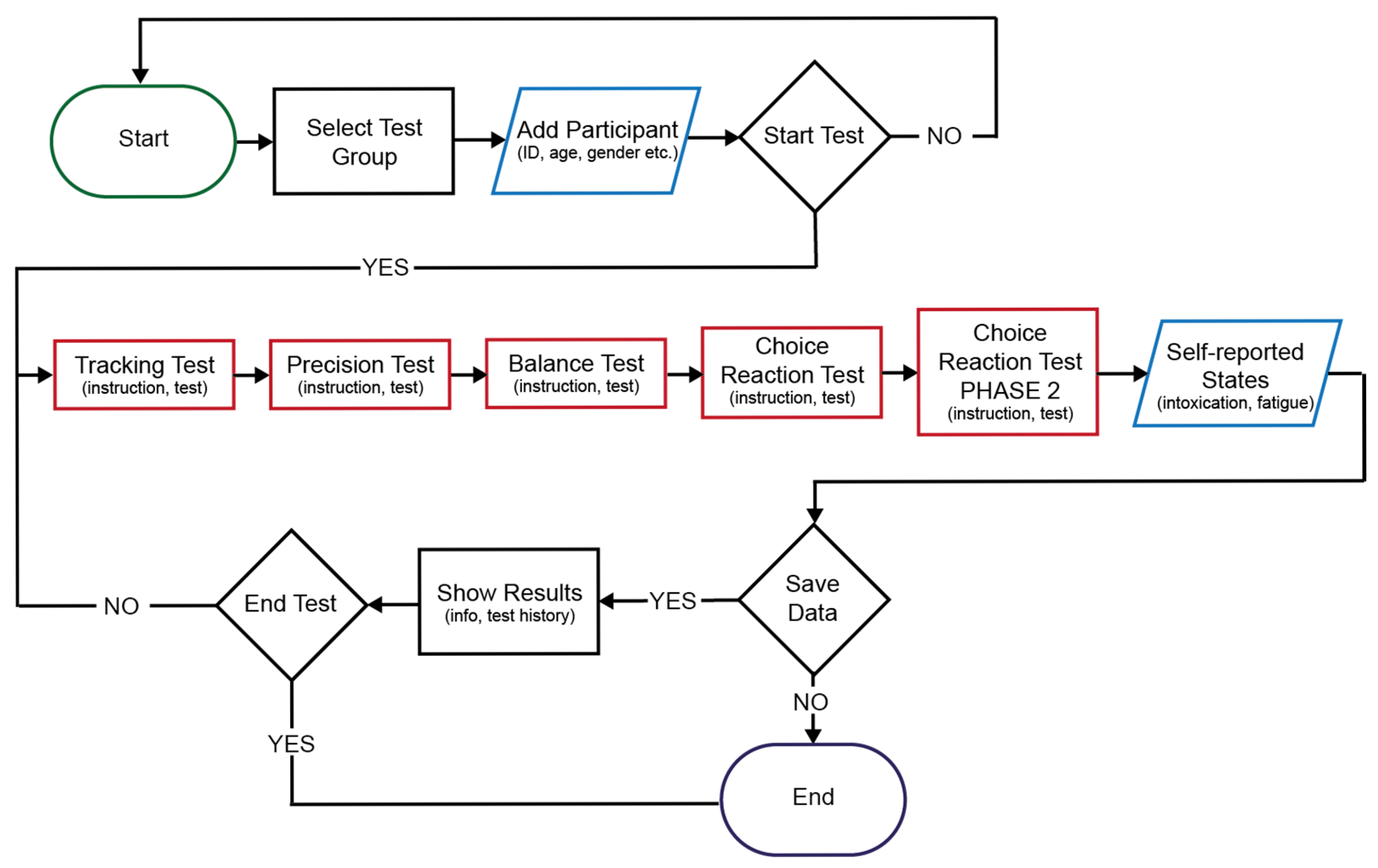

The DSTA is structured around a modular test flow architecture designed to evaluate a range of drivers’ cognitive and psychomotor functions under different mental states. The core entity of the system is the TestFlow, which encapsulates user metadata and dynamically collects performance metrics across several test sections (Figure 1). Before beginning the test, participants must select a predefined test group. This step allows segmentation by study condition, enabling structured analysis of repeated measurements under varying states.

Each test session begins by collecting demographic and subjective data, including age, gender, and driving experience. These values are stored within the TestFlow entity and are used as covariates in subsequent data analysis.

The TestFlow object manages a sequence of test modules, each represented as a distinct TestSection class or subclass, designed to evaluate specific cognitive or motor capabilities. The core test sections are:

Tracking test: Assesses visuomotor coordination and sustained attention by requiring participants to continuously follow a moving stimulus with their finger while simultaneously detecting and counting distractor stimuli.

Precision test: Measures fine motor control and spatial accuracy through the timed tapping of randomly appearing visual targets, emphasizing central-point precision under self-paced temporal conditions.

Balance test: Evaluates postural stability by recording inertial sensor data while the participant maintains a single-leg stance with minimal device movement for a fixed duration (10 s).

Choice reaction test (Phases 1 and 2): Tests cognitive flexibility and decision-making speed through shape-based stimulus-response tasks that require inhibition and rule switching. Performance metrics include response time (RT), accuracy, and adaptability under alternating instructions.
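Table 1 defines the actual metrics. The functions below only sketch plausible computations for each test under our own assumptions: mean Euclidean distance for tracking and precision error, the RMS deviation of acceleration magnitude as a balance sway measure, and mean correct-trial RT plus percent accuracy for choice reaction. None of these names or definitions should be read as the DSTA implementation.

```typescript
// Hypothetical metric sketches; the DSTA's own definitions are given in Table 1.

interface Point { x: number; y: number }
interface Vec3 { x: number; y: number; z: number }

// Tracking (finger vs. moving target) and Precision (tap vs. target center)
// both reduce here to the mean Euclidean distance between paired points.
function meanDistance(pairs: readonly { actual: Point; expected: Point }[]): number {
  if (pairs.length === 0) throw new Error("no samples");
  const total = pairs.reduce(
    (sum, p) => sum + Math.hypot(p.actual.x - p.expected.x, p.actual.y - p.expected.y),
    0,
  );
  return total / pairs.length;
}

// Balance: RMS deviation of the acceleration magnitude from its mean over the
// recording window; a steadier device yields a value closer to 0.
function accelerationSwayRms(samples: readonly Vec3[]): number {
  if (samples.length === 0) throw new Error("no samples");
  const magnitudes = samples.map((s) => Math.hypot(s.x, s.y, s.z));
  const mean = magnitudes.reduce((a, b) => a + b, 0) / magnitudes.length;
  const meanSquare = magnitudes.reduce((a, m) => a + (m - mean) ** 2, 0) / magnitudes.length;
  return Math.sqrt(meanSquare);
}

// Choice reaction: mean RT over correct trials and accuracy in percent.
function choiceReactionSummary(trials: readonly { rtMs: number; correct: boolean }[]): {
  meanCorrectRtMs: number;
  accuracyPercent: number;
} {
  if (trials.length === 0) throw new Error("no trials");
  const correct = trials.filter((t) => t.correct);
  const meanCorrectRtMs =
    correct.length === 0 ? NaN : correct.reduce((a, t) => a + t.rtMs, 0) / correct.length;
  return { meanCorrectRtMs, accuracyPercent: (100 * correct.length) / trials.length };
}
```

Empty inputs throw rather than silently returning NaN, so a module that recorded no data is surfaced instead of producing a misleading score.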

Table 1 summarizes the key performance metrics and their definitions for each of the four tests, highlighting measures of spatial error, timing, accuracy, and stability.

All sections inherit a common interface, enabling seamless integration into the TestFlow (Figure 2). This design supports both extensibility and maintainability, allowing future tests to be appended with minimal changes to the architecture. Test execution is centrally timed, and the overall test duration (in seconds) is recorded for later correlation with the performance indicators.
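The class structure described above can be sketched as an abstract TestSection with a single run method and a TestFlow that runs its sections in order and records the total duration in seconds. This is a hypothetical outline: the ParticipantInfo fields, the Metrics map type, the StubSection class, and the injectable clock are our assumptions, not the DSTA source.

```typescript
// Hypothetical outline of the TestFlow / TestSection structure.

type Metrics = Record<string, number>;

// Covariates collected at the start of a session (field names are assumptions).
interface ParticipantInfo {
  age: number;
  gender: string;
  drivingExperienceYears: number;
}

// Common interface shared by every test section.
abstract class TestSection {
  readonly name: string;
  constructor(name: string) {
    this.name = name;
  }
  // Runs the interactive test and resolves with its performance metrics.
  abstract run(): Promise<Metrics>;
}

class TestFlow {
  readonly results = new Map<string, Metrics>();
  durationSeconds = 0;
  readonly groupId: string;
  readonly participant: ParticipantInfo;
  private readonly sections: readonly TestSection[];
  private readonly now: () => number; // clock in milliseconds, injectable for testing

  constructor(
    groupId: string,
    participant: ParticipantInfo,
    sections: readonly TestSection[],
    now: () => number = () => Date.now(),
  ) {
    this.groupId = groupId;
    this.participant = participant;
    this.sections = sections;
    this.now = now;
  }

  // Runs sections strictly in order and times the whole sequence centrally.
  async execute(): Promise<Map<string, Metrics>> {
    const start = this.now();
    for (const section of this.sections) {
      this.results.set(section.name, await section.run());
    }
    this.durationSeconds = (this.now() - start) / 1000;
    return this.results;
  }
}

// A new test is added by writing one more subclass; TestFlow itself does not change.
// This stub returns fixed metrics in place of a real interactive screen.
class StubSection extends TestSection {
  private readonly metrics: Metrics;
  constructor(name: string, metrics: Metrics) {
    super(name);
    this.metrics = metrics;
  }
  async run(): Promise<Metrics> {
    return this.metrics;
  }
}
```

Keeping the timer in TestFlow rather than in each section matches the text's description of centrally timed execution and keeps section classes focused on their own task.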

After each test, participants self-report their levels of intoxication, fatigue, and sleep. Upon test completion, all recorded data are stored persistently and anonymously, organized per participant under a randomized ID and tagged with the group and session ID. The system can display individual results immediately after the test, providing feedback to the user.

Participants are encouraged to retake the test in different conditions (e.g., after sleep deprivation or prolonged driving). Upon completing a second session, the application automatically compares the results from both rounds and highlights key changes across all test sections. For example, an increase in RT or memory errors may indicate the impact of fatigue or intoxication.
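The paper does not specify how the two sessions are compared. One simple possibility, sketched below under our own assumptions, is to compute each metric's relative change between rounds and flag it when it moves more than a chosen threshold (10% here, an arbitrary placeholder) in the impairing direction; the higherIsWorse map tells the function which metrics are worse when higher.

```typescript
// Hypothetical session-comparison sketch; the 10% threshold is an arbitrary placeholder.
type Metrics = Record<string, number>;

interface MetricChange {
  metric: string;
  before: number;
  after: number;
  relativeChange: number; // (after - before) / |before|
  worsened: boolean;      // moved past the threshold in the impairing direction
}

function compareSessions(
  first: Metrics,
  second: Metrics,
  higherIsWorse: Partial<Record<string, boolean>>,
  threshold = 0.1,
): MetricChange[] {
  const changes: MetricChange[] = [];
  for (const metric of Object.keys(first)) {
    const before = first[metric];
    const after = second[metric];
    // Skip metrics missing from either round or with a zero baseline (no relative change).
    if (before === undefined || after === undefined || before === 0) continue;
    const relativeChange = (after - before) / Math.abs(before);
    const worseWhenHigher = higherIsWorse[metric] ?? true; // default: higher is worse (e.g., RT)
    const worsened = worseWhenHigher ? relativeChange > threshold : relativeChange < -threshold;
    changes.push({ metric, before, after, relativeChange, worsened });
  }
  return changes;
}
```

Under the placeholder threshold, a rise in mean RT from 400 ms to 480 ms (+20%) would be flagged, whereas a drop in accuracy from 95% to 90% (about -5%) would not.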

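We propose a composite-score (CS) framework that quantifies impairment by transforming each raw metric into a standardized deviation from a healthy-control baseline and then aggregating across four functional domains (tracking, precision, balance, and choice reaction). Let $x_{d,i}$ denote the raw value of metric $i$ in domain $d$, and let $\mu_{d,i}$ and $\sigma_{d,i}$ denote the mean and standard deviation of that metric in the sober healthy-control baseline. If higher raw values reflect worse performance (for example, greater deviation or slower reaction times), calculate

$$z_{d,i} = \frac{x_{d,i} - \mu_{d,i}}{\sigma_{d,i}}.$$

If higher values reflect better performance (for example, percent accuracy), reverse the subtraction so that

$$z_{d,i} = \frac{\mu_{d,i} - x_{d,i}}{\sigma_{d,i}},$$

ensuring that a positive $z_{d,i}$ always indicates impairment relative to the sober mean.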

Once the z-score is computed, it is transformed into a bounded sub-score that reflects the severity of impairment. This transformation is designed to be intuitive and interpretable. If the z-score is zero or negative (i.e., performance is at or better than the sober mean), the sub-score is set to 0. For positive z-scores less than 5, the sub-score increases linearly, with each standard deviation corresponding to 20 points. If the z-score is 5 or greater, the sub-score is capped at the maximum value of 100.
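Restated as a formula (our transcription of the rule just described), the sub-score $s$ of a metric with z-score $z$ is

$$
s = \begin{cases}
0, & z \le 0,\\
20\,z, & 0 < z < 5,\\
100, & z \ge 5,
\end{cases}
\qquad \text{equivalently} \qquad s = \min\bigl(100,\ \max(0,\ 20\,z)\bigr).
$$

For example, a metric 1.5 standard deviations worse than the sober mean receives a sub-score of $20 \times 1.5 = 30$.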

Each test domain (tracking, precision, balance, and choice reaction) yields multiple metrics. Denote the number of metrics in domain $d$ by $n_d$. To obtain a single domain-level score $S_d$, simply average the corresponding $n_d$ sub-scores $s_{d,i}$:

$$S_d = \frac{1}{n_d} \sum_{i=1}^{n_d} s_{d,i}.$$

Finally, each domain is assigned a weight $w_d$ that reflects its sensitivity to impairment. The overall composite score (CS) is then the weighted sum of the four domain scores:

$$\mathrm{CS} = \sum_{d=1}^{4} w_d\, S_d, \qquad \sum_{d=1}^{4} w_d = 1.$$

Because each domain score lies between 0 and 100 and the weights are non-negative and sum to 1, the CS also ranges from 0 (no impairment) to 100 (maximum impairment). Standardized thresholds, such as 0–20 indicating no or negligible impairment, 20–40 mild, 40–60 moderate, and above 60 severe, can then be applied or adjusted based on empirical validation against known levels of intoxication.
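The full scoring pipeline can be sketched in a few functions. This is an illustrative implementation of the framework described above, not the DSTA code: the metric values and equal domain weights in the example are hypothetical, since the paper does not report baseline statistics or weights, and the handling of the exact boundaries 20, 40, and 60 in classify is our own choice.

```typescript
// Illustrative sketch of the composite-score (CS) framework; not the DSTA source.
type Domain = "tracking" | "precision" | "balance" | "choiceReaction";
const DOMAINS: readonly Domain[] = ["tracking", "precision", "balance", "choiceReaction"];

interface MetricInput {
  value: number;          // raw observed value x
  baselineMean: number;   // sober healthy-control mean (mu)
  baselineSd: number;     // sober healthy-control standard deviation (sigma), > 0
  higherIsWorse: boolean; // true for deviations and RTs, false for accuracy
}

// Standardized deviation, oriented so that z > 0 always means worse than the sober mean.
function zScore(m: MetricInput): number {
  if (!(m.baselineSd > 0)) throw new Error("baseline SD must be positive");
  const diff = m.higherIsWorse ? m.value - m.baselineMean : m.baselineMean - m.value;
  return diff / m.baselineSd;
}

// 0 at or below the sober mean, 20 points per SD, capped at 100 from z = 5 upward.
function subScore(z: number): number {
  return Math.min(100, Math.max(0, 20 * z));
}

// Domain score: plain average of the domain's sub-scores.
function domainScore(metrics: readonly MetricInput[]): number {
  if (metrics.length === 0) throw new Error("each domain needs at least one metric");
  return metrics.reduce((sum, m) => sum + subScore(zScore(m)), 0) / metrics.length;
}

// CS: weighted sum of domain scores; non-negative weights summing to 1 keep it in [0, 100].
function compositeScore(
  metrics: Record<Domain, readonly MetricInput[]>,
  weights: Record<Domain, number>,
): number {
  const weightSum = DOMAINS.reduce((s, d) => s + weights[d], 0);
  if (DOMAINS.some((d) => weights[d] < 0) || Math.abs(weightSum - 1) > 1e-9) {
    throw new Error("weights must be non-negative and sum to 1");
  }
  return DOMAINS.reduce((cs, d) => cs + weights[d] * domainScore(metrics[d]), 0);
}

type Severity = "none/negligible" | "mild" | "moderate" | "severe";

// Bands from the text; assigning exactly 20 and 40 to the higher band and 60 to moderate is our choice.
function classify(cs: number): Severity {
  if (cs < 20) return "none/negligible";
  if (cs < 40) return "mild";
  if (cs <= 60) return "moderate";
  return "severe";
}

// Example with hypothetical baseline statistics and equal weights.
const exampleMetrics: Record<Domain, readonly MetricInput[]> = {
  tracking: [{ value: 14, baselineMean: 10, baselineSd: 2, higherIsWorse: true }], // z = 2 -> 40
  precision: [{ value: 5, baselineMean: 5, baselineSd: 1, higherIsWorse: true }],  // z = 0 -> 0
  balance: [{ value: 40, baselineMean: 10, baselineSd: 5, higherIsWorse: true }],  // z = 6 -> 100
  choiceReaction: [
    { value: 550, baselineMean: 500, baselineSd: 50, higherIsWorse: true }, // RT: z = 1 -> 20
    { value: 80, baselineMean: 90, baselineSd: 5, higherIsWorse: false },   // accuracy: z = 2 -> 40
  ],
};
const equalWeights: Record<Domain, number> = {
  tracking: 0.25,
  precision: 0.25,
  balance: 0.25,
  choiceReaction: 0.25,
};

const example = compositeScore(exampleMetrics, equalWeights);
console.log(example, classify(example)); // prints: 42.5 moderate
```

In this example the four domain scores are 40, 0, 100, and 30, so CS = 0.25 × (40 + 0 + 100 + 30) = 42.5. Averaging within each domain before weighting keeps a domain with many metrics (here, choice reaction) from dominating the composite.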

4. Discussion

This study introduces the DSTA as a modular platform for assessing cognitive and psychomotor impairment in simulated driving contexts. By combining mobile-based testing with synchronized web-based analytics, DSTA addresses the limitations of traditional screening tools, including low scalability, poor simulation compatibility, and a lack of real-time data access [5,7].

The test battery targets functional domains known to deteriorate under fatigue, intoxication, or cognitive overload, including reaction time, memory, balance, and motor coordination [1,3,4]. In particular, the precision test, which measures fine motor control, complements the visuomotor and postural stability components to offer a comprehensive impairment profile. The composite scoring framework, standardized via z-scores, supports clear classification across impairment levels and enhances cross-session comparability.

This system builds on the principles of tools like the Druid App but extends functionality to support group testing, simulator integration, and dynamic comparative analysis, features that are increasingly vital in experimental and educational contexts. By aligning test results with simulator telemetry and subjective reports, DSTA captures the time-sensitive dynamics of mental state degradation, providing deeper insight into the relationship between cognition and driving behavior.

Limitations include the absence of individualized baselining, which may affect sensitivity in heterogeneous populations. Environmental variables also pose challenges to generalizability. Future development will focus on adaptive scoring models and extended validation across broader populations and additional impairment types, such as stress or medication effects.

5. Conclusions

The DSTA provides a scalable, integrated solution for assessing driver impairment in research and simulation environments. Its modular design, objective scoring, and synchronization capabilities make it a valuable tool for human factors research, safety training, and regulatory applications.

Author Contributions

Conceptualization, V.N. and G.K.; Methodology, V.N. and G.K.; Software, V.N.; Validation, V.N. and G.K.; Formal analysis, V.N.; Investigation, V.N.; Resources, V.N.; Data curation, G.K.; Writing—original draft preparation, V.N.; Writing—review and editing, V.N. and G.K.; Visualization, V.N.; Supervision, G.K.; Project administration, V.N.; Funding acquisition, V.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The research was supported by the European Union within the framework of the National Laboratory for Artificial Intelligence (RRF-2.3.1-21-2022-00004).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Williamson, A.M.; Feyer, A.-M.; Wales, S.; Williamson, A.A.M. Moderate Sleep Deprivation Produces Impairments in Cognitive and Motor Performance Equivalent to Legally Prescribed Levels of Alcohol Intoxication. Occup. Environ. Med. 2000, 57, 649–655.

- Pearce, A.J.; Tommerdahl, M.; King, D.A. Neurophysiological Abnormalities in Individuals with Persistent Post-Concussion Symptoms. Neuroscience 2019, 408, 272–281.

- Zhao, X.; Zhang, X.; Rong, J. Study of the Effects of Alcohol on Drivers and Driving Performance on Straight Road. Math. Probl. Eng. 2014, 2014, 1–10.

- Dong, M.; Lee, Y.Y.; Cha, J.S.; Huang, G. Drinking and Driving: A Systematic Review of the Impacts of Alcohol Consumption on Manual and Automated Driving Performance. J. Safety Res. 2024, 89, 1–12.

- Richman, J.E.; May, L.S. An investigation of the Druid® smartphone/tablet app as a rapid screening assessment for cognitive and psychomotor impairment associated with alcohol intoxication. Vision Dev. Rehab. 2019, 5, 31–42.

- Karoly, H.C.; Milburn, M.A.; Brooks-Russell, A.; Brown, M.; Streufert, J.; Bryan, A.D.; Lovrich, N.P.; DeJong, W.; Bidwell, L.C. Effects of High-Potency Cannabis on Psychomotor Performance in Frequent Cannabis Users. Cannabis Cannabinoid Res. 2022, 7, 107–115.

- Spindle, T.R.; Martin, E.L.; Grabenauer, M.; Woodward, T.; Milburn, M.A.; Vandrey, R. Assessment of Cognitive and Psychomotor Impairment, Subjective Effects, and Blood THC Concentrations Following Acute Administration of Oral and Vaporized Cannabis. J. Psychopharmacol. 2021, 35, 786–803.

- DeJong, W.; Choo, P.W.; Jor’dan, A.J.; Stanley, I.M.; Milburn, M.A. Monitoring concussion recovery with the Druid® Rapid impairment assessment app: A case report. Clin. Med. Case Rep. 2025, 7, 1–5.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).