Abstract

Classifiers of hand movements are commonly developed to control multifunctional prostheses or to serve as input devices in human–computer interfaces. This work demonstrates the complementary use of the open-access biomechanical simulation software OpenSim to visualize hand-movement classification performance. A classifier was built from a previously captured database of 15 finger movements, acquired during synchronized hand-movement repetitions with an 8-electrode sensor array placed on the forearm; a recognition rate of 92.89%, based on a complete movement, was obtained. OpenSim’s upper limb wrist model is employed, with movement in each of the joints of the hand and fingers. Several hand-motion visualizations were then generated for the ideal hand movements and for the best and the worst (53.03%) reproductions, so that the classification error can be perceived in a specific task movement. This demonstrates the usefulness of the simulation tool before a classifier is applied to a multifunctional prosthesis.

1. Introduction

The simulation of biomechanical systems is widely used in science and is growing rapidly in medical systems, for example in rehabilitation tasks [1], and in entertainment [2], such as gesture-based interaction between humans and computers. These applications, however, require specific manual tasks that depend on reproducing finger movements. Different types of sensors are used [3], but surface electromyography (sEMG) sensors are the most important for recognizing a specific hand movement [4,5]. Machine learning techniques are required to map sEMG data to one of the possible motions, and both simple and complex models are in use. Once a motion classification model is available, its output is translated into a physical or visual reproduction of the considered motions.

In this work, a quadratic discriminant classifier (QDA), developed with machine learning techniques, is proposed to drive the simulation of movement in the specialized biomechanical simulation environment OpenSim. The complementary use of this open-access software is demonstrated for visualizing hand-movement classification performance. OpenSim is a musculoskeletal simulation program that allows biomechanical analysis of a variety of models [6,7,8] and currently has tools for real-time motion analysis [9,10]. OpenSim’s upper limb wrist model is employed, with movement in each of the joints of the hand and fingers.

The classifier was created from a previously captured database of 15 finger movements, acquired during synchronized hand-movement repetitions with an 8-electrode sensor array placed on the forearm, as described in [11]. The best reproduction reached an average recognition accuracy of 92.89%, based on a complete movement across all subjects. Several hand-motion visualizations were then generated for the ideal hand movements and for the best and the worst (53.03%) reproductions, so that the classification error can be perceived in a specific task movement. This demonstrates the usefulness of the simulation tool before a classifier is applied to a multifunctional prosthesis.

2. Materials and Methods

2.1. Modified OpenSim Wrist Model

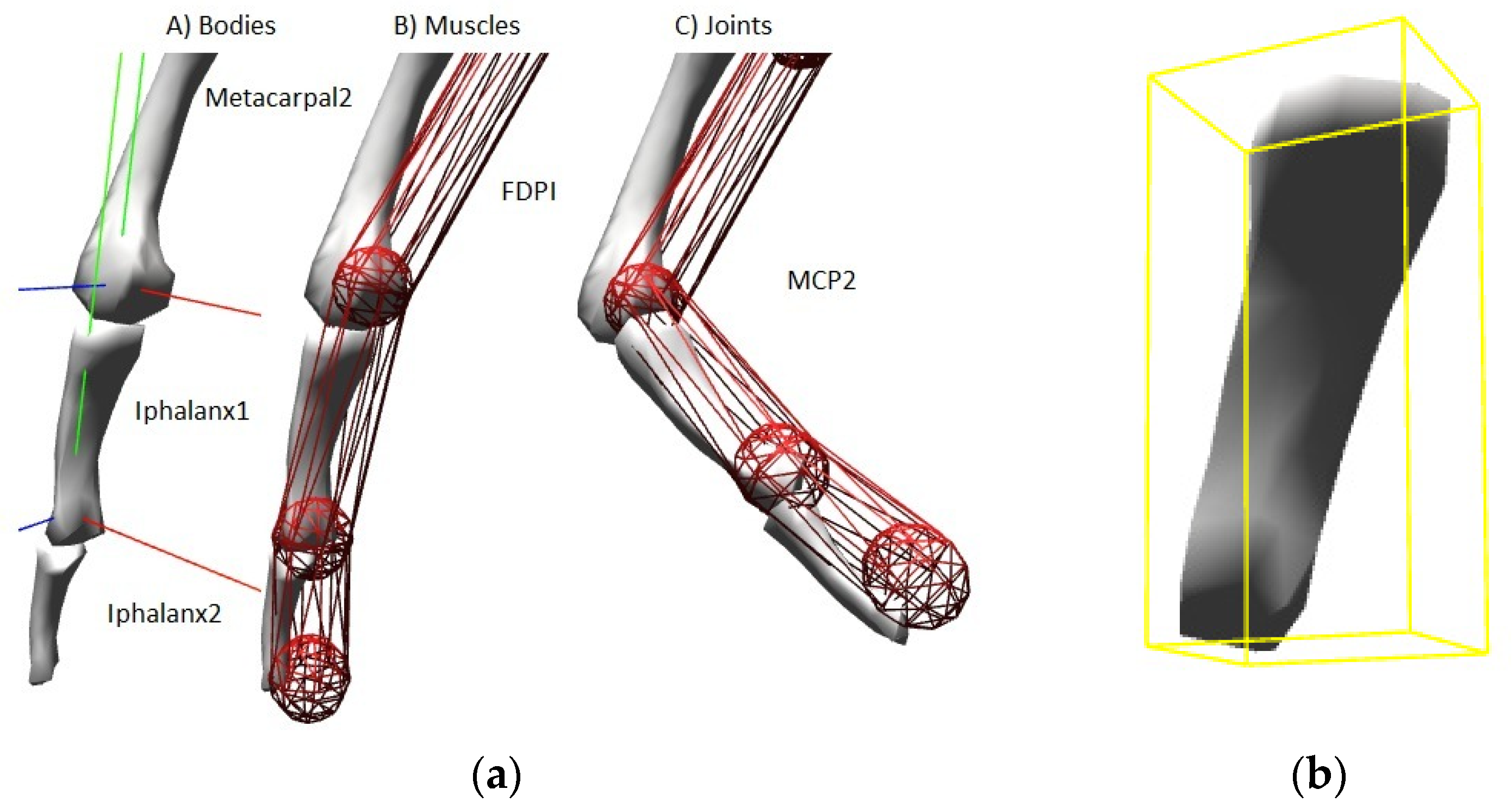

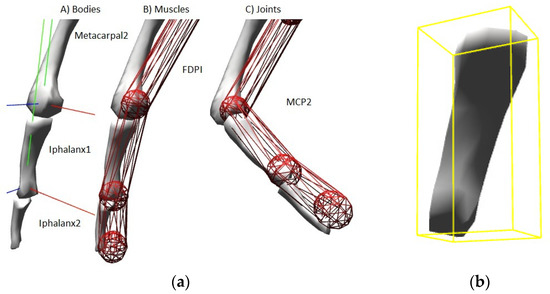

The hand-forearm model used in this work is based on the wrist model created by Delp and Gonzalez [12]. The initial model has all the upper limb bones and 10 degrees of freedom (DoF), including those of the elbow, thumb and index finger, and a total of 23 muscle actuators that control the movement. We updated this OpenSim model to add motion to the middle, ring and little finger joints [13]. Figure 1 illustrates some sections of the model that had to be modified. The model now has 25 actuators and 25 degrees of freedom. The added degrees of freedom correspond to the middle, ring and little fingers, at the joints between the metacarpals and the proximal, middle and distal phalanges. A comparison between the original and the modified model is given in Table 1.

Figure 1.

This figure shows segments of the modified model: (a) the parameters that were modified in the model, including bone names, number of joints and muscle insertions; (b) a fragment of a bone (a phalanx), stored as an image with a vtp file extension, which is part of the model. These figures were obtained from the visualization of the model in OpenSim, where FDPI is the Flexor Digitorum Profundus muscle and MCP2 is the Metacarpal2 joint.

Table 1.

Comparison between the number of bones, muscle actuators, joints and degrees of freedom of the models considered.

The joints of the phalanges are of the trochlear (hinge) type, so each has a single degree of freedom, with movement about a single axis. A muscle actuator is defined by a sequence of points where the muscle attaches to the bone; this set of path points defines the geometric trajectory of the muscle. The union of two bones forms a joint, which in general can have more than one degree of freedom, but the phalangeal joints move about one axis only and therefore have one degree of freedom each.

2.2. Brief Summary of the Database to Model and Classify

The database consists of 8 subjects and 15 finger movements. The 15 movements include the flexion of each individual finger and combinations of these: thumb (T_T), index (I_I), middle (M_M), ring (R_R), little finger (L_L), the combinations TI, TM, TR, TL, IM, MR, RL, IMR, MRL, and finally the closed hand (HC). Each subject performed 12 repetitions of each movement, giving 8 × 15 × 12 = 1440 repetitions in total. The raw data files are downloaded from [14] and loaded with the MATLAB csvread function. An array is created for each motion and named after it. These raw data are the input to the pre-processing stage and correspond to 8 sEMG channels recorded on the forearm of each subject during the repetition of movements. Only images of the final position of each movement were presented to the subjects; each movement was performed for 5 s and started with the hand relaxed in a fixed forearm position [11].
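As a quick check of the database layout described above, the following Python sketch enumerates one key per recording. The label strings are taken from the list in the text; the key structure `(subject, movement, repetition)` is only an assumption for illustration and does not reflect the file naming used in the repository [14].

```python
# Movement labels as listed in the text (15 classes).
MOVEMENTS = [
    "T_T", "I_I", "M_M", "R_R", "L_L",                               # single fingers
    "TI", "TM", "TR", "TL", "IM", "MR", "RL", "IMR", "MRL",  # combinations
    "HC",                                                            # closed hand
]
N_SUBJECTS = 8       # from the text
N_REPETITIONS = 12   # repetitions per subject and movement, from the text


def enumerate_recordings():
    """Yield one hypothetical (subject, movement, repetition) key per recording."""
    for subject in range(1, N_SUBJECTS + 1):
        for movement in MOVEMENTS:
            for repetition in range(1, N_REPETITIONS + 1):
                yield (subject, movement, repetition)


recordings = list(enumerate_recordings())
print(len(MOVEMENTS), len(recordings))
```

The two printed counts correspond to the 15 movements and the 1440 repetitions stated above.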

2.3. Vector of DoF Incremental Motion

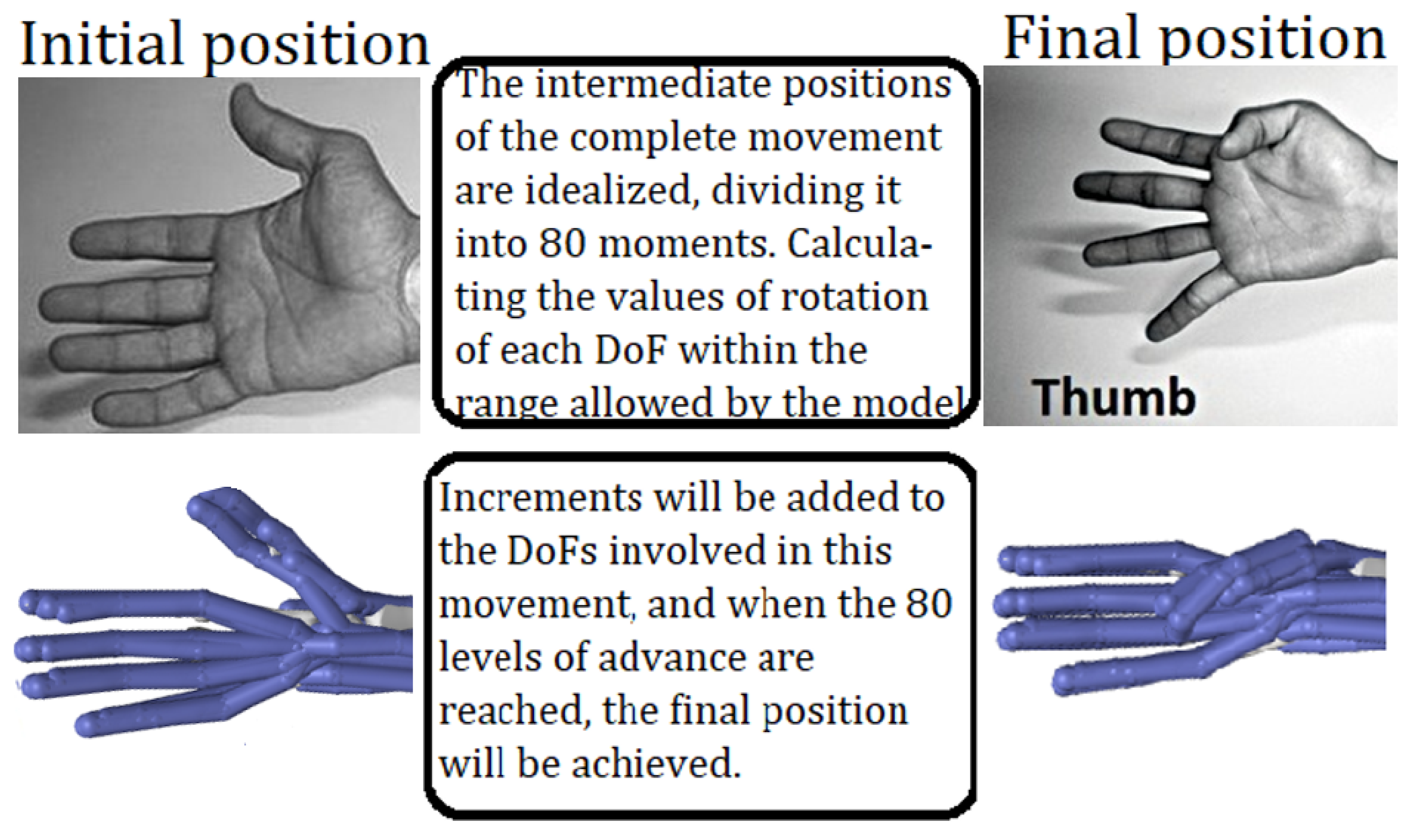

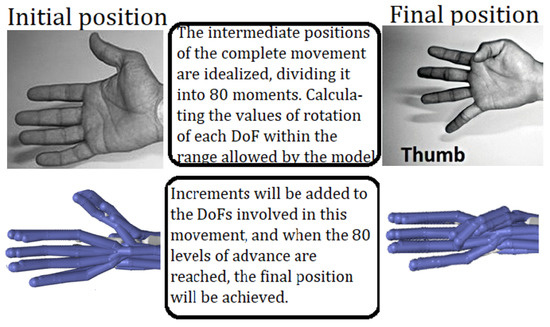

Because the database contains no video or data from other types of position sensors from which to infer or measure the position of each joint (or DoF) over time, a virtual ideal motion was created for each joint involved in the complete cycle of a movement, assuming uniform dynamics. The motion starts from the rotation value that each joint has in the initial position of the hand at rest and ends at the final rotation that each joint reaches in a specific movement (see Figure 2).

Figure 2.

Upper section: images of the hand movement for the case of the thumb. Lower section: the OpenSim model in the initial and final positions; different joints (DoF) intervene in this movement.

Once the initial and final positions of the hand are known for each movement, the intermediate positions that make up the complete movement are idealized by dividing the full 5 s into 80 steps. The rotation values of each joint involved are calculated within the range allowed by the model and required by the type of movement. The starting position for all 15 movements is the same: the hand at rest. From this initial position, a given rotation increment can therefore be added to the joints involved at every step, so that the movement is complete at step 80. Because the increment is the same at every step (video frame), only one vector of 25 joint rotation increments is needed for each specific movement.
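The incremental scheme described above can be illustrated with a minimal Python sketch. The 80 steps and the 5 s duration come from the text; the function names and the three-joint example angles are hypothetical and stand in for the 25 DoF of the modified model.

```python
N_STEPS = 80       # steps per movement, from the text
DURATION_S = 5.0   # duration of one movement in seconds, from the text


def increment_vector(rest, final, n_steps=N_STEPS):
    """Per-step rotation increment of every joint, assuming uniform motion."""
    if len(rest) != len(final):
        raise ValueError("rest and final must list the same joints")
    return [(f - r) / n_steps for r, f in zip(rest, final)]


def ideal_trajectory(rest, increment, n_steps=N_STEPS):
    """Joint angles after each step, starting from the rest posture."""
    frames = []
    current = list(rest)
    for _ in range(n_steps):
        current = [c + d for c, d in zip(current, increment)]
        frames.append(current)
    return frames


# Hypothetical 3-joint example (angles in degrees); the real model has 25 DoF.
rest = [0.0, 5.0, 10.0]
final = [80.0, 45.0, 10.0]
inc = increment_vector(rest, final)
frames = ideal_trajectory(rest, inc)
time_step = DURATION_S / N_STEPS   # 0.0625 s between consecutive frames
```

Joints that do not take part in a movement simply receive a zero increment, which is why a single vector per movement is enough to regenerate the whole ideal trajectory.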

2.4. Data Pre-Processing and Classification

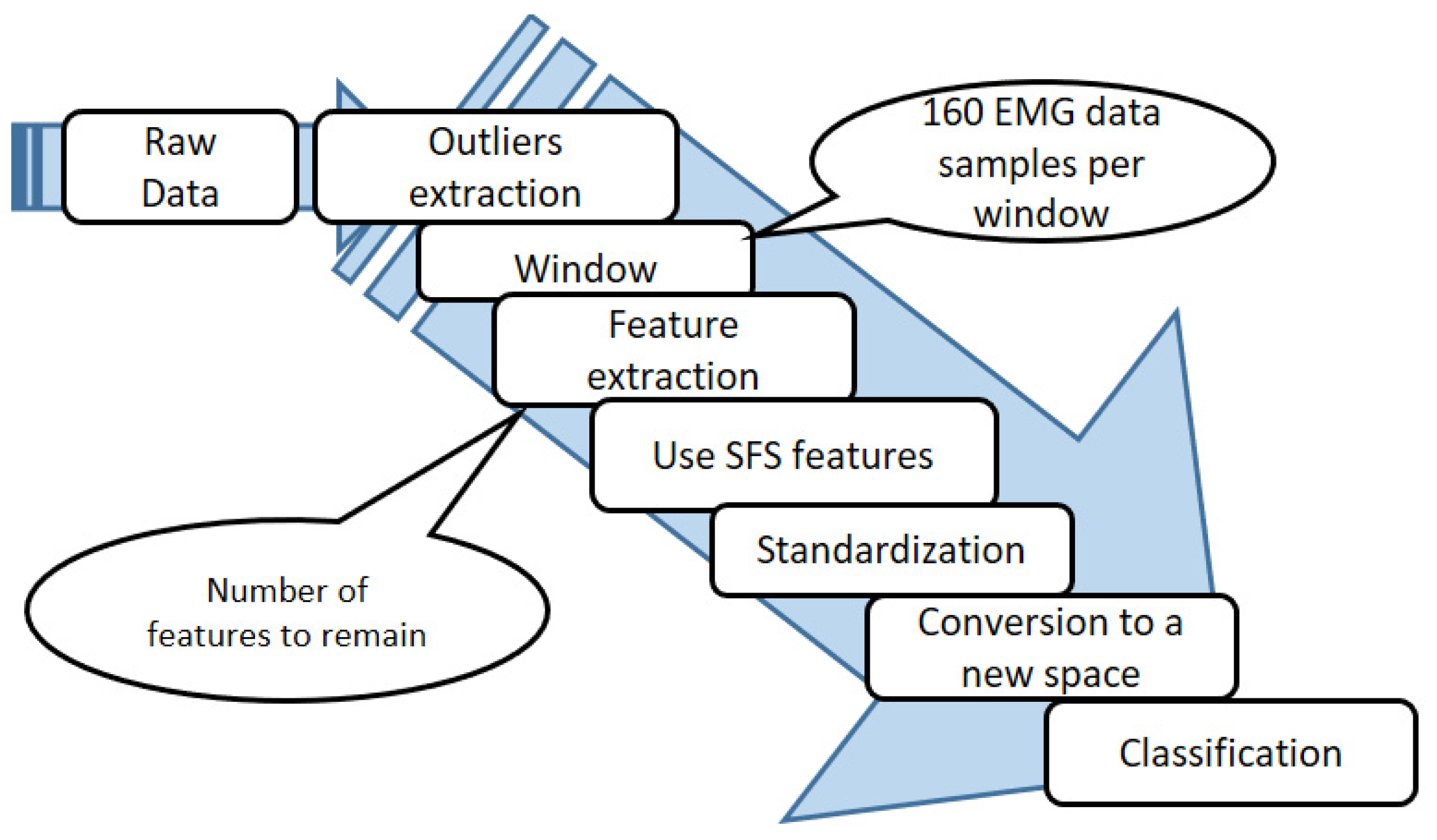

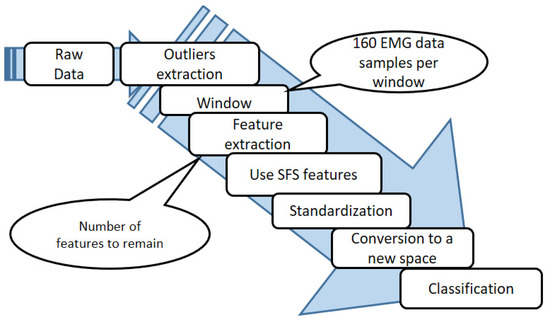

The raw data matrix is used to create a classification model, following the procedure described in [15]. This procedure is shown in general form in Figure 3 and described below as Algorithm 1, which was developed in the MATLAB environment. The raw data matrix is also used to generate an online classification. Algorithm 2 describes the procedure for the online classification of a complete repetition of one of the evaluated hand gestures.

| Algorithm 1 |

|

Figure 3.

Sequence in the pre-processing of data prior to classification.

| Algorithm 2 |

|

Algorithm 1 yields the selected window size, the best mathematical operations (features) for extracting information from the raw EMG signal, the number of features that increases the recognition rate, the statistical parameters of the evaluated data matrix (mean and standard deviation) used to normalize new input data, and the transformation matrices used to project new data into the new space.

Once the window size, the features obtained from SFS, the data normalization parameters, the transformation matrices for the new space and the classification model had been obtained, this information was used to run an online classification over specific hand-motion measurements. Algorithm 2 shows the online process.
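The online stage can be pictured with the following Python sketch. It is not the authors' Algorithm 2: the equal non-overlapping windows, the two features (mean absolute value and root mean square) and all function names are assumptions chosen for illustration, and the trained classifier is passed in as an arbitrary callable.

```python
import math


def split_windows(samples, n_windows):
    """Split a list of multi-channel samples into equal, non-overlapping windows."""
    size = len(samples) // n_windows
    if size == 0:
        raise ValueError("recording is shorter than the number of windows")
    return [samples[i * size:(i + 1) * size] for i in range(n_windows)]


def window_features(window):
    """Mean absolute value and RMS per channel (illustrative features only)."""
    feats = []
    for ch in range(len(window[0])):
        values = [row[ch] for row in window]
        mav = sum(abs(v) for v in values) / len(values)
        rms = math.sqrt(sum(v * v for v in values) / len(values))
        feats.extend([mav, rms])
    return feats


def normalize(feats, mean, std):
    """Z-score new features with the mean and standard deviation stored at training time."""
    return [(x - m) / s for x, m, s in zip(feats, mean, std)]


def project(feats, matrix):
    """Multiply a feature row vector by an (n_features x n_components) matrix."""
    n_components = len(matrix[0])
    return [sum(x * row[j] for x, row in zip(feats, matrix)) for j in range(n_components)]


def classify_recording(samples, n_windows, mean, std, matrix, classifier):
    """Return one predicted label per window of a complete repetition."""
    labels = []
    for window in split_windows(samples, n_windows):
        z = normalize(window_features(window), mean, std)
        labels.append(classifier(project(z, matrix)))
    return labels


# Toy usage: constant 2-channel signal, identity projection, threshold "classifier".
samples = [[1.0, -2.0]] * 160
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
labels = classify_recording(
    samples, 80, [0.0] * 4, [1.0] * 4, identity,
    lambda z: "TM" if z[0] > 0.5 else "HC",
)
```

In the actual pipeline, the callable would be the trained QDA model and the mean, standard deviation and projection matrix would be those produced by Algorithm 1.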

2.5. Creation of Motion Files

Each of the 15 hand movements has one DoF incremental motion vector; adding this vector 80 times to the rest position generates a complete ideal movement file. When a complete raw recording of a specific motion is classified online, however, each window receives its own classification, so the motion file is built from 80 DoF incremental motion vectors, one per classified window, which may belong to different movements.

There are therefore two types of motion files for reproducing the movements: the ideal movements and the movements created from a full classification. This provides a visual tool for observing the success of the classification and for judging the actual performance of the hand gestures. The worst classified motion according to the experimental data was chosen for this purpose. The progression of this motion during classification is shown in the Results section.
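A minimal Python sketch of how a classified motion differs from the ideal one is given below. It assumes that each window contributes the increment vector of its predicted class; the two-joint example, the label sequence and the `completion` helper are hypothetical, and the sketch does not clamp angles to the joint limits of the model.

```python
def classified_trajectory(rest, window_labels, increments):
    """Accumulate, window by window, the increment vector of each predicted class."""
    current = list(rest)
    frames = []
    for label in window_labels:
        current = [c + d for c, d in zip(current, increments[label])]
        frames.append(current)
    return frames


def completion(rest, final, reached):
    """Fraction of the intended rotation reached per joint.

    Joints that are not supposed to move are reported as None.
    """
    result = []
    for r, f, x in zip(rest, final, reached):
        intended = f - r
        result.append(None if intended == 0 else (x - r) / intended)
    return result


# Hypothetical 2-joint example (ring, little), angles in degrees.
rest = [0.0, 0.0]
ideal_final = [80.0, 80.0]                           # ideal RL flexion
increments = {"RL": [1.0, 1.0], "R_R": [1.0, 0.0]}   # per-window increments
# Assume 60 windows are recognized as RL and 20 are mistaken for R_R.
labels = ["RL"] * 60 + ["R_R"] * 20
frames = classified_trajectory(rest, labels, increments)
print(completion(rest, ideal_final, frames[-1]))
```

In this toy case the ring finger still completes its flexion, while the little finger reaches only three quarters of it, which is the kind of partial error the visualization is meant to expose.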

OpenSim reproduces movements from a motion file with the “.mot” extension and also allows videos to be recorded with the “.webm” extension for visualization and storage.
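For illustration, the Python sketch below writes joint-angle frames as tab-separated text with the header layout commonly used for OpenSim motion files (name, `version`, `nRows`, `nColumns`, `inDegrees`, `endheader`). The coordinate names are placeholders and must match the coordinate names of the actual model; the exact header fields expected by a given OpenSim version should be checked against its documentation.

```python
import io


def write_mot(stream, coordinate_names, frames, duration_s, name="classified_motion"):
    """Write frames of joint angles (degrees) as a tab-separated motion table."""
    n_rows = len(frames)
    dt = duration_s / n_rows
    stream.write(f"{name}\n")
    stream.write("version=1\n")
    stream.write(f"nRows={n_rows}\n")
    stream.write(f"nColumns={len(coordinate_names) + 1}\n")  # +1 for the time column
    stream.write("inDegrees=yes\n")
    stream.write("endheader\n")
    stream.write("\t".join(["time"] + list(coordinate_names)) + "\n")
    for i, frame in enumerate(frames):
        t = (i + 1) * dt  # time stamp at the end of each step
        stream.write("\t".join(f"{v:.6f}" for v in [t] + list(frame)) + "\n")


# Toy usage with two placeholder coordinates and two frames over 5 s.
buffer = io.StringIO()
write_mot(buffer, ["joint_a", "joint_b"], [[1.0, 0.5], [2.0, 1.0]], 5.0)
mot_lines = buffer.getvalue().splitlines()
```

Passing an open file object instead of the `StringIO` buffer would produce a file on disk that can then be loaded against the model.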

3. Results

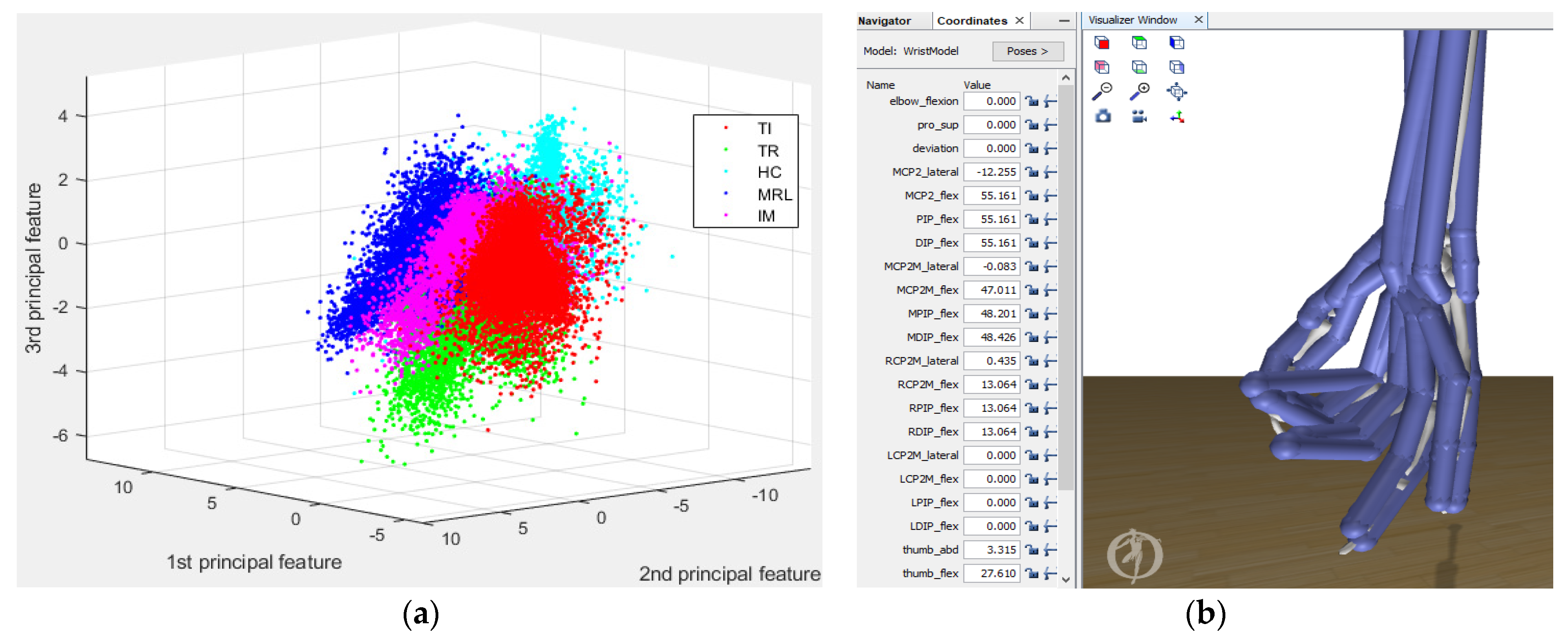

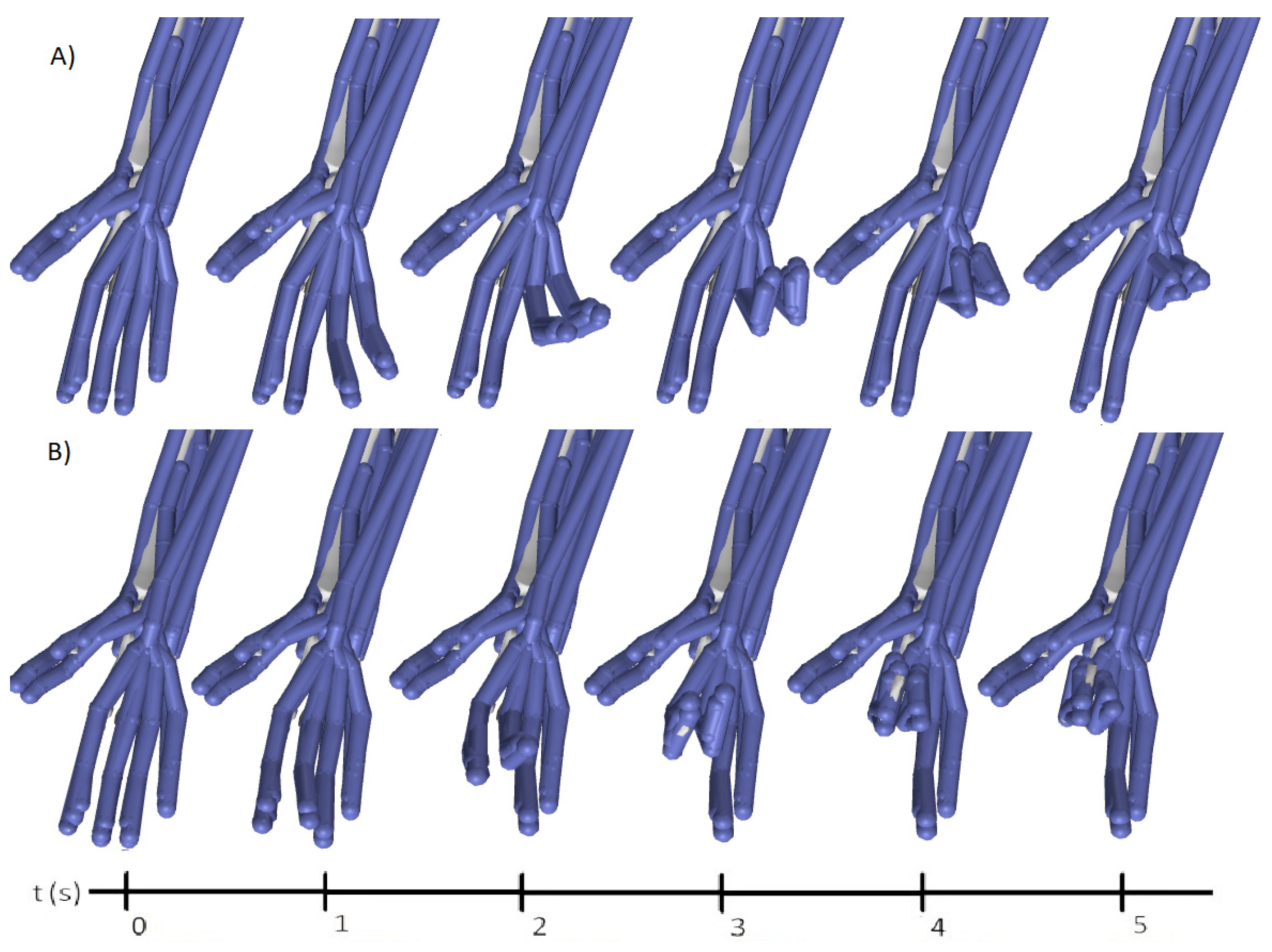

One of the first results of this work is the modified OpenSim wrist model, which includes 25 joints with DoF, as shown in Figure 4b. The model now has the characteristics needed to reproduce any of the hand movements evaluated in this work. The OpenSim model needs motion files to generate the movement of each joint. A simple method based on a DoF incremental motion vector was created for the joints involved in each of the 15 evaluated hand movements, all of which have a known position transition.

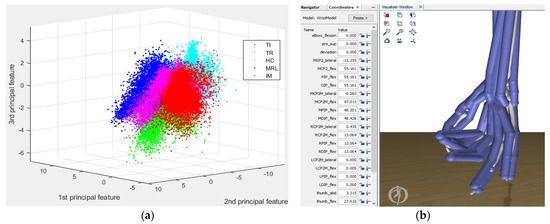

Figure 4.

This figure brings together the two parts of this research: (a) separation of the classes of 5 movements using only 3 features; (b) the modified wrist model, which has movement in the 5 fingers of the hand.

The classifier was built with Algorithm 1. Figure 4a shows the separation of the classes obtained with the dispersion matrix algorithm: with only 3 features, a separation between five of the hand gestures is already visible.

The results of the classification model for the raw data of the 8 subjects are summarized in Table 2, ordered from the worst to the best classified movement. The worst evaluated movement was R_L flexion, with a recognition rate of 53.03%, and the best was T_M, with 92.89%. The table also relates the classification of individual subjects to the best recognition percentage found; for example, for the R_L movement, which was the worst classified on average over the 8 subjects, subject 7 reached a recognition of 96.70%.

Table 2.

Percentage of average recognition of the 8 subjects.

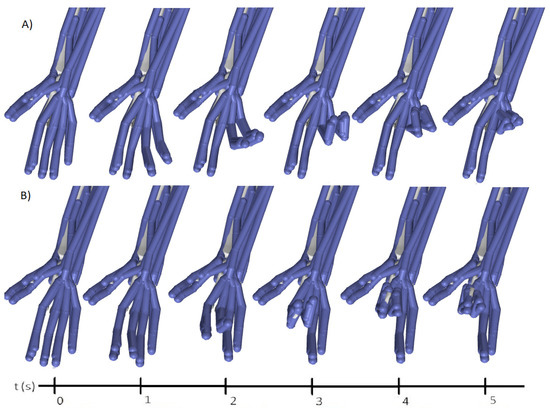

The worst classified movement, and the corresponding subject, were selected to review the full classification result with the OpenSim model and to visualize the effect of the classification error on the reproduced movement. Figure 5 shows the result of the classification of the R_L movement compared with the ideal movement over time. This method serves as an evaluation tool for the complete movement derived from the classification of the EMG recordings.

Figure 5.

Progression of the R_L movement in a classification from a raw data file. (A) Ideal positions of the ring and little fingers flexion (B) Worst case classification for the R_L fingers flexion.

4. Discussion and Conclusions

A classification model was created from an EMG database of hand gestures, obtaining a high recognition rate for the evaluated movements even though the model is used for a group of 8 subjects and 15 movements. The objective of this work was to demonstrate the use of this classification in a hand-movement simulation model, as a way to visually evaluate the success of the movement recognition by observing how stable and smooth the trajectory of the predicted hand gesture is.

The definition and implementation of the classification model are critical, and being able to evaluate the model visually is a valuable tool: a movement file can be created for any complete repetition of a movement and reproduced biomechanically in OpenSim. As the reproduction shows, a wrong classification does not always affect the total movement generated. The error translated to movement can be expressed as a movement executed at 80%, perhaps more if the correct finger movements contained within the errors are considered. For example, if the classifier indicates flexion of the index, middle and ring fingers when the actual movement is flexion of the index finger, the classification is partially good, since the predicted movement includes the correct one. Even a normal human hand, when executing a single movement with the ring finger, for example, generates movements in the other fingers.

The recognition of movements involved in daily life has prospective applications in rehabilitation and in entertainment, such as gesture-based interaction between humans and computers. The method used to generate the visualization of the movement could be improved by capturing video, or perhaps by using gloves to measure the position of each joint, when the databases are generated. In that way, the software could correlate the physical movement with the electromyography data. In any case, this work used an existing database that lacked these measurements, so an ideal motion was created for each joint; with more realistic joint positions, the simulation would be more accurate.

Author Contributions

Conceptualization, J.A.A.-G. and M.E.B.-Z.; methodology, J.A.A.-G. and M.E.B.-Z.; software, J.A.A.-G. and M.E.B.-Z.; validation, M.E.B.-Z., R.L.A. and M.A.R.; formal analysis, J.A.A.-G.; investigation, J.A.A.-G.; resources, M.E.B.-Z. and D.C.-G.; data curation, J.A.A.-G.; writing—original draft preparation, M.E.B.-Z.; writing—review and editing, R.L.A. and D.C.-G.; visualization, M.A.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by CONACYT, CVU 67944.

Institutional Review Board Statement

For the online database, all participants provided informed consent prior to participating in the study, as approved by the university research ethics committee.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Motion and model files can be found at SimTK OpenSim. Available online: https://simtk.org/projects/moving-fingers.

Acknowledgments

We are grateful to the Universidad Autonoma de Baja California for offering its facilities for the study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Adamovich, S.V.; Fluet, G.G.; Mathai, A.; Qiu, Q.; Lewis, J.; Merians, A.S. Design of a complex virtual reality simulation to train finger motion for persons with hemiparesis: A proof of concept study. J. Neuroeng. Rehabil. 2009, 6, 28.

- Molchanov, P.; Yang, X.; Gupta, S.; Kim, K.; Tyree, S.; Kautz, J. Online Detection and Classification of Dynamic Hand Gestures with Recurrent 3D Convolutional Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 4207–4215.

- Pettersson, J.; Falkman, P. Human Movement Direction Classification using Virtual Reality and Eye Tracking. Procedia Manuf. 2020, 51, 95–102.

- Wang, N.; Lao, K.; Zhang, X. Design and Myoelectric Control of an Anthropomorphic Prosthetic Hand. J. Bionic Eng. 2017, 14, 47–59.

- Arteaga, M.V.; Castiblanco, J.C.; Mondragon, I.F.; Colorado, J.D.; Alvarado-Rojas, C. EMG-driven hand model based on the classification of individual finger movements. Biomed. Signal Process. Control 2020, 58, 101834.

- SimTK. OpenSim. Available online: https://simtk.org/projects/opensim (accessed on 24 September 2021).

- Vaz, R.; Sousa, A.; Tavares, J.M.R. OpenSim and Dentistry: A Study Applied to the Prevention of Musculoskeletal Disorders in the Clinic, Master in Biomedical Engineering 2017/2018. Available online: https://web.fe.up.pt/~tavares/downloads/publications/relatorios/TP_RaquelVaz.pdf (accessed on 24 September 2021).

- Seth, A.; Sherman, M.; Reinbolt, J.A.; Delp, S.L. OpenSim: A musculoskeletal modeling and simulation framework for in silico investigations and exchange. Procedia IUTAM 2011, 2, 212–232.

- Seth, A.; Matias, R.; Veloso, A.P.; Delp, S.L. A biomechanical model of the scapulothoracic joint to accurately capture scapular kinematics during shoulder movements. PLoS ONE 2016, 11, e0101428.

- SimTK. OpenSense - Kinematics with IMU Data. Available online: https://simtk-confluence.stanford.edu:8443/display/OpenSim/OpenSense+-+Kinematics+with+IMU+Data (accessed on 24 September 2021).

- Khushaba, R.N.; Kodagoda, S. Electromyogram (EMG) feature reduction using mutual components analysis for multifunction prosthetic fingers control. In Proceedings of the 12th International Conference on Control Automation Robotics & Vision (ICARCV), Guangzhou, China, 5–7 December 2012; pp. 1534–1539.

- Wrist Model. Available online: https://simtk.org/projects/wrist-model (accessed on 24 September 2021).

- Modification of Wrist Model to Include All the Movements of the Fingers. Available online: https://simtk.org/projects/moving-fingers (accessed on 24 September 2021).

- EMG Datasets Repository. Available online: https://www.rami-khushaba.com/electromyogram-emg-repository.html and https://onedrive.live.com/?authkey=%21Ar1wo75HiU9RrLM&id=AAA78954F15E6559%21312&cid=AAA78954F15E6559 (accessed on 24 September 2021).

- Amezquita-Garcia, J.A.; Bravo-Zanoguera, M.E.; González-Navarro, F.F.; Lopez-Avitia, R. Hand Movement Detection from Surface Electromyography Signals by Machine Learning Techniques; Springer: Cham, Switzerland, 2020; Volume 75, pp. 218–227.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).