Cauchy Operator Boosted Artificial Rabbits Optimization for Solving Power System Problems

Abstract

1. Introduction

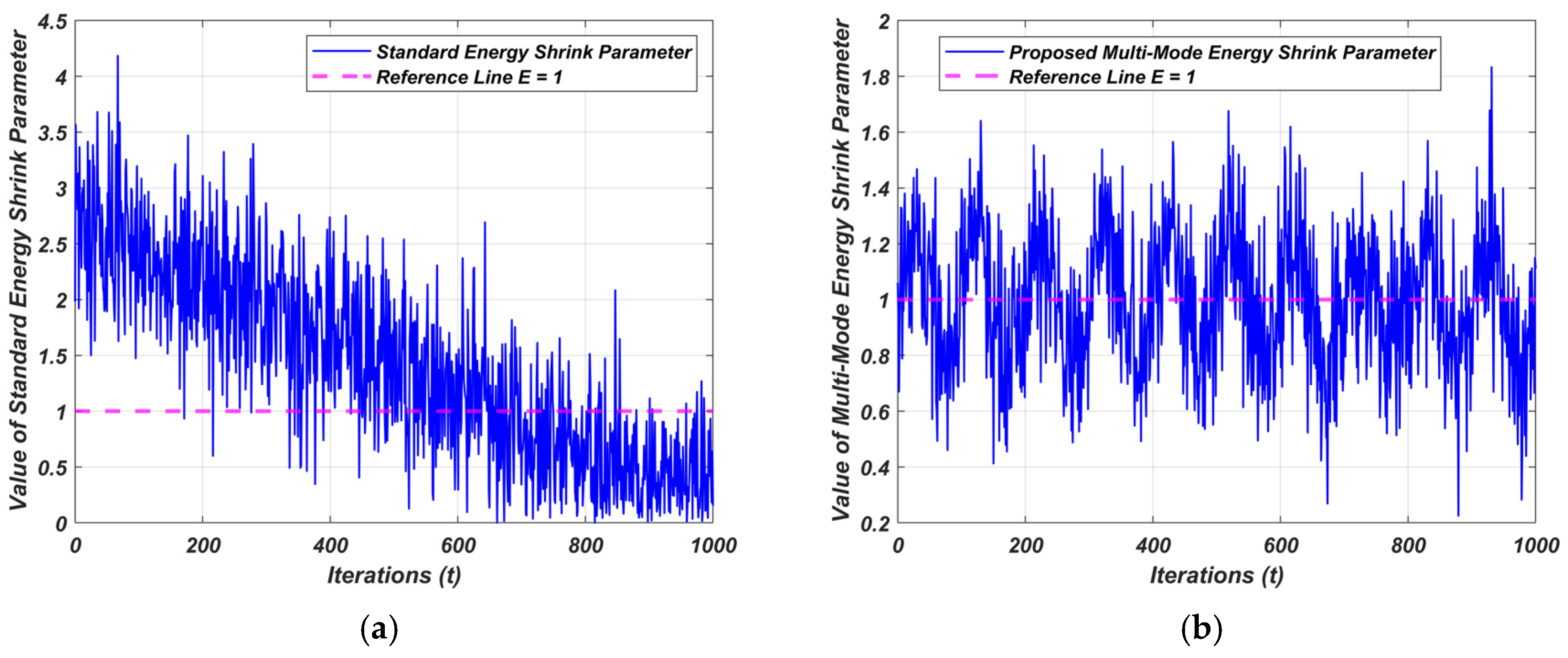

- (1)

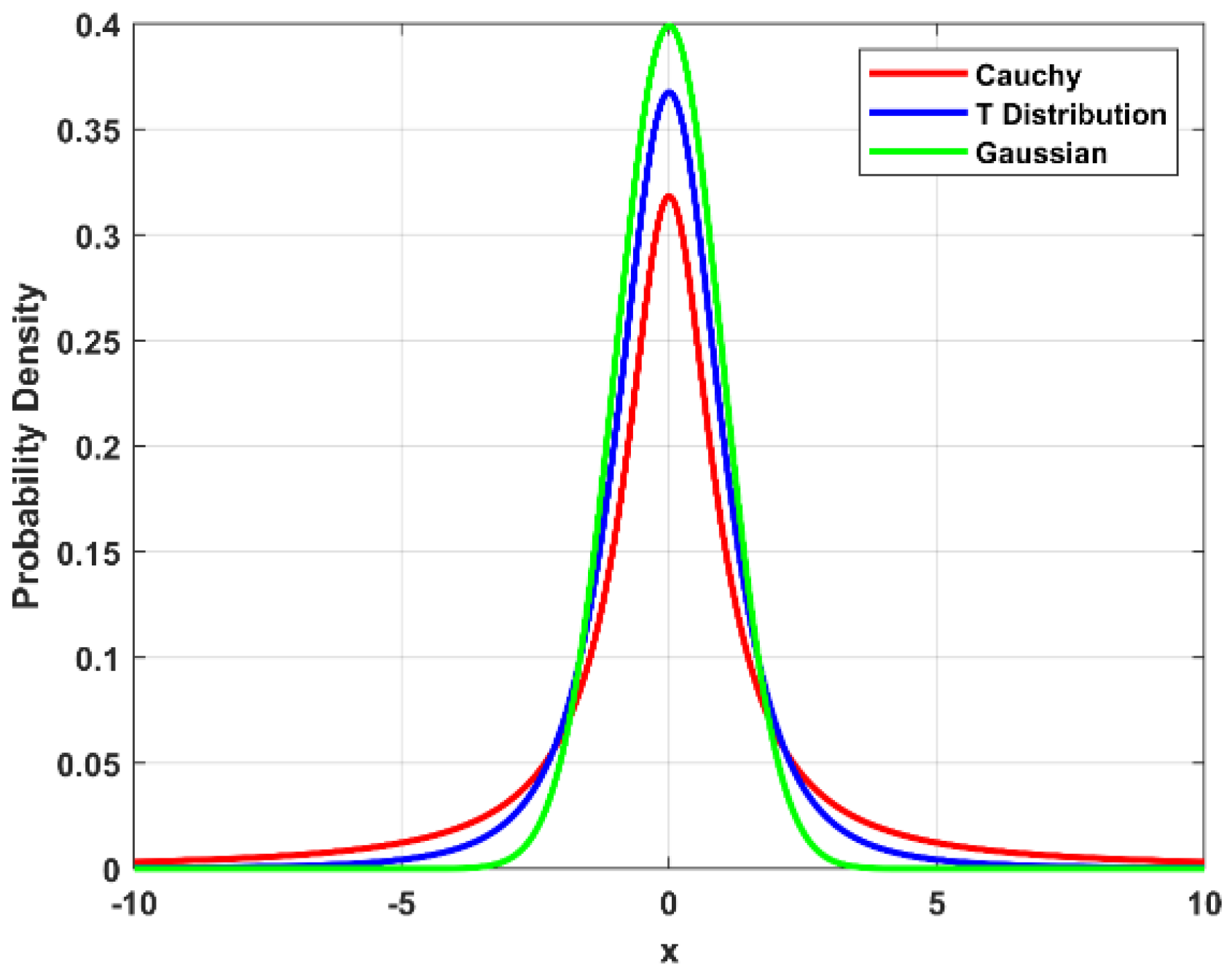

- Heavy-Tailed Distribution Advantage: The Cauchy distribution is characterized by its heavy tails and undefined mean and variance. This property allows the mutation to produce occasional large jumps in the search space, which enhances global exploration and mitigates the risk of premature convergence to local optima, which is a common limitation observed in standard ARO, especially in constrained high-dimensional problems.

- (2)

- Enhanced Exploration Capability: By introducing the Cauchy mutation in the exploration phase, the algorithm gains an increased ability to escape local optima and conduct broader searches across the solution space, leading to a more thorough investigation of potential optimal regions.

- (3)

- Convergence Acceleration: While promoting exploration, the probabilistic nature of the Cauchy operator also permits fine-tuning around promising areas due to the frequent occurrence of smaller steps near the distribution center, thus supporting a more balanced transition between exploration and exploitation.

- (4)

- Supporting Empirical Studies: Prior studies, such as the work on Cauchy mutation-enhanced Harris Hawks Optimization [48], have empirically demonstrated the effectiveness of this approach in improving both convergence speed and solution accuracy in various engineering optimization problems. This empirical background provided a solid basis for adopting a similar strategy in enhancing ARO.
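The heavy-tail and small-step properties in items (1) and (3) can be checked numerically. The Python sketch below is an illustration of the distribution only and is not the CARO operator itself. It draws standard Cauchy samples with the inverse-CDF method tan(π(u − 1/2)) and compares how often their magnitude exceeds 5 with the same rate for Gaussian samples. It also shows that about half of the Cauchy draws still fall within [−1, 1]. The seed, sample size and threshold are arbitrary choices.

```python
import math
import random

def standard_cauchy(rng: random.Random) -> float:
    """Draw one standard Cauchy sample via the inverse CDF: tan(pi * (u - 1/2))."""
    return math.tan(math.pi * (rng.random() - 0.5))

def tail_fraction(samples, threshold):
    """Fraction of samples whose magnitude exceeds `threshold`."""
    return sum(1 for s in samples if abs(s) > threshold) / len(samples)

rng = random.Random(42)
n = 100_000
cauchy = [standard_cauchy(rng) for _ in range(n)]
gauss = [rng.gauss(0.0, 1.0) for _ in range(n)]

# Analytical tail mass of the standard Cauchy: P(|X| > 5) = 1 - (2/pi) * atan(5)
exact = 1 - 2 / math.pi * math.atan(5.0)
print(f"P(|Cauchy| > 5) ~ {tail_fraction(cauchy, 5.0):.3f} (exact {exact:.3f})")
print(f"P(|Gauss| > 5)  ~ {tail_fraction(gauss, 5.0):.6f}")
# Small steps remain common: for the standard Cauchy, P(|X| < 1) = 0.5 exactly
print(f"P(|Cauchy| < 1) ~ {1 - tail_fraction(cauchy, 1.0):.3f}")
```

Large jumps (|X| > 5) occur in roughly one Cauchy draw in eight but essentially never for the Gaussian. Meanwhile, half of the Cauchy draws remain in the unit interval, which is the mix of exploration and fine-tuning described above.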

- (1) A novel optimization framework is proposed that enhances the Artificial Rabbits Optimization (ARO) algorithm with a Cauchy mutation operator and a multi-mode energy shrink control parameter. This framework provides an effective structure for solving complex constrained engineering problems.

- (2) Compared with the standard ARO, the main improvement strengthens the exploration phase and reduces the probability of becoming trapped in local optima. This is achieved by introducing the Cauchy mutation operator. In addition, a novel multi-mode control parameter is proposed that allows a seamless transition between exploration and exploitation. This avoids premature convergence and ultimately improves both the exploration and exploitation of the search space.

- (3) The proposed CARO is applied to eleven power system problems drawn from the IEEE CEC2020 constrained engineering benchmark functions. Its performance is assessed with comprehensive evaluation criteria: the best, mean, worst, and standard deviation (std) of the results, together with spider plots. These criteria demonstrate the superiority of CARO.
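The four numerical criteria listed above can be computed directly from the final objective values of repeated independent runs. Below is a minimal Python sketch. The run values are made up for illustration. The sketch also assumes minimization and the sample (n − 1) standard deviation, since this section does not say which definition the paper uses.

```python
import statistics

def summarize_runs(final_values):
    """Best, Mean, Worst and Std of the final objective values from independent runs.
    Assumes minimization, so Best is the smallest value and Worst the largest."""
    return {
        "Best": min(final_values),
        "Mean": statistics.fmean(final_values),
        "Worst": max(final_values),
        # Assumption: sample standard deviation (n - 1 denominator);
        # statistics.pstdev would give the population version instead.
        "Std": statistics.stdev(final_values),
    }

runs = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # hypothetical final values of 8 runs
print(summarize_runs(runs))  # Best 2.0, Mean 5.0, Worst 9.0, Std = sqrt(32/7) ~ 2.138
```

With the population definition, the same runs would give Std = 2.0. The choice matters when Std values from different papers are compared.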

2. Artificial Rabbits Optimization (ARO)

2.1. Detour Foraging (Exploration)

2.2. Random Hiding (Exploitation)

2.3. Energy Shrink (Switch from Exploration to Exploitation)

3. Proposed Cauchy Artificial Rabbits Optimization (CARO)

3.1. Cauchy Distribution

3.2. Proposed Cauchy Operator

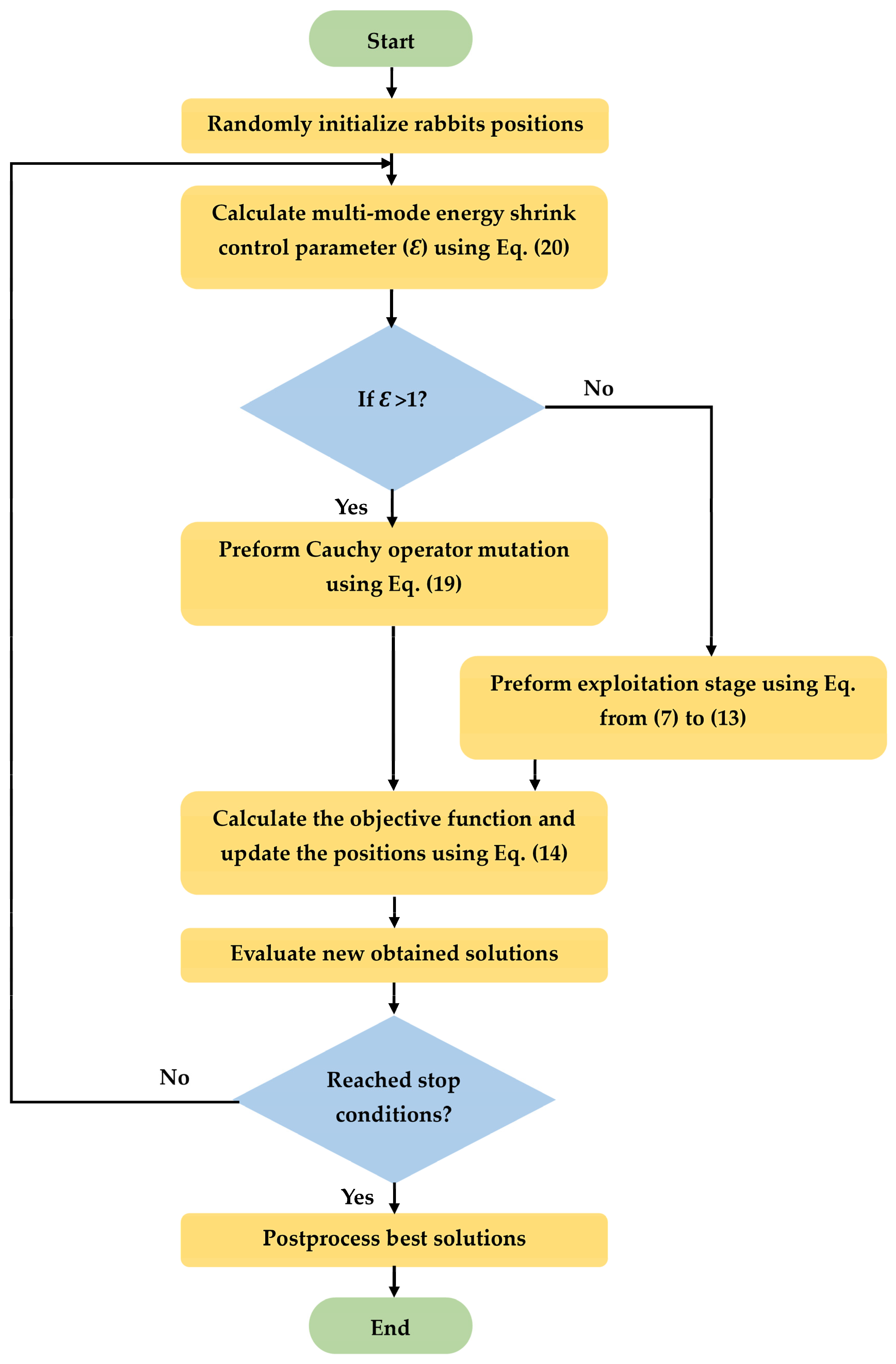

3.3. The Proposed Cauchy Artificial Rabbits’ Optimization Design (CARO)

| Algorithm 1. Pseudo-code of CARO |
|---|
| Start CARO |
| if (exploration phase) |
| Perform the Cauchy mutation operator (Equation (19)). |
| Update the position of the ith rabbit (Equation (14)). |
| else (exploitation phase) |
| Perform the random hiding strategy (Equation (11)). |
| Update the position of the ith rabbit (Equation (14)). |
| end if |
| end if |
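The control flow of Algorithm 1 can be sketched in Python as shown below. This is a minimal, hypothetical sketch and not the authors' implementation, because Equation (19) and CARO's multi-mode energy shrink parameter are not reproduced in this section. Several substitutions are made. The phase switch uses the original ARO energy factor A(t) = 4(1 − t/T)·ln(1/r), with exploration when A > 1, as we understand the original ARO paper. `cauchy_mutation` is a hypothetical Cauchy step, and `random_hiding` is a simplified shrinking perturbation rather than Equation (11). The position update keeps the better of the old and new positions, which we assume is what Equation (14) expresses. The sphere objective, bounds and population settings are illustrative.

```python
import math
import random

def sphere(x):
    """Toy objective (sum of squares) standing in for a real power system case."""
    return sum(v * v for v in x)

def cauchy_sample(rng):
    """Standard Cauchy draw via the inverse CDF, tan(pi * (u - 0.5))."""
    return math.tan(math.pi * (rng.random() - 0.5))

def energy_factor(t, T, rng):
    """Original ARO energy factor A(t) = 4 (1 - t/T) ln(1/r).
    CARO's multi-mode control parameter is NOT reproduced here."""
    r = max(rng.random(), 1e-12)  # guard against log(1/0)
    return 4.0 * (1.0 - t / T) * math.log(1.0 / r)

def cauchy_mutation(x, peer, rng):
    """HYPOTHETICAL stand-in for Equation (19): a heavy-tailed step per
    dimension along the line between a random peer and the current rabbit."""
    return [p + cauchy_sample(rng) * (xi - p) for xi, p in zip(x, peer)]

def random_hiding(x, t, T, rng):
    """Simplified local search in the spirit of random hiding (not Eq. (11)):
    a multiplicative perturbation whose scale H shrinks over the iterations."""
    h = (T - t + 1) / T * rng.random()
    return [xi + h * rng.gauss(0.0, 1.0) * xi for xi in x]

def caro_sketch(f, dim, lb, ub, n=30, T=200, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    fit = [f(x) for x in pop]
    history = [min(fit)]  # best fitness before the first iteration
    for t in range(1, T + 1):
        for i in range(n):
            if energy_factor(t, T, rng) > 1.0:  # exploration phase
                j = rng.choice([k for k in range(n) if k != i])
                cand = cauchy_mutation(pop[i], pop[j], rng)
            else:  # exploitation phase
                cand = random_hiding(pop[i], t, T, rng)
            cand = [min(max(v, lb), ub) for v in cand]  # clip to the bounds
            f_cand = f(cand)
            if f_cand < fit[i]:  # greedy keep-the-better update (assumed Eq. (14))
                pop[i], fit[i] = cand, f_cand
        history.append(min(fit))
    best = min(range(n), key=fit.__getitem__)
    return pop[best], fit[best], history

best_x, best_f, history = caro_sketch(sphere, dim=5, lb=-5.0, ub=5.0)
print(f"initial best {history[0]:.3e} -> final best {best_f:.3e}")
```

Because the update only ever replaces a rabbit with a better candidate, the best-so-far curve in `history` cannot increase. A faithful CARO would replace `cauchy_mutation` and `energy_factor` with Equation (19) and the multi-mode parameter from Section 3.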

3.4. Computational Complexity Analysis

4. Power System Problems

- (1) Case 1: Optimal sizing of single-phase distributed generation with reactive power support for phase balancing at the main transformer/grid. Practical distribution systems frequently experience imbalances, which produce negative- and zero-sequence currents. This imbalance can make rotating equipment operate inefficiently and can also cause losses in the neutral conductor. In a balanced system, the neutral current is zero, and the conductor that serves as neutral is designed to carry a smaller current under specific conditions.

- (2) Case 2: Optimal sizing of distributed generation for active power loss minimization. The main goal of this case is to determine the appropriate capacity and configuration of DG units within an electrical distribution system so as to reduce active power losses. These losses occur when electricity is dissipated as heat while flowing through power lines and components, which lowers the overall efficiency of the distribution network. Optimal DG sizing mitigates these losses by placing and sizing generators strategically. This case can be expressed as follows:

- (3) Case 3: Optimal sizing of distributed generation (DG) and capacitors for reactive power loss minimization. Inductive elements of power systems, such as transformers, consume reactive power, which degrades system performance and increases losses. To address this issue, shunt capacitors (SCs) are employed to supply reactive power, thereby improving the reactive power (VAR) balance of the system. DGs, in turn, are an efficient means of reducing active power losses, and integrating SCs with DGs reduces the power losses further. Consequently, this case can be formulated as a constrained optimization problem.

- (4) Case 4: Optimal power flow (minimization of active power loss). Optimal power flow remains an active research topic. It can be framed as a single-objective constrained optimization benchmark. The goal is to optimize factors such as transmission losses, fuel cost, voltage stability, and emissions while satisfying the operating constraints. In this case, the primary objective is to minimize active power losses:

- (5) Case 5: Optimal power flow (minimization of fuel cost). Here the objective is to minimize the fuel cost, which is formulated as another objective function within the same constrained optimization framework. In this objective, aᵢ, bᵢ, and cᵢ are the cost coefficients of the ith bus generator.

- (6) Case 6: Optimal power flow (minimization of active power loss and fuel cost). This case must balance the trade-off between loss reduction and fuel cost minimization. Efficient optimization techniques are required to solve it and thereby improve the economic and operational performance of power systems. Here aᵢ, bᵢ, and cᵢ are the cost coefficients of the ith bus generator, and λᵢ are the weight factors.

- (7) Case 7: Microgrid power flow (islanded case). Operational analysis of this case requires a suitable power flow tool. In droop-based islanded microgrids (DBIMGs), droop controllers govern how active and reactive power are shared among distributed generators (DGs). In traditional power flow procedures, each bus is described by four variables: voltage angle, voltage magnitude, active power, and reactive power. Two of these variables are specified according to the bus type. Traditional approaches are not well suited to this case because the operating frequency is treated as an additional unknown. The equations of this case, formulated as a constrained benchmark, are outlined below:

- (8) Case 8: Microgrid power flow (grid-connected case).

- (9) Case 9: Optimal setting of droop controller for minimization of active power loss in islanded microgrids (IMGs). In IMG applications, DGs play a critical role in sharing local loads while keeping bus voltages and system frequency within acceptable limits. Line currents across the grid must also stay within specified bounds. IMGs employ various droop control schemes to improve power sharing among DGs, and these schemes must be not only stable but also optimized for performance. To reduce active power losses in IMGs, the droop settings must be tuned. This challenge can be framed as a complex constrained optimization problem.

- (10) Case 10: Optimal setting of droop controller for minimization of reactive power loss in islanded microgrids. The droop controller settings strongly affect reactive power losses and the efficient operation of islanded microgrids. To reduce reactive power losses, parameters such as the droop slopes and the reference voltage and frequency must be carefully tuned. This case can be formulated as follows:

- (11) Case 11: Wind farm layout problem (WFLP). The WFLP is a complex optimization challenge. It seeks the arrangement of wind turbines within a designated area that maximizes energy production subject to various constraints and objectives. Its primary aim is an efficient layout that maximizes the wind farm's energy output while minimizing costs.
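The case descriptions above rest on a handful of textbook relations. The Python sketch below collects minimal versions of them:

- the Fortescue symmetrical-component transform and the neutral current (Case 1);
- branch losses (Case 2);
- a quadratic fuel cost (Case 5);
- a weighted sum of loss and cost (Case 6);
- the steady-state frequency of P–f droop units (Case 7);
- a pairwise turbine spacing check (Case 11).

These are illustrative assumptions and not the CEC2020 benchmark formulations used in the paper. In particular, the quadratic cost form, the weights, the lossless droop model and the minimum spacing are all hypothetical.

```python
import cmath
import math

A_OP = cmath.exp(2j * math.pi / 3)  # Fortescue operator a = 1 at an angle of 120 degrees

def sequence_currents(ia, ib, ic):
    """Case 1: zero-, positive- and negative-sequence components of phase currents."""
    i0 = (ia + ib + ic) / 3
    i1 = (ia + A_OP * ib + A_OP ** 2 * ic) / 3
    i2 = (ia + A_OP ** 2 * ib + A_OP * ic) / 3
    return i0, i1, i2

def neutral_current(ia, ib, ic):
    """Case 1: the neutral current is the phasor sum of phase currents (= 3 * I0)."""
    return ia + ib + ic

def branch_losses(currents, resistances):
    """Case 2: total active power loss, sum of |I_k|^2 * R_k over the branches."""
    return sum(abs(i) ** 2 * r for i, r in zip(currents, resistances))

def fuel_cost(p_gen, a, b, c):
    """Case 5: quadratic fuel cost, ASSUMED form sum(a_i + b_i * P_i + c_i * P_i^2)."""
    return sum(ai + bi * p + ci * p * p for p, ai, bi, ci in zip(p_gen, a, b, c))

def weighted_objective(loss, cost, w_loss, w_cost):
    """Case 6: weighted-sum scalarization of loss and cost (weights are hypothetical)."""
    return w_loss * loss + w_cost * cost

def droop_frequency(omega0, m, p_load):
    """Case 7: common frequency of P-f droop units (omega = omega0 - m_i * P_i),
    assuming a lossless network so that the unit outputs sum to the load."""
    omega = omega0 - p_load / sum(1.0 / mi for mi in m)
    return omega, [(omega0 - omega) / mi for mi in m]

def spacing_violations(xy, min_dist):
    """Case 11: turbine pairs closer than a (hypothetical) minimum spacing."""
    return [(i, j) for i in range(len(xy)) for j in range(i + 1, len(xy))
            if math.dist(xy[i], xy[j]) < min_dist]

# Balanced positive-sequence currents give (numerically) zero neutral current.
print(abs(neutral_current(1, A_OP ** 2, A_OP)))
# Per-unit example: the unit with the smaller droop slope takes 2/3 of the load.
print(droop_frequency(1.0, [0.01, 0.02], 1.0))
```

The droop example illustrates why frequency becomes an extra unknown in Case 7. In an islanded microgrid, the common frequency is fixed by the load and the droop slopes rather than imposed by a slack bus.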

5. Experimental Analysis and Results

5.1. Experimental Setup

5.2. CARO for Power System Problems

5.3. Statistical Results Analysis

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CARO | Cauchy Artificial Rabbits Optimization |
| ARO | Artificial Rabbits Optimization |
| AHA | Artificial Hummingbird Algorithm |
| BWO | Beluga Whale Optimization |
| DMO | Dwarf Mongoose Optimization |
| DO | Dandelion Optimizer |
| GJO | Golden Jackal Optimization |
| HBA | Honey Badger Algorithm |
| SCSO | Sand Cat Swarm Optimization |
| SO | Snake Optimizer |
| MPA | Marine Predators Algorithm |
| AGPSO | Autonomous Groups Particle Swarm Optimization |
| IMODE | Improved Multi-Operator Differential Evolution |
| LSHADE-SPACMA | LSHADE with Semi-Parameter Adaptation Hybrid with CMA-ES |
| LS_SP | LSHADE-SPACMA |
| CEC2020 | 2020 IEEE Congress on Evolutionary Computation (CEC) |
| DG | Distributed Generation |
| SC | Shunt Capacitors |
| DBIMGs | Droop-Based Islanded Microgrids |
| PFP | Power Flow Problem |
| NNTs | Newton’s Numerical Techniques |
| IMG | Islanded Microgrids |
| WFLP | Wind Farm Layout Problem |

References

- Dutta, R.; Das, S.; De, S. Multi Criteria Decision Making with Machine-Learning Based Load Forecasting Methods for Techno-Economic and Environmentally Sustainable Distributed Hybrid Energy Solution. Energy Convers. Manag. 2023, 291, 117316.

- Byles, D.; Mohagheghi, S. Sustainable Power Grid Expansion: Life Cycle Assessment, Modeling Approaches, Challenges, and Opportunities. Sustainability 2023, 15, 8788.

- Sadeeq, H.T.; Abdulazeez, A.M. Car Side Impact Design Optimization Problem Using Giant Trevally Optimizer. Structures 2023, 55, 39–45.

- Sadeeq, H.T.; Abdulazeez, A.M. Improved Northern Goshawk Optimization Algorithm for Global Optimization. In Proceedings of the 2022 4th International Conference on Advanced Science and Engineering (ICOASE), Zakho, Iraq, 21–22 September 2022; pp. 89–94.

- Das, S.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for CEC 2011 Competition on Testing Evolutionary Algorithms on Real World Optimization Problems. Technical Report. 2011, pp. 341–359. Available online: https://al-roomi.org/multimedia/CEC_Database/CEC2011/CEC2011_TechnicalReport.pdf (accessed on 21 July 2025).

- Lagaros, N.D.; Kournoutos, M.; Kallioras, N.A.; Nordas, A.N. Constraint Handling Techniques for Metaheuristics: A State-of-the-Art Review and New Variants. Optim. Eng. 2023, 24, 2251–2298.

- Sadeeq, H.T.; Abdulazeez, A.M. Metaheuristics: A Review of Algorithms. Int. J. Online Biomed. Eng. 2023, 19, 142–164.

- Koza, J.R. Genetic Programming, on the Programming of Computers by Means of Natural Selection; A Bradford Book; MIT Press: Cambridge, MA, USA, 1992.

- Storn, R.; Price, K. Differential Evolution—A Simple and Efficient Heuristic for Global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359.

- Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Abdel-Basset, M.; Mohamed, R.; Jameel, M.; Abouhawwash, M. Nutcracker Optimizer: A Novel Nature-Inspired Metaheuristic Algorithm for Global Optimization and Engineering Design Problems. Knowl.-Based Syst. 2023, 262, 110248.

- Sadeeq, H.T.; Abdulazeez, A.M. Giant Trevally Optimizer (GTO): A Novel Metaheuristic Algorithm for Global Optimization and Challenging Engineering Problems. IEEE Access 2022, 10, 121615–121640.

- Wang, X. Draco Lizard Optimizer: A Novel Metaheuristic Algorithm for Global Optimization Problems. Evol. Intell. 2024, 18, 10.

- Hatamlou, A. Black Hole: A New Heuristic Optimization Approach for Data Clustering. Inf. Sci. 2013, 222, 175–184.

- Hashim, F.A.; Hussain, K.; Houssein, E.; Mabrouk, M.; Al-Atabany, W. Archimedes Optimization Algorithm: A New Metaheuristic Algorithm for Solving Optimization Problems. Appl. Intell. 2021, 51, 1531–1551.

- Azizi, M.; Aickelin, U.; Khorshidi, H.A.; Baghalzadeh Shishehgarkhaneh, M. Energy Valley Optimizer: A Novel Metaheuristic Algorithm for Global and Engineering Optimization. Sci. Rep. 2023, 13, 226.

- Shi, Y. Brain Storm Optimization Algorithm. In Advances in Swarm Intelligence; Lecture Notes in Computer Science; International Conference in Swarm Intelligence; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6728 LNCS, pp. 303–309.

- Zhang, Y.; Jin, Z. Group Teaching Optimization Algorithm: A Novel Metaheuristic Method for Solving Global Optimization Problems. Expert Syst. Appl. 2020, 148, 113246.

- Hu, G.; Guo, Y.; Zhong, J.; Wei, G. IYDSE: Ameliorated Young’s Double-Slit Experiment Optimizer for Applied Mechanics and Engineering. Comput. Methods Appl. Mech. Eng. 2023, 412, 116062.

- Duzgun, E.; Acar, E.; Yildiz, A.R. A Novel Chaotic Artificial Rabbits Algorithm for Optimization of Constrained Engineering Problems. Mater. Test. 2024, 66, 1449–1462.

- Zaid, S.A.; Bakeer, A.; Albalawi, H.; Alatwi, A.M.; AbdelMeguid, H.; Kassem, A.M. Optimal Fractional-Order Controller for the Voltage Stability of a DC Microgrid Feeding an Electric Vehicle Charging Station. Fractal Fract. 2023, 7, 677.

- Albalawi, H.; Zaid, S.A.; Alatwi, A.M.; Moustafa, M.A. Application of an Optimal Fractional-Order Controller for a Standalone (Wind/Photovoltaic) Microgrid Utilizing Hybrid Storage (Battery/Ultracapacitor) System. Fractal Fract. 2024, 8, 629.

- Ravi, S.; Premkumar, M.; Abualigah, L. Comparative Analysis of Recent Metaheuristic Algorithms for Maximum Power Point Tracking of Solar Photovoltaic Systems under Partial Shading Conditions. Int. J. Appl. Power Eng. 2023, 12, 196–217.

- Pervez, I.; Pervez, A.; Tariq, M.; Sarwar, A.; Chakrabortty, R.K.; Ryan, M.J. Rapid and Robust Adaptive Jaya (Ajaya) Based Maximum Power Point Tracking of a PV-Based Generation System. IEEE Access 2021, 9, 48679–48703.

- Amine Tahiri, M.; Zohra El hlouli, F.; Bencherqui, A.; Karmouni, H.; Amakdouf, H.; Sayyouri, M.; Qjidaa, H. White Blood Cell Automatic Classification Using Deep Learning and Optimized Quaternion Hybrid Moments. Biomed. Signal Process. Control 2023, 86, 105128.

- Saranya, R.; Jaichandran, R. A Dense Kernel Point Convolutional Neural Network for Chronic Liver Disease Classification with Hybrid Chaotic Slime Mould and Giant Trevally Optimizer. Biomed. Signal Process. Control 2025, 102, 107219.

- Aribowo, W. A Novel Improved Sea-Horse Optimizer for Tuning Parameter Power System Stabilizer. J. Robot. Control 2023, 4, 12–22.

- Emam, M.M.; Houssein, E.H.; Ghoniem, R.M. A Modified Reptile Search Algorithm for Global Optimization and Image Segmentation: Case Study Brain MRI Images. Comput. Biol. Med. 2023, 152, 106404.

- Wang, Y.; Huang, L.; Zhong, J.; Hu, G. LARO: Opposition-Based Learning Boosted Artificial Rabbits-Inspired Optimization Algorithm with Lévy Flight. Symmetry 2022, 14, 2282.

- Sadeeq, H.T.; Abrahim, A.; Hameed, T.; Kako, N.; Mohammed, R.; Ahmed, D. An Improved Pelican Optimization Algorithm for Function Optimization and Constrained Engineering Design Problems. Decis. Sci. Lett. 2025, 14, 623–640.

- Tiwari, A.; Chaturvedi, A. A Hybrid Feature Selection Approach Based on Information Theory and Dynamic Butterfly Optimization Algorithm for Data Classification. Expert Syst. Appl. 2022, 196, 116621.

- Houssein, E.H.; Oliva, D.; Samee, N.A.; Mahmoud, N.F.; Emam, M.M. Liver Cancer Algorithm: A Novel Bio-Inspired Optimizer. Comput. Biol. Med. 2023, 165, 107389.

- Deng, L.; Shu, T.; Xia, J. Multi-Strategy Improved Artificial Rabbit Algorithm for QoS-Aware Service Composition in Cloud Manufacturing. Algorithms 2025, 18, 107.

- Wang, R.; Zhang, S.; Jin, B. Improved Multi-Strategy Artificial Rabbits Optimization for Solving Global Optimization Problems. Sci. Rep. 2024, 14, 18295.

- Shu, T.; Pan, Z.; Ding, Z.; Zu, Z. Resource Scheduling Optimization for Industrial Operating System Using Deep Reinforcement Learning and WOA Algorithm. Expert Syst. Appl. 2024, 255, 124765.

- SeyedOskouei, S.L.; Sojoudizadeh, R.; Milanchian, R.; Azizian, H. Shape and Size Optimization of Truss Structure by Means of Improved Artificial Rabbits Optimization Algorithm. Eng. Optim. 2024, 56, 2329–2358.

- Aljumah, A.S.; Alqahtani, M.H.; Shaheen, A.M.; Ginidi, A.R. Adaptive Operational Allocation of D-SVCs in Distribution Feeders Using Modified Artificial Rabbits Algorithm. Electr. Power Syst. Res. 2025, 245, 111588.

- Singh, S.P.; Dhiman, G.; Juneja, S.; Viriyasitavat, W.; Singal, G.; Kumar, N.; Johri, P. A New QoS Optimization in IoT-Smart Agriculture Using Rapid Adaption Based Nature-Inspired Approach. IEEE Internet Things J. 2023, 11, 5417–5426.

- Qaraad, M.; Amjad, S.; Hussein, N.K.; Mirjalili, S.; Elhosseini, M.A. An Innovative Time-Varying Particle Swarm-Based Salp Algorithm for Intrusion Detection System and Large-Scale Global Optimization Problems. Artif. Intell. Rev. 2023, 56, 8325–8392.

- Reddy, K.M.K.; Rao, A.K.; Rao, R.S. An Improved Grey Wolf Algorithm for Optimal Placement of Unified Power Flow Controller. Adv. Eng. Softw. 2022, 173, 103187.

- Ahmed, Z.H.; Maleki, F.; Yousefikhoshbakht, M.; Haron, H. Solving the Vehicle Routing Problem with Time Windows Using Modified Football Game Algorithm. Egypt. Inform. J. 2023, 24, 100403.

- Sang-To, T.; Le-Minh, H.; Abdel Wahab, M.; Thanh, C.L. A New Metaheuristic Algorithm: Shrimp and Goby Association Search Algorithm and Its Application for Damage Identification in Large-Scale and Complex Structures. Adv. Eng. Softw. 2023, 176, 103363.

- Rajwar, K.; Deep, K.; Das, S. An Exhaustive Review of the Metaheuristic Algorithms for Search and Optimization: Taxonomy, Applications, and Open Challenges; Springer: Cham, Switzerland, 2023.

- Jin, J.; Wang, P. Multiscale Quantum Harmonic Oscillator Algorithm with Guiding Information for Single Objective Optimization. Swarm Evol. Comput. 2021, 65, 100916.

- Makhadmeh, S.N.; Khader, A.T.; Al-Betar, M.A.; Naim, S.; Abasi, A.K.; Alyasseri, Z.A.A. A Novel Hybrid Grey Wolf Optimizer with Min-Conflict Algorithm for Power Scheduling Problem in a Smart Home. Swarm Evol. Comput. 2021, 60, 100793.

- Wang, L.; Cao, Q.; Zhang, Z.; Mirjalili, S.; Zhao, W. Artificial Rabbits Optimization: A New Bio-Inspired Meta-Heuristic Algorithm for Solving Engineering Optimization Problems. Eng. Appl. Artif. Intell. 2022, 114, 105082.

- Wang, Y.; Xiao, Y.; Guo, Y.; Li, J. Dynamic Chaotic Opposition-Based Learning-Driven Hybrid Aquila Optimizer and Artificial Rabbits Optimization Algorithm: Framework and Applications. Processes 2022, 10, 2703.

- Shan, W.; He, X.; Liu, H.; Heidari, A.A.; Wang, M.; Cai, Z.; Chen, H. Cauchy Mutation Boosted Harris Hawk Algorithm: Optimal Performance Design and Engineering Applications. J. Comput. Des. Eng. 2023, 10, 503–526.

- Tang, H.; Lee, J. Adaptive Initialization LSHADE Algorithm Enhanced with Gradient-Based Repair for Real-World Constrained Optimization. Knowl.-Based Syst. 2022, 246, 108696.

- Kumar, A.; Wu, G.; Ali, M.Z.; Mallipeddi, R.; Suganthan, P.N.; Das, S. A Test-Suite of Non-Convex Constrained Optimization Problems from the Real-World and Some Baseline Results. Swarm Evol. Comput. 2020, 56, 100693.

- Zhao, W.; Wang, L.; Mirjalili, S. Artificial Hummingbird Algorithm: A New Bio-Inspired Optimizer with Its Engineering Applications. Comput. Methods Appl. Mech. Eng. 2022, 388, 114194.

- Zhong, C.; Li, G.; Meng, Z. Beluga Whale Optimization: A Novel Nature-Inspired Metaheuristic Algorithm. Knowl.-Based Syst. 2022, 251, 109215.

- Agushaka, J.O.; Ezugwu, A.E.; Abualigah, L. Dwarf Mongoose Optimization Algorithm. Comput. Methods Appl. Mech. Eng. 2022, 391, 114570.

- Zhao, S.; Zhang, T.; Ma, S.; Chen, M. Dandelion Optimizer: A Nature-Inspired Metaheuristic Algorithm for Engineering Applications. Eng. Appl. Artif. Intell. 2022, 114, 105075.

- Chopra, N.; Ansari, M.M. Golden Jackal Optimization: A Novel Nature-Inspired Optimizer for Engineering Applications. Expert Syst. Appl. 2022, 198, 116924.

- Hashim, F.A.; Houssein, E.H.; Hussain, K.; Mabrouk, M.S.; Al-Atabany, W. Honey Badger Algorithm: New Metaheuristic Algorithm for Solving Optimization Problems. Math. Comput. Simul. 2022, 192, 84–110.

- Seyyedabbasi, A.; Kiani, F. Sand Cat Swarm Optimization: A Nature-Inspired Algorithm to Solve Global Optimization Problems. Eng. Comput. 2023, 39, 2627–2651.

- Hashim, F.A.; Hussien, A.G. Snake Optimizer: A Novel Meta-Heuristic Optimization Algorithm. Knowl.-Based Syst. 2022, 242, 108320.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A Nature-Inspired Metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Mirjalili, S.; Lewis, A.; Sadiq, A.S. Autonomous Particles Groups for Particle Swarm Optimization. Arab. J. Sci. Eng. 2014, 39, 4683–4697.

- Sallam, K.M.; Elsayed, S.M.; Chakrabortty, R.K.; Ryan, M.J. Improved Multi-Operator Differential Evolution Algorithm for Solving Unconstrained Problems. In Proceedings of the 2020 IEEE Congress on Evolutionary Computation (CEC), Glasgow, UK, 19–24 July 2020.

- Mohamed, A.W.; Hadi, A.A.; Fattouh, A.M.; Jambi, K.M. LSHADE with Semi-Parameter Adaptation Hybrid with CMA-ES for Solving CEC 2017 Benchmark Problems. In Proceedings of the 2017 IEEE Congress on Evolutionary Computation (CEC), Donostia-San Sebastián, Spain, 5–8 June 2017; pp. 145–152.

- Liang, J.J.; Suganthan, P.N.; Qu, B.Y.; Gong, D.W.; Yue, C.T. Computational Intelligence Laboratory, Zhengzhou University: Zhengzhou, China, 2020.

| Parameter | Description | Value/Range |
|---|---|---|
| n | Number of rabbits | 30–100 |
| t | Iteration count | Current iteration count |
| T | Maximum number of iterations | 1000–3000 |
| d | Number of dimensions | Depends on the specific problem |
| | Random numbers | [0, 1] |
| ℘ | Random number | Random number following the Cauchy distribution |
| | Amplitude of sinusoidal oscillations | 0.2 |
| | Scaling coefficient | 0.2 |
| | Frequency of oscillations | |
| Ɛ | Energy shrink control | 2→0.2 |

| Case | D | h (equality constraints) | g (inequality constraints) | f(x*) (best known) |
|---|---|---|---|---|
| 1 | 118 | 108 | 0 | 0.0000 |
| 2 | 153 | 148 | 0 | 0.0890 |
| 3 | 158 | 148 | 0 | 0.0720 |
| 4 | 126 | 116 | 0 | 0.0219 |
| 5 | 126 | 116 | 0 | 2.7766 |
| 6 | 126 | 116 | 0 | 2.8677 |
| 7 | 76 | 76 | 0 | 0.0000 |
| 8 | 74 | 74 | 0 | 0.0000 |
| 9 | 86 | 76 | 0 | 0.0862 |
| 10 | 86 | 76 | 0 | 0.0804 |
| 11 | 30 | 0 | 91 | −6260.7000 |
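These benchmarks combine equality constraints h(x) = 0, such as power flow balance, with inequality constraints g(x) ≤ 0. A common way to aggregate them is the mean constraint violation, with a small tolerance ε on the equality constraints. We understand the CEC2020 real-world suite to use this measure, but the reader should check the official problem definitions. The Python sketch below assumes ε = 1e-4. It compares candidates with Deb's feasibility rules, which is an assumed constraint-handling choice that this section does not confirm for CARO.

```python
def mean_violation(g_values, h_values, eps=1e-4):
    """Mean constraint violation over inequality (g(x) <= 0) and equality (h(x) = 0)
    constraints. Assumption: an equality constraint counts as satisfied when |h(x)| <= eps."""
    total = sum(max(g, 0.0) for g in g_values)
    total += sum(abs(h) for h in h_values if abs(h) > eps)
    count = len(g_values) + len(h_values)
    return total / count if count else 0.0

def is_better(f_a, v_a, f_b, v_b):
    """Deb's feasibility rules (assumed): a feasible solution beats an infeasible one,
    two feasible solutions compare by objective, two infeasible ones by violation."""
    if v_a == 0.0 and v_b == 0.0:
        return f_a < f_b
    if v_a == 0.0 or v_b == 0.0:
        return v_a == 0.0
    return v_a < v_b

# Hypothetical constraint values: one satisfied and one violated constraint of each type.
v = mean_violation(g_values=[-1.0, 0.5], h_values=[5e-5, 0.2])
print(v)  # (0.5 + 0.2) / 4
```

Under these rules, a feasible solution with a worse objective still beats an infeasible one. This is one reason why a reported "Best" value can sit above the best-known f(x*) listed in the table.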

| Case | Index | CARO | ARO | AHA | BWO | DMO | DO | GJO | HBA |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Best | 8.561 × 10⁰ | 1.791 × 10¹ | 1.011 × 10¹ | 1.441 × 10¹ | 1.262 × 10¹ | 1.351 × 10¹ | 1.101 × 10¹ | 1.411 × 10¹ |
| | Mean | 1.831 × 10¹ | 1.952 × 10¹ | 2.442 × 10¹ | 1.922 × 10¹ | 2.543 × 10¹ | 2.112 × 10¹ | 2.112 × 10¹ | 2.143 × 10¹ |
| | Worst | 1.922 × 10¹ | 3.131 × 10¹ | 3.872 × 10¹ | 3.121 × 10¹ | 4.202 × 10¹ | 3.292 × 10¹ | 4.113 × 10¹ | 4.232 × 10¹ |
| | Std | 1.778 × 10⁰ | 1.997 × 10⁰ | 2.891 × 10⁰ | 1.942 × 10⁰ | 3.074 × 10⁰ | 2.289 × 10⁰ | 2.289 × 10⁰ | 2.344 × 10⁰ |
| 2 | Best | 2.311 × 10¹ | 1.621 × 10² | 2.392 × 10¹ | 2.962 × 10² | 4.392 × 10² | 1.741 × 10² | 1.773 × 10² | 1.813 × 10² |
| | Mean | 2.351 × 10¹ | 2.912 × 10² | 2.463 × 10¹ | 4.241 × 10² | 7.791 × 10² | 2.773 × 10² | 2.411 × 10² | 2.711 × 10² |
| | Worst | 3.112 × 10¹ | 4.841 × 10² | 3.161 × 10¹ | 6.242 × 10¹ | 1.421 × 10³ | 5.142 × 10² | 4.893 × 10² | 5.123 × 10² |
| | Std | 1.880 × 10⁰ | 5.071 × 10¹ | 2.081 × 10⁰ | 7.500 × 10¹ | 1.398 × 10² | 5.017 × 10¹ | 4.159 × 10¹ | 4.706 × 10¹ |
| 3 | Best | 1.421 × 10² | 9.741 × 10¹ | 4.111 × 10² | 4.292 × 10² | 3.871 × 10² | 1.981 × 10² | 1.951 × 10² | 4.192 × 10² |
| | Mean | 1.851 × 10² | 1.721 × 10² | 4.111 × 10² | 5.422 × 10² | 4.742 × 10² | 2.212 × 10² | 2.412 × 10² | 5.221 × 10² |
| | Worst | 1.982 × 10² | 1.961 × 10² | 4.111 × 10² | 7.120 × 10² | 6.010 × 10² | 3.702 × 10² | 3.412 × 10² | 6.321 × 10² |
| | Std | 1.599 × 10¹ | 1.362 × 10¹ | 5.725 × 10¹ | 8.117 × 10¹ | 6.875 × 10¹ | 2.256 × 10¹ | 2.621 × 10¹ | 7.752 × 10¹ |
| 4 | Best | 1.222 × 10⁰ | 6.300 × 10⁰ | 1.788 × 10⁰ | 3.852 × 10⁰ | 6.300 × 10⁰ | 3.521 × 10⁰ | 3.912 × 10⁰ | 1.682 × 10⁰ |
| | Mean | 3.361 × 10⁰ | 6.300 × 10⁰ | 5.813 × 10⁰ | 5.972 × 10⁰ | 6.300 × 10⁰ | 4.571 × 10⁰ | 5.983 × 10⁰ | 4.731 × 10⁰ |
| | Worst | 5.443 × 10⁰ | 6.303 × 10⁰ | 1.234 × 10¹ | 6.891 × 10⁰ | 6.300 × 10⁰ | 7.301 × 10⁰ | 6.933 × 10⁰ | 1.355 × 10¹ |
| | Std | 3.907 × 10⁻¹ | 9.274 × 10⁻¹ | 8.380 × 10⁻¹ | 8.672 × 10⁻¹ | 9.274 × 10⁻¹ | 6.116 × 10⁻¹ | 8.690 × 10⁻¹ | 6.408 × 10⁻¹ |
| 5 | Best | 3.372 × 10⁰ | 7.881 × 10⁰ | 1.074 × 10¹ | 1.344 × 10¹ | 1.161 × 10¹ | 5.092 × 10⁰ | 1.385 × 10¹ | 4.021 × 10⁰ |
| | Mean | 8.801 × 10⁰ | 1.212 × 10¹ | 1.294 × 10¹ | 1.389 × 10¹ | 1.451 × 10¹ | 9.300 × 10⁰ | 1.412 × 10¹ | 9.800 × 10⁰ |
| | Worst | 1.242 × 10¹ | 1.322 × 10¹ | 1.424 × 10¹ | 1.434 × 10¹ | 1.671 × 10¹ | 1.443 × 10¹ | 1.712 × 10¹ | 1.454 × 10¹ |
| | Std | 9.913 × 10⁻¹ | 1.593 × 10⁰ | 1.739 × 10⁰ | 1.831 × 10⁰ | 2.032 × 10⁰ | 1.082 × 10⁰ | 1.959 × 10⁰ | 1.173 × 10⁰ |
| 6 | Best | 2.122 × 10⁰ | 8.822 × 10⁰ | 7.232 × 10⁰ | 1.677 × 10¹ | 1.313 × 10¹ | 3.124 × 10⁰ | 9.829 × 10⁰ | 9.861 × 10⁰ |
| | Mean | 8.253 × 10⁰ | 1.611 × 10¹ | 9.443 × 10⁰ | 1.877 × 10¹ | 1.452 × 10¹ | 9.616 × 10⁰ | 1.586 × 10¹ | 1.662 × 10¹ |
| | Worst | 1.686 × 10¹ | 1.678 × 10¹ | 1.278 × 10¹ | 1.997 × 10¹ | 1.673 × 10¹ | 1.515 × 10¹ | 1.794 × 10¹ | 1.871 × 10¹ |
| | Std | 1.119 × 10⁰ | 2.552 × 10⁰ | 1.336 × 10⁰ | 2.661 × 10⁰ | 2.260 × 10⁰ | 1.367 × 10⁰ | 2.497 × 10⁰ | 2.643 × 10⁰ |
| 7 | Best | 1.688 × 10² | 4.679 × 10² | 2.334 × 10³ | 9.154 × 10³ | 4.525 × 10² | 1.717 × 10² | 7.515 × 10³ | 2.011 × 10³ |
| | Mean | 4.022 × 10² | 6.133 × 10² | 3.815 × 10³ | 2.103 × 10⁴ | 7.664 × 10² | 4.424 × 10² | 4.262 × 10⁴ | 3.754 × 10³ |
| | Worst | 5.436 × 10² | 1.200 × 10³ | 5.917 × 10³ | 3.853 × 10⁴ | 1.353 × 10³ | 6.338 × 10² | 1.022 × 10⁵ | 5.853 × 10³ |
| | Std | 4.253 × 10¹ | 8.161 × 10¹ | 6.649 × 10² | 3.730 × 10³ | 1.091 × 10² | 5.002 × 10¹ | 7.746 × 10³ | 6.539 × 10² |
| 8 | Best | 2.291 × 10⁻¹ | 2.341 × 10¹ | 3.332 × 10¹ | 2.322 × 10⁻¹ | 3.665 × 10¹ | 3.284 × 10¹ | 3.781 × 10¹ | 3.000 × 10¹ |
| | Mean | 3.001 × 10¹ | 1.432 × 10² | 1.432 × 10² | 3.222 × 10¹ | 1.037 × 10² | 1.135 × 10² | 1.232 × 10² | 1.017 × 10² |
| | Worst | 7.522 × 10¹ | 1.263 × 10³ | 3.557 × 10² | 7.689 × 10¹ | 3.584 × 10² | 3.385 × 10² | 3.448 × 10² | 3.183 × 10² |
| | Std | 5.435 × 10⁰ | 2.606 × 10¹ | 2.606 × 10¹ | 5.837 × 10⁰ | 1.876 × 10¹ | 2.058 × 10¹ | 2.241 × 10¹ | 1.839 × 10¹ |
| 9 | Best | −1.198 × 10⁻¹ | −2.456 × 10⁻¹ | −2.164 × 10⁻¹ | −2.144 × 10⁻¹ | −1.400 × 10³ | −1.299 × 10¹ | −1.355 × 10³ | −2.441 × 10⁻¹ |
| | Mean | −1.868 × 10⁻¹ | −2.255 × 10⁻¹ | −2.067 × 10⁻¹ | −2.044 × 10⁻¹ | −5.811 × 10² | −2.233 × 10⁰ | −5.879 × 10² | −2.286 × 10⁻¹ |
| | Worst | −7.615 × 10⁻² | −2.186 × 10⁻¹ | −2.056 × 10⁻¹ | −2.036 × 10⁻¹ | 2.827 × 10¹ | −4.449 × 10⁻¹ | 2.773 × 10¹ | −2.194 × 10⁻¹ |
| | Std | 1.223 × 10⁻² | 1.935 × 10⁻² | 1.588 × 10⁻² | 1.551 × 10⁻² | 1.060 × 10² | 3.854 × 10⁻¹ | 1.071 × 10² | 1.990 × 10⁻² |
| 10 | Best | −7.121 × 10¹ | −9.181 × 10⁻² | 3.854 × 10⁰ | −8.564 × 10⁻² | −9.198 × 10⁻² | 3.864 × 10⁰ | −6.422 × 10² | −1.223 × 10⁻¹ |
| | Mean | 2.811 × 10¹ | 1.891 × 10⁻¹ | 1.901 × 10¹ | −8.480 × 10⁻² | 1.891 × 10⁻¹ | 1.842 × 10¹ | −2.221 × 10² | −1.181 × 10⁻¹ |
| | Worst | 1.431 × 10² | 7.271 × 10⁻¹ | 2.891 × 10¹ | −8.712 × 10⁻² | 7.892 × 10⁻¹ | 2.742 × 10¹ | 2.392 × 10² | −1.134 × 10⁻¹ |
| | Std | 5.147 × 10⁰ | 5.090 × 10⁻² | 3.485 × 10⁰ | 1.278 × 10⁻³ | 5.126 × 10⁻² | 3.376 × 10⁰ | 4.051 × 10¹ | 4.783 × 10⁻³ |
| 11 | Best | −6.411 × 10³ | −5.822 × 10³ | −5.589 × 10³ | −5.687 × 10³ | −5.866 × 10³ | −5.814 × 10³ | −5.743 × 10³ | −5.978 × 10³ |
| | Mean | −6.321 × 10³ | −5.951 × 10³ | −5.222 × 10³ | −5.346 × 10³ | −5.910 × 10³ | −5.711 × 10³ | −5.553 × 10³ | −5.867 × 10³ |
| | Worst | −5.871 × 10³ | −5.851 × 10³ | −4.982 × 10³ | −4.992 × 10³ | −5.668 × 10³ | −5.567 × 10³ | −5.388 × 10³ | −5.756 × 10³ |
| | Std | 1.643 × 10¹ | 8.398 × 10¹ | 2.172 × 10² | 1.953 × 10² | 9.128 × 10¹ | 1.278 × 10² | 1.570 × 10² | 1.004 × 10² |
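The comparison above can also be summarized by an average rank, a common way to aggregate results across problems. The Python sketch below ranks the Mean values of the eight algorithms on each case and averages the ranks over cases. A rank of 1 goes to the lowest mean, and tied means share the average rank. The Mean values are transcribed from the table for Cases 1–8 and 11; Cases 9 and 10 are left out of this sketch. The ranking is an illustration rather than a statistical test reported by the paper.

```python
ALGOS = ["CARO", "ARO", "AHA", "BWO", "DMO", "DO", "GJO", "HBA"]

# Mean values transcribed from the table (Cases 1-8 and 11), in ALGOS order.
MEANS = {
    1: [18.31, 19.52, 24.42, 19.22, 25.43, 21.12, 21.12, 21.43],
    2: [23.51, 291.2, 24.63, 424.1, 779.1, 277.3, 241.1, 271.1],
    3: [185.1, 172.1, 411.1, 542.2, 474.2, 221.2, 241.2, 522.1],
    4: [3.361, 6.300, 5.813, 5.972, 6.300, 4.571, 5.983, 4.731],
    5: [8.801, 12.12, 12.94, 13.89, 14.51, 9.300, 14.12, 9.800],
    6: [8.253, 16.11, 9.443, 18.77, 14.52, 9.616, 15.86, 16.62],
    7: [402.2, 613.3, 3815.0, 21030.0, 766.4, 442.4, 42620.0, 3754.0],
    8: [30.01, 143.2, 143.2, 32.22, 103.7, 113.5, 123.2, 101.7],
    11: [-6321.0, -5951.0, -5222.0, -5346.0, -5910.0, -5711.0, -5553.0, -5867.0],
}

def average_ranks(values):
    """Rank values ascending (1 = best for minimization); ties share the mean rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks

def mean_rank_per_algorithm(table):
    """Average each algorithm's per-case rank over all cases in `table`."""
    per_case = [average_ranks(vals) for vals in table.values()]
    return {name: sum(r[k] for r in per_case) / len(per_case)
            for k, name in enumerate(ALGOS)}

for name, rank in sorted(mean_rank_per_algorithm(MEANS).items(), key=lambda kv: kv[1]):
    print(f"{name:<5} {rank:.2f}")
```

On these nine cases, CARO's average rank is 10/9 ≈ 1.11, followed by DO at 3.50. The only case here where CARO's mean is not the lowest is Case 3, where ARO's mean is smaller.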

| Case | Index | CARO | SCSO | SO | MPA | AGPSO | IMODE | LSHADE_SPACMA |
|---|---|---|---|---|---|---|---|---|
| 1 | Best | 8.561 × 10⁰ | 1.432 × 10¹ | 1.221 × 10¹ | 9.73 × 10⁰ | 1.22 × 10¹ | 1.09 × 10¹ | 1.07 × 10¹ |
| | Mean | 1.831 × 10¹ | 2.342 × 10¹ | 1.890 × 10¹ | 1.98 × 10¹ | 1.98 × 10¹ | 1.97 × 10¹ | 1.89 × 10¹ |
| | Worst | 1.922 × 10¹ | 4.712 × 10¹ | 3.561 × 10¹ | 3.87 × 10¹ | 4.49 × 10¹ | 3.57 × 10¹ | 3.48 × 10¹ |
| | Std | 1.778 × 10⁰ | 2.709 × 10⁰ | 1.887 × 10⁰ | 2.05 × 10⁰ | 2.05 × 10⁰ | 2.03 × 10⁰ | 1.89 × 10⁰ |
| 2 | Best | 2.311 × 10¹ | 2.562 × 10² | 1.320 × 10¹ | 1.52 × 10² | 1.92 × 10² | 4.90 × 10² | 4.54 × 10² |
| | Mean | 2.351 × 10¹ | 1.001 × 10³ | 2.711 × 10¹ | 2.81 × 10² | 1.34 × 10³ | 9.02 × 10² | 8.06 × 10² |
| | Worst | 3.112 × 10¹ | 1.422 × 10³ | 4.391 × 10¹ | 7.11 × 10² | 1.39 × 10³ | 1.24 × 10³ | 1.26 × 10³ |
| | Std | 1.880 × 10⁰ | 1.801 × 10² | 2.537 × 10⁰ | 4.89 × 10¹ | 2.42 × 10² | 1.62 × 10² | 1.45 × 10² |
| 3 | Best | 1.421 × 10² | 1.952 × 10² | 5.991 × 10² | 9.61 × 10¹ | 1.56 × 10² | 3.99 × 10² | 3.98 × 10² |
| | Mean | 1.851 × 10² | 6.640 × 10² | 6.023 × 10² | 2.15 × 10² | 9.64 × 10² | 6.46 × 10² | 6.08 × 10² |
| | Worst | 1.982 × 10² | 1.011 × 10³ | 6.053 × 10² | 4.30 × 10² | 1.10 × 10³ | 8.87 × 10² | 8.80 × 10² |
| | Std | 1.599 × 10¹ | 1.034 × 10² | 9.212 × 10¹ | 2.17 × 10¹ | 1.58 × 10² | 1.00 × 10² | 9.35 × 10¹ |
| 4 | Best | 1.222 × 10⁰ | 3.811 × 10⁰ | 6.262 × 10⁰ | 5.22 × 10⁰ | 3.85 × 10⁰ | 1.81 × 10⁰ | 1.85 × 10⁰ |
| | Mean | 3.361 × 10⁰ | 6.011 × 10⁰ | 6.301 × 10⁰ | 8.25 × 10⁰ | 7.11 × 10⁰ | 5.89 × 10⁰ | 5.92 × 10⁰ |
| | Worst | 5.443 × 10⁰ | 6.301 × 10⁰ | 6.362 × 10⁰ | 1.49 × 10¹ | 8.40 × 10⁰ | 9.13 × 10⁰ | 9.16 × 10⁰ |
| | Std | 3.907 × 10⁻¹ | 8.745 × 10⁻¹ | 9.274 × 10⁻¹ | 1.28 × 10⁰ | 1.08 × 10⁰ | 8.53 × 10⁻¹ | 8.58 × 10⁻¹ |
| 5 | Best | 3.372 × 10⁰ | 7.901 × 10⁰ | 1.091 × 10¹ | 4.62 × 10⁰ | 1.35 × 10¹ | 5.53 × 10⁰ | 5.53 × 10⁰ |
| | Mean | 8.801 × 10⁰ | 1.288 × 10¹ | 1.282 × 10¹ | 1.44 × 10¹ | 1.39 × 10¹ | 1.51 × 10¹ | 1.61 × 10¹ |
| | Worst | 1.242 × 10¹ | 1.355 × 10¹ | 1.722 × 10¹ | 2.18 × 10¹ | 1.49 × 10¹ | 2.38 × 10¹ | 2.50 × 10¹ |
| | Std | 9.913 × 10⁻¹ | 1.721 × 10⁰ | 1.721 × 10⁰ | 2.01 × 10⁰ | 1.92 × 10⁰ | 2.14 × 10⁰ | 2.32 × 10⁰ |
| 6 | Best | 2.122 × 10⁰ | 9.414 × 10⁰ | 9.793 × 10⁰ | 2.57 × 10⁰ | 9.57 × 10⁰ | 8.83 × 10⁰ | 9.84 × 10⁰ |
| | Mean | 8.253 × 10⁰ | 1.604 × 10¹ | 1.553 × 10¹ | 1.82 × 10¹ | 1.85 × 10¹ | 2.26 × 10¹ | 2.42 × 10¹ |
| | Worst | 1.686 × 10¹ | 1.675 × 10¹ | 1.762 × 10¹ | 3.17 × 10¹ | 1.94 × 10¹ | 3.67 × 10¹ | 4.13 × 10¹ |
| | Std | 1.119 × 10⁰ | 2.534 × 10⁰ | 2.442 × 10⁰ | 2.94 × 10⁰ | 2.99 × 10⁰ | 3.74 × 10⁰ | 4.03 × 10⁰ |
| 7 | Best | 1.688 × 10² | 4.422 × 10² | 2.061 × 10³ | 4.68 × 10³ | 6.21 × 10¹ | 5.37 × 10⁴ | 6.46 × 10⁴ |
| | Mean | 4.022 × 10² | 7.554 × 10² | 3.294 × 10³ | 3.14 × 10⁴ | 2.58 × 10² | 1.71 × 10⁵ | 1.61 × 10⁵ |
| | Worst | 5.436 × 10² | 1.044 × 10³ | 5.824 × 10³ | 1.06 × 10⁵ | 5.99 × 10² | 3.71 × 10⁵ | 3.65 × 10⁵ |
| | Std | 4.253 × 10¹ | 1.071 × 10² | 5.699 × 10² | 5.70 × 10³ | 1.62 × 10¹ | 3.12 × 10⁴ | 2.94 × 10⁴ |
| 8 | Best | 2.291 × 10⁻¹ | 2.300 × 10⁻¹ | 9.372 × 10⁻¹ | 1.44 × 10³ | 2.81 × 10⁻¹ | 3.98 × 10⁴ | 2.60 × 10³ |
| | Mean | 3.001 × 10¹ | 3.023 × 10¹ | 2.838 × 10³ | 2.78 × 10⁴ | 1.08 × 10² | 1.19 × 10⁵ | 1.38 × 10⁴ |
| | Worst | 7.522 × 10¹ | 7.487 × 10¹ | 7.149 × 10³ | 2.64 × 10⁵ | 2.85 × 10² | 2.42 × 10⁵ | 2.23 × 10⁵ |
| | Std | 5.435 × 10⁰ | 5.471 × 10⁰ | 5.166 × 10² | 5.08 × 10³ | 1.97 × 10¹ | 2.17 × 10⁴ | 2.52 × 10³ |
| 9 | Best | −1.198 × 10⁻¹ | −2.233 × 10⁻¹ | −2.094 × 10² | −1.216 × 10² | −2.73 × 10⁻¹ | −1.16 × 10³ | −1.28 × 10³ |
| | Mean | −1.868 × 10⁻¹ | −2.057 × 10⁻¹ | −7.699 × 10¹ | −3.344 × 10² | −3.52 × 10⁻¹ | −8.55 × 10² | −4.45 × 10² |
| | Worst | −7.615 × 10⁻² | −1.976 × 10⁻¹ | 1.991 × 10⁰ | −4.455 × 10² | −4.16 × 10⁻¹ | −9.67 × 10² | −6.94 × 10² |
| | Std | 1.223 × 10⁻² | 1.570 × 10⁻² | 1.401 × 10¹ | 6.10 × 10¹ | 4.24 × 10⁻² | 1.56 × 10² | 8.12 × 10¹ |
| 10 | Best | −7.121 × 10¹ | −1.141 × 10⁻¹ | −7.181 × 10¹ | −6.848 × 10¹ | −1.04 × 10⁻¹ | −4.75 × 10² | −4.77 × 10² |
| | Mean | 2.811 × 10¹ | −1.041 × 10⁻¹ | 2.862 × 10¹ | 3.13 × 10¹ | 3.17 × 10⁻¹ | −5.31 × 10² | −5.11 × 10² |
| | Worst | 1.431 × 10² | −9.776 × 10⁻² | 1.298 × 10² | 7.49 × 10¹ | 1.55 × 10⁰ | 2.11 × 10² | 2.09 × 10² |
| | Std | 5.147 × 10⁰ | 2.227 × 10⁻³ | 5.238 × 10⁰ | 5.73 × 10⁰ | 7.46 × 10⁻² | 9.69 × 10¹ | 9.33 × 10¹ |
| 11 | Best | −6.411 × 10³ | −5.636 × 10³ | −5.973 × 10³ | −6.016 × 10³ | −6.12 × 10³ | −5.14 × 10³ | −5.27 × 10³ |
| | Mean | −6.321 × 10³ | −5.442 × 10³ | −5.842 × 10³ | −5.745 × 10³ | −5.95 × 10³ | −5.01 × 10³ | −5.12 × 10³ |
| | Worst | −5.871 × 10³ | −5.271 × 10³ | −5.732 × 10³ | −5.514 × 10³ | −5.67 × 10³ | −4.73 × 10³ | −4.91 × 10³ |
| | Std | 1.643 × 10¹ | 1.770 × 10² | 1.040 × 10² | 1.21 × 10² | 8.40 × 10¹ | 2.56 × 10² | 2.36 × 10² |
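The Best, Mean, Worst, and Std indices in the table are the kind of statistics usually computed over the final objective values of repeated independent runs of each algorithm on each case. The following Python sketch shows one way to compute them. It is an illustration under stated assumptions, not the author's evaluation code: it assumes a minimization problem (lower is better) and uses the sample standard deviation by default. The table does not state either choice, and the function name `summarize_runs` is hypothetical.

```python
import statistics
from typing import Dict, Sequence


def summarize_runs(final_costs: Sequence[float], sample_std: bool = True) -> Dict[str, float]:
    """Summarize the final objective values of independent runs of a minimizer.

    Assumption: the problem is a minimization, so "Best" is the smallest value
    and "Worst" the largest. Set sample_std=False for the population estimate.
    """
    if len(final_costs) < 2:
        raise ValueError("need at least two runs to estimate a standard deviation")
    # Sample (n - 1) or population (n) standard deviation of the run results.
    std = statistics.stdev(final_costs) if sample_std else statistics.pstdev(final_costs)
    return {
        "Best": min(final_costs),                # lowest cost reached in any run
        "Mean": statistics.fmean(final_costs),   # average final cost over all runs
        "Worst": max(final_costs),               # highest cost reached in any run
        "Std": std,                              # spread of the final costs
    }


if __name__ == "__main__":
    # Three hypothetical run results, for illustration only.
    print(summarize_runs([3.0, 1.0, 2.0]))
    # → {'Best': 1.0, 'Mean': 2.0, 'Worst': 3.0, 'Std': 1.0}
```

The sketch uses only the standard `statistics` module. If NumPy is used instead, note that `numpy.std` defaults to the population estimate (`ddof=0`), so its Std values can differ slightly from the sample estimate for small numbers of runs.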

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sadeeq, H.T. Cauchy Operator Boosted Artificial Rabbits Optimization for Solving Power System Problems. Eng 2025, 6, 174. https://doi.org/10.3390/eng6080174
