Abstract

Camera-centric perception has matured into a cornerstone of modern autonomy, from self-driving cars and factory cobots to underwater and planetary exploration. This review synthesizes more than a decade of progress in vision-based robotic navigation through an engineering lens, charting the full pipeline from sensing to deployment. We first examine the expanding sensor palette—monocular and multi-camera rigs, stereo and RGB-D devices, LiDAR–camera hybrids, event cameras, and infrared systems—highlighting the complementary operating envelopes and the rise of learning-based depth inference. The advances in visual localization and mapping are then analyzed, contrasting sparse and dense SLAM approaches, as well as monocular, stereo, and visual–inertial formulations. Additional topics include loop closure, semantic mapping, and LiDAR–visual–inertial fusion, which enables drift-free operation in dynamic environments. Building on these foundations, we review the navigation and control strategies, spanning classical planning, reinforcement and imitation learning, hybrid topological–metric memories, and emerging visual language guidance. Application case studies—autonomous driving, industrial manipulation, autonomous underwater vehicles, planetary rovers, aerial drones, and humanoids—demonstrate how tailored sensor suites and algorithms meet domain-specific constraints. Finally, the future research trajectories are distilled: generative AI for synthetic training data and scene completion; high-density 3D perception with solid-state LiDAR and neural implicit representations; event-based vision for ultra-fast control; and human-centric autonomy in next-generation robots. By providing a unified taxonomy, a comparative analysis, and engineering guidelines, this review aims to inform researchers and practitioners designing robust, scalable, vision-driven robotic systems.

1. Introduction

Vision has become a primary sensing modality for autonomous robots because cameras are compact, low-cost, and information-rich. When coupled with modern computer vision and machine learning algorithms, camera systems deliver a dense semantic and geometric understanding of the environment, enabling robots to localize, map, plan, and interact with their surroundings in real time. Rapid gains in the hardware (e.g., high-resolution global-shutter sensors, solid-state LiDAR, neuromorphic event cameras) and software (deep learning, differentiable optimization, large-scale SLAM) have pushed vision-based autonomy from laboratory prototypes to production systems across domains such as self-driving vehicles, smart factories, underwater inspection, and planetary exploration [1,2,3,4]. Yet operating robustly in the wild remains difficult: lighting variations, weather, dynamic obstacles, textureless or reflective surfaces, and resource constraints all challenge perception and control pipelines. Understanding the capabilities, limitations, and integration trade-offs for diverse vision sensors and algorithms is thus essential for engineering reliable robotic platforms.

1.1. Scope and Contributions

This review targets an engineering readership and offers a holistic synthesis of vision-based navigation and perception for autonomous robots. It organizes the design space around a unifying taxonomy of vision sensors, SLAM algorithms, navigation and control strategies, and domain-specific deployments, and it distills the canonical approaches, milestone results, and key engineering trade-offs while signposting specialized surveys and seminal papers for readers who need deeper detail. Building on the rich body of work reviewed in the recent literature, we contribute:

- A unified taxonomy of vision sensors—from monocular RGB to event and infrared cameras, stereo, RGB-D, and LiDAR–visual hybrids—highlighting their operating envelopes, noise characteristics, and complementary strengths [2,5,6];

- A comparative review of localization and mapping algorithms, spanning sparse and dense visual SLAM, visual–inertial odometry, loop closure, semantic mapping, and the current LiDAR–visual–inertial fusion frameworks [1,7,8];

- An analysis of navigation and control strategies that leverage visual perception, including classical motion planning, deep reinforcement and imitation learning, topological memory, and visual language grounding [9,10,11];

- Cross-domain case studies in autonomous driving, industrial manipulation, autonomous underwater vehicles, and planetary rovers, illustrating how the sensor–algorithm choices are tailored to the domain constraints [4,12,13,14];

- Engineering guidelines and open challenges covering the sensor selection, calibration, real-time computation, safety, and future research directions such as generative AI, neural implicit maps, event-based control, and human-centric autonomy [15,16].

We found that the existing surveys each concentrate on a narrow slice of the field—e.g., vision-based manipulation [17], multi-task perception for autonomous vehicles [18], or single-sensor SLAM pipelines. None simultaneously covers the full spectrum of (i) vision sensor modalities (RGB, stereo, RGB-D/ToF, LiDAR, event, thermal); (ii) geometry-, learning-, and fusion-based SLAM algorithms; (iii) learning-based controllers (DRL, IL, visual servoing, VLN); and (iv) cross-domain deployments spanning ground, aerial, underwater, and planetary robots. This review provides a single reference for both algorithm developers and practitioners integrating vision into real robotic systems by covering the aforementioned subjects under an engineering lens.

1.2. Methodology

This article is written as a narrative engineering review; it provides a broad, integrative overview of the field, trading the exhaustive, protocol-driven coverage of systematic or scoping reviews for conceptual synthesis across diverse subtopics [19]. A narrative review was chosen because vision-based robotics is a broad and rapidly evolving field spanning many areas (e.g., sensors, algorithms, and application domains) that are often studied separately. A systematic review would require many narrow sub-queries or risk missing relevant developments, especially from applied research. In contrast, a narrative approach allows us to connect these diverse elements into a unified engineering perspective, linking sensor configurations to algorithmic performance and identifying trends that are relevant across different robotic platforms. Three questions guide our synthesis:

- RQ1: What vision sensor modalities and fusion schemes are employed across robotic domains?

- RQ2: How do the current localization, mapping, and navigation algorithms exploit vision data, and what quantitative performance do they achieve?

- RQ3: What outstanding technical challenges remain, and what promising future trends are emerging?

These three questions were chosen to trace the full perception–action pipeline on which contemporary vision-based robots rely. RQ1 targets sensing: by cataloging the range of camera modalities and fusion schemes, it establishes the upstream hardware and signal characteristics that bound system performance. RQ2 addresses the algorithmic core, asking how localization, mapping, and navigation methods convert these raw signals into actionable state estimates and what measurable accuracy they achieve on standard benchmarks. Finally, RQ3 turns to forward-looking gaps, describing unsolved problems and emergent trends that will shape the next generation of autonomous platforms.

Our expert-guided search, conducted by two researchers in computer vision and robotics, reviewed flagship venues and arXiv preprints spanning 2000 to 2025 through forward and backward reference exploration, retrieving approximately 300 records. After duplicate removal and abstract screening, 160 papers underwent full-text appraisal. Of these, 150 publications met our engineering-focused inclusion criteria (e.g., reporting substantive engineering results or providing comprehensive insight into sensors and algorithms), and 3 others were retained to contextualize the review methodology. The final 150 sources form the basis of this narrative synthesis, which was chosen over a protocol-driven systematic literature review because it allows us to integrate rapidly evolving cross-domain results that fall outside any single, strict taxonomy. A machine-readable spreadsheet summarizing the entry types, publication years, and top venues is provided as Supplementary Material.

1.3. Organization of the Review

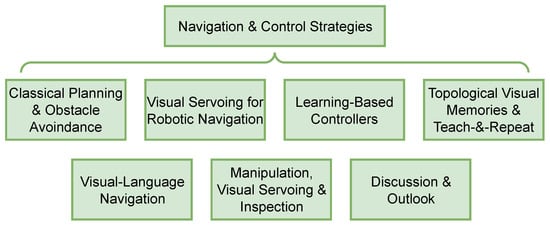

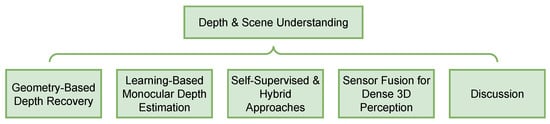

Figure 1 illustrates the overview of the review, and the remainder of this study is structured as follows. Section 2 reviews the vision sensor modalities and compares their performance envelopes. Section 3 reviews the depth and scene understanding techniques, including classical geometry- and learning-based estimation. Section 4 details the visual localization and mapping algorithms, while Section 5 discusses navigation and control strategies that exploit visual feedback. Section 6 presents domain-specific case studies, and Section 7 summarizes benchmark datasets and evaluation protocols. Section 8 details the engineering design guidelines, followed by emerging trends and open challenges in Section 9. Finally, Section 10 concludes the review.

Figure 1.

Schematic illustration of review.

2. Vision Sensor Modalities for Robotics

Robust autonomy hinges on selecting and fusing the right sensor suite. This section reviews the principal camera-centric modalities used in contemporary robots, summarizing their operating principles, advantages, and engineering trade-offs. Figure 2 illustrates a variety of vision sensors employed in mobile robotics, ranging from widely adopted technologies such as monocular and RGB-D cameras to cutting-edge innovations like event-based sensors.

Figure 2.

Vision sensors used in mobile robotics.

2.1. Monocular and Multi-Camera RGB Systems

A single RGB camera supplies high-resolution appearance cues for object recognition and dense semantics but cannot infer metric depth without motion or priors, leading to scale ambiguity in monocular SLAM [5,20]. Multi-camera rigs expand the field of view to 360°, mitigating occlusions and improving place recognition and loop closure [21,22]. Although inexpensive and lightweight, pure RGB systems struggle under poor lighting, overexposure, or textureless surfaces; additional sensors (e.g., IMU, depth, and LiDAR sensors) are typically integrated to recover depth and scale and to improve robustness. The working range of these sensors varies widely, from a few meters to 500 m, and can be extended to a few kilometers with specific algorithms [23,24].

2.2. Stereo Vision Rigs

Stereo cameras triangulate depth from the pixel disparities between two spatially separated lenses, yielding real-time metric range data [25]. The range and FoV of these sensors vary widely, while the cost remains low to moderate. In the case of the ZED 2 system, the range varies from 5 to 40 m, depending on the baseline resolution, with a FoV of up to 120°. The performance depends on the baseline length, calibration accuracy, and scene texture; repetitive or low-texture regions, glare, and low light impair matching [26]. Nevertheless, stereo depth is passive (no active illumination) and immune to sunlight interference, making it a popular navigation sensor indoors and outdoors, often fused with indirect monocular cues for enhanced robustness [20].

2.3. RGB-D and Time-of-Flight Cameras

RGB-D sensors such as Microsoft Kinect or Intel RealSense project structured light or emit time-of-flight (ToF) pulses to obtain per-pixel depth aligned with color imagery [5]. They excel on low-texture surfaces where stereo fails, supporting dense mapping and obstacle avoidance at video rates [26]. Their limitations include a short range (typically from 0.3 to 5 m), susceptibility to sunlight or infrared interference, and increased power draw. Recent ToF chips show improved range and outdoor tolerance, yet their cost and power remain higher than those of passive cameras [27]. The FoVs of some commercial sensors range from 67° to 108°.

2.4. LiDAR and Camera–LiDAR Hybrids

Spinning or solid-state LiDAR emits laser pulses to build high-resolution 3D point clouds with centimeter-level accuracy over tens to hundreds of meters, independent of the lighting [8]. It underpins long-range obstacle detection and drift-free SLAM, but the performance degrades in rain or fog or with transparent/specular objects, and the data are sparse at a distance [6,28]. Moreover, depending on the sensor, the power consumption is high, varying from 8 to 15 W [29]. Camera–LiDAR fusion combines the precise geometry of LiDAR with cameras’ semantics and dense textures, enabling robust perception in mixed lighting and weather [8]. Falling component prices [29] and solid-state designs are accelerating its adoption in mobile robotics.

2.5. Event-Based (Neuromorphic) Cameras

Event cameras asynchronously report per-pixel brightness changes with microsecond latency and a >120 dB dynamic range [2,30], at a very low power consumption (0.25 mW) [31] and with a narrow FoV (75° [32]). They incur no motion blur and sparsely encode only scene changes, yielding high signal-to-noise ratios at extreme speeds or in extreme lighting, which makes them well suited to agile drones and high-dynamic-range (HDR) scenes. However, event streams differ radically from conventional frames and demand specialized algorithms for SLAM and object detection; their resolution and cost also lag behind mainstream CMOS sensors. Hybrid frame–event perception pipelines are an active research topic.
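The per-pixel behavior described above is commonly modeled as a threshold on the change in log intensity. The following minimal sketch assumes that idealized model; the function name `events_for_pixel`, the contrast threshold of 0.2, and the sample values are illustrative assumptions rather than parameters of any specific sensor.

```python
import math


def events_for_pixel(samples, threshold=0.2):
    """Idealized event generation for one pixel.

    samples: list of (timestamp, intensity) pairs with intensity > 0.
    Returns a list of (timestamp, polarity) events, polarity being +1 or -1.
    """
    events = []
    reference = math.log(samples[0][1])  # log intensity at the last event
    for timestamp, intensity in samples[1:]:
        diff = math.log(intensity) - reference
        # Emit one event per threshold crossing since the last reference level.
        while abs(diff) >= threshold:
            polarity = 1 if diff > 0 else -1
            events.append((timestamp, polarity))
            reference += polarity * threshold
            diff = math.log(intensity) - reference
    return events


# A static pixel produces no data; a brightness step produces a short burst.
print(events_for_pixel([(0, 100.0), (1, 100.0), (2, 100.0)]))
print(events_for_pixel([(0, 100.0), (1, 200.0)]))
```

Because only changes are reported, a static scene generates no output, which is the source of the sparsity and low power draw noted above.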

2.6. Infrared and Thermal Imaging Sensors

Near-infrared (NIR) cameras extend vision into low-light conditions using active IR illumination, while long-wave thermal cameras passively capture emitted heat (7.5–14 μm) and thus operate in complete darkness [33,34]. Thermal imaging penetrates moderate smoke, fog, and dust, supporting search-and-rescue, firefighting, and night-time driving [35,36,37]. These sensors can generate thermal images from a large distance [38] with a FoV from 12.4° to 45° [34]. Their cost and power consumption vary widely, from approximately 200 USD to approximately 7900 USD and from 0.15 W to 28 W, respectively [34]. Trade-offs include a lower spatial resolution, higher noise, a lack of direct depth, and the opacity of glass and water at thermal wavelengths. Calibration must account for sensor self-heating and emissivity variations.

2.7. A Comparative Summary

Each modality offers distinct operating envelopes (in terms of range, lighting, and latency), noise profiles, and integration costs, as detailed in Table 1. The attribute “Contributions from 2020” counts the number of conference and journal articles published from 2020 to date (2025), according to Scopus, in the context of robotic navigation. Cameras provide rich semantics but limited depth; LiDAR delivers accurate geometry yet sparse color; event sensors excel at high speeds; and IR cameras ensure visibility in darkness. Consequently, state-of-the-art robots increasingly adopt multi-modal perception, fusing complementary sensors through probabilistic or learning-based frameworks to achieve robust, all-weather autonomy.

Table 1.

Comparison of vision sensor modalities.

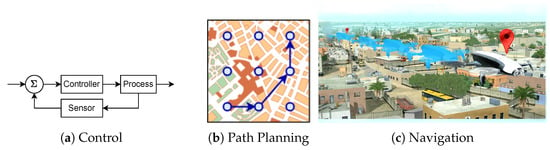

3. Depth and Scene Understanding Techniques

Accurate depth perception is fundamental for collision avoidance, mapping, manipulation, and high-level scene reasoning. Robots derive metric structures either from geometric principles, learning-based inference, or combinations thereof. This section reviews the core techniques and their engineering trade-offs, and Figure 3 illustrates its content.

Figure 3.

A schematic overview of the topics covered in “Depth and Scene Understanding Techniques”.

3.1. Geometry-Based Depth Recovery

Geometry-based depth recovery exploits projective geometry to infer range. We begin with monocular motion, where the structure is triangulated but the global scale is ambiguous. We then cover stereo rigs, whose fixed baseline converts pixel disparities into metric depth. Finally, we conclude with active sensors that obtain range directly via structured light or time-of-flight. This sequence shows how adding calibrated hardware progressively resolves geometric ambiguities while introducing new practical trade-offs.

3.1.1. Structure from Motion and Monocular SLAM

In purely monocular setups, depth is inferred by triangulating feature tracks across successive frames (structure-from-motion). Modern monocular SLAM systems such as ORB-SLAM3 employ keyframe bundle adjustment to refine camera poses and 3D landmarks, but the reconstruction is determined only up to an unknown global scale [1,20]. The scale can be resolved through inertial fusion, known object sizes, or ground-plane constraints, yet meter-level drift may accrue without loop closure or external references.
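The scale ambiguity can be made concrete with a minimal sketch. It assumes an idealized pinhole camera without rotation; the helper names, focal length, and coordinates are illustrative assumptions and are not taken from any cited system. Scaling the landmark and the camera positions by the same factor leaves every pixel measurement unchanged, so images alone cannot fix the scale, whereas a known object size can.

```python
def project(point, cam_pos, focal_px=500.0):
    """Project a 3D point into a pinhole camera located at cam_pos (no rotation)."""
    x, y, z = (p - c for p, c in zip(point, cam_pos))
    return (focal_px * x / z, focal_px * y / z)


def scale(vec, s):
    """Multiply every coordinate of vec by s."""
    return tuple(s * v for v in vec)


def metric_scale(known_size_m, reconstructed_size):
    """Factor that maps up-to-scale map units to meters."""
    return known_size_m / reconstructed_size


landmark = (1.0, 0.5, 4.0)                        # hypothetical scene point
cam_a, cam_b = (0.0, 0.0, 0.0), (0.2, 0.0, 0.0)   # two hypothetical camera centers

# The pixel coordinates agree (up to rounding) for both scale factors.
for s in (1.0, 3.0):
    pixels = [project(scale(landmark, s), scale(c, s)) for c in (cam_a, cam_b)]
    print(f"scale {s}: {pixels}")

# An object known to be 2.0 m tall that spans 0.5 map units implies 4 m per unit.
print(metric_scale(2.0, 0.5))
```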

3.1.2. Stereo Correspondence

Stereo rigs yield metric depth by converting pixel disparities into range:

$$Z = \frac{f\,b}{d},$$

where Z is the depth, f is the focal length, b the baseline (i.e., the distance between the centers of the two camera lenses), and d the disparity. Classical block matching, semi-global matching, and more recent CNN-based cost volumes perform disparity estimations in real time on embedded GPUs [25]. The accuracy hinges on a well-calibrated baseline and textured surfaces; low-texture or reflective areas and occlusions lead to outliers [26]. Despite these limits, stereo is sunlight-immune and passive, making it attractive for outdoor drones and rovers.
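As a hedged illustration of the standard pinhole stereo relation, in which depth equals focal length times baseline divided by disparity, the sketch below converts a disparity into depth and gives the first-order depth uncertainty caused by a matching error. The function names and the rig parameters are hypothetical and do not describe a specific product.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in meters from Z = f * b / d; non-positive disparity maps to infinity."""
    if disparity_px <= 0:
        return float("inf")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(focal_px, baseline_m, depth_m, disparity_err_px=1.0):
    """First-order error |dZ| = Z^2 / (f * b) * |dd|, from differentiating Z = f * b / d."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


f_px, b_m = 700.0, 0.12  # assumed focal length (pixels) and baseline (meters)
for d_px in (42.0, 21.0, 4.2):
    z = depth_from_disparity(f_px, b_m, d_px)
    err = depth_uncertainty(f_px, b_m, z)
    print(f"disparity {d_px:5.1f} px -> depth {z:6.2f} m, +/- {err:5.2f} m per pixel of error")
```

Because the error grows with the square of the depth, a longer baseline reduces the uncertainty at a given range, which is why baseline length and calibration dominate stereo accuracy.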

3.1.3. Active Depth Sensors

RGB-D and time-of-flight (ToF) cameras bypass correspondence by directly projecting structured light or measuring photon round-trip times, delivering dense depth aligned with color at video rates [5]. They enable real-time dense SLAM (e.g., KinectFusion) but operate over short ranges and suffer in bright IR backgrounds; calibration drift between color and depth streams impacts their accuracy further.
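The round-trip principle can be sketched with the textbook time-of-flight relations. The pulsed form halves the distance light travels during the round trip; the continuous-wave form, which many ToF cameras use, recovers range from the phase shift of a modulated signal and has a limited unambiguous range. The 30 MHz modulation frequency below is an illustrative assumption, not a value from the cited sensors.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def pulsed_range(round_trip_s):
    """Range from a measured round-trip time: d = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def cw_range(phase_rad, modulation_hz):
    """Range from the phase shift of a continuous-wave signal: d = c * phi / (4 * pi * f)."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz):
    """Largest range before the phase wraps around: d_max = c / (2 * f)."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)


print(f"20 ns round trip  -> {pulsed_range(20e-9):.3f} m")
print(f"30 MHz modulation -> {unambiguous_range(30e6):.3f} m unambiguous range")
```

Under this assumed modulation frequency the unambiguous range is about 5 m, which is consistent with the short operating ranges noted for these cameras.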

3.2. Learning-Based Monocular Depth Estimation

Deep convolutional and transformer models can predict dense depth maps from a single RGB frame by learning geometric and semantic cues [39]. Supervised variants train on ground-truth LiDAR or stereo disparity, whereas self-supervised approaches exploit the photometric consistency between adjacent frames or stereo pairs, obviating costly depth labels [20]. Recent networks achieve sub-meter errors at 30 Hz on embedded devices, effectively augmenting monocular cameras with pseudo-range for obstacle avoidance and SLAM initialization.

3.3. Self-Supervised and Hybrid Approaches

Self-supervised depth methods jointly estimate depth and pose by minimizing image reprojection errors, often incorporating explainability masks and edge-aware smoothness losses to handle occlusions and textureless regions. Hybrid pipelines integrate CNN depth priors into geometric SLAM, yielding denser reconstructions and mitigating scale drift; CNN-SLAM fuses network predictions with multi-view stereo for robustness in low-parallax segments [40]. Similar ideas infill sparse LiDAR with monocular cues, producing high-resolution depth maps at minimal additional cost.
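The edge-aware smoothness term mentioned above can be illustrated with a one-dimensional simplification. This sketch assumes the commonly used form in which each depth gradient is weighted by the exponential of the negative image gradient; real systems apply it in 2D, often on inverse depth, and the function name and sample rows are hypothetical.

```python
import math


def edge_aware_smoothness(depth_row, image_row):
    """Mean of |depth gradient| * exp(-|image gradient|) along one image row."""
    if len(depth_row) != len(image_row) or len(depth_row) < 2:
        raise ValueError("rows must have equal length of at least 2")
    total = 0.0
    for i in range(len(depth_row) - 1):
        depth_grad = abs(depth_row[i + 1] - depth_row[i])
        image_grad = abs(image_row[i + 1] - image_row[i])
        # Depth changes are cheap where the image also changes (likely an object edge).
        total += depth_grad * math.exp(-image_grad)
    return total / (len(depth_row) - 1)


depth = [1.0, 1.0, 3.0, 3.0]
print(edge_aware_smoothness(depth, [0.0, 0.0, 1.0, 1.0]))  # depth jump on an image edge
print(edge_aware_smoothness(depth, [0.0, 0.0, 0.0, 0.0]))  # same jump in a flat region
```

The same depth discontinuity is penalized less when it coincides with an intensity edge, which pushes the network toward smooth depth inside textureless regions while preserving object boundaries.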

3.4. Sensor Fusion for Dense 3D Perception

No single sensor excels in all conditions; modern robots therefore fuse complementary depth sources. Visual–inertial odometry provides scale and high-rate motion, while LiDAR offers long-range geometry resilient to lighting. Camera–LiDAR depth completion networks align sparse point clouds with dense image edges, achieving centimeter-level accuracy even under rain or fog [8]. Probabilistic occupancy fusion, using either a Truncated Signed Distance Field (TSDF) or a Euclidean Signed Distance Field (ESDF), integrates depth from stereo, RGB-D, or LiDAR into consistent maps for planning. Emerging work couples optical flow from event cameras with stereo disparity to improve depth estimation at high speeds and under high dynamic range.
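A minimal sketch of TSDF integration may help make the fusion step concrete. It assumes the usual weighted running average per voxel, reduced here to the voxels along a single camera ray with unit measurement weights; the function name, truncation distance, and sample depths are illustrative assumptions.

```python
def integrate_depth(tsdf, weights, voxel_depths, measured_depth,
                    trunc=0.1, max_weight=50.0):
    """Fuse one depth measurement into the voxels along a single ray (in place)."""
    for i, voxel_depth in enumerate(voxel_depths):
        sdf = measured_depth - voxel_depth  # positive in front of the surface
        if sdf < -trunc:
            continue  # far behind the observed surface: occluded, leave untouched
        value = min(1.0, sdf / trunc)       # truncate and normalize to [-1, 1]
        w = weights[i]
        tsdf[i] = (w * tsdf[i] + value) / (w + 1.0)   # weighted running average
        weights[i] = min(w + 1.0, max_weight)


voxel_depths = [0.9, 1.0, 1.1, 1.5]  # voxel centers along the ray, in meters
tsdf = [1.0] * 4                      # initialized as free space
weights = [0.0] * 4

# Two noisy observations of a surface near 1.0 m.
for depth in (0.98, 1.02):
    integrate_depth(tsdf, weights, voxel_depths, depth)

print(tsdf)     # the zero crossing sits at the voxel nearest the true surface
print(weights)
```

Averaging places the zero crossing between the two noisy readings, which is how volumetric fusion suppresses per-frame depth noise from stereo, RGB-D, or LiDAR.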

3.5. The Quantitative Impact of Sensor Fusion on 3D Perception

Recent benchmarks confirm that fusing complementary sensing modalities measurably enhances 3D perception across a variety of operating conditions. On the KITTI depth completion benchmark [41], RGB + LiDAR methods such as PENet [42] reduced the root mean square error in depth by roughly 30% compared with sparse LiDAR interpolation alone while maintaining real-time inference at 20–25 FPS [42]. Visual–inertial odometry evaluated on TUM VIE [43] and EuRoC MAV [44] shows that coupling a monocular camera with an IMU limits the translational drift to below 0.5% of the trajectory length, whereas vision-only baselines drift by 1–2% under fast motion [45]. In high-speed outdoor scenes from the DSEC dataset [46], stereo + event fusion yields accurate dense depth at temporal resolutions unattainable using frame cameras alone, sustaining performance when motion blur is severe [46]. Indoors, RGB-D fusion on NYUv2 improves the reconstruction completeness by approximately 10 IoU points relative to RGB-only networks, particularly on low-texture surfaces [47]. Ablation trials further indicate that learning-based attention modules can down-weight unreliable inputs (e.g., LiDAR under fog), preserving the detection recall within 5% of that under nominal conditions [48]. Collectively, these studies highlight that carefully designed fusion pipelines raise depth accuracy and robustness with a modest computational overhead, although latency trade-offs appear for the most complex architectures.

3.6. Discussion

Geometric methods guarantee physical consistency but falter on ambiguous or low-texture scenes; learning methods generalize through appearance priors yet risk domain shift. Fusion strategies blend complementary strengths, trending toward multi-modal, self-supervised systems that adapt online. Remaining challenges include robust depth estimation under adverse weather and for transparent or specular objects, as well as real-time inference on power-constrained platforms. Advances in neural implicit representations and generative diffusion models (cf. Section 9) promise to close these gaps further by unifying depth, semantics, and appearance into a single, continuous scene model.

4. Visual Localization and Mapping (SLAM)

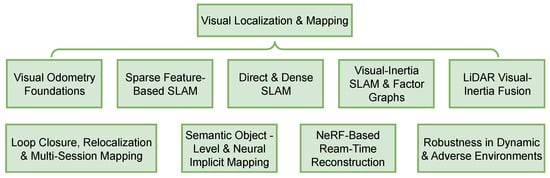

Vision-based Simultaneous Localization and Mapping (SLAM) enables robots to estimate their six-degree-of-freedom (6-DoF) pose while incrementally reconstructing the surrounding scene. Today’s SLAM pipelines power everything from consumer Augmented Reality (AR) to planetary rovers, combining geometric estimation, probabilistic filtering, and, increasingly, deep learning. This section reviews the algorithmic spectrum, organized from low-level visual odometry to multi-sensor, semantic, and learning-augmented systems. Table 2 compares key properties of milestone SLAM systems. Figure 4 provides a schematic of the topics covered in this section, and Figure 5 shows a wheeled robot ready to explore a structured environment.

Figure 4.

A schematic overview of the topics covered in “Visual Localization and Mapping”.

Figure 5.

A wheeled robot exploring an industrial facility.

4.1. Visual Odometry Foundations

Visual odometry (VO) estimates frame-to-frame motion without enforcing global consistency. Early monocular VO tracked sparse features using RANSAC-based pose estimation, while recent direct methods minimize the photometric error over selected pixels. Though computationally lightweight, VO suffers from unbounded drift and scale ambiguity in monocular cases [49,50]. Consequently, most modern SLAM pipelines build upon VO for local tracking and add global optimization for drift correction, as exemplified by the ORB-SLAM family [1].
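The unbounded drift of frame-to-frame estimation can be illustrated with a planar toy example. The sketch assumes SE(2) poses, a robot driving straight in 1 m steps, and a constant heading bias of 0.001 rad per frame in the estimated increments; all names and values are illustrative assumptions rather than measurements from a real VO system.

```python
import math


def compose(pose, delta):
    """Apply a body-frame increment (dx, dy, dtheta) to a planar pose (x, y, theta)."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    return (x + math.cos(theta) * dx - math.sin(theta) * dy,
            y + math.sin(theta) * dx + math.cos(theta) * dy,
            theta + dtheta)


def integrate(deltas):
    """Chain frame-to-frame increments starting from the origin."""
    pose = (0.0, 0.0, 0.0)
    for delta in deltas:
        pose = compose(pose, delta)
    return pose


def position_error(steps, heading_bias_rad=0.001):
    """Distance between the true end point and the one estimated with biased increments."""
    true_pose = integrate([(1.0, 0.0, 0.0)] * steps)
    est_pose = integrate([(1.0, 0.0, heading_bias_rad)] * steps)
    return math.hypot(est_pose[0] - true_pose[0], est_pose[1] - true_pose[1])


for n in (10, 100, 1000):
    print(f"{n:5d} frames -> position error {position_error(n):.3f} m")
```

Each increment is nearly correct, yet the error in the chained pose grows faster than linearly with distance because no global constraint ever corrects it, which is why SLAM pipelines add loop closure and global optimization on top of VO.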

4.2. Sparse Feature-Based SLAM

Feature-based (indirect) SLAM detects salient points (e.g., ORB, FAST, SuperPoint) and refines the camera trajectories via bundle adjustment. The ORB-SLAM lineage epitomizes this paradigm: ORB-SLAM2 supports monocular, stereo, and RGB-D sensors, whereas ORB-SLAM3 extends further to multi-camera, fisheye, and visual–inertial configurations with multi-map Atlas management, becoming a default engine for mixed-reality headsets and service robots [1]. Advantages include real-time speed, robustness to photometric changes, and efficient data association through binary descriptors; limitations arise in texture-poor scenes and under significant motion blur. The authors report real-time performance with CPU-only execution on a Core i7-7700 at 3.6 GHz with 32 GB RAM.

4.3. Direct and Dense SLAM

Direct approaches bypass explicit feature detection, optimizing photometric or depth residuals at the pixel level. LSD-SLAM pioneered large-scale semi-dense monocular mapping [50], while DSO improved the accuracy via sparse photometric optimization. On the dense end, KinectFusion and its successors fuse RGB-D frames into Truncated Signed Distance Fields (TSDFs) for surface reconstruction. BAD-SLAM exploits the dense depth stream of RGB-D cameras and runs a GPU-accelerated direct bundle adjustment that produces centimeter-level indoor reconstructions in real time [7]. It runs on GTX 1080/1070 GPUs (CUDA compute capability ≥ 5.3); a GPU is mandatory because the front-end relies on it for 30 Hz tracking. More recently, DROID-SLAM has replaced the classical geometry with a learned dense-correspondence network and recurrent bundle adjustment, yielding state-of-the-art accuracy on highly dynamic sequences, albeit with heavy GPU use [51]. Direct methods capture richer geometry than indirect pipelines but require photometric calibration and remain sensitive to lighting changes.

4.4. Visual–Inertial SLAM and Factor Graphs

Integrating inertial measurement units (IMUs) mitigates the scale ambiguity and improves the robustness under fast rotations or motion blur. Tightly coupled optimization frameworks, such as VINS-Mono, fuse pre-integrated IMU factors with visual landmarks in a sliding-window bundle adjustment. VINS-Fusion extends this idea by supporting stereo, monocular, and GPS factors; its solver has become a staple on micro air vehicles where the mass and power are constrained [52]. Open-source builds demonstrate 40 Hz VIO on the Jetson TX2/Xavier NX as well as desktop i7 CPUs. ORB-SLAM3 also offers a visual–inertial mode that benefits from IMU pre-integration [1]. Factor-graph formulations leverage the sparse matrix structure for real-time nonlinear optimization, achieving centimeter-level accuracy on aerial and handheld platforms.

4.5. LiDAR–Visual–Inertial Fusion

LiDAR provides geometry immune to lighting yet lacks semantics; cameras offer dense texture but struggle in adverse weather. Recent frameworks, such as LIR-LIVO, combine spinning or solid-state LiDAR with cameras and IMUs to achieve drift-free, all-weather odometry [8]. LIO-SAM departs from vision-centric design and tightly couples LiDAR, inertial measurement unit (IMU), and Global Navigation Satellite System (GNSS) measurements within a factor graph, delivering meter-accurate mapping at the city scale while still running in real time on commodity CPUs [53]. Learned cross-modal feature matching (SuperPoint + LightGlue) further enhances the alignment between LiDAR scans and images [54,55], boosting the robustness for large-scale mobile robots.

4.6. Loop Closure, Relocalization, and Multi-Session Mapping

Detecting loop closures is key to correcting accumulated drift. ORB-SLAM employs a bag-of-words place recognition system (DBoW2) to propose loop candidates; pose-graph or full bundle adjustment then enforces global consistency [1]. Learned global descriptors such as NetVLAD or HF-Net boost the recall under drastic viewpoint or lighting changes [56]. ORB-SLAM3’s Atlas merges multiple submaps on the fly, enabling lifelong, multi-session mapping. Robust relocalization allows for rapid recovery from tracking failures and supports teach-and-repeat navigation.
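How a bag-of-words system ranks loop candidates can be sketched with the L1 similarity score often used with visual vocabularies. This is an illustration under stated assumptions and not ORB-SLAM's actual code: the histograms below hold raw visual-word counts with made-up word identifiers, whereas production systems typically weight words by TF-IDF.

```python
def l1_normalize(hist):
    """Scale a {word_id: weight} histogram so that its weights sum to 1."""
    total = sum(hist.values())
    if total <= 0:
        raise ValueError("histogram must contain positive weights")
    return {word: weight / total for word, weight in hist.items()}


def bow_score(hist_a, hist_b):
    """L1 similarity in [0, 1]: 1 for identical word distributions, 0 for disjoint ones."""
    a, b = l1_normalize(hist_a), l1_normalize(hist_b)
    words = set(a) | set(b)
    l1_distance = sum(abs(a.get(w, 0.0) - b.get(w, 0.0)) for w in words)
    return 1.0 - 0.5 * l1_distance


current = {3: 2, 17: 1, 42: 1}  # hypothetical visual-word counts of the current keyframe
candidates = {
    "revisited place": {3: 2, 17: 1, 42: 1},
    "partial overlap": {3: 1, 99: 1},
    "unrelated place": {7: 3, 8: 1},
}

# Rank candidate keyframes by similarity to the current one.
for name, hist in sorted(candidates.items(),
                         key=lambda item: bow_score(current, item[1]), reverse=True):
    print(f"{name}: {bow_score(current, hist):.2f}")
```

High-scoring candidates are only proposals; geometric verification and pose-graph or bundle adjustment then decide whether the loop is accepted.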

4.7. Semantic, Object-Level, and Neural Implicit Mapping

Augmenting geometric maps with semantics enhances the navigation and manipulation. Kimera integrates real-time metric–semantic SLAM with 3D mesh reconstruction and dynamic scene graphs, producing maps directly usable for high-level planning [57,58,59]. DynaSLAM masks dynamic objects via Mask R-CNN, yielding stable trajectories in crowded scenes [9]. Object-level frameworks such as SLAM++ represent scenes as recognized CAD models, producing compact, interpretable maps [60]. Recent neural implicit systems (iMAP, NeRF-SLAM) jointly optimize camera poses and a continuous radiance field, unifying the geometry and appearance into a learned representation [61,62]. While computationally demanding, these approaches foreshadow integrated metric–semantic mapping.

4.8. NeRF-Based Real-Time 3D Reconstruction for Navigation and Localization

Neural radiance fields (NeRFs) have rapidly progressed from offline novel-view synthesis to online dense mapping that can close the perception–action loop. The pioneering work iMAP showed that a small multi-layer perceptron (MLP) trained incrementally on RGB-D keyframes can already sustain ≈10 Hz camera tracking and 2 Hz global map updates, rivaling TSDF-based RGB-D SLAM on indoor sequences while producing hole-free implicit surfaces [61]. Building on this idea, NICE-SLAM introduced a hierarchical grid encoding: a coarse hash grid stores the global structure whereas per-cell voxel MLPs refine the local detail. The structured latent space yields sub-centimeter depth errors on ScanNet at real-time rates and scales to apartment-sized scenes without catastrophic forgetting [63].

Subsequent systems tighten the speed/accuracy trade-off along three axes.

- Hash-grid accelerations. Instant-NGP’s multiresolution hash encoding cuts both the training and rendering latency by two orders of magnitude (minutes of optimization fall to seconds), enabling live updates on a single RTX-level GPU [64];

- Dense monocular pipelines. NeRF-SLAM couples a direct photometric tracker with an uncertainty-aware NeRF optimizer to achieve real-time tracking and mapping using only a monocular camera, outperforming classical dense SLAM on TUM-RGB-D in L1 depth error [65];

- System-level engineering. The recent SP-SLAM prunes sample rays via scene priors and parallel CUDA kernels, reporting a speed-up over NICE-SLAM while maintaining a low trajectory error on ScanNet [66].
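The multiresolution hash encoding referred to in the first item can be sketched as follows. The sketch assumes the spatial hash described for Instant-NGP (an XOR of integer grid coordinates multiplied by large primes, taken modulo the table size) and a geometric progression of grid resolutions; the default parameters are illustrative. It is a simplification: only the lower corner of the enclosing cell is hashed at every level, whereas a full implementation interpolates learned features from all eight cell corners.

```python
import math

PRIMES = (1, 2_654_435_761, 805_459_861)  # per-axis multipliers of the spatial hash


def hash_index(ix, iy, iz, table_size):
    """Map integer grid coordinates to a slot in a fixed-size feature table."""
    h = (ix * PRIMES[0]) ^ (iy * PRIMES[1]) ^ (iz * PRIMES[2])
    return h % table_size


def level_resolutions(n_min, n_max, levels):
    """Grid resolutions growing geometrically from about n_min to about n_max."""
    growth = math.exp((math.log(n_max) - math.log(n_min)) / (levels - 1))
    return [int(math.floor(n_min * growth ** level)) for level in range(levels)]


def lookup_cells(point, n_min=16, n_max=512, levels=6, table_size=2 ** 14):
    """Return (resolution, table index) per level for a point in the unit cube."""
    cells = []
    for resolution in level_resolutions(n_min, n_max, levels):
        ix, iy, iz = (int(math.floor(c * resolution)) for c in point)
        cells.append((resolution, hash_index(ix, iy, iz, table_size)))
    return cells


for resolution, index in lookup_cells((0.3, 0.7, 0.5)):
    print(f"resolution {resolution:4d} -> table slot {index}")
```

Because every level reads from a small fixed-size table regardless of scene extent, lookups stay cheap and memory stays bounded, at the cost of occasional hash collisions at fine resolutions.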

These real-time NeRF SLAM variants share a common structure: a fast keyframe-based pose front-end (often ORB- or DROID-style) supplies stable camera estimates; a parallel back-end incrementally fits a hash-grid NeRF in windowed regions; and a lightweight ray marcher renders the depth/color for both the reprojection loss and downstream planners. Compared with TSDF or voxel occupancy grids, implicit fields (i) fill unobserved regions plausibly, (ii) compress the geometry of each room into a compact model, and (iii) support photorealistic relocalization for loop closure and teach-and-repeat tasks.

Limitations and Outlook

The current systems still lag behind classical SLAM in pure CPU settings, and GPU memory constrains the outdoor range (tens of meters). Open challenges include (i) lifelong consistency across dynamic or revisited scenes, (ii) multi-modal fusion with LiDAR/radar for all-weather reliability, and (iii) real-time uncertainty quantification for safety-critical control. Despite the current limitations, the fact that real robots can already fly or walk using real-time NeRF maps shows that this kind of neural mapping is becoming a serious, primary approach to robot navigation.

4.9. Robustness in Dynamic and Adverse Environments

Real-world deployment faces moving objects, lighting shifts, rain, dust, and sensor degradation. Robust front-ends combine learned features (SuperPoint [54], LOFTR [67]), outlier-resilient estimators, and motion segmentation to isolate dynamic regions. DROID-SLAM demonstrates that learned dense correspondence and recurrent optimization can maintain the accuracy even in highly dynamic indoor and outdoor sequences at the cost of at least 11 GB of VRAM [51]. Multi-body SLAM jointly tracks camera and object motion [68]. Event cameras enhance tracking under high dynamic ranges or fast motion. Despite progress, fully reliable SLAM in all weather and day/night cycles remains an open challenge, motivating continued research into adaptive sensor fusion and self-supervised model updating.

Table 2.

The key properties of certain milestone SLAM systems. The metrics are the EuRoC MAV Absolute Trajectory Error (ATE) RMSE averaged over the sequences reported in the original papers (NR: not reported).

| System | Year | Sensor Suite | EuRoC ATE RMSE (Avg.) | Loop Closure | Semantic | Hardware |
|---|---|---|---|---|---|---|
| VINS-Fusion [52] | 2018 | Mono/Stereo + IMU + GPS | 0.18 m | Yes | No | CPU |
| BAD-SLAM [7] | 2019 | RGB-D | NR | Yes | No | GPU |
| LIO-SAM [53] | 2020 | LiDAR + IMU + GNSS | NR | Yes | No | CPU |
| Kimera [58] | 2020 | Mono/Stereo/RGB-D + IMU | NR | Yes | Yes | CPU |
| DROID-SLAM [51] | 2021 | Mono/Stereo/RGB-D | 0.022 m | Implicit | No | GPU |
| ORB-SLAM3 [1] | 2021 | Mono/Stereo/RGB-D/IMU | 0.036 m | Yes | No | CPU |

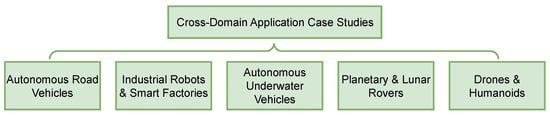

6. Cross-Domain Application Case Studies

Vision-based autonomy manifests differently across engineering domains, each imposing unique environmental constraints, safety requirements, and cost structures. Figure 8 illustrates the content of this section. We highlight four representative case studies plus emerging platforms.

Figure 8.

A schematic overview of the topics covered in “Cross-Domain Application Case Studies”.

6.1. Autonomous Road Vehicles

Camera-centric stacks in self-driving cars fuse multi-camera perception with LiDAR and radar for robust 360° situational awareness [115]. Geometric SLAM supplies ego-motion, while deep networks perform 3D object detection from lifted BEV features [116]. Benchmarks such as KITTI, Cityscapes, and 4Seasons expose challenges ranging from dynamic traffic to night-time and seasonal variations [12,41,117]. The commercial trends favor solid-state LiDAR–camera fusion for long-range detection, with learned depth completion and NetVLAD-style loop closure to handle sparse urban loops.

6.2. Industrial Robots and Smart Factories

Eye-in-hand RGB-D cameras guide grasp networks for bin picking and flexible assembly [105]. Closed-loop visual servoing combined with passive compliance achieves sub-millimeter insertions without force sensors [13]. AI-powered vision inspectors surpass human accuracy for defect detection and dimensional checks, leveraging synthetic data augmentation to address data scarcity [106]. Safety standards drive redundancy: dual-camera setups validate each other, and depth gating prevents inadvertent collision with human co-workers.

6.3. Autonomous Underwater Vehicles (AUVs)

In GPS-denied oceans, AUVs rely on monocular or stereo cameras fused with Doppler velocity logs and pressure sensors for visual–inertial SLAM [14]. Photogrammetric pipelines create high-resolution seabed mosaics for ecological reviews, while visual docking systems detect LED patterns to achieve centimeter-level alignment [118]. Event cameras and specialized de-hazing networks mitigate low-light and turbid conditions, but color distortion and refraction remain open research problems [119].

6.4. Planetary and Lunar Rovers

Mars and lunar rovers employ mast-mounted stereo pairs for terrain mapping, hazard avoidance, and slip prediction [120]. NASA’s Perseverance drove most of its first km via on-board vision-based AutoNav, outperforming earlier generations [4]. Field tests combine visual–inertial odometry with crater-based absolute localization and LiDAR flash sensors for shadowed regions [121]. The Chinese Zhurong similarly demonstrated autonomous navigation on the Martian surface [122].

6.5. Emerging Platforms: Drones and Humanoids

Agile UAVs leverage event cameras and GPU-accelerated VIO for high-speed flight through clutter, achieving >50 m/s in racing scenarios [2]. Next-generation humanoids integrate multi-camera rigs, depth sensors, and large vision language models for manipulation in human environments [123]. Sim-to-real transfer via reinforcement learning and domain randomization is critical for these high-DoF platforms.

Recent quantitative benchmarks across representative air and legged platforms show that multirotors still dominate in specific power while humanoids excel in autonomy per payload. The AscTec Firefly peaks at and the DJI Matrice 600 at , whereas the hydraulic Atlas delivers and the electrically driven Valkyrie [44,124,125,126]. Endurance paints the opposite picture: despite its miniature scale, the Crazyflie 2.1 sustains only 6 min of hover, the Firefly 12 min, and the M600 18 min, yet Atlas and Valkyrie can operate for roughly 50 and 60 min of level walking, respectively [125,126,127]. Normalizing the endurance by deliverable payload reveals an efficiency gap: the M600 achieves about , whereas Atlas and Valkyrie exceed , reflecting the energetic advantages of periodic legged gaits when the payload mass dwarfs the locomotor output. Reliability, however, remains the chief obstacle for humanoids—Valkyrie records a mean time between falls of just 18 min on DARPA-style terrain—while mature UAV avionics enable mission-critical MTBF figures approaching 10 h on the M600 [124,126]. Collectively, these metrics underscore a trade space in which aerial robots offer an unmatched peak power density and maneuverability, whereas humanoids trade speed for superior payload economy and terrestrial reachability.

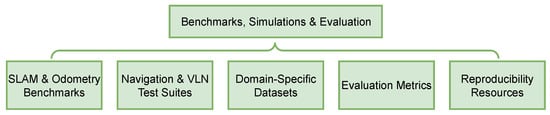

7. Benchmark Datasets, Simulators, and Evaluation Metrics

Benchmarking is central to progress in robot perception and navigation. This section surveys the most widely used datasets, simulators, and evaluation metrics across SLAM, visual odometry, autonomous navigation, and task-specific domains. By standardizing the experimental conditions and reporting protocols, these resources support reproducible comparisons and accelerate system development. Figure 9 provides a schematic overview of the topics covered.

Figure 9.

A schematic overview of the topics covered in “Benchmark Datasets, Simulators, and Evaluation Metrics”.

7.1. SLAM and Odometry Benchmarks

- KITTI Odometry—Stereo/mono sequences with a LiDAR ground truth for driving scenarios; reports translational and rotational RMSEs over 100 m/800 m segments [41];

- EuRoC MAV—Indoor VICON-tracked stereo+IMU recordings for micro-aerial vehicles [44];

- TUM VI—A high-rate fisheye+IMU dataset emphasizing photometric calibration and rapid motion [45];

- 4Seasons—Multi-season, day/night visual–LiDAR sequences for robustness testing under appearance changes [12].

7.2. Navigation and VLN Test Suites

- CARLA—An open-source urban driving simulator with weather perturbations; metrics include the success, collision, and lane invasion rates [128].

- AI Habitat—Photorealistic indoor simulation for point-goal and visual language tasks; success weighted by path length (SPL) is the standard metric [93,129,130,131].
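For reference, SPL averages, over all episodes, the success indicator multiplied by the ratio of the shortest-path length to the larger of the shortest-path length and the path actually taken. The Python sketch below is a minimal illustration of that definition; the function name `spl` and the tuple layout of an episode are assumptions made here, not part of any simulator's API.

```python
def spl(episodes):
    """Success weighted by Path Length over a set of episodes.

    episodes: iterable of (success, shortest_path_length, agent_path_length),
    with lengths in the same unit and shortest_path_length > 0.
    """
    episodes = list(episodes)
    if not episodes:
        raise ValueError("at least one episode is required")
    total = 0.0
    for success, shortest, taken in episodes:
        if shortest <= 0:
            raise ValueError("shortest_path_length must be positive")
        if success:
            # A successful episode scores 1 only if the agent path is optimal.
            total += shortest / max(taken, shortest)
    return total / len(episodes)
```

As an example, one optimal success, one success that took twice the shortest path, and one failure give an SPL of (1 + 0.5 + 0) / 3 = 0.5.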

7.3. Domain-Specific Datasets

City-scale perception benchmarks (Cityscapes, nuScenes, Waymo) supply dense labels across multiple sensors for autonomous driving [117,132,133]. Underwater datasets (e.g., RUIE [134] and Aqualoc [135]) capture turbidity variations, while lunar/planetary analog datasets provide stereo and thermal imagery with inertial ground truth.

7.4. Evaluation Metrics

Pose Estimation: Absolute trajectory error (ATE) and relative pose error (RPE) quantify drift; KITTI uses segment-level drift percentages. Mapping: The quality of surface reconstruction is measured via completeness and accuracy against dense laser scans. Navigation: Success/collision rates, the SPL, and dynamic obstacle violations dominate. Detection and Segmentation: The mean average precision (mAP) and panoptic quality (PQ) are used to evaluate 2D/3D perception. Industrial Manipulation: Pick success rates, cycle times, and force thresholds are used to benchmark grasp and assembly.
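The two pose metrics can be made concrete with a short Python sketch. It is a simplified illustration under explicit assumptions: the estimated and ground-truth trajectories are already time-associated and rigidly aligned, only positions are used, and the relative error is computed on position increments in the shared frame rather than on full SE(3) relative poses. The function names are hypothetical.

```python
import math


def ate_rmse(estimated, ground_truth):
    """RMSE of position errors between two aligned, time-associated trajectories."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = [
        sum((e - g) ** 2 for e, g in zip(p_est, p_gt))
        for p_est, p_gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared) / len(squared))


def rpe_translation_rmse(estimated, ground_truth, delta=1):
    """RMSE of the error in position increments over a fixed frame gap `delta`."""
    if len(estimated) != len(ground_truth) or len(estimated) <= delta:
        raise ValueError("need equal-length trajectories longer than delta")
    squared = []
    for i in range(len(estimated) - delta):
        step_est = [b - a for a, b in zip(estimated[i], estimated[i + delta])]
        step_gt = [b - a for a, b in zip(ground_truth[i], ground_truth[i + delta])]
        squared.append(sum((x - y) ** 2 for x, y in zip(step_est, step_gt)))
    return math.sqrt(sum(squared) / len(squared))
```

ATE reflects global consistency, so a single early error penalizes every later pose, whereas the relative error isolates local drift per step; reporting both gives a more complete picture.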

7.5. Open-Source Software and Reproducibility Resources

Widely adopted frameworks such as ORB-SLAM3, VINS-Mono, OpenVINS, and Kimera provide reference implementations with ROS interfaces, facilitating comparative studies. Public leaderboards (e.g., KITTI Vision, the nuScenes detection challenge) encourage transparent reporting and rapid iterations. Table 3 displays some of the metrics used in robot perception, mapping, navigation, and robotic manipulation.

Table 3.

Frequently used metrics for evaluating robot perception, mapping, navigation, and manipulation.

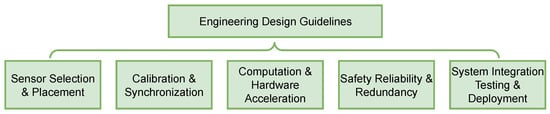

8. Engineering Design Guidelines

Drawing on the cross-domain lessons above, we distil the practical recommendations for designing, integrating, and maintaining vision-based robotic systems. Figure 10 displays a schematic overview of the main aspects discussed in this section.

Figure 10.

A schematic overview of the topics covered in “Engineering Design Guidelines”.

8.1. Sensor Selection and Placement

- Match the modality to the environment: Favor passive stereo or LiDAR in outdoor sunlight; RGB-D or structured light indoors; and thermal cameras for night or smoke [6,35];

- Maximizing the complementary overlap: Arrange multi-modal rigs with overlapping fields of view to ease extrinsic calibration and redundancy. For 360° coverage, stagger cameras with a overlap to maintain feature continuity [21];

- Minimize parallax in manipulation: Mount eye-in-hand cameras close to the end-effector to reduce hand-eye calibration errors while ensuring a sufficient baseline for stereo depth.

8.2. Calibration and Synchronization

Accurate extrinsic calibration underpins multi-sensor fusion. Spatiotemporal calibration boards or online photometric–geometric optimization (e.g., Kalibr) are used to jointly refine time delays and poses. Recalibration is required after mechanical shocks or temperature excursions; thermal cameras additionally require emissivity compensation [34].
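To give a sense of what estimating a time delay involves, the sketch below finds a coarse offset between two equally sampled signals (for instance, the angular-rate magnitude derived from camera motion and from a gyroscope) by maximizing their cross-correlation. This is a simpler, swapped-in technique for illustration and not the optimization performed by Kalibr; the function name and the assumption of a common, uniform sample rate are hypothetical.

```python
def estimate_delay_samples(reference, delayed, max_lag):
    """Return the lag (in samples) at which `delayed` best matches `reference`.

    A positive result means `delayed` lags behind `reference`.
    Both signals are assumed to share one uniform sample rate.
    """
    def centered(signal):
        mean = sum(signal) / len(signal)
        return [v - mean for v in signal]

    ref = centered(reference)
    dly = centered(delayed)
    best_lag = 0
    best_score = float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        count = 0
        for i, r in enumerate(ref):
            j = i + lag
            if 0 <= j < len(dly):
                score += r * dly[j]
                count += 1
        if count:
            score /= count  # normalize by the overlap length
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag
```

Dividing the returned lag by the sample rate gives the delay in seconds. Such a coarse estimate is limited to one-sample resolution and would normally only initialize a finer joint refinement.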

8.3. Real-Time Computation and Hardware Acceleration

Latency-critical front-ends (feature extraction, CNN inference) are embedded onto GPUs or specialized accelerators; global bundle adjustment is offloaded to CPU threads or the cloud if the bandwidth allows. The worst-case compute loads are profiled and ≥50% headroom is maintained for unexpected dynamics. Event camera pipelines benefit from FPGAs or neuromorphic hardware to process asynchronous streams at μs scale [2].
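The headroom rule can be checked with simple arithmetic. The sketch below assumes one plausible reading of the guideline, namely that headroom is the fraction of the per-frame time budget left unused by the worst-case processing latency; this interpretation and the function names are assumptions for illustration.

```python
def compute_headroom(worst_case_latency_ms, frame_rate_hz):
    """Fraction of the per-frame time budget left free in the worst case."""
    if frame_rate_hz <= 0:
        raise ValueError("frame_rate_hz must be positive")
    budget_ms = 1000.0 / frame_rate_hz  # time available per frame
    return 1.0 - worst_case_latency_ms / budget_ms


def meets_headroom(worst_case_latency_ms, frame_rate_hz, required=0.5):
    """True if the worst-case load leaves at least `required` headroom."""
    return compute_headroom(worst_case_latency_ms, frame_rate_hz) >= required
```

For example, a 12 ms worst case at 30 Hz uses 36% of the 33.3 ms budget and leaves 64% headroom, whereas a 25 ms worst case leaves only 25% and would fail the check.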

8.4. Safety, Reliability, and Redundancy

Watchdogs should trigger a safe stop when the perception confidence drops, as indicated by the feature count, reprojection error, or network uncertainty. Multi-modal outputs (e.g., LiDAR range vs. stereo) should be cross-checked to detect sensor faults. For collaborative robots, depth or IR gating enforces separation zones in support of compliance with ISO 10218-1 and ISO 10218-2 [136,137].
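A watchdog of this kind can be sketched in a few lines of Python. All class names, thresholds, and the debounce over consecutive unhealthy frames are hypothetical choices made here for illustration; suitable limits depend on the sensor, the estimator, and the safety case.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PerceptionHealth:
    feature_count: int            # tracked features in the current frame
    reprojection_error_px: float  # mean reprojection error, in pixels
    network_uncertainty: float    # e.g., normalized predictive entropy in [0, 1]


@dataclass
class WatchdogLimits:
    # Illustrative placeholder values, not recommended settings.
    min_features: int = 50
    max_reprojection_error_px: float = 2.0
    max_uncertainty: float = 0.5
    max_consecutive_failures: int = 3


class PerceptionWatchdog:
    def __init__(self, limits: Optional[WatchdogLimits] = None) -> None:
        self.limits = limits or WatchdogLimits()
        self.consecutive_failures = 0

    def frame_is_healthy(self, health: PerceptionHealth) -> bool:
        lim = self.limits
        return (
            health.feature_count >= lim.min_features
            and health.reprojection_error_px <= lim.max_reprojection_error_px
            and health.network_uncertainty <= lim.max_uncertainty
        )

    def update(self, health: PerceptionHealth) -> bool:
        """Return True when a safe stop should be requested."""
        if self.frame_is_healthy(health):
            self.consecutive_failures = 0
        else:
            self.consecutive_failures += 1
        return self.consecutive_failures >= self.limits.max_consecutive_failures


def ranges_disagree(lidar_range_m: float, stereo_range_m: float,
                    rel_tol: float = 0.10) -> bool:
    """Flag a possible sensor fault when two range estimates differ too much."""
    reference = max(lidar_range_m, stereo_range_m)
    return abs(lidar_range_m - stereo_range_m) > rel_tol * reference
```

Requiring several consecutive unhealthy frames before stopping trades reaction time for fewer spurious stops; a safety-critical deployment may prefer a limit of one.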

8.5. System Integration, Testing, and Deployment

Modular architectures (ROS 2, DDS) with simulation-in-the-loop testing (CARLA, Habitat) are adopted before field deployment. Hardware-in-the-loop rigs with lighting and weather variations are used to expose edge cases early. Continuous integration pipelines should replay recorded bag files against regression tests and dataset benchmarks (KITTI, EuRoC). Exhaustive logs (sensor, pose, control) should be recorded for post-processing analysis and dataset curation.

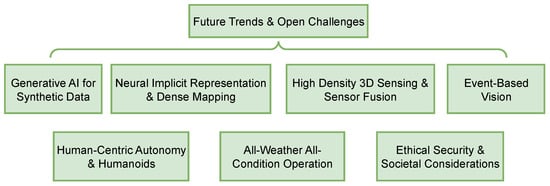

9. Future Trends and Open Research Challenges

Robotic perception is rapidly evolving: generative models now supply physics-faithful synthetic data to reinforce training sets, neural implicit maps target city-scale 3D reconstructions, and emerging sensors provide denser or faster measurements for demanding applications. Simultaneously, multi-modal fusion, human-aware reasoning, and all-weather reliability have moved from exploratory studies to core design goals. These advances promise autonomous systems that learn from richer signals, adapt online, and cooperate naturally with people, yet they create pressing challenges—ensuring domain realism, scaling the representations, fusing heterogeneous sensors under adverse conditions, and embedding strong ethical and security safeguards—which the following subsections address. A schematic illustration of the subjects discussed in this section is provided in Figure 11.

Figure 11.

Schematic overview of the topics covered in “Future Trends and Open Research Challenges”.

9.1. Generative AI for Synthetic Data and Scene Completion

Recent advances in deep generative models—especially diffusion models coupled with 3D scene representations such as neural radiance fields (NeRFs)—are transforming the data generation for robotics and autonomous driving. Systems like NeRFiller iteratively inpaint missing geometry in captured scans, producing multi-view-consistent and simulation-ready completions without user prompts [138]. Hierarchical latent diffusion models (e.g., XCube) extend this capability to high-resolution voxel grids, enabling both single-scan scene completion and text-to-3D generation [139]. In autonomous driving, hierarchical Generative Cellular Automata can synthetically extend realistic LiDAR beyond a sensor’s field of view, enriching the training corpora for long-range perception [140]. These techniques supply abundant, diverse data and explicitly infer occluded regions, narrowing the sim-to-real gap [141].

Open challenges include (i) ensuring domain realism—synthetic data must match real sensor physics to avoid negative transfer [141]; (ii) embedding physical plausibility (support, lighting, dynamics) to prevent the generation of unrealistic objects; (iii) achieving real-time inference through model distillation or efficient generative back-ends; and (iv) establishing standardized metrics for 3D generative quality. Addressing these issues will move generative pipelines from offline augmentation to on-board perception.

To minimize the sim-to-real gap, researchers pair generative pipelines with unsupervised domain adaptation—e.g., style transfer networks such as CycleGAN, feature-level alignment via Maximum Mean Discrepancy, or domain randomization curricula that expose action policies to wide visual variations [142,143,144]. These methods consistently cut the performance drop from synthetic to real data by 20–40% across detection and depth completion benchmarks.
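As an illustration of feature-level alignment, the Maximum Mean Discrepancy between a batch of synthetic features and a batch of real features can be estimated with a kernel two-sample statistic; a training loop would add this value to the task loss so that the two feature distributions are pulled together. The sketch below is a plain-Python version of the biased squared-MMD estimator with a Gaussian kernel. The function names and the bandwidth parameter `gamma` are assumptions for illustration, and a practical system would compute this on batched tensors inside a deep learning framework.

```python
import math


def rbf_kernel(a, b, gamma):
    """Gaussian kernel exp(-gamma * ||a - b||^2) between two feature vectors."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))


def mmd_squared(source, target, gamma=1.0):
    """Biased estimate of the squared MMD between two sets of feature vectors."""
    if not source or not target:
        raise ValueError("both feature sets must be non-empty")

    def mean_kernel(p, q):
        return sum(rbf_kernel(a, b, gamma) for a in p for b in q) / (len(p) * len(q))

    return (
        mean_kernel(source, source)
        + mean_kernel(target, target)
        - 2.0 * mean_kernel(source, target)
    )
```

The statistic is zero when the two sets coincide and grows as the feature distributions separate, which is what makes it usable as an alignment penalty.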

9.2. Neural Implicit Representations and Dense 3D Mapping

Neural implicit maps promise unified geometry, appearance, and semantics at an arbitrary resolution. Grid-based encodings inspired by Instant-NGP, where NGP stands for Neural Graphics Primitives, accelerate optimization by orders of magnitude; Uni-SLAM uses decoupled hash grids and uncertainty-weighted updates to deliver real-time, dense reconstructions while capturing thin structures [145]. For city-scale scenarios, Block-NeRF partitions the environment into independent NeRF blocks that can be trained, streamed, and updated separately, achieving neighborhood-sized neural maps from millions of images [146].

Open challenges center on (i) scalability, representing ever-larger or dynamic worlds without prohibitive memory; (ii) fast incremental training so that maps can adapt at Hz; (iii) probabilistic uncertainty estimation for safety-critical localization; and (iv) robust fusion of multi-modal data (RGB, depth, LiDAR) within a single implicit field.

9.3. High-Density 3D Sensing and Sensor Fusion

Emerging solid-state LiDARs, panoramic ToF, and wide-baseline stereo dramatically increase the point density while lowering cost [147]. Learning-based early fusion networks now exploit these rich signals: SupFusion leverages teacher-guided feature supervision to improve the LiDAR–camera detection accuracy [144], while weather-conditional radar–LiDAR fusion dynamically re-weights modalities to maintain the performance in rain, fog, and snow [148].

Research directions include efficient representations (e.g., bird’s-eye-view tensors) that scale to millions of points per frame, online calibration and temporal alignment of heterogeneous sensors, and graceful degradation under sensor failure.

9.4. Event-Based Vision for Ultra-Fast Control

Event cameras provide μs-level latency and a high dynamic range, enabling kilohertz feedback for agile drones and manipulation. A hybrid event+frame detector recently demonstrated the motion-capture capability of a 5000 FPS camera at the bandwidth of a 45 FPS video stream, validating the benefit of combining asynchronous events with conventional frames [149].

Key gaps remain in developing high-resolution event sensors, establishing standard benchmarks, and unifying frame–event SLAM pipelines that can localize and map at high speeds.

9.5. Human-Centric Autonomy and Humanoids

Human-centric systems increasingly embed large-language or multi-modal models to understand intent and follow natural instructions. In manufacturing, LLM-mediated “symbiotic” robots adjust their plans based on real-time human feedback [150], while pedestrian action and trajectory co-prediction boosts social compliance in driving [151]. Humanoid research is progressing in terms of whole-body manipulation and dynamic locomotion, with surveys highlighting advances in lightweight structures, reinforcement-learning-based control, and safety considerations [152].

Open problems include fluid, context-aware teamwork; safe physical interactions; energy-efficient actuation; and lifelong skill acquisition in unstructured homes.

9.6. All-Weather, All-Condition Operation

Robust autonomy demands perception that endures rain, fog, snow, night, and glare. Multi-modal suites now integrate imaging radar and thermal IR, with condition-aware fusion boosting the detection when vision degrades [148]. Benchmarks such as ACDC provide the adverse-weather ground truth yet remain limited in scale [153]. Simulation with physics-based weather effects and diffusion-generated augmentations further enlarges the rare-condition data [141].

The research must tackle adaptive sensor scheduling, self-supervised domain adaptation, and resilient SLAM that tolerates partial sensor failure, while expanding public datasets covering extreme conditions.

9.7. Ethical, Security, and Societal Considerations

Public acceptance hinges on privacy-preserving perception, secure update pipelines, and ethically aligned decision making. Empirical studies show that hybrid ethical algorithms, which balance utilitarian, deontological, and relational principles, are viewed as more acceptable for AV dilemma scenarios than single-rule strategies [154]. Dataset bias auditing and fairness enforcement remain critical to preventing unsafe behaviors, and governance frameworks must evolve alongside technical safeguards.

10. Conclusions

Vision has evolved from a supplemental sensor to a central pillar of robotic autonomy. This review has mapped the engineering landscape from the photon to the actuator, unifying the advances in the sensing hardware, depth inference, SLAM, navigation, and cross-domain deployment. We showed how a diverse sensor palette—of monocular RGB, stereo, RGB-D, LiDAR, event, and infrared—provides complementary trade-offs in range, latency, cost, and robustness. Modern SLAM systems integrate visual, inertial, and LiDAR cues with learned features and semantic understanding to achieve a centimeter-level accuracy over kilometer-scale trajectories, even in dynamic environments. The navigation strategies now span classical geometry-aware planning, deep reinforcement and imitation learning, topological visual memories, and visual language grounding, enabling robots to operate from factory floors to Martian plains.

Case studies in autonomous driving, industrial manipulation, underwater exploration, and planetary rovers have illustrated how the domain constraints shape the sensor–algorithm choices, while benchmark datasets and simulators facilitate reproducible evaluations. Our engineering guidelines distilled the best practices in the sensor placement, calibration, compute budgeting, safety monitoring, and system integration—critical for translating research prototypes into reliable products.

By analyzing 150 relevant studies in robotic navigation and computer vision, five consistent findings emerged that cut across sensors, algorithms, and application domains:

- Fusion is mandatory. Across the benchmarks, combining complementary sensors markedly improves the accuracy: RGB + LiDAR depth completion cuts the RMSE by about 30% on KITTI, and adding an IMU reduces the visual drift on EuRoC/TUM VI from 1–2% to below 0.5% of the trajectory length (see Section 3.5).

- Learning is the new front-end. Learned feature extractors (SuperPoint + LightGlue) and dense networks such as DROID-SLAM consistently outperform handcrafted pipelines, maintaining high recall and robust tracking under severe viewpoint, lighting, and dynamic scene changes (Section 4.2 and Section 4.9).

- Implicit mapping is now real-time. Hash-grid NeRF variants—e.g., Instant-NGP and NICE-SLAM—reach >15 Hz camera tracking with a centimeter-level depth accuracy on a single consumer GPU (Section 4.8).

- Topological representations slash memory costs. Systems such as NavTopo achieve an order-of-magnitude (∼10×) reduction in storage relative to that for dense metric grids while retaining centimeter-level repeatability for long routes (Section 5.4).

- Safety relies on redundancy and self-diagnosis. The guideline section (Section 8.4) shows that cross-checking LiDAR, stereo, and network uncertainty signals—coupled with watchdog triggers—detects most perception anomalies before they propagate to the controller, underscoring the importance of built-in fault detection.

While a narrative review is well-suited to providing a broad, integrative panorama of a fast-moving field, it inevitably trades methodological rigor for breadth. It is worth noting that the expert-guided search, although systematic within our team, does not guarantee exhaustive coverage and may introduce selection bias toward well-known venues. Future work could mitigate these issues by coupling our conceptual framework with a protocol-driven scoping or systematic review—following the PRISMA-ScR or Joanna Briggs guidelines. Looking forward, generative AI, neural implicit mapping, high-density 3D sensing, and event-based vision promise to blur the line between perception and cognition further, pushing autonomy toward all-weather, human-aware operation. Yet open challenges remain: maintaining a robust performance in extreme conditions, secure and ethical data handling, and scalable benchmarks for emerging humanoid and aerial platforms. Addressing these issues demands interdisciplinary collaboration across optics, machine learning, control, and system engineering—hallmarks. We hope that this review equips researchers and practitioners with a cohesive roadmap for advancing vision-based robotics from niche deployments to ubiquitous, trustworthy partners in everyday life.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/eng6070153/s1. The spreadsheet (review_stats.ods) contains three tables counting the entry types (article or conference), the publications per year, and the top 15 publication venues.

Author Contributions

Conceptualization: E.A.R.-M., O.S. and W.F.-F.; methodology: F.N.M.-R. and F.A.; investigation: E.A.R.-M.; resources: W.F.-F.; writing—original draft preparation: E.A.R.-M.; writing—review and editing: W.F.-F. and F.A.; supervision: F.A.; project administration: W.F.-F.; funding acquisition: E.A.R.-M. and W.F.-F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science, Humanities, Technology, and Innovation (Secihti), Mexico, formerly known as CONAHCYT.

Data Availability Statement

The spreadsheet containing the counts of publications by year, entry types and the top-15 venues (Supplementary File S1) is openly available alongside this article.

Conflicts of Interest

Author Farouk Achakir was employed by the company Belive AI Lab. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.; Tardós, J.D. ORB-SLAM3: An accurate open-source library for visual, visual–inertial, and multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Gallego, G.; Delbrück, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.J.; Conradt, J.; Daniilidis, K.; et al. Event-based vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 154–180.

- Wang, J.; Yu, Z.; Zhou, D.; Shi, J.; Deng, R. Vision-Based Deep Reinforcement Learning of Unmanned Aerial Vehicle (UAV) Autonomous Navigation Using Privileged Information. Drones 2024, 8, 782.

- Verma, V.; Maimone, M.W.; Gaines, D.M.; Francis, R.; Estlin, T.A.; Kuhn, S.R.; Rabideau, G.R.; Chien, S.A.; McHenry, M.M.; Graser, E.J.; et al. Autonomous robotics is driving Perseverance rover’s progress on Mars. Sci. Robot. 2023, 8, eadi3099.

- Arafat, M.Y.; Alam, M.M.; Moh, S. Vision-based navigation techniques for unmanned aerial vehicles: Review and challenges. Drones 2023, 7, 89.

- Panduru, K.; Walsh, J. Exploring the Unseen: A Survey of Multi-Sensor Fusion and the Role of Explainable AI (XAI) in Autonomous Vehicles. Sensors 2025, 25, 856.

- Schops, T.; Sattler, T.; Pollefeys, M. BAD SLAM: Bundle adjusted direct RGB-D SLAM. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 134–144.

- Zhou, S.; Wang, Z.; Dai, X.; Song, W.; Gu, S. LIR-LIVO: A Lightweight, Robust LiDAR/Vision/Inertial Odometry with Illumination-Resilient Deep Features. arXiv 2025, arXiv:2502.08676.

- Bescos, B.; Fácil, J.M.; Civera, J.; Neira, J. DynaSLAM: Tracking, mapping, and inpainting in dynamic scenes. IEEE Robot. Autom. Lett. 2018, 3, 4076–4083.

- Bhaskar, A.; Mahammad, Z.; Jadhav, S.R.; Tokekar, P. NAVINACT: Combining Navigation and Imitation Learning for Bootstrapping Reinforcement Learning. arXiv 2024, arXiv:2408.04054.

- Huang, C.; Mees, O.; Zeng, A.; Burgard, W. Visual language maps for robot navigation. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 10608–10615.

- Wenzel, P.; Yang, N.; Wang, R.; Zeller, N.; Cremers, D. 4Seasons: Benchmarking visual SLAM and long-term localization for autonomous driving in challenging conditions. Int. J. Comput. Vis. 2024, 133, 1564–1586.

- Morgan, A.S.; Wen, B.; Liang, J.; Boularias, A.; Dollar, A.M.; Bekris, K. Vision-driven compliant manipulation for reliable, high-precision assembly tasks. arXiv 2021, arXiv:2106.14070.

- Wang, X.; Fan, X.; Shi, P.; Ni, J.; Zhou, Z. An overview of key SLAM technologies for underwater scenes. Remote Sens. 2023, 15, 2496.

- Villalonga, C. Leveraging Synthetic Data to Create Autonomous Driving Perception Systems. Ph.D. Thesis, Universitat Autònoma de Barcelona, Barcelona, Spain, 2020. Available online: https://ddd.uab.cat/pub/tesis/2021/hdl_10803_671739/gvp1de1.pdf (accessed on 6 July 2025).

- Yu, T.; Xiao, T.; Stone, A.; Tompson, J.; Brohan, A.; Wang, S.; Singh, J.; Tan, C.; M, D.; Peralta, J.; et al. Scaling Robot Learning with Semantically Imagined Experience. In Proceedings of the Robotics: Science and Systems (RSS), Daegu, Republic of Korea, 10–14 July 2023.

- Shahria, M.T.; Sunny, M.S.H.; Zarif, M.I.I.; Ghommam, J.; Ahamed, S.I.; Rahman, M.H. A comprehensive review of vision-based robotic applications: Current state, components, approaches, barriers, and potential solutions. Robotics 2022, 11, 139.

- Wang, H.; Li, J.; Dong, H. A Review of Vision-Based Multi-Task Perception Research Methods for Autonomous Vehicles. Sensors 2025, 25, 2611.

- Grant, M.J.; Booth, A. A typology of reviews: An analysis of 14 review types and associated methodologies. Health Inf. Libr. J. 2009, 26, 91–108.

- Liang, H.; Ma, Z.; Zhang, Q. Self-supervised object distance estimation using a monocular camera. Sensors 2022, 22, 2936.

- Won, C.; Seok, H.; Cui, Z.; Pollefeys, M.; Lim, J. OmniSLAM: Omnidirectional localization and dense mapping for wide-baseline multi-camera systems. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 559–566.

- Liu, P.; Geppert, M.; Heng, L.; Sattler, T.; Geiger, A.; Pollefeys, M. Towards robust visual odometry with a multi-camera system. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1154–1161.

- Fields, J.; Salgian, G.; Samarasekera, S.; Kumar, R. Monocular structure from motion for near to long ranges. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops, Kyoto, Japan, 27 September–4 October 2009; pp. 1702–1709.

- Haseeb, M.A.; Ristić-Durrant, D.; Gräser, A. Long-range obstacle detection from a monocular camera. In Proceedings of the ACM Computer Science in Cars Symposium (CSCS), Munich, Germany, 13–14 September 2018; pp. 13–14.

- Pinggera, P.; Franke, U.; Mester, R. High-performance long range obstacle detection using stereo vision. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 1308–1313.

- Huang, A.S.; Bachrach, A.; Henry, P.; Krainin, M.; Maturana, D.; Fox, D.; Roy, N. Visual odometry and mapping for autonomous flight using an RGB-D camera. In Proceedings of the Robotics Research: The 15th International Symposium ISRR, Puerto Varas, Chile, 11–14 December 2017; pp. 235–252.

- Adiuku, N.; Avdelidis, N.P.; Tang, G.; Plastropoulos, A. Advancements in learning-based navigation systems for robotic applications in MRO hangar. Sensors 2024, 24, 1377.

- Jeyabal, S.; Sachinthana, W.; Bhagya, S.; Samarakoon, P.; Elara, M.R.; Sheu, B.J. Hard-to-Detect Obstacle Mapping by Fusing LIDAR and Depth Camera. IEEE Sens. J. 2024, 24, 24690–24698.

- Fan, Z.; Zhang, L.; Wang, X.; Shen, Y.; Deng, F. LiDAR, IMU, and camera fusion for simultaneous localization and mapping: A systematic review. Artif. Intell. Rev. 2025, 58, 1–59.

- Shi, C.; Song, N.; Li, W.; Li, Y.; Wei, B.; Liu, H.; Jin, J. A Review of Event-Based Indoor Positioning and Navigation. IPIN-WiP 2022, 3248, 12.

- Furmonas, J.; Liobe, J.; Barzdenas, V. Analytical review of event-based camera depth estimation methods and systems. Sensors 2022, 22, 1201.

- Rebecq, H.; Gallego, G.; Mueggler, E.; Scaramuzza, D. EMVS: Event-based multi-view stereo—3D reconstruction with an event camera in real-time. Int. J. Comput. Vis. 2018, 126, 1394–1414.

- Munford, M.J.; Rodriguez y Baena, F.; Bowyer, S. Stereoscopic Near-Infrared Fluorescence Imaging: A Proof of Concept Toward Real-Time Depth Perception in Surgical Robotics. Front. Robot. 2019, 6, 66.

- Nguyen, T.X.B.; Rosser, K.; Chahl, J. A review of modern thermal imaging sensor technology and applications for autonomous aerial navigation. J. Imaging 2021, 7, 217.

- Khattak, S.; Papachristos, C.; Alexis, K. Visual-thermal landmarks and inertial fusion for navigation in degraded visual environments. In Proceedings of the 2019 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2019; pp. 1–9.

- NG, A.; PB, D.; Shalabi, J.; Jape, S.; Wang, X.; Jacob, Z. Thermal Voyager: A Comparative Study of RGB and Thermal Cameras for Night-Time Autonomous Navigation. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 14116–14122.

- Delaune, J.; Hewitt, R.; Lytle, L.; Sorice, C.; Thakker, R.; Matthies, L. Thermal-inertial odometry for autonomous flight throughout the night. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1122–1128.

- Hou, F.; Zhang, Y.; Zhou, Y.; Zhang, M.; Lv, B.; Wu, J. Review on infrared imaging technology. Sustainability 2022, 14, 11161.

- Zhang, J. Survey on Monocular Metric Depth Estimation. arXiv 2025, arXiv:2501.11841.

- Tateno, K.; Tombari, F.; Laina, I.; Navab, N. CNN-SLAM: Real-time dense monocular SLAM with learned depth prediction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6243–6252.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Hu, M.; Wang, S.; Li, B.; Ning, S.; Fan, L.; Gong, X. PENet: Towards precise and efficient image guided depth completion. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13656–13662.

- Klenk, S.; Chui, J.; Demmel, N.; Cremers, D. TUM-VIE: The TUM stereo visual-inertial event dataset. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 8601–8608. [Google Scholar] [CrossRef]

- Burri, M.; Nikolic, J.; Gohl, P.; Schneider, T.; Rehder, J.; Omari, S.; Achtelik, M.W.; Siegwart, R. The EuRoC micro aerial vehicle datasets. Int. J. Robot. Res. 2016, 35, 1157–1163. [Google Scholar] [CrossRef]

- Schubert, D.; Goll, T.; Demmel, N.; Usenko, V.; Stückler, J.; Cremers, D. The TUM VI benchmark for evaluating visual-inertial odometry. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1680–1687. [Google Scholar] [CrossRef]

- Gehrig, M.; Aarents, W.; Gehrig, D.; Scaramuzza, D. Dsec: A stereo event camera dataset for driving scenarios. IEEE Robot. Autom. Lett. 2021, 6, 4947–4954. [Google Scholar] [CrossRef]

- Bai, L.; Yang, J.; Tian, C.; Sun, Y.; Mao, M.; Xu, Y.; Xu, W. DCANet: Differential convolution attention network for RGB-D semantic segmentation. Pattern Recognit. 2025, 162, 111379. [Google Scholar] [CrossRef]

- Yu, K.; Tao, T.; Xie, H.; Lin, Z.; Liang, T.; Wang, B.; Chen, P.; Hao, D.; Wang, Y.; Liang, X. Benchmarking the robustness of lidar-camera fusion for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 3188–3198. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163. [Google Scholar] [CrossRef]

- Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 834–849. [Google Scholar] [CrossRef]

- Teed, Z.; Deng, J. Droid-slam: Deep visual slam for monocular, stereo, and rgb-d cameras. Adv. Neural Inf. Process. Syst. 2021, 34, 16558–16569. [Google Scholar]

- Qin, T.; Li, P.; Shen, S. Vins-mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020. [Google Scholar] [CrossRef]

- Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Daniela, R. LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, Nevada, USA, 25–29 October 2020; pp. 5135–5142. [Google Scholar] [CrossRef]

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. Superpoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236. [Google Scholar] [CrossRef]

- Lindenberger, P.; Sarlin, P.E.; Pollefeys, M. Lightglue: Local feature matching at light speed. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 17627–17638. [Google Scholar] [CrossRef]

- Arandjelovic, R.; Gronat, P.; Torii, A.; Pajdla, T.; Sivic, J. NetVLAD: CNN architecture for weakly supervised place recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5297–5307. [Google Scholar] [CrossRef]

- Rosinol, A.; Sattler, T.; Pollefeys, M.; Carlone, L. Incremental visual-inertial 3d mesh generation with structural regularities. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019. [Google Scholar] [CrossRef]

- Rosinol, A.; Abate, M.; Chang, Y.; Carlone, L. Kimera: An Open-Source Library for Real-Time Metric-Semantic Localization and Mapping. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020. [Google Scholar] [CrossRef]

- Rosinol, A.; Gupta, A.; Abate, M.; Shi, J.; Carlone, L. 3D Dynamic Scene Graphs: Actionable Spatial Perception with Places, Objects, and Humans. In Proceedings of the Robotics: Science and Systems (RSS), Corvalis, OR, USA, 12–16 July 2020. [Google Scholar] [CrossRef]

- Salas-Moreno, R.F.; Newcombe, R.A.; Strasdat, H.; Kelly, P.H.; Davison, A.J. Slam++: Simultaneous localisation and mapping at the level of objects. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1352–1359. [Google Scholar] [CrossRef]

- Sucar, E.; Liu, S.; Ortiz, J.; Davison, A.J. imap: Implicit mapping and positioning in real-time. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 6229–6238. [Google Scholar] [CrossRef]

- Li, G.; Chen, Q.; Yan, Y.; Pu, J. EC-SLAM: Real-time Dense Neural RGB-D SLAM System with Effectively Constrained Global Bundle Adjustment. arXiv 2024, arXiv:2404.13346. [Google Scholar] [CrossRef]

- Zhu, Z.; Peng, S.; Larsson, V.; Xu, W.; Bao, H.; Cui, Z.; Oswald, M.R.; Pollefeys, M. Nice-slam: Neural implicit scalable encoding for slam. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12786–12796. [Google Scholar] [CrossRef]

- Müller, T.; Evans, A.; Schied, C.; Keller, A. Instant neural graphics primitives with a multiresolution hash encoding. ACM Trans. Graph. 2022, 41, 1–15. [Google Scholar] [CrossRef]

- Rosinol, A.; Leonard, J.J.; Carlone, L. Nerf-slam: Real-time dense monocular slam with neural radiance fields. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 3437–3444. [Google Scholar] [CrossRef]

- Hong, Z.; Wang, B.; Duan, H.; Huang, Y.; Li, X.; Wen, Z.; Wu, X.; Xiang, W.; Zheng, Y. SP-SLAM: Neural Real-Time Dense SLAM With Scene Priors. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 5182–5194. [Google Scholar] [CrossRef]

- Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-free local feature matching with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8922–8931. [Google Scholar] [CrossRef]

- Yang, L.; Zhang, Y.; Tian, R.; Liang, S.; Shen, Y.; Coleman, S.; Kerr, D. Fast, Robust, Accurate, Multi-Body Motion Aware SLAM. IEEE Trans. Intell. Transp. Syst. 2023, 25, 4381–4397. [Google Scholar] [CrossRef]

- Kitt, B.M.; Rehder, J.; Chambers, A.D.; Schonbein, M.; Lategahn, H.; Singh, S. Monocular Visual Odometry Using a Planar Road Model to Solve Scale Ambiguity. In Proceedings of the European Conference on Mobile Robots (ECMR), Orebro, Sweden, 7–9 September 2011. [Google Scholar]

- Zheng, S.; Wang, J.; Rizos, C.; Ding, W.; El-Mowafy, A. Simultaneous localization and mapping (slam) for autonomous driving: Concept and analysis. Remote Sens. 2023, 15, 1156. [Google Scholar] [CrossRef]

- Keetha, N.; Karhade, J.; Jatavallabhula, K.M.; Yang, G.; Scherer, S.; Ramanan, D.; Luiten, J. SplaTAM: Splat Track & Map 3D Gaussians for Dense RGB-D SLAM. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 21357–21366. [Google Scholar] [CrossRef]

- Joo, K.; Kim, P.; Hebert, M.; Kweon, I.S.; Kim, H.J. Linear RGB-D SLAM for structured environments. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 8403–8419. [Google Scholar] [CrossRef]

- Kim, P.; Coltin, B.; Kim, H.J. Linear RGB-D SLAM for Planar Environments. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Berlin/Heidelberg, Germany, 2018; pp. 350–366. [Google Scholar] [CrossRef]

- Zhou, D.; Wang, Z.; Bandyopadhyay, S.; Schwager, M. Fast, on-line collision avoidance for dynamic vehicles using buffered voronoi cells. IEEE Robot. Autom. Lett. 2017, 2, 1047–1054. [Google Scholar] [CrossRef]

- Wu, J. Rigid 3-D registration: A simple method free of SVD and eigendecomposition. IEEE Trans. Instrum. Meas. 2020, 69, 8288–8303. [Google Scholar] [CrossRef]

- Liu, Y.; Kong, D.; Zhao, D.; Gong, X.; Han, G. A point cloud registration algorithm based on feature extraction and matching. Math. Probl. Eng. 2018, 2018, 7352691. [Google Scholar] [CrossRef]

- Tiar, R.; Lakrouf, M.; Azouaoui, O. Fast ICP-SLAM for a bi-steerable mobile robot in large environments. In Proceedings of the 2015 IEEE International Workshop of Electronics, Control, Measurement, Signals and their Application to Mechatronics (ECMSM), Istanbul, Turkey,, 27–31 July 2015; pp. 1–6. [Google Scholar] [CrossRef]

- Ahmadi, A.; Nardi, L.; Chebrolu, N.; Stachniss, C. Visual servoing-based navigation for monitoring row-crop fields. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 4920–4926. [Google Scholar] [CrossRef]

- Li, Y.; Košecka, J. Learning view and target invariant visual servoing for navigation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 658–664. [Google Scholar] [CrossRef]

- Caron, G.; Marchand, E.; Mouaddib, E.M. Photometric visual servoing for omnidirectional cameras. Auton. Robot. 2013, 35, 177–193. [Google Scholar] [CrossRef]

- Petiteville, A.D.; Hutchinson, S.; Cadenat, V.; Courdesses, M. 2D visual servoing for a long range navigation in a cluttered environment. In Proceedings of the 2011 50th IEEE Conference on Decision and Control and European Control Conference, Orlando, FL, USA, 12–15 December 2011; pp. 5677–5682. [Google Scholar] [CrossRef]

- Rodríguez Martínez, E.A.; Caron, G.; Pégard, C.; Lara-Alabazares, D. Photometric-Planner for Visual Path Following. IEEE Sens. J. 2020, 21, 11310–11317. [Google Scholar] [CrossRef]

- Rodríguez Martínez, E.A.; Caron, G.; Pégard, C.; Lara-Alabazares, D. Photometric Path Planning for Vision-Based Navigation. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 9007–9013. [Google Scholar] [CrossRef]

- Wang, Y.; Yan, Y.; Shi, D.; Zhu, W.; Xia, J.; Jeff, T.; Jin, S.; Gao, K.; Li, X.; Yang, X. NeRF-IBVS: Visual servo based on nerf for visual localization and navigation. Adv. Neural Inf. Process. Syst. 2023, 36, 8292–8304. [Google Scholar]

- Vassallo, R.F.; Schneebeli, H.J.; Santos-Victor, J. Visual servoing and appearance for navigation. Robot. Auton. Syst. 2000, 31, 87–97. [Google Scholar] [CrossRef]

- Li, J.; Wang, X.; Tang, S.; Shi, H.; Wu, F.; Zhuang, Y.; Wang, W.Y. Unsupervised reinforcement learning of transferable meta-skills for embodied navigation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12123–12132. [Google Scholar] [CrossRef]

- Martins, R.; Bersan, D.; Campos, M.F.; Nascimento, E.R. Extending maps with semantic and contextual object information for robot navigation: A learning-based framework using visual and depth cues. J. Intell. Robot. Syst. 2020, 99, 555–569. [Google Scholar] [CrossRef]

- Kwon, O.; Kim, N.; Choi, Y.; Yoo, H.; Park, J.; Oh, S. Visual graph memory with unsupervised representation for visual navigation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 15890–15899. [Google Scholar] [CrossRef]

- Cèsar-Tondreau, B.; Warnell, G.; Stump, E.; Kochersberger, K.; Waytowich, N.R. Improving autonomous robotic navigation using imitation learning. Front. Robot. 2021, 8, 627730. [Google Scholar] [CrossRef]