Conundrum of Hydrologic Research: Insights from the Evolution of Flood Frequency Analysis

Abstract

1. Introduction

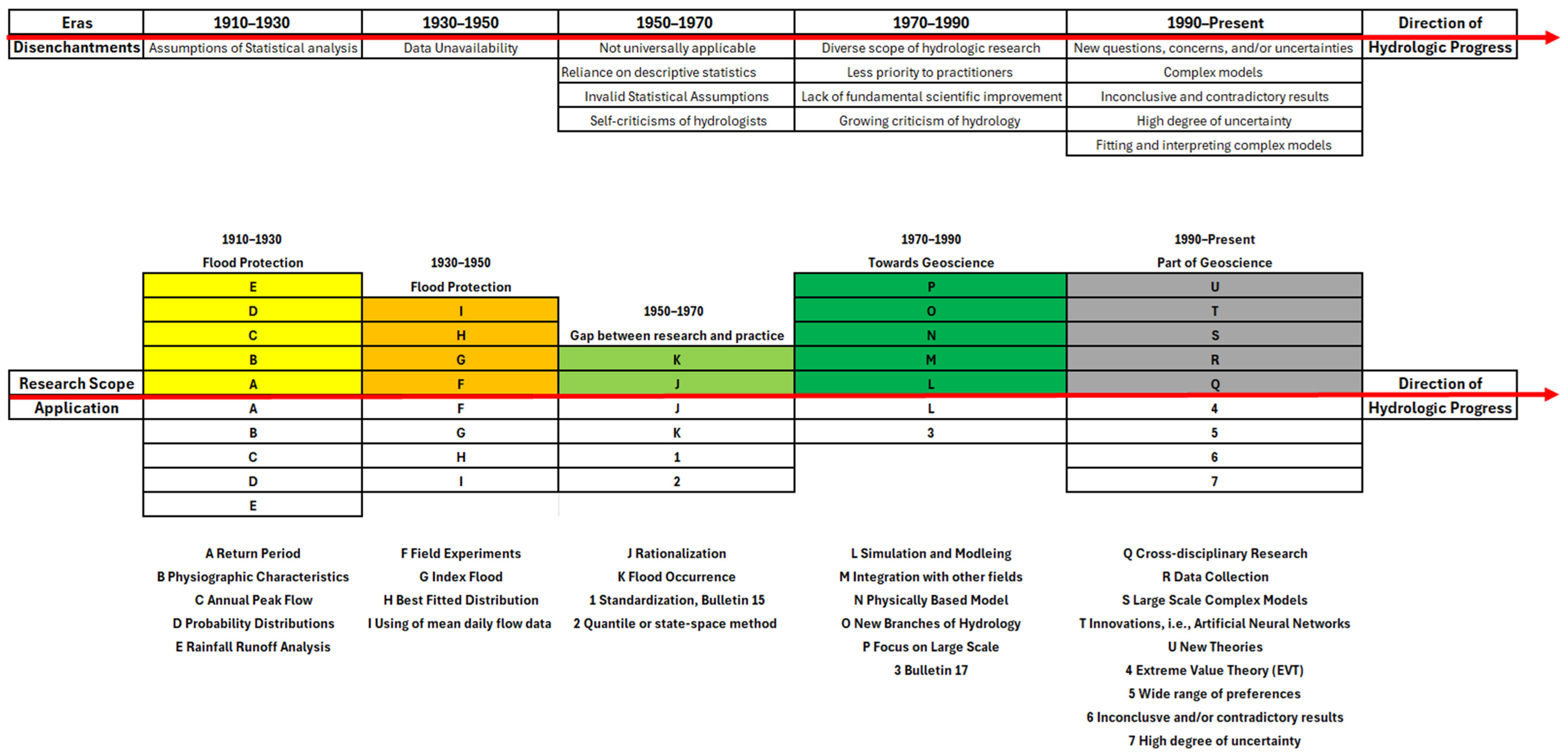

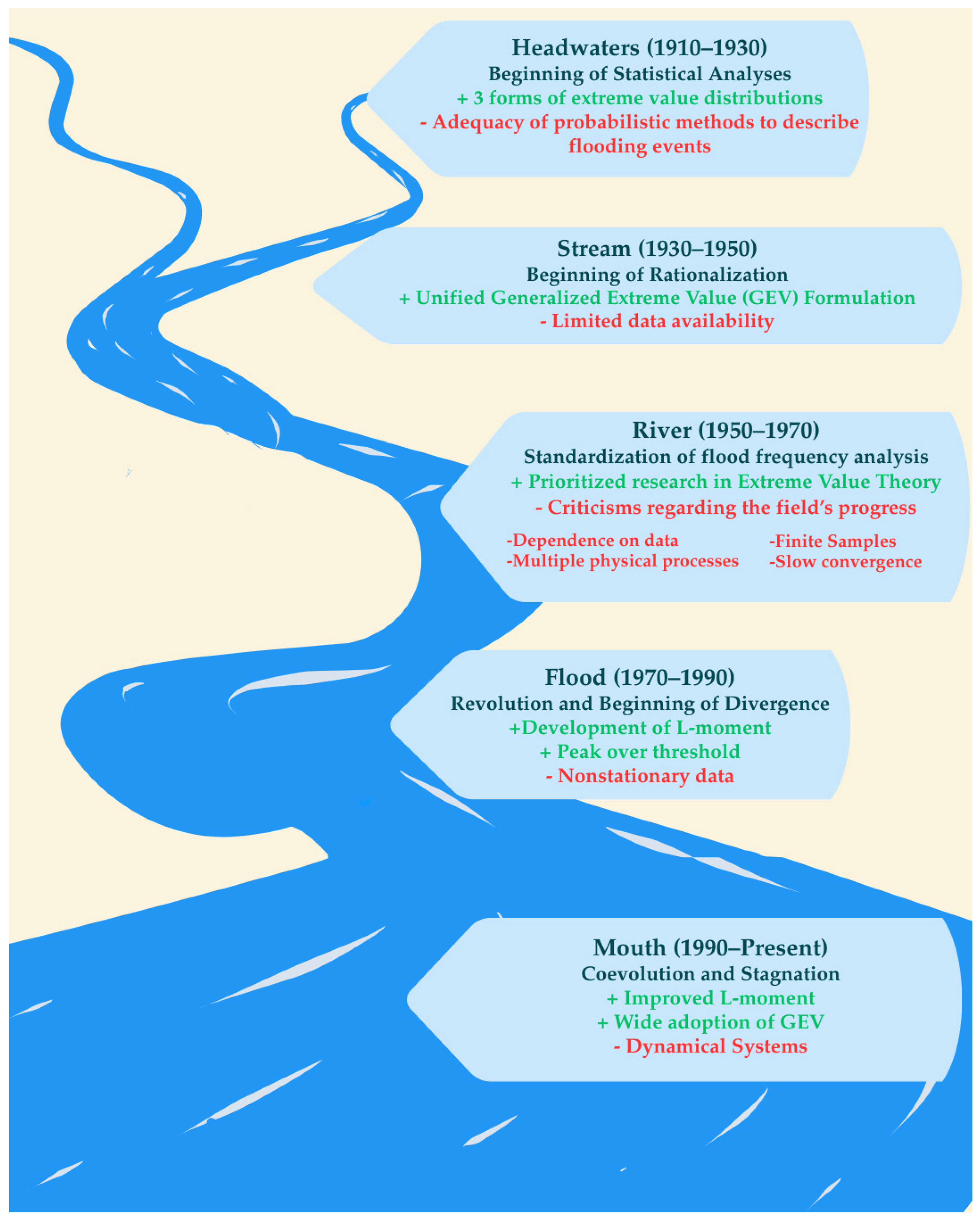

2. Eras of Achievements and Disenchantments

2.1. Beginning of Statistical Analyses (1910–1930)

2.2. Towards Further Understanding and Beginning of Rationalization (1930–1950)

2.3. Towards Standardization of Flood Frequency Analyses (1950–1970)

2.4. Revolution and Beginning of Divergence (1970–1990)

2.5. Coevolution and Stagnation (1990–Present)

3. Scope of Flood Frequency Analysis

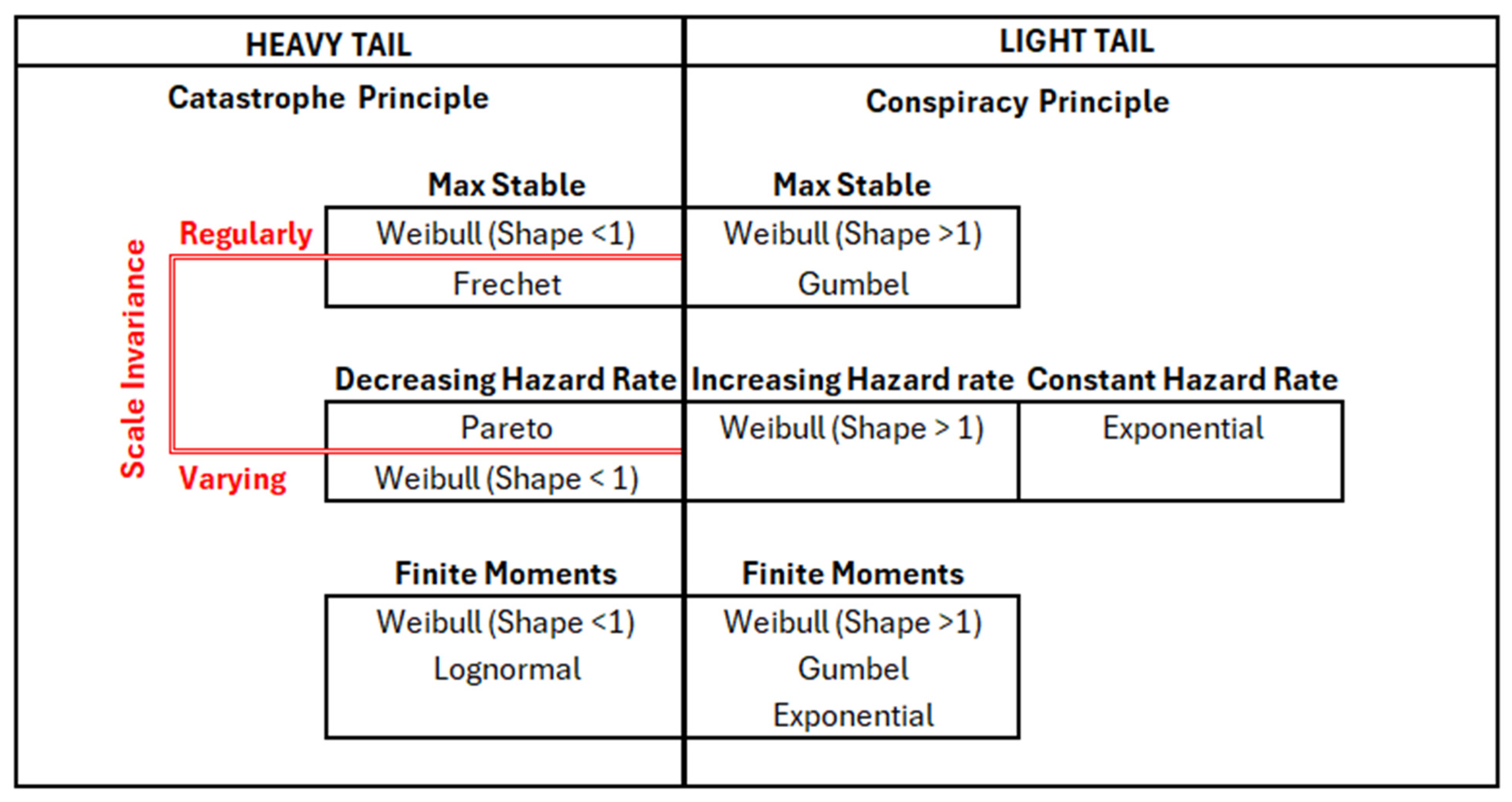

3.1. Research Scope of Extreme Value Theory (EVT)

3.2. Application of Generalized Extreme Value Distribution (GEV)

3.3. Performance of Probability Distributions

4. Conundrum in the Scope of Hydrologic Research

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Mulvany, T.J. On the Use of Self-registering Rain and Flood Gauges. Proc. Inst. Civ. Eng. 1850, 4, 18–31.

- Dalton, J. Experimental essays on the constitution of mixed gases; on the force of steam or vapor from water and other liquids in different temperatures, both in a torricellian vacuum and in air; on evaporation; and on the expansion of gases by heat. Manch. Lit. Philos. Soc. Mem. Proc. 1802, 5, 535–602.

- Sivapalan, M.; Blöschl, G. The growth of hydrologic understanding: Technologies, ideas, and social needs shape the field. Water Resour. Res. 2017, 53, 8137–8146.

- National Research Council (NRC). Opportunities in the Hydrologic Sciences; National Academy Press: Washington, DC, USA, 1991.

- Agbotui, P.Y.; Firouzbehi, F.; Medici, G. Review of Effective Porosity in Sandstone Aquifers: Insights for Representation of Contaminant Transport. Sustainability 2025, 17, 6469.

- Sophocleous, M. Interactions between groundwater and surface water: The state of the science. Hydrogeol. J. 2002, 10, 52–67.

- Klemeš, V. A hydrological perspective. J. Hydrol. 1988, 100, 3–28.

- Wagener, T.; Sivapalan, M.; Troch, P.A.; McGlynn, B.L.; Harman, C.J.; Gupta, H.V.; Kumar, P.; Rao, P.S.C.; Basu, N.B.; Wilson, J.S. The future of hydrology: An evolving science for a changing world. Water Resour. Res. 2010, 46, W05301.

- Chow, V.T. A general formula for hydrologic frequency analysis. Trans. Am. Geophys. Union 1951, 32, 231–237.

- Maidment, D.R. Handbook of Hydrology; McGraw-Hill: New York, NY, USA, 1993.

- Herschy, R.W.; Fairbridge, R.W. Encyclopedia of Hydrology and Water Resources; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1998.

- Singh, V.P.; Woolhiser, D.A. Mathematical modeling of watershed hydrology. J. Hydrol. Eng. 2002, 7, 270–294.

- Yevjevich, V. Stochastic Processes in Hydrology; Water Resources Publications: Highlands Ranch, CO, USA, 1972.

- Chow, V.T. Handbook of Applied Hydrology; McGraw-Hill: New York, NY, USA, 1964.

- Schaffernak, F. Hydrographie; Springer: Wien, Austria, 1935.

- Fuller, W.E. Flood flows. Trans. Am. Soc. Civ. Eng. 1914, 77, 567–617.

- Hazen, A. Discussion of ‘Flood flows by W. E. Fuller’. Trans. Am. Soc. Civ. Eng. 1914, 77, 626–632.

- Hazen, A. Discussion of ‘The probable variations in yearly runoff as determined from a study of California streams by L. Standish Hall’. Trans. Am. Soc. Civ. Eng. 1921, 84, 214–222.

- Foster, H.A. Theoretical frequency curves. Trans. Am. Soc. Civ. Eng. 1924, 87, 142–203.

- Jarvis, C.S. Flood flow characteristics. Trans. Am. Soc. Civ. Eng. 1926, 89, 985–1033 + 1091–1104.

- Dawdy, D.R.; Griffis, V.W.; Gupta, V.K. Regional flood-frequency analysis: How we got here and where we are going. J. Hydrol. Eng. 2012, 17, 953–959.

- Merriman, T. Discussion of Jarvis, flood flow characteristics. Trans. Am. Soc. Civ. Eng. 1926, 89, 1071.

- Green, W.H.; Ampt, G.A. Studies of soil physics. 1. The flow of air and water through soil. J. Agric. Sci. 1911, 4, 1–24.

- Mein, R.G.; Larson, C.L. Modeling infiltration during a steady rain. Water Resour. Res. 1973, 9, 384–394.

- Sitterson, J.; Knightes, C.; Parmar, R.; Wolfe, K.; Avant, B. An Overview of Rainfall-Runoff Model Types; U.S. Environmental Protection Agency: Washington, DC, USA, 2017.

- Jarvis, C.S. Floods in the United States, Magnitude and Frequency; U.S. Water Supply Paper 771; Government Printing Office: Washington, DC, USA, 1936.

- Dalrymple, T. Flood-Frequency Analyses; Water Supply Paper 1543-A; United States Geological Survey: Washington, DC, USA, 1960.

- Kinnison, H.B.; Colby, B.R. Flood formulas based on drainage-basin characteristics. Trans. Am. Soc. Civ. Eng. 1945, 110, 849–904.

- Horton, R.E. The role of infiltration in the hydrologic cycle. Trans. Am. Geophys. Union 1933, 14, 446–460.

- Beven, K.J. Infiltration excess at the Horton hydrology laboratory (or not?). J. Hydrol. 2004, 293, 219–234.

- Benson, M.A. Evolution of Methods for Evaluating the Occurrence of Floods; Water Supply Paper 1580-A; United States Geological Survey: Washington, DC, USA, 1962.

- Benson, M.A. Factors Influencing the Occurrence of Floods in a Humid Region of Diverse Terrain; Water Supply Paper 1580-B; United States Geological Survey: Washington, DC, USA, 1962.

- Benson, M.A. Factors Influencing the Occurrence of Floods in the Southwest; Water Supply Paper 1580-D; United States Geological Survey: Washington, DC, USA, 1964.

- Benson, M.A. Uniform flood-frequency methods for federal agencies. Water Resour. Res. 1968, 4, 891–908.

- Interagency Committee on Water Resources. Methods of Flow Frequency Analysis; Bulletin 13, Subcommittee on Hydrology; U.S. Government Printing Office: Washington, DC, USA, 1966.

- Langbein, W.B.; Hoyt, W.G. Water Facts for the Nation’s Future; Ronald: New York, NY, USA, 1959.

- Yevjevich, V. Misconceptions in hydrology and their consequences. Water Resour. Res. 1968, 4, 225–232.

- Crawford, N.H.; Linsley, R.K. Digital Simulation in Hydrology: Stanford Watershed Model IV; Technical Report 39; Stanford University: Palo Alto, CA, USA, 1966.

- Bear, J. Hydraulics of Groundwater; McGraw-Hill Book Publishing Company: New York, NY, USA, 1979.

- Bear, J.; Verruijt, A. Modeling Groundwater Flow and Pollution; D. Reidel Publishing Company: Dordrecht, The Netherlands, 1987.

- Baker, V.R.; Kochel, R.C.; Patton, P.C. Flood Geomorphology; John Wiley & Sons: New York, NY, USA, 1988.

- Freeze, R.A.; Harlan, R.L. Blueprint for a physically based, digitally simulated hydrologic response model. J. Hydrol. 1969, 9, 237–258.

- Poston, T.; Stewart, I. Catastrophe Theory and Its Applications; Pitman: London, UK, 1978.

- Singh, V.P. Hydrologic modeling: Progress and future directions. Geosci. Lett. 2018, 5, 15.

- Singh, V.P. Kinematic wave theory of overland flow. Water Resour. Manag. 2017, 31, 3147–3160.

- Eagleson, P.S. Ecohydrology: Darwinian Expression of Vegetation Form and Function; Cambridge University Press: Cambridge, UK, 2002.

- Hydrologic Engineering Center. HEC-1 Flood Hydrograph Package: User’s Manual; Army Corps of Engineers: Davis, CA, USA, 1968.

- Metcalf and Eddy, Inc.; University of Florida and Water Resources Engineers, Inc. Storm Water Management Model, Vol 1-Final Report; EPA Report No. 11024DOV07/71 (NTIS PB-203289); Environmental Protection Agency: Washington, DC, USA, 1971.

- Burnash, R.J.C.; Ferral, R.L.; McGuire, R.A. A Generalized Streamflow Simulation System-Conceptual Modeling for Digital Computers; Report; U.S. National Weather Service and Department of Water Resources: Silver Spring, MD, USA, 1973.

- Rockwood, D.M. Theory and practice of the SSARR model as related to analyzing and forecasting the response of hydrologic systems. In Applied Modeling in Catchment Hydrology; Singh, V.P., Ed.; Water Resources Publications: Littleton, CO, USA, 1982; pp. 87–106.

- Dawdy, D.R.; Lichty, R.W.; Bergmann, J.M. Rainfall-Runoff Simulation Model for Estimation of Flood Peaks for Small Drainage Basins; Geological Survey: Washington, DC, USA, 1970.

- Leavesley, G.H.; Lichty, R.W.; Troutman, B.M.; Saindon, L.G. Precipitation-runoff Modeling System-User’s Manual; Water Resources Investigations Report 83-4238; U.S. Geological Survey: Denver, CO, USA, 1983.

- Eagleson, P.S. Dynamics of flood frequency. Water Resour. Res. 1972, 8, 878–898.

- Beven, K. Issues in generating stochastic observables for hydrological models. Hydrol. Process. 2021, 35, e14203.

- Stedinger, J.R. Expected probability and annual damage estimators. J. Water Resour. Plan. Manag. 1997, 123, 125–135.

- Water Resources Council (WRC). Guidelines for Determining Flood Flow Frequency; Bulletin 17 of the Hydrology Subcommittee; Water Resources Council: Washington, DC, USA, 1976.

- Tasker, G.D.; Stedinger, J.R. An operational GLS model for hydrologic regression. J. Hydrol. 1989, 111, 361–375.

- Bobée, B.; Robitaille, R. The use of the Pearson type 3 distribution and log Pearson type 3 distribution revisited. Water Resour. Res. 1977, 13, 427–443.

- Rao, D.V. Log-Pearson type 3 distribution: Method of mixed moments. J. Hydraul. Div. 1980, 106, 999–1019.

- Rao, D.V. Estimating log-Pearson parameters by mixed moments. J. Hydraul. Div. 1983, 109, 1118–1132.

- Rao, D.V. Fitting log-Pearson type 3 distribution by maximum likelihood. In Hydrologic Frequency Modeling; Singh, V.P., Ed.; Reidel: Norwell, MA, USA, 1986; pp. 395–406.

- Pilgrim, D.H. Bridging the gap between flood research and design practice. Water Resour. Res. 1986, 22, 165–176.

- Rajaram, H.; Bahr, J.M.; Blöschl, G.; Cai, X.; Mackay, D.S.; Michalak, A.M.; Montanari, A.; Sanchez-Vila, X.; Sander, G. A reflection on the first 50 years of Water Resources Research. Water Resour. Res. 2015, 51, 7829–7837.

- Sorooshian, S.; Hsu, K.-L.; Coppola, E.; Tomassetti, B.; Verdecchia, M.; Visconti, G. Hydrological Modeling and the Water Cycle: Coupling the Atmospheric and Hydrologic Models; Springer: Dordrecht, The Netherlands, 2008.

- Bates, P.D.; Lane, S.N. High Resolution Flow Modelling in Hydrology and Geomorphology; John Wiley: Chichester, UK, 2002.

- Singh, V.P. Kinematic Wave Modeling in Water Resources: Surface Water Hydrology; John Wiley: New York, NY, USA, 1996.

- Miyazaki, T. Water Flow in Soils; Taylor & Francis: Boca Raton, FL, USA, 2006.

- Singh, V.P. Entropy theory. In Handbook of Applied Hydrology; Singh, V.P., Ed.; McGraw-Hill Education: New York, NY, USA, 2017; pp. 31-1–31-8.

- Wheater, H.S.; Gober, P. Water security and the science agenda. Water Resour. Res. 2015, 51, 5406–5424.

- Kumar, P.; Alameda, J.C.; Bajcsy, P.; Folk, M.; Markus, M. Hydroinformatics: Data Integrative Approaches in Computation, Analysis, and Modeling; Taylor & Francis: Boca Raton, FL, USA, 2006.

- Singh, V.P.; Zhang, L. Copula-entropy theory for multivariate stochastic modeling in water engineering. Geosci. Lett. 2018, 5, 17.

- Sivakumar, B. Nonlinear dynamics and chaos. In Handbook of Applied Hydrology; Singh, V.P., Ed.; McGraw-Hill Education: New York, NY, USA, 2017; pp. 29-1–29-11.

- Hosking, J.R.M.; Wallis, J.R. Regional Frequency Analysis: An Approach Based on L-Moments; Cambridge University Press: Cambridge, UK, 1997.

- Rao, A.R.; Hamed, K.H. Flood Frequency Analysis; CRC Press LLC: Boca Raton, FL, USA, 2000.

- Singh, V.P. Entropy-Based Parameter Estimation in Hydrology; Springer: Dordrecht, The Netherlands, 1998.

- Greenwood, J.A.; Landwehr, J.M.; Matalas, N.C.; Wallis, J.R. Probability Weighted Moments: Definition and Relation to Parameters of Several Distributions Expressible in Inverse Form. Water Resour. Res. 1979, 15, 1049–1054.

- Murshed, S.; Park, B.-J.; Jeong, B.-Y.; Park, J.-S. LH-Moments of Some Distributions Useful in Hydrology. Commun. Stat. Appl. Methods 2009, 16, 647–658.

- Hosking, J.R.M. L-moments: Analysis and Estimation of Distributions using Linear Combinations of Order Statistics. J. R. Stat. Soc. Ser. B Stat. Methodol. 1990, 52, 105–124.

- Wang, Q.J. LH moments for statistical analysis of extreme events. Water Resour. Res. 1997, 33, 2841–2848.

- Gaume, E. Flood frequency analysis: The Bayesian choice. WIREs Water 2018, 5, e1290.

- Ilinca, C.; Anghel, C.G. Flood Frequency Analysis Using the Gamma Family Probability Distributions. Water 2023, 15, 1389.

- Strupczewski, W.G.; Kochanek, K.; Bogdanowicz, E. Historical floods in flood frequency analysis: Is this game worth the candle? J. Hydrol. 2017, 554, 800–816.

- Macdonald, N.; Kjeldsen, T.R.; Prosdocimi, I.; Sangster, H. Reassessing flood frequency for the Sussex Ouse, Lewes: The inclusion of historical flood information since AD 1650. Nat. Hazards Earth Syst. Sci. 2014, 14, 2817–2828.

- Henderson, R.D.; Collins, D.B.G. Regional Flood Estimation Tool for New Zealand: Final Report Part 1; 2016049CH for MBIE: 38, NIWA Client Report; National Institute of Water & Atmospheric Research Ltd: Christchurch, New Zealand, 2016.

- Katz, R.W.; Parlange, M.B.; Naveau, P. Statistics of extremes in hydrology. Adv. Water Resour. 2002, 25, 1287–1304.

- Rootzén, H.; Segers, J.; Wadsworth, J.L. Multivariate peaks over thresholds models. Extremes 2018, 21, 115–145.

- Huser, R.; Wadsworth, J.L. Modeling spatial processes with unknown extremal dependence class. J. Am. Stat. Assoc. 2019, 114, 434–444.

- Winter, H.C.; Tawn, J.A. kth-order Markov extremal models for assessing heatwave risks. Extremes 2017, 20, 393–415.

- Simpson, E.S.; Wadsworth, J.L. Conditional modelling of spatio-temporal extremes for Red Sea surface temperatures. Spat. Stat. 2021, 41, 100482.

- Koutsoyiannis, D. Stochastics of Hydroclimatic Extremes–A Cool Look at Risk; Kallipos: Athens, Greece, 2024.

- Pizarro, A.; Dimitriadis, P.; Iliopoulou, T.; Manfreda, S.; Koutsoyiannis, D. Stochastic Analysis of the Marginal and Dependence Structure of Streamflows: From Fine-Scale Records to Multi-Centennial Paleoclimatic Reconstructions. Hydrology 2022, 9, 126.

- Nair, J.; Wierman, A.; Zwart, B. The Fundamentals of Heavy Tails: Properties, Emergence, and Estimation; Cambridge University Press: Cambridge, UK, 2022.

- Eljabri, S.S.M. New Statistical Models for Extreme Values. Ph.D. Thesis, The University of Manchester, Manchester, UK, 2013.

- Gumbel, E.J. Statistics of Extremes; Columbia University Press: New York, NY, USA, 1958.

- Fréchet, M. On the law of probability of the maximum deviation. Ann. Pol. Math. Soc. 1927, 6, 93–116.

- Fisher, R.A.; Tippett, L.H.C. Limiting forms of the frequency distribution of the largest or smallest member of a sample. Math. Proc. Camb. Philos. Soc. 1928, 24, 180–190.

- Gnedenko, B.V. Limit distribution for the maximum term in a random series. Ann. Math. 1943, 44, 423–453.

- Bingham, N.H. Regular variation and probability: The early years. J. Comput. Appl. Math. 2007, 200, 357–363.

- Pickands, J. Statistical inference using extreme order statistics. Ann. Stat. 1975, 3, 119–131.

- de Haan, L.; Stadtmüller, U. Generalized regular variation of second order. J. Aust. Math. Soc. (Ser. A) 1996, 61, 381–395.

- Coles, S.; Pericchi, L.R.; Sisson, S.A. A fully probabilistic approach to extreme rainfall modeling. J. Hydrol. 2003, 273, 35–50.

- Coles, S.; Tawn, J.A. Modelling extreme multivariate events. J. R. Stat. Soc. Ser. B Stat. Methodol. 1991, 53, 377–392.

- Hill, B.M. A simple general approach to inference about the tail of a distribution. Ann. Stat. 1975, 3, 1163–1174.

- Wilk, M.B.; Gnanadesikan, R. Probability plotting methods for the analysis of data. Biometrika 1968, 55, 1–17.

- Wilks, S.S. The large-sample distribution of the likelihood ratio for testing composite hypotheses. Ann. Math. Stat. 1938, 9, 60–62.

- Anderson, T.W.; Darling, D.A. A test of goodness of fit. J. Am. Stat. Assoc. 1954, 49, 765–769.

- Klemeš, V. Empirical and causal models in hydrology. J. Hydrol. 1982, 65, 95–104.

- Aitchison, J.; Brown, J.A.C. The Lognormal Distribution, with Special Reference to Its Uses in Economics; Cambridge University Press: Cambridge, UK, 1957; pp. 1–176.

- Champernowne, D.G. A model of income distribution: A generalization of the Pareto law. Econ. J. 1953, 63, 318–351.

- Goldie, C.M. Implicit renewal theory and tails of solutions of random equations. Ann. Appl. Probab. 1991, 1, 126–166.

- Achlioptas, D.; Clauset, A.; Kempe, D.; Moore, C. On the bias of traceroute sampling: Or, power-law degree distributions in regular graphs. J. ACM 2009, 56, 1–28.

- Willinger, W.; Alderson, D.; Doyle, J.C.; Li, L. More “normal” than normal: Scaling distributions and complex systems. Proc. Natl. Acad. Sci. USA 2009, 106, 15508–15513.

- Jenkinson, A.F. The frequency distribution of the annual maximum (or minimum) values of meteorological elements. Q. J. R. Meteorol. Soc. 1955, 81, 158–171.

- Hosking, J.R.M.; Wallis, J.R.; Wood, E.F. Estimation of the generalized extreme-value distribution by the method of probability-weighted moments. Technometrics 1985, 27, 251–261.

- Davison, A.C.; Smith, R.L. Models for exceedances over high thresholds. J. R. Stat. Soc. Ser. B Stat. Methodol. 1990, 52, 393–442.

- Coles, S. An Introduction to Statistical Modeling of Extreme Values; Springer: London, UK, 2001; pp. 1–208.

- Ailliot, P.; Allard, D.; Monbet, V.; Naveau, P. Stochastic weather generators using generalized linear models and extreme value theory. Clim. Res. 2011, 47, 55–73.

- Rai, S.; Hoffman, A.; Lahiri, S.; Nychka, D.W.; Sain, S.R.; Bandyopadhyay, S. Fast parameter estimation of generalized extreme value distribution using neural networks. Environmetrics 2024, 35, e2845.

- Tepetidis, N.; Koutsoyiannis, D.; Iliopoulou, T.; Dimitriadis, P. Investigating the Performance of the Informer Model for Streamflow Forecasting. Water 2024, 16, 2882.

- Smith, R.L. Maximum likelihood estimation in a class of nonregular cases. Biometrika 1985, 72, 67–90.

- Stedinger, J.R.; Thomas, W.O., Jr. Low-flow frequency estimation using base-flow measurements. U. S. Geol. Surv. Open-File Rep. 1985, 85–95, 1–22.

- Freitas, A.C.M.; Todd, M. Extreme value laws in dynamical systems for non-smooth observations. J. Stat. Phys. 2010, 142, 108–126.

- Hosking, J.R.M.; Wallis, J.R. Some statistics useful in regional frequency analysis. Water Resour. Res. 1993, 29, 271–281.

- Hosking, J.R.M. The Use of L-Moments in the Analysis of Censored Data. In Recent Advances in Life-Testing and Reliability; Balakrishnan, N., Ed.; CRC Press: Boca Raton, FL, USA, 1995; pp. 123–136.

- Balkema, A.A.; de Haan, L. Residual lifetime at great age. Ann. Probab. 1974, 2, 792–804.

- Waylen, P.R.; Woo, M.K. Prediction of annual floods generated by mixed hydrologic processes. Water Resour. Res. 1972, 8, 1286–1292.

- Leadbetter, M.R. Extremes and local dependence in stationary sequences. J. Appl. Probab. Theory Relat. Fields 1983, 65, 291–306.

- Davison, A.C.; Hinkley, D.V. Bootstrap Methods and Their Application; Cambridge University Press: Cambridge, UK, 1997; pp. 1–582.

- Joe, H. Multivariate Models and Dependence Concepts; Chapman & Hall: London, UK, 1997; pp. 1–399.

- Schlather, M. Models for stationary max-stable random fields. Extremes 2002, 5, 33–44.

- Heffernan, J.E.; Tawn, J.A. A conditional approach to multivariate extreme value problems. J. R. Stat. Soc. Ser. B Stat. Methodol. 2004, 66, 497–546.

- Cooley, D.; Nychka, D.; Naveau, P. Bayesian spatial modeling of extreme precipitation return levels. J. Am. Stat. Assoc. 2007, 102, 824–840.

- Faranda, D.; Lucarini, V.; Turchetti, G.; Vaienti, S. Generalized extreme value distribution parameters as dynamical indicators of stability. Int. J. Bifurc. Chaos 2012, 22, 1250276.

- Hussain, F.; Li, Y.; Arun, A.; Haque, M.M. A hybrid modelling framework of machine learning and extreme value theory for crash risk estimation using traffic conflicts. Anal. Methods Accid. Res. 2022, 36, 100248.

- Iliopoulou, T.; Koutsoyiannis, D. Revealing hidden persistence in maximum rainfall records. Hydrol. Sci. J. 2019, 64, 1673–1689.

- Tegegne, G.; Melesse, A.M.; Asfaw, D.H.; Worqlul, A.W. Flood frequency analyses over different basin scales in the Blue Nile River basin, Ethiopia. Hydrology 2020, 7, 44.

- Langat, P.K.; Kumar, L.; Koech, R. Identification of the Most Suitable Probability Distribution Models for Maximum, Minimum, and Mean Streamflow. Water 2019, 11, 734.

- Chen, X.; Shao, Q.; Xu, C.-Y.; Zhang, J.; Zhang, L.; Ye, C. Comparative Study on the Selection Criteria for Fitting Flood Frequency Distribution Models with Emphasis on Upper-Tail Behavior. Water 2017, 9, 320.

- Anghel, C.G.; Stanca, S.C.; Ilinca, C. Two-Parameter Probability Distributions: Methods, Techniques and Comparative Analysis. Water 2023, 15, 3435.

- Dimitriadis, P.; Koutsoyiannis, D.; Iliopoulou, T.; Papanicolaou, P. A Global-Scale Investigation of Stochastic Similarities in Marginal Distribution and Dependence Structure of Key Hydrological-Cycle Processes. Hydrology 2021, 8, 59.

- Gruss, Ł.; Wiatkowski, M.; Tomczyk, P.; Pollert, J., Jr.; Pollert, J., Sr. Comparison of three-parameter distributions in controlled catchments for a stationary and non-stationary data series. Water 2022, 14, 293.

- Saeed, S.F.; Mustafa, A.S.; Aukidy, M.A. Assessment of Flood Frequency Using Maximum Flow Records for the Euphrates River, Iraq. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1076, 012111.

- Zhang, Z.; Stadnyk, T.A.; Burn, D.H. Identification of a preferred statistical distribution for at-site flood frequency analysis in Canada. Can. Water Resour. J. Rev. Can. Des Ressour. Hydr. 2020, 45, 43–58.

- van der Spuy, D.; du Plessis, J. Flood frequency analysis—Part 1: Review of the statistical approach in South Africa. Water SA 2022, 48, 110–119.

- Wang, G.; Chen, X.; Lu, C.; Shi, J.; Zhu, J. A generalized probability distribution of annual discharge derived from correlation dimension analysis in six main basins of China. Water 2020, 34, 2071–2082.

- England, J.F., Jr.; Cohn, T.A.; Faber, B.A.; Stedinger, J.R.; Thomas, W.O., Jr.; Veilleux, A.G.; Kiang, J.E.; Mason, R.R., Jr. Guidelines for Determining Flood Flow Frequency—Bulletin 17C. In U.S. Geological Survey Techniques and Methods; Book 4, Chapter B5; U.S. Geological Survey: Reston, VA, USA, 2019; p. 148, (ver. 1.1, May 2019).

- Bačová Mitková, V.; Pekárová, P.; Halmová, D.; Miklánek, P. The Use of a Uniform Technique for Harmonization and Generalization in Assessing the Flood Discharge Frequencies of Long Return Period Floods in the Danube River Basin. Water 2021, 13, 1337.

- Okoli, K.; Mazzoleni, M.; Breinl, K.; Di Baldassarre, G. A systematic comparison of statistical and hydrological methods for design flood estimation. Hydrol. Res. 2019, 50, 1665–1678.

- Bako, S.S.; Ali, N.; Arasan, J. Assessing model selection techniques for distributions use in hydrological extremes in the presence of trimming and subsampling. J. Niger. Soc. Phys. Sci. 2024, 6, 2077.

- Nnamdi Ekwueme, B.; Obinna Ibeje, A.; Ekeleme, A. Modelling of Maximum Annual Flood for Regional Watersheds Using Markov Model. Saudi J. Civ. Eng. 2021, 5, 26–34.

- Wałęga, A.; Młyński, D.; Wojkowski, J.; Radecki-Pawlik, A.; Lepeška, T. New Empirical Model Using Landscape Hydric Potential Method to Estimate Median Peak Discharges in Mountain Ungauged Catchments. Water 2020, 12, 983.

- Montanari, A.; Young, G.; Savenije, H.H.; Hughes, D.; Wagener, T.; Ren, L.L.; Koutsoyiannis, D.; Cudennec, C.; Toth, E.; Grimaldi, S.; et al. Panta Rhei—Everything Flows: Change in Hydrology and Society. Hydrol. Sci. J. 2013, 58, 1256–1275.

- Mount, N.J.; Maier, H.R.; Toth, E.; Elshorbagy, A.; Solomatine, D.; Chang, F.-J.; Abrahart, R.J. Data-driven modelling approaches for socio-hydrology: Opportunities and challenges within the Panta Rhei Science Plan. Hydrol. Sci. J. 2016, 61, 117–130.

- Beven, K. Changing ideas in hydrology—The case of physically-based models. J. Hydrol. 1989, 105, 157–172.

- Koutsoyiannis, D. Reconciling hydrology with engineering. Hydrol. Res. 2014, 45, 2–22.

- Graham, M.H.; Dayton, P.K. On the evolution of ecological ideas: Paradigms and scientific progress. Ecology 2002, 83, 1481–1489.

- Cimellaro, G.P. Resilience-based design (RBD) modelling of civil infrastructure to assess seismic hazards. In Handbook of Seismic Risk Analysis and Management of Civil Infrastructure Systems; Tesfamariam, S., Goda, K., Eds.; Woodhead Publishing Series in Civil and Structural Engineering; Woodhead Publishing: Cambridge, UK, 2013; pp. 268–303.

- Angelakis, A.N.; Capodaglio, A.G.; Valipour, M.; Krasilnikoff, J.; Ahmed, A.T.; Mandi, L.; Tzanakakis, V.A.; Baba, A.; Kumar, R.; Zheng, X.; et al. Evolution of Floods: From Ancient Times to the Present Times (ca 7600 BC to the Present) and the Future. Land 2023, 12, 1211.

| Distributions | Specific Characteristics | Disenchantment |
|---|---|---|
| Heavy-Tailed Distributions [92,108,109,110] | | |
| Pareto Distribution | | |
| Weibull Distribution | | |
| Fréchet Distribution | | |
| Lognormal Distribution | | |
| Light-Tailed Distributions [108,109,110,111] | | |
| Weibull Distribution | | |
| Gumbel Distribution | | |
| Exponential Distribution | | |

Key Terms and Concepts

- Heavy-tailed distribution: The tail of the distribution decays more slowly than that of the exponential distribution.
- Pareto principle: The wealthiest 20% of the population holds 80% of the wealth.
- Catastrophe principle: A single, exceptionally large value dominates the sum or event.
- Conspiracy principle: Many small or moderate deviations collectively produce an extreme outcome.
- Hazard rate: Likelihood of an impending failure given the age of the component.
- Regularly varying distribution: Scale-invariant and follows a power law.
- Scale invariance: If the scale (or units) in which samples from the distribution are measured is changed, the shape of the distribution is unchanged.
- Memoryless property: Regardless of how long someone has already waited, the expected remaining waiting time is the same as if they had just arrived.
- Multiplicative process: Situations where growth happens in proportion to the current size.
- Max-stable distributions: Limiting distributions of extremal processes. The class of max-stable distributions is made up of three families: the Fréchet, the Weibull, and the Gumbel.
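Several of the key terms above (memoryless property, scale invariance, catastrophe principle) can be checked numerically. The following is a minimal Python sketch using only the standard library; it is not taken from the article. The function names, the Pareto shape values (1.5 for the analytic checks, 0.7 for the simulation), the unit exponential rate, the sample sizes, and the seed are all illustrative assumptions.

```python
import math
import random


def exp_sf(x, rate=1.0):
    """Survival function P(X > x) of an exponential distribution (light tail)."""
    return math.exp(-rate * x) if x > 0 else 1.0


def pareto_sf(x, alpha=1.5, xm=1.0):
    """Survival function P(X > x) of a Pareto distribution (heavy tail), scale xm."""
    return (xm / x) ** alpha if x > xm else 1.0


def conditional_sf(sf, s, t):
    """P(X > s + t | X > s): chance of waiting t longer after already waiting s."""
    return sf(s + t) / sf(s)


def mean_max_share(draw, n=1000, trials=200, seed=0):
    """Average fraction of a sample's sum contributed by its single largest value."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        sample = [draw(rng) for _ in range(n)]
        total += max(sample) / sum(sample)
    return total / trials


# Memoryless property: for the exponential, the time already waited is irrelevant.
exp_after_2 = conditional_sf(exp_sf, 2.0, 2.0)
exp_after_20 = conditional_sf(exp_sf, 20.0, 2.0)

# Heavy tail: the longer the wait so far, the likelier an even longer wait.
par_after_2 = conditional_sf(pareto_sf, 2.0, 2.0)
par_after_20 = conditional_sf(pareto_sf, 20.0, 2.0)

# Scale invariance: rescaling x by c = 3 multiplies the Pareto tail by the
# same factor c**-alpha wherever x sits in the tail.
ratio_at_2 = pareto_sf(3.0 * 2.0) / pareto_sf(2.0)
ratio_at_50 = pareto_sf(3.0 * 50.0) / pareto_sf(50.0)

# Catastrophe principle: under a very heavy tail (assumed shape 0.7) one value
# carries a large share of the sum; under the exponential it does not.
heavy_share = mean_max_share(lambda rng: rng.paretovariate(0.7))
light_share = mean_max_share(lambda rng: rng.expovariate(1.0))

print(f"exponential P(wait 2 more | waited 2 / 20): {exp_after_2:.4f} / {exp_after_20:.4f}")
print(f"Pareto      P(wait 2 more | waited 2 / 20): {par_after_2:.4f} / {par_after_20:.4f}")
print(f"Pareto tail ratio under 3x rescaling at x = 2 / 50: {ratio_at_2:.4f} / {ratio_at_50:.4f}")
print(f"mean share of the sum held by the maximum, heavy / light: {heavy_share:.3f} / {light_share:.4f}")
```

The Pareto shape of 0.7 in the simulation is deliberately extreme (the mean is infinite) so that the dominance of the largest value is easy to see; flood data would not normally be modelled with such a value.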

| Era | Euphoria | Disenchantment | Addressing Disenchantment |
|---|---|---|---|
| Beginning of statistical analysis (1910–1930) | Adequacy of probabilistic methods to describe flood events [22] | | |
| Beginning of rationalization (1930–1950) | | | |
| Standardization of flood frequency analysis (1950–1970) | | | |
| Revolution and beginning of divergence (1970–1990) | | Non-stationary <sup>5</sup> [121] | |
| Coevolution and stagnation (1990–present) | Dynamical Systems <sup>6</sup> [122] | | |

Key Terms and Concepts

- Fisher and Tippett theory: For a single process, the behavior of the maxima can be described by the three extreme value distributions: Gumbel, Fréchet, and negative Weibull.
- Limit laws and domains of attraction: Aim to differentiate between light- and heavy-tailed probability distributions and to determine the specific type of heavy-tailed distribution.
- Peak over threshold: Uses all exceedances above a chosen threshold, providing more data and often better estimates of tail behavior and extreme quantiles.
- Slow convergence: Maxima or exceedances approach the GEV or GPD only slowly with increasing sample size.
- Multiple physical processes: The presence of multiple physical processes (e.g., rainfall–runoff mechanisms, snowmelt, tropical storms, or seasonal climate drivers) can violate EVT assumptions.
- Non-stationarity: A non-stationary process exhibits trends, shifts, or cycles, meaning its behavior evolves over time rather than staying stable.
- Dynamical systems: A dynamical system describes how the state of a physical system evolves over time according to deterministic laws.
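The difference between the block-maxima view (Fisher and Tippett theory) and the peak-over-threshold view described in the key terms above can be illustrated with a short Python sketch. It is a hedged illustration under stated assumptions, not the authors' method: the helper names are hypothetical, the "daily" series is synthetic independent exponential noise rather than streamflow, the threshold is simply the empirical 95th percentile, and the GEV parameters (location 100, scale 30, shape 0 or ±0.2) are made up. The shape parameter follows the convention in which a positive value gives the Fréchet type, zero the Gumbel type, and a negative value the negative Weibull type.

```python
import math
import random


def block_maxima(series, block_size):
    """Largest value in each consecutive, non-overlapping block (e.g. one per year)."""
    return [max(series[i:i + block_size])
            for i in range(0, len(series) - block_size + 1, block_size)]


def peaks_over_threshold(series, threshold):
    """Excess (value minus threshold) of every observation above the threshold."""
    return [x - threshold for x in series if x > threshold]


def gev_return_level(T, mu, sigma, xi):
    """Level exceeded on average once every T blocks under GEV(mu, sigma, xi).

    xi > 0: Frechet type, xi = 0: Gumbel type, xi < 0: negative Weibull type.
    """
    y = -math.log(1.0 - 1.0 / T)
    if abs(xi) < 1e-12:
        return mu - sigma * math.log(y)          # Gumbel limit
    return mu + sigma / xi * (y ** (-xi) - 1.0)


# Synthetic record: 30 "years" of 365 independent daily values (an assumption).
rng = random.Random(42)
daily = [rng.expovariate(1.0) for _ in range(30 * 365)]

annual_max = block_maxima(daily, 365)                  # one value per year
threshold = sorted(daily)[int(0.95 * len(daily))]      # empirical 95th percentile
excesses = peaks_over_threshold(daily, threshold)      # many values per year

# 100-block return levels for the three tail types, same location and scale.
weibull_100 = gev_return_level(100, 100.0, 30.0, -0.2)
gumbel_100 = gev_return_level(100, 100.0, 30.0, 0.0)
frechet_100 = gev_return_level(100, 100.0, 30.0, 0.2)

print(f"block maxima: {len(annual_max)} values; threshold excesses: {len(excesses)} values")
print(f"100-block return level (negative Weibull / Gumbel / Frechet): "
      f"{weibull_100:.1f} / {gumbel_100:.1f} / {frechet_100:.1f}")
```

The sketch shows two things the key terms describe qualitatively: the threshold approach retains far more observations than one maximum per block, and the assumed tail type strongly changes the extrapolated design level. Real applications would also need declustering of dependent exceedances and a proper parameter-estimation step, both omitted here.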

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ashraf, F.U.; Pennock, W.H.; Borgaonkar, A.D. Conundrum of Hydrologic Research: Insights from the Evolution of Flood Frequency Analysis. CivilEng 2025, 6, 66. https://doi.org/10.3390/civileng6040066