You Only Look Once v5 and Multi-Template Matching for Small-Crack Defect Detection on Metal Surfaces

Abstract

1. Introduction

2. Literature Review

2.1. YOLOv5

2.2. Template Matching and Multi-Template Matching (MTM)

2.3. Small-Object Detection

2.4. Limited Data

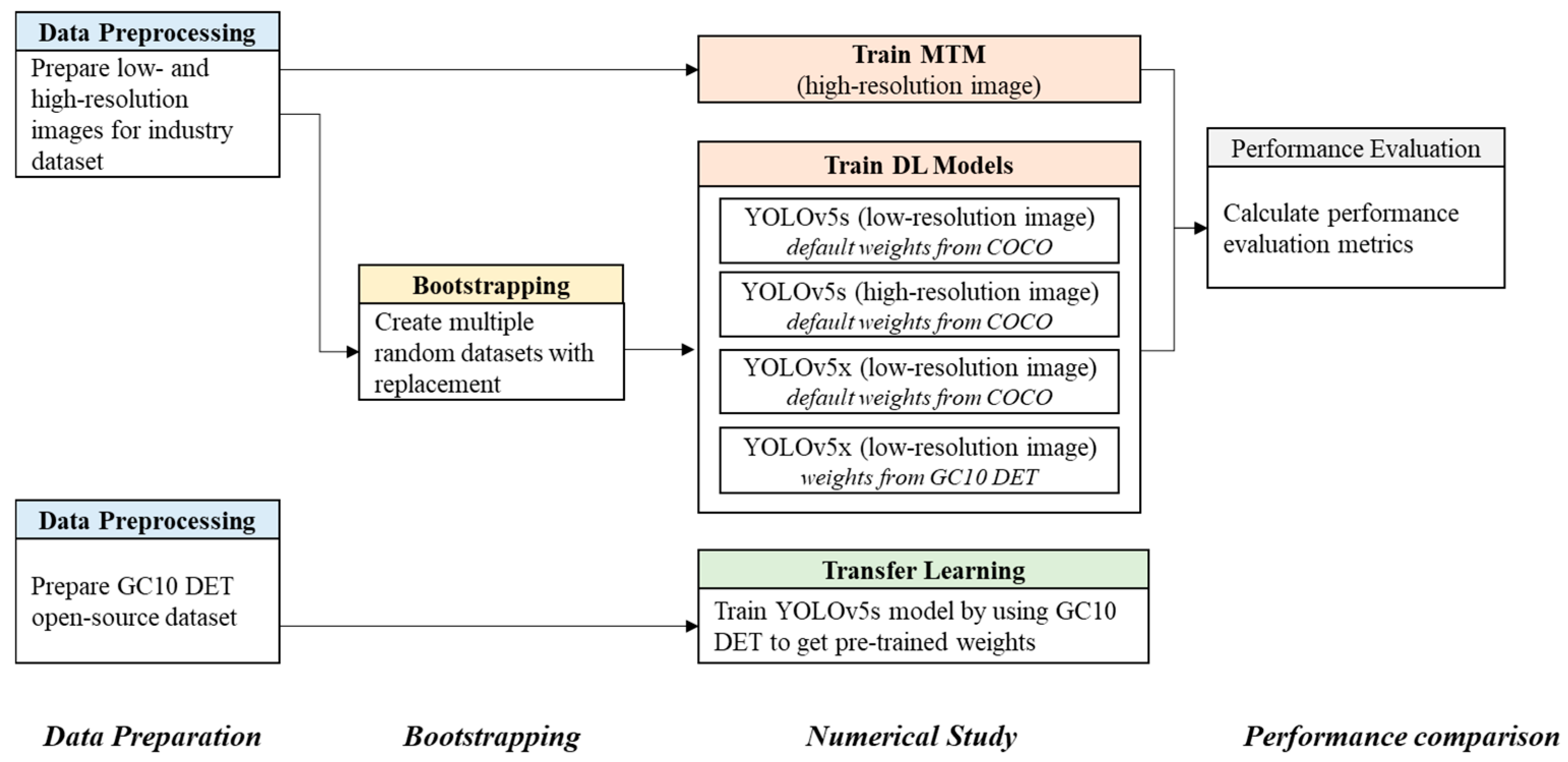

3. Methodology

3.1. Dataset Preparation

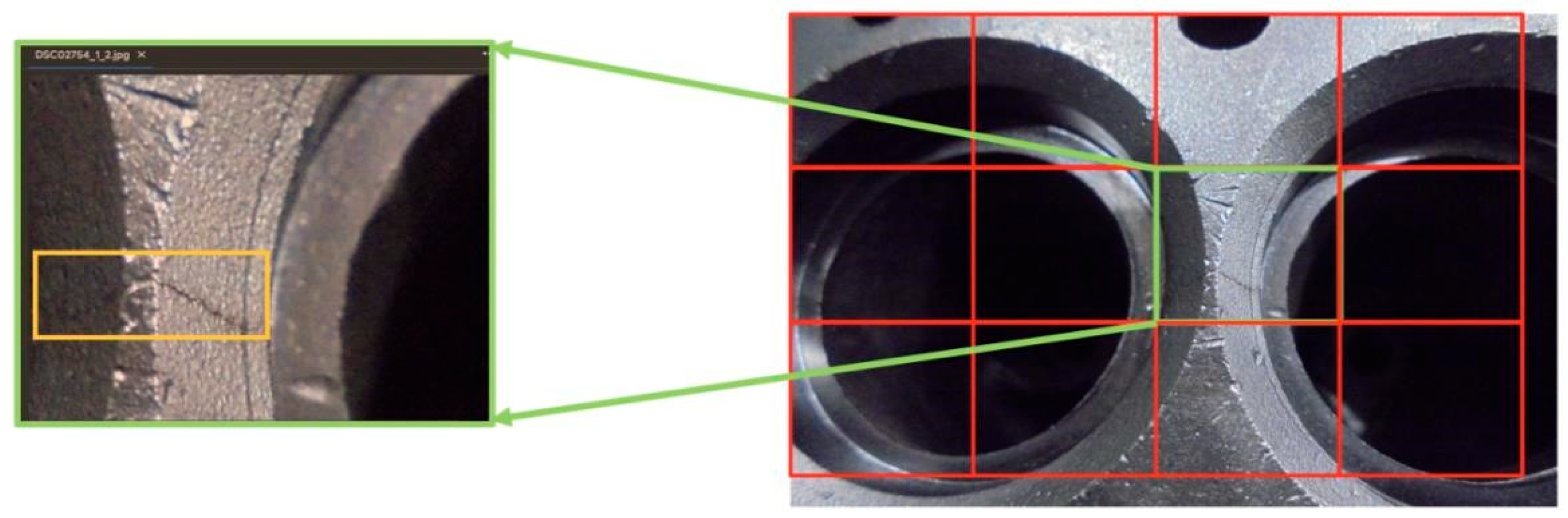

3.1.1. Industry Dataset

- (i) Laboratory setup: A Basler ace 3088-16 gm monochrome area-scan camera with an Edmund Optics 8 mm HP series lens was used, along with custom C++ code to automate image acquisition. A Techman TM5-900 cobot positioned the camera at a 100 mm working distance from the cylinder head, perpendicular to the surface, with a fixed f/4 aperture and 8-bit pixel depth for all images. The camera system captured images at a resolution of 3088 × 2064 pixels, corresponding to approximately 28 pixels/mm. Illumination relied on ambient fluorescent lighting, and no filters were used to mitigate spectral interference from it. Images were captured at the center of each pre-defined grid box measuring 110.28 mm × 74.33 mm on the cylinder head surfaces. No camera model was applied to correct for distortions introduced by the camera perspective or lens elements. The cast iron cylinder heads were sampled and provided by a remanufacturer in the “cleaned” state, which the remanufacturing process produces so that manual defect inspection can be carried out.

- (ii) Remanufacturer’s facility: A standard SLR camera with a resolution of 2592 × 1944 pixels was mounted on a manual slide system that controlled the camera’s position relative to the cylinder head surface. As with the laboratory setup, cylinder heads were in the “cleaned” state and the camera relied on ambient fluorescent lighting for illumination.
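As a quick consistency check on the laboratory figures above, the following sketch (not part of the original study) derives the imaged area from the stated resolution and pixel density, and the pixel density implied by the grid-box dimensions. It assumes that one image spans exactly one grid box and that pixel density is uniform across the frame; neither is stated explicitly above, and the function names are illustrative.

```python
# Values quoted in the laboratory setup description.
IMAGE_WIDTH_PX = 3088
IMAGE_HEIGHT_PX = 2064
PIXELS_PER_MM = 28.0            # stated as approximate
GRID_BOX_MM = (110.28, 74.33)   # width, height of one grid box


def field_of_view_mm(width_px, height_px, pixels_per_mm):
    """Imaged area (width, height) in mm, assuming a uniform pixel density."""
    return width_px / pixels_per_mm, height_px / pixels_per_mm


def implied_pixels_per_mm(width_px, height_px, box_mm):
    """Pixel density along each axis if one image spans exactly one grid box (assumption)."""
    return width_px / box_mm[0], height_px / box_mm[1]


fov_w, fov_h = field_of_view_mm(IMAGE_WIDTH_PX, IMAGE_HEIGHT_PX, PIXELS_PER_MM)
ppmm_x, ppmm_y = implied_pixels_per_mm(IMAGE_WIDTH_PX, IMAGE_HEIGHT_PX, GRID_BOX_MM)

print(f"field of view at 28 px/mm: {fov_w:.2f} mm x {fov_h:.2f} mm")
print(f"density implied by grid box: {ppmm_x:.2f} px/mm x {ppmm_y:.2f} px/mm")
```

Along the image width the two figures agree closely; along the height they differ slightly, which is consistent with the pixel density being quoted as approximate.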

- Tight Bounding Boxes: minimize noise by drawing bounding boxes as tightly as possible around the defects;

- Complete Labeling: ensure all defects are labeled individually, avoiding any grouping;

- No Missed Defects: leave no defect unlabeled.
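The labeling rules above can be illustrated with a minimal sketch that turns the pixels of a single defect into a tight box and then into a YOLO-style text label (class index followed by normalized center x, center y, width, and height). This is an illustration only: the annotation tool used in the study is not described here, the helper names are hypothetical, and the crack coordinates are made up.

```python
def tight_box(pixels):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) covering all (x, y) pixels.

    The max edge is exclusive, so a single pixel yields a 1 x 1 box.
    """
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    return min(xs), min(ys), max(xs) + 1, max(ys) + 1


def to_yolo_line(box, image_w, image_h, class_id=0):
    """Format one box as 'class x_center y_center width height', normalized to [0, 1]."""
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / image_w
    y_center = (y_min + y_max) / 2 / image_h
    width = (x_max - x_min) / image_w
    height = (y_max - y_min) / image_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical crack pixels in a 3088 x 2064 image; one label line per defect,
# so separate defects are never grouped into a single box.
crack_pixels = [(1000, 500), (1010, 504), (1023, 511)]
box = tight_box(crack_pixels)
label_line = to_yolo_line(box, image_w=3088, image_h=2064)
print(label_line)
```

Because each defect produces its own line, a label file with fewer lines than visible defects points to either grouping or a missed defect.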

3.1.2. Benchmark Datasets for Transfer Learning

3.2. Bootstrapping

3.3. Numerical Study

3.3.1. Model Size

3.3.2. Transfer Learning

3.3.3. Image Resolution

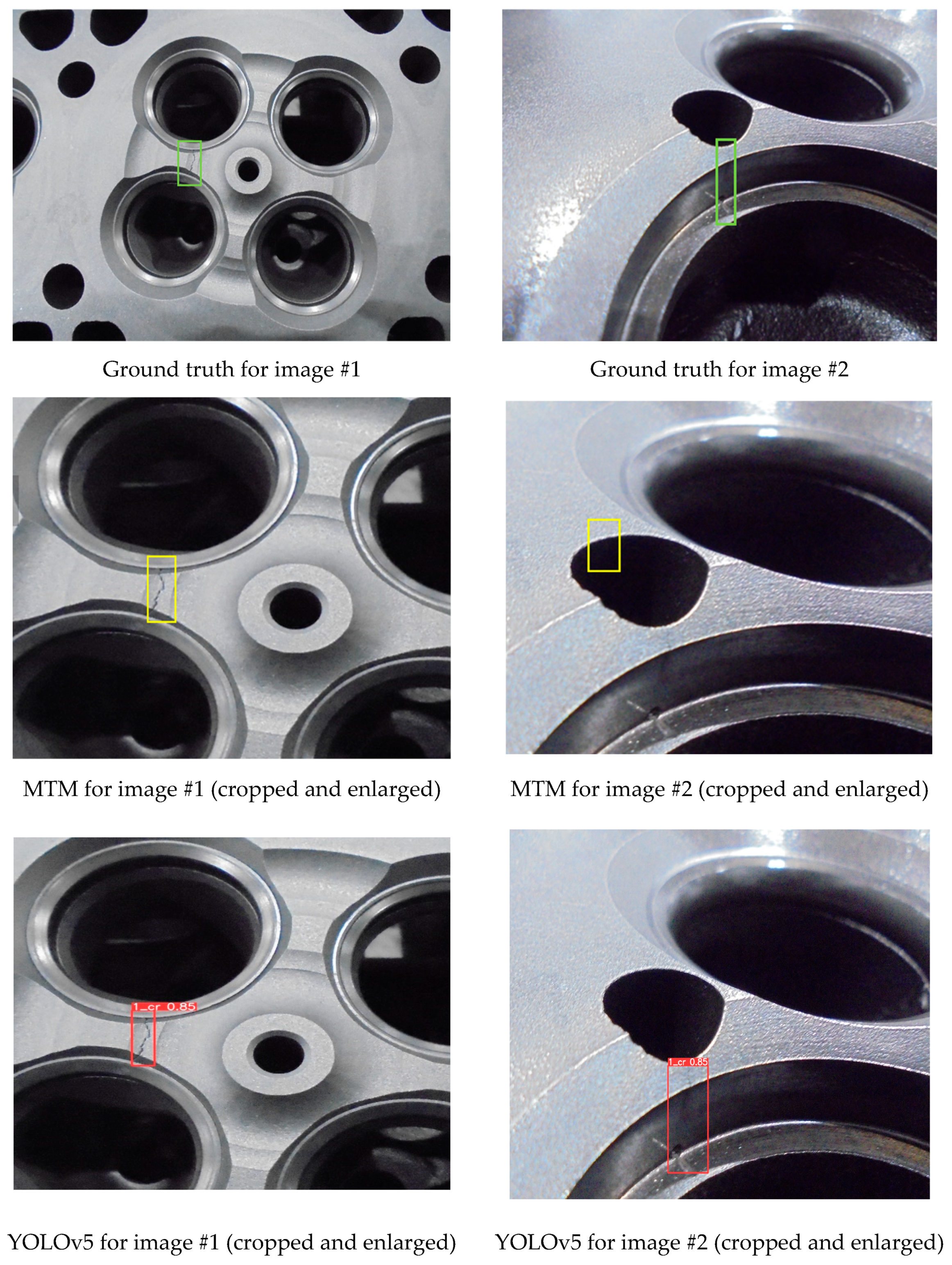

3.3.4. MTM

3.4. Performance Comparison

4. Results

4.1. Impact of Model Size

4.2. Impact of Transfer Learning

4.3. Impact of Resolution

4.4. Performance Comparison Between MTM and YOLOv5

5. Conclusions and Future Work Direction

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AP | Average Precision |
| COCO | Common Objects in Context dataset |
| CNNs | Convolutional Neural Networks |
| CSPNet | Cross Stage Partial Network |
| DL | Deep Learning |
| FPN | Feature Pyramid Network |
| GC10 DET | Metallic Surface Defect Detection dataset |
| GFLOPs | Giga Floating-point Operations per second |
| MTM | Multi-Template Matching |
| PANet | Path Aggregation Network |
| SSD | Single Shot MultiBox Detector |
| YOLOv5 | You Only Look Once version 5 |


Experimental Structure

| Experiment Number | Image Dataset Used by the Model | Algorithm Used | Dataset Used for Transfer Learning |
|---|---|---|---|
| 1 | Resized 640 × 640 | YOLOv5s | COCO |
| 2 | Resized 640 × 640 | YOLOv5x | COCO |
| 3 | Resized 640 × 640 | YOLOv5s | GC10 |
| 4 | High-resolution (3088 × 2064) | YOLOv5s | COCO |
| 5 | High-resolution (3088 × 2064) | MTM | N/A |
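Experiment 5 applies MTM rather than a learned detector. As a rough illustration of the underlying idea, the sketch below slides several templates over a grayscale image and keeps locations whose zero-mean normalized cross-correlation exceeds a threshold. It is a pure-Python toy under stated assumptions, not the implementation used in the study: the function names, the 0.9 threshold, and the tiny synthetic image are all hypothetical, and it omits the non-maximum suppression that multi-template matching tools typically apply to overlapping hits.

```python
import math


def ncc(patch, template):
    """Zero-mean normalized cross-correlation of two equal-sized 2D lists."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mean_p, mean_t = sum(p) / len(p), sum(t) / len(t)
    num = sum((a - mean_p) * (b - mean_t) for a, b in zip(p, t))
    den = math.sqrt(
        sum((a - mean_p) ** 2 for a in p) * sum((b - mean_t) ** 2 for b in t)
    )
    return num / den if den > 0 else 0.0  # flat patches carry no pattern


def match_templates(image, templates, threshold=0.9):
    """Return (score, x, y, template_index) for every location scoring >= threshold."""
    hits = []
    img_h, img_w = len(image), len(image[0])
    for idx, tpl in enumerate(templates):
        tpl_h, tpl_w = len(tpl), len(tpl[0])
        for y in range(img_h - tpl_h + 1):
            for x in range(img_w - tpl_w + 1):
                patch = [row[x:x + tpl_w] for row in image[y:y + tpl_h]]
                score = ncc(patch, tpl)
                if score >= threshold:
                    hits.append((score, x, y, idx))
    return sorted(hits, reverse=True)


# Synthetic 6 x 6 image: bright background (200) with a dark diagonal "crack" (50).
image = [[200] * 6 for _ in range(6)]
for i in (1, 2, 3):
    image[i][i] = 50

diagonal = [[50, 200, 200], [200, 50, 200], [200, 200, 50]]
anti_diagonal = [[200, 200, 50], [200, 50, 200], [50, 200, 200]]

hits = match_templates(image, [diagonal, anti_diagonal])
print(hits)
```

In this toy case only the diagonal template matches, at the crack's top-left corner. A crack whose shape or orientation is not represented in the template set would go undetected, which is the main limitation of template-based approaches for irregular defects.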

| Experiment Number | Image Dataset Used by the Model | Algorithm Used | Dataset Used for Transfer Learning | 95% Confidence Intervals for AP | 95% Confidence Intervals for Recall |
|---|---|---|---|---|---|
| 1 | Resized 640 × 640 | YOLOv5s | COCO | 93.90–94.83% | 90.50–91.76% |
| 2 | Resized 640 × 640 | YOLOv5x | COCO | 94.08–95.22% | 94.70–95.75% |
| 3 | Resized 640 × 640 | YOLOv5s | GC10 | 85.16–87.01% | 84.66–86.52% |
| 4 | High-resolution | YOLOv5s | COCO | 95.14–95.89% | 92.82–93.97% |
| 5 | High-resolution | MTM | N/A | N/A | 12.37% |
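The AP and recall columns above report 95% confidence intervals. One common way to obtain such intervals from a small set of scores is a percentile bootstrap, sketched below. The exact resampling procedure used in the study is not given in this excerpt, so the choice of the percentile method, the number of resamples, and the sample AP values are all assumptions made for illustration.

```python
import random
import statistics


def percentile_bootstrap_ci(values, n_resamples=2000, confidence=0.95, seed=0):
    """Percentile bootstrap confidence interval for the mean of `values`."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(values)
    # Resample with replacement and record the mean of each resample.
    means = sorted(
        statistics.fmean(rng.choices(values, k=n)) for _ in range(n_resamples)
    )
    alpha = (1.0 - confidence) / 2.0
    lo_idx = round(alpha * (n_resamples - 1))
    hi_idx = round((1.0 - alpha) * (n_resamples - 1))
    return means[lo_idx], means[hi_idx]


# Hypothetical AP scores from repeated evaluations (not data from the study).
ap_scores = [0.941, 0.948, 0.939, 0.945, 0.943, 0.950, 0.937, 0.946]
low, high = percentile_bootstrap_ci(ap_scores)
print(f"95% CI for mean AP: {low:.4f} to {high:.4f}")
```

The percentile method makes no normality assumption about the scores, which is one reason bootstrapping is attractive when only a limited number of evaluations are available.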

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dubey, P.; Miller, S.; Günay, E.E.; Jackman, J.; Kremer, G.E.; Kremer, P.A. You Only Look Once v5 and Multi-Template Matching for Small-Crack Defect Detection on Metal Surfaces. Automation 2025, 6, 16. https://doi.org/10.3390/automation6020016
