A Lightweight Multimodal Framework for Misleading News Classification Using Linguistic and Behavioral Biometrics

Abstract

1. Introduction

- a. To what extent can misleading news classification be conceptualized as a multimodal sensing problem, where linguistic cues and behavioral biometrics act as “soft sensors” for deception?

- b. How does the integration of biometric and linguistic features with deep neural embeddings improve detection accuracy and robustness compared to existing state-of-the-art methods?

- c. What is the contribution of various modalities, such as credibility history, linguistic and biometric features, and logical consistency, to the overall performance of the proposed method?

- d. How can the interpretability, adaptability, and resilience of a lightweight misleading news classification system be enhanced for noisy or rephrased inputs?

- a. We reconceptualize misleading news classification as a multimodal sensing problem, where linguistic and behavioral cues are treated as soft sensors that capture deception-related linguistic and cognitive patterns for AI-enabled classification of misleading news.

- b. We extend the FDHN framework by incorporating biometric-inspired linguistic features such as lexical diversity, subjectivity, sentiment, and contradiction scores, along with pretrained BERT embeddings, enabling interpretable fusion of behavioral and semantic information.

- c. Through a systematic ablation study and cross-validation analysis, we quantify the contribution of different classification modalities, including credibility metadata, stylistic markers, and logical consistency, to overall classification performance, showing that lightweight handcrafted features effectively complement deep embeddings.

- d. We empirically demonstrate the lightweight nature and near-real-time feasibility of the framework, achieving millisecond-level inference latency with a small model footprint while maintaining competitive performance when justification-like context is available.

- e. We employ text augmentation strategies to strengthen robustness against rephrased or noisy misinformation.

2. Related Works

2.1. Misleading News Classification Approaches

2.2. Multimodal and Hybrid Approaches

2.3. Biometrics in Misinformation Analysis

2.4. Evaluation Strategies

2.5. Challenges and Research Gaps

- a. Dataset limitations remain a critical barrier, as most existing resources are English-centric and narrowly focused on political misinformation, restricting generalizability across domains and languages.

- b. Deep learning systems, especially transformer-based models, achieve high accuracy but often operate as black boxes, limiting transparency in decision-making.

- c. Domain adaptability and continuous learning are equally pressing, since misinformation evolves rapidly and models trained on static datasets quickly lose effectiveness.

- d. Computational efficiency poses practical obstacles, with state-of-the-art models requiring significant resources that hinder real-time deployment.

- e. While behavioral biometrics have demonstrated potential, their systematic integration with linguistic cues remains underexplored, leaving opportunities for more holistic detection methods.

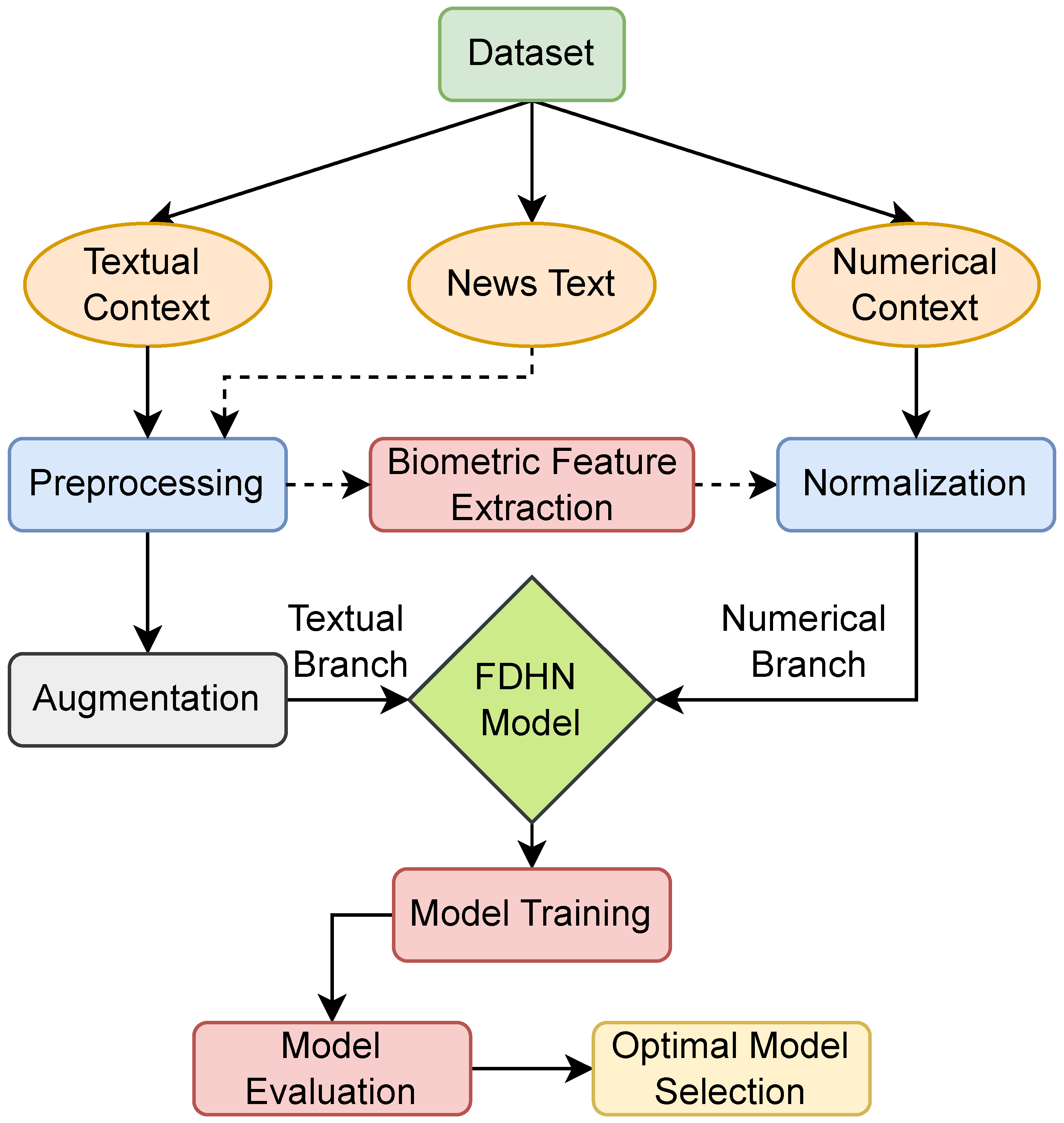

3. Methodology

3.1. Preprocessing

3.1.1. Textual Preprocessing

- a. HTML and Non-Alphabetic Character Removal: All input text has been sanitized by stripping HTML tags and removing non-alphabetic characters. This step eliminates extraneous symbols, numeric noise, and markup artifacts that may interfere with linguistic analysis.

- b. Lowercasing: All text has been converted to lowercase to ensure uniformity and reduce vocabulary size. This also mitigates the sparsity caused by case variants of the same word.

- c. Stopword Elimination and Lemmatization: Common stopwords, such as “the”, “is”, and “and”, have been removed to focus the analysis on semantically rich terms. Lemmatization has then been applied to reduce words to their base (dictionary) forms, enabling better generalization during model training.

- d. Tokenization and Padding: All text inputs have been tokenized with the tokenizer of the uncased BERT base model, which segments the input into subword units aligned with the pretrained BERT vocabulary. To ensure uniform input dimensions, shorter sequences have been padded to a fixed length and longer sequences have been truncated.
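The preprocessing steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: `STOPWORDS` is a small hypothetical sample, the `lemmatize` argument is a placeholder (identity by default) where a real lemmatizer, for example one from NLTK or spaCy, could be plugged in, and subword tokenization is assumed to be done by the BERT tokenizer, so the hypothetical helper `pad_or_truncate` only shows how id sequences are brought to a fixed length. The default `pad_id=0` assumes the `[PAD]` id of the uncased BERT-base vocabulary.

```python
import html
import re

# Hypothetical mini stopword list for illustration only; a real pipeline
# would use a complete list (e.g. from NLTK or spaCy).
STOPWORDS = {"the", "is", "and", "a", "an", "of", "to", "in"}

TAG_RE = re.compile(r"<[^>]+>")            # HTML tags such as <p> or </b>
NON_ALPHA_RE = re.compile(r"[^A-Za-z\s]")  # anything that is not a letter or whitespace


def clean_text(text, lemmatize=lambda w: w):
    """Strip HTML, drop non-alphabetic characters, lowercase, remove
    stopwords, then apply a pluggable lemmatizer (identity by default)."""
    text = html.unescape(TAG_RE.sub(" ", text))
    text = NON_ALPHA_RE.sub(" ", text).lower()
    return [lemmatize(w) for w in text.split() if w not in STOPWORDS]


def pad_or_truncate(ids, max_len, pad_id=0):
    """Bring a token-id sequence to exactly max_len and return it together
    with an attention mask (1 = real token, 0 = padding)."""
    ids = list(ids[:max_len])
    n_pad = max_len - len(ids)
    return ids + [pad_id] * n_pad, [1] * len(ids) + [0] * n_pad
```

For example, `clean_text("<p>The Senator IS lying!!!</p>")` returns `["senator", "lying"]`. Because the exclamation marks disappear at this stage in the sketch, a punctuation-based feature such as exclamation-mark frequency would have to be computed on the raw statement.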

3.1.2. Numeric Preprocessing

3.2. Linguistic & Biometric Feature Extraction

3.2.1. Lexical Analysis

- a. Type-Token Ratio (TTR): This metric is used to estimate lexical diversity, computed as the ratio of unique tokens (types) to the total number of tokens in a statement. Higher TTR values suggest a broader vocabulary range and greater linguistic richness.

- b. Exclamation Mark Frequency (EMF): The number of exclamation marks is counted in each statement to serve as a proxy for emotional intensity or rhetorical emphasis, which can correlate with subjective or manipulative discourse.

- c. Adjective Density (AD): This feature captures the relative frequency of adjectives in a given statement, as identified through part-of-speech tagging. Adjective usage is often associated with evaluative language and can reflect attempts to exaggerate or qualify assertions.
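The three lexical features can be sketched as follows. This is an illustration under assumptions rather than the authors' implementation: tokens and part-of-speech tags are taken as given, the tagger is assumed to emit Universal POS tags (where adjectives are labelled `ADJ`, as spaCy's `token.pos_` does), and the exclamation count is assumed to be taken from the raw statement before any character filtering.

```python
def type_token_ratio(tokens):
    """Lexical diversity: unique tokens (types) / total tokens; 0.0 if empty."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def exclamation_count(raw_text):
    """Exclamation-mark count per statement (computed on the raw text)."""
    return raw_text.count("!")


def adjective_density(pos_tags):
    """Share of tokens tagged as adjectives.

    Assumes Universal POS tags, where adjectives are labelled "ADJ".
    """
    if not pos_tags:
        return 0.0
    return sum(tag == "ADJ" for tag in pos_tags) / len(pos_tags)
```

For instance, the tag sequence `["DET", "ADJ", "NOUN", "ADJ"]` gives an adjective density of 0.5, and the tokens `["the", "report", "was", "false", "the", "report"]` give a TTR of 4/6.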

3.2.2. Sentiment and Subjectivity Analysis

- a. Sentiment Label and Score: A RoBERTa-based sentiment classifier is utilized to assign polarity (positive or negative) and a corresponding confidence score. RoBERTa is chosen due to its superior performance in sentence-level classification tasks compared to earlier transformer models such as BERT, owing to its dynamic masking and optimized pretraining procedure [38,39]. The resulting sentiment scores enable the model to capture affective orientation and emotional intensity in news statements, which are strongly correlated with misinformation framing [40].

- b. Subjectivity Score: To assess the extent of opinionated versus factual content, we incorporate scores derived from BiBERT-Subjectivity, a transformer-based model specifically trained to detect subjective linguistic patterns. Transformer-based subjectivity models outperform traditional lexicon-based approaches by accounting for context and subtle linguistic markers [41]. This measure allows the system to quantify the degree of speculation or personal belief embedded within statements, features that have been linked to deceptive discourse [42].

3.2.3. Contradiction Detection

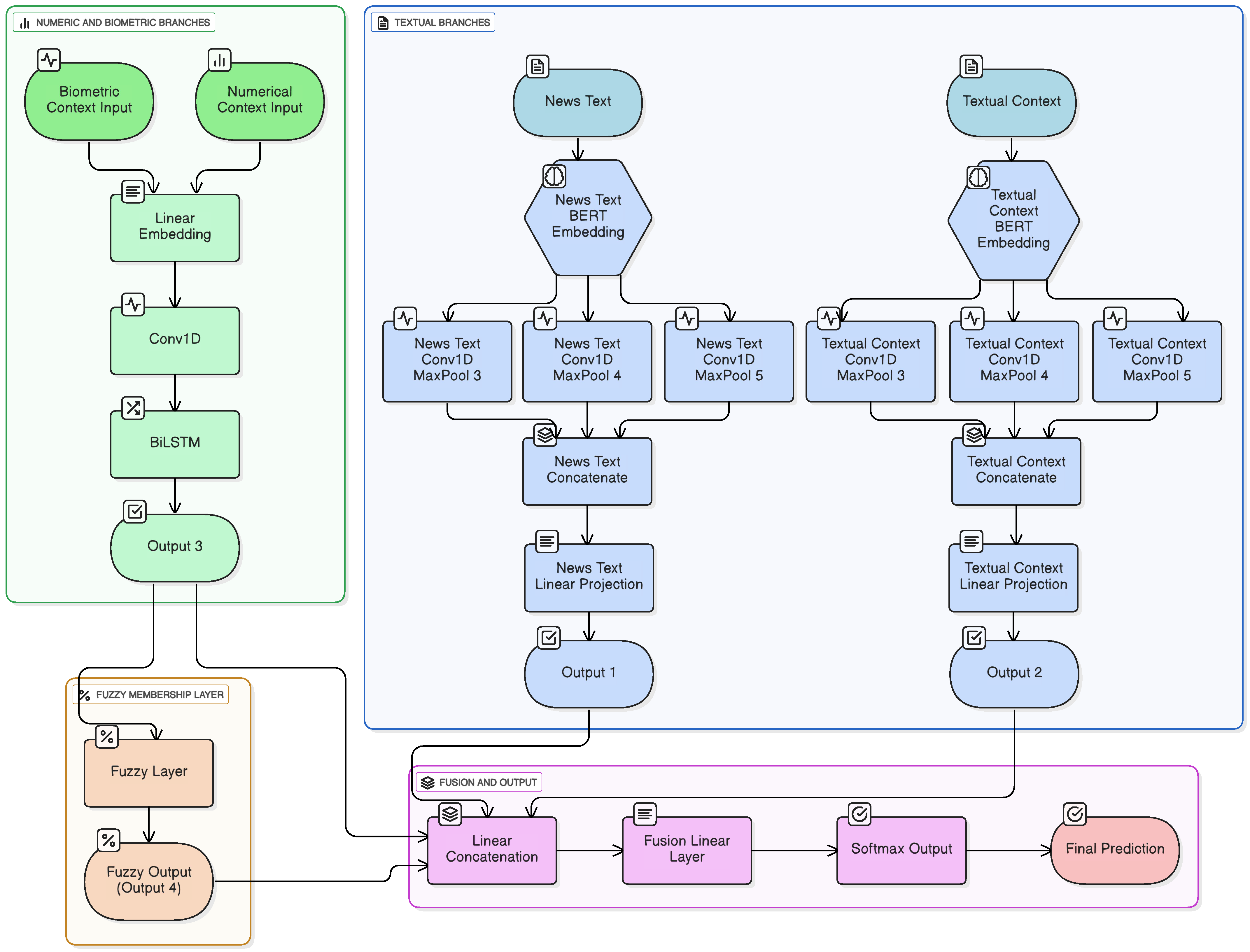

3.3. MNC Architecture

3.3.1. Text Processing

3.3.2. Numeric Metadata

3.3.3. Fusion and Classification

3.4. Text Augmentation Strategy

- a. Synonym Replacement (SR): This method uses the WordNet [46] lexical database to identify semantically related terms. For each input sentence, the candidate words that have available synonyms are identified, and up to one of them is replaced with a randomly chosen synonym. For example, “The politician denied the allegations” may be transformed into “The politician repudiated the allegations”. This procedure introduces lexical variation while preserving semantic fidelity. In the context of the LIAR2 dataset, where claims often express similar meanings with diverse phrasing, SR encourages the model to focus on the underlying semantic content rather than memorizing exact word forms, improving generalization across differently worded statements.

- b. Random Deletion (RD): In this technique, each token in the input sequence is removed independently with a fixed probability. In our implementation, this probability is set to 0.1 to keep the perturbation mild, and at least one word is always retained so that no degenerate (empty) inputs are produced. For instance, “The report was completely false and misleading” may become “The report was false misleading”. Since misleading news claims in LIAR2 are often short, incomplete, or noisy, RD simulates such real-world variations, training the model to tolerate missing or partial information while still accurately classifying the claim’s truthfulness.
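Both augmentation operations can be sketched in a few lines of Python. This is a minimal sketch under assumptions, not the authors' code: `synonyms` is a hypothetical dictionary standing in for WordNet lookups (with NLTK, candidates could be collected from the lemma names of `wordnet.synsets(word)`), a seeded `random.Random` instance makes the augmentation reproducible, and falling back to one randomly kept token is just one simple way to guarantee that at least one word survives deletion.

```python
import random


def synonym_replacement(tokens, synonyms, rng):
    """Replace at most one token that has a known synonym.

    `synonyms` (word -> list of alternatives) is a hypothetical stand-in
    for WordNet lookups.
    """
    candidates = [i for i, w in enumerate(tokens) if synonyms.get(w)]
    out = list(tokens)
    if candidates:
        i = rng.choice(candidates)
        out[i] = rng.choice(synonyms[tokens[i]])
    return out


def random_deletion(tokens, p, rng):
    """Drop each token independently with probability p; if every token
    is dropped, keep one at random so the output is never empty."""
    if not tokens:
        return []
    kept = [w for w in tokens if rng.random() >= p]
    return kept if kept else [rng.choice(tokens)]


rng = random.Random(42)  # fixed seed for reproducible augmentation
claim = "the report was completely false and misleading".split()
print(random_deletion(claim, 0.1, rng))
```

With `p=0.1`, as in the text, roughly one token in ten is dropped on average, so most augmented claims stay close to the original wording.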

3.5. Training and Optimization

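The model is optimized with the binary cross-entropy loss on logits (BCEWithLogitsLoss) [47] over N samples and C classes, given in Equation (1):

$$\mathcal{L}_{\text{BCE}} = -\frac{1}{N}\sum_{n=1}^{N}\sum_{c=1}^{C}\Big[y_{n,c}\log\sigma(x_{n,c}) + (1-y_{n,c})\log\big(1-\sigma(x_{n,c})\big)\Big] \tag{1}$$

where:

- $y_{n,c}$ is the ground truth label for sample $n$ and class $c$,

- $x_{n,c}$ is the raw model output (logit) for sample $n$ and class $c$,

- $\sigma(\cdot)$ is the sigmoid activation function.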
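The BCEWithLogitsLoss objective of this section can be checked numerically with a small pure-Python sketch. It relies on the algebraically equivalent, numerically stable form max(x, 0) − x·y + log(1 + e^(−|x|)) for each term, which avoids evaluating log σ(x) directly for large-magnitude logits. The sketch sums over classes and averages over samples; this normalization is an assumption of the sketch, and PyTorch's `BCEWithLogitsLoss` with its default `reduction="mean"` instead averages over all N×C elements, so its value is smaller by a factor of C.

```python
import math


def bce_with_logits(logits, targets):
    """Binary cross-entropy on raw logits, summed over classes and averaged
    over samples. Each term uses the stable identity
    -[y*log(sig(x)) + (1-y)*log(1-sig(x))] = max(x, 0) - x*y + log(1 + exp(-|x|)).
    """
    total = 0.0
    for row_x, row_y in zip(logits, targets):
        for x, y in zip(row_x, row_y):
            total += max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))
    return total / len(logits)
```

For a single sample with two zero logits and targets `[1.0, 0.0]`, each term equals log 2, so the loss is 2·log 2.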

4. Dataset

5. Experimental Evaluation

5.1. Experimental Setup

- a. Experiment 1 (Feature Ablation Study): Each numerical feature, including our handcrafted features, is combined individually with the news text and textual context during training to measure how much that feature alone improves model performance.

- b. Experiment 2 (Original Metadata Configuration): This experiment uses only the original numeric and textual metadata fields of the LIAR2 dataset.

- c. Experiment 3 (Handcrafted Feature-Enhanced Baseline): This experiment selects handcrafted linguistic and biometric features by their significance: a threshold is set, and only features exceeding it are combined with the numerical and textual context from the dataset. The model is first trained for 10 epochs with a batch size of 32 using the BCEWithLogitsLoss function at a learning rate of . It is then retrained from its minimum-loss state using cross-entropy loss at a learning rate of , and the process is repeated with BCEWithLogitsLoss at a learning rate of . This step was particularly useful for escaping shallow local minima and improving convergence stability. For a classification task with N samples and C mutually exclusive classes, the categorical cross-entropy loss is defined in Equation (2):

$$\mathcal{L}_{\text{CE}} = -\frac{1}{N}\sum_{n=1}^{N}\sum_{c=1}^{C} y_{n,c}\,\log \hat{y}_{n,c} \tag{2}$$

where $y_{n,c}$ denotes the one-hot encoded ground truth label of sample $n$ for class $c$, and $\hat{y}_{n,c}$ is the predicted probability for class $c$, typically obtained via a softmax activation over the logits.

Although BCEWithLogitsLoss is designed for multi-label settings, switching to cross-entropy loss during fine-tuning matches the mutually exclusive label structure and may yield smoother gradients and better convergence, potentially allowing the model to escape suboptimal regions of the loss landscape. The resulting model serves as the baseline.

- d. Experiment 4 (Augmentation-Enhanced Baseline): Starting from the weights of the Experiment 3 baseline trained with the handcrafted features, the model is further trained on an augmented version of the dataset to improve its performance on varied, previously unseen inputs.
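The categorical cross-entropy of Equation (2), used in the fine-tuning stage of Experiment 3, can likewise be sketched in pure Python. This is an illustrative sketch, not the authors' training code: it takes raw logits and integer class indices (the position of the 1 in each one-hot label), applies a log-softmax with the usual max-subtraction for numerical stability, and averages over the N samples. With class-index targets, no class weights, and no label smoothing, this is the quantity PyTorch's `nn.CrossEntropyLoss` computes under its default mean reduction.

```python
import math


def cross_entropy(logits, labels):
    """Softmax cross-entropy averaged over N samples.

    `labels` holds integer class indices (the position of the 1 in each
    one-hot vector), so only the true class's log-probability contributes.
    """
    total = 0.0
    for row, k in zip(logits, labels):
        m = max(row)  # subtract the largest logit for numerical stability
        log_z = m + math.log(sum(math.exp(v - m) for v in row))  # log-sum-exp
        total += log_z - row[k]  # equals -log(softmax(row)[k])
    return total / len(logits)
```

With six equal logits the loss is log 6 ≈ 1.792 whatever the true class, which is the value of an uninformed classifier over LIAR2's six truthfulness labels.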

5.2. Evaluation Metrics

5.3. Experimental Results

5.3.1. Experiment 1: Baseline with Truth-Count Metadata

5.3.2. Experiments 2–4: Progressive Feature Integration

5.4. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bovet, A.; Makse, H.A. Influence of fake news in Twitter during the 2016 US presidential election. Nat. Commun. 2019, 10, 7.

- Xarhoulacos, C.G.; Anagnostopoulou, A.; Stergiopoulos, G.; Gritzalis, D. Misinformation vs. situational awareness: The art of deception and the need for cross-domain detection. Sensors 2021, 21, 5496.

- Vosoughi, S.; Roy, D.; Aral, S. The spread of true and false news online. Science 2018, 359, 1146–1151.

- Iavich, M. Combating Fake News with Cryptography in Quantum Era with Post-Quantum Verifiable Image Proofs. J. Cybersecur. Priv. 2025, 5, 31.

- Bode, L.; Vraga, E.K.; Alvarez, G.; Johnson, C.N.; Konieczna, M.; Mirer, M. What viewers want: Assessing the impact of host bias on viewer engagement with political talk shows. J. Broadcast. Electron. Media 2018, 62, 597–613.

- Guo, Z.; Schlichtkrull, M.; Vlachos, A. A survey on automated fact-checking. Trans. Assoc. Comput. Linguist. 2022, 10, 178–206.

- Kozik, R.; Mazurczyk, W.; Cabaj, K.; Pawlicka, A.; Pawlicki, M.; Choraś, M. Deep learning for combating misinformation in multicategorical text contents. Sensors 2023, 23, 9666.

- Anzum, F.; Asha, A.Z.; Dey, L.; Gavrilov, A.; Iffath, F.; Ohi, A.Q.; Pond, L.; Shopon, M.; Gavrilova, M.L. A comprehensive review of trustworthy, ethical, and explainable computer vision advancements in online social media. In Global Perspectives on the Applications of Computer Vision in Cybersecurity; IGI Global Scientific Publishing: Hershey, PA, USA, 2024; pp. 1–46.

- Farhangian, F.; Ensina, L.A.; Cavalcanti, G.D.; Cruz, R.M. DRES: Fake news detection by dynamic representation and ensemble selection. arXiv 2025, arXiv:2509.16893.

- Garg, S.; Sharma, D.K. Linguistic features based framework for automatic fake news detection. Comput. Ind. Eng. 2022, 172, 108432.

- Horne, B.; Adali, S. This just in: Fake news packs a lot in title, uses simpler, repetitive content in text body, more similar to satire than real news. In Proceedings of the International AAAI conference on Web and Social Media, Montreal, QC, Canada, 15–18 May 2017; Volume 11, pp. 759–766.

- Xu, C.; Kechadi, M.T. An enhanced fake news detection system with fuzzy deep learning. IEEE Access 2024, 12, 88006–88021.

- Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1746–1751.

- Pennycook, G.; Rand, D.G. Fighting misinformation on social media using crowdsourced judgments of news source quality. Proc. Natl. Acad. Sci. USA 2019, 116, 2521–2526.

- Shu, K.; Sliva, A.; Wang, S.; Tang, J.; Liu, H. Fake news detection on social media: A data mining perspective. ACM SIGKDD Explor. Newsl. 2017, 19, 22–36.

- Zhang, X.; Ghorbani, A.A. An overview of online fake news: Characterization, detection, and discussion. Inf. Process. Manag. 2020, 57, 102025.

- Pérez-Rosas, V.; Kleinberg, B.; Lefevre, A.; Mihalcea, R. Automatic Detection of Fake News. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 3391–3401.

- Khan, J.Y.; Khondaker, M.T.I.; Afroz, S.; Uddin, G.; Iqbal, A. A benchmark study of machine learning models for online fake news detection. Mach. Learn. Appl. 2021, 4, 100032.

- Nasir, J.A.; Khan, O.S.; Varlamis, I. Fake news detection: A hybrid CNN-RNN based deep learning approach. Int. J. Inf. Manag. Data Insights 2021, 1, 100007.

- Ali, A.M.; Ghaleb, F.A.; Al-Rimy, B.A.S.; Alsolami, F.J.; Khan, A.I. Deep ensemble fake news detection model using sequential deep learning technique. Sensors 2022, 22, 6970.

- Varma, R.; Verma, Y.; Vijayvargiya, P.; Churi, P.P. A systematic survey on deep learning and machine learning approaches of fake news detection in the pre-and post-COVID-19 pandemic. Int. J. Intell. Comput. Cybern. 2021, 14, 617–646.

- Taeb, M.; Chi, H. Comparison of deepfake detection techniques through deep learning. J. Cybersecur. Priv. 2022, 2, 89–106.

- Xu, C.; Kechadi, M.T. Fuzzy deep hybrid network for fake news detection. In Proceedings of the 12th International Symposium on Information and Communication Technology, Ho Chi Minh, Vietnam, 7–8 December 2023; pp. 118–125.

- Agrawal, C.; Pandey, A.; Goyal, S. A survey on role of machine learning and NLP in fake news detection on social media. In Proceedings of the 2021 IEEE 4th International Conference on Computing, Power and Communication Technologies, Kuala Lumpur, Malaysia, 24–26 September 2021; pp. 1–7.

- Al-Alshaqi, M.; Rawat, D.B.; Liu, C. Ensemble techniques for robust fake news detection: Integrating transformers, natural language processing, and machine learning. Sensors 2024, 24, 6062.

- Rout, J.; Mishra, M.; Saikia, M.J. Towards Reliable Fake News Detection: Enhanced Attention-Based Transformer Model. J. Cybersecur. Priv. 2025, 5, 43.

- Capuano, N.; Fenza, G.; Loia, V.; Nota, F.D. Content-based fake news detection with machine and deep learning: A systematic review. Neurocomputing 2023, 530, 91–103.

- Chakraborty, A.; Khatri, I.; Choudhry, A.; Gupta, P.; Vishwakarma, D.K.; Prasad, M. An emotion-guided approach to domain adaptive fake news detection using adversarial learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 16178–16179.

- Guo, Z.; Yu, K.; Jolfaei, A.; Li, G.; Ding, F.; Beheshti, A. Mixed graph neural network-based fake news detection for sustainable vehicular social networks. IEEE Trans. Intell. Transp. Syst. 2022, 24, 15486–15498.

- Singh, P.; Srivastava, R.; Rana, K.; Kumar, V. SEMI-FND: Stacked ensemble based multimodal inferencing framework for faster fake news detection. Expert Syst. Appl. 2023, 215, 119302.

- Lahby, M.; Aqil, S.; Yafooz, W.M.; Abakarim, Y. Online fake news detection using machine learning techniques: A systematic mapping study. In Combating Fake News with Computational Intelligence Techniques; Springer: Cham, Switzerland, 2021; pp. 3–37.

- Parikh, S.B.; Atrey, P.K. Media-rich fake news detection: A survey. In Proceedings of the 2018 IEEE Conference on Multimedia Information Processing and Retrieval, Miami, FL, USA, 10–12 April 2018; pp. 436–441.

- Bhatia, Y.; Bari, A.H.; Hsu, G.S.J.; Gavrilova, M. Motion capture sensor-based emotion recognition using a bi-modular sequential neural network. Sensors 2022, 22, 403.

- Alkaabi, H.; Jasim, A.K.; Darroudi, A. From Static to Contextual: A Survey of Embedding Advances in NLP. PERFECT J. Smart Algorithms 2025, 2, 64–73.

- Shopon, M.; Tumpa, S.N.; Bhatia, Y.; Kumar, K.P.; Gavrilova, M.L. Biometric systems de-identification: Current advancements and future directions. J. Cybersecur. Priv. 2021, 1, 470–495.

- Ruchansky, N.; Seo, S.; Liu, Y. CSI: A hybrid deep model for fake news detection. In Proceedings of the 2017 ACM on Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; pp. 797–806.

- Han, Y.; Turrini, P.; Bazzi, M.; Andrighetto, G.; Polizzi, E.; De Domenico, M. Measuring the co-evolution of online engagement with (mis) information and its visibility at scale. arXiv 2025, arXiv:2506.06106.

- Areshey, A.; Mathkour, H. Exploring transformer models for sentiment classification: A comparison of BERT, RoBERTa, ALBERT, DistilBERT, and XLNet. Expert Syst. 2024, 41, e13701.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

- Zhou, X.; Jain, A.; Phoha, V.V.; Zafarani, R. Fake news early detection: A theory-driven model. Digit. Threat. Res. Pract. 2020, 1, 12.

- Medhat, W.; Hassan, A.; Korashy, H. Sentiment analysis algorithms and applications: A survey. Ain Shams Eng. J. 2014, 5, 1093–1113.

- Rubin, V.L.; Conroy, N.; Chen, Y.; Cornwell, S. Fake news or truth? using satirical cues to detect potentially misleading news. In Proceedings of the Second Workshop on Computational Approaches to Deception Detection, San Diego, CA, USA, 17 June 2016; pp. 7–17.

- Honnibal, M. spaCy 2: Natural Language Understanding with Bloom Embeddings, Convolutional Neural Networks and Incremental Parsing. Available online: https://orkg.org/resources/R11007?noRedirect= (accessed on 1 October 2025).

- Nie, Y.; Williams, A.; Dinan, E.; Bansal, M.; Weston, J.; Kiela, D. Adversarial NLI: A new benchmark for natural language understanding. arXiv 2019, arXiv:1910.14599.

- Rashkin, H.; Choi, E.; Jang, J.Y.; Volkova, S.; Choi, Y. Truth of varying shades: Analyzing language in fake news and political fact-checking. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 9–11 September 2017; pp. 2931–2937.

- Fellbaum, C. WordNet. In Theory and Applications of Ontology: Computer Applications; Springer: Berlin/Heidelberg, Germany, 2010; pp. 231–243.

- PyTorch Contributors. BCEWithLogitsLoss. Available online: https://docs.pytorch.org/docs/stable/generated/torch.nn.BCEWithLogitsLoss.html (accessed on 1 October 2025).

- Wang, W.Y. “Liar, Liar Pants on Fire”: A New Benchmark Dataset for Fake News Detection. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 422–426.

- Poynter Institute. PolitiFact. Available online: https://www.politifact.com/ (accessed on 1 October 2025).

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.T.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive nlp tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

| Category | LIAR | LIAR2 |
|---|---|---|
| Number of Statements | 12,836 | 22,962 |
| Truthfulness Labels | 6 categories | 6 categories |
| News Timeline | No | Yes |
| Speaker Information | Job Title + Party | Full Biography |
| Geographic Context | Speaker’s State | State Referenced in Statement |
| Historical Credibility | Partial | Complete |
| Fact-Checker Justifications | No | Yes |
| Data Errors Fixed | No | Yes |

| Type | Features |
|---|---|
| Textual | “date”, “subject”, “speaker”, “speaker description”, “state info”, “context” |
| Numerical | “true counts”, “mostly true counts”, “half true counts”, “mostly false counts”, “false counts”, “pants on fire counts” |

| Category | Feature | Test Accuracy | Macro F1 | Micro F1 |
|---|---|---|---|---|
| Credibility History | True Count | 0.6450 | 0.6334 | 0.6450 |
| | Mostly True Count | 0.6250 | 0.6084 | 0.6250 |
| | Half True Count | 0.5976 | 0.5758 | 0.5976 |
| | Barely True Count | 0.6237 | 0.6066 | 0.6237 |
| | False Count | 0.5910 | 0.5568 | 0.5910 |
| | Pants on Fire Count | 0.6150 | 0.5986 | 0.6150 |
| Handcrafted Features | TTR | 0.6193 | 0.6048 | 0.6193 |
| | EMF | 0.6220 | 0.6078 | 0.6220 |
| | AD | 0.6180 | 0.6045 | 0.6180 |
| | Sent. Label | 0.6115 | 0.6077 | 0.6215 |
| | Sent. Score | 0.6167 | 0.6013 | 0.6167 |
| | Subj. Score | 0.6259 | 0.6121 | 0.6259 |
| | Contr. Score | 0.6220 | 0.6087 | 0.6220 |

| Method | Test Accuracy | Macro F1 | Micro F1 |
|---|---|---|---|
| Xu and Kechadi [12] | 70.20% | 69.60% | 70.20% |
| Proposed MNC (Baseline with Native Metadata) | 70.27 ± 0.07% | 69.67 ± 0.09% | 70.27 ± 0.07% |
| Proposed MNC (Fusion with Handcrafted Features) | 71.41 ± 0.12% | 70.56 ± 0.17% | 71.41 ± 0.12% |
| Proposed MNC (Augmentation-Enhanced System) | 71.91 ± 0.23% | 71.17 ± 0.26% | 71.91 ± 0.23% |

| Component | Average Time (ms/sample) |
|---|---|
| Proposed Model | 2.43 |
| Proposed Linguistic Feature | 0.28 |
| Proposed Sentiment & Subjectivity | 1.38 |
| Proposed Contradiction Feature | 3.89 |
| Total | 7.98 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Haque, M.; Bari, A.S.M.H.; Gavrilova, M.L. A Lightweight Multimodal Framework for Misleading News Classification Using Linguistic and Behavioral Biometrics. J. Cybersecur. Priv. 2025, 5, 104. https://doi.org/10.3390/jcp5040104
