A Game-Theoretic Approach for Quantification of Strategic Behaviors in Digital Forensic Readiness

Abstract

1. Introduction

- Model Strategic Decisions: Capture the objectives and constraints of both attackers and defenders [13].

- Conduct Risk Analysis: Elucidate payoffs and tactics to identify critical vulnerabilities and optimal defensive strategies [14].

- Enable Adaptive Defense: Capture the dynamic nature of cyber threats, including those augmented by AI, to inform adaptive countermeasures [15].

- Optimize Resource Allocation: Evaluate strategy effectiveness to guide efficient investment of limited defensive resources [16].

- A game-theoretic readiness-planning framework for DFR that quantifies strategic attacker–defender interactions.

- A practical integration of MITRE ATT&CK and D3FEND with AHP-weighted metrics to ground utilities in real-world tactics and techniques.

- An equilibrium-based analysis that derives actionable, resource-constrained guidance for SMBs/SMEs.

- An empirical evaluation on APT-inspired, multi-vector scenarios showing that the framework can improve readiness and reduce attacker success under realistic constraints.

2. Related Works

2.1. Game Theory in Digital Forensics

2.2. Digital Forensics Readiness and Techniques

2.3. Advancement in Cybersecurity Modeling

2.4. Innovative Tools and Methodologies

2.5. Digital Forensics in Emerging Domains

2.6. Advanced Persistent Threats and Cybercrime

2.7. Novelty

3. Materials and Methods

3.1. Problem Statement

- : Strategies available to attackers, corresponding to MITRE ATT&CK tactics (e.g., Reconnaissance, Resource Development, Initial Access, Execution, Persistence, etc.).

- : Strategies available to defenders, corresponding to MITRE D3FEND countermeasures (e.g., Model, Detect, Harden, Isolate, Deceive, etc.).

- P: Parameters influencing game models, such as attack severity, defense effectiveness, and forensic capability.

- : Utility function for attackers, representing the payoff based on their strategy and the defenders’ strategy .

- : Utility function for defenders, representing the payoff based on their strategy and the attackers’ strategy .

- Model Construction: Construct game models to represent the interactions between A and D.

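- Equilibrium Analysis: Identify Nash equilibria, i.e., strategy profiles in which each player's strategy is a best response to the other's, so that neither player can gain by deviating unilaterally.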
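For reference, the equilibrium condition can be written out explicitly. The block below borrows the notation of Appendix B.1 (pure strategies s and t, payoff matrices A and D, mixed strategies x and y); the strategy-set names S_A and S_D and the entry form A_{st} are assumptions, since the original symbols did not survive extraction.

```latex
% Pure Nash equilibrium (s^*, t^*): mutual best responses
A_{s^* t^*} \ge A_{s t^*} \quad \forall s \in S_A,
\qquad
D_{s^* t^*} \ge D_{s^* t} \quad \forall t \in S_D.

% Mixed Nash equilibrium (x^*, y^*) over the simplices \Delta(S_A), \Delta(S_D)
x^{*\top} A\, y^* \ge x^\top A\, y^* \quad \forall x \in \Delta(S_A),
\qquad
x^{*\top} D\, y^* \ge x^{*\top} D\, y \quad \forall y \in \Delta(S_D).
```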

3.2. Methodology

Notation and Symbols

3.3. Proposed Approach

- Players:

  - Attacker: 14 strategies (one per ATT&CK tactic).

  - Defender: 6 strategies (one per D3FEND control family: Model, Harden, Detect, Isolate, Deceive, Evict).

- Rationality: Both players are presumed rational, seeking to maximize their individual payoffs given knowledge of the opponent’s strategy. The game is simultaneous and non-zero-sum.

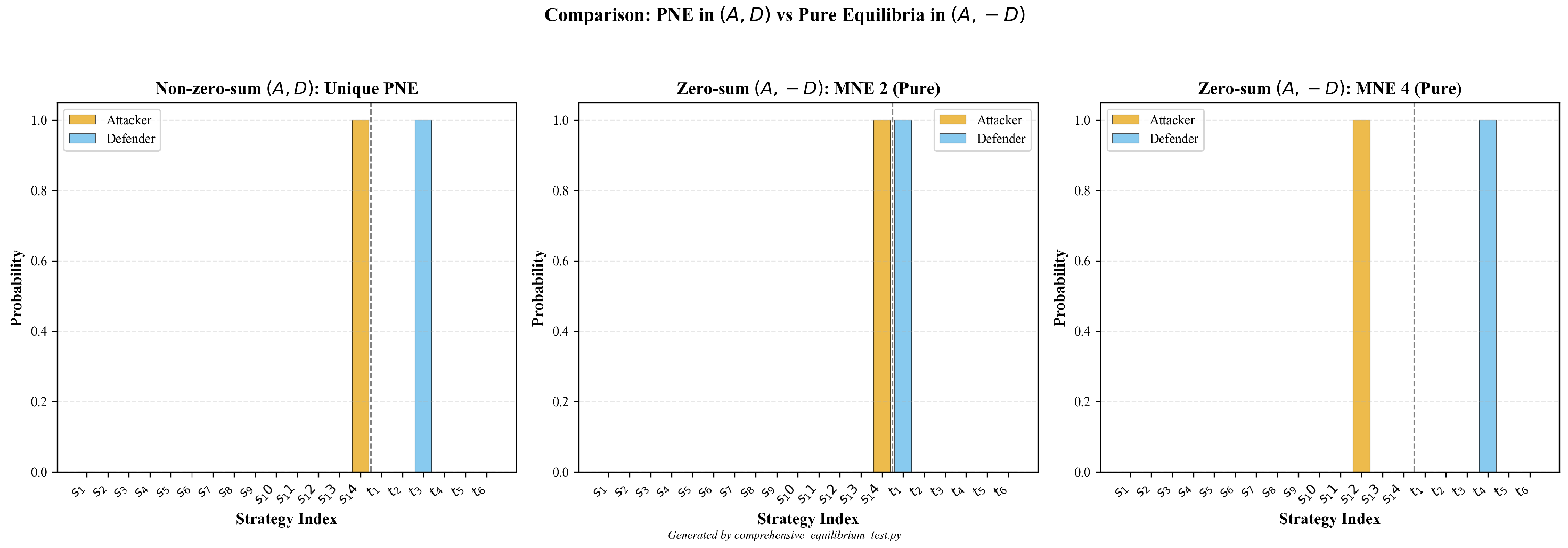

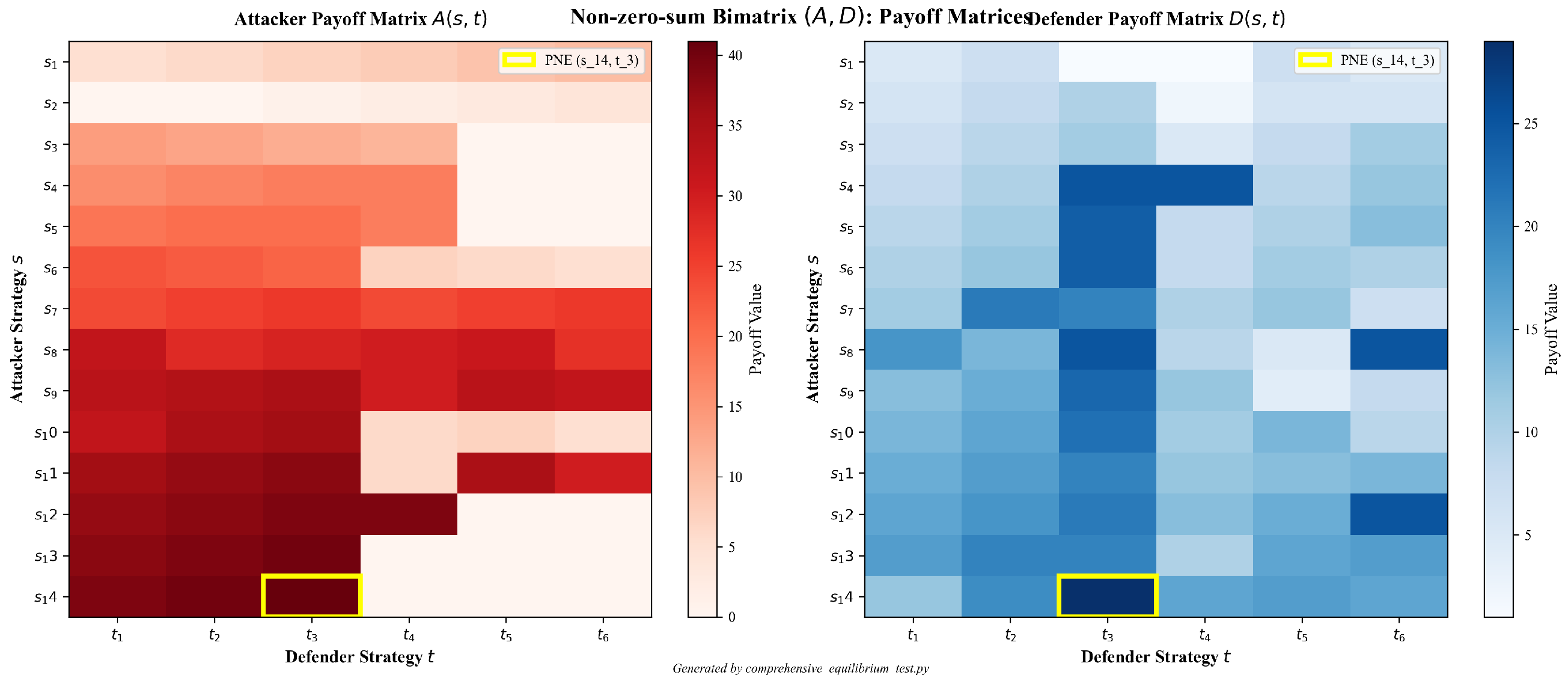

3.3.1. PNE Analysis
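A pure Nash equilibrium check of this kind can be sketched by testing every cell of the bimatrix game for mutual best responses. The sketch assumes payoff matrices indexed as in Appendix B.1 (attacker strategies on rows, defender strategies on columns); the function name and the 2 × 2 toy games are illustrative and are not the paper's 14 × 6 matrices or code.

```python
from itertools import product


def pure_nash_equilibria(A, D):
    """Return every (s, t) index pair that is a pure Nash equilibrium.

    A[s][t] is the attacker's payoff and D[s][t] the defender's payoff when the
    attacker plays row s and the defender plays column t. Weak inequalities are
    used, so ties count as best responses.
    """
    n_att, n_def = len(A), len(A[0])
    equilibria = []
    for s, t in product(range(n_att), range(n_def)):
        # Attacker cannot gain by switching rows while the defender keeps t.
        attacker_best = all(A[s][t] >= A[k][t] for k in range(n_att))
        # Defender cannot gain by switching columns while the attacker keeps s.
        defender_best = all(D[s][t] >= D[s][k] for k in range(n_def))
        if attacker_best and defender_best:
            equilibria.append((s, t))
    return equilibria


# Toy 2x2 games (illustrative values, not the paper's payoff matrices).
coordination_A = [[2, 0], [1, 3]]
coordination_D = [[1, 0], [0, 2]]
print(pure_nash_equilibria(coordination_A, coordination_D))  # [(0, 0), (1, 1)]

pennies_A = [[1, -1], [-1, 1]]
pennies_D = [[-1, 1], [1, -1]]
print(pure_nash_equilibria(pennies_A, pennies_D))  # []
```

With 14 × 6 matrices this amounts to only 84 cell checks, so exhaustive enumeration is cheap.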

3.3.2. MNE Analysis

Main Equilibrium (Non-Zero-Sum)

3.3.3. Payoff Construction from ATT&CK→D3FEND Coverage

3.3.4. Payoff Matrices

3.3.5. Mixed Nash Equilibrium Computation
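Mixed equilibria of a bimatrix game can be found by support enumeration: for each pair of equal-size supports, solve the indifference conditions and keep solutions that are valid probability vectors with no profitable deviation outside the support. The sketch below is a generic NumPy implementation that assumes a nondegenerate game (cf. the ε-perturbation discussed in Appendix B.8.4); it is not necessarily the solver used for the reported equilibria, and the helper names and toy game are illustrative.

```python
import itertools

import numpy as np


def _equalizing_mix(M, rows, cols):
    """Find a distribution p over `cols` so every row in `rows` of M earns the
    same expected payoff v. Returns (p, v), or None if no valid p exists."""
    k = len(rows)
    lhs = np.zeros((k + 1, k + 1))
    lhs[:k, :k] = M[np.ix_(rows, cols)]  # M[r, cols] @ p ...
    lhs[:k, k] = -1.0                    # ... minus v equals 0
    lhs[k, :k] = 1.0                     # probabilities sum to 1
    rhs = np.zeros(k + 1)
    rhs[k] = 1.0
    try:
        sol = np.linalg.solve(lhs, rhs)
    except np.linalg.LinAlgError:        # singular system: skip this support
        return None
    p, v = sol[:k], sol[k]
    if np.any(p < -1e-12):               # not a valid probability vector
        return None
    return np.clip(p, 0.0, None), v


def support_enumeration(A, D, tol=1e-9):
    """Enumerate Nash equilibria of a nondegenerate bimatrix game (A, D)."""
    A = np.asarray(A, dtype=float)
    D = np.asarray(D, dtype=float)
    m, n = A.shape
    equilibria = []
    for k in range(1, min(m, n) + 1):
        for I in itertools.combinations(range(m), k):
            for J in itertools.combinations(range(n), k):
                # Defender mix y on J must make the attacker indifferent on I.
                res_y = _equalizing_mix(A, list(I), list(J))
                # Attacker mix x on I must make the defender indifferent on J.
                res_x = _equalizing_mix(D.T, list(J), list(I))
                if res_y is None or res_x is None:
                    continue
                (y_J, u), (x_I, v) = res_y, res_x
                x = np.zeros(m)
                x[list(I)] = x_I
                y = np.zeros(n)
                y[list(J)] = y_J
                # No pure strategy outside the support may do strictly better.
                if np.all(A @ y <= u + tol) and np.all(x @ D <= v + tol):
                    equilibria.append((x, y))
    return equilibria


# Toy coordination game (illustrative): two pure equilibria and one mixed.
eqs = support_enumeration([[2, 0], [1, 3]], [[1, 0], [0, 2]])
for x, y in eqs:
    print(np.round(x, 4), np.round(y, 4))
```

For a 14 × 6 game the equal-size support pairs number 38,759, which is small enough to enumerate exhaustively.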

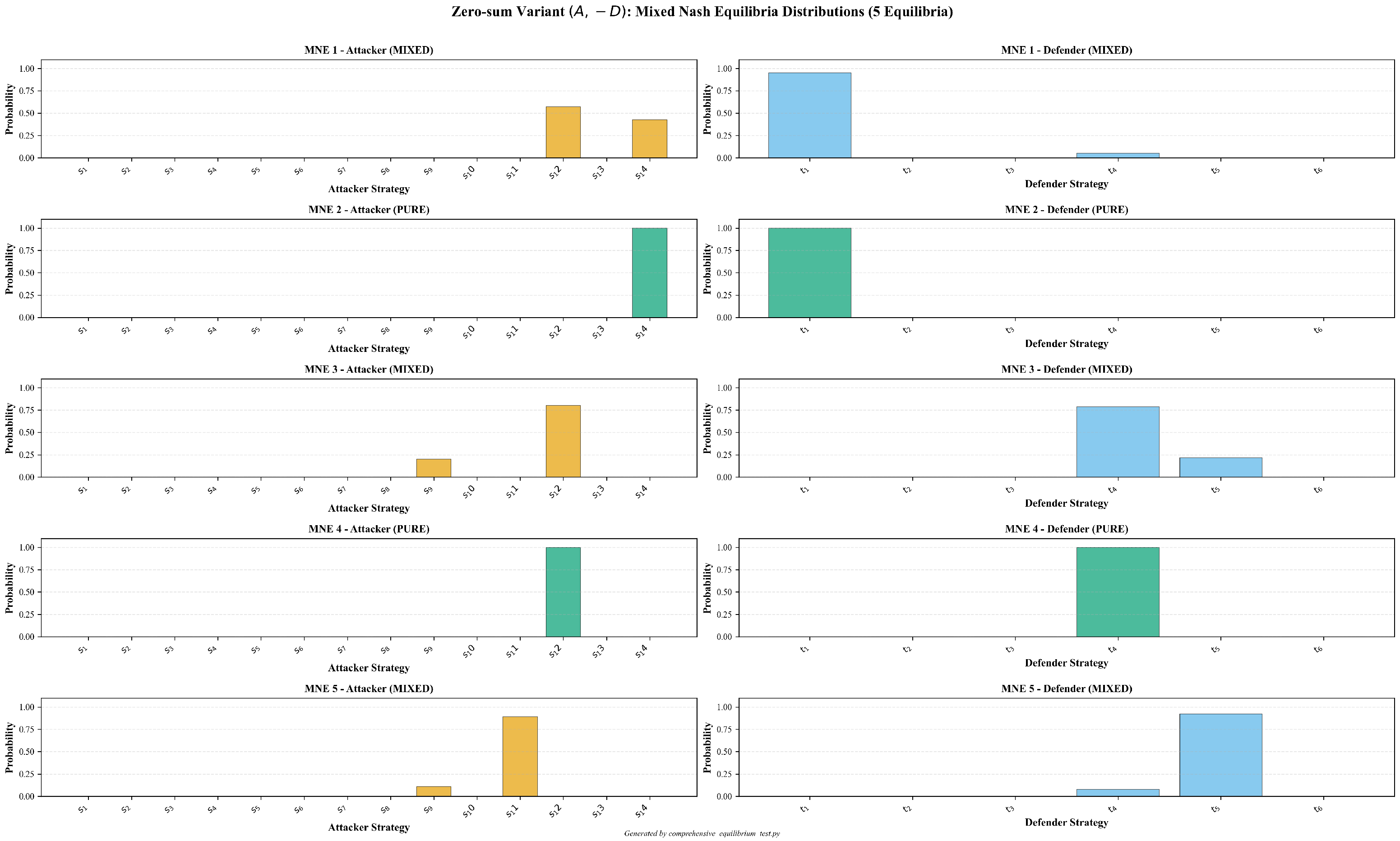

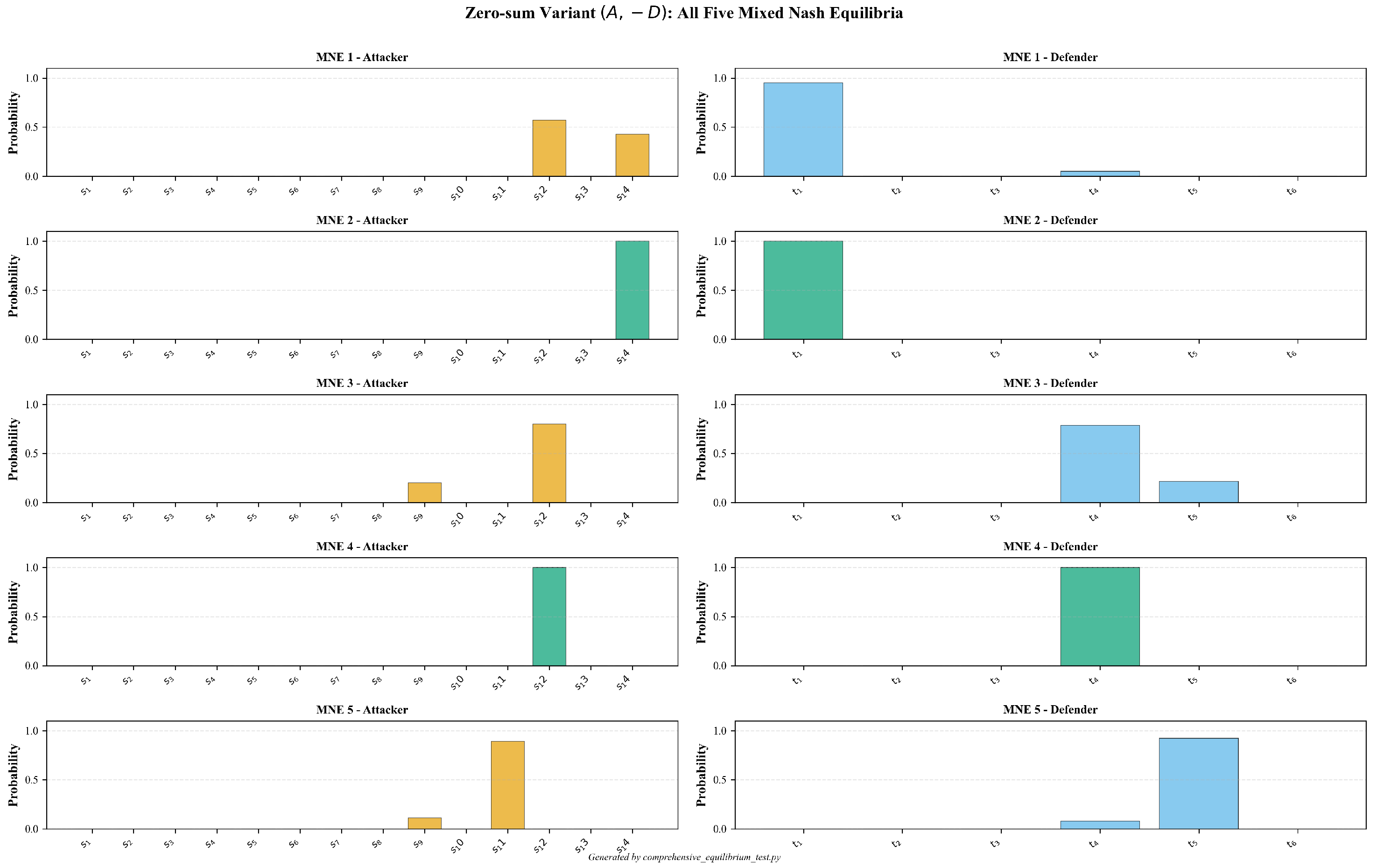

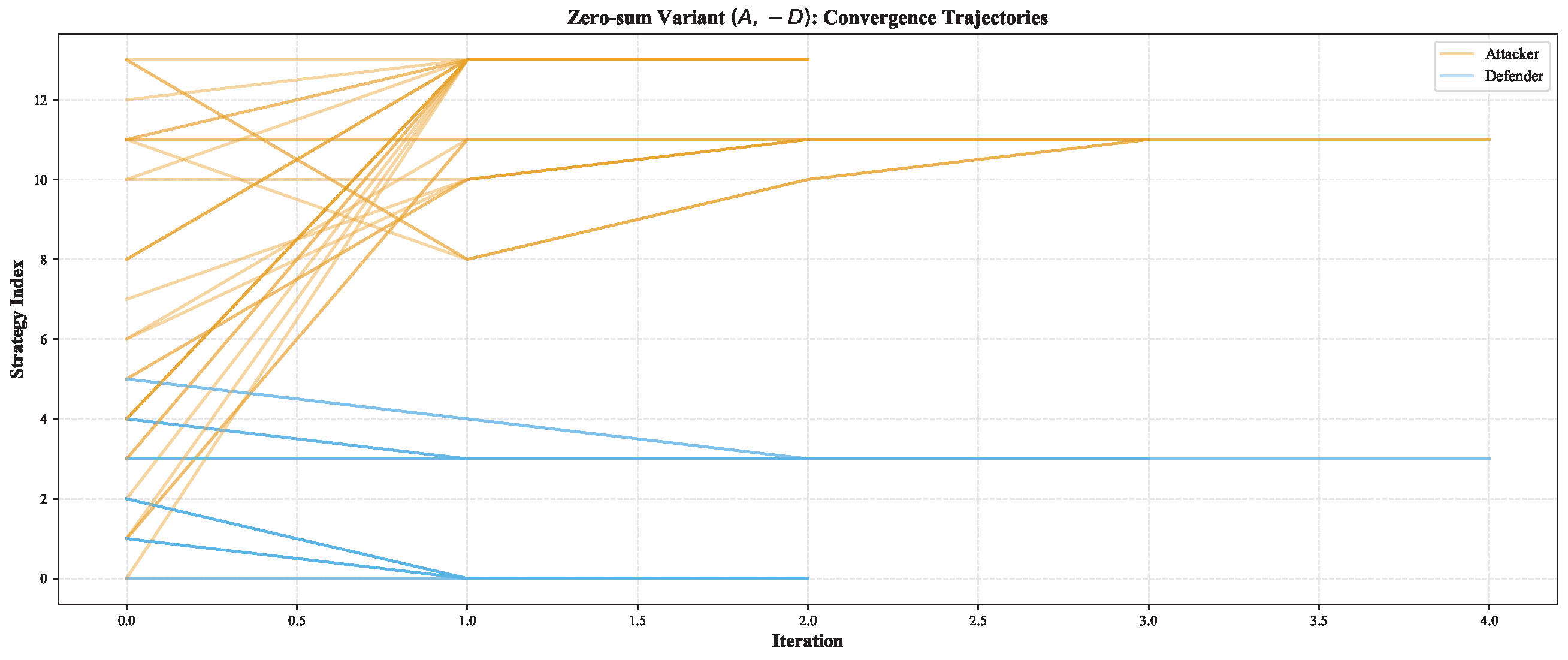

3.3.6. Dynamics Illustration (Zero-Sum Variant)

Methodological Transparency Statement

3.4. Utility Function

3.4.1. Attacker Utility Function

3.4.2. Defender Utility Function

3.4.3. Utility Calculation Algorithms
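The utility computation can be illustrated with an additive AHP aggregation, in which each normalized metric score in [0, 1] (scored with the rubrics of Appendices B.10 and B.11) is multiplied by its AHP weight and summed. The weights below are transcribed from the AHP weight table in this document; the additive form, the dictionary layout, and the function name are assumptions for illustration rather than the paper's exact equations. The defender key ST denotes Staff Training, not the attacker's Stealthiness.

```python
# AHP weights transcribed from the AHP weight table (attacker and defender columns).
ATTACKER_WEIGHTS = {
    "ASR": 0.109421, "RE": 0.047640, "ST": 0.092165, "DEE": 0.088782,
    "TTE": 0.047640, "EC": 0.088782, "AR": 0.081419, "RT": 0.047640,
    "IA": 0.092165, "P": 0.081419, "AD": 0.057193, "DN": 0.026406,
    "LG": 0.043317, "CB": 0.026246, "FG": 0.021048, "RP": 0.048717,
}
# Defender "ST" is Staff Training and Expertise.
DEFENDER_WEIGHTS = {
    "L": 0.088138, "I": 0.088138, "D": 0.042359, "VDCC": 0.064290,
    "E": 0.046193, "IR": 0.088138, "DR": 0.048163, "NF": 0.081980,
    "ST": 0.081980, "LR": 0.048163, "A": 0.055784, "C": 0.046039,
    "T": 0.069305, "R": 0.053188, "V": 0.042359, "P": 0.055784,
}


def weighted_utility(scores, weights):
    """Hypothetical additive aggregation: sum of weight * normalized score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing metric scores: {sorted(missing)}")
    for name in weights:
        if not 0.0 <= scores[name] <= 1.0:
            raise ValueError(f"score for {name} must lie in [0, 1]")
    return sum(weights[name] * scores[name] for name in weights)


# Hypothetical attacker profile: every metric at the midpoint of the 0.4-0.6 band.
mid_profile = {name: 0.5 for name in ATTACKER_WEIGHTS}
print(round(weighted_utility(mid_profile, ATTACKER_WEIGHTS), 4))  # 0.5
```

Because each weight column sums to 1 (up to rounding in the sixth decimal), the aggregated utility stays on the same [0, 1] scale as the individual metric scores.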

3.5. Prioritizing DFR Improvements

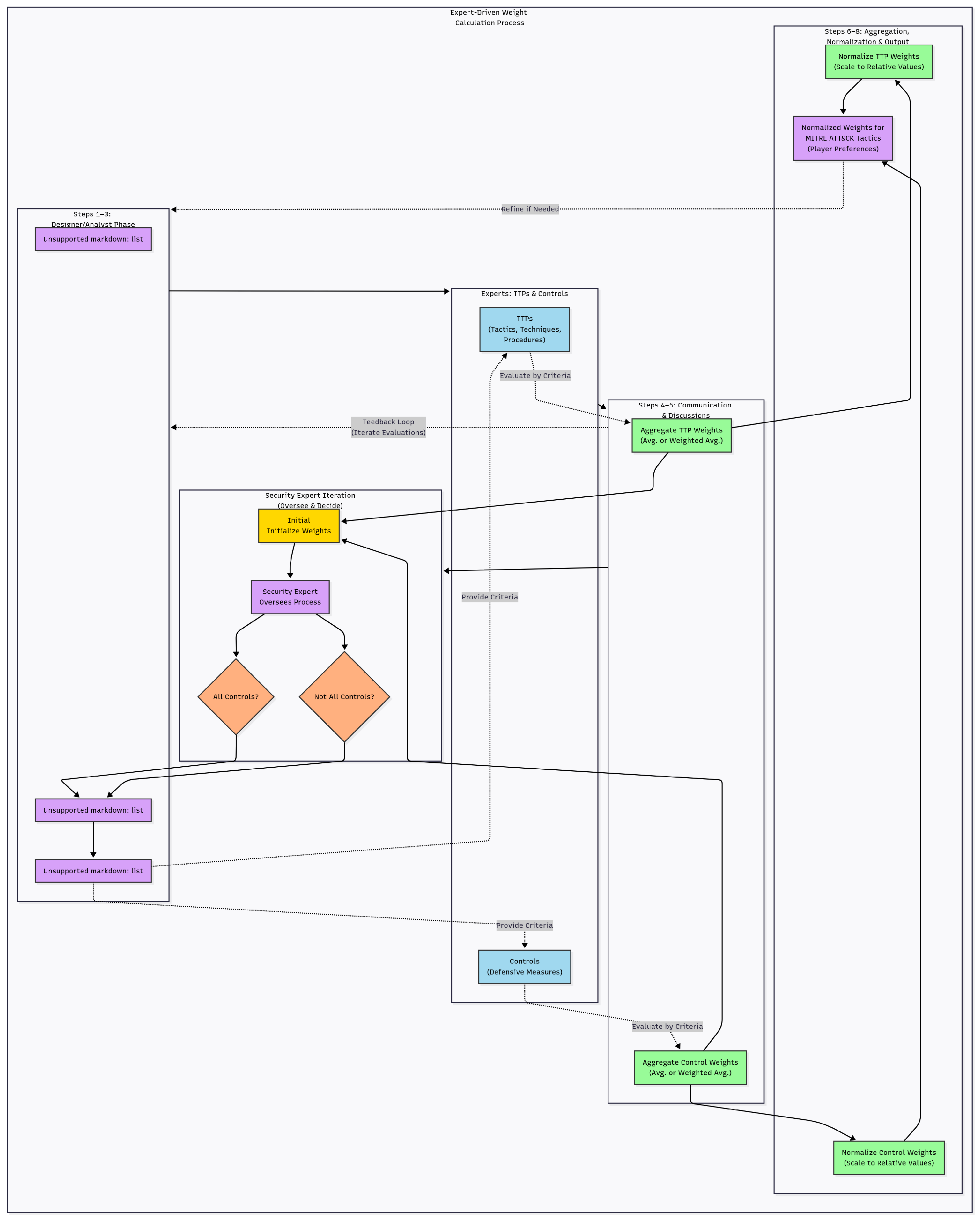

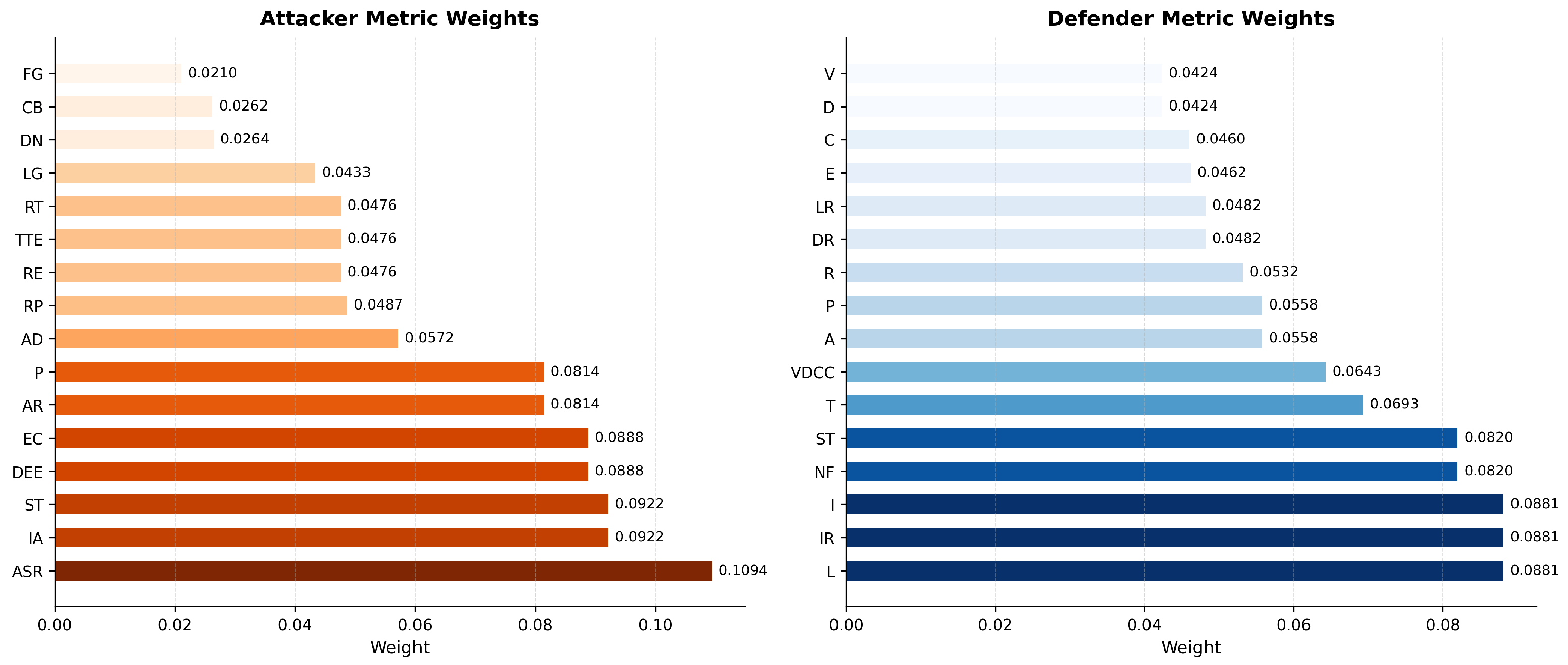

3.5.1. AHP Methodology for Weight Determination

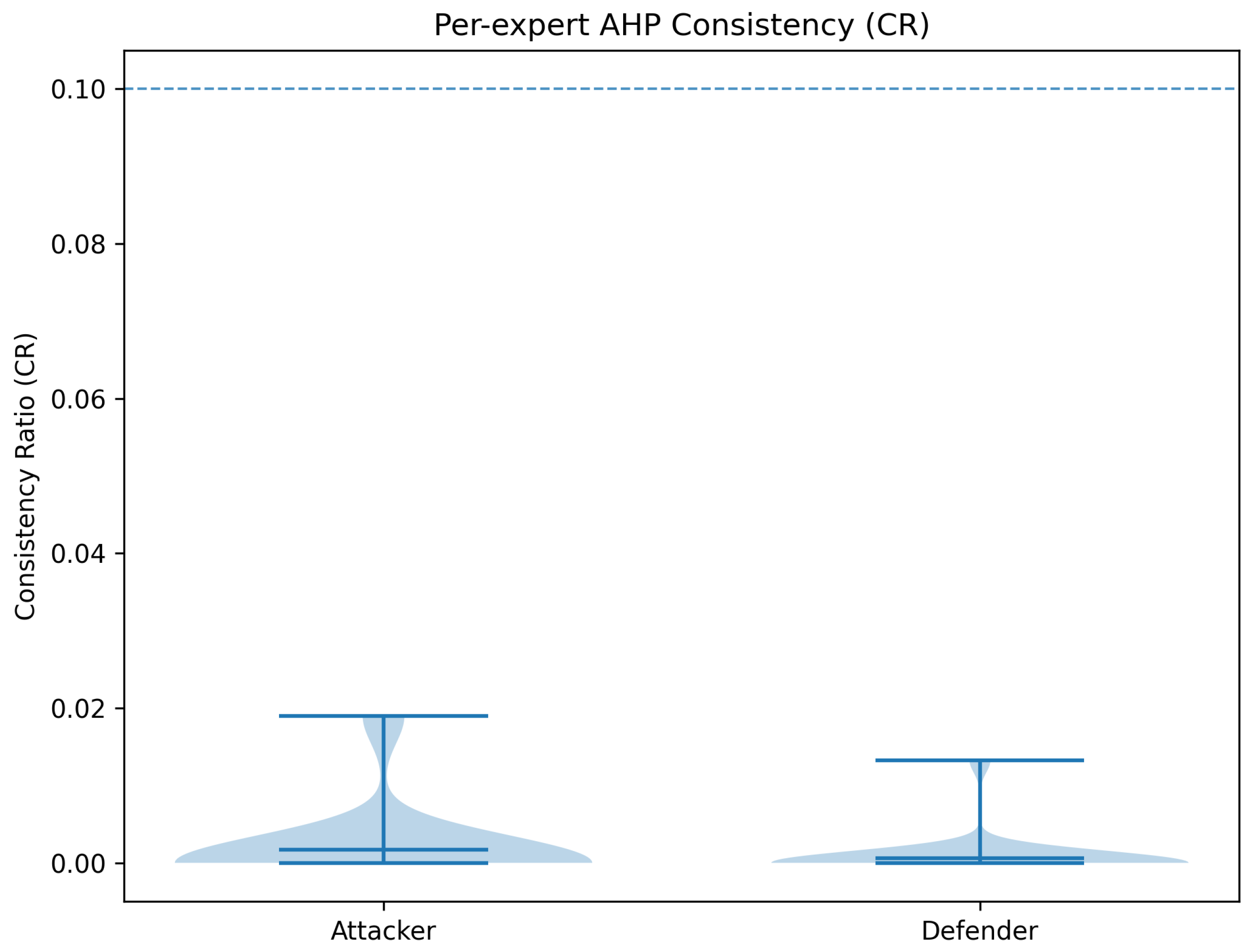

Expert Panel Procedures and Transparency

Reporting Precision and Repeated Weights

Plausibility of Small and Similar CR Values

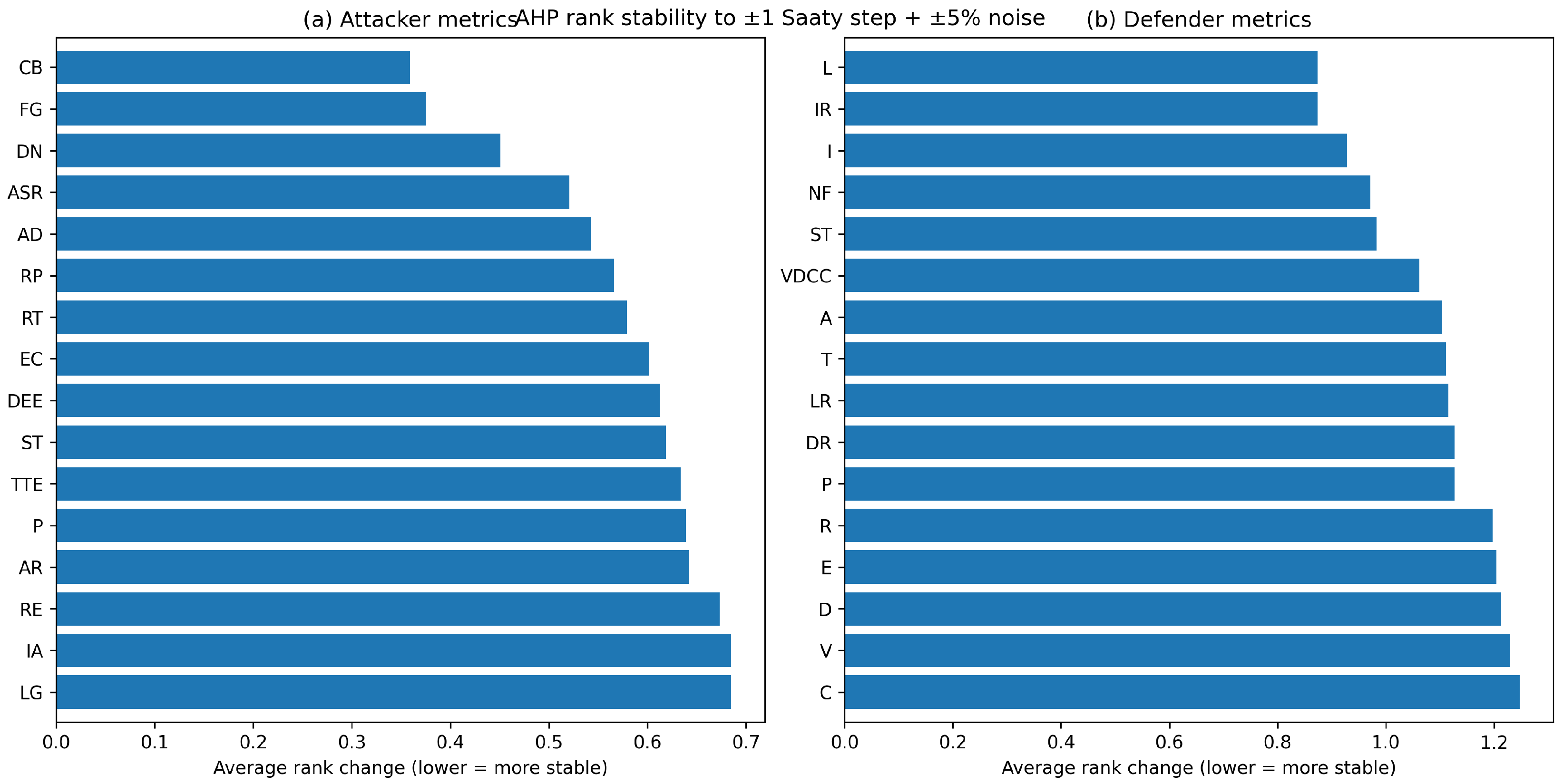

Additional AHP Diagnostics and Robustness

3.5.2. Prioritization Process

3.5.3. DFR Improvement Algorithm

3.6. Reevaluating the DFR

4. Results

4.1. Data Collection and Methodology

Extraction and Mapping Objects

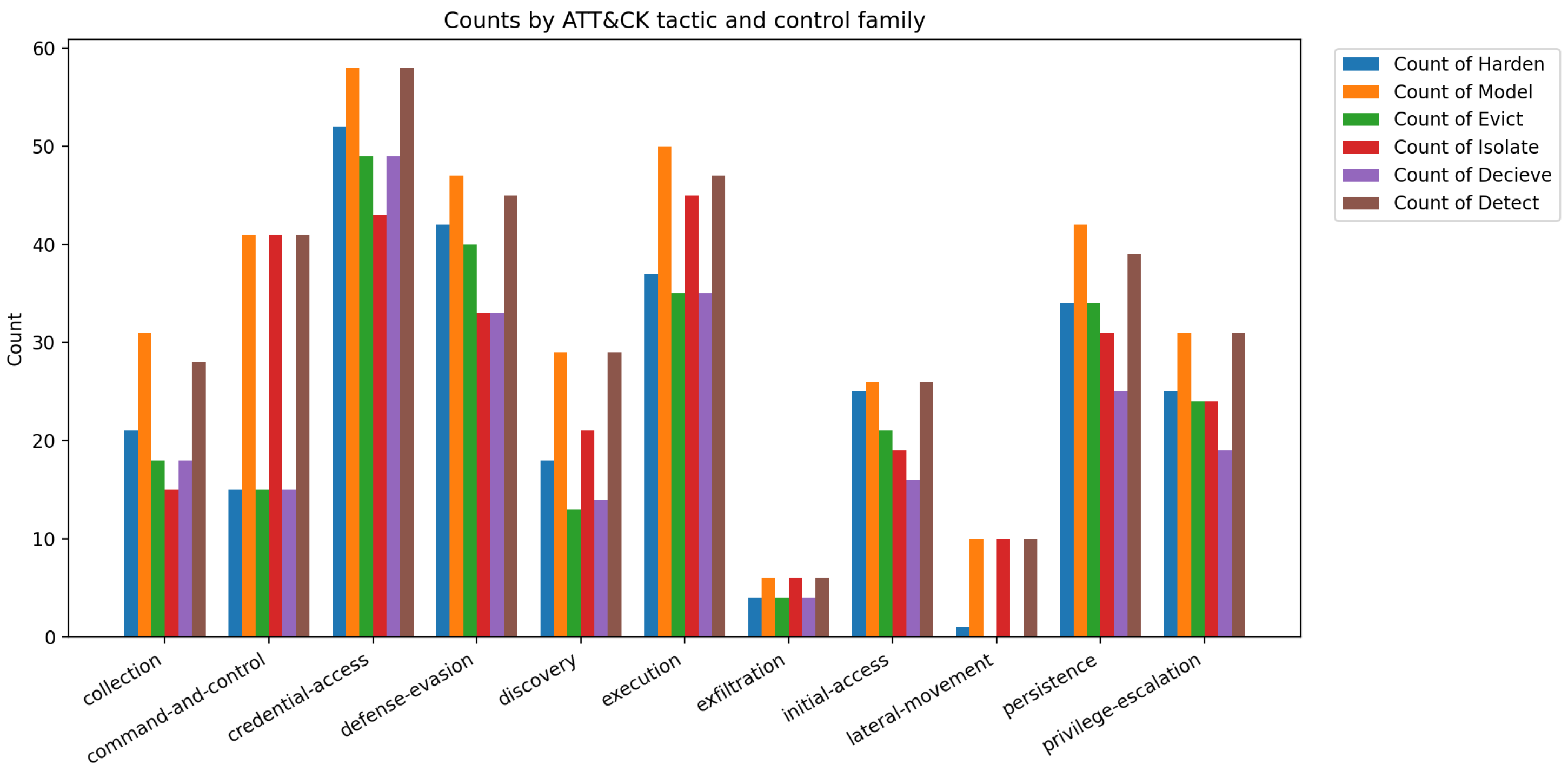

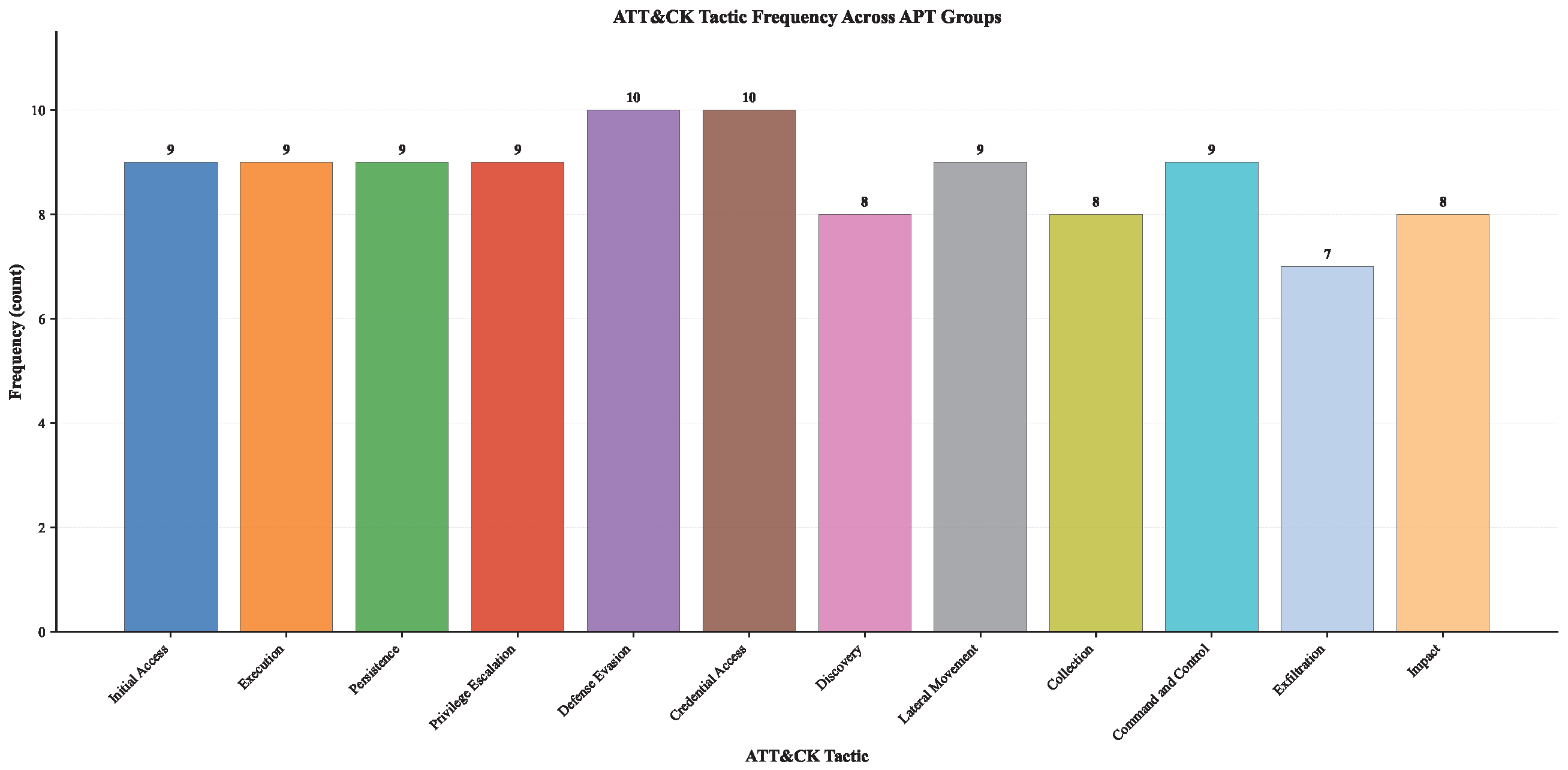

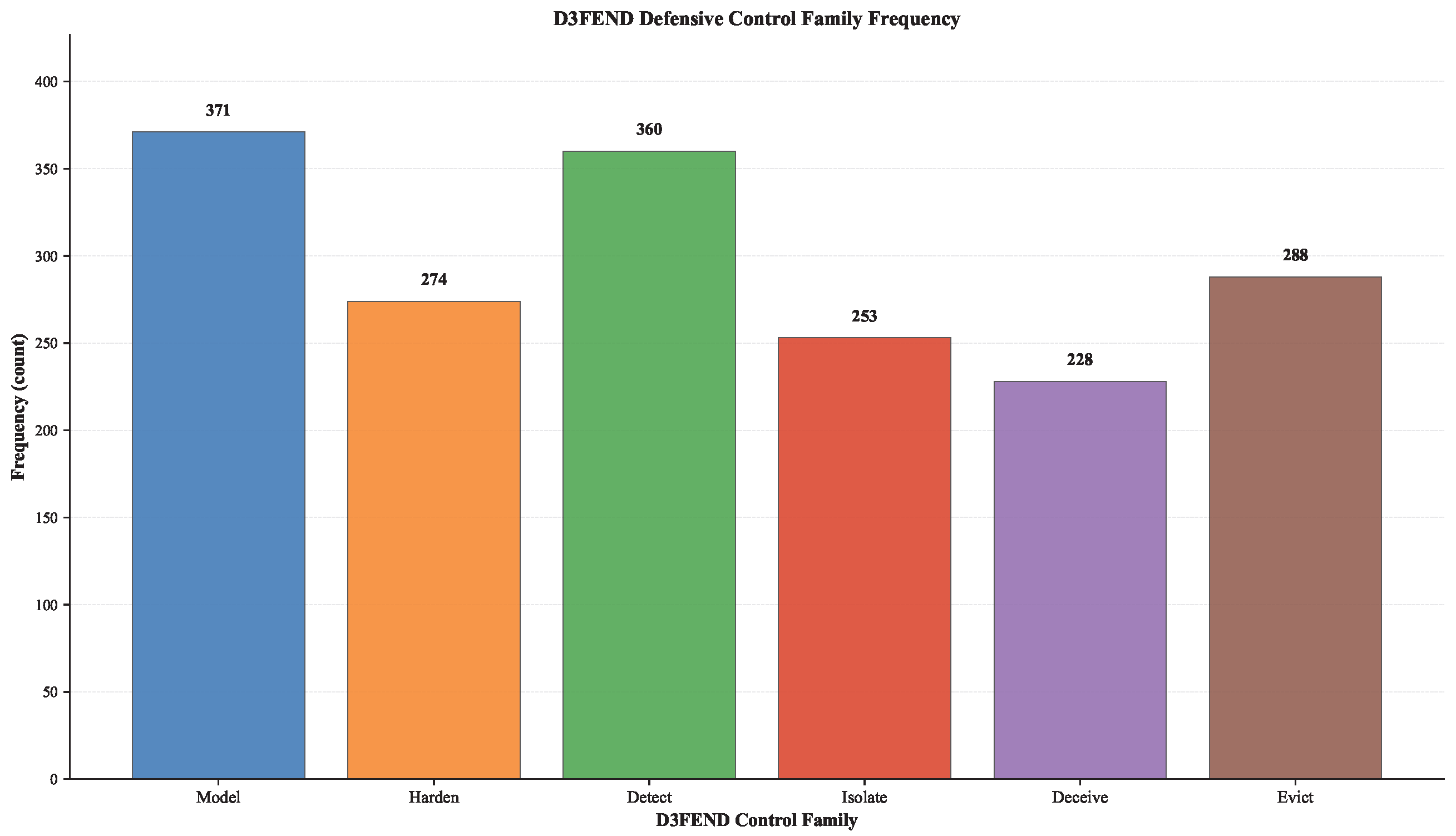

4.2. Analysis of Tactics and Techniques

4.2.1. Named Metrics

- Family-coverage count (APT–technique–family incidence): for each ATT&CK tactic and D3FEND family f, the number of unique (APT, technique) instances with at least one mapped D3FEND technique in family f, counted once per instance even if multiple D3FEND techniques y in the same family map to it.

- Tactic recurrence (de-duplicated):
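The de-duplication rule for the family-coverage count can be sketched with sets keyed by (tactic, family). The row layout, the placeholder D3FEND identifiers, and the tactic-recurrence definition used here (distinct APT groups per tactic) are assumptions for illustration, since the formal definitions did not survive extraction.

```python
from collections import defaultdict


def family_coverage_counts(rows):
    """rows: (apt, technique, tactic, d3fend_technique, d3fend_family) tuples.

    Counts, per (tactic, family), the unique (apt, technique) instances with at
    least one mapped D3FEND technique in that family; several D3FEND techniques
    of the same family mapping to one instance are counted once."""
    instances = defaultdict(set)
    for apt, technique, tactic, _d3f_technique, family in rows:
        instances[(tactic, family)].add((apt, technique))
    return {key: len(found) for key, found in instances.items()}


def tactic_recurrence(rows):
    """Assumed definition: number of distinct APT groups observed per tactic."""
    groups = defaultdict(set)
    for apt, _technique, tactic, _d3f_technique, _family in rows:
        groups[tactic].add(apt)
    return {tactic: len(apts) for tactic, apts in groups.items()}


# Hypothetical mapping rows (placeholder IDs, not real D3FEND identifiers).
rows = [
    ("GroupA", "T1059", "Execution", "d3f-x", "Detect"),
    ("GroupA", "T1059", "Execution", "d3f-y", "Detect"),  # same family: counted once
    ("GroupB", "T1059", "Execution", "d3f-x", "Detect"),
    ("GroupB", "T1059", "Execution", "d3f-z", "Harden"),
]
print(family_coverage_counts(rows))
print(tactic_recurrence(rows))
```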

4.2.2. How Figures Are Computed

4.2.3. Notes and Limitations

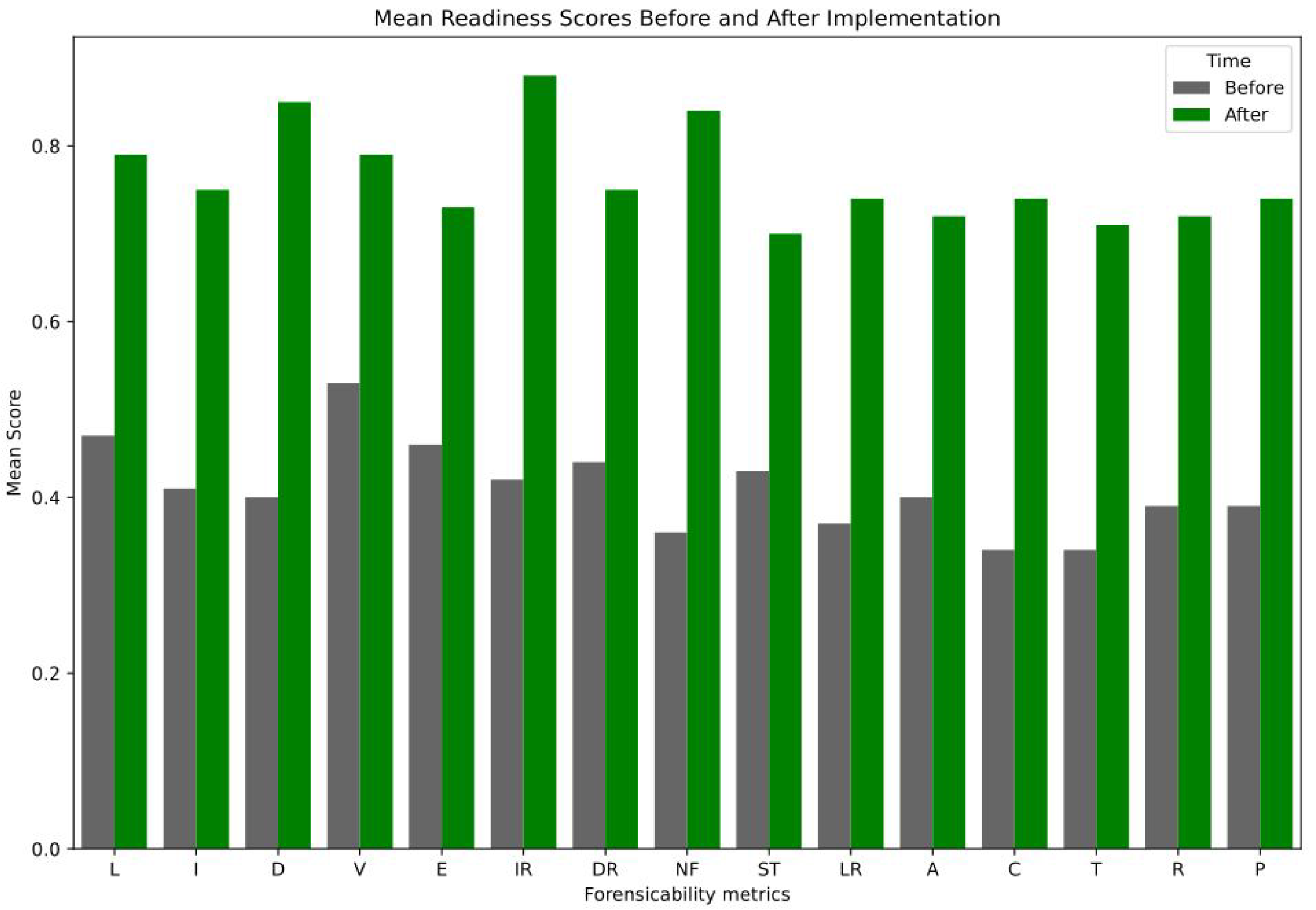

4.3. DFR Metrics Overview and Impact Quantification

4.3.1. Methods: Calibration-Based Synthetic Attacker Profiles

Notation Disambiguation

Limitations

4.4. Comparative Overview of Prior Game-Theoretic Models

Connection to Sun Tzu’s Strategic Wisdom

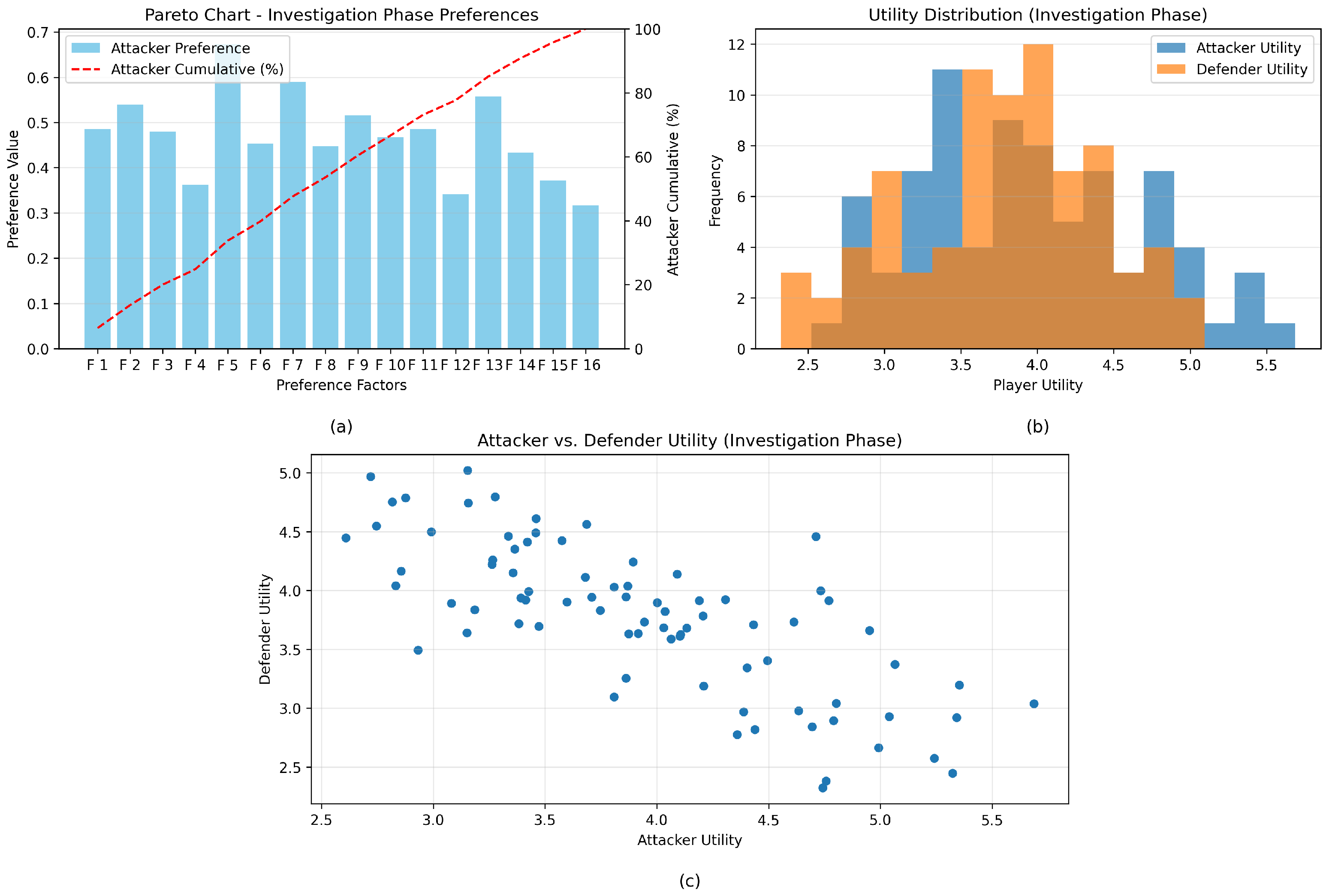

4.5. Attackers vs. Defenders: A Comparative Study

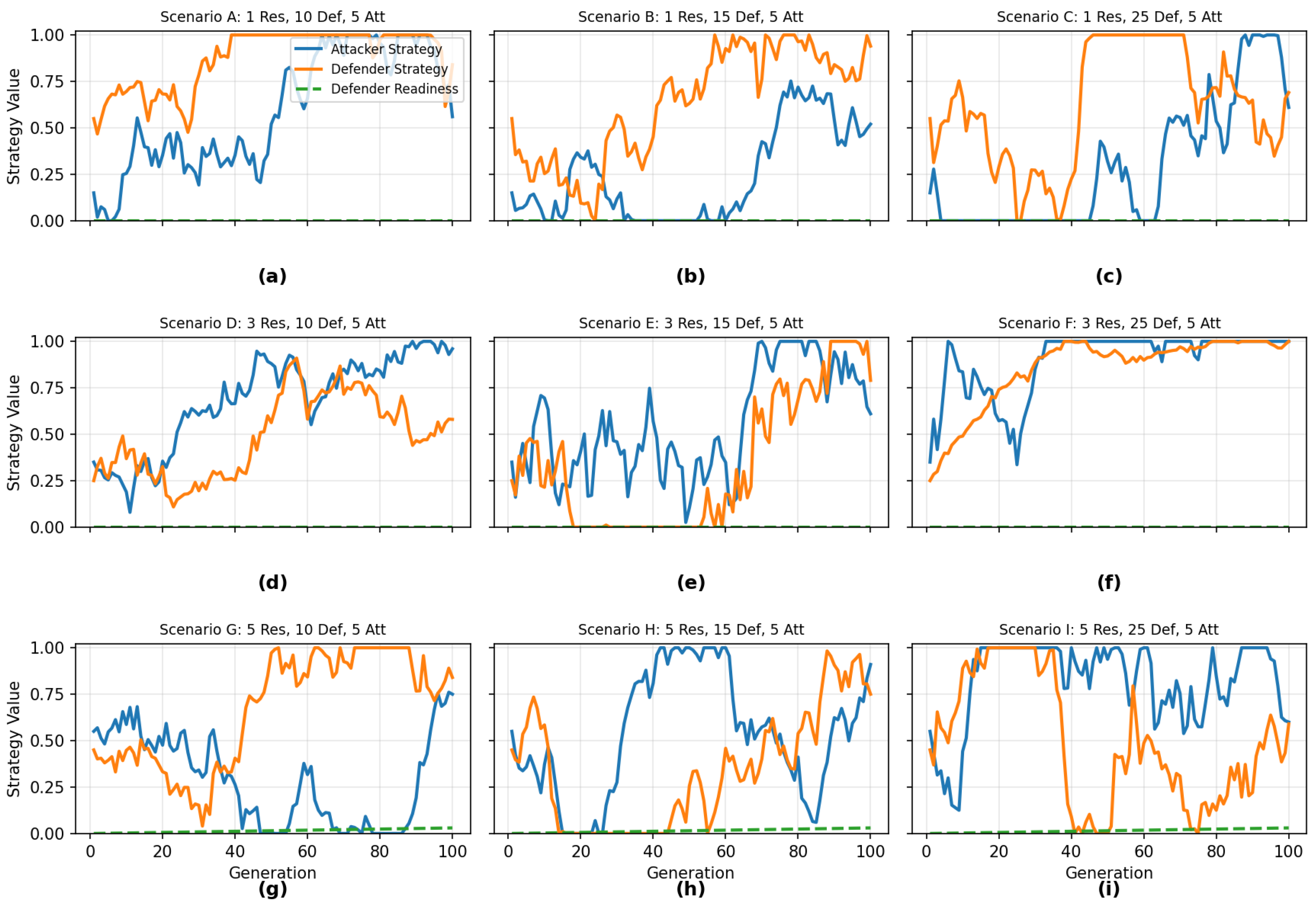

4.6. Game Dynamics and Strategy Analysis

4.6.1. Non-Zero-Sum

4.6.2. Zero-Sum

4.7. Synthetic, Calibration-Based Case Profiles

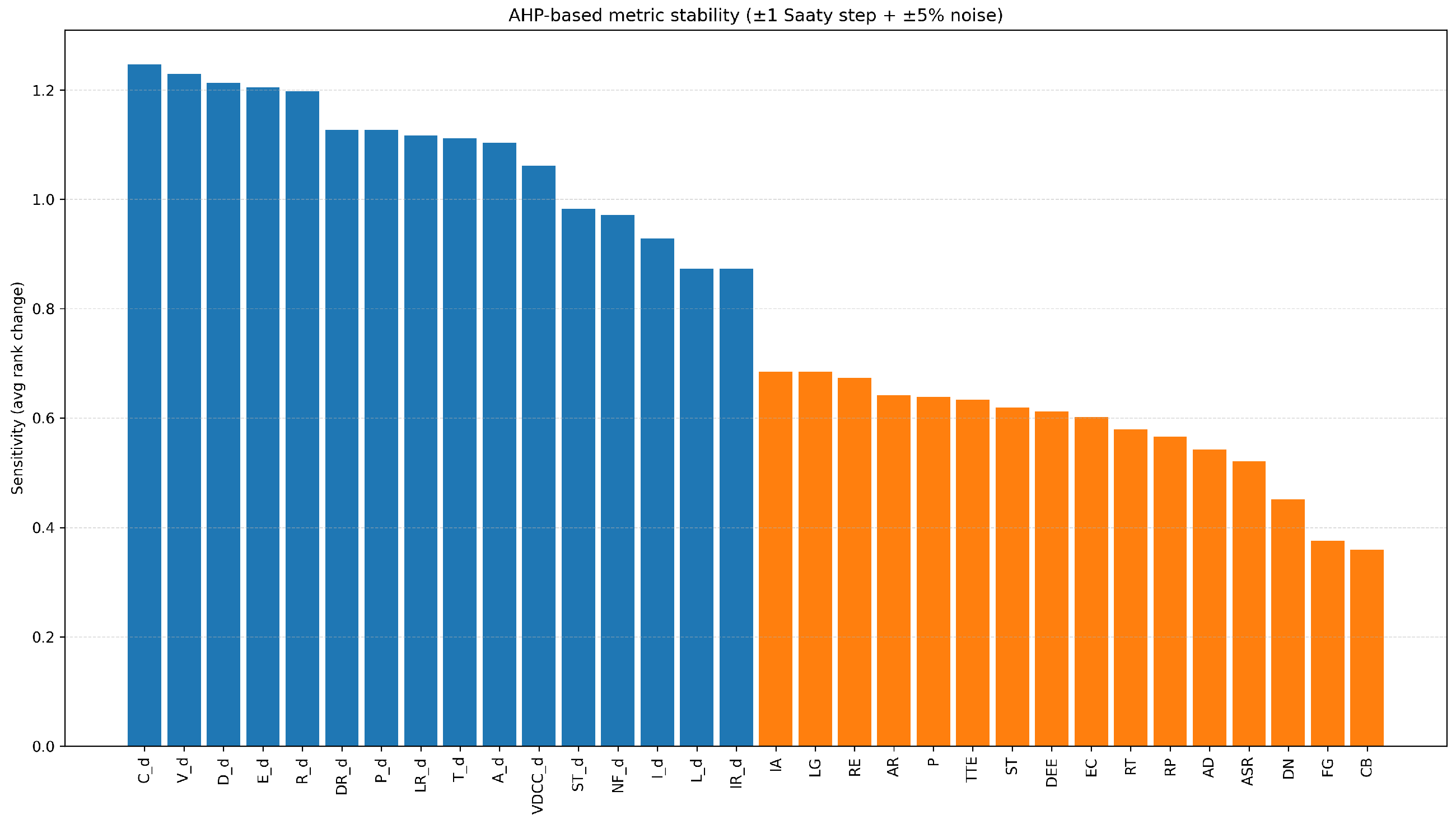

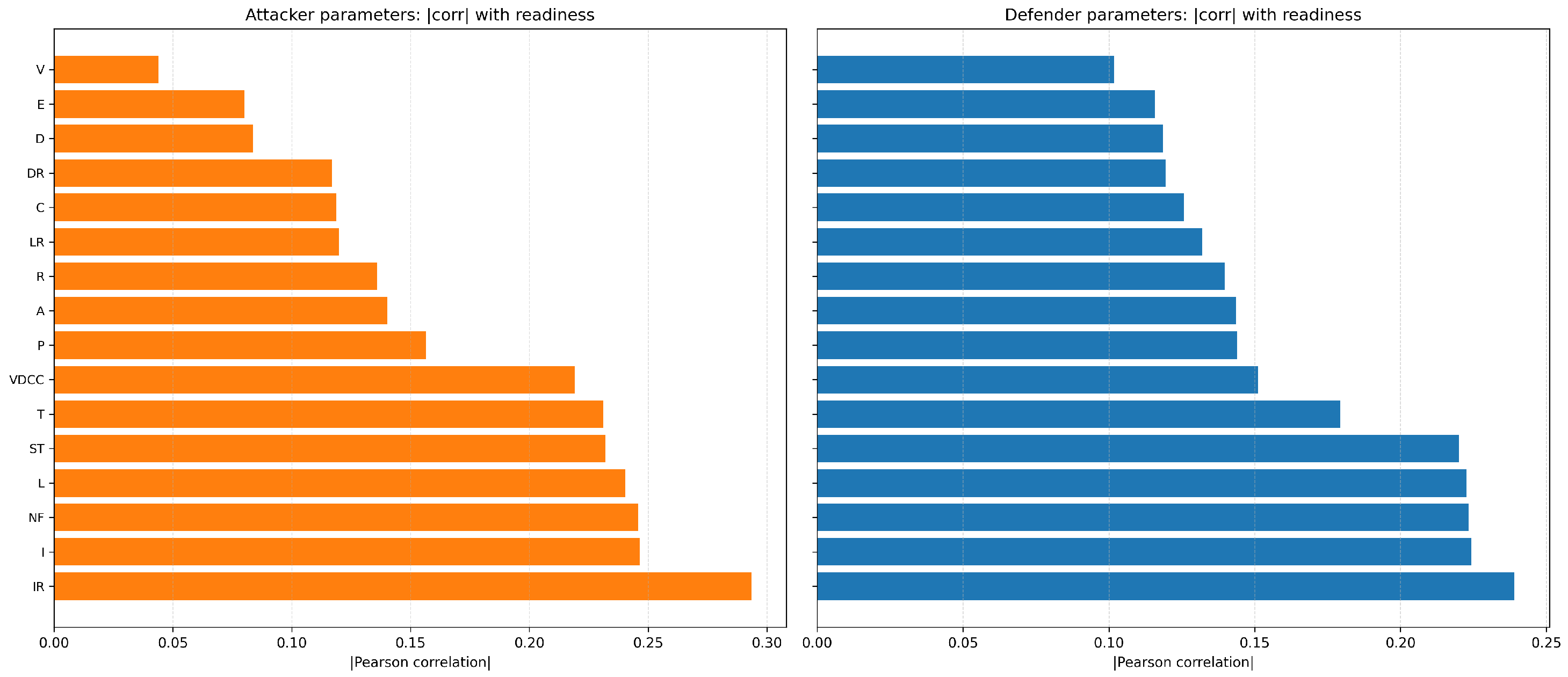

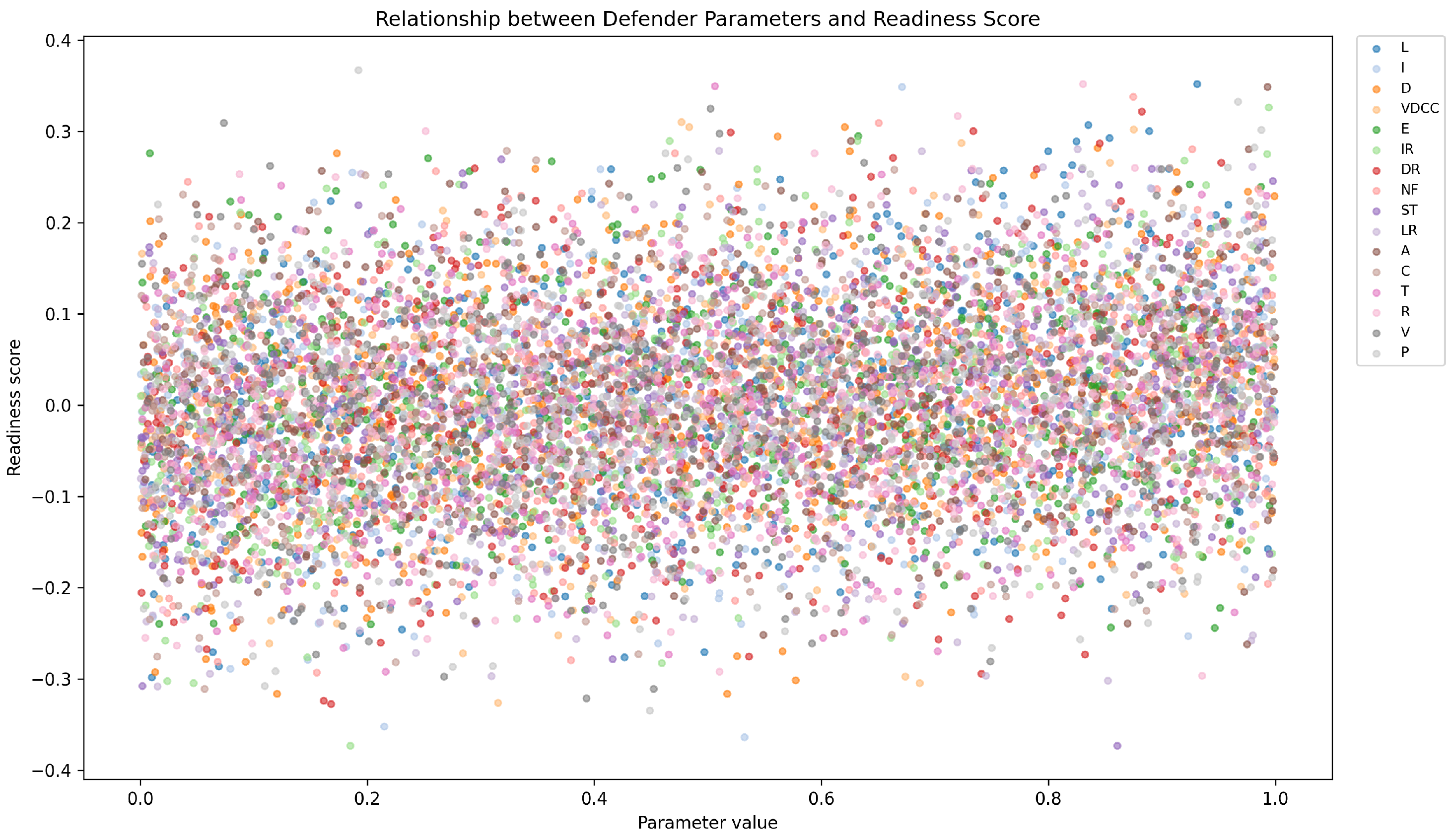

4.8. Sensitivity Analysis

4.8.1. Local Perturbation Sensitivity

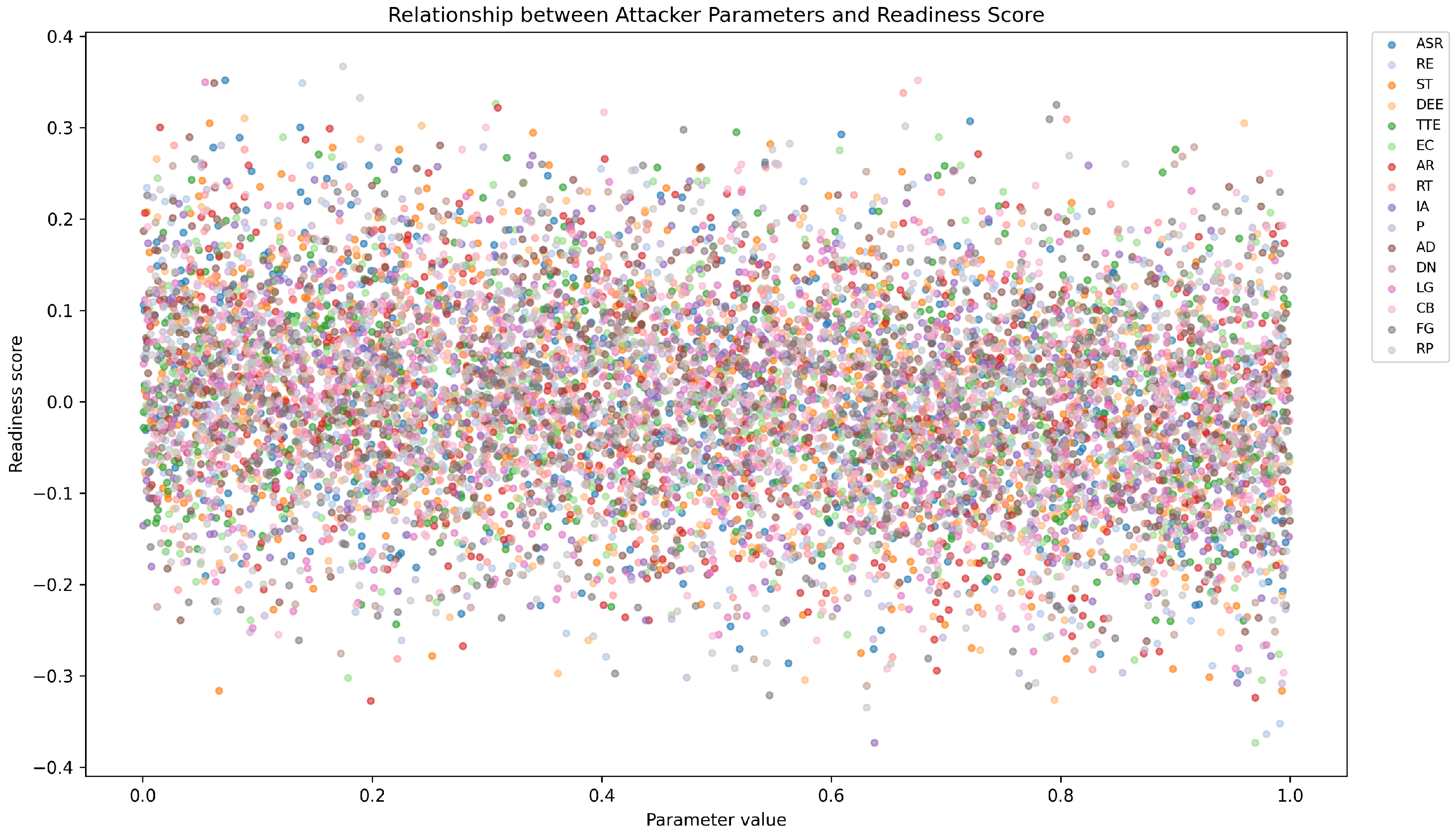

4.8.2. Monte Carlo Simulation

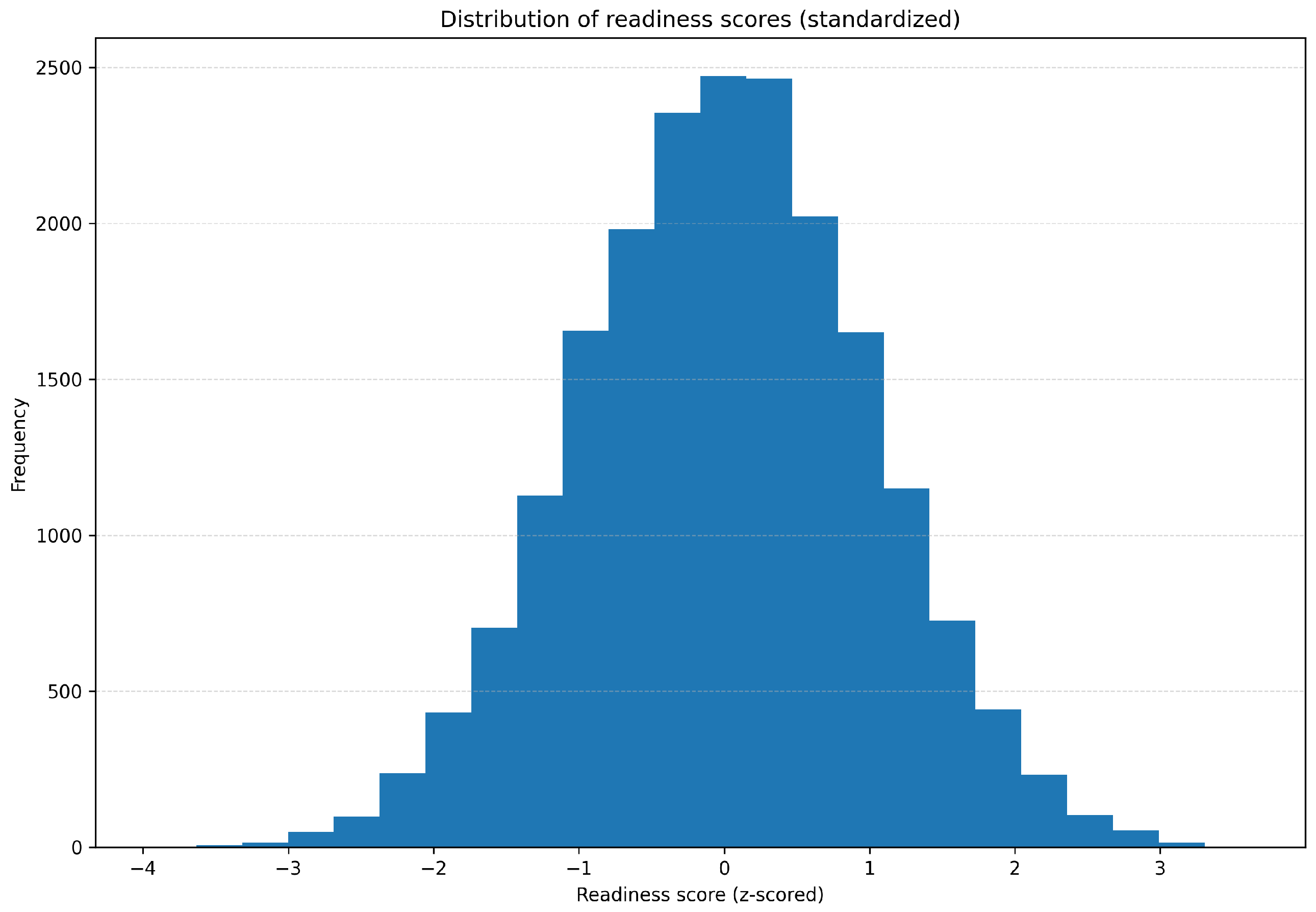

4.9. Distribution of Readiness Score

- Central Peak at 0.0: A high frequency around 0.0 indicates balanced readiness in most systems.

- Symmetrical Spread: Even tapering on both sides suggests system stability across environments.

- Low-Frequency Extremes: Outliers at the tails (−0.3 and +0.3) denote rare but critical deviations requiring targeted intervention.

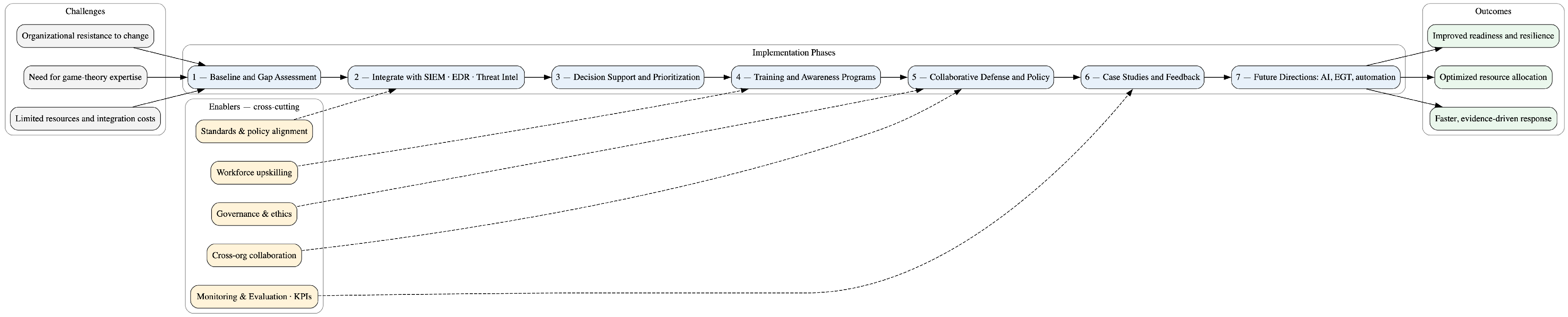

5. Discussion

- Implementation Challenges: Real-world adoption may encounter barriers such as limited resources, integration costs, and the need for game theory expertise. Organizational resistance to change and adaptation to new analytical frameworks are additional challenges.

- Integration with Existing Tools: The framework can be integrated with existing platforms such as threat intelligence systems, SIEM, and EDR tools. Such integrations can enhance decision making and shorten forensic investigation response times.

- Decision Support Systems: Game-theoretic models can augment decision support processes by helping security teams prioritize investments, allocate resources, and optimize incident response based on adaptive risk modeling.

- Training and Awareness Programs: Building internal capability is crucial. Training programs integrating game-theoretic principles into cybersecurity curricula can strengthen decision making under adversarial uncertainty.

- Collaborative Defense Strategies: The framework supports collective defense through shared intelligence and coordinated responses. Collaborative action can improve deterrence and resilience against complex, multi-organizational threats.

- Policy Implications: Incorporating game theory into cybersecurity has policy ramifications, including regulatory alignment, responsible behavior standards, and ethical considerations regarding autonomous or strategic decision models.

- Case Studies and Use Cases: Documented implementations of game-theoretic approaches demonstrate measurable improvements in risk response and forensic readiness. Future research can expand these to varied industry sectors.

- Future Directions: Continued innovation in game model development, integration with AI-driven threat analysis, and tackling emerging cyber challenges remain promising directions.

5.1. Forensicability and Non-Forensicability

5.2. Evolutionary Game Theory Analysis

- Evolutionary Dynamics: Attackers and defenders co-adapt in continuous feedback cycles; the success of one influences the next strategic shift in the other.

- Replication and Mutation: Successful tactics replicate, while mutations introduce strategic diversity critical for both exploration and adaptation.

- Equilibrium and Stability: Evolutionarily Stable Strategies (ESSs) represent steady states that, once established, cannot be invaded by a small population playing an alternative strategy, so neither party benefits from deviating.

- Co-evolutionary Context: The model exposes the perpetual nature of cyber escalation, showing that proactive defense and continuous readiness optimization are essential to remain resilient.
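The replication-and-mutation cycle described above can be illustrated with two-population replicator dynamics, where each strategy's share grows in proportion to how much its payoff exceeds its population's average. This is a generic textbook formulation with an optional uniform-mutation term, not necessarily the evolutionary model used in this study; the step size, mutation rate, function name, and toy payoffs are hypothetical.

```python
import numpy as np


def replicator_step(x, y, A, D, dt=0.1, mutation=0.0):
    """One Euler step of two-population replicator dynamics.

    x: attacker population shares over the rows of A; y: defender shares over
    the columns. A fraction `mutation` of each population is mixed toward the
    uniform distribution (a simple replicator-mutator variant)."""
    fit_x = A @ y                # attacker fitness of each pure strategy
    fit_y = x @ D                # defender fitness of each pure strategy
    x_new = x + dt * x * (fit_x - x @ fit_x)
    y_new = y + dt * y * (fit_y - fit_y @ y)
    x_new = (1 - mutation) * x_new + mutation / len(x)
    y_new = (1 - mutation) * y_new + mutation / len(y)
    # Guard against floating-point drift off the probability simplex.
    x_new = np.clip(x_new, 0.0, None)
    y_new = np.clip(y_new, 0.0, None)
    return x_new / x_new.sum(), y_new / y_new.sum()


# Toy game (illustrative): attacker row 0 and defender column 0 strictly dominate.
A_toy = np.array([[2.0, 2.0], [1.0, 1.0]])
D_toy = np.array([[1.0, 0.0], [1.0, 0.0]])
x = np.array([0.5, 0.5])
y = np.array([0.5, 0.5])
for _ in range(500):
    x, y = replicator_step(x, y, A_toy, D_toy)
print(np.round(x, 3), np.round(y, 3))  # both populations concentrate on strategy 0
```

A positive mutation rate keeps every strategy at a small nonzero share, which mirrors the "strategic diversity" point above: dominated tactics never vanish entirely and can re-emerge if payoffs shift.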

5.3. Attack Impact on Readiness and Investigation Phases

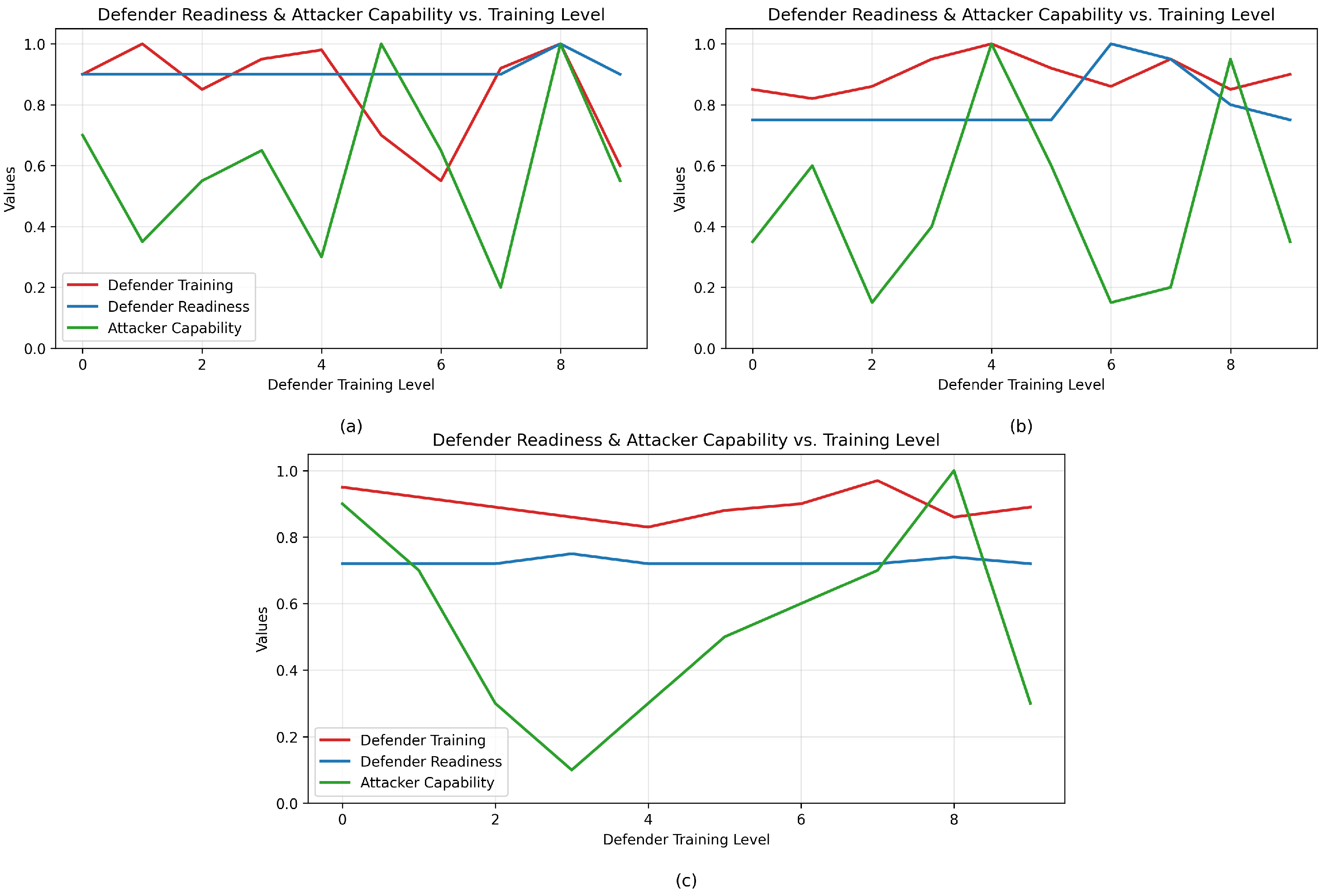

5.4. Readiness and Training Level of the Defender

5.5. Attack Success and Evidence Collection Rates

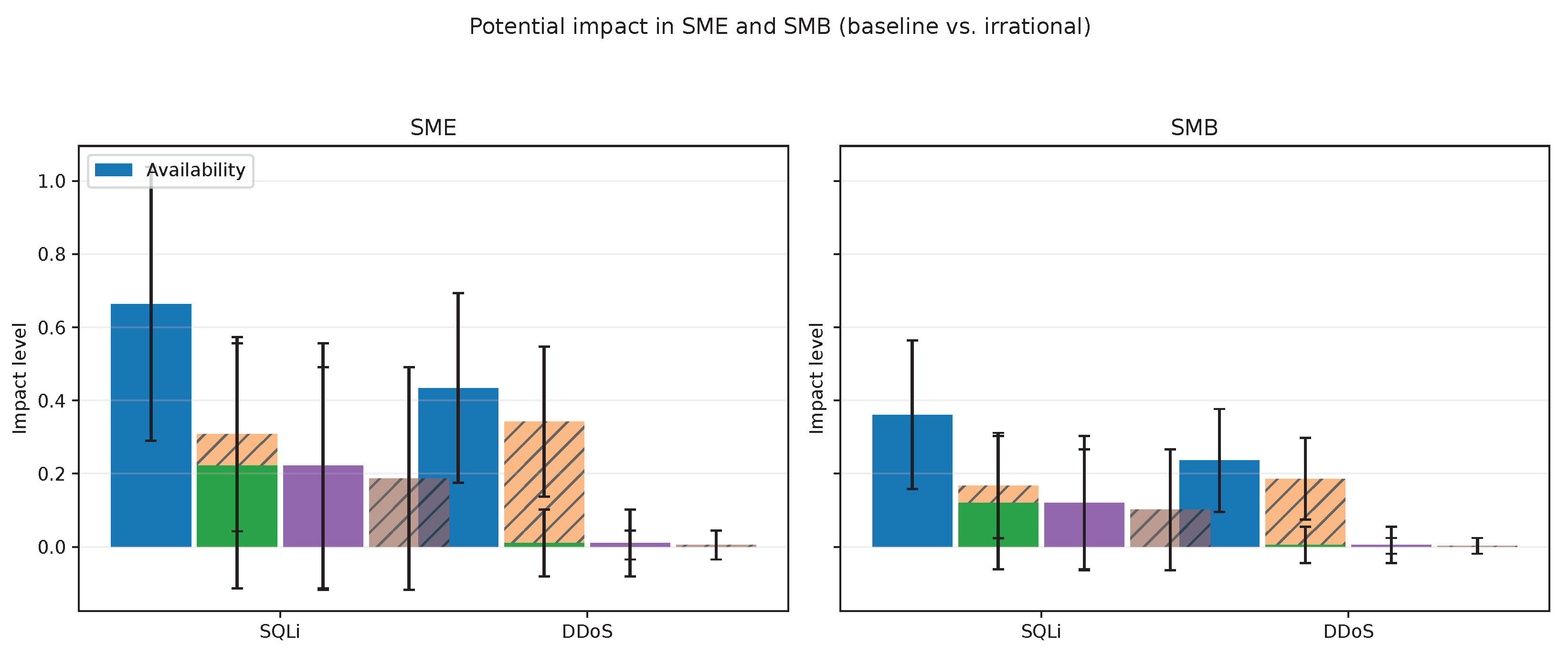

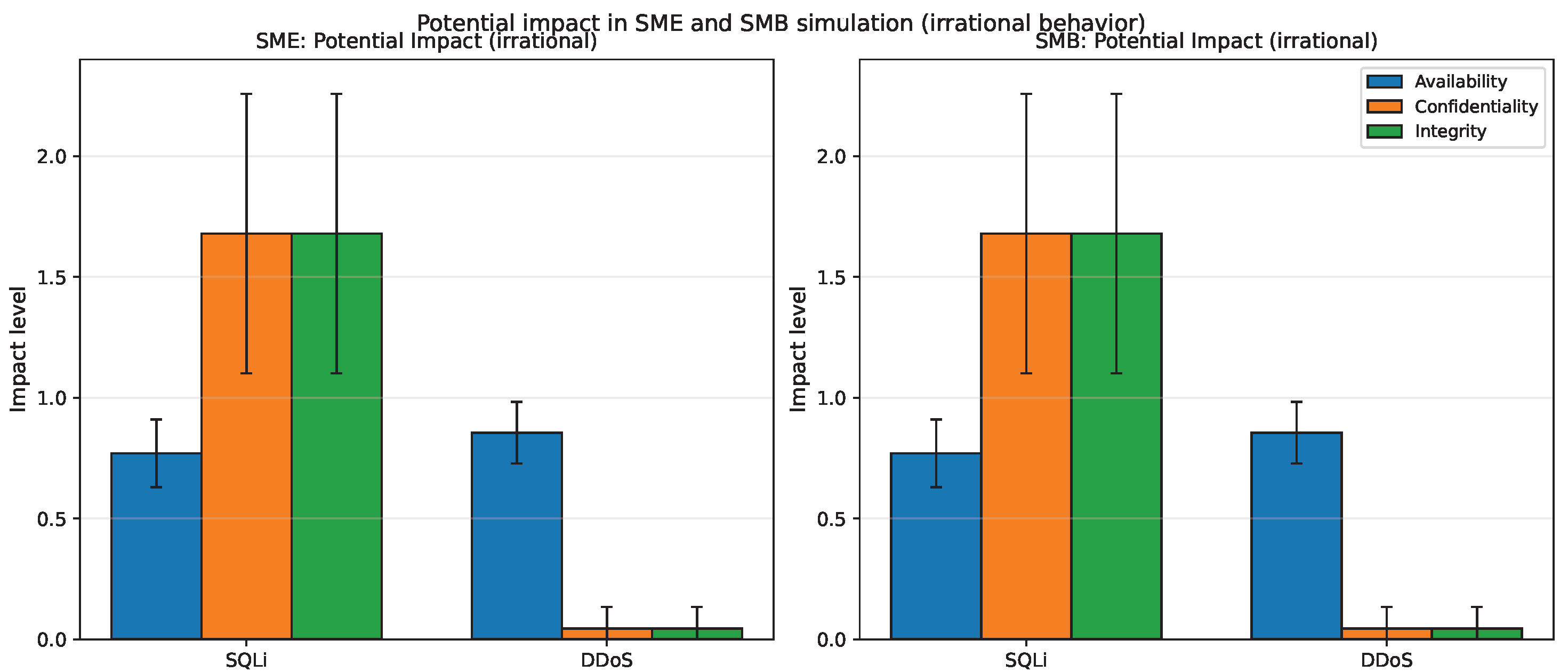

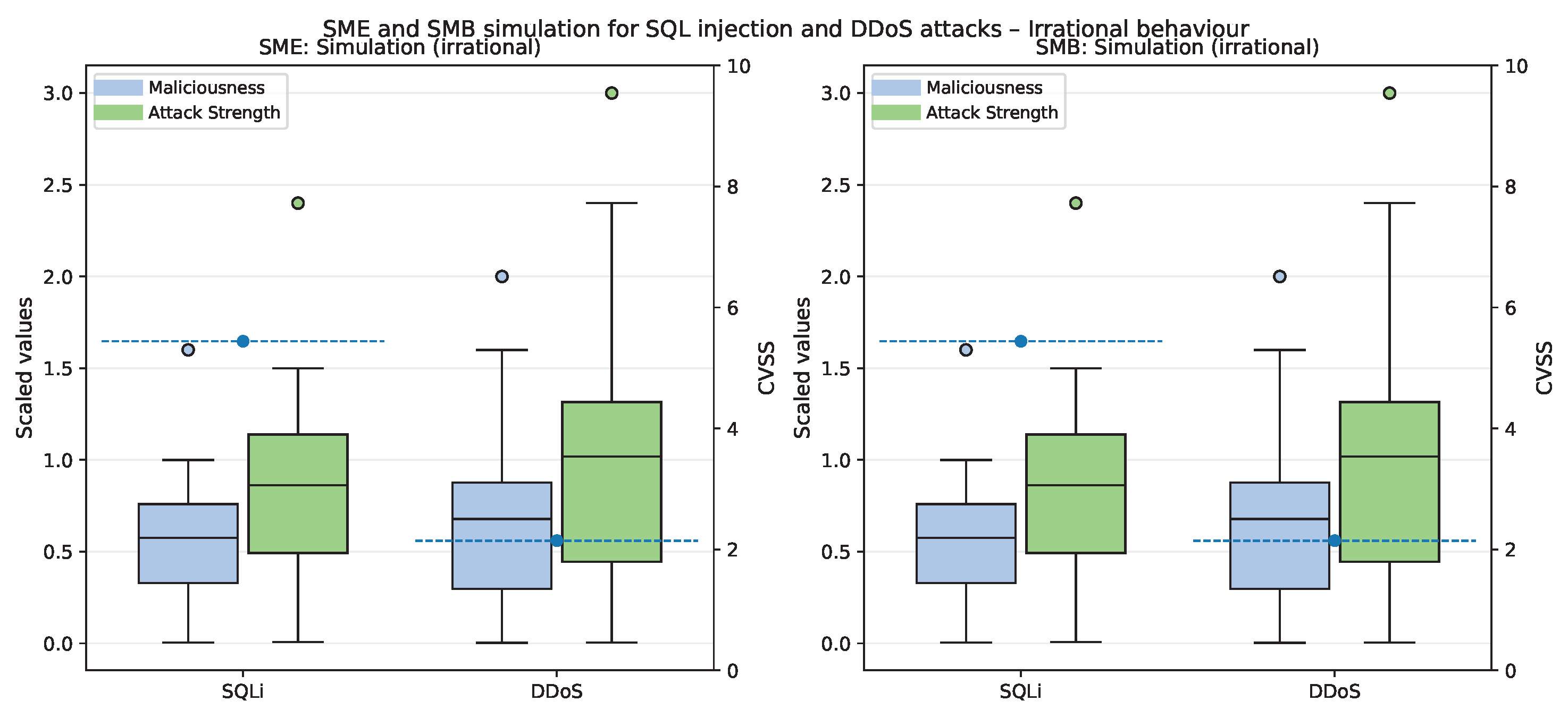

5.6. Comparative Analysis in SMB and SME Organizations

Irrational Attacker Behavior Analysis

5.7. Limitations and Future Work

- Model Complexity: Real-world human elements and deep organizational dynamics may extend beyond current model parameters.

- Data Availability: Reliance on open-source ATT&CK and D3FEND datasets limits coverage of emerging threat behaviors.

- Computational Needs: Evolutionary modeling and large-scale simulations require high-performance computing resources.

- Expert Bias: AHP-based weighting depends on expert judgment, introducing potential subjective bias despite structured controls.

- Real-time Adaptive Models: Integrating continuous learning to instantly adapt to threat changes.

- AI/ML Integration: Employing predictive modeling for attacker intent recognition and defense automation.

- Cross-Organizational Collaboration: Expanding to cooperative game structures for shared threat response.

- Empirical Validation: Conducting large-scale quantitative studies to reinforce and generalize model applicability.

6. Conclusions

6.1. Limitations

6.2. Future Research Directions

- Extended Environmental Applications: Adapting the framework to cloud-native, IoT, and blockchain ecosystems where architectural differences create distinct forensic challenges.

- Dynamic Threat Intelligence Integration: Employing real-time data feeds and AI-based analytics to enable adaptive recalibration of utilities and strategy distributions.

- Standardized Readiness Benchmarks: Developing comparative industry baselines for forensic maturity that support cross-organizational evaluation and improvement.

- Automated Response Coupling: Integrating automated incident response and orchestration tools to bridge the gap between detection and remediation.

- Enhanced Evolutionary Models: Expanding evolutionary game formulations to capture longer-term strategic co-adaptations between attackers and defenders.

- Large-Scale Empirical Validation: Conducting multi-sector, empirical measurement campaigns to statistically validate and refine equilibrium predictions.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AHP | Analytic Hierarchy Process |

| APT | Advanced Persistent Threat |

| ATT&CK | MITRE Adversarial Tactics, Techniques, and Common Knowledge |

| CASE | Cyber-investigation Analysis Standard Expression |

| CIA | Confidentiality, Integrity, Availability (triad) |

| CSIRT | Computer Security Incident Response Team |

| CVSS | Common Vulnerability Scoring System |

| D3FEND | MITRE Defensive Countermeasures Knowledge Graph |

| DFIR | Digital Forensics and Incident Response |

| DFR | Digital Forensic Readiness |

| DDoS | Distributed Denial of Service |

| EDR | Endpoint Detection and Response |

| EGT | Evolutionary Game Theory |

| ESS | Evolutionarily Stable Strategy |

| IDPS | Intrusion Detection and Prevention System |

| JCP | Journal of Cybersecurity and Privacy |

| MCDA | Multi-Criteria Decision Analysis |

| MNE | Mixed Nash Equilibrium |

| NDR | Network Detection and Response |

| NE | Nash Equilibrium |

| PNE | Pure Nash Equilibrium |

| SIEM | Security Information and Event Management |

| SMB | Small and Medium Business |

| SME | Small and Medium Enterprise |

| SQLi | Structured Query Language injection |

| TTP | Tactics, Techniques, and Procedures |

| UCO | Unified Cyber Ontology |

| XDR | Extended Detection and Response |

Appendix A. Simulation Model and Settings

Appendix A.1. Readiness Components

Appendix A.2. Outcome Probabilities

Appendix A.3. Sampling and Maturity Regimes

- Junior+Mid+Senior: , ;

- Mid+Senior: , ;

- Senior: , .

Appendix A.4. Experiment Size and Uncertainty

Appendix B. Additional Materials

Appendix B.1. Notation Table

- vs. A: is a scalar (single entry in the matrix), while A is the entire matrix (bold uppercase). Similarly, is a scalar, D is the matrix.

- x, y vs. x, y: Lowercase x, y denote individual elements or indices; bold x, y denote mixed-strategy vectors (probability distributions over pure strategies).

- Strategy indices: Pure strategies and are elements (not vectors); mixed strategies x and y are probability vectors over these sets.

| Symbol | Type | Description |

|---|---|---|

| Game Structure | ||

| Set | Attacker strategy set: where ATT&CK tactics (see Table 3) | |

| Set | Defender strategy set: where D3FEND control families (see Table 3) | |

| s | Element | Pure attacker strategy: (e.g., , ) |

| t | Element | Pure defender strategy: (e.g., , ) |

| A | Matrix | Attacker payoff matrix: , where entry is the attacker’s utility for strategy pair |

| D | Matrix | Defender payoff matrix: , where entry is the defender’s utility for strategy pair |

| Scalar | Attacker scalar payoff: (unitless utility, higher is better for attacker) | |

| Scalar | Defender scalar payoff: (unitless utility, higher is better for defender) | |

| Pair | Pure Nash equilibrium: strategy profile where and are mutual best responses | |

| Mixed Strategies and Equilibria | ||

| x | Vector | Attacker mixed strategy: where (probability distribution over ) |

| y | Vector | Defender mixed strategy: where (probability distribution over ) |

| Vector | Attacker equilibrium mixed strategy: (optimal probability vector) | |

| Vector | Defender equilibrium mixed strategy: (optimal probability vector) | |

| Scalar | i-th component of x: (probability that attacker plays strategy ) | |

| Scalar | j-th component of y: (probability that defender plays strategy ) | |

| Set | Probability simplex: | |

| Set | Support of : (indices of strategies played with positive probability) | |

| Set | Support of : | |

| Scalar | Attacker expected payoff: (expected utility when attacker plays and defender plays ) | |

| Scalar | Defender expected payoff: (expected utility when defender plays and attacker plays ) | |

| Scalar | Attacker equilibrium value: for all (constant expected payoff at equilibrium) | |

| Scalar | Defender equilibrium value: for all (constant expected payoff at equilibrium) | |

| DFR Metrics and Utilities | ||

| Scalar | Normalized DFR metric i: for strategy pair (see Section 3.3.3) | |

| Scalar | AHP weight for attacker metric i: , (see Table 8) | |

| Scalar | AHP weight for defender metric j: , (see Table 8) | |

| Scalar | Normalized attacker utility: | |

| Scalar | Normalized defender utility: | |

| Scalar | Defender metric k value: (see Section 4.3.1) | |

| Scalar | Attacker metric ℓ value: (see Section 4.3.1) | |

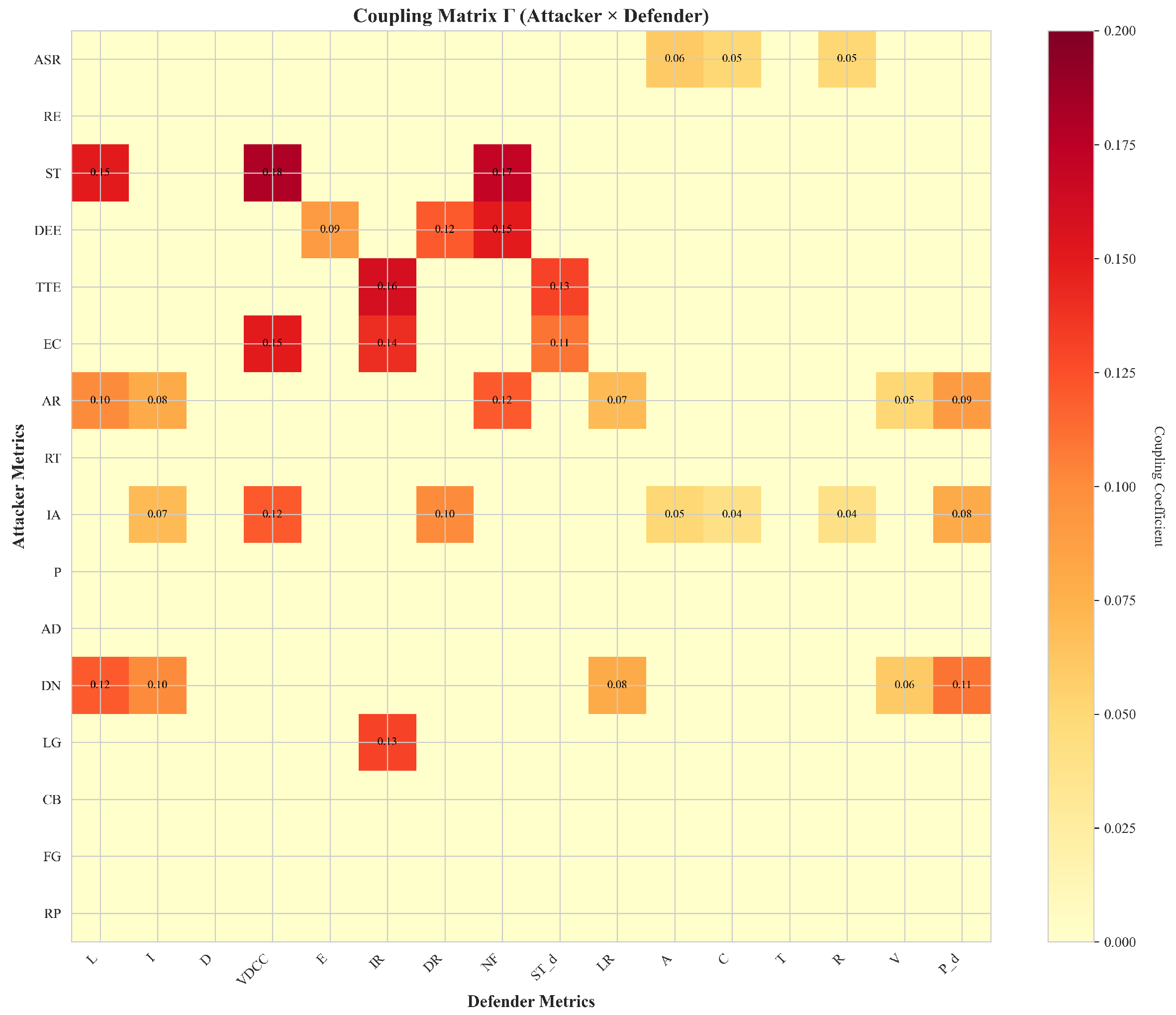

| Matrix | Coupling matrix: linking defender capabilities to attacker metric suppression (see Section 4.3.1) | |

Appendix B.2. Repository and Data Access

- Main repository (reproducibility package): https://github.com/Mehrn0ush/gtDFR (accessed on 12 November 2025). This repository contains all Python 3.10 scripts, configuration files (config/), processed datasets (data/), analysis scripts for equilibrium computation and profile generation (scripts/), and supplementary artifacts (Supplementary Materials, including AHP weight tables, expert consistency reports, equilibrium exports, and manifest files). The repository is versioned, and a tagged release matching this manuscript is provided.

- Historical mapping snapshots (archival only): https://github.com/Mehrn0ush/gtDFR/tree/main/aptcsv/archive (accessed on 12 November 2025). These archived files are earlier CSV/JSON exports used during initial ATT&CK/D3FEND data exploration and MITRE STIX parsing. They are not required to reproduce the main results but are provided for transparency.

Framework Versions and Snapshots

Appendix B.3. ATT&CK/D3FEND Snapshot Comparison Report (Robustness Check)

Appendix B.3.1. Purpose

Appendix B.3.2. Pipeline

Versions Compared

| Snapshot | ATT&CK Version | Bundle Modified (UTC) |

|---|---|---|

| Baseline (closest to analysis window) | v13.1 | 2023-05-09T14:00:00.188Z |

| Intermediate | v14.1 | 2023-11-14T14:00:00.188Z |

| Latest | v18.0 | 2025-10-28T14:00:00.188Z |

Appendix B.3.3. Executive Summary

Appendix B.3.4. Per-APT Deltas (v13.1 → v18.0)

| APT Group | v13.1 | v14.1 | v18.0 | Added | Removed |

|---|---|---|---|---|---|

| APT33 | 32 | 32 | 31 | 1 | 2 |

| APT39 | 52 | 52 | 53 | 2 | 1 |

| AjaxSecurityTeam | 6 | 6 | 6 | 0 | 0 |

| Cleaver | 5 | 5 | 5 | 0 | 0 |

| CopyKittens | 8 | 8 | 8 | 0 | 0 |

| LeafMiner | 17 | 17 | 17 | 0 | 0 |

| MosesStaff | 12 | 12 | 12 | 1 | 1 |

| MuddyWater | 59 | 59 | 58 | 1 | 2 |

| OilRig | 56 | 56 | 76 | 21 | 1 |

| SilentLibrarian | 13 | 13 | 13 | 0 | 0 |
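Per-APT deltas of this kind reduce to set differences between snapshots. Every row of the table above satisfies v18.0 = v13.1 + Added − Removed (e.g., OilRig: 56 + 21 − 1 = 76). The sketch below reproduces that bookkeeping on hypothetical technique sets; the function and data are illustrative, not the repository's comparison script.

```python
def snapshot_deltas(old, new):
    """old/new map APT group -> set of ATT&CK technique IDs in two snapshots."""
    report = {}
    for apt in sorted(set(old) | set(new)):
        before, after = old.get(apt, set()), new.get(apt, set())
        report[apt] = {
            "before": len(before),
            "after": len(after),
            "added": len(after - before),     # present only in the newer snapshot
            "removed": len(before - after),   # present only in the older snapshot
        }
    return report


# Hypothetical technique sets for one group in two snapshots.
v13 = {"ToyGroup": {"T1059", "T1105", "T1027"}}
v18 = {"ToyGroup": {"T1059", "T1105", "T1027.013", "T1566.001"}}
print(snapshot_deltas(v13, v18))
```

Because sub-techniques are kept distinct from their parents, a reclassification such as T1027 → T1027.013 appears as one addition plus one removal.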

Appendix B.3.5. Provenance (Console Excerpt)

Appendix B.3.6. Note on D3FEND Coverage in This Run

Appendix B.3.7. Reproducibility

Appendix B.4. STIX Data Extraction Methodology

- STIX object types: intrusion-set (APT groups), relationship (with relationship_type="uses"), and attack-pattern (techniques).

- Scope: Enterprise ATT&CK only (Mobile, ICS, and PRE-ATT&CK were excluded; PRE-ATT&CK is historical and no longer maintained).

- Relationship path: intrusion-set.id → attack-pattern.id, using only direct relationships (not transitive via malware/tools); we extracted external_references with source_name="mitre-attack" to obtain technique IDs.

- Filtering: Excluded all objects and relationships with revoked==true or x_mitre_deprecated==true.

- Normalization: ATT&CK technique IDs normalized to uppercase (e.g., t1110.003→T1110.003).

- Sub-technique handling: Counted exact sub-techniques (e.g., T1027.013) as distinct from parent techniques (e.g., T1027); no rollup performed.
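The extraction rules listed above can be sketched over a parsed STIX 2.x bundle represented as plain Python dictionaries. The property names (type, id, relationship_type, source_ref, target_ref, revoked, x_mitre_deprecated, and external_references with source_name and external_id) follow the ATT&CK STIX data model; the function name and the toy bundle are hypothetical, and the Enterprise-only scope is assumed to be enforced by loading only the Enterprise bundle.

```python
def extract_apt_techniques(bundle):
    """Map each intrusion-set name to the ATT&CK technique IDs it directly
    `uses`, skipping revoked or deprecated objects and relationships."""
    objects = bundle["objects"]

    def active(obj):
        return not obj.get("revoked", False) and not obj.get("x_mitre_deprecated", False)

    # attack-pattern STIX id -> ATT&CK ID (uppercased; sub-techniques kept as-is).
    techniques = {}
    for obj in objects:
        if obj.get("type") == "attack-pattern" and active(obj):
            for ref in obj.get("external_references", []):
                if ref.get("source_name") == "mitre-attack" and "external_id" in ref:
                    techniques[obj["id"]] = ref["external_id"].upper()

    groups = {
        obj["id"]: obj["name"]
        for obj in objects
        if obj.get("type") == "intrusion-set" and active(obj)
    }
    result = {name: set() for name in groups.values()}
    for obj in objects:
        if (
            obj.get("type") == "relationship"
            and obj.get("relationship_type") == "uses"
            and active(obj)
            and obj.get("source_ref") in groups
            and obj.get("target_ref") in techniques  # direct group -> technique only
        ):
            result[groups[obj["source_ref"]]].add(techniques[obj["target_ref"]])
    return result


# Hypothetical toy bundle: one group, one valid technique, one revoked technique,
# and one malware object that must not be followed transitively.
toy_bundle = {"objects": [
    {"type": "intrusion-set", "id": "intrusion-set--g1", "name": "ToyGroup"},
    {"type": "attack-pattern", "id": "attack-pattern--p1",
     "external_references": [{"source_name": "mitre-attack", "external_id": "t1110.003"}]},
    {"type": "attack-pattern", "id": "attack-pattern--p2", "revoked": True,
     "external_references": [{"source_name": "mitre-attack", "external_id": "T1027"}]},
    {"type": "malware", "id": "malware--m1", "name": "ToyMalware"},
    {"type": "relationship", "relationship_type": "uses",
     "source_ref": "intrusion-set--g1", "target_ref": "attack-pattern--p1"},
    {"type": "relationship", "relationship_type": "uses",
     "source_ref": "intrusion-set--g1", "target_ref": "attack-pattern--p2"},
    {"type": "relationship", "relationship_type": "uses",
     "source_ref": "intrusion-set--g1", "target_ref": "malware--m1"},
]}
print(extract_apt_techniques(toy_bundle))  # {'ToyGroup': {'T1110.003'}}
```

In practice the bundle would be read with `json.load` from a local copy of the Enterprise ATT&CK STIX file for the chosen snapshot; the file path is not specified here.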

Appendix B.5. Synthetic Profile Generation for Case Studies

Appendix B.5.1. Configuration Files

- Metric definition configuration: Canonical definitions of all 32 DFR metrics (16 defender + 16 attacker), including metric IDs, names, descriptions, and framework targeting flags. This configuration serves as the single source of truth for metric ordering and naming across all scripts, tables, and visualizations (available in the repository, Appendix B).

- Coupling matrix configuration: Sparse coupling matrix (16 × 16) encoding ATT&CK↔D3FEND linkages. Nonzero entries (coefficients typically 0.05–0.20) specify which defender metrics suppress which attacker utilities, operationalizing the game-theoretic framework (available in the repository, Appendix B).

Appendix B.5.2. Generator Script

- Defender before profiles: Beta-distributed samples with mild correlation structure (L↔D↔LR, IR↔↔NF, I↔).

- Defender after profiles: For metrics with targeted_by_framework: true, add uplift in [0.10, 0.30]; for others, allow small drift [−0.02, +0.05].

- Attacker before profiles: Weakly correlated Beta priors with semantic blocks (ASR–IA, ST–AR–DN, RE–RT–FG–RP).

- Attacker after profiles: Coupled update via Equation (8) with case-wise scaling and noise .
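The generator steps above can be sketched as follows. Only the uplift range [0.10, 0.30], the drift range [−0.02, +0.05], the coefficient range of the coupling matrix, and its attacker-rows × defender-columns orientation come from this appendix; the Beta parameters, the linear coupled update standing in for Equation (8), the example links, and all names are hypothetical, and the inter-metric correlation structure is omitted for brevity.

```python
import numpy as np


def generate_case(rng, coupling, targeted, scale=1.0, noise_sd=0.01,
                  def_prior=(4.0, 4.0), att_prior=(4.0, 4.0)):
    """Sketch of one synthetic before/after case.

    coupling: (n_att, n_def) nonnegative matrix, rows = attacker metrics,
    columns = defender metrics. targeted: boolean mask of defender metrics
    the framework targets. Beta priors and the update form are assumptions."""
    n_att, n_def = coupling.shape
    d_before = rng.beta(*def_prior, size=n_def)
    # Targeted metrics get an uplift in [0.10, 0.30]; others drift in [-0.02, 0.05].
    uplift = np.where(targeted,
                      rng.uniform(0.10, 0.30, size=n_def),
                      rng.uniform(-0.02, 0.05, size=n_def))
    d_after = np.clip(d_before + uplift, 0.0, 1.0)
    a_before = rng.beta(*att_prior, size=n_att)
    # Hypothetical coupled update: attacker metrics fall in proportion to the
    # realized defender gains on the defender metrics they are linked to.
    gain = d_after - d_before
    noise = rng.normal(0.0, noise_sd, size=n_att)
    a_after = np.clip(a_before - scale * (coupling @ gain) + noise, 0.0, 1.0)
    return d_before, d_after, a_before, a_after


rng = np.random.default_rng(42)
coupling = np.zeros((16, 16))
coupling[0, 0] = 0.15   # hypothetical link: attacker metric 0 <- defender metric 0
coupling[3, 7] = 0.10   # hypothetical link: attacker metric 3 <- defender metric 7
targeted = np.zeros(16, dtype=bool)
targeted[[0, 7]] = True
d0, d1, a0, a1 = generate_case(rng, coupling, targeted, noise_sd=0.0)
print(np.round(a0 - a1, 3))  # nonzero only for the linked attacker metrics
```

Keeping the coupling sparse means unlinked attacker metrics change only through noise, which is what the "fraction of unchanged attacker metrics" QC check is meant to confirm.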

Appendix B.5.3. Output Files

- RNG seed and hyperparameters.

- Nonzero pattern and values of .

- SHA-256 hashes of all output files.

- QC metrics: mean Defender, Attacker, Readiness with 95% confidence intervals; fraction of unchanged attacker metrics per case; median decrease for linked metrics; and verification that attacker mean does not collapse to zero.
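The 95% confidence intervals in the QC summary can be computed with a normal approximation, mean ± 1.96·s/√n. The sketch assumes independent per-case values and uses only the standard library; the function name and sample values are hypothetical, and for small numbers of cases a Student-t quantile would be more appropriate than 1.96.

```python
import math
import statistics


def mean_ci95(samples):
    """Sample mean with a normal-approximation 95% confidence interval."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean = statistics.fmean(samples)
    half_width = 1.96 * statistics.stdev(samples) / math.sqrt(n)  # sample std. dev.
    return mean, mean - half_width, mean + half_width


# Hypothetical per-case readiness values from a QC run.
print(mean_ci95([0.12, 0.08, 0.15, 0.10, 0.11]))
```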

Appendix B.5.4. Visualizations

- Coupling heatmap (Figure A1): 16 × 16 heatmap of showing nonzero entries (attacker rows × defender columns). Darker cells denote stronger suppression coefficients.

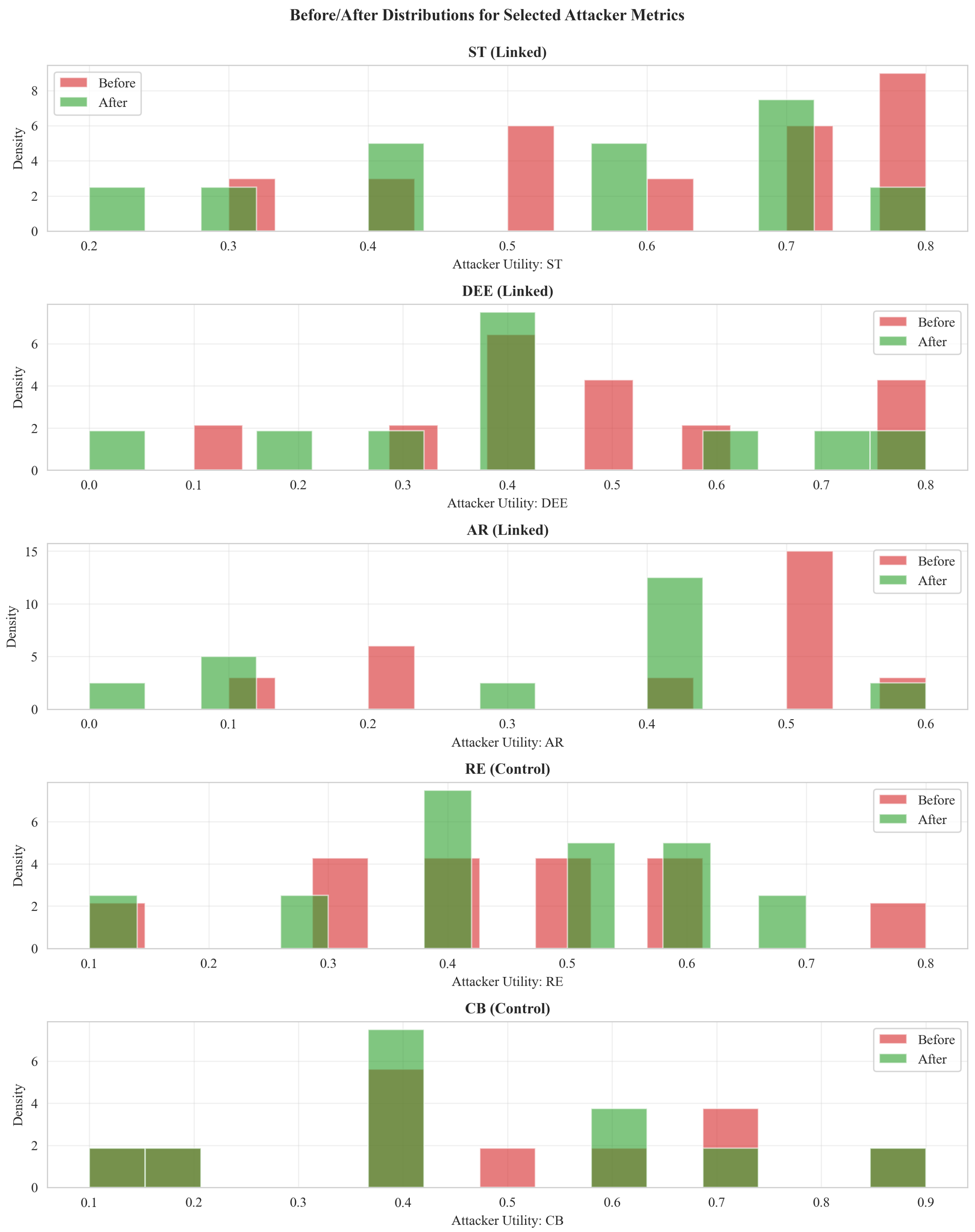

- Before/after ridges (Figure A2): Density distributions for selected attacker metrics (, DEE, AR as linked; RE, CB as controls) across C cases, comparing before vs. after profiles. Linked metrics shift modestly downward; controls remain stable.

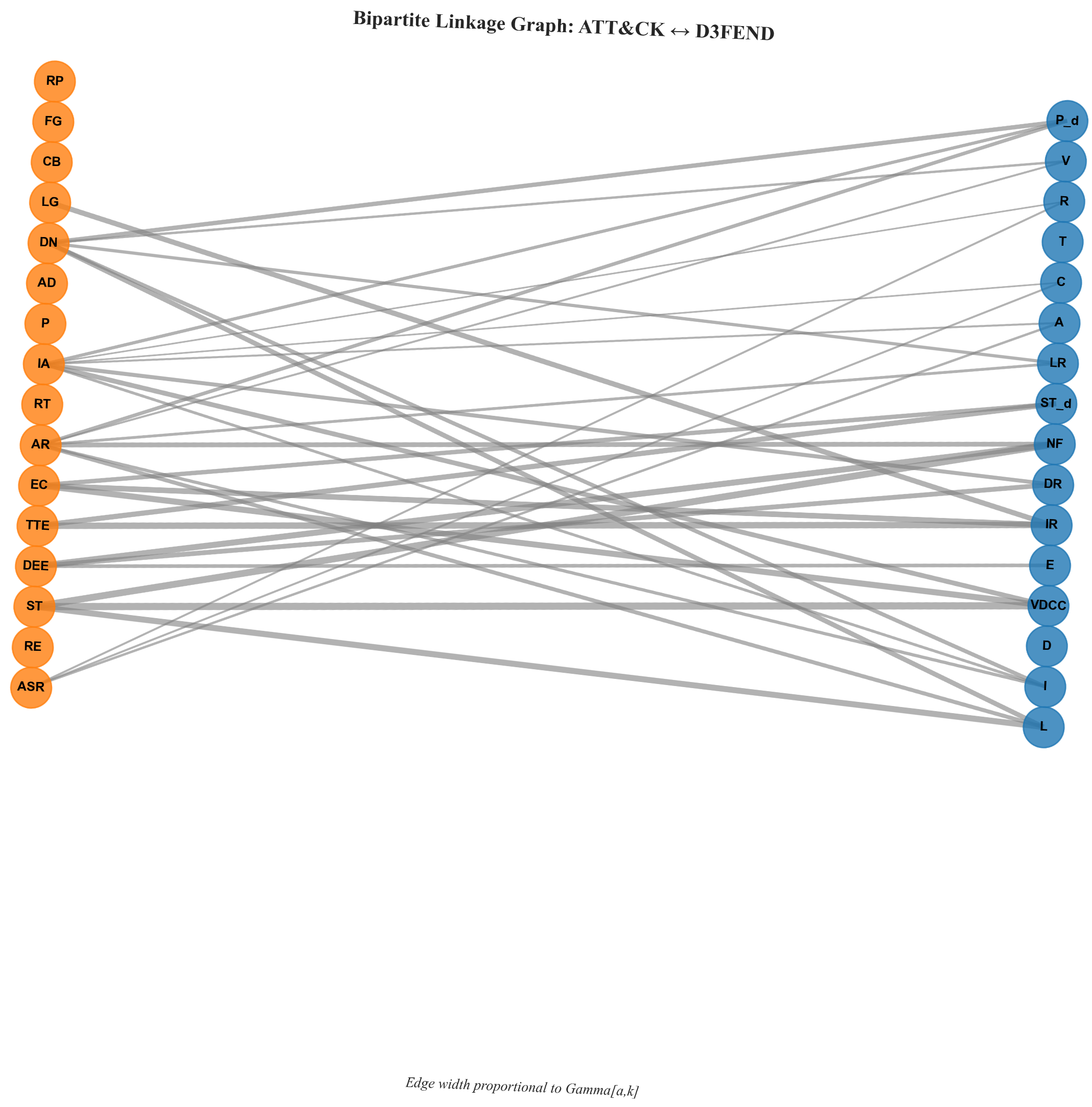

- Bipartite linkage graph (Figure A3): Bipartite graph with attacker metrics (left) and defender metrics (right); edge set with widths proportional to . This visualizes the structural prior behind the calibration.

Appendix B.6. Optional Fuzzy Robustness (Not Used for Table 4 and Table 5)

Appendix B.6.1. Scope

Appendix B.6.2. Specification

Appendix B.7. Pure Nash Equilibrium Verification

Appendix B.8. Equilibrium Computation Details

Appendix B.8.1. Sign Convention

Appendix B.8.2. Main Results (Non-Zero-Sum)

Appendix B.8.3. Zero-Sum Variant

Appendix B.8.4. Non-Degeneracy and ε-Perturbation

Appendix B.9. Zero-Sum Sensitivity Variant (A,-D)

| MNE | Attacker Strategy | Defender Strategy |

|---|---|---|

| MNE 1 (mixed) | Command and Control (0.5714); Impact (0.4286) | Model (0.9512); Isolate (0.0488) |

| MNE 2 (pure) | Impact (1.0000) | Model (1.0000) |

| MNE 3 (mixed) | Discovery (0.2000); Command and Control (0.8000) | Isolate (0.7857); Deceive (0.2143) |

| MNE 4 (pure) | Command and Control (1.0000) | Isolate (1.0000) |

| MNE 5 (mixed) | Discovery (0.1111); Collection (0.8889) | Isolate (0.0769); Deceive (0.9231) |

| Attacker Metric | Attacker Weight | Defender Metric | Defender Weight |

|---|---|---|---|

| ASR | 0.109 421 | L | 0.088 138 |

| RE | 0.047 640 | I | 0.088 138 |

| ST | 0.092 165 | D | 0.042 359 |

| DEE | 0.088 782 | VDCC | 0.064 290 |

| TTE | 0.047 640 | E | 0.046 193 |

| EC | 0.088 782 | IR | 0.088 138 |

| AR | 0.081 419 | DR | 0.048 163 |

| RT | 0.047 640 | NF | 0.081 980 |

| IA | 0.092 165 | ST | 0.081 980 |

| P | 0.081 419 | LR | 0.048 163 |

| AD | 0.057 193 | A | 0.055 784 |

| DN | 0.026 406 | C | 0.046 039 |

| LG | 0.043 317 | T | 0.069 305 |

| CB | 0.026 246 | R | 0.053 188 |

| FG | 0.021 048 | V | 0.042 359 |

| RP | 0.048 717 | P | 0.055 784 |

| Matrix | n | λ_max | CI | CR | GCI | Koczkodaj |

|---|---|---|---|---|---|---|

| Attacker | 16 | 16.452 800 | 0.030 200 | 0.019 000 | 0.066 600 | 0.875 000 |

| Defender | 16 | 16.315 700 | 0.021 000 | 0.013 200 | 0.047 700 | 0.500 000 |
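The consistency diagnostics tabulated above follow the standard AHP definitions: the weights are the normalized principal eigenvector of the pairwise-comparison matrix, CI = (λ_max − n)/(n − 1), and CR = CI/RI. For instance, (16.4528 − 16)/15 ≈ 0.0302 and (16.3157 − 16)/15 ≈ 0.0210, and the tabulated CI/CR pairs imply a random index of about 1.59 for n = 16. The sketch below uses power iteration and takes RI as an input, since the exact RI value used is not stated here; the function names and the 3 × 3 example are illustrative.

```python
import numpy as np


def ahp_priority_vector(P, max_iter=1000, tol=1e-12):
    """Principal eigenvector of a positive reciprocal pairwise-comparison
    matrix P via power iteration; returns (weights summing to 1, lambda_max)."""
    P = np.asarray(P, dtype=float)
    w = np.full(len(P), 1.0 / len(P))
    for _ in range(max_iter):
        w_next = P @ w
        w_next /= w_next.sum()
        converged = np.max(np.abs(w_next - w)) < tol
        w = w_next
        if converged:
            break
    lambda_max = float(np.mean((P @ w) / w))
    return w, lambda_max


def consistency_index(lambda_max, n):
    """Saaty's CI = (lambda_max - n) / (n - 1)."""
    return (lambda_max - n) / (n - 1)


def consistency_ratio(lambda_max, n, random_index):
    """CR = CI / RI, with RI supplied by the caller for the matrix size n."""
    return consistency_index(lambda_max, n) / random_index


# Perfectly consistent 3x3 example built from weights (0.5, 0.3, 0.2).
target = np.array([0.5, 0.3, 0.2])
P = target[:, None] / target[None, :]
w, lam = ahp_priority_vector(P)
print(np.round(w, 4), round(lam, 6))

# Reported attacker matrix: lambda_max = 16.4528 for n = 16.
print(round(consistency_index(16.4528, 16), 4))  # 0.0302
```

A perfectly consistent matrix yields λ_max = n and CI = 0; the small positive CI values reported for both 16 × 16 matrices indicate mild, acceptable inconsistency in the expert judgments.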

| ATT&CK Tactic | Model | Harden | Detect | Isolate | Deceive | Evict |

|---|---|---|---|---|---|---|

| Collection | 31 | 21 | 28 | 15 | 18 | 18 |

| Command and Control | 41 | 15 | 41 | 15 | 15 | 41 |

| Credential Access | 58 | 52 | 58 | 43 | 49 | 49 |

| Defense Evasion | 47 | 42 | 45 | 33 | 33 | 40 |

| Discovery | 27 | 18 | 24 | 13 | 15 | 18 |

| Execution | 30 | 22 | 27 | 18 | 19 | 21 |

| Exfiltration | 23 | 15 | 20 | 12 | 13 | 16 |

| Initial Access | 35 | 26 | 32 | 21 | 23 | 26 |

| Lateral Movement | 36 | 28 | 33 | 22 | 24 | 27 |

| Persistence | 38 | 29 | 35 | 24 | 26 | 28 |

| Privilege Escalation | 39 | 30 | 36 | 25 | 27 | 29 |

| Reconnaissance | 0 | 0 | 0 | 0 | 0 | 0 |

| Resource Development | 0 | 0 | 0 | 0 | 0 | 0 |

| Impact | 29 | 22 | 26 | 18 | 19 | 21 |

| Total | 371 | 274 | 360 | 253 | 228 | 288 |

| ATT&CK Tactic | Frequency |

|---|---|

| Collection | 8 |

| Command and Control | 9 |

| Credential Access | 10 |

| Defense Evasion | 10 |

| Discovery | 8 |

| Execution | 9 |

| Exfiltration | 7 |

| Initial Access | 9 |

| Lateral Movement | 9 |

| Persistence | 9 |

| Privilege Escalation | 9 |

| Reconnaissance | 0 |

| Resource Development | 0 |

| Impact | 8 |

| Total | 104 |

| D3FEND Control Family | Frequency |

|---|---|

| Model | 371 |

| Harden | 274 |

| Detect | 360 |

| Isolate | 253 |

| Deceive | 228 |

| Evict | 288 |

| Total | 1774 |

Appendix B.10. Attacker Utility Metrics and Scoring Preferences (Full Details)

| Metric | Description | Score |

|---|---|---|

| Attack Success Rate (ASR) | Attack success rate is nearly nonexistent | 0 |

| | Attacks are occasionally successful | 0.1–0.3 |

| | Attacks are successful about half of the time | 0.4–0.6 |

| | Attacks are usually successful | 0.7–0.9 |

| | Attacks are always successful | 1 |

| Resource Efficiency (RE) | Attacks require considerable resources with low payoff | 0 |

| | Attacks require significant resources but have a moderate payoff | 0.1–0.3 |

| | Attacks are somewhat resource efficient | 0.4–0.6 |

| | Attacks are quite resource efficient | 0.7–0.9 |

| | Attacks are exceptionally resource efficient | 1 |

| Stealthiness (ST) | Attacks are always detected and attributed | 0 |

| | Attacks are usually detected and often attributed | 0.1–0.3 |

| | Attacks are sometimes detected and occasionally attributed | 0.4–0.6 |

| | Attacks are seldom detected and rarely attributed | 0.7–0.9 |

| | Attacks are never detected or attributed | 1 |

| Data Exfiltration Effectiveness (DEE) | Data exfiltration attempts always fail | 0 |

| | Data exfiltration attempts succeed only occasionally | 0.1–0.3 |

| | Data exfiltration attempts often succeed | 0.4–0.6 |

| | Data exfiltration attempts usually succeed | 0.7–0.9 |

| | Data exfiltration attempts always succeed | 1 |

| Time-to-Exploit (TTE) | Vulnerabilities are never successfully exploited before patching | 0 |

| | Vulnerabilities are exploited before patching only occasionally | 0.1–0.3 |

| | Vulnerabilities are often exploited before patching | 0.4–0.6 |

| | Vulnerabilities are usually exploited before patching | 0.7–0.9 |

| | Vulnerabilities are always exploited before patching | 1 |

| Evasion of Countermeasures (EC) | Countermeasures always successfully thwart attacks | 0 |

| | Countermeasures often successfully thwart attacks | 0.1–0.3 |

| | Countermeasures sometimes fail to thwart attacks | 0.4–0.6 |

| | Countermeasures often fail to thwart attacks | 0.7–0.9 |

| | Countermeasures never successfully thwart attacks | 1 |

| Attribution Resistance (AR) | The attacker is always accurately identified | 0 |

| | The attacker is often accurately identified | 0.1–0.3 |

| | The attacker is sometimes accurately identified | 0.4–0.6 |

| | The attacker is seldom accurately identified | 0.7–0.9 |

| | The attacker is never accurately identified | 1 |

| Reusability of Attack Techniques (RT) | Attack techniques are always one-off, never reusable | 0 |

| | Attack techniques are occasionally reusable | 0.1–0.3 |

| | Attack techniques are often reusable | 0.4–0.6 |

| | Attack techniques are usually reusable | 0.7–0.9 |

| | Attack techniques are always reusable | 1 |

| Impact of Attacks (IA) | Attacks cause no notable disruption or loss | 0 |

| | Attacks cause minor disruption or loss | 0.1–0.3 |

| | Attacks cause moderate disruption or loss | 0.4–0.6 |

| | Attacks cause major disruption or loss | 0.7–0.9 |

| | Attacks cause catastrophic disruption or loss | 1 |

| Persistence (P) | The attacker cannot maintain control over compromised systems | 0 |

| | The attacker occasionally maintains control over compromised systems | 0.1–0.3 |

| | The attacker often maintains control over compromised systems | 0.4–0.6 |

| | The attacker usually maintains control over compromised systems | 0.7–0.9 |

| | The attacker always maintains control over compromised systems | 1 |

| Adaptability (AD) | The attacker is unable to adjust strategies in response to changing defenses | 0 |

| | The attacker occasionally adjusts strategies in response to changing defenses | 0.1–0.3 |

| | The attacker often adjusts strategies in response to changing defenses | 0.4–0.6 |

| | The attacker usually adjusts strategies in response to changing defenses | 0.7–0.9 |

| | The attacker always adjusts strategies in response to changing defenses | 1 |

| Deniability (DN) | The attacker cannot deny involvement in attacks | 0 |

| | The attacker can occasionally deny involvement in attacks | 0.1–0.3 |

| | The attacker can often deny involvement in attacks | 0.4–0.6 |

| | The attacker can usually deny involvement in attacks | 0.7–0.9 |

| | The attacker can always deny involvement in attacks | 1 |

| Longevity (LG) | The attacker’s operations are quickly disrupted | 0 |

| | The attacker’s operations are often disrupted | 0.1–0.3 |

| | The attacker’s operations are occasionally disrupted | 0.4–0.6 |

| | The attacker’s operations are rarely disrupted | 0.7–0.9 |

| | The attacker’s operations are never disrupted | 1 |

| Collaboration (CB) | The attacker never collaborates with others | 0 |

| | The attacker occasionally collaborates with others | 0.1–0.3 |

| | The attacker often collaborates with others | 0.4–0.6 |

| | The attacker usually collaborates with others | 0.7–0.9 |

| | The attacker always collaborates with others | 1 |

| Financial Gain (FG) | The attacker never profits from attacks | 0 |

| | The attacker occasionally profits from attacks | 0.1–0.3 |

| | The attacker often profits from attacks | 0.4–0.6 |

| | The attacker usually profits from attacks | 0.7–0.9 |

| | The attacker always profits from attacks | 1 |

| Reputation and Prestige (RP) | The attacker gains no reputation or prestige from attacks | 0 |

| | The attacker gains little reputation or prestige from attacks | 0.1–0.3 |

| | The attacker gains some reputation or prestige from attacks | 0.4–0.6 |

| | The attacker gains considerable reputation or prestige from attacks | 0.7–0.9 |

| | The attacker’s reputation or prestige is greatly enhanced by each attack | 1 |

Appendix B.11. Defender Utility Metrics and Scoring Preferences (Full Details)

| Metric | Description | Score |

|---|---|---|

| Logging and Audit Trail Capabilities (L) | No logging or audit trail capabilities | 0 |

| | Minimal or ineffective logging and audit trail capabilities | 0.1–0.3 |

| | Moderate logging and audit trail capabilities | 0.4–0.6 |

| | Robust logging and audit trail capabilities with some limitations | 0.7–0.9 |

| | Comprehensive and highly effective logging and audit trail capabilities | 1 |

| Integrity and Preservation of Digital Evidence (I) | Complete loss of all digital evidence, including backups | 0 |

| | Severe damage or compromised backups with limited recoverability | 0.1–0.3 |

| | Partial loss of digital evidence, with some recoverable data | 0.4–0.6 |

| | Reasonable integrity and preservation of digital evidence, with recoverable backups | 0.7–0.9 |

| | Full integrity and preservation of all digital evidence, including secure and accessible backups | 1 |

| Documentation and Compliance with Digital Forensic Standards (D) | No documentation or non-compliance with digital forensic standards | 0 |

| | Incomplete or inadequate documentation and limited adherence to digital forensic standards | 0.1–0.3 |

| | Basic documentation and partial compliance with digital forensic standards | 0.4–0.6 |

| | Well-documented processes and good adherence to digital forensic standards | 0.7–0.9 |

| | Comprehensive documentation and strict compliance with recognized digital forensic standards | 1 |

| Volatile Data Capture Capabilities (VDCC) | No volatile data capture capabilities | 0 |

| | Limited or unreliable volatile data capture capabilities | 0.1–0.3 |

| | Moderate volatile data capture capabilities | 0.4–0.6 |

| | Effective volatile data capture capabilities with some limitations | 0.7–0.9 |

| | Robust and reliable volatile data capture capabilities | 1 |

| Encryption and Decryption Capabilities (E) | No encryption or decryption capabilities | 0 |

| | Weak or limited encryption and decryption capabilities | 0.1–0.3 |

| | Moderate encryption and decryption capabilities | 0.4–0.6 |

| | Strong encryption and decryption capabilities with some limitations | 0.7–0.9 |

| | Highly secure encryption and decryption capabilities | 1 |

| Incident Response Preparedness (IR) | No incident response plan or team in place | 0 |

| | Initial incident response plan, not regularly tested or updated, with limited team capability | 0.1–0.3 |

| | Developed incident response plan, periodically tested, with trained team | 0.4–0.6 |

| | Comprehensive incident response plan, regularly tested and updated, with a well-coordinated team | 0.7–0.9 |

| | Advanced incident response plan, continuously tested and optimized, with a dedicated, experienced team | 1 |

| Data Recovery Capabilities (DR) | No data recovery processes or tools in place | 0 |

| | Basic data recovery tools, with limited effectiveness | 0.1–0.3 |

| | Advanced data recovery tools, with some limitations in terms of capabilities | 0.4–0.6 |

| | Sophisticated data recovery tools, with high success rates | 0.7–0.9 |

| | Comprehensive data recovery tools and processes, with excellent success rates | 1 |

| Network Forensics Capabilities (NF) | No network forensic capabilities | 0 |

| | Basic network forensic capabilities, limited to capturing packets or logs | 0.1–0.3 |

| | Developed network forensic capabilities, with ability to analyze traffic and detect anomalies | 0.4–0.6 |

| | Advanced network forensic capabilities, with proactive threat detection | 0.7–0.9 |

| | Comprehensive network forensic capabilities, with full spectrum threat detection and automated responses | 1 |

| Staff Training and Expertise () | No trained staff or expertise in digital forensics | 0 |

| | Few staff members with basic training in digital forensics | 0.1–0.3 |

| | Several staff members with intermediate-level training, some with certifications | 0.4–0.6 |

| | Most staff members with advanced-level training, many with certifications | 0.7–0.9 |

| | All staff members are experts in digital forensics, with relevant certifications | 1 |

| Legal & Regulatory Compliance (LR) | Non-compliance with applicable legal and regulatory requirements | 0 |

| | Partial compliance with significant shortcomings | 0.1–0.3 |

| | Compliance with most requirements, some minor issues | 0.4–0.6 |

| | High compliance with only minor issues | 0.7–0.9 |

| | Full compliance with all relevant legal and regulatory requirements | 1 |

| Accuracy (A) | No consistency in results, many errors and inaccuracies in digital forensic analysis | 0 |

| | Frequent errors in analysis, high level of inaccuracy | 0.1–0.3 |

| | Some inaccuracies in results, needs further improvement | 0.4–0.6 |

| | High level of accuracy, few inconsistencies or errors | 0.7–0.9 |

| Extremely accurate, consistent results with virtually no errors | 1 | |

| Completeness (C) | Significant data overlooked, very incomplete analysis | 0 |

| Some relevant data collected, but analysis remains substantially incomplete | 0.1–0.3 | |

| Most of the relevant data collected and analyzed, but some gaps remain | 0.4–0.6 | |

| High degree of completeness in data collection and analysis, minor gaps | 0.7–0.9 | |

| Comprehensive data collection and analysis, virtually no information overlooked | 1 | |

| Timeliness (T) | Extensive delays in digital forensic investigation process, no urgency | 0 |

| Frequent delays, slow response time | 0.1–0.3 | |

| Reasonable response time, occasional delays | 0.4–0.6 | |

| Quick response time, infrequent delays | 0.7–0.9 | |

| Immediate response, efficient process, no delays | 1 | |

| Reliability (R) | Unreliable techniques, inconsistent and unrepeatable results | 0 |

| Some reliability in techniques, but results are often inconsistent | 0.1–0.3 | |

| Mostly reliable techniques, occasional inconsistencies in results | 0.4–0.6 | |

| High reliability in techniques, few inconsistencies | 0.7–0.9 | |

| Highly reliable and consistent techniques, results are dependable and repeatable | 1 | |

| Validity (V) | No adherence to standards, methods not legally or scientifically acceptable | 0 |

| Minimal adherence to standards, many methods not acceptable | 0.1–0.3 | |

| Moderate adherence to standards, some methods not acceptable | 0.4–0.6 | |

| High adherence to standards, majority of methods are acceptable | 0.7–0.9 | |

| Strict adherence to standards, all methods used are legally and scientifically acceptable | 1 | |

| Preservation () | No procedures in place for evidence preservation, evidence frequently damaged or lost | 0 |

| Minimal preservation procedures, evidence sometimes damaged or lost | 0.1–0.3 | |

| Moderate preservation procedures, occasional evidence damage or loss | 0.4–0.6 | |

| Robust preservation procedures, rare instances of evidence damage or loss | 0.7–0.9 | |

| Comprehensive preservation procedures, virtually no damage or loss of evidence | 1 |

Appendix B.12. Game Theory Background

Appendix B.12.1. Players and Actions

Appendix B.12.2. Payoff Functions and Utility

- Defender (D): The organization allocating forensic and defensive resources.

- Attacker (A): The adversary selecting tactics to compromise digital assets.

Appendix B.12.3. Illustrative Scenario

| | Attack (SA) | Attack (SI) |
|---|---|---|
| Defender (HI FT) | | |
| Defender (LI FT) | | |

Appendix B.12.4. Payoff Interpretation

- Defender payoffs: High investment improves readiness for sophisticated attacks at the expense of potential over-provisioning when the attacker chooses simpler tactics; low investment reduces costs but increases risk against sophisticated attacks.

- Attacker payoffs: Sophisticated attacks demand more resources yet yield higher gains if defences are weak; simpler attacks are cheaper but produce lower returns, especially against well-prepared defenders.

Appendix B.12.5. Equilibrium Concepts

- Pure Nash Equilibrium (PNE): A profile where neither player can improve utility by deviating unilaterally.

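- Mixed Nash Equilibrium (MNE): Players randomize over strategies so that no player can improve expected utility by deviating unilaterally; each player is then indifferent among the pure strategies it plays with positive probability. This is a common representation for adversaries facing uncertainty (e.g., APTs varying attack vectors).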
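The payoff cells of the illustrative 2 × 2 table in Appendix B.12.3 did not survive extraction. The sketch below therefore uses hypothetical integer payoffs, chosen only to mirror the qualitative story in Appendix B.12.4 (rows: HI FT, LI FT; columns: SA, SI). It enumerates pure Nash equilibria by checking mutual best responses. For a 2 × 2 game it also solves the indifference conditions for a fully mixed equilibrium. The function names and payoff values are assumptions for illustration, not the authors' implementation.

```python
from fractions import Fraction


def pure_nash_equilibria(R, C):
    """Return all pure profiles (i, j) that are mutual best responses.

    R[i][j] is the row player's payoff and C[i][j] the column player's payoff.
    """
    n_rows, n_cols = len(R), len(R[0])
    profiles = []
    for i in range(n_rows):
        for j in range(n_cols):
            row_best = all(R[i][j] >= R[k][j] for k in range(n_rows))
            col_best = all(C[i][j] >= C[i][l] for l in range(n_cols))
            if row_best and col_best:
                profiles.append((i, j))
    return profiles


def mixed_nash_2x2(R, C):
    """Fully mixed equilibrium of a 2x2 bimatrix game via indifference conditions.

    Returns (p, q) with p = P(row 0) and q = P(column 0), or None when no
    interior solution exists (degenerate or dominance-solvable games).
    """
    # q makes the row player indifferent between rows 0 and 1.
    den_q = (R[0][0] - R[0][1]) - (R[1][0] - R[1][1])
    # p makes the column player indifferent between columns 0 and 1.
    den_p = (C[0][0] - C[1][0]) - (C[0][1] - C[1][1])
    if den_q == 0 or den_p == 0:
        return None
    q = Fraction(R[1][1] - R[0][1]) / Fraction(den_q)
    p = Fraction(C[1][1] - C[1][0]) / Fraction(den_p)
    if 0 < p < 1 and 0 < q < 1:
        return p, q
    return None


# Hypothetical payoffs. Rows: defender HI FT, LI FT. Columns: attack SA, SI.
DEFENDER = [[3, 1], [-2, 2]]   # well prepared vs. SA; over-provisioned vs. SI
ATTACKER = [[-1, 0], [3, 1]]   # SA pays off only against low investment

print(pure_nash_equilibria(DEFENDER, ATTACKER))  # [] (best responses cycle)
print(mixed_nash_2x2(DEFENDER, ATTACKER))        # (Fraction(2, 3), Fraction(1, 6))
```

With these made-up numbers there is no PNE. In the MNE the defender plays HI FT with probability 2/3 and the attacker chooses SA with probability 1/6. Note that each player's equilibrium mix is pinned down by the opponent's payoffs, not its own.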

Appendix B.13. Expert Weight Elicitation Workflow (Extended)

- Expert identification: Recruit DF/DFIR experts with ATT&CK domain knowledge and documented experience (a minimum of 5 years of professional experience and/or peer-reviewed publications).

- Threat landscape briefing: Provide each expert with the current ATT&CK tactics, associated TTPs, and D3FEND control families relevant to SME/SMB environments.

- Evaluation criteria: Present weighting criteria (Likelihood, Impact, Detectability, Effort) used to reason about attacker tactics and defender capabilities.

- Independent judgments: Collect pairwise comparison matrices (PCMs) independently using the Saaty 1–9 scale for both attacker and defender metric hierarchies.

- Aggregation and normalization: Combine judgments via the element-wise geometric mean, derive and normalize the principal eigenvector of each consensus PCM, and check its consistency (Algorithm A4).

- Weight publication: Publish the aggregated weights, per-expert consistency diagnostics, and supporting scripts (see Table 8 and Appendix B.2).
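The aggregation step in the workflow above can be sketched as follows. Each expert supplies a reciprocal pairwise comparison matrix on the Saaty 1–9 scale. The consensus matrix takes the element-wise geometric mean, which preserves reciprocity. The validation checks, function names, and the two example experts are assumptions for illustration.

```python
import math


def validate_pcm(pcm, tol=1e-9):
    """Check that a PCM is square, reciprocal, and within the Saaty 1/9..9 range."""
    n = len(pcm)
    if any(len(row) != n for row in pcm):
        raise ValueError("PCM must be square")
    for i in range(n):
        for j in range(n):
            a = pcm[i][j]
            if not (1 / 9 - tol <= a <= 9 + tol):
                raise ValueError(f"entry ({i}, {j}) = {a} is outside the Saaty range")
            if abs(a * pcm[j][i] - 1.0) > tol:
                raise ValueError(f"entries ({i}, {j}) and ({j}, {i}) are not reciprocal")


def aggregate_pcms(pcms):
    """Element-wise geometric mean of k expert PCMs of the same size."""
    n, k = len(pcms[0]), len(pcms)
    for pcm in pcms:
        if len(pcm) != n:
            raise ValueError("all PCMs must have the same size")
        validate_pcm(pcm)
    # exp(mean(log a_ij)) is the geometric mean of the k judgments for cell (i, j).
    return [
        [math.exp(sum(math.log(m[i][j]) for m in pcms) / k) for j in range(n)]
        for i in range(n)
    ]


# Two hypothetical experts comparing three criteria.
expert_1 = [[1, 3, 2], [1 / 3, 1, 1 / 2], [1 / 2, 2, 1]]
expert_2 = [[1, 1 / 3, 8], [3, 1, 4], [1 / 8, 1 / 4, 1]]
consensus = aggregate_pcms([expert_1, expert_2])
```

Here the experts' opposing judgments 3 and 1/3 for criteria 1 vs. 2 average to 1. The pair 2 and 8 for criteria 1 vs. 3 averages to 4.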

Appendix B.14. Supplementary Algorithms

Algorithm A1. Utility computation with validation

- Input: normalised metric scores; metric weights

- Output: weighted utility score
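The symbols of Algorithm A1 were lost in extraction. The sketch below assumes the utility is the AHP-weighted sum of normalised scores. It validates that scores lie in [0, 1] and that weights are non-negative and sum to 1. The default tolerance of 1e-3 is an assumption, chosen to accept four-decimal rounding of published weights. The function name is hypothetical.

```python
def weighted_utility(scores, weights, tol=1e-3):
    """Weighted utility U = sum_i w_i * s_i with input validation (assumed form of A1)."""
    if len(scores) != len(weights):
        raise ValueError("scores and weights must have the same length")
    if any(not 0.0 <= s <= 1.0 for s in scores):
        raise ValueError("normalised scores must lie in [0, 1]")
    if any(w < 0.0 for w in weights):
        raise ValueError("weights must be non-negative")
    if abs(sum(weights) - 1.0) > tol:
        raise ValueError("weights must sum to 1 (within tolerance)")
    return sum(w * s for w, s in zip(weights, scores))


# Hypothetical three-metric profile: 0.2*0.5 + 0.3*0.8 + 0.5*1.0 = 0.84.
u = weighted_utility([0.5, 0.8, 1.0], [0.2, 0.3, 0.5])
```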

Algorithm A2. Readiness classification rule

- Input: utility score (from Algorithm A1); readiness threshold

- Output: readiness classification

Algorithm A3. Low-score metric identification

- Input: normalised metric scores; threshold used in Algorithm A2

- Output: indices of metrics requiring improvement
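Algorithms A2 and A3 share a single readiness threshold. The sketch below assumes two rules. First, a utility at or above the threshold counts as ready. Second, a metric is flagged when its score falls strictly below that same threshold. The threshold value in the original example was lost, so 0.7 is hypothetical, as are the function names.

```python
def classify_readiness(utility, threshold):
    """A2 (assumed rule): ready when the utility reaches the threshold."""
    return "ready" if utility >= threshold else "not ready"


def low_score_metrics(scores, threshold):
    """A3 (assumed rule): indices of metrics scoring below the threshold."""
    return [i for i, s in enumerate(scores) if s < threshold]


THRESHOLD = 0.7  # hypothetical value
scores = [0.5, 0.8, 1.0, 0.65]
print(classify_readiness(0.84, THRESHOLD))   # ready
print(low_score_metrics(scores, THRESHOLD))  # [0, 3]
```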

Algorithm A4. Eigenvector-based AHP weight derivation

- Input: expert pairwise comparison matrices (PCMs)

- Output: normalised weights and consistency diagnostics
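The eigenvector step of Algorithm A4 can be sketched with power iteration, which converges to the principal (Perron) eigenvector of a positive PCM. The consistency ratio is CR = CI / RI, with CI = (λ_max − n)/(n − 1). The random-index values below are Saaty's commonly cited figures for n ≤ 10. For larger matrices the caller must supply an RI value. Function names and defaults are assumptions.

```python
# Saaty's random consistency index for n = 1..10 (commonly cited values).
SAATY_RI = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
            6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_weights(pcm, ri=None, max_iter=1000, tol=1e-12):
    """Principal-eigenvector weights and consistency diagnostics for a positive PCM.

    Returns (weights, lambda_max, CI, CR); CR is None if no RI is known for n.
    """
    n = len(pcm)
    w = [1.0 / n] * n
    for _ in range(max_iter):
        v = [sum(pcm[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        new_w = [x / total for x in v]  # normalise so the weights sum to 1
        converged = max(abs(a - b) for a, b in zip(new_w, w)) < tol
        w = new_w
        if converged:
            break
    aw = [sum(pcm[i][j] * w[j] for j in range(n)) for i in range(n)]
    lam = sum(aw[i] / w[i] for i in range(n)) / n  # estimate of lambda_max
    ci = (lam - n) / (n - 1) if n > 1 else 0.0
    if ri is None:
        ri = SAATY_RI.get(n)
    if ri is None:
        cr = None
    elif ri == 0.0:
        cr = 0.0  # n <= 2: every reciprocal matrix is consistent
    else:
        cr = ci / ri
    return w, lam, ci, cr


# A perfectly consistent matrix built from weights (0.5, 0.3, 0.2): a_ij = w_i / w_j.
true_w = [0.5, 0.3, 0.2]
consistent = [[wi / wj for wj in true_w] for wi in true_w]
weights, lam, ci, cr = ahp_weights(consistent)
```

For the consistent matrix the recovered weights equal (0.5, 0.3, 0.2) and CR is 0. For the inconsistent matrix [[1, 2, 8], [1/2, 1, 2], [1/8, 1/2, 1]], λ_max = 1 + 2^(1/3) + 2^(−1/3) ≈ 3.054, which gives CR ≈ 0.046.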

Algorithm A5. Prioritised DFR improvement plan

- Input: metrics and weights; candidate improvement indices (from Algorithm A3); feasibility, strategy, and monitoring oracles

- Output: ordered action list
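Algorithm A5's ordering criterion is not recoverable from this section, so the sketch below assumes a simple priority score: the metric weight multiplied by its remaining gap to full readiness (1 − score). A caller-supplied predicate stands in for the feasibility oracle and filters out infeasible candidates. The strategy and monitoring oracles are omitted. The function and its parameters are hypothetical.

```python
def improvement_plan(scores, weights, candidates, is_feasible=lambda i: True):
    """Order candidate metric indices by assumed priority w_i * (1 - s_i), highest first."""
    ranked = []
    for i in candidates:
        if not is_feasible(i):  # stand-in for the feasibility oracle
            continue
        priority = weights[i] * (1.0 - scores[i])
        ranked.append((priority, i))
    # Sort by descending priority; ties are broken by the lower index.
    ranked.sort(key=lambda item: (-item[0], item[1]))
    return [i for _, i in ranked]


scores = [0.5, 0.2, 0.6]
weights = [0.2, 0.5, 0.3]
print(improvement_plan(scores, weights, [0, 1, 2]))  # [1, 2, 0]
print(improvement_plan(scores, weights, [0, 1, 2], is_feasible=lambda i: i != 1))  # [2, 0]
```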

Algorithm A6. Fuzzy readiness scoring pipeline

- Input: normalised inputs; triangular membership functions for each input/output label (Tables A3 and A4); rule table mapping input labels to output labels (Table A5)

- Output: fuzzy readiness score
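Tables A3–A5 are not reproduced in this section, so the following sketch of a Mamdani-style pipeline relies on hypothetical choices throughout:

- low/medium/high triangular membership functions;
- a two-input rule table;
- min for rule conjunction and max for aggregation;
- a discrete centroid for defuzzification.

It illustrates the mechanics of Algorithm A6 only. The labels, breakpoints, rules, and names are assumptions.

```python
def tri(x, a, b, c):
    """Triangular membership with feet a, c and peak b (a == b or b == c gives a shoulder)."""
    if x < a or x > c:
        return 0.0
    if x == b:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical labels shared by both inputs and the output, all on [0, 1].
MF = {"low": (0.0, 0.0, 0.5), "medium": (0.0, 0.5, 1.0), "high": (0.5, 1.0, 1.0)}

# Hypothetical rule table: (label of input 1, label of input 2) -> output label.
RULES = {
    ("low", "low"): "low", ("low", "medium"): "low", ("low", "high"): "medium",
    ("medium", "low"): "low", ("medium", "medium"): "medium", ("medium", "high"): "high",
    ("high", "low"): "medium", ("high", "medium"): "high", ("high", "high"): "high",
}


def fuzzy_readiness(x1, x2, resolution=101):
    """Mamdani inference: min for AND, max aggregation, discrete centroid."""
    strength = {label: 0.0 for label in MF}
    for (l1, l2), out in RULES.items():
        fire = min(tri(x1, *MF[l1]), tri(x2, *MF[l2]))
        strength[out] = max(strength[out], fire)
    num = den = 0.0
    for k in range(resolution):
        z = k / (resolution - 1)
        # Clip each output set at its rule strength, then take the union (max).
        mu = max(min(strength[lab], tri(z, *MF[lab])) for lab in MF)
        num += z * mu
        den += mu
    return num / den if den > 0.0 else 0.0


print(round(fuzzy_readiness(1.0, 1.0), 4))  # 0.8367
```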

References

- Chen, P.; Desmet, L.; Huygens, C. A study on advanced persistent threats. In Proceedings of the Communications and Multimedia Security: 15th IFIP TC 6/TC 11 International Conference, CMS 2014, Aveiro, Portugal, 25–26 September 2014; Proceedings 15. Springer: Berlin/Heidelberg, Germany, 2014; pp. 63–72.

- Rowlingson, R. A ten step process for forensic readiness. Int. J. Digit. Evid. 2004, 2, 1–28.

- Google Mandiant. M-Trends 2025: Executive Edition; Google LLC: Mountain View, CA, USA, 2025; Based on Mandiant Consulting Investigations of Targeted Attack Activity Conducted Between 1 January 2024 and 31 December 2024; Available online: https://services.google.com/fh/files/misc/m-trends-2025-executive-edition-en.pdf (accessed on 10 November 2025).

- IBM. Cost of a Data Breach Report 2025: The AI Oversight Gap; IBM Corporation: Armonk, NY, USA, 2025; Based on IBM analysis of research data independently compiled by Ponemon Institute.

- Bonderud, D. Cost of a Data Breach 2024: Financial Industry. Available online: https://www.ibm.com/think/insights/cost-of-a-data-breach-2024-financial-industry (accessed on 10 November 2025).

- Johnson, R. 60 Percent of Small Companies Close Within 6 Months of Being Hacked. 2019. Available online: https://cybersecurityventures.com/60-percent-of-small-companies-close-within-6-months-of-being-hacked (accessed on 10 November 2025).

- Baker, P. The SolarWinds Hack Timeline: Who Knew What, and When? 2021. Available online: https://www.csoonline.com/article/570537/the-solarwinds-hack-timeline-who-knew-what-and-when.html (accessed on 10 November 2025).

- Batool, A.; Zowghi, D.; Bano, M. AI governance: A systematic literature review. AI Ethics 2025, 5, 3265–3279.

- Wrightson, T. Advanced Persistent Threat Hacking: The Art and Science of Hacking Any Organization; McGraw-Hill Education Group: New York, NY, USA, 2014; ISBN 978-0-07-182836-9.

- Årnes, A. Digital Forensics; John Wiley & Sons: Hoboken, NJ, USA, 2017; ISBN 978-1-119-26238-4.

- Griffith, S.B. Sun Tzu: The Art of War; Oxford University Press: London, UK, 1963; Volume 39, ISBN 9780195014761.

- Myerson, R.B. Game Theory; Harvard University Press: Cambridge, MA, USA, 2013; ISBN 978-0-674-72861-5.

- Belton, V.; Stewart, T. Multiple Criteria Decision Analysis: An Integrated Approach; Springer Science & Business Media: Cham, Switzerland, 2002; ISBN 978-0-7923-7505-0.

- Lye, K.W.; Wing, J.M. Game strategies in network security. Int. J. Inf. Secur. 2005, 4, 71–86.

- Roy, S.; Ellis, C.; Shiva, S.; Dasgupta, D.; Shandilya, V.; Wu, Q. A survey of game theory as applied to network security. In Proceedings of the 2010 43rd Hawaii International Conference on System Sciences (HICSS), Honolulu, HI, USA, 5–8 January 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 1–10.

- Zhu, Q.; Basar, T. Game-theoretic methods for robustness, security, and resilience of cyberphysical control systems: Games-in-games principle for optimal cross-layer resilient control systems. IEEE Control Syst. Mag. 2015, 35, 46–65.

- Kent, K.; Chevalier, S.; Grance, T. Guide to Integrating Forensic Techniques into Incident Response; NIST Special Publication 800-86; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2006.

- Alpcan, T.; Başar, T. Network Security: A Decision and Game-Theoretic Approach; Cambridge University Press: Cambridge, UK, 2010.

- Casey, E. Digital Evidence and Computer Crime: Forensic Science, Computers, and the Internet; Academic Press: Cambridge, MA, USA, 2011.

- Manshaei, M.H.; Zhu, Q.; Alpcan, T.; Başar, T.; Hubaux, J.P. Game theory meets network security and privacy. ACM Comput. Surv. (CSUR) 2013, 45, 1–39.

- Nisioti, A.; Loukas, G.; Rass, S.; Panaousis, E. Game-theoretic decision support for cyber forensic investigations. Sensors 2021, 21, 5300.

- Hasanabadi, S.S.; Lashkari, A.H.; Ghorbani, A.A. A game-theoretic defensive approach for forensic investigators against rootkits. Forensic Sci. Int. Digit. Investig. 2020, 33, 200909.

- Karabiyik, U.; Karabiyik, T. A game theoretic approach for digital forensic tool selection. Mathematics 2020, 8, 774.

- Hasanabadi, S.S.; Lashkari, A.H.; Ghorbani, A.A. A memory-based game-theoretic defensive approach for digital forensic investigators. Forensic Sci. Int. Digit. Investig. 2021, 38, 301214.

- Caporusso, N.; Chea, S.; Abukhaled, R. A game-theoretical model of ransomware. In Proceedings of the Advances in Human Factors in Cybersecurity: Proceedings of the AHFE 2018 International Conference on Human Factors in Cybersecurity, Orlando, FL, USA, 21–25 July 2018; Springer: Cham, Switzerland, 2018; pp. 69–78.

- Kebande, V.R.; Venter, H.S. Novel digital forensic readiness technique in the cloud environment. Aust. J. Forensic Sci. 2018, 50, 552–591.

- Kebande, V.R.; Karie, N.M.; Choo, K.R.; Alawadi, S. Digital forensic readiness intelligence crime repository. Secur. Priv. 2021, 4, e151.

- Englbrecht, L.; Meier, S.; Pernul, G. Towards a capability maturity model for digital forensic readiness. Wirel. Netw. 2020, 26, 4895–4907.

- Reddy, K.; Venter, H.S. The architecture of a digital forensic readiness management system. Comput. Secur. 2013, 32, 73–89.

- Grobler, C.P.; Louwrens, C. Digital forensic readiness as a component of information security best practice. In Proceedings of the IFIP International Information Security Conference; Springer: Boston, MA, USA, 2007; pp. 13–24.

- Lakhdhar, Y.; Rekhis, S.; Sabir, E. A Game Theoretic Approach For Deploying Forensic Ready Systems. In Proceedings of the 2020 International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Split, Croatia, 17–19 September 2020; pp. 1–6.

- Elyas, M.; Ahmad, A.; Maynard, S.B.; Lonie, A. Digital forensic readiness: Expert perspectives on a theoretical framework. Comput. Secur. 2015, 52, 70–89.

- Baiquni, I.Z.; Amiruddin, A. A case study of digital forensic readiness level measurement using DiFRI model. In Proceedings of the 2022 International Conference on Informatics, Multimedia, Cyber and Information System (ICIMCIS), Jakarta, Indonesia, 16–17 November 2022; pp. 184–189.

- Rawindaran, N.; Jayal, A.; Prakash, E. Cybersecurity Framework: Addressing Resiliency in Welsh SMEs for Digital Transformation and Industry 5.0. J. Cybersecur. Priv. 2025, 5, 17.

- Trenwith, P.M.; Venter, H.S. Digital forensic readiness in the cloud. In Proceedings of the 2013 Information Security for South Africa, Johannesburg, South Africa, 14–16 August 2013; pp. 1–5.

- Monteiro, D.; Yu, Y.; Zisman, A.; Nuseibeh, B. Adaptive Observability for Forensic-Ready Microservice Systems. IEEE Trans. Serv. Comput. 2023, 16, 3196–3209.

- Xiong, W.; Legrand, E.; Åberg, O.; Lagerström, R. Cyber security threat modeling based on the MITRE Enterprise ATT&CK Matrix. Softw. Syst. Model. 2022, 21, 157–177.

- Wang, J.; Neil, M. A Bayesian-network-based cybersecurity adversarial risk analysis framework with numerical examples. arXiv 2021, arXiv:2106.00471.

- Usman, N.; Usman, S.; Khan, F.; Jan, M.A.; Sajid, A.; Alazab, M.; Watters, P. Intelligent dynamic malware detection using machine learning in IP reputation for forensics data analytics. Future Gener. Comput. Syst. 2021, 118, 124–141.

- Li, M.; Lal, C.; Conti, M.; Hu, D. LEChain: A blockchain-based lawful evidence management scheme for digital forensics. Future Gener. Comput. Syst. 2021, 115, 406–420.

- Soltani, S.; Seno, S.A.H. Detecting the software usage on a compromised system: A triage solution for digital forensics. Forensic Sci. Int. Digit. Investig. 2023, 44, 301484.

- Rother, C.; Chen, B. Reversing File Access Control Using Disk Forensics on Low-Level Flash Memory. J. Cybersecur. Priv. 2024, 4, 805–822.

- Nikkel, B. Registration Data Access Protocol (RDAP) for digital forensic investigators. Digit. Investig. 2017, 22, 133–141.

- Nikkel, B. Fintech forensics: Criminal investigation and digital evidence in financial technologies. Forensic Sci. Int. Digit. Investig. 2020, 33, 200908.

- Seo, S.; Seok, B.; Lee, C. Digital forensic investigation framework for the metaverse. J. Supercomput. 2023, 79, 9467–9485.

- Malhotra, S. Digital forensics meets AI: A game-changer for the 4th industrial revolution. In Artificial Intelligence and Blockchain in Digital Forensics; River Publishers: Aalborg, Denmark, 2023; pp. 1–20.

- Tok, Y.C.; Chattopadhyay, S. Identifying threats, cybercrime and digital forensic opportunities in Smart City Infrastructure via threat modeling. Forensic Sci. Int. Digit. Investig. 2023, 45, 301540.

- Han, K.; Choi, J.H.; Choi, Y.; Lee, G.M.; Whinston, A.B. Security defense against long-term and stealthy cyberattacks. Decis. Support Syst. 2023, 166, 113912.

- Chandra, A.; Snowe, M.J. A taxonomy of cybercrime: Theory and design. Int. J. Account. Inf. Syst. 2020, 38, 100467.

- Casey, E.; Barnum, S.; Griffith, R.; Snyder, J.; van Beek, H.; Nelson, A. Advancing coordinated cyber-investigations and tool interoperability using a community developed specification language. Digit. Investig. 2017, 22, 14–45.

- Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: Cambridge, UK, 2004.

- Knight, V.; Campbell, J. Nashpy: A Python library for the computation of Nash equilibria. J. Open Source Softw. 2018, 3, 904.

- Zopounidis, C.; Pardalos, P.M. Handbook of Multicriteria Analysis; Springer Science & Business Media: Cham, Switzerland, 2010; Volume 103.

- Saaty, T.L. Analytic hierarchy process. In Encyclopedia of Operations Research and Management Science; Springer: Cham, Switzerland, 2013; pp. 52–64.

- Joint Committee for Guides in Metrology (JCGM). Evaluation of Measurement Data—Supplement 1: Propagation of Distributions Using a Monte Carlo Method; JCGM 101:2008; JCGM: Sèvres, France, 2008; Available online: https://www.bipm.org/documents/20126/2071204/JCGM_101_2008_E.pdf (accessed on 10 November 2025).

- The MITRE Corporation. MITRE ATT&CK STIX Data. Structured Threat Information Expression (STIX 2.1) Datasets for Enterprise, Mobile, and ICS ATT&CK. 2024. Available online: https://github.com/mitre-attack/attack-stix-data (accessed on 10 November 2025).

| Dimension | Our Approach | Nisioti et al. [21] | Karabiyik et al. [23] | Lakhdhar et al. [31] | Wang et al. [38] | Monteiro et al. [36] |
|---|---|---|---|---|---|---|
| Game Model | Non-zero-sum Bimatrix (MNE/PNE) | Bayesian (BNE) | 2 × 2 Normal-Form | Non-cooperative | ARA (Influence Diagram) | Bayesian (BNE) |
| ATT&CK | ✓ Explicit (14 Tactics) | ✓ Explicit | × | × | × | × |
| D3FEND | ✓ Explicit (6 Families) | × | × | × | × | × |
| Knowledge Coupling | ✓ ATT&CK↔D3FEND | △ ATT&CK + CVSS | △ Empirical (ForGe) | △ Internal (CSLib) | △ Probabilistic (HBN) | △ CVSS + OpenTelemetry |
| Weighting Method | ✓ AHP (10 Experts) | △ CVSS + SME | △ Rule-based | △ Parametric | △ Implicit | △ Scalar Parameters |
| Quantitative Utilities | ✓ 32 AHP Metrics | ✓ Payoff Functions | ✓ Payoff Matrix | ✓ Parametric | ✓ Utility Nodes | ✓ Closed-Form |
| Equilibrium | ✓ PNE & MNE | ✓ BNE | ✓ Pure/Mixed NE | ✓ Pure/Mixed NE | × ARA | ✓ BNE |
| DFR Focus | ✓ DFR | △ Post-mortem | △ Investigation Efficiency | ✓ Forensic Readiness | × Cyber Risk | ✓ Forensic Readiness |
| SME/SMB | ✓ Explicitly Targeted | △ Domain-Agnostic | △ Potential | △ Applicable | △ Feasible | △ Implicit |
| Standardization | △ ATT&CK, D3FEND, STIX | △ ATT&CK, STIX, CVSS | △ Open-Source | △ CVE/US-CERT | △ Self-Contained | △ CVSS, OpenTelemetry |
| Reproducibility | ✓ Code, Data, Seeds | △ Public Inputs, No Code | △ Code on Request | △ No Public Code/Data | △ No Code, Commercial | ✓ Benchmark, Repo |
| Key Differentiator | Integrated DFR | Bayesian Anti-Forensics | Tool Selection | Provability Taxonomy | Adversarial Risk Analysis | Microservice Observability |

| Symbol | Description |
|---|---|
| Game Structure | |
| | Attacker strategy set (ATT&CK tactics) |
| | Defender strategy set (D3FEND control families) |
| A | Attacker payoff matrix |
| D | Defender payoff matrix |
| Strategies | |
| | Attacker pure strategy (ATT&CK tactic) |
| | Defender pure strategy (D3FEND control family) |
| x | Attacker mixed strategy: probability vector over the attacker strategy set |
| y | Defender mixed strategy: probability vector over the defender strategy set |
| | Nash equilibrium mixed strategies |
| Utilities and Metrics | |
| | Normalized attacker utility |
| | Normalized defender utility |
| | AHP weight for attacker metric i |
| | AHP weight for defender metric j |
| | Attacker DFR metric i value |
| | Defender DFR metric j value |
| Notation Disambiguation | |
| Entry vs. A | A single payoff entry is a scalar; A is the entire matrix |
| x, y vs. x*, y* | x, y are elements/indices; x*, y* are mixed-strategy vectors |

| Defender strategy / ATT&CK tactic | t1 | t2 | t3 | t4 | t5 | t6 |
|---|---|---|---|---|---|---|
| s1 | 5 | 6 | 7 | 8 | 9 | 10 |
| s2 | 0 | 0 | 1 | 2 | 3 | 4 |
| s3 | 14 | 13 | 12 | 11 | 0 | 0 |
| s4 | 16 | 17 | 18 | 18 | 0 | 0 |
| s5 | 19 | 20 | 20 | 18 | 0 | 0 |
| s6 | 23 | 22 | 21 | 7 | 6 | 5 |
| s7 | 24 | 25 | 26 | 24 | 25 | 26 |
| s8 | 32 | 28 | 29 | 30 | 31 | 27 |
| s9 | 33 | 34 | 35 | 30 | 33 | 32 |
| s10 | 32 | 35 | 36 | 6 | 7 | 5 |
| s11 | 36 | 37 | 38 | 6 | 35 | 30 |
| s12 | 37 | 38 | 39 | 39 | 0 | 0 |
| s13 | 38 | 39 | 40 | 0 | 0 | 0 |
| s14 | 39 | 40 | 41 | 0 | 0 | 0 |

| | t1 | t2 | t3 | t4 | t5 | t6 |
|---|---|---|---|---|---|---|
| s1 | 5 | 7 | 1 | 1 | 7 | 5 |
| s2 | 6 | 8 | 10 | 2 | 6 | 6 |
| s3 | 7 | 9 | 11 | 5 | 8 | 11 |
| s4 | 8 | 10 | 25 | 25 | 9 | 12 |
| s5 | 9 | 11 | 24 | 8 | 10 | 13 |
| s6 | 10 | 12 | 24 | 8 | 11 | 10 |
| s7 | 11 | 21 | 20 | 10 | 12 | 7 |
| s8 | 18 | 14 | 25 | 9 | 5 | 25 |
| s9 | 13 | 15 | 23 | 12 | 4 | 8 |
| s10 | 14 | 16 | 22 | 11 | 14 | 9 |
| s11 | 15 | 17 | 20 | 12 | 13 | 14 |
| s12 | 16 | 18 | 21 | 13 | 15 | 25 |
| s13 | 17 | 20 | 20 | 10 | 16 | 17 |
| s14 | 12 | 19 | 29 | 16 | 17 | 16 |

| Metric | Description |
|---|---|
| Attack Success Rate (ASR) | Likelihood of successful attack execution |
| Resource Efficiency (RE) | Ratio of attack payoff to resource expenditure |
| Stealthiness (ST) | Ability to avoid detection and attribution |
| Data Exfiltration Effectiveness (DEE) | Success rate of data exfiltration attempts |
| Time-to-Exploit (TTE) | Speed of vulnerability exploitation before patching |
| Evasion of Countermeasures (EC) | Ability to bypass defensive measures |
| Attribution Resistance (AR) | Difficulty in identifying the attacker |
| Reusability of Attack Techniques (RT) | Extent to which attack techniques can be reused |
| Impact of Attacks (IA) | Magnitude of disruption or loss caused |
| Persistence (P) | Ability to maintain control over compromised systems |
| Adaptability (AD) | Capacity to adjust strategies in response to defenses |
| Deniability (DN) | Ability to deny involvement in attacks |
| Longevity (LG) | Duration of operations before disruption |
| Collaboration (CB) | Extent of collaboration with other attackers |
| Financial Gain (FG) | Monetary profit from attacks |
| Reputation and Prestige (RP) | Enhancement of attacker reputation |

| Metric | Description |
|---|---|
| Logging and Audit Trail Capabilities (L) | Extent of logging and audit trail coverage |
| Integrity and Preservation of Digital Evidence (I) | Ability to preserve evidence integrity and backups |
| Documentation and Compliance with Digital Forensic Standards (D) | Adherence to forensic standards and documentation quality |
| Volatile Data Capture Capabilities (VDCC) | Effectiveness of volatile data capture |
| Encryption and Decryption Capabilities (E) | Strength of encryption/decryption capabilities |
| Incident Response Preparedness (IR) | Quality of incident response plans and team readiness |
| Data Recovery Capabilities (DR) | Effectiveness of data recovery tools and processes |
| Network Forensics Capabilities (NF) | Sophistication of network forensic analysis |
| Staff Training and Expertise () | Level of staff training and certifications |
| Legal & Regulatory Compliance (LR) | Compliance with legal and regulatory requirements |
| Accuracy (A) | Consistency and correctness of forensic analysis |
| Completeness (C) | Extent of comprehensive data collection and analysis |
| Timeliness (T) | Speed and efficiency of forensic investigation process |
| Reliability (R) | Consistency and repeatability of forensic techniques |
| Validity (V) | Adherence to legal and scientific standards |
| Preservation () | Effectiveness of evidence preservation procedures |

| Metric (Attacker) | Weight | Metric (Defender) | Weight |
|---|---|---|---|
| ASR | 0.1094 | L | 0.0881 |
| RE | 0.0476 | I | 0.0881 |
| ST | 0.0921 | D | 0.0423 |
| DEE | 0.0887 | VDCC | 0.0642 |
| TTE | 0.0476 | E | 0.0461 |
| EC | 0.0887 | IR | 0.0881 |
| AR | 0.0814 | DR | 0.0481 |
| RT | 0.0476 | NF | 0.0819 |
| IA | 0.0921 | Staff Training and Expertise | 0.0819 |
| P | 0.0814 | LR | 0.0481 |
| AD | 0.0571 | A | 0.0557 |
| DN | 0.0264 | C | 0.0460 |
| LG | 0.0433 | T | 0.0693 |
| CB | 0.0262 | R | 0.0531 |
| FG | 0.0210 | V | 0.0423 |
| RP | 0.0487 | Preservation | 0.0557 |
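The weights in the table above are rounded to four decimals, so each column sums to slightly less than 1: 0.9993 for the attacker metrics and 0.9990 for the defender metrics. The short sketch below renormalises them before they are used in a weighted-sum utility. The dictionary keys "StaffTraining" and "Preservation" are placeholder names for the two defender metrics whose symbols were lost in extraction.

```python
ATTACKER_W = {
    "ASR": 0.1094, "RE": 0.0476, "ST": 0.0921, "DEE": 0.0887, "TTE": 0.0476,
    "EC": 0.0887, "AR": 0.0814, "RT": 0.0476, "IA": 0.0921, "P": 0.0814,
    "AD": 0.0571, "DN": 0.0264, "LG": 0.0433, "CB": 0.0262, "FG": 0.0210,
    "RP": 0.0487,
}
DEFENDER_W = {
    "L": 0.0881, "I": 0.0881, "D": 0.0423, "VDCC": 0.0642, "E": 0.0461,
    "IR": 0.0881, "DR": 0.0481, "NF": 0.0819, "StaffTraining": 0.0819,
    "LR": 0.0481, "A": 0.0557, "C": 0.0460, "T": 0.0693, "R": 0.0531,
    "V": 0.0423, "Preservation": 0.0557,
}


def renormalise(weights):
    """Rescale rounded weights so they sum to 1 (up to floating-point error)."""
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


for label, table in (("attacker", ATTACKER_W), ("defender", DEFENDER_W)):
    norm = renormalise(table)
    print(f"{label}: raw sum {sum(table.values()):.4f}, renormalised sum {sum(norm.values()):.4f}")
```

Three defender metrics (L, I, IR) share the largest weight, 0.0881. Any "top metric" report built on these weights should therefore handle ties explicitly.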

| Lakhdhar et al. [31] | Monteiro et al. [36] | This Work | |

|---|---|---|---|

| Forensic objective | Investigation-ready infrastructure with cognitive defence | Adaptive observability and evidence collection for microservices | ATT&CK/D3FEND-grounded readiness planning for APT-focused organisations |

| Game formulation | 2-player non-cooperative game; pure/mixed Nash equilibria | 2-player Bayesian game; Bayesian Nash equilibria | Non-zero-sum bimatrix game, zero-sum variant, and evolutionary dynamics |

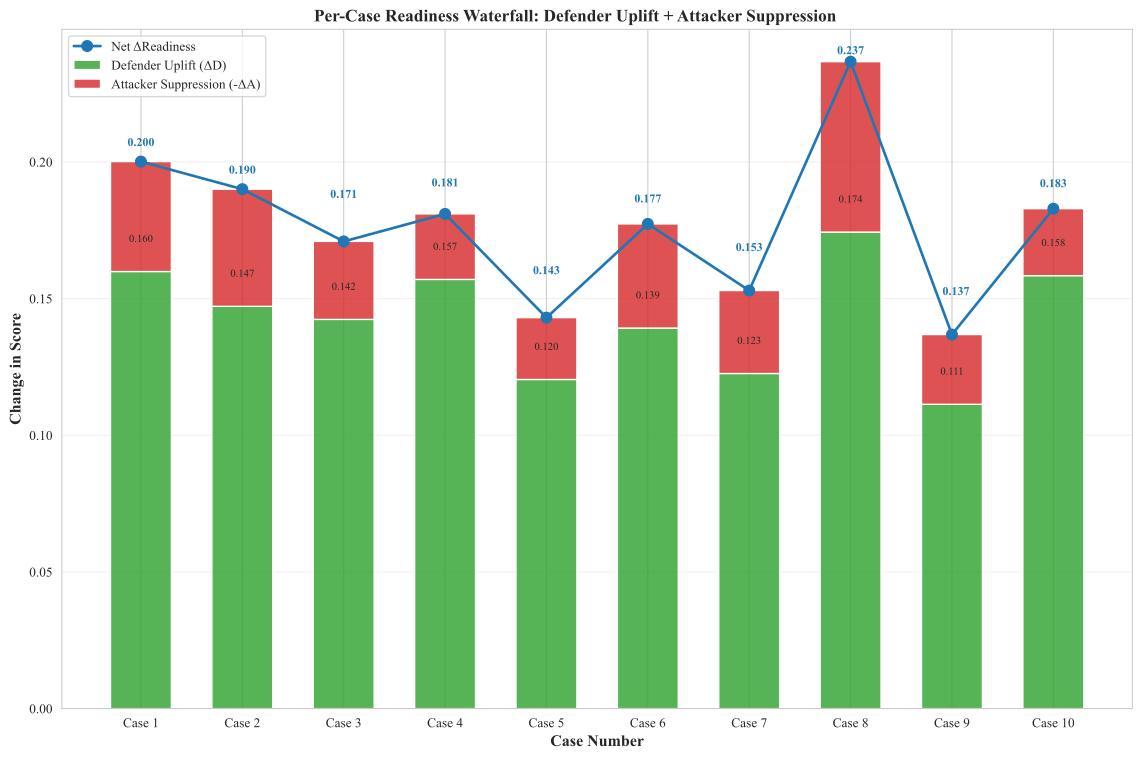

| Reported quantitative effects | Security cost gain of ≈45– vs. static defence; false decisions reduced by ≈70– | improvements of ≈– vs. full/sampling observability | Median readiness improvement of (95% CI [16.3, 19.7]); linked attacker metrics reduced by ≈15– |

| Evaluation assets | Symbolic scenario library (CSLib); parameter sweeps over | TrainTicket benchmark (41 microservices, – concurrent users) | Empirical ATT&CK→D3FEND mappings (10 APT groups); 10 calibrated synthetic readiness profiles |

| Released artefacts | Not publicly documented | Prototype and evaluation scripts (GitHub:no public version available) | STIX extraction scripts, mapping CSVs, and YAML manifests for profile generation (GitHub; version: v1.0) |

| Case | L | I | D | VDCC | E | IR | DR | NF | LR | A | C | T | R | V | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.5 | 0.6 | 0.3 | 0.4 | 0.5 | 0.6 | 0.2 | 0.5 | 0.2 | 0.6 | 0.7 | 0.2 | 0.6 | 0.1 | 0.2 | 0.4 |
| 2 | 0.1 | 0.2 | 0.7 | 0.6 | 0.1 | 0.2 | 0.6 | 0.1 | 0.6 | 0.4 | 0.2 | 0.6 | 0.2 | 0.1 | 0.6 | 0.5 |
| 3 | 0.6 | 0.1 | 0.6 | 0.5 | 0.6 | 0.4 | 0.2 | 0.2 | 0.6 | 0.1 | 0.6 | 0.1 | 0.2 | 0.6 | 0.1 | 0.6 |
| 4 | 0.7 | 0.2 | 0.2 | 0.7 | 0.2 | 0.6 | 0.4 | 0.6 | 0.2 | 0.1 | 0.2 | 0.6 | 0.1 | 0.2 | 0.6 | 0.2 |
| 5 | 0.7 | 0.6 | 0.3 | 0.5 | 0.6 | 0.7 | 0.4 | 0.2 | 0.6 | 0.3 | 0.6 | 0.2 | 0.1 | 0.6 | 0.2 | 0.3 |
| 6 | 0.5 | 0.7 | 0.5 | 0.7 | 0.5 | 0.4 | 0.6 | 0.6 | 0.3 | 0.2 | 0.6 | 0.1 | 0.6 | 0.2 | 0.4 | 0.6 |
| 7 | 0.4 | 0.6 | 0.3 | 0.6 | 0.7 | 0.6 | 0.2 | 0.2 | 0.7 | 0.6 | 0.2 | 0.7 | 0.6 | 0.2 | 0.5 | 0.4 |
| 8 | 0.1 | 0.2 | 0.6 | 0.5 | 0.6 | 0.2 | 0.5 | 0.4 | 0.2 | 0.6 | 0.1 | 0.2 | 0.6 | 0.7 | 0.6 | 0.2 |
| 9 | 0.6 | 0.3 | 0.2 | 0.6 | 0.2 | 0.3 | 0.6 | 0.6 | 0.4 | 0.2 | 0.6 | 0.3 | 0.2 | 0.6 | 0.2 | 0.5 |
| 10 | 0.5 | 0.6 | 0.3 | 0.2 | 0.6 | 0.2 | 0.7 | 0.2 | 0.5 | 0.6 | 0.2 | 0.4 | 0.2 | 0.6 | 0.5 | 0.2 |

| Case | L | I | D | VDCC | E | IR | DR | NF | LR | A | C | T | R | V | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.8 | 0.8 | 0.7 | 0.9 | 0.8 | 0.8 | 0.7 | 0.9 | 0.7 | 0.6 | 0.8 | 0.7 | 0.8 | 0.7 | 0.7 | 0.7 |
| 2 | 0.9 | 0.8 | 0.9 | 0.8 | 0.7 | 0.9 | 0.7 | 0.8 | 0.6 | 0.7 | 0.7 | 0.8 | 0.7 | 0.6 | 0.6 | 0.8 |
| 3 | 0.8 | 0.7 | 0.8 | 0.9 | 0.8 | 0.9 | 0.8 | 0.9 | 0.7 | 0.8 | 0.8 | 0.7 | 0.7 | 0.7 | 0.8 | 0.7 |
| 4 | 0.8 | 0.9 | 0.9 | 0.8 | 0.7 | 0.9 | 0.9 | 0.8 | 0.7 | 0.7 | 0.7 | 0.8 | 0.7 | 0.7 | 0.6 | 0.8 |
| 5 | 0.7 | 0.7 | 0.9 | 0.7 | 0.8 | 0.9 | 0.7 | 0.9 | 0.8 | 0.8 | 0.7 | 0.7 | 0.6 | 0.8 | 0.7 | 0.7 |
| 6 | 0.7 | 0.8 | 0.8 | 0.9 | 0.7 | 0.8 | 0.6 | 0.9 | 0.6 | 0.7 | 0.6 | 0.8 | 0.7 | 0.9 | 0.7 | 0.7 |
| 7 | 0.8 | 0.7 | 0.9 | 0.7 | 0.6 | 0.9 | 0.8 | 0.9 | 0.7 | 0.8 | 0.7 | 0.7 | 0.8 | 0.7 | 0.8 | 0.8 |
| 8 | 0.7 | 0.6 | 0.9 | 0.8 | 0.8 | 0.9 | 0.8 | 0.8 | 0.8 | 0.7 | 0.7 | 0.8 | 0.7 | 0.6 | 0.8 | 0.7 |
| 9 | 0.9 | 0.7 | 0.8 | 0.7 | 0.7 | 0.9 | 0.7 | 0.8 | 0.7 | 0.8 | 0.8 | 0.7 | 0.6 | 0.7 | 0.7 | 0.7 |
| 10 | 0.8 | 0.8 | 0.9 | 0.7 | 0.7 | 0.9 | 0.8 | 0.7 | 0.7 | 0.8 | 0.7 | 0.7 | 0.8 | 0.8 | 0.6 | 0.8 |

| Res. | Def. | Att. | Scen. | Final Val. | Avg. Att. | Avg. Def. | Avg. Read. |
|---|---|---|---|---|---|---|---|
| 1 | 10 | 5 | a | 0.56 | 0.84 | – | 0.00 |
| 1 | 15 | 5 | b | 0.52 | 0.94 | – | 0.00 |
| 1 | 25 | 5 | c | 0.61 | 0.69 | – | 0.00 |
| 3 | 10 | 5 | d | 0.96 | 0.58 | – | 0.00 |
| 3 | 25 | 5 | f | 1.00 | 1.00 | – | 0.00 |
| 5 | 15 | 5 | h | 0.91 | 0.75 | – | 0.03 |

| | Low | Medium | High |
|---|---|---|---|
| Attack success rate | 0.25 ± 0.0038 | 0.53 ± 0.0044 | 0.75 ± 0.0038 |
| Evidence collection rate | 0.93 ± 0.0022 | 0.96 ± 0.0017 | 0.94 ± 0.0021 |

| ID | SME Type | SME Malic. | SME Str. | SME Impact | SME CVSS | SMB Type | SMB Malic. | SMB Str. | SMB Impact | SMB CVSS | Workload | Avail. | Conf. | Integ. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | DDoS | 0.75 | 1.12 | High | 7 | DDoS | 0.75 | 1.12 | High | 7 | 1.125 | 0.8 | 0 | 0 |
| 1 | SQLI | 0.75 | 1.12 | High | 9 | SQLI | 0.75 | 1.12 | High | 9 | 2.7 | 2.58 | 7.2 | 7.2 |
| 2 | DDoS | 0.75 | 1.12 | Med | 0 | DDoS | 0.75 | 1.12 | Med | 0 | 1.125 | 0.96 | 0 | 0 |
| 3 | SQLI | 0.75 | 1.12 | High | 9 | SQLI | 0.75 | 1.12 | High | 9 | 1.125 | 1.005 | 7.2 | 7.2 |
| 4 | DDoS | 0.75 | 1.12 | Low | 0 | DDoS | 0.75 | 1.12 | Low | 0 | 1.125 | 0.96 | 0 | 0 |
| 5 | SQLI | 0.75 | 1.12 | Med | 7 | SQLI | 0.75 | 1.12 | Med | 7 | 2.7 | 2.58 | 2.8 | 2.8 |

| ID | SME Type | SME Malic. | SME Str. | SME Impact | SME CVSS | SMB Type | SMB Malic. | SMB Str. | SMB Impact | SMB CVSS | Workload | Avail. | Conf. | Integ. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | SQLI | 0.49 | 0.73 | Med | 7 | SQLI | 0.49 | 0.73 | Med | 7 | 0.73 | 0.61 | 2.8 | 2.8 |
| 1 | DDoS | 0.75 | 1.12 | High | 7 | DDoS | 0.75 | 1.12 | High | 7 | 1.12 | 0.80 | 0 | 0 |
| 2 | DDoS | 0.80 | 1.21 | High | 7 | DDoS | 0.80 | 1.21 | High | 7 | 1.21 | 0.80 | 0 | 0 |
| 3 | SQLI | 0.16 | 0.24 | High | 9 | SQLI | 0.16 | 0.24 | High | 9 | 0.24 | 0.12 | 7.2 | 7.2 |
| 4 | SQLI | 0.58 | 0.87 | High | 9 | SQLI | 0.58 | 0.87 | High | 9 | 2.45 | 2.33 | 7.2 | 7.2 |
| 5 | DDoS | 0.84 | 1.26 | High | 7 | DDoS | 0.84 | 1.26 | High | 7 | 2.84 | 0.80 | 0 | 0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vaseghipanah, M.; Jabbehdari, S.; Navidi, H. A Game-Theoretic Approach for Quantification of Strategic Behaviors in Digital Forensic Readiness. J. Cybersecur. Priv. 2025, 5, 105. https://doi.org/10.3390/jcp5040105

Vaseghipanah M, Jabbehdari S, Navidi H. A Game-Theoretic Approach for Quantification of Strategic Behaviors in Digital Forensic Readiness. Journal of Cybersecurity and Privacy. 2025; 5(4):105. https://doi.org/10.3390/jcp5040105

Chicago/Turabian Style: Vaseghipanah, Mehrnoush, Sam Jabbehdari, and Hamidreza Navidi. 2025. "A Game-Theoretic Approach for Quantification of Strategic Behaviors in Digital Forensic Readiness" Journal of Cybersecurity and Privacy 5, no. 4: 105. https://doi.org/10.3390/jcp5040105

APA Style: Vaseghipanah, M., Jabbehdari, S., & Navidi, H. (2025). A Game-Theoretic Approach for Quantification of Strategic Behaviors in Digital Forensic Readiness. Journal of Cybersecurity and Privacy, 5(4), 105. https://doi.org/10.3390/jcp5040105