Abstract

Frame-wise steganalysis of low-bit-rate speech streams is a crucial task because it enables active defense. However, there is no common theory on how to extract steganalysis features for frame-wise steganalysis, and existing frame-wise steganalysis methods cannot extract fine-grained steganalysis features. Therefore, in this paper, we propose a frame-wise steganalysis method based on mask-gating attention and bilinear codeword feature interaction mechanisms. First, we use the mask-gating attention mechanism to dynamically learn the importance of the codewords. Second, we use the bilinear codeword feature interaction mechanism to capture informative second-order codeword feature interaction patterns in a fine-grained way. Finally, we use multiple fully connected layers with a residual structure to capture higher-order codeword interaction features while preserving lower-order interaction features. The experimental results show that our method outperforms the state-of-the-art frame-wise steganalysis method on large steganography datasets. On 1000K testing samples, our method achieves a detection accuracy of 74.46%, compared with 72.32% for the state-of-the-art method.

1. Introduction

Steganography is a technique for covert communication in which confidential information is hidden within multimedia carriers such as images [1,2], text [3,4], video [5,6], and audio [7,8] without arousing suspicion. Steganography can serve legitimate purposes in protecting sensitive information, but it can also be misused for illegal activities, including terrorism. To counteract such misuse, developing steganalysis methods [9,10] is crucial. Steganalysis is the field dedicated to detecting and analyzing hidden information within multimedia carriers.

Network streaming media services [11] have grown significantly in popularity in recent years. This growth can be attributed to the growing demand for higher bandwidth and the convergence of networks. In this context, low-bit-rate compressed speech codecs have become a preferred choice for these services because of their efficiency. Low-bit-rate speech codecs [11,12] are designed to compress speech signals while maintaining an acceptable level of quality. Owing to their efficient compression and satisfactory quality, low-bit-rate speech streams have become an attractive carrier for embedding secret information.

Existing steganography techniques for low-bit-rate speech streams include approaches such as cochlear delay-based methods [13], spread spectrum-based methods [14], least significant bit (LSB) modification-based methods [15,16], and quantization index modulation (QIM)-based methods [17,18,19,20,21]. Cochlear delay-based methods exploit perceptual models of human auditory processing by modifying temporal characteristics such as inter-channel or inter-frame delays to embed information. Spread-spectrum-based methods [14] embed secret information by spreading it across a wide frequency band within the host signal. In the LSB approach [15,16], the values of certain code elements are directly manipulated to embed secret information. By altering the least significant bits of selected elements, hidden data can be inserted into the speech stream. This approach is relatively simple to implement and generally maintains the perceptual quality of the speech signal. However, LSB-based methods are typically very fragile and vulnerable to both intentional and unintentional modifications, making them less robust against steganalysis and signal-processing attacks. QIM-based steganography methods [17,18,19,20,21] utilize quantization index modulation to embed data by carefully modifying the search space of vector quantization (VQ) codewords during the encoding process. QIM-based approaches are designed to balance the trade-offs among distortion, robustness, and embedding capacity, making them particularly suitable for low-bit-rate speech codecs [22].
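The following minimal Python sketch makes the contrast between the LSB and QIM approaches concrete, using a toy scalar codebook. It is illustrative only. Real codecs quantize LSF vectors with multi-dimensional codebooks. Splitting the codebook by index parity is also a simplified, hypothetical partition and does not reproduce the CNV [19], ACL [17], or HPS [21] schemes. All function names are hypothetical.

```python
from typing import List, Optional


def lsb_embed(index: int, bit: int) -> int:
    """LSB-style embedding: overwrite the least significant bit of a codeword index."""
    return (index & ~1) | bit


def qim_encode(target: float, codebook: List[float], bit: Optional[int] = None) -> int:
    """Return the index of the codeword closest to `target`.

    With bit=None this is ordinary (scalar) quantization over the whole codebook.
    With bit in {0, 1}, the search is restricted to the sub-codebook whose indices
    have parity equal to `bit` (a toy partition chosen for illustration).
    """
    if bit is None:
        candidates = range(len(codebook))
    else:
        candidates = range(bit, len(codebook), 2)
    return min(candidates, key=lambda i: abs(codebook[i] - target))


def qim_extract(index: int) -> int:
    """The receiver recovers the bit from the sub-codebook that contains the index."""
    return index % 2


codebook = [i / 10 for i in range(8)]      # hypothetical codebook: 0.0, 0.1, ..., 0.7
plain = qim_encode(0.32, codebook)         # unconstrained search
stego = qim_encode(0.32, codebook, bit=0)  # search restricted to even indices
print(plain, stego, qim_extract(stego))
```

LSB embedding overwrites an index after encoding. QIM instead only restricts which codewords the encoder may choose, so the stego index remains a nearby quantization of the input (0.4 instead of 0.3 for a target of 0.32 in this toy case).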

Although all four types of techniques have been explored in the literature, QIM-based steganography generally offers superior trade-offs in terms of imperceptibility, robustness, and compatibility with existing codecs. Consequently, most recent research on steganalysis in low-bit-rate speech coding has focused on detecting QIM-based methods. Accordingly, our work is dedicated to addressing the challenges of frame-wise detection for QIM-based steganography in low-bit-rate speech streams.

To detect QIM-based steganography techniques [17,18,19,20,21], existing steganalysis methods [21,23,24,25,26,27,28,29,30,31] can be categorized into two types. The first type [24,25,26,32] involves utilizing the statistical characteristics of the codeword distribution to extract steganalysis features. Common statistical characteristics used include the Markov transition matrix [24,32], entropy, and conditional probability. These features are employed to analyze the presence of hidden information in the speech stream. The second category of steganalysis methods leverages deep learning techniques [21,27,28,29,30,31], including Recurrent Neural Networks (RNNs) [21,27,31], convolutional neural networks (CNNs) [21,31], and attention mechanisms [28,29], to automatically extract discriminative features from VoIP streams. These approaches, which benefit from the powerful representation capabilities of neural networks, have demonstrated promising performance in detecting various steganographic schemes.

However, the steganalysis methods described above are intended to detect whether a segment of a speech stream contains secret information. In contrast to segment-wise detection [21,23,24,25,26,27,28,29,30,31], frame-wise detection [33,34] is a key prerequisite for unperceived active steganography defense. Once the frames that carry secret information have been located, the values of some codewords in those frames can be slightly adjusted at the identified embedding positions. This destroys the hidden channel and prevents the receiver from fully extracting the secret information. Therefore, this paper focuses on frame-wise detection.

Frame-wise detection in low-bit-rate speech streams [33,34] presents specific challenges for steganalysis feature extraction. Segment-wise detection [21,23,24,25,26,27,28,29,30,31] leverages temporal correlations and spatial information across longer contexts. Frame-wise detection [33,34], by contrast, treats each frame in isolation. This restricts the ability to use temporal dependencies and removes access to spatial relationships that exist across the entire speech stream. As a result, identifying covert modifications becomes significantly more difficult.

To address frame-wise detection, Wei et al. [33] proposed a method based on multi-dimensional codeword correlation modeling that captures four dimensions: global-to-local, local-to-global, forward, and backward. Li et al. [34] subsequently introduced a dual-domain representation approach. Despite their effectiveness in certain scenarios, these methods exhibit fundamental limitations that restrict their capacity to fully capture frame-level steganalysis features. First, existing frame-wise steganalysis methods primarily operate at the bit-wise level and overlook vector-level codeword interactions. Typically, they transform discrete codeword indices into embedding vectors, which are then concatenated into a single large vector. Subsequent processing relies on bit-wise operations without explicitly modeling the interactions among the embedded codewords. This design choice hinders the model’s ability to learn rich, meaningful dependencies within a frame. Second, these methods predominantly focus on extracting higher-order features through stacked nonlinear transformations while lacking explicit mechanisms to preserve and exploit lower-order interactions. This limitation can result in models with complex, difficult-to-optimize parameter spaces and can lead to convergence issues during training [35].

Our approach introduces two mechanisms specifically designed to overcome these limitations, representing novel applications in the field of speech steganalysis. The first mechanism is the mask-gating attention mechanism [36], which is designed to learn adaptive importance weights for embedded codewords within a frame. This mechanism enables the model to dynamically emphasize the most informative codewords while simultaneously capturing global contextual information across the entire frame. Theoretically, it addresses the limitation of uniform or static weighting schemes employed by previous methods, offering a principled means to allocate modeling capacity where it is most impactful. The second mechanism is the bilinear feature interaction module, which explicitly captures second-order multiplicative interactions between codeword embeddings. According to established feature interaction theory [37], bilinear operations enable the efficient and expressive learning of pairwise feature combinations in a parameter-efficient manner. This directly mitigates the fundamental limitation of relying solely on additive or shallow nonlinear transformations used in existing steganalysis models.

By integrating these mechanisms within a deep residual network framework, our method captures both lower-order and higher-order codeword feature interactions in a unified and theoretically grounded manner. This design addresses the architectural limitations of existing methods [33,34] and improves the extraction of discriminative steganalysis features at the frame level. Existing frame-wise methods [33,34] first use an embedding layer to map all the codewords in a frame to codeword vectors. They then concatenate those vectors into one large vector, which restricts all subsequent operations to the bit-wise level and neglects the correlations between codewords at the vector level. Because higher-order features are obtained only through nonlinear functions applied to this vector, these methods do not retain lower-order features. Their parameters are also difficult to optimize, and the models are prone to convergence issues [35]. Therefore, this paper proposes a frame-wise steganalysis method that combines mask-gating attention [36] and bilinear codeword feature interaction [37] mechanisms. The mask-gating attention module learns to dynamically assess the importance of individual codewords within a frame. The bilinear interaction module captures informative second-order interaction patterns among codewords. Finally, multiple fully connected layers with residual connections model higher-order interactions while effectively preserving lower-order information.

For frame-wise detection [33,34] in low-bit-rate speech streams, the main challenge is how to extract steganalysis features from individual speech frames. Therefore, in this paper, we propose a frame-wise steganalysis method based on mask-gating attention [36] and bilinear codeword feature interaction [37] mechanisms. First, we utilize the mask-gating attention mechanism to dynamically learn the importance of the codewords. Second, bilinear feature interaction is used to capture informative second-order codeword interaction patterns. Finally, multiple fully connected layers with residual structures are utilized to capture higher-order interaction features while preserving lower-order interaction features. The contributions of this paper are as follows:

- By introducing a mask-gating attention mechanism, the approach overcomes the uniform weighting limitation in existing frame-wise steganalysis methods, enabling dynamic emphasis on informative codewords and effective extraction of global contextual information.

- Through the integration of bilinear feature interaction layers and a deep residual structure, the proposed method addresses the neglect of vector-level codeword correlations in existing approaches, capturing both lower- and higher-order interaction patterns for improved frame-wise detection in low-bit-rate speech streams.

2. Related Works

For the detection of QIM-based steganography [17,18,19,20,21], existing steganalysis methods [21,23,24,25,26,27,28,29,30,31] can be divided into two types.

The first type is segment-wise detection methods [23,24,25,26,27,28,29,30,31], which aim to detect whether a segment of a speech stream contains secret information. Research on segment-wise steganalysis has developed in two stages. In the first stage, many steganalysis methods [24,25,26,32] follow the pattern of handcrafted features plus a classifier. They extract steganalysis features from the statistical characteristics of codebook correlations. Common statistical characteristics include the Markov transition matrix, entropy, and conditional probability, which are used to analyze the presence of hidden information in speech streams. Specifically, in QIM steganography [23,24,25,26], secret information is embedded into low-bit-rate speech streams by modifying the LPC (Linear Predictive Coding) indices. Li et al. [26] observed that this embedding changes the LPC indices and proposed a method to quantify the correlation characteristics of these indices. They used first-order Markov transition probabilities to construct a categorical feature vector for each LPC index. This vector captures the relevant properties and variations of the LPC indices, so steganographic modifications in speech streams can be detected. Furthermore, Bayesian network (BN)-based techniques have been proposed to detect hidden information. One example is the steganography-sensitive Codeword Bayesian Network (CBN) introduced in [33], which exploits the correlation changes in codeword spatiotemporal transitions. The CBN model accounts for the correlation patterns and transitions of codewords. Bayesian inference is then used for classification, which allows hidden information to be identified accurately.
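The following short Python sketch illustrates handcrafted first-stage features of this kind. It estimates first-order Markov transition probabilities for a sequence of quantization indices and flattens them into a feature vector. This is a generic illustration of the idea, not the exact feature set of [26]. The choice of which index sequence to model (within a frame or across frames) and the function names are assumptions.

```python
from typing import List, Sequence


def transition_probabilities(indices: Sequence[int], n_values: int) -> List[List[float]]:
    """Estimate first-order Markov transition probabilities P(next = b | current = a).

    `indices` is a sequence of quantization indices for one codeword position
    (e.g., one LPC index observed over consecutive frames), with values in
    range(n_values). Rows for values that never occur are left as all zeros.
    """
    counts = [[0] * n_values for _ in range(n_values)]
    for current, nxt in zip(indices, indices[1:]):
        counts[current][nxt] += 1
    probs = []
    for row in counts:
        total = sum(row)
        probs.append([c / total if total else 0.0 for c in row])
    return probs


def markov_feature_vector(indices: Sequence[int], n_values: int) -> List[float]:
    """Flatten the transition matrix into a handcrafted feature vector."""
    return [p for row in transition_probabilities(indices, n_values) for p in row]


print(transition_probabilities([0, 1, 0, 1, 1], n_values=2))  # [[0.0, 1.0], [0.5, 0.5]]
```

In a handcrafted pipeline, vectors like this would then be passed to a conventional classifier.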

During the second stage of research development [27,28,29,30,31], many deep learning-based approaches have emerged, motivated by the success of deep learning in fields such as computer vision and natural language processing. Researchers have explored models such as Recurrent Neural Networks (RNNs) [21,27,31], convolutional neural networks (CNNs) [21,31], and attention mechanisms [28,29] to improve steganalysis in VoIP streams. For example, Lin et al. [27] proposed an RNN-based method to capture temporal dependencies in quantization index sequences, marking the first deep learning approach for QIM steganography detection in VoIP. Yang et al. [29] introduced a model leveraging attention mechanisms to extract correlation features for steganalysis. They further enhanced this work [28] by combining an attention module with a convolutional network to model the semantic hierarchical structure of speech, leading to improved detection performance. Hu et al. [21] presented the Steganalysis Feature Fusion Network (SFFN), a steganalysis framework that integrates Long Short-Term Memory (LSTM) and a CNN and is designed to detect multiple parallel steganography techniques and improve detection generality. Wang et al. [30] developed the key feature extraction and fusion network to enable fast and efficient detection. Additionally, Li et al. [31] proposed a steganalysis approach based on code element (CE) embedding, utilizing Bidirectional Long Short-Term Memory (Bi-LSTM) and a CNN with attention mechanisms.

The second type is frame-wise detection methods [33,34], which aim to determine whether each individual frame contains covert information. Compared with segment-wise detection, frame-wise detection is essential for achieving low-latency and imperceptible active steganography defense, which is why this paper focuses on it. For this task, Wei et al. [33] proposed a method that analyzes codeword correlations from multiple perspectives, including global-to-local, local-to-global, and bidirectional dependencies. Li et al. [34] further introduced a dual-domain representation approach to enhance detection performance.

3. Proposed Method

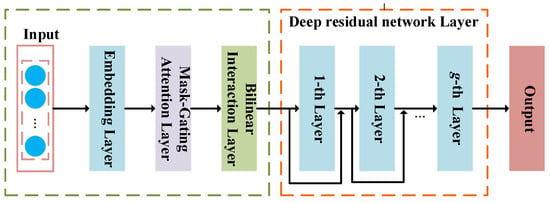

For frame-wise detection [33,34] in low-bit-rate speech streams, the main challenge is how to extract steganalysis features from individual speech frames. As frame-wise detection cannot utilize long-term dependencies, a useful and promising approach is to construct complex features based on the codewords within each speech frame. Therefore, this paper proposes a frame-wise steganalysis method based on mask-gating attention and bilinear interaction mechanisms (MGA-BI), which can dynamically capture the importance of features and their interactions in a fine-grained way. The framework of our method is shown in Figure 1.

Figure 1.

Framework of the proposed method.

3.1. Overview

This section describes the design of the proposed model, which is summarized in Algorithm 1. The model comprises five layers: a codeword embedding layer, a mask-gating attention layer, a bilinear interaction layer, a deep residual network layer, and an output layer. The embedding layer [38] converts the sparse one-hot representation of each codeword into a dense, low-dimensional vector. The mask-gating [36] attention layer first learns a mask, which is a vector of weights that selectively emphasizes important dimensions of the feature embedding. The mask is then applied through element-wise multiplication, which lets the model efficiently capture complex interactions at the element level. The mask-gating attention layer also helps reduce the impact of noise in the feature embedding while highlighting valuable signals. Building on the output of the mask-gating attention layer, the bilinear interaction [37] layer captures second-order codeword interaction features in a fine-grained way. Finally, the output of the bilinear interaction layer is passed to a deep neural network with a residual structure. The output layer then returns the prediction score.

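Algorithm 1: Overview of the proposed model

Input: the input sequence of the k codewords in one speech frame. Output: the prediction score.

1. Map each codeword to an embedding vector (codeword embedding layer).
2. Flatten the embedding vectors and reweight their elements with the mask-gating attention layer.
3. Reshape the result into k codeword vectors and compute their second-order interactions (bilinear interaction layer).
4. Flatten the interaction vectors and pass them through the deep residual network layers.
5. Compute the prediction score in the output layer and return it.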

For frame-wise detection in low-bit-rate speech streams, the input takes the following form:

$$X = [c_1, c_2, \ldots, c_k],$$

where k denotes the number of codewords in the speech frame and $c_i$ is the i-th codeword. Because the values of the codewords are discrete, the embedding layer transforms each codeword into a feature vector, which gives a distributed representation of the codewords [38]. After this mapping, the subsequent layers jointly analyze all codeword vectors in the same frame and explicitly learn the relationships among them. By computing the interactions among these vectors, the model can identify dependencies that reflect how the value of one codeword relates to the others within the frame. In this way, it learns the internal correlation structure of the frame. The embedding layer is described as follows:

$$\mathbf{E} = \mathrm{Emb}(X) = [\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_k],$$

where $\mathrm{Emb}(\cdot)$ denotes the embedding layer and $\mathbf{e}_i$ is the embedding vector of $c_i$. Before this transformation, each codeword is represented using one-hot encoding. The tensor $\mathbf{E}$ has dimensions (B, k, f), where B is the batch size and f is the embedding dimension.

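Moreover, to study the importance of the elements in $\mathbf{E}$, $\mathbf{E}$ is reshaped as $\mathbf{V}_{\mathrm{emb}}$, defined by the following equation:

$$\mathbf{V}_{\mathrm{emb}} = \mathrm{Reshape}(\mathbf{E}).$$

The dimension of $\mathbf{V}_{\mathrm{emb}}$ is (B, k × f), where k × f denotes the flattening of the k and f dimensions into a single feature axis. Then, the mask-gating [36] attention mechanism is applied to $\mathbf{V}_{\mathrm{emb}}$ as follows:

$$\mathbf{V}_{\mathrm{MG}} = \mathrm{MaskGating}(\mathbf{V}_{\mathrm{emb}}),$$

where $\mathbf{V}_{\mathrm{MG}}$ denotes the output of the mask-gating attention [36] mechanism.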

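To capture fine-grained features at the vector level, $\mathbf{V}_{\mathrm{MG}}$ is reshaped as $\mathbf{M} = [\mathbf{m}_1, \mathbf{m}_2, \ldots, \mathbf{m}_k]$, whose dimension is (B, k, f). The bilinear interaction layer is then applied to $\mathbf{M}$. Extracting fine-grained features in this way yields more useful and complex second-order codeword interaction patterns, which can improve the performance of the detection model:

$$\mathbf{P} = \mathrm{Bilinear}(\mathbf{M}),$$

where $\mathbf{P}$ denotes the output of the bilinear interaction layer. The dimension of $\mathbf{P}$ is (B, k(k-1)/2, f), that is, one f-dimensional vector per codeword pair. $\mathbf{P}$ is reshaped as $\mathbf{a}^{(0)}$, whose dimension is (B, k(k-1)/2 × f).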

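Next, $\mathbf{a}^{(0)}$ is input into multiple fully connected layers with residual connections, which extract higher-order codeword interaction features:

$$\mathbf{a}^{(G)} = \mathrm{ResDNN}(\mathbf{a}^{(0)}),$$

where G is the number of fully connected layers. The feedforward process is detailed in Section 3.5.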

3.2. Codeword Embedding Layer

The input of frame-wise tasks for low-bit-rate speech streams is made up of numerous codewords. The values of these codewords are discrete. Such discrete codewords are encoded as one-hot vectors, resulting in feature spaces with unreasonably high dimensionality for large vocabularies. The embedding layer [38] is used to solve this problem. These codewords are transformed into codeword vectors by the embedding layer, allowing the DNN to learn more complex codeword features and semantic codeword properties.

The transformed embedding vector for the i-th codeword $c_i$ is obtained via

$$\mathbf{e}_i = \mathbf{W}^{e}_i \mathbf{x}_i,$$

where $\mathbf{W}^{e}_i$ is the embedding matrix for the i-th codeword and $\mathbf{x}_i$ denotes the one-hot representation of $c_i$. The dimension of $\mathbf{W}^{e}_i$ is (f, n), where n is the maximum value of the i-th codeword $c_i$ of $X$ and f is the dimension of the transformed embedding vector.

Because each codeword has its own characteristics, k embedding matrices are used for the k codewords in the speech frame. Transforming each codeword in the speech frame into a feature vector yields

$$\mathbf{E} = [\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_k],$$

where $\mathbf{E}$ is the output of the embedding layer, with a dimension of (B, k, f), and $\mathbf{e}_i$ denotes the transformed embedding vector of the i-th codeword $c_i$.

The mask-gating attention [36] mechanism operates jointly on all elements of the frame embedding. Therefore, the output $\mathbf{E}$ of the embedding layer is reshaped as $\mathbf{V}_{\mathrm{emb}}$, with a dimension of (B, k × f).
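The following pure-Python sketch shows that multiplying a per-codeword embedding matrix of shape (f, n) by a one-hot vector simply selects one column. This is why embedding layers are implemented as table lookups; PyTorch's `torch.nn.Embedding` is one such lookup layer. The sketch assumes that codeword values are 0-based indices smaller than the number of columns of their table. The tables and function names are hypothetical.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def one_hot(value: int, n: int) -> List[float]:
    """One-hot vector of length n with a 1 at position `value`."""
    vec = [0.0] * n
    vec[value] = 1.0
    return vec


def matvec(W: Matrix, x: Sequence[float]) -> List[float]:
    """Matrix-vector product W @ x for W of shape (f, n)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def embed_frame(codewords: Sequence[int], tables: Sequence[Matrix]) -> List[List[float]]:
    """Embed codeword c_i with its own table W_i of shape (f, n_i).

    W_i @ one_hot(c_i) just selects column c_i of W_i, so the lookup below
    gives the same result without building one-hot vectors. Returns k x f.
    """
    return [[row[c] for row in W] for c, W in zip(codewords, tables)]


# Hypothetical tables for a frame with k = 2 codewords and f = 2.
W_1 = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]  # codeword 1 takes values 0..2
W_2 = [[1.0, 2.0], [3.0, 4.0]]            # codeword 2 takes values 0..1
E = embed_frame([2, 0], [W_1, W_2])
v_emb = [x for e in E for x in e]  # flatten (k, f) -> k*f for mask gating
print(E, v_emb)
```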

3.3. Mask-Gating Attention Layer

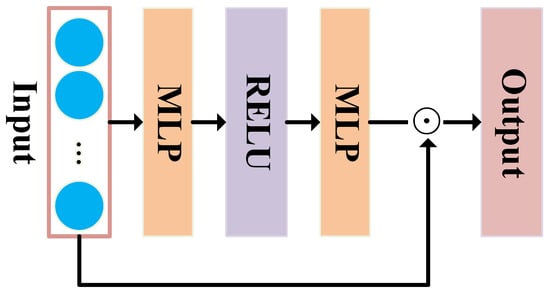

The mask-gating attention [36] mechanism is employed to extract global information from the transformed embedding vectors and dynamically emphasize informative elements. This mechanism provides two key advantages for the model. First, the mask-gating attention mechanism introduces element-wise product operations between the mask and the hidden layer or feature embedding layer. This multiplicative operation efficiently captures complex feature interactions in a unified fashion. By applying the element-wise product, the model can amplify or suppress specific elements based on their importance, allowing for a better representation of intricate feature interactions. This enhances the model’s ability to capture and leverage complex relationships between different elements, ultimately improving its overall performance. Second, the mask-gating attention mechanism enables fine-grained bit-wise attention driven by the input codewords. By utilizing this attention mechanism, the model can reduce the impact of noise present in the embedding and feedforward layers. The attention mechanism allows the model to selectively focus on relevant bits or elements in the input codewords, effectively filtering out noisy or irrelevant information. This fine-grained attention helps enhance the model’s discriminative power, as it can prioritize and attend to the most informative elements while disregarding noise or irrelevant details. The structure of the mask-gating attention mechanism is shown in Figure 2.

Figure 2.

Structure of the mask-gating attention mechanism.

The mask-gating attention mechanism consists of two steps. The first step obtains the codeword-element-guided mask from two fully connected (FC) layers: an aggregation layer with a ReLU activation, followed by a projection layer with an identity activation. This mask is generated from the global contextual information of the input instance and modulates parameter optimization during training in a data-dependent manner. The first FC layer, known as the "aggregation layer," is wider than the second FC layer so that it can better capture global contextual knowledge in the transformed codeword vector. Its trainable weight parameters are $\mathbf{W}_1$, where the subscript g of $\mathbf{W}_g$ indexes the g-th FC layer.

The second FC layer, known as the "projection layer," reduces the dimensionality to the size of the transformed embedding vector $\mathbf{V}_{\mathrm{emb}}$. With parameters $\mathbf{W}_2$, the mask is computed as follows:

$$\mathbf{V}_{\mathrm{mask}} = \mathbf{W}_2\,\mathrm{ReLU}\big(\mathbf{W}_1 \mathbf{V}_{\mathrm{emb}} + \boldsymbol{\beta}_1\big) + \boldsymbol{\beta}_2,$$

where $\mathrm{ReLU}(\cdot)$ denotes the Rectified Linear Unit activation function. The dimension of $\mathbf{W}_1$ is (q, k × f), and the dimension of $\mathbf{W}_2$ is (u, q). Here, q and u denote the numbers of neurons in the aggregation layer and the projection layer, respectively, with u = k × f so that the mask has the same size as $\mathbf{V}_{\mathrm{emb}}$. $\boldsymbol{\beta}_1$ and $\boldsymbol{\beta}_2$ denote the biases of the two FC layers.

The second step computes the output of the mask-gating attention layer from the codeword-element-guided mask $\mathbf{V}_{\mathrm{mask}}$ and the transformed embedding vector $\mathbf{V}_{\mathrm{emb}}$. In this work, an element-wise product combines the global contextual information gathered by the mask with the embedding layer, as follows:

$$\mathbf{V}_{\mathrm{MG}} = \mathbf{V}_{\mathrm{mask}} \odot \mathbf{V}_{\mathrm{emb}},$$

where $\mathbf{V}_{\mathrm{MG}}$ denotes the output of the mask-gating attention layer and ⊙ denotes the element-wise product of two vectors, defined as

$$\mathbf{V}_i \odot \mathbf{V}_j = [V_{i1} V_{j1}, V_{i2} V_{j2}, \ldots, V_{ie} V_{je}],$$

where e denotes the length of the two vectors (here, e = k × f).

The codeword-element-guided mask is a form of bit-wise attention, or gating, that uses the global context information contained in the input codewords to guide parameter optimization during training. The larger an element of $\mathbf{V}_{\mathrm{mask}}$, the more likely it is that the model has dynamically identified a key component of the feature embedding. Conversely, small values in $\mathbf{V}_{\mathrm{mask}}$ suppress uninformative items, or even noise, by scaling down the corresponding elements of $\mathbf{V}_{\mathrm{emb}}$.

Overall, the mask-gating attention [36] mechanism plays a crucial role in the steganalysis model by extracting global information, highlighting informative elements, and reducing the impact of noise. These advantages contribute to the ability of the model to capture complex feature interactions more efficiently and improve its robustness against noise, ultimately enhancing its performance in steganalysis tasks.
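The following minimal pure-Python sketch shows the two steps of the mask-gating layer for a single unbatched frame. The mask comes from a ReLU aggregation layer followed by an identity projection layer, and it then rescales the flattened embedding element-wise. The weights are hypothetical toy values, and the names are illustrative rather than the authors' implementation.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def linear(W: Matrix, b: Sequence[float], x: Sequence[float]) -> List[float]:
    """Fully connected layer W @ x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def mask_gating(
    v_emb: Sequence[float], W1: Matrix, b1: Sequence[float], W2: Matrix, b2: Sequence[float]
) -> Tuple[List[float], List[float]]:
    """Mask-gating attention on one flattened frame embedding of length k*f.

    Step 1: aggregation layer (q units, ReLU), then projection layer
            (k*f units, identity activation), which produces the mask.
    Step 2: the mask rescales the embedding element-wise.
    Returns (output, mask).
    """
    hidden = [max(0.0, h) for h in linear(W1, b1, v_emb)]  # aggregation layer
    mask = linear(W2, b2, hidden)                            # projection layer
    output = [m * v for m, v in zip(mask, v_emb)]            # element-wise product
    return output, mask


# Toy example: k*f = 2 embedding elements, q = 3 aggregation units (wider than k*f).
v_emb = [1.0, 2.0]
W1, b1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, -5.0]
W2, b2 = [[1.0, 0.0, 0.0], [0.0, 0.1, 0.0]], [0.0, 0.0]
output, mask = mask_gating(v_emb, W1, b1, W2, b2)
print(mask, output)
```

In this example, the second element receives a small mask value (0.2), so its contribution is damped. This is the suppression effect described above.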

The bilinear interaction [37] layer computes second-order feature interactions between pairs of vectors. Therefore, the output $\mathbf{V}_{\mathrm{MG}}$ of the mask-gating attention layer is reshaped as $\mathbf{M} = [\mathbf{m}_1, \mathbf{m}_2, \ldots, \mathbf{m}_k]$, with a dimension of (B, k, f).

3.4. Bilinear Interaction Layer

The bilinear interaction [37] layer computes second-order feature interactions, which benefits overall model performance in two ways. First, the bilinear function lets the model learn feature interactions in a fine-grained manner. By computing the vector-wise product of two feature vectors, the model captures specific pairwise interactions between features. Accounting for these subtle relationships between pairs of features strengthens the network's ability to extract relevant steganalysis features. Second, the bilinear interaction layer extracts more complex and informative interaction features. Because the codeword features are crossed in pairs, the layer replaces the original codeword features with interaction features derived from multiplicative combinations of the original features, which capture pairwise relationships and patterns in the data. These interaction features allow the model to represent more sophisticated feature dependencies and correlations, which ultimately improves its discrimination ability.

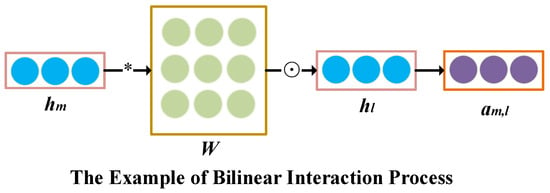

According to [39], the inner product and the Hadamard product are the traditional techniques for modeling feature interactions in the interaction layer. However, they are too simple to represent feature interactions adequately. Therefore, we adopt a finer-grained strategy that learns feature interactions with additional parameters by combining the inner product and the Hadamard product. The details are shown in Figure 3. In this paper, we propose three types of bilinear functions.

Figure 3.

Example of bilinear interaction.

The first type is the codeword-all type. Taking $\mathbf{m}_i$ and $\mathbf{m}_j$ in $\mathbf{M}$ as an example, the second-order interaction feature $\mathbf{p}_{ij}$ is computed as follows:

$$\begin{aligned} \mathbf{v}_{ij} &= \mathbf{m}_i \cdot \mathbf{W}, \\ \mathbf{p}_{ij} &= \mathbf{v}_{ij} \odot \mathbf{m}_j, \end{aligned}$$

where the first line defines the inner-product operation as the multiplication of $\mathbf{m}_i$ and the weight matrix $\mathbf{W}$, and $\mathbf{v}_{ij}$ is the output of the inner-product operation. The second line is the Hadamard (element-wise) product of $\mathbf{v}_{ij}$ and $\mathbf{m}_j$. The dimension of $\mathbf{W}$ is (f, f). All second-order codeword interaction pairs share the same parameter matrix, so the bilinear interaction layer has f × f parameters. This type is denoted as 'codeword-all'.

The second type is the codeword-each type, defined as follows:

$$\begin{aligned} \mathbf{v}_{ij} &= \mathbf{m}_i \cdot \mathbf{W}_i, \\ \mathbf{p}_{ij} &= \mathbf{v}_{ij} \odot \mathbf{m}_j, \end{aligned}$$

where the first line defines the inner-product operation as the multiplication of $\mathbf{m}_i$ and the weight matrix $\mathbf{W}_i$, and $\mathbf{v}_{ij}$ is the output of the inner-product operation. The second line is the Hadamard (element-wise) product of $\mathbf{v}_{ij}$ and $\mathbf{m}_j$. $\mathbf{W}_i$, with a dimension of (f, f), denotes the parameter matrix of the i-th codeword $c_i$. Because each codeword has its own parameter matrix, the bilinear interaction layer has k × f × f learnable parameters. This type is denoted as 'codeword-each'.

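The third type is the codeword-interaction type, defined as follows:

$$\begin{aligned} \mathbf{v}_{ij} &= \mathbf{m}_i \cdot \mathbf{W}_{ij}, \\ \mathbf{p}_{ij} &= \mathbf{v}_{ij} \odot \mathbf{m}_j, \end{aligned}$$

where the first line defines the inner-product operation as the multiplication of $\mathbf{m}_i$ and the weight matrix $\mathbf{W}_{ij}$, and $\mathbf{v}_{ij}$ is the output of the inner-product operation. The second line is the Hadamard (element-wise) product of $\mathbf{v}_{ij}$ and $\mathbf{m}_j$. $\mathbf{W}_{ij}$, with a dimension of (f, f), denotes the parameter matrix of the interaction between $\mathbf{m}_i$ and $\mathbf{m}_j$. The layer therefore has k(k-1)/2 × f × f learnable parameters. This type is referred to as 'codeword-interaction'.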

By combining all second-order codeword interaction features, we obtain the output:

$$\mathbf{P} = [\mathbf{p}_{12}, \mathbf{p}_{13}, \ldots, \mathbf{p}_{(k-1)k}].$$

The deep network layers require a two-dimensional input. Therefore, $\mathbf{P}$ is reshaped as $\mathbf{a}^{(0)}$, whose dimension is (B, k(k-1)/2 × f).
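The three bilinear types trade parameter count for granularity. The following Python sketch counts the weights for each type and flattens the pair vectors into the input of the deep layers. It assumes one shared f × f matrix (codeword-all), one matrix per codeword (codeword-each), and one matrix per unordered codeword pair (codeword-interaction). The values k = 4 and f = 8 are hypothetical.

```python
from typing import List, Sequence


def bilinear_param_count(kind: str, k: int, f: int) -> int:
    """Weights in the bilinear interaction layer for k codewords of dimension f."""
    per_matrix = f * f
    if kind == "codeword-all":
        return per_matrix  # one matrix shared by all pairs
    if kind == "codeword-each":
        return k * per_matrix  # one matrix per codeword
    if kind == "codeword-interaction":
        return (k * (k - 1) // 2) * per_matrix  # one matrix per codeword pair
    raise ValueError(f"unknown bilinear type: {kind}")


def flatten_pairs(P: Sequence[Sequence[float]]) -> List[float]:
    """Concatenate the k(k-1)/2 pair vectors into one input vector for the deep layers."""
    return [x for p in P for x in p]


for kind in ("codeword-all", "codeword-each", "codeword-interaction"):
    print(kind, bilinear_param_count(kind, k=4, f=8))
```

The codeword-interaction type grows quadratically with k, so it is the most expressive but also the largest of the three.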

Overall, the bilinear interaction [37] layer plays a crucial role in the steganalysis model by allowing fine-grained learning of feature interactions and extracting complex and informative interaction features. These advantages contribute to enhancing the model’s ability to extract relevant steganalysis features and improve its performance in detecting and analyzing steganographic content.

3.5. Deep Residual Network Layers

In our steganalysis model, the deep network layer consists of multiple fully connected layers, whose purpose is to capture higher-order feature interactions between the codewords implicitly. In addition, we adopt a residual structure [40] within the deep network layer, which offers three advantages. First, the residual structure preserves lower-order codeword interaction information. Through identity mappings, each layer learns a residual that adds information on top of the lower-order interactions it receives, so the model benefits from both lower-order and higher-order interactions. Second, the residual structure enhances the correlation between the gradients and the loss function, which is vital for effective learning. With residual connections, gradients flow directly through the network without significant impediments, which improves the model's ability to capture complex patterns and relationships in the data. Third, the residual structure mitigates the vanishing gradient problem. In deep networks, gradients propagated through many layers can become exponentially small, which makes deeper models difficult to train. With residual connections, gradients have multiple paths and can bypass layers when needed, which enables more stable and effective training of deep steganalysis models.

As shown in Figure 1, the input of the deep residual network layer is the output of the bilinear interaction layer, denoted as $a^{(0)}$, which is passed to the deep neural network. The feedforward process is as follows:

$$a^{(g)} = \sigma\left(W^{(g)} a^{(g-1)} + b^{(g)}\right) + a^{(g-1)}, \quad g = 1, 2, \ldots, G,$$

where $g$ indexes the $g$-th fully connected layer, $\sigma(\cdot)$ is the activation function, $a^{(g)}$ denotes the output of the $g$-th fully connected layer, and $W^{(g)}$ and $b^{(g)}$ are the weight matrix and bias of the $g$-th layer. The output of the deep network is denoted as $a^{(G)}$.
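The feedforward process can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: it assumes ReLU as the activation function, a shortcut added after the activation, i.e. $a^{(g)} = \sigma(W^{(g)} a^{(g-1)} + b^{(g)}) + a^{(g-1)}$, and square weight matrices so that every layer keeps the input dimension and the identity shortcut needs no projection. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu(x: Sequence[float]) -> Vector:
    """Element-wise ReLU (assumed activation; the text only says 'activation function')."""
    return [max(0.0, v) for v in x]


def dense(w: Matrix, b: Sequence[float], x: Sequence[float]) -> Vector:
    """Fully connected layer: W x + b."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i for row, b_i in zip(w, b)]


def residual_mlp(x: Sequence[float], layers: Sequence[Tuple[Matrix, Vector]]) -> Vector:
    """Apply a^(g) = relu(W^(g) a^(g-1) + b^(g)) + a^(g-1) for g = 1..G.

    Each W^(g) is square, so every layer keeps the input dimension and the
    identity shortcut can be added element-wise.
    """
    a = list(x)
    for w, b in layers:
        h = relu(dense(w, b, a))
        a = [h_i + a_i for h_i, a_i in zip(h, a)]  # identity shortcut
    return a


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    zeros = [0.0, 0.0]
    # Two identity layers: [-1, 2] -> [-1, 4] -> [-1, 8]
    print(residual_mlp([-1.0, 2.0], [(identity, zeros), (identity, zeros)]))
```

In the example, the first component is zeroed by ReLU in both layers yet still reaches the output unchanged through the shortcuts, which is the "preserve lower-order features" behaviour described above.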

In summary, adopting a residual structure [40] within the deep network layer preserves lower-order interaction features, gives the gradients a more direct path from the loss to earlier layers, and alleviates the vanishing gradient problem. These advantages contribute to the overall performance and effectiveness of the steganalysis model.

4. Experimental Results and Analysis

4.1. Experimental Setup

Datasets: Lin et al. [27] created a publicly available dataset of Chinese and English speech. From these speech samples, 3000K speech frames were randomly selected and encoded with the AMR-NB (Adaptive Multi-Rate Narrowband) codec at 12.2 kbit/s, a low-bit-rate speech codec. The resulting encoded frames constitute the cover speech dataset.

Steganography methods: The speech frames in the cover dataset were further processed with three steganography algorithms, namely CNV [19], ACL [17], and HPS [21], to generate steganographic speech frames. Each algorithm produced a separate steganographic dataset, giving three steganographic datasets in total. Each steganographic dataset contains frames at multiple embedding rates, where the embedding rate is the ratio of embedded bits to the total embedding capacity. We used five embedding rates: 20%, 40%, 60%, 80%, and 100%.

Baselines: To evaluate the proposed method, we compared it with three steganalysis methods. The first, DMSM, proposed by Li et al. [31], uses codeword-element embedding and the multi-head attention mechanism to detect multiple steganography methods. The second, FSMDP, proposed by Wei et al. [33], exploits codeword correlations from multiple dimensional perspectives to detect whether a frame contains secret information. The third, Stegformer, proposed by Li et al. [34], uses dual-domain representation and intra-frame correlation to extract steganalysis features. Our proposed frame-wise steganalysis method, based on mask-gating attention and bilinear interaction mechanisms, is referred to as MGA-BI.

Implementation details: Our model was implemented in PyTorch v2.2.2. Training and testing were carried out on a GeForce RTX 3080 GPU with 10.5 GB of graphics memory. The hyperparameters were as follows: the batch size was 4096 in both the training and testing stages, the maximum number of training epochs was 100, the loss function was binary cross-entropy, the optimizer was Adam, and the learning rate was 0.0045. The other parameters of our model are listed in Table 1.
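For reference, the binary cross-entropy loss named above can be written out directly. The sketch below is a plain-Python illustration that assumes the model outputs the probability that a frame is steganographic (label 1) rather than cover (label 0); the paper does not state whether logits or probabilities are used, and the function name is hypothetical. In PyTorch, `torch.nn.BCELoss` with its default mean reduction computes the same quantity on probabilities, apart from how extreme probabilities are clamped.

```python
import math
from typing import Sequence


def binary_cross_entropy(probs: Sequence[float], labels: Sequence[int], eps: float = 1e-12) -> float:
    """Mean binary cross-entropy over a batch of frames.

    probs:  predicted probability that each frame is steganographic.
    labels: 1 for a steganographic frame, 0 for a cover frame (assumed convention).
    """
    if not probs or len(probs) != len(labels):
        raise ValueError("probs and labels must be non-empty and of equal length")
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(probs)


if __name__ == "__main__":
    # An uninformative model (p = 0.5 everywhere) gives ln 2, about 0.6931
    print(binary_cross_entropy([0.5, 0.5, 0.5, 0.5], [0, 1, 1, 0]))
```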

Table 1.

Parameter settings.

Evaluation metric: We use classification accuracy, a common metric for classification tasks, to evaluate the proposed method. Accuracy is defined as the ratio of the number of correctly classified samples to the total number of samples.
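Accuracy as defined here can be computed from per-frame scores as follows. The 0.5 decision threshold and the 1 = steganographic / 0 = cover label convention are assumptions for illustration, and the function name is hypothetical.

```python
from typing import Sequence


def frame_accuracy(probs: Sequence[float], labels: Sequence[int], threshold: float = 0.5) -> float:
    """Ratio of correctly classified frames to the total number of frames."""
    if not probs or len(probs) != len(labels):
        raise ValueError("probs and labels must be non-empty and of equal length")
    # Threshold each score into a hard 0/1 decision and count matches
    correct = sum(int(p >= threshold) == y for p, y in zip(probs, labels))
    return correct / len(labels)


if __name__ == "__main__":
    # Predictions [1, 0, 1, 0] against labels [1, 0, 0, 0]: 3 of 4 correct
    print(frame_accuracy([0.9, 0.2, 0.6, 0.4], [1, 0, 0, 0]))  # 0.75
```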

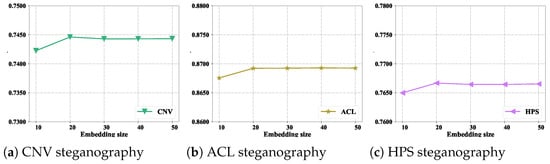

4.2. Discussion of the Dimension of the Embedding Vector

The dimension of the embedding vector (the embedding size) is an important hyperparameter of the embedding layer because it affects the detection performance of the whole steganalysis model. To determine a suitable embedding size, we conducted a series of experiments with the embedding rate set to 100%, varying the embedding size from 10 to 50. The results are summarized in Figure 4.

Figure 4.

Detection accuracies for different embedding sizes across the three steganography datasets.

As Figure 4 shows, when the embedding size increased from 10 to 50, the detection accuracy of our method first increased and then decreased on all three steganography datasets, suggesting that an intermediate embedding size yields the highest accuracy. A larger embedding size allows the model to capture more fine-grained features of the codewords, which can improve detection performance. Beyond a certain point, however, the additional parameters increase model complexity and computational cost, and the larger model may overfit, which could explain the decline in accuracy. There is therefore a trade-off between detection accuracy and computational efficiency when selecting the embedding size. Based on these results, we set the embedding size to 20, which appears to balance detection accuracy and computational demands.
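To show where the embedding size enters the model and why its cost grows with it, the sketch below looks up one $k$-dimensional vector per codeword field and counts the resulting sizes. It assumes a separate embedding table for each codeword field, as in field-based models such as FiBiNET [37]; the field count and vocabulary sizes in the example are hypothetical, not the actual AMR-NB codeword layout, and all function names are illustrative.

```python
import random
from typing import List, Sequence

Table = List[List[float]]


def make_embedding_tables(vocab_sizes: Sequence[int], k: int, seed: int = 0) -> List[Table]:
    """One table per codeword field; row c is the k-dim vector for codeword value c."""
    rng = random.Random(seed)
    return [[[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(v)] for v in vocab_sizes]


def embed_frame(codewords: Sequence[int], tables: Sequence[Table]) -> List[List[float]]:
    """Map a frame's codeword indices to a list of k-dim field embeddings."""
    return [tables[i][c] for i, c in enumerate(codewords)]


def embedding_params(vocab_sizes: Sequence[int], k: int) -> int:
    """Embedding parameters grow linearly with the embedding size k."""
    return sum(vocab_sizes) * k


def bilinear_output_size(num_fields: int, k: int) -> int:
    """Length of the concatenated pairwise interaction vectors: f(f-1)/2 pairs of size k."""
    return num_fields * (num_fields - 1) // 2 * k


if __name__ == "__main__":
    vocab = [8] * 40  # hypothetical: 40 codeword fields with 8 values each
    for k in (10, 20, 50):
        print(k, embedding_params(vocab, k), bilinear_output_size(len(vocab), k))
```

Doubling $k$ doubles both counts, which is the computational side of the trade-off discussed above.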

4.3. Discussion of Mask-Gating Attention Mechanism

The mask-gating attention (MGA) mechanism in our model aims to dynamically emphasize informative elements. To evaluate its contribution, we conducted a series of experiments with the embedding rate set to 100%. Table 2 reports the performance of our model with and without the MGA mechanism; comparing the two settings shows the impact of MGA on the overall performance of our model. We also applied the MGA mechanism to existing frame-wise steganalysis methods to assess its impact on their performance.

Table 2.

Performance Comparison of Methods with and without MGA.

According to Table 2, performance decreased significantly on all three steganography datasets when the mask-gating attention mechanism was removed from our model, indicating that it plays an important role in our method. The mechanism also benefits other models, such as DMSM [31], FSMDP [33], and Stegformer [34]: adding it to these models improved their performance, suggesting that it is broadly applicable across different steganography methods and steganalysis models. Overall, these findings highlight the value of the mask-gating attention mechanism in capturing global information and emphasizing informative elements, which enhances the performance of both our proposed method and existing steganalysis models.

4.4. Discussion of Codeword Interaction Types in Bilinear Interaction

The bilinear interaction layer aims to capture interactions between different input fields (here, codeword features). In this section, we examine how the three field types of the bilinear interaction layer (field-all, field-each, and field-interaction) affect performance, with the embedding rate set to 100%. Following FiBiNET [37], field-all shares a single weight matrix across all field pairs, field-each uses one weight matrix per field, and field-interaction uses one weight matrix per field pair. Table 3 summarizes the detection accuracy of the steganalysis models with each field type.
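The three field types differ only in which weight matrix is used for each field pair. The sketch below follows the FiBiNET-style bilinear function $p_{ij} = (v_i \cdot W) \odot v_j$ for every pair $i < j$ [37]; the function names, the string mode labels, and the plain-list representation are assumptions for illustration, not the authors' code.

```python
from itertools import combinations
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def vec_mat(v: Sequence[float], w: Matrix) -> Vector:
    """Row vector times k x k matrix: (v W)_j = sum_i v_i * W[i][j]."""
    return [sum(v[i] * w[i][j] for i in range(len(v))) for j in range(len(w[0]))]


def bilinear_interaction(fields: Sequence[Vector], mode: str, weights) -> List[Vector]:
    """Return p_ij = (v_i W) * v_j (element-wise) for every field pair i < j.

    mode "field_all":         weights is a single k x k matrix shared by all pairs.
    mode "field_each":        weights[i] is the k x k matrix of field i.
    mode "field_interaction": weights[(i, j)] is the k x k matrix of pair (i, j).
    """
    outputs = []
    for i, j in combinations(range(len(fields)), 2):
        if mode == "field_all":
            w = weights
        elif mode == "field_each":
            w = weights[i]
        elif mode == "field_interaction":
            w = weights[(i, j)]
        else:
            raise ValueError(f"unknown mode: {mode}")
        vw = vec_mat(fields[i], w)
        outputs.append([a * b for a, b in zip(vw, fields[j])])  # Hadamard product
    return outputs


if __name__ == "__main__":
    eye = [[1.0, 0.0], [0.0, 1.0]]
    fields = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    # With W = I the interaction reduces to a Hadamard product of the two fields
    print(bilinear_interaction(fields, "field_all", eye))  # [[3.0, 8.0], [5.0, 12.0], [15.0, 24.0]]
```

For $f$ fields of size $k$, field-all needs $k^2$ weights, field-each $f k^2$, and field-interaction $\frac{f(f-1)}{2} k^2$, so the types trade parameter count against how specifically each pair is modeled.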

Table 3.

Detection Accuracy of the Methods Using Three Types of Bilinear Interaction.

Two conclusions can be drawn from Table 3. First, the field-interaction type performed best among the three types. For the CNV [19] steganography method, for example, field-interaction achieved a 0.23% improvement over field-each, indicating that it is somewhat more effective at detecting hidden information in speech frames embedded with CNV [19]. Second, the field-each type outperformed the field-all type on all three steganography methods, suggesting that assigning a separate weight matrix to each field yields higher detection accuracy than sharing one matrix across all fields.

4.5. Discussion of the Depth of Deep Residual Network Layers

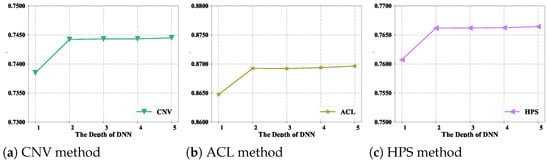

The depth of the deep residual network refers to the number of layers it contains. Increasing the number of layers can potentially increase the model’s capacity to learn complex patterns and representations from the data, which may lead to improved performance. To determine the relatively optimal depth of the deep residual network, we conducted experiments with different depths and assessed the model’s performance, with the embedding rate set to 100%. The experimental results in Figure 5 illustrate the impact of varying the depth on the performance of the steganalysis models.

Figure 5.

Detection accuracies for different depths of the deep residual network across the three steganography datasets.

Increasing the number of layers also increases model complexity. Figure 5 shows that adding layers initially improved model performance, but performance plateaued when the number of layers increased from 2 to 3. A likely reason is that the additional layer adds complexity without providing useful capacity, making the model more prone to overfitting. Balancing accuracy against complexity, we set the number of hidden layers to two for all three steganography datasets.

4.6. Performance Comparison

The goal of frame-wise steganalysis is to detect whether individual speech frames contain secret information: rather than classifying an entire speech segment of a given length, each frame is analyzed independently. The embedding rate is the proportion of secret information embedded in the carrier (here, speech frames). By evaluating detection performance at embedding rates from 20% to 100% in steps of 20%, we assessed how well the steganalysis methods detect hidden information across different embedding levels. The experimental results are shown in Table 4, Table 5 and Table 6.

Table 4.

Detection accuracy for the CNV steganography method.

Table 5.

Detection accuracy for the ACL steganography method.

Table 6.

Detection accuracy for the HPS steganography method.

As Table 4, Table 5 and Table 6 show, our method achieved the highest detection accuracy among the four steganalysis methods. For example, for the ACL steganography method [17] at a 100% embedding rate, our method achieved an accuracy of 86.92%, whereas DMSM [31], FSMDP [33], and Stegformer [34] achieved 85.48%, 85.90%, and 86.04%, respectively, a gain of 0.88 percentage points over the strongest baseline. For the HPS steganography method [21] at a 100% embedding rate, our method achieved 76.64%, compared with 74.04%, 74.21%, and 74.54% for DMSM [31], FSMDP [33], and Stegformer [34], respectively, a gain of 2.10 percentage points.

In addition, detection accuracy increased with the embedding rate. A higher embedding rate means that more secret bits are hidden in the speech frames, so more codewords are likely to be altered and more embedding traces are left. The perceptual quality of steganographic speech is also closely linked to its detectability: embedding secret data inevitably introduces subtle distortions, which degrade the perceptual quality of steganographic speech and make it more vulnerable to steganalysis. As analyzed in a previous study [17], both the embedding rate and the bitrate significantly influence the perceptual quality of steganographic speech, and lower perceptual quality generally leads to higher detection accuracy.

4.7. Comparison of Inference Times Across Methods

Time efficiency is critical when evaluating steganalysis algorithms, particularly in scenarios that require real-time detection, where each input sample must be processed quickly. All experiments were conducted on a system with an Intel(R) Xeon(R) E5-2678 v3 @ 2.50 GHz CPU and an NVIDIA GeForce RTX 3080 Ti GPU (NVIDIA Corporation, Santa Clara, CA, USA). The results are presented in Table 7.

Table 7.

Average inference time for each frame.

As shown in Table 7, DMSM [31] achieved the highest time efficiency among all the methods. Although our method was not the fastest, it meets the real-time processing requirement for AMR-NB steganalysis: each AMR-NB speech frame corresponds to 20 ms of speech, so real-time operation requires the average inference time per frame to stay below 20 ms, and the inference time of our method falls within this budget, supporting real-time deployment in practical scenarios.
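The real-time argument reduces to simple arithmetic: a detector keeps up with one stream if its average per-frame inference time is below the 20 ms frame duration. The sketch below computes the real-time factor and a rough estimate of how many streams one device could serve if frames were processed one at a time. The 0.5 ms figure in the example is hypothetical and not taken from Table 7, batching would change the stream estimate, and the function names are illustrative.

```python
AMR_NB_FRAME_MS = 20.0  # duration of one AMR-NB speech frame


def real_time_factor(avg_ms_per_frame: float, frame_ms: float = AMR_NB_FRAME_MS) -> float:
    """Processing time divided by speech time; values below 1.0 are faster than real time."""
    if avg_ms_per_frame <= 0:
        raise ValueError("inference time must be positive")
    return avg_ms_per_frame / frame_ms


def streams_per_device(avg_ms_per_frame: float, frame_ms: float = AMR_NB_FRAME_MS) -> int:
    """Streams that sequential per-frame processing could keep up with (rough estimate)."""
    if avg_ms_per_frame <= 0:
        raise ValueError("inference time must be positive")
    return int(frame_ms // avg_ms_per_frame)


if __name__ == "__main__":
    t = 0.5  # hypothetical average inference time per frame, in ms
    print(real_time_factor(t), streams_per_device(t))  # 0.025 40
```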

4.8. Ablation Study

In this section, we conduct ablation experiments to isolate the contribution of each component of the MGA-BI model. With the deep residual network as the base model, we evaluated the following variants: (1) No BI, in which the bilinear interaction (BI) [37] layer is removed; (2) No MGA, in which the mask-gating attention (MGA) [36] layer is removed; and (3) No residual, in which the residual mechanism [40] is removed. These ablations show the relative importance of each component to the overall performance of MGA-BI and clarify the role each plays in the reported results. The experimental results are shown in Table 8, Table 9 and Table 10.

Table 8.

Ablation study comparing the performance of different components in the CNV method.

Table 9.

Ablation study comparing the performance of different components in the ACL method.

Table 10.

Ablation study comparing the performance of different components in the HPS method.

The results in Table 8, Table 9 and Table 10 support the following observations. First, removing either the mask-gating attention [36] layer or the bilinear interaction [37] layer degraded performance, indicating that both components are effective and contribute significantly to our method. Second, the bilinear interaction layer is comparably important to the MGA layer, which highlights the value of capturing second-order feature interactions. Third, removing the residual mechanism also reduced performance. By preserving the input information, the residual mechanism allows the model to learn from both the raw features and the features transformed by the mask-gating attention and bilinear interaction layers. In summary, the ablation experiments clarify the individual contribution of each component of MGA-BI and show that each is important for achieving high frame-wise steganalysis performance.

5. Conclusions

To determine whether a tested frame in a low-bit-rate speech stream contains secret information, this paper proposes a frame-wise steganalysis method that integrates a mask-gating attention mechanism with a bilinear interaction codeword feature extraction strategy. The framework consists of an embedding layer, a mask-gating attention layer, a bilinear interaction layer, and deep residual network layers. Experimental results show that the proposed method outperforms the compared steganalysis methods in frame-wise detection for low-bit-rate speech streams. Nevertheless, the proposed framework has some limitations. First, it analyzes frames independently and does not model temporal dependencies across speech frames, which may reduce its effectiveness against steganographic schemes that distribute hidden information over multiple frames. Second, the method is designed with specific codec structures in mind and may require modifications to adapt to other speech coding standards or variable bit rates. In future work, we aim to address these limitations in two ways: first, by developing a more universal frame-wise detection framework that generalizes to diverse codecs and conditions through changes to the architecture or feature extraction techniques; and second, by designing an explainable frame-wise detection method that makes the detection process more transparent and increases trust in real-world applications.

Author Contributions

All authors contributed to the preparation of this paper. C.S. proposed the method, conducted the literature review, designed and performed the experiments, analyzed the results, and wrote the manuscript. A.A. and N.S. supervised the research, offering guidance and suggestions for improvement. N.A.R. assisted in analyzing the experimental results and provided recommendations for revisions. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are publicly available upon request from the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zou, J.Z.; Chen, M.X.; Gong, L.H. Invisible and robust watermarking model based on hierarchical residual fusion multi-scale convolution. Neurocomputing 2025, 614, 128834.

- Zhang, H.; Kone, M.M.K.; Ma, X.Q.; Zhou, N.R. Frequency-domain attention-guided adaptive robust watermarking model. J. Frankl. Inst. 2025, 362, 107511.

- Roslan, N.A.; Udzir, N.I.; Mahmod, R.; Gutub, A. Systematic literature review and analysis for Arabic text steganography method practically. Egypt. Inform. J. 2022, 23, 177–191.

- Alanazi, N.; Khan, E.; Gutub, A. Efficient security and capacity techniques for Arabic text steganography via engaging Unicode standard encoding. Multimed. Tools Appl. 2021, 80, 1403–1431.

- Kunhoth, J.; Subramanian, N.; Al-Maadeed, S.; Bouridane, A. Video steganography: Recent advances and challenges. Multimed. Tools Appl. 2023, 82, 41943–41985.

- Valandar, M.Y.; Ayubi, P.; Barani, M.J.; Irani, B.Y. A chaotic video steganography technique for carrying different types of secret messages. J. Inf. Secur. Appl. 2022, 66, 103160.

- AlSabhany, A.A.; Ali, A.H.; Ridzuan, F.; Azni, A.; Mokhtar, M.R. Digital audio steganography: Systematic review, classification, and analysis of the current state of the art. Comput. Sci. Rev. 2020, 38, 100316.

- Wu, J.; Chen, B.; Luo, W.; Fang, Y. Audio steganography based on iterative adversarial attacks against convolutional neural networks. IEEE Trans. Inf. Forensics Secur. 2020, 15, 2282–2294.

- Kheddar, H.; Hemis, M.; Himeur, Y.; Megias, D.; Amira, A. Deep learning for steganalysis of diverse data types: A review of methods, taxonomy, challenges and future directions. Neurocomputing 2024, 581, 127528.

- Sun, C.; Tian, H.; Tian, P.; Li, H.; Qian, Z. Multi-agent deep learning for the detection of multiple speech steganography methods. IEEE/ACM Trans. Audio Speech Lang. Process. 2024, 32, 2957–2972.

- Sun, C.; Tian, H.; Mazurczyk, W.; Chang, C.C.; Quan, H.; Chen, Y. Steganalysis of adaptive multi-rate speech with unknown embedding rates using clustering and ensemble learning. Comput. Electr. Eng. 2023, 111, 108909.

- Sun, C.; Tian, H.; Mazurczyk, W.; Chang, C.C.; Cai, Y.; Chen, Y. Towards blind detection of steganography in low-bit-rate speech streams. Int. J. Intell. Syst. 2022, 37, 12085–12112.

- Unoki, M.; Miyauchi, R. Method of digital-audio watermarking based on cochlear delay characteristics. In Multimedia Information Hiding Technologies and Methodologies for Controlling Data; IGI Global Scientific Publishing: Hershey, PA, USA, 2013; pp. 42–70.

- Cox, I.J.; Kilian, J.; Leighton, F.T.; Shamoon, T. Secure spread spectrum watermarking for multimedia. IEEE Trans. Image Process. 1997, 6, 1673–1687.

- Tian, H.; Zhou, K.; Huang, Y.; Feng, D.; Liu, J. A covert communication model based on least significant bits steganography in voice over IP. In Proceedings of the 2008 the 9th International Conference for Young Computer Scientists, Zhangjiajie, China, 18–21 November 2008; pp. 647–652.

- Tian, H.; Jiang, H.; Zhou, K.; Feng, D. Adaptive partial-matching steganography for voice over IP using triple M sequences. Comput. Commun. 2011, 34, 2236–2247.

- Huang, Y.; Liu, C.; Tang, S.; Bai, S. Steganography integration into a low-bit rate speech codec. IEEE Trans. Inf. Forensics Secur. 2012, 7, 1865–1875.

- Huang, Y.; Tao, H.; Xiao, B.; Chang, C. Steganography in low bit-rate speech streams based on quantization index modulation controlled by keys. Sci. China Technol. Sci. 2017, 60, 1585–1596.

- Xiao, B.; Huang, Y.; Tang, S. An approach to information hiding in low bit-rate speech stream. In Proceedings of the IEEE GLOBECOM 2008–2008 IEEE Global Telecommunications Conference, New Orleans, LA, USA, 30 November–4 December 2008; pp. 1–5.

- Yan, S.; Tang, G.; Sun, Y.; Gao, Z.; Shen, L. A triple-layer steganography scheme for low bit-rate speech streams. Multimed. Tools Appl. 2015, 74, 11763–11782.

- Hu, Y.; Huang, Y.; Yang, Z.; Huang, Y. Detection of heterogeneous parallel steganography for low bit-rate VoIP speech streams. Neurocomputing 2021, 419, 70–79.

- Chen, B.; Wornell, G.W. Quantization index modulation: A class of provably good methods for digital watermarking and information embedding. IEEE Trans. Inf. Theory 2001, 47, 1423–1443.

- Sun, C.; Tian, H.; Chang, C.C.; Chen, Y.; Cai, Y.; Du, Y.; Chen, Y.H.; Chen, C.C. Steganalysis of adaptive multi-rate speech based on extreme gradient boosting. Electronics 2020, 9, 522.

- Tian, H.; Wu, Y.; Chang, C.C.; Huang, Y.; Chen, Y.; Wang, T.; Cai, Y.; Liu, J. Steganalysis of adaptive multi-rate speech using statistical characteristics of pulse pairs. Signal Process. 2017, 134, 9–22.

- Yang, J.; Li, S. Steganalysis of joint codeword quantization index modulation steganography based on codeword Bayesian network. Neurocomputing 2018, 313, 316–323.

- Li, S.; Jia, Y.; Kuo, C.C.J. Steganalysis of QIM steganography in low-bit-rate speech signals. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 1011–1022.

- Lin, Z.; Huang, Y.; Wang, J. RNN-SM: Fast steganalysis of VoIP streams using recurrent neural network. IEEE Trans. Inf. Forensics Secur. 2018, 13, 1854–1868.

- Yang, H.; Yang, Z.; Bao, Y.; Liu, S.; Huang, Y. Fcem: A novel fast correlation extract model for real time steganalysis of VOIP stream via multi-head attention. In Proceedings of the ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 2822–2826.

- Yang, H.; Yang, Z.; Bao, Y.; Huang, Y. Hierarchical representation network for steganalysis of qim steganography in low-bit-rate speech signals. In Proceedings of the International Conference on Information and Communications Security, Beijing, China, 15–17 December 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 783–798.

- Wang, H.; Yang, Z.; Hu, Y.; Yang, Z.; Huang, Y. Fast detection of heterogeneous parallel steganography for streaming voice. In Proceedings of the 2021 ACM Workshop on Information Hiding and Multimedia Security, Virtual, 22–25 June 2021; pp. 137–142.

- Li, S.; Wang, J.; Liu, P.; Wei, M.; Yan, Q. Detection of multiple steganography methods in compressed speech based on code element embedding, Bi-LSTM and CNN with attention mechanisms. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 1556–1569.

- Ren, Y.; Cai, T.; Tang, M.; Wang, L. AMR steganalysis based on the probability of same pulse position. IEEE Trans. Inf. Forensics Secur. 2015, 10, 1801–1811.

- Wei, M.; Li, S.; Liu, P.; Huang, Y.; Yan, Q.; Wang, J.; Zhang, C. Frame-level steganalysis of QIM steganography in compressed speech based on multi-dimensional perspective of codeword correlations. J. Ambient. Intell. Humaniz. Comput. 2021, 14, 8421–8431.

- Li, S.; Wang, J.; Liu, P. General frame-wise steganalysis of compressed speech based on dual-domain representation and intra-frame correlation leaching. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 2025–2035.

- Lian, J.; Zhou, X.; Zhang, F.; Chen, Z.; Xie, X.; Sun, G. xdeepfm: Combining explicit and implicit feature interactions for recommender systems. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 1754–1763.

- Wang, Z.; She, Q.; Zhang, J. MaskNet: Introducing feature-wise multiplication to CTR ranking models by instance-guided mask. arXiv 2021, arXiv:2102.07619.

- Huang, T.; Zhang, Z.; Zhang, J. FiBiNET: Combining feature importance and bilinear feature interaction for click-through rate prediction. In Proceedings of the 13th ACM Conference on Recommender Systems, Copenhagen, Denmark, 16–20 September 2019; pp. 169–177.

- Lai, S.; Liu, K.; He, S.; Zhao, J. How to generate a good word embedding. IEEE Intell. Syst. 2016, 31, 5–14.

- He, X.; Chua, T.S. Neural factorization machines for sparse predictive analytics. In Proceedings of the 40th International ACM SIGIR conference on Research and Development in Information Retrieval, Tokyo, Japan, 7–11 August 2017; pp. 355–364.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).