Abstract

This review examines AI governance centered on Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 laying down harmonised rules on artificial intelligence (the EU Artificial Intelligence Act), alongside comparable instruments (ISO/IEC 42001, NIST AI RMF, OECD Principles, ALTAI). Using a hybrid systematic–scoping method, it maps obligations across actor roles and risk tiers, with particular attention to low-capacity actors, especially SMEs and public authorities. Across the surveyed literature, persistent gaps emerge in enforceability, proportionality, and auditability, compounded by frictions between the AI Act and GDPR and fragmented accountability along the value chain. Rather than introducing a formal model, this paper develops a conceptual lens—compliance asymmetry—to interrogate the structural frictions between regulatory ambition and institutional capacity. This framing enables the identification of normative and operational gaps that must be addressed in future model design.

1. Introduction

Artificial Intelligence (AI) has rapidly evolved into a foundational technology, with general-purpose models such as GPT-3.5, which powered the initial release of ChatGPT, accelerating adoption across sectors [1]. While the transformative potential of AI is widely recognized [2,3], regulatory frameworks have struggled to keep pace. Regulation (EU) 2024/1689, commonly known as the EU AI Act, represents the first attempt to impose binding, risk-tiered obligations on AI providers and deployers. Translating these obligations into scalable, auditable compliance processes remains an unresolved challenge, particularly for actors lacking institutional capacity. Existing AI governance frameworks, such as the OECD AI Principles [4], the NIST AI Risk Management Framework [5], and ISO/IEC 42001 [6], provide high-level guidance but often fall short in operational specificity, proportionality, and enforceability [7]. ALTAI (the European Commission’s Assessment List for Trustworthy AI) [8] is more accessible but has been shown to omit key technical safeguards: one analysis found that it overlooks 69% of known AI security vulnerabilities [9]. While ALTAI promotes essential ethical values such as transparency, fairness, and human oversight, it requires further development to serve as a compliance foundation under the EU AI Act. Compounding the complexity is the overlap between the EU AI Act and the General Data Protection Regulation (GDPR), which imposes its own requirements on AI systems that process personal data. Although the EU AI Act does not supersede the GDPR, the interaction between the two creates legal uncertainty, particularly for organizations lacking specialized compliance capacity.

This paper offers a systematic review of the fragmented AI compliance landscape emerging around the EU AI Act and its adjacent governance instruments. It critically examines how current regulatory and normative frameworks, though well-intentioned, fail to translate into actionable obligations for low-capacity actors such as SMEs and public sector entities. Rather than proposing a new model, the paper identifies key structural frictions and design gaps that hinder feasible compliance under real-world constraints. Its contribution lies in framing the operational needs and legal minimums required to enable legally sufficient, auditable, and proportionate compliance practices, even in settings with limited institutional or technical capacity. By clarifying these baseline requirements, the paper lays the conceptual groundwork for future frameworks that are not only normatively aligned but practically attainable.

The key objectives of the study are listed below:

- Analyze the regulatory landscape and actor-specific obligations under the EU AI Act, focusing on the distribution of legal burdens across providers, deployers, importers, and related roles.

- Assess the limitations of ALTAI as a soft-law instrument, especially in traceability and enforceability.

- Review complementary governance frameworks (e.g., ISO/IEC 42001, NIST AI RMF) and identify gaps in their suitability for low-capacity actors.

- Identify structural asymmetries in the compliance ecosystem that call for proportionate, role-sensitive approaches.

2. Methodology

This review followed a structured, hybrid systematic–scoping design. The process was inspired by the systematic review protocol PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) [10], but adapted to the interdisciplinary nature of AI governance and the inclusion of both academic and policy sources (Supplementary Materials). The systematic dimension ensured comprehensive coverage of rapidly evolving governance and regulatory frameworks. In contrast, the scoping dimension enabled the integration of earlier works on contextual actors (e.g., SMEs, public authorities), particularly those with longstanding structural capacity constraints. This dual approach provided both up-to-date insights into the EU AI Act and related frameworks, as well as a longitudinal perspective on persistent governance challenges.

2.1. Research Design

The review proceeded in four phases:

- Source identification across academic and policy databases.

- Screening against predefined legal, ethical, and governance relevance criteria.

- Temporal scoping, distinguishing between recent frameworks and longer-standing actor constraints.

- Corpus construction and thematic coding.

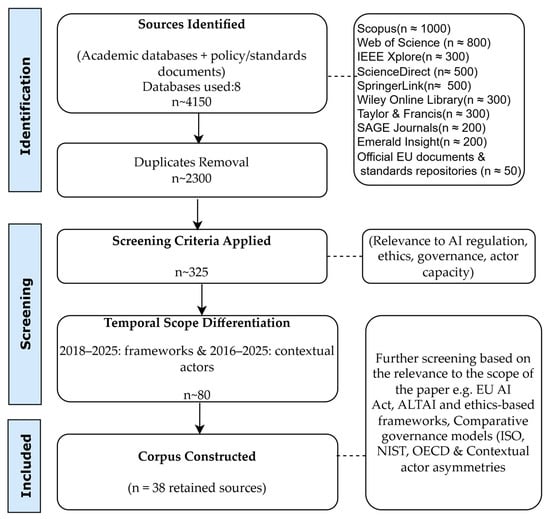

This process is illustrated in Figure 1, which summarizes the flow from initial identification to final thematic clusters.

Figure 1.

PRISMA approach for literature selection.

2.2. Search Strategy

The search drew on major academic databases (Scopus, Web of Science, IEEE Xplore, ScienceDirect, SpringerLink, Wiley Online Library, Taylor & Francis, SAGE, Emerald Insight), as well as official EU documents and standards repositories (e.g., OECD, ISO, NIST, European Commission). Search strings combined regulatory and governance terms (“AI Act,” “Artificial Intelligence Act,” “EU AI regulation,” “ISO/IEC 42001,” “NIST AI RMF,” “OECD Principles”), ethics terms (“Trustworthy AI,” “ALTAI,” “ethics checklist”), and actor-related terms (“SMEs,” “public sector,” “low-capacity actors”).

2.3. Inclusion and Exclusion Criteria

The following inclusion and exclusion criteria were used to select the relevant articles.

2.3.1. Inclusion Criteria

- For technical, legal, and governance-focused studies: be published between 2018 and 2025, reflecting the consolidation period of AI governance frameworks.

- For studies on contextual actors (SMEs, public authorities, low-capacity organizations): be published from 2016 onwards, so that earlier works providing enduring insights into structural challenges, such as compliance capacity, resource constraints, and organizational readiness, are also included.

- Be written in English.

- Present empirical, conceptual, or normative contributions to AI governance, compliance, or risk management.

- Include at least one reference to the EU AI Act, ALTAI, or comparative frameworks (OECD, NIST RMF, ISO/IEC 42001).

2.3.2. Exclusion Criteria

- Non-peer-reviewed opinion pieces, blogs, or news items without substantive analysis.

- Conference abstracts without full papers.

- Sources not addressing governance, regulation, ethics, or structural actor capacity in relation to AI.
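The screening rules above can be expressed as a single predicate over a source record. The sketch below is illustrative only: the `Source` fields and the `include` function are hypothetical names, and it assumes that every listed criterion is applied conjunctively to every source, with the 2016 start date reserved for contextual-actor studies as described in the inclusion criteria. Thematic coding in this review was manual; the sketch covers screening only.

```python
from dataclasses import dataclass

# Frameworks named in the last inclusion criterion
REQUIRED_FRAMEWORKS = {"EU AI Act", "ALTAI", "OECD", "NIST AI RMF", "ISO/IEC 42001"}

@dataclass
class Source:
    year: int
    language: str
    contextual_actor_study: bool  # SMEs, public authorities, low-capacity organizations
    full_paper: bool              # False for conference abstracts without a full paper
    substantive_analysis: bool    # False for opinion pieces, blogs, or news items
    on_topic: bool                # addresses AI governance, regulation, ethics, or actor capacity
    frameworks_cited: frozenset   # hypothetical field: frameworks the source references

def include(src: Source) -> bool:
    """Apply the inclusion and exclusion criteria conjunctively (an assumption of this sketch)."""
    # Differentiated temporal scope: 2016+ for contextual-actor studies, 2018+ otherwise
    earliest = 2016 if src.contextual_actor_study else 2018
    if not earliest <= src.year <= 2025:
        return False
    if src.language != "English":
        return False
    # Exclusion criteria: no abstracts-only, no opinion pieces, no off-topic sources
    if not (src.full_paper and src.substantive_analysis and src.on_topic):
        return False
    # At least one reference to the EU AI Act, ALTAI, or a comparative framework
    return bool(src.frameworks_cited & REQUIRED_FRAMEWORKS)
```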

2.4. Temporal Scope of Sources

The differentiated temporal scope (as shown in Table 1) reflects the uneven pace of development across domains. Governance frameworks are recent and dynamic, while structural actor issues are long-standing.

Table 1.

Temporal scope of the chosen articles.

2.5. Data Extraction

From the final corpus (n = 37), each document was coded across four thematic axes:

- The EU AI Act (regulatory scope, obligations, proportionality).

- Ethics-based frameworks (ALTAI and related governance checklists).

- Comparative governance models (ISO/IEC 42001, NIST AI RMF, OECD AI Principles).

- Contextual actor asymmetries (SMEs, public authorities, non-specialist deployers).

Coding was performed manually, focusing on both explicit references and latent themes that cut across categories. Table 2 presents the selected articles and their contributions to identifying gaps.

Table 2.

Chosen articles and takeaways.

2.6. Limitations

The hybrid design carries limitations. First, limiting technical and governance sources to the 2018–2025 window risks excluding early conceptual debates; this was mitigated by including contextual actor sources from 2016 onward. Second, reliance on English-language sources may omit perspectives from Member States where implementation debates occur in national languages. Third, although multiple databases were used, database bias cannot be entirely ruled out. These limitations were balanced by deliberately diversifying source types (academic, policy, and standards) and applying a transparent thematic coding framework.

3. Gaps and Discussion

3.1. The Regulatory Landscape: The EU AI Act

3.1.1. Objectives, Scope, and Risk-Based Classification

The European Union Artificial Intelligence Act (EU AI Act), formally Regulation (EU) 2024/1689, was adopted by the European Parliament on 13 March 2024 and by the Council on 21 May 2024. It was signed on 13 June, published in the Official Journal on 12 July, and entered into force on 1 August 2024. It constitutes the world’s first comprehensive legal framework for AI, advancing the European Commission’s digital strategy by moving from non-binding ethical principles to legally enforceable obligations. At its core lies a horizontal, risk-based governance model, introduced in Recitals 5–9, that tailors legal obligations to the level of systemic and individual risk posed by AI systems. This model distinguishes four risk categories: unacceptable risk (Article 5), high risk (Article 6), limited risk (Article 50), and minimal risk (no risk-specific obligations). Article 7 empowers the Commission to amend Annex III, which lists high-risk use cases. Each category carries distinct legal consequences, ranging from outright prohibition through mandatory requirements and transparency duties to voluntary self-regulation. The characteristics, examples, and legal obligations of each category are analyzed in Sections 3.1.2–3.1.5.
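Because Article 50 transparency duties are triggered by specific functions rather than by a risk level, the four tiers are not fully mutually exclusive: a high-risk system can also carry Article 50 obligations. The minimal sketch below assumes that the underlying legal determinations (prohibited practice, high-risk classification, transparency trigger) have already been made and are supplied as booleans; the enum and function names are hypothetical.

```python
from enum import Enum

class Regime(Enum):
    PROHIBITED = "Article 5 prohibition"
    HIGH_RISK = "Chapter III (Articles 6-49) requirements"
    TRANSPARENCY = "Article 50 transparency duties"
    MINIMAL = "no risk-specific obligations"

def applicable_regimes(prohibited: bool, high_risk: bool, transparency_trigger: bool) -> list[Regime]:
    """Map pre-made legal determinations onto the Act's regulatory regimes."""
    if prohibited:
        # A prohibited practice cannot be cured by compliance measures
        return [Regime.PROHIBITED]
    regimes = []
    if high_risk:
        regimes.append(Regime.HIGH_RISK)
    if transparency_trigger:
        # Article 50 applies in addition to, not instead of, high-risk requirements
        regimes.append(Regime.TRANSPARENCY)
    # Systems triggering neither regime fall into the minimal-risk category
    return regimes or [Regime.MINIMAL]

# Hypothetical example: an Annex III system that also interacts directly with people
print([r.value for r in applicable_regimes(False, True, True)])
```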

3.1.2. Unacceptable-Risk Systems and Implications for Compliance

Unacceptable-risk AI systems are prohibited under Article 5 of the EU AI Act. These include applications that contravene fundamental rights, such as systems that exploit the vulnerabilities of specific groups (e.g., children or persons with disabilities) and AI used for social scoring, a ban that in the final text applies to public and private actors alike. The use of real-time remote biometric identification in publicly accessible spaces for law enforcement purposes is also prohibited, except in narrowly defined circumstances, such as the targeted search for missing persons or the prevention of imminent threats to life or safety; even then, use is subject to prior authorization by a judicial or independent administrative authority, registration, and strict safeguards. These prohibitions rest on the normative stance that such applications are inherently incompatible with EU democratic values and cannot be justified on the basis of risk mitigation alone.

3.1.3. Limited-Risk Systems and Implications for Compliance

Limited-risk systems are not banned but are subject to specific transparency obligations under Article 50. These obligations require that people be informed when they interact with an AI system, such as a chatbot, when they are exposed to emotion recognition or biometric categorization, and when they encounter AI-generated or manipulated content, such as deepfakes. The aim is to foster informed human agency while avoiding deceptive or manipulative AI. The limited-risk category applies broadly to systems that could mislead people or erode trust if left undisclosed.

3.1.4. Minimal-Risk Systems

Minimal-risk AI systems represent the largest category and include tools such as spam filters, email sorting algorithms, and recommendation engines, which typically do not fall into any of the other three categories. These systems are exempt from the AI Act’s binding requirements.

3.1.5. High-Risk Systems and Implications for Compliance

High-risk applications, such as automated lie detection at EU borders (e.g., the iBorderCtrl project), illustrate the ethical and regulatory complexity of the AI Act. These systems raise concerns about biometric surveillance, bias, and structural compliance burdens, particularly when multiple actors share responsibilities for risk management and documentation [11]. The EU AI Act mandates a comprehensive suite of obligations for providers of high-risk AI systems, encompassing lifecycle risk management (Article 9), data governance (Article 10), human oversight (Article 14), and accuracy, robustness, and cybersecurity (Article 15). These requirements are formalized through technical documentation (Article 11), record-keeping through automatic logging (Article 12), transparency and instructions for deployers (Article 13), and provider identification obligations (Article 16(b)). Additionally, providers must implement a quality management system (Article 17), keep documentation at the disposal of competent authorities (Article 18), retain automatically generated logs (Article 19), and take corrective actions when risks or non-compliance are identified (Article 20). Conformity assessments (Article 43), EU declarations of conformity (Article 47), and CE marking (Article 48) anchor legal accountability, while Article 49 requires registration of high-risk systems in the EU database established under Article 71. Under Article 16(k)–(l) and Recital 80, providers must also demonstrate conformity upon reasoned request by competent authorities and ensure compliance with accessibility requirements.

To navigate these burdens, particularly for complex or sensitive systems (e.g., biometric categorization or risk scoring), organizations are increasingly turning to Fundamental Rights Impact Assessments (FRIAs). Legally, Article 27 requires FRIAs from certain deployers of Annex III high-risk systems, such as bodies governed by public law and private entities providing public services. However, many providers and other actors adopt FRIAs voluntarily as governance tools to complement mandatory conformity processes. Unlike Data Protection Impact Assessments (DPIAs), which focus narrowly on privacy, FRIAs examine a broader array of rights, such as non-discrimination, freedom of expression, and access to justice [12,13]. In the absence of harmonized FRIA methodologies, structured scaffolding tools such as ontologies, AI risk cards, and ethical checklists are often deployed [13,14]. The ALTAI checklist, developed by the European Commission as a soft-law instrument for trustworthy AI, is frequently used in this context. While voluntary, ALTAI’s structured interrogation of fairness, oversight, and societal impact helps operationalize abstract governance principles and supports early-stage risk mapping [15]. RegTech solutions, including the AI Risk Ontology (AIRO), system transparency cards, and modular FRIA ontologies, are being explored as ways to bridge high-level legal obligations and technical workflows [13,14]. These tools enable traceable, machine-readable documentation and may lower the procedural burden of conformity assessments and audit readiness.
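The Article 27(1) trigger combines the deployer's legal status with the Annex III area of the system. The minimal sketch below assumes the Annex III classification is already known; `HighRiskDeployment` and `fria_required` are hypothetical names, and the sketch ignores procedural details such as relying on a previously conducted FRIA for similar cases (Article 27(2)).

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class HighRiskDeployment:
    annex_iii_point: int               # Annex III area, 1-8 (e.g. 5 = essential services)
    annex_iii_subpoint: Optional[str]  # e.g. "b" for point 5(b); None if not needed
    public_law_body: bool              # deployer is a body governed by public law
    private_public_service: bool       # private entity providing public services

def fria_required(d: HighRiskDeployment) -> bool:
    """Sketch of the Article 27(1) trigger for a Fundamental Rights Impact Assessment."""
    # Systems in the critical-infrastructure area (Annex III, point 2) are excluded
    if d.annex_iii_point == 2:
        return False
    # Public-law bodies and private providers of public services must perform a FRIA
    if d.public_law_body or d.private_public_service:
        return True
    # Any deployer of credit-scoring (5(b)) or life/health insurance pricing (5(c)) systems
    return d.annex_iii_point == 5 and d.annex_iii_subpoint in ("b", "c")

# Hypothetical example: a private bank deploying a credit-scoring system
print(fria_required(HighRiskDeployment(5, "b", False, False)))  # True
```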

Non-compliance with the AI Act can result in administrative fines of up to €35 million or 7% of total worldwide annual turnover, whichever is higher, for engaging in prohibited practices; up to €15 million or 3% for most other violations; and up to €7.5 million or 1% for supplying incorrect, incomplete, or misleading information to authorities (Article 99). For SMEs and start-ups, the lower of the two amounts applies (Article 99(6)). Enforcement, however, depends on the harmonization of standards (Articles 40–42) and the administrative capabilities of Member States. In this context, “compliance realism” suggests that incremental adherence, supported by modular frameworks like ALTAI or risk ontologies, may be more feasible for many organizations than complete alignment with aspirational governance ideals. These structural gaps and their implications for compliance tooling are further discussed in Sections 3.4 and 3.5.
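The fine ceilings follow a rule that is easy to misread: for most undertakings the cap is the higher of the fixed amount and the turnover percentage, whereas for SMEs and start-ups it is the lower (Article 99(6)). The sketch below encodes only that ceiling; the category keys and the function name are hypothetical labels, and the fine actually imposed is set case by case within the cap.

```python
# Article 99(3)-(5): (fixed cap in EUR, percentage of total worldwide annual turnover)
FINE_CAPS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}

def fine_ceiling(infringement: str, worldwide_turnover_eur: float, sme: bool) -> float:
    """Upper bound of the administrative fine; actual fines are set case by case."""
    fixed_cap, pct = FINE_CAPS[infringement]
    turnover_cap = worldwide_turnover_eur * pct / 100
    # Whichever is higher for undertakings; whichever is lower for SMEs (Article 99(6))
    return min(fixed_cap, turnover_cap) if sme else max(fixed_cap, turnover_cap)

print(fine_ceiling("prohibited_practice", 2_000_000_000, sme=False))  # 140000000.0
print(fine_ceiling("prohibited_practice", 50_000_000, sme=True))      # 3500000.0
```

The SME rule matters most for small firms: in the second example, the turnover-based cap is a tenth of the fixed amount.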

3.1.6. General-Purpose AI (GPAI)

Although general-purpose AI (GPAI) does not form a separate risk tier under the EU AI Act, the Regulation establishes a dedicated framework for GPAI models, defined in Article 3(63) as AI models, often trained on large amounts of data using self-supervision at scale, that display significant generality, can competently perform a wide range of distinct tasks, and can be integrated into a variety of downstream systems or applications. These often include large language models (LLMs), vision transformers, and multimodal systems used across various domains. Articles 51–55 outline obligations for GPAI providers, including:

- Transparency documentation on model capabilities, limitations, and risk areas (Article 53).

- Model evaluation, including adversarial testing, and the assessment and mitigation of systemic risks (Article 55, for systemic-risk GPAI models only).

- Technical documentation detailing architecture, training, and dataset provenance (Article 53).

- Reduced documentation duties for models released under free and open-source licences, an exemption that does not extend to systemic-risk GPAI models (Article 53(2)).
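Which of these obligations apply turns on whether a GPAI model is classified as posing systemic risk. Under Article 51(2), a model is presumed to have high-impact capabilities when the cumulative compute used for its training exceeds 10^25 floating-point operations. The sketch below checks that presumption; the 6 × parameters × tokens estimate is a common rule of thumb for dense transformer training, not a method defined by the Act, and the model sizes in the example are hypothetical.

```python
# Article 51(2): presumption of high-impact capabilities above this training compute
SYSTEMIC_RISK_THRESHOLD_FLOP = 1e25

def estimate_training_flop(n_parameters: float, n_training_tokens: float) -> float:
    # Rule of thumb for dense transformers (~6 FLOP per parameter per token); not from the Act
    return 6 * n_parameters * n_training_tokens

def presumed_systemic_risk(training_flop: float) -> bool:
    # Only a presumption: the Commission can also designate models under Article 51(1)(b)
    return training_flop > SYSTEMIC_RISK_THRESHOLD_FLOP

# Hypothetical models, each trained on 15 trillion tokens
for params in (70e9, 400e9):
    flop = estimate_training_flop(params, 15e12)
    print(f"{params:.0e} parameters: {flop:.1e} FLOP, presumed systemic risk: {presumed_systemic_risk(flop)}")
```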

Downstream AI system providers that integrate GPAI models into high-risk contexts must obtain and use the information made available under Article 53 to meet their own obligations. Furthermore, if a deployer, distributor, importer, or other third party substantially modifies a high-risk AI system, or alters the intended purpose of an AI system, including a general-purpose AI system, so that it becomes high-risk under Article 6, it is legally reclassified as a provider under Article 25. This entails full compliance with provider obligations, including technical documentation, conformity assessment, and risk management. These dynamics create a compliance chain in which multiple entities may share accountability for the same outcome.

The regulatory treatment of GPAI thus shifts the focus from application-specific compliance to model-level governance. It also blurs traditional actor categories by distributing compliance responsibilities across development and deployment phases. These dynamics will influence how actor roles are defined and operationalized, which is the focus of the next section.

3.1.7. The AI Value Chain and Role-Specific Compliance

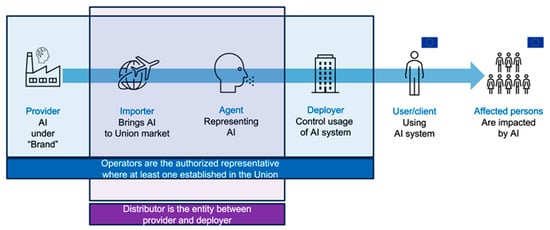

The preceding sections have outlined the EU AI Act’s risk-based structure and associated obligations. To understand how these requirements are applied in practice, however, they must be examined within the broader AI value chain. While the Act assigns distinct legal responsibilities to roles such as providers and deployers, these actors operate within a larger ecosystem of interdependent entities. The EU AI Act establishes a structured taxonomy of actors involved in the development, distribution, and use of AI systems. Article 3 defines the following actors:

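- Provider (Art. 3(3)): a natural or legal person, public authority, agency, or other body that develops an AI system or a general-purpose AI model, or has one developed, and places it on the market or puts it into service under its own name or trademark, whether for payment or free of charge.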

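- Deployer (Art. 3(4)): a natural or legal person, public authority, agency, or other body using an AI system under its authority, except where the system is used in the course of a personal non-professional activity.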

- Authorised Representative (Art. 3(5)): a natural or legal person located or established in the Union who has received and accepted a written mandate from a provider established outside the Union to carry out, on its behalf, the obligations and procedures established by the Regulation.

- Importer (Art. 3(6)): any natural or legal person established in the Union who places an AI system on the Union market that originates from a third country.

- Distributor (Art. 3(7)): any natural or legal person in the supply chain, other than the provider or importer, who makes an AI system available on the Union market without modifying its properties.

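- Product Manufacturer (one of the “operators” listed in Art. 3(8)): a manufacturer that places a product on the market or puts it into service under its own name or trademark together with an integrated AI system; for high-risk AI systems that are safety components of products covered by Annex I, Section A, the product manufacturer is considered the provider (Art. 25(3)).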

Although not formally recognized as actors, individuals affected by the deployment of AI systems are central to the Act’s risk-based logic, particularly in Recitals 5–9 and 61–63.

A detailed analysis of the corresponding obligations and liability implications for each actor type, categorized by risk level, is presented in Section 3.1.8. Figure 2 illustrates an exemplary flow that maps the journey of AI systems from development to deployment and societal impact, and identifies key actors, including providers, importers, authorized representatives, distributors, and deployers.

Figure 2.

Flow of AI Systems and different Actors.

3.1.8. Summary of Role-Based Obligations Under the EU AI Act

The preceding analysis of the EU AI Act’s risk-based structure and value chain logic demonstrates that legal responsibilities are both tiered by risk and distributed across multiple actor types. However, these obligations are not applied evenly. Instead, they depend on both the actor’s role, as defined in Article 3, and the classification of the AI system they develop, distribute, or use. To synthesize this complexity, Table 3 presents a role–risk matrix that maps the core legal duties imposed on each actor type across the risk categories outlined in Articles 5, 6, and 50. All actors marked with an asterisk (*) in Table 3 are subject to Article 25: they become providers of high-risk AI systems and assume the corresponding provider obligations if the conditions of Article 25(1) are met. Because unacceptable-risk practices are banned outright under Article 5, they entail no compliance obligations beyond the prohibition itself and are not included in Table 3. Likewise, minimal-risk systems carry no risk-specific obligations, as noted in Section 3.1.4, and are therefore excluded. As mentioned in Section 3.1.7, although protected by the EU AI Act, users and affected persons (illustrated in Figure 2) are not considered actors under the Act and are therefore excluded from the matrix. Their rights and interests instead inform the Act’s normative rationale, as set out in Recitals 5–9 and 61–63.

Table 3.

Legal obligations per role and risk level (EU Regulation 2024/1689).

The matrix of actor-specific obligations across risk levels reveals a fundamental regulatory asymmetry within the EU AI Act: entities closer to the development phase, such as providers, bear expansive, technically intensive compliance responsibilities. In contrast, actors downstream in the value chain, including distributors, importers, and deployers, are often expected to fulfil operational, ethical, and legal duties without comparable access to system architecture, training data, or design rationale. This fragmented accountability structure creates misaligned incentives and enforcement gaps. For example, deployers must ensure proper human oversight and operational use in accordance with Articles 14 and 26, and in some instances, conduct a Fundamental Rights Impact Assessment under Article 27. Distributors and importers are likewise tasked with verifying compliance under Articles 23 and 24, but may lack the expertise or authority to interrogate opaque algorithmic systems.
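The asymmetry can be made concrete by encoding part of the role–risk matrix as a lookup table. The sketch below is an illustrative subset built from articles of the Regulation (Articles 9–27 and 43–50), not a reproduction of Table 3; the enum and function names are hypothetical, and each entry compresses an article into a short label.

```python
from enum import Enum

class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"
    AUTHORISED_REPRESENTATIVE = "authorised representative"
    IMPORTER = "importer"
    DISTRIBUTOR = "distributor"

class Tier(Enum):
    HIGH = "high risk"
    LIMITED = "limited risk"

# Illustrative subset of obligations; labels are simplified summaries of the articles
OBLIGATIONS = {
    (Role.PROVIDER, Tier.HIGH): [
        "Arts 9-15 requirements", "Art 16 provider duties", "Art 17 quality management system",
        "Art 43 conformity assessment", "Arts 47-48 declaration and CE marking", "Art 49 registration",
    ],
    (Role.DEPLOYER, Tier.HIGH): [
        "Art 26 use per instructions, human oversight, monitoring",
        "Art 27 FRIA (certain deployers only)",
    ],
    (Role.AUTHORISED_REPRESENTATIVE, Tier.HIGH): ["Art 22 tasks under the written mandate"],
    (Role.IMPORTER, Tier.HIGH): ["Art 23 verification before placing on the market"],
    (Role.DISTRIBUTOR, Tier.HIGH): ["Art 24 verification before making available"],
    (Role.PROVIDER, Tier.LIMITED): ["Art 50(1) disclose AI interaction", "Art 50(2) mark synthetic content"],
    (Role.DEPLOYER, Tier.LIMITED): [
        "Art 50(3) inform about emotion recognition or biometric categorisation",
        "Art 50(4) disclose deepfakes",
    ],
}

def obligations(role: Role, tier: Tier) -> list[str]:
    # Roles without tier-specific duties return an empty list
    return OBLIGATIONS.get((role, tier), [])

# Compare the number of high-risk duty clusters per role
for role in Role:
    print(role.value, len(obligations(role, Tier.HIGH)))
```

Even in this simplified form, the provider row is far longer than those of downstream actors, while the downstream entries depend on information only the provider holds.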

While these asymmetries are structurally embedded in the value chain, the EU AI Act also seeks to temper them through explicit proportionality safeguards. The Act does not impose uniform obligations irrespective of context; instead, it acknowledges that compliance must remain reasonable and proportionate to the AI system’s risk category and the role and capacity of the actor involved. Article 9(3) and (5) stipulate that risks need only be mitigated insofar as they can be “reasonably mitigated or eliminated” to achieve an “acceptable residual risk.” Similarly, Recital 109 clarifies that obligations imposed on providers of general-purpose AI models must be “commensurate and proportionate to the type of model provider,” thereby recognizing the disparity between multinational AI developers and smaller firms. In addition, Recital 143, together with Articles 58 and 62, mandates tailored support for SMEs and start-ups, including preferential and, in some cases, cost-free access to regulatory sandboxes, with costs required to remain “fair and proportionate.” Taken together, these provisions acknowledge that actors’ capacities vary significantly and that legal obligations should be calibrated accordingly—particularly when comparing global technology providers to municipal agencies, SMEs, or non-specialist deployers.

On this uneven playing field, one-size-fits-all compliance strategies are impractical and potentially exclusionary. Moreover, as discussed in Section 3.1.6, the rise of GPAI further blurs traditional role distinctions. Under Article 25, distributors, importers, deployers, or other third parties that substantially modify a high-risk system, alter a system’s intended purpose so that it falls within the high-risk categories of Article 6, or put their own name or trademark on a high-risk system are legally reclassified as providers. In such cases, they inherit the complete set of provider obligations under Article 16. This regulatory role fluidity introduces additional compliance complexity and risk, particularly for actors with limited institutional or technical capacity.
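The Article 25(1) reclassification test can be read as three alternative triggers, any one of which turns a distributor, importer, deployer, or other third party into the provider. The minimal sketch below assumes each trigger has already been assessed as a yes/no legal judgment; the class and function names are hypothetical. Under Article 25(2), the initial provider is then no longer considered the provider of that specific system but must cooperate with the new provider.

```python
from dataclasses import dataclass

@dataclass
class ThirdPartyConduct:
    brands_high_risk_system: bool           # puts own name or trademark on a high-risk system
    substantially_modifies_high_risk: bool  # system remains high-risk after the modification
    repurposes_into_high_risk: bool         # new intended purpose makes the system high-risk

def article_25_triggers(c: ThirdPartyConduct) -> list[str]:
    """Return the Article 25(1) points that would make the actor a provider."""
    triggers = []
    if c.brands_high_risk_system:
        triggers.append("25(1)(a)")
    if c.substantially_modifies_high_risk:
        triggers.append("25(1)(b)")
    if c.repurposes_into_high_risk:
        triggers.append("25(1)(c)")
    return triggers

def becomes_provider(c: ThirdPartyConduct) -> bool:
    # Any single trigger suffices; the actor then carries the Article 16 provider obligations
    return bool(article_25_triggers(c))

# Hypothetical example: a deployer repurposes a general-purpose chatbot to screen job applicants
screening_bot = ThirdPartyConduct(False, False, True)
print(article_25_triggers(screening_bot))  # ['25(1)(c)']
```

The hard part in practice is not the disjunction but each underlying judgment, above all whether a modification is "substantial".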

3.2. Ethics and Governance: The ALTAI Checklist

3.2.1. Origins and Principles

The Assessment List for Trustworthy Artificial Intelligence (ALTAI) was introduced in 2020 by the European Commission’s High-Level Expert Group on AI as a self-assessment tool grounded in the Ethics Guidelines for Trustworthy AI. It operationalizes the seven key requirements identified in the Guidelines: human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination and fairness; societal and environmental well-being; and accountability. These principles reflect a normative commitment to ensuring AI is not only technically efficient but also aligned with fundamental rights and societal values [16]. ALTAI’s strength lies in translating these abstract ethical ideals into structured, measurable dimensions. Unlike high-level declarations such as the OECD Principles or UNESCO’s Recommendation on the Ethics of Artificial Intelligence, ALTAI is designed explicitly for practical use by developers and deployers, making it a cornerstone of the EU’s AI governance ecosystem [17].
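The seven requirements lend themselves to a simple structured record of self-assessment answers. The sketch below is hypothetical: ALTAI poses questions per requirement, but the scoring rule used here (the share of applicable questions answered affirmatively) is an illustrative assumption, not ALTAI's own method, and the function name is invented for this example.

```python
from typing import Optional

ALTAI_REQUIREMENTS = (
    "Human agency and oversight",
    "Technical robustness and safety",
    "Privacy and data governance",
    "Transparency",
    "Diversity, non-discrimination and fairness",
    "Societal and environmental well-being",
    "Accountability",
)

def altai_coverage(answers: dict[str, list[Optional[bool]]]) -> dict[str, Optional[float]]:
    """Share of applicable questions answered affirmatively, per requirement.

    None marks a question judged not applicable; a requirement with no
    applicable answers gets None rather than a misleading score.
    """
    result = {}
    for requirement in ALTAI_REQUIREMENTS:
        applicable = [a for a in answers.get(requirement, []) if a is not None]
        result[requirement] = sum(applicable) / len(applicable) if applicable else None
    return result

report = altai_coverage({"Transparency": [True, False, None, True], "Accountability": [True]})
print(report["Transparency"], report["Accountability"], report["Human agency and oversight"])
```

A score of this kind measures procedural coverage only; a high value says nothing about real-world outcomes and should not be read as evidence of compliance.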

3.2.2. Role in EU Governance

Within the EU regulatory landscape, ALTAI is a foundational instrument and a bridge between soft law and formal regulation. Though voluntary, it has shaped the ethical underpinnings of the EU AI Act and the guiding principles of the European AI Strategy and Coordinated Plan [18]. It enables entities to align their operations with expectations of transparency, accountability, and fairness before any direct legal mandate applies. ALTAI helps operationalize the EU’s emphasis on trustworthy AI by translating principles into data governance tasks, particularly in auditability and data traceability [15]. This integration is still evolving, however. The EU AI Act mandates conformity assessments and documentation but does not reference ALTAI in its legal text, let alone codify it as a binding checklist. ALTAI’s influence is therefore normative, serving more as ethical scaffolding than enforceable regulation. Even so, it has gained practical traction as an auxiliary tool in semi-formal governance settings, notably to support Fundamental Rights Impact Assessments (FRIAs) where no sector-specific templates exist. This functional absorption gives ALTAI quasi-regulatory influence despite its voluntary nature, with two implications. First, its ethical framing shapes compliance expectations even in the absence of a legal obligation. Second, its operational under-specification limits its utility unless it is translated into auditable processes.

3.2.3. Strengths and Normative Value

The key strength of ALTAI is its accessibility and comprehensiveness. It provides a step-by-step tool, with questions and indicators, for organizations to self-assess compliance with the EU’s ethical AI vision. ALTAI is particularly effective in articulating the ethical pillars of accountability, explainability, and usability in a manner intelligible to non-specialist audiences [19]. Furthermore, ALTAI represents a significant shift toward procedural ethics, aiming to embed ethical principles throughout system design and development rather than merely evaluating them ex post. Its modular design can be tailored to varied contexts, making it a flexible and scalable ethics tool [20]. Normatively, ALTAI reinforces the idea of ethics as a continuous, systemic obligation in AI governance rather than a one-time evaluation. Its emphasis on human oversight and technical robustness supports alignment with broader EU policy on fundamental rights and sustainable development. It exemplifies governance by design, embedding compliance and accountability directly into the development pipeline [21].

3.2.4. Sector-Specific Ethical Tensions

While the ALTAI checklist provides a high-level overview of ethical principles, concrete deployments of AI systems reveal practical tensions between these ideals. In healthcare, large language models used in clinical decision support often lack explainability, raising concerns about patient autonomy and trust [22]. In law enforcement contexts such as border control, the use of biometric surveillance tools like iBorderCtrl has sparked criticism over proportionality, privacy, and discriminatory risk [11]. Ethical principles also frequently conflict with one another. Transparency and explainability may undermine data minimization or expose sensitive operational logic, while fairness-enhancing techniques may reduce model performance or introduce new trade-offs. These tensions underscore the need for ethical frameworks that not only articulate principles but also provide mechanisms for prioritizing and resolving competing values in context-specific applications.

3.2.5. Limitations in Security and Enforceability

Despite its normative strengths, ALTAI has several limitations that constrain its practical utility. First, its voluntary nature means there are no formal enforcement mechanisms, raising questions about its impact in commercial settings where ethical commitments may be deprioritized [23]. Second, the checklist prioritizes procedural ethics over outcome accountability, providing limited guidance on evaluative metrics or real-world benchmarks. This self-assessment structure leaves room for performative ethics, also known as “ethics washing” [24]. Third, ALTAI remains too general for high-risk areas such as law enforcement or military systems, where adversarial robustness and technical security standards are essential [12]. Lastly, ALTAI assumes technical, financial, and human capacities that are often unavailable to small and medium-sized enterprises (SMEs). Without standardized compliance tools or external audit mechanisms, ALTAI risks being inaccessible or ineffective for under-resourced actors [20]. These structural limitations frame ALTAI as a foundational, yet ultimately limited, ethical governance instrument.

3.3. Comparative Compliance Frameworks

Existing AI governance models differ not only in scope and application but also in their underlying assumptions about how compliance should be structured. Some reflect a “governance by audit” paradigm, emphasizing post-deployment documentation and oversight. Others adopt a “governance by design” approach, embedding legal and ethical obligations into the development lifecycle itself. This distinction is particularly relevant here, because this review seeks to identify what future compliance frameworks need in order to be both auditable and adaptable. Section 3.3.1 examines audit-oriented models such as the OECD AI Principles and the NIST AI Risk Management Framework (RMF), which provide voluntary, checklist-based governance structures. Section 3.3.2 turns to design-integrated approaches, including ISO/IEC 42001 and Responsible AI models, which reflect a shift toward iterative, feedback-driven governance. These models conceptualize compliance not as a static certification event but as a dynamic process, an approach increasingly reflected in modern cybersecurity and risk frameworks and particularly suited to complex socio-technical systems.

3.3.1. Audit-Oriented Frameworks

NIST AI Risk Management Framework (RMF)

The National Institute of Standards and Technology (NIST) introduced its AI Risk Management Framework in 2023 to provide voluntary, sector-agnostic guidance for managing AI-related risks. Structured around four core functions (Govern, Map, Measure, and Manage), the RMF supports innovation while promoting responsible AI use [5]. However, the RMF’s emphasis on post-deployment monitoring limits its adaptability in fast-evolving or adversarial contexts: it tends to treat risk as a static entity rather than as a co-evolving relationship between the system and its context [25]. Furthermore, its uptake among SMEs remains low, mainly due to high documentation burdens and limited tailoring for lower-resourced actors. Its focus on governance by audit also risks overlooking upstream design flaws that could otherwise be mitigated through embedded safeguards.

OECD AI Principles

The OECD’s 2019 AI Principles, endorsed by over 40 countries, articulate five values-based commitments: inclusive growth, sustainable development and well-being; human-centered values and fairness; transparency and explainability; robustness, security and safety; and accountability. These principles have informed numerous national AI strategies, including the ethical foundations of the EU AI Act. However, the OECD framework remains non-binding and conceptually broad: its implementation is inconsistent across jurisdictions, and its guidance lacks operational specificity [26]. While it contributes to normative alignment, its function remains primarily aspirational and symbolic, and its effect depends heavily on local political will and the degree of regulatory integration.

3.3.2. Design-Integrated Frameworks

ISO/IEC 42001—AI Management Systems

ISO/IEC 42001, released in late 2023, is the first international standard for AI Management Systems (AIMS). Developed jointly by ISO and the IEC, it provides organizations with structured procedures for risk management, traceability, and continuous improvement. It is designed to be compatible with ISO/IEC 27001 [45] and ISO 9001 [46], embedding AI governance into the broader strategic and operational fabric of organizations. The framework embodies “governance by design” by requiring organizations to treat AI compliance as an ongoing management process rather than a one-time audit. Its emphasis on continuous documentation, leadership engagement, and iterative improvement closely mirrors the Plan-Do-Check-Act (PDCA) cycle, an established model for quality and security governance. However, ISO/IEC 42001 poses high entry barriers for SMEs, both in cost and in procedural formality [27], and its uptake remains skewed toward large institutions, particularly in regulated sectors such as finance [28].
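To make the PDCA analogy concrete, the following minimal Python sketch models a management-system improvement loop. It is an illustration under stated assumptions, not a procedure prescribed by ISO/IEC 42001: the `Finding` record, the severity scale, and the `capacity` parameter (how many findings an organization can close per cycle) are hypothetical. What it shows is that a low-capacity actor still converges on a closed set of findings, only over more cycles.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Finding:
    """A hypothetical nonconformity recorded in an AI management system."""
    name: str
    severity: int  # illustrative scale: higher means more urgent
    resolved: bool = False


@dataclass
class PDCALog:
    """Keeps one entry per completed Plan-Do-Check-Act cycle."""
    cycles: List[Dict[str, List[str]]] = field(default_factory=list)


def run_pdca_cycle(findings: List[Finding], capacity: int, log: PDCALog) -> List[str]:
    """Run one PDCA iteration and return the names of findings still open.

    `capacity` is an assumed limit on how many findings can be closed per
    cycle; it is not a parameter defined by ISO/IEC 42001.
    """
    if capacity < 1:
        raise ValueError("capacity must be at least 1, or the loop never progresses")
    # Plan: rank open findings by severity and select what capacity allows.
    open_items = [f for f in findings if not f.resolved]
    plan = sorted(open_items, key=lambda f: f.severity, reverse=True)[:capacity]
    # Do: apply the planned corrective actions (simulated by marking them resolved).
    for f in plan:
        f.resolved = True
    # Check: measure what is still open after this cycle.
    remaining = [f.name for f in findings if not f.resolved]
    # Act: record the outcome so the next cycle starts from it.
    log.cycles.append({"addressed": [f.name for f in plan], "remaining": remaining})
    return remaining


# Usage: a low-capacity actor closing one finding per cycle.
findings = [
    Finding("undocumented data sources", 1),
    Finding("missing risk register", 3),
    Finding("no log retention policy", 2),
]
log = PDCALog()
while run_pdca_cycle(findings, capacity=1, log=log):
    pass  # each pass stands for one management-review cycle
print(len(log.cycles))  # 3
print(log.cycles[0]["addressed"])  # ['missing risk register']
```

Raising `capacity` shortens the loop without changing its structure, which is the sense in which an iterative management system can scale to the resources available.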

Responsible AI Models

Responsible AI (RAI) models aim to bridge the gap between normative ethics and legal compliance by embedding technical safeguards in the systems themselves. These models often draw on design philosophy, building obligations directly into system architecture. For instance, deontic logic can be used to encode legal and ethical rules as system constraints, effectively “building in” responsibility [29]. Other emerging RAI models use decentralized governance architectures, such as blockchain-based tokenized compliance tools; while these promote traceability and stakeholder participation, they face challenges in institutional adoption and interoperability. RAI models need to be context-aware, interdisciplinary, and adaptive. These qualities align well with circular compliance strategies but are difficult to realize without clear regulatory scaffolding or technical maturity [30].
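The deontic-logic idea can be illustrated with a deliberately simplified Python sketch. It is not the formalism proposed in [29]; it only shows the general pattern of encoding obligations (must hold), prohibitions (must not hold), and permissions (may hold) as machine-checkable constraints over a system state. The norm labels and state keys are hypothetical examples.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List

State = Dict[str, bool]


class Modality(Enum):
    OBLIGATION = "O"   # the condition must hold
    PROHIBITION = "F"  # the condition must not hold
    PERMISSION = "P"   # the condition may hold; never a violation by itself


@dataclass(frozen=True)
class Norm:
    label: str
    modality: Modality
    condition: Callable[[State], bool]


def violations(norms: List[Norm], state: State) -> List[str]:
    """Return the labels of all norms that the given system state violates."""
    violated = []
    for norm in norms:
        holds = norm.condition(state)
        if norm.modality is Modality.OBLIGATION and not holds:
            violated.append(norm.label)
        elif norm.modality is Modality.PROHIBITION and holds:
            violated.append(norm.label)
    return violated


# Hypothetical norms for an automated decision pipeline.
norms = [
    Norm("human override available", Modality.OBLIGATION,
         lambda s: s.get("override_available", False)),
    Norm("decision released without logging", Modality.PROHIBITION,
         lambda s: not s.get("logged", False)),
    Norm("synthetic data used for testing", Modality.PERMISSION,
         lambda s: s.get("synthetic_data", False)),
]

state = {"override_available": True, "logged": False, "synthetic_data": True}
print(violations(norms, state))  # ['decision released without logging']
```

Because each norm is checked against the state rather than documented after the fact, a violation surfaces at run time, which is the “building in” of responsibility described above.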

3.3.3. Alignment and Gaps

Despite differences in enforcement, scope, and formality, audit- and design-oriented frameworks share broad commitments to transparency, accountability, and fairness. However, key implementation gaps remain. For example, while the EU AI Act mandates non-discrimination, it does not specify computational fairness standards, leaving the choice of fairness metrics to providers [31]. More fundamentally, the divide between governance by design and governance by audit is not merely procedural but epistemological: the former views compliance as embedded and evolving, whereas the latter treats it as external and retrospective. Effective AI governance must treat systems as socio-technical artifacts, requiring continuous oversight, contextual adaptation, and cross-functional collaboration [25], principles that are poorly reflected in static audit regimes. This analysis supports the argument that a living, auditable framework, one that balances a minimal set of enforceable requirements with real-world adaptability, is necessary to support low-capacity actors within the EU AI compliance ecosystem.
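Why an unspecified fairness standard matters can be shown with a small worked example. The Python sketch below applies two common fairness metrics, demographic parity and equal opportunity, to invented toy predictions for two groups; the data and function names are illustrative assumptions, and neither metric is prescribed by the AI Act. The same predictions satisfy one metric exactly while failing the other, so the provider’s choice of metric can decide whether a system appears non-discriminatory.

```python
from typing import Sequence


def selection_rate(y_pred: Sequence[int]) -> float:
    """Share of individuals receiving the positive outcome."""
    return sum(y_pred) / len(y_pred)


def true_positive_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of truly positive individuals who receive the positive outcome."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    if not preds_for_positives:
        raise ValueError("group has no positive labels")
    return sum(preds_for_positives) / len(preds_for_positives)


def demographic_parity_gap(pred_a: Sequence[int], pred_b: Sequence[int]) -> float:
    """Absolute difference in selection rates between two groups."""
    return abs(selection_rate(pred_a) - selection_rate(pred_b))


def equal_opportunity_gap(true_a: Sequence[int], pred_a: Sequence[int],
                          true_b: Sequence[int], pred_b: Sequence[int]) -> float:
    """Absolute difference in true positive rates between two groups."""
    return abs(true_positive_rate(true_a, pred_a) - true_positive_rate(true_b, pred_b))


# Invented toy data: 1 = positive outcome (e.g. an application approved).
true_a, pred_a = [1, 1, 0, 0], [1, 1, 0, 0]
true_b, pred_b = [1, 1, 0, 0], [1, 0, 1, 0]

print(demographic_parity_gap(pred_a, pred_b))                 # 0.0
print(equal_opportunity_gap(true_a, pred_a, true_b, pred_b))  # 0.5
```

Both groups are approved at the same rate, yet qualified members of group B are approved only half as often as those of group A.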

3.4. Implementation Gaps and Legal Complexity

3.4.1. Challenges in Translating Regulation to Practice

While the EU AI Act outlines a detailed framework for managing the risks associated with artificial intelligence, the translation of its provisions into everyday institutional operations remains highly uneven. This is particularly evident in high-risk domains such as healthcare and finance, where the complexity of AI systems often outpaces current legal and technical infrastructures. In healthcare, compliance mechanisms vary significantly across jurisdictions, and regulatory clarity is often lacking despite the AI Act’s harmonizing intent [32]. In finance, research emphasizes the importance of early compliance integration and transparent documentation [28], noting that while many institutions recognize the need for regulatory oversight, few have successfully implemented continuous monitoring practices. This lag is symptomatic of a broader implementation challenge: translating legal requirements into organizational behavior in a timely, consistent manner.

3.4.2. Resource Asymmetry

The Burden on SMEs: A recurring concern in AI governance is the disproportionate burden of regulatory compliance on small and medium-sized enterprises (SMEs). ISO/IEC 42001, while comprehensive, imposes significant documentation, monitoring, and auditing requirements that may exceed the capacity of resource-constrained actors. SMEs struggle to adapt management processes to align with ISO/IEC 42001 due to both technical and human resource limitations [27]. Similarly, the EU AI Act mandates conformity assessments, data quality checks, and traceability obligations for high-risk systems, requirements that often demand legal, technical, and regulatory expertise well beyond the operational scope of smaller firms. The redrafting of the AI Act prioritized fundamental rights but failed to account for disparities in resources across the innovation ecosystem [33]. This imbalance raises concerns about the regulation’s inclusivity and its potential to unintentionally stifle smaller-scale innovation. For such actors, RegTech tools can serve as scaffolding mechanisms, automating routine checks, generating documentation, or embedding legal logic, thereby reducing the compliance burden without sacrificing legal sufficiency [34].
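As a hedged illustration of the kind of routine check a RegTech tool might automate, the following Python sketch flags log records that were deleted before a retention period elapsed and produces a one-line summary for the compliance file. The `LogRecord` structure and the 180-day `RETENTION` value are placeholder assumptions; the retention period that actually applies should be taken from the AI Act and any sector-specific law, not from this example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# Placeholder retention period (assumption); confirm the applicable period before real use.
RETENTION = timedelta(days=180)


@dataclass
class LogRecord:
    record_id: str
    created: datetime
    deleted: Optional[datetime] = None  # None means the record is still retained


def premature_deletions(records: List[LogRecord], retention: timedelta = RETENTION) -> List[str]:
    """Return IDs of records deleted before the retention period elapsed."""
    return [
        r.record_id
        for r in records
        if r.deleted is not None and r.deleted - r.created < retention
    ]


def retention_summary(records: List[LogRecord], retention: timedelta = RETENTION) -> str:
    """One-line summary suitable for pasting into compliance documentation."""
    flagged = premature_deletions(records, retention)
    return (f"{len(flagged)} of {len(records)} log records deleted before the "
            f"{retention.days}-day retention period: {', '.join(flagged) or 'none'}")


records = [
    LogRecord("r1", datetime(2025, 1, 1), deleted=datetime(2025, 3, 1)),  # 59 days
    LogRecord("r2", datetime(2025, 1, 1), deleted=datetime(2025, 8, 1)),  # 212 days
    LogRecord("r3", datetime(2025, 1, 1)),                                # still retained
]
print(retention_summary(records))
# 1 of 3 log records deleted before the 180-day retention period: r1
```

A check of this kind needs no access to model internals, which is why it suits deployers and other low-capacity actors.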

3.4.3. Fragmentation and Contradictions: GDPR vs. EU AI Act

Another layer of complexity emerges from the legal fragmentation between the EU AI Act and the General Data Protection Regulation (GDPR). While both frameworks aim to safeguard fundamental rights, they originate from different regulatory logics: product safety under the AI Act and privacy protection under the GDPR. This divergence creates implementation inconsistencies, particularly regarding lawful data processing, consent requirements, and impact assessments. For instance, Article 14 of the AI Act mandates human oversight of high-risk AI systems, while Article 22 of the GDPR restricts decisions based solely on automated processing; the two provisions frame human involvement differently, and deployers must reconcile both. Similarly, the GDPR’s principles of data minimization (Art. 5(1)(c)) and purpose limitation (Art. 5(1)(b)) can conflict with AI models that require large, diverse datasets for effective training. The AI Act’s lack of computational specificity in defining fairness and non-discrimination further complicates its alignment with the GDPR’s more explicit mandates on individual rights [31]. These contradictions underscore the need for interpretive guidance and integrated regulatory oversight. Without a harmonized interface between the GDPR and the EU AI Act, deployers are left to navigate overlapping obligations and inconsistent audit demands. Effective compliance will likely depend on sector-specific interpretations, greater administrative coordination, and improved regulatory literacy among AI providers [35].

3.4.4. Iterative Governance as a Low-Burden Strategy for SMEs

While regulatory frameworks such as the EU AI Act and ISO/IEC 42001 offer structured approaches to AI governance, their implementation often presumes institutional capacities (legal, technical, and administrative) that many small and medium-sized enterprises (SMEs) may struggle to meet. This does not imply that SMEs cannot or should not achieve compliance; rather, it suggests that the means of achieving compliance must be adapted to their specific realities. Several studies have noted that static, audit-heavy models impose disproportionate burdens on under-resourced actors [27,36], creating barriers to entry even for actors committed to compliance. In response, iterative governance has emerged as a more sustainable, SME-aligned approach. Rather than treating compliance as a one-time certification or exhaustive audit, iterative models conceptualize it as a continuous, cyclical process of incremental improvement. This reduces immediate administrative pressure while embedding compliance into everyday organizational behavior. Studies of lightweight quality systems have shown that scalable, feedback-driven models lower adoption thresholds by prioritizing contextual flexibility, progressive alignment, and organizational learning [37,38]. Conventional cybersecurity and governance standards, including the NIST framework, often fail to accommodate the specific constraints of SMEs [39]; the same study emphasizes the need for dynamic, responsive, and technologically tailored governance strategies that evolve with an organization’s maturity and risk exposure. Iterative approaches may therefore offer SMEs a pathway to regulatory legitimacy, including under the EU AI Act, without requiring immediate full-spectrum compliance. This vision of iterative, low-friction compliance aligns with emerging regulatory thinking that emphasizes dynamic, context-aware governance.
Scholars have advocated for anticipatory and agile regulation in digital domains, where compliance tools are embedded in daily operations and refined over time through feedback, risk exposure, and institutional learning [40]. Rather than relying solely on static, audit-based mechanisms, these models promote compliance as a process—a living system that adapts to technological change and evolving organizational capacity. Such models suggest that effective AI governance for SMEs may require compliance infrastructures that evolve in parallel with institutional capabilities, rather than demanding immediate, full-spectrum implementation.

3.5. Framing Least Responsible AI Controls

3.5.1. Rationale and Structural Asymmetry

As outlined in Section 3.1.8, the EU AI Act formally allocates responsibilities across various roles, including providers, deployers, and importers. In practice, however, the burden of these obligations is far less symmetrical. Small and medium-sized enterprises (SMEs) in financial services face extensive documentation and impact assessment requirements despite lacking the internal governance structures to meet them [28]. Similarly, public institutions, although subject to strict transparency and oversight expectations, are structurally under-resourced to fulfil them [41]. This reveals a critical governance flaw: formal role-based compliance often demands more than constrained actors can realistically implement, because their operational capacity does not match their legal exposure. Addressing this disconnect calls for what can be termed regulatory realism: assessing legal obligations not by their normative ambition but by their implementability within uneven institutional landscapes [25]. In this context, the central question becomes: what constitutes a legally sufficient and auditable set of controls when full-spectrum compliance is infeasible? For many actors, especially those without access to technical system design or legal expertise, the answer lies not in aspirational ethics but in minimum viable compliance: a control set that fulfils the letter of the law while remaining feasible, traceable, and role-sensitive.

3.5.2. Idealism vs. Legal Sufficiency

Mainstream AI governance frameworks, such as ALTAI, ISO/IEC 42001, and the NIST AI RMF, promote a range of normative values: fairness, explainability, robustness, and human oversight. However, empirical evaluations have highlighted critical gaps. One metric-driven analysis finds that these frameworks fail to account for approximately 69% of known AI security vulnerabilities, a shortfall that weighs most heavily on actors who lack the capacity for technical implementation [9]. While valuable as aspirational instruments, these frameworks frequently fall short of providing enforceable or auditable pathways to compliance. Moreover, regulatory capture by dominant AI providers tends to shift obligations downstream [42]. As a result, deployers, system integrators, and even public sector actors are expected to retain logs, implement oversight, and document risks, often without access to internal model architecture or training data. These role asymmetries create a structural mismatch in formal accountability. Rather than pursuing frameworks that presume full ethical maturity, AI compliance must prioritize what is strictly required. A minimal set of legally anchored controls, each clearly mapped to an article of the EU AI Act, auditable through evidence, and feasibly actionable by constrained actors, provides a more realistic foundation for compliance. This does not imply abandoning ethics; rather, it recognizes the need for structured enablement over idealistic projection.
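A minimal Python sketch of what such a control set could look like as a data structure is given below. Each control carries the EU AI Act article it is mapped to, the roles it is assigned to, and the evidence an auditor would expect; a helper then lists the evidence gaps for a single role. The control identifiers, descriptions, evidence names, and role assignments are hypothetical illustrations, not a legal determination of who owes which obligation.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class Control:
    control_id: str            # hypothetical identifier
    description: str
    article: str               # EU AI Act article the control is mapped to
    roles: FrozenSet[str]      # roles the control is assigned to (illustrative)
    evidence: Tuple[str, ...]  # artefacts an auditor would expect to see


# Illustrative catalogue; article mappings and role assignments are assumptions.
CATALOG = [
    Control("RM-1", "Maintain a documented risk management process", "Art. 9",
            frozenset({"provider"}), ("risk_register",)),
    Control("DG-1", "Record data sources and quality checks", "Art. 10",
            frozenset({"provider"}), ("data_sheet",)),
    Control("HO-1", "Build in human oversight measures", "Art. 14",
            frozenset({"provider"}), ("oversight_design_note",)),
    Control("DEP-1", "Assign competent staff to human oversight", "Art. 26",
            frozenset({"deployer"}), ("oversight_assignment",)),
    Control("DEP-2", "Retain logs under the deployer's control", "Art. 26",
            frozenset({"deployer"}), ("log_store",)),
]


def evidence_gaps(catalog: List[Control], role: str,
                  available: Set[str]) -> List[Tuple[str, str, List[str]]]:
    """List (control_id, article, missing evidence) for controls assigned to `role`."""
    gaps = []
    for control in catalog:
        if role not in control.roles:
            continue  # the control is not assigned to this role
        missing = [e for e in control.evidence if e not in available]
        if missing:
            gaps.append((control.control_id, control.article, missing))
    return gaps


gaps = evidence_gaps(CATALOG, "deployer", {"log_store"})
print(gaps)  # [('DEP-1', 'Art. 26', ['oversight_assignment'])]
```

Filtering by role keeps the audit scope to obligations the actor can actually evidence, which is the role-sensitivity argued for above.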

3.5.3. Enabling Compliance in Low-Capacity Settings

Legal obligations must be translated into structured organizational routines to be effective [32]. Compliance must be enabled, not merely demanded. This argument holds particular weight in AI governance, where obligations such as risk management (Art. 9) or data governance (Art. 10) cannot be fulfilled without accompanying operational structures. Cloud-based, interoperable compliance systems could lower entry barriers for auditability, documentation, and traceability [36]. The AGORA database [43], which catalogues over 330 global AI governance instruments, indicates that most tools lack implementation mechanisms for low-capacity actors. Without tangible compliance infrastructures, legal requirements risk becoming symbolic, reinforcing inequality rather than accountability. As such, the concept of Least Responsible AI Controls offers a governance logic that does not rely on voluntary overcompliance or institutional abundance. Instead, it reflects a baseline of defensible, minimum obligations that actors must meet to comply with the EU AI Act under real-world conditions.

4. Conclusions

The earlier sections demonstrate that, while the European Union’s AI governance system, including the EU AI Act, the ALTAI checklist, and other tools, is broadly structured, it also presents several conceptual and operational challenges. Despite its broad aims, the EU AI Act suffers from unclear definitions (such as fairness), misalignment with other legal frameworks (such as the GDPR), and limited enforcement capacity across Member States [31,35]. Moreover, as Sections 3.2 and 3.3 highlight, governance tools such as ALTAI, the NIST RMF, ISO/IEC 42001, and the OECD Principles are effective at establishing high-level values but struggle in real-world ecosystems with multiple actors and complex risks. These frameworks often assume that organizations have high levels of maturity and technical skill, which is not always the case in either the public or the private sector. Notably, ALTAI’s procedural ethics model lacks direct enforceability and does not provide clear metrics for measuring outcomes, thereby limiting its usefulness in high-risk areas [12,24]. Research on compliance also reveals scalability issues. For example, the NIST RMF is flexible but poorly suited to dynamic, evolving AI systems. Similarly, ISO/IEC 42001, which integrates AI governance into existing management systems, faces adoption challenges due to high costs and stringent requirements, particularly for small and medium-sized enterprises [27,28]. The central assumption across these frameworks is that organizations have sufficient resources, transparency, and regulatory understanding, conditions that are often absent in practice. Instead of aiming for perfect or all-encompassing governance, a more fitting approach would focus on the smallest set of enforceable obligations that actors with limited capacity, such as SMEs and public sector entities, can realistically meet. These actors typically lack access to detailed system internals and specialized compliance infrastructure, which often makes full-spectrum compliance infeasible.
Frameworks should emphasize a minimal set of requirements to address the identified accountability gap that arises when legal demands exceed operational capabilities. Collectively, these findings underscore the structural and procedural gaps that currently hinder effective AI compliance, particularly for actors with limited institutional capacity. The key gap-related takeaways from the reviewed articles are summarized in Table 3. As a systematic review, this paper does not offer prescriptive solutions; instead, it maps the conditions under which compliance becomes disproportionately burdensome. This diagnostic perspective serves as a conceptual foundation for targeted intervention in future work.

5. Suggestions for Future Research

The literature reveals an unmet need for governance instruments that are minimal (in terms of administrative overhead), auditable (traceable and transparent), and secure (robust against adversarial risk). Such tools should prioritize legal sufficiency as a baseline over aspirational ethics, particularly for low-capacity actors. The current global regulatory environment lacks interoperable, role-specific compliance tools that can be embedded into technical systems and institutional workflows [43], and cloud-native, plug-and-play governance modules have been proposed to support compliance among less mature actors [36]. These perspectives support the case for designing compliance frameworks that serve as both legal instruments and scalable infrastructure. The literature also calls for enhanced meta-regulatory coordination, including cross-sectoral harmonization, particularly where the EU AI Act and the GDPR impose overlapping but potentially contradictory obligations [32,44]. Without integrated regulatory guidance or shared compliance tools, fragmented governance will persist. More broadly, the literature indicates that AI compliance is not a binary state but a gradient ranging from strict legal sufficiency to aspirational ethical governance. While this review identifies and systematizes the key obstacles to proportional and auditable compliance, future research should address these gaps by designing, operationalizing, and evaluating lightweight, role-specific compliance frameworks and tools that low-capacity actors can realistically adopt.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jcp5040101/s1. The PRISMA checklist is attached.

Author Contributions

Conceptualization, W.W.F.; methodology, W.W.F.; validation, W.W.F.; writing—original draft preparation, W.W.F.; writing—review and editing, W.W.F. and M.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A Survey of Large Language Models. arXiv 2023, arXiv:2303.18223.

2. Iyelolu, T.V.; Agu, E.E.; Idemudia, C.; Ijomah, T.I. Driving SME Innovation with AI Solutions: Overcoming Adoption Barriers and Future Growth Opportunities. Int. J. Sci. Technol. Res. Arch. 2024, 7, 036–054.

3. Jafarzadeh, P.; Vähämäki, T.; Nevalainen, P.; Tuomisto, A.; Heikkonen, J. Supporting SME Companies in Mapping out AI Potential: A Finnish AI Development Case. J. Technol. Transf. 2024, 50, 1016–1035.

4. OECD. Recommendation of the Council on Artificial Intelligence; OECD Publishing: Paris, France, 2019.

5. NIST AI 100-1; Artificial Intelligence Risk Management Framework (AI RMF 1.0). National Institute of Standards and Technology (NIST): Gaithersburg, MD, USA, 2023.

6. ISO/IEC 42001:2023; Information Technology—Artificial Intelligence—Management System. International Organization for Standardization (ISO): Geneva, Switzerland, 2023.

7. Golpayegani, D.; Pandit, H.J.; Lewis, D. Comparison and Analysis of 3 Key AI Documents: EU’s Proposed AI Act, Assessment List for Trustworthy AI (ALTAI), and ISO/IEC 42001 AI Management System. In Artificial Intelligence and Cognitive Science; Longo, L., O’Reilly, R., Eds.; Communications in Computer and Information Science; Springer Nature: Cham, Switzerland, 2023; Volume 1662, pp. 189–200. ISBN 978-3-031-26437-5.

8. European Commission, Directorate-General for Communications Networks, Content and Technology. The Assessment List for Trustworthy Artificial Intelligence (ALTAI) for Self-Assessment; Publications Office of the European Union: Luxembourg, 2020.

9. Madhavan, K.; Yazdinejad, A.; Zarrinkalam, F.; Dehghantanha, A. Quantifying Security Vulnerabilities: A Metric-Driven Security Analysis of Gaps in Current AI Standards. arXiv 2025, arXiv:2502.08610.

10. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. BMJ 2021, 372, n71.

11. Kalodanis, K.; Rizomiliotis, P.; Feretzakis, G.; Papapavlou, C.; Anagnostopoulos, D. High-Risk AI Systems—Lie Detection Application. Future Internet 2025, 17, 26.

12. Li, Z. AI Ethics and Transparency in Operations Management: How Governance Mechanisms Can Reduce Data Bias and Privacy Risks. J. Appl. Econ. Policy Stud. 2024, 13, 89–93.

13. Rintamaki, T.; Pandit, H.J. Developing an Ontology for AI Act Fundamental Rights Impact Assessments. arXiv 2024, arXiv:2501.10391.

14. Golpayegani, D. Semantic Frameworks to Support the EU AI Act’s Risk Management and Documentation. Ph.D. Thesis, Trinity College Dublin, Dublin, Ireland, 2024.

15. Bhouri, H. Navigating Data Governance: A Critical Analysis of European Regulatory Framework for Artificial Intelligence. In Recent Advances in Public Sector Management; IntechOpen: London, UK, 2025; p. 171.

16. Kriebitz, A.; Corrigan, C.; Boch, A.; Evans, K.D. Decoding the EU AI Act in the Context of Ethics and Fundamental Rights. In The Elgar Companion to Applied AI Ethics; Edward Elgar Publishing Ltd.: Cheltenham, Gloucestershire, UK, 2024; pp. 123–152. ISBN 1-80392-824-7.

17. Sun, N.; Miao, Y.; Jiang, H.; Ding, M.; Zhang, J. From Principles to Practice: A Deep Dive into AI Ethics and Regulations. arXiv 2024, arXiv:2412.04683.

18. Karami, A. Artificial Intelligence Governance in the European Union. J. Electr. Syst. 2024, 20, 2706–2720.

19. Iyer, V.; Manshad, M.; Brannon, D. A Value-Based Approach to AI Ethics: Accountability, Transparency, Explainability, and Usability. Merc. Neg. 2025, 26, 3–12.

20. Aiyankovil, K.G.; Lewis, D. Harmonizing AI Data Governance: Profiling ISO/IEC 5259 to Meet the Requirements of the EU AI Act. In Legal Knowledge and Information Systems; IOS Press: Amsterdam, The Netherlands, 2024; pp. 363–365.

21. Ashraf, Z.A.; Mustafa, N. AI Standards and Regulations. In Intersection of Human Rights and AI in Healthcare; IGI Global Scientific Publishing: Hershey, PA, USA, 2025; pp. 325–352.

22. Comeau, D.S.; Bitterman, D.S.; Celi, L.A. Preventing Unrestricted and Unmonitored AI Experimentation in Healthcare through Transparency and Accountability. npj Digit. Med. 2025, 8, 42.

23. Scherz, P. Principles and Virtues in AI Ethics. J. Mil. Ethics 2024, 23, 251–263.

24. Ho, C.-Y. A Risk-Based Regulatory Framework for Algorithm Auditing: Rethinking “Who,” “When,” and “What”. Tenn. J. Law Policy 2025, 17, 3.

25. Janssen, M. Responsible Governance of Generative AI: Conceptualizing GenAI as Complex Adaptive Systems. Policy Soc. 2025, 44, puae040.

26. Al-Omari, O.; Alyousef, A.; Fati, S.; Shannaq, F.; Omari, A. Governance and Ethical Frameworks for AI Integration in Higher Education: Enhancing Personalized Learning and Legal Compliance. J. Ecohumanism 2025, 4, 80–86.

27. Proietti, S.; Magnani, R. Assessing AI Adoption and Digitalization in SMEs: A Framework for Implementation. arXiv 2025, arXiv:2501.08184.

28. Thoom, S.R. Lessons from AI in Finance: Governance and Compliance in Practice. Int. J. Sci. Res. Arch. 2025, 14, 1387–1395.

29. Dimitrios, Z.; Petros, S. Towards Responsible AI: A Framework for Ethical Design Utilizing Deontic Logic. Int. J. Artif. Intell. Tools 2025, 33, 2550003.

30. Ajibesin, A.A.; Çela, E.; Vajjhala, N.R.; Eappen, P. Future Directions and Responsible AI for Social Impact. In AI for Humanitarianism; CRC Press: Boca Raton, FL, USA, 2025; pp. 206–220.

31. Meding, K. It’s Complicated. The Relationship of Algorithmic Fairness and Non-Discrimination Regulations in the EU AI Act. arXiv 2025, arXiv:2501.12962.

32. Busch, F.; Geis, R.; Wang, Y.-C.; Kather, J.N.; Khori, N.A.; Makowski, M.R.; Kolawole, I.K.; Truhn, D.; Clements, W.; Gilbert, S.; et al. AI Regulation in Healthcare around the World: What Is the Status Quo? medRxiv 2025, medRxiv:2025.01.25.25321061.

33. Palmiotto, F. The AI Act Roller Coaster: The Evolution of Fundamental Rights Protection in the Legislative Process and the Future of the Regulation. Eur. J. Risk Regul. 2025, 16, 770–793.

34. Liang, P. Leveraging Artificial Intelligence in Regulatory Technology (RegTech) for Financial Compliance. Appl. Comput. Eng. 2024, 93, 166–171.

35. Paolini E Silva, M.; Tamo-Larrieux, A.; Ammann, O. AI Literacy Under the AI Act: Tracing the Evolution of a Weakened Norm. OSF Prepr. 2025.

36. Babalola, O.; Adedoyin, A.; Ogundipe, F.; Folorunso, A.; Nwatu, C.E. Policy Framework for Cloud Computing: AI, Governance, Compliance and Management. Glob. J. Eng. Technol. Adv. 2024, 21, 114–126.

37. Gasser, U.; Almeida, V.A.F. A Layered Model for AI Governance. IEEE Internet Comput. 2017, 21, 58–62.

38. Heeks, R.; Renken, J. Data Justice for Development: What Would It Mean? Inf. Dev. 2018, 34, 90–102.

39. Safa, N.S.; Solms, R.V.; Furnell, S. Information Security Policy Compliance Model in Organizations. Comput. Secur. 2016, 56, 70–82.

40. Yeung, K.; Lodge, M. Algorithmic Regulation; Oxford University Press: Oxford, UK, 2019; ISBN 0-19-257543-0.

41. Popa, D.M. Frontrunner Model for Responsible AI Governance in the Public Sector: The Dutch Perspective. AI Ethics 2024, 5, 2789–2799.

42. Wei, K.; Ezell, C.; Gabrieli, N.; Deshpande, C. How Do AI Companies “Fine-Tune” Policy? Examining Regulatory Capture in AI Governance. AIES 2024, 7, 1539–1555.

43. Arnold, Z.; Schiff, D.S.; Schiff, K.J.; Love, B.; Melot, J.; Singh, N.; Jenkins, L.; Lin, A.; Pilz, K.; Enweareazu, O.; et al. Introducing the AI Governance and Regulatory Archive (AGORA): An Analytic Infrastructure for Navigating the Emerging AI Governance Landscape. AIES 2024, 7, 39–48.

44. Singh, L.; Randhelia, A.; Jain, A.; Choudhary, A.K. Ethical and Regulatory Compliance Challenges of Generative AI in Human Resources. In Generative Artificial Intelligence in Finance; Chelliah, P.R., Dutta, P.K., Kumar, A., Gonzalez, E.D.R.S., Mittal, M., Gupta, S., Eds.; Wiley: Hoboken, NJ, USA, 2025; pp. 199–214. ISBN 978-1-394-27104-7.

45. ISO/IEC 27001:2022; Information Security, Cybersecurity and Privacy Protection—Information Security Management Systems—Requirements. International Organization for Standardization (ISO): Geneva, Switzerland, 2022.

46. ISO 9001:2015; Quality Management Systems—Requirements. International Organization for Standardization (ISO): Geneva, Switzerland, 2015.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).