1. Introduction

In recent years, Ecuador has experienced accelerated growth in the use of digital technologies, driven by the expansion of mobile internet, online banking, and the digitalization of public services. At the same time, artificial intelligence (AI) has advanced rapidly. It is now available as a personal assistant both on mobile devices and directly via the internet, and it is complemented by machine learning (a subset of AI that uses algorithms and statistical models to let systems learn from and adapt to data without explicit programming) and natural language processing (NLP), which gives AI systems the ability to understand, interpret, generate, and interact with human language. These same technologies have increased exposure to cybercrimes, which are becoming more sophisticated as criminals incorporate AI into their processes. According to data from the Attorney General’s Office, 5237 cybercrime reports were filed in 2021, a 129% increase compared to 2020, as shown in the 2021 Cybercrime Reports in Ecuador.

Attackers have leveraged these AI techniques to develop more sophisticated cyberthreats, including financial fraud, phishing, and ransomware, which lead the statistics, as well as intelligent malware and automated vulnerability exploitation. These AI-driven attacks can be more difficult to detect and defend against in the cloud, posing significant challenges to traditional security and prevention measures and affecting individuals, businesses, and public institutions alike.

Cybercriminals in Ecuador and around the world are using AI to build sophisticated financial fraud schemes, such as generating fake transactions, deepfake-based phishing, and manipulating banking algorithms. One example of this malicious use is deepfakes that impersonate executives to authorize illegal transfers. There are also scams using AI-powered chatbots that mimic legitimate services, such as fake job offers, loans, or banking services. Here, AI allows cybercriminals to personalize messages for greater credibility; for example, WhatsApp bots trick users into handing over personal data.

Many phishing attacks use AI to generate increasingly convincing fake emails and web pages, for example by automatically translating messages into Spanish with local slang or idioms, as in emails that mimic Ecuadorian banks (Pichincha, Produbanco, etc.) and contain fraudulent links.

Similarly, cybercriminals use AI to identify vulnerabilities in Ecuadorian companies and public institutions (healthcare, SMEs, banking, etc.) and then launch ransomware with greater precision, with the aim of demanding cryptocurrency payments. An example is the attack on Sercop in January 2025, in which critical data was encrypted [1].

AI has revolutionized cybersecurity in two directions. It enables early threat detection through predictive analytics and automated responses, but it also allows cybercriminals to mount more precise attacks, using machine learning to optimize phishing strategies and create highly convincing fake messages and profiles. Malware development is also being automated, producing malware that evades traditional antivirus programs and adapts to system defenses. Finally, generative AI is used for deepfakes and phishing, creating fake audio and video content for extortion or disinformation.

The Ecuadorian State has focused on updating the COIP. According to the National Assembly:

“Within the framework of the draft Organic Law Reforming the Comprehensive Organic Criminal Code (COIP), the Justice and State Structure Commission took note of twelve bills related to this matter” [2]. “Among other aspects, the reforms address issues such as the expiration of pretrial detention, cyberterrorism, cyber sabotage, and digital identity theft…”

Asamblea Nacional del Ecuador [2]

“These reforms are among the bills prioritized by the commission for the 2025–2027 legislative period. Of the general list, 60 initiatives are under review for initial debate, to which the 12 new bills are being incorporated”

Asamblea Nacional del Ecuador [2]

These crimes must therefore be defined precisely and clearly to ensure their correct classification and legal application.

2. Materials and Methods

Methodological Process

The study used a mixed-methods approach, structured in clear phases that guided the research from data collection to interpretation (Table 1).

Phase 1: Documentary and Regulatory Analysis.

The analysis focused on a systematic review of secondary sources. The selection criteria for academic literature included: thematic relevance (AI applied to cybercrime or cybersecurity), timeliness (publications from the last 5 years), and relevance to the Latin American or Ecuadorian context. For the legal framework, the COIP and the LOPD were analyzed and compared with the EU (GDPR) and Spanish frameworks using comparative analysis matrices that evaluated criteria such as explicit classification of AI crimes, compliance with international standards (NIST, ISO), and data protection and incident response mechanisms.
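The comparative analysis matrices described above can be sketched as a simple coverage table: each framework is checked against the same criteria, and the unmet criteria are listed as gaps. This is a minimal sketch; the criterion names follow the text, while the class and function names and the "Framework A/B" values in the usage example are hypothetical placeholders, not the study's findings.

```python
from dataclasses import dataclass

# Criteria taken from the comparative analysis described in Phase 1.
CRITERIA = (
    "explicit_ai_crime_classification",
    "international_standards_compliance",
    "data_protection_and_incident_response",
)


@dataclass
class FrameworkAssessment:
    name: str
    # Maps each criterion to True (addressed) or False (not addressed).
    meets: dict[str, bool]

    def coverage(self) -> float:
        """Share of criteria the framework addresses, between 0.0 and 1.0."""
        return sum(self.meets.get(c, False) for c in CRITERIA) / len(CRITERIA)

    def gaps(self) -> list[str]:
        """Criteria the framework does not address."""
        return [c for c in CRITERIA if not self.meets.get(c, False)]


def comparison_matrix(frameworks: list[FrameworkAssessment]) -> list[tuple[str, float, list[str]]]:
    """Rows of (framework, coverage, gaps), sorted from most to least coverage."""
    rows = [(f.name, f.coverage(), f.gaps()) for f in frameworks]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    demo = [
        FrameworkAssessment("Framework A", {c: True for c in CRITERIA}),
        FrameworkAssessment("Framework B", {CRITERIA[0]: False, CRITERIA[1]: True, CRITERIA[2]: True}),
    ]
    for name, cov, missing in comparison_matrix(demo):
        print(f"{name}: coverage={cov:.0%}, gaps={missing}")
```

A missing criterion is treated as "not addressed", so incomplete assessments surface as gaps rather than being silently skipped.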

Phase 2: Empirical Analysis.

This phase consisted of the collection and analysis of primary data. Two instruments were designed and applied: a survey to assess perceptions and knowledge, and a semi-structured interview guide for experts. The survey analysis process was quantitative, using descriptive statistics (frequencies, percentages) to identify trends. For the interviews, qualitative thematic analysis was used, transcribing and coding responses to identify emerging categories and common patterns regarding challenges, threats, and mitigation strategies.
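The descriptive statistics used for the survey (frequencies and percentages per answer) can be computed as in the minimal sketch below. The function name and the example answers are hypothetical; the real inputs would be the responses to each survey item.

```python
from collections import Counter


def frequency_table(responses: list[str]) -> list[tuple[str, int, float]]:
    """Return (answer, count, percentage) rows, most frequent answer first."""
    total = len(responses)
    if total == 0:
        return []
    counts = Counter(responses)
    # Percentages are relative to all responses to the question.
    return [(answer, n, 100 * n / total) for answer, n in counts.most_common()]


if __name__ == "__main__":
    # Hypothetical answers to a single-choice item such as "level of knowledge about AI".
    answers = ["intermediate", "basic", "intermediate", "advanced", "intermediate"]
    for answer, n, pct in frequency_table(answers):
        print(f"{answer:<13} {n:>3} {pct:5.1f}%")
```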

Phase 3: Synthesis and Integration.

In this phase, the findings from the previous phases were triangulated. The analysis process cross-referenced quantitative data (surveys) with qualitative data (interviews) and the results of documentary and comparative analysis. This allowed for a comparison of the perception of the problem with the regulatory and technical reality, validating the conclusions and supporting the proposed mitigation strategies with solid, multifaceted evidence.

3. Results

3.1. Review of Secondary Sources

3.1.1. Evolution of the Use of AI in Cybercrime Globally

Globally, artificial intelligence has transformed the cyberthreat landscape, evolving from simple automation tools to complex systems capable of learning and adapting to commit crimes with greater efficiency and proficiency. Cybercriminals now use machine learning techniques to optimize phishing attacks, generate hyper-realistic deepfakes for impersonation and extortion purposes, and develop intelligent malware that evades traditional defenses [3]. Furthermore, AI enables the automated exploitation of vulnerabilities and the mass personalization of fraud, posing an unprecedented challenge to conventional security mechanisms [4]. This rapid evolution has made AI a critical enabler of modern cybercrime, demanding equally advanced responses in detection and prevention.

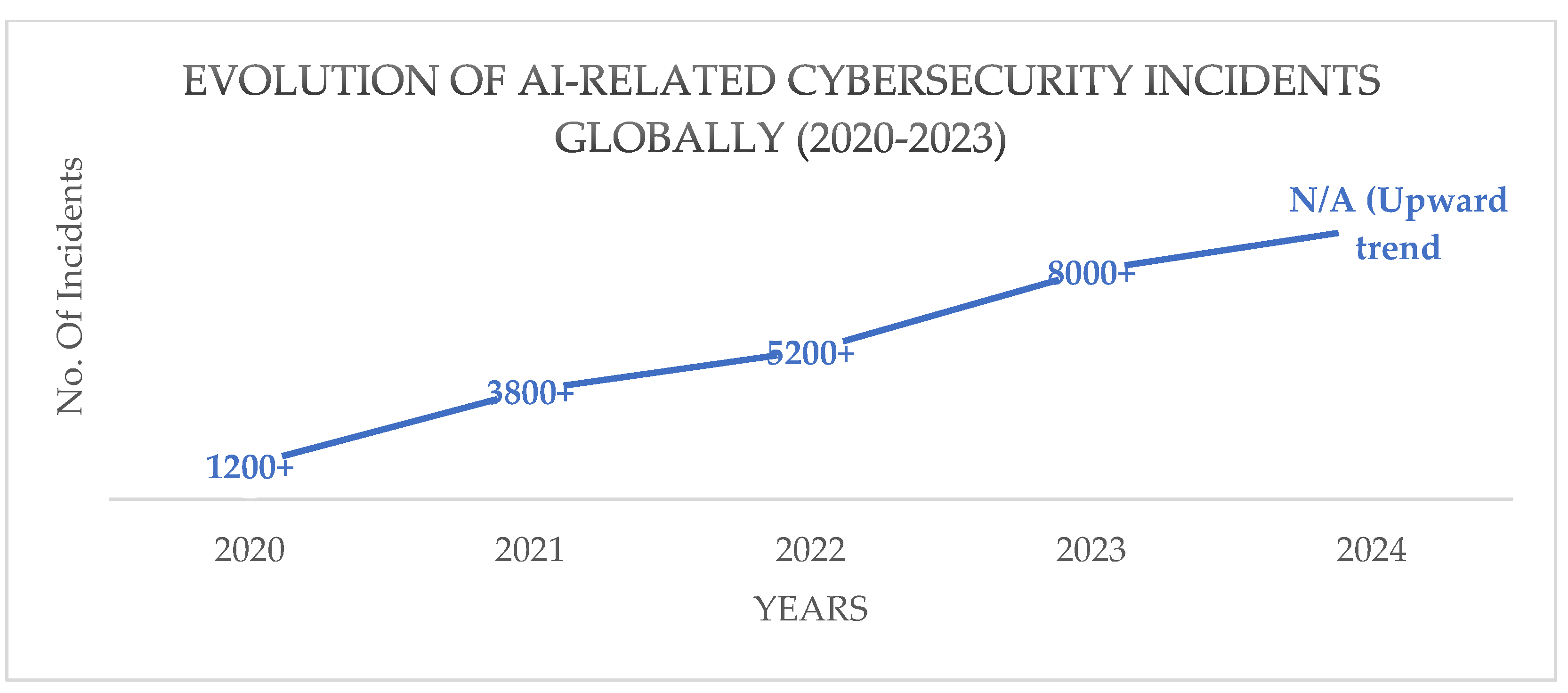

Below, we detail the evolution of AI-related cybersecurity incidents globally, using a data matrix (Table 2) and a line graph (Figure 1).

A matrix (Table 3) and bar chart (Figure 2) present the types of AI-related cybercrimes and the sectors most vulnerable to their impact.

A further matrix (Table 4) and bar chart (Figure 3) show the average economic impact of a security incident, in US dollars, and compare the average global cost with the average cost in the region under analysis.

3.1.2. Relevant Cases or Incidents in Latin America and Ecuador

In Latin America and Ecuador, the malicious use of AI is already a reality, with incidents illustrating its growing impact. Last year, Mexico fell victim to an AI-assisted ransomware attack against its public health system, which optimized the targeting of critical data and hampered its recovery [13]. In Ecuador, cases have been documented, such as the rise of banking fraud using voice deepfakes that impersonate individuals to authorize illegitimate transfers [14], as well as generative phishing campaigns that use AI to create highly personalized and persuasive messages in Spanish, leveraging local idioms to deceive unsuspecting victims [15]. Furthermore, the growing reliance on digital platforms has exposed critical vulnerabilities in data security, making them easier to exploit with AI-powered techniques [16]. These incidents reflect a regional and national trend toward more effective and difficult-to-detect cyberattacks.

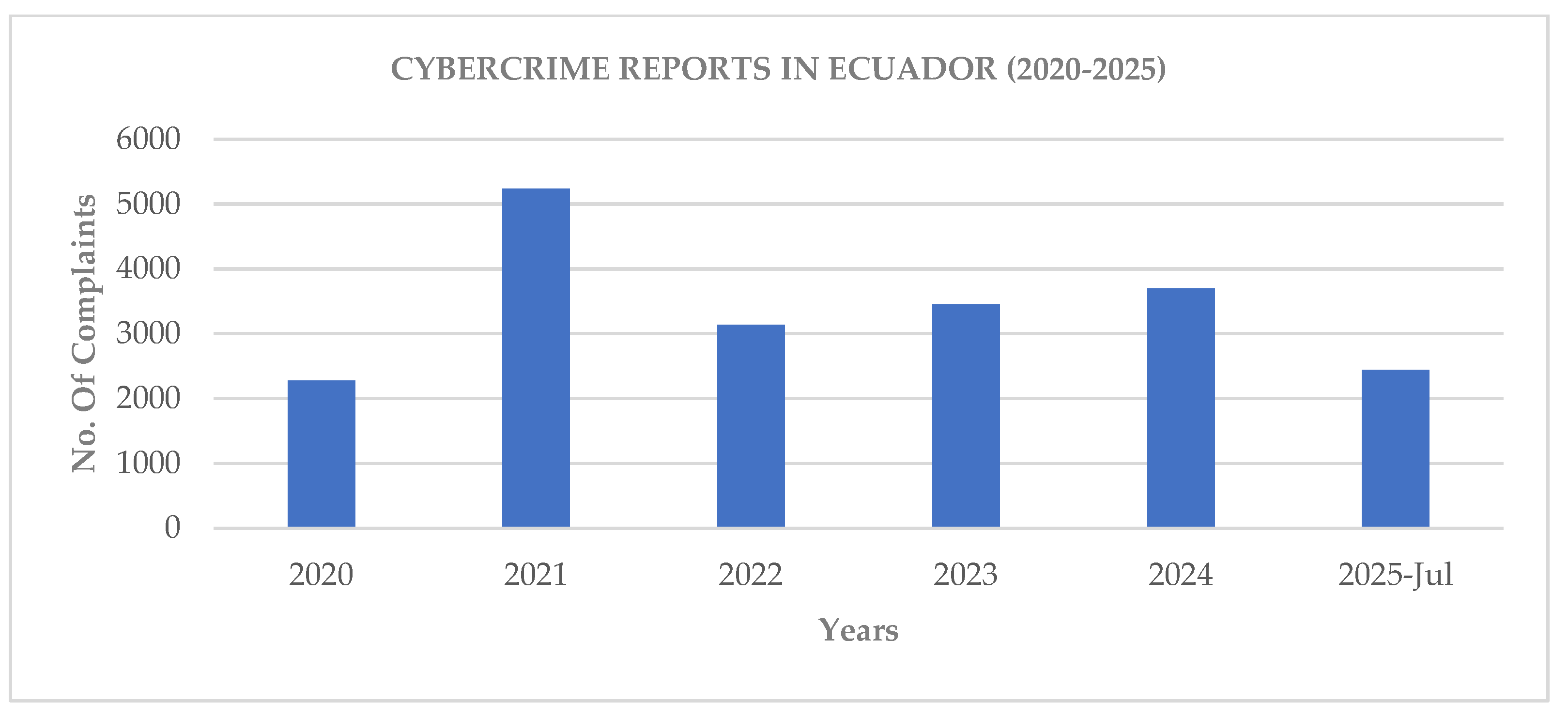

Below is a matrix (Table 5) and bar chart (Figure 4) showing the increase from 2020 to 2025 in crimes classified under the COIP. At the time of the study, these crimes were grouped only under the offense type “Fraudulent Appropriation by Electronic Means”. The increase between 2020 (COVID-19 pandemic) and 2021 (final stages of the COVID-19 pandemic) is notable: it reflects greater use of electronic channels for daily tasks and, consequently, attackers’ growing focus on those channels.
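The increases discussed here are relative year-over-year changes, (N_t − N_{t−1}) / N_{t−1} × 100. The minimal sketch below computes them for any yearly series and, as a consistency check, inverts the figures cited in the Introduction (5237 reports in 2021, a 129% increase over 2020), which imply a 2020 baseline of roughly 2287 reports. That baseline is derived arithmetic, not a value reported by the Attorney General’s Office, and the helper names are hypothetical.

```python
def pct_change(previous: float, current: float) -> float:
    """Relative change from `previous` to `current`, in percent."""
    if previous == 0:
        raise ValueError("previous value must be non-zero")
    return (current - previous) / previous * 100


def implied_previous(current: float, pct_increase: float) -> float:
    """Invert pct_change: the earlier value implied by a reported percent increase."""
    return current / (1 + pct_increase / 100)


def yearly_changes(series: dict[int, float]) -> dict[int, float]:
    """Percent change for each year relative to the preceding year in `series`."""
    years = sorted(series)
    return {y: pct_change(series[p], series[y]) for p, y in zip(years, years[1:])}


if __name__ == "__main__":
    # Figures from the Introduction: 5237 reports in 2021, +129% versus 2020.
    baseline_2020 = implied_previous(5237, 129)
    print(round(baseline_2020))
    print(round(pct_change(round(baseline_2020), 5237)))
```

The same `yearly_changes` helper could be applied to the 2020–2025 series behind Table 5.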

3.1.3. Current Legal Framework in Ecuador (Criminal Code, Data Protection Law, Cybersecurity Regulations)

The Ecuadorian legal framework attempts to respond to these challenges, albeit with significant limitations given the evolving nature of AI-driven crimes. The Comprehensive Organic Criminal Code (COIP) criminalizes offenses such as unauthorized access to computer systems (Art. 232) and digital identity theft (Reform Bill Art. 212.14), but lacks specific definitions for modern forms such as fraudulent deepfakes or malicious algorithmic manipulation. Meanwhile, the Organic Law on the Protection of Personal Data (LOPD) establishes security and confidentiality principles for data processing, requiring incident reporting within short timeframes; however, it does not explicitly address risks associated with the use of AI, such as the inference of sensitive data through automated analysis. At the cybersecurity level, although CERT-EC guidelines and references to international standards exist, Ecuador still lacks comprehensive legislation that combines technological risk management with advances in AI, leaving critical gaps in the protection of digital infrastructure and citizen privacy [18].

Below are two comparison matrices (Table 6 and Table 7) that compare the Comprehensive Organic Criminal Code (COIP) and the Organic Law on the Protection of Personal Data (LOPD) with their equivalents, the Spanish Penal Code and the EU General Data Protection Regulation (GDPR).

“The Global Cybersecurity Index (GCI) is a reliable benchmark that measures countries’ commitment to cybersecurity globally, raising awareness of the importance and different dimensions of the problem. Since cybersecurity has a broad scope, spanning many industries and diverse sectors, each country’s level of development or commitment is assessed based on five pillars: (i) Legal Measures, (ii) Technical Measures, (iii) Organizational Measures, (iv) Capacity Building, and (v) Cooperation, and then aggregated into an overall score”

ITU-Unión Internacional de Telecomunicaciones [19]

“Of the five GCI assessment pillars, the legal measures component analyzes the development and implementation of cybersecurity policy and regulatory frameworks”

ITU-Unión Internacional de Telecomunicaciones [19]

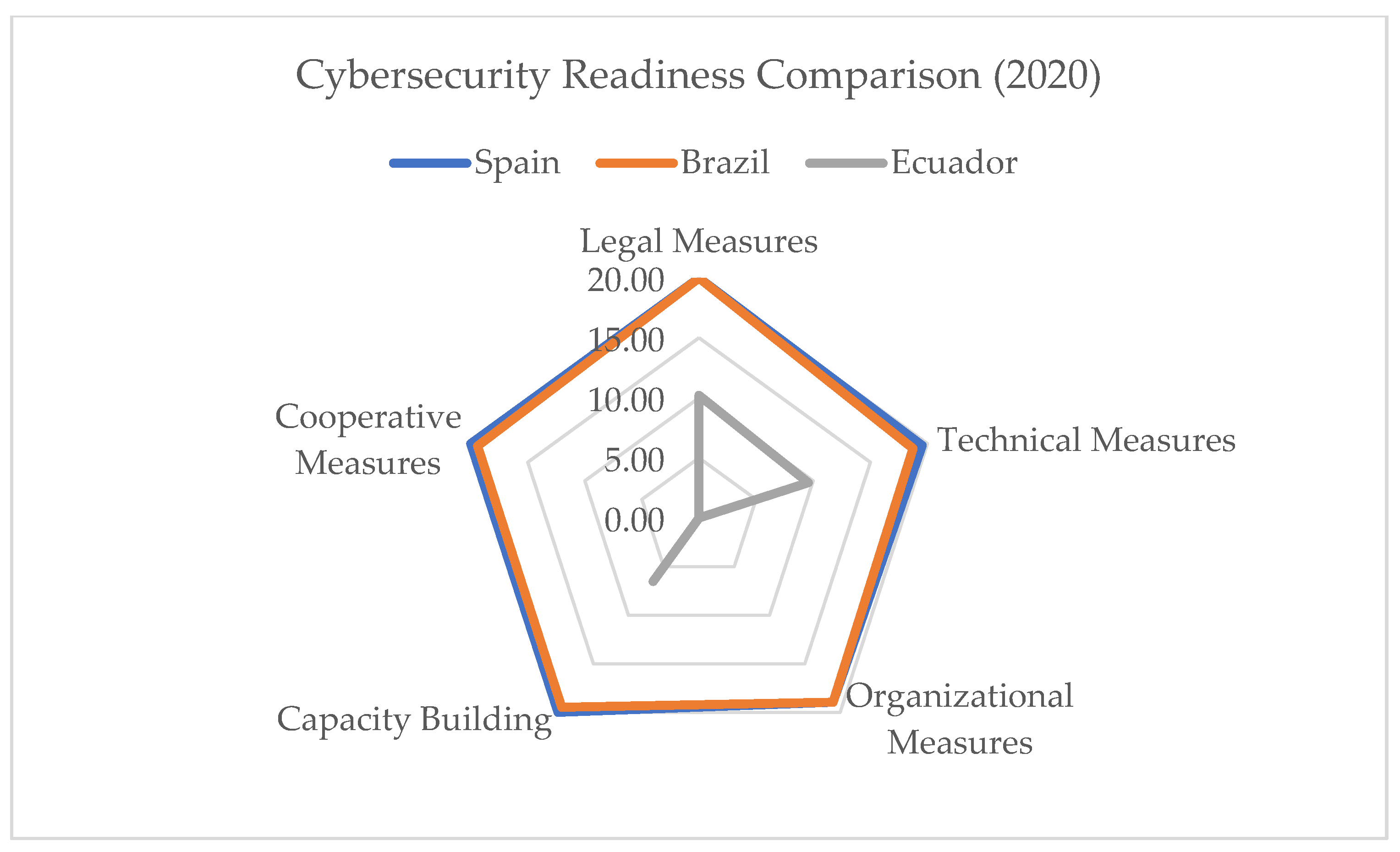

For this reason, Ecuador is compared with countries in the region (Brazil and Mexico), with Spain, the country on which the Ecuadorian LOPD and penal code are based, and with the United States, which leads the global ranking. The results of this comparison are shown in results matrices (Table 8, Table 9, Table 10 and Table 11) and area charts (Figure 5 and Figure 6).

Data for 2020 and 2024 have been selected for the comparative analysis, considering that Ecuador implemented key regulatory instruments after the reference year 2020. The National Cybersecurity Policy (2021) and the Organic Law on Personal Data Protection (2021), both issued after the initial period, constitute significant regulatory advances reflected in an improvement in the indices, observed both in the Cybersecurity Readiness Benchmark and in the Global Cybersecurity Index Score for 2024.
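The GCI quotation above describes five pillars aggregated into an overall score, and this comparison contrasts two editions. The minimal sketch below illustrates that aggregation and the per-pillar change between editions. It assumes equal weighting with each pillar scored from 0 to 20 (overall 0 to 100); this is a simplification, since the ITU methodology may weight its underlying indicators differently. The example scores are hypothetical, not Ecuador’s published results.

```python
PILLARS = ("legal", "technical", "organizational", "capacity_building", "cooperation")
MAX_PER_PILLAR = 20.0  # assumption: each pillar scored 0-20, overall 0-100


def overall_score(pillars: dict[str, float]) -> float:
    """Aggregate the five pillar scores into one overall score (equal weights assumed)."""
    missing = set(PILLARS) - set(pillars)
    if missing:
        raise KeyError(f"missing pillars: {sorted(missing)}")
    for name in PILLARS:
        if not 0 <= pillars[name] <= MAX_PER_PILLAR:
            raise ValueError(f"{name} score out of range: {pillars[name]}")
    return sum(pillars[name] for name in PILLARS)


def pillar_deltas(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Per-pillar change between two editions (e.g., 2020 and 2024)."""
    return {name: after[name] - before[name] for name in PILLARS}


if __name__ == "__main__":
    # Hypothetical scores for illustration; not actual ITU GCI results.
    y2020 = {"legal": 10.0, "technical": 8.0, "organizational": 6.0,
             "capacity_building": 7.0, "cooperation": 9.0}
    y2024 = {"legal": 16.0, "technical": 10.0, "organizational": 9.0,
             "capacity_building": 9.0, "cooperation": 14.0}
    print(overall_score(y2020), overall_score(y2024))
    print(pillar_deltas(y2020, y2024))
```

Reporting per-pillar deltas alongside the overall score makes it visible which pillars (for example, legal measures) drive an improvement.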

3.2. Design and Application of Empirical Instruments

As outlined in Phase 2 of the methodology, two instruments were designed and applied: a survey to assess perceptions and knowledge, analyzed quantitatively with descriptive statistics (frequencies, percentages) to identify trends, and a semi-structured interview guide for experts, whose responses were transcribed and coded through qualitative thematic analysis to identify emerging categories and common patterns regarding challenges, threats, and mitigation strategies.

3.3. Data Processing and Analysis

The results of the surveys and interviews shown in the graphs of this study should be interpreted considering the characteristics of the sample. The quantitative data, represented in figures below, reflect the responses from a survey conducted with 136 cybersecurity professionals (n = 136), where the 95% confidence intervals demarcate the margin of error for the percentages shown. This component is complemented by qualitative data from 12 interviews with cybersecurity experts, allowing for a more nuanced understanding of the perception of the phenomena investigated.
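To make the margin of error concrete, the sketch below computes a 95% confidence interval for a survey proportion. The text does not state which interval method was used; this example assumes the normal-approximation (Wald) interval, p ± 1.96·√(p(1 − p)/n), and treats the sample as if it were a simple random sample. Under those assumptions, the 57% intermediate-knowledge figure with n = 136 carries a margin of roughly ±8.3 percentage points (about 48.7% to 65.3%), and the 71% figure roughly ±7.6 points.

```python
import math


def wald_interval(p: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Normal-approximation (Wald) confidence interval for a proportion.

    p: observed proportion (0-1); n: sample size; z: 1.96 for ~95% confidence.
    The bounds are clipped to [0, 1].
    """
    if not 0 <= p <= 1 or n <= 0:
        raise ValueError("p must be in [0, 1] and n must be positive")
    margin = z * math.sqrt(p * (1 - p) / n)
    return max(0.0, p - margin), min(1.0, p + margin)


if __name__ == "__main__":
    # 57% reported intermediate AI knowledge; 71% see AI as both opportunity and threat.
    for label, p in (("intermediate knowledge", 0.57), ("perceived duality", 0.71)):
        low, high = wald_interval(p, 136)
        print(f"{label}: {p:.0%} (95% CI {low:.1%} to {high:.1%})")
```

For proportions close to 0% or 100%, a Wilson score interval would be a more robust choice than the Wald interval shown here.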

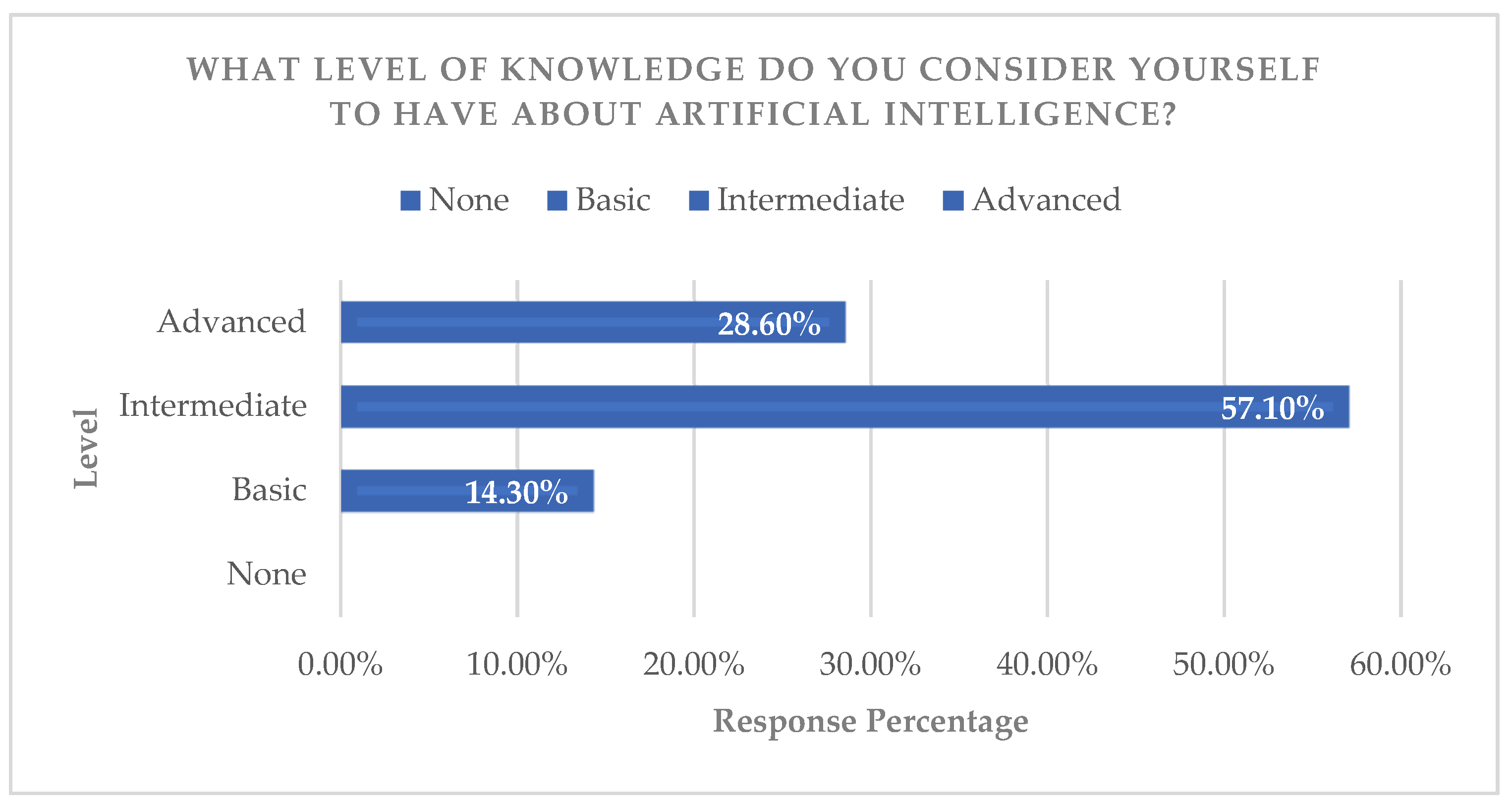

3.3.1. Knowledge and Perception of AI in Cybersecurity

Based on the survey data, the “level of knowledge” indicator revealed that 57% of participants have intermediate knowledge of AI (Figure 7). Regarding the “perceived duality” criterion, 71% consider AI to be both an opportunity and a threat (Figure 8). This reveals a nascent but critical awareness of the issue, which can serve as a basis for training initiatives, although it also highlights the paradox that the key technology for defense is also a vector of threat.

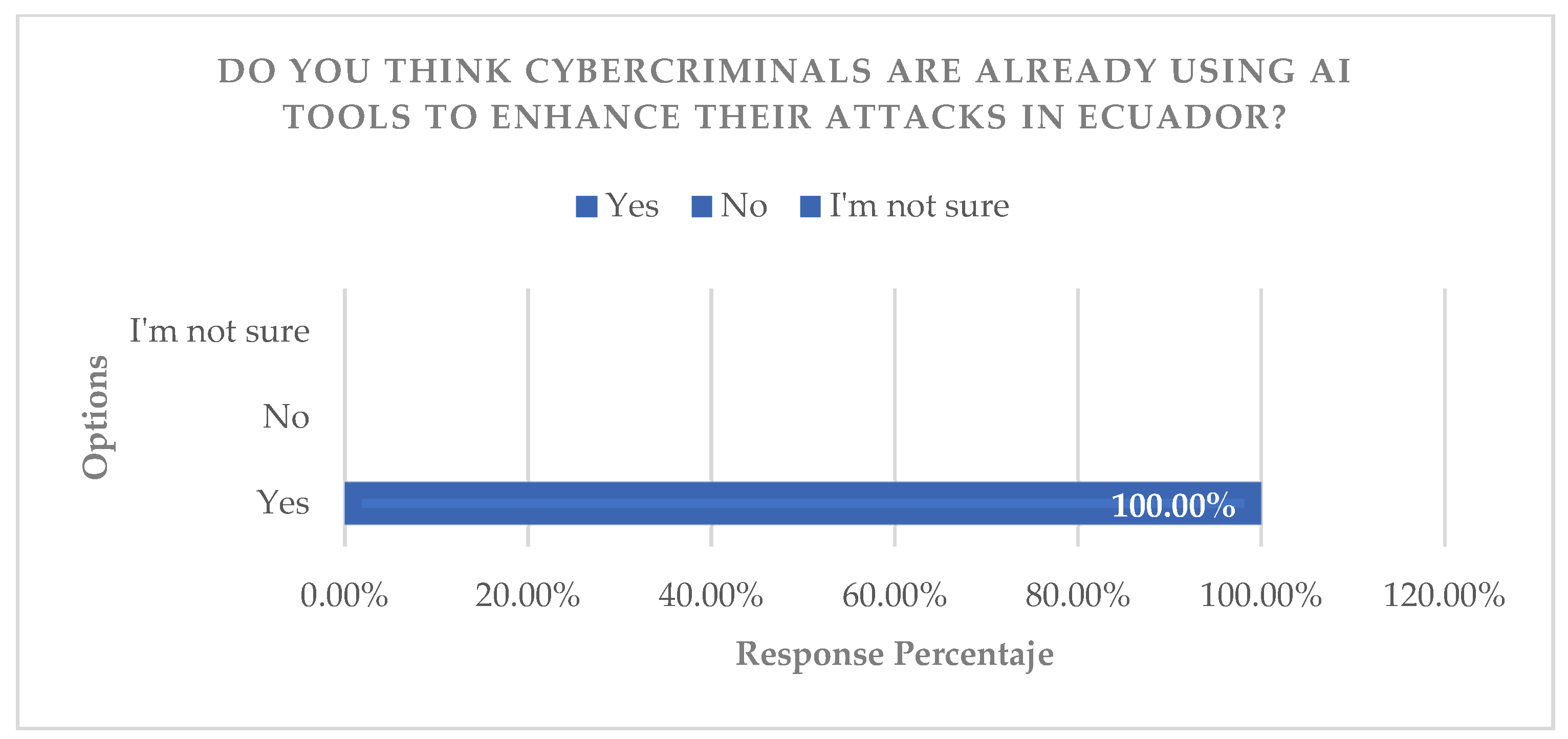

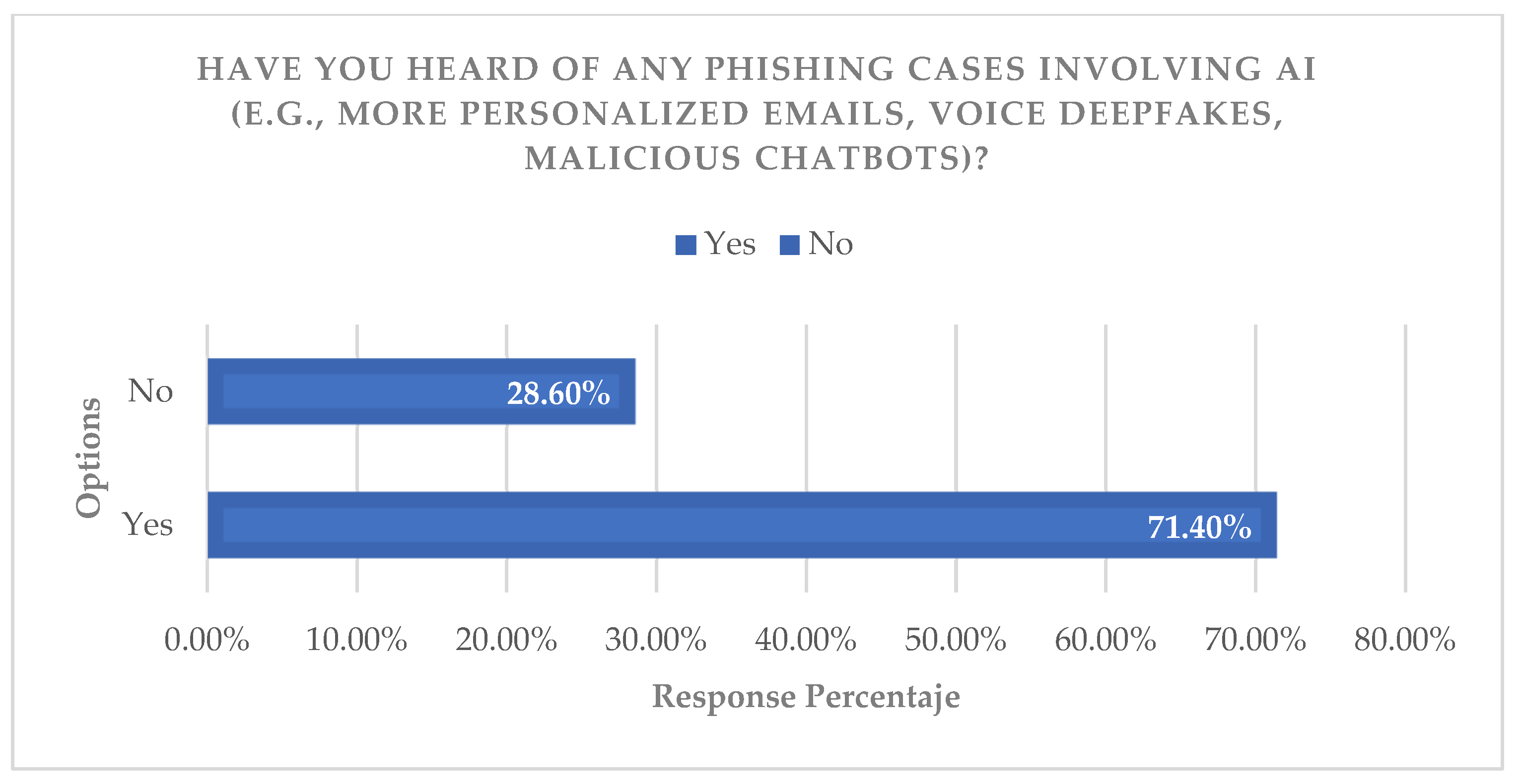

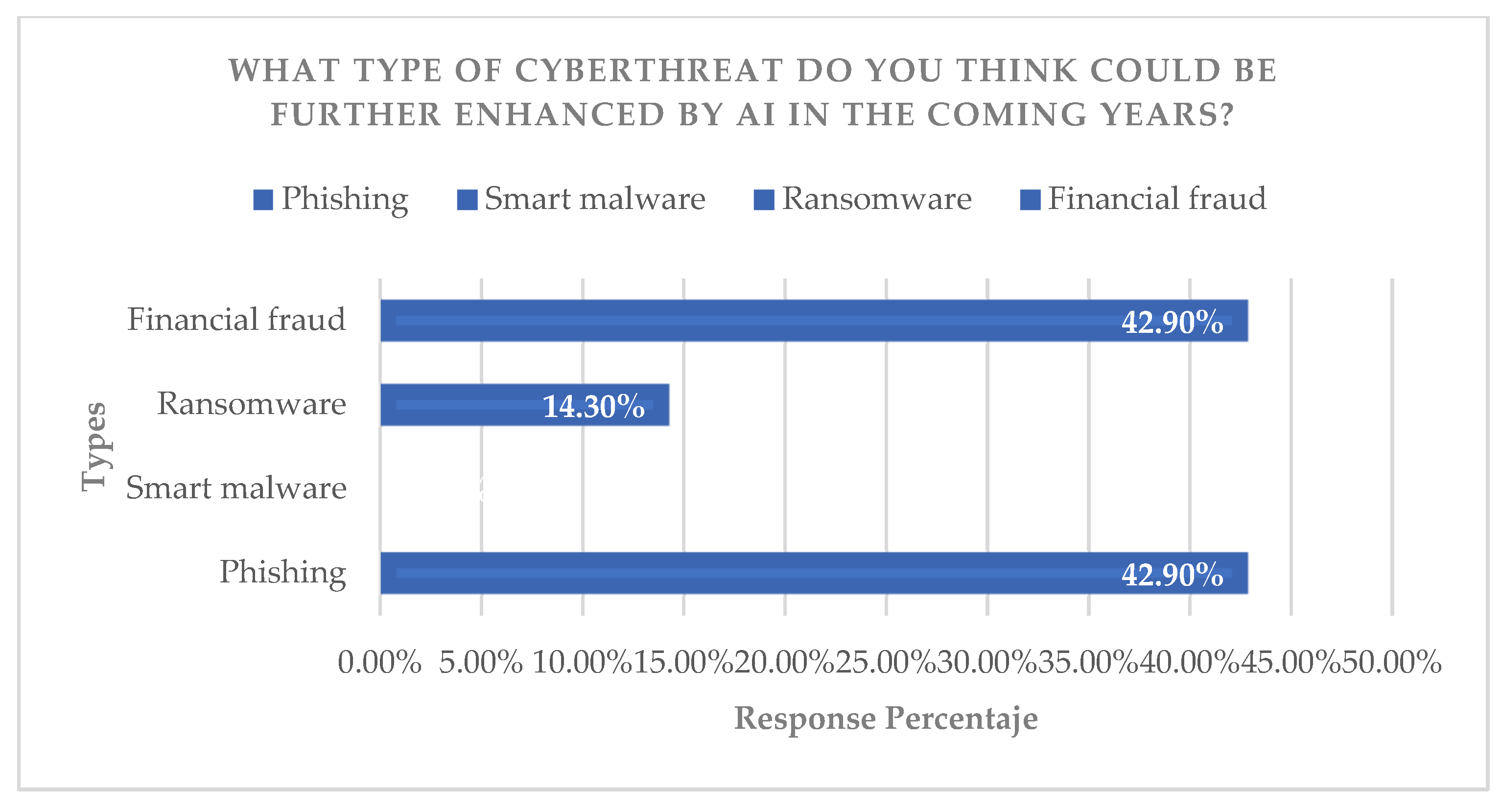

3.3.2. Use of AI in Cybercrime in Ecuador

Regarding the “threat identification” indicator, most respondents reported having heard of cases in which cybercriminals are already using AI in Ecuador. The interviews, analyzed using perception and visualization criteria, show that survey respondents and experts in the field support the hypothesis that international threats apply to the local context, and they stress the urgency of adopting advanced countermeasures (Figure 9, Figure 10, Figure 11 and Figure 12).

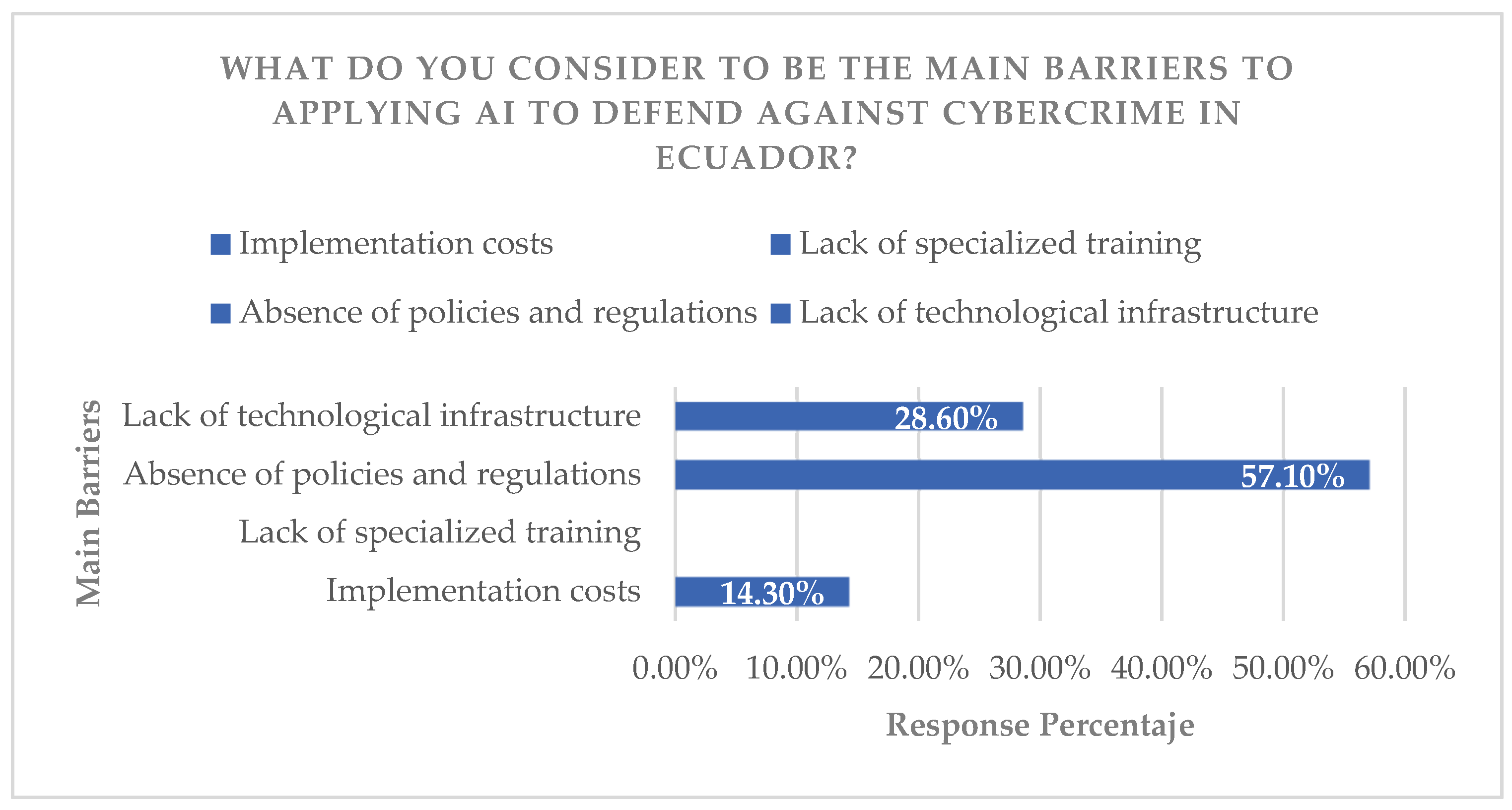

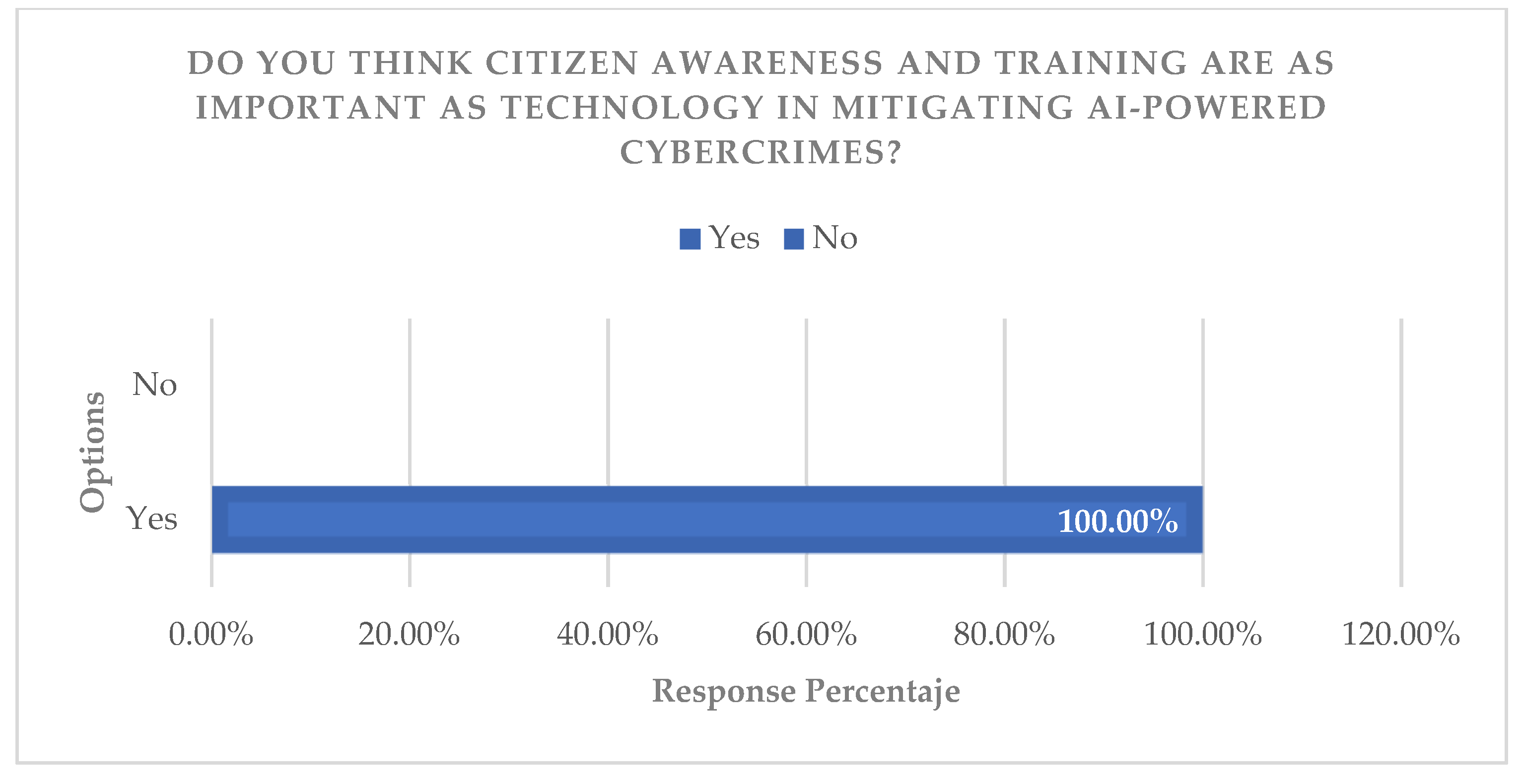

3.3.3. Security Gaps Identified

The analysis of responses under the criteria of “technical, regulatory, and educational gaps” identified the main barriers as a lack of infrastructure (technical), outdated legal frameworks (regulatory), and limited training (educational). This demonstrates a systemic and multifactorial vulnerability. It is argued that the convergence of these gaps amplifies the risk, making mere technological updating insufficient without parallel advances in regulations and human capital (Figure 13 and Figure 14).

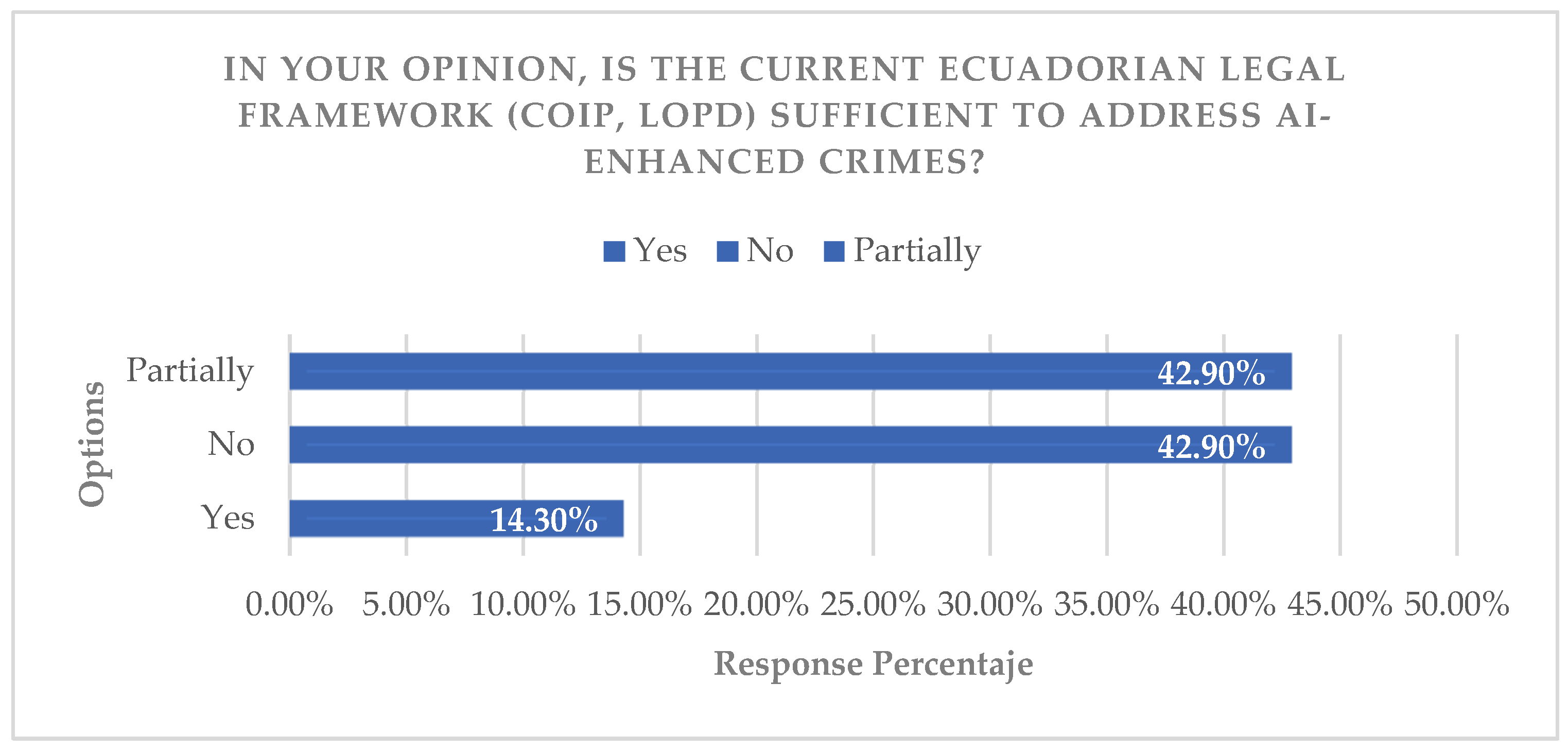

3.3.4. Adaptation of the Legal Framework

A comparative analysis using a matrix of legal criteria (crime classification, sanctions, data protection), as shown in the comparison matrices of the COIP, the Spanish Criminal Code, the LOPD, and the GDPR, revealed substantial gaps in the COIP and the LOPD relative to reference frameworks such as the Spanish one and the GDPR, specifically in the criminalization of deepfakes and in AI governance. This finding supports the conclusion that the Ecuadorian framework is insufficient and underscores the need for a legal reform that incorporates specific definitions and is harmonized with international standards to enable the effective prosecution of these crimes (Figure 15).

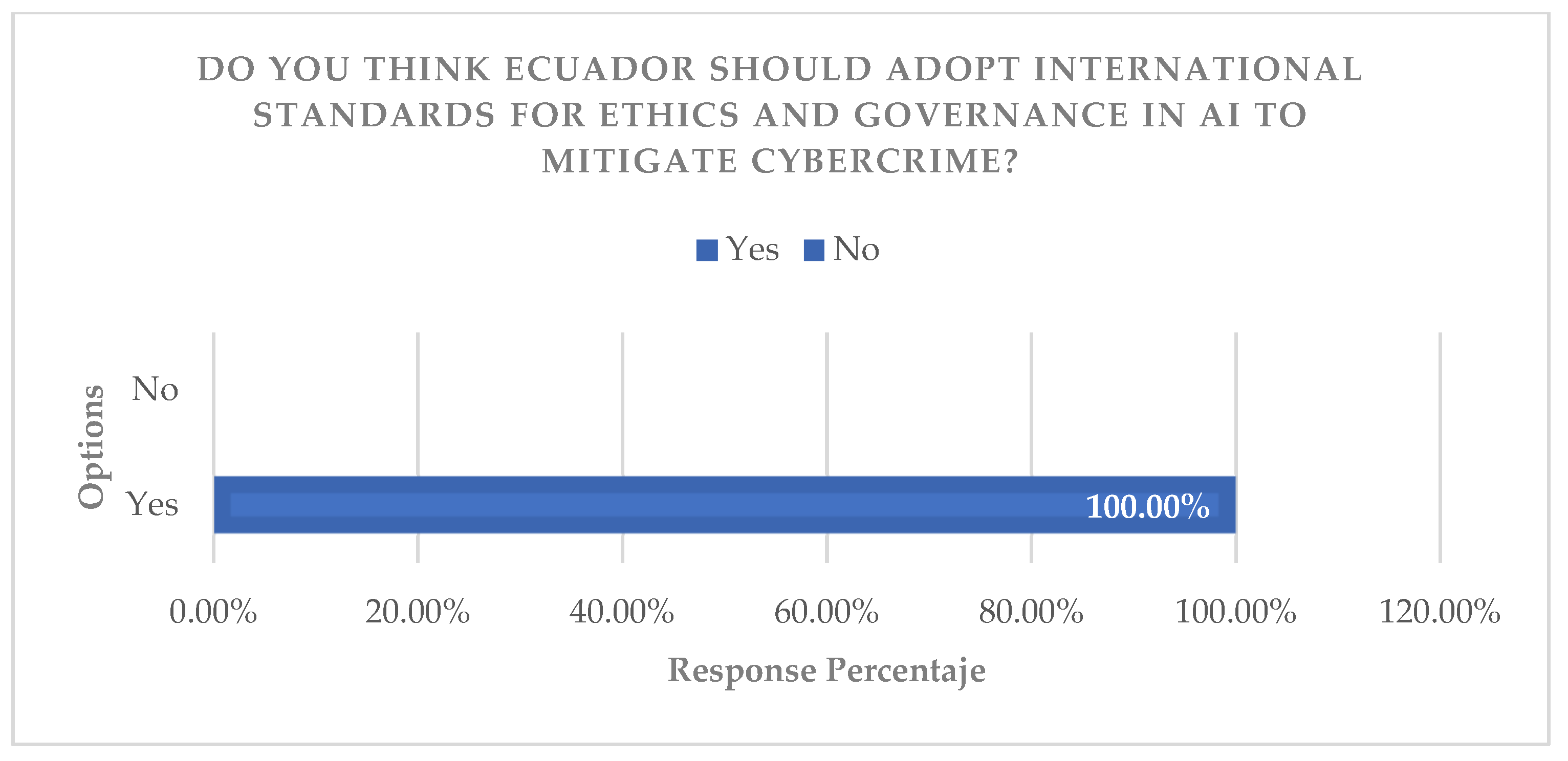

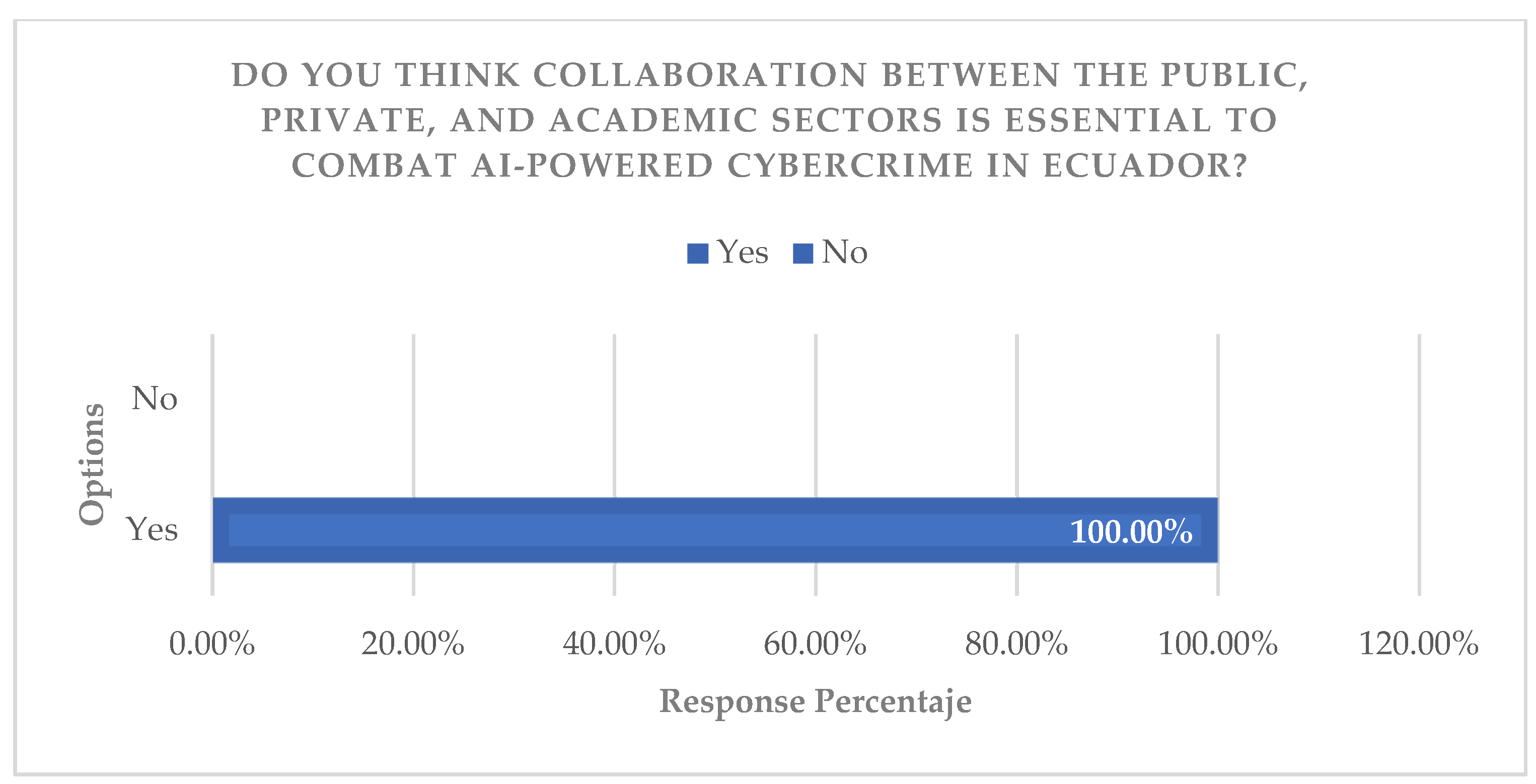

3.3.5. Mitigation Strategies

The analysis of expert interviews, coded under the “priority strategies” criterion, identified consensus on the need to implement threat hunting, adopt frameworks such as NIST/ISO, and strengthen training. Triangulation of these findings with the results of the documentary analysis confirms that the most robust strategy is a comprehensive one, combining technology, regulations, and education. It is argued that the adoption of international standards is not only a technical best practice, but a requirement for national competitiveness and resilience (Figure 16, Figure 17, Figure 18 and Figure 19).
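The consensus reported for the “priority strategies” code can be expressed as the share of the 12 interviewed experts whose transcripts contain each code. The text does not define how consensus was operationalized, so the minimal sketch below assumes a hypothetical threshold (at least half of the experts); the code labels and example transcripts are also hypothetical.

```python
from collections import Counter


def code_prevalence(coded_interviews: list[set[str]]) -> dict[str, float]:
    """Share of interviews in which each code appears (counted once per interview)."""
    n = len(coded_interviews)
    if n == 0:
        return {}
    counts = Counter(code for codes in coded_interviews for code in codes)
    return {code: count / n for code, count in counts.items()}


def consensus_codes(coded_interviews: list[set[str]], threshold: float = 0.5) -> list[str]:
    """Codes mentioned by at least `threshold` of the experts, most prevalent first."""
    prevalence = code_prevalence(coded_interviews)
    selected = [code for code, share in prevalence.items() if share >= threshold]
    return sorted(selected, key=lambda code: (-prevalence[code], code))


if __name__ == "__main__":
    # Hypothetical coded transcripts; codes mirror the strategies named in the text.
    interviews = [
        {"threat_hunting", "nist_iso", "training"},
        {"nist_iso", "training"},
        {"threat_hunting", "training"},
        {"legal_reform"},
    ]
    print(consensus_codes(interviews))
```

Representing each interview as a set means an expert who mentions a strategy several times still counts once, which matches a consensus-among-experts reading.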

3.4. Preparation of Mitigation Proposal

Faced with the rise in AI-enhanced cybercrimes in both Ecuador and Latin America and the identified gaps, Ecuador urgently needs a realistic and scalable strategy. The proposal should focus on three pillars: regulatory modernization, technical and educational strengthening, and public–private–academic cooperation.

3.4.1. Modernization of the Legal Framework and Adoption of Standards

It is urgent to reform the COIP to expressly criminalize crimes committed with AI, such as fraudulent deepfakes and malicious algorithmic manipulation, following models such as Spain’s, which already criminalizes these behaviors. Likewise, the mandatory adoption of international standards such as ISO/IEC 27001 [21] and the NIST Cybersecurity Framework by public entities and critical suppliers should be promoted. This would close legal loopholes and align technical defenses with global best practices, building on the progress reflected in the GCI 2024.

3.4.2. Capacity Building and Adaptive Infrastructure

To overcome technical and educational barriers, the creation of a National Cybersecurity and AI Center is proposed, led by a competent entity in the field and focused on:

Mass training and certification of professionals in defensive AI tools, for example, threat hunting and malware analysis.

Implementation of low-cost, open-source solutions for SMEs, for example, community SIEMs and threat intelligence sharing platforms.

Workshops with simulated AI cyberattack exercises for critical sectors (banking, healthcare), using CERT-EC data to prioritize the sectors facing real threats.
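Threat intelligence sharing platforms exchange indicators in a common machine-readable format. The proposal does not name one; as an assumption, the minimal sketch below builds a STIX 2.1 Indicator object for a suspicious domain using only the Python standard library. The helper names are hypothetical and the domain is a placeholder from the reserved `.example` top-level domain.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Optional


def stix_timestamp(dt: datetime) -> str:
    """UTC timestamp with millisecond precision, e.g. 2025-01-31T12:00:00.000Z."""
    dt = dt.astimezone(timezone.utc)
    return dt.strftime("%Y-%m-%dT%H:%M:%S.") + f"{dt.microsecond // 1000:03d}Z"


def domain_indicator(domain: str, description: str,
                     now: Optional[datetime] = None) -> dict:
    """Build a minimal STIX 2.1 Indicator object for a suspicious domain name."""
    ts = stix_timestamp(now or datetime.now(timezone.utc))
    # STIX pattern string literals escape backslashes and single quotes.
    value = domain.replace("\\", "\\\\").replace("'", "\\'")
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": ts,
        "modified": ts,
        "name": f"Suspected phishing domain: {domain}",
        "description": description,
        "indicator_types": ["malicious-activity"],
        "pattern": f"[domain-name:value = '{value}']",
        "pattern_type": "stix",
        "valid_from": ts,
    }


if __name__ == "__main__":
    # Placeholder domain; a real exercise would use indicators from CERT-EC or partners.
    ioc = domain_indicator("bank-login.example", "Reported in a simulated phishing exercise")
    print(json.dumps(ioc, indent=2))
```

Using a standard format rather than ad hoc spreadsheets lets SMEs, CERT-EC, and banks feed the same indicators into community SIEMs without custom parsers.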

3.4.3. Cooperative Governance and Awareness

It is necessary to establish working groups between the Attorney General’s Office, CERT-EC, the National Assembly, universities, and private companies in critical sectors to update incident response protocols with a focus on AI. To complement this, it is also necessary to launch national citizen awareness campaigns on the risks of generative phishing and deepfakes, based on documented local examples. This will foster a culture of proactive cybersecurity and facilitate the prosecution of complex cases.

This proposal is achievable by leveraging existing capabilities, such as the progress reflected in the GCI 2024, and it prioritizes high-impact, low-cost actions aligned with the urgent needs of the Ecuadorian context.

4. Discussion

Artificial intelligence has evolved from an automation tool into an enabler of critical and sophisticated cyberthreats. Cyberattackers now use machine learning algorithms to optimize phishing campaigns, generate hyper-realistic deepfakes, and develop adaptive malware that evades traditional security controls [3]. This scenario is confirmed in the local context, where most of the experts surveyed reported an increase in incidents in which AI is used to personalize attacks with local idioms. This is consistent with Santillán Molina [22], whose work on data centralization in Ecuador warns that “AI allows attackers to identify and exploit vulnerabilities in critical infrastructure with unprecedented precision”, which coincides with the 2025 ransomware incident against Sercop [1].

The International Telecommunication Union’s (ITU) Global Cybersecurity Index (GCI) shows a substantial improvement in Ecuador’s readiness between 2020 and 2024, particularly in the legal measures and international cooperation pillars. This progress reflects local efforts such as the enactment of the Organic Law on Personal Data Protection (LOPD) in 2021 and the National Cybersecurity Policy. However, Ecuador still lags behind the regional average in technical capabilities and specialized skills development. The ITU report also highlights that countries that have integrated international frameworks such as the NIST CSF and fostered public–private partnerships show faster and more resilient improvements in the face of complex threats. This trend underscores the need to invest in technical capabilities and professional training.

The comparative analysis shows that the Ecuadorian legal framework (COIP and LOPD) presents critical gaps in the face of AI-enhanced crimes. As Boza Rendón [23] concludes in his analysis of the Ecuadorian legal framework, “the absence of specific regulations for artificial intelligence leaves regulatory gaps that can compromise the security and privacy of citizens, especially in the use of automated decision-making systems” [23]. While the Organic Law on Personal Data Protection (LOPD) establishes general principles, that study reveals shortcomings in “the definition of effective control mechanisms, independent oversight, and clear guarantees for the protection of fundamental rights” [23] against the risks associated with AI-based data processing. Furthermore, the Comprehensive Organic Criminal Code (COIP) lacks provisions that specifically address crimes committed through malicious algorithmic manipulation or deepfakes, in contrast with more advanced legal frameworks such as that of the European Union. For effective regulation, the author recommends “incorporating fundamental rights impact assessment mechanisms before implementing AI systems” and “establishing provisions that promote the development of ethical and responsible AI” [23], actions still pending in national legislation.

To address the identified gaps, realistic mitigation strategies are proposed for the Ecuadorian context: collaborative threat hunting based on threat intelligence shared between the public and banking sectors; the adoption of open-source tools to detect generative phishing and deepfakes; and certified AI training programs for prosecutors and judges. This focus on practical, low-cost solutions is aligned with the recommendations of the NIST Cybersecurity Framework (2020) for resource-constrained organizations, which prioritize identifying and protecting critical assets through open frameworks and collaboration. Similarly, the International Telecommunication Union [20] emphasizes in its report that training and awareness initiatives are among the most cost-effective investments for improving national cyber resilience. These actions do not require massive initial investment, but rather strategic prioritization and multi-sector collaboration.
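As an illustration of the kind of low-cost, open detection heuristic this strategy could include, the minimal sketch below flags domains whose first label contains, or is within a small edit distance of, a protected brand label, a common signal of phishing sites impersonating banks. The brand labels echo the banks mentioned in the Introduction, but the allowlist domains, threshold, and helper names are hypothetical; a real deployment would combine such checks with reputation feeds and content analysis.

```python
from typing import Optional


def levenshtein(a: str, b: str) -> int:
    """Edit distance (insertions, deletions, substitutions) between two strings."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # delete ca
                current[j - 1] + 1,            # insert cb
                previous[j - 1] + (ca != cb),  # substitute, free when equal
            ))
        previous = current
    return previous[-1]


def normalize(domain: str) -> str:
    """Lowercase, drop a trailing dot and a leading 'www.'."""
    domain = domain.lower().rstrip(".")
    return domain[4:] if domain.startswith("www.") else domain


def lookalike_reason(domain: str, brands: list[str], allowlist: set[str],
                     max_distance: int = 2) -> Optional[str]:
    """Return why `domain` may impersonate a protected brand, or None if no signal."""
    host = normalize(domain)
    if host in allowlist:
        return None
    label = host.split(".")[0]  # crude: inspects only the left-most label
    for brand in brands:
        if label == brand:
            return f"brand '{brand}' on a domain outside the allowlist"
        if brand in label:
            return f"label contains '{brand}'"
        distance = levenshtein(label, brand)
        if distance <= max_distance:
            return f"edit distance {distance} from '{brand}'"
    return None


if __name__ == "__main__":
    # Hypothetical brand labels and allowlist (placeholder .example domains).
    brands = ["pichincha", "produbanco"]
    allowlist = {"pichincha.example", "produbanco.example"}
    for d in ["pichincha.example", "pichlncha.example", "produbanco-login.example", "news.example"]:
        print(d, "->", lookalike_reason(d, brands, allowlist))
```

A fixed edit-distance threshold works reasonably for long brand names but produces false positives for short ones, so the threshold would need tuning per brand.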

5. Conclusions

It was established that AI techniques used in cyberattacks internationally (generative phishing, deepfakes, adaptive malware) are fully applicable to the Ecuadorian context, due to its increasing digitalization and the vulnerabilities present in critical infrastructure and SMEs.

It was identified that the main security gaps in Ecuador against AI-driven cyberthreats are technical (lack of advanced tools), regulatory (outdated legal framework), and educational (lack of training and awareness), and that together they severely limit preventive response capacity.

The analysis showed that the evolution of malicious uses of AI in the region, characterized by increasing automation and sophistication, is already an operational trend in Ecuador, with a potentially high impact on sectors that handle sensitive data, as exemplified by the attack on Sercop.

It was determined that the Ecuadorian legal framework (COIP, LOPD) has substantial gaps in its ability to effectively criminalize and prosecute AI-enhanced cybercrimes, making it imperative to enact a legal reform that incorporates specific definitions and aligns with international ethical governance principles.

The malicious use of AI in cybercrime is a real and growing threat to Ecuador, requiring comprehensive, tailored mitigation strategies based on the adoption of international standards (NIST, ISO), urgent regulatory updates, and the implementation of large-scale training programs.