Abstract

Generative artificial intelligence (AI) and persistent empirical gaps are reshaping the cyber threat landscape faster than Zero-Trust Architecture (ZTA) research can respond. We reviewed 10 recent ZTA surveys and 136 primary studies (2022–2024) and found that 98% provided only partial or no real-world validation, leaving several core controls largely untested. Our critique, therefore, proceeds on two axes: first, mainstream ZTA research is empirically under-powered and operationally unproven; second, generative-AI attacks exploit these very weaknesses, accelerating policy bypass and detection failure. To expose this compounding risk, we contribute the Cyber Fraud Kill Chain (CFKC), a seven-stage attacker model (target identification, preparation, engagement, deception, execution, monetization, and cover-up) that maps specific generative techniques to the NIST SP 800-207 components they erode. The CFKC highlights how synthetic identities, context manipulation, and adversarial telemetry drive up false-negative rates, extend dwell time, and sidestep audit trails, thereby undermining the Zero-Trust principles of verify explicitly and assume breach. We also find that existing guidance offers no systematic countermeasures for AI-scaled attacks and that compliance regimes struggle to audit content that AI can mutate on demand. Finally, we outline research directions for adaptive, evidence-driven ZTA, and we argue that incremental extensions of current ZTA are insufficient; only a generative-AI-aware redesign will sustain defensive parity in the coming threat cycle.

1. Introduction

Zero trust is a foundational cybersecurity principle that advocates for the continuous verification of all entities, both inside and outside a network, and the rejection of implicit trust [1]. This principle challenges traditional perimeter-based security models, which have proven insufficient against modern cyber threats, particularly cyber fraud [2]. Cyber fraud and zero trust are closely interlinked; while cyber fraud exploits gaps in trust and access control, zero trust emerged as a necessary countermeasure [3]. The operationalization of zero trust has been formalized through frameworks like NIST SP 800-207 [4], which provides structured guidelines for implementation. Such frameworks are further realized in architectures such as the UK’s National Cyber Security Centre (NCSC) Zero Trust Architecture Design Principles and Australia’s “Essential 8”. By focusing on verifying user identity, contextual data, and device health before granting access to sensitive resources, zero-trust frameworks aim to minimize the risks of fraud and unauthorized access [2,5]. The principle of “never trust, always verify” offers a proactive and dynamic approach to mitigate the risks posed by an increasingly hostile cyber environment, making zero trust an essential cybersecurity strategy worthy of thorough investigation.

Despite its promise, zero trust has faced significant challenges in addressing the complexities of evolving cyber threats [2]. While it has been refined to include granular access controls, continuous monitoring, and real-time threat detection [1], it struggles with scalability, legacy system integration, and adapting to dynamic IT environments, such as cloud services and mobile devices [1,2,5]. These inherent issues have been compounded by the rise of generative AI, which enables attackers to create synthetic identities, manipulate contextual data, and automate sophisticated attacks [6]. Generative AI weaponizes three structural weak points in today’s zero-trust deployments. First, identity governance pipelines still rely on human-verifiable documents and liveness checks, yet diffusion headshots and LLM-written résumés can now pass those gates unchallenged. Second, adaptive-trust algorithms are trained on clean, human-generated baselines, so a single poisoned burst of synthetic telemetry can drift their decision boundaries. Third, micro-segmented networks depend on deep-packet inspection and protocol heuristics, but GAN-shaped traffic can masquerade as sanctioned backups or VoIP streams. Because model updates arrive faster than security policies can be revised, rule sets and attestations age out in weeks, not years. Unless zero-trust architecture (ZTA) becomes generative-AI-aware, it risks sliding from a living security posture to a static checklist, widening the gap between policy intent and operational reality. The cybersecurity community must, therefore, critically assess and adapt ZTA models to meet emerging challenges. Throughout this article, we use the term “erosion of Zero Trust principles” to denote a quantifiable weakening (manifested as higher false-negative rates, longer attacker dwell time, or policy bypasses), rather than total collapse; the metrics and evidence supporting this definition are examined in Section 6.

This survey critically examines the intersection of zero trust and generative AI, focusing on how generative AI undermines the foundational principles of zero trust. Our study is structured around five key research questions that explore the definition and implementation of trust within ZTA and the evolving impact of human, process, and technological factors. Through a two-step critical analysis, we reviewed 10 recent surveys and 136 primary research studies. While some progress has been made, our analysis revealed that many of these studies lacked sufficient empirical evidence or were not well executed, leaving significant research gaps. These gaps are further exacerbated by generative AI, which renders many existing zero-trust defenses less effective or obsolete. Additionally, by introducing the novel “Cyber Fraud Kill Chain”, we provide a structured framework from the attacker’s perspective to assess the full impact of generative AI on zero trust. Our framework demonstrates how generative AI amplifies weaknesses in zero-trust models, making the gaps identified in current studies even more problematic, urgent, and complex. Our survey highlights the need for adaptive, generative-AI-aware zero-trust frameworks that address both existing and emerging vulnerabilities in modern cybersecurity environments. Throughout Sections 2–5, we flag how generative AI exacerbates existing ZTA gaps in survey coverage (Section 2), architectural variations (Section 3), review methodology (Section 4), and empirical evaluations (Section 5), culminating in our AI-aware “Cyber Fraud Kill Chain” (CFKC) in Section 6.

The three major contributions of this study are as follows:

- We systematically evaluated zero-trust architecture (ZTA) research through the lens of the NIST SP 800-207 taxonomy, assessing 10 surveys and 136 primary studies published since 2022. This evaluation identified critical research gaps, including an insufficient focus on behavior-based trust algorithms, continuous monitoring infrastructures, and enclave-based deployments, all of which are essential for addressing modern cybersecurity threats.

- We demonstrated how generative AI amplifies vulnerabilities in existing ZTA models, such as eroding trust mechanisms, disrupting compliance processes, and automating sophisticated attack vectors. To address these challenges, we introduced the novel CFKC framework, which maps the stages of AI-driven fraud and provides actionable insights for improving ZTA defenses against such emerging threats.

- We proposed a comprehensive set of research directions and practical recommendations to guide the evolution of zero-trust frameworks and architectures. These include integrating adaptive trust mechanisms, embedding AI-specific regulatory compliance, and prioritizing advanced defenses against generative AI-driven threats, thereby enabling ZTA to remain effective in modern cybersecurity environments.

2. Related Surveys

This section reviews existing surveys on ZTA to assess their contributions, methodologies, and limitations. The objective is to identify gaps in the current understanding of ZTA and justify the need for this survey, which addresses those limitations by providing a more recent and comprehensive evaluation of ZTA frameworks in the context of generative AI-driven threats. Our review of 10 prominent surveys [1,2,7,8,9,10,11,12,13,14] highlights diverse approaches to ZTA, with an emphasis on theoretical principles, technological advancements, and sector-specific implementations. However, these surveys collectively lack comprehensive empirical validation, real-world deployment examples, and practical guidance for addressing modern cybersecurity challenges.

Theoretical Frameworks: Refs. [9,12] focus on the foundational principles and strategic importance of ZTA. While these studies provide valuable theoretical insights, they do not discuss behavior-based trust mechanisms or continuous monitoring, both of which are critical for addressing advanced threats. Refs. [1,2] also explore strategic aspects of ZTA but offer limited coverage of real-world implementations or adaptive mechanisms for evolving attack vectors.

Technological Advancements: Ref. [7] examines ZTA integration into 6G networks, highlighting continuous authentication and real-time risk assessment but neglecting practical deployment challenges. Ref. [10] provides a comparative analysis of ZTA within cloud computing, offering valuable insights into technology-specific issues. However, both studies lack empirical validation and detailed performance assessments.

Practical Implementations: Refs. [11,14] explore ZTA in big data, IoT, and network environments, identifying key components and application areas. While these studies outline implementation strategies, they fail to address real-world challenges and evolving compliance requirements. Refs. [8,13] provide more recent evaluations but would benefit from case studies and empirical data to substantiate their findings.

The summarized findings are presented in Table 1. Our analysis highlights a shared principal shortcoming: a predominant focus on theoretical principles over practical challenges, empirical validation, and real-world applications. We believe such gaps arise from the difficulty of obtaining proprietary deployment data and the nascent stage of zero-trust implementations. Moreover, despite their breadth, none of these ten surveys considers the threat or defense implications of generative AI. This omission leaves a critical gap in understanding and mitigating emerging threats, which this paper remedies by systematically mapping AI-driven fraud vectors onto ZTA controls.

Table 1.

Assessment of the 10 surveys on ZTA coverage (✓: comprehensive; : partial or implicit) with structured synthesis additions.

From such gaps, we derive the following five research questions (RQs) to guide our analysis. Through addressing them, this survey identifies critical gaps in existing ZTA research, demonstrates how generative AI amplifies vulnerabilities, and provides actionable insights for evolving ZTA frameworks to remain effective against modern threats.

- RQ1: How can “trust” and “zero trust” be properly defined within the context of ZTA? A foundational understanding of trust mechanisms is essential for developing coherent ZTA frameworks. This RQ supports Contribution 1, which evaluates ZTA taxonomies and identifies gaps in trust algorithms.

- RQ2: What are the different ways to achieve effective implementation of ZTA across diverse operational environments? Practical deployment strategies are critical for improving ZTA adaptability. This question aligns with Contribution 1 by addressing gaps in behavior-based trust and enclave deployments.

- RQ3: How have people factors, including user behavior, security culture, and insider threats, evolved in the context of ZTA implementation? Generative AI introduces new complexities in user behavior and insider threat dynamics. This question aligns with both Contribution 2 (highlighting CFKC to address people-related vulnerabilities) and Contribution 3 (guiding frameworks to incorporate adaptive mechanisms for evolving human-centric challenges).

- RQ4: What process factors have evolved in the implementation and management of ZTA since the earlier studies? Evolving compliance and governance challenges require new strategies. This question links to both Contribution 2 (addressing process-related disruptions due to generative AI) and Contribution 3 (proposing advanced governance strategies to strengthen ZTA frameworks).

- RQ5: How have technological factors, including advancements in cybersecurity tools, cloud environments, and automation, influenced ZTA implementation, and is the current ZTA knowledge base still relevant? Technological advancements necessitate adaptive security frameworks. This question aligns with both Contribution 2 (highlighting technical vulnerabilities due to generative AI technologies) and Contribution 3 (identifying future technological directions for ZTA).

3. Classification of Existing Studies on ZTA

Unlike traditional security models that rely on perimeter defenses, ZTA operates on the principle of “never trust, always verify”, ensuring that all access requests are continuously authenticated and authorized, regardless of their origin. This section aims to provide a comprehensive review and classification of existing studies on ZTA, focusing on both theoretical foundations and practical implementations. In addition to classifying studies by NIST SP 800-207 components, we explicitly annotate whether each approach or deployment variant models generative-AI-enabled attacks and AI-based defenses.

3.1. The Zero-Trust Architecture

ZTA is a cybersecurity framework that eliminates implicit trust within networks, enforcing continuous verification of users and devices before they can access resources [15]. The principle “never trust, always verify” forms the core of ZTA, ensuring that no entity can access sensitive resources without strict validation. ZTA emerged as traditional perimeter-based security models became inadequate in the face of increasingly sophisticated cyber threats and the rise of remote work, cloud computing, and mobile devices. The term “Zero Trust” was popularized by John Kindervag in 2010 [16], and Google operationalized such principles with its BeyondCorp initiative in 2014 [17], which emphasized secure access based on user identity and context, rather than network location. NIST SP 800-207, published in 2020, formalized ZTA by outlining its key components, including the Policy Engine (PE), Policy Administrator (PA), and Policy Enforcement Point (PEP), enabling dynamic, granular access control. As shown in Figure 1, ZTA has evolved to address the complexities of modern IT environments and is widely adopted across sectors such as government, finance, and healthcare due to its focus on continuous verification, real-time threat monitoring, and reduced attack surfaces. The ZTA access control process, detailed in Algorithm A1 in Appendix A, emphasizes continuous identity verification, contextual information analysis, and dynamic threat detection to allow or revoke access in real time, improving resilience against modern cyber threats.
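The division of labor among the three NIST SP 800-207 components named above can be illustrated with a minimal Python sketch. It is not a reproduction of Algorithm A1; the class, field, and function names, as well as the rule that all three checks must pass, are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical request attributes; real deployments carry far more signals."""
    user_verified: bool   # identity check passed (e.g., MFA)
    device_healthy: bool  # device posture check passed
    context_ok: bool      # location/time consistent with policy


def policy_engine(req: AccessRequest) -> bool:
    """PE: decide grant or deny from identity, device health, and context."""
    return req.user_verified and req.device_healthy and req.context_ok


def policy_administrator(decision: bool) -> str:
    """PA: translate the PE decision into a command for the PEP."""
    return "open_session" if decision else "close_session"


def policy_enforcement_point(command: str) -> str:
    """PEP: enforce the command on the data path."""
    return "ALLOW" if command == "open_session" else "DENY"


def evaluate(req: AccessRequest) -> str:
    """Run one request through PE -> PA -> PEP."""
    return policy_enforcement_point(policy_administrator(policy_engine(req)))
```

Calling `evaluate` again whenever any attribute changes is one simple way to approximate the continuous verification described above: a session that was allowed is revoked as soon as, for instance, the device posture check fails.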

Figure 1.

Timeline of key developments in zero trust architecture (ZTA). Sources: [4,8,12,16,17,18].

3.2. Core Components and Taxonomy of ZTA

This section elaborates on the ZTA core components and provides a structured taxonomy based on the NIST ZTA framework.

3.2.1. Variations of ZTA Approaches

Different approaches to implementing ZTA have emerged to address the diverse security needs of organizations. These variations reflect the flexibility and adaptability of ZTA in different contexts, highlighting the need for a tailored approach based on specific organizational requirements.

- ZTA Using Enhanced Identity Governance: Enhanced identity governance is crucial for maintaining strict control over who accesses what within an organization. It ensures that identity management systems continuously verify and re-verify user and device credentials, which is critical in environments with high turnover, remote workforces, or frequent third-party access [19]. By emphasizing robust identity governance, organizations can reduce the risk of unauthorized access and ensure compliance with regulatory requirements.

- ZTA Using Micro-Segmentation: Micro-segmentation is a strategic approach that enhances security by dividing the network into smaller, isolated segments. This limits the potential damage of a breach, because attackers are confined to a small segment of the network, rather than having free rein across the entire environment [20]. The granular control provided through micro-segmentation is particularly beneficial in cloud environments, where the traditional network perimeter is no longer as clearly defined.

- ZTA Using Network Infrastructure and Software Defined Perimeters: Modern network infrastructure, combined with Software Defined Perimeters (SDPs), offers a dynamic and scalable security solution that can adapt to the evolving threat landscape [21]. SDP technology allows for the creation of individualized, secure access pathways for each user, thereby reducing the attack surface. This approach is particularly effective in environments with a high degree of variability in access points, such as those involving IoT devices or remote workers.

3.2.2. Deployed Variations of the Abstract Architecture

The practical implementation of ZTA varies, depending on the specific needs and constraints of the organization. The following models represent common deployment variations, each offering unique advantages based on the operational environment.

- Device Agent/Gateway-Based Deployment: This deployment model involves installing agents or gateways on devices to enforce security policies and monitor activity in real time [22]. It is particularly effective in organizations where devices are highly mobile or where users frequently connect to the network from various locations. The centralized control provided by device agents ensures that security policies are uniformly applied across all devices, regardless of their location or status.

- Enclave-Based Deployment: Enclave-based deployments create isolated, secure zones within the network, which are ideal for protecting highly sensitive data or operations [23]. This model is often used in environments that require a high degree of separation between different departments or functions, such as government agencies or financial institutions. By creating enclaves, organizations can ensure that even if one part of the network is compromised, the rest remains secure.

- Resource Portal-Based Deployment: Resource portal-based deployment utilizes secure portals to control access to resources, providing a highly controlled entry point for users [24]. This model is particularly useful in environments where external partners or clients require access to specific resources without being granted broader network access. By channeling access through secure portals, organizations can maintain strict control over who accesses their most critical assets.

- Device Application Sandboxing: Sandboxing is a technique that isolates applications and processes from the rest of the network, preventing potential threats from spreading [25]. This approach is particularly valuable in environments where untrusted or third-party applications are frequently used. By isolating these applications, organizations can minimize the risk of malware or other threats compromising the network.

3.2.3. Trust Algorithm

Trust algorithms are the backbone of the decision-making process in ZTA. They continuously assess the trustworthiness of entities requesting access, ensuring that only those meeting the strict criteria are granted access [26]. The adaptability of trust algorithms allows organizations to fine-tune their security posture to address emerging threats or changes in the operational environment [27]. Below are common variations of trust algorithms, each tailored to specific security needs and operational contexts:

- Risk-Based Trust Algorithms: These dynamically adjust access decisions based on a calculated risk score [28]. The risk score is typically derived from factors such as user behavior patterns, device security posture, and the sensitivity of the requested resource. Risk-based algorithms enable organizations to implement granular access controls, where higher-risk actions or entities require additional verification steps or are denied access altogether.

- Context-Aware Trust Algorithms: These incorporate real-time contextual information, such as the geographic location of the access request, time of day, and the usual behavior of the user or device [29]. By analyzing these factors, context-aware algorithms can detect anomalies that might indicate a potential security threat, thereby enhancing the accuracy of access decisions.

- Behavior-Based Trust Algorithms: These focus on the continuous monitoring of user and entity behavior to establish a baseline of normal activity [30]. Any deviation from this baseline triggers a reassessment of trust. Behavior-based algorithms are particularly effective in identifying insider threats or compromised credentials, as they can detect subtle changes in how users interact with systems.

- Multi-Factor Trust Algorithms: These combine multiple sources of information, such as biometrics, device health checks, and network conditions, to make comprehensive trust decisions [31]. By integrating diverse factors, these algorithms provide a more robust and layered security approach, ensuring that access is granted only when all conditions meet the organization’s security standards.

- Adaptive Trust Algorithms: These continuously evolve based on new data and threat intelligence [32]. They can adjust the criteria for trust dynamically, depending on the current threat landscape or changes in organizational policies. This variation is particularly valuable in environments with rapidly changing security requirements, as it allows the algorithm to “learn” from previous incidents and improve over time.
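As a concrete illustration of the risk-based variant in the list above, the following Python sketch combines three factors into a weighted risk score and maps the score to allow, step-up verification, or deny. The factor names, weights, and thresholds are assumptions chosen for illustration and are not taken from the cited studies.

```python
def risk_score(behavior_anomaly: float,
               device_risk: float,
               resource_sensitivity: float,
               weights: tuple = (0.4, 0.3, 0.3)) -> float:
    """Weighted sum of three factors, each expected in [0, 1] (assumed scale)."""
    factors = (behavior_anomaly, device_risk, resource_sensitivity)
    for value in factors:
        if not 0.0 <= value <= 1.0:
            raise ValueError("each factor must lie in [0, 1]")
    return sum(w * f for w, f in zip(weights, factors))


def access_decision(score: float,
                    step_up_at: float = 0.4,
                    deny_at: float = 0.7) -> str:
    """Map a risk score to a decision; thresholds are hypothetical."""
    if score >= deny_at:
        return "deny"
    if score >= step_up_at:
        return "step_up"  # require additional verification
    return "allow"
```

For example, `access_decision(risk_score(0.1, 0.1, 0.2))` yields `"allow"`, while a request with every factor at 0.5 falls into the step-up band. An adaptive variant could update `weights` or the thresholds from incident data, which is also the point at which poisoned telemetry could shift the decision boundary.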

3.2.4. Network/Environment Components

The network and environment components are critical to supporting ZTA, providing the infrastructure necessary to accommodate its rigorous demands for continuous verification and dynamic access control. The following subcategories outline the key requirements and components needed to ensure a secure and efficient zero-trust environment:

- Network Segmentation and Micro-Segmentation: To limit lateral movement within the network, ZTA requires the implementation of network segmentation and micro-segmentation [33]. This involves dividing the network into smaller, isolated segments that can be individually monitored and controlled. Effective segmentation reduces the potential impact of a security breach by confining the threat to a specific segment, thereby protecting the broader network infrastructure.

- Secure Communication Protocols: Ensuring secure communication across all network components is essential for ZTA, and it includes the use of encrypted protocols such as TLS (Transport Layer Security) and IPsec (Internet Protocol Security) to protect data in transit [34]. These protocols help maintain the confidentiality and integrity of data exchanges between users, devices, and resources within the zero-trust environment.

- High-Performance Authentication and Authorization Systems: ZTA demands robust systems capable of handling large volumes of authentication and authorization requests in real time without introducing significant latency [35]. This includes implementing scalable identity management systems, such as federated identity and single sign-on (SSO) solutions, that can efficiently process and verify access requests while maintaining optimal performance.

- Continuous Monitoring and Logging Infrastructure: Continuous monitoring is a cornerstone of ZTA, requiring an infrastructure that can capture, analyze, and respond to security events in real-time [36]. This includes deploying security information and event management (SIEM) systems, intrusion detection systems (IDS), and advanced analytics platforms that provide comprehensive visibility into network activity and enable proactive threat detection and response.

- Resilient and Redundant Network Architecture: To support the continuous operation of ZTA, the network architecture must be resilient and capable of withstanding disruptions [37]. This involves implementing redundancy through failover mechanisms, load balancing, and distributed network resources to ensure that critical security functions remain operational even in the event of a failure or attack.

- Integration with Cloud and Hybrid Environments: Many organizations operate in cloud or hybrid environments, requiring ZTA to seamlessly integrate with these infrastructures [38]. This includes ensuring that zero-trust principles extend to cloud-based resources, with secure access controls, consistent policy enforcement, and visibility across both on-premises and cloud environments. Proper integration ensures that the security posture is maintained, regardless of where data and applications reside.
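To make the segmentation requirement in the list above concrete, the following Python sketch models micro-segmentation as a default-deny allow-list of flows between segments. The segment names, ports, and the `flow_permitted` helper are hypothetical.

```python
# Hypothetical allow-list: (source segment, destination segment, destination port).
ALLOWED_FLOWS = frozenset({
    ("web", "app", 8443),
    ("app", "db", 5432),
})


def flow_permitted(src_segment: str, dst_segment: str, port: int,
                   allowed: frozenset = ALLOWED_FLOWS) -> bool:
    """Default deny: a flow passes only if it is explicitly listed."""
    return (src_segment, dst_segment, port) in allowed
```

Under this policy, a compromised host in the `web` segment can reach `app` on port 8443 but cannot open a direct connection to `db`, which is how segmentation confines lateral movement.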

3.3. Steps in Implementing ZTA

The implementation of ZTA involves a systematic approach that ensures continuous verification and dynamic access control, which are critical to maintaining a secure and resilient environment. The following structured steps demonstrate the essential phases in implementing ZTA, with a critical review of how existing studies have addressed such aspects.

3.3.1. Identifying Verification Triggers (When to Verify)

The first step in implementing ZTA is identifying the specific conditions and contexts that necessitate verification. Continuous verification is central to ZTA, as it dynamically assesses the trustworthiness of entities requesting access, ensuring that only authenticated and authorized entities are granted access to resources [1,39]. The existing literature has explored various scenarios where verification is required, such as during user authentication, device compliance checks, and network access requests. However, although existing studies have addressed verification timing and conditions, there is a scarcity of research on adaptive verification triggers. These triggers should be capable of accounting for real-time changes in user behavior, device health, and the evolving threat landscape, necessitating further investigation.
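An adaptive verification trigger of the kind called for above might look like the following Python sketch, which re-verifies a session when the device posture changes, when behavior deviates strongly from its baseline, or when a time interval that shrinks under elevated threat has elapsed. All parameter names, the 900-second base interval, and the deviation limit are illustrative assumptions.

```python
def should_reverify(seconds_since_check: float,
                    device_posture_changed: bool,
                    behavior_deviation: float,
                    threat_level: float,
                    base_interval: float = 900.0,
                    deviation_limit: float = 3.0) -> bool:
    """Decide whether to trigger re-verification for an active session.

    threat_level is assumed to lie in [0, 1]; at 1.0 the re-check interval
    is halved. behavior_deviation is assumed to be a z-score-like distance
    from the user's baseline.
    """
    if not 0.0 <= threat_level <= 1.0:
        raise ValueError("threat_level must lie in [0, 1]")
    interval = base_interval * (1.0 - 0.5 * threat_level)
    return (device_posture_changed
            or behavior_deviation > deviation_limit
            or seconds_since_check >= interval)
```

With the defaults, a session last checked 500 seconds ago is left alone at `threat_level=0.0` but is re-verified at `threat_level=1.0`, because the interval drops from 900 to 450 seconds.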

3.3.2. Verification Methods and Technologies (How to Verify)

The second step involves the selection and application of appropriate verification methods and technologies. ZTA relies on a variety of technologies, including identity and access management (IAM) systems, multi-factor authentication (MFA), and behavioral analytics, to ensure that every access request is rigorously authenticated and authorized. While existing research has proposed a range of verification mechanisms, the effectiveness of these methods often depends on the specific context in which they are applied. The current body of literature predominantly focuses on traditional verification methods, with limited exploration of emerging techniques such as AI-driven verification and continuous behavioral monitoring. There is a pressing need for further studies on integrating advanced verification technologies in dynamic and complex environments to enhance the effectiveness of ZTA.

3.3.3. Validation of Verification Processes (Verification Validation)

The final step in the implementation process is the validation of verification processes to ensure their reliability and accuracy. Proper validation is essential to maintaining the integrity of the ZTA framework. This involves cross-referencing the outcomes of verification against predefined security policies and employing audit logs and real-time monitoring to identify and address any discrepancies. While many studies advocate for various validation methods, such as the deployment of policy enforcement points (PEPs) and security information and event management (SIEM) systems, continuous real-time validation remains a significant challenge. Despite the critical importance of validation within ZTA, there is a notable lack of research on continuous validation techniques that can adapt to evolving threats and dynamic user behaviors. Developing robust validation frameworks that can maintain the reliability of ZTA in real time is an area that requires further exploration and innovation.
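The cross-referencing step described above can be sketched in Python as a replay of audit-log entries against the policy that should have produced them. The log fields and the example policy (sensitive resources require MFA and a healthy device) are hypothetical.

```python
from typing import Callable, Dict, List

LogEntry = Dict[str, object]


def expected_outcome(entry: LogEntry) -> str:
    """Hypothetical policy: sensitive resources need MFA and a healthy device."""
    if entry["resource_sensitive"] and not (entry["mfa_passed"]
                                            and entry["device_healthy"]):
        return "deny"
    return "allow"


def find_discrepancies(audit_log: List[LogEntry],
                       policy: Callable[[LogEntry], str] = expected_outcome
                       ) -> List[LogEntry]:
    """Return entries whose recorded outcome differs from the policy's verdict."""
    return [entry for entry in audit_log if policy(entry) != entry["outcome"]]
```

Running `find_discrepancies` on a schedule, or on each new log entry, gives a simple form of the continuous validation discussed above; any returned entry marks an access decision that the enforcement layer made in conflict with the stated policy.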

4. Literature Review Methodology

The literature review methodology was carefully designed to ensure a rigorous and comprehensive assessment of relevant studies, including systematic article selection, evaluation criteria, and a structured analysis approach.

4.1. Article Selection Criteria

The review sought studies that examine ZTA in a security context and that appeared after ZTA moved from policy discussion to mainstream deployment. The National Institute of Standards and Technology released SP 800-207 [4] in late 2020; large-scale generative-AI tooling (for example, GPT-3.5 and Llama models) has been publicly available since 2022. Work published before 2022 rarely considers the combined threat surface we analyze. For this reason, we restricted the sampling window to January 2022–August 2024; writing began in August 2024.

Search phase. We queried Google Scholar using the Boolean pattern

applied to title or author keywords. Google Scholar returned the union set with 978 unique records; the discipline-specific indexes added no new items once title-level duplication had been removed.

Inclusion criteria. A publication advanced to full-text screening when it satisfied all of the following:

- Published in a peer-reviewed venue—journal special issue, conference proceedings, or magazine with documented editorial review;

- Primary research, rather than a secondary survey;

- Explicit focus on zero trust or a named ZTA component (e.g., policy engine, continuous verification);

- Full text available in English.

Exclusion criteria. During screening, we excluded items that

- Lacked an evaluation section or any empirical evidence;

- Relied on unverifiable data (for instance, simulated traffic with no parameter disclosure);

- Appeared in venues flagged by Cabells or Beall as predatory;

- Were pre-prints, technical reports without peer review, or corporate white-papers.

Outcome. The filtering steps are summarized in Figure 2. Of 978 candidate papers, 368 met every inclusion rule. A further 231 were removed under the exclusion tests, leaving 136 primary studies, plus the 10 survey papers used as baselines. Then, we augmented our query with “generative AI” and synonyms (e.g., “large language model,” “diffusion model”) combined via AND with our ZTA terms to capture any emergent work at the intersection—even though we found virtually none.

Figure 2.

Flowchart of article selection process.

4.2. Article Assessment Criteria

To evaluate the selected studies on ZTA, we employed a unified set of six evaluation criteria designed for primary research: academic rigor and scientific soundness, completeness of the three ZTA core principles, result replicability and code up-to-dateness, implementation versatility, practicality, and research ethics. The intent is to provide a consistent and objective framework for comparison across heterogeneous topics such as identity management, trust algorithms, network architecture, and deployment archetypes. Each criterion is scored on a three-level scale {Good = 1, Pass = 0.5, Fail = 0} using operationalized indicators with measurable thresholds and concrete examples, as detailed in Table 2. Unless otherwise stated, all six criteria are equally weighted for the main analysis, and the Total Score reported in Appendix B (Table A1) is the simple sum across criteria, ranging from 0 to 6, with no normalization. We additionally report a sensitivity check in Appendix B, where alternative weights are explored for robustness with no material change in study ordering.
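The scoring rule described above can be expressed as a short Python sketch. The three-level scale and equal weighting follow the text; the short criterion keys and the optional `weights` argument (a stand-in for the sensitivity check) are illustrative assumptions.

```python
from typing import Dict, Optional

# Three-level scale as stated in the text.
LEVELS = {"Good": 1.0, "Pass": 0.5, "Fail": 0.0}

# Short keys are assumed labels for the six criteria named in the text.
CRITERIA = ("rigor", "completeness", "replicability",
            "versatility", "practicality", "ethics")


def total_score(ratings: Dict[str, str],
                weights: Optional[Dict[str, float]] = None) -> float:
    """Sum the per-criterion scores; equal weights give a total in [0, 6]."""
    if set(ratings) != set(CRITERIA):
        raise ValueError("ratings must cover exactly the six criteria")
    if weights is None:
        weights = {criterion: 1.0 for criterion in CRITERIA}
    return sum(weights[c] * LEVELS[ratings[c]] for c in CRITERIA)
```

A study rated Good on three criteria, Pass on two, and Fail on one therefore receives a total of 4.0 under equal weighting.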

Table 2.

Operationalized marking rubric for evaluating primary research on ZTA. Scoring is {Good = 1, Pass = 0.5, Fail = 0} with measurable indicators and examples.

Rater training and reliability. Two raters, both authors of this article, independently scored all papers after a 90-minute calibration session that used five exemplar papers and a shared decision log. Disagreements were adjudicated by a third author who did not participate in the initial scoring. Reliability was assessed on a randomly selected calibration sample of ten papers not used in training, using overall Krippendorff’s α (ordinal), per-criterion α, and raw percent agreement averaged across criteria. Full reliability tables, coder prompts, and the decision log template are included in Appendix B. These procedures aim to reduce subjectivity while keeping the rubric usable across diverse study designs.
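For readers who wish to reproduce this kind of reliability check, the following sketch computes raw percent agreement and Krippendorff's alpha with the ordinal distance metric for two raters and no missing ratings. The function names and the example ratings are hypothetical and do not reproduce the values reported in Appendix B. The alpha computation follows the standard coincidence-matrix formulation.

```python
from collections import Counter

LEVELS = (0.0, 0.5, 1.0)  # ordered rubric levels: Fail, Pass, Good


def percent_agreement(rater_a, rater_b):
    """Share of items on which both raters gave the same score."""
    return sum(x == y for x, y in zip(rater_a, rater_b)) / len(rater_a)


def krippendorff_alpha_ordinal(rater_a, rater_b, levels=LEVELS):
    """Krippendorff's alpha (ordinal metric), two raters, no missing data."""
    # Coincidence matrix: each rated item contributes both ordered pairs.
    coincidence = Counter()
    for x, y in zip(rater_a, rater_b):
        coincidence[(x, y)] += 1
        coincidence[(y, x)] += 1

    # Marginal totals per level and the overall number of pairable values.
    n_c = {c: sum(coincidence[(c, k)] for k in levels) for c in levels}
    n = sum(n_c.values())

    def delta_sq(c, k):
        # Ordinal distance: marginals spanned from c to k, minus half of
        # the two endpoint marginals, squared.
        i, j = sorted((levels.index(c), levels.index(k)))
        spanned = sum(n_c[g] for g in levels[i:j + 1])
        return (spanned - (n_c[c] + n_c[k]) / 2) ** 2

    observed = sum(coincidence[(c, k)] * delta_sq(c, k)
                   for c in levels for k in levels)
    expected = sum(n_c[c] * n_c[k] * delta_sq(c, k)
                   for c in levels for k in levels)
    if expected == 0:
        raise ValueError("alpha is undefined when all ratings are identical")
    return 1 - (n - 1) * observed / expected


# Hypothetical scores from two raters on five papers for one criterion.
rater_a = [1.0, 0.5, 0.5, 0.0, 1.0]
rater_b = [1.0, 0.5, 1.0, 0.0, 0.5]

if __name__ == "__main__":
    print(percent_agreement(rater_a, rater_b))
    print(krippendorff_alpha_ordinal(rater_a, rater_b))
```

Percent agreement ignores chance agreement and the ordering of levels, which is why a chance-corrected ordinal statistic is usually reported alongside it.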

Scoring and linkage to Appendix tables. The per-paper scores in Appendix B (Table A1) directly instantiate the rubric above, using the same criterion names, the same three-level scale, and equal weighting. When a study straddled categories, raters applied the decision log to maintain consistency, and any post-adjudication changes are marked in the log referenced in Appendix B. This kept the long table valid while making the rubric auditable and reproducible.

5. Evaluation of Existing Primary Studies

This section systematically evaluates 136 existing primary studies on ZTA published between 2022 and August 2024, categorizing them by architectural variation and assessing their practical applicability, scalability, and alignment with zero-trust principles (Table A1 in Appendix B).

5.1. Variations of ZTA Approaches

As indicated in Algorithm 1, studies under this subsection exhibit a common procedural flow in developing ZTA approaches. This process typically initiates with the identification of security challenges, integrates various technologies such as blockchain or AI, and culminates in the proposal of ZTA models. A critical observation is that these studies frequently lack real-world scalability and validation, indicating a convergence on theoretical frameworks with a divergence in practical applicability.

Algorithm 1.

Summarized common structure of ZTA variation studies.

5.1.1. ZTA Using Enhanced Identity Governance

Five studies [26,40,41,42,43] explored the implementation of ZTA with a focus on enhanced identity governance. Ref. [40] developed a ZTA tailored for health information systems, emphasizing strict identity governance to enhance security; however, the study lacked a comprehensive analysis of how scalable the implementation would be across diverse healthcare environments. Ref. [41] proposed a blockchain-based approach to reinforce multi-factor authentication within ZTA to secure digital identities, but it did not provide a detailed discussion on scalability and integration with existing systems. Ref. [42] examined ZTA’s application to digital privacy in healthcare, focusing on enhanced identity governance; however, it inadequately addressed the adaptability of ZTA in dynamic healthcare settings. Ref. [26] implemented a ZTA approach aimed at enhancing information security for healthcare workers through digital health technology adoption, but the study fell short in addressing the specific challenges associated with dynamic and context-aware access controls. Ref. [43] applied ZTA principles, along with probability-based authentication, to improve data security and privacy in cloud environments, but it did not include a comprehensive evaluation of the scalability of this approach across different cloud platforms. While these studies contributed valuable insights into the role of identity governance in ZTA, they collectively overlooked detailed evaluations of scalability and adaptability, which are crucial for the practical deployment of ZTA in various real-world scenarios.

5.1.2. ZTA Using Micro-Segmentation

Five studies [44,45,46,47,48] explored the implementation of ZTA with an emphasis on micro-segmentation. Ref. [44] developed a zero-trust machine learning architecture specifically for healthcare IoT cybersecurity, highlighting the importance of green computing. However, the study lacked a comprehensive evaluation of the practical challenges associated with deployment in real-world scenarios. Ref. [45] proposed a methodology for automated zero-shot data tagging using generative AI to facilitate tactical ZTA implementation, yet it did not adequately address scalability issues in diverse operational environments. Ref. [46] introduced a ZTA approach tailored to cloud-based fintech services, emphasizing micro-segmentation to enhance security, but the study fell short of providing a thorough analysis of the limitations inherent in the proposed approach. Ref. [47] investigated the application of zero-trust principles in microservice-based smart grid operational technology (OT) systems to mitigate insider threats, but it lacked a detailed evaluation of scalability, particularly in large-scale environments. Ref. [48] presented a framework for applying zero-trust principles to Docker containers, focusing on micro-segmentation within containerized environments; however, it did not include a detailed analysis of the framework’s performance across varied operational settings. In summary, while these studies demonstrate the significance of micro-segmentation in enhancing ZTA, they collectively fall short in addressing practical deployment challenges and scalability, which are essential for implementing ZTA effectively across different environments.

5.1.3. ZTA Using Network Infrastructure and Software Defined Perimeters

Sixty-eight studies [13,40,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114] explored the integration of ZTA using network infrastructure and Software Defined Perimeters (SDP). Refs. [49,50,51,52] focused on leveraging SDPs to enhance ZTA deployment in virtual, cloud, and metaverse environments, highlighting the potential of blockchain and AI integration for dynamic and scalable security. However, they lacked a thorough analysis of real-world deployment challenges, which is critical for practical applicability. Refs. [13,53,55,60] examined ZTA implementations in IoT and mobile networks, emphasizing the role of network infrastructure and SDPs to enhance security. These studies identified key differences in ZTA deployment strategies but often fell short of addressing the integration challenges with legacy systems and scalability in large, heterogeneous environments. Similarly, Refs. [54,56,59] proposed decentralized models for ZTA using blockchain and per-packet authorization, yet they lacked comprehensive validation in diverse real-world scenarios, limiting the generalizability of their findings. Studies like [61,65,67] focused on enhancing ZTA in IoV and connected vehicle settings through distributed edge solutions and federated learning. However, their research often did not extend to broader trust validation processes beyond specific use cases like caching or intrusion detection. Meanwhile, Refs. [64,68,69] explored innovative methods like quantum computing and reputation-enhanced schemes to bolster ZTA, but again, the absence of real-world applicability evaluations was a significant gap. Refs. [71,72,75] addressed ZTA in specialized environments such as 6G networks and mandatory access control frameworks, but they inadequately covered practical implementation details. 
Other studies, such as [73,74,78,81], reviewed modern network infrastructure applications for ZTA but lacked comprehensive analysis of hybrid deployment challenges. Furthermore, Refs. [79,80,88,96] examined ZTA in the context of cloud-native and industrial IoT settings. They focused on enhancing security through modern infrastructure but did not sufficiently address the scalability and integration issues that arise in complex, real-world IoT environments. Studies like [82,85,86] contributed to understanding the applicability of ZTA in edge computing and cyber-physical systems, yet their empirical validation remains a critical concern. Lastly, Refs. [40,81,87] provided innovative approaches to ZTA in healthcare or connected vehicle environments, using SDPs and dynamic infrastructure to secure access and communication. However, they too lacked comprehensive real-world deployment evaluations, which are essential for validating their proposed solutions. While such studies collectively highlight the importance of integrating network infrastructure and SDPs to implement ZTA across various domains, the recurring gaps in addressing practical deployment challenges, scalability, and real-world validation limit their applicability and effectiveness in diverse operational settings.

5.2. Deployed Variations of the Abstract Architecture

As captured in Algorithm 2, studies in this subsection share a generic procedural structure for exploring deployment variations of ZTA. The approach typically begins by identifying deployment challenges, followed by proposing deployment models such as device agent, enclave-based, or resource portal-based strategies. The process then involves addressing scalability issues and evaluating real-world applicability. These studies often culminate in analyzing gaps in deployment models, with a common observation being the lack of practical validation in large-scale environments.

Algorithm 2.

Summarized common structure in deployed variations of ZTA studies.

5.2.1. Device Agent/Gateway-Based Deployment

Two studies [115,116] explored the application of device agent and gateway-based deployment strategies within ZTA. Ref. [115] employed a device profiling method combined with deep reinforcement learning to detect anomalies, aiming to enhance the security of ZTA networks. However, this study inadequately addressed scalability issues, limiting its applicability across diverse and large-scale network environments. Meanwhile, Ref. [116] proposed a zero-trust model tailored to intrusion detection in drone networks using device agent-based deployment, but it lacked comprehensive validation, making its practical applicability in real-world scenarios uncertain. While both studies contributed valuable insights into the role of device agent/gateway-based deployment in ZTA, they did not fully explore scalability and real-world applicability, which are critical for the successful implementation of such models in dynamic environments.

5.2.2. Enclave-Based Deployment

No studies were found on enclave-based deployment, despite the critical role enclaves play in protecting sensitive data by isolating it from the rest of the system [15]. With the rise of hardware-based trusted execution environments (TEEs) like Intel SGX and AMD SEV, using enclaves can significantly strengthen ZTA by ensuring that, even if other security measures are compromised, sensitive data remains secure [117]. This gap in research leaves ZTA implementations vulnerable to sophisticated attacks that could exploit such unprotected areas, highlighting an urgent need for focused study and development in this area.

5.2.3. Resource Portal-Based Deployment

Despite its potential to enhance security by providing controlled access to critical resources, no studies were found that specifically address resource portal-based deployment within the context of ZTA. This lack of research represents a significant oversight, as secure resource portals can offer a robust solution for managing external access, especially in scenarios involving partners and clients who require limited, monitored access [15,118]. Given the increasing need to safeguard sensitive data while allowing selective external access, more research should be dedicated to developing and evaluating resource portal-based deployment strategies in ZTA to fill this gap.

5.2.4. Device Application Sandboxing

Ref. [119] was the only study found in this category. While the study proposed a hybrid isolation model for device application sandboxing within ZTA, it lacked comprehensive real-world implementation and performance evaluations. Device application sandboxing is essential for ZTA, as it isolates applications to prevent malicious code from affecting other parts of the system, thereby enhancing security [15]. Nevertheless, it appears to have been understudied since 2022. This is concerning given the increasing sophistication of cyber threats that exploit application vulnerabilities, and it leaves a critical gap in ZTA research and implementation strategies.

5.3. Trust Algorithm

The studies under this subsection follow a generalized workflow when developing and evaluating trust algorithms within ZTA. As detailed in Algorithm 3, the process typically begins with identifying trust and security challenges and culminates in deployment and monitoring in real-world scenarios. The workflow is iterative and passes through stages of algorithm development, simulation, optimization, validation, integration, and scalability evaluation. This abstract representation encapsulates the procedural elements shared across the studies in this category.

Algorithm 3.

Summarized common structure in trust algorithm development and integration in ZTA.

5.3.1. Risk-Based Trust Algorithms

Fourteen studies [120,121,122,123,124,125,126,127,128,129,130,131,132,133] explored various risk-based trust algorithms within the framework of ZTA. Of these, Refs. [120,122,123] primarily focused on enhancing decision-making processes by integrating risk assessment models but lacked concrete real-world deployment examples to validate their proposals. Studies like Refs. [121,127] proposed the use of blockchain and federated learning to manage trust dynamically in network environments, yet they inadequately addressed the scalability of these solutions in practical applications. Refs. [125,126,129] concentrated on developing frameworks to assess the maturity and trustworthiness of systems under ZTA, employing various analytical and algorithmic approaches. However, their limitations lay in the insufficient evaluation of these frameworks against diverse operational scenarios. On the other hand, Refs. [124,128] aimed to tackle specific challenges, such as those in 6G networks and intrusion detection, by formulating risk-based models but did not provide comprehensive details on practical implementation. Studies like Refs. [130,133] introduced innovative risk-scoring algorithms tailored to endpoint devices and network access control, focusing on dynamic adjustments based on security profiles. Despite their innovative approaches, they lacked a thorough analysis of the deployment challenges in real-world settings. Finally, Refs. [131,132] explored user behavior risks and zero-trust data management but failed to address the full spectrum of implementation constraints, leaving gaps in their practical relevance.

5.3.2. Context-Aware Trust Algorithms

Five studies [134,135,136,137,138] explored the development and implementation of context-aware trust algorithms within the framework of ZTA. Ref. [134] implemented a dual fuzzy methodology to enhance trust-aware authentication and task offloading in multi-access edge computing. However, the study lacked a detailed discussion on the challenges associated with real-world deployment, leaving a gap in understanding its practical applicability. Ref. [135] examined the influence of information security culture on the adoption of ZTA within UAE organizations. While the study provided valuable insights into cultural impacts on security adoption, its primary shortcoming was its limited generalizability beyond the specific cultural context of the UAE, making its findings less applicable to broader or more diverse environments. Ref. [136] introduced the ZETA framework, which integrates split learning with zero-trust principles to enhance security in autonomous vehicles operating within 6G networks. Despite its innovative approach, the study did not provide a comprehensive evaluation of the practical implementation and scalability of the framework in real-world settings, leaving unanswered questions about its feasibility and effectiveness under varied operational conditions. Ref. [137] proposed a continuous authentication protocol designed for ZTA that operates without a centralized trust authority, but it did not undergo thorough testing for scalability in real-world environments, which limits the ability to gauge its performance under different network loads and conditions. Ref. [138] presented a context and risk-aware access control approach tailored for zero-trust systems. 
Although Ref. [138] contributed significantly to the theoretical development of context-aware access controls, it fell short in evaluating the practical implementation of its approach across diverse operational environments, which is crucial for understanding the broader applicability and effectiveness of the proposed solutions. While these studies have made significant strides in developing context-aware trust algorithms within ZTA, they share common limitations in real-world deployment, scalability, and generalizability, which future research must address to fully realize the potential of these innovations.

5.3.3. Behavior-Based Trust Algorithms

No studies were identified that specifically focused on behavior-based trust algorithms within the context of ZTA. This gap is concerning, given the increasing sophistication of modern cyber threats, such as advanced persistent threats (APTs) and social engineering attacks, which often involve compromised insider credentials [15]. Traditional risk-based and static trust algorithms cannot adequately detect these threats, as they rely on predefined rules or known threat patterns [5]. In contrast, behavior-based algorithms excel at identifying anomalies by continuously monitoring user and entity behavior against established baselines. The absence of research in this area leaves a critical vulnerability in ZTA implementations, as these algorithms are uniquely capable of detecting subtle deviations indicative of insider threats or compromised accounts, which are otherwise challenging to identify with conventional methods.
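To make the idea of monitoring behavior against an established baseline concrete, the sketch below flags an observation that deviates strongly from a user's own history. It is an assumption-laden illustration rather than a method drawn from the reviewed studies: the z-score rule, the threshold of 3.0, the function names, and the sample data volumes are all hypothetical.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize past behavior as (mean, sample standard deviation)."""
    if len(history) < 2:
        raise ValueError("need at least two observations for a baseline")
    return mean(history), stdev(history)


def is_anomalous(value, baseline, z_threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly constant history: any change counts as a deviation.
        return value != mu
    return abs(value - mu) / sigma > z_threshold


# Hypothetical megabytes downloaded per session by one user.
history_mb = [100, 120, 90, 110, 105]
baseline = build_baseline(history_mb)

if __name__ == "__main__":
    for observed in (112, 500):
        print(observed, is_anomalous(observed, baseline))
```

A single-feature z-score is the simplest possible baseline test. A deployed system would more plausibly combine many behavioral features and update the baseline over time.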

5.3.4. Multi-Factor Trust Algorithms

Two studies [139,140] focused on the development and implementation of multi-factor trust algorithms within the context of ZTA. In [139], the authors explored the automation and orchestration of ZTA, with a particular emphasis on the challenges associated with developing trust algorithms. While this study provided valuable insights into the theoretical aspects of algorithm design, it fell short in terms of comprehensive real-world applicability testing, leaving questions about the practical deployment of such algorithms unanswered. In contrast, Ref. [140] proposed a data-driven zero-trust key algorithm aimed at enhancing security by dynamically adjusting trust levels based on multiple data inputs. This approach suggested a promising method for improving the responsiveness and accuracy of trust decisions in dynamic environments. However, like [139], this study lacked a thorough evaluation of real-world implementation challenges. The authors did not address how these algorithms would perform under the constraints of existing IT infrastructures or in the presence of APTs. While both studies contributed to the academic discourse on multi-factor trust algorithms in ZTA, they were limited by a lack of empirical testing and practical validation. Closing this gap requires future research that bridges the divide between theoretical algorithm design and practical implementation.
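As a generic illustration of adjusting a trust level from multiple inputs, the sketch below combines several normalized signals into one score and smooths it over time. It does not reproduce the algorithm of either reviewed study. The signal names, weights, smoothing factor, decision threshold, and the "step_up" outcome are hypothetical choices made for the example.

```python
def combined_trust(signals, weights):
    """Weighted average of trust signals, each expected in [0, 1]."""
    for name, value in signals.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"signal {name!r} out of range: {value}")
    total_weight = sum(weights[name] for name in signals)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(weights[name] * signals[name] for name in signals) / total_weight


def update_trust(previous, observed, smoothing=0.3):
    """Exponential smoothing: move the trust level toward new evidence."""
    return (1 - smoothing) * previous + smoothing * observed


def decide(trust, threshold=0.7):
    """Allow access, or require step-up verification below the threshold."""
    return "allow" if trust >= threshold else "step_up"


# Hypothetical inputs for one access request.
signals = {"device_health": 1.0, "auth_strength": 0.5, "network_reputation": 0.0}
weights = {"device_health": 2.0, "auth_strength": 1.0, "network_reputation": 1.0}

if __name__ == "__main__":
    score = combined_trust(signals, weights)
    print(score, decide(update_trust(0.8, score)))
```

The smoothing step is one simple way to make trust dynamic: a single poor observation lowers the level gradually, while repeated poor observations eventually push it below the threshold.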

5.3.5. Adaptive Trust Algorithms

Six studies [26,39,141,142,143,144] were found to have explored adaptive trust algorithms within ZTA. Of these, Ref. [141] proposed a transition from standard policy-based zero trust to an absolute zero-trust (AZT) model utilizing quantum-resistant technologies. While innovative, this study lacked comprehensive practical implementation and real-world validation, limiting its applicability in real-world scenarios. In [142], the authors implemented a blockchain-based zero-trust approach integrated with deep reinforcement learning specifically for supply chain security. However, this study inadequately addressed the practical deployment challenges and the energy consumption of the proposed solution, raising concerns about its feasibility. Ref. [39] critically analyzed various trust algorithms within ZTA, emphasizing the importance of continuous verification. Despite its detailed analysis, the study fell short in evaluating the implementation across real-world deployment scenarios, leaving a significant gap in practical applicability. Similarly, Ref. [143] explored continuous authentication methods within a zero-trust framework, focusing on the potential of adaptive algorithms to enhance security. However, the study’s major inadequacy was the limited practical implementation details and the absence of comprehensive performance evaluation metrics, which are crucial for assessing real-world effectiveness. In [144], an AI-based approach was proposed for implementing ZTA, focusing on the use of adaptive trust algorithms to improve security decision-making processes. However, the study lacked comprehensive evaluation across multiple deployment environments, which limits its generalizability and raises concerns about its adaptability. Finally, Ref. [26] developed a maturity framework for zero-trust security in multi-access edge computing. 
Although the framework in [26] was well conceived, it inadequately addressed the versatility of implementation across different platforms, which is essential for a robust zero-trust deployment. While these studies explored various adaptive trust algorithms that could significantly advance zero-trust principles, most failed to thoroughly address the practical implementation challenges and performance metrics necessary for real-world deployment.

5.4. Network/Environment Components

The studies under this subsection demonstrate a recurring pattern in the lifecycle of network/environment components within ZTA. As detailed in Algorithm 4, the process typically involves identifying operational needs, followed by development, installation, and validation of components. This generic workflow emphasizes the iterative nature of integration, monitoring, and feedback within real-world deployments, highlighting the shared approach across various studies.

Algorithm 4.

Summarized common lifecycle of network/environment components in ZTA.

5.4.1. Network Segmentation and Micro-Segmentation

Twelve studies [145,146,147,148,149,150,151,152,153,154,155,156] examined various aspects of network segmentation and micro-segmentation within ZTA. Among them, Refs. [145,146] focused on integrating hardware-level monitoring and zero-trust principles to secure zero-trust networks and cyber–physical systems. However, neither study extensively evaluated the adaptability of its approach across diverse environments. Refs. [147,148] developed software integrity protocols and reviewed security in the metaverse, respectively, but did not provide detailed implementation strategies. While Refs. [149,151] leveraged machine learning and AI to enhance micro-segmentation and adaptive authentication, they did not sufficiently address real-world deployment challenges. Other studies, such as [150,153,154], explored de-perimeterization and graph-based pipelines for zero trust, yet they lacked practical validation and comprehensive guidelines for implementation. Finally, Refs. [155,156] applied micro-segmentation to secure the Internet of Vehicles and digital forensics but did not thoroughly evaluate the applicability and versatility of their approaches in diverse operational settings.

5.4.2. Secure Communication Protocols

Seven studies [3,34,157,158,159,160,161] explored secure communication protocols within ZTA. Ref. [157] analyzed the intersection of intellectual property rights with information security technologies, touching on secure communication protocols. However, it inadequately addressed the practical implications of these technologies within operational environments and lacked an in-depth analysis of how the protocols would function under real-world constraints. Ref. [158] proposed integrating federated learning with a zero-trust approach to enhance security in wireless communications. While innovative, the study fell short in addressing the complexities of applying this integration across varied real-world scenarios, particularly in environments with diverse network conditions and infrastructure. Ref. [159] introduced a privacy-preserving authentication scheme based on ZTA, emphasizing secure communication protocols. Despite the novel approach, the study did not sufficiently explore the practical implementation and scalability of the proposed solution, leaving gaps in understanding how the protocols would perform in different operational contexts. Ref. [3] investigated the use of proxy smart contracts to enhance ZTA within decentralized oracle networks. Although the study provided valuable insights into the integration of blockchain with ZTA, it lacked a thorough analysis of practical deployment scenarios and real-world performance metrics, making it difficult to assess the effectiveness of the proposed solution. Ref. [160] presented a defense model integrating zero-trust principles with blockchain technology to secure smart electric vehicle chargers. The study was forward-looking but did not provide a detailed analysis of scalability and performance under varying network conditions, which are critical for assessing the real-world applicability of the proposed protocols. Ref. [161] implemented a blockchain-based mechanism for securely delivering enrollment tokens in zero-trust networks. While the study addressed secure token delivery, it lacked a comprehensive evaluation of performance across different real-world scenarios, limiting its practical relevance. Ref. [34] proposed a framework for sustained zero-trust security in mobile core networks for 5G and beyond, focusing on secure communication protocols, but it did not include an in-depth analysis of practical implementation challenges, such as the impact on network latency and the adaptability of these protocols in dynamic mobile environments. While these studies make valuable contributions to the development of secure communication protocols within ZTA, most fall short in addressing the practical challenges of real-world deployment, including scalability, performance metrics, and adaptability to diverse operational environments.

5.4.3. High-Performance Authentication and Authorization Systems

This subsection reviews three studies [157,162,163] that focus on the implementation and challenges of high-performance authentication and authorization systems within ZTA. Ref. [157] analyzed the intersection of intellectual property rights with information security technologies. While the study highlighted the importance of safeguarding intellectual property within secure environments, it inadequately addressed the practical implications of these technologies in operational settings, particularly how such systems could be deployed and maintained in real-world scenarios. Ref. [162] proposed a zero-trust decentralized mobile network user plane by implementing dNextG to enhance security through decentralized access control mechanisms. Although the study provided a robust framework for improving security in mobile networks, it did not discuss the scalability challenges that arise in diverse and expansive network environments, nor how the proposed solution would perform at different network scales, particularly in heterogeneous or large-scale deployments. Ref. [163] analyzed the cost-effectiveness of implementing ZTA in various organizations. While the study offered valuable insights into the financial aspects of ZTA deployment, it lacked a detailed discussion of how to adapt the proposed solutions to different organizational contexts, and it did not explore how varying organizational structures, resources, and operational needs might affect the effectiveness and adaptability of ZTA solutions. While these studies make important contributions to the field of high-performance authentication and authorization systems within ZTA, each exhibits limitations. The practical deployment implications, scalability challenges, and contextual adaptability of the proposed solutions were often inadequately addressed, highlighting areas for further research and improvement.

5.4.4. Continuous Monitoring and Logging Infrastructure

No studies specifically focusing on continuous monitoring and logging infrastructure within the ZTA framework were found. This gap is critical, as continuous monitoring not only enables the early detection of threats by identifying anomalies in real time but also provides the capability to mitigate attacks before they escalate [15]. Logging, particularly with write-once, read-only configurations, ensures accountability and preserves forensic evidence, which is invaluable for post-incident analysis and compliance [15,30]. Ignoring these components leaves ZTA implementations vulnerable to sophisticated attacks that exploit the absence of continuous oversight and reliable audit trails.
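One common way to make an audit log tamper-evident in software is to chain each entry to the hash of its predecessor. The sketch below illustrates that idea only. It is a swapped-in technique (hash chaining), not a write-once storage configuration and not a design taken from the cited sources, and the `AppendOnlyLog` class and event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash, event):
    """SHA-256 over the previous hash plus a canonical JSON form of the event."""
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class AppendOnlyLog:
    """In-memory hash-chained log: edits to past entries break verification."""

    def __init__(self):
        self._entries = []

    def append(self, event):
        """Add an event and return the hash that seals it into the chain."""
        prev = self._entries[-1]["hash"] if self._entries else GENESIS
        entry = {"event": dict(event), "prev": prev, "hash": _digest(prev, event)}
        self._entries.append(entry)
        return entry["hash"]

    def verify(self):
        """Recompute the chain and report whether every link still matches."""
        prev = GENESIS
        for entry in self._entries:
            if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = AppendOnlyLog()
    log.append({"user": "alice", "action": "login", "resource": "portal"})
    log.append({"user": "alice", "action": "read", "resource": "records"})
    print(log.verify())
```

Hash chaining detects modification after the fact but does not prevent it. Preserving forensic evidence in practice would also depend on storage-level write protection and on anchoring the latest hash somewhere an attacker cannot rewrite.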

5.4.5. Resilient and Redundant Network Architecture

Ref. [39] was the only study identified in this category. The study critically analyzed the infrastructure components required to support ZTA, emphasizing the importance of resilient and redundant network designs to ensure continuous availability and robust security. However, it lacked a thorough examination of practical implementation challenges and did not provide detailed evaluations of the architecture’s adaptability in real-world scenarios. Resilient and redundant network architecture is vital for ZTA as it ensures that network disruptions or failures do not compromise security [15]; nonetheless, this area remains underexplored, especially concerning the practical integration of such architectures in diverse operational environments. The limited focus on implementation adaptability in [39] highlights a significant gap in current ZTA research, necessitating further investigation to address these challenges effectively.

5.4.6. Integration with Cloud and Hybrid Environments

Six studies [28,38,164,165,166,167] explored the integration of ZTA with cloud and hybrid environments, each addressing a different aspect of this area. Ref. [38] developed a flexible ZTA tailored for the cybersecurity of industrial IoT infrastructures. However, the study did not include a comprehensive evaluation of the architecture’s scalability and performance in diverse real-world settings, leaving questions about its practical applicability. Ref. [164] proposed a comprehensive framework for migrating to ZTA with a focus on network and environment components. Despite its thorough design, the study’s major shortcoming was the limited empirical validation of the proposed framework, making it difficult to assess its effectiveness in practice. Ref. [28] investigated the feasibility of applying ZTA to secure the metaverse. While the study provided valuable insights into the potential benefits and challenges, it lacked an in-depth analysis of the performance impacts and practical challenges of deployment in this emerging digital environment. Ref. [165] examined the potential integration of ZTA with emerging 6G technologies, highlighting various opportunities and challenges. However, the study was limited by its lack of detailed analysis of specific implementation strategies, leaving a gap in understanding how ZTA could be effectively deployed within 6G networks. Ref. [166] explored secure and scalable cross-domain data sharing in a zero-trust cloud-edge-end environment using sharding blockchain. Although the study presented a novel approach, it did not sufficiently discuss the practical implications of sharding blockchain in real-world ZTA scenarios, particularly in terms of scalability and security. Ref. [167] augmented zero-trust network architecture to enhance security in virtual power plants.
The study, while innovative, was constrained due to its limited focus on scalability in highly dynamic environments, which is a crucial factor in the successful deployment of ZTA in such settings. While these studies contribute to the growing body of knowledge on integrating ZTA with cloud and hybrid environments, they each exhibit significant gaps, particularly in empirical validation, scalability assessment, and the practical challenges of real-world implementation.

5.5. Major Themes Identified

Our evaluation of the primary studies revealed several overarching themes.

5.5.1. Overstatement of Research Success

A significant number of studies claim to have comprehensively addressed the challenges of ZTA. However, a deeper examination reveals a stark contrast between these claims and the actual rigor of their methodologies and adherence to critical ZTA principles. Among the 136 studies evaluated, the criterion for “academic rigor” shows a somewhat balanced distribution with 85 occurrences of a full score (1) and 52 occurrences of a partial score (0.5). This suggests that, while many studies demonstrate strong theoretical foundations, there is still a notable portion that lacks comprehensive methodological depth. More concerning is the evaluation against the “ZTA 3-Step Completeness” criterion, which is fundamental according to the NIST ZTA guideline. In this area, only 2 studies achieved a full score (1), while a staggering 132 studies received a partial score (0.5), and 3 studies failed entirely (score of 0). This indicates that 98.54% of the studies did not fully meet the critical requirement of ZTA 3-Step Completeness mandated by NIST ZTA [15]. Despite these shortcomings, the studies often present an overly optimistic narrative, downplaying these gaps and overemphasizing their contributions.
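As a quick check on the arithmetic, the 98.54% figure can be reproduced from the three counts reported above for the “ZTA 3-Step Completeness” criterion. The following is a minimal sketch; the names `completeness_counts` and `share_below_full` are illustrative and are not taken from any evaluation tooling used in the review. The percentage is computed over the sum of the three reported counts.

```python
# Counts per score for "ZTA 3-Step Completeness", as reported in the text.
completeness_counts = {1.0: 2, 0.5: 132, 0.0: 3}


def share_below_full(counts):
    """Return the percentage of scored entries that fall below a full score of 1."""
    total = sum(counts.values())
    below_full = sum(n for score, n in counts.items() if score < 1.0)
    return 100.0 * below_full / total


total_scored = sum(completeness_counts.values())
print(total_scored)                                     # 137
print(round(share_below_full(completeness_counts), 2))  # 98.54
```

The same function can be applied to the “academic rigor” counts to obtain the corresponding share of partial scores.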

5.5.2. Mixed Quality in Research Applicability, Versatility, and Practicality

The quality of research concerning applicability, versatility, practicality, and research ethics varied significantly. This is evident from the diverse scores assigned across the studies. For instance, within the Network Infrastructure and Software Defined Perimeters category, scores for practicality and versatility ranged from as high as 1 to as low as 0, indicating inconsistencies in how well these studies can be applied in real-world scenarios. The average score in this category for practicality was approximately 0.5, which suggests that many studies struggle with implementing their proposed solutions in a way that is both versatile and practical across different environments. This variability highlights the need for more rigorous and contextually adaptable research that addresses the broader challenges of implementing ZTA in diverse operational settings.

5.5.3. Selective Coverage of ZTA Topics

A critical observation is the uneven focus on certain aspects of ZTA in the existing research. Topics like Network Segmentation and Micro-Segmentation and Network Infrastructure and Software Defined Perimeters have been extensively explored, with 12 and 68 studies, respectively, indicating a strong emphasis on network isolation and infrastructure protection. However, significant areas such as Enclave-Based Deployment, Resource Portal-Based Deployment, Behavior-Based Trust Algorithms, and Continuous Monitoring and Logging Infrastructure are notably absent from the literature. This selective attention to specific ZTA components leaves critical security mechanisms underrepresented, which is concerning, given the complex and evolving nature of cyber threats. Enclave-Based Deployment could provide robust isolation for sensitive data but remains unexplored, limiting the understanding of its implementation and effectiveness. Similarly, Behavior-Based Trust Algorithms, essential for detecting sophisticated insider threats and compromised credentials, are missing, potentially leaving organizations vulnerable to undetected malicious activities. Furthermore, the absence of studies on Continuous Monitoring and Logging Infrastructure neglects the need for real-time threat detection and accountability through forensic evidence, which are fundamental for proactive security management and post-incident analysis.

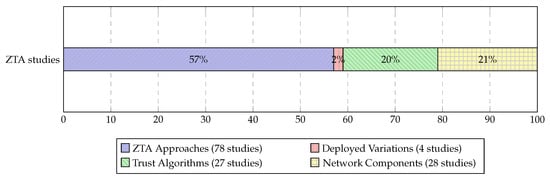

As depicted in Figure 3, the majority of studies (57%) focus on ZTA Approaches. In contrast, only 2% cover Deployed Variations, while 20% and 21% focus on Trust Algorithms and Network Components, respectively. This imbalance reveals a pronounced research preference for high-level architectural concepts over practical deployment and comprehensive trust mechanisms, and it shows the need for a more balanced approach that includes underrepresented yet critical areas to provide a holistic understanding of ZTA implementations.

Figure 3. Percentage distribution of studies across major ZTA taxonomy classifications.

5.6. Addressing Research Questions RQ1 and RQ2

The evaluation of existing primary research on ZTA reveals mixed success in addressing the first two research questions (RQ1 and RQ2), while the latter three (RQ3, RQ4, and RQ5) remain unanswered.

RQ1: How can “trust” and “zero trust” be properly defined within the context of ZTA? Answered (although varied in quality). The surveyed studies have explored various theoretical underpinnings of trust and zero trust within ZTA. Several frameworks attempt to define trust as a dynamic, context-sensitive construct, where every interaction must be continuously verified. These definitions have laid the groundwork for understanding trust as a minimal and temporary concept within ZTA, which is fundamental for its implementation. However, the depth and clarity of these definitions differ across studies, with some failing to provide practical guidelines for operationalizing zero trust in diverse environments. Thus, while the question of how to define trust and zero trust has been addressed, the variation in quality and practical applicability limits the overall effectiveness of the existing answers.

RQ2: What are the different ways to achieve effective implementation of ZTA across diverse operational environments? Answered (although varied in quality). The strategies for implementing ZTA across various environments have been examined, with a particular focus on risk-based access controls, network segmentation, and identity governance. Studies have outlined different methods to achieve ZTA, emphasizing the integration of continuous authentication, policy enforcement, and micro-segmentation as key mechanisms. However, the primary weakness lies in the lack of empirical testing in highly varied environments, such as multi-cloud or hybrid infrastructures. While the theoretical models provide a broad understanding of the strategies, the absence of real-world deployment data and scalability assessments means that the question has been only partially addressed with inconsistent quality.

RQ3: How have people factors, including user behavior, security culture, and insider threats, evolved in the context of ZTA implementation? Not answered. No study provided an in-depth analysis of how people-related factors have evolved since the initial ZTA implementations. The existing research failed to consider the dynamic nature of human factors such as changing behavioral patterns, increased sophistication of social engineering attacks, or the evolving role of security culture within organizations. For transparency, we note that a few survey papers gesture at people-related considerations (e.g., multivocal practitioner perspectives and strategic culture claims in [8,9]), but they do not operationalize behavior change, insider-threat evolution, or measurement—hence, our overall judgment remains unchanged.

RQ4: What process factors have evolved in the implementation and management of ZTA since the earlier studies? Not answered. The existing studies did not provide detailed evaluations of how these processes have evolved in response to new security challenges. The research remained focused on theoretical aspects of process management, with little attention paid to real-world adaptations and changes that have occurred over time. Some survey articles discuss high-level governance or process models in specific domains (for example, strategic orientation in [1] and cloud-focused governance themes in [10]), but they do not supply longitudinal or deployment-backed evidence of process evolution.

RQ5: How have technological factors, including advancements in cybersecurity tools, cloud environments, and automation, influenced ZTA implementation, and is the current ZTA knowledge base still relevant? Not answered. The surveyed studies largely failed to consider whether foundational ZTA frameworks remain relevant in light of these technological changes. There was minimal exploration of how modern cybersecurity technologies, such as machine learning, AI-driven analytics, or cloud-native environments, have transformed ZTA strategies. Several survey works catalog technology advances in domain-specific contexts (e.g., 6G integration in [7], cloud-centric analyses in [10], and big data/IoT environments in [14], with verification-oriented perspectives in [2]); however, they stop short of testing the continued relevance of ZTA knowledge via deployment evidence, scalability studies, or comparative baselines, so they do not alter our conclusion for RQ5.

6. Generative AI’s Impact on ZTA: A Cyber Fraud Kill Chain Analysis

To understand how ZTA can counter modern fraud tactics enabled by generative AI, we propose the Cyber Fraud Kill Chain (CFKC). The CFKC maps seven distinct fraud stages and uses them to analyze the progressive weakening of ZTA effectiveness, which manifests as increased false-negative rates, longer attacker dwell time, or policy bypasses. Unlike traditional security models focused on access control and asset protection, the CFKC addresses the evolving threat landscape shaped by generative-AI-driven fraud techniques, providing a precise lens through which to analyze how core ZTA controls erode under advanced, AI-powered attacks. As foreshadowed in Sections 2 through 5, generative AI not only motivates the CFKC but also redefines the core ZTA control surfaces (identity, policy, and telemetry), necessitating the seven-stage analysis below.

6.1. Taxonomy of Generative-AI Threats to ZTA

Why a dedicated taxonomy is necessary. Generative models do not constitute a single attack vector; each model class subverts a different control assumption in NIST SP 800-207. Diffusion pipelines counterfeit artifacts that identity-governance workflows still trust; instruction-tuned large language models (LLMs) dismantle linguistic heuristics that underpin risk scoring; reinforcement learning (RL) agents discover segmentation gaps faster than policy engineers can close them; and adversarial generators poison behavioral baselines over time. Treating “AI” as undifferentiated automation therefore masks the concrete ways in which Zero-Trust assurances degrade.

- Synthetic-identity fabrication undermines the “verify explicitly” maxim because liveness and document-verification chains cannot attest to the authenticity of inputs that never existed in the physical domain.

- Automated spear-phishing bypasses context and behavior filters by producing messages whose semantics and stylistics fit the recipient’s benign profile distribution.

- Deep-fake executive impersonation defeats presence-based out-of-band checks; once the adversary voices or visualizes a trusted party in real time, the residual safeguard is solely human judgment.

- Adversarial policy evasion shows that segmentation is only as strong as the search effort of an automated agent; RL quickly finds mis-scoped maintenance VLANs and orphaned service accounts.

- Covert exfiltration illustrates that “inspect and log all traffic” fails when traffic morphology itself is generated to satisfy detectors trained on historical corpora.

- Adaptive-trust poisoning corrodes trust scores silently: incremental GAN-generated traces shift model decision boundaries without triggering rate-based alarms.
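The last item in this list, adaptive-trust poisoning, can be made concrete with a toy example. The sketch below is an illustration under strong simplifying assumptions, not a model of any deployed trust algorithm: the scorer, its parameters (`alpha`, `tolerance`, `max_step`), and the numeric traces are all hypothetical, and a deterministic ramp stands in for generated traces (no generative model is involved). It shows only the mechanism described above: small accepted increments drag an adaptive baseline along, so a value that would be rejected if presented abruptly is eventually accepted without any alarm.

```python
def run_detector(trace, baseline=10.0, alpha=0.2, tolerance=5.0, max_step=2.0):
    """Toy adaptive-trust scorer (hypothetical, for illustration only).

    An observation is accepted if it lies within `tolerance` of the running
    baseline and differs from the previous observation by at most `max_step`
    (the rate-based alarm). Accepted observations update the baseline as an
    exponential moving average with weight `alpha`.
    Returns the number of alarms raised and the final baseline.
    """
    alarms = 0
    previous = baseline
    for value in trace:
        rate_alarm = abs(value - previous) > max_step
        out_of_profile = abs(value - baseline) > tolerance
        if rate_alarm or out_of_profile:
            alarms += 1  # rejected: the baseline is left unchanged
        else:
            baseline = (1 - alpha) * baseline + alpha * value
        previous = value
    return alarms, baseline


# Abrupt attempt: jump straight from the benign level (10) to 30.
abrupt_alarms, abrupt_baseline = run_detector([30.0])

# Gradual attempt: reach the same value in 40 increments of 0.5.
gradual_trace = [10.0 + 0.5 * i for i in range(1, 41)]
gradual_alarms, gradual_baseline = run_detector(gradual_trace)

print(abrupt_alarms, gradual_alarms, gradual_baseline > 25.0)  # 1 0 True
```

In this toy setting the abrupt jump triggers an alarm, whereas the gradual trace ends at the same value with no alarms and a baseline that has moved far from its benign starting point.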

Implication for ZTA research. Most ZTA studies surveyed in Section 2 and Section 5 continue to evaluate controls in isolation and against static threat models. The taxonomy above (Table 3) highlights that generative AI invalidates the composability premise of zero trust: once input authenticity is uncertain, individual control assurances no longer add up to system-level guarantees. The next subsection operationalizes this taxonomy by embedding each threat family into a temporal attacker model, the Cyber Fraud Kill Chain (CFKC). This shift from a control-centric to an attacker-centric perspective clarifies when, not just where, generative AI pressures ZTA.

Table 3. Principal classes of generative-AI threats and their alignment with NIST SP 800-207 components and principles. The evidence column aggregates items published in 2023–2024 and indicates the source type: peer-reviewed vs. industry/incident-response (IR). Where industry/IR items are cited, we prefer peer-reviewed anchors when available and use short triangulation notes; extended incident descriptions remain in Appendix C.

6.2. The Cyber Fraud Kill Chain