Abstract

Hardware systems are foundational to critical infrastructure, embedded devices, and consumer products, making robust security assurance essential. However, existing hardware security standards remain fragmented, inconsistent in scope, and difficult to integrate, creating gaps in protection and inefficiencies in assurance planning. This paper proposes a unified, standard-aligned, and threat-validated taxonomy of Security Objective Domains (SODs) for hardware security assurance. The taxonomy was inductively derived from 1287 requirements across ten internationally recognized standards using AI-assisted clustering and expert validation, resulting in 22 domains structured by the Boundary-Driven System of Interest model. Each domain was then validated against 167 documented hardware-related threats from CWE/CVE databases, regulatory advisories, and incident reports. This threat-informed mapping enables quantitative analysis of assurance coverage, prioritization of high-risk areas, and identification of cross-domain dependencies. The framework harmonizes terminology, reduces redundancy, and addresses assurance gaps, offering a scalable basis for sector-specific profiles, automated compliance tooling, and evidence-driven risk management. Looking forward, the taxonomy can be extended with sector-specific standards, expanded threat datasets, and integration of weighted severity metrics such as CVSS to further enhance risk-based assurance.

1. Introduction

The security of hardware systems is a foundational prerequisite for trust in connected, safety-critical domains such as healthcare, industrial automation, and embedded Internet of Things (IoT) devices [1,2]. Hardware-level compromise, whether via physical tampering [3], counterfeit components [4], or vulnerable firmware [5], can lead to operational disruption, regulatory non-compliance, and, in the worst cases, threats to human safety [6]. Unlike software vulnerabilities, which can often be remediated through patches [7], hardware flaws frequently require costly physical interventions, product recalls, or complete device replacement [8], making preventive and systematic assurance essential.

Hardware devices are particularly exposed to weaknesses that differ fundamentally from software. Physical attack surfaces such as side-channel leakage, probing, and fault injection allow adversaries to extract secrets or alter behavior [3,9]. Immutable flaws cannot be corrected after deployment, increasing the cost of failure [7,8]. Supply chain risks introduce counterfeit or unverified components that undermine trust at manufacturing and integration stages [4,10]. Even test and debug interfaces, if left exposed, can be exploited to bypass protections and escalate attacks [11]. These characteristics highlight why hardware assurance requires specialized approaches beyond software-focused methods.

Over the past two decades, multiple international standards have been developed to address hardware security, including FIPS 140-3 [12], ISO/IEC 20897 [13], NIST SP 800-193 [14], ISO/IEC 20243 [15], IEC 62443 [16], and UL 2900 [17]. While robust within their respective domains, these frameworks remain fragmented, overlapping, and inconsistent in scope and terminology [18,19]. This fragmentation produces three persistent challenges:

- Redundancy: Similar protections (e.g., secure boot, cryptographic validation) are repeated across multiple standards, creating duplicated assurance efforts [19,20].

- Incomplete coverage: Applying a single standard rarely addresses the full set of hardware, supply chain, and lifecycle risks [21,22].

- Integration difficulty: Divergent terminology and threat scope hinder the creation of unified, end-to-end assurance strategies [23,24].

Compliance with a single or even multiple standards does not guarantee resilience against documented threats. Gaps in coverage can leave organizations vulnerable to real-world attack vectors such as side-channel key extraction [9], debug interface exploitation [11], or supply chain insertion of counterfeit components [10]. Moreover, existing frameworks rarely integrate validation against threat intelligence, limiting their ability to prioritize assurance efforts based on actual exploitation trends [25].

To address these issues, this paper introduces a unified, standard-aligned, and threat-validated taxonomy of Security Objective Domains (SODs) for hardware security assurance. The taxonomy is derived inductively from the systematic synthesis of 1287 requirements across ten widely adopted international standards. These requirements were consolidated into 22 domains and mapped to the Boundary-Driven System of Interest (SoI) Model to define clear assurance boundaries. Each domain is validated against documented hardware-related threats from CWE/CVE databases, regulatory advisories, and incident reports, producing a quantitative mapping that supports risk-based prioritization and assurance gap analysis.

This paper makes three contributions:

- A unified taxonomy of 22 SODs, eliminating redundancy and harmonizing terminology across ten major hardware security standards.

- Threat-validated assurance mapping, linking the 22 domains to 167 documented threats and providing a quantitative basis for prioritization and assurance analysis.

- Integration with the Boundary-Driven SoI Model, enabling structured requirement mapping and clear assurance scope definition across heterogeneous standards.

The remainder of this paper is organized as follows: Section 2 provides background on hardware security assurance and relevant standards; Section 3 describes the multi-stage methodology; Section 4 presents the taxonomy and validation results; Section 5 discusses implications, limitations, and future work; and Section 6 concludes.

2. Background

2.1. Security Assurance for Hardware Systems

Security assurance is the systematic process of evaluating and substantiating the trustworthiness of a system, ensuring that it satisfies defined security requirements and can withstand identified threats throughout its lifecycle [26,27]. In the context of hardware systems, assurance must address risk characteristics that differ fundamentally from software security. These include:

- Physical attack surfaces, such as invasive probing, side-channel leakage, and fault injection [3,9];

- Immutable vulnerabilities, since many hardware flaws cannot be patched after deployment, making proactive assurance essential [7,8];

- Supply chain complexity, where unverified or counterfeit components can compromise trust during manufacturing or integration [4,10];

- Lifecycle dependencies, requiring security integration from initial design through manufacturing, provisioning, updates, and decommissioning [21].

While certification schemes, such as Common Criteria and FIPS 140-3, provide formal evaluation against well-defined criteria, such certifications are often narrow in scope and do not guarantee continuous resilience against emerging threats [25]. A broader assurance approach is therefore needed, one that integrates compliance with standards into an ongoing, evidence-based risk management process informed by real-world threat intelligence [28,29].

In addition to addressing technical weaknesses, assurance also requires systematic risk assessment and management. Risk-based methods ensure that assurance objectives are prioritized not only according to compliance requirements but also according to the likelihood and impact of exploitation. Frameworks such as the NIST Risk Management Framework (RMF) and subsequent adaptations for industrial control systems highlight how risk analysis can guide resource allocation and inform continuous monitoring. Recent research, for example, has applied model-based systems engineering to cybersecurity risk assessment in critical sectors, showing that structured risk models can bridge technical requirements with organizational decision-making [22]. Similar approaches have also been demonstrated in IT infrastructure, where the NIST framework has been applied to analyze cybersecurity risks and threats in a structured and auditable manner [30]. Integrating such perspectives into hardware security assurance strengthens its ability to support evidence-driven prioritization and lifecycle resilience.

2.2. Overview of Hardware Security Standards and Divergence in Scope

International and industry-specific hardware security standards offer essential protections, but their objectives, scope, and terminology vary significantly. While each has strong domain relevance, these differences make it challenging to assemble a cohesive assurance strategy without introducing redundancies or leaving unaddressed risks.

2.2.1. Domain-Specific Focus

Standards are often developed for particular operational contexts, which shapes their requirements and omissions. For example, FIPS 140-3 specifies security requirements for cryptographic modules, including tamper resistance, key zeroization, and algorithm validation [12], but does not address upstream supply chain authenticity. NIST SP 800-193 focuses on platform firmware resilience, prescribing secure boot, firmware validation, and recovery mechanisms [14], yet it assumes the trustworthiness of underlying hardware components. Similarly, ISO/IEC 20243 targets supply chain integrity through vendor verification and counterfeit detection [15], but omits runtime protections such as side-channel attack resilience.

2.2.2. Overlapping Definitions

When standards address similar protections, they may differ in how those controls are defined and implemented. For example, secure boot is covered in FIPS 140-3 for cryptographic modules [12], in NIST SP 800-193 for platform firmware [14], and in UL 2900-2-1 for healthcare devices [17]. However, these specifications differ in cryptographic chain depth, rollback handling, and recovery requirements. Such differences complicate cross-standard alignment, especially for organizations pursuing multi-standard compliance.

2.2.3. Variation in Assurance Levels

Standards also differ in how rigorously requirements are verified. ISO/IEC 20897 mandates resilience against side-channel and fault-injection attacks, often requiring empirical laboratory testing [13]. In contrast, IEC 62443-4-2 may treat such protections as optional, depending on the assessed risk level [16]. These differences affect the comparability of certification outcomes and the portability of assurance claims across domains.

2.2.4. Implications for Assurance

There is no inherent mechanism for harmonization because these standards have evolved in parallel to serve distinct sectors, such as cryptography, industrial automation, healthcare devices, or supply chain management. Organizations adopting multiple standards must reconcile differences in terminology, threat scope, and assurance levels, often through internal mappings or custom profiles. Without deliberate integration, protections may be inconsistently implemented, tested multiple times, or omitted entirely in areas where no single standard applies.

2.3. The Boundary-Driven SoI Model

The Boundary-Driven SoI Model used in this research builds on prior work by Wen and Katt, where it was originally proposed in the context of software system security assurance evaluations (SAE) [26,31]. The SoI is the specific system, device, or component under SAE, identified by stakeholders as the focus of assurance activities. Establishing the SoI and its boundary prevents ambiguity and ensures that evaluation efforts remain directed and relevant [26].

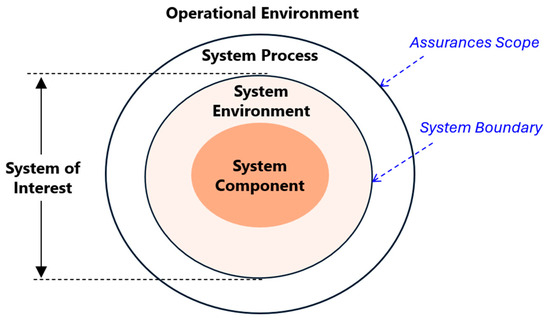

The Boundary-Driven SoI Model used in this research is illustrated in Figure 1. The system boundary delineates the SoI from its external environment, separating elements that are directly assessable from those that are outside the scope of direct evaluation. In the original model, the system boundary enclosed:

Figure 1.

The boundary-driven SoI model.

- System Component: The core hardware forming the trust foundation, subject to direct evaluation.

- System Environment: Integrated or peripheral elements within the boundary whose trustworthiness is essential to the SoI.

External to this boundary lies the Operational Environment, which influences security but is typically beyond the organization’s direct control and is documented as assumptions (e.g., networks, users, physical conditions).

In this study, the model is extended to incorporate a System Process layer positioned between the System Environment and the Operational Environment. System Process is defined as the set of security-relevant operational workflows, such as provisioning, manufacturing, configuration, update delivery, and incident response. These workflows directly affect the trustworthiness of system components and environments throughout their lifecycle. This layer is included inside the assurance scope because these processes, if compromised, can introduce vulnerabilities or bypass technical safeguards even when hardware and environmental protections are in place.

This extension is necessary because existing frameworks tend to emphasize technical artifacts while leaving operational processes implicit. For instance, NIST SP 800-193 provides comprehensive guidance on firmware integrity and recovery but does not address how provisioning or update workflows are secured over time. Similarly, the Trusted Computing Group (TCG) [32] device trust model focuses on hardware roots of trust and cryptographic anchors but does not explicitly integrate supply chain or patching practices into its evaluation boundaries. PSA Certified defines baseline security goals for IoT devices, yet it also abstracts away lifecycle processes, assuming they are managed externally.

By explicitly incorporating System Process (e.g., provisioning, secure manufacturing, update distribution, patching, auditing), the SoI model captures assurance dependencies that persist beyond the initial design phase. These processes are often the entry points for adversaries, for example, compromised provisioning pipelines leading to rogue device identities, or weak update signing enabling rollback attacks. Including them within the assurance boundary ensures that the taxonomy reflects not only static hardware protections but also the dynamic operational workflows that sustain trustworthiness throughout the device lifecycle.

3. Methodology

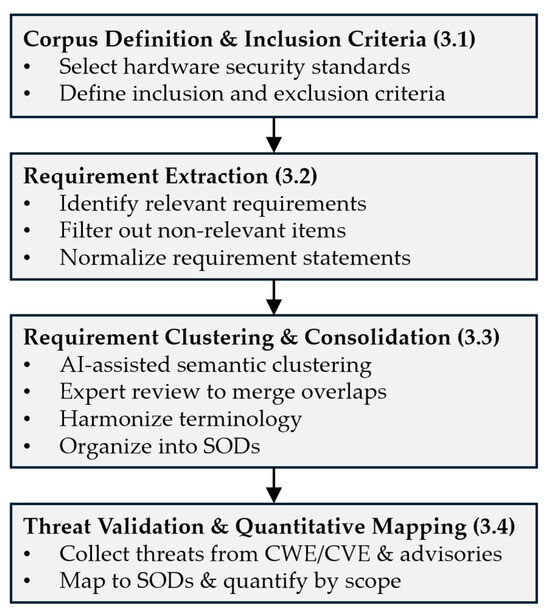

This study adopts a four-stage methodology to develop and validate a unified taxonomy of SODs for hardware security assurance. Figure 2 presents the four-stage methodology used to develop and validate the unified taxonomy. It outlines the progression from corpus definition to threat validation: (1) corpus definition, (2) requirement extraction, (3) requirement clustering and consolidation, and (4) threat validation with quantitative mapping. The stages align with Section 3.1, Section 3.2, Section 3.3 and Section 3.4. First, the corpus of standards is established with defined inclusion and exclusion criteria (Section 3.1). Next, relevant requirements are extracted (Section 3.2) and consolidated through AI-assisted clustering and expert review into distinct SODs (Section 3.3). Finally, each SOD is validated against documented threats to generate a quantitative mapping across assurance scopes (Section 3.4). This approach ensures the taxonomy is inductively derived from empirical requirements and grounded in real-world exploitation evidence, rather than imposed from a purely conceptual model.

Figure 2.

Methodological flow for deriving the SOD taxonomy.

3.1. Corpus Definition and Inclusion Criteria

The first stage of the methodology involved defining the corpus of security standards to be synthesized into the taxonomy. The selection process was designed to ensure that the corpus captures both the breadth of internationally recognized hardware security requirements and the depth of domain-specific assurance provisions. The focus was on standards that are explicitly relevant to hardware platforms, subsystems, and supporting processes, rather than purely software-oriented specifications.

Candidate standards were identified through a structured search of publicly available repositories maintained by recognized standards development organizations (SDOs), regulatory bodies, and sector-specific consortia (e.g., ISO, IEC, NIST, ETSI, GSMA, PCI-SIG). This search was supplemented by targeted consultation with subject-matter experts in cryptographic modules, trusted computing, supply chain security, and embedded device assurance to ensure that no high-impact standard was overlooked.

To ensure methodological transparency and reproducibility, each candidate standard was evaluated against the following inclusion criteria:

- Hardware relevance: The standard must define requirements, controls, or assurance activities applicable to hardware components or their integration into larger systems.

- International recognition: The standard must be published or endorsed by an SDO, regulatory agency, or widely recognized industry alliance with demonstrable cross-border applicability.

- Recency and version stability: The most recent official version or amendment must be available, with a clearly defined version identifier and publication date.

- Evaluability: The standard must contain security requirements or criteria that can be operationalized into discrete, assessable statements (e.g., implementation guidance, testable controls).

- Public availability: Full or authorized access to the standard’s requirements must be possible to allow for detailed extraction and coding.

Standards that were superseded, withdrawn, or limited to purely procedural governance without technical security requirements were excluded. Where a standard existed in multiple parts or profiles (e.g., a base specification with domain-specific supplements), only those components with direct hardware security relevance were included.

The application of these criteria resulted in the selection of ten widely adopted international standards spanning domains such as cryptographic module validation, trusted platform design, secure manufacturing, and device lifecycle assurance. Each of the ten standards contributes a distinct assurance perspective, ensuring coverage across cryptographic validation, firmware integrity, supply chain trust, industrial control systems, and medical devices. For example, FIPS 140-3 and NIST SP 800-193 define technical protections at component and platform levels, ISO/IEC 27001 [33] establishes requirements for information security management systems, ISO/IEC 20243 addresses supply chain authenticity, IEC 62443 focuses on industrial embedded devices, and UL 2900 provides requirements for network-connected medical systems. Collectively, this set captures both horizontal requirements (e.g., cryptography, secure boot) and vertical, sector-specific provisions (e.g., industrial, healthcare). Sector-specific frameworks such as ISO/SAE 21434 (automotive) [34] or DO-326A (aerospace) [35] were deliberately excluded to preserve generality; these remain important targets for future extensions of the taxonomy. Table 1 lists each included standard, its issuing body, publication year, version, primary scope, and assigned domain tags used for later thematic analysis.

Table 1.

Corpus of hardware security standards.

3.2. Requirement Extraction and Coding

The objective of this stage was to build a normalized, traceable dataset of hardware-related security requirements drawn from multiple authoritative standards. This dataset served as the analytical foundation for the clustering and consolidation processes described in Section 3.3.

3.2.1. Extraction Procedure

This step aimed to identify all hardware security requirements in the selected standards while preserving traceability to their original clauses. The process combined AI-assisted pre-filtering with expert manual review to balance efficiency and accuracy.

First, the corpus of standards was indexed and analyzed using keyword searches (e.g., firmware, cryptographic, tamper, secure boot, key storage) and vector-based semantic matching to detect clauses with high relevance to hardware security. This automated pass reduced the initial 4893 clauses to 1642 candidates for manual review.
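The two-pass pre-filter described above (keyword search OR semantic match) can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the keyword list is abridged from the examples in the text, while the function names, the pre-computed embeddings supplied by the caller, and the 0.5 semantic threshold are assumptions.

```python
import math
import re

# Abridged keyword list, taken from the examples given in the text.
HW_KEYWORDS = ("firmware", "cryptographic", "tamper", "secure boot", "key storage")


def keyword_hit(clause: str, keywords=HW_KEYWORDS) -> bool:
    """True if any keyword appears as a whole word or phrase (case-insensitive)."""
    text = clause.lower()
    return any(re.search(r"\b" + re.escape(k) + r"\b", text) for k in keywords)


def cosine(u, v) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def prefilter(clauses, clause_vecs, topic_vecs, sim_threshold=0.5):
    """Keep clauses that hit a keyword OR are semantically close to a topic vector.

    clause_vecs[i] embeds clauses[i]; topic_vecs embed short descriptions of
    hardware-security topics. Both are supplied by the caller, and the 0.5
    threshold is an illustrative assumption.
    """
    kept = []
    for clause, vec in zip(clauses, clause_vecs):
        best = max((cosine(vec, t) for t in topic_vecs), default=0.0)
        if keyword_hit(clause) or best >= sim_threshold:
            kept.append(clause)
    return kept
```

In this sketch, a clause survives if either signal fires, mirroring the idea that keyword search alone misses paraphrased requirements while semantic matching alone may miss exact technical terms.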

Two hardware security experts then independently reviewed each candidate against defined inclusion and exclusion criteria:

- Inclusion: The clause specifies a requirement for protecting hardware platforms, embedded firmware, or processes within the assurance boundary defined by the Boundary-Driven SoI Model.

- Exclusion: The clause relates solely to software security, contains only high-level policy statements without enforceable controls, or addresses operational activities outside the assurance boundary.

This review yielded 1287 validated hardware-related requirements, which formed the input to the coding and consolidation steps described in Section 3.2.2.

3.2.2. Coding Scheme

Each validated requirement from Section 3.2.1 was normalized to remove standard-specific jargon, unify grammatical structure, and make the intended security function explicit. Normalization included lowercasing, removal of punctuation, collapsing multiple spaces, and lemmatization using spaCy v3 [36]. Exact duplicates were removed before semantic similarity computation, and near-duplicates were merged if cosine similarity exceeded 0.95. The normalized requirements were then coded along two dimensions:

- Security Function Code: a short, semantically precise label representing the core function (e.g., cryptographic key generation, secure boot verification, tamper detection).

- SoI Scope Element: classification according to the Boundary-Driven SoI Model (System Component, System Environment, System Process).
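The normalization and de-duplication rules described at the start of this subsection (lowercasing, punctuation removal, whitespace collapsing, exact-duplicate removal, and merging of near-duplicates above a cosine similarity of 0.95) can be sketched in plain Python. The spaCy lemmatization step is replaced by an optional, hypothetical `lemmatize` hook, and all helper names are illustrative.

```python
import math
import re
import string

_STRIP_PUNCT = str.maketrans("", "", string.punctuation)


def normalize(text, lemmatize=None):
    """Lowercase, remove punctuation, collapse whitespace, then optionally lemmatize.

    `lemmatize` is a hypothetical str -> str hook standing in for the spaCy v3
    lemmatization step, which is left out to keep the sketch dependency-free.
    """
    text = text.lower().translate(_STRIP_PUNCT)
    text = re.sub(r"\s+", " ", text).strip()
    return lemmatize(text) if lemmatize else text


def _cos(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def deduplicate(texts, vectors, near_dup=0.95):
    """Drop exact duplicates, then merge near-duplicates (cosine > near_dup).

    texts are normalized requirement strings and vectors their embeddings.
    Returns (kept, merged): indices of retained requirements, and a map from
    each removed index to the retained index it was merged into.
    """
    kept, merged, seen = [], {}, {}
    for i, (text, vec) in enumerate(zip(texts, vectors)):
        if text in seen:  # exact duplicate of an earlier normalized text
            merged[i] = seen[text]
            continue
        target = next((k for k in kept if _cos(vec, vectors[k]) > near_dup), None)
        if target is None:
            kept.append(i)
            target = i
        else:
            merged[i] = target
        seen[text] = target
    return kept, merged
```

Keeping the `merged` map, rather than simply discarding duplicates, preserves traceability from every original clause to the requirement that represents it.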

Each retained requirement was assigned a persistent traceability identifier (ID) in the format <Standard>_<Clause>_<Year> (e.g., FIPS140-3_7.1.1_2019), along with metadata for the originating standard, version, clause reference, original text, matched keyword(s), and semantic cluster ID. This ensured bidirectional traceability between the consolidated taxonomy and the authoritative standard text.
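One way to hold a coded requirement together with its traceability ID is a small record type, sketched below. The field names and the `parse_trace_id` helper are hypothetical; only the <Standard>_<Clause>_<Year> format, the example ID, the two coding dimensions, and the listed metadata come from the text.

```python
import re
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class SoIScope(Enum):
    COMPONENT = "System Component"
    ENVIRONMENT = "System Environment"
    PROCESS = "System Process"


# <Standard>_<Clause>_<Year>: standard and clause may contain "-" and ".", not "_".
_TRACE_ID = re.compile(r"^(?P<standard>[^_]+)_(?P<clause>[^_]+)_(?P<year>\d{4})$")


@dataclass(frozen=True)
class CodedRequirement:
    standard: str                  # e.g. "FIPS140-3"
    version: str
    clause: str                    # e.g. "7.1.1"
    year: int
    original_text: str
    function_code: str             # Security Function Code, e.g. "tamper detection"
    scope: SoIScope                # SoI Scope Element
    keywords: Tuple[str, ...] = ()
    cluster_id: Optional[int] = None

    @property
    def trace_id(self) -> str:
        return f"{self.standard}_{self.clause}_{self.year}"


def parse_trace_id(trace_id: str):
    """Split a traceability ID back into (standard, clause, year)."""
    m = _TRACE_ID.match(trace_id)
    if m is None:
        raise ValueError(f"not a <Standard>_<Clause>_<Year> ID: {trace_id!r}")
    return m["standard"], m["clause"], int(m["year"])
```

Because the ID can be parsed back into its parts, a domain-level view can always be traced to the exact clause and edition it came from.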

Table 2 provides a representative excerpt from the traceability matrix, showing how requirements from different standards were normalized and provisionally assigned to domains, preserving both source context and analytical grouping.

Table 2.

Sample of requirement coding and provisional domain assignment.

3.3. Clustering and Consolidation

This stage transformed the coded requirements from Section 3.2.2 into a concise, non-overlapping taxonomy of SODs. The goal was to harmonize diverse and potentially redundant requirements from multiple standards into a logically coherent structure aligned with the Boundary-Driven SoI Model. A two-phase approach was applied.

3.3.1. Phase 1: Semantic and Functional Grouping

Requirements were grouped when they addressed the same fundamental capability (e.g., protecting cryptographic keys, verifying boot firmware integrity), produced equivalent assurance outcomes, or targeted the same trust property (confidentiality, integrity, availability, authenticity, or resilience).

To accelerate this process, we applied AI-assisted semantic clustering. Each normalized requirement was embedded using the all-mpnet-base-v2 model from the SentenceTransformers library [39] (v2.2.2, Python 3.10.4), a general-purpose sentence-embedding model built on the MPNet transformer architecture, which is related to the BERT family [37,38]; it produces 768-dimensional embeddings via mean pooling. Similarity computations and clustering were performed using scikit-learn [40] (v1.2.2) and NumPy [41] (v1.24.2), with a fixed random seed of 42 to ensure reproducibility. All processing was executed on an NVIDIA RTX 3090 GPU (NVIDIA Corporation, Santa Clara, CA, USA).
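The embedding and similarity step can be sketched as follows. `embed` uses the sentence-transformers `SentenceTransformer(...).encode` call with the model named above, but it requires the package and a model download, so the import is deferred and the function is not exercised here. The pure-Python `cosine_similarity_matrix` is a dependency-free stand-in for what scikit-learn's `sklearn.metrics.pairwise.cosine_similarity` computes on arrays; both function names are illustrative.

```python
import math


def embed(texts):
    """Encode texts with all-mpnet-base-v2 (768-d, mean pooling).

    Needs the sentence-transformers package and a model download, so the
    import is deferred and this function is not called in the example.
    """
    from sentence_transformers import SentenceTransformer
    model = SentenceTransformer("all-mpnet-base-v2")
    return model.encode(texts).tolist()


def cosine_similarity_matrix(vectors):
    """Symmetric matrix of pairwise cosine similarities (0.0 for zero vectors)."""
    norms = [math.sqrt(sum(x * x for x in v)) for v in vectors]
    n = len(vectors)
    sim = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            dot = sum(a * b for a, b in zip(vectors[i], vectors[j]))
            denom = norms[i] * norms[j]
            sim[i][j] = sim[j][i] = dot / denom if denom else 0.0
    return sim
```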

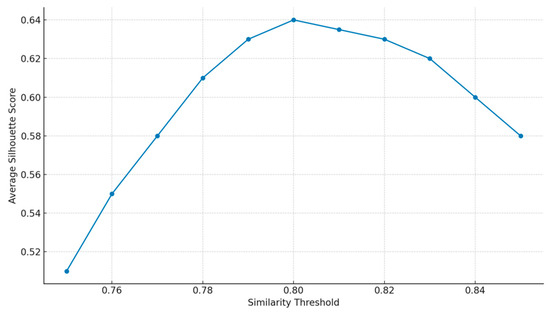

Pairwise cosine similarity scores were calculated, and requirements with similarity above 0.80 were provisionally grouped using hierarchical agglomerative clustering. The 0.80 threshold was selected following pilot experiments with thresholds between 0.75 and 0.85, optimizing for silhouette score and manual cluster coherence. As shown in Figure 3, silhouette scores peaked near 0.80, supporting it as a balance point between cohesion and separation. To confirm robustness, sensitivity checks were also performed within a narrower range of 0.78–0.82. Across this range, more than 92% of cluster assignments remained stable, and no new domain-level groupings appeared. This demonstrates that the resulting taxonomy structure is not an artifact of parameter choice but reflects consistent patterns in the requirement corpus.

Figure 3.

Pilot Experiments—Silhouette Score vs. Threshold.
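Threshold-based agglomerative clustering and the silhouette sweep used to select the 0.80 cut-off can be sketched on a precomputed similarity matrix. The text does not state the linkage criterion, so average linkage is an assumption here, as is the use of cosine distance d = 1 - s for the silhouette. In scikit-learn 1.2, a roughly comparable setup would be `AgglomerativeClustering(n_clusters=None, metric="cosine", linkage="average", distance_threshold=0.20)`; the sketch spells out the logic instead, and its function names are illustrative.

```python
def agglomerative(sim, threshold=0.80):
    """Average-linkage agglomerative clustering on a similarity matrix.

    Repeatedly merges the pair of clusters with the highest mean pairwise
    similarity, stopping once no pair exceeds `threshold`. Returns one
    integer label per item.
    """
    clusters = [[i] for i in range(len(sim))]

    def linkage(a, b):
        return sum(sim[i][j] for i in a for j in b) / (len(a) * len(b))

    while len(clusters) > 1:
        best, pair = threshold, None
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                s = linkage(clusters[x], clusters[y])
                if s > best:
                    best, pair = s, (x, y)
        if pair is None:
            break
        x, y = pair
        clusters[x].extend(clusters.pop(y))  # y > x, so index x stays valid
    labels = [0] * len(sim)
    for label, members in enumerate(clusters):
        for i in members:
            labels[i] = label
    return labels


def silhouette(sim, labels):
    """Mean silhouette coefficient with cosine distance d = 1 - similarity.

    Returns None when undefined (fewer than 2 clusters, or every item alone);
    items in singleton clusters contribute 0, as in scikit-learn.
    """
    groups = {}
    for i, label in enumerate(labels):
        groups.setdefault(label, []).append(i)
    n = len(labels)
    if not 2 <= len(groups) <= n - 1:
        return None
    total = 0.0
    for i, label in enumerate(labels):
        own = groups[label]
        if len(own) == 1:
            continue
        a = sum(1 - sim[i][j] for j in own if j != i) / (len(own) - 1)
        b = min(sum(1 - sim[i][j] for j in g) / len(g)
                for other, g in groups.items() if other != label)
        if max(a, b) > 0:
            total += (b - a) / max(a, b)
    return total / n


def sweep(sim, thresholds=(0.75, 0.78, 0.80, 0.82, 0.85)):
    """Silhouette score of the clustering produced at each candidate threshold."""
    return {t: silhouette(sim, agglomerative(sim, t)) for t in thresholds}
```

A threshold whose silhouette is highest, and whose clusters also survive manual inspection, is the kind of balance point the pilot experiments describe.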

This automated step served as a candidate generation mechanism, reducing manual workload. Two domain experts reviewed all AI-suggested clusters to confirm functional equivalence, separate cases where similar terminology masked different assurance outcomes, and merge overly narrow clusters. Each expert conducted the review independently before participating in joint consolidation meetings. Disagreements were resolved through discussion, with a third senior reviewer mediating when consensus could not be reached. Final grouping decisions were based on expert consensus to avoid semantic drift or overgeneralization. Inter-rater agreement, calculated on a 20% random sample, yielded Cohen’s κ = 0.82, indicating strong agreement. The few disagreements that occurred mainly involved overlapping domains, particularly between Boot and Cryptography, and between Identity and Provisioning. These cases were resolved by distinguishing between initialization-time controls (e.g., provisioning of device keys) and runtime protections (e.g., cryptographic operations), which kept domain boundaries precise.
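The agreement statistic can be reproduced with a few lines of Python. The sketch draws a reproducible 20% sample for double coding and computes Cohen's κ from its standard definition, κ = (p_o - p_e) / (1 - p_e). Reusing the seed of 42 for sampling and the function names are assumptions, since the text does not say how the sample was drawn.

```python
import random
from collections import Counter


def agreement_sample(item_ids, fraction=0.2, seed=42):
    """Draw a reproducible random sample (default 20%) of items for double coding."""
    items = list(item_ids)
    k = max(1, round(fraction * len(items)))
    return random.Random(seed).sample(items, k)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning nominal labels to the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and p_e
    the agreement expected by chance from each rater's label frequencies.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty item list")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:  # both raters used one identical label throughout
        return 1.0
    return (p_o - p_e) / (1 - p_e)
```

For example, two experts who agree on three of four cluster labels, with chance agreement of 0.5 given their label frequencies, obtain κ = (0.75 - 0.5) / (1 - 0.5) = 0.5.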

To ensure transparency in model choice, we benchmarked alternative embedding models. Preliminary trials showed that bert-base-uncased [37] and all-MiniLM-L6-v2 achieved lower mean cluster coherence, whereas all-mpnet-base-v2 achieved the highest (0.87 on a 200-sample subset) and preserved terminology granularity without excessive generalization. Substituting all-MiniLM-L6-v2 for all-mpnet-base-v2 in the sensitivity analysis likewise left more than 92% of cluster assignments unchanged, with no new domain-level groupings. These results indicate that the taxonomy’s higher-level structure is robust to small parameter and model changes. In addition, a generative AI tool (ChatGPT, GPT-5, OpenAI, San Francisco, CA, USA) was used to assist with paraphrasing requirements and suggesting cluster labels; all outputs were manually reviewed and validated by the authors.
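The text does not specify how the share of stable cluster assignments was measured. One plausible choice, assumed here, is pairwise co-assignment agreement (the Rand index), which compares two clusterings of the same requirements without depending on how cluster labels are numbered. scikit-learn exposes the same quantity as `sklearn.metrics.rand_score`, and `adjusted_rand_score` adds a correction for chance; the function name below is illustrative.

```python
from itertools import combinations


def pairwise_stability(labels_a, labels_b):
    """Share of item pairs on which two clusterings agree (Rand index).

    A pair agrees when both clusterings put the two items together, or both
    keep them apart, so the measure ignores how cluster labels are numbered.
    """
    if len(labels_a) != len(labels_b):
        raise ValueError("clusterings must cover the same items")
    pairs = list(combinations(range(len(labels_a)), 2))
    if not pairs:
        return 1.0
    agree = sum(
        (labels_a[i] == labels_a[j]) == (labels_b[i] == labels_b[j]) for i, j in pairs
    )
    return agree / len(pairs)
```

Comparing the baseline clustering (all-mpnet-base-v2, threshold 0.80) against each variant run would yield one stability value per variant.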

3.3.2. Phase 2: Domain Formation and SoI Model Mapping

In the second phase, the validated requirement groups were generalized into SODs. Each SOD represents a distinct and non-overlapping area of hardware security assurance, providing a higher-level abstraction that integrates semantically related requirements across different standards.

To situate the domains within a structured assurance framework, each SOD was mapped to one of the three assurance scopes defined in the Boundary-Driven SoI Model. The System Component scope captures domains focused on core hardware elements such as cryptography, boot mechanisms, and storage. The System Environment scope addresses surrounding but integral elements, including authenticity, traceability, and connectivity. Finally, the System Process scope encompasses lifecycle practices such as secure design, build, and audit activities that sustain trustworthiness over time.

Domain boundaries were derived inductively from the empirical structure of the requirement clusters, ensuring that the taxonomy reflected observed patterns in the data rather than being imposed by pre-existing conceptual frameworks. The outcome of this process was a validated set of 22 SODs that collectively span the assurance space. The final number of 22 domains reflects both the initial AI-suggested clusters and subsequent expert consolidation. The automated clustering initially produced 28 candidate groups; through expert review, several closely related groups were merged (e.g., multiple forms of storage protection unified under a single Storage domain), while no group was split. This iterative process ensured that the final 22 domains were comprehensive yet non-redundant. Every extracted requirement was mapped to at least one domain, with full traceability preserved back to the original standards. Table 3 illustrates how representative requirements from different sources were consolidated into their respective domains, demonstrating the unification of heterogeneous standards into a coherent, threat-validated taxonomy.
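The consolidation step, in which candidate clusters are merged (never split) into domains and each domain is assigned exactly one assurance scope, can be expressed as a check over three mappings. This is a sketch under assumed data structures; the mapping names, the `consolidate` function, and the example identifiers are hypothetical.

```python
from collections import defaultdict

SCOPES = {"System Component", "System Environment", "System Process"}


def consolidate(cluster_of, merge_map, scope_of_domain):
    """Roll requirements up into domains and check the consolidation invariants.

    cluster_of:      requirement ID -> candidate cluster ID (from clustering)
    merge_map:       candidate cluster ID -> domain name (many-to-one, so
                     clusters can be merged but never split)
    scope_of_domain: domain name -> exactly one SoI assurance scope
    """
    unmapped = sorted({c for c in cluster_of.values() if c not in merge_map})
    if unmapped:
        raise ValueError(f"clusters without a domain: {unmapped}")
    no_scope = sorted(set(merge_map.values()) - set(scope_of_domain))
    bad_scope = sorted(d for d, s in scope_of_domain.items() if s not in SCOPES)
    if no_scope or bad_scope:
        raise ValueError(f"domains lacking a valid scope: {no_scope + bad_scope}")
    domains = defaultdict(list)
    for req_id in sorted(cluster_of):
        domains[merge_map[cluster_of[req_id]]].append(req_id)
    return dict(domains)
```

Because every requirement passes through its cluster's domain, a successful call guarantees that no requirement is left without a domain, which is the coverage property stated above.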

Table 3.

Example of requirement consolidation into SODs across assurance scope elements.

3.4. Threat Validation

After consolidating requirements into the final 22 SODs, the taxonomy was validated against real-world hardware-related threats to ensure practical relevance and completeness. The validation process consisted of three structured steps:

3.4.1. Definition of a Representative Threat Corpus

A representative corpus of hardware-related threats was curated from authoritative and diverse sources, including:

- MITRE CWE entries relevant to hardware security (e.g., CWE-1247: Improper Protection Against Voltage and Clock Glitches).

- Selected CVE records illustrating real-world exploitation cases (e.g., firmware manipulation, physical tampering).

- US-CERT and ENISA advisories addressing hardware supply chain attacks, side-channel vulnerabilities, and device misconfiguration risks.

Only threats explicitly related to the System Component, System Environment, or System Process elements of the SoI Model were retained.

3.4.2. Targeted Mapping of Threats to SODs

Instead of full enumeration, threats were mapped using a representative case approach:

- For each SOD, 2–5 representative threats were selected to cover its core functional scope.

- Mapping was performed by aligning the mitigation intent of the SOD with the preventive or detective measures required to address the threat.

- For example, SOD-BOOT (Secure Boot and Firmware Integrity) was linked to CWE-1327 (“Improper Firmware Validation”) and CWE-1194 (Firmware Rollback), as both concern the authenticity and integrity of boot firmware.

3.4.3. Coverage and Relevance Analysis

The mapping was reviewed to confirm that every SOD corresponded to at least one validated real-world threat. Any gaps triggered iterative refinement of the taxonomy so that no domain remained purely conceptual. This ensured that the taxonomy retained a direct operational link to the evolving hardware threat landscape.
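The coverage check described above reduces to finding SODs that no threat maps to, plus counting primary mappings per domain and per assurance scope. The sketch below assumes a `threat_id -> (primary SOD, secondary SODs)` mapping; apart from SOD-BOOT, the SOD identifiers and threat IDs are hypothetical, as is the `coverage_report` function.

```python
from collections import Counter


def coverage_report(sod_scope, threat_map):
    """Find uncovered SODs and count primary threat mappings.

    sod_scope:  SOD -> assurance scope
    threat_map: threat ID -> (primary SOD, list of secondary SODs)
    Returns (gaps, primary counts per SOD, primary counts per scope).
    """
    covered = set()
    primary = Counter()
    for primary_sod, secondary in threat_map.values():
        primary[primary_sod] += 1
        covered.add(primary_sod)
        covered.update(secondary)
    unknown = covered - set(sod_scope)
    if unknown:
        raise ValueError(f"threats mapped to undefined SODs: {sorted(unknown)}")
    gaps = sorted(set(sod_scope) - covered)
    by_scope = Counter()
    for sod, n in primary.items():
        by_scope[sod_scope[sod]] += n
    return gaps, dict(primary), dict(by_scope)
```

A non-empty `gaps` list corresponds to the situation that triggered refinement of the taxonomy.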

Mapping protocol. To operationalize this validation transparently and reproducibly, the mapping of threats to SODs followed a structured procedure:

- Threat extraction: Each threat statement was collected with its CWE/CVE identifier and description.

- Scope identification: Analysts determined the affected SoI scope element(s): System Component, Environment, or Process.

- Intent alignment: The mitigation needed to address the threat was compared against candidate SOD definitions using a controlled vocabulary.

- Primary assignment: Each threat was mapped to one primary SOD; secondary mappings were recorded only if the threat clearly spanned multiple objectives.

- Dual review: Two experts independently coded each threat; disagreements were logged.

- Consensus resolution: Conflicts (e.g., Boot vs. Cryptography, Identity vs. Provisioning) were resolved by consensus. Representative contested cases are documented in Appendix A.
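The dual-review and consensus steps can be supported by a simple comparison of the two analysts' primary assignments. The Python sketch below is a hypothetical illustration. It logs every disagreement for consensus resolution and reports percent agreement together with Cohen's kappa. The protocol does not state which agreement statistic, if any, was computed. Kappa is included only as a common choice for two coders assigning nominal labels, and the threat identifiers are placeholders.

```python
from collections import Counter


def compare_coders(coder_a, coder_b):
    """Compare two analysts' primary-SOD assignments (threat_id -> SOD).

    Returns (percent agreement, Cohen's kappa, disagreement log).
    """
    threats = sorted(coder_a.keys() & coder_b.keys())
    n = len(threats)
    if n == 0:
        raise ValueError("no threats coded by both analysts")
    # Disagreements are logged for later consensus resolution.
    log = [(t, coder_a[t], coder_b[t]) for t in threats if coder_a[t] != coder_b[t]]
    p_o = (n - len(log)) / n
    # Chance agreement from each coder's marginal label frequencies.
    freq_a = Counter(coder_a[t] for t in threats)
    freq_b = Counter(coder_b[t] for t in threats)
    p_e = sum(freq_a[s] * freq_b[s] for s in freq_a) / (n * n)
    kappa = (p_o - p_e) / (1 - p_e) if p_e < 1 else 1.0
    return p_o, kappa, log


coder_a = {"T1": "Boot", "T2": "Cryptography", "T3": "Identity", "T4": "Boot"}
coder_b = {"T1": "Boot", "T2": "Boot", "T3": "Identity", "T4": "Boot"}
p_o, kappa, log = compare_coders(coder_a, coder_b)
print(p_o, round(kappa, 3), log)  # 0.75 0.556 [('T2', 'Cryptography', 'Boot')]
```

The disagreement on T2 mirrors the Boot versus Cryptography conflicts named in the protocol. Such entries are the input to the consensus step rather than being resolved automatically.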

Table 4 presents a representative sample of this mapping. Appendix A provides the mappings for all 22 SODs together with representative contested cases, where threats could legitimately be attributed to more than one domain (for example, overlaps between Boot and Cryptography or between Identity and Provisioning), and shows how each was resolved by consensus. This documentation shows how the mapping protocol was applied in practice and offers a methodological template that other researchers and practitioners can adopt when aligning new or emerging threats to the taxonomy.

Table 4.

Example mapping of documented threats to SODs.

4. Results

Following the multi-stage process outlined in Section 3, the final output is a validated taxonomy of 22 SODs, each grounded in authoritative standards and mapped to real-world hardware threats. This section summarizes the taxonomy’s structure (Section 4.1), presents the threat validation outcomes (Section 4.2), and analyzes coverage patterns across assurance scopes and domains (Section 4.3).

4.1. Taxonomy Summary

The validated taxonomy comprises 22 SODs organized under the three assurance scopes of the Boundary-Driven SoI Model: System Component, System Environment, and System Process. Each SOD represents a distinct, high-level objective applicable across hardware platforms, independent of sector or device type.

To provide a unified view, Table 5 summarizes all 22 domains grouped by assurance scope. This consolidated overview serves as a reference point for the taxonomy, while the detailed descriptions and representative threat mappings are provided in Table 6, Table 7 and Table 8.

Table 5.

Overview of the 22 SODs grouped by assurance scope.

Table 6.

SODs for System Component.

Table 7.

SODs for System Environment.

Table 8.

SODs for System Process.

4.2. Threat Validation Outcomes

Following the procedure outlined in Section 3.4, each of the 22 SODs was mapped to multiple documented hardware-related threats from authoritative sources, including MITRE CWE/CVE, US-CERT advisories, and ENISA reports. This process confirmed that every domain in the taxonomy is grounded in operational reality and that no SOD lacks documented threat coverage.

The analysis yielded several key findings. First, coverage was complete: every SOD was linked to at least one validated real-world threat, with a total of 167 distinct CWE/CVE entries mapped. Second, certain domains attracted a disproportionate share of threats: Cryptography (14 threats), Boot (13), Identity (12), Communication (11), and Resilience (10) together accounted for 36% of all mappings, making them clear priority areas for assurance focus. Third, overlap was significant: 23.4% of threats mapped to more than one SOD, underscoring the need for coordinated controls across domains—for example, secure boot mechanisms also reinforce cryptographic protections. Finally, strong evidence linkage was observed: 72.5% of mapped threats were associated with publicly documented incidents, while the remainder represented latent vulnerabilities without confirmed exploitation but with high potential impact.
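These headline figures follow from simple ratios over the mapping data. The Python sketch below reproduces the concentration figure from the counts reported above (14 + 13 + 12 + 11 + 10 = 60 of 167 threats, about 36%). It assumes, as the text implies, that the denominator is the number of distinct threats rather than the total number of threat-to-SOD assignments. The overlap and incident-linkage helpers are hypothetical and are shown on small placeholder inputs, because the per-threat data are not reproduced here.

```python
def top_k_share(sod_counts, total_threats, k=5):
    """Share of distinct threats accounted for by the k most-targeted SODs."""
    top = sorted(sod_counts.values(), reverse=True)[:k]
    return sum(top) / total_threats


def overlap_rate(threat_to_sods):
    """Fraction of threats mapped to more than one SOD."""
    multi = sum(1 for sods in threat_to_sods.values() if len(set(sods)) > 1)
    return multi / len(threat_to_sods)


def incident_rate(has_incident):
    """Fraction of threats linked to at least one publicly documented incident."""
    return sum(has_incident.values()) / len(has_incident)


# Counts for the five most-targeted SODs, as reported in Section 4.2.
top_five = {"Cryptography": 14, "Boot": 13, "Identity": 12,
            "Communication": 11, "Resilience": 10}
print(f"{top_k_share(top_five, 167):.1%}")  # 35.9%

# Placeholder inputs (not study data).
print(overlap_rate({"T1": ["Boot", "Cryptography"], "T2": ["Boot"]}))    # 0.5
print(incident_rate({"T1": True, "T2": False, "T3": True, "T4": True}))  # 0.75
```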

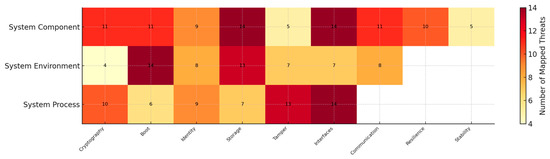

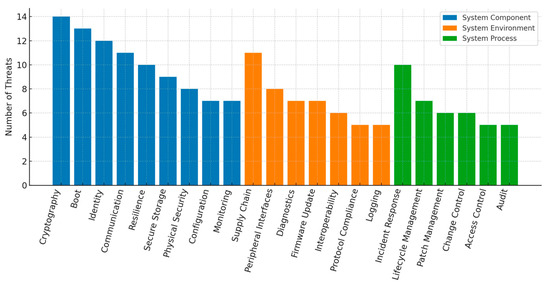

Table 9 summarizes the key quantitative metrics, and Figure 4 presents these results as a heatmap showing the distribution of documented threats across the 22 SODs and the three assurance scopes. The darker shading in the System Component scope reflects a concentration of high-value attack targets, such as cryptographic operations, boot integrity, and device identification, whose compromise could undermine the foundation of trust for the entire hardware platform. This concentration also explains why these domains appear prominently in cross-domain mappings. Figure 5 complements this view by ranking all SODs by threat count, highlighting Cryptography, Boot, Identity, Communication, and Resilience as priority areas for assurance focus.

Table 9.

Summary of the key threat validation results.

Figure 4.

Heatmap of threats mapped to each SOD across the three assurance scopes.

Figure 5.

Threat count per SOD by assurance scope.

For clarity, “average coverage per domain” in Table 9 refers to the mean number of distinct threats mapped at the assurance scope level (System Component, Environment, or Process), while “average coverage per SOD” refers to the mean number of threats mapped to individual Security Objective Domains. This distinction avoids ambiguity between scope-wide averages and domain-specific counts.
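Under one reading of these definitions, the two averages differ only in how SOD-level counts are grouped before averaging. The scope-level figure averages the counts of the SODs within each scope, while the SOD-level figure averages over all SODs at once. The Python sketch below illustrates that reading with hypothetical domain names and counts. It is not a reproduction of Table 9.

```python
from statistics import fmean


def scope_averages(sod_counts, sod_scope):
    """Mean threat count per SOD, computed separately within each assurance scope."""
    by_scope = {}
    for sod, count in sod_counts.items():
        by_scope.setdefault(sod_scope[sod], []).append(count)
    return {scope: fmean(counts) for scope, counts in by_scope.items()}


def overall_sod_average(sod_counts):
    """Mean threat count across all SODs, ignoring scope."""
    return fmean(sod_counts.values())


# Hypothetical counts and scope assignments (not study data).
counts = {"SOD-A": 10, "SOD-B": 6, "SOD-C": 2, "SOD-D": 4, "SOD-E": 3}
scopes = {"SOD-A": "System Component", "SOD-B": "System Component",
          "SOD-C": "System Process", "SOD-D": "System Process",
          "SOD-E": "System Process"}
print(scope_averages(counts, scopes))  # {'System Component': 8.0, 'System Process': 3.0}
print(overall_sod_average(counts))     # 5.0
```

When scopes contain different numbers of SODs, the overall SOD average is not the simple mean of the scope averages (here 5.0 versus 5.5). This is one reason to report the two metrics separately.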

Some domains attracted considerably more mapped threats than others (e.g., 14 for Cryptography versus 3 for the Environment SOD). This imbalance reflects the distribution of documented attack vectors, which concentrate on foundational mechanisms such as cryptography and secure boot. However, domains with fewer threats remain critical, since even a single vulnerability in areas such as supply chain handling or audit can have systemic consequences. Practitioners should therefore treat these domains as equally important targets for assurance, despite their lower numerical counts.

4.3. Scope- and Domain-Level Analysis

The threat mapping results were further analyzed to examine coverage patterns across the three Assurance Scopes and their respective SODs.

At the scope level, distinct differences emerged in threat density. As shown in Table 10, the System Component scope exhibited the highest average at 8.4 threats per domain, followed by System Environment (7.0) and System Process (6.5). This concentration reflects the broad attack surface and criticality of core hardware platform functions such as cryptographic operations, boot integrity, and secure communications.

Table 10.

Threat coverage by assurance scope.

At the domain level, five SODs accounted for a disproportionate share of mapped threats (Figure 5). Cryptography (14), Boot (13), Identity (12), Communication (11), and Resilience (10) together represent 36% of all mapped threats. All five belong to the System Component scope (Table A1), underscoring that protecting platform integrity and secure communications is central to mitigating hardware-level risk.

These results carry several implications for assurance practice. First, prioritization of domains with higher threat counts can yield disproportionate reductions in overall system risk. Second, cross-domain dependencies are significant, as many threats map to multiple domains; for example, boot integrity mechanisms also underpin cryptographic key protection. Finally, balance across scopes remains essential: while System Component dominates in threat density, neglecting System Environment and System Process objectives could leave exploitable gaps in supply chain integrity, lifecycle controls, and operational monitoring.
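Cross-domain dependencies of this kind can be quantified by counting how often each pair of SODs shares a mapped threat. A minimal Python sketch follows. The function name is hypothetical, and the threat identifiers are placeholders rather than study data.

```python
from collections import Counter
from itertools import combinations


def cross_domain_pairs(threat_to_sods):
    """Count, for each unordered SOD pair, the threats mapped to both."""
    pairs = Counter()
    for sods in threat_to_sods.values():
        for pair in combinations(sorted(set(sods)), 2):
            pairs[pair] += 1
    return pairs


mapping = {
    "T1": ["Boot", "Cryptography"],
    "T2": ["Cryptography", "Boot"],
    "T3": ["Identity", "Provisioning"],
    "T4": ["Boot"],
}
for (a, b), n in cross_domain_pairs(mapping).most_common():
    print(a, "+", b, n)
# Boot + Cryptography 2
# Identity + Provisioning 1
```

Pairs with high counts indicate where a single control, such as a boot integrity mechanism that also protects key material, may need to be assessed against several objectives at once.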

As with the domain-level counts discussed in Section 4.2, this uneven distribution mirrors where publicly documented attack vectors concentrate, namely on core mechanisms that underpin platform trust, rather than where assurance matters most. Practitioners are therefore cautioned against equating low threat counts with low criticality. Even a single weakness in domains such as Handling or Audit can create systemic assurance failures, so assurance strategies must address all domains to ensure end-to-end resilience.

5. Discussion

The unified taxonomy of SODs presented in this study addresses long-standing challenges in hardware security assurance: fragmented standards, inconsistent terminology, and incomplete threat coverage. By synthesizing requirements from ten widely adopted standards and validating them against 167 documented hardware-related threats, the taxonomy bridges the gap between compliance-oriented frameworks and operational risk realities.

5.1. Practical Implications

The taxonomy provides not only a qualitative framework for structuring hardware security objectives but also a quantitative foundation for assurance analysis. By mapping each SOD to documented threats and cross-domain dependencies, the taxonomy enables measurable assessment of assurance coverage and residual risk.

This quantitative perspective offers several advantages. First, it supports risk-weighted prioritization: threat counts and densities, as visualized in the heatmap (Figure 4), highlight domains where targeted improvements are likely to yield the greatest reduction in system-level risk. Second, it enables comparative assurance analysis, allowing organizations to benchmark their existing control implementations against the taxonomy baseline and identify areas of over- or under-protection. Third, it facilitates profile optimization, where quantitative scores inform the design or refinement of assurance profiles to ensure that resources are allocated according to both operational impact and vulnerability exposure.

To illustrate its practical application, consider an IoT gateway deployed in a healthcare setting. Mapping its implemented controls against the taxonomy shows strong alignment with domains such as Boot, Cryptography, and Communication, but reveals gaps in Traceability and Audit. In this profile, the gateway's controls covered 13 of the 22 SODs, and the uncovered or high-risk areas included Traceability (0 of 3 mapped threats addressed) and Audit (1 of 4). Thus, although the device may resist firmware modification or key extraction, the absence of supply chain provenance and comprehensive event logging could undermine overall assurance. A pragmatic remediation sequence is: (1) implement provenance tracking for component lots (Traceability), (2) deploy signed event logging with secure export (Audit), and (3) extend key-provisioning checks into manufacturing (Provisioning). In this way, the taxonomy gives practitioners a structured method for pinpointing weaknesses and prioritizing remediation.
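The case-study figures can be turned into a simple, repeatable gap ranking. The Python sketch below is a hypothetical illustration rather than the procedure used in the study. It makes three assumptions: each SOD in a device profile records how many of its mapped threats the implemented controls address; an SOD counts as covered when at least one mapped threat is addressed; and gaps are ranked by the fraction addressed. With the two gaps reported for the gateway, Traceability (0 of 3) ranks ahead of Audit (1 of 4), matching the remediation order above. The overall coverage of 13 of 22 SODs corresponds to about 59%.

```python
def sod_coverage(profile, total_sods=22):
    """Fraction of taxonomy SODs with at least one mapped threat addressed (assumed rule)."""
    covered = sum(1 for addressed, _mapped in profile.values() if addressed > 0)
    return covered / total_sods


def gap_priorities(profile):
    """SODs with unaddressed threats, ordered by fraction addressed (lowest first)."""
    gaps = [(sod, addressed / mapped)
            for sod, (addressed, mapped) in profile.items()
            if mapped and addressed < mapped]
    return [sod for sod, _fraction in sorted(gaps, key=lambda g: g[1])]


# Gap figures from the gateway example: (threats addressed, threats mapped).
gateway_gaps = {"Traceability": (0, 3), "Audit": (1, 4)}
print(gap_priorities(gateway_gaps))  # ['Traceability', 'Audit']
print(f"{13 / 22:.0%}")              # 59%
```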

For practitioners, the integration of threat intelligence into the taxonomy means that it functions not only as a conceptual reference but also as a decision-support instrument. Its metric-driven structure provides actionable guidance for planning, implementing, and auditing hardware security assurance throughout the system lifecycle. By grounding assurance objectives in observed threats, the taxonomy ensures that practical efforts remain aligned with the evolving hardware risk landscape. At the same time, it must be acknowledged that the current prioritization metric is based on raw threat counts. While useful as a baseline, this approach does not distinguish between high- and low-severity vulnerabilities. Future work will therefore focus on integrating weighted scoring systems such as CVSS severity ratings or historical exploitation prevalence to refine prioritization and better capture risk impact.

5.2. Comparison with Existing Frameworks

While established hardware security standards such as FIPS 140-3, NIST SP 800-193, ISO/IEC 20243, and IEC 62443 define robust protections within their respective domains, they remain inherently siloed. Their scope, terminology, and assurance depth vary significantly, often reflecting the priorities of a specific sector rather than addressing the complete hardware lifecycle.

The proposed taxonomy directly addresses these limitations by solving three persistent problems in current practice. First, it harmonizes terminology and scope. For example, secure boot is defined differently in FIPS 140-3 (cryptographic modules), NIST SP 800-193 (firmware resiliency), and UL 2900 (healthcare devices), with varying chain-of-trust definitions and rollback requirements. Within the taxonomy, these variations are consolidated into a single Boot domain, preserving essential details while removing semantic inconsistencies.

Second, it eliminates redundancy by merging overlapping controls such as key zeroization, interface lockdown, and counterfeit prevention, which appear across multiple standards. Third, it fills critical coverage gaps. For instance, NIST SP 800-193 assumes the trustworthiness of underlying hardware components, leaving supply chain authenticity unaddressed. The taxonomy explicitly integrates objectives such as Authenticity and Traceability to ensure complete coverage.

Beyond these three contributions, the taxonomy introduces evidence-based validation by grounding its domains in threat intelligence from CWE/CVE data and real-world exploitation cases, ensuring that objectives are operationally relevant. It also supports multi-standard compliance, enabling organizations that must satisfy multiple certifications to map diverse requirements into a unified structure, reduce duplicated testing, and identify residual risks beyond the scope of any single standard.

Beyond these standards, other taxonomic initiatives provide only partial coverage. The MITRE CWE hardware weakness landscape enumerates vulnerability classes (e.g., CWE-1319: Improper Protection against Electromagnetic Fault Injection (EM-FI)) but remains vulnerability-centric and top-down, without semantically grouping assurance requirements or linking them to assurance scopes. NIST SP 800-161 offers supply chain–oriented categories, but is primarily policy-driven and not derived from empirical clustering of technical controls. Commercial certification frameworks, such as the TCG device trust model and PSA Certified security goals, define high-level assurance categories but originate from expert judgment and do not incorporate threat validation.

In contrast, the proposed taxonomy is inductively derived from empirical requirement clusters and validated against 167 representative hardware-related vulnerabilities. This bottom-up, data-driven approach aligns with technical language, sector practices, and operational risks. It bridges the gap between formal standards, vulnerability landscapes, and commercial frameworks, producing a taxonomy that is not only comprehensive and semantically consistent but also adaptable across sectors and resilient to evolving threat landscapes.

5.3. Limitations

While the proposed taxonomy advances the state of hardware security assurance, several limitations should be acknowledged to frame its applicability and guide future refinement.

First, corpus scope. The taxonomy was derived from ten international standards selected for their breadth, adoption, and cross-sector relevance. While these provide strong coverage of hardware security requirements, they do not exhaust all sector-specific standards. For example, automotive (ISO/SAE 21434) and aerospace (DO-326A) frameworks were deliberately excluded to preserve generality. As a result, the taxonomy reflects a generalized baseline rather than a sector-optimized profile. Future extensions should adapt and specialize the taxonomy for individual domains by incorporating additional standards.

Second, threat mapping. The validation against 167 threats was based on purposive selection from sources such as CWE, CVE, and regulatory advisories to ensure representativeness across domains. While sufficient to demonstrate coverage, this dataset does not represent the full universe of hardware threats and is limited by the availability of publicly documented incidents. Consequently, some domains appear to have relatively few mapped threats compared to others. This imbalance mirrors the current state of disclosure but should not be interpreted as lower assurance significance.

Third, prioritization metric. The current analysis uses raw threat counts as a baseline indicator of domain-level exposure. While useful for highlighting concentration points, this approach does not distinguish between high-severity and low-severity vulnerabilities. More nuanced prioritization could be achieved by incorporating weighted scores based on severity metrics (e.g., CVSS), exploitability, or prevalence in future work.

Finally, methodological boundaries. The use of transformer-based embeddings and clustering introduces some dependency on parameterization, such as the cosine similarity threshold. Although sensitivity checks confirmed robustness in the range of 0.78–0.82, small variations could still influence intermediate groupings. Expert validation mitigates this risk but does not eliminate subjectivity entirely.
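A sensitivity check of this kind can be sketched as a sweep over the similarity threshold. The Python sketch below is illustrative only. It substitutes single-linkage grouping, in which two requirements are linked whenever their cosine similarity reaches the threshold, for the study's clustering procedure, which is not specified in this section. It also uses toy two-dimensional vectors in place of transformer embeddings. The fifth vector sits near the boundary, so its grouping changes between thresholds 0.80 and 0.82. This is the kind of intermediate-grouping effect such a check is meant to expose.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def threshold_clusters(vectors, tau):
    """Single-linkage grouping: items i and j are joined when cosine >= tau."""
    parent = list(range(len(vectors)))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            if cosine(vectors[i], vectors[j]) >= tau:
                parent[find(i)] = find(j)

    groups = {}
    for i in range(len(vectors)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


def sweep(vectors, taus=(0.78, 0.80, 0.82)):
    """Number of groups obtained at each candidate threshold."""
    return {tau: len(threshold_clusters(vectors, tau)) for tau in taus}


# Toy 2-D vectors standing in for requirement embeddings.
vectors = [[1.0, 0.0], [0.99, 0.14], [0.0, 1.0], [0.1, 0.99], [0.81, -0.59]]
print(sweep(vectors))  # {0.78: 2, 0.8: 2, 0.82: 3}
```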

5.4. Future Work

Building on the taxonomy and validation approach presented in this study, several avenues for future work could further strengthen its applicability and impact.

First, sector-specific adaptation. While this study deliberately excluded domain-specific standards such as ISO/SAE 21434 (automotive) and DO-326A (aerospace) to preserve generality, future efforts will integrate these and similar frameworks to develop specialized profiles. Such extensions will allow the taxonomy to be tailored for high-assurance contexts such as automotive safety, avionics, and defense, while retaining the core harmonized structure.

Second, expanded threat datasets. The current validation against 167 threats provided representative coverage across domains but was limited by available public disclosures. Incorporating a larger and continuously updated dataset of hardware threats—including advisories, vendor reports, and emerging vulnerabilities—will increase fidelity and help ensure that assurance priorities remain aligned with the evolving risk landscape.

Third, enhanced prioritization metrics. The present analysis uses raw threat counts as a baseline indicator of domain-level exposure. While this provides a useful starting point, it does not differentiate between vulnerabilities of varying severity or likelihood. Future work will therefore integrate weighted metrics, such as CVSS severity ratings, exploitability likelihood, and historical prevalence. Building on the case study approach in Section 5.1, future evaluations will combine coverage scoring (e.g., percentage of domains addressed) with severity-weighted prioritization to generate practical remediation sequences that better reflect both breadth and impact of assurance gaps.
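A few lines of Python can sketch how raw counts differ from severity weighting, and how weighting can be combined with coverage. The sketch assumes that each mapped threat carries a CVSS base score (0.0 to 10.0) and a flag indicating whether the device's controls address it. The SOD names, scores, and flags are hypothetical. Ranking by residual severity, meaning the summed scores of unaddressed threats, is one possible way to combine coverage with severity. It is not a method defined in this study.

```python
def rank_by_count(profile):
    """Baseline: rank SODs by raw number of mapped threats."""
    return sorted(profile, key=lambda sod: len(profile[sod]), reverse=True)


def rank_by_residual_severity(profile):
    """Rank SODs by summed CVSS base scores of threats that remain unaddressed."""
    def residual(sod):
        return sum(score for score, addressed in profile[sod] if not addressed)
    return sorted(profile, key=residual, reverse=True)


# Hypothetical profile: SOD -> list of (CVSS base score, addressed?) per mapped threat.
profile = {
    "SOD-A": [(3.1, False), (2.0, False), (4.0, True), (3.5, False)],
    "SOD-B": [(9.8, False), (9.1, False)],
}
print(rank_by_count(profile))              # ['SOD-A', 'SOD-B']
print(rank_by_residual_severity(profile))  # ['SOD-B', 'SOD-A']
```

The reversal illustrates the limitation noted above: a domain with fewer but more severe, unmitigated threats can outrank a domain with a higher raw count.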

Fourth, tool support and automation. The taxonomy is currently applied manually through requirement mapping and expert review. Development of automated tools to support taxonomy-based gap analysis, compliance mapping, and continuous monitoring will facilitate adoption in industry. Integration with model-based systems engineering (MBSE) workflows and digital assurance platforms could provide practitioners with scalable decision-support mechanisms.

Finally, empirical case studies. While this paper included an illustrative example, further validation through in-depth industrial case studies will be essential to demonstrate the taxonomy’s operational utility. Applying the taxonomy to real hardware systems, such as IoT gateways, medical devices, or industrial controllers, will provide richer evidence of its effectiveness in guiding assurance planning and risk management.

6. Conclusions

This study addressed the fragmentation of hardware security standards by developing a unified, standards-aligned, and threat-validated taxonomy of SODs. Derived inductively from 1287 requirements across ten international standards and consolidated through AI-assisted clustering with expert review, the taxonomy harmonizes heterogeneous requirements into a consistent assurance framework. Validation against 167 documented hardware-related threats enabled quantitative mapping across assurance scopes, offering actionable insights for prioritizing assurance activities. The resulting taxonomy provides a structured foundation for cross-standard integration, quantitative evaluation, and the development of tailored assurance profiles for diverse hardware contexts.

Beyond its immediate contribution, the taxonomy establishes a bridge between compliance frameworks, vulnerability landscapes, and operational assurance practices. By embedding threat intelligence directly into domain structures, it shifts assurance activities from static checklist compliance toward evidence-based, risk-informed decision-making. This makes the taxonomy not only a unifying reference model but also a practical decision-support tool for both researchers and practitioners.

Looking forward, the taxonomy establishes a foundation for sector-specific extensions, such as automotive and aerospace, where tailored profiles can enhance domain-specific assurance. It also supports integration with weighted prioritization metrics, such as CVSS severity and exploitability scores, to complement coverage scoring approaches demonstrated in the case study. This combination will enable assurance strategies that reflect both the breadth of domain coverage and the impact of individual vulnerabilities. In addition, its structure is well suited for tool support and automation, enabling continuous monitoring and streamlined compliance mapping. The inductive and data-driven methodology ensures adaptability to evolving technologies and adversarial tactics, while its validation approach supports ongoing refinement as new standards and threat intelligence emerge.

Ultimately, this approach contributes to advancing hardware security assurance as a more methodical, transparent, and robust discipline, supporting both academic research and industrial practice in an increasingly complex and adversarial landscape.

Author Contributions

S.-F.W.—Conceptualization, methodology design, software, data collection, AI-assisted clustering implementation, threat validation, formal analysis, figure generation and manuscript drafting. A.S.—Expert review of taxonomy consolidation, methodological validation, interpretation of results and critical manuscript revision. All authors have read and agreed to the published version of the manuscript.

Funding

This work has received funding from the Research Council of Norway through the SFI Norwegian Centre for Cybersecurity in Critical Sectors (NORCICS) project no. 310105. Open access funding provided by NTNU Norwegian University of Science and Technology (incl St. Olavs Hospital—Trondheim University Hospital).

Data Availability Statement

All data generated or analyzed during this study are included in this article. Any additional information (e.g., intermediate analysis scripts or aggregation notes) is available from the corresponding author upon reasonable request.

Acknowledgments

The authors gratefully acknowledge the support and collaboration of partners in the SFI NORCICS consortium. The authors also acknowledge the use of a generative AI tool (ChatGPT, GPT-5, OpenAI, San Francisco, CA, USA) for language refinement and assistance in requirement clustering. All outputs were manually reviewed, and the authors take full responsibility for the final content.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Threat-to-Objective Tables by Domain

Table A1.

System Component.

| Objective | Representative Threats | CWE/CVE References | Example Incidents |
|---|---|---|---|
| Cryptography | Extraction of keys, side-channel analysis, tamper bypass | CWE-1247 (Power-On Reset Glitching); CWE-1300 (Cryptographic Key Protection) | Side-channel attacks on HSMs; IoT key leakage (Black Hat 2021) |
| Boot | Execution of malicious/unsigned firmware, rollback attacks | CWE-1327 (Improper Firmware Validation); CWE-1194 (Firmware Rollback) | FDA recalls of infusion pumps (2019–2021) for exploitable update channels |
| Identity | Device impersonation, lack of secure attestation | CWE-295 (Improper Certificate Validation); CWE-1322 (Missing Hardware Root-of-Trust) | IoT botnet campaigns exploiting cloned medical sensors (2020) |
| Storage | Theft or tampering of patient/config data | CWE-922 (Insecure Storage); CWE-1258 (Unprotected NVM) | Patient logs exfiltrated from unencrypted medical monitors (ICS-CERT ICSA-20-170-02) |
| Tamper | Physical probing, enclosure attacks, fault injection | CWE-1271 (Physical Side-Channel); CWE-1247 (Voltage Glitch); CWE-1335 (Tamper Response) | Glitching and probing to bypass firmware authentication in infusion pumps (2020) |
| Interfaces | Exploitation of debug/test ports (JTAG, UART, SWD) | CWE-1191 (Improper Debug Control); CWE-1313 (Debug Interface Exploitation) | FDA advisories (2018–2022) on vulnerable debug ports in medical devices |
| Communication | Intercepted or spoofed wireless/wired commands | CWE-319 (Cleartext Transmission); CWE-294 (Spoofing) | ICSMA-21-054-01: Wireless telemetry exploits on infusion pumps |
| Resilience | Fault-induced unsafe behavior | CWE-1332 (Fault State Handling); CWE-1334 (Unsafe State) | Power fluctuations causing overdosing (2020) |
| Stability | Voltage/clock glitching to bypass checks or disrupt operation | CWE-1247 (Glitching); CWE-1333 (Clock Manipulation) | Voltage glitch bypassing secure boot on medical devices (2022) |

Table A2.

System Environment.

| Objective | Representative Threats | CWE/CVE References | Example Incidents |
|---|---|---|---|
| Authenticity | Counterfeit or maliciously altered components in the supply chain | CWE-1303 (Inclusion of Malicious Components); CWE-1121 (Supply Chain Compromise) | Discovery of counterfeit ICs in ventilators during 2020 pandemic supply shortages |
| Traceability | Lost chain-of-custody, untracked component sourcing | CWE-1104 (Use of Unverified Components); CWE-1121 (Supply Chain Risk) | Untracked chips in medical devices traced back to unverified brokers, posing Trojan risks |
| Isolation | Trusted/untrusted subsystems sharing resources (cross-contamination) | CWE-1188 (Insecure Isolation); CWE-1209 (Privilege Separation Failure) | Shared bus vulnerabilities between medical sensor modules and main controllers enabling lateral attacks |
| Connectivity | Compromised wired/wireless links enabling spoofing or injection | CWE-295 (Improper Certificate Validation); CWE-307 (Improper Authentication) | Wi-Fi-based attacks on hospital patient monitors (CERT vulnerability note VU#810027, 2021) |
| Diagnostics | Abuse of factory/maintenance interfaces during field servicing | CWE-1191 (Improper Debug Control); CWE-1259 (Unauthorized Diagnostic Function Access) | Field servicing ports on dialysis machines exploited to alter treatment parameters |
| Environment | Environmental stress degrading security protections (EMI, heat) | CWE-1336 (Environmental Manipulation); CWE-1329 (Hardware Aging Impact) | EMI used to induce failure modes in medical infusion controllers (academic demonstration, 2020) |
| Handling | Tampering during shipping, storage, or assembly | CWE-1314 (Supply Chain Tampering); CWE-1331 (Unprotected Assembly Process) | Staged attacks where malicious code was injected into devices during 3PL warehouse handling (2021) |

Table A3.

System Process.

| Objective | Representative Threats | CWE/CVE References | Example Incidents |
|---|---|---|---|
| Design | Lack of threat modeling or security-by-design, leading to exploitable flaws | CWE-1326 (Insecure Design); CWE-1100 (Weak Security Requirements) | Multiple FDA reports on design flaws in wireless infusion systems (weak encryption choices, 2020) |
| Build | Insecure manufacturing or assembly enabling hardware Trojans or backdoors | CWE-1314 (Supply Chain Tampering); CWE-1331 (Unprotected Assembly) | Malicious firmware injected during overseas assembly of healthcare IoT devices (documented in NIST SP 800-161 case studies) |
| Provisioning | Improper key/certificate initialization leaving devices impersonable | CWE-295 (Improper Certificate Validation); CWE-1322 (Missing Root-of-Trust at Provisioning) | IoT medical gateways cloned and misused due to weak provisioning protocols (2021, ICS-CERT advisory) |
| Update | Exploitable update mechanisms allowing unauthorized firmware | CWE-1327 (Improper Firmware Validation); CWE-1194 (Rollback Vulnerabilities) | Insulin pump exploit (2019) where attackers could load old, vulnerable firmware bypassing new protections |
| Patch | Slow or insecure patching leaving exploitable vulnerabilities | CWE-1101 (Incomplete Patching); CWE-1338 (Update Mechanism Misuse) | Delayed patching exploited in 2021 ransomware campaigns targeting hospital device fleets |
| Audit | Missing audit logs, preventing detection or forensic investigation | CWE-778 (Insufficient Logging); CWE-926 (Lack of Traceability) | Regulatory penalties (2022) for lack of traceability after data exfiltration via compromised diagnostic interfaces |

References

- Sadeghi, A.-R.; Wachsmann, C.; Waidner, M. Security and privacy challenges in industrial internet of things. In Proceedings of the 52nd Annual Design Automation Conference, San Francisco, CA, USA, 7–11 June 2015; pp. 1–6.

- Humayed, A.; Lin, J.; Li, F.; Luo, B. Cyber-physical systems security—A survey. IEEE Internet Things J. 2017, 4, 1802–1831.

- Hu, W.; Chang, C.-H.; Sengupta, A.; Bhunia, S.; Kastner, R.; Li, H. An overview of hardware security and trust: Threats, countermeasures, and design tools. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2020, 40, 1010–1038.

- Guin, U.; DiMase, D.; Tehranipoor, M. Counterfeit integrated circuits: Detection, avoidance, and the challenges ahead. J. Electron. Test. 2014, 30, 9–23.

- Costin, A.; Zaddach, J.; Francillon, A.; Balzarotti, D. A Large-scale analysis of the security of embedded firmwares. In Proceedings of the 23rd USENIX Security Symposium (USENIX Security 14), San Diego, CA, USA, 20–22 August 2014; pp. 95–110.

- Williams, P.A.; Woodward, A.J. Cybersecurity vulnerabilities in medical devices: A complex environment and multifaceted problem. Med. Devices Evid. Res. 2015, 8, 305–316.

- Li, F.; Paxson, V. A large-scale empirical study of security patches. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 2201–2215.

- Anderson, R.J. Security Engineering: A Guide to Building Dependable Distributed Systems; John Wiley & Sons: Hoboken, NJ, USA, 2010.

- Genkin, D.; Pachmanov, L.; Pipman, I.; Tromer, E.; Yarom, Y. ECDSA key extraction from mobile devices via nonintrusive physical side channels. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 1626–1638.

- Adee, S. The hunt for the kill switch. IEEE Spectr. 2008, 45, 34–39.

- Cui, A.; Stolfo, S.J. A quantitative analysis of the insecurity of embedded network devices: Results of a wide-area scan. In Proceedings of the 26th Annual Computer Security Applications Conference, Austin, TX, USA, 6–10 December 2010; pp. 97–106.

- NIST FIPS 140-3; Security Requirements for Cryptographic Modules. NIST: Gaithersburg, MD, USA, 2019.

- ISO/IEC 20897-1:2020; Information Security, Cybersecurity and Privacy Protection—Physically Unclonable Functions. ISO: Geneva, Switzerland, 2020.

- NIST SP 800-193; Platform Firmware Resiliency Guidelines. NIST: Gaithersburg, MD, USA, 2018.

- ISO/IEC 20243-2:2023; Information technology—Open Trusted Technology Provider™ Standard (O-TTPS). ISO: Geneva, Switzerland, 2023.

- ISA/IEC 62443; Series of Standards: Industrial Automation and Control Systems Security (1–4). ISA: Durham, NC, USA, 2018.

- UL 2900-2-1:2017; Standard for Software Cybersecurity for Network-Connectable Products—Part 2-1: Particular Requirements for Network Connectable Components of Healthcare Systems. Underwriters Laboratories: Durham, NC, USA, 2017.

- Möller, D.P. NIST cybersecurity framework and MITRE cybersecurity criteria. In Guide to Cybersecurity in Digital Transformation: Trends, Methods, Technologies, Applications and Best Practices; Springer: Berlin/Heidelberg, Germany, 2023; pp. 231–271.

- Kalogeraki, E.-M.; Polemi, N. A taxonomy for cybersecurity standards. J. Surveill. Secur. Saf. 2024, 5, 95–115.

- Djebbar, F.; Nordström, K. A comparative analysis of industrial cybersecurity standards. IEEE Access 2023, 11, 85315–85332.

- Cohen, B.; Albert, M.G.; McDaniel, E.A. The need for higher education in cyber supply chain security and hardware assurance. Int. J. Syst. Softw. Secur. Prot. (IJSSSP) 2018, 9, 14–27.

- Benson, V.; Furnell, S.; Masi, D.; Muller, T. Regulation, Policy and Cybersecurity. 2021. Available online: https://static1.squarespace.com/static/5f8ebbc01b92bb238509b354/t/659d51bd850d012c9eb1aaad/1704808898551/Discribe%2Breport%2BRegulation%2BPolicy%2Band%2BCybersecurity.pdf (accessed on 10 July 2025).

- Cains, M.G.; Flora, L.; Taber, D.; King, Z.; Henshel, D.S. Defining cyber security and cyber security risk within a multidisciplinary context using expert elicitation. Risk Anal. 2022, 42, 1643–1669.

- Andersson, A.; Hedström, K.; Karlsson, F. Standardizing information security–a structurational analysis. Inf. Manag. 2022, 59, 103623.

- Janovsky, A.; Jancar, J.; Svenda, P.; Chmielewski, Ł.; Michalik, J.; Matyas, V. sec-certs: Examining the security certification practice for better vulnerability mitigation. Comput. Secur. 2024, 143, 103895.

- Wen, S.-F.; Katt, B. Exploring the role of assurance context in system security assurance evaluation: A conceptual model. Inf. Comput. Secur. 2024, 32, 159–178.

- Spears, J.L.; Barki, H.; Barton, R.R. Theorizing the concept and role of assurance in information systems security. Inf. Manag. 2013, 50, 598–605.

- El Amin, H.; Samhat, A.E.; Chamoun, M.; Oueidat, L.; Feghali, A. An integrated approach to cyber risk management with cyber threat intelligence framework to secure critical infrastructure. J. Cybersecur. Priv. 2024, 4, 357–381.

- Dekker, M.; Alevizos, L. A threat-intelligence driven methodology to incorporate uncertainty in cyber risk analysis and enhance decision-making. Secur. Priv. 2024, 7, e333.

- Aljumaiah, O.; Jiang, W.; Addula, S.R.; Almaiah, M.A. Analyzing cybersecurity risks and threats in IT infrastructure based on NIST framework. J. Cyber Secur. Risk Audit 2025, 2025, 12–26.

- Wen, S.-F.; Katt, B. A quantitative security evaluation and analysis model for web applications based on OWASP application security verification standard. Comput. Secur. 2023, 135, 103532.

- Trusted Computing Group (TCG). Implementing Hardware Roots of Trust; White Paper; TCG: Beaverton, OR, USA, 2019; Available online: https://trustedcomputinggroup.org/resource/implementing-hardware-roots-of-trust/ (accessed on 10 July 2025).

- ISO/IEC 27001:2022; Information Security, Cybersecurity and Privacy Protection—Information Security Management Systems—Requirements. ISO: Geneva, Switzerland, 2022. Available online: https://www.iso.org/standard/27001 (accessed on 8 October 2025).

- ISO/SAE 21434:2021; Road Vehicles—Cybersecurity Engineering. International Organization for Standardization (ISO): Geneva, Switzerland; SAE International: Warrendale, PA, USA, 2021.

- RTCA DO-326A; Airworthiness Security Process Specification. RTCA, Inc.: Washington, DC, USA, 2014.

- Honnibal, M.; Montani, I.; Landeghem, S.V.; Boyd, A. spaCy: Industrial-Strength Natural Language Processing in Python (v3.5). J. Open Source Softw. 2020, 5, 123–132.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Guo, J.L.; Steghöfer, J.-P.; Vogelsang, A.; Cleland-Huang, J. Natural language processing for requirements traceability. In Handbook on Natural Language Processing for Requirements Engineering; Springer: Cham, Switzerland, 2025; pp. 89–116.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. arXiv 2019, arXiv:1908.10084.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Harris, C.R.; Millman, K.J.; Van Der Walt, S.J.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N.J. Array programming with NumPy. Nature 2020, 585, 357–362.

- Camurati, G.; Poeplau, S.; Muench, M.; Hayes, T.; Francillon, A. Screaming channels: When electromagnetic side channels meet radio transceivers. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).