1. Introduction

The Internet of Things (IoT) has transformed our interaction with the environment, offering unprecedented connectivity and data collection capabilities. This technological advancement, however, raises significant privacy concerns, particularly regarding the vast amounts of personal data generated and processed by IoT devices [1]. As IoT systems become more pervasive in our daily lives, from smart homes to wearable devices, the need for robust privacy-preserving mechanisms becomes increasingly critical.

Differential privacy (DP) has emerged as a promising approach to protect individual privacy while enabling meaningful data analysis [2]. By adding carefully calibrated noise to data or query results, DP provides strong mathematical guarantees against identifying individuals within a dataset. This matters in settings such as smart homes, where vast amounts of sensitive data are collected, often without user awareness. DP allows data to be collected with privacy guarantees before it is transmitted from local devices to cloud services or third parties. For instance, Grashöfer et al. [3] focus on allowing users to control data shared across cloud platforms. However, implementing DP in IoT systems presents unique challenges, particularly in terms of user experience and control [4].

Motivation. DP offers strong privacy guarantees; however, users often struggle to understand its implications and effects on their data [5]. Cummings et al. [5] found that users often misinterpret traditional explanations of differential privacy, and some become less likely to share data when presented with abstract or vague descriptions. Their study demonstrated that without concrete user-facing representations, users struggle to understand how DP mechanisms protect their data, highlighting a key usability gap in existing solutions. This disconnect between technical aspects and user comprehension can lead to mistrust or misuse of privacy-preserving systems. It motivates our work in designing visual, example-driven controls that translate mathematical privacy parameters into relatable settings.

Contributions. We address this gap by proposing a user-centric approach to implementing Local Differential Privacy (LDP) in IoT contexts. We choose LDP over Global Differential Privacy because it provides stronger privacy guarantees by applying noise to data on the user’s device before sharing, rather than relying on a central entity for privacy protection [4]. LDP ensures that sensitive data remains private in shared cloud environments, preventing misuse by service providers. Our contributions are as follows:

We review current approaches to implementing DP in IoT systems and analyze the impact of DP on user experience in IoT contexts.

We present a novel interface that empowers users to adjust LDP algorithm parameters to enhance their control over privacy settings while maintaining mathematical rigor, which aligns with the growing recognition that users should be empowered to make informed decisions about their data privacy [1].

We demonstrate the practical application of our approach using real-world voice data collected from IoT devices, as this data type is both highly relevant to daily life and potentially revealing of personal information. Our code is publicly available for download [6].

We develop intuitive visualizations to help users understand the impact of privacy settings on their data.

We conduct a usability study to evaluate user comprehension, interaction success, and feedback on the interface design.

Outline. The rest of this paper is organized as follows. Section 2 reviews the related work. Section 3 describes the preliminaries on differential privacy. Section 4 explains our privacy mechanism and its implementation. Section 5 provides the details of our user interface design and prototype development. Section 6 presents our user study to confirm its effectiveness and usability. Section 7 discusses the findings and limitations. Finally, Section 8 draws conclusions and points out future work.

Materials. We implemented our approach and conducted experiments on a MacBook Pro M2 with 16 GB of memory. We used Python 3.11 with NumPy and Matplotlib to implement differential privacy operations and visualizations (Section 4). To build the prototype interface, we used Figma for front-end interaction design (Section 5). We conducted our user testing locally in a browser-based interface simulated via interactive HTML exports of the Figma prototype (Section 6).

2. Related Work

2.1. User-Centric Privacy in IoT

Kounoudes and Kapitsaki [7] systematically mapped user-centric privacy-preserving approaches in IoT, establishing correlations between implementation strategies and regulatory compliance. Their findings demonstrate that effective privacy solutions must balance technical robustness with user accessibility. Chhetri and Genaro Motti [8] advanced this work through empirical analysis of privacy controls in smart homes. They developed a comprehensive framework emphasizing data-centric control mechanisms, interface transparency, and context-aware privacy adaptation. Their research established that privacy needs vary significantly based on device type, location, and user activity. This insight directly informed our implementation of adaptive privacy controls for voice data processing. Their framework provides a validated approach to designing user-centric privacy controls in IoT environments, offering crucial insights into user preferences and privacy concerns. We adapted their framework to incorporate DP controls while aligning with user expectations in IoT scenarios.

2.2. LDP Mechanisms for IoT

Local Differential Privacy has emerged as a promising solution for IoT privacy preservation, offering mathematical guarantees without centralized trust requirements. Osia et al. [9] demonstrated LDP’s effectiveness through a hybrid deep learning framework that preserves user privacy in speech-based services by separating sensitive and non-sensitive information before transmission. Their work established the viability of combining machine learning techniques with DP in IoT applications. Wang et al. [4] explored the implementation of LDP to address key challenges in time-series data protection through adaptive privacy budget allocation and temporal correlation preservation. Yang and Al-Masri [10] introduced a user-centric optimization method that allows users to control privacy trade-offs through weighted criteria. Their approach to parameter optimization showed that user preferences could be effectively incorporated into DP implementations without compromising mathematical guarantees. These implementations influenced our design decisions regarding privacy mechanisms and parameter settings.

2.3. Privacy Interface Design

Cummings et al. [5] investigated user expectations and understanding of DP. Their research examined how different ways of describing DP influenced users’ willingness to share data. Through systematic investigation of user expectations, they found that the effectiveness of DP communication depends heavily on how well it addresses individuals’ specific privacy concerns. Their investigation of how users interpret and respond to different privacy descriptions helped shape our approach to interface design. Their work emphasized that privacy interfaces should connect technical privacy guarantees to users’ practical privacy concerns rather than focusing on mathematical definitions. This insight guided our development of a visual control system that demonstrates privacy impacts through concrete examples.

3. Differential Privacy Preliminaries

Differential privacy is a rigorous mathematical framework that quantifies the privacy guarantees of an algorithm. It ensures that the output of an algorithm reveals only a little about any individual in the dataset [2]. The fundamental equation of DP is

$$\Pr[A(D) \in S] \le e^{\varepsilon} \Pr[A(D') \in S] + \delta,$$

where $A$ is the algorithm, $D$ and $D'$ are two datasets that differ by one entry, $S$ is any subset of possible outputs, $\varepsilon$ (epsilon) is the privacy parameter, and $\delta$ (delta) is a small probability of failure. The privacy budget, denoted by $\varepsilon$, quantifies the trade-off between privacy protection and data utility.

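There are two key properties for DP: (1) Sequential Composition: For $k$ mechanisms $A_1, A_2, \ldots, A_k$ applied sequentially to the same dataset $D$, where each $A_i$ is $\varepsilon_i$-differentially private and $A_i(D)$ represents the output after noise is added, the result satisfies $(\varepsilon_1 + \varepsilon_2 + \cdots + \varepsilon_k)$-DP. (2) Parallel Composition: If these mechanisms are applied to disjoint subsets of the data, the result satisfies $\max(\varepsilon_1, \varepsilon_2, \ldots, \varepsilon_k)$-DP [11].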
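The two composition rules reduce to simple budget arithmetic. The sketch below illustrates them with hypothetical per-mechanism budgets; the function names are illustrative and do not come from the paper's code.

```python
def sequential_budget(epsilons):
    """Total epsilon when all mechanisms run on the same data (budgets add up)."""
    return sum(epsilons)


def parallel_budget(epsilons):
    """Total epsilon when each mechanism sees a disjoint subset (largest budget wins)."""
    return max(epsilons)


# Hypothetical budgets for three mechanisms
budgets = [0.25, 0.5, 1.0]
print(sequential_budget(budgets))  # 1.75
print(parallel_budget(budgets))    # 1.0
```

Sequential use on the same data consumes budget quickly, which is why splitting data into disjoint subsets, where possible, keeps the overall guarantee tighter.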

Laplace Mechanism. The Laplace mechanism is one of the foundational techniques in DP, designed to achieve pure differential privacy. It works by adding noise drawn from a Laplace distribution to the output of a function. The Laplace mechanism is defined as

$$A(D) = f(D) + \mathrm{Lap}\!\left(\frac{\Delta f}{\varepsilon}\right),$$

where $A(D)$ represents the output after noise is added, $f(D)$ is the actual function result, $\Delta f$ is the sensitivity of the function, $\varepsilon$ is the privacy parameter, and $\mathrm{Lap}(b)$ is the Laplace distribution with scale parameter $b$. The probability density function of the Laplace distribution is

$$\mathrm{Lap}(x \mid \mu, b) = \frac{1}{2b} \exp\!\left(-\frac{|x - \mu|}{b}\right),$$

where $\mu$ is the location parameter (the center of the distribution, which is zero for the mechanism above), and $b$ is the scale parameter.
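As a minimal sketch of the Laplace mechanism described above, the following function adds zero-centered Laplace noise with scale equal to the sensitivity divided by epsilon. The function name, the default sensitivity of 1, and the optional `rng` argument are assumptions for illustration and are not taken from the paper's code.

```python
import numpy as np


def apply_laplace_mechanism(data, epsilon, sensitivity=1.0, rng=None):
    """Return data plus Laplace noise with scale b = sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = np.random.default_rng() if rng is None else rng
    values = np.asarray(data, dtype=float)
    scale = sensitivity / epsilon  # smaller epsilon -> larger noise scale
    return values + rng.laplace(loc=0.0, scale=scale, size=values.shape)


# Example: privatize three numeric readings with epsilon = 0.5
noisy = apply_laplace_mechanism([10.0, 12.5, 9.0], epsilon=0.5)
print(noisy)
```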

Gaussian Mechanism. The Gaussian mechanism provides more flexibility by introducing the concept of approximate differential privacy through the inclusion of a failure probability parameter $\delta$. The Gaussian mechanism is defined as

$$A(D) = f(D) + \mathcal{N}(0, \sigma^2),$$

where $\mathcal{N}(0, \sigma^2)$ is the Gaussian distribution with mean 0 and variance $\sigma^2$, and $\sigma$ is calibrated based on the sensitivity of the function and the desired privacy parameters. The standard deviation is calculated as

$$\sigma = \frac{\sqrt{2 \ln(1.25/\delta)}\,\Delta f}{\varepsilon},$$

where $\Delta f$ is the sensitivity of the function. The Gaussian mechanism’s ability to maintain data utility while preserving privacy makes it especially valuable for iterative processes and large-scale statistical analyses.

4. Proposed Privacy Mechanism

4.1. Choice of Gaussian Mechanism

The Laplace mechanism provides pure DP with noise based on $\varepsilon$, offering strong privacy guarantees but potentially higher noise. The Gaussian mechanism introduces an additional parameter $\delta$ for approximate DP, often achieving better accuracy, especially with continuous or high-dimensional data. In our study, as in IoT environments generally, the Gaussian mechanism may be more appropriate when dealing with continuous sensor data streams and real-time processing requirements, despite its higher resource demands. The Gaussian mechanism’s ability to maintain data utility while providing strong privacy guarantees makes it particularly well suited for voice command processing and other continuous IoT data streams.

4.2. Code and Dataset

We use the voice command dataset [12] containing user interactions with a voice-controlled virtual assistant. The metadata file describes audio recordings from multiple speakers, including speakers’ properties and audio file paths with 9854 records. Each record includes pre-anonymized age ranges (e.g., “22–40”) [13], gender, and English fluency level (marked as “advanced”). The command data consists of two main categorical components: actions (such as “activate” and “deactivate”) and categories (including “music” and “lights”). The pre-existing age anonymization through range categorization aligns with our objective of enhancing privacy protection through DP mechanisms. Our Python code is publicly available for download.

4.3. Privacy Mechanism Implementation

Assumption. We assume that Gaussian noise is appropriately scaled for categorical data, with numerical encoding preserving category relationships. Users within the same age range and fluency level are assumed to have similar command patterns. We use a standard delta value ($\delta = 1 \times 10^{-5}$) and apply differential privacy to provide additional protection beyond the pre-anonymized age data.

We implement the Gaussian mechanism for voice command privacy preservation in our apply_gaussian_mechanism(data, epsilon, delta = 1e-5) function as shown in Listing 1, where data is the original sensitive data we wish to privatize, and epsilon is the privacy parameter, which controls the trade-off between privacy and accuracy. The mechanism works by generating random noise from a zero-mean normal distribution whose standard deviation, computed as sqrt(2 * np.log(1.25/delta))*(sensitivity/epsilon), is proportional to the sensitivity of the data and inversely proportional to the privacy budget.

Listing 1. Gaussian Mechanism.
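A minimal sketch of a function matching the signature and noise formula described in the text is given below. The `sensitivity` and `rng` keyword arguments, the default sensitivity of 1, and the input validation are assumptions added for illustration; they are not confirmed to be part of the published listing.

```python
import numpy as np


def apply_gaussian_mechanism(data, epsilon, delta=1e-5, sensitivity=1.0, rng=None):
    """Return data plus zero-mean Gaussian noise calibrated to (epsilon, delta)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if not 0 < delta < 1:
        raise ValueError("delta must lie strictly between 0 and 1")
    rng = np.random.default_rng() if rng is None else rng
    values = np.asarray(data, dtype=float)
    # Standard deviation from the text: sqrt(2 ln(1.25/delta)) * sensitivity / epsilon
    sigma = np.sqrt(2 * np.log(1.25 / delta)) * (sensitivity / epsilon)
    noise = rng.normal(loc=0.0, scale=sigma, size=values.shape)
    return values + noise


# Example: privatize encoded category indices with epsilon = 1
print(apply_gaussian_mechanism([0, 1, 2, 3], epsilon=1.0))
```

With the default delta of 1e-5 and a sensitivity of 1, epsilon = 1 corresponds to a noise standard deviation of roughly 4.84, so the sensitivity assumed for encoded categories strongly affects how often values change.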

Sensitivity is the maximum change in the output if a single record in the dataset is modified. A smaller epsilon provides stronger privacy guarantees but adds more noise. The utility measurement algorithm employs a normalized relative error in a [0, 1] range, where 1 indicates perfect utility (no difference) and values approaching 0 indicate significant differences. Hence, stronger privacy protection leads to lower data utility, while maintaining high utility requires accepting weaker privacy guarantees.
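The exact utility formula is not given in the text. One possible normalization, shown purely as an illustrative sketch, scales the absolute error by the range of the original values, clips it to [0, 1], and subtracts the mean from 1 so that identical data scores 1. The function name `utility_score` and the choice of normalizer are assumptions.

```python
import numpy as np


def utility_score(original, privatized):
    """Return 1 - mean normalized error, in [0, 1]; 1 means no difference."""
    original = np.asarray(original, dtype=float)
    privatized = np.asarray(privatized, dtype=float)
    span = original.max() - original.min()
    if span == 0:
        span = 1.0  # avoid division by zero when all original values are equal
    relative_error = np.abs(original - privatized) / span
    return float(1.0 - np.clip(relative_error, 0.0, 1.0).mean())


print(utility_score([0, 1, 2], [0, 1, 2]))  # 1.0
print(utility_score([0, 2], [1, 2]))        # 0.75
```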

Voice command data undergoes numerical encoding for Gaussian mechanism application. Each category receives a unique index (e.g., “music” = 0, and “lights” = 1) through a bidirectional mapping system. After noise addition, values are rounded to the nearest integer and converted back to categories. For example, a noisy value of 0.7 rounds to 1, transforming “music” to “lights”. Although the differential privacy literature generally prefers projection onto the space of all possible outputs over rounding, we chose rounding after careful consideration of several factors: (a) We are dealing with voice commands that need to map to clear categories, such as “music” and “lights”. (b) Users need to understand what their commands might change into, and rounding keeps the categories discrete and interpretable, which is essential for the user interface. (c) In IoT settings, computational efficiency and simplicity are crucial. (d) This trade-off is consistent with our focus on usability.

This encoding method preserves the ordinal relationships between categories while enabling the application of the Gaussian mechanism, though it is important to note that the original categorical data may not have inherent ordinal properties. The observed category transformations are thus influenced both by the magnitude of the added noise and the specific numerical encoding assigned to each category. While our implementation uses direct numerical encoding with the Gaussian mechanism, Format-Preserving Encryption could offer a more sophisticated approach by maintaining semantic relationships between categories. This would ensure transformations occur between semantically related commands (e.g., “increase volume” to “decrease volume” rather than “change language”).
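The encode, perturb, round, and decode steps described above can be sketched as follows. The category list and its order beyond “music” = 0 and “lights” = 1, the sensitivity of 1, and the clipping of out-of-range indices to the nearest valid category are assumptions made so that the example is self-contained; the published code may handle these details differently.

```python
import numpy as np

# Illustrative categories; only "music" = 0 and "lights" = 1 are given in the text
CATEGORIES = ["music", "lights", "volume", "heat"]
TO_INDEX = {name: i for i, name in enumerate(CATEGORIES)}
TO_NAME = {i: name for name, i in TO_INDEX.items()}


def privatize_categories(commands, epsilon, delta=1e-5, sensitivity=1.0, rng=None):
    """Encode categories as indices, add Gaussian noise, round, and decode."""
    rng = np.random.default_rng() if rng is None else rng
    encoded = np.array([TO_INDEX[c] for c in commands], dtype=float)
    sigma = np.sqrt(2 * np.log(1.25 / delta)) * (sensitivity / epsilon)
    noisy = encoded + rng.normal(0.0, sigma, size=encoded.shape)
    # Round to the nearest index (exact ties go to the even integer), then
    # clip so that every value maps back to a known category (assumption)
    indices = np.clip(np.rint(noisy), 0, len(CATEGORIES) - 1).astype(int)
    return [TO_NAME[int(i)] for i in indices]


def transformation_rate(original, privatized):
    """Fraction of commands whose category changed after privatization."""
    changed = sum(o != p for o, p in zip(original, privatized))
    return changed / len(original)


commands = ["music", "lights", "volume", "heat"]
private = privatize_categories(commands, epsilon=1.0)
print(private, transformation_rate(commands, private))
```

Because the noise acts on indices, the categories a command can turn into depend on the arbitrary index order, which is the encoding effect noted above.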

4.4. Setting the Privacy Level

Our implementation helps to visualize the effect of different values of $\varepsilon$ on the privatized data, with $\varepsilon$ ranging from 0.01 to 10. As shown in Figure 1, for small $\varepsilon$ values (high privacy), the privatized data looks very noisy and different from the original data. As $\varepsilon$ increases, the privatized data starts to resemble the original data (blue curve) more closely, indicating a decrease in privacy but an increase in utility. This visualization helps users understand and select appropriate privacy levels based on their specific requirements for data protection and utility.

Regarding the Gaussian mechanism and the choice of $\varepsilon > 1$, we acknowledge that $\varepsilon < 1$ is commonly recommended in the differential privacy literature. However, our choice of larger $\varepsilon$ values is supported by both theoretical and practical considerations: (a) From a theoretical perspective, the Gaussian mechanism provides $(\varepsilon, \delta)$-differential privacy guarantees through the interaction of both parameters. The privacy guarantee remains mathematically sound with larger $\varepsilon$ values when properly accounting for $\delta$. (b) From a practical perspective, real-world implementations have demonstrated the effectiveness of larger $\varepsilon$ values. For example, Wang et al. [4] showed that RAPPOR [14] achieves $\varepsilon$-LDP for $\varepsilon \approx 4.39$. Our extensive experiments show that the privacy–utility trade-off remains meaningful with these values.

We consider different privacy levels (PLs) ranging from 1 to 10, with $\varepsilon = 1/\mathrm{PL}$ and $\delta = 1 \times 10^{-5}$. PLs significantly impact category transformations, showing a clear pattern of increasing changes as privacy strengthens. As shown in Table 1, at Level 1 ($\varepsilon = 1.000$), only 33.3% of categories change, with “music”, “lights”, and “volume” typically unchanged. As privacy increases, transformations become more frequent: Level 3 ($\varepsilon = 0.333$) shows a 64.3% transformation rate with “music” changing to “volume”, Level 4 ($\varepsilon = 0.250$) reaches 68.0% with more pronounced changes like “music” to “heat”, and Level 7 ($\varepsilon = 0.143$) peaks at a 73.6% transformation rate with almost all categories changing. This progression shows that adjacent categories in the original mapping are more likely to be interchanged than distant ones, and the probability of change increases non-linearly with the privacy level. The data also indicates that category stability consistently decreases as epsilon values become smaller, reflecting the inherent trade-off between privacy protection and data utility.
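The reported pairs (Level 1 with epsilon 1.000, Level 3 with 0.333, Level 4 with 0.250, and Level 7 with 0.143) are consistent with epsilon being the reciprocal of the privacy level. Under that assumption, and additionally assuming a sensitivity of 1, the following sketch shows how the Gaussian noise scale grows with the privacy level. The function names are illustrative.

```python
import math


def epsilon_for_level(level):
    """Map a privacy level to epsilon, assuming epsilon = 1 / level."""
    if level < 1:
        raise ValueError("privacy level must be at least 1")
    return 1.0 / level


def gaussian_sigma(epsilon, delta=1e-5, sensitivity=1.0):
    """Noise standard deviation of the Gaussian mechanism for (epsilon, delta)."""
    return math.sqrt(2 * math.log(1.25 / delta)) * sensitivity / epsilon


# The four levels exposed in the interface
for level in (1, 3, 4, 7):
    eps = epsilon_for_level(level)
    print(f"PL {level}: epsilon = {eps:.3f}, sigma = {gaussian_sigma(eps):.2f}")
```

Under these assumptions the noise scale grows linearly with the privacy level, while the category change rate saturates because rounded indices can only move within a finite set of categories.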

Although our research can measure specific change probabilities (such as action changes ranging from 32.4% to 69.1%), we deliberately excluded these metrics from the user interface for two reasons: First, these probabilities fluctuate with the continuous growth of the dataset, making fixed percentages potentially misleading. Second, users interact with discrete categorical data (specific commands and categories), so presenting continuous probability metrics might create a disconnect between user experience and privacy expectations. Instead, our interface focuses on visualizing the privacy–utility relationship through discrete, practical examples of how commands might change, providing users with a more intuitive and relevant understanding of privacy impacts on their voice commands.

4.5. Privacy Disclosure Attacks

To address the privacy disclosure threat, we model a re-identification attack in which an attacker tries to infer a user’s original voice command by observing the privatized version shown in the interface. For instance, if a user issues the command “play jazz music”, and, under minimal privacy, the transformed command remains close to the original (e.g., “play music”), an attacker with prior knowledge of the user’s behavior might guess the true command. In contrast, under enhanced privacy, the transformed command may become unrelated (e.g., “turn off light”), reducing attacker confidence.

To assess the effectiveness of our approach against potential adversarial inference attacks, we interpret the category change rate under each privacy level as the inverse likelihood of successful re-identification. A higher transformation rate implies that an attacker has less chance to infer the true command, thereby validating the privacy-preserving strength of the Gaussian mechanism. As shown in Table 1, increasing the privacy level from 1 ($\varepsilon = 1.000$) to 7 ($\varepsilon = 0.143$) leads to a significant increase in transformation rate from 33.2% to 73.6%, indicating reduced inference accuracy and stronger privacy protection.

We interpret the observed category transformation rates under varying privacy levels as a proxy for evaluating adversarial inference risk. In a re-identification scenario, an attacker aims to guess the original command from the privatized output. Higher transformation rates reduce this likelihood.

Table 1 shows that at $\varepsilon = 0.143$ (Enhanced Privacy), 73.6% of the categories change after noise is applied, meaning the attacker has at most a 26.4% chance of correctly inferring the original input by taking the privatized output at face value. In contrast, at $\varepsilon = 1.000$, more than 66% of the categories remain unchanged, increasing the risk of re-identification. This supports our claim that our user-controlled Gaussian mechanism effectively resists disclosure attacks, especially at higher privacy levels.

5. Privacy Control User Interface

5.1. Interface Design Pattern Analysis

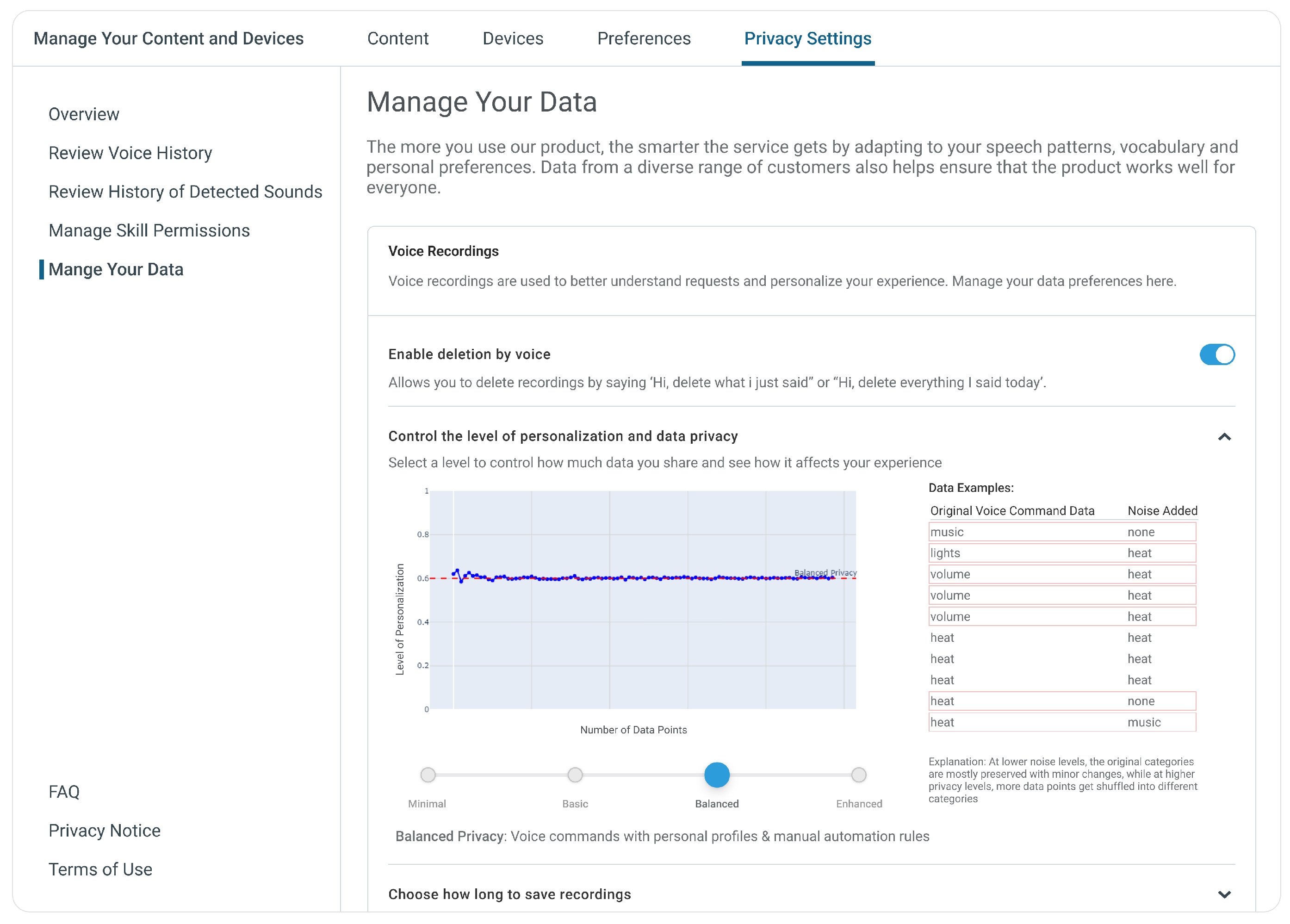

To develop an effective privacy control interface for IoT devices, we first analyzed existing smart home platforms to understand successful design patterns and user interaction models. Our analysis focused on Google Home, Amazon Echo, and Apple HomePod, examining their publicly available documentation [15,16,17] and interface implementations as of early 2024, as shown in Figure 2. Table 2 and Table 3 present a detailed comparison of UI patterns and privacy features across these platforms, revealing key differences in their approaches to privacy management. Key findings from our analysis that informed our design decisions include the following:

Location and accessibility of the interface: Table 3 shows three distinct approaches to privacy control placement: Amazon’s Privacy Hub [20], Google’s distributed settings across the Home app and Google Account [19], and Apple’s nested iOS settings [21]. We chose to follow Amazon’s centralized hub approach for our design based on the principle that consolidating privacy controls in a single, dedicated location would make settings easier to find and manage. This design choice aims to reduce the cognitive load on users by eliminating the need to navigate multiple menus or remember different setting locations.

Control granularity: Amazon leads with high granularity, Google offers moderate control, and Apple maintains low granularity [16,17]. Our interface balances these approaches by implementing a four-level privacy control system (Minimal to Enhanced) with detailed but accessible explanations, learning from the trade-offs observed in existing implementations.

Educational components: There are varying approaches to user education: Amazon’s extensive explanations [18], Google’s moderate tooltip approach [19], and Apple’s minimal guidance. Given the complexity of DP as a new concept for most users, we adopted and extended Amazon’s comprehensive educational approach. We implemented detailed explanations and concrete before-and-after examples that demonstrate privacy impacts on user data, making abstract concepts more tangible and actionable for users.

Based on these findings, we developed a privacy control interface that combines the most effective elements from each platform while addressing their limitations. Our interface emphasizes the following:

Table 2. Comparison of UI patterns across Google Home, Amazon Echo, and Apple HomePod.

| UI Pattern | Google Home (Google) | Amazon Echo (Alexa) | Apple HomePod (Siri) |
|---|---|---|---|
| Design Language | Material Design | Custom Alexa Design | iOS Design |
| Main Navigation | Bottom navigation | Tab-based navigation | Nested iOS settings |
| Privacy Controls Location | Google Home app and Google Account | Centralized Privacy Hub | iOS settings app |
| Granularity of Controls | Moderate | High | Low |
| Use of Toggle Switches | Extensive | Extensive | Extensive |
| Search Functionality | Yes | Yes | iOS settings search |
| Voice Command Integration | Moderate | High | Limited |
| Contextual Help | Tooltips and help text | Extensive explanations | Minimal |
| Timeline View for History | Yes | Yes | No |
| Discoverability of Settings | Moderate | High | Low |
| Simplicity vs. Complexity | Balanced | More complex | Simpler |

Our comparative analysis of leading smart home platforms revealed key design patterns that informed our privacy control interface, which makes differential privacy concepts accessible to users while maintaining robust functionality. This design approach aims to bridge the gap between complex privacy mechanisms and user understanding through clear organization, intuitive controls, and practical examples.

Table 3. App privacy feature comparison.

| Feature | Google Home (Google) | Amazon Echo (Alexa) | Apple HomePod (Siri) |
|---|---|---|---|
| Visual listening indicator | ✓ | ✓ | ✓ |
| Delete voice history | App, web interface | App, voice command | iOS settings |
| Saved data of recordings | Opt-in | Opt-out | Opt-in |
| Voice command to delete recent activity | ✓ | ✓ (e.g., “Alexa, delete what I just said”) | × |
| Granular deletion options | Moderate | High | N/A |
| Centralized privacy controls | Google Home app | Alexa Privacy Hub | iOS device settings |
| On-device processing emphasis | Moderate | Low | High |

5.2. UI Design and Prototype Development

We built an interactive prototype in Figma that demonstrates the user interface for adjusting privacy settings [22]. Figure 3 and Figure 4 present our privacy control widget design featuring desktop and mobile views. The interface design language builds upon the foundation of Amazon’s Privacy Hub, chosen for its intuitive navigation and comprehensive privacy control explanations.

Our interface design prioritizes accessibility without sacrificing technical rigor. By abstracting complex mathematical concepts while maintaining transparency about their effects, we help users understand privacy implications without requiring expertise in differential privacy. The interface achieves this balance by displaying immediate, practical consequences of privacy choices, thereby reducing cognitive load while ensuring informed decision-making.

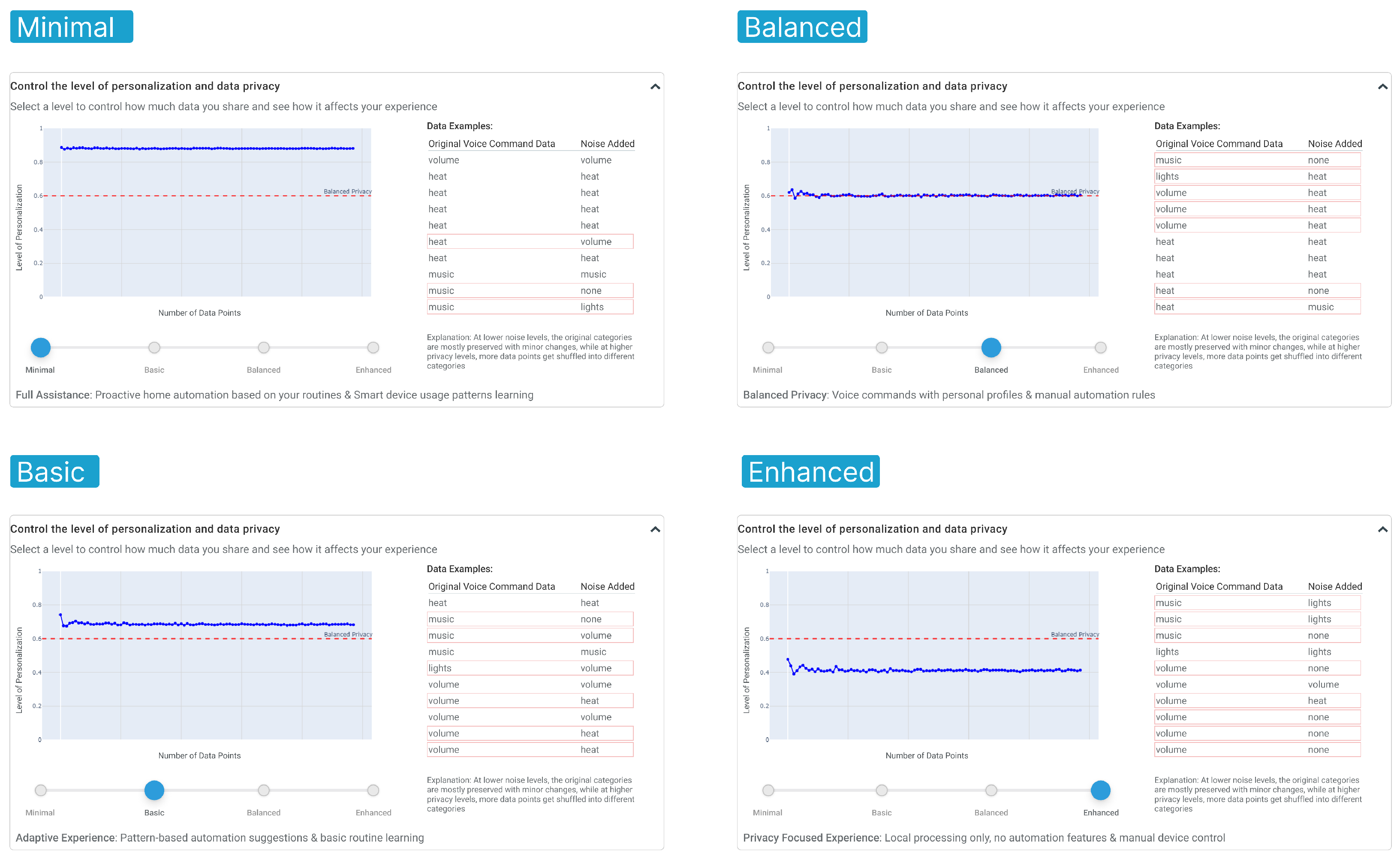

The interface presents a spectrum of four privacy levels (as shown in Figure 5):

Minimal (Privacy Level 1, $\varepsilon = 1$): Emphasizes full assistance with proactive automation.

Basic (Privacy Level 3, $\varepsilon = 0.33$): Balances automation with limited privacy protection.

Balanced (Privacy Level 4, $\varepsilon = 0.25$): Recommended setting for most users.

Enhanced (Privacy Level 7, $\varepsilon = 0.14$): Maximizes privacy through local processing only.

Our choices for privacy levels (1, 3, 4, and 7) were primarily driven by visualization effectiveness and user comprehension. Through extensive experimentation, we observed that for larger values of $\varepsilon$, the privatized data points show nearly complete overlap with the original data, making it difficult to demonstrate meaningful privacy protection. For smaller values of $\varepsilon$, excessive data scattering occurs, obscuring the underlying patterns. Instead of using complex mathematical terminology, the interface translates differential privacy concepts into user-friendly terms, replacing “Utility” with “Personalization”. This establishes a clear relationship: higher personalization corresponds to higher data utility requirements. The primary visualization displays the relationship between data points and personalization levels, with a “Balanced Privacy” threshold indicating the recommended trade-off point. We intentionally abstract specific data point numbers to help users understand relationships while preventing arbitrary numbers from influencing decisions.

Figure 5. Desktop widget with 4-level control.

To enhance user understanding, we incorporate a “Data Examples” table positioned on the right side of the interface. This educational element provides concrete before-and-after examples demonstrating how differential privacy processing affects user data. The table presents original command categories alongside their noise-added counterparts, using red highlighting to emphasize transformed data points. For example, a command initially categorized as “music” might transform to “lights” or “none” at higher privacy levels. This visualization illustrates how increased privacy through noise addition can alter command categorization specificity, making the abstract concept of differential privacy tangible through familiar examples. This proposed design approach empowers users to make informed decisions about their data privacy while understanding the practical implications for their device’s functionality, achieving our goal of making the complex concept of differential privacy more tangible and actionable for the average user.

6. User Study

We validate our proposed user experience design through a user study to confirm its effectiveness and usability in real-world scenarios.

6.1. Methodology and Setup

Although our interface design emphasizes accessibility, the usability study revealed that, while the overall interaction was intuitive, certain components, such as the transformation table and slider explanations, require simplification or repositioning. The study sought to confirm that abstract privacy settings can be made tangible and intuitive through visual feedback and command transformation examples.

To evaluate the usability and interpretability of our privacy control interface, we conducted a structured user study with six participants, male and female and of different ethnicities, from diverse technical and professional backgrounds (Master’s in statistics and data science, 10+ years as cybersecurity and sales product manager in South America, electronics and communication engineer with 2.5 years in SAP, Bachelor’s and Master’s in psychology and education psychology working in educational consulting). Participants had basic experience using smart home devices (e.g., Alexa and Google Home) and were recruited through convenience sampling (students, colleagues, and early adopters).

Our test setup includes a prototype (the interactive interface developed in Figma, with desktop and mobile views), tasks (four core usability tasks performed in a 10–15 min think-aloud session), interview questions, and tools (task sheet, observation notes, and optional recording with consent).

Task Scenarios. Each participant completed a series of think-aloud tasks using our interactive prototype (see Figure 5). During this 10–15 min session, we asked participants to think out loud as they used the interface, explaining what they were looking at, what they were trying to do, and what was going through their mind, especially if something was confusing.

Adjust Privacy Level: Interact with the four-level control (Minimal, Basic, Balanced, and Enhanced).

- (a) Task: Change the privacy level from Minimal to Enhanced.
- (b) Success criteria: User adjusts slider without help.

Interpret Privacy Graph: Explain the privacy vs. personalization trade-off curve.

- (a) Task: Describe what the graph shows.
- (b) Success criteria: User recognizes trade-off between privacy and personalization.

Understand Data Transformations: Interpret how commands like “music” change to “heat”.

- (a) Task: Explain what is happening in the before/after command table.
- (b) Success criteria: User identifies that commands are modified to protect privacy.

Choose a Privacy Setting: Pick a level and justify the choice.

- (a) Task: Select a level and explain their choice.
- (b) Success criteria: User justifies selection using privacy–utility reasoning.

Interview Questions. After the task completion, we performed a semi-structured interview by asking the following questions:

Can you describe what you think this interface is meant to help you do?

What did you find easy or difficult to understand in this interface?

How would you describe your sense of control over your data while using the interface?

Have you used privacy settings on smart home devices before? If so, how does this interface compare?

If you were designing this for others to use, what would you change or improve?

6.2. Quantitative Task Outcomes

Table 4 summarizes the results of our study. Task 1 (Adjusting the Privacy Level) had the highest completion rate, confirming that the interaction design was largely intuitive. Task 2 (Interpreting the Privacy Graph) revealed a split: half of the participants understood the personalization–privacy trade-off, while the others misread the axes or misunderstood the meaning of data points. Task 3 (Understanding Data Transformations) revealed consistent confusion. Only one participant identified the relationship between privacy level and voice command transformation; many misinterpreted the meaning of “noise added” and struggled with the tabular format. Lastly, Task 4 (Choosing a Privacy Level) showed that participants could operate the controls, but few applied reasoned trade-off logic. Most relied on naming intuition (e.g., “Balance sounds safe”) or misunderstood the implications of “Enhanced”.

6.3. Qualitative Insights

The study surfaced multiple constructive and positive themes, highlighting where the interface excelled and where refinements are needed.

Users found the interface visually clear and approachable. Across all tasks, participants complimented the overall layout, the simplicity of the navigation, and the interface’s resemblance to existing systems (e.g., Alexa and Google Home). The use of a centralized dashboard with visual feedback was well received.

“This interface is very clear and shows sufficient information in one page… better than Google’s.”

“I liked the visual feedback—moving the slider instantly shows changes.”

Several participants also found the graph-based personalization model more understandable than traditional privacy settings. They noted that it made the consequences of privacy choices more visible.

Explanations under the slider were ignored or misunderstood. Most users did not read the explanatory text beneath the privacy slider. This appeared to be due to both visual placement and technical wording. Several participants voiced a desire for practical, real-world examples of how privacy levels would affect their smart home experience:

“I’m not sure what I’m looking at… can I click?”

“Too technical… not sure what this means if you don’t know about privacy or data.”

This suggests relocating the explanatory content, possibly as interactive tooltips, and using scenario-based previews tied to each setting (e.g., “Your music suggestions may become less accurate”) to enhance clarity.

Transformation table was confusing and may be optional. Nearly all participants expressed confusion with the “Data Examples” table. Users were unclear on what “noise added” meant or how it affected actual device responses. While the table aimed to concretely demonstrate LDP in action, it often created more cognitive load than clarity.

“Volume is volume before and after… not sure what this means.”

“Is noise like background audio or something?”

Despite its difficulty, users recognized its intent. Future versions could either simplify the transformation logic or evaluate whether such a table is necessary at all, perhaps replacing it with animated or scenario-based demonstrations.

Scenario-based privacy and multi-tenant use cases emerged organically. Interestingly, several participants independently brought up contextual and multi-user privacy preferences, e.g., wanting different settings at home vs. work, or per-user configurations in shared households. Though not currently part of the system, this finding aligns well with emerging research in personalized privacy control.

“At home I want as much privacy as possible… in business, I’m fine giving more data.”

“If more people visited, I might change it.”

This is a promising direction for future work: designing adaptive privacy controls that shift based on user, context, or location.

6.4. Summary

Overall, participants found the interface engaging and more transparent than typical smart home privacy menus. While certain conceptual components (e.g., noise transformation) require refinement, the system made privacy feel actionable and less abstract. These results support the use of visual, interactive controls to demystify Local Differential Privacy in IoT systems.

7. Discussion and Limitations

Computational Overhead. We expect the computational overhead of real-time differential privacy mechanisms on resource-constrained IoT devices to be negligible: the mechanisms involve little heavy processing, and the processing power of IoT devices such as smart speakers and hubs is increasing. As discussed in Section 4.3, the Gaussian mechanism was implemented to privatize voice command data, using basic operations such as logarithm, square root, and random noise generation. The time complexity of the mechanism is $O(n)$, where $n$ is the number of data points to which noise is added. The space complexity is also $O(n)$, as we only store the input data and the generated noise vector. This means that the runtime and memory usage scale linearly with the data size, which is optimal and highly suitable for IoT scenarios that process small-to-moderate batches of sensor or command data.
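The linear cost described above can be illustrated with a minimal Python sketch. It is not the implementation from Section 4.3: it assumes the classical Gaussian calibration $\sigma = \Delta \sqrt{2 \ln(1.25/\delta)} / \varepsilon$ (valid for $0 < \varepsilon < 1$), and the function names, the numeric encoding of commands, and the parameter values are hypothetical choices made for illustration only.

```python
import math
import random


def gaussian_sigma(epsilon: float, delta: float, sensitivity: float = 1.0) -> float:
    """Noise scale for the classical Gaussian mechanism (assumes 0 < epsilon < 1)."""
    if not (0 < epsilon < 1) or not (0 < delta < 1):
        raise ValueError("classical calibration assumes 0 < epsilon < 1 and 0 < delta < 1")
    # Only a logarithm, a square root, and a division are needed.
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def privatize(values, epsilon, delta, sensitivity=1.0, rng=None):
    """Add independent Gaussian noise to each value in a single pass (O(n) time and space)."""
    rng = rng or random.Random()
    sigma = gaussian_sigma(epsilon, delta, sensitivity)
    return [v + rng.gauss(0.0, sigma) for v in values]


if __name__ == "__main__":
    # Hypothetical numeric encoding of four voice commands (an assumption, not the paper's encoding).
    encoded = [0.0, 1.0, 2.0, 1.0]
    print(privatize(encoded, epsilon=0.5, delta=1e-5, rng=random.Random(0)))
```

Each input value is visited once and one noise sample is drawn per value, which is where the linear time and space bounds come from.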

This theoretical analysis aligns with previous research. Dwork and Roth [23] emphasize that basic LDP mechanisms based on Laplace or Gaussian noise are designed to be computationally lightweight, relying primarily on simple arithmetic operations, making them feasible for edge deployment. Xu et al. [24] confirm that local noise addition incurs linear complexity and can be executed efficiently on edge devices with minimal delay. In our experiment with a real-world voice command dataset, we also found that noise could be added efficiently in real time and integrated into an interactive privacy interface that provides responsive feedback.

Semantic Preservation. In this work, utility is evaluated based on the resemblance of transformed words to original inputs. This may seem to overlook semantic preservation. For example, flipping a word such as “music” to “lights” may preserve the overall meaning of a command (e.g., “turn on lights” vs. “play music”), which could be seen as undermining perceived privacy protection. However, the transformation of commands between different categories (e.g., “music” to “lights”) is not a weakness but rather a deliberate feature that demonstrates privacy protection in action. When users select higher privacy levels, their commands should intentionally be transformed into different categories to provide stronger privacy guarantees. Our interface makes these transformations explicit and transparent, helping users understand how their privacy choices affect their data. The visible changes in commands serve as concrete evidence of privacy protection, rather than undermining it.

Privacy Protection. Applying LDP at the word level may not fully protect privacy, as the sequence of commands could still leak sensitive patterns. However, our implementation applies LDP to command categories and actions as complete units and not to individual words. For example, we transform “activate music” as a complete command category, not by separately transforming “activate” and “music”. This approach maintains the semantic integrity of commands while providing privacy protection at the appropriate granularity for smart home interactions.
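To make the idea of perturbing a command as one unit concrete, the following Python sketch uses k-ary (generalized) randomized response over a small command vocabulary. This is an illustrative technique chosen for the sketch and is not claimed to be the mechanism used in our implementation; the vocabulary and function names are hypothetical.

```python
import math
import random

# Hypothetical command vocabulary; each entry is treated as one indivisible unit.
COMMANDS = ["activate music", "turn on lights", "increase heat", "lock door"]


def keep_probability(epsilon: float, k: int) -> float:
    """Probability of reporting the true command under k-ary randomized response."""
    return math.exp(epsilon) / (math.exp(epsilon) + k - 1)


def randomize_command(command: str, epsilon: float, rng: random.Random) -> str:
    """Report the true command with probability p, otherwise a different command chosen uniformly."""
    if command not in COMMANDS:
        raise ValueError(f"unknown command: {command!r}")
    p = keep_probability(epsilon, len(COMMANDS))
    if rng.random() < p:
        return command
    # The whole command is swapped; "activate" and "music" are never perturbed separately.
    others = [c for c in COMMANDS if c != command]
    return rng.choice(others)


if __name__ == "__main__":
    rng = random.Random(42)
    for eps in (0.1, 1.0, 5.0):
        reports = [randomize_command("activate music", eps, rng) for _ in range(5)]
        print(eps, reports)
```

A smaller epsilon lowers the keep probability, so the reported command differs from the spoken one more often, which mirrors the behaviour users see at higher privacy levels.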

Other Security Applications. Our visual control model can be extended beyond privacy. The intuitive interface we developed could be generalized to configure security settings in smart home environments. Users could adjust firewall sensitivity, intrusion detection levels, or device authorization policies using similar visual metaphors (sliders, before-and-after examples). For instance, a “Security Level” setting might visually demonstrate how more restrictive settings would block unknown devices or limit third-party integrations. This approach aligns with Human–Computer Interaction (HCI) principles by making complex security decisions comprehensible and actionable, especially for non-technical users.

Limitations. Our current approach has the following limitations that require further study. (a) Experimental Validation: Our experiments rely on results from a single voice command dataset. While we acknowledge the limitation of using one dataset, we believe it represents typical smart home voice commands and reflects the complexity of smart home environments. We leave the addition of more real-world datasets and an empirical study at each privacy level to future work. (b) Privacy Budget Allocation: Our current implementation does not fully address the challenges of privacy budget allocation and management across multiple queries over time [4]. In practical IoT applications, where data is collected continuously, this issue may compromise long-term privacy guarantees. While this is an important consideration for production deployment, our current work prioritizes user education and understanding of basic privacy concepts.
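The budget concern in (b) can be sketched with a simple accountant based on basic sequential composition, under which the epsilon values of successive releases add up. The class below is a hypothetical illustration and is not part of our prototype; the total budget and per-release costs are arbitrary example values.

```python
class PrivacyBudget:
    """Tracks cumulative epsilon under basic sequential composition (epsilons add up)."""

    def __init__(self, total_epsilon: float):
        if total_epsilon <= 0:
            raise ValueError("total_epsilon must be positive")
        self.total = total_epsilon
        self.spent = 0.0

    @property
    def remaining(self) -> float:
        """Budget still available for future releases."""
        return max(self.total - self.spent, 0.0)

    def try_spend(self, epsilon: float) -> bool:
        """Reserve epsilon for one release; refuse if the total would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if self.spent + epsilon > self.total + 1e-12:
            return False
        self.spent += epsilon
        return True


if __name__ == "__main__":
    budget = PrivacyBudget(total_epsilon=1.0)  # illustrative daily budget
    for i in range(4):
        allowed = budget.try_spend(0.4)  # each privatized command costs 0.4 in this example
        print(f"release {i}: allowed={allowed}, remaining={budget.remaining:.2f}")
```

With continuous collection, a device following this sketch would stop releasing data (or would need to fall back to a stricter setting) once the budget is exhausted, which is the long-term guarantee the current implementation does not yet manage.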

8. Conclusions and Future Direction

We present a user-centric approach to implementing Local Differential Privacy in IoT environments, focusing on smart home IoT privacy, specifically voice data. The prototype implementation demonstrates that complex privacy concepts can be made accessible to users while maintaining strong mathematical guarantees. Our prototype interface translates complex mathematical privacy parameters into intuitive privacy levels using visual controls and example-based feedback. We conducted a structured user study to evaluate this interface’s real-world effectiveness. The results demonstrated strong usability for basic interactions (e.g., adjusting privacy levels), along with clear preferences for the visual layout and centralized design. However, the study also revealed consistent difficulties in interpreting the transformation table and understanding explanatory text, highlighting the need for more contextualized, real-world examples and improved educational scaffolding.

Future work can focus on (1) developing more sophisticated visualization techniques to handle complex data types and their interactions [8], (2) investigating the integration of Local Differential Privacy with federated learning approaches to enhance privacy while maintaining data utility [9], and (3) exploring effective methods for educating users about data privacy prioritization, particularly given the gap between user perceptions and actual privacy risks [25].

Based on the findings from our user study, as future work, we would like to (a) redesign explanation zones using progressive disclosure, real-world analogies, and tooltips to improve comprehension; (b) reassess the necessity and format of transformation tables, potentially replacing them with scenario-based previews or simplified animated transitions; (c) introduce multi-scenario and multi-user privacy controls as participants expressed interest in switching settings based on context (e.g., home vs. work) or user roles (e.g., adult vs. child); and (d) expand user studies with more participants across diverse demographics and device experiences to validate generalizability and inform broader deployment.

Additionally, two critical challenges remain: addressing privacy concerns in multi-user IoT environments where different users may have varying privacy preferences and developing standardized privacy interfaces across different IoT platforms to provide consistent user experiences.

Author Contributions

Conceptualization, X.L., S.D. and A.M.F.; methodology, X.L., S.D. and A.M.F.; validation, X.L., S.D. and A.M.F.; formal analysis, X.L., S.D. and A.M.F.; investigation, X.L., S.D. and A.M.F.; writing—original draft preparation, X.L., S.D. and A.M.F.; writing—review and editing, X.L., S.D. and A.M.F.; visualization, X.L. and S.D.; supervision, A.M.F.; project administration, A.M.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- [1] Rivadeneira, J.E.; Silva, J.S.; Colomo-Palacios, R.; Rodrigues, A.; Boavida, F. User-centric privacy preserving models for a new era of the Internet of Things. J. Netw. Comput. Appl. 2023, 217, 103695.

- [2] Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating noise to sensitivity in private data analysis. J. Priv. Confidentiality 2016, 7, 17–51.

- [3] Grashöfer, J.; Degitz, A.; Raabe, O. User-Centric Secure Data Sharing. 2017. Available online: https://dl.gi.de/items/a99ee2b3-101f-41f6-8a44-cfbc00335e6f (accessed on 21 February 2025).

- [4] Wang, T.; Blocki, J.; Li, N.; Jha, S. Locally differentially private protocols for frequency estimation. In Proceedings of the 26th USENIX Security Symposium (USENIX Security 17), Vancouver, BC, Canada, 16–18 August 2017; pp. 729–745.

- [5] Cummings, R.; Kaptchuk, G.; Redmiles, E.M. “I need a better description”: An Investigation Into User Expectations For Differential Privacy. In Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, Virtual, 15–19 November 2021; pp. 3037–3052.

- [6] Li, X.; Dong, S.; Milani Fard, A. Github—Enhancing User Experience with Visual Controls for Local Differential Privacy. 2025. Available online: https://github.com/nyit-vancouver/visual-controls-for-local-differential-privacy (accessed on 21 February 2025).

- [7] Kounoudes, A.D.; Kapitsaki, G.M. A mapping of IoT user-centric privacy preserving approaches to the GDPR. Internet Things 2020, 11, 100179.

- [8] Chhetri, C.; Genaro Motti, V. User-centric privacy controls for smart homes. Proc. ACM Hum. Comput. Interact. 2022, 6, 1–36.

- [9] Osia, S.A.; Shamsabadi, A.S.; Sajadmanesh, S.; Taheri, A.; Katevas, K.; Rabiee, H.R.; Lane, N.D.; Haddadi, H. A hybrid deep learning architecture for privacy-preserving mobile analytics. IEEE Internet Things J. 2020, 7, 4505–4518.

- [10] Yang, W.; Al-Masri, E. ULDP: A User-Centric Local Differential Privacy Optimization Method. In Proceedings of the 2024 IEEE World AI IoT Congress (AIIoT), Seattle, WA, USA, 29–31 May 2024; pp. 316–322.

- [11] Dwork, C. Differential privacy: A survey of results. In Proceedings of the International Conference on Theory and Applications of Models of Computation, Xi’an, China, 25–29 April 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 1–19.

- [12] Buttaci, E. Voice Command Audios for Virtual Assistant—Kaggle.com. 2023. Available online: https://www.kaggle.com/datasets/emanuelbuttaci/audios/data (accessed on 21 February 2025).

- [13] Sweeney, L. k-anonymity: A model for protecting privacy. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 2002, 10, 557–570.

- [14] Erlingsson, Ú.; Pihur, V.; Korolova, A. Rappor: Randomized aggregatable privacy-preserving ordinal response. In Proceedings of the 2014 ACM SIGSAC conference on Computer and Communications Security, Scottsdale, AZ, USA, 3–7 November 2014; pp. 1054–1067.

- [15] Bugeja, J.; Jacobsson, A.; Davidsson, P. An empirical analysis of smart connected home data. In Proceedings of the Internet of Things—ICIOT 2018: Third International Conference, Held as Part of the Services Conference Federation, SCF 2018, Seattle, WA, USA, 25–30 June 2018; Proceedings 3. Springer: Berlin/Heidelberg, Germany, 2018; pp. 134–149.

- [16] Google Nest Help. Privacy and Security for Google Nest Devices. 2024. Available online: https://support.google.com/googlenest/answer/7072285?hl=en (accessed on 16 November 2024).

- [17] Apple Privacy. Ask Siri, Dictation & Privacy. 2024. Available online: https://support.apple.com/en-us/HT210657 (accessed on 16 November 2024).

- [18] PCMag. Amazon Alexa App: Settings to Change Immediately. 2024. Available online: https://www.pcmag.com/how-to/amazon-alexa-app-settings-to-change-immediately (accessed on 16 November 2024).

- [19] HelloTech. Google Home App Update. 2024. Available online: https://www.hellotech.com/blog/google-home-app-update (accessed on 16 November 2024).

- [20] Amazon Privacy Setting. Personalize Your Alexa Privacy Settings. 2024. Available online: https://www.amazon.com/b/?node=23608614011 (accessed on 16 November 2024).

- [21] Apple Newsroom. Apple Advances Its Privacy Leadership with iOS 15, iPadOS 15, macOS Monterey, and watchOS 8. 2021. Available online: https://www.apple.com/ca/newsroom/2021/06/apple-advances-its-privacy-leadership-with-ios-15-ipados-15-macos-monterey-and-watchos-8/ (accessed on 16 November 2024).

- [22] Li, X.; Dong, S.; Milani Fard, A. Figma—Smart Home Privacy Widget Prototype. 2025. Available online: https://www.figma.com/proto/NbBGjJAZFVnNLcnAnNpP4Q/Smart-Home-Privacy-Widget---Prototype (accessed on 21 February 2025).

- [23] Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Found. Trends Theor. Comput. Sci. 2014, 9, 211–407.

- [24] Xu, C.; Ren, J.; Zhang, D.; Zhang, Y. Distilling at the edge: A local differential privacy obfuscation framework for IoT data analytics. IEEE Commun. Mag. 2018, 56, 20–25.

- [25] Zheng, S.; Apthorpe, N.; Chetty, M.; Feamster, N. User perceptions of smart home IoT privacy. Proc. ACM Hum. Comput. Interact. 2018, 2, 1–20.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).