Cross-Scene Sign Language Gesture Recognition Based on Frequency-Modulated Continuous Wave Radar

Abstract

1. Introduction

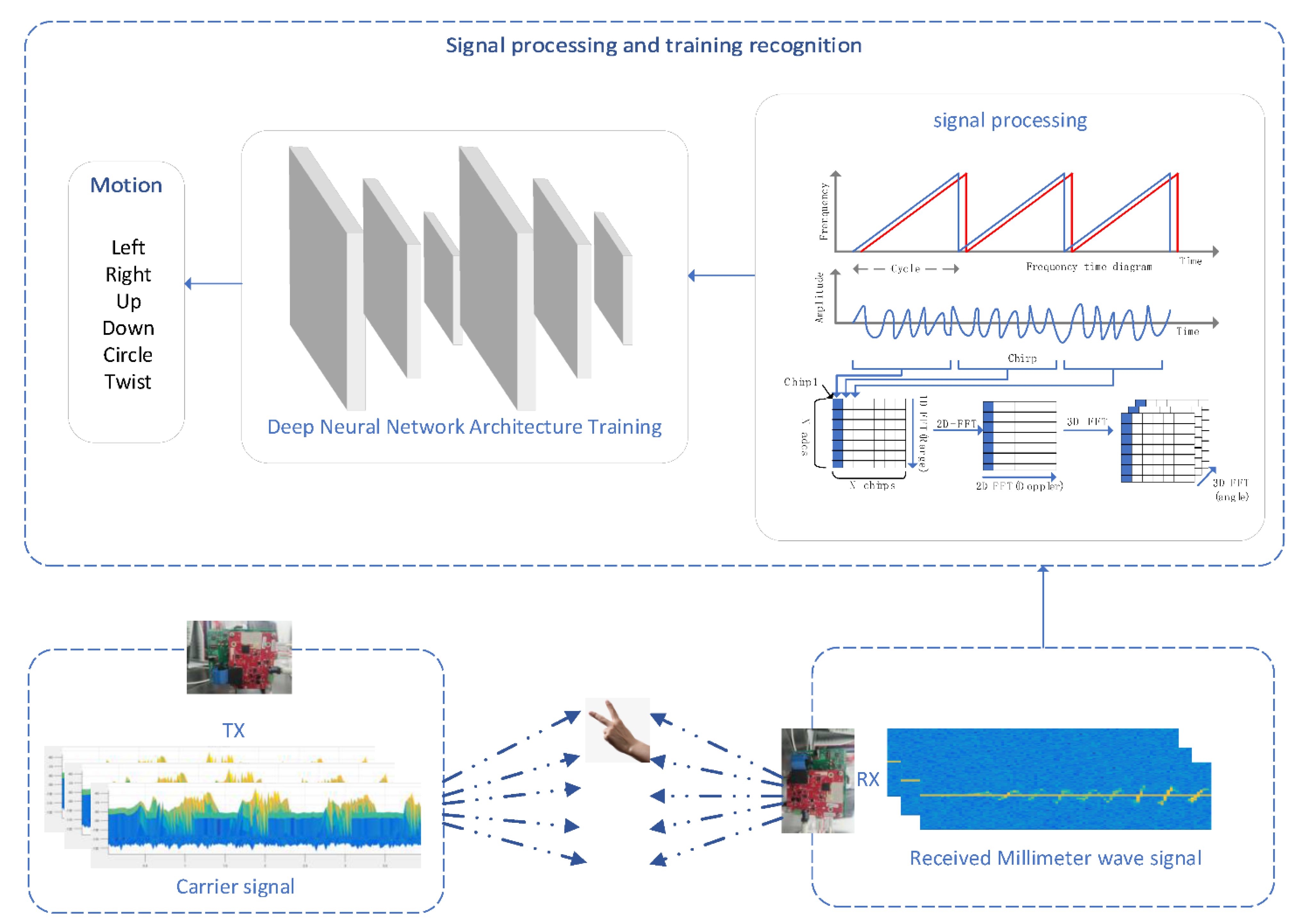

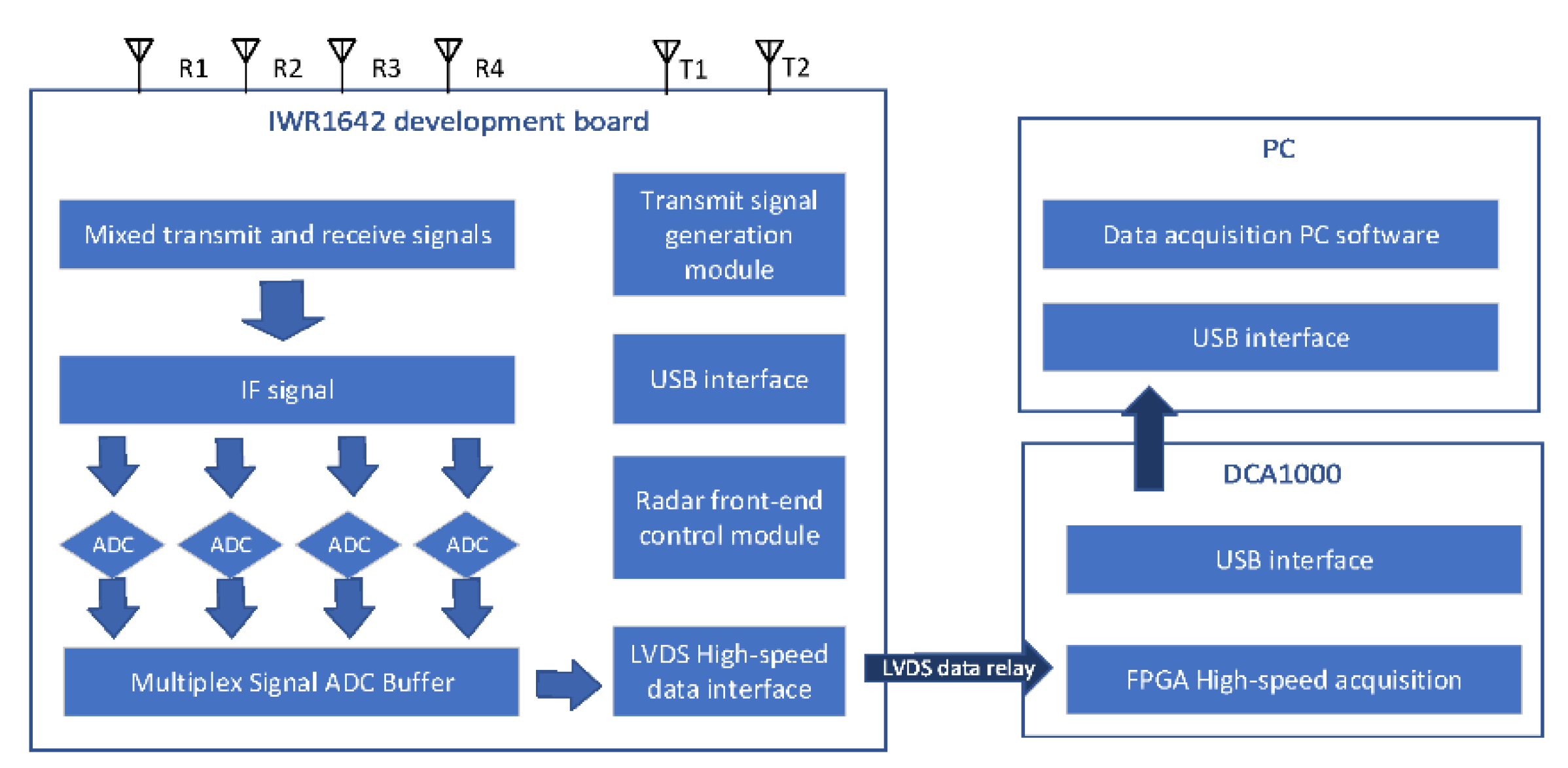

2. FMCW Radar System and Theory
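The radar parameter table lists 128 chirps per frame and 64 sampling points per chirp, so each frame from one receive channel forms a 128 × 64 matrix of IF samples. A common way to turn such a matrix into a range-Doppler map is a 2-D FFT: one FFT over the samples inside each chirp (fast time, giving range) and one over the chirps (slow time, giving Doppler). The sketch below is a minimal illustration under stated assumptions: complex baseband samples from a single virtual channel, one chirp per row, and Hann windows on both axes. The function name `range_doppler_map`, the data layout and the windowing choice are hypothetical and are not the processing chain reported by the authors.

```python
import numpy as np

def range_doppler_map(frame):
    """Magnitude range-Doppler map of one frame (hypothetical helper).

    frame: complex IF samples shaped (num_chirps, num_samples), one chirp per row.
    Returns an array of the same shape: axis 0 is Doppler (zero velocity
    shifted to the centre), axis 1 is range.
    """
    frame = np.asarray(frame)
    num_chirps, num_samples = frame.shape
    # Range FFT along fast time (samples within each chirp), Hann-windowed
    range_win = np.hanning(num_samples)
    range_fft = np.fft.fft(frame * range_win[np.newaxis, :], axis=1)
    # Doppler FFT along slow time (across chirps), Hann-windowed
    doppler_win = np.hanning(num_chirps)
    doppler_fft = np.fft.fft(range_fft * doppler_win[:, np.newaxis], axis=0)
    # Move the zero-Doppler bin to the middle of axis 0
    return np.abs(np.fft.fftshift(doppler_fft, axes=0))

# Synthetic point target on range bin 10 and Doppler bin 5 (illustrative values)
m = np.arange(128)[:, np.newaxis]   # chirp index (slow time)
n = np.arange(64)[np.newaxis, :]    # sample index (fast time)
frame = np.exp(2j * np.pi * (10 * n / 64 + 5 * m / 128))
rd = range_doppler_map(frame)
peak = np.unravel_index(np.argmax(rd), rd.shape)
print(peak)
```

Because the synthetic tone sits exactly on range bin 10 and Doppler bin 5, the peak appears at range index 10 and, after `fftshift` moves zero Doppler to index 64, at Doppler index 69.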

| Algorithm 1 Static filtering algorithm |
|---|
| Input: CFAR (constant false-alarm rate) processing result matrix; range-bin (distance unit) vector in which the target was located in the previous frame; range-bin vector in which the target is located in the current frame. |
| Calculation process |

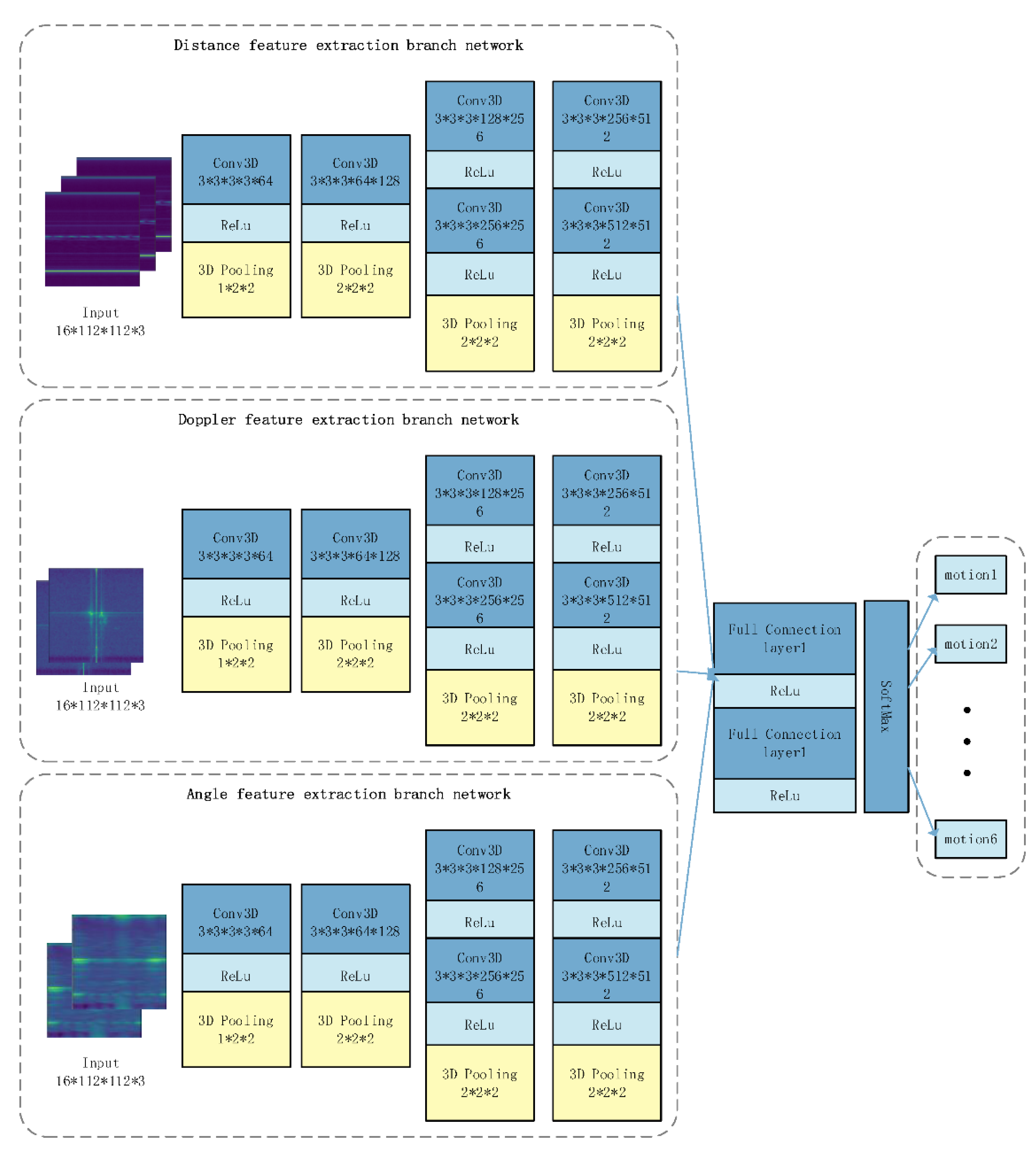
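Algorithm 1 takes a CFAR processing result matrix as its input. The CFAR variant used by the authors is not stated here, so the sketch below shows the textbook cell-averaging CFAR (CA-CFAR) as an assumed example: for each cell under test, the noise level is estimated from training cells on both sides (skipping guard cells next to the cell), and the cell is declared a detection if it exceeds that estimate times a scaling factor chosen for a target false-alarm probability. For brevity it runs along one axis of a power profile; a 2-D version slides the same window over the range-Doppler map. The function name `ca_cfar_1d` and the default window sizes and false-alarm rate are hypothetical.

```python
import numpy as np

def ca_cfar_1d(power, num_train=8, num_guard=2, pfa=1e-3):
    """Cell-averaging CFAR over a 1-D power profile (hypothetical helper).

    Returns a boolean mask that is True where the cell under test exceeds
    the adaptive threshold. Edge cells without a full window are not tested.
    """
    power = np.asarray(power, dtype=float)
    n = power.size
    n_train_total = 2 * num_train
    # Threshold factor for square-law detected noise (exponentially distributed)
    alpha = n_train_total * (pfa ** (-1.0 / n_train_total) - 1.0)
    detections = np.zeros(n, dtype=bool)
    half = num_train + num_guard
    for i in range(half, n - half):
        lead = power[i - half : i - num_guard]          # num_train cells before the guards
        lag = power[i + num_guard + 1 : i + half + 1]   # num_train cells after the guards
        noise = (lead.sum() + lag.sum()) / n_train_total
        detections[i] = power[i] > alpha * noise
    return detections

# Flat noise floor with one strong reflector at index 30 (illustrative data)
profile = np.ones(64)
profile[30] = 100.0
mask = ca_cfar_1d(profile)
print(np.flatnonzero(mask))
```

Averaging the training cells makes the threshold track the local noise floor, which is what keeps the false-alarm rate roughly constant as clutter levels change between scenes.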

3. Design of the Convolutional Neural Network

3.1. Learning Model

3.2. The Three-Dimensional CNN Architecture Analysis
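What separates a three-dimensional CNN from a 2-D one is that each kernel slides along a temporal axis as well as two spatial axes, so a single filter responds to how a pattern changes across consecutive frames. The NumPy sketch below computes one single-channel 3-D convolution layer (cross-correlation, stride 1, no padding) followed by ReLU. It assumes, for illustration only, an input volume shaped (frames, range, Doppler), such as a stack of range-Doppler maps; the input layout, kernel size and the function name `conv3d_relu_valid` are hypothetical and do not describe the authors' architecture.

```python
import numpy as np

def conv3d_relu_valid(volume, kernel, bias=0.0):
    """Single-channel 3-D cross-correlation + ReLU, stride 1, no padding.

    volume: array shaped (frames, range, doppler)
    kernel: array shaped (kt, kr, kd)
    Each output axis has length input_length - kernel_length + 1.
    """
    volume = np.asarray(volume, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    kt, kr, kd = kernel.shape
    t_in, r_in, d_in = volume.shape
    out = np.empty((t_in - kt + 1, r_in - kr + 1, d_in - kd + 1))
    for t in range(out.shape[0]):
        for r in range(out.shape[1]):
            for d in range(out.shape[2]):
                # The patch spans kt consecutive frames, so the filter sees motion
                patch = volume[t:t + kt, r:r + kr, d:d + kd]
                out[t, r, d] = np.sum(patch * kernel) + bias
    return np.maximum(out, 0.0)  # ReLU activation

# 8 frames of 16 x 16 maps, 3 x 3 x 3 averaging kernel (illustrative sizes)
volume = np.ones((8, 16, 16))
kernel = np.full((3, 3, 3), 1.0 / 27.0)
out = conv3d_relu_valid(volume, kernel)
print(out.shape)
```

Deep-learning frameworks implement the same operation with many input and output channels and vectorized kernels; the explicit loops here only make the sliding window visible.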

4. Experimental Setup and Result Analysis

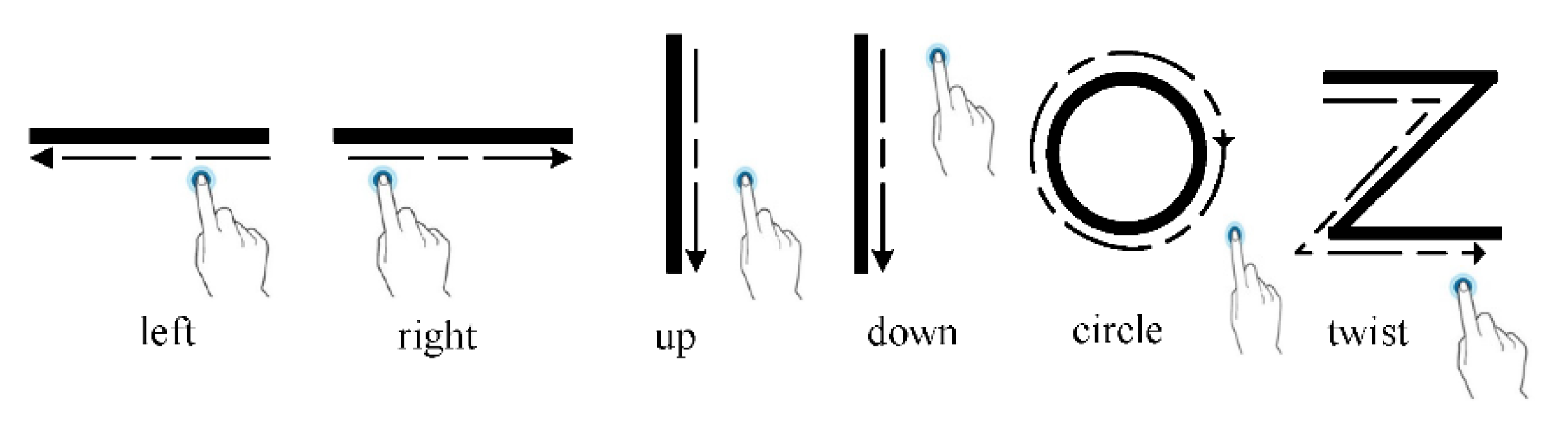

4.1. The Experimental Setup

4.2. The Experimental Analysis

4.2.1. Training Model Parameter Setting

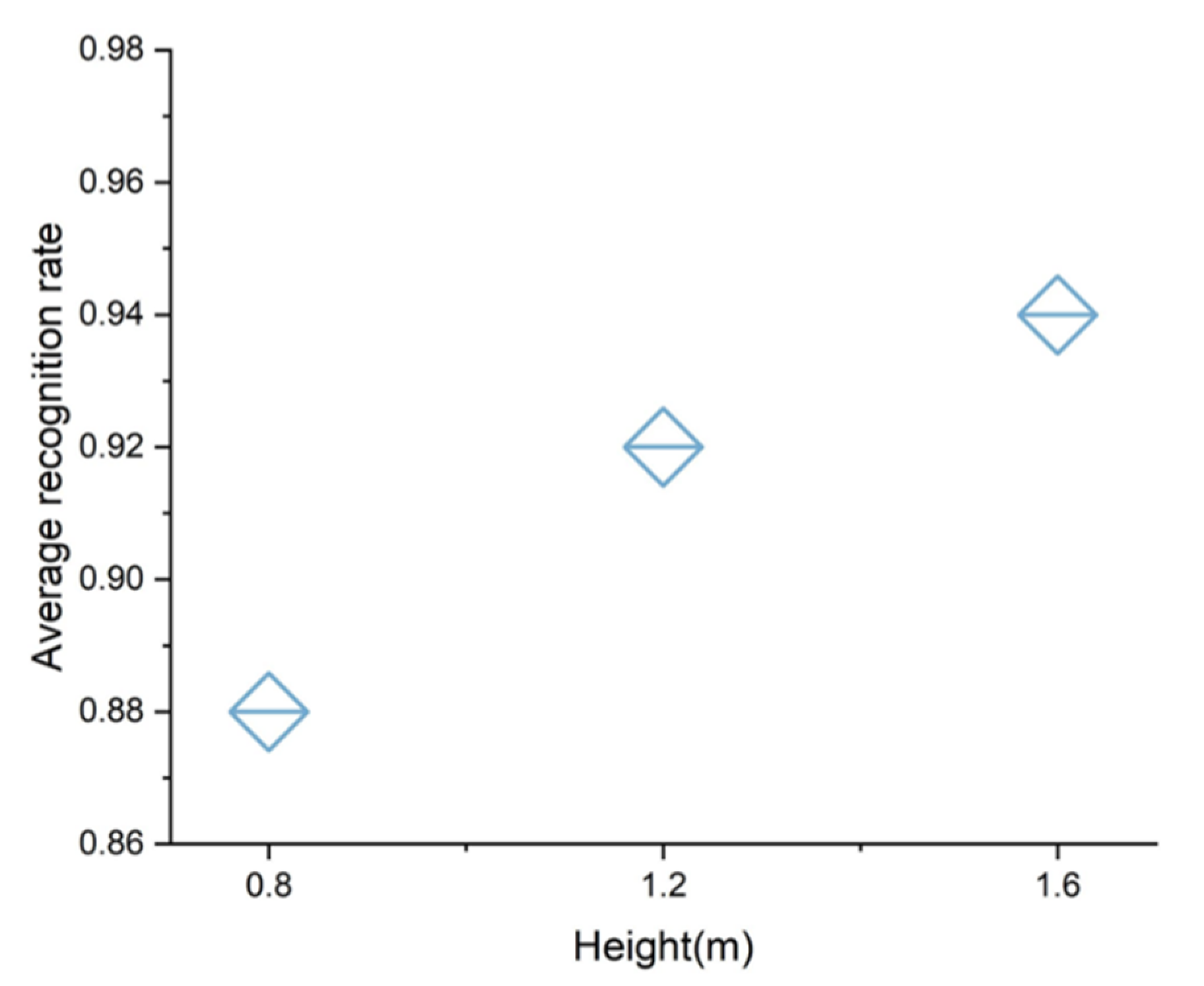

4.2.2. Optimization Analysis of Hardware Parameters

4.2.3. Recognition Accuracy of Different Frequency Bands

4.2.4. Influence of Different Gesture Directions on Recognition Effect

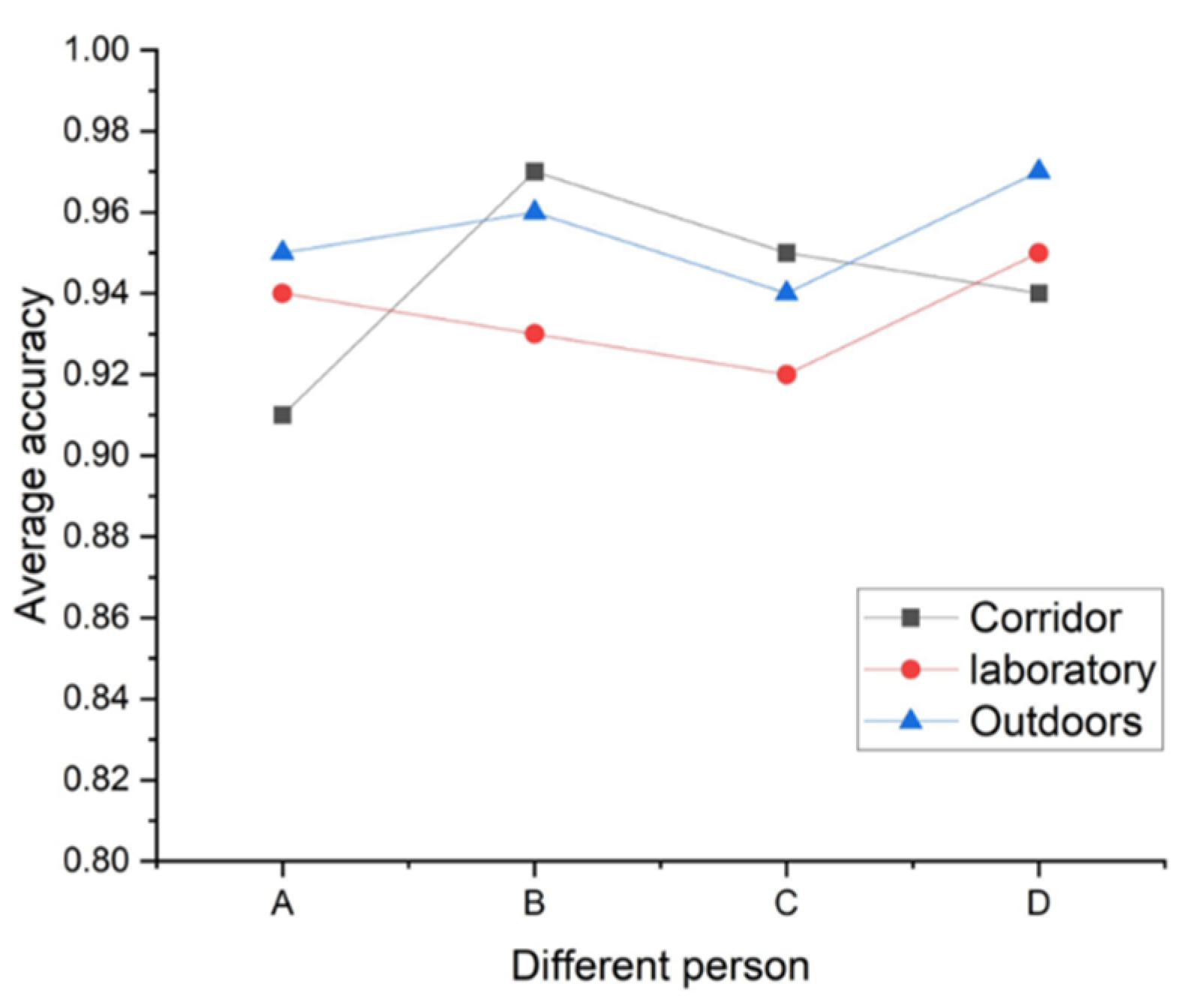

4.2.5. Influence of Different Experimental Locations on Recognition Effect

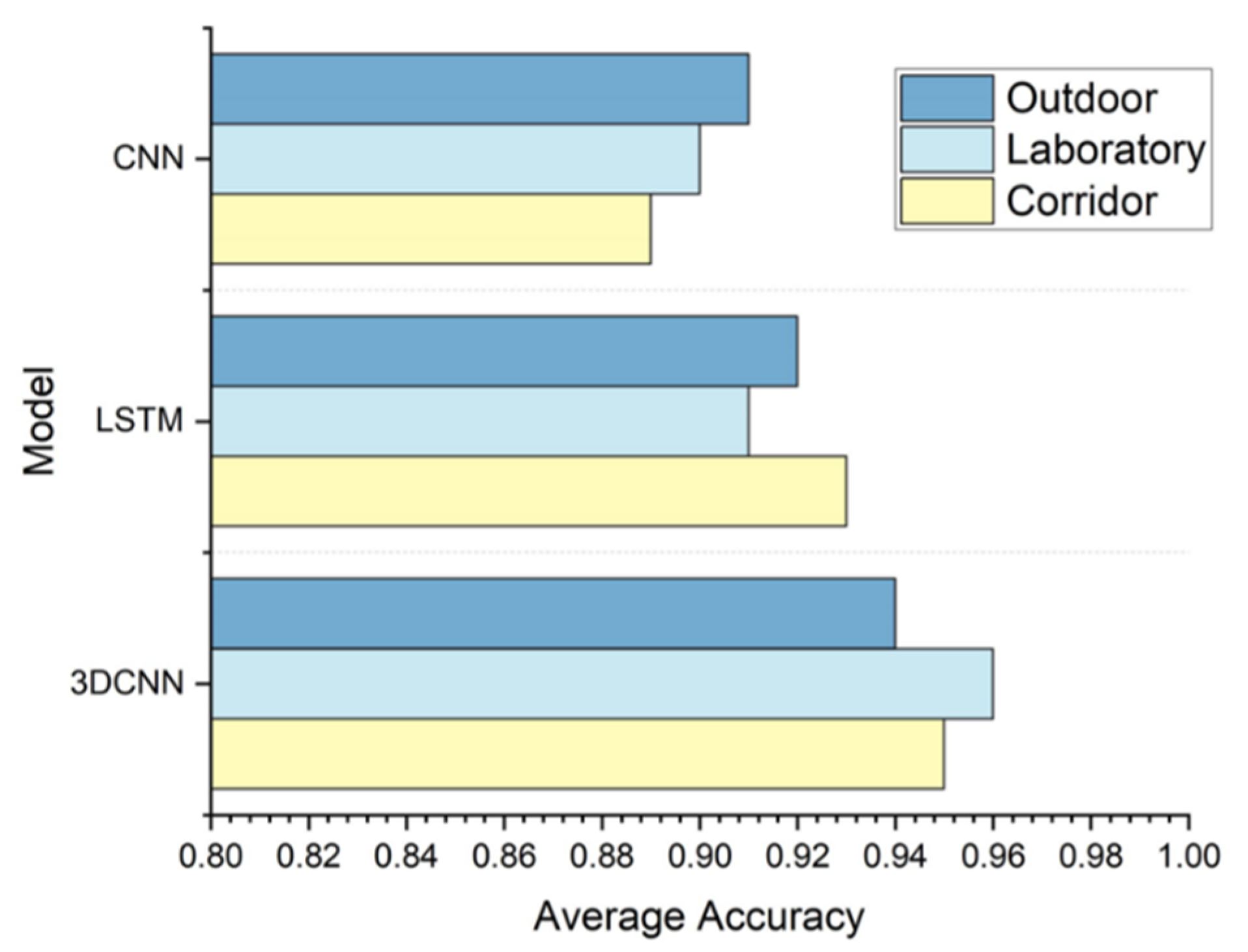

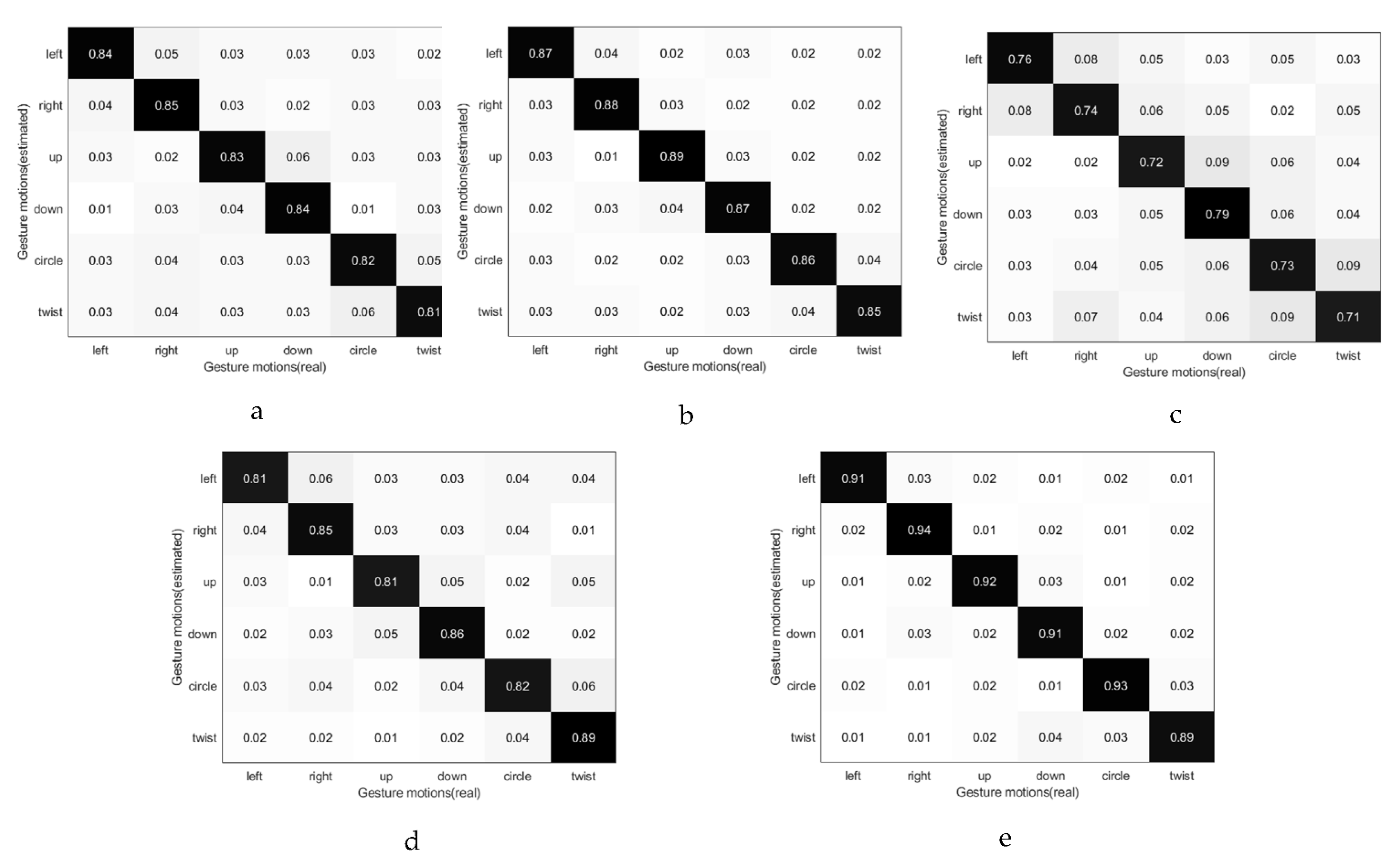

4.2.6. User Diversity and Comparison of Different Models

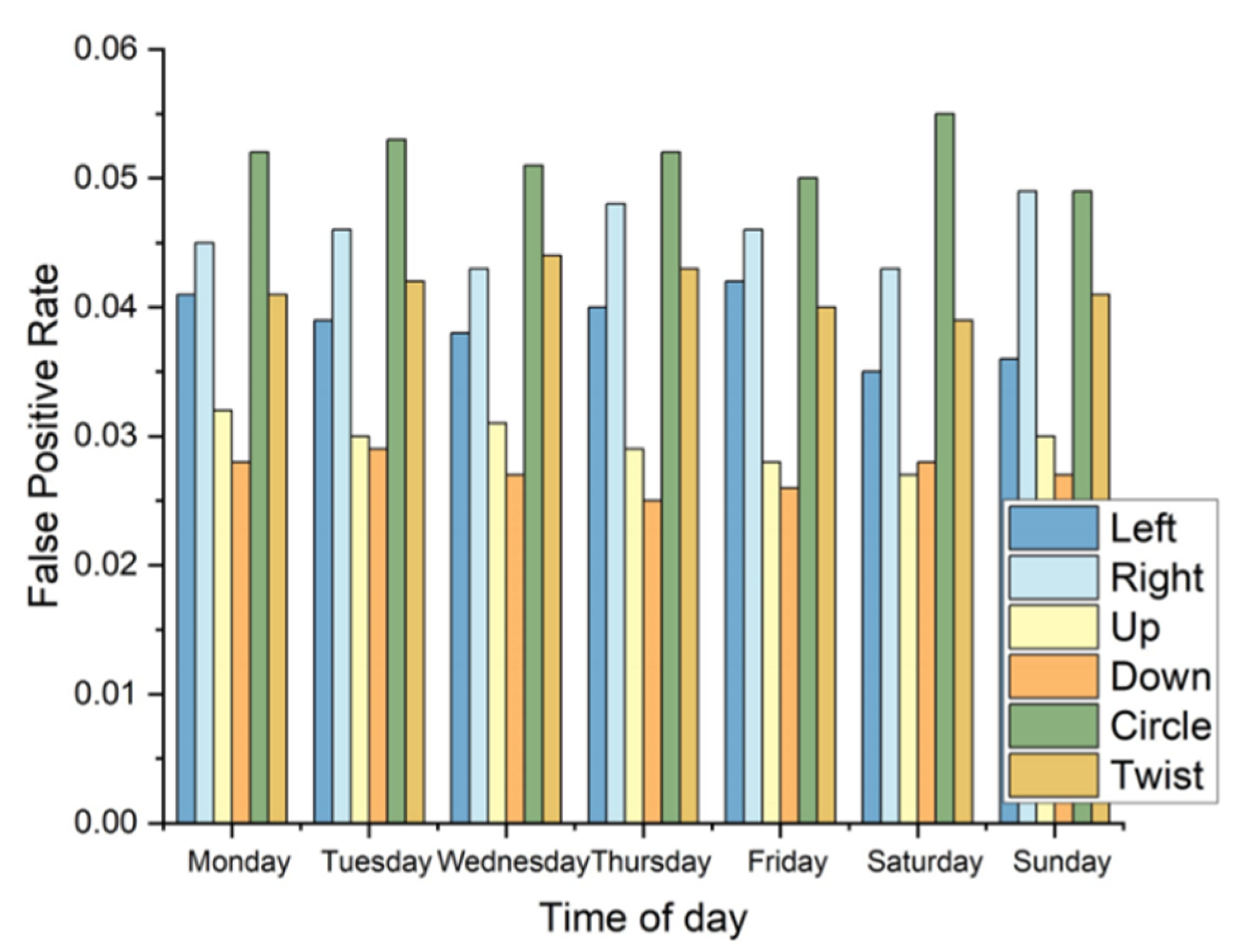

4.2.7. Robustness Validation

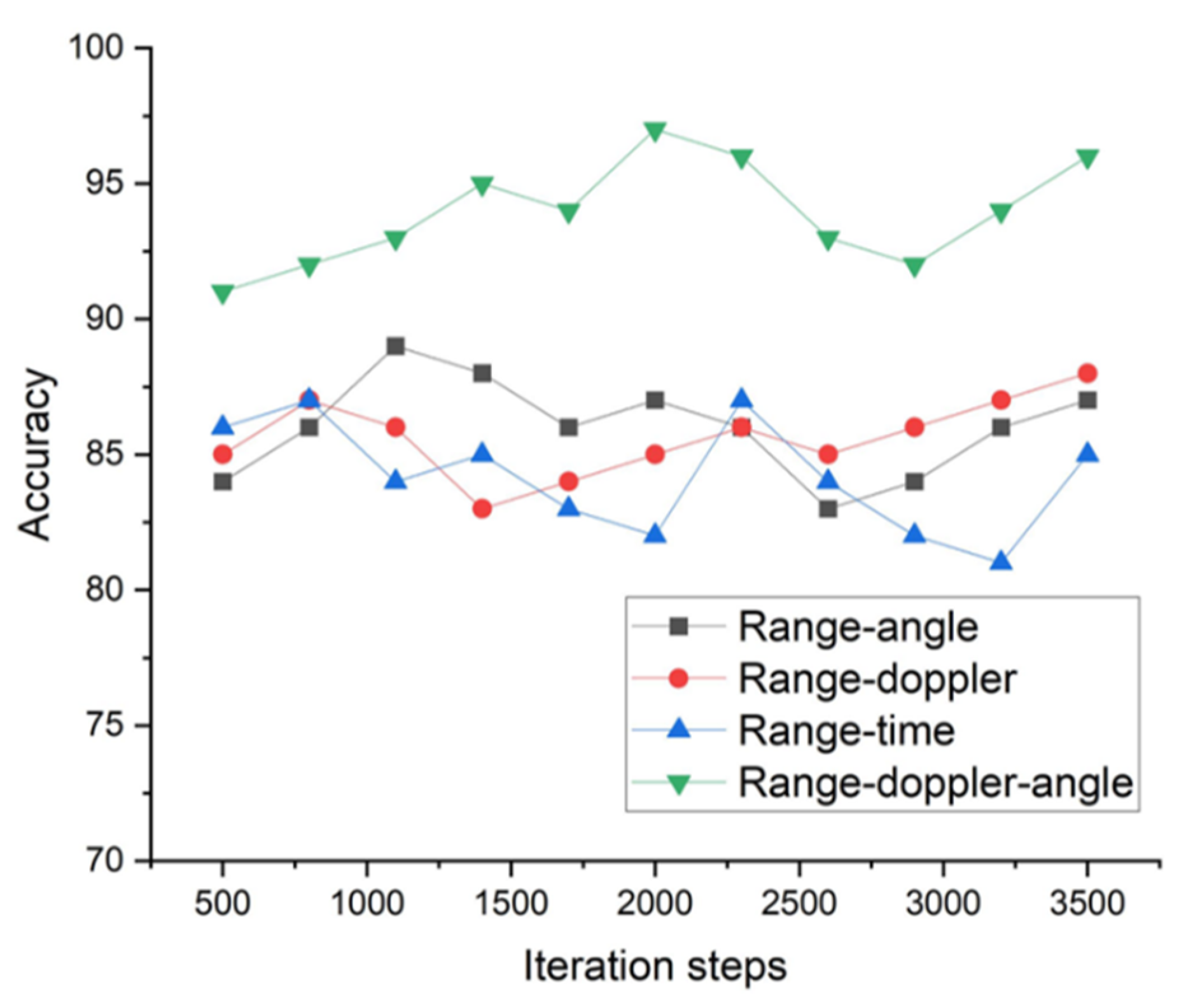

4.2.8. Impact of Different Features

4.2.9. Comparison with Previous Studies

4.2.10. Overall System Performance Analysis

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Radar parameter | Value |
|---|---|
| FM bandwidth | 4 GHz |
| Antenna configuration | 2 Tx, 4 Rx |
| Antenna spacing | λ/2 (half wavelength) |
| FM (chirp) period | 40 μs |
| Data frame period | 40 ms |
| Chirps per frame | 128 |
| Sampling rate | 2 MHz |
| Sampling points per FM period | 64 |
| Distance resolution | 3.75 cm |
| Speed resolution | 4 cm/s |
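The range-related entries in the table follow from standard FMCW relations: the range resolution is ΔR = c/(2B) and the chirp slope is S = B/T_c. The sketch below reproduces the 3.75 cm figure from the 4 GHz bandwidth and also derives the slope, the ADC sampling window per chirp (64 samples at 2 MHz, which fits inside the 40 μs FM period), and a maximum unambiguous range. The maximum-range line assumes complex (I/Q) IF sampling, where the highest usable beat frequency equals the sampling rate; with real-valued sampling it would be halved. Speed resolution is not recomputed because it depends on the carrier wavelength, which the table does not list. The function name `fmcw_range_params` is hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s

def fmcw_range_params(bandwidth_hz, chirp_s, fs_hz, num_samples):
    """Derive range-related FMCW quantities (hypothetical helper)."""
    slope = bandwidth_hz / chirp_s          # chirp slope S in Hz/s
    range_res = C / (2 * bandwidth_hz)      # range resolution c / (2B)
    # Assumes complex (I/Q) sampling: max beat frequency equals fs
    r_max = fs_hz * C / (2 * slope)
    adc_window = num_samples / fs_hz        # sampling time per chirp in s
    return {"slope": slope, "range_res": range_res,
            "r_max": r_max, "adc_window": adc_window}

# Values taken from the radar parameter table
params = fmcw_range_params(4e9, 40e-6, 2e6, 64)
print(f"slope      = {params['slope'] / 1e12:.1f} MHz/us")
print(f"range res  = {params['range_res'] * 100:.2f} cm")
print(f"max range  = {params['r_max']:.2f} m")
print(f"ADC window = {params['adc_window'] * 1e6:.1f} us")
```

With these inputs the slope is 100 MHz/μs, the range resolution rounds to 3.75 cm, and the maximum unambiguous range is about 3 m, comfortably beyond arm's length for sign-language gestures.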

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dang, X.; Wei, K.; Hao, Z.; Ma, Z. Cross-Scene Sign Language Gesture Recognition Based on Frequency-Modulated Continuous Wave Radar. Signals 2022, 3, 875-894. https://doi.org/10.3390/signals3040052
