Use RBF as a Sampling Method in Multistart Global Optimization Method

Abstract

1. Introduction

2. Method Description

2.1. The Multistart Method

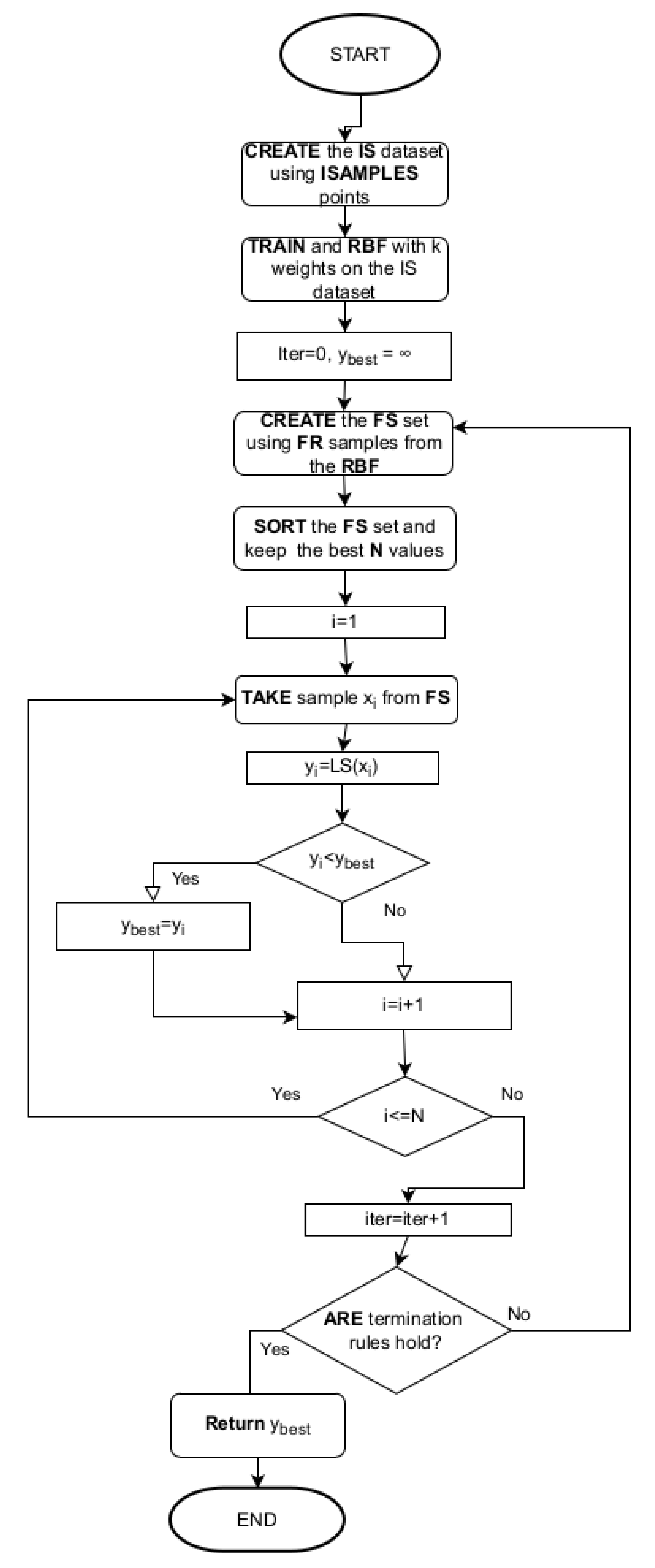

Algorithm 1. Representation of the Multistart algorithm.

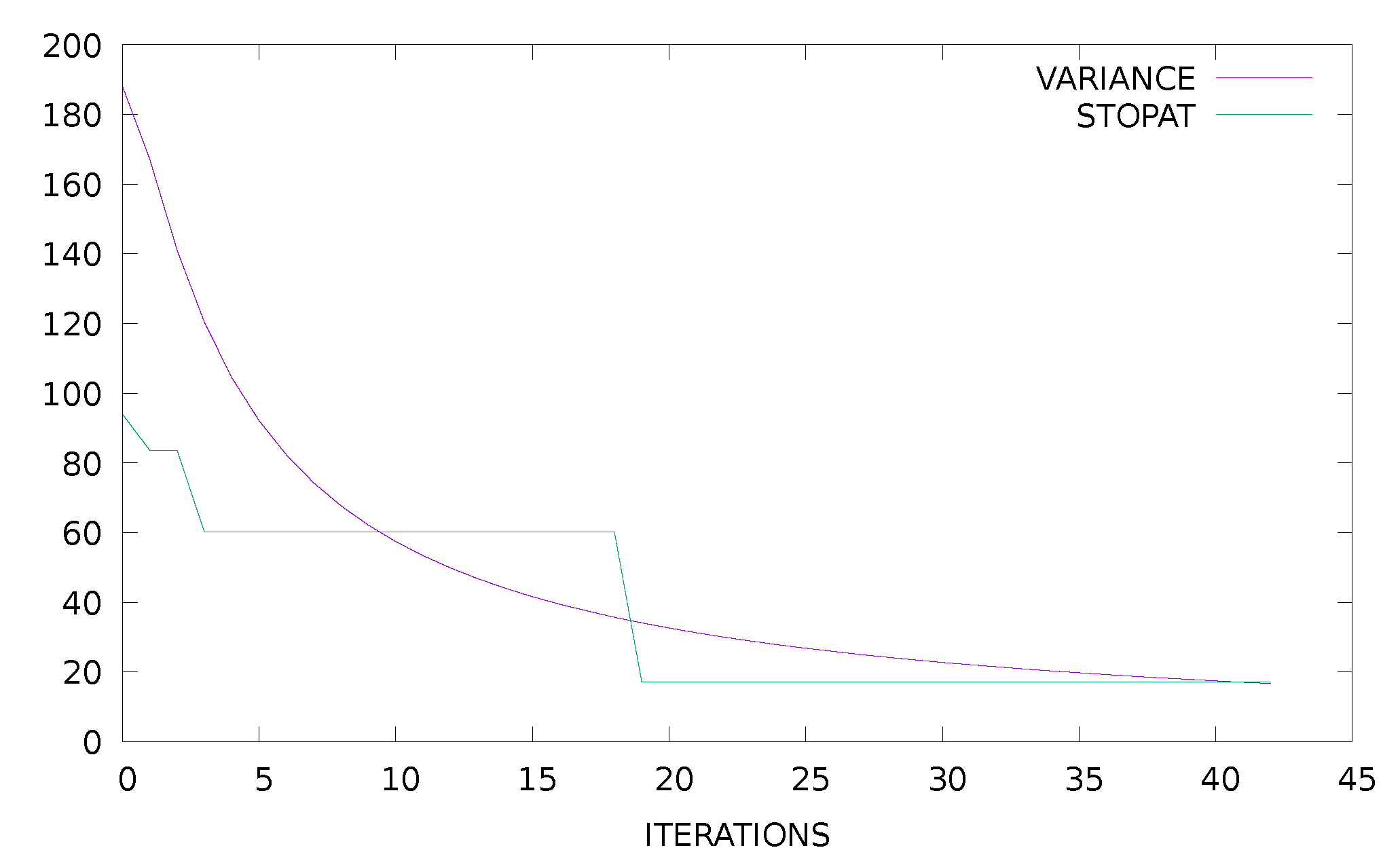
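The basic Multistart loop can be illustrated with a minimal Python sketch. It assumes uniform sampling inside the box, uses SciPy's `minimize` with L-BFGS-B as a stand-in for the local search procedure, and replaces the termination rule of Section 2.2 with a fixed number of iterations. The function name `multistart` and its parameters are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize


def multistart(f, lower, upper, n_samples=20, n_iters=10, seed=0):
    """Basic Multistart sketch: at every iteration draw n_samples uniform points
    in the box [lower, upper], start a local search from each one, and keep the
    best local minimum seen so far. A fixed iteration count replaces the
    paper's termination rule (assumption)."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    bounds = list(zip(lower, upper))
    best_x, best_f = None, np.inf
    for _ in range(n_iters):
        # Sampling step: uniform random starting points inside the box.
        starts = rng.uniform(lower, upper, size=(n_samples, lower.size))
        for x0 in starts:
            # Local search step; L-BFGS-B is only a stand-in local optimizer.
            res = minimize(f, x0, method="L-BFGS-B", bounds=bounds)
            if res.fun < best_f:
                best_x, best_f = res.x, float(res.fun)
    return best_x, best_f
```

The uniform `starts` array is the only place where the sampling strategy enters, so a different sampling method can be plugged in without touching the local search step.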

2.2. The Used Termination Rule

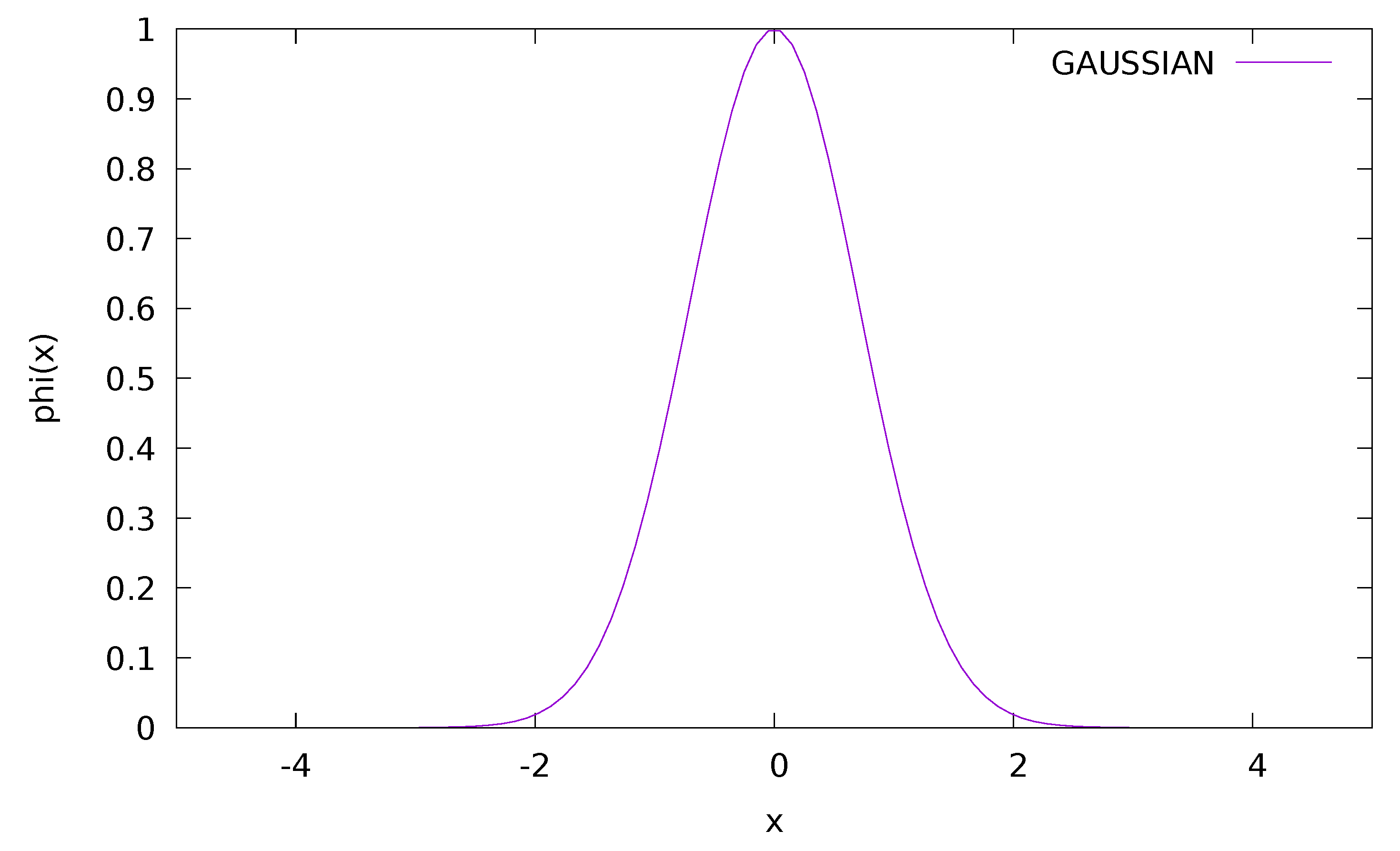

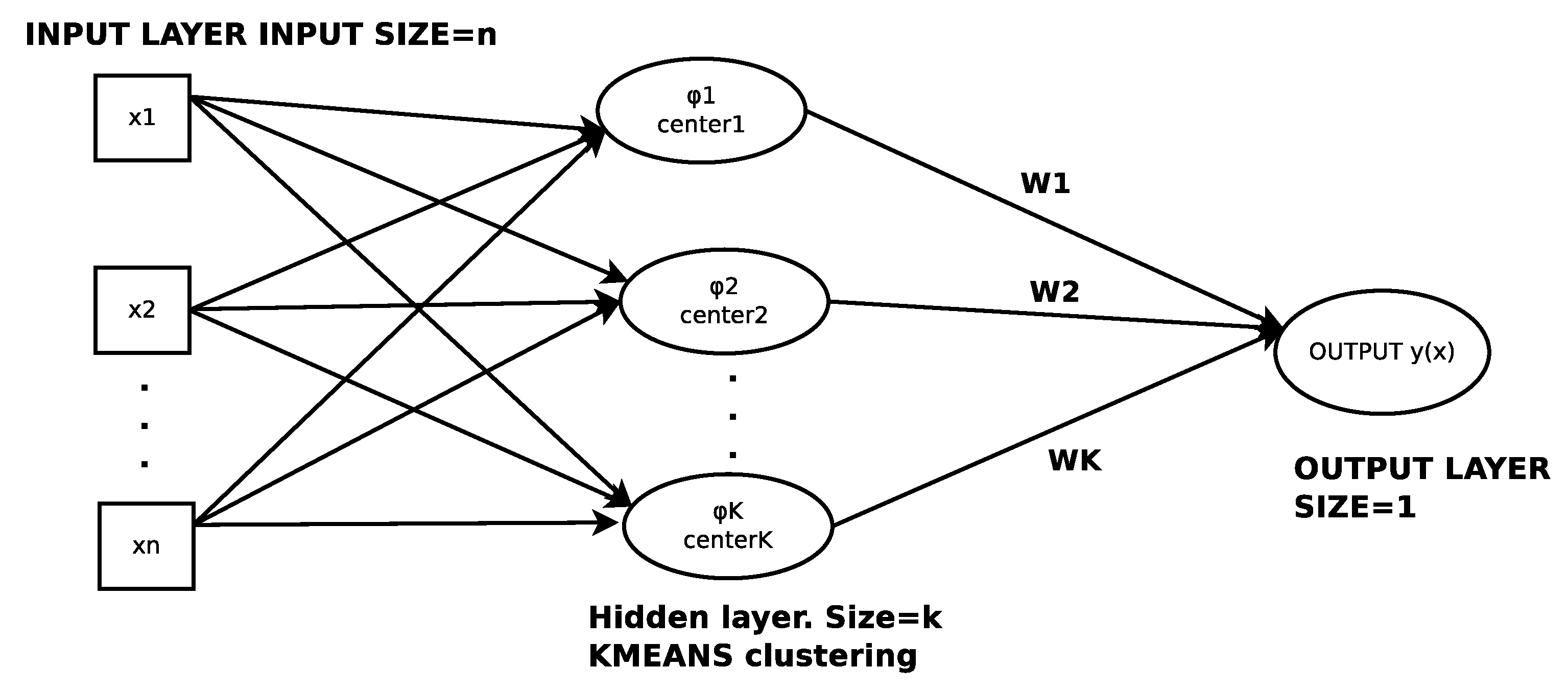
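The following sketch illustrates one variance-based stopping rule of the general kind used with Multistart methods. It is an assumption, not a transcription of the rule shown in the figures above: after every iteration the best value $f^*$ found so far is recorded, the variance of these values is computed, and the search stops once $f^*$ has not improved and the variance has dropped to half of its value at the last improvement. The class name and the `min_iters` guard are hypothetical.

```python
import statistics


class VarianceStoppingRule:
    """Hypothetical variance-based stopping rule for Multistart-type methods.

    The caller passes the best value f* after every iteration. The rule keeps
    the history of f*, computes its population variance, and stores half of
    that variance whenever f* improves. It signals termination once at least
    min_iters iterations have run, f* did not improve in the current
    iteration, and the variance is at or below the stored threshold.
    """

    def __init__(self, min_iters=5):
        self.min_iters = min_iters
        self.history = []           # best value f* after each iteration
        self.best = float("inf")    # best f* seen so far
        self.threshold = 0.0        # half the variance at the last improvement

    def update(self, best_value):
        """Record f* for one iteration; return True if the search should stop."""
        self.history.append(float(best_value))
        variance = statistics.pvariance(self.history)
        if best_value < self.best:
            # New best value: move the threshold to half the current variance.
            self.best = best_value
            self.threshold = variance / 2.0
            return False
        return len(self.history) >= self.min_iters and variance <= self.threshold
```

For example, the sequence of best values 5, 3, 1, 1, 1, ... stops at the 13th iteration, the first point at which the variance of the recorded values falls below half of its value when 1 was first reached.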

2.3. RBF Networks

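- During the first phase, the k centers $\mathbf{c}_1, \ldots, \mathbf{c}_k$ of the RBF network and the associated variances $\sigma_1, \ldots, \sigma_k$ are calculated through the K-Means algorithm [66].

- During the second phase, the weight vector $\mathbf{w} = \left(w_1, \ldots, w_k\right)$ is calculated by solving a linear system of equations with the following procedure:

  - (a) Set $W = \left(w_j\right)$, the matrix for the k weights.

  - (b) Set $\Phi = \left(\phi_j\left(\mathbf{x}_i\right)\right)$, the matrix of the values of the k radial functions at the training points $\mathbf{x}_i$.

  - (c) Set $T = \left(t_i\right)$, where $t_i = f\left(\mathbf{x}_i\right)$ are the target values.

  - (d) The system to be solved is defined as:
    $$\Phi^T\left(T - \Phi W^T\right) = 0$$
    The solution is:
    $$W^T = \left(\Phi^T \Phi\right)^{-1} \Phi^T T = \Phi^{\dagger} T$$
    The matrix $\Phi^{\dagger} = \left(\Phi^T \Phi\right)^{-1} \Phi^T$ is the so-called pseudo-inverse of $\Phi$, with the property $\Phi^{\dagger} \Phi = I$.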
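The two training phases can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: Gaussian units $\exp\left(-\|\mathbf{x}-\mathbf{c}_j\|^2 / (2\sigma_j^2)\right)$, a plain Lloyd's K-Means with randomly chosen initial centers, each $\sigma_j^2$ taken as the mean squared distance of a cluster's points to its center, and `numpy.linalg.pinv` for the pseudo-inverse. The helper names `kmeans`, `design_matrix`, `train_rbf` and `rbf_predict` are hypothetical.

```python
import numpy as np


def kmeans(X, k, n_iter=100, seed=0):
    """Phase 1: plain Lloyd's K-Means; returns k centers and each point's label."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Assign every point to its nearest center (squared Euclidean distance).
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Move each center to the mean of its cluster; empty clusters stay put.
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return centers, d2.argmin(axis=1)


def design_matrix(X, centers, variances):
    """Phi[i, j] = exp(-||x_i - c_j||^2 / (2 sigma_j^2)) (Gaussian units, assumed)."""
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / (2.0 * variances[None, :]))


def train_rbf(X, t, k, seed=0):
    """Two-phase training: K-Means for centers/variances, pseudo-inverse for weights."""
    centers, labels = kmeans(X, k, seed=seed)
    variances = np.array([
        ((X[labels == j] - centers[j]) ** 2).sum(axis=1).mean()
        if np.any(labels == j) else 1.0
        for j in range(k)
    ])
    variances = np.maximum(variances, 1e-8)  # guard against single-point clusters
    Phi = design_matrix(X, centers, variances)
    # Phase 2: w = pinv(Phi) @ t, i.e. (Phi^T Phi)^{-1} Phi^T t for full column rank.
    w = np.linalg.pinv(Phi) @ t
    return centers, variances, w


def rbf_predict(X, centers, variances, w):
    """Network output for each row of X."""
    return design_matrix(X, centers, variances) @ w
```

Because the weights are the least-squares solution, the residual $T - \Phi w$ is orthogonal to every column of $\Phi$, so $\Phi^T(T - \Phi w) = 0$ holds up to rounding; this is a quick sanity check for any implementation.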

Algorithm 2. The proposed sampling procedure.
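A hypothetical sketch of an RBF-guided sampling step is given below. It assumes that the objective is evaluated at `n_initial` uniform points (taken here to play the role of the ISAMPLES parameter of the experiments, which is an assumption), that a surrogate is fitted to those values, and that the `n_select` candidates with the lowest surrogate value among many cheap uniform candidates become the starting points of the local searches. SciPy's `RBFInterpolator` stands in for the K-Means/pseudo-inverse network of Section 2.3, and all names are illustrative.

```python
import numpy as np
from scipy.interpolate import RBFInterpolator


def rbf_sampling(f, lower, upper, n_initial=100, n_candidates=2000,
                 n_select=20, seed=0):
    """Hypothetical RBF-guided sampling: fit a surrogate on n_initial true
    evaluations, then return the n_select candidates with the lowest
    surrogate value."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    # 1. Spend n_initial evaluations of the (expensive) objective function.
    X = rng.uniform(lower, upper, size=(n_initial, lower.size))
    t = np.array([f(x) for x in X])
    # 2. Fit the surrogate (stand-in for the paper's RBF network).
    surrogate = RBFInterpolator(X, t)
    # 3. Screen many cheap candidate points using the surrogate only.
    C = rng.uniform(lower, upper, size=(n_candidates, lower.size))
    scores = surrogate(C)
    # 4. Keep the most promising candidates as local-search starting points.
    return C[np.argsort(scores)[:n_select]]
```

The returned points would replace the uniform starting points of the basic Multistart loop; only step 1 consumes evaluations of the true objective.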

3. Experiments

3.1. Test Functions

- Bent Cigar function. The function is $f(x) = x_1^2 + 10^6 \sum_{i=2}^{n} x_i^2$ with the global minimum $f(x^*) = 0$. For the conducted experiments a single fixed value of $n$ was used.

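- Bf1 function. The Bohachevsky 1 function is given by the equation $f(x) = x_1^2 + 2x_2^2 - \frac{3}{10}\cos\left(3\pi x_1\right) - \frac{4}{10}\cos\left(4\pi x_2\right) + \frac{7}{10}$. The value of the global minimum is 0.0.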

- Bf2 function. The Bohachevsky 2 function is given by the equation $f(x) = x_1^2 + 2x_2^2 - \frac{3}{10}\cos\left(3\pi x_1\right)\cos\left(4\pi x_2\right) + \frac{3}{10}$. The value of the global minimum is 0.0.

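- Branin function. The function is defined by $f(x) = \left(x_2 - \frac{5.1}{4\pi^2}x_1^2 + \frac{5}{\pi}x_1 - 6\right)^2 + 10\left(1 - \frac{1}{8\pi}\right)\cos\left(x_1\right) + 10$ with $-5 \le x_1 \le 10$ and $0 \le x_2 \le 15$. The value of the global minimum is 0.397887.

- The value of the global minimum is −0.352386.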

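- CM function. The Cosine Mixture function is given by the equation $f(x) = \sum_{i=1}^{n} x_i^2 - \frac{1}{10}\sum_{i=1}^{n}\cos\left(5\pi x_i\right)$ with $x \in [-1, 1]^n$. The value of the global minimum is −0.4 and in our experiments we have used $n = 4$. The corresponding function is denoted as CM4.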

- Camel function. The function is given by $f(x) = 4x_1^2 - 2.1x_1^4 + \frac{1}{3}x_1^6 + x_1 x_2 - 4x_2^2 + 4x_2^4$. The global minimum has the value of $f(x^*) = -1.0316$.

- Discus function. The function is defined as $f(x) = 10^6 x_1^2 + \sum_{i=2}^{n} x_i^2$ with global minimum $f(x^*) = 0$. For the conducted experiments a single fixed value of $n$ was used.

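- Easom function. The function is given by the equation $f(x) = -\cos\left(x_1\right)\cos\left(x_2\right)\exp\left(-\left(x_2 - \pi\right)^2 - \left(x_1 - \pi\right)^2\right)$ with $x \in [-100, 100]^2$ and global minimum −1.0.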

- Exponential function. The function is given by $f(x) = -\exp\left(-0.5\sum_{i=1}^{n} x_i^2\right)$. The global minimum is located at $x^* = (0, 0, \ldots, 0)$ with value −1. In our experiments we used this function with $n = 4, 16, 64$ and the corresponding functions are denoted by the labels EXP4, EXP16, EXP64.

- Griewank2 function. This is the two-dimensional Griewank function. Its global minimum is located at $x^* = (0, 0)$ with value 0.

- Griewank10 function. This is the Griewank function in higher dimension: in our experiments we have used $n = 10$ and the global minimum is 0.0. The function has several local minima in the specified range.

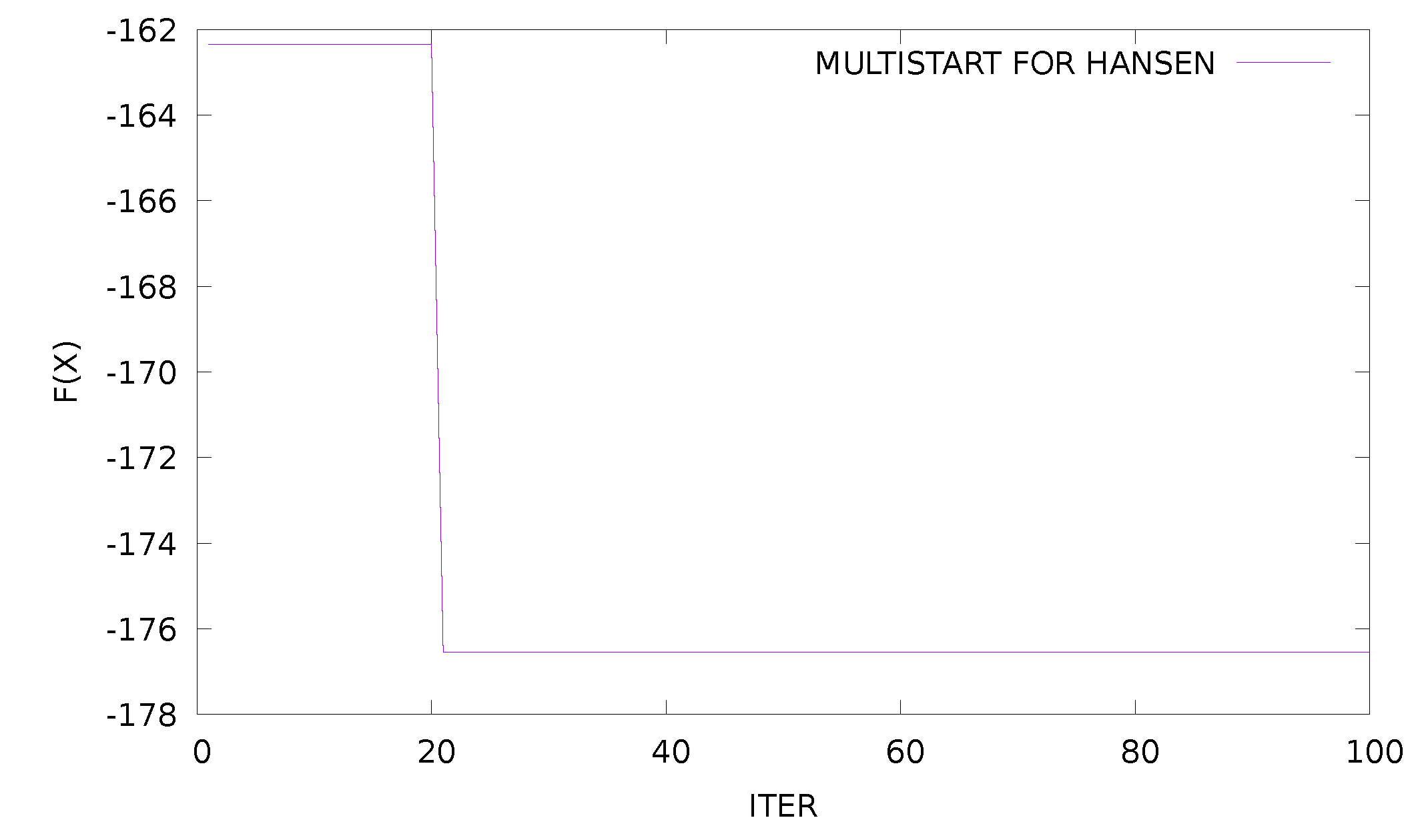

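- Hansen function. $f(x) = \sum_{i=1}^{5} i\cos\left((i-1)x_1 + i\right)\sum_{j=1}^{5} j\cos\left((j+1)x_2 + j\right)$, $x \in [-10, 10]^2$. The global minimum of the function is −176.541793.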

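- Hartman 3 function. The function is given by $f(x) = -\sum_{i=1}^{4} c_i \exp\left(-\sum_{j=1}^{3} a_{ij}\left(x_j - p_{ij}\right)^2\right)$ with $x \in [0, 1]^3$ and the standard Hartman 3 coefficients $a_{ij}$, $c_i$ and $p_{ij}$. The value of the global minimum is −3.862782.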

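- Hartman 6 function. The function is given by $f(x) = -\sum_{i=1}^{4} c_i \exp\left(-\sum_{j=1}^{6} a_{ij}\left(x_j - p_{ij}\right)^2\right)$ with $x \in [0, 1]^6$ and the standard Hartman 6 coefficients $a_{ij}$, $c_i$ and $p_{ij}$. The value of the global minimum is −3.322368.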

- High Conditioned Elliptic function, defined as $f(x) = \sum_{i=1}^{n} \left(10^6\right)^{\frac{i-1}{n-1}} x_i^2$ with global minimum $f(x^*) = 0$. A single fixed value of $n$ was used in the conducted experiments.

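- Potential function. The molecular conformation corresponding to the global minimum of the energy of N atoms interacting via the Lennard-Jones potential [69] is used as a test case here. The function to be minimized is the sum, over all pairs of atoms, of the pairwise potential $V_{LJ}(r) = 4\epsilon\left[\left(\frac{\sigma}{r}\right)^{12} - \left(\frac{\sigma}{r}\right)^{6}\right]$, where $r$ is the distance between the two atoms. In the current experiments two different cases were studied: $N = 3$ and $N = 5$ atoms, denoted POTENTIAL3 and POTENTIAL5 in the tables.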

- Rastrigin function. The function is given by $f(x) = x_1^2 + x_2^2 - \cos\left(18x_1\right) - \cos\left(18x_2\right)$. The global minimum is located at $x^* = (0, 0)$ with value −2.0.

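- Shekel 7 function. The function is given by $f(x) = -\sum_{i=1}^{7} \frac{1}{\left(x - a_i\right)\left(x - a_i\right)^T + c_i}$ with $x \in [0, 10]^4$, where $a_i$ are the rows of the Shekel coefficient matrix and $c_i$ the corresponding constants. The value of the global minimum is −10.342378.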

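- Shekel 5 function. The function is given by $f(x) = -\sum_{i=1}^{5} \frac{1}{\left(x - a_i\right)\left(x - a_i\right)^T + c_i}$ with $x \in [0, 10]^4$, where $a_i$ are the rows of the Shekel coefficient matrix and $c_i$ the corresponding constants. The value of the global minimum is −10.107749.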

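- Shekel 10 function. The function is given by $f(x) = -\sum_{i=1}^{10} \frac{1}{\left(x - a_i\right)\left(x - a_i\right)^T + c_i}$ with $x \in [0, 10]^4$, where $a_i$ are the rows of the Shekel coefficient matrix and $c_i$ the corresponding constants. The value of the global minimum is −10.536410.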

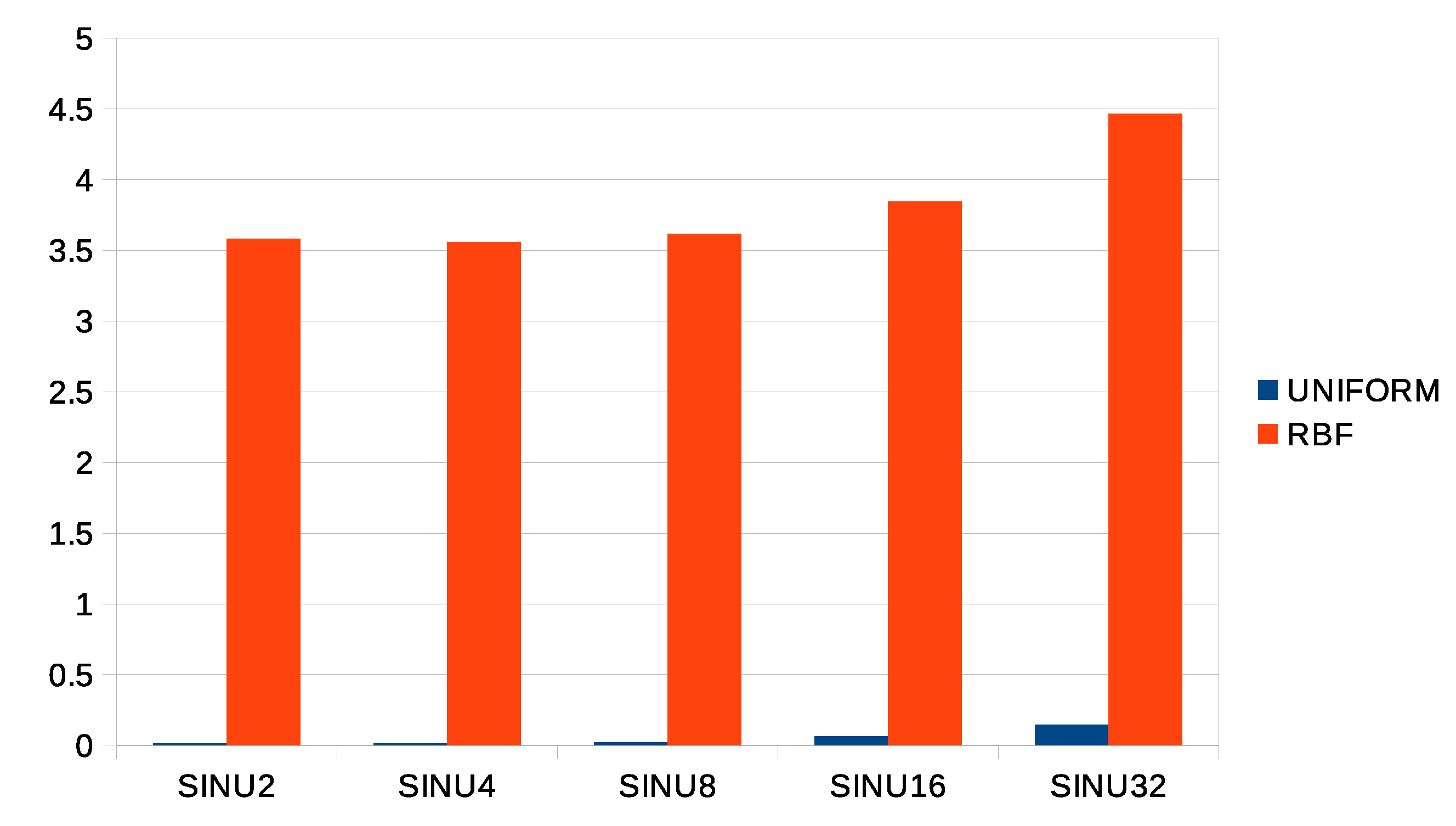

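- Sinusoidal function. The function is given by $f(x) = -\left(2.5\prod_{i=1}^{n}\sin\left(x_i - z\right) + \prod_{i=1}^{n}\sin\left(5\left(x_i - z\right)\right)\right)$, $0 \le x_i \le \pi$. The global minimum is located at $x_i^* = \pi/2 + z$ for every $i$, with $f(x^*) = -3.5$. In our experiments we used $n = 4, 8, 16$ and $z = \pi/6$, and the corresponding functions are denoted by the labels SINU4, SINU8 and SINU16 respectively.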

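- Test2N function. This function is given by the equation $f(x) = \frac{1}{2}\sum_{i=1}^{n}\left(x_i^4 - 16x_i^2 + 5x_i\right)$ with $x_i \in [-5, 5]$. The function has $2^n$ local minima in the specified range and in our experiments we used $n = 4, 5, 6, 7$. The corresponding values of the global minimum are −156.664663 for $n = 4$, −195.830829 for $n = 5$, −234.996994 for $n = 6$ and −274.163160 for $n = 7$.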

- Test30N function. This function has $30^n$ local minima in the specified range and we used $n = 3, 4$ in our experiments. The value of the global minimum for this function is 0.0.

3.2. Experimental Results

4. Conclusions

- Application of the proposed technique to other more efficient global optimization methods.

- Parallelization of the training method for the neural network.

- Use of more efficient methods, such as genetic algorithms, to train the RBF networks.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Cheong, D.; Kim, Y.M.; Byun, H.W.; Oh, K.J.; Kim, T.Y. Using genetic algorithm to support clustering-based portfolio optimization by investor information. Appl. Soft Comput. 2017, 61, 593–602.

- Díaz, J.G.; Rodríguez, B.G.; Leal, M.; Puerto, J. Global optimization for bilevel portfolio design: Economic insights from the Dow Jones index. Omega 2021, 102, 102353.

- Gao, J.; You, F. Shale Gas Supply Chain Design and Operations toward Better Economic and Life Cycle Environmental Performance: MINLP Model and Global Optimization Algorithm. ACS Sustain. Chem. Eng. 2015, 3, 1282–1291.

- Luo, X.L.; Feng, J.; Zhang, H.H. A genetic algorithm for astroparticle physics studies. Comput. Phys. Commun. 2020, 250, 106818.

- Biekötter, T.; Olea-Romacho, M.O. Reconciling Higgs physics and pseudo-Nambu-Goldstone dark matter in the S2HDM using a genetic algorithm. J. High Energ. Phys. 2021, 2021, 215.

- Gu, T.; Luo, W.; Xiang, H. Prediction of two-dimensional materials by the global optimization approach. WIREs Comput. Mol. Sci. 2017, 7, e1295.

- Fang, H.; Zhou, J.; Wang, Z.; Qiu, Z.; Sun, Y.; Lin, Y.; Chen, K.; Zhou, X.; Pan, M. Hybrid method integrating machine learning and particle swarm optimization for smart chemical process operations. Front. Chem. Sci. Eng. 2022, 16, 274–287.

- Furman, D.; Carmeli, B.; Zeiri, Y.; Kosloff, R. Enhanced Particle Swarm Optimization Algorithm: Efficient Training of ReaxFF Reactive Force Fields. J. Chem. Theory Comput. 2018, 14, 3100–3112.

- Heiles, S.; Johnston, R.L. Global optimization of clusters using electronic structure methods. Int. J. Quantum Chem. 2013, 113, 2091–2109.

- Lee, E.K. Large-Scale Optimization-Based Classification Models in Medicine and Biology. Ann. Biomed. Eng. 2007, 35, 1095–1109.

- Hilali-Jaghdam, I.; Ishak, A.B.; Abdel-Khalek, S.; Jamal, A. Quantum and classical genetic algorithms for multilevel segmentation of medical images: A comparative study. Comput. Commun. 2020, 162, 83–93.

- Wolfe, M.A. Interval methods for global optimization. Appl. Math. Comput. 1996, 75, 179–206.

- Allahdadi, M.; Nehi, H.M.; Ashayerinasab, H.A.; Javanmard, M. Improving the modified interval linear programming method by new techniques. Inf. Sci. 2016, 339, 224–236.

- Araya, I.; Reyes, V. Interval Branch-and-Bound algorithms for optimization and constraint satisfaction: A survey and prospects. J. Glob. Optim. 2016, 65, 837–866.

- Price, W.L. Global optimization by controlled random search. J. Optim. Theory Appl. 1983, 40, 333–348.

- Filho, N.M.; Albuquerque, R.B.F.; Sousa, B.S.; Santos, L.G.C. A comparative study of controlled random search algorithms with application to inverse aerofoil design. Eng. Optim. 2018, 50, 996–1015.

- Kaelo, P.; Ali, M.M. Numerical studies of some generalized controlled random search algorithms. Asia-Pac. J. Oper. Res. 2012, 29, 1250016.

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by simulated annealing. Science 1983, 220, 671–680.

- Ferreiro, A.M.; García, J.A.; López-Salas, J.G.; Vázquez, C. An efficient implementation of parallel simulated annealing algorithm in GPUs. J. Glob. Optim. 2013, 57, 863–890.

- Neri, F.; Tirronen, V. Recent advances in differential evolution: A survey and experimental analysis. Artif. Intell. Rev. 2010, 33, 61–106.

- Das, S.; Suganthan, P.N. Differential Evolution: A Survey of the State-of-the-Art. IEEE Trans. Evol. Comput. 2011, 15, 4–31.

- Kramer, O. Genetic Algorithms. In Genetic Algorithm Essentials. Studies in Computational Intelligence; Springer: Cham, Switzerland, 2017; Volume 679.

- Katoch, S.; Chauhan, S.S.; Kumar, V.A. A review on genetic algorithm: Past, present, and future. Multimed. Tools Appl. 2021, 80, 8091–8126.

- Grady, S.A.; Hussaini, M.Y.; Abdullah, M.M. Placement of wind turbines using genetic algorithms. Renew. Energy 2005, 30, 259–270.

- Poli, R.; Kennedy, J.; Blackwell, T. Particle swarm optimization: An overview. Swarm Intell. 2007, 1, 33–57.

- Wang, D.; Tan, D.; Liu, L. Particle swarm optimization algorithm: An overview. Soft Comput. 2018, 22, 387–408.

- Dorigo, M.; Birattari, M.; Stutzle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

- Socha, K.; Dorigo, M. Ant colony optimization for continuous domains. Eur. J. Oper. Res. 2008, 185, 1155–1173.

- Shieh, H.L.; Kuo, C.C.; Chiang, C.M. Modified particle swarm optimization algorithm with simulated annealing behavior and its numerical verification. Appl. Math. Comput. 2011, 218, 4365–4383.

- Zhoua, S.; Liu, X.; Hua, Y.; Zhou, X.; Yang, S. Adaptive model parameter identification for lithium-ion batteries based on improved coupling hybrid adaptive particle swarm optimization-simulated annealing method. J. Power Sources 2021, 482, 228951.

- He, D.; Wang, F.; Mao, Z. A hybrid genetic algorithm approach based on differential evolution for economic dispatch with valve-point effect. Int. J. Electr. Power Energy Syst. 2008, 30, 31–38.

- Trivedi, A.; Srinivasan, D.; Biswas, S.; Reindl, T. A genetic algorithm—Differential evolution based hybrid framework: Case study on unit commitment scheduling problem. Inf. Sci. 2016, 354, 275–300.

- Kao, Y.T.; Zahara, E. A hybrid genetic algorithm and particle swarm optimization for multimodal functions. Appl. Soft Comput. 2008, 8, 849–857.

- Barkalov, K.; Gergel, V. Parallel global optimization on GPU. J. Glob. Optim. 2016, 66, 3–20.

- Kan, G.; Lei, T.; Liang, K.; Li, J.; Ding, L.; He, X.; Yu, H.; Zhang, D.; Zuo, D.; Bao, Z.; et al. A multi-core CPU and many-core GPU based fast parallel shuffled complex evolution global optimization approach. IEEE Trans. Parallel Distrib. Syst. 2017, 28, 332–344.

- Ferreiro, A.M.; García-Rodríguez, J.A.; Vázquez, C.; Costa, E.; Correia, A. Parallel two-phase methods for global optimization on GPU. Math. Comput. Simul. 2019, 156, 67–90.

- Li, W. A Parallel Multi-start Search Algorithm for Dynamic Traveling Salesman Problem. In Experimental Algorithms; Pardalos, P.M., Rebennack, S., Eds.; SEA 2011. Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6630.

- Marti, R.; Resende, M.G.C.; Ribeiro, C.C. Multi-start methods for combinatorial optimization. Eur. J. Oper. Res. 2013, 226, 1–8.

- Pandiri, V.; Singh, A. Two multi-start heuristics for the k-traveling salesman problem. OPSEARCH 2020, 57, 1164–1204.

- Braysy, O.; Hasle, G.; Dullaert, W. A multi-start local search algorithm for the vehicle routing problem with time windows. Eur. J. Oper. Res. 2004, 159, 586–605.

- Michallet, J.; Prins, C.; Amodeo, L.; Yalaoui, F.; Vitry, G. Multi-start iterated local search for the periodic vehicle routing problem with time windows and time spread constraints on services. Comput. Oper. Res. 2014, 41, 196–207.

- Resende, M.G.C.; Werneck, R.F. A hybrid multistart heuristic for the uncapacitated facility location problem. Eur. J. Oper. Res. 2006, 174, 54–68.

- Marchiori, E. Genetic, Iterated and Multistart Local Search for the Maximum Clique Problem. In Applications of Evolutionary Computing; Cagnoni, S., Gottlieb, J., Hart, E., Middendorf, M., Raidl, G.R., Eds.; EvoWorkshops 2002. Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2002; Volume 2279.

- Gomes, M.I.; Afonso, L.B.; Chibeles-Martins, N.; Fradinho, J.M. Multi-start Local Search Procedure for the Maximum Fire Risk Insured Capital Problem. In Combinatorial Optimization; Lee, J., Rinaldi, G., Mahjoub, A., Eds.; ISCO 2018. Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 10856.

- Streuber, G.M.; Zingg, D.W. Evaluating the Risk of Local Optima in Aerodynamic Shape Optimization. AIAA J. 2021, 59, 75–87.

- Ali, M.M.; Storey, C. Topographical multilevel single linkage. J. Glob. Optim. 1994, 5, 349–358.

- Salhi, S.; Queen, N.M. A hybrid algorithm for identifying global and local minima when optimizing functions with many minima. Eur. J. Oper. Res. 2004, 155, 51–67.

- Tsoulos, I.G.; Lagaris, I.E. MinFinder: Locating all the local minima of a function. Comput. Phys. Commun. 2006, 174, 166–179.

- Oliveira, H.C.B.d.; Vasconcelos, G.C.; Alvarenga, G. A Multi-Start Simulated Annealing Algorithm for the Vehicle Routing Problem with Time Windows. In Proceedings of the 2006 Ninth Brazilian Symposium on Neural Networks (SBRN’06), Ribeirao Preto, Brazil, 23–27 October 2006; pp. 137–142.

- Day, R.F.; Yin, P.Y.; Wang, Y.C.; Chao, C.H. A new hybrid multi-start tabu search for finding hidden purchase decision strategies in WWW based on eye-movements. Appl. Soft Comput. 2016, 48, 217–229.

- Festa, P.; Resende, M.G.C. Hybrid GRASP Heuristics. In Foundations of Computational Intelligence Volume 3. Studies in Computational Intelligence; Abraham, A., Hassanien, A.E., Siarry, P., Engelbrecht, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; Volume 203.

- Betro, B.; Schoen, F. Optimal and sub-optimal stopping rules for the multistart algorithm in global optimization. Math. Program. 1992, 57, 445–458.

- Hart, W.E. Sequential stopping rules for random optimization methods with applications to multistart local search. SIAM J. Optim. 1998, 9, 270–290.

- Lagaris, I.E.; Tsoulos, I.G. Stopping Rules for Box-Constrained Stochastic Global Optimization. Appl. Math. Comput. 2008, 197, 622–632.

- Rocki, K.; Suda, R. An efficient GPU implementation of a multi-start TSP solver for large problem instances. In Proceedings of the GECCO ’12: 14th Annual Conference Companion on Genetic and Evolutionary Computation, Philadelphia, PA, USA, 7–11 July 2012; pp. 1441–1442.

- Larson, J.; Wild, S.M. Asynchronously parallel optimization solver for finding multiple minima. Math. Program. Comput. 2018, 10, 303–332.

- Park, J.; Sandberg, I.W. Universal Approximation Using Radial-Basis-Function Networks. Neural Comput. 1991, 3, 246–257.

- Yoo, S.H.; Oh, S.K.; Pedrycz, W. Optimized face recognition algorithm using radial basis function neural networks and its practical applications. Neural Netw. 2015, 69, 111–125.

- Huang, G.B.; Saratchandran, P.; Sundararajan, N. A generalized growing and pruning RBF (GGAP-RBF) neural network for function approximation. IEEE Trans. Neural Netw. 2005, 16, 57–67.

- Majdisova, Z.; Skala, V. Radial basis function approximations: Comparison and applications. Appl. Math. Modell. 2017, 51, 728–743.

- Kuo, B.C.; Ho, H.H.; Li, C.H.; Hung, C.C.; Taur, J.S. A Kernel-Based Feature Selection Method for SVM With RBF Kernel for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 317–326.

- Han, H.G.; Chen, Q.L.; Qiao, J.F. An efficient self-organizing RBF neural network for water quality prediction. Neural Netw. 2011, 24, 717–725.

- Powell, M.J.D. A Tolerant Algorithm for Linearly Constrained Optimization Calculations. Math. Program. 1989, 45, 547–566.

- Tsoulos, I.G. Modifications of real code genetic algorithm for global optimization. Appl. Math. Comput. 2008, 203, 598–607.

- Tsoulos, I.G.; Tzallas, A.; Karvounis, E. Improving the PSO method for global optimization problems. Evol. Syst. 2021, 12, 875–883.

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the 5th Berkeley Symposium on Mathematical Statistics and Probability, Oakland, CA, USA, 21 June–18 July 1965.

- Montaz Ali, M.; Khompatraporn, C.; Zabinsky, Z.B. A Numerical Evaluation of Several Stochastic Algorithms on Selected Continuous Global Optimization Test Problems. J. Glob. Optim. 2005, 31, 635–672.

- Floudas, C.A.; Pardalos, P.M.; Adjiman, C.; Esposito, W.; Gümüs, Z.; Harding, S.; Klepeis, J.; Meyer, C.; Schweiger, C. Handbook of Test Problems in Local and Global Optimization; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1999.

- Zhang, J.; Glezakou, V.A. Global optimization of chemical cluster structures: Methods, applications, and challenges. Int. J. Quantum Chem. 2021, 121, e26553.

| PARAMETER | VALUE |
|---|---|
| | 100 |
| k | 10 |
| N | 20, 50 |
| FR | |

| FUNCTION | N = 20 | N = 50 |
|---|---|---|
| BF1 | 3004 | 5975 |
| BF2 | 2828 | 5826 |
| BRANIN | 2409 | 5415 |
| CAMEL | 2661 | 5599 |
| CIGAR | 5588 | 8410 |
| CM4 | 3551 (0.87) | 6431 (0.80) |
| DISCUS | 2817 | 5965 |
| EASOM | 2204 | 5202 |
| EXP4 | 2769 | 5772 |
| EXP16 | 2836 | 5837 |
| EXP64 | 2912 | 5914 |
| GRIEWANK2 | 3938 (0.40) | 6572 (0.30) |
| GRIEWANK10 | 4536 (0.97) | 7520 |
| POTENTIAL3 | 3121 | 6120 |
| POTENTIAL5 | 4363 | 7320 |
| HANSEN | 5344 (0.93) | 9536 (0.90) |
| HARTMAN3 | 2618 | 5608 |
| HARTMAN6 | 3014 | 6037 |
| HIGHELLIPTIC | 4398 | 7306 |
| RASTRIGIN | 3850 (0.83) | 6401 (0.77) |
| ROSENBROCK4 | 6456 | 8584 |
| ROSENBROCK8 | 7646 | 10095 |
| SHEKEL5 | 3144 | 6215 |
| SHEKEL7 | 3354 | 6508 |
| SHEKEL10 | 3388 | 6860 |
| SINU4 | 3935 | 6670 (0.97) |
| SINU8 | 5547 | 8056 |
| SINU16 | 19,313 | 35,751 (0.97) |
| TEST2N4 | 3035 (0.87) | 6002 (0.97) |
| TEST2N5 | 3127 (0.73) | 6042 (0.67) |
| TEST2N6 | 3393 (0.40) | 6169 (0.47) |
| TEST2N7 | 4075 (0.37) | 6443 (0.33) |
| TEST30N3 | 3723 | 6322 |
| TEST30N4 | 3736 | 6465 |
| TOTAL | 142,632 (0.923) | 254,988 (0.916) |

| FUNCTION | ISAMPLES = 100 | ISAMPLES = 200 | ISAMPLES = 500 |
|---|---|---|---|
| BF1 | 1086 | 1159 | 1500 |
| BF2 | 922 | 1026 | 1304 |
| BRANIN | 503 | 590 | 899 |
| CAMEL | 670 | 756 | 1060 |
| CIGAR | 3482 | 3236 | 2849 |
| CM4 | 1583 (0.83) | 1716 (0.83) | 1861 (0.90) |
| DISCUS | 931 | 1206 | 1525 |
| EASOM | 1063 | 401 | 704 |
| EXP4 | 766 | 803 | 1049 |
| EXP16 | 912 | 1009 | 1303 |
| EXP64 | 968 | 1070 | 1359 |
| GRIEWANK2 | 2409 (0.53) | 1641 (0.40) | 2069 (0.57) |
| GRIEWANK10 | 2607 (0.97) | 2609 | 2902 (0.93) |
| POTENTIAL3 | 1211 | 1297 | 1613 |
| POTENTIAL5 | 2414 | 2521 | 2835 |
| HANSEN | 6079 (0.87) | 4785 (0.83) | 6504 (0.77) |
| HARTMAN3 | 729 | 830 | 1143 |
| HARTMAN6 | 1111 (0.90) | 1290 (0.93) | 1525 (0.97) |
| HIGHELLIPTIC | 2618 | 2671 | 3098 |
| RASTRIGIN | 1727 (0.57) | 1043 (0.87) | 1386 |
| ROSENBROCK4 | 4111 | 2672 | 4357 |
| ROSENBROCK8 | 5417 | 6253 | 5609 |
| SHEKEL5 | 1751 (0.73) | 2152 (0.90) | 1245 (0.90) |
| SHEKEL7 | 1667 (0.87) | 1627 (0.83) | 1676 (0.93) |
| SHEKEL10 | 2329 (0.80) | 2946 (0.73) | 3678 (0.77) |
| SINU4 | 938 | 991 | 1227 |
| SINU8 | 1194 | 1360 | 1479 |
| SINU16 | 14,305 (0.87) | 32,647 (0.97) | 21,363 (0.97) |
| TEST2N4 | 904 (0.57) | 936 (0.73) | 1227 |
| TEST2N5 | 1881 (0.80) | 1218 | 1351 |
| TEST2N6 | 1092 (0.67) | 1224 (0.87) | 1435 (0.97) |
| TEST2N7 | 1452 (0.70) | 1397 (0.80) | 1477 (0.90) |
| TEST30N3 | 1244 | 2054 | 2584 |
| TEST30N4 | 2027 | 2644 | 2638 |
| TOTAL | 74,103 (0.902) | 91,780 (0.932) | 89,834 (0.958) |

| FUNCTION | ISAMPLES = 100 | ISAMPLES = 200 | ISAMPLES = 500 |
|---|---|---|---|
| BF1 | 1093 | 1175 | 1527 |
| BF2 | 943 | 1022 | 1319 |
| BRANIN | 502 (0.97) | 594 | 900 |
| CAMEL | 642 | 729 | 1046 |
| CIGAR | 3527 | 3228 | 6729 |
| CM4 | 1491 (0.87) | 1884 (0.90) | 1799 (0.97) |
| DISCUS | 828 | 1365 | 1215 |
| EASOM | 2320 | 398 | 723 |
| EXP4 | 766 | 827 | 1050 |
| EXP16 | 912 | 1007 | 1298 |
| EXP64 | 983 | 1064 | 1358 |
| GRIEWANK2 | 1788 (0.50) | 1762 (0.43) | 2345 (0.50) |
| GRIEWANK10 | 2505 | 2677 | 2868 |
| POTENTIAL3 | 1244 | 1313 | 1609 |
| POTENTIAL5 | 2420 | 2502 | 2795 |
| HANSEN | 6711 (0.70) | 4278 (0.70) | 7264 (0.67) |
| HARTMAN3 | 728 | 830 | 1144 |
| HARTMAN6 | 1027 (0.93) | 1202 (0.93) | 1492 |
| HIGHELLIPTIC | 3455 | 2889 | 3078 |
| RASTRIGIN | 977 (0.53) | 1269 (0.77) | 1397 (0.97) |
| ROSENBROCK4 | 2348 | 2453 | 3278 |
| ROSENBROCK8 | 3928 | 4461 | 4865 |
| SHEKEL5 | 5630 (0.67) | 7498 (0.87) | 1510 (0.93) |
| SHEKEL7 | 2135 (0.67) | 1973 (0.67) | 1815 (0.97) |
| SHEKEL10 | 1864 (0.73) | 1245 (0.60) | 3165 (0.83) |
| SINU4 | 984 | 1020 | 1355 |
| SINU8 | 10,502 | 1517 | 1456 |
| SINU16 | 95,225 (0.83) | 21,658 (0.90) | 21,330 (0.87) |
| TEST2N4 | 820 (0.63) | 1079 (0.90) | 1274 |
| TEST2N5 | 1140 (0.67) | 1107 (0.80) | 1333 |
| TEST2N6 | 1203 (0.73) | 1371 (0.97) | 1440 (0.97) |
| TEST2N7 | 1602 (0.50) | 1200 (0.77) | 1618 (0.97) |
| TEST30N3 | 1494 | 1903 | 2279 |
| TEST30N4 | 1164 | 2287 | 2284 |
| TOTAL | 164,901 (0.880) | 82,787 (0.918) | 91,958 (0.960) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tsoulos, I.G.; Tzallas, A.; Tsalikakis, D. Use RBF as a Sampling Method in Multistart Global Optimization Method. Signals 2022, 3, 857-874. https://doi.org/10.3390/signals3040051
