Application of Machine Learning Techniques for Predicting Students’ Acoustic Evaluation in a University Library

Abstract

1. Introduction

1.1. Importance of Acoustic Quality in Learning Environments

1.2. Prediction of Acoustic Quality in Learning Environments

1.3. Application of Machine Learning for Prediction of Indoor Environment Quality

1.4. Research Questions of the Current Study

- Which variables will likely influence students’ acoustic evaluations of a learning space?

- Which predictive models demonstrate the highest accuracy in forecasting students’ acoustic evaluations?

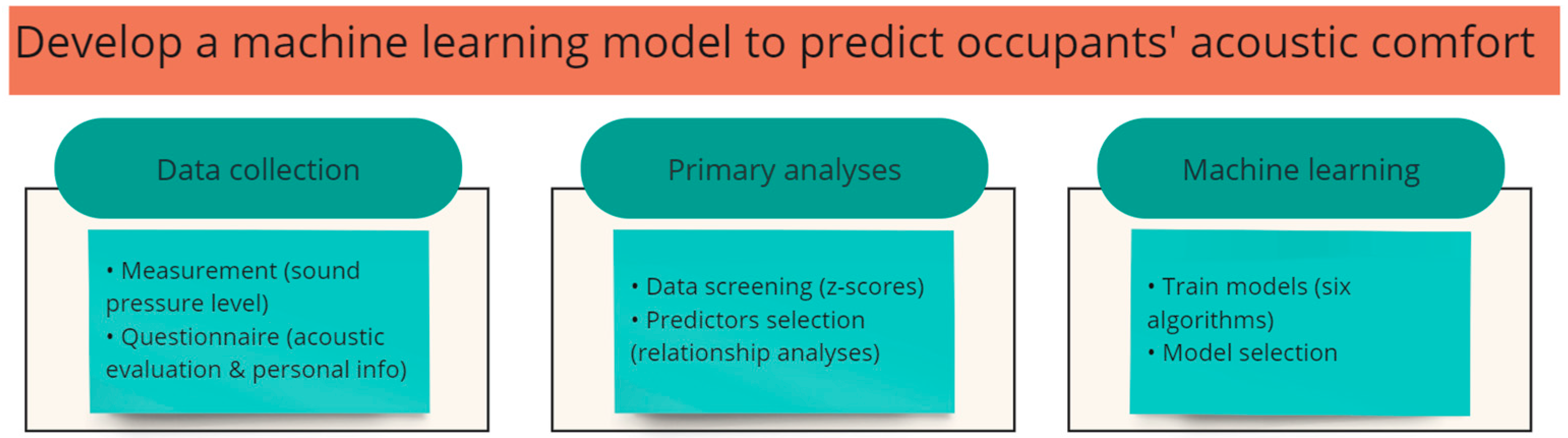

2. Materials and Methods

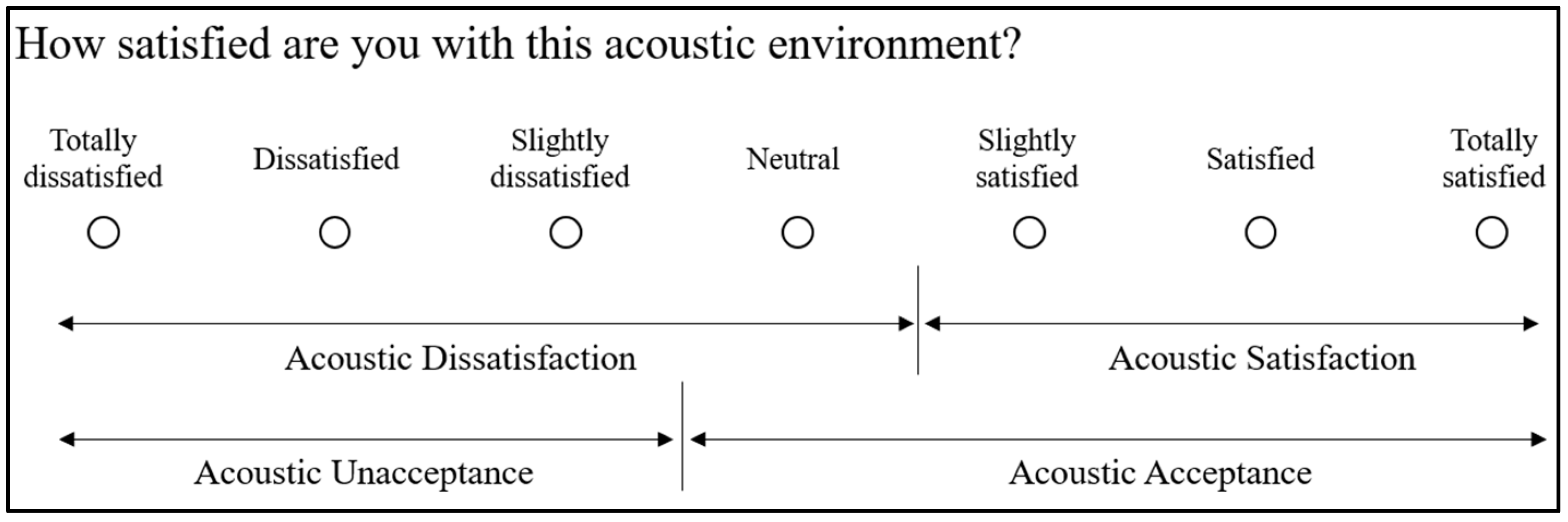

2.1. Data Collection

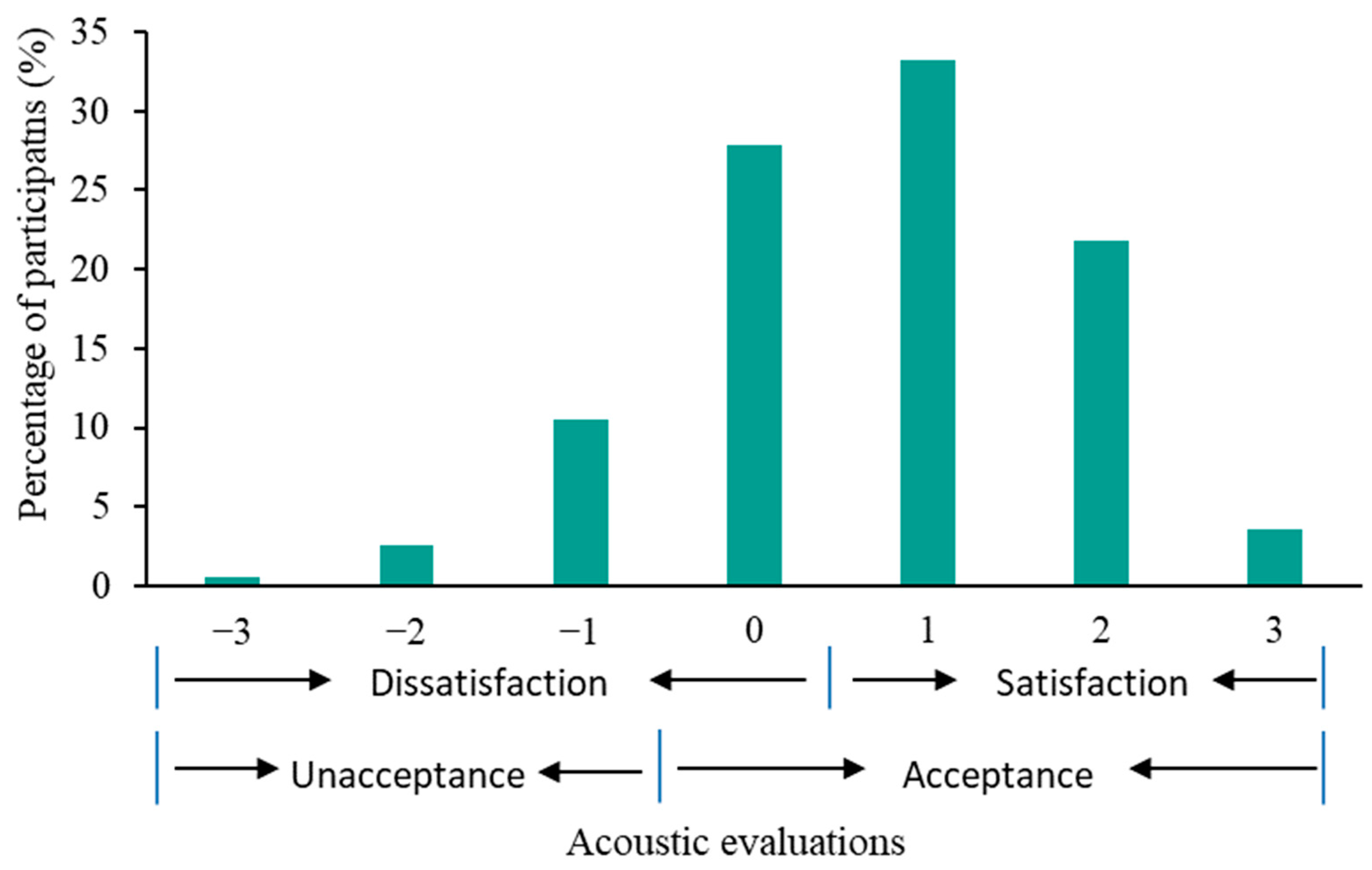

- Acoustic Dissatisfaction: answers from “totally dissatisfied (−3)” to “neutral (0)”;

- Acoustic Satisfaction: answers from “slightly satisfied (1)” to “totally satisfied (3)”;

- Acoustic Unacceptance: answers from “totally dissatisfied (−3)” to “slightly dissatisfied (−1)”;

- Acoustic Acceptance: answers from “neutral (0)” to “totally satisfied (3)”.
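The four categories above are two cut points on the same seven-point scale. Satisfaction starts at +1 and acceptance starts at 0, so a neutral vote counts as dissatisfied but accepted. The minimal Python sketch below shows that recoding. `recode_vote` and `shares` are hypothetical helper names, and storing votes as integers from −3 to +3 is an assumption; this is not the authors' code.

```python
# Hypothetical helpers (not the authors' code): recode one 7-point vote into the
# four binary labels defined above. Assumes votes are integers from -3
# ("totally dissatisfied") to +3 ("totally satisfied").


def recode_vote(vote: int) -> dict:
    if vote not in range(-3, 4):
        raise ValueError(f"vote must be an integer from -3 to 3, got {vote!r}")
    satisfied = vote >= 1  # "slightly satisfied (1)" .. "totally satisfied (3)"
    accepted = vote >= 0   # "neutral (0)" .. "totally satisfied (3)"
    return {
        "satisfied": satisfied,
        "dissatisfied": not satisfied,  # -3 .. 0, so "neutral" counts as dissatisfied
        "accepted": accepted,
        "unaccepted": not accepted,     # -3 .. -1
    }


def shares(votes):
    """Return (share satisfied, share accepted) for a list of votes."""
    if not votes:
        raise ValueError("need at least one vote")
    labels = [recode_vote(v) for v in votes]
    n = len(labels)
    return (
        sum(lab["satisfied"] for lab in labels) / n,
        sum(lab["accepted"] for lab in labels) / n,
    )


if __name__ == "__main__":
    example_votes = [-2, -1, 0, 0, 1, 2, 3]  # made-up votes, for illustration only
    sat, acc = shares(example_votes)
    print(f"satisfied: {sat:.2f}, accepted: {acc:.2f}")
```

The acceptance cut point lies below the satisfaction cut point, so every satisfied vote is also an accepted vote. The accepted share therefore can never be lower than the satisfied share.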

2.2. Data Analysis

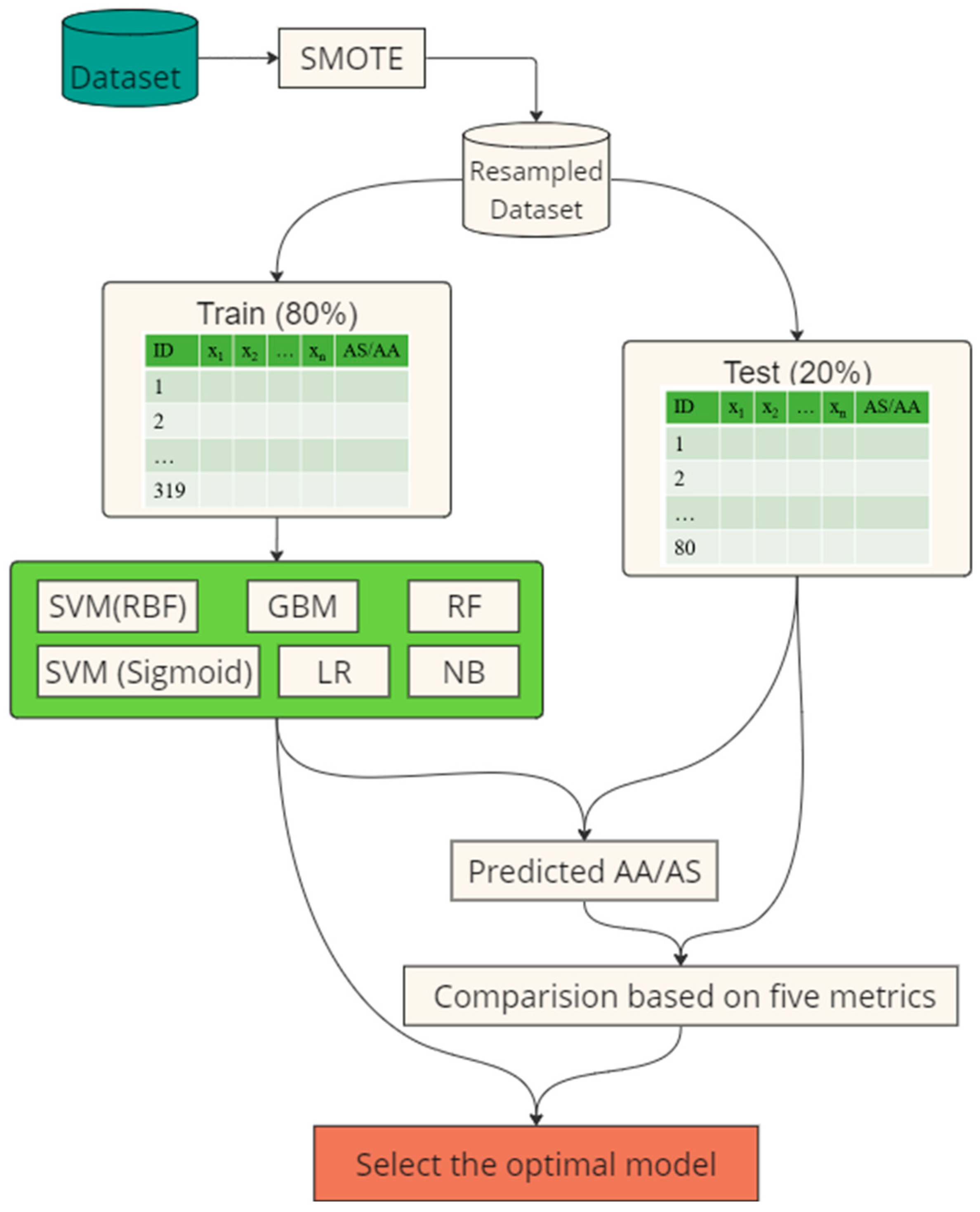

2.3. Machine Learning

- Support Vector Machine–Radial Basis Function (SVM (RBF)): SVM is a popular kernel-based classification algorithm that can handle non-linear and high-dimensional data. The radial basis function (RBF) kernel, a common choice for SVM, implicitly maps the data into a high-dimensional feature space using a Gaussian function. SVM (RBF) is relatively sensitive to its hyperparameters, such as the regularization parameter and the kernel width [41].

- Support Vector Machine–Sigmoid (SVM (Sigmoid)): SVM (Sigmoid) is another SVM variant that can also handle non-linear data. Instead of the Gaussian function used by SVM (RBF), it uses the hyperbolic tangent function as its kernel [41].

- Gradient Boosting Machine (GBM): GBM is an ensemble technique that builds models sequentially, with each new model fitted to correct the errors of the ensemble built so far. It combines the predictions of multiple weak learners (usually decision trees) into a strong model [42].

- Logistic Regression (LR): LR is a statistical model for binary classification based on one or more predictor variables. As its name indicates, LR uses the logistic function to map a linear combination of the predictors to a probability between 0 and 1 [43].

- Random Forest (RF): RF is also an ensemble learning method that constructs multiple decision trees. Unlike GBM, however, RF builds its trees independently and averages their predictions, which makes it highly robust [44].

- Naïve Bayes (NB): NB is a probabilistic classifier based on Bayes’ theorem. It assumes that the predictors are independent of one another given the class. This assumption makes NB computationally efficient, but it may reduce accuracy when the assumption does not hold [45].
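The three functions named in the model descriptions above can be written out directly. The following is an illustrative pure-Python sketch, not the implementation used in the study. The parameter names `gamma` and `coef0` follow common SVM-library conventions, and their default values here are arbitrary assumptions. One frequently cited caveat is that, unlike the RBF kernel, the sigmoid kernel is not positive semi-definite for every choice of `gamma` and `coef0`.

```python
import math

# Illustrative sketch of the kernel and link functions described above.
# Parameter names gamma/coef0 and their defaults are assumptions.


def _check_same_length(x, z):
    if len(x) != len(z):
        raise ValueError("x and z must have the same number of features")


def rbf_kernel(x, z, gamma=0.5):
    """Gaussian (RBF) kernel: exp(-gamma * ||x - z||^2)."""
    _check_same_length(x, z)
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def sigmoid_kernel(x, z, gamma=0.5, coef0=0.0):
    """Sigmoid kernel: tanh(gamma * <x, z> + coef0), bounded in (-1, 1)."""
    _check_same_length(x, z)
    dot = sum(a * b for a, b in zip(x, z))
    return math.tanh(gamma * dot + coef0)


def logistic(t):
    """Logistic function 1 / (1 + exp(-t)), mapping any real score into [0, 1]."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)  # this branch avoids overflow of exp(-t) for very negative t
    return e / (1.0 + e)


if __name__ == "__main__":
    x, z = [1.0, 2.0], [2.0, 0.0]
    print(rbf_kernel(x, z))      # similarity decays with squared distance
    print(sigmoid_kernel(x, z))  # tanh of a scaled dot product
    print(logistic(0.0))         # 0.5, the usual LR decision threshold
```

The RBF kernel depends only on the distance between two samples. The sigmoid kernel depends on their dot product, so it is sensitive to how the predictors are scaled.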

3. Results

3.1. Predictor Selection

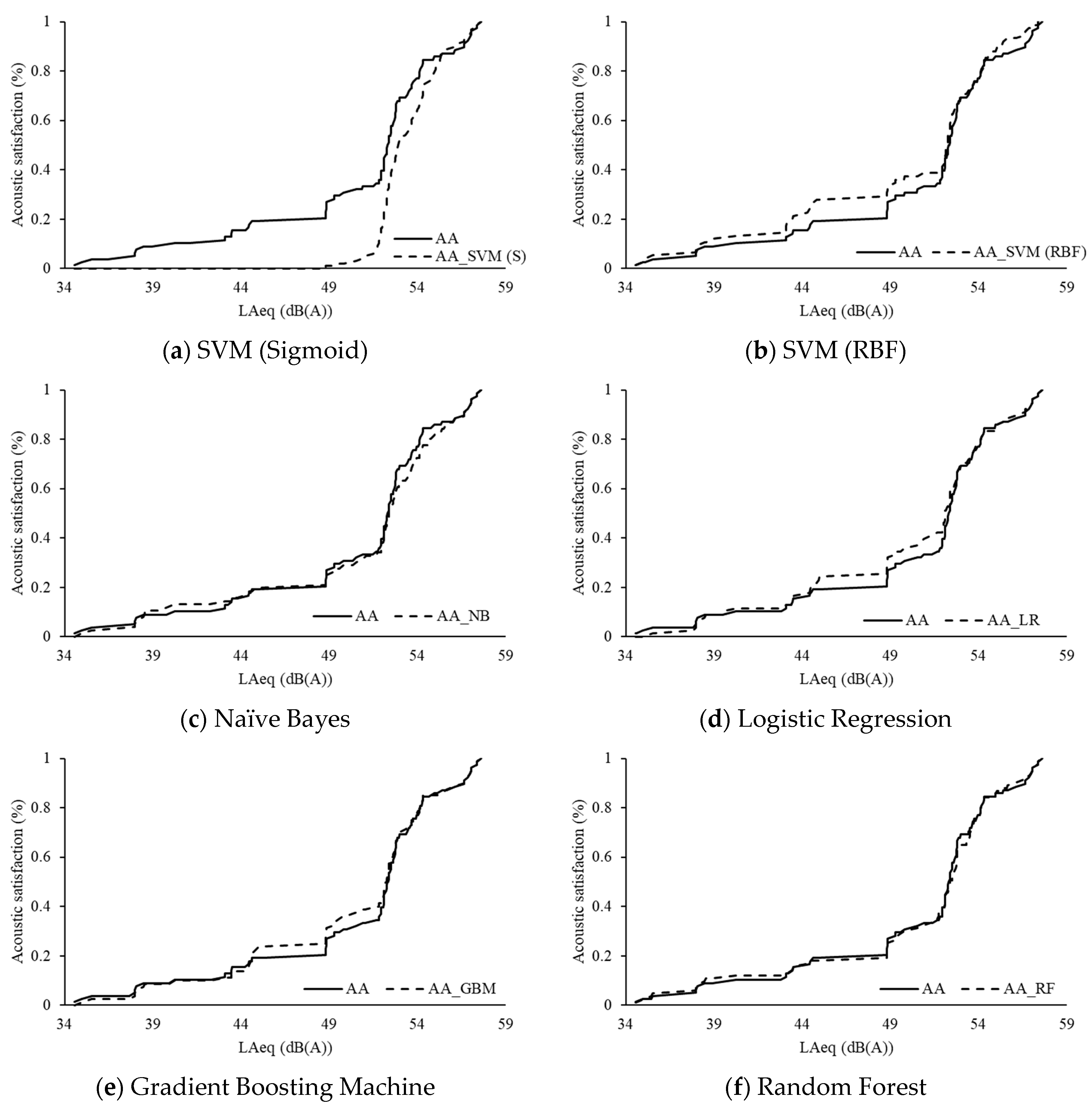

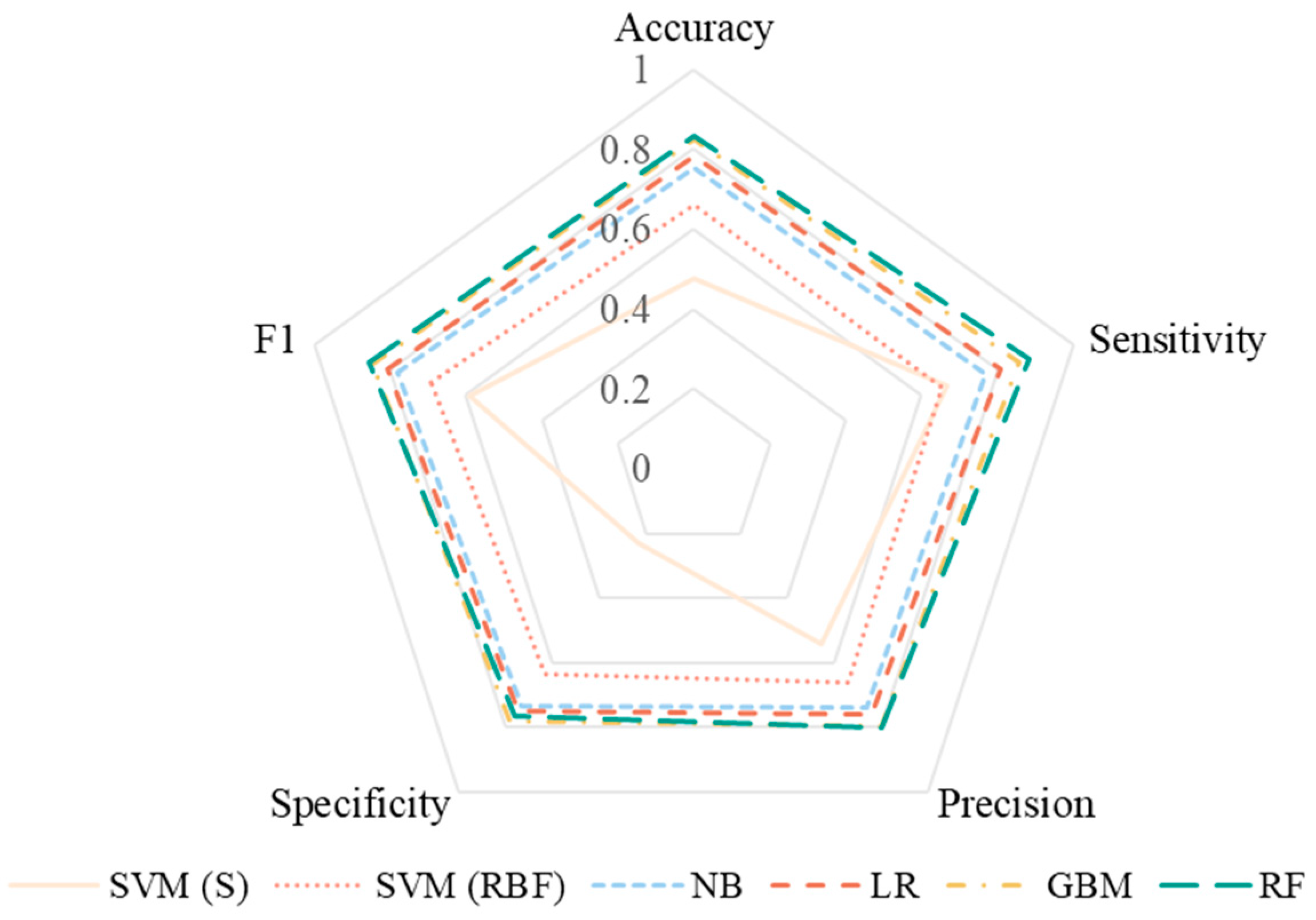

3.2. Acoustic Acceptance Prediction

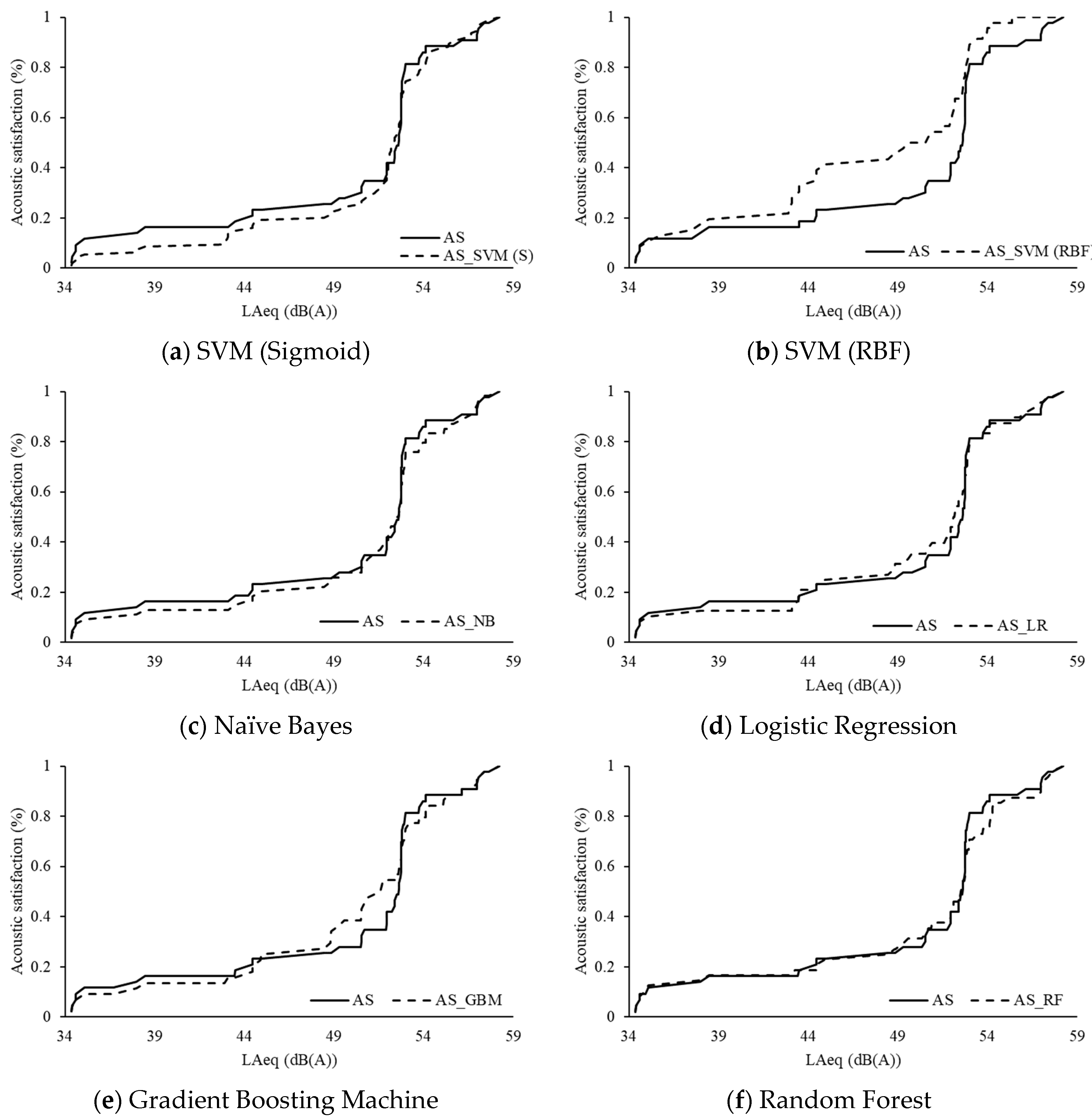

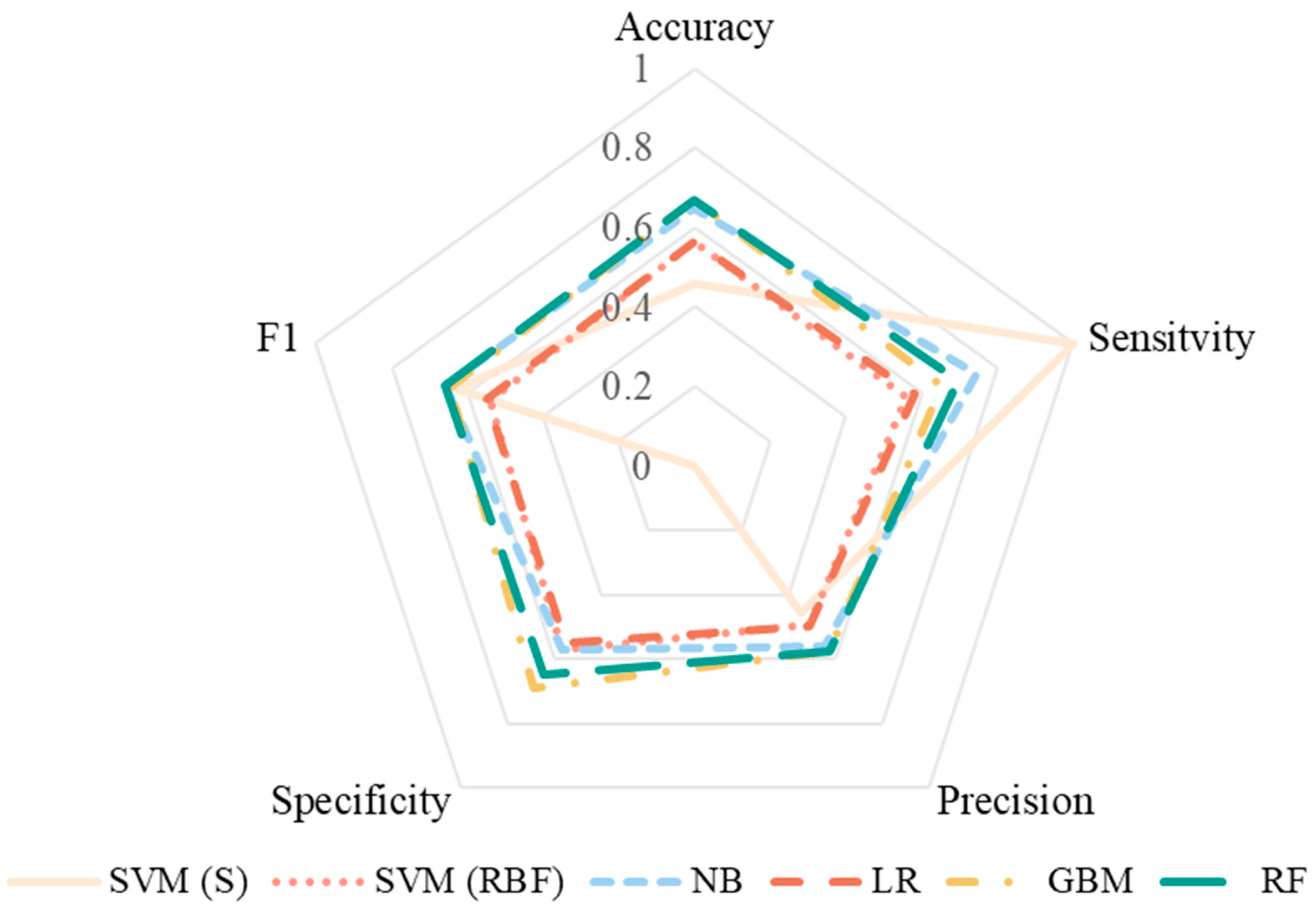

3.3. Acoustic Satisfaction Prediction

4. Discussion

4.1. Parameters to Indicate Occupants’ Acoustic Evaluations

4.2. Comparison of Tested Machine Learning Models

4.3. Limitations and Future Studies

5. Conclusions

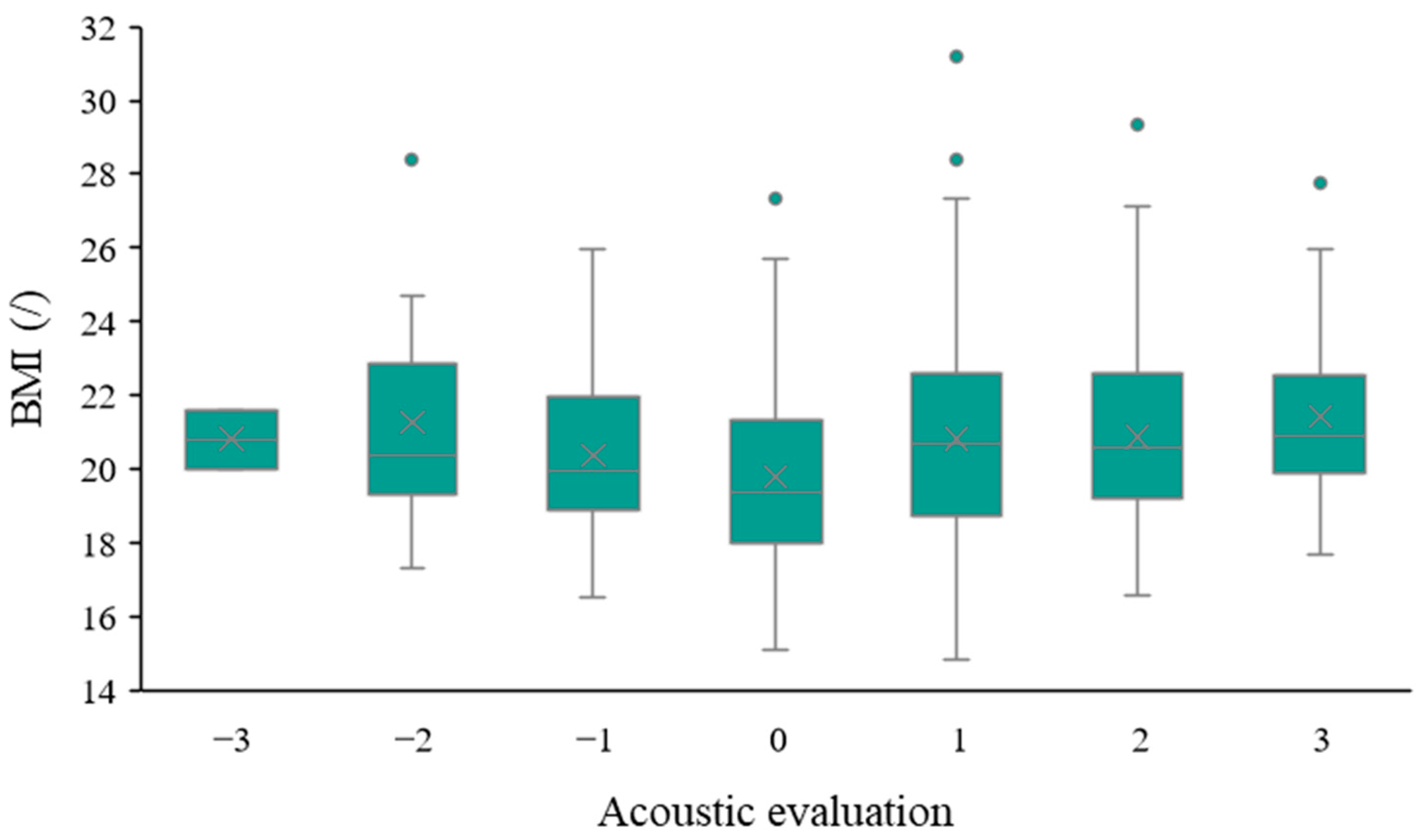

- Personal factors (e.g., age, gender, BMI, and current feeling) significantly influence students’ acoustic evaluations and should be treated as essential variables in future acoustic investigations.

- Age, gender, feeling, room type, seat location, and LAeq were used as input variables to predict acoustic acceptance, while age, feeling, BMI, and LAeq were used to predict acoustic satisfaction.

- Acoustic acceptance is a more tolerant criterion than acoustic satisfaction: 85% of students accepted the acoustic quality of the investigated environment, whereas only 58% were satisfied with it. The prediction accuracy for acoustic acceptance (0.72) was also higher than that for acoustic satisfaction (0.58). Future acoustic investigations are therefore recommended to prioritize acoustic acceptance as the target parameter.

- RF and GBM gave the best predictions of both acoustic acceptance and acoustic satisfaction, whereas the SVM models performed worst, particularly SVM (Sigmoid).

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Mealings, K. A Scoping Review of the Effects of Classroom Acoustic Conditions on Primary School Children’s Physical Health. Acoust. Aust. 2022, 50, 373–381.

2. Mealings, K. A scoping review of the effects of classroom acoustic conditions on primary school children’s mental wellbeing. Build. Acoust. 2022, 29, 529–542.

3. Wålinder, R.; Gunnarsson, K.; Runeson, R.; Smedje, G. Physiological and Psychological Stress Reactions in Relation to Classroom Noise. Scand. J. Work Environ. Health 2007, 33, 260–266.

4. Klatte, M.; Hellbrück, J.; Seidel, J.; Leistner, P. Effects of Classroom Acoustics on Performance and Well-Being in Elementary School Children: A Field Study. Environ. Behav. 2010, 42, 659–692.

5. Polewczyk, I.; Jarosz, M. Teachers’ and students’ assessment of the influence of school rooms acoustic treatment on their performance and wellbeing. Arch. Acoust. 2020, 45, 401–417.

6. Astolfi, A.; Puglisi, G.E.; Murgia, S.; Minelli, G.; Pellerey, F.; Prato, A.; Sacco, T. Influence of Classroom Acoustics on Noise Disturbance and Well-Being for First Graders. Front. Psychol. 2019, 10, 2736.

7. Dohmen, M.; Braat-Eggen, E.; Kemperman, A.; Hornikx, M. The Effects of Noise on Cognitive Performance and Helplessness in Childhood: A Review. Int. J. Environ. Res. Public Health 2023, 20, 288.

8. Mealings, K. A Scoping Review of the Effect of Classroom Acoustic Conditions on Primary School Children’s Numeracy Performance and Listening Comprehension. Acoust. Aust. 2023, 51, 129–158.

9. Klatte, M.; Lachmann, T.; Meis, M. Effects of noise and reverberation on speech perception and listening comprehension of children and adults in a classroom-like setting. Noise Health 2010, 12, 270–282.

10. Prodi, N.; Visentin, C.; Borella, E.; Mammarella, I.C.; Di Domenico, A. Using speech comprehension to qualify communication in classrooms: Influence of listening condition, task complexity and students’ age and linguistic abilities. Appl. Acoust. 2021, 182, 108239.

11. Brännström, K.J.; Rudner, M.; Carlie, J.; Sahlén, B.; Gulz, A.; Andersson, K.; Johansson, R. Listening effort and fatigue in native and non-native primary school children. J. Exp. Child Psychol. 2021, 210, 105203.

12. Mealings, K. Classroom acoustics and cognition: A review of the effects of noise and reverberation on primary school children’s attention and memory. Build. Acoust. 2022, 29, 401–431.

13. Masullo, M.; Ruggiero, G.; Fernandez, D.A.; Iachini, T.; Maffei, L. Effects of urban noise variability on cognitive abilities in indoor spaces: Gender differences. Noise Vib. Worldw. 2021, 52, 313–322.

14. Sarantopoulos, G.; Lykoudis, S.; Kassomenos, P. Noise levels in primary schools of medium sized city in Greece. Sci. Total Environ. 2014, 482–483, 493–500.

15. Kanu, M.O.; Joseph, G.W.; Targema, T.V.; Andenyangnde, D.; Mohammed, I.D. On the Noise Levels in Nursery, Primary and Secondary Schools in Jalingo, Taraba State: Are they in Conformity with the Standards? Present Environ. Sustain. Dev. 2022, 2, 95–111.

16. Tahsildoost, M.; Zomorodian, Z.S. Indoor environment quality assessment in classrooms: An integrated approach. J. Build. Phys. 2018, 42, 336–362.

17. Yang, D.; Mak, C.M. Relationships between indoor environmental quality and environmental factors in university classrooms. Build. Environ. 2020, 186, 107331.

18. Cao, B.; Ouyang, Q.; Zhu, Y.; Huang, L.; Hu, H.; Deng, G. Development of a multivariate regression model for overall satisfaction in public buildings based on field studies in Beijing and Shanghai. Build. Environ. 2012, 47, 394–399.

19. Wong, L.T.; Mui, K.W.; Hui, P.S. A multivariate-logistic model for acceptance of indoor environmental quality (IEQ) in offices. Build. Environ. 2008, 43, 1–6.

20. Wong, L.T.; Mui, K.W.; Tsang, T.W. Updating Indoor Air Quality (IAQ) Assessment Screening Levels with Machine Learning Models. Int. J. Environ. Res. Public Health 2022, 19, 5724.

21. Zhang, W.; Wu, Y.; Calautit, J.K. A review on occupancy prediction through machine learning for enhancing energy efficiency, air quality and thermal comfort in the built environment. Renew. Sustain. Energy Rev. 2022, 167, 112704.

22. Luo, M.; Xie, J.; Yan, Y.; Ke, Z.; Yu, P.; Wang, Z.; Zhang, J. Comparing machine learning algorithms in predicting thermal sensation using ASHRAE Comfort Database II. Energy Build. 2020, 210, 109776.

23. Chai, Q.; Wang, H.; Zhai, Y.; Yang, L. Using machine learning algorithms to predict occupants’ thermal comfort in naturally ventilated residential buildings. Energy Build. 2020, 217, 109937.

24. Huang, H.B.; Huang, X.R.; Li, R.X.; Lim, T.C.; Ding, W.P. Sound quality prediction of vehicle interior noise using deep belief networks. Appl. Acoust. 2016, 113, 149–161.

25. Wang, R.; Yao, D.; Zhang, J.; Xiao, X.; Xu, Z. Identification of Key Factors Influencing Sound Insulation Performance of High-Speed Train Composite Floor Based on Machine Learning. Acoustics 2024, 6, 1–17.

26. Zhang, E.; Peng, Z.; Zhuo, J. A case study on improving electric bus interior sound quality based on sensitivity analysis of psycho-acoustics parameters. Noise Vib. Worldw. 2023, 54, 460–468.

27. Yeh, C.Y.; Tsay, Y.S. Using machine learning to predict indoor acoustic indicators of multi-functional activity centers. Appl. Sci. 2021, 11, 5641.

28. Bonet-Solà, D.; Vidaña-Vila, E.; Alsina-Pagès, R.M. Prediction of the acoustic comfort of a dwelling based on automatic sound event detection. Noise Mapp. 2023, 10, 20220177.

29. Puyana-Romero, V.; Díaz-Márquez, A.M.; Ciaburro, G.; Hernández-Molina, R. The Acoustic Environment and University Students’ Satisfaction with the Online Education Method during the COVID-19 Lockdown. Int. J. Environ. Res. Public Health 2023, 20, 709.

30. Puyana-Romero, V.; Larrea-Álvarez, C.M.; Díaz-Márquez, A.M.; Hernández-Molina, R.; Ciaburro, G. Developing a Model to Predict Self-Reported Student Performance during Online Education Based on the Acoustic Environment. Sustainability 2024, 16, 4411.

31. The Chartered Institution of Building Services Engineers. CIBSE TM68: Monitoring Indoor Environment Quality; The Chartered Institution of Building Services Engineers: London, UK, 2022.

32. Aryal, A.; Becerik-Gerber, B. Thermal comfort modeling when personalized comfort systems are in use: Comparison of sensing and learning methods. Build. Environ. 2020, 185, 107316.

33. Hagberg, K.G. Evaluating field measurements of impact sound. Build. Acoust. 2010, 17, 105–128.

34. Karmann, C.; Schiavon, S.; Arens, E. Percentage of Commercial Buildings Showing at Least 80% Occupant Satisfied with Their Thermal Comfort. Available online: www.escholarship.org/uc/item/89m0z34x (accessed on 25 May 2024).

35. Tsang, T.W.; Mui, K.W.; Wong, L.T.; Yu, W. Bayesian updates for indoor environmental quality (IEQ) acceptance model for residential buildings. Intell. Build. Int. 2021, 13, 17–32.

36. Ghazal, S.; Aldowah, H.; Umar, I.N. The relationship between acceptance and satisfaction of learning environment system usage in a balanced learning environment. J. Fundam. Appl. Sci. 2018, 10, 858–870.

37. NSW Environment Protection Authority. Noise Guide for Local Government. 2023. Available online: https://www.epa.nsw.gov.au/your-environment/noise/regulating-noise/noise-guide-local-government (accessed on 25 May 2024).

38. Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-Sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357.

39. Akın, P. A new hybrid approach based on genetic algorithm and support vector machine methods for hyperparameter optimization in synthetic minority over-sampling technique (SMOTE). AIMS Math. 2023, 8, 9400–9415.

40. Satriaji, W.; Kusumaningrum, R. Effect of Synthetic Minority Oversampling Technique (SMOTE), Feature Representation, and Classification Algorithm on Imbalanced Sentiment Analysis. In Proceedings of the 2nd International Conference on Informatics and Computational Sciences (ICICoS), Semarang, Indonesia, 30–31 October 2018; pp. 1–5.

41. Ghosh, S.; Dasgupta, A.; Swetapadma, A. A study on support vector machine based linear and non-linear pattern classification. In Proceedings of the 2019 International Conference on Intelligent Sustainable Systems (ICISS), Palladam, India, 21–22 February 2019; pp. 24–28.

42. Ayyadevara, V.K. Gradient Boosting Machine. In Pro Machine Learning Algorithms, 1st ed.; Apress: Berkeley, CA, USA, 2018; pp. 117–134.

43. Ayyadevara, V.K. Logistic Regression. In Pro Machine Learning Algorithms: A Hands-On Approach to Implementing Algorithms in Python and R, 1st ed.; Apress: Berkeley, CA, USA, 2018; pp. 49–69.

44. Ayyadevara, V.K. Random Forest. In Pro Machine Learning Algorithms, 1st ed.; Apress: Berkeley, CA, USA, 2018; pp. 105–116.

45. Chen, S.; Webb, G.I.; Liu, L.; Ma, X. A novel selective naïve Bayes algorithm. Knowl.-Based Syst. 2020, 192, 105361.

46. Tang, S.K. Performance of noise indices in air-conditioned landscaped office buildings. J. Acoust. Soc. Am. 1997, 102, 1657–1663.

47. Ng, W.; Minasny, B.; de Sousa Mendes, W.; Demattê, J.A.M. The influence of training sample size on the accuracy of deep learning models for the prediction of soil properties with near-infrared spectroscopy data. SOIL 2020, 6, 565–578.

48. Bailly, A.; Blanc, C.; Francis, É.; Guillotin, T.; Jamal, F.; Wakim, B.; Roy, P. Effects of dataset size and interactions on the prediction performance of logistic regression and deep learning models. Comput. Methods Programs Biomed. 2022, 213, 106504.

49. Tsangaratos, P.; Ilia, I. Comparison of a logistic regression and Naïve Bayes classifier in landslide susceptibility assessments: The influence of models complexity and training dataset size. Catena 2016, 145, 164–179.

50. Hamida, A.; Zhang, D.; Ortiz, M.A.; Bluyssen, P.M. Indicators and methods for assessing acoustical preferences and needs of students in educational buildings: A review. Appl. Acoust. 2023, 202, 109187.

51. Boudreault, J.; Campagna, C.; Chebana, F. Machine and deep learning for modelling heat-health relationships. Sci. Total Environ. 2023, 892, 164660.

52. Ayyadevara, V.K. Pro Machine Learning Algorithms: A Hands-On Approach to Implementing Algorithms in Python and R; Apress Media LLC: Berkeley, CA, USA, 2018.

53. Steyerberg, E.W. Clinical Prediction Models: A Practical Approach to Development, Validation, and Updating, 2nd ed.; Springer: Cham, Switzerland, 2019.

54. Kelly, A.; Johnson, M.A. Investigating the statistical assumptions of naïve bayes classifiers. In Proceedings of the 2021 55th Annual Conference on Information Sciences and Systems (CISS 2021), Baltimore, MD, USA, 24–26 March 2021; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2021.

55. Osisanwo, F.Y.; Akinsola, J.E.T.; Awodele, O.; Hinmikaiye, J.O.; Olakanmi, O.; Akinjobi, J. Supervised Machine Learning Algorithms: Classification and Comparison. Int. J. Comput. Trends Technol. 2017, 48, 128–138.

56. Kar, A.; Nath, N.; Kemprai, U.; Aman. Performance Analysis of Support Vector Machine (SVM) on Challenging Datasets for Forest Fire Detection. Int. J. Commun. Netw. Syst. Sci. 2024, 17, 11–29.

| Variables | Category | N = 398 | Acoustic Acceptance | Acoustic Satisfaction |
|---|---|---|---|---|
| Occupant-related indicators | | | | |
| Age | | 21.3 (3.5) | t = −3.115 (p = 0.002) | t = −2.224 (p = 0.027) |
| Gender | Female | 190 (48%) | χ² = 3.324 (p = 0.068) | χ² = 1.611 (p = 0.204) |
| | Male | 208 (52%) | | |
| Feeling | Good | 295 (74%) | χ² = 4.775 (p = 0.092) | χ² = 9.831 (p = 0.007) |
| | Neutral | 95 (24%) | | |
| | Bad | 8 (2%) | | |
| BMI | | 20.5 (2.6) | t = −0.033 (p = 0.974) | t = 3.142 (p = 0.002) |
| Room-related indicators | | | | |
| Room type | Group | 228 (57%) | χ² = 8.642 (p = 0.003) | χ² = 0.269 (p = 0.604) |
| | Self | 170 (43%) | | |
| Seat location | Middle | 213 (54%) | χ² = 12.381 (p = 0.002) | χ² = 2.055 (p = 0.358) |
| | Others | 185 (46%) | | |
| Dose-related indicators | | | | |
| LAeq, dB(A) | | 50.1 (6.2) | t = −1.033 (p = 0.302) | t = −2.502 (p = 0.013) |
| LA90, dB(A) | | 49.1 (6.7) | t = −0.806 (p = 0.421) | t = −2.356 (p = 0.019) |
| LA10, dB(A) | | 51.1 (6.0) | t = −1.038 (p = 0.304) | t = −2.483 (p = 0.013) |
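The visible text does not say exactly which tests produced the predictor-screening table above. The t and χ² labels suggest independent-samples t-tests and Pearson χ² tests. As a consistency check, the reported p-values can be recovered from the reported statistics. This works using closed-form chi-square tail probabilities for one and two degrees of freedom, plus a large-sample normal approximation for t (N = 398). The sketch below rests on those assumptions, and the function names are hypothetical. Note that the Seat location p-values match df = 2 rather than df = 1, which may mean the test used more categories than the two shown.

```python
import math

# Sketch under stated assumptions: recover the table's p-values from its
# test statistics. Function names are hypothetical.


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) of a chi-square variable.

    Only the closed forms needed here are implemented:
      df = 1 -> erfc(sqrt(x / 2))
      df = 2 -> exp(-x / 2)
    """
    if x < 0:
        raise ValueError("a chi-square statistic must be non-negative")
    if df == 1:
        return math.erfc(math.sqrt(x / 2.0))
    if df == 2:
        return math.exp(-x / 2.0)
    raise NotImplementedError("only df = 1 or df = 2 is handled in this sketch")


def t_two_sided_p_normal(t: float) -> float:
    """Two-sided p-value for a t statistic via the normal approximation.

    Adequate only for large degrees of freedom, as with N = 398 here.
    """
    return math.erfc(abs(t) / math.sqrt(2.0))


if __name__ == "__main__":
    # Statistics copied from the table; df choices are assumptions.
    checks = [
        ("Gender vs. acceptance", chi2_sf(3.324, 1)),
        ("Room type vs. acceptance", chi2_sf(8.642, 1)),
        ("Feeling vs. acceptance", chi2_sf(4.775, 2)),
        ("Seat location vs. acceptance", chi2_sf(12.381, 2)),
        ("Age vs. acceptance", t_two_sided_p_normal(-3.115)),
    ]
    for label, p in checks:
        print(f"{label}: p = {p:.3f}")
```

For small samples, an exact t distribution (for example, from a statistics library) should replace the normal approximation.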

| Models | Collected | Predicted accepted, n (%) | Predicted unaccepted, n (%) | p * |
|---|---|---|---|---|
| SVM (Sigmoid) | Accepted | 52 (37.7%) | 26 (18.8%) | 0.199 |
| | Unaccepted | 46 (33.3%) | 14 (10.1%) | |
| SVM (RBF) | Accepted | 53 (38.4%) | 25 (18.1%) | <0.001 |
| | Unaccepted | 22 (15.9%) | 38 (27.5%) | |
| NB | Accepted | 60 (43.5%) | 18 (13.0%) | <0.001 |
| | Unaccepted | 16 (11.6%) | 44 (31.9%) | |
| LR | Accepted | 63 (45.7%) | 15 (10.9%) | <0.001 |
| | Unaccepted | 15 (10.9%) | 45 (32.6%) | |
| GBM | Accepted | 67 (48.6%) | 11 (8.0%) | <0.001 |
| | Unaccepted | 13 (9.4%) | 47 (34.1%) | |
| RF | Accepted | 69 (50.0%) | 9 (6.5%) | <0.001 |
| | Unaccepted | 14 (10.1%) | 46 (33.3%) | |
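In the acoustic acceptance confusion matrices above, the totals for the "Accepted" and "Unaccepted" rows are the same for every model (78 and 60). This indicates that rows hold the collected labels and columns hold the predicted ones. Under that reading, the sketch below computes each model's accuracy and a Pearson χ² test of independence without continuity correction. That test reproduces the reported p* values (for example, 0.199 for SVM (Sigmoid)), but it is an assumption that the authors used exactly this test. The function names are hypothetical.

```python
import math

# Counts copied from the acoustic acceptance table, read as
# [[collected accepted   -> predicted accepted, -> predicted unaccepted],
#  [collected unaccepted -> predicted accepted, -> predicted unaccepted]]
ACCEPTANCE = {
    "SVM (Sigmoid)": [[52, 26], [46, 14]],
    "SVM (RBF)": [[53, 25], [22, 38]],
    "NB": [[60, 18], [16, 44]],
    "LR": [[63, 15], [15, 45]],
    "GBM": [[67, 11], [13, 47]],
    "RF": [[69, 9], [14, 46]],
}


def accuracy(cm):
    """Share of cases on the main diagonal, i.e. correct predictions."""
    (a, b), (c, d) = cm
    return (a + d) / (a + b + c + d)


def chi2_2x2(cm):
    """Pearson chi-square test of independence for a 2x2 table, no continuity correction.

    Returns (statistic, p_value). With df = 1 the upper-tail probability
    is erfc(sqrt(statistic / 2)).
    """
    (a, b), (c, d) = cm
    n = a + b + c + d
    margins = (a + b) * (c + d) * (a + c) * (b + d)
    if margins == 0:
        raise ValueError("a row or column total is zero; the test is undefined")
    stat = n * (a * d - b * c) ** 2 / margins
    return stat, math.erfc(math.sqrt(stat / 2.0))


if __name__ == "__main__":
    for name, cm in ACCEPTANCE.items():
        stat, p = chi2_2x2(cm)
        print(f"{name:14s} accuracy={accuracy(cm):.3f} chi2={stat:6.2f} p={p:.3g}")
    mean_acc = sum(accuracy(cm) for cm in ACCEPTANCE.values()) / len(ACCEPTANCE)
    print(f"mean accuracy over the six models: {mean_acc:.2f}")
```

Averaging the six accuracies gives 598/828 ≈ 0.72, which matches the acceptance accuracy quoted in the Conclusions, although the text does not state that the figure is an average. If a model predicts only one class, a column total is zero and the statistic is undefined. The helper raises an error in that case, which is consistent with the "/" reported for SVM (Sigmoid) in the satisfaction table.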

| Models | Collected | Predicted satisfied, n (%) | Predicted dissatisfied, n (%) | p * |
|---|---|---|---|---|
| SVM (Sigmoid) | Satisfied | 51 (54.3%) | 0 (0%) | / |
| | Dissatisfied | 43 (45.7%) | 0 (0%) | |
| SVM (RBF) | Satisfied | 24 (25.5%) | 19 (20.2%) | 0.221 |
| | Dissatisfied | 22 (23.4%) | 29 (30.9%) | |
| NB | Satisfied | 32 (34.0%) | 11 (11.7%) | 0.002 |
| | Dissatisfied | 22 (23.4%) | 29 (30.9%) | |
| LR | Satisfied | 25 (26.6%) | 18 (19.1%) | 0.208 |
| | Dissatisfied | 23 (24.5%) | 28 (29.8%) | |
| GBM | Satisfied | 28 (29.8%) | 15 (16.0%) | 0.001 |
| | Dissatisfied | 16 (17.0%) | 35 (37.2%) | |
| RF | Satisfied | 30 (31.9%) | 13 (13.8%) | <0.001 |
| | Dissatisfied | 18 (19.1%) | 33 (35.1%) | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, D.; Mui, K.-W.; Masullo, M.; Wong, L.-T. Application of Machine Learning Techniques for Predicting Students’ Acoustic Evaluation in a University Library. Acoustics 2024, 6, 681-697. https://doi.org/10.3390/acoustics6030037


Chicago/Turabian Style: Zhang, Dadi, Kwok-Wai Mui, Massimiliano Masullo, and Ling-Tim Wong. 2024. "Application of Machine Learning Techniques for Predicting Students’ Acoustic Evaluation in a University Library" Acoustics 6, no. 3: 681-697. https://doi.org/10.3390/acoustics6030037
