Abstract

Recent studies suggest that real-time auditory feedback is an effective method to facilitate motor learning. The evaluation of the parameter mapping (sound-to-movement mapping) is a crucial, yet frequently neglected step in the development of audio feedback. We therefore conducted two experiments to evaluate audio parameters with target finding exercises designed for balance training. In the first experiment with ten participants, five different audio parameters were evaluated on the X-axis (mediolateral movement). Following that, in a larger experiment with twenty participants in a two-dimensional plane (mediolateral and anterior-posterior movement), a basic and synthetic audio model was compared to a more complex audio model with musical characteristics. Participants were able to orient themselves and find the targets with the audio models. In the one-dimensional condition of experiment one, percussion sounds and synthetic sound wavering were the overall most effective audio parameters. In experiment two, the synthetic model was more effective and better evaluated by the participants. In general, basic sounds were more helpful than complex (musical) sound models. Musical abilities and age were correlated with certain exercise scores. Audio feedback is a promising approach for balance training and should be evaluated with patients. Preliminary evaluation of the respective parameter mapping is highly advisable.

1. Introduction

Concurrent external (augmented) feedback has been shown to accelerate the learning of motor skills by providing additional information about the movement process [1,2,3]. Research on augmented feedback for movement training has focused mostly on the haptic and visual modalities, whereas audio feedback (AFB) has remained largely unconsidered [1,4]. AFB approaches are based on transforming dynamic and kinematic motion parameters into distinct sound components (e.g., pitch, loudness, rhythm, timbre).

A growing body of research suggests that auditory information has a profound effect on the motor system. Neuroimaging research has revealed a broadly distributed neuroanatomical network connecting the auditory and motor systems [5]. Recent findings show that strong auditory-motor associations develop during sound-making experiences, which supports the use of AFB to enhance sensorimotor representations and facilitate movement (re)acquisition [5]. In recent years, real-time AFB, often termed sonification [6], has been investigated more widely in upper-limb rehabilitation [5,7], but considerably less in balance training.

However, external feedback that provides too much information can cause a dependency that is detrimental to long-term learning success, the so-called guidance effect [8,9]. To the detriment of retention, the learner often relies on the feedback source that appears most reliable (external or internal) and may neglect internal sources of sensory information, such as deep sensitivity or proprioception [10]. Although the exact mechanisms remain a matter of debate, AFB appears to have advantages for long-term learning and retention compared with more dominant forms of external feedback such as visual information. For instance, Fujii et al. [11] and Ronsse et al. [12] found that participants who received visual feedback (VFB) performed worse during retention testing than participants who were given AFB. The assumption that the effects of feedback can vary with the sensory modality in which it is provided is relatively new [10,13].

In general, visual perception is recognized to be dominant over other sensory modalities, as demonstrated by the so-called Colavita visual dominance effect: people often fail to perceive or respond to an auditory signal when they have to respond to a simultaneously presented visual signal [14,15]. AFB might promote increased reliance on internal sources of information (such as proprioception) during training [10,12,16]. Unlike dominant forms of VFB, AFB distracts learners less from their natural perception of motion [10,17]. Additionally, recent studies suggest that auditory information can substitute for a loss of proprioceptive information in motor learning tasks [13,18,19]. Concerning the integration of auditory and proprioceptive information, Hasegawa et al. [20] proposed that the auditory system could create a challenging learning situation that might increase the favorable reliance on the proprioceptive system. In their study, training with AFB led to robust, retainable improvements in postural control. More recently, studies have suggested that perceptual dominance is task-specific and depends on which modality provides higher sensitivity and reliability for the specific task [21].

When providing additional information such as continuous movement sonification, questions naturally arise regarding the application parameters, such as feedback frequency, accuracy, or sensory modality. Once the nature of the movement task has been determined, creating AFB involves mapping the data source(s) to the representational acoustic variables. A combination of theoretically derived criteria and usability testing should be used to ensure that the listener understands the message that AFB designers aim to convey [22]. Therefore, the ideal parameter mapping (i.e., the appropriate relationship between body position and sound) has to be carefully developed for specific tasks such as balance training. Mappings that make sense to the researcher do not necessarily make sense to the user [23]. Regrettably, many studies provide little or no information about the characteristics and design of their sound mappings [24], and only a small percentage of relevant publications properly evaluate their AFB mappings [10,25,26].

There is an almost infinite number of ways to convert data into sound, and no established framework for orientation exists yet. The parameter mapping (the sound-movement connection) can be the “make or break” factor for an AFB intervention [10]. Our goal was therefore to evaluate the parameter mapping of different AFB models for device-supported balance exercises. We present the results of two experiments in which we aimed to identify the most effective and intuitive audio parameters and to control for the influence of age and musical ability on the training scores.

2. Materials and Methods


2.1. Subjects

In the first experiment, a convenience sample of 10 healthy subjects participated. Subjects were aged 21 to 36 years (mean (M) = 25.40, standard deviation (SD) = 4.43). All subjects were male students of the University of Applied Sciences Kempten. None of the participants had mental or physical/mobility limitations.

In the second experiment, 20 participants (age M = 43.00 years, SD = 13.78; female/male = 7/13) were recruited. We recruited subjects from a broader age range to better control for the influence of personal factors. All participants had normal vision without color blindness, no hearing problems, no neurological or psychiatric disorders, and no mobility limitations.

In both experiments, written informed consent was obtained, and participants were informed about their right to terminate the experiment at any time. The tests were carried out in accordance with the ethical guidelines of the Declaration of Helsinki and the ethics board of the institution where the experiments took place. The tests took place in the laboratory for technical acoustics at the University of Applied Sciences Kempten, Germany.

2.2. Experimental Setup

2.2.1. Hardware

The training device was the CE-certified, commercially available THERA-Trainer “coro” (Medica Medizintechnik GmbH, Hochdorf, Germany; Figure 1). It is a dynamic balance trainer that provides a fall-safe environment. The user is firmly fixed at the pelvis, and the maximum deflection of the body’s center of gravity is 12 degrees in each direction. Detailed information about the balance trainer is given in Matjačić et al. [27].

Figure 1.

The balance training system THERA-Trainer “coro” (source: Medica Medizintechnik GmbH).

A Bluetooth position sensor, attached to a steel bar of the device next to the user’s pelvis, tracks the current position of the body’s center of gravity. The sensor data are processed in real time on a computer. Depending on the task, participants received either continuous audiovisual or audio-only feedback. The visual feedback component was displayed on a screen (Figure 1). Over-ear headphones (AKG K701) were used for the audio display. In addition to the basic setup of experiment one (E1), a foam mat was used in experiment two (E2) for participants to stand on, adding proprioceptive difficulty to the exercises.

2.2.2. Software and Sound Generation

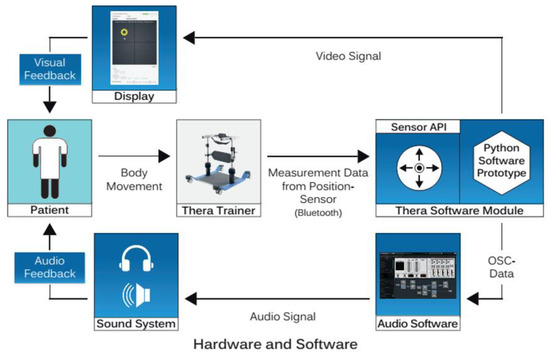

To accelerate prototype development, the sonification models were first created in Pure Data [28] and later in the software Reaktor 6 [29]. Both are visual programming environments, so changes can be realized quickly. The final software was implemented in Python 3.4 (python.org). The sensor data were first transferred to the main program via an API. This program handled the control of the training process, preliminary data filtering, and the visual and acoustic representations. Movement data were transferred for sound synthesis via the OSC (Open Sound Control) network protocol [30].
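The OSC transfer mentioned above can be illustrated with a minimal encoder. This sketch assumes that each movement update is sent as one OSC 1.0 message carrying two 32-bit floats; the address `/cog`, the host, and the port are hypothetical, since the paper does not report its OSC namespace. It uses only the Python standard library rather than a dedicated OSC package.

```python
import socket
import struct


def _osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires for strings."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats (type tag 'f')."""
    type_tags = "," + "f" * len(values)
    # OSC numeric arguments are big-endian.
    payload = b"".join(struct.pack(">f", v) for v in values)
    return _osc_pad(address.encode("ascii")) + _osc_pad(type_tags.encode("ascii")) + payload


def send_position(sock: socket.socket, x: float, y: float,
                  host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send the current center-of-gravity position to a (hypothetical) synthesis process."""
    sock.sendto(encode_osc_message("/cog", x, y), (host, port))
```

For example, `send_position(socket.socket(socket.AF_INET, socket.SOCK_DGRAM), 0.12, -0.40)` would emit one UDP datagram per sensor update. Sending small, self-contained datagrams suits this kind of control stream, because a lost update is simply superseded by the next one.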

To generate audio in real time, the audio samples were computed in a callback function. A callback is a function that is passed to another piece of code (here, the audio output stream), which then calls it back (executes it) whenever that code needs the next block of data. The callback interface permitted a maximum delay of about 12 ms from the sensor input to the sound card. Two different approaches were used to calculate the individual audio samples. For the synthetic sound models, a sine wave was generated in the audio callback function and then modulated according to the participant’s movement. For the musical models, high-quality sound samples were used; these were initially stored in matrices and then read out and adjusted before being passed to the audio output stream, similar to a conventional sampler. An overview of the entire structure is shown in Figure 2:

Figure 2.

Experimental setup of hardware, software, and feedback.

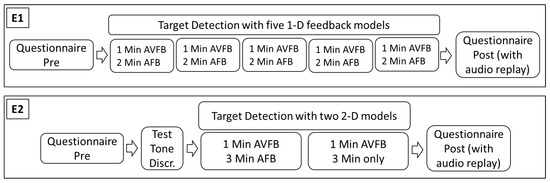
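The callback principle described in Section 2.2.2 can be sketched as follows. This is a minimal illustration, not the study’s implementation: the sample rate, block size, frequency range, and linear position-to-frequency mapping are assumptions, and the callable here simply returns a list of samples, whereas a real audio library would invoke its callback with its own signature and an output buffer to fill. The oscillator keeps its phase between calls so that consecutive blocks join without clicks.

```python
import math

SAMPLE_RATE = 44100  # assumed; not reported in the study
BLOCK_SIZE = 256     # assumed number of frames per callback


class SineCallback:
    """Phase-continuous sine oscillator whose frequency follows the latest position."""

    def __init__(self, base_freq=440.0, freq_span=220.0, sample_rate=SAMPLE_RATE):
        self.base_freq = base_freq      # frequency at position 0 (assumed)
        self.freq_span = freq_span      # deviation at position +/-1 (assumed)
        self.sample_rate = sample_rate
        self.phase = 0.0
        self.position = 0.0             # latest normalized position in [-1, 1]

    def update_position(self, position):
        # Called from the sensor side; clamp so outliers cannot produce extreme pitches.
        self.position = max(-1.0, min(1.0, position))

    def __call__(self, frames):
        """Return the next `frames` samples; an audio driver would call this repeatedly."""
        freq = self.base_freq + self.freq_span * self.position
        step = 2.0 * math.pi * freq / self.sample_rate
        block = []
        for _ in range(frames):
            block.append(math.sin(self.phase))
            self.phase = (self.phase + step) % (2.0 * math.pi)
        return block


def buffer_latency_ms(block_size=BLOCK_SIZE, n_buffers=2, sample_rate=SAMPLE_RATE):
    """Output-buffering delay contributed by n_buffers blocks of audio."""
    return 1000.0 * block_size * n_buffers / sample_rate
```

With these assumed settings, `buffer_latency_ms()` gives about 11.6 ms for double buffering. This shows how block size and sample rate bound the delay; it is not a reconstruction of how the reported value of about 12 ms arose. Smaller blocks lower the delay but leave less time per callback, which is one reason to keep the per-sample work inside the callback minimal.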

2.3. Procedure (Experimental Protocol)

After reading the experimental timetable and signing the informed consent form, participants filled out a short questionnaire covering sociodemographic, health-related, and balance-related questions, as well as their musical background (years of musical training). After participants entered the training device (Figure 1), the height of the two sidebars was adjusted, and the safety belt was strapped on and tightened. Participants were asked to stand with their feet shoulder-width apart and to rest their hands on the horizontal bar in front of them.

The main goal of the exercises was to identify, approach, and enter a target area (i.e., reach the target area sound) using the feedback information, as quickly and as often as possible. The target position changed randomly along the X-axis (mediolateral movement) in E1, or within the two-dimensional plane (with additional anterior-posterior movement) in E2. Participants had to find the target position by following the audiovisual or audio-only feedback signals and shifting their center of gravity accordingly. Once the target was reached, participants had to remain within the target area for three seconds to successfully complete the respective round of goal finding.
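The completion rule (three seconds of uninterrupted presence in the target area) can be expressed as a small function over timestamped position samples. The sketch assumes a circular target as in E2 (for the one-dimensional targets of E1, `contains` would compare only the x coordinate) and assumes that leaving the area resets the timer; the names `CircularTarget` and `time_to_completion` are illustrative, not taken from the study’s software.

```python
import math
from dataclasses import dataclass

HOLD_SECONDS = 3.0  # dwell time required by the protocol


@dataclass
class CircularTarget:
    """Circular target area as in E2 (coordinates in arbitrary position units)."""
    x: float
    y: float
    radius: float

    def contains(self, px, py):
        return math.hypot(px - self.x, py - self.y) <= self.radius


def time_to_completion(samples, target, hold=HOLD_SECONDS):
    """Return the time at which the participant has been inside the target for `hold`
    seconds without interruption, or None if that never happens.

    `samples` is an iterable of (t, x, y) tuples with increasing timestamps t in seconds.
    """
    entered_at = None
    for t, x, y in samples:
        if target.contains(x, y):
            if entered_at is None:
                entered_at = t  # a (re-)entry starts a new dwell interval
            if t - entered_at >= hold:
                return t
        else:
            entered_at = None  # leaving the area resets the dwell timer
    return None
```

Under this rule, a brief pass through the target does not count as a completion; only a settled three-second stay does.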

The training with each sound model was divided into two parts. First, the exercise was performed for one minute with audiovisual feedback (AVFB). The current position was displayed as a line (E1) or a dot (E2) on the screen, and the target area was shown as a yellow-shaded block (E1) or circle (E2); see Figure 3. Thereafter, the task had to be carried out with acoustic guidance only, for two minutes (E1) or three minutes (E2).

In addition to the protocol of E1, a preliminary musical ability test was conducted in E2 to examine the potential influence of musical ability on participants’ training scores and to better control for inter-individual variability. This short test (max. two minutes), made by radiologist Jake Mandell (http://jakemandell.com/adaptivepitch), roughly characterizes a person’s overall pitch perception ability. Pairs of short tones were played, and participants indicated whether the second tone was higher or lower than the first.

To reduce order and fatigue effects, the models were presented in quasi-randomized order in both experiments, meaning that the sequence of the sound models was counterbalanced across all subjects. After completing the exercises, the sound patterns were briefly played back as a reminder for the subjective evaluation. At the end of the experiment, participants completed the final questionnaire and could add remarks if desired. An overview of the study procedure is shown in Figure 4.
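The paper does not state which counterbalancing scheme was used. One common way to balance presentation order is a balanced (Williams-type) Latin square; for an odd number of conditions, such as the five models of E1, it needs twice as many sequences as conditions (10), which happens to match the ten participants of E1. The sketch below, with illustrative model labels, builds such sequences; it is an assumed scheme, not a description of the authors’ procedure.

```python
MODELS = ["pitch", "wavering", "percussion", "timbre", "stereo"]


def balanced_latin_square(conditions, participant):
    """Presentation order for one participant in a Williams-type balanced Latin square.

    The row pattern 0, 1, n-1, 2, n-2, ... is shifted by the participant index; for an
    odd number of conditions, every second row is reversed, so 2n participants are
    needed for full balance.
    """
    n = len(conditions)
    order = []
    low, high = 0, 0
    for i in range(n):
        if i < 2 or i % 2 != 0:
            val = low
            low += 1
        else:
            val = n - high - 1
            high += 1
        order.append(conditions[(val + participant) % n])
    if n % 2 != 0 and participant % 2 != 0:
        order.reverse()
    return order
```

For example, `balanced_latin_square(MODELS, 0)` yields `['pitch', 'wavering', 'stereo', 'percussion', 'timbre']`, and across participants 0 to 9 each model appears exactly twice in every position.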

2.4. Audio Parameters

The selection of audio parameters was based on a literature review that included, e.g., the work of Dubus and Bresin [25], who reviewed and analyzed 179 scientific publications related to sonification and AFB. We searched the literature for publications and patents in the field of AFB for motor learning (e.g., [7,31,32]) and balance improvement (e.g., [33,34,35]). Furthermore, we considered a design framework by Ludovico [36] and recommendations by Walker [37], who emphasized sound-data synchronization, appropriate polarities, and scaling in light of the specific task.

In addition to the literature review, we discussed the audio parameters in a group of experts consisting of a music therapist (M.A.), an electrical engineer (Ph.D.), a software programmer (B.A.), and a physical therapist (B.A.). Because of the multifaceted nature of sonification research, many authors recommend interdisciplinary collaboration to develop auditory displays that are as natural, pleasant, motivating, and efficient as possible [38].

2.4.1. Experiment 1

In the first experiment, we compared five acoustic parameters separately. Each model used only one audio parameter and only the mediolateral (X-)axis, because the horizontal axis is less researched in the sonification literature and people’s cone of stability is more symmetrical in the mediolateral direction. This allowed for an equally weighted evaluation of the left/right audio polarities.

When musical sounds such as piano notes were used, we applied discrete tonal steps from the diatonic system, akin to a musical instrument. Synthetic sounds were mapped continuously, analogous to non-musical sonification in elite sports (e.g., [39]).
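The difference between the discrete (musical) and continuous (synthetic) mappings can be made concrete. In the sketch below, a normalized position in [0, 1] is quantized to the steps of a C major scale for the musical case and mapped exponentially between two frequencies for the synthetic case; the scale, note range, and frequency limits are assumptions for illustration only.

```python
import math

# Semitone offsets of the diatonic (major) scale within one octave.
MAJOR_SCALE_STEPS = [0, 2, 4, 5, 7, 9, 11]


def midi_to_hz(note):
    """Equal-tempered frequency of a MIDI note number (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


def diatonic_note(position, low_note=60, n_steps=15):
    """Quantize a normalized position in [0, 1] to one of n_steps diatonic notes."""
    position = min(max(position, 0.0), 1.0)
    step = round(position * (n_steps - 1))
    octave, degree = divmod(step, len(MAJOR_SCALE_STEPS))
    return low_note + 12 * octave + MAJOR_SCALE_STEPS[degree]


def continuous_freq(position, low_hz=220.0, high_hz=880.0):
    """Map a normalized position in [0, 1] exponentially between two frequencies."""
    position = min(max(position, 0.0), 1.0)
    return low_hz * (high_hz / low_hz) ** position
```

`diatonic_note(0.5)` returns 72 (C5): nearby positions share the same note until the next scale step is reached. `continuous_freq(0.5)` returns 440.0 Hz and changes with every small movement. The exponential form is chosen so that equal position changes give roughly equal pitch steps, since pitch perception is approximately logarithmic in frequency.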

The following audio parameters were implemented for the five models of E1: (1) modulation of the pitch of piano notes, (2) modulation of a synthetic sound by periodicity (wavering), (3) modulation of percussive musical samples, (4) modulation of timbre/brightness of musical sounds, and (5) stereo modulation (panning) of a percussive sample, see Table 1:

Table 1.

Sound characteristics of the one-dimensional models in E1.

All described sound modulations increased or decreased continuously with the relative distance to the target area, following the movement of the participant. A clapping sound was played after a goal finding was successfully completed.

2.4.2. Experiment 2

For the second series of tests, two structurally similar 2D sound models were used for the targeting exercises. A basic, synthetically generated AFB model and a more complex, musical AFB model were used to provide information about the center of gravity in the previously defined two-dimensional space (X- and Y-axis). Strictly speaking, the front/back direction in three-dimensional space would be the Z-axis, but we termed this movement direction the Y-axis, in line with the visual display on the screen.

“Synthetic” model:

- The Y-axis was signaled by band-pass-filtered white noise (an audio signal with equal intensity across frequencies) whose center frequency increased with anterior and decreased with posterior movement.

- The X-axis was signaled by synthetic modulation of frequency or amplitude (vibrato or tremolo, respectively; similar to the model “wavering” of E1).

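“Musical” model:

- The Y-axis was signaled by piano notes in discrete diatonic steps, with a moving position tone played relative to a static reference tone.

- The X-axis was signaled by the modulation of percussive musical samples (similar to the model “percussion” of E1).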

Compared to E1, the only new acoustic parameter was the white noise on the Y-axis of the synthetic model. To stay acoustically congruent within the models and to explore the specifics of musical and non-musical sound environments, we compared a synthetically generated model with a structurally comparable, sample-based musical model. Since the existing literature indicates that pitch is best matched to the vertical axis [25], we used band-pass-filtered white noise in the synthetic model, altering the center frequency of the filter to emphasize higher or lower frequencies (as the synthetic counterpart to pitch in the musical model). The timbre and stereo-panning parameters of E1 were excluded from E2 because of their inferior performance and the participants’ assessments (see the results and discussion of E1).

Since the target had to be located in the two-dimensional plane, several parameters had to be superimposed for “mixed” directions (e.g., moving forward and to the right at the same time). The goal was to order and combine these parameters so that the models remained intuitive and the audio signal (relative distance and direction to the target area) remained clear. In the synthetic model, the target area was acoustically characterized by silence; in the musical model, by a target sound (tambourine).
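The superposition of the two axes in the synthetic model, including silence inside the target area, can be sketched as a function from the current offset to a set of synthesis parameters. All numeric ranges, the octave scaling of the noise band, and in particular the choice of which lateral side is signaled by tremolo and which by vibrato are hypothetical. The paper only states that frequency or amplitude modulation coded the X-axis and that volume and modulation increased with distance from the target.

```python
import math


def synthetic_model_params(dx, dy, target_radius=0.1, noise_center_hz=1000.0,
                           octaves_per_unit=2.0, max_mod_depth=1.0):
    """Map the offset from the target to parameters of a hypothetical 2D synthetic model.

    dx, dy: current position minus target center, normalized so that 1.0 is the
    largest offset (assumed scaling). Positive dy means too far anterior.
    """
    distance = math.hypot(dx, dy)
    if distance <= target_radius:
        # Inside the target area the synthetic model is silent.
        return {"gain": 0.0, "noise_center_hz": None, "mod_type": None, "mod_depth": 0.0}
    # Y-axis: the band-pass center frequency rises when too far anterior and falls
    # when too far posterior (exponential, so equal offsets give equal pitch steps).
    center = noise_center_hz * 2.0 ** (octaves_per_unit * dy)
    # X-axis: modulation depth grows with the lateral offset; the side-to-type
    # assignment below is a hypothetical choice.
    if dx == 0:
        mod_type = None
    else:
        mod_type = "tremolo" if dx < 0 else "vibrato"
    mod_depth = max_mod_depth * min(abs(dx), 1.0)
    # Overall loudness increases with the distance to the target.
    gain = min(distance, 1.0)
    return {"gain": gain, "noise_center_hz": center, "mod_type": mod_type, "mod_depth": mod_depth}
```

A diagonal offset such as `synthetic_model_params(0.3, -0.2)` yields both a lowered noise band and a lateral modulation at the same time, which is the kind of superposition described for “mixed” directions.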

2.5. Outcome Measures

Primary outcomes were derived from the recorded raw sensor data of the body movements. We extracted the average time needed for target detection (TD) and the accuracy/postural sway within the target area (TS).

Additionally, in E2, we extracted the real (effectively covered) and ideal distances per target detection. For further statistical analysis, we subtracted the ideal from the real distances to isolate the additional, “redundant” movement distance (ADD).
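The ADD measure follows directly from this definition. The sketch assumes that the real distance is the summed Euclidean length of the sampled path and that the ideal distance is the straight line from the starting position to the target center; whether the authors measured to the center or to the edge of the target area is not stated.

```python
import math


def path_length(points):
    """Real distance: summed Euclidean length of consecutive (x, y) samples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def additional_distance(points, target):
    """ADD = real path length minus the straight-line (ideal) distance to the target."""
    real = path_length(points)
    ideal = math.dist(points[0], target)
    return real - ideal
```

A path that detours along two sides of a 3-4-5 triangle, for instance, covers 7 units where 5 would suffice, giving an ADD of 2.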

TD was measured in seconds. To measure distances and TS, we calculated a score between 0 and 100 relative to the degrees of tilt measured by the sensor. Distances and TS were calculated as mean values per target detection during the audiovisual and audio-only training, and separately for the two movement dimensions, mediolateral (X-axis) and anterior-posterior (Y-axis).
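The 0–100 score can be illustrated with a linear mapping of tilt onto the device’s range of ±12 degrees (Section 2.2.1). Both the linearity and the centering at 50 are assumptions, as is the TS definition in the second function (mean distance of the samples from their own mean position during the hold phase); the paper does not specify either formula.

```python
import math

MAX_TILT_DEG = 12.0  # maximum deflection per direction reported for the device


def tilt_to_score(tilt_deg, max_tilt=MAX_TILT_DEG):
    """Linearly map a tilt in [-max_tilt, +max_tilt] degrees to a 0-100 score (50 = upright)."""
    tilt_deg = max(-max_tilt, min(max_tilt, tilt_deg))
    return 50.0 * (tilt_deg / max_tilt + 1.0)


def target_sway(hold_samples):
    """Mean Euclidean distance of (x, y) score samples from their centroid (one possible TS measure)."""
    n = len(hold_samples)
    cx = sum(x for x, _ in hold_samples) / n
    cy = sum(y for _, y in hold_samples) / n
    return sum(math.hypot(x - cx, y - cy) for x, y in hold_samples) / n
```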

Additionally, we collected subjects’ subjective evaluations of the sound models. Using visual analog scales (VAS) ranging from 0 to 10 (10 being the best score), participants rated each model regarding (A) how pleasant and (B) how helpful they perceived it to be while performing the target finding. In E2, we additionally asked which of the target area sounds (silence in the synthetic model or a sound in the musical model) was perceived as more helpful.

The pitch discrimination test yielded a score of pitch discrimination ability in Hertz. The score describes the smallest frequency difference that a participant can reliably distinguish from a 500 Hz reference tone (the first tone of each pair, from which the second tone differs); lower scores therefore indicate finer discrimination.
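Because the threshold is given in Hertz at a fixed 500 Hz reference, it can be converted to cents (hundredths of an equal-tempered semitone) for comparison with musical intervals, using cents = 1200 * log2((500 + Δf) / 500). The helper below is only an interpretive aid and not part of the original analysis.

```python
import math

REFERENCE_HZ = 500.0  # reference tone of the pitch discrimination test


def threshold_in_cents(delta_hz, reference_hz=REFERENCE_HZ):
    """Convert a frequency-difference threshold at the reference tone into cents."""
    return 1200.0 * math.log2((reference_hz + delta_hz) / reference_hz)
```

Applied to the E2 group mean of 7.20 Hz (Section 3.2.3), `threshold_in_cents(7.20)` gives roughly 24.8 cents, i.e., about a quarter of a semitone. Converting the mean is not identical to averaging individually converted thresholds.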

2.6. Data Analysis

Raw data were collected and processed for analysis with Python 2.7 scripts (www.python.org). Statistical analysis was conducted using SPSS Version 20. Means and standard deviations were calculated. Shapiro–Wilk tests showed that the data were not normally distributed.

Friedman tests (for the five sound models of E1) and paired Wilcoxon signed-rank tests were used to detect significant differences between sound models and between feedback conditions (with and without VFB) in both experiments. Subjective evaluations of the sound models were compared in the same way. In E1, we additionally ordered the results of the sound models chronologically; Friedman tests were then conducted to check for significant differences in trial duration across the five time points, and the first trial was compared with the last trial using Wilcoxon tests. Spearman’s rank correlation coefficient was used to test for correlations between the raw (movement) data and personal factors (age and musicality).
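The analyses were run in SPSS. To make the underlying logic explicit, the sketch below implements the Friedman statistic (without a correction for tied ranks), Spearman’s rho via average ranks, and the chi-square upper tail for even degrees of freedom in plain Python. In practice, `scipy.stats.friedmanchisquare`, `scipy.stats.wilcoxon`, and `scipy.stats.spearmanr` provide tested implementations, including p values.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values replaced by their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def friedman_chi2(data):
    """Friedman statistic; data has one row per subject and one column per condition."""
    n, k = len(data), len(data[0])
    rank_sums = [0.0] * k
    for row in data:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    return 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability; this closed form holds for even df only."""
    if df % 2:
        raise ValueError("closed form requires even degrees of freedom")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)
```

As a plausibility check, `chi2_sf_even_df(9.6, 4)` evaluates to about 0.048, consistent with the p value reported for the E1 comparison of TD across the five models (Section 3.1.1).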

3. Results

3.1. Experiment 1

3.1.1. Movement Data

TD differed significantly across the five sound models without visual support (χ2(4) = 9.6, p = 0.048), with the models wavering and percussion having the shortest and timbre and stereo the longest average times (Table 2). Averaged over the five trials, TD was significantly shorter (z = −2.80, p = 0.005) and total TS significantly smaller (z = −2.80, p = 0.005) with AVFB than with AFB alone.

Chronologically, mean trial durations decreased gradually across the five time points, although the Friedman tests did not reach significance (with visual support, p = 0.081; audio-only, p = 0.086). Mean TDs were higher with AFB at the start and showed a larger reduction than with AVFB. TD was significantly higher in the first trial than in the fifth, both with AVFB (z = −2.19, p = 0.028) and with AFB (z = −2.29, p = 0.022).

TS differences across models were highly significant in the AFB setting (χ2(4) = 21.7, p < 0.001), with stereo having the largest and wavering and percussion the smallest relative TS (Table 2).

3.1.2. Subjective Evaluation

Mean VAS ratings of perceived pleasantness ranged from 3.40 (wavering) to 7.99 (stereo) and differed significantly across the sound models (χ2(4) = 14.3, p = 0.006). Mean VAS ratings of helpfulness did not differ significantly across models (χ2(4) = 4.90, p = 0.299), with timbre receiving the lowest (5.50) and pitch the highest rating (7.57). VAS results for both experiments are given in Table 3.

3.2. Experiment 2

3.2.1. Movement Data

With AFB, the average TD was significantly shorter with the synthetic model than with the musical model (z = −2.22, p = 0.020). With AVFB, average TD was very similar in the two models (z = −0.19, p = 0.852). In both models, average TD was significantly shorter with visual support than with audio alone (p < 0.001); see Table 2.

No statistically significant difference in TS was found between the two models, either with or without visual support (Table 2). Within both models, TS was significantly smaller with AVFB than with AFB (p < 0.001).

Real distances were significantly larger than ideal distances (p < 0.001). ADD was descriptively smaller in the synthetic model than in the musical model, both with visual support (z = −0.93, p = 0.351) and without visual support (z = −1.53, p = 0.126), although neither difference was significant; see Table 2. ADD was significantly larger with AFB than with AVFB in both models (each model: z = −3.82, p < 0.001).

In general, distances on the X-axis were significantly larger than on the Y-axis, both with AVFB (z = −3.10, p = 0.002) and with AFB (z = −2.28, p = 0.023), with no significant differences between the sound models. The results of the movement data are shown in Table 2.

3.2.2. Subjective Evaluation

The synthetic model was rated as significantly more helpful (z = −2.13, p = 0.033). No significant difference was found regarding perceived pleasantness (z = −0.79, p = 0.433).

Regarding the acoustic signaling of the target area, silence (in the target area of the synthetic model) was rated as significantly more helpful than target indication through a specific sound (in the target area of the musical model; z = −2.07, p = 0.038).

3.2.3. Correlations

There were strong correlations between TD and ADD with AFB (but not with AVFB) in both models (synthetic: rs = 0.86, p < 0.001; musical: rs = 0.84, p < 0.001).

The pitch discrimination score (herein called musicality; M = 7.20 Hz, SD = 6.85) did not correlate with TD with visual support in either model, but it was positively correlated with TD without visual support (synthetic: rs = 0.59, p = 0.006; musical: rs = 0.41, p = 0.085, not significant). Regarding TS, musicality was related to less TS without visual support in the musical model (rs = 0.46, p = 0.048), but not to TS in the other conditions. Musicality was correlated with the duration of participants’ active musical training (e.g., playing an instrument), rs = 0.60, p = 0.005.

Participant age was positively correlated with TD in all conditions, both with visual support (synthetic: rs = 0.49, p = 0.028; musical: rs = 0.43, p = 0.060) and without (synthetic: rs = 0.43, p = 0.061; musical: rs = 0.50, p = 0.029), although only two of these four correlations reached significance. Furthermore, age was related to more TS, but only in the conditions with AVFB (synthetic: rs = 0.39, p = 0.086; musical: rs = 0.50, p = 0.025).

Age and the pitch discrimination score were weakly and non-significantly correlated (rs = 0.33, p = 0.153), with pitch discrimination ability tending to decrease with age.

4. Discussion

This research aimed to find the most effective audio parameters for balance training with a commercially available training device. In light of our research questions, the main results are:

- In E1, wavering and percussion were the most successful parameters. Overall, the percussive sound model was the most effective and intuitive both in quantitative and in VAS data.

- In E2, participants were quicker in TD with the synthetic model, which was also rated as more helpful; ADD was descriptively, but not significantly, smaller with this model.

- With AVFB, TD was shorter, and both movement and postural accuracy were superior, with similar values regardless of the underlying sound model and the experiment.

- Irrespective of the feedback, participants in E2 were moving more on the X-axis (mediolateral movement), and movement accuracy was superior on the Y-axis, with and without visual support.

- Higher musicality was associated with better results for TD and TS, but only without VFB.

- Increased age was associated with longer TD in all conditions.

After a short introduction and the preceding audiovisual exercise, all participants were able to orient themselves without visual assistance and based merely on the sonifications, which is in line with other AFB experiments [33,40,41].

4.1. Implications for Acoustic Parameter Mapping

When creating a parameter-mapped sonification, mapping, polarity, and scaling issues are crucial, and user testing is highly advisable [10,22,25]. The results of E1 revealed that TD and TS differed significantly between the five audio parameters, especially with AFB, showing a clear influence of the respective acoustic environment. Percussion and wavering (synthetic sound modulation) appear to be the two most promising of the five sound models for locating the target area (and one’s relative distance to it) on the mediolateral axis. Timbre (parameters derived from the sound characteristics of musical instruments) was the least effective in terms of TD, which corresponds to prior research in which timbre was less effective than pitch [41]. If timbre is used in a 2D-AFB system, we argue that it should serve not as a primary, but as an overlaying or adjacent parameter. Prior research suggests that it should also be mapped horizontally rather than vertically (Y-axis) [41].

Pitch (represented by musical/piano sounds) was not as effective as percussion and wavering, but more effective than timbre and stereo modulation. Stereo modulation (changing the relative volume of the acoustic representation of the X-axis between the left and right channels) was the least effective according to the quantitative sensor data and was ruled out for further investigation, as it might interfere with the effect of a second, overlaid sound parameter and might pose problems for patients with impaired (binaural) hearing. Some sound dimensions are less effective in auditory displays for practical design reasons, since they can cause perceptual distortions with other acoustic parameters such as pitch [42].

Some authors have critically noted that studies scarcely consider subjective and emotional reactions to the presented sounds, although even the simplest AFB probably prompts some positive or negative subjective reaction [38]. We therefore evaluated the participants’ subjective attitudes towards the chosen sound models regarding perceived helpfulness and pleasantness to obtain a more comprehensive view of the models’ appeal and usefulness. Subjective helpfulness was comparable across the five models, except for the timbre model (rated distinctly lower than the others), possibly because its polarities were acoustically less clear. Percussion, wavering, and pitch were rated as the most helpful models, and we included them in the 2D sound models of E2. Although useful for TD, sound wavering was not perceived as pleasant, presumably because of its synthetic sound character. The stereo model, on the other hand, was perceived as very pleasant, which was arguably due to the underlying percussive sound pattern rather than to the modulation via stereo panning.

In E2, we combined the most effective and intuitive sound models of E1 into two-dimensional models, mapping two main sound parameters each into (A) a musical model (consisting of modulated musical sound samples) and (B) a synthetic model (consisting of synthesized sound modulations). In both models, a form of pitch was mapped to the vertical axis (i.e., piano notes in the musical model and high/low white noise in the synthetic model). The horizontal axis was represented by percussive sound modulations (musical model) and sound wavering (synthetic model), since these were the most effective sounds in E1.

TD was significantly shorter with the synthetic model, and ADD was descriptively smaller. TS was similar in both models. We assume that crucial factors for the superior effectiveness of the synthetic model were the clarity of the sound signals and the unambiguous sonification of the target area. Additionally, the synthetic model merely signaled the distance and direction from the target area by a continuous increase in volume and sound modulation. This was clearly different from the sonification of the target area and appeared rather straightforward. In contrast, the musical model had discrete tonal steps on the vertical axis (with a static reference tone and a moving position tone), which require more cognitive (and, to a lesser degree, musical) resources to understand and process. The musical model was therefore apparently more challenging and more likely to engage central processing mechanisms akin to motor-cognitive dual-task training.

The synthetic feedback on the Y-axis only indicated whether the subject was positioned too far forward or backward relative to the target. In contrast, subjects using the musical model had to relate the position tone to the reference tone internally and deduce their distance from the target area from the discrepancy between these tones. Complex acoustic sound sequences may not be suitable for guiding movements to discrete target points [40], as the longer TD with the musical model in this study suggests.

There seems to be some agreement among listeners about which sound elements are good (or weak) at representing particular spatial dimensions [22]. Mapping pitch vertically appears to be ingrained in our auditory-spatial pathways. Our results are consistent with prior studies [25,41] indicating that the most effective and natural way to map pitch in a two-dimensional soundscape is along the vertical (anterior-posterior) axis.

TD is not simply the time needed to reach the target area, because participants often touched this area before settling in it; the goal was to remain in the target area for three seconds. The variable TD therefore reflects not the time until the target area is first reached, but the time until a participant realized with sufficient certainty where the goal was located and where he or she had to stop moving and hold the position. The musical model had the tambourine as a specific target sound, whereas the synthetic model had no target sound. The resulting silence might be more clearly distinguishable from the area around the target. This assumption is supported by participants rating silence as significantly more helpful than a distinct target sound.

In the VAS evaluation, participants rated the synthetic model as more helpful than the musical model, which is consistent with its greater quantitative effectiveness in target detection. Surprisingly, the synthetic model was also rated slightly more pleasant, although the synthetic wavering had been rated as unpleasant in E1. We assume that three factors led to the more favorable overall rating of this model: its clarity, the additional white noise on the X-axis, and the resulting better performance in the more demanding tasks of E2. We aimed to develop two models of comparable effectiveness. The results, however, revealed that we overestimated how intelligible the more complex musical model would be for inexperienced participants.

The association between TD and ADD in the sensor data (longer TD correlated with more ADD) indicates an active effort to localize the target. Participants were “moving while searching,” which suggests that the movement exercises were activating and motivating. The association also implies that there was no speed-accuracy trade-off. Such a trade-off would have appeared as a negative association, with faster target detection coming at the cost of more ADD. Instead, participants who found the target faster also had less ADD. This indicates a generally comprehensible setup and sound-to-movement mapping.
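The speed-accuracy argument can be checked with a rank correlation between per-participant TD and ADD. Spearman's rho is assumed here as one non-parametric option consistent with the methods mentioned in the limitations, since this section does not state which coefficient was used. A positive rho corresponds to “longer TD goes with more ADD,” whereas a speed-accuracy trade-off would show up as a negative rho.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over the run of values tied with values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho, computed as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)  # ZeroDivisionError if either input is constant
```

Ties receive average ranks. If either input is constant, the coefficient is undefined, and the sketch raises an error instead of returning a misleading value.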

4.2. AFB and VFB

In our experiments, the audiovisual condition preceded the audio-alone conditions. This familiarized participants with the target finding exercise and allowed a retrospective comparison with the audio-alone condition. Overall, the quantitative results were better with AVFB, with significantly shorter TD, lower TS and less ADD. In the audio-only conditions, the choice of sound model significantly influenced how quickly participants found the target. In contrast, TD was very similar across all audiovisual models, suggesting that the sounds had less influence. Possibly, participants internally weighted the visual signals as sufficient sensory input for the task in the AVFB condition. Moreover, the target and a visual representation of one’s own position were visible from the start of each task until the target was reached. This was apparently easier to interpret than real-time AFB, in which the target position had to be “discovered.”

Learning can be assumed if TD decreases over time. Across the five consecutive tasks, participants showed gradual improvement in E1. In the audio-alone condition, the reduction in TD from the first to the last trial was more substantial. With AVFB, participants understood the exercise quickly but were less able to shorten their TD during practice; the short TD with AVFB points to a potential ceiling effect. In E2, TD did not change chronologically from model one to model two, since the tasks were far more complex and differed between the two models.
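Learning in this sense can be summarized per participant in two ways: as the relative TD reduction from the first to the last task, and as the least-squares slope of TD across tasks, where a negative slope indicates improvement. Both summary measures are assumptions chosen for illustration, since the section reports the first-to-last reduction only qualitatively. The usage values below are made up.

```python
from typing import Sequence, Tuple


def td_learning_summary(td: Sequence[float]) -> Tuple[float, float]:
    """Summarize TD across consecutive tasks for one participant.

    Returns (relative first-to-last reduction, least-squares slope of TD per
    task). A positive reduction and a negative slope both indicate that TD
    became shorter with practice.
    """
    n = len(td)
    if n < 2:
        raise ValueError("need TD values from at least two tasks")
    reduction = (td[0] - td[-1]) / td[0]
    tasks = range(1, n + 1)
    mean_task = (n + 1) / 2
    mean_td = sum(td) / n
    num = sum((x - mean_task) * (y - mean_td) for x, y in zip(tasks, td))
    den = sum((x - mean_task) ** 2 for x in tasks)
    return reduction, num / den
```

For the made-up series `[20.0, 16.0, 12.0, 8.0, 4.0]` seconds, the sketch returns a reduction of 0.8 (80%) and a slope of -4 s per task. The slope uses all five tasks and is therefore less sensitive to a single unusual first or last trial.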

More than half of the participants reported that, with VFB, they were entirely focused on the screen during training and perceived the exercise as rather simple from the beginning. With AFB alone, on the other hand, the exercises were often perceived as more challenging, especially at the beginning. Such phenomena are not uncommon with AFB. Some audio-based setups are less effective at first and may require a longer learning period, but at later stages they may outperform easier-to-learn interfaces [26]. Retention tests often show improvement when participants first trained with their eyes open and later with AFB only, akin to our experimental procedure [43].

4.3. AFB and Musicality

In the area of sonification, questions of musicality remain open. Musical sounds (as opposed to, for example, pure sine wave tones) have been recommended because simple musical sounds are easy to interpret [44]. However, as E2 showed, combining a novel movement task with musical AFB in more than one dimension appeared to be particularly challenging, even for healthy participants.

For the reasons outlined in the introduction, it would be a waste of potential if AFB designers merely aimed to imitate VFB with sounds [45]. It still appears advisable to make sonifications as pleasant-sounding as possible while still conveying the intended message [10,22]. We therefore deliberately built one of our mappings from musical sounds, instead of relying only on time- and space-related sound information akin to a parking distance sensor in cars. Dyer et al. [45] remarked that “basic” and “pleasant” mappings (which should be structurally similar) are rarely compared. The two sound models of E2 were therefore designed to allow this comparison.

Studies have generally found weak associations between musical experience and auditory display performance [22]. One plausible explanation for this weak relationship is the simplistic nature of self-report metrics of musical ability. Such metrics are often the benchmark for measuring how much musical training and ability a person has [22]. A fruitful approach for future studies may be a brief, reliable, and valid measure of musical ability for research purposes, along the lines of prior research on musical abilities [46,47].

In E2, we therefore implemented a preliminary test of one important aspect of musical ability. The aim was to increase reproducibility and to help explain the wide differences between participants that have been observed in prior studies [38]. To estimate musical expertise, we assessed pitch discrimination ability and years of active engagement with music. For correlations with the exercise scores, we used the results of the pitch discrimination test rather than the duration of musical training. The test provided a finer scale and a value greater than 0 for every participant. Perhaps this more thorough testing of one aspect of musical ability led to the clearer results and correlations in our study. Better pitch discrimination was associated with faster TD without visual support and with better TS in the musical model. Several studies have shown that musical training can improve communication between the senses and the integration of inputs from different senses [48]. Musical practice is also associated with less cognitive decline and increased executive control in older adults [49]. However, the correlations between musicality and movement data were relatively small in our study and must be regarded as preliminary.

As a limitation, the results of both experiments are merely indicative because of the small group sizes. The quantitative results must be interpreted conservatively because we had to use non-parametric statistical methods. Furthermore, the comparison between the conditions with and without visual support provides only limited evidence. The training with AFB lasted two (E1) or three (E2) times as long as the audiovisual training and always followed the audiovisual task.
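With small groups, paired comparisons between models call for exact rather than asymptotic p-values. An example is TD under the synthetic versus the musical model for the same participants. The sketch below implements the exact two-sided Wilcoxon signed-rank test. This test is assumed here as a typical non-parametric choice for paired data; this section does not name the tests actually used. Zero differences are dropped, and tied absolute differences receive average ranks. The null distribution of W+ is enumerated by dynamic programming over doubled ranks.

```python
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def wilcoxon_signed_rank_exact(a: Sequence[float],
                               b: Sequence[float]) -> Tuple[float, float]:
    """Exact two-sided Wilcoxon signed-rank test for paired samples a, b.

    Returns (W_plus, p_value), where W_plus is the rank sum of the positive
    differences a - b.
    """
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    diffs = [x - y for x, y in zip(a, b) if x != y]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)

    # Average ranks are multiples of 0.5, so doubling makes them integers.
    doubled = [round(2 * r) for r in ranks]
    total = sum(doubled)
    # counts[s] = number of sign patterns whose doubled W_plus equals s
    counts = [1] + [0] * total
    for r2 in doubled:
        for s in range(total, r2 - 1, -1):
            counts[s] += counts[s - r2]

    w2 = round(2 * w_plus)
    n_patterns = 2 ** n  # all sign patterns are equally likely under H0
    p_low = sum(counts[: w2 + 1]) / n_patterns
    p_high = sum(counts[w2:]) / n_patterns
    return w_plus, min(1.0, 2 * min(p_low, p_high))
```

Consider six pairs in which every difference is positive. The sketch returns W+ = 21 and p = 2/64 = 0.03125, which is the smallest attainable two-sided p-value for six pairs. This illustrates how coarse exact inference becomes with very small samples.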

5. Conclusions

In our experiments, we evaluated parameter mappings of novel sonifications for balance training. Basic functional sounds were more effective for a postural target finding exercise than complex audio models with musical properties, even when the latter are perceived as being more pleasant. Our results indicate that audio feedback is a feasible approach for balance training. Subjective evaluations are a useful addition to sensor data when evaluating the effectiveness of AFB. Movement-to-sound parameter mappings should be evaluated with test subjects prior to implementation. We believe that AFB could serve as an engaging alternative to more common forms of external sensory feedback in balance therapy. Further research should assess potential challenges and benefits in a clinical context and with regard to specific impairments.

Supplementary Materials

The following are available online at https://www.mdpi.com/2624-599X/2/3/34/s1, Audio E1.1–E1.5: display of audio models for E1; Audio E2.1 and E2.2: display of audio models for E2.

Author Contributions

Conceptualization, D.F., M.K. and P.F.; methodology, D.F., M.K. and P.F.; software, M.K.; validation, D.F., M.K., M.E. and P.F.; formal analysis, D.F., M.K. and M.E.; investigation, D.F. and M.K.; resources, P.F.; data curation, D.F. and M.K.; writing—original draft preparation, D.F.; writing—review and editing, D.F., M.K., M.E. and P.F.; visualization, D.F., M.K. and M.E.; supervision, P.F.; project administration, D.F., M.K. and P.F.; funding acquisition, P.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Federal Ministry for Economic Affairs and Energy (BMWi) through the Central Innovation Program for SMEs (ZIM), grant number ZF4021401ED5. The APC was funded by the Bavarian Academic Forum (BayWISS)—Doctoral Consortium “Health Research” of the Bavarian State Ministry of Science and the Arts.

Acknowledgments

The authors would like to thank Medica Medizintechnik GmbH for technical support. Dominik Fuchs would like to thank the Andreas Tobias Kind Stiftung for their support during the writing process.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

1. Sigrist, R.; Rauter, G.; Riener, R.; Wolf, P. Augmented visual, auditory, haptic, and multimodal feedback in motor learning: A review. Psychon. Bull. Rev. 2013, 20, 21–53.

2. Todorov, E.; Shadmehr, R.; Bizzi, E. Augmented Feedback Presented in a Virtual Environment Accelerates Learning of a Difficult Motor Task. J. Mot. Behav. 1997, 29, 147–158.

3. Wang, C.; Kennedy, D.M.; Boyle, J.B.; Shea, C.H. A guide to performing difficult bimanual coordination tasks: Just follow the yellow brick road. Exp. Brain Res. 2013, 230, 31–40.

4. Kennel, C.; Streese, L.; Pizzera, A.; Justen, C.; Hohmann, T.; Raab, M. Auditory reafferences: The influence of real-time feedback on movement control. Front. Psychol. 2015, 6, 69.

5. Schaffert, N.; Janzen, T.B.; Mattes, K.; Thaut, M.H. A Review on the Relationship Between Sound and Movement in Sports and Rehabilitation. Front. Psychol. 2019, 10, 244.

6. Effenberg, A.O.; Fehse, U.; Schmitz, G.; Krueger, B.; Mechling, H. Movement Sonification: Effects on Motor Learning beyond Rhythmic Adjustments. Front. Neurosci. 2016, 10, 219.

7. Ghai, S. Effects of Real-Time (Sonification) and Rhythmic Auditory Stimuli on Recovering Arm Function Post Stroke: A Systematic Review and Meta-Analysis. Front. Neurol. 2018, 9, 488.

8. Salmoni, A.W.; Schmidt, R.A.; Walter, C.B. Knowledge of results and motor learning: A review and critical reappraisal. Psychol. Bull. 1984, 95, 355–386.

9. Winstein, C.J.; Schmidt, R.A. Reduced frequency of knowledge of results enhances motor skill learning. J. Exp. Psychol. Learn. Mem. Cogn. 1990, 16, 677–691.

10. Dyer, J.; Stapleton, P.; Rodger, M. Sonification as Concurrent Augmented Feedback for Motor Skill Learning and the Importance of Mapping Design. Open Psychol. J. 2015, 8, 192–202.

11. Fujii, S.; Lulic, T.; Chen, J.L. More Feedback Is Better than Less: Learning a Novel Upper Limb Joint Coordination Pattern with Augmented Auditory Feedback. Front. Neurosci. 2016, 10.

12. Ronsse, R.; Puttemans, V.; Coxon, J.P.; Goble, D.J.; Wagemans, J.; Wenderoth, N.; Swinnen, S.P. Motor learning with augmented feedback: Modality-dependent behavioral and neural consequences. Cereb. Cortex 2011, 21, 1283–1294.

13. Danna, J.; Velay, J.-L. On the Auditory-Proprioception Substitution Hypothesis: Movement Sonification in Two Deafferented Subjects Learning to Write New Characters. Front. Neurosci. 2017, 11, 137.

14. Spence, C.; Parise, C.; Chen, Y.-C. The Colavita Visual Dominance Effect. In The Neural Bases of Multisensory Processes; Murray, M.M., Wallace, M.T., Eds.; CRC Press: Boca Raton, FL, USA, 2012; pp. 529–556. ISBN 9781439812174.

15. Spence, C. Explaining the Colavita visual dominance effect. In Progress in Brain Research; Srinivasan, N., Ed.; Elsevier: Amsterdam, The Netherlands, 2009; pp. 245–258. ISBN 9780444534262.

16. Maier, M.; Ballester, B.R.; Verschure, P.F.M.J. Principles of Neurorehabilitation After Stroke Based on Motor Learning and Brain Plasticity Mechanisms. Front. Syst. Neurosci. 2019, 13, 74.

17. Whitehead, D.W. Applying Theory to the Critical Review of Evidence from Music-Based Rehabilitation Research. Crit. Rev. Phys. Rehabil. Med. 2015, 27, 79–92.

18. Ghai, S.; Schmitz, G.; Hwang, T.-H.; Effenberg, A.O. Auditory Proprioceptive Integration: Effects of Real-Time Kinematic Auditory Feedback on Knee Proprioception. Front. Neurosci. 2018, 12, 142.

19. Ghai, S.; Schmitz, G.; Hwang, T.-H.; Effenberg, A.O. Training proprioception with sound: Effects of real-time auditory feedback on intermodal learning. Ann. N. Y. Acad. Sci. 2019, 1438, 50–61.

20. Hasegawa, N.; Takeda, K.; Sakuma, M.; Mani, H.; Maejima, H.; Asaka, T. Learning effects of dynamic postural control by auditory biofeedback versus visual biofeedback training. Gait Posture 2017, 58, 188–193.

21. Vuong, Q.C.; Laing, M.; Prabhu, A.; Tung, H.I.; Rees, A. Modulated stimuli demonstrate asymmetric interactions between hearing and vision. Sci. Rep. 2019, 9, 7605.

22. Walker, B.N.; Nees, M.A. Theory of Sonification. In The Sonification Handbook; Hermann, T., Hunt, A., Neuhoff, J.G., Eds.; Logos-Verl.: Berlin, Germany, 2011; pp. 9–39. ISBN 9783832528195.

23. Kramer, G.; Walker, B.; Bargar, R. Sonification Report. Status of the Field and Research Agenda. International Community for Auditory Display. 1999. Available online: https://digitalcommons.unl.edu/cgi/viewcontent.cgi?article=1443&context=psychfacpub (accessed on 23 January 2020).

24. Rosati, G.; Roda, A.; Avanzini, F.; Masiero, S. On the role of auditory feedback in robot-assisted movement training after stroke: Review of the literature. Comput. Intell. Neurosci. 2013, 2013, 586138.

25. Dubus, G.; Bresin, R. A systematic review of mapping strategies for the sonification of physical quantities. PLoS ONE 2013, 8, e82491.

26. Hermann, T.; Hunt, A. Guest Editors’ Introduction: An Introduction to Interactive Sonification. IEEE Multimed. 2005, 12, 20–24.

27. Matjacic, Z.; Hesse, S.; Sinkjaer, T. BalanceReTrainer: A new standing-balance training apparatus and methods applied to a chronic hemiparetic subject with a neglect syndrome. NeuroRehabilitation 2003, 18, 251–259.

28. Pure Data. Available online: https://puredata.info/ (accessed on 23 January 2020).

29. REAKTOR 6. Available online: https://www.native-instruments.com/de/products/komplete/synths/reaktor-6/ (accessed on 23 January 2020).

30. Open Sound Control. Available online: http://opensoundcontrol.org/ (accessed on 14 November 2019).

31. Scholz, D.S.; Rohde, S.; Nikmaram, N.; Bruckner, H.-P.; Grossbach, M.; Rollnik, J.D.; Altenmuller, E.O. Sonification of Arm Movements in Stroke Rehabilitation—A Novel Approach in Neurologic Music Therapy. Front. Neurol. 2016, 7, 106.

32. Mirelman, A.; Herman, T.; Nicolai, S.; Zijlstra, A.; Zijlstra, W.; Becker, C.; Chiari, L.; Hausdorff, J.M. Audio-biofeedback training for posture and balance in patients with Parkinson’s disease. J. Neuroeng. Rehabil. 2011, 8, 35.

33. Chiari, L.; Dozza, M.; Cappello, A.; Horak, F.B.; Macellari, V.; Giansanti, D. Audio-biofeedback for balance improvement: An accelerometry-based system. IEEE Trans. Biomed. Eng. 2005, 52, 2108–2111.

34. Dozza, M.; Chiari, L.; Horak, F.B. Audio-Biofeedback Improves Balance in Patients With Bilateral Vestibular Loss. Arch. Phys. Med. Rehabil. 2005, 86, 1401–1403.

35. Franco, C.; Fleury, A.; Gumery, P.Y.; Diot, B.; Demongeot, J.; Vuillerme, N. iBalance-ABF: A smartphone-based audio-biofeedback balance system. IEEE Trans. Biomed. Eng. 2013, 60, 211–215.

36. Ludovico, L.A.; Presti, G. The sonification space: A reference system for sonification tasks. Int. J. Hum. Comput. Stud. 2016, 85, 72–77.

37. Walker, B.N. Consistency of magnitude estimations with conceptual data dimensions used for sonification. Appl. Cognit. Psychol. 2007, 21, 579–599.

38. Bevilacqua, F.; Boyer, E.O.; Francoise, J.; Houix, O.; Susini, P.; Roby-Brami, A.; Hanneton, S. Sensori-Motor Learning with Movement Sonification: Perspectives from Recent Interdisciplinary Studies. Front. Neurosci. 2016, 10, 385.

39. Schaffert, N.; Mattes, K.; Effenberg, A.O. An investigation of online acoustic information for elite rowers in on-water training conditions. J. Hum. Sport Exerc. 2011, 6.

40. Fehse, U.; Schmitz, G.; Hartwig, D.; Ghai, S.; Brock, H.; Effenberg, A.O. Auditory Coding of Reaching Space. Appl. Sci. 2020, 10, 429.

41. Scholz, D.S.; Wu, L.; Pirzer, J.; Schneider, J.; Rollnik, J.D.; Großbach, M.; Altenmüller, E.O. Sonification as a possible stroke rehabilitation strategy. Front. Neurosci. 2014, 8, 332.

42. Neuhoff, J.G.; Wayand, J.; Kramer, G. Pitch and loudness interact in auditory displays: Can the data get lost in the map? J. Exp. Psychol. Appl. 2002, 8, 17–25.

43. Hafström, A.; Malmström, E.-M.; Terdèn, J.; Fransson, P.-A.; Magnusson, M. Improved Balance Confidence and Stability for Elderly After 6 Weeks of a Multimodal Self-Administered Balance-Enhancing Exercise Program: A Randomized Single Arm Crossover Study. Gerontol. Geriatr. Med. 2016, 2, 2333721416644149.

44. Brown, L.M.; Brewster, S.A.; Ramloll, R.; Burton, M.R.; Riedel, B. Design Guidelines for Audio Presentation of Graphs and Tables. In Proceedings of the 2003 International Conference on Auditory Display, Boston, MA, USA, 6–9 July 2003.

45. Dyer, J.; Stapleton, P.; Rodger, M. Sonification of Movement for Motor Skill Learning in a Novel Bimanual Task: Aesthetics and Retention Strategies. 2016. Available online: https://smartech.gatech.edu/bitstream/1853/56574/1/ICAD2016_paper_27.pdf (accessed on 4 July 2020).

46. Müllensiefen, D.; Gingras, B.; Musil, J.; Stewart, L. The musicality of non-musicians: An index for assessing musical sophistication in the general population. PLoS ONE 2014, 9, e89642.

47. Law, L.N.C.; Zentner, M. Assessing musical abilities objectively: Construction and validation of the profile of music perception skills. PLoS ONE 2012, 7, e52508.

48. Landry, S.P.; Champoux, F. Musicians react faster and are better multisensory integrators. Brain Cogn. 2017, 111, 156–162.

49. Moussard, A.; Bermudez, P.; Alain, C.; Tays, W.; Moreno, S. Life-long music practice and executive control in older adults: An event-related potential study. Brain Res. 2016, 1642, 146–153.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).