Abstract

It has been proposed that we are entering the age of postmortalism, in which digital immortality is a credible option. The desire to overcome death has occupied humanity for centuries, and even though biological immortality is still impossible, recent technological advances have made a form of eternal life in the metaverse possible. In palaeoanthropology and archaeology, we are often driven by a preoccupation with visualising and interacting with ancient populations, and the production of facial depictions of people from the past enables some of this interaction. New technologies, such as Artificial Intelligence (AI), are profoundly transforming the ways in which images, videos, voices, and avatars of digital ancient humans are produced, manipulated, disseminated, and viewed. For facial depiction practitioners, postmortalism crosses challenging ethical territory around consent and representation. Should we create a postmortem avatar of someone from the past just because it is technically possible, and what are the implications of this kind of forced immortality? This paper describes the history of the technologically mediated simulation of people, discussing the benefits and flaws of each technological iteration. Recent applications of 4D digital technology and AI to palaeoanthropological and historical facial depiction are discussed in relation to the technical, aesthetic, and ethical challenges associated with this phenomenon.

1. Introduction

The desire to overcome death has occupied humanity for centuries; the earliest fictional account appears in the Epic of Gilgamesh, an ancient Sumerian tale from c. 2100 BCE [1]. Even though biological immortality is still impossible, recent technological advances have made a form of eternal life possible in the metaverse, in the guise of a postmortem avatar. This has been termed the age of postmortalism [2].

1.1. Faces of People from the Past

Realistic representation after death is considered key to eternal life, and our fascination with the faces of people from the past is well documented [3,4,5,6,7,8]. Since facial appearance is critical to human social interaction and status, it is inevitable that the faces of ancient people stimulate particular interest, including judgements about their identity, personality, and character [9]. Our human need to visualise the faces of our ancestors has led to the proliferation of facial depiction exhibitions in museums around the world, including the National Museum of Ireland [10], Heritage Malta [11], and Johns Hopkins Archaeological Museum, USA [12]. Methods for facial depiction from human remains have been evaluated and are well described; they are based on anatomical standards, craniometrics, and morphological interpretation [5], and interdisciplinary studies have been performed worldwide. Facial depictions of historical figures, such as King Richard III [13], Robert the Bruce [14], and Tutankhamun [15], have contributed to changing media and public views of these individuals. Palaeoanthropological and archaeological examples include the faces of Neanderthals exhibited in the Neanderthal Museum, Germany [16]; Cheddar Man [17], dating from 10,000 years ago and exhibited in the Natural History Museum in the UK; and Ötzi, the ice mummy [18], dating from 3350–3105 BCE and housed in the South Tyrol Museum of Archaeology in Bolzano, Italy.

Over the last 30 years, we have contributed to research on craniofacial identification and the facial depiction of people from the past, and many examples of Face Lab work, and the work of others, are included in this perspective paper. This paper discusses the history and context of the facial depiction of deceased individuals, the influence of new technologies, and the ethical issues resulting from the creation of digital postmortem avatars, with a specific focus on how these might affect the fields of palaeoanthropology and archaeology. Examples from archaeology and history illustrate some of the challenges and opportunities, and contemporary examples are also described where there could be function creep into ancient worlds.

1.2. Cognitive Bias, Re-Humanisation, Authenticity, and Impact on Descendant Communities

Advances in digital technologies have enabled the realistic depiction of ancient faces using 3D computerised systems, haptic devices, 3D scanners, 3D printers, advanced photo-editing, and CGI software. Many examples of these digital depictions of historical and archaeological figures appear in the media and on the internet, such as St Nicolas [19] and the ancient Egyptian pharaoh King Ramesses II [20] (Figure 1), and they are displayed across the Galleries, Libraries, Archives, and Museums (GLAM) and heritage sectors.

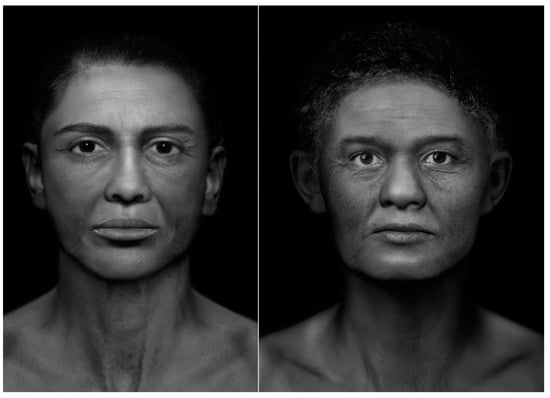

Figure 1.

Digital facial depictions of St Nicolas (left) and the ancient Egyptian pharaoh, Ramesses II (right).

However, there are challenges associated with these highly realistic facial depictions. Whilst craniofacial research has shown good accuracy in the prediction of facial features from skull morphology [21,22], the addition of colour, texture, and detail is notoriously unreliable [9]. Research suggests that different surface details can have an alarmingly strong effect on how the face is perceived and recognised [23,24,25], and any variation in surface detail can radically change the perceived facial morphology, especially in relation to the presentation of age, gender, or ethnicity. Many depictions of ancient people have been controversial and widely debated due to changing views around race [26,27,28,29], gender [30], and culture [31]. The ethics associated with bias, colonisation, and gender representation have been discussed in the bioanthropology literature [9].

Since the primary goal of the facial depiction of people from the past is recognised as humanistic [32], hyper-realistic models and images that re-socialise and re-personify the dead have become commonplace, suggesting a preference for visualising the individual as a living, breathing person. One study [33] analysed 192 facial depictions in 71 institutions across Europe and found that the depictions considered the most believable were those with the highest degree of ‘finish’, even though this level of detail may not be scientifically justifiable and many other interpretations are possible.

The effect of cognitive bias on facial depiction presentation has recently been debated [9,34], with calls for the clarification of potential error, possible variation, and degree of caution [35]. The choices that practitioners make when presenting facial depictions are critical to their interpretation, as display parameters such as viewpoint, pose, and background can misrepresent an individual by imparting personality and status. This effect is compounded as the presentation parameters increase, for example with the addition of static and dynamic expression, gesture, and speech. Certain presentation choices, such as the addition of clothing and styled hair, can be more easily justified in historical applications, where written descriptions of appearance or portraits can assist with decision making, but challenges arise when working with ancient individuals for whom these supporting materials are not available. Further consideration must be given to how these factors might impart personality or character and affect our perception of the subject.

Many facial depictions represent ancient populations and raise questions relating to authenticity, Eurocentric colonialism, and past biases in how we conduct and interpret science, along with the issue of consent from descendant communities. In 2018, the authors of this paper produced archaeological facial depictions of two ancient Egyptians for a project at Johns Hopkins Archaeological Museum [12] that resulted in an exhibition titled Who Am I? Remembering the Dead Through Facial Reconstruction (Figure 2). The project aimed to re-humanise the bodies of two mummified people, known as the Goucher Mummy and the Cohen Mummy after their respective collectors, Methodist minister John Goucher and Colonel Mendes Israel Cohen, who attempted to unwrap the remains for display in travelling exhibitions. Throughout the project, the team remained focused on a goal of considered re-humanisation, resulting in 3D depictions with specific display parameters: presented forward facing, with a neutral expression, against a dark background, and in greyscale, with unknown features such as hair blurred to limit the suggestion of undetermined information. Facial depictions of these ancient humans can prevent the viewer from classifying them as museum objects or artefacts, and researchers [7] believe that a facial depiction creates audience empathy for the subject. But do these depictions go far enough, or too far, in breathing life into the individuals? The technology is available to present these faces in a more realistic manner and even to make them move; however, the museum’s curator, Sanchita Balachandran, noted [12], “if you do an intervention, what do you gain from that? And what do you potentially lose in terms of one’s integrity and encroaching on the dignity of these individuals?”
These depictions are the result of considered decision making, but this process is not always obvious to public audiences, who often see the depictions only briefly in exhibitions or via social media and might view them as sensational and unnecessary, or as a way of asserting colonial privilege, rather than as an act of dignified re-humanisation.

Figure 2.

Facial depictions of ancient Egyptians known as the Goucher (left) and Cohen (right) mummies. Facial depiction images were displayed in the exhibition, Who Am I? Remembering the Dead Through Facial Reconstruction, at Johns Hopkins Archaeological Museum in 2018.

Some interdisciplinary studies have attempted to overcome facial depiction biases through co-design and engagement with indigenous populations and descendant communities, the employment of local researchers, and the use of more relevant visual references. Two Face Lab projects, the Sutherland Nine Skeletal Restitution project from South Africa [36] and the 2018 Quest for Ancestral Faces project and exhibition [37,38] at El Museo Canario, Las Palmas de Gran Canaria, are examples of this community-sensitive and inclusive research. The Sutherland Nine project involved unethically collected 19th-century human remains from a university anatomy department that were returned to the descendant community with facial depictions specifically requested by the families, produced using images from a 19th-century database of people from this part of the world. The descendant families described the process as helping them to reconnect with their ancestors, and the project aimed to contribute to restorative justice, reconciliation, and healing while confronting a traumatic historical moment [39]. The Canarian project involved fifty facial depictions of ancient indigenous individuals from the museum’s skeletal collection, exhibited alongside fifty photographic portraits of contemporary Canarians. This project included a Canarian researcher working within the UK Face Lab team and collaboration with a local ethnographic photographer.

However, the use of facial depiction in palaeoanthropological exhibitions or media presentations has been criticised because of the largely interpretative nature of anatomical and phenotypic characterisation for early hominids [3,9,40], and researchers suggest that these interpretations rely on flawed assumptions informed by earlier racist dogma. For example, hominid anatomy is often assumed to be similar to that of non-human primates, and cultural beliefs may clash with the depiction of skin colour in ancient populations. Some researchers claim that “very little effort is made to produce reconstructions that are substantiated by strong empirical science” [40], while noting that museums and the media are under immense pressure to produce exciting exhibits that attract large audiences, which may constrain the presentation of potential error, caution, or variation.

The temptation to animate these digital facial depictions of historical and ancient humans is often tempered by moral and ethical considerations; however, as with the desire to present realistic faces in museum settings, there is always a desire for them to do more: to move and speak, which could imply particular characteristics or personalities. We can even place them in a variety of virtual environments for interaction, which might differ widely from the places where they lived. These options increase the possibility of misrepresenting the individual as they were in life by fostering new narratives. But is this a concern? Do they still function as facial depictions, or have they been transformed into something else? Is our perception of these representations as living, breathing proxies altered as a function of their realism?

As practitioners, we recognise that it is a privilege to be able to create digital facial depictions of people from the past for a public audience. Although it is technically possible to create and manipulate these digital humans, what are the implications of new and emerging technologies that further enhance the realism and ‘human-ness’ of the depiction, moving it toward digital immortality? Will these digital humans transcend to become postmortem avatars that ‘exist’ forever in the metaverse (a range of technologies that allow users to interact with virtual worlds, virtual objects, and each other [41]) or in a museum exhibition? What are the implications of this kind of forced immortality?

2. The Rise of the Postmortem Avatar

2.1. Humanoid Robots and Animatronics

The four-dimensional simulation of people from the past has been of interest for decades. Whilst human-like machines were documented in the 15th century [42], the earliest example of the animation of a historical figure was the moving and talking Abraham Lincoln [43] (Figure 3) developed by the animatronics master, Garner Holt, and presented by Disney at the 1964 New York World’s Fair [44] and at Disneyland since 1965. An updated version of Lincoln was revealed in 2017, with over 50 electrically actuated motions and interactive controls [45].

Figure 3.

Original animatronic Abraham Lincoln developed by Disney. https://flickr.com/photos/79172203@N00/42346731335, accessed on 12 November 2024.

However, animatronic or robotic historical figures are beyond the budget of most of the GLAM and heritage sectors and tend to be presented at high-end exhibitions or entertainment venues, such as Disneyland or Madame Tussauds. Animatronics are also time consuming and resource heavy, and they require skilled artists or engineers, known at Disney as ‘imagineers’. In addition, these robotic figures often suffer from a phenomenon described in 1970 by Masahiro Mori, a robotics professor at the Tokyo Institute of Technology, as ‘the uncanny valley’: a sudden loss of affinity, or a sense of eeriness, created when a humanoid robot almost, but not quite, achieves a lifelike appearance [46]. That is, as human likeness increases, our affinity also increases up to a threshold, beyond which subtle deviations from human appearance and behaviour produce a disturbing, eerie, and disgusting effect [47,48]. Research using humanoid robots supports this concept [49,50], and the most recent humanoid robots launched by Disney still provoked an eerie response from audiences [51].

2.2. Animation of Virtual People

The earliest work on computer-based facial expression was carried out in the early 1970s, and the first three-dimensional facial animation was created in 1972 [52]. The early 1980s saw the development of the first muscle-controlled face model, and the 1985 animated short film Tony de Peltrie was a landmark for facial animation [53]. Great strides have been made in this field, especially in recent years, and fully animated realistic human characters have been produced since the early 2000s [54]. However, digital animation is time consuming and requires specialist resources and highly skilled animators. Nonetheless, some museums have invested in animated digital facial depictions to enhance their public engagement with history. The first documented example is the talking Bronze Age Gristhorpe Man [55,56] at the Rotunda Museum in Scarborough, UK, followed by a digital exhibit at the Canadian Museum of History depicting a living, breathing indigenous family from 4000 years ago [57], and the greyscale, blinking depiction of an elderly ancient indigenous Canarian exhibited in El Museo Canario, Las Palmas de Gran Canaria [37]. The first is a more basic animation with a digital voice estimated from the oral structure of the Gristhorpe Man, whereas the latter two demonstrate minimal movement and facial expression that suggest life but not personality. These exhibits are visually arresting and offer a level of re-humanisation beyond the limits of static depiction. Their realistic facial configurations and expressions have a neurocognitive impact, stimulating unconscious processes of familiarity that enable the audience to identify with, compare themselves to, and critically review the subjects.

However, animated digital faces have also suffered from the uncanny valley effect [47,58], and some of this uncanniness is thought to be related to the inability of avatars (robots or digital avatars) to replicate the finer details of real human facial movement [59], such as microexpressions, changes in skin microvascularity, and rapid micromotion. In the past, researchers [60] found that even fully and expertly animated avatars were rated as more uncanny than humans, and avoiding the uncanny valley was thought to require the unlikely achievement of a level of hyper-realism so convincing as to be virtually indistinguishable from actual human form.

2.3. Performance-Driven Avatars

Motion capture, later rebranded as ‘performance capture’ by the film director James Cameron, has long been used in the movie industry, where Robert Zemeckis and Andy Serkis [61] have pioneered the use of actors to drive digitally created characters. Typically, these characters are animated using blend-shape animation, a technique that creates facial expression by interpolating between a series of sculpted templates (blend shapes). Mihailova [62] argues that, strictly speaking, motion capture should not be credited for this aspect of film characters, since the character’s face is a fully digital creation. However, the animators use video footage of the actor’s performance as a reference for facial expression [63] and design the character to closely resemble the actor in facial structure, so that computer-generated muscles can replicate facial expressions realistically [64]. The CGI company Weta Digital further developed a face rig to capture the facial expressions of actors on set, streamlining the additional animation processes required for film production [65].
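As a rough illustration of the blend-shape principle described above, the sketch below evaluates the common linear (‘delta’) formulation, in which each sculpted template is stored as an offset from a neutral face and an expression is the neutral face plus a weighted sum of those offsets. It is a minimal sketch under stated assumptions: the toy two-vertex ‘mouth’, the shape name `smile`, and the function `blend` are hypothetical and are not taken from Weta Digital or any other production pipeline mentioned here, which use far denser meshes and many more shapes.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]
Mesh = List[Vertex]


def blend(neutral: Mesh, targets: Dict[str, Mesh], weights: Dict[str, float]) -> Mesh:
    """Linear (delta) blend shapes: neutral + sum_i w_i * (target_i - neutral).

    Hypothetical helper for illustration only.
    """
    result = [list(v) for v in neutral]  # start from the neutral face
    for name, w in weights.items():
        target = targets[name]
        if len(target) != len(neutral):
            raise ValueError(f"shape {name!r} has a different vertex count")
        # Add the weighted offset of this sculpted template from the neutral face.
        for i, (nv, tv) in enumerate(zip(neutral, target)):
            for axis in range(3):
                result[i][axis] += w * (tv[axis] - nv[axis])
    return [tuple(v) for v in result]


# Toy two-vertex "mouth" with one hypothetical sculpted template.
neutral: Mesh = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets: Dict[str, Mesh] = {"smile": [(0.0, 0.2, 0.0), (1.0, 0.2, 0.0)]}

# Animating between templates means varying the weights over time:
# here the "smile" weight ramps from 0.0 to 1.0 over five frames.
frames = [blend(neutral, targets, {"smile": f / 4}) for f in range(5)]
for f, mesh in enumerate(frames):
    print(f, mesh[0])
```

Because only the weights change from frame to frame, in many pipelines performance capture is used to estimate such weights from an actor’s face; if the same weight curves then drive a differently shaped face, some of the actor’s characteristic timing and emphasis may travel with them.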

Visual effects supervisor, Guy Williams, explained in relation to the computer-generated younger version of the actor, Will Smith, in Ang Lee’s 2019 film, Gemini Man, “At the beginning of this, I thought the hardest thing would be to make a compelling digital human being; but we got there pretty readily. The greater challenge involved performance. The animation is where this lived or died. If any shape in the animation was off, if any transition of shapes was even slightly off, the entire thing fell apart” [66]. This lifelike virtual human had overcome the uncanny valley, but revulsion was triggered when flaws in facial expression prevented the audience from seeing this as a real person [67].

The subtle difference between capturing movement and capturing performance lies in the granular level of detail transferred between actor and digital human. Weta Visual Effects Supervisor Anders Langlands stated that “even a fraction of a pixel change in the expression, particularly around the eyes, can completely change the way you perceive the character” [68]. Actors are applauded for their performances as fictional characters through performance capture, where their acting ability drives the movements and expressions of the digital avatars. However, when the subject is a real historical figure, the facial expressions that are captured and transferred refer back to the contemporary actor [68]. This layered ‘personhood’ raises issues of misrepresentation when the aim is to depict a person from the past as an individual, not as an actor playing that person as a character, and the authenticity or legitimacy of the depiction of the deceased person could be compromised. This has further implications if digital humans ‘live’ in accessible perpetuity, where they can be hacked, used as puppets, or later repurposed to say and do anything, including verbal abuse or information beyond their time in history.

In spite of this, performance capture techniques have been adopted for the animation of faces of people from the past. In 2018, Imaginarium Productions worked with human evolution expert Professor Chris Stringer and forensic artist Dr Chris Rynn to create a 3D animated avatar of a Neanderthal for the BBC2 series ‘Neanderthals: Meet Your Ancestors’ [69]. Ned the Neanderthal’s voice and expression were performed by Andy Serkis and transferred to the facial depiction by the performance capture team. Another example is the 4D portrait of Robert Burns (Figure 4), created by the authors of this paper [70], in which the expression, voice, and movement of the Scots poet Rab Wilson were transferred onto a 3D facial depiction of Burns (created from a cast of his skull) to produce a digital simulation of Burns reading one of his own poems [71]. Although these 4D transfer techniques are innovative and visually stimulating, they are also time consuming and require expensive, resource-heavy equipment and the additional input of animators. Furthermore, facial morphology and dental patterns dictate facial movement, so any performance transferred from an actor may result in movement that is not congruent with the morphology of the facial depiction. As the creators of the Burns animation, we note that residual characteristic movement of the actor can be seen in the final animation due to inconsistencies between the two morphologies. The authenticity of a palaeoanthropological or archaeological facial depiction is therefore called into question when 4D elements that may influence or override anatomical accuracy are introduced into the process.

Figure 4.

Stills of the talking head of Robert Burns. A 3D digital facial depiction of the Scottish poet driven by performance transfer.

3. The Impact of Artificial Intelligence (AI)

3.1. Deepfake Technology

‘Deepfake’ is a term derived from ‘deep learning’ and ‘fake’ and typically refers to an image or video of someone that has been created, altered, or manipulated using AI so that the fabricated media appear authentic [72]. In recent years, AI has been applied to digital avatars to create realistic human facial expressions and movement by artificially generating or manipulating image, audio, and video content. Synthetic media can be so believable and realistic that we are no longer able to trust that what we see or hear is genuine. Smartphones are equipped with cameras and image processing technology that transform every image into machine-legible data. Internet giants such as Google and Facebook, along with government agencies and private companies, have developed and deployed machine vision and generative systems, such as Generative Adversarial Networks (GANs), so that any networked digital image may be analysed and manipulated, turning the metaverse into a vast data-mining field [67,68]. Human facial biometrics, behaviour, and expressions can be identified, labelled, stored, and processed to enable AI image transformation or creation based on these learned patterns of facial expression, behaviour, and appearance. This AI can then be used for a wide variety of purposes: from surveillance to policing, from marketing to advertising, from avatars to robots, and from entertainment to heritage. Digital image transformation can take many forms [73], including producing 3D face models from 2D images [74,75]; predicting a face as a different age, gender, BMI, population group, or historical era [76]; face editing [77]; animating a still facial image in a highly realistic way; and adding colour and improving definition for black and white images or video [78]. Examples range from slight enhancement, such as film restoration [79], to complete fabrication, such as AI-generated face images of fictional characters [80].
Deepfake technology often relies on training GAN architectures to superimpose data on an original image or video to alter it. Machine learning techniques can be applied to continually refine these images or videos, creating ever more convincing synthesised media [81] that can be indistinguishable from reality. A recent study found that people are unable to reliably distinguish real faces from synthetic ones, and that GAN-generated faces are even rated as more trustworthy than real human faces [82]. Deepfake technology can also be used in palaeoanthropology and archaeology to add facial expression, movement, and voice to static imagery.
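For readers unfamiliar with the adversarial training mentioned above, the original GAN formulation can be written as a two-player minimax objective: a generator G maps random noise z to a synthetic image G(z), while a discriminator D outputs the probability that an image is real, and the two are trained against each other. This is the generic textbook objective, given only for orientation; the architectures and loss functions used by specific deepfake tools vary and are not described in the sources cited here.

```latex
\min_{G}\,\max_{D}\; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\!\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}(z)}\!\left[\log\!\left(1 - D(G(z))\right)\right]
```

Here p_data is the distribution of real training images and p_z a simple noise distribution. D is rewarded for assigning high probability to real images and low probability to generated ones, while G is rewarded for fooling D; as training proceeds, the generated images become progressively harder to separate from real ones, which is one reason synthetic faces can become difficult for human observers to tell apart from genuine photographs.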

AI tools, such as the online service Deep Nostalgia™, promise to overcome mortality by turning personal photographs of deceased relatives into looped animations that smile, blink, and move [83]. Due to these developments, the era of pure photography is disappearing, to be replaced by the era of computer-generated photography, including the transformation of still images into moving images [84]. To create moving images, AI Deep Nostalgia™ analyses the sample image(s), uses complex neural networks and deep learning to recognise a face [85], and then combines it with an AI system that already encodes authentic movement patterns, such as gestures and natural human movements [86], learned from millions of uploaded videos. The resulting looped animations of single headshots are relatively unsophisticated, allowing only simple movements, such as blinking, nodding, or smiling. The AI Deep Nostalgia™ app takes about 20 s to turn a still face into a moving face, which has been a game changer for basic 2D face animation. The launch of AI Deep Nostalgia™ provoked a strong public reaction, with over 106 million animations created, 96 million of them in the first year [87]. At the time of the launch, MyHeritage, the company behind Deep Nostalgia™, acknowledged that the resulting avatars were controversial, with some people finding them magical and others finding them creepy [88].

Inevitably, these AI systems have also been used to create gesture and expression in facial depictions of ancient people. Notable archaeological examples include the 2024 Perth Museum exhibition of expressive Scottish faces reconstructed from archaeological remains by the craniofacial expert Dr Chris Rynn [89] and the animated face of a Viking man from the St Brice’s Day Massacre in Oxford in 1002 [90]. These exhibits work in a similar way to other forms of animated facial depiction, through engaging re-humanisation with neurocognitive impact, but they occasionally appear eerie or uncanny when the app struggles with hair and facial hair, dental patterns, and non-frontal angles, creating visual artefacts, wobble, and distortion. This has been attributed to AI learning processes that require a variety of layer classifications across different photographs to produce an accurate output [91]; when the photographic sample consists of only one photo layer, the processed output differs slightly from the original photograph.

In addition, structural errors have been noted with AI Deep Nostalgia™. Researchers [84] found that the moving faces had slightly different structures and proportions from those in the original photograph, which is problematic for facial depictions based on the morphology of the craniofacial complex. Most importantly, the gestures and expression patterns are ‘learned’ from contemporary social media content, and even though AI Deep Nostalgia™ only offers simple motion, the gestures and expressions it creates are heavily influenced by contemporary ‘selfie-style’ poses and expressions. This is especially noticeable for women: there appears to be a gender bias in the animation process that creates more passive, coy, and enigmatic expressions for women than for men. AI systems based on contemporary social media content may not, therefore, be wholly appropriate for the depiction of ancient faces in a palaeoanthropological or archaeological context.

3.2. Ghostbots and Chatbots

Contemporary postmortem avatars or replicas have become known as ‘ghostbots’ [92]. Ghostbots are described as having potential beyond entertainment in terms of historic preservation, education, and digital archiving [93]. The most high-profile historical ghostbot was presented in 2019 at the Dalí Museum in St Petersburg, Florida, as a talking, life-size avatar of the deceased artist Salvador Dalí [93]. This project used more than 6000 frames of filmed interviews to generate matching facial expressions with AI, which were superimposed onto footage of an actor with Dalí’s body proportions and combined with an actor’s imitation of Dalí’s distinctive voice [94]. This screen-based postmortem avatar interacts with museum visitors, allowing them to pose with him for a selfie [95]. The museum aimed to create empathy through this avatar and to bring Dalí into the context of modern life. This example uses real footage of the subject in life, which is, of course, not possible in archaeology or palaeoanthropology. However, with increasing exposure to digital postmortem avatars, audience expectations of this kind of individual visualisation are rising.

Along with realistic facial appearance, AI systems have been employed to create ‘authentic’ responses for deceased individuals, and this could potentially be used in palaeoanthropology and archaeology. Researchers [96] describe these AI processes for the contemporary deceased as digital resurrection (or ‘grief tech’), integrating comprehensive datasets of the diaries, images, video, written text, and social context related to a historical figure to create an interactive avatar platform reflecting their personalised responses. These AI-powered interactive avatars simulate deceased individuals [97] based on their ‘digital estates’ [98], including images, voicemails, playlists, instant messaging data, image archives, health and fitness profiles, interest libraries, and social media posts [99,100], and predict their responses to social interaction with the living. These avatars are described as providing virtual immortality, and research suggests that while people do not necessarily believe in an afterlife, they accept the possibility of a continued posthumous relationship [101]. Two-way postmortem digital technologies, such as ghostbots and AI-operated avatars, invite the continuous presence of the dead into the daily life of the living [101].

Digital estates can include copies of historical archives that predate the digital world, and AI technology has opened the door to historical ghostbots that respond and communicate ‘in the style of’ the original person. Thanks to recent advances in AI language models, these chatbots do not just return canned responses; they can hold dynamic, long-form, natural language conversations on many subjects [102]. Whilst most current ghostbots involve text, audio, or basic animations of well-known historical figures, there appears to be great potential for the creation of realistic 3D digital avatars in which archival online content is used alongside postmortem depictions. It is possible to create a digital avatar of a historical figure that interacts with the audience as authentically as the available material allows. Conversations with historical avatars, such as King Richard III, Mary Queen of Scots, or Robert the Bruce, are likely to become commonplace in the GLAM and heritage sectors.

However, archival material for palaeoanthropological or archaeological subjects is limited in comparison to modern digital estates, and assumptions and inferences about the personality and knowledge of the subject will inevitably be made to fill the gaps. Generative AI tools may create convincing representations, but they can also invent stories or memories that never happened, produce misinformation, and give less convincing performances over longer conversations [51]. AI-driven ghostbots may therefore create inaccurate representations and, in a museum or internet setting, there is unlikely to be much discussion around their reliability.

3.3. AI-Powered MetaHumans

The most recent advance in the digital avatar field has been the combined use of AI to generate realistic human expression, movement, and voice, and of machine learning to build realistic digital humans. These advances have also enabled more authentic facial expression and voice through performance capture and an AI-driven animation platform.

In 2021, Epic Games revealed MetaHuman Creator [103], a new, freely available, browser-based or plugin AI application for the creation of digital avatars through an intuitive workflow [104], using the Unreal Engine 4.26 platform. Until then, 3D content creation was one of the most arduous tasks in constructing truly convincing digital humans: even the most experienced artists required significant time, effort, and equipment for just one character. MetaHuman Creator allows even an inexperienced non-artist to create a bespoke photorealistic digital human, fully rigged and complete with hair and clothing, in a matter of minutes. Each MetaHuman can vary in geometry and texture complexity, and users can edit a range of presets, from skin and eye colours, to nose shape and dentition pattern, to strand-based hair. The presets are based on scans of real people, allowing for a multitude of plausible adjustments to the appearance of the MetaHuman. Each MetaHuman delivers AI-generated facial expressions and movement and has a full facial and body rig for animation, with the complexity of these rigs also varying across the different levels of detail. MetaHuman Creator can be used in combination with motion capture and animation technology to create realistic movements for video games, films, television, and other standard human–computer interfaces. These high-fidelity MetaHuman avatars (Figure 5) have been studied using audience perception ratings; researchers [105,106] found that high-fidelity avatars were considered more human, happy, and attractive, whilst low-fidelity avatars were considered more eerie and sad. A previous study [107] also found that photorealism enhanced virtual reality experiences, with higher levels of appeal and empathy than were recorded for avatars with low levels of realism.

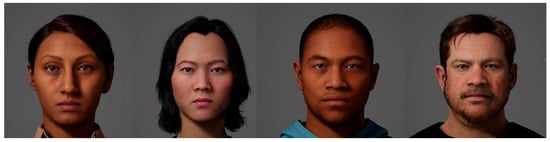

Figure 5.

High-fidelity digital avatars created using MetaHuman Creator.

One study [108] found that MetaHuman faces were able to trigger most of the major responses related to face detection, identification, reading, and agency in a way significantly different from previous computer-generated faces. This study suggested that MetaHuman faces “constitute, from an academic standpoint, a different type of artificial face characterized by a qualitatively superior effect of reality”. These qualities were described as:

- Geometrical accuracy from the perspective of the neurocognitive impact of facial configurations and expressions;

- Unconscious cognitive processes of familiarity due to the database’s diversity;

- High-density information granting semantic ambiguity and communicative complexity;

- Fundamental temporality, reflecting the impossibility of reducing faces to a simple one-dimensional item.

This technology can also be used to create 3D digital avatars directly from 3D scan models of living people or from 3D facial depiction models of deceased people (see Figure 6) using the Mesh to MetaHuman plugin for Unreal Engine, which enables “users to turn a custom mesh created by scanning, sculpting, or traditional modelling into a fully rigged MetaHuman” [109].

Figure 6.

Facial depiction of female remains (dated 774–993 AD) recovered from Saint Peter’s Abbey, Ghent, Belgium. Close-up view on right. The facial depiction process involved the use of Mesh to MetaHuman to import a 3D facial reconstruction model into MetaHuman Creator to add textures, including skin and eye colours. Clothing and hair were produced separately in Autodesk Maya, ZBrush, and Adobe Substance Painter. Image courtesy of Face Lab and Ghent University.

In turn, the motion of these avatars can be driven in real time using MetaHuman Animator in Unreal Engine [110], a feature set that enables users to capture an actor’s performance using an iPhone or a stereo head-mounted camera system (HMC) and apply it as high-fidelity facial animation to any MetaHuman character without the need for manual intervention. The application of this within history and archaeology is significant: these technologies enable a more streamlined and less resource-heavy process for creating responsive avatars and, when used in combination with 3D digital facial depiction from human remains, offer extensive opportunities for the depiction of, and interaction with, historical figures and ancient peoples, including in the metaverse.

Current historical or archaeological postmortem avatar research often involves interdisciplinary teams of academics who aim to represent facial appearance, speech, language, status, gender, and performance authentically, based on scientific evidence. In 2024, a team of interdisciplinary experts, including the authors of this paper, collaborated to produce a digital avatar of the English king Richard III based on a 3D digital facial reconstruction from his skull [111,112]. The team included a voice coach, Yvonne Morley-Chisholm, and a linguist specialising in original pronunciation, Professor David Crystal, who together used research into accents relating to Richard’s status and life experience to train an actor, Thomas Dennis, to read one of his letters. The team aimed for this to be as authentic as possible using evidence-based interpretation, with Richard’s own words, his most likely accent, and the pronunciation of this period in British history.

The technical process involved the creation of a digital 3D avatar of King Richard III from the 3D facial reconstruction model using the Mesh to MetaHuman and MetaHuman Creator plugins, plus the creation, with the same plugins, of a digital 3D avatar of the actor from video recordings of his face at multiple angles while he produced a wide range of speech sounds. Further video and voice recordings of the actor reading Richard III’s words enabled the transfer of the actor’s facial movements and speech from his digital avatar to the digital avatar of King Richard III (see Figure 7). The use of an actor with facial proportions similar to those of King Richard III facilitated the performance transfer, and the addition of medieval clothing, a crown, and a hairstyle created using CGI software (Substance Painter and Maya) provided visual authenticity.

Figure 7.

Performance capture and transfer—Richard III’s digital avatar (left), actor (centre), and the actor’s digital avatar (right).

The issues around the authenticity of digital avatars may become more significant in palaeoanthropology, since no comparable level of evidence exists for these periods. This AI-based technology (MetaHuman Creator and Unreal Engine) has already been used in palaeoanthropology to create an interactive digital model of the 10,000-year-old face of a prehistoric shaman from the Lepenski Vir settlement in Serbia. The project was unveiled at Expo 2020 Dubai, where visitors were able to capture their own facial movements via iPhone and drive the facial expressions of the digital shaman on screen in real-time animation (see Figure 8). In this way, the digital human interacted with a live audience [113]. Whilst this virtual experience proved groundbreaking and exciting, the authenticity of these contemporary facial expressions on an ancient person was not interrogated. The interpretation of facial appearance in our human ancestors has always been based on anatomical assumptions, and whilst it is unclear whether our human ancestors were physically capable of speech, there is evidence of the anatomical ability to make sounds [114]. However, there is contemporary evidence of cultural differences in human facial expression across the global population [115,116,117], suggesting that a universally evolved set of facial expressions is unlikely. Therefore, we cannot assume that ancient people shared our facial gestures or social interaction responses. Since these palaeoanthropological and archaeological depictions are presented in the GLAM sector as scientific visualisations, the presentation of facial expression requires greater care to ensure that depictions are justified by strong scientific evidence.

Figure 8.

An audience member interacts with the digital avatar of a Stone Age shaman. Image courtesy of Serbia Pavilion Expo 2020 Dubai.

Further complicating the use of MetaHumans for the depiction of palaeoanthropological individuals is the open accessibility of these technologies. They are currently free for anyone to use, and while researchers collaborate to produce avatars of individuals from the past based on available evidence, the ease with which an avatar can be created and then powered by AI in the metaverse presents unknown complexities and potential misuses.

It is now possible to combine MetaHumans with AI to create MetaHuman chatbots. Newer gaming plugins for Unreal Engine allow any digital creator with access to the software to generate MetaHumans as playable and non-player characters, which can be imported into metaverses, such as Epic Games’ Fortnite, and can respond to players using AI. Convai [118], for example, enables non-player characters to “converse naturally with players via text or voice and can carry out advanced actions as an enemy, companion, or filler bot” [119] through a pipeline of speech-to-text, a text prompt to an LLM (Large Language Model, an AI designed to understand, generate, and interact with human language), the LLM response, and then text-to-speech and audio-to-face conversion with blend-shape animations. When Convai is combined with a MetaHuman via an Unreal Engine plugin, the digital avatar can ‘naturally’ provide context-aware responses and undertake convincing two-way interactions with living people [119]. This pioneering combination of AI and MetaHumans creates seemingly more advanced interactions between humans and MetaHumans than the Stone Age shaman avatar, but the same issues of authenticity and scientific backing are further amplified. While this creates exciting opportunities for users to interact with palaeoanthropological and archaeological avatars in the metaverse, it is also fraught with possible moral and ethical repercussions, as the ancient individual becomes an AI-powered ‘puppet’ that can perform unfathomable actions, say anything, die repeatedly, or live forever in the metaverse.
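The Convai-style sequence described above (speech-to-text, LLM prompt and response, text-to-speech, audio-to-face) can be sketched as a simple chain of stages. The Python sketch below is illustrative only: every stage is a placeholder function supplied by the caller, and the class, field, and stage names (`ConversationalAvatar`, `respond`, `persona`, and the `jawOpen` blend-shape key) are hypothetical assumptions, not the Convai or Unreal Engine API. It does make one point relevant to authenticity explicit: everything the avatar ‘says’ is generated from a persona text and the conversation history, not from evidence about the individual.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stage signatures (assumptions, not a real plugin API).
SpeechToText = Callable[[bytes], str]
LanguageModel = Callable[[str], str]
TextToSpeech = Callable[[str], bytes]
AudioToFace = Callable[[bytes], List[Dict[str, float]]]


@dataclass
class AvatarTurn:
    """Everything produced for one visitor utterance."""
    transcript: str
    prompt: str
    reply: str
    audio: bytes
    blendshape_frames: List[Dict[str, float]]


@dataclass
class ConversationalAvatar:
    persona: str          # text describing the depicted person (an interpretation, not evidence)
    stt: SpeechToText     # visitor speech -> text
    llm: LanguageModel    # prompt -> reply text
    tts: TextToSpeech     # reply text -> synthetic voice audio
    a2f: AudioToFace      # voice audio -> per-frame blend-shape weights
    history: List[str] = field(default_factory=list)

    def respond(self, user_audio: bytes) -> AvatarTurn:
        # 1. Speech-to-text.
        transcript = self.stt(user_audio)
        self.history.append(f"Visitor: {transcript}")
        # 2. Build a text prompt from the persona plus the dialogue so far.
        prompt = self.persona + "\n" + "\n".join(self.history) + "\nAvatar:"
        # 3. LLM response.
        reply = self.llm(prompt)
        self.history.append(f"Avatar: {reply}")
        # 4. Text-to-speech, then 5. audio-to-face animation.
        audio = self.tts(reply)
        frames = self.a2f(audio)
        return AvatarTurn(transcript, prompt, reply, audio, frames)


# Usage with stub stages (placeholders only).
avatar = ConversationalAvatar(
    persona="You are a museum avatar of a historical figure.",
    stt=lambda audio: audio.decode("utf-8"),
    llm=lambda prompt: "Good day to you.",
    tts=lambda text: text.encode("utf-8"),
    a2f=lambda audio: [{"jawOpen": 0.3} for _ in audio],
)
turn = avatar.respond(b"Hello")
print(turn.reply)  # Good day to you.
```

In a real deployment each stub would be replaced by a speech recognition service, a language model, a speech synthesiser, and an audio-driven facial animation system; here the stubs only decode, echo, or encode text so that the flow of data from visitor to avatar can be followed.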

The impact of these advances on the facial depiction of ancient people in the wider cultural heritage sector is currently unknown. Are we more likely to pursue the production of AI-enabled digital postmortem avatars over other modalities, and what challenges will this bring? In combination, AI-powered MetaHumans offer wider opportunities for digitally interactive and voice-enabled human avatars, but they may also pose critical challenges, specifically within palaeoanthropology and archaeology.

4. Ethical Challenges for Postmortalism in Cultural Heritage

As the GLAM and heritage sectors adopt deepfake technology and realistic digital avatars of people from the past, they tend to gloss over the moral and ideological challenges posed by such exhibits, whilst the press often emphasises the capacity to digitally “resurrect” the deceased without critically reviewing its complicated relationship with authenticity and uncanniness [120]. The ethical issues around the method and application of digital avatars relate to different understandings of embodiment, rights, selfhood, and agency [121]. Commentators [122] argue in favour of using postmortem avatars for knowledge transfer in the GLAM and heritage sectors and state that these projects could become a key driving force toward public acceptance of deepfake technology. Whilst there has been some discussion of the under-representation of black and brown people among digital avatars, especially in the film industry [60], there has been no meaningful discussion of the authenticity of palaeoanthropological or archaeological avatars.

Many examples of bias inherent in AI systems have been documented [123,124,125,126,127], creating a serious problem for the deployment of such technology, as the resulting models may perform poorly on populations that are minorities within the training set, ultimately presenting higher risks to these populations [128]. For example, gender and racial bias can be exposed through stereotypical imagery [129,130] and limited representation. This presents significant challenges for the archaeology of ancient populations, where reference material is limited, and the use of AI may exacerbate any existing systemic racism, gender misattribution, or cultural incompetence. In addition, where performance transfer is used for palaeoanthropological subjects, 21st-century actors represent early hominids, and without scientific evidence of voice, movement, and expression, this is little more than theatre.

The ethical issues surrounding postmortem avatars or ghostbots are currently under debate, with calls for new solutions to protect the memory of the deceased [59,131]. These authors note that whilst the law provides redress for mental and emotional harm caused by deepfakes, this excludes the deceased, and these mental and emotional injuries do not include injury to human dignity or legacy. There is no legal redress for postmortem harm, because only living persons can experience harm, and relatives have no actionable claim for a dead relative’s tarnished reputation, as such suits may not be asserted by proxy. In light of this, some researchers [59] have proposed ‘do not bot me’ clauses in personal wills. One recent US survey [132] found that 58% of respondents viewed digital resurrection as socially acceptable if the deceased had provided consent, as opposed to only 3% when the deceased had dissented. The same survey found that 59% of respondents were against the idea of their own digital resurrection. This creates a challenge for the heritage and museum sectors, as there is clear public concern around subject consent in the creation of digital postmortem avatars. However, the audience figures recorded for the Dalí Museum [133] after the launch of the digital Dalí exhibit suggest that the public are less concerned when this is applied to heritage cases. The role of the museum is currently under evaluation, as the GLAM sector recognises the need to adapt to serve communities in new ways, described [134] as a transformational shift from knowledge transfer to knowledge exchange. Whilst there is undeniable public interest in the implementation of postmortem avatars in museum spaces, without simultaneous consideration of the ethical challenges associated with personal consent and human rights, such applications risk maintaining the hegemonic structures that have persisted for decades. Navigating the path between innovation and cultural competence may facilitate exactly the kind of change required to provide educational advantage in the museum sector.

Artists and practitioners who are commissioned to create simulations of ancient people for museums or the media tend to be highly skilled, but this is a competitive field. If the GLAM and media sectors are more concerned with making science exciting, these practitioners may lose sight of their underlying mission to disseminate and contribute to scientific knowledge. Researchers [3,8,40] argue that this work carries moral and ethical responsibility and accountability, as it is consumed by the public for scientific understanding. Some researchers [40] state that “artists who purport to facilitate the dissemination of scientific material, whose works are also hosted by renowned institutions of learning, are understandably perceived by the public as experts in their field. However, when artists operating as disseminators of science fail to make sure their models showcase the best available evidence, they fall short in their role of not just educators but artists as well”.

It is clear that deepfake technology offers opportunities for enhanced experiences in the GLAM and heritage sectors. However, realising these opportunities requires postmortem avatars to be well received by audiences, as any resistance will undermine their benefits. Research on human trust in AI representation [135] suggests that trust in robotic AI starts at a low level but increases over time with extended exposure and positive experiences. However, trust in virtual AI follows the opposite trajectory: initially high trust decreases when user expectations are unmet and users become frustrated. In other words, the appearance of high-fidelity virtual avatars often did not match the reality of their AI responses. This lack of calibration may be due to a number of factors, such as unnatural eye movement [136], glitches [137], low trustworthiness [138], emotional inauthenticity [58], unusual speech patterns [139], or gender-biased behaviour patterns [140]. There have been calls for more research into audience perception of, and end-user responses to, deepfake technology [141].

These digital palaeoanthropological and historical postmortem avatars afford a shift in and relinquishment of curatorial control by facilitating infinite storytelling and independently evolving digital entities. It is no longer the museum or practitioner who breathes life into them, but rather the audience. As these avatars fly the curatorial nest and accomplish digital immortality, what will happen to their individual identities? Will new narratives continuously erode true history? Will new presentations and contexts create ‘evolved’ identities that dictate our future? It can be argued that all those involved in creating these avatars take on ethical and moral responsibilities, not only to the individual they are creating but also to our future selves. In typical Promethean style, what we create may come back to haunt us.

5. Conclusions

Thanks to the rapid development of motion and performance technologies, digital human creation apps, generative AI, and audiovisual technologies, convincing deepfakes of voices, images, and videos depicting real people are increasingly indistinguishable from reality. Realistic and immersive experiences with the deceased are increasing, and it is likely that we will soon interact not only with two-dimensional ghostbots but also with lifelike, three-dimensional digital avatars overlaid onto the real world, offering a multi-sensory connection [102]. This immersive experience aims to blur the boundaries between the physical and the digital, the past and the present, and the real and the unreal.

However, postmortalism crosses challenging ethical territory. Should we create a postmortem avatar of someone just because it is technically possible? In current practice, there are already ethical and moral challenges around the production of facial depictions of ancient individuals who have not consented to this process, and two main challenges arise under the umbrella of consent: forced immortality and misrepresentation. With the addition of new technologies, these challenges are compounded by the capacity for enhanced, hyper-realistic static and dynamic digital avatars that transcend portraiture and representation and move into the realm of a proxy or a replacement that can live forever. It is possible that the inclusion of additional attributes, such as the fourth dimension (time), as well as the environment in which avatars are placed (e.g., gallery vs. metaverse), has shifted our acceptance criteria toward proxies or replacements. Additionally, there is potential for narratives that the individual never consented to, ranging from historical reenactment to entirely new stories that impart personality and character. This kind of forced immortality and misrepresentation may be seen as a non-consensual violation of individual human dignity, and its application to palaeoanthropology and/or archaeology will be affected by any future legal protections around personal legacy.

Author Contributions

Conceptualization, C.M.W.; writing—original draft preparation, C.M.W., M.A.R. and S.L.S.; writing—review and editing, C.M.W., M.A.R. and S.L.S.; visualization, C.M.W., S.L.S. and M.A.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No research data.

Acknowledgments

The authors would like to thank everyone in Face Lab for support and assistance.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Harris, S.B. Immortality: The Search for Everlasting Life. In The Skeptic Encyclopedia of Pseudoscience; Shermer, M., Linse, P., Eds.; ABC-CLIO: New York, NY, USA, 2002; pp. 357–358. ISBN 978-1-57607-653-8. [Google Scholar]

- Jacobsen, M.H. (Ed.) Postmortal Society: Towards a Sociology of Immortality; Routledge: London, UK, 2017. [Google Scholar]

- Drell, J.R. Neanderthals: A history of interpretation. Oxf. J. Archaeol. 2000, 19, 1–24. [Google Scholar] [CrossRef]

- Crossland, Z. Acts of estrangement: The post-mortem making of self and other. Archaeol. Dialogues 2009, 16, 102–125. [Google Scholar] [CrossRef]

- Wilkinson, C.M. Facial reconstruction–anatomical art or artistic anatomy? J. Anat. 2010, 216, 235–250. [Google Scholar] [CrossRef]

- Charlier, P. Naming the body (or the bones): Human remains, anthropological/medical collections, religious beliefs and restitution. Clin. Anat. 2010, 27, 291–295. [Google Scholar] [CrossRef]

- Buti, L.; Gruppioni, G.; Benazzi, S. Facial Reconstruction of famous historical figures: Between art and science. In Studies in Forensic Biohistory: Anthropological Perspectives; Stojanowski, C.M., Duncan, W.N., Eds.; Cambridge Studies in Biological and Evolutionary Anthropology Series; Cambridge University Press: Cambridge, UK, 2016; Chapter 9; pp. 191–212. ISBN 131694302X. [Google Scholar]

- Schraven, M. Likeness and likeability: Human remains, facial reconstructions and identity-making in museum displays. In Mannequins in Museums: Power and Resistance on Display; Cooks, B.R., Wagelie, J.J., Eds.; Routledge: New York, NY, USA, 2022; Chapter 3; pp. 45–62. [Google Scholar]

- Wilkinson, C.M. Cognitive Bias and facial depiction from skeletal remains. Bioarchaeology Int. 2020, 4, 1. [Google Scholar] [CrossRef]

- Museum of Ireland: Bog Bodies Research Project. 2024. Available online: https://www.museum.ie/en-ie/collections-research/irish-antiquities-division-collections/collections-list-(1)/iron-age/bog-bodies-research-project (accessed on 23 November 2024).

- Heritage Malta Exhibition of Ancient People. 2024. Available online: https://heritagemalta.mt/news/face-to-face-with-our-ancestors/ (accessed on 23 November 2024).

- Appleton, A. Faces from 2400 Years Ago: Archaeological Museum Exhibit Focuses on Reconstructing the Faces—And Dignity—Of the Goucher Mummy (Left) and the Cohen Mummy. Johns Hopkins Magazine: Archaeology; Fall 2018. 2018. Available online: https://hub.jhu.edu/magazine/2018/fall/mummy-facial-reconstruction/ (accessed on 12 November 2024).

- Wilkinson, C.M. The Man Himself: The Face of Richard III; The Ricardian Bulletin: London, UK, 2013; pp. 50–55. ISSN 0308 4337. [Google Scholar]

- Wilkinson, C.M.; Roughley, M.; Moffat, R.D.; Monckton, D.G.; MacGregor, M. In search of Robert Bruce, part I: Craniofacial analysis of the skull excavated at Dunfermline in 1819. J. Archaeol. Sci. Rep. 2019, 24, 556–564. [Google Scholar] [CrossRef]

- Hawass, Z. Tutankhamun Facial Reconstruction. Press Release 10 May 2005. 2005. Available online: https://www.biomodel.com/downloads/news_KingTutCT_Guardians2.pdf (accessed on 12 November 2024).

- Neanderthal Museum, Germany. 2024. Available online: https://neanderthal.de/en (accessed on 23 November 2024).

- Lotzof, K. Cheddar Man: Mesolithic Britain’s Blue-Eyed Boy. Natural History Museum, Human Evolution. Posted 24 Jan 2018. 2018. Available online: https://www.nhm.ac.uk/discover/cheddar-man-mesolithic-britain-blue-eyed-boy.html (accessed on 12 November 2024).

- Nerlich, A.G.; Egarter Vigl, E.; Fleckinger, A.; Tauber, M.; Peschel, O. The Iceman: Life scenarios and pathological findings from 30 years of research on the glacier mummy “Ötzi”. Der Pathol. 2021, 42, 530–539. [Google Scholar] [CrossRef]

- Introna, F.; Lauretti, C.; Wilkinson, C.M. San Nicola ed il suo volto. In Le Spoglie Della Memoria: Storie di Reliquie: A Cura di Angelo Schiavone; Telemaco: Acerenza, Italy, 2018; pp. 29–50. ISBN 8898294387. [Google Scholar]

- Wilkinson, C.M.; Saleem, S.N.; Liu, C.Y.J.; Roughley, M. Revealing the face of Ramesses II through computed tomography, digital 3D facial reconstruction and computer-generated Imagery. J. Archaeol. Sci. 2023, 160, 105884. [Google Scholar] [CrossRef]

- Wilkinson, C.; Rynn, C.; Peters, H.; Taister, M.; Kau, C.H.; Richmond, S. A blind accuracy assessment of computer-modeled forensic facial reconstruction using computed tomography data from live subjects. Forensic Sci. Med. Sci. Med. Pathol. Pathol. 2006, 2, 179–187. [Google Scholar] [CrossRef]

- Lee, W.J.; Wilkinson, C.M.; Hwang, H.S. An accuracy assessment of forensic computerized facial reconstruction employing cone-beam computed tomography from live subjects. J. Forensic Sci. 2012, 57, 318–327. [Google Scholar] [CrossRef]

- Bruce, V.; Healey, V.; Burton, M.; Doyle, T.; Coombes, A.; Linney, A. Recognising facial surfaces. Perception 1991, 20, 755–769. [Google Scholar] [CrossRef] [PubMed]

- Lewis, M.B. Familiarity, target set and false positives in face recognition. Eur. J. Cogn. Psychol. 1997, 9, 437–459. [Google Scholar] [CrossRef]

- Wright, D.B.; Sladden, B. An own gender bias and the importance of hair in face recognition. Acta Psychol. 2003, 114, 101–114. [Google Scholar] [CrossRef] [PubMed]

- Ciaccia, C. Recreated Face of Queen Nefertiti Sparks ‘Whitewashing’ Race Row. Fox News, 12 February 2018. 2018. Available online: https://www.foxnews.com/science/recreated-face-of-queen-nefertiti-sparks-whitewashing-race-row (accessed on 12 November 2024).

- Devlin, H. First Modern Britons Had ‘Dark to Black’ Skin, Cheddar Man DNA Analysis Reveals, The Guardian (online). 2018. Available online: https://www.theguardian.com/science/2018/feb/07/first-modern-britons-dark-black-skin-cheddar-man-dna-analysis-reveals (accessed on 12 November 2024).

- Schramm, K. Stuck in the tearoom: Facial reconstruction and Postpost-apartheid headache. Am. Anthropol. 2020, 122, 342–355. Available online: https://anthrosource.onlinelibrary.wiley.com/doi/pdfdirect/10.1111/aman.13384?casa_token=QrVK_tcB8XgAAAAA%3AvhQlC4XPAlaPU-ramvcsc6qpJ4AGFPY6DnZhdWPwzFthuLI0lRTpUtKv9_PxUqp38rvQKH4Azr_ZpuI (accessed on 12 November 2024). [CrossRef]

- Redfern, R.; Booth, T. Changing people, changing content. In The Routledge Handbook of Museums, Heritage and Death; Biers, T., Clary, K.S., Eds.; Routledge: Oxon, UK, 2023; Chapter 10; pp. 130–153. [Google Scholar]

- Alberge, D. Meet Erika the Red: Viking Women Were Warriors Too, Say Scientists. The Guardian, 2 November 2019. 2019. Available online: www.theguardian.com/uk-news/2019/nov/02/viking-woman-warrior-face-reconstruction-national-geographic-documentary (accessed on 12 November 2024).

- Robb, J. Towards a Critical Ötziography: Inventing Prehistoric Bodies; Lambert, H., McDonald, M., Eds.; Social Bodies: New York, NY, USA, 2009; pp. 100–128. [Google Scholar]

- Nystrom, K.C. The biohistory of prehistory: Mummies and the forensic creation of identity. In Studies in Forensic Biohistory: Anthropological Perspectives; Stojanowski, C.M., Duncan, W.N., Eds.; Cambridge Studies in Biological and Evolutionary Anthropology series; Cambridge University Press: Cambridge, UK, 2016; Chapter 7; pp. 143–166. ISBN 1107073545. [Google Scholar]

- Anderson, K. Hominin Representations in Museum Displays: Their Role in Forming Public Understanding Through the Non-verbal Communication of Science. Master’s Thesis, Biological and Comparative Anatomy Research Unit, University of Adelaide, Adelaide, Australia, 2011. [Google Scholar]

- Nieves Delgado, A. The problematic use of race in facial reconstruction. Sci. Cult. 2020, 29, 568–593. Available online: www.tandfonline.com/doi/pdf/10.1080/09505431.2020.1740670?casa_token=NgF1CkuoNCcAAAAA:EEtHVW2bj60ddCXG_J5JtFYaHxX73yUrPIjE4E57I9c91mnKbMEXXZQcTLQ5U9lso8Sp1Hnte7m_ (accessed on 12 November 2024). [CrossRef]

- Johnson, H. Craniofacial reconstruction and its socio-ethical implications. Mus. Soc. Issues 2016, 11, 97–113. [Google Scholar] [CrossRef]

- Gibbon, V.E.; Feris, L.; Gretzinger, J.; Smith, K.; Hall, S.; Penn, N.; Mutsvangwa, T.E.; Heale, M.; Finaughty, D.A.; Karanja, Y.W.; et al. Confronting historical legacies of biological anthropology in South Africa—Restitution, redress and community-centered science: The Sutherland Nine. PLoS ONE 2023, 18, e0284785. [Google Scholar] [CrossRef]

- Phillips, F. The Quest for Ancestral Faces. 2018. Available online: https://www.francescaphillips.com/the-quest-for-ancestral-faces/ (accessed on 12 November 2024).

- Ancestral Faces, Canary Islands. Face Lab, Liverpool John Moores University. 2018. Available online: https://www.ljmu.ac.uk/microsites/ancestral-faces-canary-islands (accessed on 12 November 2024).

- Gibbon, V. San and Khoe skeletons: How a South African University Sought to Restore Dignity and Redress the Past. The Conversation. 13 July 2023. 2023. Available online: https://theconversation.com/san-and-khoe-skeletons-how-a-south-african-university-sought-to-restore-dignity-and-redress-the-past-207551 (accessed on 12 November 2024).

- Campbell, R.M.; Vinas, G.; Henneberg, M.; Diogo, R. Visual depictions of our evolutionary past: A broad case study concerning the need for quantitative methods of soft tissue reconstruction and art-science collaborations. Front. Ecol. Evol. 2021, 9, 639048. [Google Scholar]

- Brawley, S. What is the Metaverse and What Impacts Will It Have for Society? UK Parliament, Research Briefing, Published Friday, 19 July 2024. 2024. Available online: https://post.parliament.uk/research-briefings/post-pb-0061/ (accessed on 12 November 2024).

- Moran, M.E. The da Vinci robot. J. Endourol. 2006, 20, 986–990. [Google Scholar] [CrossRef]

- Great Moments with Mr. Lincoln. Available online: https://www.youtube.com/watch?v=mDCoHhZ04Vs (accessed on 23 November 2024).

- Strodder, C. The Disneyland Encyclopedia, 3rd ed.; Santa Monica Press: Solana Beach, CA, USA, 2017; pp. 226–227. ISBN 978-1595800909. [Google Scholar]

- Martin, F. Garner Holt’s Lincoln Animatronic. Press Release 17 March 2019, Themed Attraction. 2019. Available online: https://www.themedattraction.com/garner-holts-lincoln/ (accessed on 12 November 2024).

- Mori, M. The uncanny valley. Energy 1970, 7, 33–35. [Google Scholar]

- Mori, M.; MacDorman, K.F.; Kageki, N. The Uncanny Valley [From the Field]. IEEE Robot. Autom. Mag. 2012, 19, 98–100. [Google Scholar] [CrossRef]

- Liao, J.; Huang, J. Think like a robot: How interactions with humanoid service robots affect consumers’ decision strategies. J. Retail. Consum. Serv. 2024, 76, 103575. [Google Scholar] [CrossRef]

- Bartneck, C.; Kanda, T.; Ishiguro, H.; Hagita, N. Is the uncanny valley an uncanny cliff? In Proceedings of the 16th IEEE International Symposium on Robot and Human Interactive Communication, Jeju Island, Republic of Korea, 26–29 August 2007; IEEE: Piscataway, NJ, USA, 2007; pp. 368–373. [Google Scholar]

- Mathur, M.B.; Reichling, D.B. Navigating a social world with robot partners: A quantitative cartography of the Uncanny Valley. Cognition 2016, 146, 22–32. [Google Scholar] [CrossRef] [PubMed]

- Sahota, R. Disney Unveils New Lifelike Animatronic Robots with a Human-Like Gaze. Engineering.com, 23 November 2020. 2020. Available online: www.engineering.com/story/disney-unveils-new-lifelike-animatronic-robots-with-a-human-like-gaze (accessed on 12 November 2024).

- Parke, F.I. Computer generated animation of faces. Proc. ACM Annu. Conf. 1972, 1, 451–457. [Google Scholar]

- Tony de Peltrie. 1985. Available online: https://www.imdb.com/title/tt0158278/ (accessed on 12 November 2024).

- Flückiger, B. Digital Bodies. In Visual Effects: Filmbilder aus dem Computer; Flückiger, B., Ed.; Schüren: Marburg, Germany, 2008; pp. 417–467. Available online: https://www.zora.uzh.ch/id/eprint/39752/1/BodiesFlueckigerB.pdf (accessed on 12 November 2024).

- Melton, N.D.; Knüsel, C.; Montgomery, J. (Eds.) Gristhorpe Man: A Life and Death in the Bronze Age; Oxbow Books: Oxford, UK, 2013; ISBN 1782972072. [Google Scholar]

- BBC News. Gristhorpe Man Speaks After 4000 Years. 2 August 2010. 2010. Available online: http://news.bbc.co.uk/local/york/hi/people_and_places/history/newsid_8877000/8877132.stm (accessed on 12 November 2024).

- Clark, T.; Betts, M.; Coupland, G.; Cybulski, J.S.; Paul, J.; Froesch, P.; Feschuk, S.; Joe, R.; Williams, G. Looking into the eyes of the ancient chiefs of shíshálh: The osteology and facial reconstructions of a 4000-year-old high-status family. In Bioarchaeology of Marginalized People; Academic Press: Cambridge, MA, USA, 2019; pp. 53–67. [Google Scholar]

- Kätsyri, J.; Mäkäräinen, M.; Takala, T. Testing the ‘uncanny valley’ hypothesis in semirealistic computer-animated film characters: An empirical evaluation of natural film stimuli. Int. J. Hum. Comput. Stud. 2017, 97, 149–161. [Google Scholar] [CrossRef]

- Tinwell, A.; Grimshaw, M.; Nabi, D.A.; Williams, A. Facial expression of emotion and perception of the Uncanny Valley in virtual characters. Comput. Hum. Behav. 2011, 27, 741–749. [Google Scholar] [CrossRef]

- Weisman, W.D.; Peña, J.F. Face the uncanny: The effects of doppelganger talking head avatars on affect-based trust toward artificial intelligence technology are mediated by uncanny valley perceptions. Cyberpsychology Behav. Soc. Netw. 2021, 24, 182–187. [Google Scholar] [CrossRef]

- Bestor, N. The technologically determined decade: Robert Zemeckis, Andy Serkis, and the promotion of performance capture. Animation 2016, 11, 169–188. [Google Scholar] [CrossRef]

- Mihailova, M. You were not so very different from a hobbit once: Motion capture as an estrangement device in Peter Jackson’s Lord of the Rings trilogy. Ps. Essays Film. Humanit. 2013, 33, 3–105. Available online: https://web.p.ebscohost.com/ehost/detail/detail?vid=0&sid=1f617311-e8e1-4087-b761-16ef8a954233%40redis&bdata=JnNpdGU9ZWhvc3QtbGl2ZQ%3d%3d#AN=95868960&db=f3h (accessed on 12 November 2024).

- Gunning, T. Gollum and Golem: Special Effects and the Technology of Artificial Bodies. In From Hobbits to Hollywood: Essays on Peter Jackson’s Lord of the Rings; Mathijs, E., Pomerance, M., Eds.; Rodopi B.V.: New York, NY, USA, 2006; pp. 319–349. [Google Scholar]

- Prince, S. Digital Visual Effects in Cinema: The Seduction of Reality; Rutgers University Press: New Brunswick, NJ, USA, 2012. [Google Scholar]

- Failes, I. From Performance Capture to Creature: How the Apes Were Created in ‘War for the Planet of the Apes’. Feature Film, VFX, Cartoon Brew 18 July 2017. 2017. Available online: https://www.cartoonbrew.com/vfx/performance-capture-creature-apes-created-war-planet-apes-152357.html (accessed on 12 November 2024).

- Allison, T. Race and the digital face: Facial (mis)recognition in Gemini Man. Convergence 2021, 27, 999–1017. [Google Scholar] [CrossRef]

- Remshardt, R. Avatars, Apes and the ‘Test’ of Performance Capture. In Avatars, Activism and Postdigital Performance; Jarvis, L., Savage, K., Eds.; Methuen Drama (Bloomsbury Publishing): London, UK, 2022. [Google Scholar]

- Langlands, A. Siggraph Presentation on ‘War for the Planet of the Apes’. YouTube, 12 December 2017. Available online: https://youtu.be/txoEDIdbUrg?si=av1WRObXvIr4IP9 (accessed on 21 August 2024).

- Natural History Museum. Hollywood Star Andy Serkis Creates Scientifically Accurate Neanderthal Avatar. Press Release 11 May 2018. 2018. Available online: https://www.nhm.ac.uk/press-office/press-releases/andy-serkis-creates-scientifically-accurate-neanderthal.html (accessed on 12 November 2024).

- Christie, K. Animated Facial Reconstruction Brings Robert Burns to Life. The Scotsman 25 January 2018. 2018. Available online: https://www.scotsman.com/news/uk-news/animated-facial-reconstruction-brings-robert-burns-to-life-2507013 (accessed on 12 November 2024).

- Face Lab, Liverpool John Moores University. Animating Robert Burns. YouTube; 25 Jan 2018. 2018. Available online: https://youtu.be/2S5t6ufFEIM?si=CAM4SFM3mF1i7DN1 (accessed on 12 November 2024).

- Cejudo, R. Ethical Problems of the Use of Deepfakes in the Arts and Culture. In Ethics of Artificial Intelligence; Lara, F., Deckers, J., Eds.; The International Library of Ethics, Law and Technology; Springer: Cham, Switzerland, 2023; Volume 41. [Google Scholar]

- Somaini, A. Film, media, and visual culture studies, and the challenge of machine learning. Eur. J. Media Stud. NECSUS 2021, 10, 49–57. [Google Scholar]

- Wang, S.F.; Lai, S.H. Efficient 3D face reconstruction from a single 2D image by combining statistical and geometrical information. In Proceedings of the Computer Vision–ACCV 2006: 7th Asian Conference on Computer Vision, Hyderabad, India, 13–16 January 2006; Proceedings, Part II, 7. Springer: Berlin/Heidelberg, Germany, 2006; pp. 427–436. Available online: https://link.springer.com/chapter/10.1007/11612704_43 (accessed on 12 November 2024).

- Afzal, H.R.; Luo, S.; Afzal, M.K.; Chaudhary, G.; Khari, M.; Kumar, S.A. 3D face reconstruction from single 2D image using distinctive features. IEEE Access 2020, 8, 180681–180689. Available online: https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9210569 (accessed on 12 November 2024). [CrossRef]

- FaceApp. Available online: https://www.faceapp.com/ (accessed on 12 November 2024).

- Faceswap. Available online: https://faceswap.dev/ (accessed on 12 November 2024).

- Deep Nostalgia. Available online: https://www.myheritage.fr/deep-nostalgia (accessed on 12 November 2024).

- Jackson, P. They Shall Not Grow Old. Imperial War Museums. BBC Click. 15 October 2018. 2018. Available online: https://youtu.be/EYIeactlMWo?si=_Vt66Hj4IjlJKEYs (accessed on 12 November 2024).

- Bandara, P. AI Photos Reveal What Fictional Couples’ Kids Would Look Like. PetaPixel, Posted 21 February 2023. 2023. Available online: https://petapixel.com/2023/02/21/ai-photos-reveal-what-fictional-couples-kids-would-look-like/ (accessed on 12 November 2024).

- Welker, C.; France, D.; Henty, A.; Wheatley, T. Trading faces: Complete AI face doubles avoid the uncanny valley. PsyArXiv Preprints. 2020. [Google Scholar] [CrossRef]

- Nightingale, S.J.; Farid, H. AI-synthesized faces are indistinguishable from real faces and more trustworthy. Proc. Natl. Acad. Sci. USA 2022, 119, e2120481119. [Google Scholar] [CrossRef]

- Kopelman, S.; Frosh, P. The “algorithmic as if”: Computational resurrection and the animation of the dead in Deep Nostalgia. New Media Soc. 2023. [Google Scholar] [CrossRef]

- Zen, A.P.; Yuningsih, C.R.; Miraj, I.M. Computer generated photography: Still image to moving image. In Sustainable Development in Creative Industries: Embracing Digital Culture for Humanities; Routledge: London, UK, 2023; pp. 123–128. [Google Scholar]

- Howard, J. Artificial Intelligence: Implications for the Future of Work. Am. J. Ind. Med. 2019, 62, 917–926. [Google Scholar] [CrossRef]

- Sintowoko, D.A.W.; Resmadi, I.; Azhar, H.; Gumilar, G.; Wahab, T. Sustainable Development in Creative Industries: Embracing Digital Culture for Humanities. In Proceedings of the 9th Bandung Creative Movement International Conference on Creative Industries, Bandung, Indonesia, 9 September 2021; Routledge Taylor Francis Group: Abingdon, UK, 2022. [Google Scholar] [CrossRef]

- MyHeritage Ltd. Deep Nostalgia™. Available online: https://www.myheritage.com/deep-nostalgia (accessed on 12 November 2024).

- Wakefield, J. MyHeritage Offers ‘Creepy’ Deepfake Tool to Reanimate Dead. BBC News, 26 February 2021. 2021. Available online: https://www.bbc.co.uk/news/technology-56210053 (accessed on 12 November 2024).

- Stories for Faces. Perth Museum. Posted 18 February 2024. Available online: https://perthmuseum.co.uk/stories-for-faces/ (accessed on 12 November 2024).

- YouTube. Viking Man from St Brice’s Day Massacre, Oxford in 1002. Face Lab at Liverpool John Moores University. 2024. Available online: https://youtu.be/hsaG5-QuQxE?si=3X4rRN3ZmWUhJf9R (accessed on 12 November 2024).

- Dong, Z.; Wei, J.; Chen, X.; Zheng, P. Face Detection in Security Monitoring Based on Artificial Intelligence Video Retrieval Technology. IEEE Access 2020, 8, 63421–63433. [Google Scholar] [CrossRef]

- Harbinja, E.; Edwards, L.; McVey, M. Governing Ghostbots. Comput. Law Secur. Rev. 2023, 48, 105791. [Google Scholar] [CrossRef]

- The Dalí. Press Release 23 Jan 2019. Dalí Lives: Museum Brings Artist Back to Life with AI. 2019. Available online: https://thedali.org/press-room/dali-lives-museum-brings-artists-back-to-life-with-ai/ (accessed on 12 November 2024).

- Mihailova, M. To Dally With Dalí: Deepfake (Inter)Faces in the Art Museum. Convergence 2021, 27, 882–898. [Google Scholar] [CrossRef]

- Lee, D. Deepfake Salvador Dalí Takes Selfies with Museum Visitors. The Verge, 10 May 2019. 2019. Available online: https://www.theverge.com/2019/5/10/18540953/salvador-dali-lives-deepfake-museum (accessed on 12 November 2024).

- Hutson, J.; Huffman, P.; Ratican, J. Digital resurrection of historical figures: A case study on Mary Sibley through customized ChatGPT. Fac. Scholarsh. 2024, 4, 590. Available online: https://digitalcommons.lindenwood.edu/faculty-research-papers/590 (accessed on 12 November 2024). [CrossRef]

- Mladin, N. Is Digital Resurrection Possible? Theos, 15 February 2024. 2024. Available online: https://www.theosthinktank.co.uk/comment/2024/02/15/is-digital-resurrection-possible (accessed on 23 September 2024).

- Bassett, D.J. Creation and Inheritance of Digital Afterlives; Springer International Publishing: Cham, Switzerland, 2022. [Google Scholar]

- O’Connor, M.; Kasket, E. What Grief Isn’t: Dead Grief Concepts and Their Digital-Age Revival. In Social Media Technology Across the Lifespan; Machin, T., Brownlow, C., Abel, S., Gilmour, J., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 115–130. [Google Scholar]

- Segerstad, Y.H.; Bell, J.; Yeshua-Katz, D. A Sort of Permanence: Digital Remains and Posthuman Encounters with Death. Conjunctions 2022, 9, 1–12. [Google Scholar] [CrossRef]

- Altaratz, D.; Morse, T. Digital Séance: Fabricated Encounters with the Dead. Soc. Sci. 2023, 12, 635. [Google Scholar] [CrossRef]

- Kugler, L. Raising the Dead with AI. Communications of the ACM, 1 April 2024. 2024. Available online: https://cacm.acm.org/news/raising-the-dead-with-ai/ (accessed on 12 November 2024).

- Epic Games. MetaHuman. 2024. Available online: https://MetaHuman.unrealengine.com (accessed on 12 November 2024).

- Fang, Z.; Cai, L.; Wang, G. MetaHuman Creator: The starting point of the metaverse. In Proceedings of the International Symposium on Computer Technology and Information Science (ISCTIS), Guilin, China, 4–6 June 2021; pp. 154–157. [Google Scholar]

- Higgins, D.; Egan, D.; Fribourg, R.; Cowan, B.; McDonnell, R. Ascending from the valley: Can state-of-the-art photorealism avoid the uncanny? In Proceedings of the ACM Symposium on Applied Perception (SAP ’21); ACM: New York, NY, USA, 2021; pp. 1–5. Available online: https://www.scss.tcd.ie/rachel.mcdonnell/papers/SAP2021a.pdf (accessed on 23 September 2024).

- Seymour, M.; Yuan, L.I.; Dennis, A.; Riemer, K. Have we crossed the uncanny valley? Understanding affinity, trustworthiness, and preference for realistic digital humans in immersive environments. J. Assoc. Inf. Syst. 2021, 22, 9. Available online: https://aisel.aisnet.org/jais/vol22/iss3/9 (accessed on 12 November 2024). [CrossRef]

- Zibrek, K.; Martin, S.; McDonnell, R. Is Photorealism Important for Perception of Expressive Virtual Humans in Virtual Reality? ACM Trans. Appl. Percept. 2019, 16, 14. [Google Scholar] [CrossRef]

- Giuliana, G.T. What is so special about contemporary CG faces? Semiotics of MetaHumans. Topoi 2022, 41, 821–834. [Google Scholar] [CrossRef]

- Epic Games. MetaHuman Plugin. 15 June 2023. 2023. Available online: https://www.unrealengine.com/marketplace/en-US/product/metahuman-plugin (accessed on 12 November 2024).

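- Epic Games. Delivering High-Quality Facial Animation in Minutes, MetaHuman Animator Is Now Available. Available online: https://www.unrealengine.com/en-US/blog/delivering-high-quality-facial-animation-in-minutes-metahuman-animator-is-now-available (accessed on 12 November 2024).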

- A Voice for King Richard III. York Theatre Royal. 17 November 2024. Available online: https://www.yorktheatreroyal.co.uk/show/a-voice-for-king-richard-iii/ (accessed on 12 November 2024).

- Ambrose, T. Hi-Tech Recreation of Richard III’s Voice Has a Yorkshire Accent. The Guardian, 17 November 2024. Available online: https://www.theguardian.com/uk-news/2024/nov/17/technology-used-to-recreate-richard-iiis-voice-with-yorkshire-accent (accessed on 12 November 2024).