Abstract

Nowadays, many museums publish virtual versions of the artifacts they keep in their collections in different 3D model viewers available on the Internet. However, despite the wide experience that some of them have in this field, it is still possible to find many virtual models that do not meet the desirable requirements for their publication on the Internet, especially regarding the optimization of the mesh and color textures needed for greater efficiency in the visualization and downloading of 3D objects. In this study, a sample of virtual models of objects belonging to multiple archaeological museums from different countries is analyzed. We considered multiple characteristics of the models, such as the number of polygons that compose them, the quality of the meshes, filter effects applied during post-processing, etc. The results of this research indicate that it is still necessary to make a greater effort to improve the training and digital skills of the professionals in charge of the digitization process in this area of cultural heritage.

1. Introduction

The digitization of cultural heritage artifacts has become a fairly widespread activity [1]. 3D digital technologies provide substantial support for strategies aimed at mitigating the deterioration and loss of original materials. Their adoption is driven not only by their efficacy in facilitating the work of conservators while adhering to the principles of compatibility, reversibility, and non-invasiveness but also by the potential for preserving digital models and promoting their dissemination in the scientific community [2]. In parallel, the popularization of the Internet in the last decades of the 20th century brought a new way of disseminating the collections held in museums around the world.

Initially, the promotion of museums relied mainly on photographic images and video recordings; later, the development of online 3D viewers favored the publication of three-dimensional models, which opened new ways of disseminating cultural heritage [3]. The possibility of sharing 3D meshes through display devices and through virtual and augmented reality makes these platforms a very attractive and versatile tool for cultural promotion. Virtual museums enhance the museum experience by providing interactive contact with digital museum artifacts [4]. Users can access the virtual content whenever and wherever they want [5].

Although there are still many museums and institutions that are reluctant to publish virtual versions of pieces from their collections, more and more of them are uploading a sample of objects to Internet platforms with the intention of disseminating their collections and attracting a greater number of visitors to them. Moreover, in recent years, new ways of sharing three-dimensional files over the Internet through virtual reality and augmented reality have been introduced, adding new possibilities for virtualizing cultural heritage [6].

Many projects have been developed in different countries with the aim of creating online institutional repositories of 3D models obtained by digitizing objects or buildings belonging to the cultural heritage. Two of the most notable initiatives of this type are Europeana and the Digital Public Library of America (DPLA). Europeana is an initiative funded by the European Union that encompasses collections from its member countries and has given rise to one of the largest digital libraries in the world.

Among the collections of Europeana, we can find a large number of 3D models of objects and buildings, although the portal does not have its own viewer for this type of file and, in most cases, these are hosted on commercial platforms such as Sketchfab. In other cases, they are only offered for download, so users must open them in a viewer on their computer, cell phone, or tablet, with the consequent file compatibility problems. In addition, in many cases, the models cannot be accessed directly, and access must be requested from the responsible institution.

The DPLA is a nonprofit initiative that provides free and open access to digitized cultural heritage materials from libraries, archives, and museums across the United States. Its mission is to promote education, research, and lifelong learning by democratizing access to digital cultural heritage. The DPLA is supported by a variety of funders, including private foundations, government grants, corporate sponsors, and individual donors. It holds a diverse collection of digital cultural heritage materials in both 2D and 3D formats, including digitized books, photographs, manuscripts, maps, and 3D objects such as sculptures and artifacts.

In addition to the aforementioned projects for the creation of online repositories, several initiatives have been developed for the digitization of cultural heritage, such as 3D-ICONS or CyArk. The 3D-ICONS project was funded by the European Commission, through the Information and Communication Technologies Policy Support Program, for the 3D digitization of archaeological monuments and historic buildings, with the aim of providing content for Europeana [7,8]. CyArk, on the other hand, is a non-profit organization dedicated to digitally preserving cultural heritage sites around the world by using advanced documentation techniques, such as laser scanning, photogrammetry and drone imaging, to create accurate and detailed 3D models of endangered sites, monuments, and structures [9]. By doing so, CyArk aims to ensure the digital preservation of these sites, even if they are at risk of damage, destruction, or natural disasters.

Due to the enormous amount of information from different sources related to cultural heritage, the development of projects to integrate all available data has become a necessity from a scientific point of view. The ARIADNE program is a good example of this type of initiative. It is a program funded by the European Commission with the main objective of unifying archaeological information from all over Europe, breaking down barriers between countries and creating a digital archive to facilitate data retrieval. This program is part of the PARTHENOS project, a research infrastructure aimed at promoting interdisciplinary collaboration between different fields of the humanities, with a scientific and rigorous approach [10].

There are also several open-source frameworks that facilitate the development of interactive web-based presentations with high-resolution 3D models. In the field of cultural heritage in particular, 3DHOP, developed at CNR-ISTI (the Institute of Information Science and Technologies “Alessandro Faedo” of the National Research Council of Italy), was created as a framework specifically designed for this purpose [11]. However, the success of this application has been limited by several factors. One of them may be that 3DHOP was designed to generate code for embedding 3D models on a website, which typically requires institutions to have their own website and server for hosting multimedia files or to rely on external services provided by third-party companies.

To streamline the management and storage of 3D models generated during the process of the digitization of cultural heritage, several institutional repositories have been created, such as Arches in the USA, the National 3D Data Repository in France, and the Archaeology Data Service in the United Kingdom. These repositories provide infrastructure and resources for the preservation and accessibility of 3D models and other digitized data, facilitating data management and storage in the context of cultural heritage digitization efforts.

Arches is an open-source data management platform that is freely available for worldwide organizations to install, configure, and extend according to their specific needs, without any restrictions on its use. Originally developed for the cultural heritage field by the Getty Conservation Institute and World Monuments Fund, Arches is a flexible and customizable platform that caters to a wide range of use cases based on individual requirements [12].

The National 3D Data Repository is a project that centralizes and stores 3D data for use by the scientific, academic, governmental, and business communities. It includes geospatial information such as terrain and building models and is used for applications such as urban planning and data analysis. It is operated by Archeovision [13], in coordination with Huma-Num, a piece of digital research infrastructure for humanities and social sciences supported by the Ministry of Higher Education, Research, and Innovation of France. Huma-Num offers services such as data storage, analysis tools, and training in digital research, aiming to promote collaboration and innovation in the academic community [14].

The Archaeology Data Service (ADS) is a specialized digital service for archaeology based in the United Kingdom that focuses on the preservation and promotion of access to archaeological data and digital resources, including 3D digital content. ADS offers the long-term conservation of archaeological data in a 3D format, as well as the creation of archaeological databases and information systems in this format [15].

Several private platforms for sharing three-dimensional models online, such as Sketchfab [16] or TurboSquid [17], have gained great importance in recent years for the dissemination of cultural heritage due to their simple content publishing system and the wide range of utilities they offer for customizing the visualization and achieving a more accurate depiction of the represented assets. Among these platforms, Sketchfab is particularly noteworthy due to the number of models published and the diversity and importance of the institutions that use it. In addition, Sketchfab allows for the publication of non-downloadable and non-saleable models and has a specific category called Cultural Heritage & History [18]. Another advantage of Sketchfab is its integration with the main social networks, which makes it more accessible to all kinds of audiences. This is perhaps an aspect that open-source projects should consider in order to achieve greater dissemination. On the other hand, Sketchfab offers a greater number of functions and configuration parameters than other tools designed specifically for the cultural heritage field, such as 3DHOP, although the latter has measurement and plane-sectioning tools that are interesting for technical studies and are not present in Sketchfab [19].

The main difference between institutional repositories, such as Arches or the National 3D Data Repository, and private hosting services lies in their primary purpose. Institutional repositories focus on the long-term preservation and organization of 3D data related to cultural heritage, providing tools for cataloging, documenting, and storing 3D models. Hosting services, on the other hand, prioritize dissemination and user engagement, offering online platforms for uploading, storing, and sharing 3D models with a wider audience, emphasizing accessibility, ease of use, and sharing capabilities. Both serve important roles: institutional repositories are used for internal management and archival purposes, whereas hosting services are geared towards public dissemination and engagement with 3D content.

The development of the aforementioned initiatives has greatly facilitated the dissemination of 3D content related to cultural heritage. However, the inherent complexity of the digitization process continues to be a limiting factor for institutions when it comes to disseminating their collections on the Internet, as it requires specialized personnel [20] and consumes a considerable amount of time and financial resources. These steps cannot be skipped, however: the digitization, the subsequent processing of the models, and the correct configuration of the parameters in the visualization platform are all crucial in determining the quality and fidelity of the 3D models generated.

1.1. 3D Digitization Process

The main techniques for digitizing artifacts belonging to archaeological museum collections are close-range photogrammetry and short-range scanning. In recent years, the use of the former has become widespread because it is a relatively inexpensive method of digitization [21] and also allows for obtaining a high-quality color texture of the object represented. 3D scanners, on the other hand, are a less widespread solution, mainly due to their cost [22].

However, the correct virtualization of 3D models calls for a series of technical skills that the people in charge of these tasks do not always possess. The simplification of digitization processes in recent decades has given many professionals in the field of cultural heritage conservation access to these technologies without extensive knowledge of 3D modeling, so they tend to use the model obtained by scanning or photogrammetry directly, without performing any post-processing to optimize the asset for publication.

The process of adapting a digitized model to the medium in which it will be published is relatively complex. First of all, in most cases it is necessary to drastically reduce the number of polygons of the mesh, since both 3D scanning and photogrammetry now record even the smallest details of a figure with high precision and therefore produce very high-resolution meshes. Polygon reduction is essential to ensure the smooth real-time performance of the 3D model within the constraints of network data transmission speeds. Through this process, a high-poly model is transformed into a low-poly model. Low-poly models are optimized for real-time applications such as 3D object viewers, virtual reality, and augmented reality, where rendering speed and performance are critical. The reduced polygon count allows for faster rendering and smoother movement, which makes these models well suited to interactive applications. In contrast, high-poly models have a higher level of detail and produce more realistic representations, but they require more computational resources and longer rendering times.
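
To give a sense of scale, the following back-of-the-envelope sketch estimates how polygon reduction affects the amount of geometry data a viewer has to download. All figures are illustrative assumptions, not values from this study: positions are stored as three 32-bit floats, indices as three 32-bit integers, there is roughly one vertex for every two triangles (as in a closed triangle mesh), no compression is applied, and the connection speed of 20 Mbit/s is hypothetical.

```python
def mesh_payload_bytes(triangles: int) -> int:
    """Rough uncompressed size of an indexed triangle mesh (positions and indices only)."""
    vertices = triangles // 2          # assumption: about one vertex per two triangles
    position_bytes = vertices * 3 * 4  # three float32 coordinates per vertex
    index_bytes = triangles * 3 * 4    # three uint32 indices per triangle
    return position_bytes + index_bytes


def download_seconds(payload_bytes: int, megabits_per_second: float) -> float:
    """Transfer time for a payload at a given bandwidth, ignoring latency and overhead."""
    return payload_bytes * 8 / (megabits_per_second * 1_000_000)


# Hypothetical polygon counts for a raw scan and its optimized version.
high_poly = mesh_payload_bytes(2_000_000)
low_poly = mesh_payload_bytes(50_000)

for label, size in (("high-poly", high_poly), ("low-poly", low_poly)):
    print(f"{label}: {size / 1e6:.1f} MB, {download_seconds(size, 20):.2f} s at 20 Mbit/s")
```

Under these assumptions, the geometry payload scales linearly with the triangle count, so the reduction ratio of the mesh translates directly into the reduction in transfer time. Texture images, which this sketch ignores, often add a comparable or larger share of the download.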

In many cases, the resolution of the 3D model can be reduced with the software bundled with the scanner or with the photogrammetry software. However, these programs usually only reduce the number of triangles of the figure and rarely offer the possibility of converting them into quads, i.e., four-sided polygons, or of arranging the flow of polygons in a more logical way. The correct organization of the faces of the mesh, known as retopology, is important for two reasons: a good distribution of the polygons maintains the shape of the figure with a smaller number of faces, and it facilitates the UV mapping operations. UV mapping is the process by which each polygon of the mesh is matched to a certain area of the image containing the color of the 3D model to be displayed on its surface. This process is essential for displaying the colors of the virtualized object correctly, and it can be carried out automatically or manually. The programs used to process the information recorded by the 3D scanner, or by the camera in the photogrammetry process, usually perform automatic mapping, which results in texture images whose layout has no order that a human can readily identify and which are therefore difficult to edit manually.
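
The correspondence that UV mapping establishes can be sketched in a few lines. The example below is a simplified illustration, not the procedure of any particular program: it assumes UV coordinates in the range [0, 1] with the origin at the bottom-left corner of the image, nearest-pixel lookup, and a tiny hypothetical 2 × 2 texture whose "colors" are plain strings.

```python
def interpolate_uv(uv_a, uv_b, uv_c, weights):
    """UV coordinate of a point inside a triangle, given its barycentric weights."""
    wa, wb, wc = weights
    u = wa * uv_a[0] + wb * uv_b[0] + wc * uv_c[0]
    v = wa * uv_a[1] + wb * uv_b[1] + wc * uv_c[1]
    return u, v


def uv_to_pixel(u, v, width, height):
    """Convert a UV coordinate to integer pixel indices (column, row)."""
    x = min(int(u * width), width - 1)
    # Image rows grow downward while v grows upward, so v is flipped.
    y = min(int((1.0 - v) * height), height - 1)
    return x, y


def sample(texture, u, v):
    """Nearest-pixel lookup in a texture stored as a list of rows (top row first)."""
    height, width = len(texture), len(texture[0])
    x, y = uv_to_pixel(u, v, width, height)
    return texture[y][x]


# Hypothetical 2 x 2 color texture and the UVs assigned to one triangle's corners.
texture = [["red", "green"],
           ["blue", "white"]]
triangle_uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]

# A surface point described by barycentric weights relative to the three corners.
u, v = interpolate_uv(*triangle_uvs, weights=(0.5, 0.25, 0.25))
color = sample(texture, u, v)
```

Each point on the surface of a polygon thus inherits a position in the image from the UV coordinates stored at the polygon's corners. Real viewers interpolate between neighboring pixels rather than picking the nearest one.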

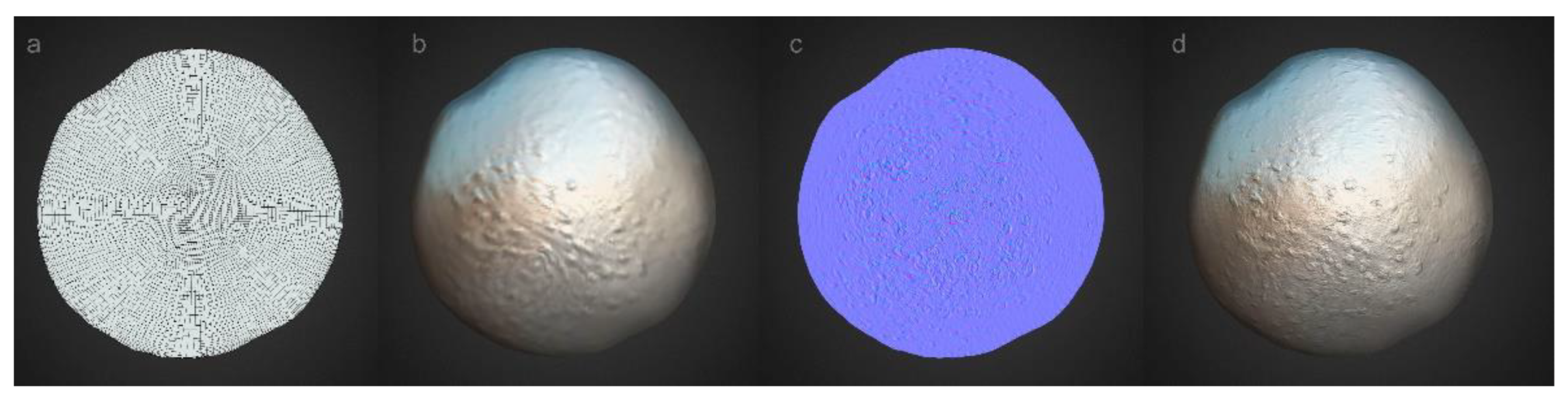

As we have seen, polygon reduction yields a mesh that can be quickly transferred from the website where it is published to the device on which it is to be displayed. However, a drastic reduction in the number of polygons of a mesh inevitably results in the loss of surface details, which is undesirable in a published model. Fortunately, there is a method for ensuring that the reduced model retains the appearance of the original high-resolution model. The usual way to achieve this is to use a special texture image known as a normal map. This is an image, independent of the one used to color the virtual model, which is assigned to a 3D model so that it displays on screen surface reliefs that the mesh does not actually have. To generate this image, the 3D modeling software calculates, at each point, how the surface of the high-resolution mesh is oriented relative to the low-resolution mesh and converts this orientation into chromatic values stored in the red, green, and blue channels of the image. Each pixel of the normal map corresponds to a certain region of the polygonal mesh, a correspondence established beforehand through the UV mapping process. Once this image is created, it can be assigned to the low-resolution model, which will then be displayed on the screen with an appearance very similar to that of the high-resolution model (Figure 1). In addition, the mesh can be easily transferred to other programs or to an online platform for sharing 3D models because its file size is very small.

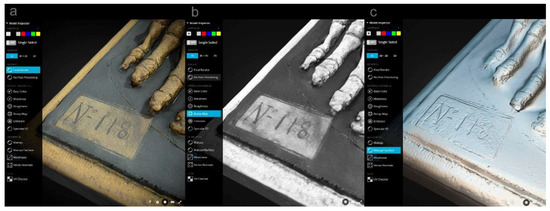

Figure 1. Use of normal maps for the representation of fine reliefs in a 3D model: (a) low-resolution polygon mesh in wireframe mode; (b) low-resolution polygon mesh in solid mode without a normal map; (c) model showing the normal map; (d) low-resolution polygon mesh in solid mode with a normal map applied.
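
As a minimal sketch of how a surface orientation becomes a color, the following code assumes the widely used convention in which the X, Y, and Z components of a unit normal vector are remapped from [-1, 1] to the red, green, and blue channels of an 8-bit image. The function names are hypothetical, and individual programs may differ in details such as the direction of the green channel.

```python
import math


def encode_normal(normal):
    """Map a surface normal (x, y, z) to an 8-bit (R, G, B) triple."""
    length = math.sqrt(sum(c * c for c in normal))
    unit = [c / length for c in normal]
    # Remap each component from [-1, 1] to [0, 255], rounding to the nearest integer.
    return tuple(int((c + 1.0) * 0.5 * 255.0 + 0.5) for c in unit)


def decode_normal(rgb):
    """Recover an approximate unit normal from an 8-bit (R, G, B) triple."""
    vec = [c / 255.0 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in vec))
    return tuple(c / length for c in vec)


# A normal pointing straight out of the surface: no relief at this pixel.
flat = encode_normal((0.0, 0.0, 1.0))

# A normal leaning toward +X, as on the side of a small ridge.
tilted = encode_normal((1.0, 0.0, 1.0))
```

With this encoding, an undisturbed surface maps to a color with mid-range red and green and full blue, which is why the flat regions of a normal map such as the one in panel (c) share one uniform tone while relief details appear as local shifts in the red and green channels.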

A similar technique, somewhat simpler and currently less used, is the bump map. It works in a similar way, but the image stores the difference in volume between the two meshes at each point only as a grayscale value and does not encode the orientation of the surface in each area, so it is less accurate. A further disadvantage arises when the bump map is derived from the color of the object: the sensation of volume then depends on the tonal value at each point, which leads to misinterpretation of the volume wherever the represented area is painted. Thus, areas painted in black are represented as depressed areas, and light-colored ones appear excessively elevated (Figure 2).

Figure 2. Problems due to the use of bump maps based on the color of the object: (a) final model in Sketchfab.com; (b) bump map; and (c) model without color texture. The label number looks sunken due to its dark color, but in the original model, it is flat.
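
The artifact described in Figure 2 can be reproduced with a toy example. The sketch below assumes that a renderer treats the grayscale value as a height and derives the shading normal from its slope using central differences; this is a common approach, but the exact formula varies between programs, and the 3 × 3 "texture" is hypothetical.

```python
import math


def bump_to_normal(height, x, y, strength=1.0):
    """Shading normal at pixel (x, y) of a grayscale height map (list of rows)."""
    rows, cols = len(height), len(height[0])
    # Central differences, clamped at the image borders.
    left = height[y][max(x - 1, 0)]
    right = height[y][min(x + 1, cols - 1)]
    up = height[max(y - 1, 0)][x]
    down = height[min(y + 1, rows - 1)][x]
    dx = (right - left) * 0.5 * strength
    dy = (down - up) * 0.5 * strength
    # The normal of a height field z = h(x, y) is proportional to (-dh/dx, -dh/dy, 1).
    nx, ny, nz = -dx, -dy, 1.0
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / length, ny / length, nz / length)


# A geometrically flat surface whose color texture has one dark (painted) pixel.
painted = [[1.0, 1.0, 1.0],
           [1.0, 0.0, 1.0],
           [1.0, 1.0, 1.0]]

# The same surface with a uniform color.
uniform = [[1.0, 1.0, 1.0] for _ in range(3)]

beside_paint = bump_to_normal(painted, 0, 1)
beside_plain = bump_to_normal(uniform, 0, 1)
```

On the uniformly colored surface the normal points straight out, whereas next to the dark pixel it leans toward the paint, so the viewer shades the area as the rim of a pit even though the real surface is flat.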

Another task required for the correct visualization of the virtualized object in a 3D model viewer is the configuration of the materials. Materials, also referred to as “shaders”, are responsible for rendering the visual appearance of objects, reflecting the characteristics of the substances that compose them. Some of the most frequently considered properties when configuring materials are the following: the color, the amount of light reflected by the surface, the surface roughness, the metallic character, the transparency and translucency (subsurface scattering), and the ambient occlusion. Each of these characteristics is controlled by a section of the material called a channel. While some 3D models are composed of a single material that is simple to represent, others have a more complex composition and require more sophisticated tuning to faithfully represent the appearance of the real object. Often, it is necessary to create specific texture images, also called maps, which allow different values of a certain feature to be assigned to each region of the 3D object. Thus, specular maps determine which areas of the mesh reflect more light and which reflect less, depending on whether their pixels have lighter or darker values, respectively. Similarly, roughness maps indicate on a gray scale how concentrated or diffuse the highlights will be in each area of the mesh. In these maps, darker areas represent smoother regions that produce more concentrated highlights, and lighter areas indicate rougher regions with more diffuse highlights. There are also maps that indicate which parts of the model are made of a metallic material or which are more transparent. In transparency maps, the darkest regions are those most easily penetrated by light, and the lightest are the most opaque; in metal maps, the whiter areas are more metallic, and the black ones have a less metallic character.

Another commonly employed image texture in computer graphics is the ambient occlusion map. This texture encodes the soft shadows that occur in the crevices and corners of 3D objects and enhances the realism of the object by mimicking the behavior of light hitting surfaces close to each other. Transparent materials, in turn, are usually represented by transmission maps, which indicate which areas are opaque and which allow light to pass through the object. Finally, for objects composed of translucent materials, the subsurface scattering filter can be used to reproduce translucency in the thinner areas of the model. In this phenomenon, the light incident on the object is scattered inside it and, on leaving, shows a characteristic color that depends on the material composition of the object. It is typical of materials such as marble, wax, porcelain, and some plastics.

Likewise, the normal maps mentioned above are assigned to the material. These maps contain the volume details that the low-poly mesh lacks.

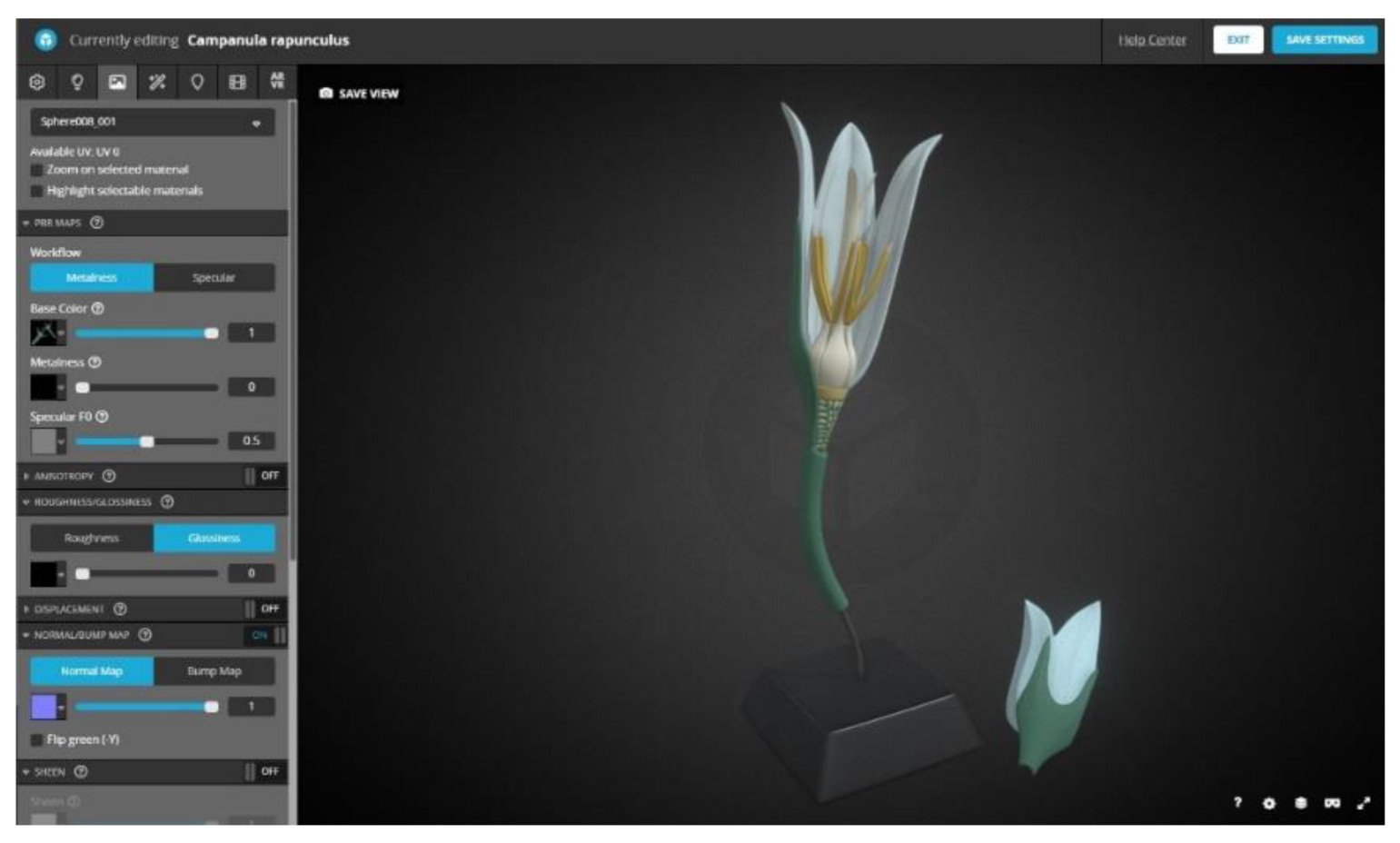

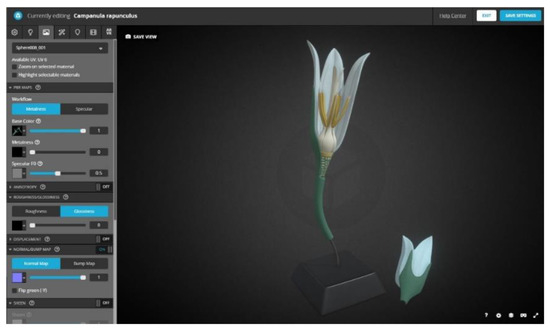

All the materials used in the model must be configured in the program or application in which the model will be reproduced. In each material channel, the appropriate intensity value must be set for the entire mesh if a global adjustment is desired, or a texture map is assigned if a different value is required for each area of the mesh (Figure 3).

Figure 3. Configuration of model materials on the Sketchfab.com website.
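
The choice between a global value and a texture map in each channel can be modeled with a small data structure. The sketch below is purely illustrative: the `Channel` class, its field names, and the example values are hypothetical and do not correspond to the Sketchfab interface or to any real API.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Channel:
    """One material channel: either a single global value or a per-pixel map."""
    value: Optional[float] = None
    texture: Optional[Sequence[Sequence[float]]] = None

    def __post_init__(self):
        # Exactly one of the two sources must be provided.
        if (self.value is None) == (self.texture is None):
            raise ValueError("set exactly one of 'value' or 'texture'")

    def sample(self, x: int, y: int) -> float:
        """Channel intensity at pixel (x, y); the global value ignores the position."""
        if self.texture is not None:
            return self.texture[y][x]
        return self.value


# Hypothetical material: uniform roughness, metal only in one region of the mesh.
material = {
    "roughness": Channel(value=0.6),
    "metalness": Channel(texture=[[0.0, 1.0],
                                  [0.0, 0.0]]),
}

roughness_here = material["roughness"].sample(1, 0)
metalness_here = material["metalness"].sample(1, 0)
```

A global value is sufficient when the whole object is made of one homogeneous material; a map is needed as soon as the property varies across the surface, as with a ceramic vessel that has metallic fittings.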

As this material configuration requires some technical knowledge of 3D modeling, it is not always carried out correctly in the models published on the Internet, so the final result is often not fully satisfactory.

In addition to model optimization and material configuration, the most popular online 3D model viewers allow for the customization of a large number of scene properties. Thus, it is possible to choose the spatial position, focal length, type of background, or scene illumination and apply a wide variety of filters to improve the appearance of the virtualized object. Among the most important, we can highlight the focus filter to increase the sharpness of the image, the chroma, brightness, and saturation adjustments to globally modify the color of the scene, or the ambient occlusion filter to darken the hollow areas of the model without the need to use a specific ambient occlusion map. Also widely used in this type of 3D model viewer are the depth-of-field filters for determining the amount of blur to be produced depending on the distance to the focus point or the vignetting filter, used to create a fading area towards the periphery of the scene, and also, although to a lesser extent, the bloom filter, for giving a brighter appearance to objects.

In short, there are multiple filters and effects that can be used to represent artifacts more faithfully in 3D model viewers, but they require a knowledge of material configuration that professionals in the conservation and restoration of cultural heritage usually lack.

Another interesting technique for generating graphic documents with a three-dimensional appearance from cultural heritage artifacts is known as Reflectance Transformation Imaging (RTI), which provides a highly accurate image in which the lighting can be modified interactively by moving the cursor on the screen to reveal reliefs that are not visible to the naked eye. The process consists of taking multiple photographs with a DSLR camera in a fixed position while varying the orientation of the light source between shots. Subsequently, the photographs are processed in specific software to obtain a file that can be visualized using an RTI viewer [23].

1.2. Evaluation Methods for the Optimization of 3D Models

Guidelines related to 3D models in the field of cultural heritage are essential for ensuring the responsible and effective use of digital technologies. These guidelines emphasize the accuracy, authenticity, standardization, documentation, and long-term preservation of digital data, including 3D models. In this regard, the 3D reconstruction and digitization of cultural heritage objects must comply with the London Charter and the Seville Principles [24]. One of the fundamental objectives of the London Charter is to “Promote intellectual and technical rigor in digital heritage visualizations”. However, this document also states that both the 3D reconstruction and the configuration of the generated three-dimensional models for visualization are processes in which interpretative or creative decisions are usually made. For this reason, these processes must be properly documented so that the relationship between the virtual models and the artifacts studied, as well as with the documentary sources and the inferences drawn from them, can be correctly interpreted [25]. In the same way, Principle 4 of the Seville Principles addresses authenticity, indicating that when reconstructing or recreating artifacts from the past, it should always be possible to know what is real and what is not [26]. It is also very important to develop methods for identifying degrees of uncertainty in 3D models, such as the use of icons, graphic styles, or metadata [27].

Historic England [28] and Cultural Heritage Imaging [29] also provide guidelines that align closely with the Seville Principles in the field of cultural heritage preservation. They emphasize the importance of repair and maintenance techniques that respect the authenticity and integrity of historic buildings and archaeological sites and the use of advanced technologies to document and digitally preserve cultural heritage artifacts and sites. Together, these frameworks provide a comprehensive approach to safeguarding and promoting cultural heritage in a manner that respects its authenticity, integrity, and social significance.

To ensure that optimized 3D models are displayed correctly and without excessive distortions that degrade the user experience, different systems have been developed to analyze the quality of the modified models. The most important are the metrics for mesh quality assessment (MQA), based on the analysis of the polygonal mesh, and point cloud quality assessment (PCQA), focused on the distribution of the points recorded by the capture device, whether a camera or a scanner. Many of these methods are based on analysis techniques designed for 2D images and videos, although the complexity of 3D models makes the task considerably harder. Subjective quality assessment relies on the judgment of human observers, while objective quality metrics use automated calculations to determine the quality of models. Quality assessment methods are also classified according to whether they use a reference, that is, the unedited model, against which the degree of distortion of the edited model is measured. Currently, subjective analyses are mostly performed by means of full-reference quality assessment, which compares distorted models with undistorted reference models and only analyzes data related to the mesh geometry.

Some authors have proposed methods for evaluating the quality of 3D models based on the comparison of 3D meshes (model-based metrics), generally using a high-quality reference model and another model that has been distorted or subjected to polygon reduction [30,31]. Other systems compare rendered images obtained from reference models with those obtained from modified models (image-based metrics) [32,33,34,35], and comparisons between these evaluation methods have also been established [36,37]. However, all of these systems were designed to compare altered meshes with the original unaltered ones, so they are not valid for establishing the quality of models published on the Internet, where there is usually no reference mesh to compare them with.
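
To illustrate why model-based metrics depend on a reference, the sketch below computes the Hausdorff distance between the vertex sets of two meshes. This classic geometric measure is used here only as a simple stand-in; it is not claimed to be the metric proposed in any of the works cited above, and the brute-force search and toy coordinates are assumptions chosen for brevity.

```python
import math


def one_sided_distance(source, target):
    """Largest distance from any point in source to its nearest point in target."""
    return max(min(math.dist(p, q) for q in target) for p in source)


def hausdorff_distance(mesh_a, mesh_b):
    """Symmetric Hausdorff distance between two vertex sets."""
    return max(one_sided_distance(mesh_a, mesh_b),
               one_sided_distance(mesh_b, mesh_a))


# Hypothetical vertex sets: a reference mesh and a simplified copy missing one vertex.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
simplified = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]

error = hausdorff_distance(reference, simplified)
```

The function cannot be evaluated at all without the `reference` argument, which is precisely the situation of a model downloaded from a public platform when the original high-resolution mesh is unavailable.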

The advances achieved in recent years in machine learning have facilitated the design of learning-based no-reference quality assessment metrics oriented fundamentally toward the analysis of the geometric characteristics of 3D models [38,39,40]. Recently, a new no-reference quality assessment metric has been published that extracts geometry and color features from point clouds and 3D meshes based on natural scene statistics, an approach grounded in the fact that the human perceptual system is fundamentally adapted to the interpretation of the natural environment [41].

However, automated methods are difficult to apply in the analysis of models of cultural heritage artifacts published on Internet platforms. First, the systems currently available for evaluating the quality of 3D meshes are rather complex to apply in practice and do not allow a large sample of models to be evaluated in an acceptable time. Second, the methods used to represent shape and color vary from one model to another, which complicates any comparison between them. Finally, when evaluating virtual models published on Internet platforms, it is usually not possible to download the high-resolution model, and only the optimized model is available, so the fidelity of each mesh to a reference object cannot be assessed. Therefore, given the impossibility of having a high-quality reference model of each of the artifacts represented, it is necessary to evaluate the quality of the model solely from the data available in the virtual model.

Since there is not yet an automated method for analyzing the quality of optimized 3D models for which a high-resolution reference model is not available, this work has opted for a visual analysis performed by an expert in the 3D modeling and 3D digitization of cultural heritage. Through visual inspection of the mesh, with the color of the 3D model toggled on and off, the details recorded in the photographic texture, such as cracks, pores, wrinkles, or small folds, were identified and compared with the reliefs present at the same location in the uncolored mesh to see whether the two corresponded adequately. This is not a comparison between models but rather a check that the details expected from the color texture are present in the polygon mesh.

1.3. Cultural Heritage and 3D Model Visualization Platforms

Nowadays, there are many institutions that have published part of their collections on the Internet, including the British Museum (London, UK), the Museo Arqueológico Nacional (Madrid, Spain), the Real Academia de Bellas Artes de San Fernando (Madrid, Spain), the Harvard Museum of the Ancient Near East (Cambridge, MA, USA), the Ministry of Culture (Lima, Peru), the Royal Museums of Art and History (Brussels, Belgium), the Museum of Origins of the Sapienza University of Rome (Rome, Italy), and the Statens Museum for Kunst (National Gallery of Denmark, Copenhagen, Denmark). In addition, there are many independent companies and individuals that publish countless models of figures and objects belonging to other museums, such as the Louvre (Paris, France), the Prado Museum (Madrid, Spain), the Hermitage (St. Petersburg, Russia), and the Vatican Museums (Vatican City, Italy).

In this research, we analyze a large sample of objects belonging to archaeological museums from different countries that have been published on Sketchfab.com, in order to check whether the models uploaded to this platform are correctly optimized and whether the configuration of materials and effects is adequate for their exhibition through the 3D model viewer. Sketchfab.com is currently the most widely used website for sharing this type of file and has more than 10 million members and more than 5 million 3D models. The platform offers numerous possibilities: models can be visualized on screen, on personal computers as well as on cell phones or tablets, and also in virtual reality glasses or devices compatible with augmented reality. In addition, the possibilities for configuring the 3D model are quite extensive, including numerous filters and effects.

Some papers have been published on the usefulness of online platforms for the dissemination of 3D models of artifacts belonging to cultural heritage. Such studies analyze the characteristics of these platforms and the availability of certain 3D model customization functions in these applications that allow for improving their authenticity [18,42]. Statham analyzed different 3D model viewers present on the Internet and compared their features in order to determine whether they contributed to improving the scientific rigor of the virtualized artifacts, concluding that, indeed, some of them did improve the scientific quality of the models, although they were used infrequently. However, this author does not explain how she found these features to be underutilized and only discusses whether the platforms studied possess such customization features and how they contribute to improving model fidelity. For this reason, the objective of this study is to check the quality of 3D models of cultural heritage artifacts published in Sketchfab and the degree of use of customization functions on this platform to determine whether they are taking full advantage of the possibilities they offer to achieve a faithful representation of virtualized artifacts.

2. Materials and Methods

For the selection of the study sample, we took those 3D models of artistic objects preserved in archaeological museums, discarding excavation models, bone remains, writing tablets, etc.

In order to select the museums, we stratified the list of institutions with these characteristics that are active members of Sketchfab.com, taking five of them within each of the following categories: (1) museums that have published between 10 and 50 models; (2) museums with between 51 and 250 models; (3) museums with more than 250 models.

Once the selection of museums was established, we took 10 objects from each museum, choosing them from among those that had received the highest number of visits and trying to select objects of various shapes and sizes.
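The selection procedure described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function names and the `views` key are hypothetical, and the final choice of objects of varied shapes and sizes was a manual judgment that the code does not attempt to reproduce.

```python
def stratum(published_models):
    """Return the stratum of a museum by its number of published models.

    1: 10 to 50 models, 2: 51 to 250 models, 3: more than 250 models.
    Museums with fewer than 10 models fall outside the strata (None).
    """
    if published_models < 10:
        return None
    if published_models <= 50:
        return 1
    if published_models <= 250:
        return 2
    return 3


def most_visited(models, k=10):
    """Return the k models with the highest view counts.

    `models` is a list of dicts with a hypothetical "views" key.
    Python's sort is stable, so ties keep their input order.
    """
    return sorted(models, key=lambda m: m["views"], reverse=True)[:k]


if __name__ == "__main__":
    # Hypothetical museum with 120 published models.
    print(stratum(120))
    sample = [{"name": f"object-{i}", "views": i * 100} for i in range(15)]
    print([m["name"] for m in most_visited(sample)])
```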

For each museum, we recorded the number of models published in Sketchfab [16], the approximate percentage of downloadable models, the number of overall visits, the number of likes, and the number of followers. Additionally, the period of time for which the institution has been a member of the website was documented.

In order to conduct a comprehensive analysis of each of the 3D models, a thorough review was undertaken, encompassing the information provided on the website as well as the main configuration parameters available through the 3D Settings section of the platform. These parameters enable adjustments to be made to the appearance of the models during the publication process and therefore play a crucial role in achieving a faithful and accurate representation of the object depicted. The various aspects that were analyzed include: (1) the number of polygons and the topology or polygon arrangement; (2) the existence and type of UV mapping (manual or automatic); (3) the use of texture maps for the definition of the materials composing the artifact, such as specular maps, roughness, metalness, ambient occlusion, normal maps, bump maps, and transmission maps; (4) the use of the effects filters available on the platform, such as Sharpen, Ambient occlusion, Depth of field, Vignette, Bloom, Tone mapping (including exposure, brightness, contrast, and saturation), Color balance, and Antialiasing; (5) the use of shadows projected on the ground; (6) inclusion of the institution’s logo on the screen; (7) use of labels with information about the model; (8) use of animations.

In order to analyze the optimization of the meshes for publication in web viewers, we considered, on the one hand, the recommendations offered on the Sketchfab.com help web page for cell phone users. Specifically, we established a limit of 500,000 polygons to consider that a model was suitable for exploration with this type of device. The number of faces of a 3D mesh is the most important factor for achieving an adequate download speed, as well as a correct navigation of the scene. On the other hand, the use of normal maps or bump maps was taken into account to compensate for the loss of definition of surface reliefs due to the reduction in polygons. Thus, we established three categories of 3D objects: (1) models with more than 500,000 polygons; (2) models with fewer than 500,000 polygons that have normal maps or bump maps; (3) models with fewer than 500,000 polygons without normal maps or bump maps.
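As an illustration, the three categories can be expressed as a small classification function. The sketch below is not part of the original workflow; the `Model` class and its field names are hypothetical, and a mesh with exactly 500,000 polygons is assumed here to fall within the limit, a boundary case the text does not define.

```python
from dataclasses import dataclass

POLYGON_LIMIT = 500_000  # threshold adopted in this study for mobile viewing


@dataclass
class Model:
    name: str              # hypothetical identifier
    polygons: int          # number of faces in the published mesh
    has_relief_map: bool   # True if a normal map or a bump map is used


def optimization_category(model):
    """Return 1, 2, or 3 following the three categories defined in the text."""
    if model.polygons > POLYGON_LIMIT:
        return 1  # heavy mesh, regardless of the maps used
    if model.has_relief_map:
        return 2  # light mesh compensated by a normal or bump map
    return 3      # light mesh without compensation


if __name__ == "__main__":
    examples = [
        Model("heavy scan", 12_200_000, False),
        Model("optimized vase", 120_000, True),
        Model("plain decimated mesh", 8_400, False),
    ]
    for m in examples:
        print(m.name, "-> category", optimization_category(m))
```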

A score was assigned to each model according to a scale of 0 to 10, where 0 corresponds to the absence of representation of fine details such as those mentioned in the polygonal mesh compared to those observed in the photographic texture and 10 corresponds to their complete and accurate representation. In this scoring, no distinction was made between the details observed in the mesh itself and those provided by the normal or bump map, since in both cases, their visual appearance is similar.

For each of these categories, we calculated the mean scores given for the morphological definition of the 3D mesh and for the definition of the color details. The mean values for the number of polygons and the file size were also calculated. We also recorded, whenever available on the web, the type of technique used to digitize the artifacts, 3D scanning or photogrammetry, as well as the software used for data processing and post-processing.
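The per-category averages can be computed as in the following sketch. The records are invented for illustration and are not data from this study; the tuple layout (category, morphology score, color score, polygon count, file size in MB) is likewise an assumption.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records, NOT data from the study:
# (category, morphology score, color score, polygons, file size in MB)
records = [
    (1, 8, 9, 1_200_000, 120.0),
    (1, 9, 8, 800_000, 95.0),
    (2, 8, 9, 150_000, 30.0),
    (3, 4, 8, 60_000, 12.0),
    (3, 5, 9, 40_000, 8.0),
]


def category_means(rows):
    """Group rows by category and average every remaining column."""
    grouped = defaultdict(list)
    for category, *values in rows:
        grouped[category].append(values)
    return {
        category: tuple(mean(column) for column in zip(*items))
        for category, items in sorted(grouped.items())
    }


if __name__ == "__main__":
    for category, means in category_means(records).items():
        morphology, color, polygons, size_mb = means
        print(category, morphology, color, polygons, size_mb)
```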

In addition, an evaluation of the volumetric definition of the model was made, assigning values between 0 and 10, where 0 was the minimum definition of fine details and 10 was the maximum representation of fine details. In those cases where the digitizing technique was deficient and there were obvious meshing errors, the score was penalized according to the severity of the errors. The resolution and quality of the chromatic texture of the object were also evaluated, with 0 being the value assigned to models with an absence of definition of fine color details or chromatic alterations and 10 being given to models with the highest resolution and quality of the chromatic texture of the object.

Finally, we determined whether there was information about the artifact accompanying the model and whether a link to another website with more information about the artifact was included.

3. Results

We analyzed a total of 150 3D models, belonging to 15 different museums. The selected models ranged from 8400 to 12,200,000 polygons. There were 94 meshes with fewer than 500,000 faces and 56 meshes with more than 500,000 faces. The weight of the project files, containing the 3D meshes together with all the texture maps used, ranged from 1 to 294 MB.

Model retopology was used in a small number of cases, specifically in 31 models (20.7%), and was especially scarce in those objects with complex and intricate shapes.

Of the 150 models analyzed, only 84 of them (56%) used normal maps or bump maps as a strategy to optimize the number of polygons in the mesh (55 used normal maps and another 29 used bump maps), while 66 (44%) did not use either map.

The UV mapping observed in all the models was most probably performed automatically, because the seams or cuts made to unwrap the texture image onto a plane were located in highly visible places, where they would not be expected had this task been performed manually.

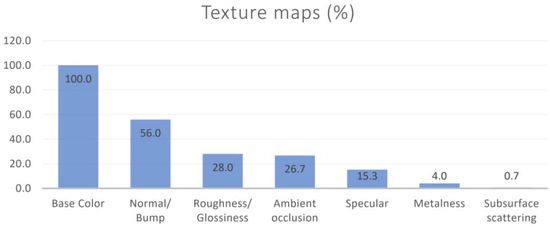

As for other texture maps intended to modify specific regions of the model and the characteristics of the materials, the percentages of use were as follows: specular (15.3%), roughness/glossiness (28%), metalness (4%), ambient occlusion (26.7%), and subsurface scattering (0.7%) (Figure 4).

Figure 4.

Use of different types of texture maps in the 3D models analyzed on the Sketchfab platform expressed as a percentage.

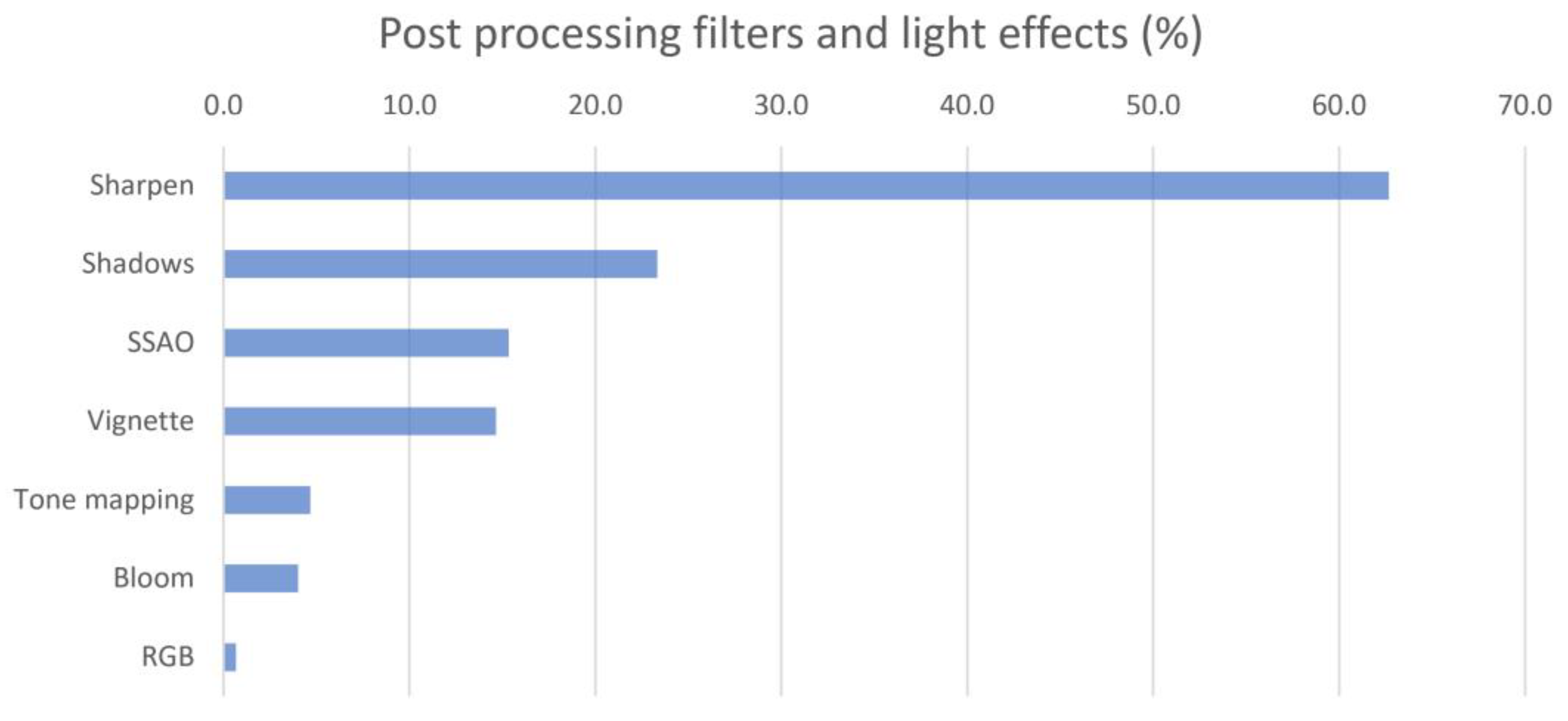

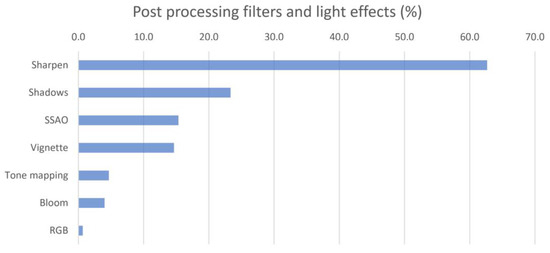

The effects filters available on the web were used in many models. Thus, the Sharpen filter was used in 62.7% of the cases, Ambient occlusion was used in 15.3%, Tone mapping was used in 4.7%, Bloom was used in 4%, and Color balance was used in 0.7%. In addition, the Ground shadow lighting effect, which is used to create the effect of a shadow cast on the ground, was used in 21.3% of the models, and the Vignette filter, used to create a black fading effect towards the edges of the scene, was used in 14.7% (Figure 5).

Figure 5.

Main types of post-processing filters used by the institutions included in the study in the 3D models currently published on Sketchfab.

The introduction of an institutional logo within the viewer was detected in 38% of the 3D objects.

The inclusion of animated elements was found in only 4 models (2.7%), and the presence of interactive notes with information about the model was observed in 24 of them (16%).

The rating given to the models in terms of the quality of the morphological details represented was high in general terms, with an average of 7.6 points. The highest rating corresponded to models with normal maps or bump maps, with an average of 8.3 points, followed by models with more than half a million polygons, which obtained an average of 8.1 points. The worst-rated were models with fewer than half a million polygons that did not use normal maps or bump maps, where the average score was 4.3 points.

The scores obtained by the models in relation to the color definition in the three groups considered were 9.5 in the group using normal maps or bump maps, 8.5 in the group with more than half a million polygons, and 8.6 in the group with fewer than half a million polygons without using normal maps or bump maps.

As for the information on the virtualized artifact, 74.7% of the models included data on the artifact represented, and 51.3% of them had a link to a website with extended information on the object.

Only half of the models studied (51.3%) specified the digitization technique used, close-range photogrammetry being the method chosen in most cases. Only one of the museums indicated that a hand-held 3D scanner was used to carry out this task, the model used being the Artec Space Spider (Artec 3D, Luxembourg, Luxembourg).

Some of the museums analyzed gave information about the software used for digitization, although generally without specifying the version. According to the data published together with the models, the most commonly used software were the following: Photoscan/Metashape, on 60 models from 6 different museums; 123D Catch, on 6 models from 1 museum; Reality Capture, on 10 models from 1 museum. In addition, the use of other programs for editing and optimizing models was detected in some institutions, including: Blender (used in three museums), ZBrush (used in two museums), Maya, and Substance Painter (used in one museum each).

4. Discussion

Despite the extensive experience of some museums in the virtualization of their heritage collections (almost a decade), many of the published models have not undergone a mesh optimization process that allows for more efficient visualization and downloading of the models. There are too many models with an excessive number of polygons to be comfortably viewed online, especially on portable devices such as cell phones and tablets. Other models, however, have an insufficient volumetric resolution because the number of polygons is too low and is not compensated for by the use of normal or bump maps to represent fine details, as too few institutions use this strategy to enhance the appearance of virtualized objects. Furthermore, in cases where bump maps are used, they are generally created from the color information in the image, taking advantage of the fact that the more sunken areas are more shaded and therefore darker. However, this strategy has the problem that if the model has dark colors, these areas will look falsely sunken, and, conversely, light-colored areas will look artificially elevated. This will be especially visible in areas where there are cast shadows, which will give the false impression of being more sunken than they should be. Certain problems have also been detected in some normal maps, which showed erroneously flat areas or the inversion of the reliefs, with hollow areas protruding and vice versa.
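The pitfall of deriving bump maps from color can be shown with a minimal sketch. It assumes, for illustration only, that the height value is taken directly from the luminance of each texture pixel (Rec. 709 weights); the tools actually used by the institutions may apply a different conversion, and the function names are hypothetical.

```python
def luminance(rgb):
    """Relative luminance of an (r, g, b) pixel with components in 0..1."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b  # Rec. 709 weights


def bump_from_color(texture):
    """Derive a height map from a color texture: darker pixels become lower.

    `texture` is a list of rows of (r, g, b) tuples; the result has the
    same shape and holds one height value in 0..1 per pixel.
    """
    return [[luminance(pixel) for pixel in row] for row in texture]


# A geometrically flat surface painted with a dark stripe in the middle.
flat_painted = [[(0.9, 0.9, 0.9), (0.1, 0.1, 0.1), (0.9, 0.9, 0.9)]]
heights = bump_from_color(flat_painted)

if __name__ == "__main__":
    # The stripe receives a lower height although the real surface is flat,
    # which is the false "sunken" effect described in the text.
    print(heights)
```

The same reasoning applies to cast shadows baked into the photographic texture, which are read as depressions by this kind of conversion.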

It should be noted that the poor volumetric quality may not be incompatible with an acceptable user experience, since the models are usually intended for the dissemination of culture and the promotion of museums, so, in many cases, a high volumetric resolution is not essential, since with an adequate chromatic accuracy, the model acquires a good appearance, even with a relatively simplified morphology of the 3D object.

The small difference in quality found in the definition of the color texture in meshes with a small number of triangles and those with a large number of triangles is due to the fact that the resolution of the texture image is largely independent of the resolution of the polygonal mesh, which makes it possible to create models with a very detailed chromatic appearance but with little morphological definition.

Despite the initial reluctance of many entities dedicated to the preservation and exhibition of cultural heritage to publish openly three-dimensional models of their assets, many of them now not only show their virtualized collections through the Internet but also share them for free and with creative commons licenses. This is undoubtedly an important step towards the democratization of culture and free access for anyone interested in the history of art to the collections held in some of the world’s most outstanding museums.

Regarding the use of photogrammetry as a digitizing technique in most of the museums analyzed, this may be due to the better color representation it provides, owing to the higher quality of the DSLR cameras generally used for this purpose compared with the cameras usually built into 3D scanners. Given that the purpose is informative and that the main objective is that the visual appearance of the object is good, and this is achieved in most cases, the higher volumetric accuracy that could be achieved by means of a scanner is probably not essential.

As for the number of polygons in the models, they can be classified into different categories. The first one comprises those with a high number of polygons, more than 500,000, and which generally reproduce, with great fidelity, the surface reliefs of the artifact without the need to use normal maps or bump maps, but which are usually too heavy, making them difficult to view in online viewers and also to download. However, they are the ones that would give rise to a higher quality of the mesh for those who wish to download them in order to print them. The second one would be formed by models that have a lower number of polygons but that compensate for the small number of polygons with normal maps or bump maps. This group usually has the best performance on platforms for viewing three-dimensional files and also tends to show adequate volumetric detail. On the downside, the actual mesh resolution is relatively low, so it would not be suitable in many cases for quality 3D printing. Finally, models with a polygon count of less than 500,000 and without normal maps or bump maps generally look coarser and lack surface detail, something that becomes very evident when increasing their size on screen to frame a smaller region.

The largest file weights were found primarily in the higher-polygonal-resolution models, as expected, although some high-definition models had relatively small file weights, especially those that used Vertex color as the method for coloring the mesh. It is worth clarifying that a larger file size does not necessarily mean slower model loading in the viewer. This is because the main factor affecting the display speed is the number of polygons in the mesh and not so much the size of the associated texture images, which can also increase the file size significantly. This will matter, however, in determining faster or slower model download speeds.

Retopology was sparingly employed, and its use was especially reduced for those objects with complex and intricate shapes. In the author’s opinion, this may be due to the difficulty involved in performing retopology on these types of parts, especially if performed manually, although further training in this process would be worthwhile since, as mentioned above, retopology can contribute to the optimization of the number of polygons in the mesh.

The existence in all models of UV mapping performed automatically is consistent with a massive digitizing process that requires high processing and post-processing speeds. Manual mapping requires a much longer execution time and is therefore usually dispensed with in these cases.

The scarce use of texture maps to establish the material characteristics in the different regions of the model is probably related, on the one hand, to the technical difficulty involved in the extraction of maps and the possible lack of training of some people in charge of virtualizing the models in relation to the creation and edition of materials for 3D models and, on the other hand, to the time required to perform this task, which may be ignored in some cases in order to publish a greater number of models, even if they are not optimized. The use of these maps has been strongly related to the use of normal maps and bump maps, which could be due to the need for adequate technical training in 3D design required for both tasks.

It should be noted that models with a very small number of polygons, usually fewer than 10,000 faces, show sharp angles between edges, especially in the outline silhouette, something that cannot be compensated for with the use of normal maps or bump maps.

Obviously, the download speed will depend on the Internet connection available to each user, and even models with a high number of polygons can be displayed without problems in a short time, especially on desktop computers. In addition, we must take into account that the characteristics of the connections are improving significantly over time, and this can make models that we now consider poorly optimized become acceptable or even more suitable in a short time.
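To give an order of magnitude, the ideal transfer time can be estimated by dividing the file size by the connection bandwidth. The sketch below uses the smallest and largest file sizes reported in the Results (1 and 294 MB); the bandwidth values are assumptions chosen only for illustration, and protocol overhead, latency, and decompression are ignored.

```python
def download_seconds(file_size_mb, bandwidth_mbps):
    """Ideal transfer time in seconds.

    Converts megabytes to megabits (x8) and divides by the bandwidth
    expressed in megabits per second.
    """
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth must be positive")
    return file_size_mb * 8 / bandwidth_mbps


if __name__ == "__main__":
    # File sizes from the Results; bandwidths are assumed values.
    for size_mb in (1, 294):
        for mbps in (10, 100):
            seconds = download_seconds(size_mb, mbps)
            print(f"{size_mb} MB at {mbps} Mbit/s: {seconds:.1f} s")
```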

On the other hand, it is also not convenient to take a number of polygons as an absolute limit to create an adequate model because it is also important to consider the formal complexity of the represented artifact. Some simple objects can be properly virtualized with a very small number of faces, while others that have a large number of nooks and crannies and have a rough texture require a higher mesh resolution.

As for the use of effect filters available on the web, a relatively frequent use of them has been verified. Among them, the Sharpen filter stands out, which is almost indispensable, as it serves to compensate for the lack of sharpness with which objects are displayed by default in the viewer. In fact, it is striking that its use is not widespread in all museums. Another relatively widely used filter is Ambient occlusion, although this should be handled with care because if high values are used, it can alter the appearance and look unnatural, something that has been observed in some cases. The filters for tone, saturation, contrast, or chromatic corrections have been used very little, and it is worth asking whether the color shown in the models should not have been corrected on a greater number of occasions to achieve an appearance more faithful to the original. It is possible that color management techniques had already been used in most cases during the recording of artifact data, and for that reason, it is preferred to dispense with further adjustments on the web.

With regard to the information on the virtualized artifact accompanying the 3D model, an excessive number of objects (25.3%) were observed for which no data of interest were provided. It is important to reiterate the need to accompany them with descriptive data of the represented artifact, including authorship, measurements, constituent materials, and construction techniques, among others. Additionally, it is recommended to incorporate technical metadata or descriptive data of the 3D model itself, such as the polygon count, file format, file size, use of textures, animation, etc., which is typically included in all Sketchfab 3D models by default. Furthermore, providing open links to other relevant information related to the represented object is highly advisable. Finally, it is of great importance to provide data related to the process of creating the 3D model (known as paradata), such as the digitization method, hardware and software used, optimization technique, etc., for a better understanding of the visual document and its relationship with the real object.
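One possible way to organize the three kinds of information recommended above (descriptive data, a link to further information, and paradata) is sketched below. The class and all field names are hypothetical and do not correspond to any Sketchfab schema or metadata standard; the helper simply reports which fields have been left empty.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class ModelRecord:
    # Descriptive data of the represented artifact
    title: Optional[str] = None
    authorship: Optional[str] = None
    measurements: Optional[str] = None
    materials: Optional[str] = None
    # Link to a page with extended information
    more_info_url: Optional[str] = None
    # Paradata: how the 3D model was created
    digitization_method: Optional[str] = None
    hardware: Optional[str] = None
    software: Optional[str] = None
    optimization: Optional[str] = None


def missing_fields(record):
    """Return the names of the fields that have not been filled in."""
    return [f.name for f in fields(record) if getattr(record, f.name) is None]


if __name__ == "__main__":
    # Hypothetical, partially documented model.
    record = ModelRecord(title="Ceramic vessel",
                         digitization_method="photogrammetry")
    print(missing_fields(record))
```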

None of the models analyzed in this study possess a Digital Object Identifier (DOI), which has been a longstanding request from experts in the field of cultural heritage to Sketchfab. This lack of DOI identifiers poses a limitation in terms of the proper identification and citation of the virtualized 3D models, which can impact the transparency and credibility of research in the field of archaeological virtualization.

In relation to the potential limitations of this study, a first aspect to highlight is that, due to the methodological characteristics of the analysis, only a sample of 150 3D models published by archaeological museums could be evaluated. Additionally, it is important to mention that, since the analysis was performed by 3D digitization experts and not by a mathematical algorithm, the result could be influenced by observer bias. To mitigate this problem, during the evaluation phase of the volumetric and chromatic characteristics of the models, we relied on assistants who displayed each of the 3D meshes in full screen and, if necessary, hid any logos or text that could reveal the origin of the analyzed artifact. Subsequently, for the collection of technical data about the 3D model or the parameters used in the configuration of the 3D viewer, which are objective data, the experts were able to access all the information without the need for blinding techniques.

5. Conclusions

The analysis method employed in this study allows for an assessment of the volumetric quality of 3D models published on Sketchfab, based on expert analysis through a comparison of the published mesh in solid mode and base color mode. The presence or absence of relief in the polygonal mesh, as compared to the expected details observed in the color texture by experts, indicates a higher or lower fidelity of the model to the original artifact. Chromatic definition has also been evaluated based on the observed level of detail.

Regarding the quality of the analyzed models, most of them present a good to very good volumetric and chromatic definition. However, it has been detected that a significant proportion of models lack an adequate optimization of the 3D mesh. Some of these models have an excessive number of polygons, which results in slow loading times and navigation difficulties. On the other hand, some models have a very low resolution, with insufficient polygons to provide adequate volumetric detail, also lacking normal or bump maps. Fortunately, the chromatic definition is quite good in most of the models, and their appearance is quite accurate when the color texture is activated, so they are perfectly suitable to be used for dissemination purposes. However, it is recommended that the models be optimized to ensure their full utility for research or teaching purposes, addressing issues such as an excessive polygon count or insufficient resolution to improve their overall quality.

A very high proportion of the models do not include a hyperlink to the museum or institution where the original artifact is housed, nor do they provide links to other relevant information. This underscores the urgent need for improved practices in incorporating linked and open data into 3D models published on these platforms, as this would substantially enhance their value for scientific research, education, and dissemination. The use of a DOI (Digital Object Identifier) in the virtualized 3D models would also be highly recommended, which would facilitate their identification and citation in the scientific community.

Despite the importance of linked and open data for 3D models of cultural heritage artifacts, their incorporation into Sketchfab is currently lacking widespread adoption. It would be desirable for linked and open data to be used regularly because they enhance interoperability, reusability, and collaboration among stakeholders, enabling seamless connections with other digital resources. This promotes transparency, reproducibility, and accountability in research, while also enhancing the discoverability and accessibility of 3D models for a wider audience.

Furthermore, when it comes to Sketchfab and cultural heritage, there is a notable absence of specialized functions within 3D viewers that cater specifically to the unique needs of preserving and showcasing cultural heritage artifacts. For instance, there is a lack of measurement and sectioning tools that could aid in accurately analyzing and documenting the dimensions and internal structures of these artifacts. Additionally, the capability of georeferencing, which would allow for linking 3D models to their real-world geographical locations, is also missing. These functionalities could greatly enhance the potential of Sketchfab and other similar platforms in the field of cultural heritage, enabling researchers, historians, and curators to gain valuable insights, conduct precise analyses, and provide a more immersive and interactive experience to viewers, ultimately contributing to the preservation and dissemination of our rich cultural heritage for future generations.

There are some models on Sketchfab that are seemingly combined with Reflectance Transformation Imaging (RTI) captures, but they are actually hosted as simple 3D reconstructions without any possible interaction with the RTI source files. It would be desirable for Sketchfab to implement a specific RTI viewer enabling the accurate relighting of the models, thereby taking full advantage of this type of documentation, which is commonly used in the field of cultural heritage.

Uncertainty regarding the long-term preservation guarantees of the data is also a concern in this type of privately hosted repositories, as they are often subject to changes in the terms of the service provided. It would be beneficial to have institutional resources that offer the same user-friendly interface and detailed configuration options as Sketchfab while also ensuring long-term preservation of the models and providing identification based on a DOI and specific functions tailored for cultural heritage assets.

Finally, in relation to possible future research, a study of a larger sample would be necessary to achieve a broader analysis of the quality and characteristics of 3D models in the field of cultural heritage. It would also be interesting to create an automated method of analysis based on algorithms that compare the existence of details in the chromatic texture that suggest the existence of reliefs or cavities on the surface with the reliefs that actually exist in the polygonal mesh. This objective method could replace expert analysis and further streamline the evaluation process.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The author declares no conflict of interest.

References

- Ubik, S.; Kubišta, J. Interactive Scalable Visualizations of Cultural Heritage for Distance Access. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 10059 LNCS; Springer: Cham, Switzerland, 2016; pp. 191–198. [Google Scholar] [CrossRef]

- Raco, F. From Survey to Integrated Digital Documentation of the Cultural Heritage of Museums: A Protocol for the Anastylosis of Archaeological Finds. SSRN Electron. J. 2023. [Google Scholar] [CrossRef]

- Scopigno, R.; Callieri, M.; Dellepiane, M.; Ponchio, F.; Potenziani, M. Delivering and Using 3D Models on the Web: Are We Ready? Distribución Y Uso De Modelos 3D En La Web: ¿Estamos Listos? Virtual Archaeol. Rev. 2017, 8, 1–9. [Google Scholar] [CrossRef]

- Styliani, S.; Fotis, L.; Kostas, K.; Petros, P. Virtual museums, a survey and some issues for consideration. J. Cult. Herit. 2009, 10, 520–528. [Google Scholar] [CrossRef]

- Sylaiou, S.; Liarokapis, F.; Sechidis, L.; Patias, P.; Georgoula, O. Virtual Museums: First Results of a Survey on Methods and Tools. CIPA 2005 XX International Symposium. 2005. Available online: https://www.cipaheritagedocumentation.org/wp-content/uploads/2018/12/Sylaiou-e.a.-Virtual-museums_first-results-of-a-survey-on-methods-and-tools.pdf (accessed on 12 April 2023).

- Anton, M.; Nicolae, G.; Moldoveanu, A.; Balan, O. Virtual museums-technologies, opportunities and perspectives. Rev. Romana Interactiune Om-Calc. 2018, 11, 127–144. Available online: http://rochi.utcluj.ro/rrioc/articole/RRIOC-11-2-Anton.pdf (accessed on 12 April 2023).

- Barsanti, S.G.; Micoli, L.L.; Guidi, G. Quick textured mesh generation for massive 3D digitization of museum artifacts. In Proceedings of the 2013 Digital Heritage International Congress (DigitalHeritage), Marseille, France, 28 October–1 November 2013; pp. 197–200. [Google Scholar] [CrossRef]

- Barsanti, S.G.; Guidi, G. 3D Digitization of museum content within the 3dicons project. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, II-5/W1, 151–156. [Google Scholar] [CrossRef]

- Underhill, J. In Conversation with CyArk: Digital Heritage in the 21st Century. Int. J. Dig. Art Hist. 2018. [Google Scholar] [CrossRef]

- Uiterwaal, F.; Niccolucci, F.; Bassett, S.; Krauwer, S.; Hollander, H.; Admiraal, F.; Romary, L.; Bruseker, G.; Meghini, C.; Edmond, J.; et al. From Disparate Disciplines to Unity in Diversity: How the PARTHENOS Project Has Brought European Humanities Research Infrastructures Together. Int. J. Humanit. Arts Comput. 2021, 15, 101–116. [Google Scholar] [CrossRef]

- Potenziani, M.; Callieri, M.; Dellepiane, M.; Corsini, M.; Ponchio, F.; Scopigno, R. 3DHOP: 3D Heritage Online Presenter. Comput. Graph. 2015, 52, 129–141. [Google Scholar] [CrossRef]

- Pamart, A.; De Luca, L.; Véron, P. A metadata enriched system for the documentation of multi-modal digital imaging surveys. Stud. Digit. Herit. 2022, 6, 1–24. [Google Scholar] [CrossRef]

- Archeovision. Available online: https://archeovision.cnrs.fr/en/archeovision_en/ (accessed on 10 April 2023).

- Tournon-Valiente, S.; Baillet, V.; Chayani, M.; Dutailly, B.; Granier, X.; Grimaud, V. The French National 3D Data Repository for Humanities: Features, Feedback and Open Questions. In Proceedings of the CAA 2021—“Digital Crossroads”, Virtual, 14–18 June 2021. [Google Scholar] [CrossRef]

- Galeazzi, F.; Callieri, M.; Dellepiane, M.; Charno, M.; Richards, J.; Scopigno, R. Web-based visualization for 3D data in archaeology: The ADS 3D viewer. J. Archaeol. Sci. Rep. 2016, 9, 1–11.

- Sketchfab. Available online: https://sketchfab.com/ (accessed on 10 April 2023).

- TurboSquid. Available online: https://turbosquid.com/ (accessed on 10 April 2023).

- Statham, N. Scientific rigour of online platforms for 3D visualization of heritage. Virtual Archaeol. Rev. 2019, 10.

- Potenziani, M.; Callieri, M.; Scopigno, R. Developing and Maintaining a Web 3D Viewer for the CH community: An evaluation of the 3DHOP framework. In Proceedings of the GCH 2018: Eurographics Workshop on Graphics and Cultural Heritage, Vienna, Austria, 12–15 November 2018.

- Apollonio, F.I.; Fantini, F.; Garagnani, S.; Gaiani, M. A Photogrammetry-Based Workflow for the Accurate 3D Construction and Visualization of Museums Assets. Remote Sens. 2021, 13, 486.

- Dall’Asta, E.; Bruno, N.; Bigliardi, G.; Zerbi, A.; Roncella, R. Photogrammetric techniques for promotion of archaeological heritage: The Archaeological Museum of Parma (Italy). ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B5, 243–250.

- Peinado-Santana, S.; Hernández-Lamas, P.; Bernabéu-Larena, J.; Cabau-Anchuelo, B.; Martín-Caro, J.A. Public Works Heritage 3D Model Digitisation, Optimisation and Dissemination with Free and Open-Source Software and Platforms and Low-Cost Tools. Sustainability 2021, 13, 13020.

- Balkan Heritage Foundation. 3D and RTI Documentation of Artifacts at the National Institution Stobi, Republic of North Macedonia. n.d. Available online: https://balkanheritage.org/programs/3d-and-rti-documentation-of-artifacts-at-the-national-institution-stobi-republic-of-north-macedonia/ (accessed on 19 April 2023).

- Muenster, S. Digital 3D Technologies for Humanities Research and Education: An Overview. Appl. Sci. 2022, 12, 2426.

- Denard, H.; Abdelmonem, M.G.; Abdulla, K.M.A.; Anisia-Maria, A.; Moran, R.; Harris, P.; UNESCO World Heritage Centre; UNESCO; Thompson, W.J.; Khalili, M.; et al. Venice Charter 1964 ICOMOS. The London Charter for the Computer-Based Visualisation of Cultural Heritage. Preamble Objectives Principles. International Council on Monuments and Sites, 11(February), 101. 2009. Available online: http://www.londoncharter.org/introduction.html (accessed on 12 April 2023).

- ICOMOS General Assembly. The Seville Principles—International Principles of Virtual Archaeology. Fórum Internacional de Arqueología Virtual, 1–27. 2017. Available online: https://icomos.es/wp-content/uploads/2020/06/Seville-Principles-IN-ES-FR.pdf (accessed on 12 April 2023).

- Koller, D.; Frischer, B.; Humphreys, G. Research challenges for digital archives of 3D cultural heritage models. J. Comput. Cult. Herit. 2009, 2, 1–17.

- Historic England. Available online: https://historicengland.org.uk/ (accessed on 10 April 2023).

- Cultural Heritage Imaging. Available online: https://culturalheritageimaging.org/ (accessed on 10 April 2023).

- Corsini, M.; Larabi, M.C.; Lavoué, G.; Petřík, O.; Váša, L.; Wang, K. Perceptual Metrics for Static and Dynamic Triangle Meshes. Comput. Graph. Forum 2013, 32, 101–125.

- Lavoué, G.; Corsini, M. A Comparison of Perceptually-Based Metrics for Objective Evaluation of Geometry Processing. IEEE Trans. Multimedia 2010, 12, 636–649.

- Cruz, L.A.D.S.; Dumic, E.; Alexiou, E.; Prazeres, J.; Duarte, R.; Pereira, M.; Pinheiro, A.; Ebrahimi, T. Point cloud quality evaluation: Towards a definition for test conditions. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; pp. 1–6.

- Menzel, N.; Guthe, M. Towards Perceptual Simplification of Models with Arbitrary Materials. Comput. Graph. Forum 2010, 29, 2261–2270.

- Qu, L.; Meyer, G.W. Perceptually Guided Polygon Reduction. IEEE Trans. Vis. Comput. Graph. 2008, 14, 1015–1029.

- Zhu, Q.; Zhao, J.; Du, Z.; Zhang, Y. Quantitative analysis of discrete 3D geometrical detail levels based on perceptual metric. Comput. Graph. 2010, 34, 55–65.

- Nehmé, Y.; Farrugia, J.-P.; Dupont, F.; Le Callet, P.; Lavoué, G. Comparison of subjective methods, with and without explicit reference, for quality assessment of 3D graphics. In Proceedings of the SAP ’19: ACM Symposium on Applied Perception 2019, Barcelona, Spain, 19–20 September 2019; Volume 17.

- Nehmé, Y.; Farrugia, J.-P.; Dupont, F.; Le Callet, P.; Lavoué, G. Comparison of Subjective Methods for Quality Assessment of 3D Graphics in Virtual Reality. ACM Trans. Appl. Percept. 2021, 18, 1–23.

- Abouelaziz, I.; El Hassouni, M.; Cherifi, H. No-Reference 3D Mesh Quality Assessment Based on Dihedral Angles Model and Support Vector Regression. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2016; Volume 9680, pp. 369–377.

- Abouelaziz, I.; Chetouani, A.; El Hassouni, M.; Cherifi, H. A blind mesh visual quality assessment method based on convolutional neural network. In Proceedings of the IS&T International Symposium on Electronic Imaging Science and Technology, Burlingame, CA, USA, 28 January–1 February 2018.

- Abouelaziz, I.; Chetouani, A.; El Hassouni, M.; Latecki, L.J.; Cherifi, H. No-reference mesh visual quality assessment via ensemble of convolutional neural networks and compact multi-linear pooling. Pattern Recognit. 2020, 100, 107174.

- Zhang, Z.; Sun, W.; Min, X.; Wang, T.; Lu, W.; Zhai, G. No-Reference Quality Assessment for 3D Colored Point Cloud and Mesh Models. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7618–7631.

- Champion, E.; Rahaman, H. Survey of 3D digital heritage repositories and platforms. Virtual Archaeol. Rev. 2020, 11, 1–15.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).