2.1. Bayesian State-Space Models

The framework used for this analysis is that of a general Bayesian state-space model. Such a model is fully defined by its transition and observation densities, which depend on a set of parameters $\theta$. The transition density $p_\theta(x_t \mid x_{t-1}, u_{t-1})$ defines, in a probabilistic sense, the distribution over the hidden states at time $t$ given the states and any external inputs at time $t-1$. The observation density $p_\theta(y_t \mid x_t, u_t)$ defines the distribution of the observed quantity at time $t$ given the states and external inputs, also at time $t$. This framework allows a model to be built for evolving sequences of states from the initial prior states to those at time $T$, where the notation $x_{a:b}$ indicates the variable from time $a$ to time $b$ inclusive. One important property of this model is that it obeys the Markov property, i.e., the distribution of the states at any time $t$ only needs to be conditioned on the states at time $t-1$ in order to capture the effect of the full history of the states from time 0 to $t-1$.

So far no restriction has been placed on the form of the above densities. The transition can be defined in terms of any distribution, although it can be useful to consider it in terms of a function $f_\theta$, which moves the states from time $t-1$ to $t$, and a process noise $v_{t-1}$. Likewise, for the observation, it is possible to consider a function $g_\theta$ which is corrupted with some measurement noise $w_t$. The generative process can be written as,

$$x_t = f_\theta(x_{t-1}, u_{t-1}) + v_{t-1}, \qquad y_t = g_\theta(x_t, u_t) + w_t \tag{1}$$

It is also possible to represent the structure of this model graphically, as in Figure 1. The graphical model is useful in revealing the Markov structure of the process. There are broadly three tasks associated with models of this form: prediction (simulation), filtering and smoothing. These are concerned with recovering the distributions of the hidden states $x_t$ given differing amounts of information regarding the observations $y_{1:T}$. The prediction task is to determine the distribution of the states into the future, i.e., predicting $p(x_{t+k} \mid y_{1:t})$ for some $k > 0$. Filtering considers the distribution of the states given observations up to that point in time, $p(x_t \mid y_{1:t})$. Smoothing infers the distributions of the states given the complete sequence of observations, $p(x_t \mid y_{1:T})$, where $T \geq t$.

By restricting the forms of the transition and observation densities to be linear models with additive Gaussian noise, it is possible to recover closed-form solutions for the filtering and smoothing densities. These solutions are given by the now ubiquitous Kalman filtering [14] and Rauch-Tung-Striebel (RTS) smoothing [15] algorithms. With these approaches, it is possible to recover the densities of interest for all linear Gaussian dynamical systems. However, when the model is nonlinear or the noise is no longer additive Gaussian, the filtering and smoothing densities become intractable.

In order to solve nonlinear state-space problems, a variety of approximation techniques have been developed; these include the Extended Kalman Filter (EKF) [16,17] and the Unscented Kalman Filter (UKF) [18]. Each of these methods, and others [19], approximates the filtering density of the nonlinear system by a Gaussian distribution at each time step. This approach can work well; however, it cannot capture complex (e.g., multimodal) distributions over the states. An alternative approach is one based on importance sampling, commonly referred to as the Particle Filter (PF).

The particle filtering approach will be used in this paper. A good introduction to the method can be found in Doucet and Johansen [20]; however, a short overview is presented here. The task is to approximate the filtering density of a general nonlinear state-space model as defined in Equation (1). Importance sampling represents the distribution of interest as a set of weighted point masses (particles), which together form a Monte Carlo approximation to that distribution. The distribution of the states $\pi_t(x_{1:t})$ (the conditioning on $y_{1:t}$ is not explicitly shown here but still exists) is approximated as,

$$\hat{\pi}_t(x_{1:t}) = \sum_{i=1}^{N} w_t^i \, \delta_{x_{1:t}^i}(x_{1:t}) \tag{2}$$

with $N$ particles $x_{1:t}^i$ and corresponding importance weights $w_t^i$. The importance weights are normalised to sum to unity, starting from a set of unnormalised importance weights at time $t$, $\tilde{w}_t^i$. These unnormalised weights are calculated, at each point in time, as the ratio of the unnormalised posterior likelihood of the states $\gamma_t(x_{1:t})$ to a proposal likelihood $q_t(x_{1:t})$. (For clarity, while $\pi_t(x_{1:t})$ is the object of interest, normally there is only access to $\gamma_t(x_{1:t})$, where $\pi_t(x_{1:t}) = \gamma_t(x_{1:t}) / Z_t$. In other words, the posterior distribution of interest is only known up to a constant.) The weights are therefore,

$$\tilde{w}_t^i = \frac{\gamma_t(x_{1:t}^i)}{q_t(x_{1:t}^i)}, \qquad w_t^i = \frac{\tilde{w}_t^i}{\sum_{j=1}^{N} \tilde{w}_t^j} \tag{3}$$

The importance weights can be computed sequentially, such that,

$$\tilde{w}_t^i = \tilde{w}_{t-1}^i \, \frac{p_\theta(y_t \mid x_t^i) \, p_\theta(x_t^i \mid x_{t-1}^i)}{q(x_t^i \mid x_{t-1}^i, y_t)} \tag{4}$$

Applying this sequential method naïvely gives rise to problems where the variance of the estimation increases to unacceptable levels. To avoid this increase, the final building block of the particle filter is a resampling scheme. At a given time, the particle system is resampled according to the importance weight of each particle. The aim of this resampling is to keep particles which approximate the majority of the probability mass of the distribution while discarding particles that do not contribute much information. A general SMC algorithm for filtering in state-space models is shown in Algorithm 1. The external inputs $u_{1:T}$ have been omitted from the notation here for compactness, but can also be included.
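The weighting and resampling steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than the implementation used in the paper: the one-dimensional target `log_gamma`, the Gaussian proposal `log_q` and the choice of systematic resampling are all hypothetical choices made here for demonstration (the text does not specify a particular resampling scheme).

```python
import numpy as np

def log_gamma(x):
    """Unnormalised log target: Gaussian with mean 1.0 and std 0.5 (toy assumption)."""
    return -0.5 * ((x - 1.0) / 0.5) ** 2

def log_q(x, scale=2.0):
    """Log density of the zero-mean Gaussian proposal with the given scale."""
    return -0.5 * (x / scale) ** 2 - np.log(scale * np.sqrt(2.0 * np.pi))

def importance_weights(x):
    """Normalised importance weights for particles x drawn from the proposal."""
    log_w = log_gamma(x) - log_q(x)      # log of the ratio gamma / q
    w = np.exp(log_w - log_w.max())      # subtract the max for numerical stability
    return w / w.sum()                   # normalise so the weights sum to one

def systematic_resample(weights, rng):
    """Return particle indices drawn by systematic resampling."""
    n = len(weights)
    positions = (rng.random() + np.arange(n)) / n   # one uniform offset, n strata
    cumulative = np.cumsum(weights)
    cumulative[-1] = 1.0                 # guard against round-off
    return np.searchsorted(cumulative, positions)

rng = np.random.default_rng(0)
particles = rng.normal(0.0, 2.0, size=5000)         # draws from the proposal
weights = importance_weights(particles)
weighted_mean = np.sum(weights * particles)         # estimate of the target mean
ess = 1.0 / np.sum(weights ** 2)                    # effective sample size
resampled = particles[systematic_resample(weights, rng)]
```

The effective sample size `ess` is a common diagnostic for weight degeneracy; after resampling, the particles in `resampled` carry equal weight.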

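| Algorithm 1 SMC for State-Space Filtering |

| All operations for particles $i = 1, \ldots, N$ |

- 1: Sample $x_1^i \sim q(x_1 \mid y_1)$
- 2: Calculate $\tilde{w}_1^i$ and normalise to obtain $w_1^i$
- 3: Resample $\{x_1^i, w_1^i\}$, updating the particle system to $\{\bar{x}_1^i, 1/N\}$
- 4: for $t = 2, \ldots, T$ do
- 5: Sample $x_t^i \sim q(x_t \mid \bar{x}_{t-1}^i, y_t)$
- 6: Update path histories $x_{1:t}^i = \{\bar{x}_{1:t-1}^i, x_t^i\}$
- 7: Calculate $\tilde{w}_t^i$ and normalise to obtain $w_t^i$
- 8: Resample $\{x_{1:t}^i, w_t^i\}$, updating the particle system to $\{\bar{x}_{1:t}^i, 1/N\}$
- 9: end for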

Before this method can be applied effectively, there remains a key user choice: the proposal distribution $q(x_t \mid x_{t-1}, y_t)$. The optimal choice of this proposal distribution would be the true filtering distribution of the states. This choice is rarely possible; therefore, a different proposal must be chosen. The simplest approach is to set the proposal distribution to be the transition density of the model, i.e., $q(x_t \mid x_{t-1}, y_t) = p_\theta(x_t \mid x_{t-1})$; this is commonly referred to as the bootstrap particle filter. In this case, the value of the (unnormalised) weights is given by the likelihood of the observation $y_t$ under the observation density $p_\theta(y_t \mid x_t)$. It is possible to reduce the variance of the importance weights, and thereby improve efficiency, by choosing an alternative proposal density [21]; however, that will not be covered here.

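The particle filter, alongside providing an approximate representation of the filtering densities, provides an unbiased estimate $\hat{p}_\theta(y_{1:T})$ of the marginal likelihood of the filter $p_\theta(y_{1:T})$. When resampling is performed at every time step, so that the weights are reset to be equal before each update, this is given by,

$$\hat{p}_\theta(y_{1:T}) = \prod_{t=1}^{T} \left( \frac{1}{N} \sum_{i=1}^{N} \tilde{w}_t^i \right) \tag{5}$$

Access to this unbiased estimator will be a key component of a particle Markov Chain Monte Carlo (pMCMC) scheme.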
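To make the bootstrap filter and its marginal likelihood estimate concrete, the sketch below runs a bootstrap particle filter with multinomial resampling at every step on a toy scalar model. Everything about the toy model (the linear Gaussian dynamics, the values `a`, `sv`, `sw`, and the function names) is an assumption made here for illustration; a linear model is used only because it is easy to check, and the filter itself does not rely on linearity.

```python
import numpy as np

def bootstrap_pf(y, sample_x0, propagate, log_obs, n_particles, rng):
    """Bootstrap particle filter for a scalar state, resampling at every step.

    Returns the filtered means and the log of the marginal likelihood estimate.
    """
    x = sample_x0(n_particles, rng)
    means = np.empty(len(y))
    log_lik = 0.0
    for t, y_t in enumerate(y):
        if t > 0:
            x = propagate(x, rng)              # proposal = transition density
        log_w = log_obs(y_t, x)                # unnormalised log weights
        shift = log_w.max()
        w = np.exp(log_w - shift)
        log_lik += shift + np.log(w.mean())    # log of (1/N) * sum of weights
        w /= w.sum()
        means[t] = np.sum(w * x)               # weighted filtering mean
        x = x[rng.choice(n_particles, size=n_particles, p=w)]  # resample
    return means, log_lik

# Toy linear Gaussian model (assumed values, for illustration only)
rng = np.random.default_rng(1)
a, sv, sw, T = 0.9, 0.5, 0.3, 100
x_true = np.empty(T)
x_true[0] = rng.normal(0.0, 1.0)
for t in range(1, T):
    x_true[t] = a * x_true[t - 1] + sv * rng.normal()
y = x_true + sw * rng.normal(size=T)

means, log_lik = bootstrap_pf(
    y,
    sample_x0=lambda n, r: r.normal(0.0, 1.0, size=n),
    propagate=lambda x, r: a * x + sv * r.normal(size=x.shape),
    log_obs=lambda y_t, x: -0.5 * ((y_t - x) / sw) ** 2
    - np.log(sw * np.sqrt(2.0 * np.pi)),
    n_particles=500,
    rng=rng,
)
rmse = np.sqrt(np.mean((means - x_true) ** 2))
```

Working with log weights and subtracting the maximum before exponentiating avoids underflow when the observation likelihood is sharply peaked.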

So far, it has been shown how a general model of a nonlinear dynamical system can be established as a nonlinear state-space model. It has been stated that, in general, this model does not have a tractable posterior and so an approximation is required. The particle filter, an SMC-based scheme for inference of the filtering density, has been introduced as a tool to handle this type of model. This algorithm will form the basis of the approach taken in this paper to solve the joint input-state problem for a nonlinear dynamic system.

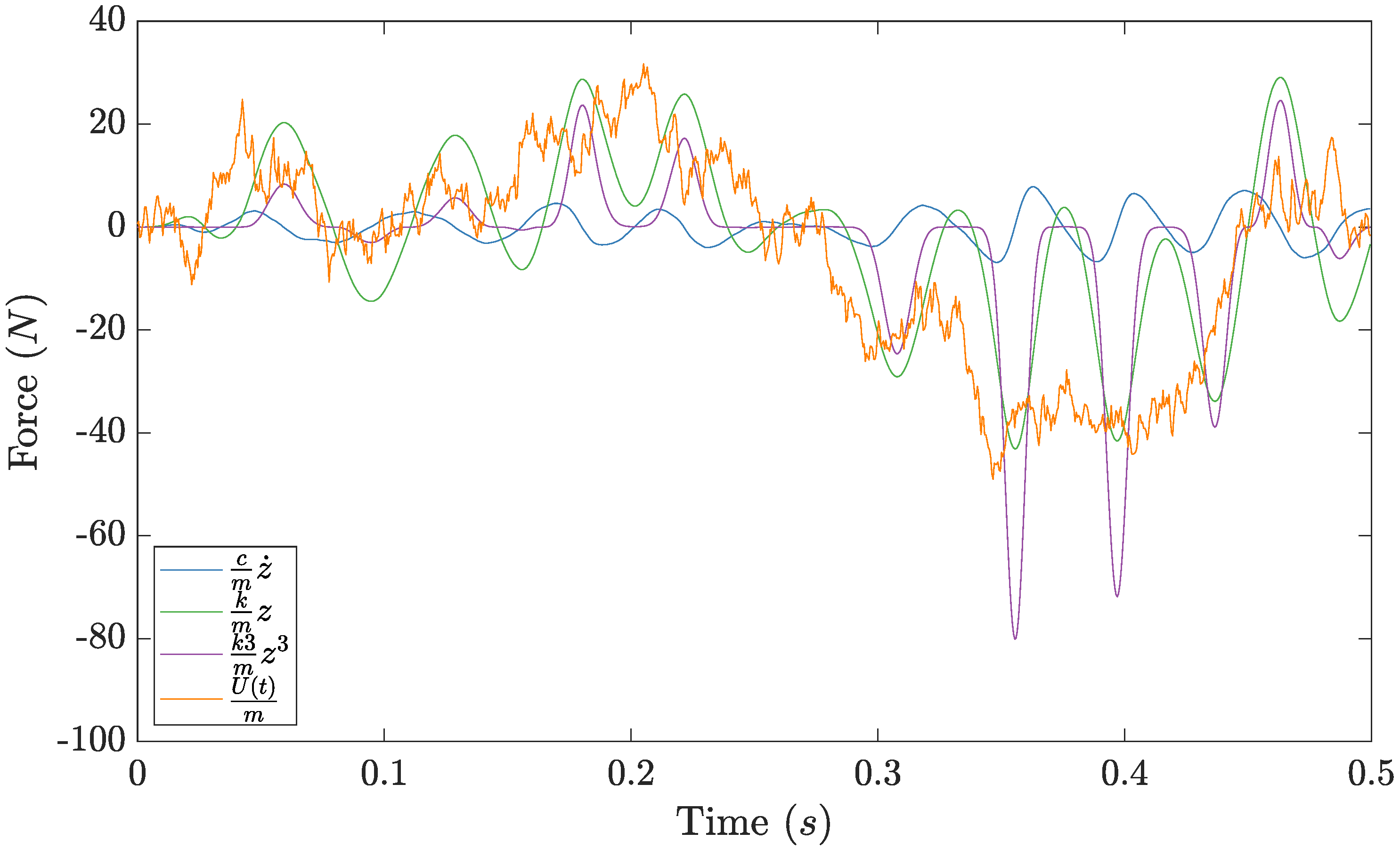

2.2. Input Estimation as a Latent Force Problem

The specific model form used for the joint input-state estimation task can now be developed. The starting point is the second-order differential equation which is the equation of motion of the system. The methodology will be shown for a single-degree-of-freedom (SDOF) nonlinear system, although the framework can extend to the multi-degree-of-freedom (MDOF) case. Defining some nonlinear system as,

$$m\ddot{z} + h(z, \dot{z}) = U \tag{6}$$

a point with mass $m$ is subjected to an external forcing as a function of time, $U$, and its response is governed by its inertial force $m\ddot{z}$ and some internal forces $h(z, \dot{z})$, which are a function of its displacement and velocity. (Although not shown explicitly, to reduce clutter in the notation, the displacement, velocity and acceleration of the system are time-dependent. In other words, $z$ implies $z(t)$, and similarly for the velocity and acceleration.) If $h(z, \dot{z}) = kz + c\dot{z}$ for some constant stiffness $k$ and damping coefficient $c$, the classical linear system is recovered.

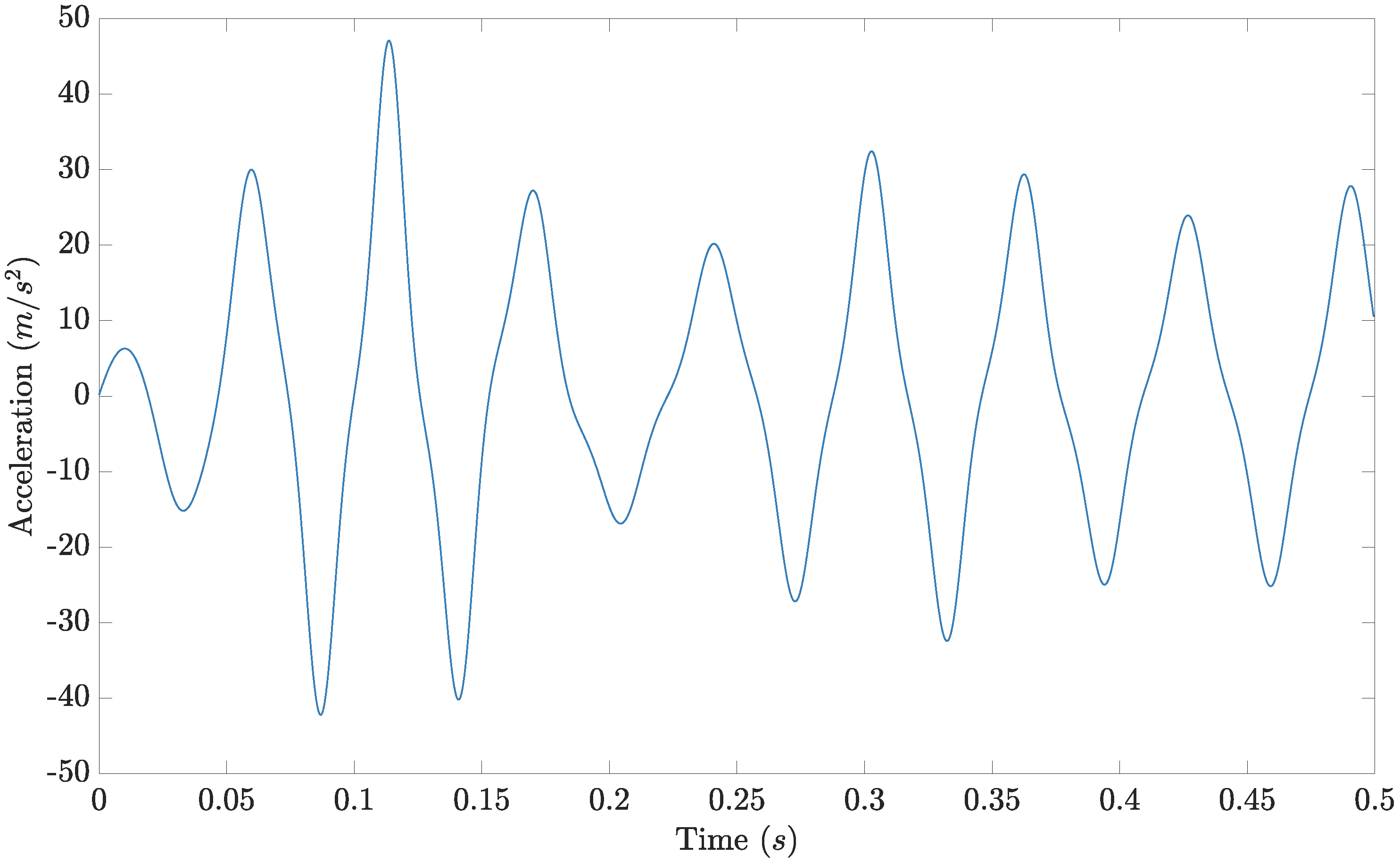

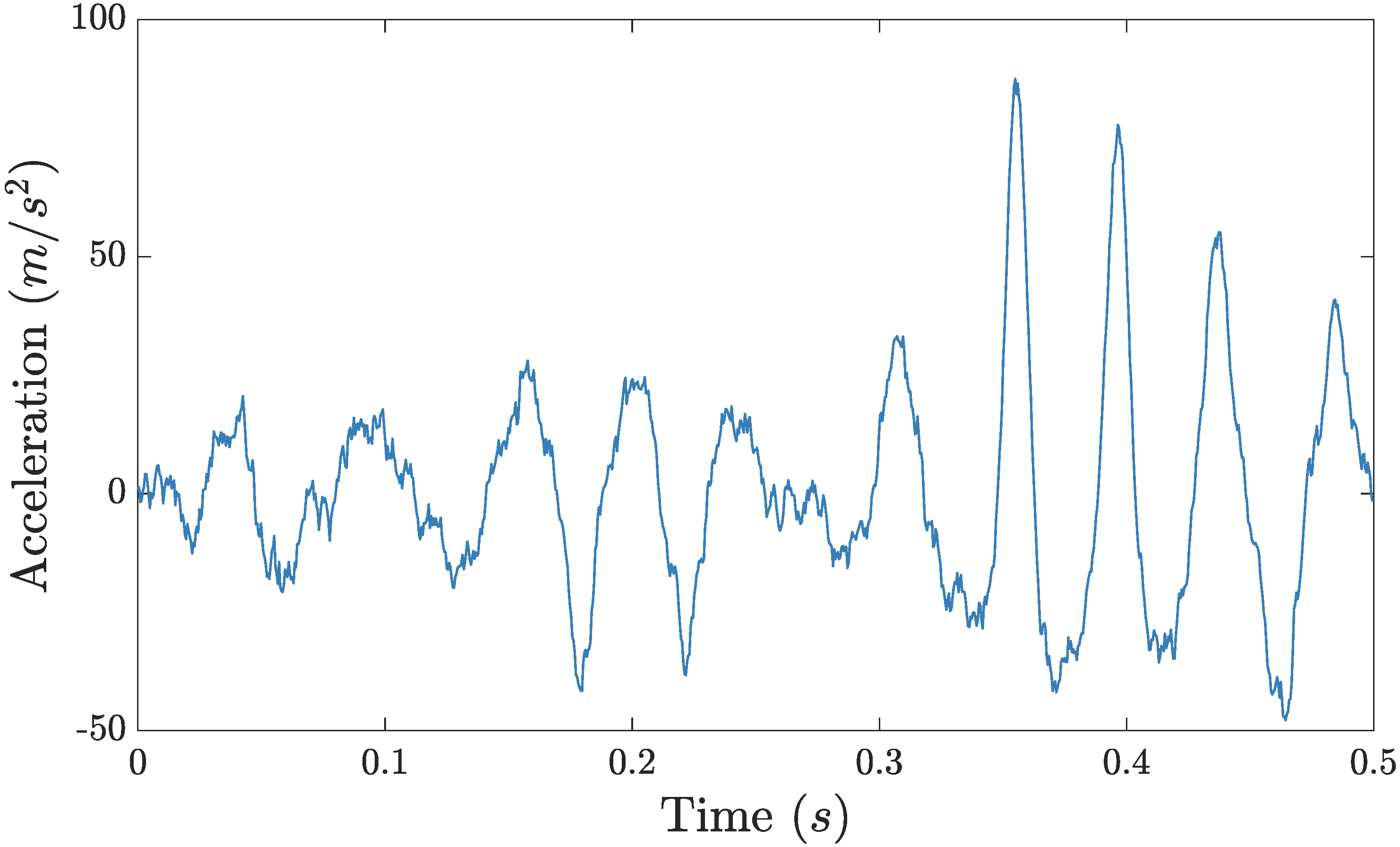

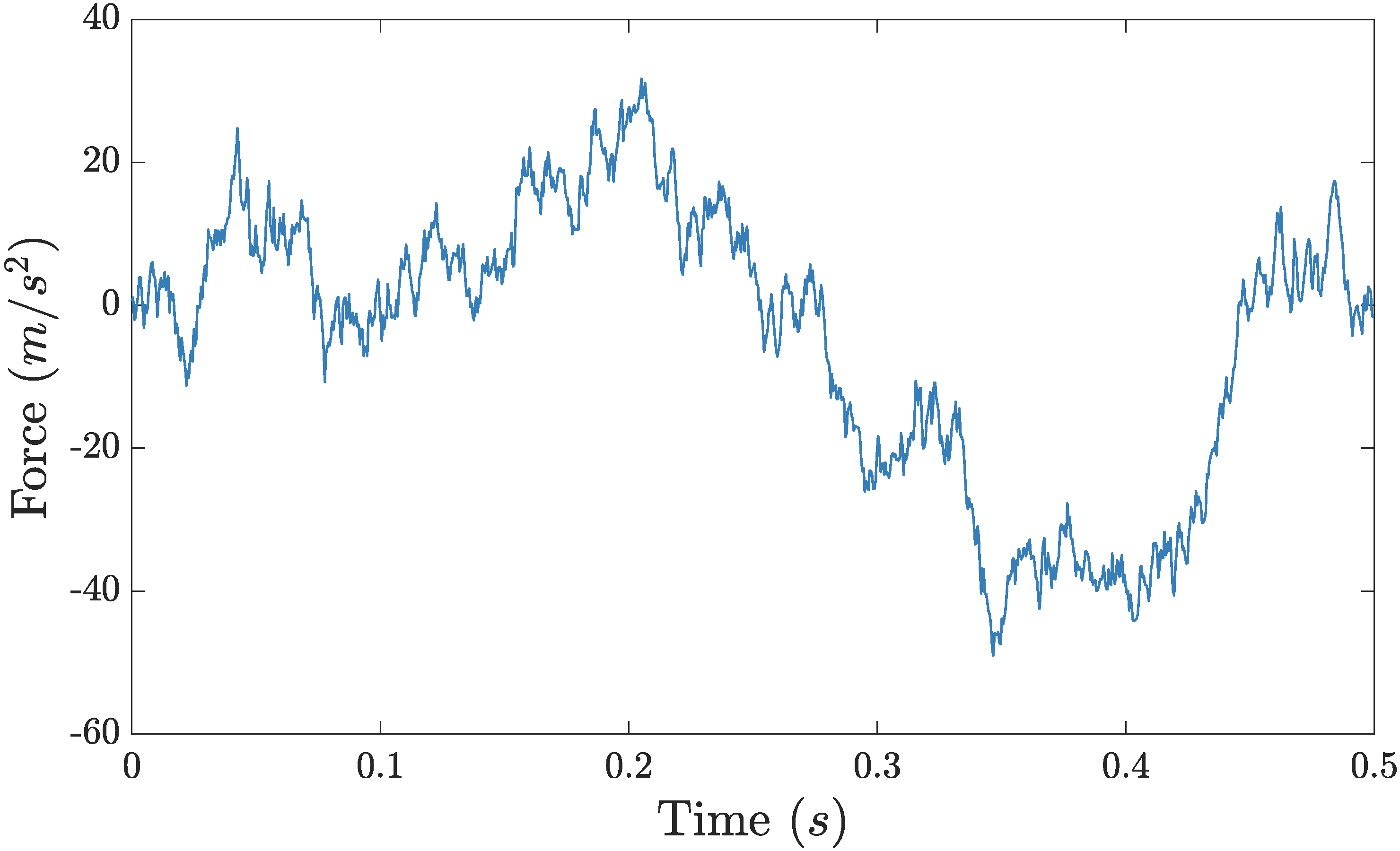

When $U$ is known (measured), the particle filter can be used to recover the internal states of the oscillator, namely the displacement and velocity (computing the acceleration from these is then trivial). By modifying the observation density, a variety of sensor modalities can be handled; however, measurement of acceleration remains the most common approach because of the ease of physical experimentation. In this work it is assumed that the parameters are known and the input is not; for the opposite scenario see [22]. It is hoped in future to unify these two into a nonlinear joint input-state-parameter methodology. In the situation addressed in this work, however, it is assumed that there is no access to $U$.

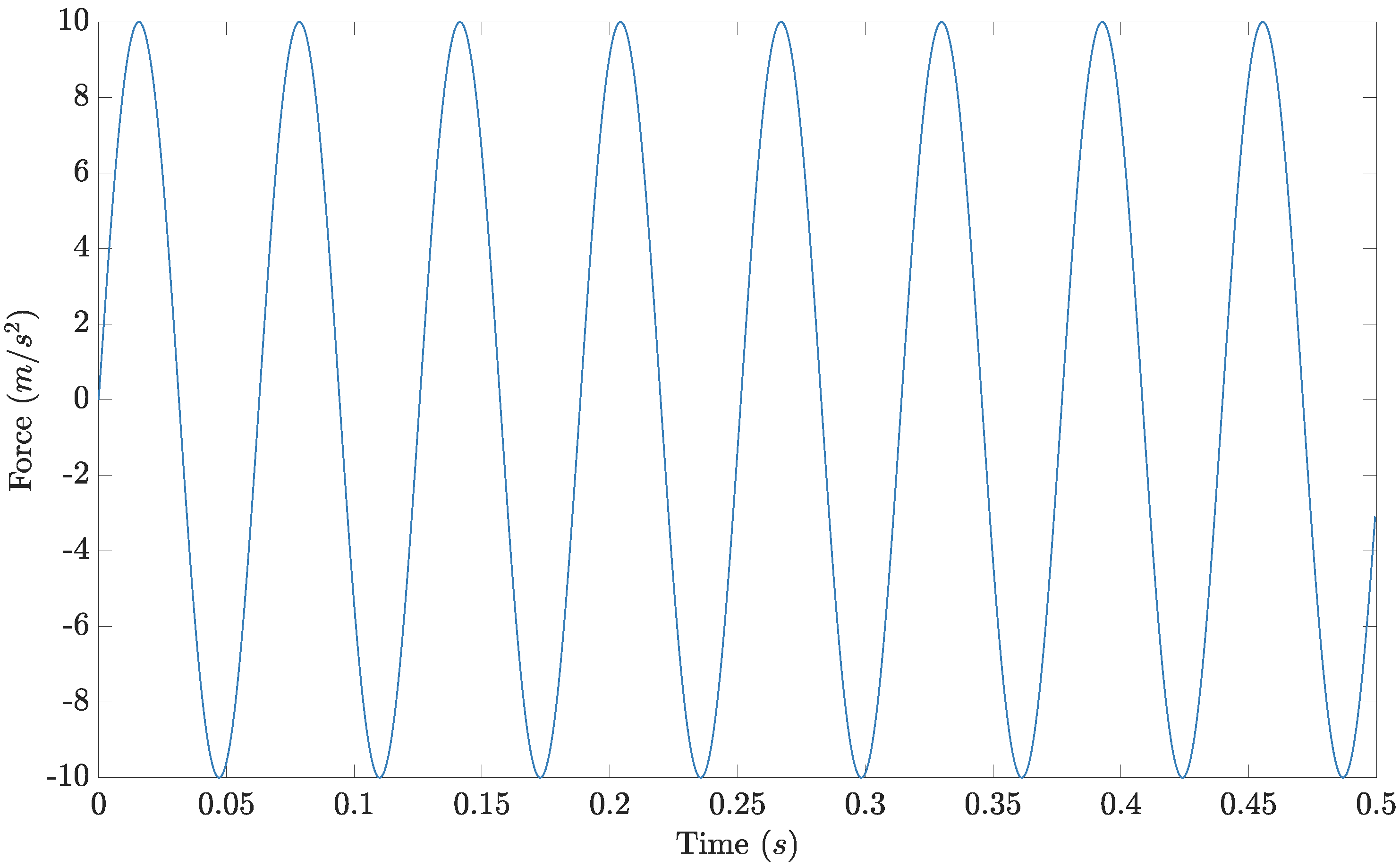

Here, the unknown input will be estimated simultaneously with the unknown states of the model. To do so, a statistical model of the missing force must be established. One elegant approach to this was introduced in Alvarez et al. [23]. To infer the forces, it is necessary to make some assumption about their generating process. The assumption in Alvarez et al. [23] is that the unknown forcing $U$ can be represented by a Gaussian process in time; the same assumption is made in this work.

The Gaussian process (GP) [24,25] is a flexible nonparametric Bayesian regression tool. A GP represents a function as an infinite-dimensional joint Gaussian distribution, where each datapoint, conditioned on a given input, is a sample from a univariate Gaussian. An intuitive way of viewing the GP is as a probability distribution over functions; in other words, a single sample from a GP is a function with respect to the specified inputs. A temporal GP is simply a GP where the inputs to the model are time. The temporal GP over a function $f(t)$ is fully defined in terms of its mean function $\mu(t)$ and covariance kernel $k(t, t')$,

$$f(t) \sim \mathcal{GP}\left(\mu(t), k(t, t')\right) \tag{7}$$

In this work, as is common, it will be assumed that the prior mean function is zero for all time, i.e., $\mu(t) = 0$.

Alvarez et al. [23] show how to solve the latent force problem for linear systems where,

$$m\ddot{z} + c\dot{z} + kz = U, \qquad U \sim \mathcal{GP}\left(0, k(t, t')\right) \tag{8}$$

and in the MDOF case. One of the difficulties in the approach is the computational cost of solving such a problem. However, it is possible to greatly reduce this cost by transforming the inference into a state-space model. It was shown in Hartikainen and Särkkä [26] that inference for a Gaussian process in time can be converted into a linear Gaussian state-space model. Using the Kalman filtering and RTS smoothing solutions, an identical solution to the standard GP is recovered in linear time rather than cubic time in the number of observations.

Given the availability of this solution, it was sensible to use this result to improve the efficiency of inference in the latent force model. The combination of the state-space form of the Gaussian process with the linear dynamical system is natural, given the equivalent state-space form of the dynamics; this was shown in Hartikainen and Särkkä [27]. One of the most useful aspects of this approach is that the whole system remains a linear Gaussian state-space model, which can be solved with the Kalman filter and RTS smoother to recover the filtering and smoothing distributions exactly. It is this form of the model that has been exploited previously in structural dynamics [5,6,10].

A full derivation of the conversion from the GP to its state-space form will not be shown here, although it can be found in Hartikainen and Särkkä [26]. Instead, it will be shown how this transformation can be performed for one family of kernels, the Matérn class. This set of kernels is a popular choice in machine learning and has been argued to be a sensible general-purpose nonlinear kernel for physical data [28]. The Matérn kernel is defined as a function of the absolute difference between any two points in time, $r = |t - t'|$. As such, it is a stationary isotropic kernel, i.e., it is invariant to the absolute values of the inputs, their translation or rotation. Its characteristics are controlled by two hyperparameters: a total scaling $\sigma_f^2$ and a length scale $\ell$, which mediates the characteristic frequency of the function. In addition, particular kernels are selected from this family by virtue of a smoothness parameter $\nu$. The general form of the kernel is given by,

$$k(r) = \sigma_f^2 \, \frac{2^{1-\nu}}{\Gamma(\nu)} \left( \frac{\sqrt{2\nu}}{\ell} r \right)^{\nu} K_\nu\!\left( \frac{\sqrt{2\nu}}{\ell} r \right) \tag{9}$$

where $\Gamma(\cdot)$ is the gamma function and $K_\nu(\cdot)$ is the modified Bessel function of the second kind. The most common Matérn kernels are chosen such that $\nu = p + 1/2$, where $p$ is zero or a positive integer. When $\nu = 1/2$, Equation (9) recovers the Matérn 1/2 kernel, which is equivalent to the function being modelled as an Ornstein-Uhlenbeck process [19]; as $\nu \to \infty$, the Matérn covariance converges to the squared-exponential or Gaussian kernel.
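For half-integer smoothness the Matérn kernel has well-known closed forms that avoid the Bessel function. The sketch below evaluates these for $p \in \{0, 1, 2\}$ and draws one sample from the corresponding zero-mean GP prior. The function name `matern`, the hyperparameter values and the small diagonal jitter are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def matern(r, sigma2, ell, p):
    """Matern kernel with nu = p + 1/2 for p in {0, 1, 2}, evaluated at lag r."""
    nu = p + 0.5
    s = np.sqrt(2.0 * nu) * np.abs(r) / ell
    # Polynomial prefactors of the closed-form half-integer Matern kernels
    poly = {0: 1.0, 1: 1.0 + s, 2: 1.0 + s + s ** 2 / 3.0}[p]
    return sigma2 * poly * np.exp(-s)

# Covariance matrix on a time grid and one draw from the zero-mean GP prior
t = np.linspace(0.0, 5.0, 50)
K = matern(t[:, None] - t[None, :], sigma2=2.0, ell=0.7, p=1)
rng = np.random.default_rng(0)
chol = np.linalg.cholesky(K + 1e-8 * np.eye(len(t)))   # jitter for stability
sample = chol @ rng.standard_normal(len(t))
```

Each such `sample` is one plausible forcing time history under the prior; increasing `p` gives smoother draws, while `ell` sets how quickly they vary in time.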

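To convert these covariance functions into equivalent state-space models, the spectral density of the covariance function is considered. Taking the Fourier transform of Equation (9) gives the spectral density,

$$S(\omega) = \sigma_f^2 \, \frac{2 \pi^{1/2} \, \Gamma(\nu + 1/2)}{\Gamma(\nu)} \, \lambda^{2\nu} \left( \lambda^2 + \omega^2 \right)^{-(\nu + 1/2)} \tag{10}$$

where $\lambda = \sqrt{2\nu}/\ell$. This density can then be rearranged into a rational form with a constant numerator and a denominator that can be expressed as a polynomial in $\omega^2$. This can be rewritten in the form $S(\omega) = H(i\omega) \, q \, H(-i\omega)$, where $H(i\omega)$ defines the transfer function of a process for $f(t)$ governed by the differential equation below, with $q$ being the spectral density of the white noise process $w(t)$,

$$\frac{d^a f(t)}{dt^a} + \alpha_{a-1} \frac{d^{a-1} f(t)}{dt^{a-1}} + \cdots + \alpha_1 \frac{d f(t)}{dt} + \alpha_0 f(t) = w(t) \tag{11}$$

The differential equation has order $a$, with associated coefficients $\alpha_0, \ldots, \alpha_{a-1}$, assuming $\alpha_a = 1$. This system is driven by a continuous-time white noise process which has a spectral density equal to the numerator of the rational form of the spectral density of the kernel.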

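Returning to the Matérn kernels, it can be shown that,

$$S(\omega) \propto \left( \lambda^2 + \omega^2 \right)^{-(p+1)} = (\lambda + i\omega)^{-(p+1)} (\lambda - i\omega)^{-(p+1)} \tag{12}$$

defining $\lambda = \sqrt{2\nu}/\ell$. With this being the denominator of the rational form, the numerator is simply the constant of proportionality for Equation (12), which will be referred to as $q$, with

$$q = \frac{2 \sigma_f^2 \, \pi^{1/2} \, \lambda^{2p+1} \, \Gamma(p+1)}{\Gamma(p + 1/2)} \tag{13}$$

Therefore, the spectral density of $w(t)$ is equal to $q$.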

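The expression for the GP in Equation (11) can now easily be converted into a linear Gaussian state-space model. In continuous time, this produces models of the form,

$$\frac{d}{dt} \begin{bmatrix} f \\ \dot{f} \\ \vdots \\ f^{(a-1)} \end{bmatrix} = \begin{bmatrix} 0 & 1 & & \\ & \ddots & \ddots & \\ & & 0 & 1 \\ -\alpha_0 & -\alpha_1 & \cdots & -\alpha_{a-1} \end{bmatrix} \begin{bmatrix} f \\ \dot{f} \\ \vdots \\ f^{(a-1)} \end{bmatrix} + \begin{bmatrix} 0 \\ \vdots \\ 0 \\ 1 \end{bmatrix} w(t) \tag{14}$$

For example, setting $\nu = 1/2$, $\nu = 3/2$ and $\nu = 5/2$ yields, respectively,

$$\dot{f} = -\lambda f + w(t) \tag{15}$$

$$\frac{d}{dt} \begin{bmatrix} f \\ \dot{f} \end{bmatrix} = \begin{bmatrix} 0 & 1 \\ -\lambda^2 & -2\lambda \end{bmatrix} \begin{bmatrix} f \\ \dot{f} \end{bmatrix} + \begin{bmatrix} 0 \\ 1 \end{bmatrix} w(t) \tag{16}$$

$$\frac{d}{dt} \begin{bmatrix} f \\ \dot{f} \\ \ddot{f} \end{bmatrix} = \begin{bmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ -\lambda^3 & -3\lambda^2 & -3\lambda \end{bmatrix} \begin{bmatrix} f \\ \dot{f} \\ \ddot{f} \end{bmatrix} + \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix} w(t) \tag{17}$$

This procedure has now provided a state-space representation for the temporal GP, which is the prior distribution for a function in time placed over the unknown force on the oscillator.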
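One way to sanity-check a state-space form of a Matérn kernel is to confirm that the stationary variance of its first state equals the kernel's total scaling. The sketch below builds the companion-form matrices for $\nu = p + 1/2$ by expanding $(\lambda + s)^{p+1}$, uses the standard white noise spectral density for this kernel family, and solves the continuous-time Lyapunov equation by vectorisation. The function names and numerical values are hypothetical, chosen here for illustration.

```python
import numpy as np
from math import comb, gamma, pi, sqrt

def matern_state_space(sigma2, ell, p):
    """Companion-form F, L and white-noise spectral density q for nu = p + 1/2."""
    lam = sqrt(2.0 * (p + 0.5)) / ell
    a = p + 1                                   # order of the differential equation
    # (lam + s)^a = sum_k C(a, k) lam^(a - k) s^k, so alpha_k = C(a, k) lam^(a - k)
    alpha = [comb(a, k) * lam ** (a - k) for k in range(a)]
    F = np.diag(np.ones(a - 1), k=1)            # ones on the superdiagonal
    F[-1, :] = -np.array(alpha)                 # last row holds -alpha_0 ... -alpha_{a-1}
    L = np.zeros((a, 1))
    L[-1, 0] = 1.0                              # noise enters the highest derivative
    q = 2.0 * sigma2 * sqrt(pi) * lam ** (2 * p + 1) * gamma(p + 1) / gamma(p + 0.5)
    return F, L, q

def stationary_covariance(F, L, q):
    """Solve the Lyapunov equation F P + P F^T + q L L^T = 0 by vectorisation."""
    n = F.shape[0]
    eye = np.eye(n)
    A = np.kron(eye, F) + np.kron(F, eye)
    Q = q * (L @ L.T)
    return np.linalg.solve(A, -Q.reshape(-1)).reshape(n, n)

F, L, q = matern_state_space(sigma2=2.0, ell=0.7, p=1)
P = stationary_covariance(F, L, q)              # P[0, 0] should equal sigma2
```

For the Matérn 3/2 case the stationary covariance is diagonal, with the variance of the derivative state equal to $\lambda^2 \sigma_f^2$, which provides a second quick check.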

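At this point, the states of the linear system can be augmented with this new model for the forcing. For example, for a model with a Matérn 3/2 kernel chosen to represent the force, the full system would be,

$$\frac{d}{dt} \begin{bmatrix} z \\ \dot{z} \\ u \\ \dot{u} \end{bmatrix} = \begin{bmatrix} 0 & 1 & 0 & 0 \\ -k/m & -c/m & 1/m & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & -\lambda^2 & -2\lambda \end{bmatrix} \begin{bmatrix} z \\ \dot{z} \\ u \\ \dot{u} \end{bmatrix} + \begin{bmatrix} 0 \\ 0 \\ 0 \\ 1 \end{bmatrix} w(t) \tag{18}$$

where $u$ is the augmented hidden state which represents the unknown forcing; as a consequence of the model formulation, its derivative $\dot{u}$ is also estimated. Since this is a linear system, it is a standard procedure to convert it into a discrete-time form, after which the Kalman filtering and RTS smoothing solutions can be applied; see [22] for an example of this. Doing so, the smoothing distribution over the forcing is identical to solving the problem in Equation (8) as in [23].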
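The discretisation step mentioned above can be sketched as follows. This is an illustrative example only: it uses Van Loan's matrix-exponential method, which is one standard choice and is not necessarily the one used in [22], and a small hand-written `expm` to stay within NumPy. The oscillator and kernel values are assumed for demonstration.

```python
import numpy as np

def expm(A, order=18):
    """Matrix exponential by scaling and squaring a truncated Taylor series."""
    norm = np.linalg.norm(A, ord=np.inf)
    squarings = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    scaled = A / 2.0 ** squarings
    term = np.eye(A.shape[0])
    result = term.copy()
    for k in range(1, order + 1):
        term = term @ scaled / k                # next Taylor term
        result = result + term
    for _ in range(squarings):
        result = result @ result                # undo the scaling
    return result

def discretise(F, L, q, dt):
    """Van Loan's method: returns A, Q such that x[t+1] = A x[t] + N(0, Q)."""
    n = F.shape[0]
    M = np.zeros((2 * n, 2 * n))
    M[:n, :n] = F
    M[:n, n:] = q * (L @ L.T)
    M[n:, n:] = -F.T
    Phi = expm(M * dt)
    A = Phi[:n, :n]
    Q = Phi[:n, n:] @ A.T
    return A, 0.5 * (Q + Q.T)                   # symmetrise against round-off

# Illustrative (assumed) oscillator and Matern 3/2 hyperparameter values
m, c, k = 1.0, 0.2, 10.0
sigma2, ell = 1.0, 0.5
lam = np.sqrt(3.0) / ell
F = np.array([[0.0, 1.0, 0.0, 0.0],
              [-k / m, -c / m, 1.0 / m, 0.0],
              [0.0, 0.0, 0.0, 1.0],
              [0.0, 0.0, -lam ** 2, -2.0 * lam]])
L = np.array([[0.0], [0.0], [0.0], [1.0]])
q = 4.0 * sigma2 * lam ** 3                     # Matern 3/2 noise spectral density
A, Q = discretise(F, L, q, dt=0.01)
```

The pair `A`, `Q` can then be passed to any standard Kalman filter and RTS smoother implementation.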

Up to this point, the model has been shown in the context of linear systems. This has hopefully provided a roadmap for the construction of an equivalent nonlinear model. The starting point for developing the nonlinear model is to generalise Equation (8) to the nonlinear case,

$$m\ddot{z} + h(z, \dot{z}) = U, \qquad U \sim \mathcal{GP}\left(0, k(t, t')\right) \tag{19}$$

where $h(z, \dot{z})$ denotes the nonlinear internal forces. Considering the development of the model for the linear system shown previously, the reader will notice that the dynamics of the system do not enter until the final step in the model construction. It is therefore possible to use an identical procedure to convert the forcing modelled as a GP into a state-space form. Depending on the kernel, the force will still be represented in the same way, for example as in Equations (15)–(17). In order to extend the method to the nonlinear case, it is necessary to consider how this linear model of the forcing interacts with the nonlinear dynamics of the system. This can be done by forming the nonlinear state-space equation for the system. Again using the Matérn 3/2 kernel as an example,

$$\frac{d}{dt} \begin{bmatrix} z \\ \dot{z} \\ u \\ \dot{u} \end{bmatrix} = \begin{bmatrix} \dot{z} \\ \left( u - h(z, \dot{z}) \right) / m \\ \dot{u} \\ -\lambda^2 u - 2\lambda \dot{u} \end{bmatrix} + \begin{bmatrix} 0 \\ 0 \\ 0 \\ 1 \end{bmatrix} w(t) \tag{20}$$

with the spectral density of $w(t)$ being $q$ as before. Since this is now a nonlinear model, it is no longer possible to write the transition as a matrix-vector product, and the state equations are written out in full. It is worth bearing in mind that certain nonlinearities may increase the dimension of the hidden states in the model; for example, the Bouc-Wen hysteresis model. This form of nonlinearity can be incorporated into this framework, provided the system equations can be expressed as a nonlinear state-space model.
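As an illustration of how the nonlinear dynamics and the state-space forcing model combine, the sketch below simulates an augmented model with a Matérn 3/2 forcing prior using an Euler-Maruyama scheme. The cubic (Duffing-type) stiffness, the parameter values, the time step and the integration scheme are all assumptions made here for demonstration; the paper does not prescribe them in this section.

```python
import numpy as np

def simulate_duffing_lfm(n_steps, dt, m, c, k, k3, sigma2, ell, rng):
    """Euler-Maruyama simulation of an oscillator driven by a Matern 3/2 force.

    State columns are z, z_dot, u, u_dot. The internal force is assumed to be
    h(z, z_dot) = c * z_dot + k * z + k3 * z**3 (a Duffing-type example).
    """
    lam = np.sqrt(3.0) / ell
    q = 4.0 * sigma2 * lam ** 3                  # white-noise spectral density
    x = np.zeros((n_steps, 4))
    # Draw the initial force states from the stationary GP prior
    x[0, 2] = np.sqrt(sigma2) * rng.standard_normal()
    x[0, 3] = lam * np.sqrt(sigma2) * rng.standard_normal()
    for t in range(1, n_steps):
        z, zd, u, ud = x[t - 1]
        h = c * zd + k * z + k3 * z ** 3         # nonlinear internal force
        drift = np.array([zd, (u - h) / m, ud, -lam ** 2 * u - 2.0 * lam * ud])
        x[t] = x[t - 1] + dt * drift
        x[t, 3] += np.sqrt(q * dt) * rng.standard_normal()   # driving noise
    return x

rng = np.random.default_rng(0)
states = simulate_duffing_lfm(
    n_steps=5000, dt=0.001, m=1.0, c=0.5, k=10.0, k3=100.0,
    sigma2=1.0, ell=0.5, rng=rng,
)
force = states[:, 2]                             # simulated latent forcing u
```

In a particle filter, one step of this simulation (with its noise draw) plays the role of sampling from the transition density, which is all the bootstrap proposal requires.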

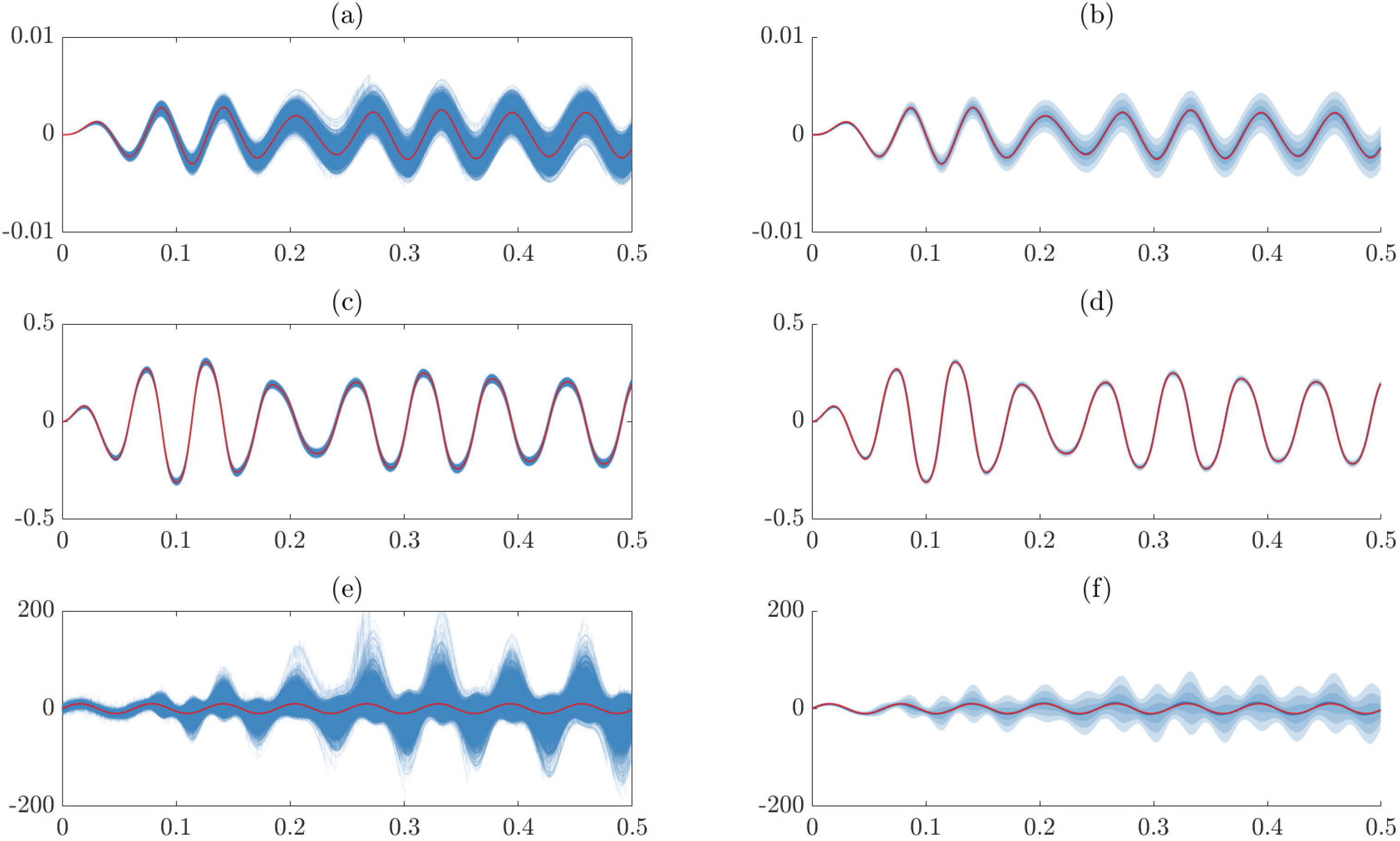

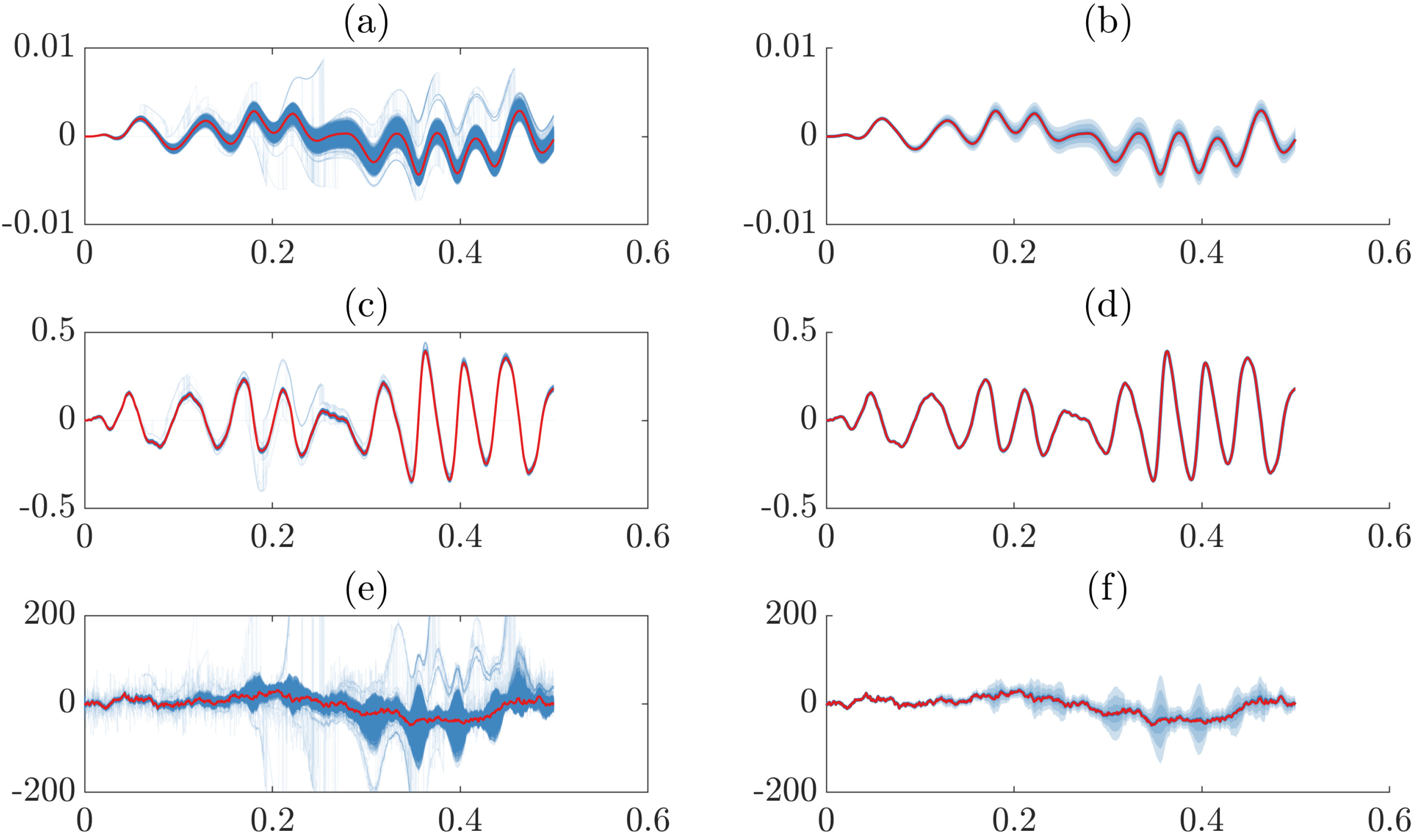

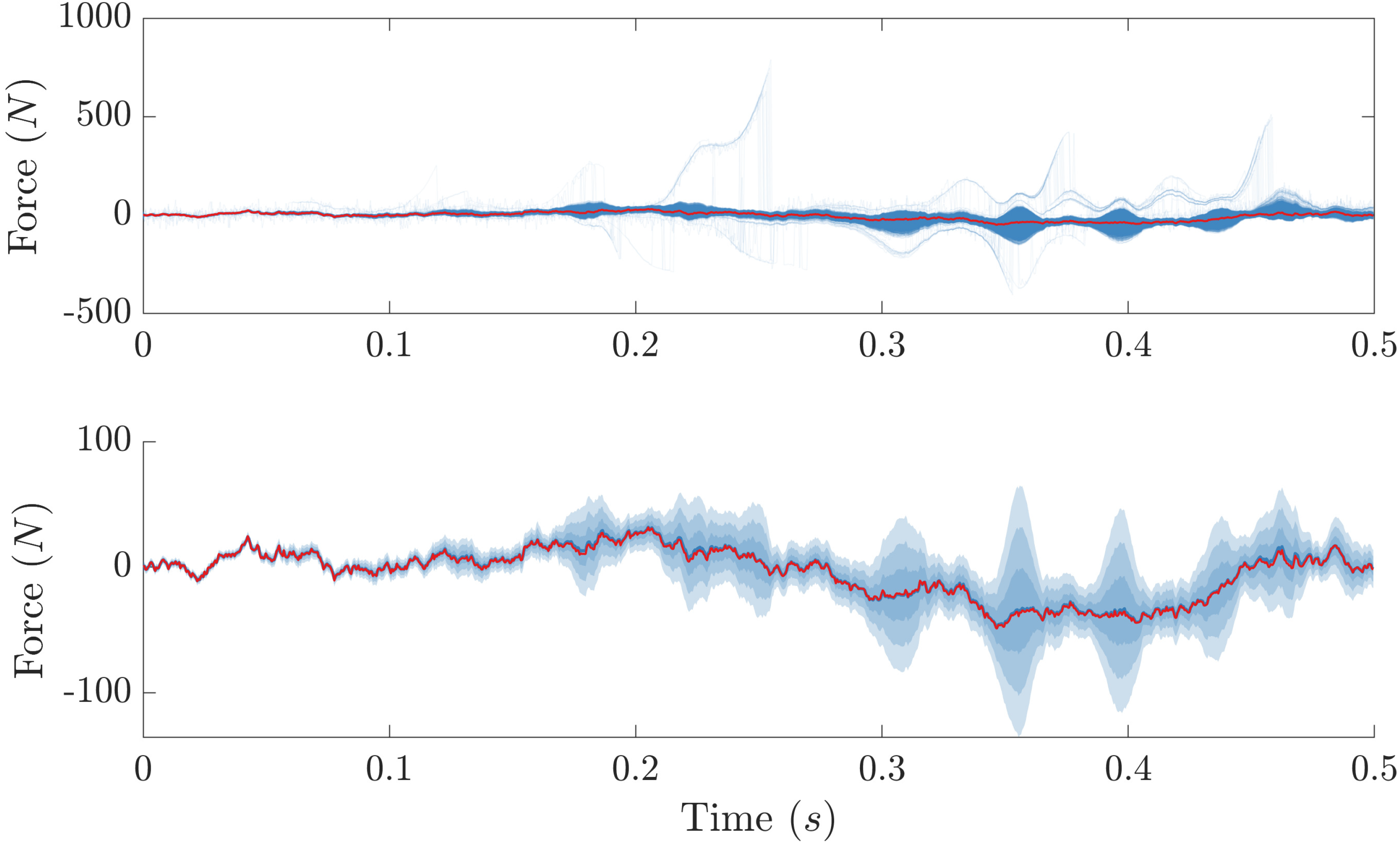

2.3. Inference

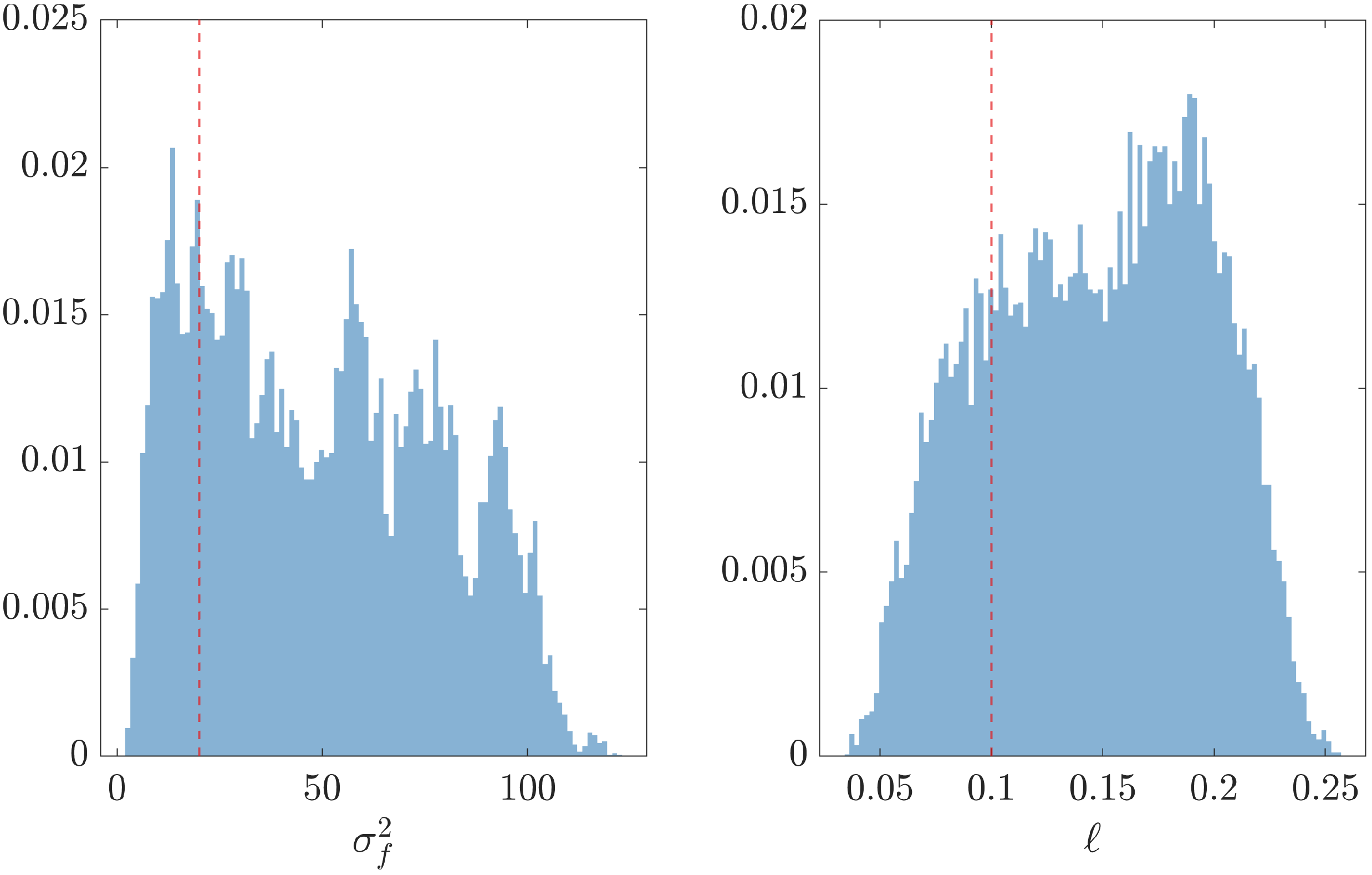

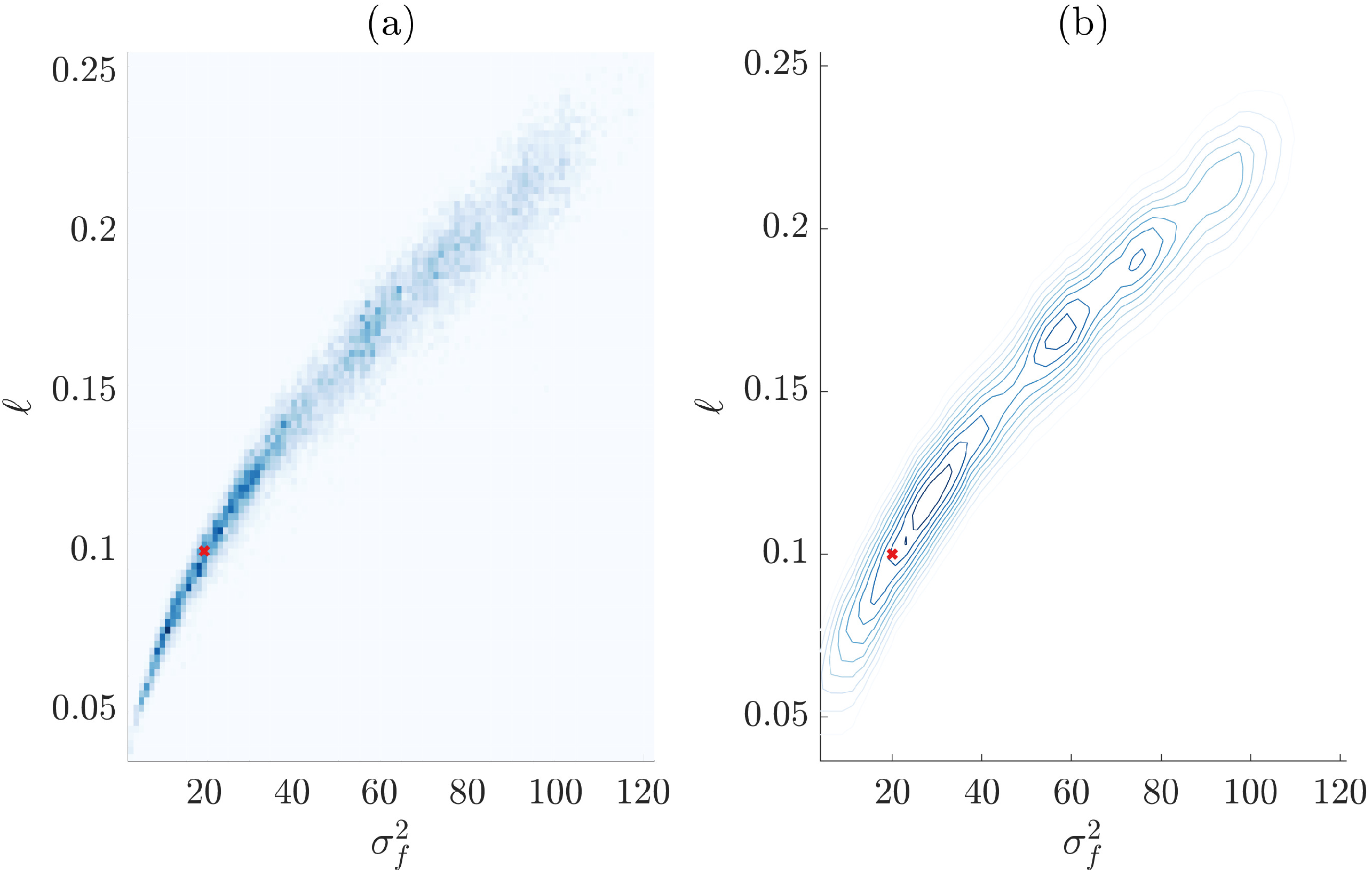

With a nonlinear model for the system in hand, attention turns to inference. There are two unknowns in the system: the hyperparameters associated with the model of the forcing ($\sigma_f^2$ and $\ell$) and the internal states, which have now been augmented with states related to the forcing signal. Additionally, in order to recover the GP representation of the force, the smoothing distribution of the states is required [26]. In this model, it is assumed that the parameters of the physical system are known or fixed a priori and that the task being attempted is joint input-state estimation.

Identification of the smoothing distribution of a nonlinear state-space model is a more challenging task than filtering; however, the basic framework of particle filtering can be used. There are a variety of methods to recover the smoothing distribution of the states starting from a particle filtering approach [19]. In this case, however, since there are also (hyper)parameters which require identification and the Bayesian solution is desired, a Markov Chain Monte Carlo (MCMC) approach is adopted. Specifically, particle MCMC (pMCMC) [1] is an approach to performing MCMC inference for nonlinear state-space models.

The particular approach used in this work is a particle Gibbs scheme. In this methodology, a form of particle filter is used to build a Markov kernel which can draw valid samples from the smoothing distribution of the nonlinear state-space model conditioned on some (hyper)parameters. This sample of the states is then used to sample a new set of (hyper)parameters. Similarly to a classical Gibbs sampling approach, alternating samples from these two conditional distributions allows valid samples from the joint posterior to be generated. This approach shows improved efficiency in the sampler due to a blocked construction where all the states are sampled simultaneously and likewise the (hyper)parameters are sampled together. Each of these two updating steps will now be considered.

2.3.1. Inferring the States

In order to generate samples from the smoothing distributions of the states, the particle Gibbs with Ancestor Sampling (PGAS) [29] method is used. This technique will be briefly described here, but the reader is referred to Lindsten et al. [29] for a full introduction and proof that this is a valid Markov kernel with the smoothing distribution as its stationary distribution.

To generate a sample from the smoothing distribution using the particle filter, some modification needs to be made to the procedure seen for the basic particle filter. A more compact form of the Sequential Monte Carlo (SMC) scheme is shown in Algorithm 2 to make the necessary modifications clear. In particular, the notation $M_{\theta,t}(a_t, x_t)$ will be used to represent a proposal kernel which returns an ancestor index and proposed sample for the states at time $t$, $\{a_t, x_t\}$. $M_{\theta,t}$ represents the usual resampling and proposal steps in the particle filter, and the explicit book-keeping for the ancestors at each time step aids in recovering the particle paths. The ancestor index $a_t^i$ is simply an integer which indicates which particle from time $t-1$ was used to generate the proposal of $x_t^i$. The weighting of each particle is also now more compactly represented by a general weighting function $W_{\theta,t}(x_{1:t})$.

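| Algorithm 2 Sequential Monte Carlo |

| All operations for $i = 1, \ldots, N$ |

- 1: Sample $x_1^i \sim q_\theta(x_1)$
- 2: Calculate $w_1^i = W_{\theta,1}(x_1^i)$
- 3: for $t = 2, \ldots, T$ do
- 4: Sample $\{a_t^i, x_t^i\} \sim M_{\theta,t}(a_t, x_t)$
- 5: Set $x_{1:t}^i = \{x_{1:t-1}^{a_t^i}, x_t^i\}$
- 6: Calculate $w_t^i = W_{\theta,t}(x_{1:t}^i)$
- 7: end for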

Simply taking samples of the paths from this algorithm does not give rise to a valid Markov kernel for sampling the smoothing distribution [1]; to do so, it is necessary to make a modification which ensures stationarity of the kernel. This modification is to include one particle trajectory which is not updated as the algorithm runs, referred to as the reference trajectory. Although the index of this particle does not affect the algorithm, it is customary to set it to be the last index in the filter, i.e., for a particle system with $N$ particles, the $N$th particle would be the reference trajectory. This reference will be denoted as $x'_{1:T}$. This small change forms the conditional SMC (CSMC) algorithm (Algorithm 3), which is valid for generating samples of the smoothing distribution.

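| Algorithm 3 Conditional Sequential Monte Carlo |

- 1: Sample $x_1^i \sim q_\theta(x_1)$ for $i = 1, \ldots, N-1$
- 2: Set $x_1^N = x'_1$
- 3: Calculate $w_1^i = W_{\theta,1}(x_1^i)$ for $i = 1, \ldots, N$
- 4: for $t = 2, \ldots, T$ do
- 5: Sample $\{a_t^i, x_t^i\} \sim M_{\theta,t}(a_t, x_t)$ for $i = 1, \ldots, N-1$
- 6: Set $x_{1:t}^i = \{x_{1:t-1}^{a_t^i}, x_t^i\}$ for $i = 1, \ldots, N-1$
- 7: Set $x_t^N = x'_t$
- 8: Set $a_t^N = N$
- 9: Calculate $w_t^i = W_{\theta,t}(x_{1:t}^i)$ for $i = 1, \ldots, N$
- 10: end for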

To sample from the smoothing distribution of the states, a path is selected by sampling from a categorical distribution with probabilities $w_T^i$, i.e., the normalised weights of each particle at the final time step. The path is defined in terms of the ancestors of that particle working backwards in time, and has been updated as the algorithm runs (line 6 of Algorithm 3). CSMC forms a serviceable algorithm; however, it can show poor mixing in the Markov chain close to the beginning of the trajectories owing to path degeneracy.

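The ancestor sampling approach proposed in [29] is a simple yet powerful modification to this algorithm. It adds the step of sampling the ancestor for the reference trajectory in the CSMC filter. The ancestor is sampled at each time step according to the following unnormalised weight,

$$\tilde{w}_{t-1 \mid T}^i = w_{t-1}^i \, \frac{\gamma_{\theta,T}\left( \{x_{1:t-1}^i, x'_{t:T}\} \right)}{\gamma_{\theta,t-1}\left( x_{1:t-1}^i \right)} \tag{21}$$

where $\gamma_{\theta,t}(x_{1:t})$ is the unnormalised posterior over the state path and $x'_{1:T}$ is the reference trajectory. When using the bootstrap filter discussed earlier and resampling at every time step, Equation (21) reduces, up to a constant that does not depend on $i$, to,

$$\tilde{w}_{t-1 \mid T}^i = w_{t-1}^i \, p_\theta(x'_t \mid x_{t-1}^i) \tag{22}$$

remembering that, for the bootstrap filter, $w_{t-1}^i \propto p_\theta(y_{t-1} \mid x_{t-1}^i)$. Conceptually, this is sampling the ancestor for the remaining portion of the reference trajectory according to the likelihood of the reference at time $t$ given the transition from all of the particles (including the reference) at the previous time step; doing so maintains an invariant Markov kernel on $p_\theta(x_{1:T} \mid y_{1:T})$.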

Given this ancestor sampling procedure, the PGAS Markov kernel can be written down as in Algorithm 4. This PGAS kernel will generate a sample $x^\star_{1:T}$ given the inputs of the parameters $\theta$ and a reference trajectory $x'_{1:T}$.

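| Algorithm 4 PGAS Markov Kernel |

- 1: Sample $x_1^i \sim q_\theta(x_1)$ for $i = 1, \ldots, N-1$
- 2: Set $x_1^N = x'_1$
- 3: Calculate $w_1^i = W_{\theta,1}(x_1^i)$ for $i = 1, \ldots, N$
- 4: for $t = 2, \ldots, T$ do
- 5: Sample $\{a_t^i, x_t^i\} \sim M_{\theta,t}(a_t, x_t)$ for $i = 1, \ldots, N-1$
- 6: Calculate $\tilde{w}_{t-1 \mid T}^i$ using Equation (22)
- 7: Sample $a_t^N$ with $\mathbb{P}(a_t^N = i) \propto \tilde{w}_{t-1 \mid T}^i$
- 8: Set $x_t^N = x'_t$
- 9: Set $x_{1:t}^i = \{x_{1:t-1}^{a_t^i}, x_t^i\}$ for $i = 1, \ldots, N$
- 10: Calculate $w_t^i = W_{\theta,t}(x_{1:t}^i)$ for $i = 1, \ldots, N$
- 11: end for
- 12: Draw $k$ with $\mathbb{P}(k = i) \propto w_T^i$
- 13: return $x^\star_{1:T} = x_{1:T}^k$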
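A compact sketch of one sweep of a bootstrap PGAS kernel is given below for a scalar-state model. It is an illustration under stated assumptions, not the authors' code: the toy linear Gaussian model, the function and argument names, and the choice to store ancestor indices and trace the selected path backwards at the end (rather than copying full path histories at every step) are all choices made here.

```python
import numpy as np

def pgas_kernel(y, x_ref, sample_x1, propagate, log_trans, log_obs, n, rng):
    """One PGAS sweep: returns a new trajectory given the reference x_ref."""
    T = len(y)
    x = np.empty((T, n))
    anc = np.zeros((T, n), dtype=int)
    x[0, :-1] = sample_x1(n - 1, rng)
    x[0, -1] = x_ref[0]                          # reference occupies the last slot
    log_w = log_obs(y[0], x[0])
    for t in range(1, T):
        w = np.exp(log_w - log_w.max())
        w /= w.sum()
        anc[t, :-1] = rng.choice(n, size=n - 1, p=w)        # resample ancestors
        x[t, :-1] = propagate(x[t - 1, anc[t, :-1]], rng)   # bootstrap proposal
        # Ancestor sampling: reweight by the transition density to x_ref[t]
        log_as = log_w + log_trans(x_ref[t], x[t - 1])
        w_as = np.exp(log_as - log_as.max())
        w_as /= w_as.sum()
        anc[t, -1] = rng.choice(n, p=w_as)
        x[t, -1] = x_ref[t]
        log_w = log_obs(y[t], x[t])              # bootstrap weights
    w = np.exp(log_w - log_w.max())
    w /= w.sum()
    k = rng.choice(n, p=w)                       # select one particle at time T
    path = np.empty(T)
    for t in range(T - 1, -1, -1):               # trace its ancestry backwards
        path[t] = x[t, k]
        k = anc[t, k]
    return path

def log_normal(z, mean, std):
    return -0.5 * ((z - mean) / std) ** 2 - np.log(std * np.sqrt(2.0 * np.pi))

# Toy linear Gaussian model (assumed values, for illustration only)
rng = np.random.default_rng(2)
a, sv, sw, T = 0.9, 0.5, 0.3, 50
x_true = np.empty(T)
x_true[0] = rng.normal()
for t in range(1, T):
    x_true[t] = a * x_true[t - 1] + sv * rng.normal()
y = x_true + sw * rng.normal(size=T)

path = np.zeros(T)                               # initial guess for the reference
samples = []
for j in range(60):
    path = pgas_kernel(
        y, path,
        sample_x1=lambda n_s, r: r.normal(size=n_s),
        propagate=lambda xp, r: a * xp + sv * r.normal(size=xp.shape),
        log_trans=lambda x_next, x_prev: log_normal(x_next, a * x_prev, sv),
        log_obs=lambda y_t, xs: log_normal(y_t, xs, sw),
        n=20, rng=rng,
    )
    samples.append(path)
smoothed_mean = np.mean(samples[20:], axis=0)    # discard the first 20 as burn-in
```

Even with only 20 particles, the ancestor sampling step lets the reference trajectory change along its whole length, which is what improves mixing relative to plain CSMC.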

The methodology outlined in Algorithm 4 provides a means of generating samples from the smoothing distribution of the states given a previous sample of the states and a sampled set of parameters. Therefore, initialising the algorithm with a guess of the state trajectory and parameters allows samples from the posterior to be generated. It is also necessary to bear in mind the usual burn-in required for an MCMC method, meaning that the initial samples will be discarded. In other words, it is now possible to sample the states at step $j$ in the MCMC scheme as $x_{1:T}^{(j)} \sim \kappa_{\mathrm{PGAS}}\left( \cdot \mid x_{1:T}^{(j-1)}, \theta^{(j-1)} \right)$. It remains to develop a corresponding update for sampling $\theta^{(j)}$.

2.3.2. Inferring the Hyperparameters

Working in the Gibbs sampling setting means that the samples of the hyperparameters are generated based upon the most recent sample of the state values. Therefore, it is necessary to consider samples from the posterior of the (hyper)parameters given the total data likelihood. In other words, a method must be developed to sample from $p(\theta \mid x_{1:T}, y_{1:T})$. Before addressing how that is done, it is useful to clarify what is meant by $\theta$ in the context of this nonlinear joint input-state estimation problem. Since it is assumed that the physical parameters of the system are known a priori, the only parameters which need identification in this model are the hyperparameters of the GP. This should simplify the problem, as the dimensionality of the parameter vector being inferred remains low. As such, it can be said that $\theta = \{\sigma_f^2, \ell\}$. Another advantage of taking the GP latent force approach is that the GP over the unknown input also defines the process noise in the model; therefore, this does not need to be estimated explicitly.

It can now be explored how to sample the conditional distribution of $\theta$, given a sample of the states. Applying Bayes' theorem,

$$p(\theta \mid x_{1:T}, y_{1:T}) = \frac{p_\theta(x_{1:T}, y_{1:T}) \, p(\theta)}{p(x_{1:T}, y_{1:T})} \tag{23}$$

Unfortunately, in this case it is not possible to sample directly from the posterior in Equation (23) in closed form. Therefore, a Metropolis-in-Gibbs approach is taken. That is, a Metropolis-Hastings kernel is used to move the parameters from $\theta$ to $\theta'$ conditioned on a sample of the states $x_{1:T}$. The use of this approach is convenient since there is no simple closed-form update for the hyperparameters, which precludes the use of a Gibbs update for them. This follows much the same approach as the standard Metropolis-Hastings kernel, where a new set of parameters is proposed according to a proposal density $q(\theta' \mid \theta)$ and accepted with an acceptance probability,

$$\alpha = \min\left( 1, \; \frac{p(\theta' \mid x_{1:T}, y_{1:T}) \, q(\theta \mid \theta')}{p(\theta \mid x_{1:T}, y_{1:T}) \, q(\theta' \mid \theta)} \right) \tag{24}$$

To calculate this acceptance probability, it is not necessary to evaluate the normalising constant in Equation (23), $p(x_{1:T}, y_{1:T})$, since this cancels. It is necessary, however, to develop an expression for the numerator $p_\theta(x_{1:T}, y_{1:T}) \, p(\theta)$. The distribution $p(\theta)$ is the prior over the hyperparameters, which is free to be set by the user. The other distribution in this expression, $p_\theta(x_{1:T}, y_{1:T})$, is the total data likelihood of the model given a sampled trajectory for $x_{1:T}$ and the observations $y_{1:T}$. Given that this is a nonlinear state-space model, the likelihood of interest is given by,

$$p_\theta(x_{1:T}, y_{1:T}) = p_\theta(x_1) \prod_{t=2}^{T} p_\theta(x_t \mid x_{t-1}) \prod_{t=1}^{T} p_\theta(y_t \mid x_t) \tag{25}$$

As can be seen in Equation (25), this quantity is directly related to the transition and observation densities in the state-space model. Since these densities and the observations are known, and a sample of the states is available from the PGAS kernel, the quantity in Equation (25) is relatively easy to compute. Therefore, so is the unnormalised posterior $p_\theta(x_{1:T}, y_{1:T}) \, p(\theta)$ (provided a tractable prior is chosen!). Computing $\alpha$ in Equation (24) is then a standard procedure; often the proposal density $q(\theta' \mid \theta)$ is chosen to be symmetric (e.g., a random walk centred on $\theta$) so that it cancels in Equation (24). As an implementation note, the authors have found that it can be helpful for stability to run a number of these Metropolis-Hastings steps for each new sample of the states $x_{1:T}^{(j)}$; this does not affect the validity of the identification procedure. A Markov kernel $\mathcal{K}\left( \theta^{(j)} \mid \theta^{(j-1)}, x_{1:T}^{(j)} \right)$ has now been defined which moves the parameters $\theta$ from step $j-1$ to step $j$ with the stationary distribution $p(\theta \mid x_{1:T}, y_{1:T})$.
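The Metropolis-within-Gibbs update for the parameters can be sketched with a symmetric random-walk proposal, so that the proposal densities cancel in the acceptance ratio. The example below is a toy stand-in rather than the paper's model: the single parameter is the process-noise standard deviation of a scalar autoregressive model, the state trajectory is held fixed as if it had just been returned by the PGAS kernel, and the exponential prior and step size are assumptions. The observation terms are omitted from the target because, in this toy model, they do not depend on the parameter and would cancel.

```python
import numpy as np

def log_target(theta, x, a=0.9):
    """Log prior plus log transition likelihood for process-noise std theta."""
    if theta <= 0.0:
        return -np.inf                           # outside the prior support
    resid = x[1:] - a * x[:-1]
    # Gaussian transition log-likelihood, dropping terms constant in theta
    log_lik = -0.5 * np.sum((resid / theta) ** 2) - len(resid) * np.log(theta)
    log_prior = -theta                           # Exponential(1) prior (assumption)
    return log_lik + log_prior

def mh_step(theta, x, step, rng):
    """One random-walk Metropolis-Hastings update of theta given the states x."""
    proposal = theta + step * rng.standard_normal()   # symmetric proposal
    log_alpha = log_target(proposal, x) - log_target(theta, x)
    if np.log(rng.random()) < log_alpha:
        return proposal                          # accept
    return theta                                 # reject and keep current value

# Fixed state trajectory standing in for a PGAS sample (true std is 0.5)
rng = np.random.default_rng(4)
x = np.zeros(500)
for t in range(1, 500):
    x[t] = 0.9 * x[t - 1] + 0.5 * rng.standard_normal()

theta, chain = 1.0, []
for _ in range(2000):
    theta = mh_step(theta, x, step=0.05, rng=rng)
    chain.append(theta)
posterior_mean = np.mean(chain[1000:])           # discard the first half as burn-in
```

Working with the log of the acceptance ratio avoids overflow and underflow when the likelihood involves a long trajectory.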

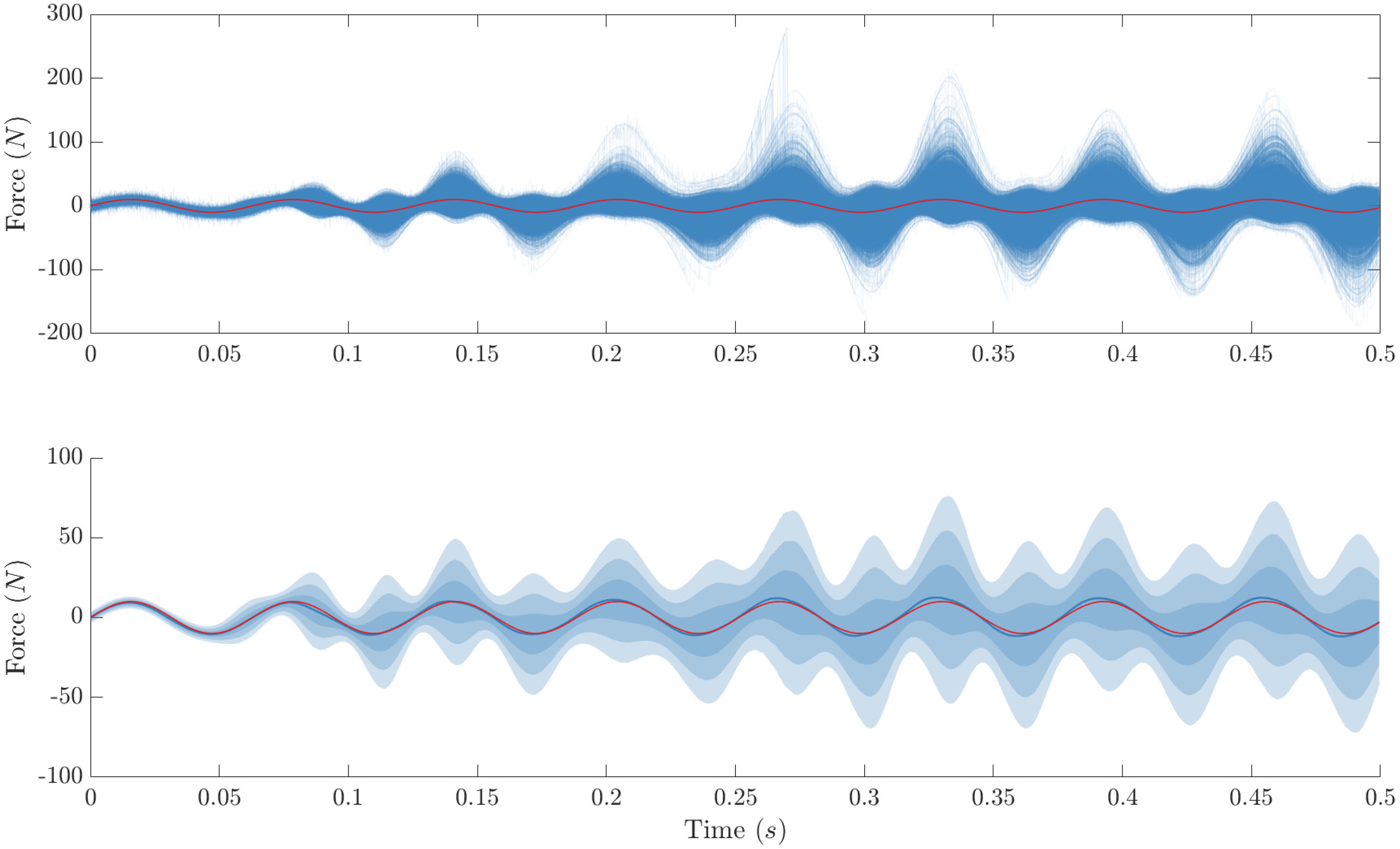

2.3.3. Blocked Particle Gibbs for Joint Input-State Estimation

The full inference procedure is then the combination of these two steps into a blocked Gibbs sampler [30]. Starting from some initial guess of $x_{1:T}^{(0)}$ and $\theta^{(0)}$, the sampler alternates between sampling the states $x_{1:T}^{(j)}$ using Algorithm 4, conditioned on the current sample $\theta^{(j-1)}$, and sampling $\theta^{(j)}$ by the Metropolis-in-Gibbs kernel conditioned on the latest sample of the states.
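The alternation between the two blocks can be expressed as a short driver loop. In the sketch below the two kernels are passed in as functions; to keep the example self-contained and checkable, they are replaced by the exact conditionals of a bivariate Gaussian with correlation `rho`, which are stand-ins chosen here and not the PGAS and Metropolis-Hastings kernels of the paper. All names and values are hypothetical.

```python
import numpy as np

def blocked_gibbs(x0, theta0, state_kernel, param_kernel, n_iter, burn_in, rng):
    """Alternate state and parameter updates, keeping post-burn-in samples."""
    x, theta = x0, theta0
    xs, thetas = [], []
    for j in range(n_iter):
        x = state_kernel(x, theta, rng)          # e.g. one PGAS sweep
        theta = param_kernel(theta, x, rng)      # e.g. Metropolis-Hastings steps
        if j >= burn_in:
            xs.append(x)
            thetas.append(theta)
    return np.array(xs), np.array(thetas)

# Stand-in kernels: exact conditionals of a standard bivariate Gaussian
rho = 0.8
cond_std = np.sqrt(1.0 - rho ** 2)

def state_kernel(x, theta, r):
    return rho * theta + cond_std * r.standard_normal()

def param_kernel(theta, x, r):
    return rho * x + cond_std * r.standard_normal()

xs, thetas = blocked_gibbs(
    0.0, 0.0, state_kernel, param_kernel,
    n_iter=6000, burn_in=1000, rng=np.random.default_rng(3),
)
corr = np.corrcoef(xs, thetas)[0, 1]             # should be close to rho
```

In the setting of this paper, `state_kernel` would wrap the PGAS kernel and `param_kernel` would run one or more Metropolis-Hastings steps on the hyperparameters.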

With this algorithm in hand, it is now possible to perform the joint input-state estimation for a nonlinear system where the forcing is modelled as a Gaussian process. The number of steps $J$ for which this chain should be run remains a user choice, although diagnostics on the chain can help to check for convergence [30]. It should also be noted that, up to this point, the form of the nonlinear system has not been restricted, but it is assumed that the system parameters are known. Likewise, kernels other than the Matérn can be used within this framework by following a similar line of logic.

Before moving to the results, it is worth considering theoretically what will happen when the model of the system is mis-specified, i.e., when the system parameters used for the input-state estimation are not correct. Because the Gaussian process model of the forcing is flexible, it will absorb this discrepancy and the estimated input to the system will be biased. The recovered “forcing” state, which is the Gaussian process, will then be a combination of the unmeasured external forcing and the internal forces required to correct for the discrepancy between the specified nonlinear system model and the “true system” which generated the observations. The level of bias in the estimated force will be affected by a number of factors: firstly, the degree to which the parameters (or model) of the nonlinear system are themselves biased and, secondly, the size of the external force relative to the correction required to align the assumed system with the true model. The authors would caution a potential user as to the dangers of a mis-specified nonlinear dynamical model if seeking a highly accurate recovery of the forcing signal. However, the results returned can still be of interest and may give insight into the dynamics of the system even when they are biased.