High-Resolution Estimates of Fire Severity—An Evaluation of UAS Image and LiDAR Mapping Approaches on a Sedgeland Forest Boundary in Tasmania, Australia

Abstract

1. Introduction

2. Materials and Methods

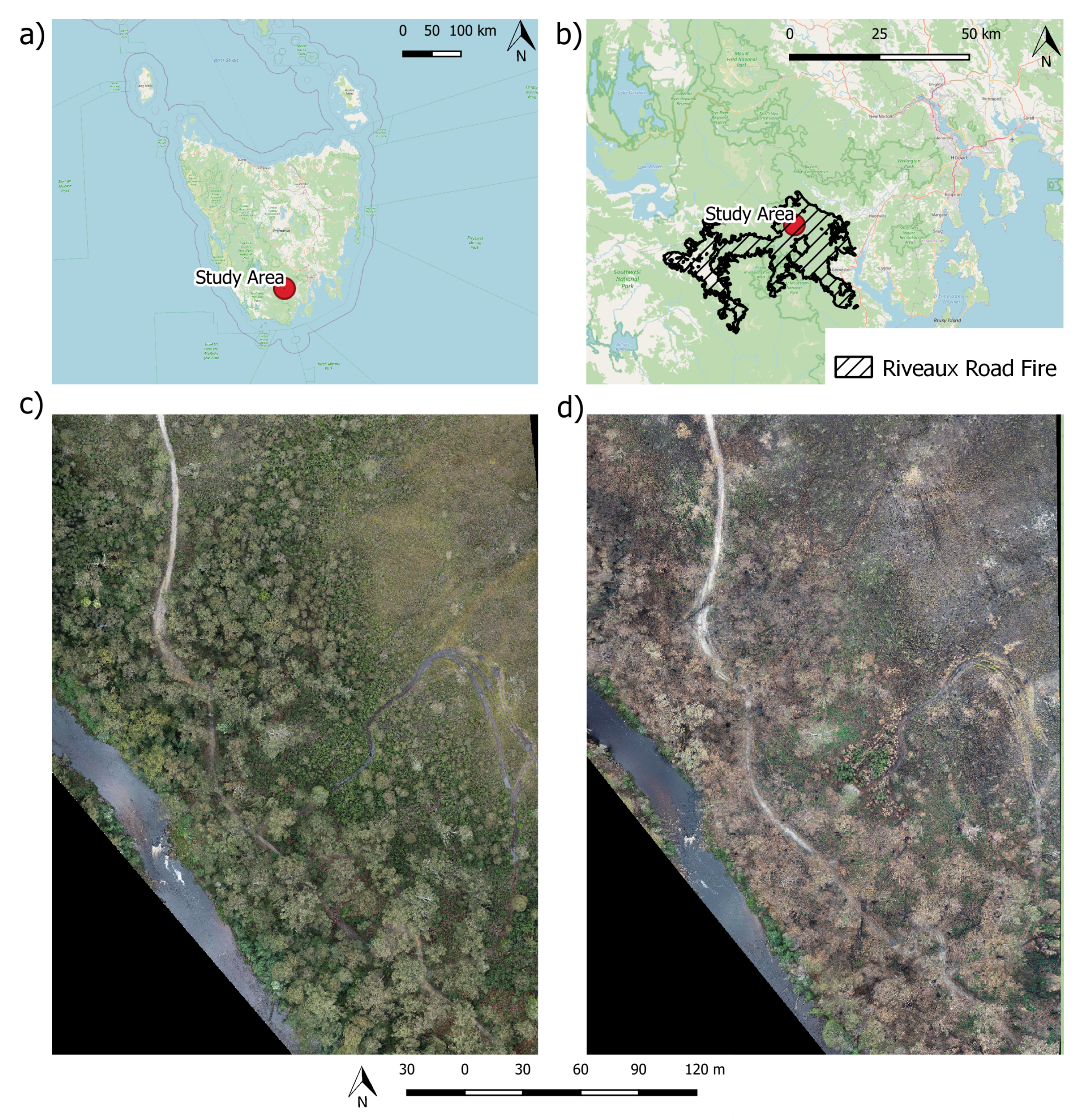

2.1. Study Area and Fire

2.2. Data Collection and Pre-Processing

2.2.1. Ground Control

2.2.2. UAS LiDAR

2.2.3. UAS SfM

2.2.4. Reference Data

2.3. Data Co-Registration

Pre to Post Point Clouds

2.4. Point Cloud Processing

2.5. Fire Severity Classification

2.5.1. Segmentation

2.5.2. Image-Based Features

2.5.3. Point Cloud Features

2.5.4. Random Forests Classification

3. Results

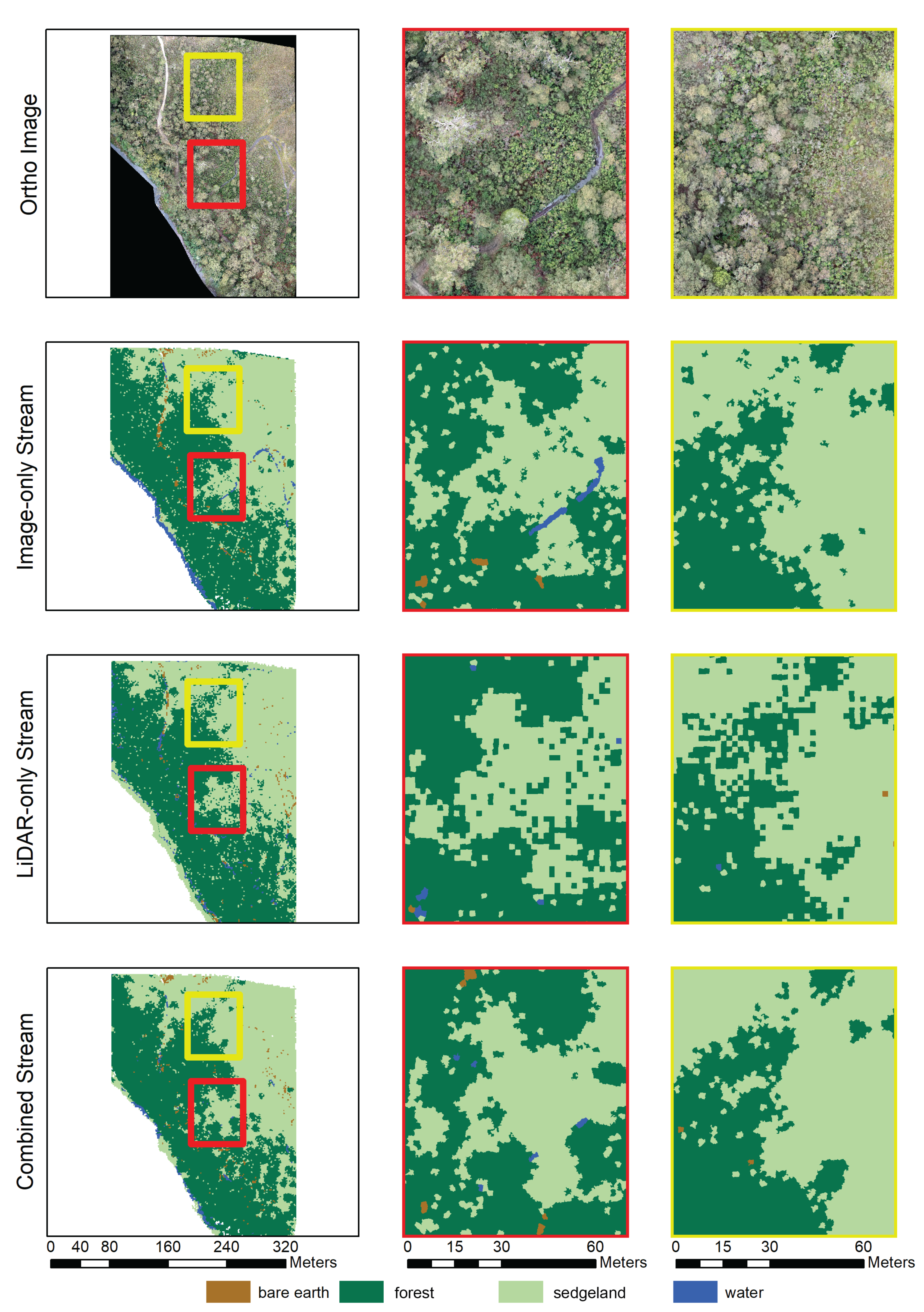

3.1. Vegetation Classification

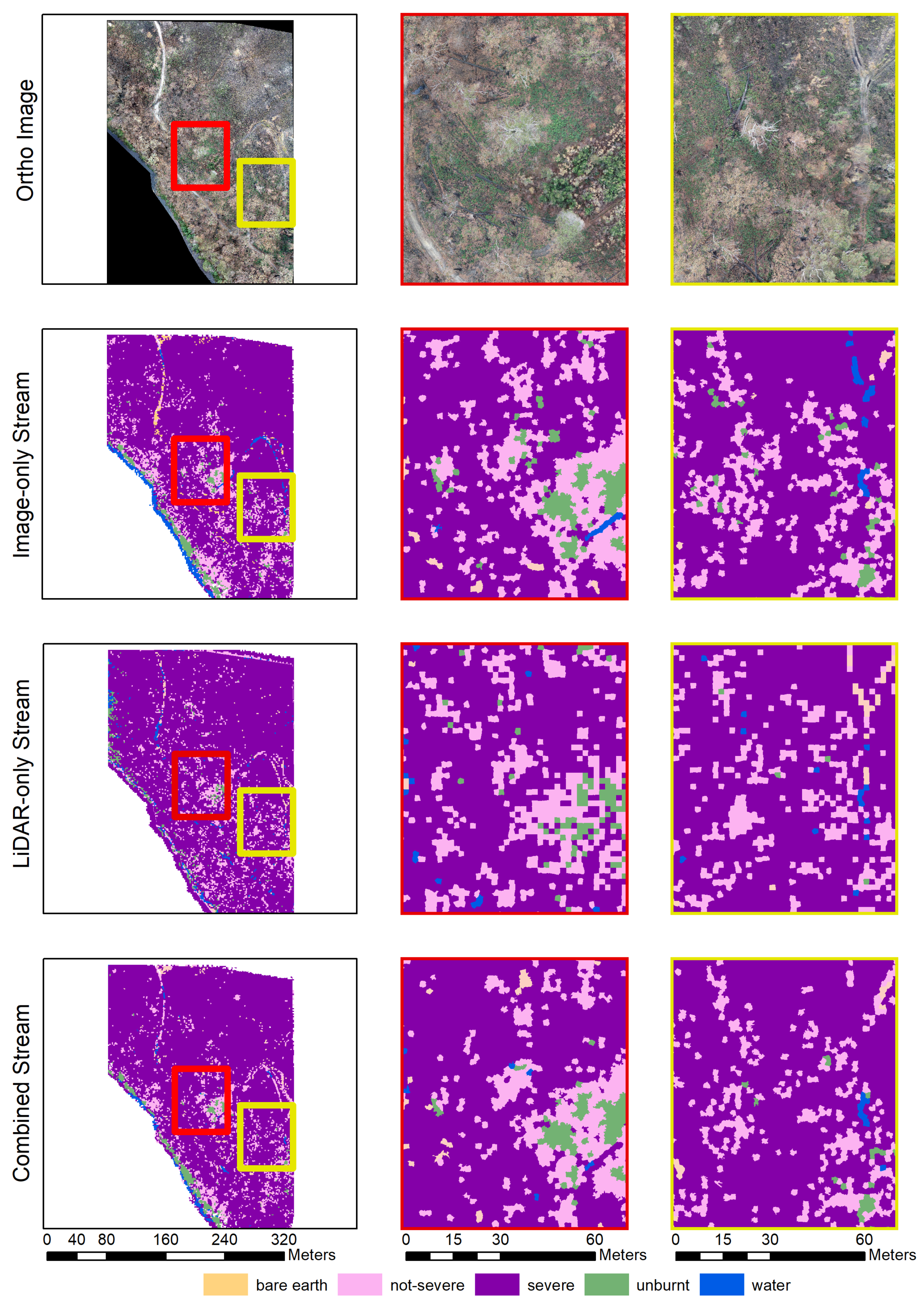

3.2. Fire Severity Classification

3.2.1. Classification of Severity within Sedgeland Segments

3.2.2. Classification of Severity within Forest Segments

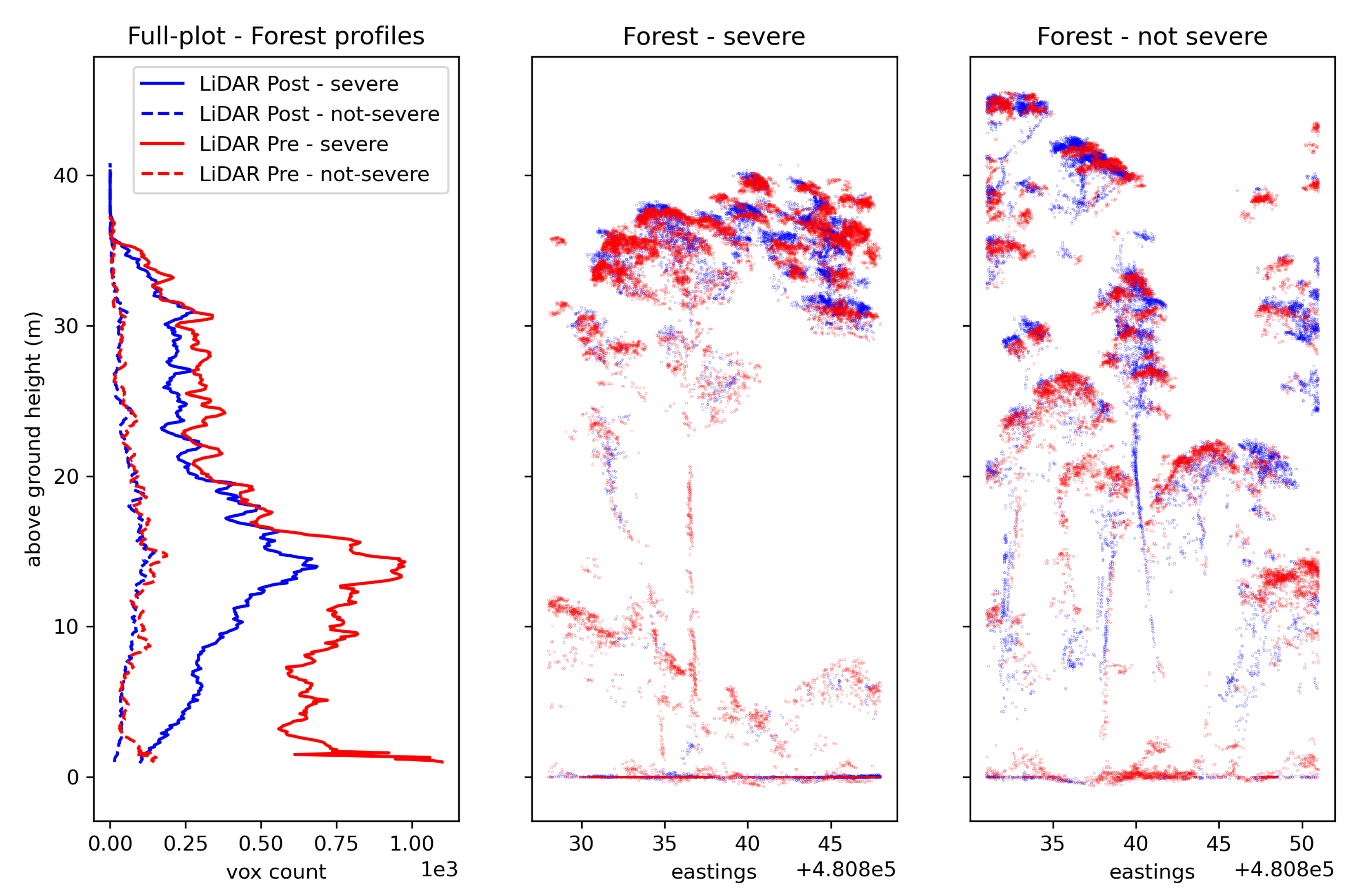

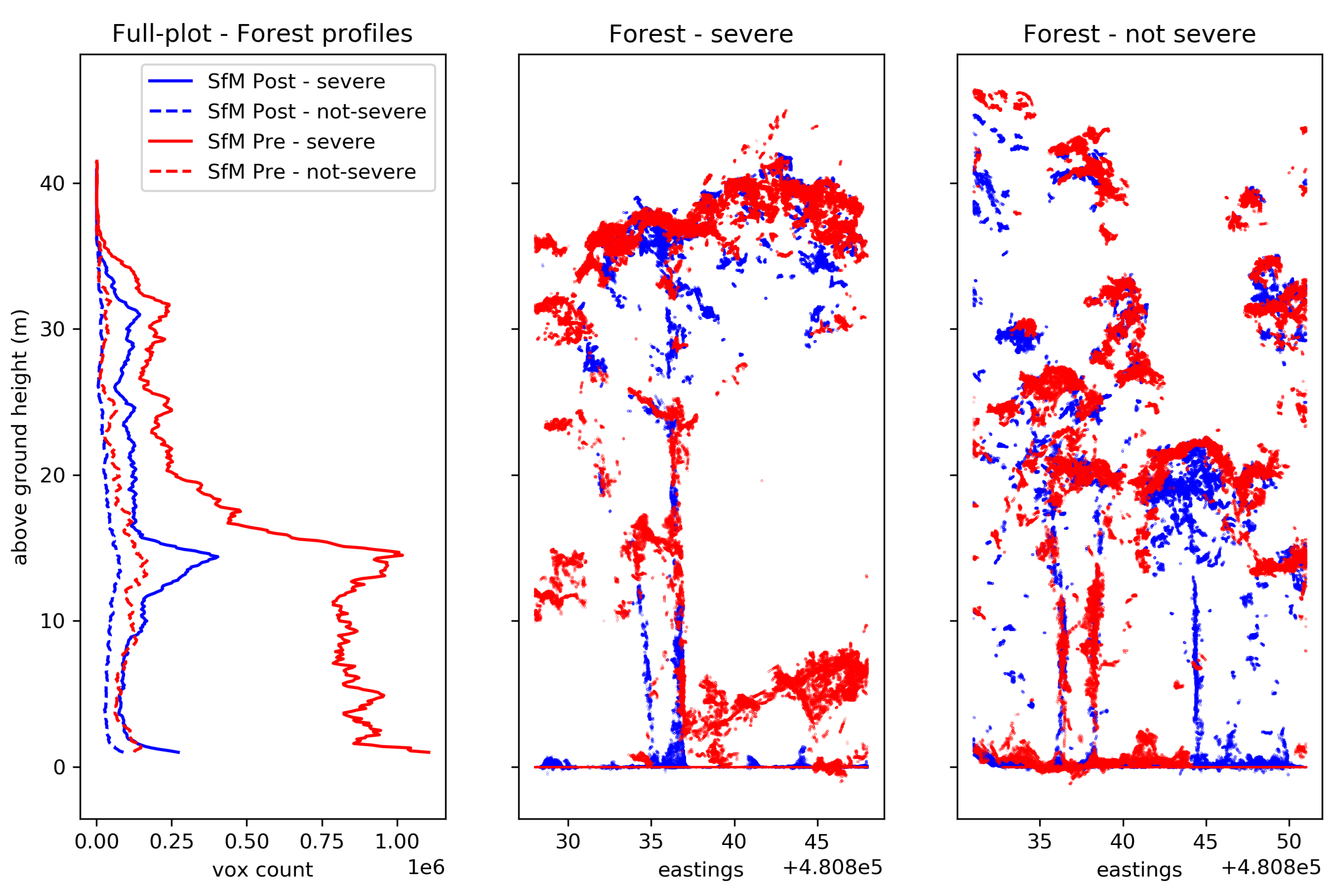

3.3. Change in Vertical Structure as a Mechanism for Describing Fire Severity

3.3.1. Forest and Severe Fire Impact

3.3.2. Forest and Not Severe Fire Impact

3.3.3. Sedgeland and Severe Fire Impact

3.3.4. Sedgeland and Not Severe Fire Impact

4. Discussion

4.1. Land Cover Accuracy

4.2. Severity Accuracy

4.3. Vertical Profile

4.4. Operational Applicability

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Predictor Variables Used in Land Cover Calculation

| | Image-Only | LiDAR-Only | Combined |
|---|---|---|---|
| Validation | 80.6% | 78.9% | 83.1% |
| Variables used | 90th percentile height | Layer count | 50th percentile height (SfM) |
| | Distance between top 2 layers (SfM) | 10th percentile height | 10th percentile height (LiDAR) |
| | A (Green-red) mean (Ortho) | 50th percentile height | 90th percentile height (LiDAR) |
| | B (Blue-yellow) mean (Ortho) | Correlation (CHM) | Distance between top 2 layers (LiDAR) |
| | Homogeneity (CHM-SfM) | Homogeneity (CHM) | A (Green-red) mean (Ortho) |
| | Entropy (CHM-SfM) | | B (Blue-yellow) mean (Ortho) |
| | Contrast (Ortho) | | Sum of squares variance (CHM-SfM) |
| | Correlation (Ortho) | | Homogeneity (CHM-SfM) |
| | | | Contrast (Ortho) |
| | | | Correlation (Ortho) |
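
Several predictors in the table above (contrast, homogeneity, entropy) read as grey-level co-occurrence texture measures computed on the canopy height model (CHM) or orthomosaic. The following is a minimal sketch of how such measures can be derived, assuming a raster already quantised to a few integer levels and a single horizontal one-pixel offset. The function names, the offset, and the sample grid are illustrative assumptions, not the study's implementation.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Glcm = Dict[Tuple[int, int], float]


def glcm_horizontal(image: List[List[int]]) -> Glcm:
    """Symmetric, normalised co-occurrence probabilities for horizontal neighbours."""
    counts: Counter = Counter()
    for row in image:
        for left, right in zip(row, row[1:]):
            counts[(left, right)] += 1
            counts[(right, left)] += 1  # count both directions so the matrix is symmetric
    total = sum(counts.values())
    if total == 0:
        raise ValueError("image needs at least one row with two pixels")
    return {pair: n / total for pair, n in counts.items()}


def texture_features(glcm: Glcm) -> Dict[str, float]:
    """Four common co-occurrence texture measures."""
    return {
        "contrast": sum(p * (i - j) ** 2 for (i, j), p in glcm.items()),
        "homogeneity": sum(p / (1 + (i - j) ** 2) for (i, j), p in glcm.items()),
        "angular_second_moment": sum(p * p for p in glcm.values()),
        "entropy": -sum(p * math.log(p) for p in glcm.values()),
    }


# Hypothetical 3 x 3 CHM patch quantised to three height levels.
chm_levels = [[0, 0, 1], [0, 0, 1], [2, 2, 2]]
features = texture_features(glcm_horizontal(chm_levels))
print(features)
```

In practice a library routine would normally replace the hand-rolled matrix, and textures are usually averaged over several offsets and directions; the sketch only shows what each measure summarises.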

Appendix B. Predictor Variables Used in Severity Classification from Pre and Post-Fire Calculation

| Image Stream | Forest severity | Sedgeland severity |
|---|---|---|
| Validation | 75.8% | 72.8% |
| Variables used | Volume (Post) | Volume (Post) |
| | A (Green-red) mean (Post) | 10th percentile height (Post) |
| | B (Blue-yellow) mean (Post) | A (Green-red) mean (Post) |
| | Correlation (CHM-Post) | B (Blue-yellow) mean (Post) |
| | Correlation difference (CHM) | A (Green-red) mean (Pre) |
| | A (Green-red) mean difference (Ortho) | Correlation (CHM-Post) |
| | | Sum of squares variance (CHM-Post) |
| | | Correlation (Ortho-Post) |
| | | Homogeneity (Ortho-Post) |
| | | Contrast difference (CHM) |
| | | Homogeneity difference (CHM) |
| | | Homogeneity difference (Ortho) |
| | | A (Green-red) mean difference |
| | | B (Blue-yellow) mean difference |

| LiDAR Stream | Forest severity | Sedgeland severity |
|---|---|---|
| Validation | 74.5% | 75.2% |
| Variables used | Volume (Pre) | 10th percentile height (Post) |
| | 10th percentile height (Pre) | Volume (Pre) |
| | 50th percentile height (Pre) | 10th percentile height (Pre) |
| | Entropy (CHM-Post) | 90th percentile height (Pre) |
| | Contrast (CHM-Pre) | Contrast (CHM-Post) |
| | Correlation (CHM-Pre) | Entropy (CHM-Post) |
| | Sum of squares variance (CHM-Pre) | Contrast (CHM-Pre) |
| | Homogeneity (CHM-Pre) | Correlation (CHM-Pre) |
| | Volume difference | Sum of squares variance (CHM-Pre) |
| | 10th percentile difference | Volume difference |
| | Angular second moment difference (CHM) | 10th percentile difference |
| | Contrast difference (CHM) | 50th percentile difference |
| | Correlation difference (CHM) | Angular second moment difference (CHM) |
| | Sum of squares variance difference (CHM) | Contrast difference (CHM) |
| | | Correlation difference (CHM) |
| | | Sum of squares variance difference (CHM) |

| Combined Stream | Forest severity | Sedgeland severity |
|---|---|---|
| Validation | 78.5% | 76.6% |
| Variables used | Volume (SfM-Post) | Volume (LiDAR-Post) |
| | A (green-red) mean (Post) | Volume (SfM-Post) |
| | B (blue-yellow) mean (Post) | A (green-red) mean (Post) |
| | B (blue-yellow) mean (Pre) | B (blue-yellow) mean (Post) |
| | Correlation (CHM-Post) | A (green-red) mean (Pre) |
| | Correlation (Ortho-Post) | Correlation (LiDAR-CHM-Post) |
| | Homogeneity (Ortho-Post) | Homogeneity (LiDAR-CHM-Post) |
| | Contrast (Ortho-Pre) | Sum of squares variance (LiDAR CHM-Pre) |
| | Angular second moment difference (SfM-CHM) | Sum of squares variance (LiDAR CHM-Post) |
| | Correlation difference (SfM-CHM) | Homogeneity (SfM CHM-Post) |
| | Angular second moment difference (LiDAR-CHM) | Correlation (SfM CHM-Pre) |
| | Correlation difference (LiDAR-CHM) | Homogeneity (SfM CHM-Pre) |
| | Contrast difference (Ortho) | Correlation (Ortho-Post) |
| | A (green-red) mean difference | Homogeneity (Ortho-Post) |
| | | Volume difference (LiDAR) |
| | | 50th percentile height difference (LiDAR) |
| | | Angular second moment difference (CHM-SfM) |
| | | Contrast difference (CHM-SfM) |
| | | Contrast difference (CHM-LiDAR) |
| | | Homogeneity difference (CHM-LiDAR) |
| | | Angular second moment difference (Ortho) |
| | | Homogeneity difference (Ortho) |
| | | A (green-red) mean difference |
| | | B (blue-yellow) mean difference |
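
The severity tables above mix single-date predictors (for example, 10th percentile height (Pre)) with pre-to-post differences (for example, 10th percentile difference). As a hedged illustration of how such values could be derived for one segment, the sketch below computes height percentiles from a list of above-ground point heights and then subtracts pre-fire from post-fire values. The linear-interpolation percentile, the post-minus-pre sign convention, the function names, and the sample heights are all assumptions made for this example.

```python
from typing import Dict, Sequence


def percentile(values: Sequence[float], q: float) -> float:
    """Return the q-th percentile (0 to 100) using linear interpolation."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = (len(ordered) - 1) * q / 100.0
    lower = int(rank)
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (rank - lower)


def height_predictors(heights: Sequence[float]) -> Dict[str, float]:
    """Summarise above-ground point heights (m) for one segment."""
    return {f"p{q}": percentile(heights, q) for q in (10, 50, 90)}


def difference_predictors(pre: Dict[str, float], post: Dict[str, float]) -> Dict[str, float]:
    """Post-fire minus pre-fire value for each predictor present in both dates."""
    return {f"{name}_diff": post[name] - pre[name] for name in pre if name in post}


# Hypothetical point heights (m) for a single segment before and after the fire.
pre_fire = height_predictors([0.0, 1.0, 2.0, 3.0, 4.0])
post_fire = height_predictors([0.0, 0.5, 1.0, 1.5, 2.0])
print(difference_predictors(pre_fire, post_fire))
```

With this sign convention, a negative difference indicates that the vertical profile is lower after the fire; the opposite convention would work equally well as long as it is applied consistently.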

| Vegetation Class | Definition | Example Species |
|---|---|---|
| Forest (tall) | Vegetation greater than 3 m in height | Eucalyptus obliqua, Eucalyptus globulus |
| Sedgeland (short) | Vegetation beneath 3 m in height | Gymnoschoenus sphaerocephalus, Melaleuca squamea, Eucalyptus nitida |
| Non-vegetation | Water and Bare earth | N/A |

| Impact | With Forest Vegetation Present | With Sedgeland Vegetation Present |
|---|---|---|
| Severe | >50% crown scorch | Grass combusted (>80%), exposing bare soil or white or black ash |
| Not-severe | <50% crown scorch | Patchy, incomplete burn of grass and litter |
| Unburnt | Unburnt | Unburnt grass, or unchanged conditions |

| | Stream 1—Image-Only | Stream 2—LiDAR-Only | Stream 3—Combined |
|---|---|---|---|
| Segmentation | Pre-image | Canopy Height Model (CHM) | Pre-image |
| Ortho image metrics | ✓ | | ✓ |
| Ortho image texture metrics | ✓ | | ✓ |
| Point cloud metrics—UAS SfM | ✓ | | ✓ |
| Point cloud metrics—UAS LiDAR | | ✓ | ✓ |
| CHM texture metrics—UAS SfM | ✓ | | ✓ |
| CHM texture metrics—UAS LiDAR | | ✓ | ✓ |

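| Image Based Metrics | Image Stream Bands | LiDAR | Description |
|---|---|---|---|
| Mean | LAB | N/A | Metric of each band calculated separately within the segment |
| ASM | L | CHM | Texture calculated from single channel lightness (L) image within segment |
| Contrast | L | CHM | Texture calculated from single channel lightness (L) image within segment |
| Correlation | L | CHM | Texture calculated from single channel lightness (L) image within segment |
| Sum of squares: variance | L | CHM | Texture calculated from single channel lightness (L) image within segment |
| Homogeneity | L | CHM | Texture calculated from single channel lightness (L) image within segment |
| Entropy | L | CHM | Texture calculated from single channel lightness (L) image within segment |
| **Point Cloud Metrics** | | | |
| Percentiles (10th, 50th, 90th) | RGB point cloud | LiDAR point cloud | Analysis was conducted for the segment and 2nd level of adjacency to the central segment |
| Number of layers | RGB point cloud | LiDAR point cloud | Analysis was conducted for the segment and 2nd level of adjacency to the central segment |
| Distance between 1st and 2nd layer | RGB point cloud | LiDAR point cloud | Analysis was conducted for the segment and 2nd level of adjacency to the central segment |
| Volume of points | RGB point cloud | LiDAR point cloud | Analysis was conducted for the segment and 2nd level of adjacency to the central segment |
| Difference in percentile heights | RGB point cloud | LiDAR point cloud | Analysis was conducted for the segment and 2nd level of adjacency to the central segment |
| Difference in number of layers | RGB point cloud | LiDAR point cloud | Analysis was conducted for the segment and 2nd level of adjacency to the central segment |
| Difference in volume | RGB point cloud | LiDAR point cloud | Analysis was conducted for the segment and 2nd level of adjacency to the central segment |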
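To illustrate how texture metrics of the kind listed above are commonly derived, the following is a minimal Python sketch of a grey-level co-occurrence matrix (GLCM) and four of the listed features (ASM, contrast, homogeneity, entropy). It is not the authors' implementation: the pixel offset, the number of grey levels, the symmetric normalisation, the natural-log entropy and the function names `glcm` and `texture_features` are all assumptions made for illustration, and the toy image is hypothetical.

```python
import math

def glcm(image, levels, offset=(0, 1)):
    """Symmetric, normalised grey-level co-occurrence matrix for one pixel offset.

    `image` is a list of rows of integer grey levels in [0, levels).
    The offset (0, 1) pairs each pixel with its right-hand neighbour (an assumption).
    """
    d_row, d_col = offset
    counts = [[0] * levels for _ in range(levels)]
    n_rows, n_cols = len(image), len(image[0])
    for r in range(n_rows):
        for c in range(n_cols):
            r2, c2 = r + d_row, c + d_col
            if 0 <= r2 < n_rows and 0 <= c2 < n_cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1  # the pair as observed
                counts[j][i] += 1  # mirrored pair, making the matrix symmetric
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]

def texture_features(p):
    """ASM, contrast, homogeneity and entropy of a normalised GLCM `p`."""
    cells = [(i, j, v) for i, row in enumerate(p) for j, v in enumerate(row)]
    return {
        "asm": sum(v * v for _, _, v in cells),
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
    }

# Hypothetical 2 x 3 image quantised to two grey levels.
toy = [[0, 0, 1],
       [0, 1, 1]]
features = texture_features(glcm(toy, levels=2))
print(features)
```

In practice the same features would be computed per segment on the lightness (L) band or the CHM after quantising to a chosen number of grey levels; correlation and sum of squares (variance) follow the same pattern and are omitted here for brevity.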

| Classified Data (rows) / Reference Data (columns) | Bare Earth | Forest | Sedgeland | Water | User’s Accuracy |
|---|---|---|---|---|---|
| Bare Earth | 1 | 0 | 1 | 0 | 50.0% |
| Forest | 2 | 126 | 13 | 1 | 88.7% |
| Sedgeland | 1 | 30 | 84 | 4 | 70.6% |
| Water | 0 | 0 | 0 | 5 | 100.0% |
| Producer’s Accuracy | 25.0% | 80.8% | 85.7% | 50.0% | Overall: 80.6% |
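The user's, producer's and overall accuracies in the confusion matrix above follow directly from its row totals, column totals and diagonal. The short Python sketch below reproduces them from the counts in the table, assuming rows are classified data and columns are reference data; the function name `accuracy_summary` is illustrative and does not come from the paper.

```python
def accuracy_summary(matrix):
    """Return (user's, producer's, overall) accuracy for a square confusion matrix.

    Rows are classified (mapped) classes and columns are reference classes.
    """
    n = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[i][i] for i in range(n)]
    # User's accuracy: correct count divided by everything mapped to that class.
    users = [d / t if t else float("nan") for d, t in zip(diagonal, row_totals)]
    # Producer's accuracy: correct count divided by all reference samples of that class.
    producers = [d / t if t else float("nan") for d, t in zip(diagonal, col_totals)]
    overall = sum(diagonal) / sum(row_totals)
    return users, producers, overall

# Counts copied from the table above (class order: Bare Earth, Forest, Sedgeland, Water).
matrix = [
    [1, 0, 1, 0],
    [2, 126, 13, 1],
    [1, 30, 84, 4],
    [0, 0, 0, 5],
]
users, producers, overall = accuracy_summary(matrix)
print([f"{100 * u:.1f}%" for u in users])      # user's accuracy per class
print([f"{100 * p:.1f}%" for p in producers])  # producer's accuracy per class
print(f"{100 * overall:.1f}%")                 # overall accuracy
```

The same function applies unchanged to the three-class severity matrices (Not-Severe, Severe, Unburnt).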

| Classified Data (rows) / Reference Data (columns) | Bare Earth | Forest | Sedgeland | Water | User’s Accuracy |
|---|---|---|---|---|---|
| Bare Earth | 1 | 0 | 1 | 0 | 50.0% |
| Forest | 1 | 128 | 12 | 4 | 88.3% |
| Sedgeland | 2 | 30 | 85 | 7 | 68.5% |
| Water | 0 | 1 | 0 | 3 | 75.0% |
| Producer’s Accuracy | 25.0% | 80.5% | 86.7% | 21.4% | Overall: 78.9% |

| Classified Data (rows) / Reference Data (columns) | Bare Earth | Forest | Sedgeland | Water | User’s Accuracy |
|---|---|---|---|---|---|
| Bare Earth | 0 | 1 | 1 | 0 | 0.0% |
| Forest | 1 | 131 | 10 | 2 | 91.0% |
| Sedgeland | 3 | 24 | 87 | 3 | 74.4% |
| Water | 0 | 0 | 0 | 4 | 100.0% |
| Producer’s Accuracy | 0.0% | 84.0% | 88.8% | 44.4% | Overall: 83.1% |

| Classified Data (rows) / Reference Data (columns), Pre and Post Variables | Not-Severe | Severe | Unburnt | User’s Accuracy |
|---|---|---|---|---|
| Not-severe | 7 | 27 | 1 | 20.0% |
| Severe | 34 | 177 | 10 | 80.1% |
| Unburnt | 1 | 0 | 8 | 88.9% |
| Producer’s Accuracy | 16.7% | 86.8% | 42.1% | Overall: 72.4% |

| Classified Data (rows) / Reference Data (columns), Pre and Post Variables | Not-Severe | Severe | Unburnt | User’s Accuracy |
|---|---|---|---|---|
| Not-severe | 12 | 15 | 2 | 41.4% |
| Severe | 32 | 191 | 18 | 79.3% |
| Unburnt | 0 | 1 | 3 | 75.0% |
| Producer’s Accuracy | 27.3% | 92.3% | 13.0% | Overall: 75.2% |

| Classified Data (rows) / Reference Data (columns), Pre and Post Variables | Not-Severe | Severe | Unburnt | User’s Accuracy |
|---|---|---|---|---|
| Not-severe | 10 | 16 | 4 | 33.3% |
| Severe | 31 | 188 | 10 | 82.1% |
| Unburnt | 1 | 0 | 5 | 83.3% |
| Producer’s Accuracy | 23.8% | 92.2% | 26.3% | Overall: 76.6% |

| Classified Data (rows) / Reference Data (columns), Pre and Post Variables | Not-Severe | Severe | Unburnt | User’s Accuracy |
|---|---|---|---|---|
| Not-severe | 12 | 22 | 5 | 30.8% |
| Severe | 29 | 182 | 5 | 84.3% |
| Unburnt | 1 | 0 | 9 | 90.0% |
| Producer’s Accuracy | 28.6% | 89.2% | 47.4% | Overall: 76.6% |

| Classified Data (rows) / Reference Data (columns), Pre and Post Variables | Not-Severe | Severe | Unburnt | User’s Accuracy |
|---|---|---|---|---|
| Not-severe | 13 | 18 | 3 | 38.2% |
| Severe | 31 | 184 | 13 | 80.7% |
| Unburnt | 0 | 5 | 7 | 58.3% |
| Producer’s Accuracy | 29.5% | 88.9% | 30.4% | Overall: 74.5% |

| Classified Data (rows) / Reference Data (columns), Pre and Post Variables | Not-Severe | Severe | Unburnt | User’s Accuracy |
|---|---|---|---|---|
| Not-severe | 8 | 12 | 3 | 34.8% |
| Severe | 33 | 192 | 8 | 82.4% |
| Unburnt | 1 | 0 | 8 | 88.9% |
| Producer’s Accuracy | 19.0% | 94.1% | 42.1% | Overall: 78.5% |

| Vegetation | Severity | Metric | LiDAR Pre Mean | LiDAR Pre Std Dev | LiDAR Pre Skew | LiDAR Pre Kurtosis | LiDAR Post Mean | LiDAR Post Std Dev | LiDAR Post Skew | LiDAR Post Kurtosis | LiDAR Difference (m) | SfM Pre Mean | SfM Pre Std Dev | SfM Pre Skew | SfM Pre Kurtosis | SfM Post Mean | SfM Post Std Dev | SfM Post Skew | SfM Post Kurtosis | SfM Difference (m) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Forest | Severe | 10th % height (m) | 6.57 | 7.17 | 1.87 | 3.80 | 7.05 | 8.17 | 1.04 | 0.13 | 0.48 | 5.39 | 7.34 | 2.55 | 7.05 | 1.58 | 3.90 | 4.11 | 18.52 | −3.81 |
| Forest | Severe | 50th % height (m) | 20.57 | 10.81 | −0.10 | −1.07 | 20.96 | 10.96 | −0.29 | −0.90 | 0.39 | 15.80 | 10.51 | 0.45 | −0.84 | 16.10 | 11.34 | 0.02 | −1.36 | 0.30 |
| Forest | Severe | 90th % height (m) | 27.32 | 9.57 | −0.23 | −0.80 | 26.94 | 10.73 | −0.63 | −0.09 | −0.39 | 26.16 | 10.03 | −0.51 | −0.47 | 24.72 | 11.91 | −0.62 | −0.59 | −1.44 |
| Forest | Severe | Layer Count | 4.86 | 1.90 | 0.58 | 0.96 | 4.19 | 2.18 | 0.30 | −0.04 | −0.68 | 4.49 | 1.92 | 0.28 | 0.26 | 3.16 | 2.00 | 0.49 | −0.10 | −1.33 |
| Forest | Severe | Volume () | 2.65 | 1.27 | 0.38 | 0.23 | 2.20 | 1.29 | 0.80 | 1.26 | −0.45 | 11.66 | 4.13 | −0.35 | 0.85 | 5.05 | 3.48 | 0.90 | 1.06 | −6.61 |
| Forest | Not-Severe | 10th % height (m) | 7.02 | 6.94 | 1.54 | 2.15 | 8.99 | 8.24 | 0.59 | −0.77 | 1.97 | 5.03 | 7.28 | 2.72 | 7.50 | 2.27 | 3.80 | 3.01 | 10.21 | −2.76 |
| Forest | Not-Severe | 50th % height (m) | 23.81 | 10.27 | −0.38 | −0.76 | 24.05 | 10.22 | −0.51 | −0.58 | 0.24 | 18.28 | 10.45 | 0.24 | −0.83 | 19.76 | 9.71 | −0.32 | −0.95 | 1.49 |
| Forest | Not-Severe | 90th % height (m) | 30.15 | 9.64 | −0.42 | −0.61 | 30.04 | 10.22 | −0.68 | 0.03 | −0.10 | 28.89 | 10.53 | −0.69 | −0.12 | 29.23 | 10.28 | −0.78 | 0.06 | 0.34 |
| Forest | Not-Severe | Layer Count | 4.98 | 1.91 | 0.41 | −0.22 | 4.63 | 2.07 | 0.25 | −0.09 | −0.35 | 4.79 | 1.94 | 0.06 | 0.04 | 3.93 | 1.83 | 0.18 | −0.32 | −0.86 |
| Forest | Not-Severe | Volume () | 3.64 | 1.30 | 0.10 | 0.01 | 3.36 | 1.52 | 0.43 | 0.19 | −0.27 | 13.95 | 4.67 | −0.76 | 1.19 | 9.50 | 4.55 | 0.73 | 0.60 | −4.45 |
| Sedgeland | Severe | 10th % height (m) | 0.48 | 0.80 | 11.19 | 249.66 | 0.23 | 1.49 | 10.86 | 125.90 | −0.25 | 0.84 | 1.05 | 13.98 | 365.31 | 0.15 | 0.89 | 15.46 | 274.95 | −0.69 |
| Sedgeland | Severe | 50th % height (m) | 2.30 | 4.24 | 4.58 | 23.34 | 1.94 | 5.20 | 3.75 | 14.66 | −0.36 | 2.20 | 2.93 | 6.25 | 50.41 | 1.52 | 4.22 | 4.52 | 22.66 | −0.68 |
| Sedgeland | Severe | 90th % height (m) | 5.52 | 6.96 | 2.20 | 5.25 | 4.19 | 7.41 | 2.42 | 5.77 | −1.33 | 5.62 | 6.48 | 2.38 | 6.23 | 3.72 | 6.53 | 2.68 | 7.87 | −1.90 |
| Sedgeland | Severe | Layer Count | 1.57 | 1.53 | 1.43 | 3.99 | 0.83 | 1.20 | 2.19 | 6.41 | −0.75 | 1.84 | 1.25 | 1.35 | 2.98 | 0.74 | 1.03 | 2.30 | 9.40 | −1.10 |
| Sedgeland | Severe | Volume () | 1.64 | 1.21 | −0.17 | −1.05 | 0.64 | 0.82 | 1.53 | 4.50 | −1.00 | 7.12 | 3.28 | −0.02 | 1.03 | 1.10 | 1.63 | 2.39 | 9.40 | −6.02 |
| Sedgeland | Not-Severe | 10th % height (m) | 1.08 | 1.28 | 5.42 | 70.09 | 0.54 | 1.32 | 9.01 | 115.19 | −0.54 | 1.22 | 1.16 | 3.19 | 29.90 | 0.49 | 1.43 | 12.25 | 189.11 | −0.74 |
| Sedgeland | Not-Severe | 50th % height (m) | 3.37 | 4.47 | 3.88 | 18.53 | 3.45 | 5.46 | 3.22 | 11.66 | 0.08 | 2.86 | 3.03 | 5.21 | 44.98 | 2.42 | 4.00 | 4.19 | 22.01 | −0.44 |
| Sedgeland | Not-Severe | 90th % height (m) | 7.20 | 7.51 | 1.82 | 3.31 | 6.82 | 7.75 | 1.91 | 3.73 | −0.38 | 6.74 | 6.96 | 1.99 | 4.37 | 5.21 | 6.59 | 2.38 | 6.94 | −1.53 |
| Sedgeland | Not-Severe | Layer Count | 1.87 | 1.68 | 1.24 | 1.79 | 1.36 | 1.26 | 1.60 | 4.05 | −0.51 | 1.84 | 1.44 | 1.10 | 1.54 | 1.07 | 1.13 | 2.07 | 9.78 | −0.77 |
| Sedgeland | Not-Severe | Volume () | 1.69 | 1.14 | −0.09 | −0.04 | 1.45 | 1.19 | 0.47 | −0.01 | −0.24 | 6.82 | 4.08 | −0.36 | −0.71 | 3.92 | 3.84 | 0.64 | 0.15 | −2.90 |
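The summary statistics in the table above (mean, standard deviation, skew, kurtosis, and the pre-to-post difference in means) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: population (not sample) moments are used, kurtosis is taken as excess kurtosis (the negative values in the table suggest this convention, but the source does not state it), and the function name `moments_summary` and the per-segment height lists are hypothetical.

```python
import math

def moments_summary(values):
    """Mean, standard deviation, skewness and excess kurtosis from population moments."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n  # variance
    m3 = sum((v - mean) ** 3 for v in values) / n  # third central moment
    m4 = sum((v - mean) ** 4 for v in values) / n  # fourth central moment
    return {
        "mean": mean,
        "std": math.sqrt(m2),
        "skew": m3 / m2 ** 1.5,
        "kurtosis": m4 / m2 ** 2 - 3,  # excess kurtosis (assumed convention)
    }

# Hypothetical per-segment 90th percentile heights (m) before and after the fire.
pre_heights = [1.0, 2.0, 3.0, 4.0, 5.0]
post_heights = [1.0, 1.0, 2.0, 2.0, 4.0]

pre = moments_summary(pre_heights)
post = moments_summary(post_heights)
difference = post["mean"] - pre["mean"]  # negative values indicate a loss of height
print(pre, post, difference)

# The same subtraction reproduces the first LiDAR row of the table (Forest, Severe, 10th %).
table_difference = round(7.05 - 6.57, 2)
```

A negative difference in the upper percentiles, layer count or volume is consistent with a loss of vegetation structure between the two captures.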

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hillman, S.; Hally, B.; Wallace, L.; Turner, D.; Lucieer, A.; Reinke, K.; Jones, S. High-Resolution Estimates of Fire Severity—An Evaluation of UAS Image and LiDAR Mapping Approaches on a Sedgeland Forest Boundary in Tasmania, Australia. Fire 2021, 4, 14. https://doi.org/10.3390/fire4010014
