1. Introduction

Unmanned Aerial Vehicles (UAVs) are integral to modern applications, spanning surveillance, logistics, and infrastructure inspection, where their autonomy is paramount [1]. A fundamental prerequisite for such autonomy is a continuous and robust localization capability, which ensures safe and effective operation [2,3]. While Global Navigation Satellite Systems, such as the Global Positioning System (GPS), are the conventional solution, their signals are notoriously unreliable in many critical scenarios. Environments such as dense urban canyons, indoor spaces, or contested areas subject to intentional signal jamming and spoofing render GPS-dependent systems vulnerable, severely limiting UAV operational reliability and versatility [4,5]. This limitation motivates the development of alternative localization approaches that enhance UAV resilience and safety by operating without external beacons [6].

Vision-Based Navigation (VBN) has emerged as a compelling alternative, leveraging onboard cameras to perceive the surrounding environment for self-positioning. This approach grants UAVs a high degree of autonomy, enabling them to navigate using intrinsic sensory data. Within VBN, landmark-based navigation is a particularly effective strategy, mirroring the innate cognitive processes that humans and animals use to orient themselves by recognizing distinctive features [7]. By pre-mapping a set of unique visual references or discovering them in real time, a UAV can match its current view against this map to correct the positional drift accumulated by its Inertial Measurement Unit (IMU). This process allows the vehicle to maintain accurate localization over extended periods without external aid.

However, the efficacy of this strategy hinges on the quality of the landmarks. The automatic identification of what constitutes a “good” landmark is a non-trivial challenge [8]. An ideal landmark must satisfy two crucial criteria: it must be recognizable under varying conditions (e.g., different viewpoints, lighting, partial occlusions), and it must be sufficiently distinctive to avoid perceptual aliasing, that is, the confusion of one location with another due to repetitive features. For example, an architecturally unique building or a solitary water tower can serve as an excellent, unambiguous landmark. In contrast, generic residential houses in a suburban grid or uniform rows of city buildings offer little discriminative value and can easily mislead a VBN system.

The advent of deep learning, particularly Convolutional Neural Networks (CNNs), has revolutionized the field of computer vision, providing sophisticated tools for feature extraction and object recognition that far surpass classical methods [9]. CNNs pre-trained on large-scale datasets learn hierarchical feature representations that can be transferred to diverse downstream tasks [10]. These features, often compressed into vectors known as embeddings, encapsulate rich semantic and structural information, making them well suited to representing visual objects for similarity comparison and re-identification [11]. Despite these technological advancements, a critical research gap persists: the autonomous discovery and curation of a database of truly distinctive landmarks from raw Earth observation data, such as aerial survey imagery, remains an open problem. Existing systems often depend on pre-defined markers, extensive manual annotation, or generic object features that are not sufficiently discriminative for reliable, long-term navigation. This paper addresses this gap by proposing a framework that automatically surveys an area, identifies unique visual references, and builds a high-quality landmark map for robust GPS-free navigation.

This paper makes the following specific contributions:

The proposed approach focuses on urban environments and uses buildings as the primary landmark candidates, leveraging their structural diversity and permanence. The remainder of this paper is structured as follows: Section 2 reviews related work, Section 3 details the materials and methods, Section 4 presents the experimental results, Section 5 discusses the findings, and Section 6 concludes the paper.

2. Related Works

The challenge of UAV navigation in GPS-denied environments has spurred extensive research across several domains, including classical computer vision, deep learning-based visual recognition, and robotics. This section reviews the key advancements in these areas to contextualize our proposed approach for autonomous landmark discovery.

Early work in visual localization and landmark-based navigation predominantly relied on hand-crafted local feature descriptors. Methods such as the Scale-Invariant Feature Transform (SIFT) [14] and Speeded-Up Robust Features (SURF) became the standard for identifying and matching keypoints between images. These local descriptors were often aggregated into a global image signature using techniques like Bag-of-Visual-Words (BoVW) [15], which quantizes local features into a histogram, or the Vector of Locally Aggregated Descriptors (VLAD) [16], which aggregates descriptor residuals. While foundational, these methods often struggled to scale to large environments, lacked robustness to viewpoint and illumination changes, and had no high-level semantic understanding, making it difficult to distinguish between structurally similar but distinct objects.

The deep learning revolution ushered in a new era of performance for visual recognition tasks. Seminal architectures like AlexNet [9] and ResNet [10] demonstrated the power of hierarchical feature learning. This led to the development of specialized networks for visual place recognition, such as NetVLAD [16], which introduced a learnable aggregation layer to create powerful global embeddings for entire scenes. Other approaches, like Deep Local Features [17], focused on learning discriminative local features and using attention mechanisms to identify the most salient regions for matching. These methods significantly improved robustness but often required extensive training on large-scale, domain-specific datasets. A prevailing modern paradigm is Detect-to-Retrieve (D2R) [18], in which an object detector first localizes objects of interest before a dedicated network computes their embeddings. This reduces background clutter and improves accuracy, especially for small landmarks. Our work builds on the D2R concept but adds a crucial, novel layer of unsupervised distinctiveness analysis. Exploring these learned feature spaces often relies on dimensionality reduction for analysis and visualization [19], with classical methods such as Principal Component Analysis (PCA) [20] and modern non-linear techniques such as t-distributed Stochastic Neighbor Embedding (t-SNE) [21] being essential tools.

Within the specific context of UAVs, research has focused on adapting these techniques to the unique challenges of aerial robotics, including severe viewpoint changes between aerial survey and UAV imagery and the need for computationally efficient onboard processing. Recent surveys highlight the breadth of deep learning-based visual localization techniques being explored [2,5]. Specific methods include lightweight visual localization algorithms designed for real-time performance [6], context-enhanced cross-view geo-localization that explicitly reasons about aerial survey-to-UAV matching [22], and fine-grained approaches that combine local and global features [23]. Object detection models from the YOLO family [24,25] are particularly popular for their favorable balance of speed and accuracy. This has led to the development of highly specialized, lightweight networks for efficient UAV object detection [3,26,27] and related image analysis tasks such as satellite image classification [28]. Furthermore, as these systems become more autonomous, ensuring their reliability and transparency is critical. This has spurred research into explainable AI for UAV navigation to build trust and understand decision-making processes [1,29], aligning with broader goals of developing trustworthy AI systems [30].

Despite these advances, a critical gap remains. While many systems can detect objects or match scenes [31], they typically lack an explicit mechanism to autonomously evaluate the navigational quality or distinctiveness of potential landmarks from raw geospatial data. Methods often rely on manually selected key regions [8] or treat all detected objects of a class (e.g., ‘building’) as equally viable landmarks, which can fail in repetitive urban environments. The core challenge is to automate the curation of a sparse, reliable landmark map from a dense set of object candidates without requiring extensive retraining or manual supervision. Our work addresses this by introducing an unsupervised outlier detection framework based on the Isolation Forest algorithm [32]. This approach leverages the inherent structure of a learned embedding space to identify statistically rare, and thus visually distinctive, objects. It is conceptually related to the detection of subtle data anomalies, a task explored primarily in domains such as image resolution enhancement [33], but is applied here for the novel purpose of landmark curation.

Therefore, the goal of this study is to improve UAV navigation by creating and validating a lightweight, automated framework that identifies visually distinctive urban objects in aerial survey imagery to serve as reliable landmarks. We achieve this by first constructing rich object embeddings from a pre-trained segmentation network, a process that requires no additional training. Next, an unsupervised landmark selection strategy identifies unique objects by treating them as statistical outliers within the embedding space. Finally, we validate this approach on a cross-view benchmark dataset, demonstrating that landmarks selected by our framework yield superior retrieval accuracy.

3. Materials and Methods

This section outlines the methodology for autonomous landmark identification. We begin by defining the problem and stating our core hypothesis. Subsequently, we describe the proposed framework, which involves object segmentation, multi-layer feature extraction, embedding construction, and outlier-based landmark selection. We also detail the rationale for UAV localization using these landmarks and introduce proxy metrics for evaluating and tuning the embedding process.

3.1. Problem Statement

We aim to develop a system that automatically identifies visually distinctive objects, primarily buildings, from a collection of geo-referenced aerial survey images, denoted as $\mathcal{I} = \{I_1, \dots, I_M\}$. Each image $I_i$ covers a specific geographic area and may contain multiple instances of objects belonging to a predefined set of categories, $\mathcal{C}$. In this work, our primary category of interest is ‘building’. These identified distinctive objects serve as landmarks for UAV navigation.

The core of our approach relies on a CNN pre-trained for a relevant task, in this case, building segmentation. For each object $o_j$ detected in an image $I_i$, we derive a $d$-dimensional real-valued embedding $\mathbf{e}_j \in \mathbb{R}^d$. Objects that exhibit visually unique or distinctive features in the learned embedding space are designated as landmarks. Formally, let $\mathcal{O}$ be the set of all detected objects across the entire image dataset $\mathcal{I}$. Our primary objective is to identify a subset $\mathcal{L} \subseteq \mathcal{O}$ of landmark objects characterized by unique visual cues and robust appearance under minor transformations (e.g., slight viewpoint changes, illumination variations). This curated set $\mathcal{L}$, along with the objects' geolocations, forms a landmark database for subsequent UAV navigation.

3.2. Core Hypothesis

Consider a UAV operating in a GPS-denied environment. Prior to deployment, a database of landmarks is constructed from aerial survey imagery of the operational area. This database contains embeddings and geolocations of distinctive objects. During its mission, the UAV’s onboard camera captures live video frames. Objects detected in these frames are processed to generate embeddings using the same methodology employed for the aerial survey imagery. These query embeddings are then matched against the landmark database. A successful match allows the UAV to estimate its current location based on the known coordinates of the identified landmark.

Our central hypothesis is twofold. First, concerning cross-domain distinctiveness, we posit that objects visually distinctive in aerial survey imagery will generally remain so when viewed from a UAV, despite differing perspectives and sensors. Consequently, in a common embedding space, landmark objects should occupy sparse, outlying regions, well separated from clusters of typical objects. Second, regarding hierarchical feature utility, we hypothesize that an optimal object embedding should incorporate features from multiple CNN layers. While deeper layers provide semantic context, intermediate layers retain fine-grained details vital for distinguishing between similar-looking objects [10]. By focusing on outlier objects identified through this multi-layer embedding, we anticipate a significant improvement in localization reliability due to reduced matching ambiguity.

3.3. Proposed Framework: Landmark Identification Workflow

The proposed framework for autonomous landmark identification consists of four main steps, as illustrated in Figure 1.

3.3.1. Segmentation-Based Object Detection

Candidate objects are initially identified by applying a CNN-based segmentation model to each aerial survey image $I_i \in \mathcal{I}$. The model is pre-trained to segment objects belonging to categories in $\mathcal{C}$ (in our case, primarily buildings). For each detected object instance $o_j$, the segmentation model outputs a binary segmentation mask $M_j \in \{0,1\}^{H \times W}$ and a confidence score $s_j \in [0,1]$. We retain only objects whose confidence score exceeds a threshold $\tau_s$, yielding an initial set of candidates $\mathcal{O} = \{o_j : s_j > \tau_s\}$. We prefer segmentation over bounding-box detection because precise masks capture actual object shapes and help isolate object features from background clutter, leading to purer embeddings. The segmentation backbone is a YOLOv11n-seg model, chosen for its lightweight architecture and proven proficiency in segmenting buildings from aerial views.
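The candidate-filtering step can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the segmentation model's instance masks and confidence scores are already available as arrays; the function name `filter_candidates` and the default threshold `tau_s = 0.5` are hypothetical, since the text does not state the value of $\tau_s$.

```python
import numpy as np


def filter_candidates(masks, scores, tau_s=0.5):
    """Keep instances whose confidence exceeds tau_s (hypothetical default).

    masks:  (K, H, W) binary masks, one per detected instance
    scores: (K,) confidence scores in [0, 1]
    """
    masks = np.asarray(masks, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    keep = scores > tau_s
    return masks[keep], scores[keep]


# Three toy detections on a 4 x 4 image; the second one has low confidence.
masks = np.zeros((3, 4, 4), dtype=bool)
masks[0, :2, :2] = True
masks[2, 2:, 2:] = True
kept_masks, kept_scores = filter_candidates(masks, [0.9, 0.3, 0.6])
print(kept_masks.shape, kept_scores)
```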

3.3.2. Multi-Layer Feature Extraction

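Let the backbone CNN consist of $K$ convolutional layers. We select a subset of $L$ layers, indexed by $\mathcal{S} = \{l_1, \dots, l_L\} \subseteq \{1, \dots, K\}$, from which to extract features. The feature map tensor at a given layer $l$ is calculated as shown in Equation (1):

$$\mathbf{F}_l = f_l(I_i) \in \mathbb{R}^{C_l \times H_l \times W_l}, \tag{1}$$

where $f_l$ denotes the backbone up to and including layer $l$, $\mathbf{F}_l$ is the output tensor, $C_l$ is the number of channels, and $H_l \times W_l$ are the spatial dimensions.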

During the forward pass for an image $I_i$, we hook into the selected layers to store their feature maps. Since deeper layers have reduced spatial dimensions, the original segmentation mask $M_j$ is downsampled to match the resolution of each feature map $\mathbf{F}_l$, yielding an aligned mask $M_j^{(l)} \in \{0,1\}^{H_l \times W_l}$. This ensures that we extract features only from activations corresponding to object $o_j$. The rationale for using multiple layers, established by Zeiler and Fergus [34], is to create a rich embedding that combines low-level primitives (e.g., textures) from early layers with high-level semantic information (e.g., structure) from deeper layers, thereby enhancing discriminative power.
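A sketch of the hooking and mask-alignment step in PyTorch follows. It assumes an indexable `nn.Sequential` backbone (a small toy network stands in for YOLOv11n-seg here) and nearest-neighbour mask downsampling; the text does not specify the resampling method, so `mode="nearest"` is an assumption. `register_forward_hook` and `torch.nn.functional.interpolate` are standard PyTorch calls, while `capture_features` and `align_mask` are hypothetical helper names.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def capture_features(model: nn.Sequential, layer_ids, image: torch.Tensor):
    """Run one forward pass and return {layer index: feature map} via forward hooks."""
    feats, handles = {}, []
    for i in layer_ids:
        def hook(module, inputs, output, i=i):   # bind i now, not at call time
            feats[i] = output.detach()
        handles.append(model[i].register_forward_hook(hook))
    try:
        with torch.no_grad():
            model(image)
    finally:
        for h in handles:
            h.remove()                            # leave the model un-hooked
    return feats


def align_mask(mask: torch.Tensor, feat: torch.Tensor) -> torch.Tensor:
    """Nearest-neighbour downsampling of an (H, W) bool mask to feat's (H_l, W_l)."""
    m = mask[None, None].float()
    m = F.interpolate(m, size=tuple(feat.shape[-2:]), mode="nearest")
    return m[0, 0].bool()


# Toy two-stage backbone standing in for the real segmentation network.
backbone = nn.Sequential(
    nn.Conv2d(3, 8, 3, stride=2, padding=1), nn.ReLU(),
    nn.Conv2d(8, 16, 3, stride=2, padding=1), nn.ReLU(),
)
image = torch.rand(1, 3, 64, 64)
feats = capture_features(backbone, [1, 3], image)

mask = torch.zeros(64, 64, dtype=torch.bool)
mask[16:48, 16:48] = True                        # a 32 x 32 "building"
aligned = {i: align_mask(mask, f) for i, f in feats.items()}
print({i: (tuple(f.shape), int(aligned[i].sum())) for i, f in feats.items()})
```

Nearest-neighbour resampling keeps the aligned mask binary, but for very small buildings it can erase the mask entirely at deep layers, a case any real implementation would need to handle.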

3.3.3. Embedding Construction via Aggregation

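To represent each object $o_j$ as a fixed-dimensional embedding vector $\mathbf{e}_j$, we aggregate the feature activations within its aligned mask $M_j^{(l)}$ for each selected layer $l \in \mathcal{S}$. To obtain one scalar per channel, we apply an aggregation operator $\mathcal{A}$ to the set of masked activation values. This process is formalized in Equation (2), where $\mathbf{F}_{l,c}$ is the $c$-th channel of the feature map at layer $l$, and $(x, y)$ are the spatial pixel coordinates within that channel's grid that fall inside the downsampled mask $M_j^{(l)}$:

$$v_{j,c}^{(l)} = \mathcal{A}\left(\left\{ \mathbf{F}_{l,c}(x, y) \;:\; M_j^{(l)}(x, y) = 1 \right\}\right), \qquad c = 1, \dots, C_l. \tag{2}$$

Common choices for the aggregation operator include max-pooling ($\mathcal{A} = \max$), which emphasizes the strongest feature activations; average-pooling ($\mathcal{A} = \operatorname{mean}$), which summarizes the overall feature response; and sum-pooling ($\mathcal{A} = \sum$), which reflects the total activation.

These scalar values are then concatenated across all channels of each selected layer, giving $\mathbf{v}_j^{(l)} = \left(v_{j,1}^{(l)}, \dots, v_{j,C_l}^{(l)}\right) \in \mathbb{R}^{C_l}$. As described in Equation (3), the final embedding vector $\mathbf{e}_j$ is formed by concatenating these aggregated vectors across all selected layers $l_1, \dots, l_L \in \mathcal{S}$:

$$\mathbf{e}_j = \left[\mathbf{v}_j^{(l_1)} \,\Vert\, \mathbf{v}_j^{(l_2)} \,\Vert\, \cdots \,\Vert\, \mathbf{v}_j^{(l_L)}\right] \in \mathbb{R}^d. \tag{3}$$

The total dimension $d$ of the embedding is the sum of the channel counts of all selected layers: $d = \sum_{l \in \mathcal{S}} C_l$.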
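Equations (2) and (3) can be illustrated with NumPy as follows. This is a sketch under stated assumptions: feature maps are stored per layer as `(C_l, H_l, W_l)` arrays with matching boolean aligned masks, and an object whose aligned mask is empty at some layer receives a zero vector for that layer, a fallback the text does not specify. The names `object_embedding` and `AGGREGATORS` are illustrative.

```python
import numpy as np

# Per-channel aggregation operators A over the masked positions (axis 1).
AGGREGATORS = {
    "max": lambda v: v.max(axis=1),
    "avg": lambda v: v.mean(axis=1),
    "sum": lambda v: v.sum(axis=1),
}


def object_embedding(feature_maps, aligned_masks, layer_ids, agg="max"):
    """Concatenate masked per-channel aggregates over the selected layers (Eqs. 2-3).

    feature_maps[l]:  (C_l, H_l, W_l) array for layer l
    aligned_masks[l]: (H_l, W_l) boolean mask aligned to that layer
    """
    parts = []
    for l in layer_ids:
        fmap, m = feature_maps[l], aligned_masks[l]
        if not m.any():
            # Assumption: object vanished at this resolution -> zero vector.
            parts.append(np.zeros(fmap.shape[0]))
            continue
        masked = fmap[:, m]                  # (C_l, number of masked positions)
        parts.append(AGGREGATORS[agg](masked))
    return np.concatenate(parts)             # length d = sum of C_l


fm0 = np.arange(32, dtype=float).reshape(2, 4, 4)   # layer 0: C = 2
m0 = np.zeros((4, 4), dtype=bool)
m0[1:3, 1:3] = True
fm1 = np.ones((3, 2, 2))                            # layer 1: C = 3
m1 = np.ones((2, 2), dtype=bool)
e_max = object_embedding({0: fm0, 1: fm1}, {0: m0, 1: m1}, [0, 1], "max")
e_avg = object_embedding({0: fm0, 1: fm1}, {0: m0, 1: m1}, [0, 1], "avg")
print(e_max, e_avg)   # d = 2 + 3 = 5
```

Swapping `"max"` for `"avg"` or `"sum"` changes only the per-channel operator, which is what makes the aggregation function a natural tuning parameter alongside the layer set.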

3.3.4. Outlier Detection for Landmark Identification

With embeddings generated for all objects, the final step is to distinguish distinctive landmarks from typical ones. Our core hypothesis suggests that landmark embeddings will be outliers in the embedding space, while typical objects will form denser clusters. We employ the Isolation Forest algorithm [32] for this task due to its computational efficiency, low memory requirements, and proven effectiveness on high-dimensional data without the need for data normalization.

While other powerful anomaly detection algorithms exist, such as Local Outlier Factor or One-Class SVMs, Isolation Forest's non-parametric nature and its ability to handle irrelevant attributes make it particularly well suited to the potentially noisy feature space of our embeddings. A comparative study of these methods, however, remains an important direction for future investigation. The algorithm isolates observations by recursively selecting a random feature and a random split value between that feature's minimum and maximum; points that require shorter average path lengths to be isolated are more likely to be anomalies. An object $o_j$ is designated as a landmark and added to the set $\mathcal{L}$ if its anomaly score $a(\mathbf{e}_j)$ from the algorithm exceeds a threshold $\tau_a$, as formalized in Equation (4):

$$\mathcal{L} = \left\{ o_j \in \mathcal{O} \;:\; a(\mathbf{e}_j) > \tau_a \right\}. \tag{4}$$

This unsupervised approach is advantageous as it requires no manual labeling and leverages the inherent data distribution to define distinctiveness algorithmically. Due to overlapping views in consecutive images within the VPAIR dataset, a single physical landmark may be detected multiple times. If any one of these instances is selected as an outlier, its other views are typically also identified as outliers. This results in the landmark database containing several similar embeddings for the same landmark from different perspectives, which intrinsically enhances the robustness of the retrieval process.
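For reference, the anomaly score in the original Isolation Forest paper [32] is $s(x, n) = 2^{-E[h(x)]/c(n)}$, where $E[h(x)]$ is the mean path length of $x$ over the trees, $n$ is the subsample size, and $c(n) = 2H(n-1) - 2(n-1)/n$ normalizes by the average path length of an unsuccessful binary-search-tree lookup ($H$ is the harmonic number). Scores close to 1 indicate anomalies, and a path length equal to $c(n)$ gives exactly 0.5. The sketch below computes $H$ exactly rather than with the $\ln(i) + 0.5772$ approximation used in that paper. Note that scikit-learn's `IsolationForest.score_samples` returns the negative of this score, so a threshold on $a(\mathbf{e}_j)$ corresponds to a threshold on `-score_samples`.

```python
def harmonic(i: int) -> float:
    """Exact harmonic number H(i) = 1 + 1/2 + ... + 1/i."""
    return sum(1.0 / k for k in range(1, i + 1))


def c(n: int) -> float:
    """Average path length of an unsuccessful BST search over n points."""
    if n <= 1:
        return 0.0
    return 2.0 * harmonic(n - 1) - 2.0 * (n - 1) / n


def anomaly_score(mean_path_length: float, n: int) -> float:
    """Isolation Forest score s(x, n) = 2 ** (-E[h(x)] / c(n)), for n >= 2."""
    return 2.0 ** (-mean_path_length / c(n))


n = 256  # scikit-learn's default subsample size when at least 256 samples exist
for h in (2.0, c(n), 20.0):
    print(f"E[h(x)] = {h:5.2f} -> s = {anomaly_score(h, n):.3f}")
```

Short average paths (an object split off after only a few random cuts) push the score above 0.5, which is exactly the behaviour the landmark selection relies on.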

3.4. Rationale for UAV Localization Using Identified Landmarks

The landmark database $\mathcal{L}$, constructed from the aerial survey imagery, serves as the map for UAV localization. The onboard process, illustrated in Figure 2, mirrors the identification workflow.

First, the UAV’s camera captures a video frame, and the same segmentation CNN detects objects in the view. Second, an embedding is computed for each detected object using the identical multi-layer feature extraction and aggregation methods. Third, this query embedding is matched against the landmark database using a distance metric such as the L2 (Euclidean) distance. Finally, if a query embedding closely matches a landmark embedding, the UAV infers its approximate geolocation from the known coordinates of the matched landmark. This estimate can be further refined using multiple matches or by integration into a sensor fusion framework with IMU data. Crucially, the retrieved top-N candidates would then typically be passed to a geometric verification stage (e.g., RANSAC with a PnP solver) for precise 6-DoF pose estimation and robust outlier rejection, a standard practice that strengthens the final localization solution.
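The matching step can be sketched as a brute-force L2 nearest-neighbour search over the landmark database. This sketch assumes embeddings are stored as a NumPy array alongside one geolocation per landmark; the coordinates in the usage example are made-up placeholders, and `retrieve` is a hypothetical helper. Returning the top-N candidates rather than a single match leaves room for the geometric verification stage described above.

```python
import numpy as np


def retrieve(query, db_embeddings, db_coords, top_n=5):
    """Return indices, L2 distances, and geolocations of the top_n closest landmarks."""
    dists = np.linalg.norm(db_embeddings - query[None, :], axis=1)
    order = np.argsort(dists)[:top_n]
    return order, dists[order], db_coords[order]


# Toy database: three 2-D landmark embeddings with placeholder (lat, lon) pairs.
db = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 5.0]])
coords = np.array([[50.70, 7.10], [50.71, 7.11], [50.72, 7.12]])
idx, dist, loc = retrieve(np.array([0.9, 0.0]), db, coords, top_n=2)
print(idx, dist, loc)
```

For large databases, an approximate nearest-neighbour index would typically replace the exhaustive distance computation; the ranking logic stays the same.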

3.5. Proxy Metrics for Embedding Evaluation and Tuning

Optimizing embedding parameters (CNN layers and aggregation function) is crucial, but detailed ground-truth correspondences between aerial survey and UAV views are often unavailable. To enable quantitative tuning, we introduce two proxy retrieval metrics. The Aerial Survey-to-Aerial Survey (AS2AS) retrieval metric evaluates embedding consistency within the aerial survey domain by querying an object from one aerial survey image against a database built from adjacent images; a retrieval is successful if the same physical object is found. The Aerial Survey-to-UAV (AS2UAV) retrieval metric more directly addresses the cross-domain gap by matching object embeddings from aerial survey images against a database built from UAV images of the same area (as available in datasets such as VPAIR [13]).

To systematically identify an optimal layer combination, we employ a greedy forward selection strategy (Algorithm 1), guided by these proxy metrics. Starting with an empty set, we iteratively add the layer that yields the largest improvement in the chosen metric (e.g., AS2UAV Recall@1) until performance no longer improves.

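| Algorithm 1 Greedy algorithm for optimal layer selection |
|:---|
| 1: Input: set of all available CNN layers $\mathcal{K}$, chosen proxy metric $P$, aggregation function $\mathcal{A}$. |
| 2: Output: optimal set of selected layers $\mathcal{S}^*$. |
| 3: Initialize $\mathcal{S}^* \leftarrow \emptyset$. |
| 4: Initialize $P^* \leftarrow 0$. |
| 5: loop |
| 6: $l_{\text{best}} \leftarrow \text{none}$. |
| 7: $P_{\text{best}} \leftarrow P^*$. |
| 8: for all $l \in \mathcal{K} \setminus \mathcal{S}^*$ do |
| 9: $\mathcal{S}' \leftarrow \mathcal{S}^* \cup \{l\}$. |
| 10: Construct embeddings using layers in $\mathcal{S}'$ and aggregation $\mathcal{A}$. |
| 11: Evaluate $P(\mathcal{S}')$ on the validation set. |
| 12: if $P(\mathcal{S}') > P_{\text{best}}$ then |
| 13: $P_{\text{best}} \leftarrow P(\mathcal{S}')$. |
| 14: $l_{\text{best}} \leftarrow l$. |
| 15: end if |
| 16: end for |
| 17: if $l_{\text{best}} \neq \text{none}$ and $P_{\text{best}} > P^*$ then |
| 18: $\mathcal{S}^* \leftarrow \mathcal{S}^* \cup \{l_{\text{best}}\}$. |
| 19: $P^* \leftarrow P_{\text{best}}$. |
| 20: else |
| 21: break |
| 22: end if |
| 23: end loop |
| 24: return $\mathcal{S}^*$. |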
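Algorithm 1 translates directly into Python. In this sketch the expensive steps (building embeddings and computing a proxy metric such as AS2UAV Recall@1) are hidden behind a caller-supplied `evaluate` function, and the toy scoring function in the usage example is entirely made up; it exists only to show the selection stopping once no remaining layer improves the score. The initial best score of 0 is an assumption that suits recall-type metrics in [0, 1].

```python
from typing import Callable, List, Sequence


def greedy_layer_selection(all_layers: Sequence[int],
                           evaluate: Callable[[List[int]], float],
                           initial_score: float = 0.0) -> List[int]:
    """Greedy forward selection: add the best-improving layer until none helps."""
    selected: List[int] = []
    best_score = initial_score
    while True:
        cand_layer, cand_score = None, best_score
        for layer in all_layers:
            if layer in selected:
                continue
            score = evaluate(selected + [layer])   # e.g. proxy Recall@1
            if score > cand_score:
                cand_layer, cand_score = layer, score
        if cand_layer is None:                     # no layer improves the metric
            break
        selected.append(cand_layer)
        best_score = cand_score
    return selected


# Hypothetical per-layer gains with a small penalty for each extra layer.
toy_gain = {1: 0.30, 2: -0.05, 3: 0.40, 4: 0.20}


def toy_recall(layers: List[int]) -> float:
    return sum(toy_gain[l] for l in layers) - 0.1 * (len(layers) - 1)


chosen = greedy_layer_selection([1, 2, 3, 4], toy_recall)
print(chosen)
```

Greedy forward selection needs at most $|\mathcal{K}|(|\mathcal{K}|+1)/2$ metric evaluations instead of one per subset, at the cost of possibly missing layer combinations that only help jointly.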

3.6. Evaluation Metrics for the Final Workflow

Once the landmark database $\mathcal{L}$ is constructed, we evaluate the UAV localization task as a retrieval problem. The primary metric is Recall@N, which measures the proportion of queries for which the correct landmark is found within the top N retrieved results. The calculation is shown in Equation (5):

$$\text{Recall@}N = \frac{Q_N}{Q}, \tag{5}$$

where $Q$ is the total number of queries, and $Q_N$ is the count of successful queries for which the correct match appears in the top N results.

The metric in Equation (5) is informative at different values of N: Recall@1 measures exact-match accuracy, while Recall@N for N > 1 indicates the likelihood that the correct match lies within a small candidate set, which is valuable for practical navigation systems that can apply further verification steps.
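Equation (5) amounts to checking, for each query, whether the ground-truth identifier appears among the first N ranked results. A minimal sketch follows, with hypothetical ranked lists in the usage example; computing it separately over the landmark and typical-building queries gives the per-class comparison used in the evaluation.

```python
from typing import Hashable, Sequence


def recall_at_n(ranked_ids: Sequence[Sequence[Hashable]],
                true_ids: Sequence[Hashable], n: int) -> float:
    """Fraction of queries whose correct match appears in the top-n results."""
    if len(ranked_ids) != len(true_ids):
        raise ValueError("one ranked list per query is required")
    hits = sum(1 for ranked, truth in zip(ranked_ids, true_ids) if truth in ranked[:n])
    return hits / len(true_ids)


ranked = [[3, 1, 2], [2, 3, 1], [1, 2, 3], [3, 2, 1]]   # ranked database ids per query
truth = [3, 3, 2, 1]                                    # correct id per query
for n in (1, 2, 3):
    print(f"Recall@{n} = {recall_at_n(ranked, truth, n):.2f}")
```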

3.7. Experimental Setup

The experimental setup was designed to validate the proposed landmark discovery framework and quantify its impact on retrieval performance. This setup encompasses the dataset selection, implementation details of the models, and the evaluation protocol.

3.7.1. Dataset and Preprocessing

All experiments were conducted on the VPAIR dataset [13], a publicly available benchmark designed for cross-view visual localization. It features 2788 paired images from a flight over a German urban environment, comprising high-resolution aerial survey orthophotos and corresponding nadir images captured from a UAV (simulated by a light aircraft). This pairing is well suited to evaluating the robustness of features to significant changes in viewpoint and sensor modality. Representative examples of these image pairs are shown in Figure 3.

A key characteristic of the VPAIR dataset is the consistent Ground Sample Distance (GSD) across both aerial survey and UAV views, which were downsampled to a uniform 800 × 600 pixel resolution. This parity ensures that building structures, our primary landmark candidates, are resolved with sufficient detail for meaningful feature extraction. To confirm this, we analyzed the size of all 18,432 detected buildings in the dataset. As shown in Figure 4, the distribution of building bounding box sizes is concentrated well above minimal detection thresholds, confirming their suitability for the proposed framework.

3.7.2. Implementation Details

The entire workflow was implemented in Python v3.9 [35], with deep learning components built on PyTorch v1.12 [36]. All computations were performed on a workstation equipped with an NVIDIA RTX 3060 GPU.

For object segmentation, we employed the YOLOv11n-seg model from Ultralytics [37]. This model was chosen for its excellent trade-off between accuracy and computational cost, making it a strong candidate for eventual onboard UAV deployment. The model’s lightweight architecture is detailed in Table 1, highlighting its low parameter count ( M) and high-speed inference capabilities on GPU hardware.

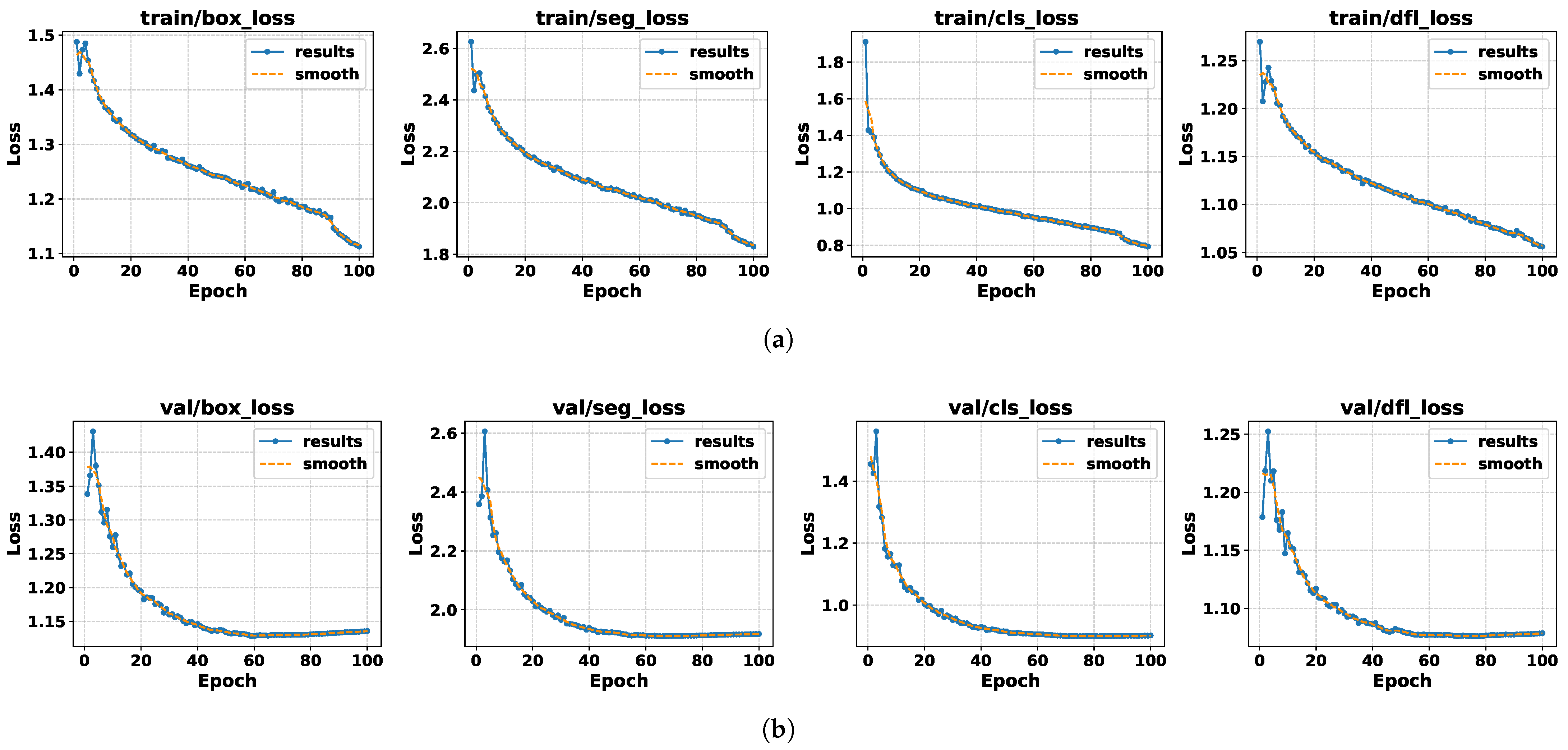

The base model was fine-tuned for 100 epochs on a custom, diverse dataset of satellite images to perform single-class building segmentation. A comprehensive analysis of the fine-tuning process and the resulting model’s performance is provided in Appendix A.

Unsupervised landmark selection was performed using the Isolation Forest algorithm, implemented with the scikit-learn v1.1 library [38]. The IsolationForest model was configured with n_estimators = 500. For our primary analysis, the contamination parameter (the expected proportion of outliers) was set to a fixed value of 0.01 to identify the most statistically rare objects. We acknowledge that a static global fraction is a simplification and may not be optimal for all environments; an adaptive threshold that adjusts to local feature density is a key area for future work.
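The configuration above maps onto scikit-learn's `IsolationForest` as sketched below. `n_estimators=500` and `contamination=0.01` come from the text; `random_state` is an added assumption for reproducibility, and the synthetic embeddings with one planted outlier are placeholders for real building embeddings. With a fixed `contamination`, scikit-learn sets its decision threshold so that roughly that fraction of the training samples is labelled `-1`, which plays the role of $\tau_a$ in Equation (4).

```python
import numpy as np
from sklearn.ensemble import IsolationForest


def select_landmarks(embeddings, contamination=0.01, n_estimators=500, seed=0):
    """Return indices of outlier embeddings and their anomaly scores (higher = rarer)."""
    forest = IsolationForest(n_estimators=n_estimators,
                             contamination=contamination,
                             random_state=seed)
    labels = forest.fit_predict(embeddings)       # -1 = outlier, 1 = inlier
    scores = -forest.score_samples(embeddings)    # negate so higher means more anomalous
    return np.flatnonzero(labels == -1), scores


rng = np.random.default_rng(0)
X = rng.normal(size=(300, 16))   # stand-in for typical building embeddings
X[42] += 25.0                    # one planted, clearly distinctive object
landmark_idx, scores = select_landmarks(X)
print(landmark_idx)
```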

3.7.3. Evaluation Protocol

To validate our core hypotheses, we designed a two-part evaluation protocol. First, for qualitative analysis of the embedding space, we used PCA and t-SNE to project the high-dimensional embedding vectors into 2D space for visualization, as described in our previous work [19].

Second, for the quantitative evaluation of retrieval performance, we created a benchmark query set by manually annotating 200 buildings from the VPAIR dataset. This set was carefully balanced into two classes based on the explicit criteria detailed in Section 3.7.4: 100 “landmarks” (architecturally unique or visually salient buildings) and 100 “typical buildings” (generic, repetitive structures). The primary performance metric was Recall@N (defined in Equation (5)), which measures the proportion of queries for which the correct building is retrieved within the top N results.

3.7.4. Benchmark Annotation Criteria

To ensure the quantitative evaluation was robust and reproducible, the manual annotation of the 200-building benchmark set followed a strict set of criteria. The annotation was performed by one of the authors and subsequently reviewed by another to ensure consistency.

Landmark Buildings (100 instances): These were selected based on visual saliency and uniqueness within the local context. Key criteria included: (1) irregular or complex building footprints (e.g., L-shaped, T-shaped, or non-rectangular designs); (2) unique roof structures, colors, or textures that clearly distinguished them from neighbors; (3) significant size or height relative to surrounding buildings; or (4) isolation from other structures, making them unambiguous.

Typical Buildings (100 instances): These were selected to represent the visually repetitive background structures. Key criteria included: (1) simple, rectangular footprints; (2) location within dense, grid-like clusters of similar-looking houses; and (3) generic, common architectural features (e.g., standard gabled roofs, uniform color).

This careful curation was essential for creating a benchmark that could fairly test the hypothesis that visually distinctive objects, as identified by our system, are inherently more reliable for retrieval-based localization.

5. Discussion

The results presented herein affirm the viability of autonomously identifying distinctive urban landmarks from aerial survey imagery, a critical capability for UAV navigation in GPS-denied settings. Our integrated framework, combining building segmentation, multi-layer feature embedding, and unsupervised outlier detection, effectively curates a database of landmarks that demonstrate markedly superior retrieval accuracy over typical, non-selected buildings. This section discusses the implications of these findings, compares our approach with existing methodologies, and critically assesses its strengths, weaknesses, and promising avenues for future research.

5.1. Comparative Analysis with Existing Approaches

To situate our work within the broader landscape of visual localization, we provide both a conceptual and an empirical comparison. Conceptually, our framework differs from established paradigms in its explicit focus on automated landmark curation. Table 4 highlights these differences relative to traditional, global embedding, and generic D2R approaches.

Empirically comparing our landmark-centric framework to global descriptor methods such as NetVLAD [16] or AnyLoc [39] is challenging, as they solve different tasks. Our system is designed for sparse landmark-based navigation correction, not continuous global localization. However, a proxy experiment was conducted to provide a relative baseline. We adapted our framework to mimic a global retrieval workflow on the VPAIR dataset’s urban images, using our landmark embeddings to represent local image groups. In this setup, our framework achieved a Recall@1 of 0.197 and a Recall@5 of 0.37 (at 100 tolerance). In contrast, a heavyweight system like AnyLoc, which uses a massive ViT-G/14 transformer, achieves a Recall@1 of 0.62 on the same task. While AnyLoc’s performance is superior in this global context, it comes at a tremendous computational cost. Our framework’s strength lies not in outperforming such systems at their own task, but in providing a highly efficient, alternative navigation paradigm.

The “lightweight” nature of our approach is a key advantage for onboard deployment. The fine-tuned YOLOv11n-seg model contains only M parameters and requires approximately GFLOPs per 640 px image, with a GPU latency under 2 ms on an NVIDIA T4 with TensorRT. The total model weights are under 15 MB. In contrast, the DINOv2 ViT-G/14 model used by heavyweight approaches like AnyLoc has ~ B parameters (nearly 400× more) and produces large, high-dimensional descriptors (49,152-D vs. our 640-D). This allows our entire landmark identification and matching workflow to run on commodity UAV compute modules without requiring powerful edge GPUs or offloading to the cloud, a critical advantage for SWaP (Size, Weight, and Power)-constrained platforms.
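The descriptor-size comparison can be made concrete with a little arithmetic. Assuming 32-bit floating-point storage (the text does not state the storage type), each 640-D embedding occupies 2,560 bytes, whereas a 49,152-D descriptor occupies 196,608 bytes, a factor of 76.8. The database size of 1,000 entries below is a hypothetical example.

```python
BYTES_PER_FLOAT32 = 4


def descriptor_bytes(dim: int, bytes_per_value: int = BYTES_PER_FLOAT32) -> int:
    """Storage needed for one dense descriptor."""
    return dim * bytes_per_value


ours = descriptor_bytes(640)
anyloc = descriptor_bytes(49_152)
ratio = anyloc / ours
n_entries = 1_000  # hypothetical database size
print(f"per descriptor: {ours} B vs {anyloc} B ({ratio:.1f}x)")
print(f"{n_entries} entries: {ours * n_entries / 1e6:.2f} MB vs {anyloc * n_entries / 1e6:.1f} MB")
```

The same factor applies to the cost of each brute-force L2 comparison, since distance computation scales linearly with descriptor dimension.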

5.2. Interpretation of Findings

The efficacy of our framework stems from its synergistic approach to constructing discriminative embeddings and an unsupervised mechanism for selecting distinctive objects. Qualitative analysis of single-layer embeddings (Figures A3 and A4) revealed that max-pooling, particularly with features from deeper CNN layers such as layers 9, 6, and 10 of the YOLOv11n-seg backbone (Table 2), excelled in cross-domain (AS2UAV) retrieval. This result aligns with established findings in computer vision. Max-pooling is known to be robust to small spatial translations and focuses on the most discriminative feature activation within a region, effectively capturing an object’s defining characteristics [40]. In contrast, average-pooling captures overall texture and statistics, which, while useful for within-domain tasks (AS2AS), proved less robust to the significant viewpoint and sensor changes inherent in the cross-domain (AS2UAV) task. This object-centric, masked aggregation strategy offers a nuanced feature representation, potentially diverging from optimal strategies in global image retrieval, where techniques such as Generalized Mean (GeM) pooling [41] are common. The efficient use of intermediate layers from a pre-trained segmentation network for embedding construction, without requiring additional training, echoes early work on leveraging pre-trained CNNs for retrieval [11], but is specifically tailored here to the nuances of building structures.
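To make the pooling comparison concrete, Generalized Mean pooling [41] computes $\left(\frac{1}{|X|}\sum_{x \in X} x^p\right)^{1/p}$ over a set of activations $X$: $p = 1$ recovers average pooling and large $p$ approaches max pooling. The sketch below applies it per channel to masked activations and is shown only as a point of comparison, not as part of the proposed framework. Clipping to a small positive value is an assumption needed because GeM is defined for non-negative inputs, while some activation functions (for example SiLU) can produce small negative values.

```python
import numpy as np


def gem(values: np.ndarray, p: float = 3.0, eps: float = 1e-6) -> np.ndarray:
    """Per-channel GeM pooling over (C, n) masked activations."""
    v = np.clip(values, eps, None)
    return np.mean(v ** p, axis=1) ** (1.0 / p)


acts = np.array([[1.0, 2.0, 3.0, 10.0]])  # one channel, four masked positions
for p in (1.0, 3.0, 50.0):
    print(f"p = {p:>4}: {gem(acts, p)[0]:.3f}")
print("mean:", acts.mean(axis=1)[0], "max:", acts.max(axis=1)[0])
```

The single strong activation (10.0) dominates as $p$ grows, which mirrors why max-pooling emphasizes an object's most discriminative response while average-pooling dilutes it with the rest of the masked region.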

A significant contribution is the unsupervised landmark selection via Isolation Forest. Unlike many navigation systems that rely on manual selection or pre-defined ‘distinctive’ classes [8], our framework defines distinctiveness as statistical rarity in the embedding space, enabling automated, data-driven database curation. This is particularly valuable for rapid deployment in unmapped areas. The pronounced improvement in retrieval accuracy for selected landmarks (Recall@1 of 0.53 vs. 0.31 for typical buildings, Table 3) validates this strategy. While it shares parallels with visual saliency research, its application here to curate landmarks from objects of the same semantic class is novel. The AS2AS and AS2UAV proxy metrics also proved effective for guiding layer selection (Figure 5) without extensive ground truth, with AS2UAV being vital for optimizing cross-domain matching.

5.3. Strengths, Weaknesses, and Limitations

The proposed framework presents several key strengths for autonomous UAV navigation. Its high degree of automation in landmark discovery and database creation minimizes manual effort, facilitating rapid deployment. The lightweight embedding generation, by hooking features from a pre-trained segmentation network, avoids computationally expensive training of dedicated embedding networks, benefiting resource-constrained UAVs. The unsupervised landmark selection via Isolation Forest offers a principled, data-driven approach to define distinctiveness, adapting to environmental visual characteristics and leading to significantly improved retrieval accuracy (Recall@5 of 0.70 for landmarks). Furthermore, the modular framework design allows for future upgrades to individual components.
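The Recall@K figures quoted above can be computed as in the following sketch. It assumes L2-normalised embeddings, cosine similarity for ranking, and exactly one correct database entry per query; the evaluation protocol behind Table 3 may define a correct match differently (e.g., with a spatial tolerance), and the function name is hypothetical.

```python
import numpy as np

def recall_at_k(queries, database, gt, ks=(1, 5)):
    """Recall@K for cosine-similarity retrieval.

    queries: (Q, D) and database: (N, D), both L2-normalised; gt[i] is the index of the
    single correct database entry for query i (an assumption of this sketch).
    """
    sims = queries @ database.T               # cosine similarity for unit vectors
    ranking = np.argsort(-sims, axis=1)       # best match first
    hits = ranking == np.asarray(gt)[:, None]  # (Q, N): where the correct entry sits
    return {k: float(hits[:, :k].any(axis=1).mean()) for k in ks}

db = np.eye(3)
q = np.array([[1.0, 0.0, 0.0], [0.6, 0.8, 0.0]])
print(recall_at_k(q, db, gt=[0, 0], ks=(1, 2)))  # {1: 0.5, 2: 1.0}
```

The second query ranks the wrong entry first but the correct one second, so it counts toward Recall@2 but not Recall@1; this is why Recall@5 (0.70) exceeds Recall@1 (0.53) for the same landmark set.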

However, the framework has limitations. A primary limitation is the system’s dependency on the initial segmentation model. As detailed in Appendix A, the fine-tuned YOLOv11n-seg performs well, but segmentation errors inevitably propagate. False negatives (missed buildings) cause potential landmarks to be omitted from consideration entirely. False positives could pollute the database with non-landmark objects. More critically, imprecise segmentation masks, for instance those that include shadows, adjacent trees, or parts of other buildings, directly corrupt the feature embeddings by introducing irrelevant information during the masked aggregation step (Equation (2)). While our qualitative results suggest some robustness to minor mask inconsistencies (Figure 9a,b), a quantitative sensitivity analysis of how retrieval accuracy degrades with mask quality (e.g., as measured by IoU) was not performed; it remains a crucial step for future work to fully characterize the system’s operational reliability.
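A first step toward this sensitivity analysis could look like the sketch below. It simulates masks that leak into surrounding pixels (such as shadows or adjacent trees) by binary dilation, and records each corrupted mask’s IoU with the original together with the cosine similarity between the clean and corrupted max-pooled embeddings. This is a hypothetical protocol on random features; a full study would report retrieval Recall@K against IoU rather than embedding drift alone.

```python
import numpy as np

def iou(a, b):
    """Intersection over union of two binary masks."""
    a, b = a.astype(bool), b.astype(bool)
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 1.0

def dilate(mask, r):
    """Binary dilation with a (2r+1) x (2r+1) square, implemented with array shifts."""
    h, w = mask.shape
    padded = np.pad(mask.astype(bool), r)
    out = np.zeros((h, w), dtype=bool)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out

def masked_max(feat, mask):
    """Max-pool a (C, H, W) feature map over the mask and L2-normalise."""
    v = feat[:, mask].max(axis=1)
    return v / np.linalg.norm(v)

def sensitivity_curve(feat, mask, radii):
    """(radius, IoU with clean mask, cosine(clean, corrupted embedding)) per radius."""
    clean = masked_max(feat, mask)
    results = []
    for r in radii:
        corrupted = dilate(mask, r)
        results.append((r, iou(mask, corrupted), float(clean @ masked_max(feat, corrupted))))
    return results

rng = np.random.default_rng(0)
feat = rng.standard_normal((32, 64, 64))  # random stand-in for a feature map
mask = np.zeros((64, 64), dtype=bool)
mask[20:40, 20:40] = True                 # hypothetical clean building mask
for r, overlap, cos in sensitivity_curve(feat, mask, radii=[0, 2, 5]):
    print(f"dilation={r}px  IoU={overlap:.2f}  cosine={cos:.3f}")
```

Dilation is only one failure mode; erosion (truncated masks) and merges with neighbouring buildings could be simulated the same way.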

Validation was conducted on the VPAIR dataset, which, while relevant, features generally favorable daytime conditions and well-matched image resolutions. The aerial survey orthophotos and UAV images have a similar ground sampling distance (GSD) of approximately 10 to 20 per pixel, and all images were downsampled to a uniform 800 × 600 pixel resolution. This resolution parity ensures that detected objects are large enough for meaningful feature extraction, as shown by the distribution of building bounding-box sizes in Figure 4. However, performance under severe weather (e.g., heavy fog, rain), extreme illumination (e.g., night), or in scenarios with large GSD mismatches between aerial survey and UAV imagery remains untested. The current building-centric focus also limits applicability in non-urban areas, where other landmark types are crucial.
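The link between GSD, image resizing, and the number of feature-map cells an object occupies is simple arithmetic, sketched below. The building size, the GSD values (in metres per pixel), and the stride of 32 (typical of the deepest YOLO backbone stages) are illustrative assumptions, not values measured on VPAIR.

```python
def footprint_on_feature_map(size_m, gsd_m_per_px, downsample=1.0, stride=32):
    """Return (pixels, feature-map cells) spanned by an object of `size_m` metres.

    gsd_m_per_px: ground sampling distance of the original image (metres per pixel)
    downsample:   resize factor applied before inference (2.0 halves the width)
    stride:       total downsampling of the pooled backbone layer (assumed)
    """
    extent_px = size_m / (gsd_m_per_px * downsample)  # object size in resized pixels
    return extent_px, extent_px / stride              # ... and in feature-map cells

ref_px, ref_cells = footprint_on_feature_map(20.0, 0.2)  # hypothetical reference image
qry_px, qry_cells = footprint_on_feature_map(20.0, 0.6)  # hypothetical coarser UAV query
print(f"reference: {ref_px:.0f} px, {ref_cells:.1f} cells")  # reference: 100 px, 3.1 cells
print(f"query:     {qry_px:.0f} px, {qry_cells:.1f} cells")  # query:     33 px, 1.0 cells
```

A threefold GSD mismatch shrinks the same building from about three feature cells to about one, so the masked pooling would draw on far fewer activations in the query than in the reference. This is one way to see why large GSD mismatches are a risk.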

Methodologically, the fixed contamination rate in Isolation Forest may not be universally optimal, and the hand-crafted aggregation functions, while standard, could potentially be outperformed by learnable alternatives, albeit at the cost of increased complexity. Moreover, the proxy metrics guide parameter tuning only approximately and do not guarantee an optimal configuration.
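The drawback of a fixed contamination rate can be illustrated on a vector of anomaly scores. In the sketch below, the fixed rule always flags the top fraction of objects, whereas a robust z-score rule (median plus a multiple of the scaled median absolute deviation) is a hypothetical adaptive alternative that flags only scores standing out from the bulk. Neither function is part of the described framework, and the scores are synthetic.

```python
import numpy as np

def fixed_rate_threshold(scores, contamination):
    """Cut-off that flags the top `contamination` fraction of scores."""
    return float(np.quantile(scores, 1.0 - contamination))

def robust_z_threshold(scores, z=3.5):
    """Hypothetical adaptive cut-off: median + z * 1.4826 * MAD."""
    scores = np.asarray(scores, dtype=float)
    med = np.median(scores)
    mad = np.median(np.abs(scores - med))
    return float(med + z * 1.4826 * mad)  # 1.4826 scales MAD to a std. dev. under normality

rng = np.random.default_rng(0)
scores = np.concatenate([rng.normal(0.45, 0.01, 95), np.full(5, 0.8)])  # synthetic scores
n_fixed = int((scores >= fixed_rate_threshold(scores, 0.10)).sum())
n_adaptive = int((scores > robust_z_threshold(scores, z=5.0)).sum())
print(n_fixed, n_adaptive)  # 10 5
```

With 95 typical scores near 0.45 and five clear outliers at 0.8, a 10% contamination rate flags ten objects, five of them typical buildings, while the adaptive rule flags only the five outliers. In a scene with many distinctive buildings the situation reverses, and a fixed rate would miss some of them.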

5.4. Open Research Challenges and Future Work Directions

Addressing the identified limitations points to several impactful research directions. Expanding the landmark inventory beyond buildings to include features such as road intersections, bridges, or natural formations is essential for broader applicability; this requires multi-class segmentation and potentially class-specific embedding strategies. Methodological enhancements, such as learnable aggregation functions (e.g., attention-based pooling [17] or Perceiver-style resampling [42]) or adaptive outlier detection mechanisms that adjust distinctiveness thresholds based on scene complexity or navigational needs, could significantly improve performance and flexibility. Enhancing robustness to diverse environmental conditions through domain adaptation, advanced data augmentation (e.g., using generative models to create seasonal or lighting variations of training data), or multi-modal sensor fusion (e.g., incorporating thermal or LiDAR data) is critical for real-world deployment.
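Attention-based pooling, one of the learnable alternatives mentioned above, can be sketched as single-query attention over the feature cells inside a mask. The weights below are random placeholders for parameters that would be learned, and this generic formulation is an illustration, not the specific method of [17].

```python
import numpy as np

def attention_pool(cells, query, w_k, w_v):
    """Single-query scaled dot-product attention over masked feature cells.

    cells: (N, C) features inside the mask; query: (d,); w_k, w_v: (C, d).
    """
    keys = cells @ w_k                               # (N, d)
    values = cells @ w_v                             # (N, d)
    logits = keys @ query / np.sqrt(query.shape[0])  # (N,) relevance of each cell
    logits = logits - logits.max()                   # stabilise the softmax
    weights = np.exp(logits)
    weights /= weights.sum()
    return weights @ values, weights                 # pooled (d,), attention (N,)

rng = np.random.default_rng(0)
cells = rng.standard_normal((30, 16))  # 30 masked cells, 16 channels (illustrative)
w_k, w_v = rng.standard_normal((16, 8)), rng.standard_normal((16, 8))
pooled, attn = attention_pool(cells, rng.standard_normal(8), w_k, w_v)
uniform, _ = attention_pool(cells, np.zeros(8), w_k, w_v)
print(np.allclose(uniform, (cells @ w_v).mean(axis=0)))  # True: zero query = average pooling
```

With a zero query every cell receives equal weight and the output reduces to average-pooling of the projected values, while a sharply peaked attention distribution approaches selecting a single cell, closer in spirit to max-pooling. Training would let the model settle between these extremes, at the cost of the added complexity noted in Section 5.3.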

Further system-level improvements include integrating geometric verification (e.g., Random Sample Consensus (RANSAC) with a Perspective-n-Point (PnP) solver) to filter false positives and enable precise 6-DoF pose estimation. As mentioned in Section 3, this involves matching 2D keypoints in the UAV image to a 3D model of the landmark, which can often be inferred from the same aerial survey data used to build the database. Developing capabilities for online landmark discovery and dynamic map management would allow UAVs to adapt to changing environments and expand their knowledge base over time. The creation of large-scale, multi-season, multi-modal benchmark datasets with precise ground truth is vital for rigorous evaluation and continued progress. Finally, our work contributes to the development of more sophisticated autonomous navigation systems. The curated landmark database constitutes a structured ‘visual knowledge base’ that maps visual embeddings to semantic labels (‘landmark building’) and geolocations. This provides a concrete external knowledge source that a future navigation agent could query; for example, an agent’s planning module could query for the ‘nearest landmark’ to inform its path or verify its position. This reframes our contribution as a key perceptual component for future agentic systems that leverage external knowledge bases for robust decision-making, aligning with concepts such as Retrieval-Augmented Generation but grounded in the specific, functional requirements of navigation [43].
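Geometric verification with RANSAC can be sketched on synthetic 2D-3D correspondences. To stay self-contained, the sketch swaps in the linear six-point Direct Linear Transform (DLT) as the minimal PnP solver: it recovers the full 3 × 4 projection matrix, requires non-coplanar 3D points, and omits the coordinate normalisation a robust implementation would add. Practical systems would more likely use a calibrated minimal solver such as P3P or EPnP (OpenCV’s `cv2.solvePnPRansac` can be configured to use such solvers). The correspondences are assumed to come from keypoint matching against a landmark’s 3D model, which is not shown, and all function names are hypothetical.

```python
import numpy as np

def dlt_projection(X, x):
    """Estimate a 3x4 projection matrix (up to scale) from >= 6 non-coplanar 2D-3D pairs."""
    rows = []
    for Xw, (u, v) in zip(X, x):
        Xh = np.append(Xw, 1.0)                                   # homogeneous world point
        rows.append(np.concatenate([Xh, np.zeros(4), -u * Xh]))  # u-equation
        rows.append(np.concatenate([np.zeros(4), Xh, -v * Xh]))  # v-equation
    _, _, vt = np.linalg.svd(np.array(rows))
    return vt[-1].reshape(3, 4)  # right singular vector of the smallest singular value

def reproject(P, X):
    """Project (n, 3) world points with P; returns (n, 2) pixel coordinates."""
    p = np.hstack([X, np.ones((len(X), 1))]) @ P.T
    with np.errstate(divide="ignore", invalid="ignore"):
        return p[:, :2] / p[:, 2:3]

def ransac_pnp(X, x, iters=200, thresh_px=2.0, seed=0):
    """RANSAC over minimal 6-point DLT fits; keeps the largest consensus set."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(X), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(X), size=6, replace=False)
        P = dlt_projection(X[idx], x[idx])
        err = np.linalg.norm(reproject(P, X) - x, axis=1)
        inliers = err < thresh_px  # NaN errors (points at infinity) count as outliers
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 6:
        raise RuntimeError("no consistent pose hypothesis found")
    return dlt_projection(X[best], x[best]), best  # refit on all inliers

rng = np.random.default_rng(0)
K = np.array([[800.0, 0.0, 400.0], [0.0, 800.0, 300.0], [0.0, 0.0, 1.0]])
P_true = K @ np.hstack([np.eye(3), [[0.0], [0.0], [50.0]]])  # camera 50 units from origin
X = rng.uniform(-10, 10, (50, 3))                   # hypothetical landmark model points
x = reproject(P_true, X)                            # exact projections...
x[40:] = rng.uniform([0, 0], [800, 600], (10, 2))   # ...with the last 10 replaced by outliers
P, inliers = ransac_pnp(X, x)
print(int(inliers.sum()), "inliers")
```

Because P is recovered only up to scale, a metric pose would be obtained by factoring it; with known intrinsics K, [R | t] is proportional to K⁻¹P. A retrieved landmark whose matches yield too few inliers would be rejected as a false positive.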

6. Conclusions

This research successfully developed and validated an automated framework for identifying distinctive urban landmarks from aerial survey imagery to support UAV navigation in GPS-denied environments. By leveraging features from intermediate layers of a pre-trained YOLOv11n-seg model and employing an unsupervised Isolation Forest outlier detection method, our system effectively curates a database of visually unique building landmarks without requiring additional training for embedding generation or manual annotation of distinctiveness. Key quantitative results on the VPAIR benchmark demonstrated that the optimally configured max-pooled embeddings (from layers 9, 6, 10) achieved a top-1 retrieval accuracy of 0.53 for these automatically selected landmarks, a significant improvement over the 0.31 accuracy for typical buildings, and a promising Recall@5 of 0.70 for landmarks. Visual analysis confirmed the robust separation of landmark embeddings and their stability. While the current system demonstrates strong potential, its primary limitations include a building-centric focus, dependence on the initial segmentation quality, and validation on a single dataset under favorable conditions.

Future work will aim to expand the landmark inventory to diverse object types, explore learnable aggregation functions for enhanced viewpoint invariance, integrate geometric verification for precise pose estimation, and rigorously test the system across varied environmental conditions using more comprehensive benchmarks. The development of adaptive outlier detection and dynamic map management will also be crucial.