1. Introduction

The growing global population and the rising demand for food production present significant challenges, particularly in light of existing poverty and resource limitations. According to the Food and Agriculture Organization (FAO) of the United Nations, in 2022, 9% of the world’s population (or 712 million people) were living in extreme poverty, an increase of 23 million people compared to 2019. If current trends persist, 590 million people, or 6.9% of the world’s population, are projected to still live in extreme poverty by 2030 [1].

Achieving the Sustainable Development Goals (SDGs), particularly the goal of eradicating hunger, is increasingly threatened by the rapid growth of the global population. As demand for food surges, the pressure on resources intensifies, posing significant challenges to sustainable agriculture and food security. By 2050, cereal production must increase by 3 billion tonnes annually, while meat production needs to rise by over 200% to meet global consumption demands [2]. However, this growth is hindered by the degradation of around 20% of agricultural land and by a projected 40% shortfall in meeting water needs by 2030 [3]. Addressing these critical issues calls for innovative agricultural practices and efficient resource management to secure sustainable food production amid escalating demographic pressures.

The Fourth Agricultural Revolution, often referred to as Agriculture 4.0, is defined by the integration of advanced digital technologies that are fundamentally transforming traditional farming practices. Building upon previous agricultural advancements, this revolution incorporates Information and Communication Technology (ICT), Artificial Intelligence (AI), and automation to enhance efficiency, productivity, and sustainability in farming and to improve overall yields. A central aspect of Agriculture 4.0 is smart farming, which uses these advanced technologies to optimize agricultural processes. Smart farming enables real-time monitoring and precise control of key variables such as weather conditions, soil composition, crop health, and moisture levels [4].

Various technologies, such as remote sensing [4] and the Internet of Things (IoT) [5,6], have been successfully implemented in smart farming, significantly enhancing efficiency and productivity. In recent years, emerging technologies like edge computing, Unmanned Aerial Systems (UASs) [7], Big Data Analytics (BDA) [8], and Machine Learning (ML) [3] have shown great promise in revolutionizing agricultural practices. These advancements not only optimize resource utilization but also play a crucial role in addressing the growing challenges of global food demand, paving the way for a more sustainable and technologically driven agricultural future.

Agricultural practice generally involves three key steps: diagnosis, decision-making, and action. Smart farming leverages modern technologies to support and enhance these processes, encompassing three major components: data acquisition, decision support, and activity assistance [8]. Smart farming aims to improve the performance of all three components using modern ICT and AI technologies.

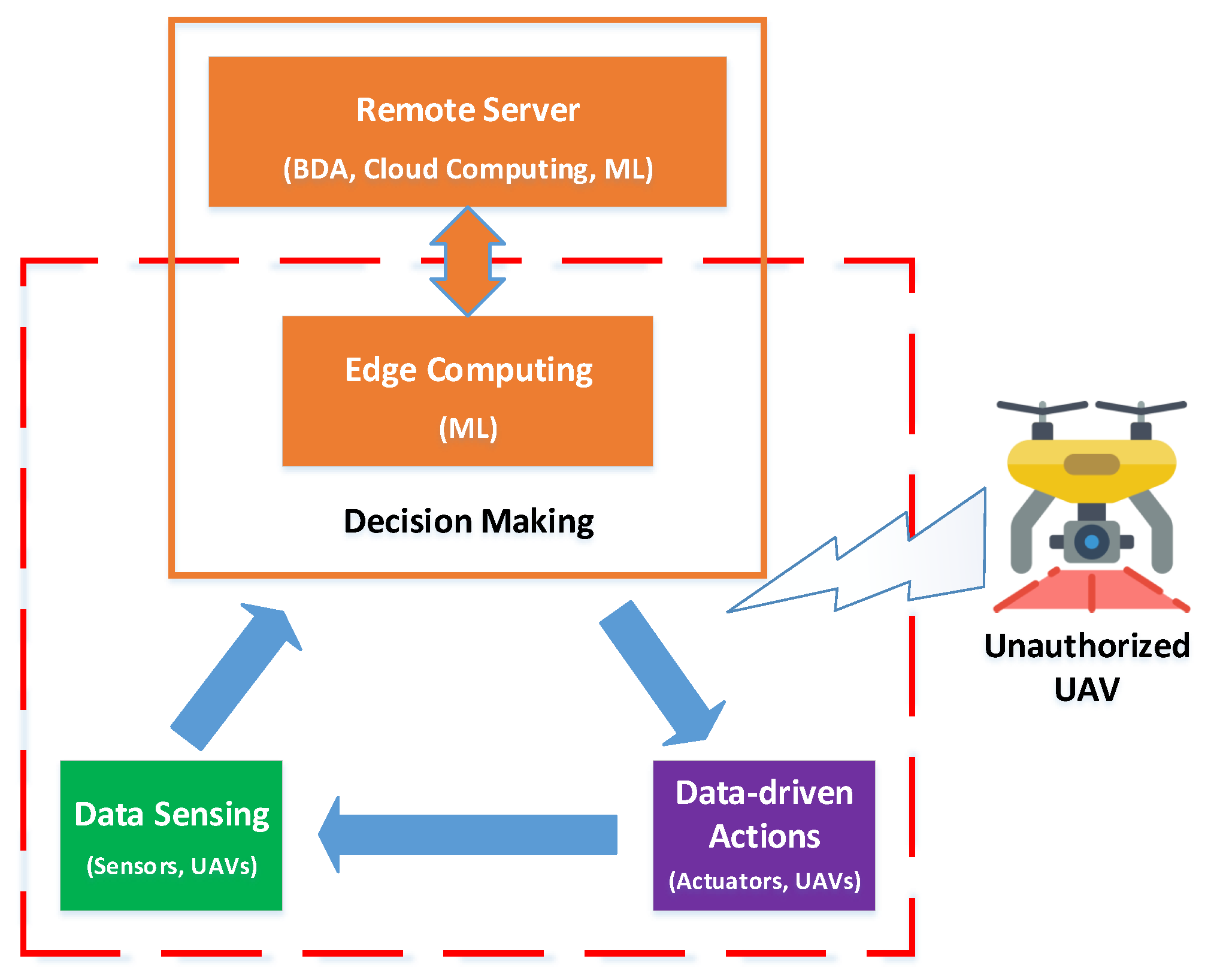

Figure 1 illustrates how the aforementioned technologies are integrated into these three stages.

Furthermore, ref. [7] identified the IoT and UAVs as two critical technologies for smart farming, offering a comprehensive review of recent research on their applications in agriculture. The study details the core principles of IoT technology, including intelligent sensors, various IoT sensor types, agricultural networks, and protocols, as well as IoT applications and solutions specific to smart farming. Additionally, the authors examine the role of UAV technology in smart agriculture by exploring its application across various domains, such as irrigation, fertilization, pesticide usage, weed management, plant growth monitoring, crop disease management, and field-level phenotyping. The study concludes that IoT and UAV technologies are among the most transformative forces driving the evolution of traditional farming into a new era of intelligence.

While the adoption of these modern technologies significantly improves production and reduces labor costs in smart farming, it also introduces substantial security risks compared to traditional farming practices. There is a growing number of state-of-the-art surveys and review papers addressing security issues related to smart agriculture. These studies [9,10,11,12,13] have explored the vulnerabilities and potential threats to these systems [9], highlighting the need for secure architectures and protocols. Multi-layered architectures [10,11], commonly adopted in smart agriculture, have been examined to address these issues from a layered perspective. Researchers have proposed solutions such as blockchain-based protocols [12], authentication mechanisms, and privacy-preserving techniques [13] to enhance system reliability. Despite progress, many security challenges remain unexplored, emphasizing the need for further research and technological advancements in this field.

The development of smart farming was classified into three typical modes in [9]: precision agriculture, facility agriculture, and order agriculture. The authors introduced and analyzed six security and privacy countermeasures for smart agriculture development: authentication and access control, privacy-preserving techniques, blockchain-based solutions for data integrity, cryptography and key management, physical countermeasures, and intrusion detection systems. The authors discussed security challenges in smart farming, including the aspects related to agricultural production and information technology. They suggested that advancements in 5G, fog computing (FC), energy management systems, software-defined networking (SDN), virtual reality (VR), augmented reality (AR), and cybersecurity datasets for smart agriculture would contribute to the field’s development. Additionally, experiments were conducted to examine the impact on smart farming security, with results indicating that agricultural equipment, such as solar insecticidal lamps, could affect agricultural security.

Different security scenarios in the smart farm sector were studied in [10] through the analysis of possible attacks and threats within the context of IoT applications. This paper reviewed existing testbeds for smart farms to understand their benefits and proposed a layered architecture to analyze security requirements. In this paper, a literature survey of security protocols, with a focus on blockchain-based solutions, was also conducted. The authors concluded that there was an immediate need to develop authentication protocols before any message exchange took place in a smart farming environment. They noted that areas such as cyber–physical searching, secure encrypted searching, and access control were largely unexplored.

The authors of [11] presented specific security issues in Agriculture 4.0. By reviewing smart agriculture systems, the authors categorized the architecture used by most systems into four layers: perception layer, network layer, edge layer, and application layer. This paper highlighted major security threats from a layered perspective, concluding that current smart farm research allocated too few resources to address security threats, likely because solutions were still in the early stages of development with limited computational resources. However, the authors believed that addressing these threats could enhance the reliability and accuracy of smart farm systems on a large scale.

A similar multi-layered architecture for smart farming was discussed in [13], where the proposed architecture consisted of four layers: a physical layer, edge layer, cloud layer, and network communication layer. The article surveyed the state-of-the-art research and acknowledged important works related to cybersecurity in smart farming. The authors outlined different entities pertinent to real-time use cases supported by edge and cloud environments and summarized security and privacy issues in smart farming. They highlighted various attack scenarios, including those affecting the entire food supply chain, and discussed open challenges and research problems related to smart farming security and privacy leakage.

Meanwhile, ref. [12] focused on security and privacy issues in green IoT-based agriculture. The article presented IoT-based smart farming as a four-tier architecture: sensor layer, fog layer, core layer, and cloud layer. Based on this architecture, the authors discussed threat models and classified them into five categories: attacks against privacy, authentication, confidentiality, availability, and integrity. The state-of-the-art technologies for security and privacy preservation in IoT applications were compared, and their adaptation to smart farming was discussed. The authors also emphasized the application of privacy-oriented blockchain-based solutions and consensus algorithms for enhancing smart farming.

The security threats and vulnerabilities addressed in existing research (e.g., [9,10,11,12,13]) primarily stem from conventional cyber and physical threats posed by traditional tools and attack methods rather than UAVs. However, the rapid advancement and growing accessibility of UAV technology in recent years have introduced a new and pressing security challenge, with significant implications for safety, security, and privacy. Reports of UAV-related incidents affecting critical infrastructures are growing, and there have been documented cases of terrorists using weaponized UAVs for attacks, surveillance, reconnaissance, and other illegal activities [14]. As UAVs become increasingly common, unauthorized UAVs (in this manuscript, unauthorized UAVs are defined as UAVs that enter a farm’s airspace without the owner’s permission) have emerged as a novel tool for executing threats against smart farms. This shift calls for greater attention to the smart farming technologies that are most vulnerable to UAV-based attacks. Understanding these risks is critical to addressing the modern threats to smart farms. In parallel, counter-drone technologies should be considered an integral part of future smart farm security strategies to mitigate the potential impact of unauthorized UAVs, which underscores the urgent need to incorporate such countermeasures into smart farming research and development. Against this background, this work investigates UAV-related threats to smart farms, beginning with a discussion of the smart farm components most vulnerable to UAV-based attacks, then exploring potential interference caused by UAVs, and concluding with a discussion of counter-UAV measures.

The rest of this paper is organized as follows. Section 2 outlines the main objectives and motivations for this work, along with our key contributions. Section 3 and Section 4 summarize the applications of IoT technology and UAV technology, respectively, both of which are considered sensitive to UAV-based attacks. Section 5 thoroughly examines the threats posed by unauthorized UAVs to smart farming, taking into account the applications reviewed in the previous sections. Based on these identified threats, Section 6 examines various UAV detection and positioning technologies in the context of smart farming, serving as the foundational step for developing counter-UAV systems. Furthermore, Section 7 explores future directions for anti-UAV systems in smart farms, emphasizing the need for hybrid detection methods, non-invasive countermeasures, enhanced security for IoT edge nodes, and real-time autonomous response systems. Finally, Section 8 presents the conclusions of this paper.

2. Motivation and Contribution

The initial motivation for preparing this review stems from the fact that IoT field devices and smart farm UAVs are critical components of smart farming but are particularly vulnerable to interference from unauthorized UAVs. To the best of the authors’ knowledge, a significant gap exists in understanding the threats posed by unauthorized UAVs to smart farming systems.

In recent decades, UAVs have gained significant popularity, while simultaneously introducing new security challenges. Commercial UAVs available on the market are typically compact, with limited payload capabilities and restricted travel distances. These features make UAVs more covert in their operations but also limit their coverage, presenting security challenges to both traditional and smart farming practices. The covert nature of UAVs, coupled with their limited coverage, poses unique threats, as they can be deployed stealthily to interfere with farm operations or collect unauthorized data.

Meanwhile, the specific security threats posed by unauthorized UAVs to smart farms are not yet fully understood. The potential for UAV-based attacks, such as data interception, jamming of communication signals, or even physical damage to infrastructure, presents significant risks. To address these challenges, it is essential to assess modern smart farm technologies, particularly those vulnerable to UAV-based attacks. This includes evaluating the security of communication networks, IoT devices, and automated systems that manage critical farming operations. Understanding these vulnerabilities is crucial for developing effective countermeasures to protect smart farms from evolving threats posed by unauthorized UAVs.

In this work, a comprehensive literature search was conducted to identify relevant studies on UAV-imposed threats to smart farms. The search was performed using major academic databases, including Scopus, Web of Science, and IEEE Xplore, with Google Scholar used for supplementary sources. The search strategy employed keyword combinations such as “smart farms AND IoT”, “smart farms AND UAVs”, “smart farms AND security AND privacy”, and “UAV threats AND UAV detection”. To ensure a broad and relevant scope, additional references were manually identified from the reference lists of key publications. Only peer-reviewed journal articles, conference proceedings, and high-impact or very recent review papers were considered for inclusion.

The selection of studies was based on predefined inclusion and exclusion criteria. Articles published in English between 2020 and 2024 were prioritized, focusing on the specific topics covered in each section and subsection. In cases where only a limited number of studies met these criteria, an extended range from 2015 to 2020 was considered, incorporating only high-impact publications. Studies were excluded if they were non-English, lacked a clear methodology or experimental validation, or were duplicate publications or preprints without peer review. The screening process was conducted in two stages, beginning with an initial review of titles, abstracts, and conclusions, followed by a full-text assessment to determine their relevance to the study objectives.

The main contributions of this work are summarized as follows:

This review identifies IoT field devices and UAVs as two critical components of smart farming that are particularly vulnerable to unauthorized UAV attacks. It explores recent advancements in the state-of-the-art applications of these technologies within smart farming.

An analysis of unauthorized UAV threats to smart farming is introduced for the first time.

Various UAV detection and positioning technologies are examined, with a focus on their specific challenges in smart farming environments.

3. IoT on Smart Farming

IoT technology is considered one of the fundamental technologies of smart farming. It plays a pivotal role in enabling efficient and automated agricultural operations by integrating various sensors, devices, and systems to monitor and manage farm activities in real time.

This technology transforms real-world physical things or objects into online virtual objects using specific addressing schemes. IoT technology aims to unify everything in our world under a common infrastructure, keeping us informed of the state of things or objects through sensors and giving us control over them through actuators [15]. The authors of [16] surveyed 67 research papers published between 2006 and 2019 on the use of IoT in various agricultural applications. As illustrated in Figure 2, 70% of these applications focus on monitoring, 25% on control, and the remaining 5% on animal tracking.

Regarding data flow, IoT-based smart farms are structured using a four-layer model [17], as illustrated in Figure 1. The first layer, the perception layer, consists of various sensors distributed throughout the farm to collect environmental and plant-related data, such as soil moisture, temperature, humidity, and crop health. The network layer facilitates communication between sensors and cloud servers through routing technologies. Since smart farms cover large areas and rely on numerous sensors, gateways are often required to enhance data collection efficiency and streamline information transfer. In the service layer, collected data are stored, processed, and analyzed to generate actionable insights. Advanced data processing techniques extract meaningful information about the farm’s current status, while decision-support tools help farmers optimize agricultural output, maintain crop quality, and efficiently manage resources. The application layer provides a user-friendly interface for data visualization, allowing farmers to monitor farm conditions through graphical representations of sensor data. This enables informed decision-making and precise control over field actuators, ultimately improving overall farm management and productivity.
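The layered data flow described above can be sketched in a few lines of Python. This is a minimal illustration, not the model of [17]: the `Reading` type, the layer functions, the sample values, and the 25% soil-moisture threshold are hypothetical placeholders chosen only to show how data move from the perception layer to the application layer.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    """Hypothetical sensor reading format used only for this illustration."""
    sensor_id: str
    quantity: str  # e.g. "soil_moisture" (%) or "air_temp" (°C)
    value: float


def perception_layer() -> list[Reading]:
    # Stand-in for field sensors; the values are made up.
    return [
        Reading("s1", "soil_moisture", 21.0),
        Reading("s2", "soil_moisture", 19.0),
        Reading("s3", "air_temp", 27.5),
    ]


def network_layer(readings: list[Reading]) -> dict[str, list[float]]:
    # A gateway groups readings by quantity before forwarding them upstream.
    batches: dict[str, list[float]] = {}
    for r in readings:
        batches.setdefault(r.quantity, []).append(r.value)
    return batches


def service_layer(batches: dict[str, list[float]],
                  moisture_threshold: float = 25.0) -> dict:
    # Storage and analysis: average each quantity and derive one actionable flag.
    summary: dict = {q: mean(vals) for q, vals in batches.items()}
    summary["irrigate"] = summary.get("soil_moisture", float("inf")) < moisture_threshold
    return summary


def application_layer(summary: dict) -> str:
    # Presentation for the farmer; a dashboard would render this graphically.
    return ", ".join(f"{k}={v}" for k, v in summary.items())


print(application_layer(service_layer(network_layer(perception_layer()))))
```

In a real deployment each function would run on different hardware (sensor nodes, gateways, cloud servers, and a user interface); keeping them as separate functions simply mirrors that separation of concerns.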

To further improve IoT applications in smart farming, recent developments have also introduced edge computing nodes, including decision-making nodes and information processing nodes. Decision-making nodes connect sensors and actuators locally in scenarios requiring quick responses, while information processing nodes reduce the workload of the central server and are suited to situations in which large volumes of diverse data are generated.

In the rest of this section, we discuss IoT applications in smart farming to highlight the essential role of IoT in enabling advanced monitoring and automation for agricultural operations. Key applications such as soil monitoring, crop management, climate monitoring, and automated machinery are presented, demonstrating their contributions to efficient and informed decision-making. The discussion then introduces the novel integration of edge computing, showcasing how this technology further enhances IoT system performance in the context of smart farming. Potential vulnerabilities of these IoT systems, particularly concerning edge computing nodes, are deferred for discussion in Section 5.

3.1. Monitoring Using IoT Technology

IoT technology is fundamental to smart farming, as agricultural landscapes cover vast areas that require continuous and precise monitoring to ensure optimal productivity and sustainability [18]. By leveraging a network of interconnected sensors, IoT enables real-time data collection on critical environmental parameters such as soil moisture, temperature, humidity, and atmospheric conditions. Additionally, these sensors play a pivotal role in monitoring the health of crops and livestock, facilitating early detection of diseases, nutrient deficiencies, and other anomalies that could impact yield and farm efficiency. The integration of IoT sensing results with advanced analytics further enhances decision-making, enabling predictive maintenance and resource optimization.

3.1.1. Monitoring Environment Parameters

Environmental parameters that affect crops include soil, water, temperature, and air conditions.

A novel chip-level colorimeter has been developed to detect nitrogen and phosphorus concentrations in soil, using a handheld colorimeter or spectrophotometer. This technology is leveraged to create a system specifically designed for real-time detection of soil parameters on farms. Chemical reactions with the soil solution generate colors in the presence of nutrients, which are then quantitatively measured by sensors [19].
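The exact sensing chain of [19] is not reproduced here, but colorimetric nutrient assays are commonly quantified by converting transmitted light into absorbance and reading the concentration off a linear calibration line fitted to standards (the Beer–Lambert regime). The Python sketch below assumes that generic approach; the function names, the standard concentrations and absorbances, and the sample intensities are all hypothetical.

```python
import math


def absorbance(intensity: float, blank_intensity: float) -> float:
    # Absorbance of the coloured sample relative to a reagent blank.
    return -math.log10(intensity / blank_intensity)


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    # Ordinary least-squares fit of ys = slope * xs + intercept.
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def concentration(a: float, slope: float, intercept: float) -> float:
    # Invert the calibration line to estimate nutrient concentration.
    return (a - intercept) / slope


# Hypothetical calibration standards: concentration (mg/L) vs. absorbance.
slope, intercept = fit_line([0.0, 5.0, 10.0, 20.0], [0.0, 0.1, 0.2, 0.4])
a = absorbance(intensity=501.2, blank_intensity=1000.0)
print(round(concentration(a, slope, intercept), 1))
```

A linear calibration only holds within the range covered by the standards; readings outside it would need additional standards or a nonlinear fit.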

Two smart measurement stations have been deployed for environmental sensing in smart farms [20]. One station monitors soil conditions through sensors that measure soil temperature and moisture. The other station tracks weather parameters such as wind speed, wind direction, air temperature, and humidity.

Additionally, a novel method was developed by [21] to enhance the selectivity of existing metal–oxide–semiconductor (MOX) gas sensors. The system can distinguish among ammonia (NH3), nitrous oxide (N2O), methane (CH4), and normal air, and can be applied in smart agriculture to monitor greenhouse gas emissions from the soil.
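One generic way to improve the selectivity of a gas sensor is to record several response features per measurement and classify the resulting pattern. The Python sketch below uses a nearest-centroid rule; it is not the method of [21]. The three-feature representation (responses at three heater temperatures) and the reference patterns are hypothetical and would, in practice, come from calibration runs.

```python
import math

# Hypothetical reference patterns: normalized responses of one MOX sensor
# read at three heater temperatures. Real patterns would come from calibration.
REFERENCE_PATTERNS = {
    "air": (0.0, 0.0, 0.0),
    "NH3": (0.8, 0.5, 0.2),
    "N2O": (0.2, 0.6, 0.9),
    "CH4": (0.1, 0.3, 0.4),
}


def classify_gas(features: tuple[float, float, float]) -> str:
    # Nearest-centroid rule: return the label whose reference pattern is
    # closest in Euclidean distance to the measured feature vector.
    return min(REFERENCE_PATTERNS,
               key=lambda g: math.dist(features, REFERENCE_PATTERNS[g]))


print(classify_gas((0.75, 0.45, 0.25)))
```

Nearest-centroid classification is chosen here only for brevity; more expressive classifiers are typically needed when gas responses overlap.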

A low-cost sensory system was proposed in [22], incorporating soil temperature and moisture sensors, a water level indicator, a pH sensor, a GPS sensor, and a color sensor, all connected to an Arduino UNO microcontroller board. The system collected real-time data from crop areas at different intervals, to be analyzed later to recommend suitable crops.

Moreover, a system based on the Arduino Mega 2560 microcontroller board was developed in [23]. It integrates multiple sensors to measure water temperature, pH, dissolved oxygen, water level, and sensor lifespan to monitor water quality in fish farms.

3.1.2. Monitoring Plants Status

IoT technologies can also be adopted for monitoring plant health, including pest infestations, weed presence, and disease infections.

For example, ref. [24] evaluated the accuracy and performance of a ground-based weed mapping system. This system relies on optoelectronic sensors and location sensors. Specifically, the WeedSeeker sensor, an active optical sensor with its own light source, can distinguish green plants from bare ground by analyzing their different light reflection patterns in the red and near-infrared bands.
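A common way to exploit the red/near-infrared contrast described above is the normalized difference vegetation index (NDVI). The WeedSeeker's internal decision rule is not described here, so the Python sketch below is only an assumption-based illustration: it computes NDVI per reflectance sample and opens a nozzle where the value exceeds a hypothetical threshold of 0.3, as in a fallow field where any green plant is treated as a weed.

```python
def ndvi(nir: float, red: float) -> float:
    # Normalized difference vegetation index: green leaves reflect strongly in
    # the near-infrared band and absorb red light, so NDVI is high over plants.
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def spray_mask(samples: list[tuple[float, float]],
               threshold: float = 0.3) -> list[bool]:
    # One boolean per (nir, red) reflectance sample: True means "green plant
    # detected, open the nozzle"; False means bare soil. Threshold is hypothetical.
    return [ndvi(nir, red) > threshold for nir, red in samples]


print(spray_mask([(0.50, 0.08), (0.30, 0.25), (0.45, 0.10)]))
```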

In a related study, ref. [25] reviewed various approaches to selective herbicide application based on precise weed detection. The authors provided an architecture for an IoT-based smart weed detection platform. This platform, equipped with a Raspberry Pi 3 Model B+ single-board computer (SBC) (Raspberry Pi Foundation, Pencoed, Wales, UK) and a Sony IMX219 image sensor (Sony Semiconductor Solutions Corporation, Tokyo, Japan), captures real-time video of the field. The SBC drives the camera and handles remote computing and networking to exchange information between the platform and the server. A classifier deployed on the server uses predefined files and the captured videos to detect weeds.

As a pest control measure against litchi stink bug infestations, ref. [26] developed an IoT- and AI-based system for pest surveillance and forecasting. Microclimate monitoring stations equipped with multiple sensors capture environmental parameters such as temperature, humidity, soil moisture, light intensity, and atmospheric pressure. The captured data are transmitted via long-range (LoRa) signals to a Raspberry Pi 4 SBC acting as a server, which forwards them to the cloud. A pre-trained Long Short-Term Memory (LSTM) network on the cloud server uses the sensor data for pest detection, distribution analysis, and prediction.

Similarly, ref. [27] designed an IoT- and cloud-based system for monitoring and predicting agricultural pests and diseases. This system collects operational data from sensors deployed inside and outside farming facilities. A testbed demonstrated its capability to predict disease in strawberry plants in a greenhouse environment. The system employs IoT devices based on the oneM2M standard (https://www.onem2m.org/, accessed on 19 March 2025) to measure temperature, humidity, oxygen concentration, and illumination intensity. An IoT-Hub was deployed to request data from these devices and transmit them to the cloud server, where a General Infection Model predicted strawberry diseases based on the gathered information.

3.2. Perform Agriculture Activities

IoT actuators play a crucial role in executing actions based on prior sensing observations. Smart irrigation and fertigation systems have garnered significant attention, alongside growing interest in smart temperature and lighting control systems in agriculture.

For example, ref. [20] developed an IoT-enabled smart irrigation system that predicts required water levels and automatically schedules irrigation. The system gathers soil parameters such as moisture and temperature, and weather parameters such as wind speed, direction, air temperature, and humidity. These are sent to a server via LoRa signals to enable machine learning algorithms to predict water requirements.
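Once a model has predicted how much soil moisture will be lost, the scheduling step can be as simple as a water-balance calculation. The Python sketch below assumes the prediction is already available as `predicted_loss_pct` (standing in for the machine learning output in [20]); the function name, target moisture, root-zone depth, and example numbers are hypothetical.

```python
def irrigation_depth_mm(
    current_moisture_pct: float,
    predicted_loss_pct: float,
    target_moisture_pct: float,
    root_zone_depth_mm: float,
) -> float:
    # Moisture expected before the next irrigation window, given the model's
    # predicted loss (e.g. from evapotranspiration).
    projected = current_moisture_pct - predicted_loss_pct
    # Only irrigate if the projection falls below the target.
    deficit_pct = max(0.0, target_moisture_pct - projected)
    # Volumetric water content (%) over the root-zone depth gives a water depth in mm.
    return deficit_pct / 100.0 * root_zone_depth_mm


print(irrigation_depth_mm(24.0, 4.0, 25.0, 300.0))
```

Separating prediction from scheduling in this way lets the learned model be retrained without changing the actuation logic.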

Similarly, ref. [28] proposed two IoT-based smart fertigation systems, addressing short-term and long-term objectives. The short-term approach focuses on daily fertigation management via real-time monitoring of soil conditions and crop growth. Sensors and cameras gather data on soil temperature, moisture, electrical conductivity (EC), pH, and crop development. The long-term approach seeks to optimize economic and environmental outcomes by allocating resources efficiently across multiple crops, relying on the expertise of greenhouse growers and agricultural specialists.

In another study, ref. [29] explored smart thermal control in pig farming facilities. Their system aimed to establish a thermoneutral zone (TNZ) conducive to pig growth by using IoT technologies. Environmental sensors collected data on ambient temperature, humidity, pigs’ physiological characteristics, and their positions. These data were transmitted and managed through Microsoft Azure (https://azure.microsoft.com/en-us, accessed on 19 March 2025), with a custom IoT middleware triggering actuators to control air-conditioning and maintain optimal growth conditions.
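The actuation step of such a system can be illustrated with a hysteresis controller that keeps the measured temperature inside a TNZ. This Python sketch is an assumption, not the middleware of [29]: it considers temperature only (ignoring humidity, physiology, and position), and the 18 to 24 °C zone and 0.5 °C hysteresis band are hypothetical placeholders, since the appropriate TNZ depends on the animals' age and weight.

```python
from enum import Enum


class Hvac(Enum):
    OFF = "off"
    COOL = "cool"
    HEAT = "heat"


def hvac_command(temp_c: float, current: Hvac,
                 lower_c: float = 18.0, upper_c: float = 24.0,
                 band_c: float = 0.5) -> Hvac:
    # Outside the thermoneutral zone: act immediately.
    if temp_c > upper_c:
        return Hvac.COOL
    if temp_c < lower_c:
        return Hvac.HEAT
    # Inside the zone: keep the active mode until the temperature is band_c
    # inside the limit (hysteresis), so the actuator does not chatter.
    if current is Hvac.COOL and temp_c > upper_c - band_c:
        return Hvac.COOL
    if current is Hvac.HEAT and temp_c < lower_c + band_c:
        return Hvac.HEAT
    return Hvac.OFF


print(hvac_command(25.0, Hvac.OFF))
```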

An IoT-based intelligent LED lighting control system was also developed for greenhouses [30]. The system used sensors to measure humidity, temperature, UV light, and soil moisture. These data, paired with a neural network-based controller, helped optimize LED lighting based on daylight conditions, promoting better plant growth and crop yield.
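The system in [30] uses a neural network-based controller; as a much simpler point of comparison, the Python sketch below shows a rule that tops up daylight with LED output. It assumes a light-level reading in PPFD units, which is not among the sensors listed for [30], and the target level and fixture maximum are hypothetical.

```python
def led_duty_cycle(daylight_ppfd: float,
                   target_ppfd: float = 400.0,
                   led_max_ppfd: float = 300.0) -> float:
    # Supply only the shortfall between measured daylight and the target,
    # expressed as a fraction of the fixtures' maximum output (0.0 to 1.0).
    # PPFD values are in umol m^-2 s^-1; all defaults are placeholders.
    shortfall = max(0.0, target_ppfd - daylight_ppfd)
    return min(1.0, shortfall / led_max_ppfd)


for daylight in (0.0, 250.0, 500.0):
    print(daylight, led_duty_cycle(daylight))
```

A learned controller such as the one in [30] can account for effects this rule ignores, for example how temperature and humidity interact with the plants' response to light.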

3.3. Edge Computing

Recently, edge computing has been integrated into the IoT framework to enhance the quality of data (QoD) and reduce latency [31]. The network includes multiple edge nodes, each serving as a gateway that provides services such as data capture, security monitoring and detection, prediction, and real-time decision-making support. Three primary functions are considered in edge computing implementations:

Information Processing: This function extracts raw features from the data, thereby reducing the workload on central servers.

Decision-Making: Edge computing supports real-time decision-making, enabling time-sensitive services and addressing connectivity challenges in farms by extending traditional cloud computing architecture to the network’s edge.

Wireless Structure Optimization: It reorganizes the wireless network to enhance efficiency, reduce latency, and decrease energy consumption.

Each of these functions is discussed in more detail in the subsequent sections.
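As an illustration of the information processing function listed above, the Python sketch below shows an edge node condensing a window of raw samples into a few summary features before anything is sent upstream. The feature set, the function name, and the one-hour, once-per-minute window are hypothetical choices, not taken from [31].

```python
from statistics import mean, pstdev


def extract_features(window: list[float]) -> dict[str, float]:
    # Summarize a window of raw samples on the edge node so that only these
    # few values, rather than every sample, are sent to the central server.
    return {
        "mean": mean(window),
        "min": min(window),
        "max": max(window),
        "std": pstdev(window),
        "count": float(len(window)),
    }


# Hypothetical one-hour window of soil-moisture samples taken once a minute.
raw = [20.0 + (i % 5) for i in range(60)]
features = extract_features(raw)
print(f"{len(raw)} samples -> {len(features)} features: {features}")
```

Here 60 values shrink to 5; the trade-off is that the central server can no longer recover individual samples, so the feature set must match what downstream analysis actually needs.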

3.3.1. Production Information Processing

Traditionally, all data were collected and stored on centralized servers, where all information processing occurred. For example, ref. [32] presented Monsanto’s Integrated Agricultural Systems, which collected data on soil conditions and insect threats and provided them to producers. Similarly, ref. [33] presented a cloud-based IoT data analysis framework that consolidated various sources of raw data into a central repository and performed information extraction using Big Data solutions.

In contrast, to address issues related to connectivity and sensor failures, ref. [34] proposed a fog computing framework comprising three types of devices: constrained-resource sensors for capturing raw data, fog nodes for processing data close to the sensors to minimize cloud workload, and cloud nodes for storing processed data and enabling further services. The fog nodes used deep neural network-based architectures to develop prediction models for soil moisture and manage missing data issues. Experimental results demonstrated that this framework significantly improved irrigation water savings.
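The missing-data problem that fog nodes handle in [34] can be illustrated with a much simpler technique than the deep neural networks used there. The Python sketch below fills gaps in a soil-moisture series by linear interpolation between the nearest valid readings; it is a plainly swapped-in baseline, and the function name and example values are hypothetical.

```python
from typing import Optional


def fill_gaps(series: list[Optional[float]]) -> list[float]:
    # Indices of valid readings; None marks a missing sample.
    known = [i for i, v in enumerate(series) if v is not None]
    if not known:
        raise ValueError("no valid readings to fill from")
    filled = list(series)
    for i, v in enumerate(series):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:            # leading gap: copy the first valid value
            filled[i] = series[right]
        elif right is None:         # trailing gap: copy the last valid value
            filled[i] = series[left]
        else:                       # interior gap: linear interpolation
            w = (i - left) / (right - left)
            filled[i] = series[left] + w * (series[right] - series[left])
    return filled


print(fill_gaps([None, 20.0, None, None, 26.0, None]))
```

Interpolation only uses the series' own neighbours; a learned model can additionally exploit correlated inputs such as weather, which matters when gaps are long.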

3.3.2. Decision-Making Without Cloud Server

The authors in [35] introduced a monitoring and control framework that was domain-agnostic and featured subsystems for both monitoring and control, as well as a self-sufficient computing subsystem that did not require an internet connection. This framework was designed for environments lacking internet coverage. It consisted of three main subsystems: the monitoring subsystem, the control subsystem, and the computing subsystem. These subsystems communicated internally, and the computing subsystem could optionally connect to the internet, which enhanced security by reducing the system’s exposure to the public internet.
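The framework of [35] is described here only at the subsystem level, so the following Python sketch is hypothetical: it shows a computing subsystem that makes a control decision locally, with no cloud round-trip, and buffers records for an optional uplink. The class name, the 25% moisture threshold, and the buffer size are assumptions.

```python
from collections import deque
from typing import Callable


class LocalController:
    """Hypothetical computing subsystem that decides locally and treats the
    internet uplink as optional."""

    def __init__(self, moisture_threshold: float = 25.0, max_backlog: int = 1000):
        self.moisture_threshold = moisture_threshold
        # Bounded buffer: the oldest records are dropped when it is full.
        self.backlog: deque = deque(maxlen=max_backlog)

    def on_reading(self, moisture: float) -> bool:
        # Monitoring input -> control decision, made entirely on site.
        valve_open = moisture < self.moisture_threshold
        self.backlog.append((moisture, valve_open))
        return valve_open

    def sync(self, uplink_available: bool, upload: Callable[[tuple], None]) -> int:
        # Flush buffered records only when the optional uplink exists.
        if not uplink_available:
            return 0
        sent = 0
        while self.backlog:
            upload(self.backlog.popleft())
            sent += 1
        return sent


controller = LocalController()
print(controller.on_reading(20.0), controller.on_reading(30.0))
```

Because the valve decision never waits on the network, losing connectivity only delays reporting, not control.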

3.3.3. Wireless Structure Optimization

In smart farming, IoT devices depend on the network layer, illustrated in Figure 3, to exchange information. This layer can be implemented using both wired [36] and wireless technologies [37]. While wired networks are reliable, wireless IoT sensor networks offer distinct advantages, especially in hazardous, remote, or inaccessible areas. Additionally, wireless networks significantly reduce installation costs and simplify maintenance. For instance, industrial wiring costs can reach up to USD 130,650 per meter, but adopting wireless technology can cut these costs by up to 80% [7].

Wireless communication technologies are categorized into long-range and short-range protocols. Long-range protocols such as LoRa, Sigfox, and narrowband IoT (NB-IoT) offer extensive coverage with low power consumption, making them ideal for large-scale agricultural applications [38]. In contrast, short-range protocols like WiFi and ZigBee support high-speed data transmission over shorter distances, making them suitable for localized smart farming systems. Reliable connectivity is essential, particularly in challenging environmental conditions, ensuring real-time data transmission for precision agriculture. Wireless communication protocols also handle data encoding, packet routing, and device addressing, serving as the backbone of IoT systems. Comparative studies have extensively analyzed the advantages and trade-offs of these long- and short-range protocols [17,38,39], as summarized in Table 1.

Moreover, when integrated with edge computing nodes, wireless networks enable dynamic structures that improve data transmission efficiency, reduce latency, and conserve energy.

To address the limitations of battery life and resource constraints in sensor nodes, energy-efficient strategies are critical. The authors in [40] recently proposed a dynamic three-level tree topology protocol based on edge computing. This protocol uses a base station to locally process collected data and determine the network topology. The base station evaluates sensor nodes based on energy levels, signal-to-noise ratios, and proximity, grouping sensors into clusters and selecting cluster heads to optimize data transmission. This setup allows efficient data collection and single-hop internet connectivity, providing users with detailed soil information for analysis.
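The cluster-head selection step described above can be sketched as a weighted score over the three criteria, followed by assigning each remaining sensor to its nearest head (base station, cluster heads, members: three levels). The weighting used in [40] is not given here, so the weights, normalization constants, node data, and function names in this Python sketch are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    energy: float            # remaining battery, normalized to 0..1
    snr_db: float            # link signal-to-noise ratio
    pos: tuple[float, float]  # (x, y) position in metres


def score(node: Node, base: tuple[float, float],
          w_energy: float = 0.5, w_snr: float = 0.3, w_dist: float = 0.2,
          snr_ref: float = 30.0, dist_ref: float = 500.0) -> float:
    # Higher energy, higher SNR, and shorter distance to the base station
    # all raise the score; each term is normalized to roughly 0..1.
    d = math.dist(node.pos, base)
    return (w_energy * node.energy
            + w_snr * min(node.snr_db / snr_ref, 1.0)
            + w_dist * max(0.0, 1.0 - d / dist_ref))


def form_clusters(nodes: list[Node], base: tuple[float, float],
                  n_heads: int) -> dict[str, list[str]]:
    # Level 2: the best-scoring nodes become cluster heads.
    heads = sorted(nodes, key=lambda n: score(n, base), reverse=True)[:n_heads]
    head_names = {h.name for h in heads}
    clusters: dict[str, list[str]] = {h.name: [] for h in heads}
    # Level 3: every other node joins its geographically nearest head.
    for n in nodes:
        if n.name in head_names:
            continue
        nearest = min(heads, key=lambda h: math.dist(n.pos, h.pos))
        clusters[nearest.name].append(n.name)
    return clusters


nodes = [
    Node("A", 0.9, 25.0, (100.0, 0.0)),
    Node("B", 0.2, 28.0, (50.0, 50.0)),
    Node("C", 0.8, 20.0, (300.0, 300.0)),
    Node("D", 0.3, 10.0, (120.0, 10.0)),
    Node("E", 0.5, 15.0, (280.0, 320.0)),
]
print(form_clusters(nodes, base=(0.0, 0.0), n_heads=2))
```

Re-running the selection as batteries drain rotates the cluster-head role, which is what makes such a topology dynamic rather than fixed.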

Despite the widespread adoption of wireless IoT frameworks in smart farming [41], challenges such as operational costs, technical constraints, and data management complexities remain. Addressing these requires balancing data latency with power consumption, ensuring scalable storage and processing, and maintaining data interoperability with diverse cloud systems. Promising directions for overcoming these challenges include leveraging edge nodes and integrating smart farm UAVs, discussed in Section 4; both are active topics in the research community.

3.4. Summary

This section emphasizes that IoT technology plays a pivotal role in smart farming by facilitating remote monitoring and the automation of critical agricultural processes. The key applications of IoT in smart farming include the following:

Soil monitoring to track moisture, temperature, and nutrient levels, optimizing irrigation and fertilization.

Crop management for monitoring crop health, growth stages, and environmental conditions, providing real-time data for precision agriculture.

Weather monitoring that offers continuous data on weather conditions, aiding in decisions related to planting, harvesting, and crop protection.

Automated machinery where IoT-connected devices enable autonomous operations, boosting efficiency and lowering labor costs.

However, many current IoT products lack integrated security mechanisms, leaving them vulnerable to unauthorized UAV intrusions. Additionally, while edge computing nodes significantly improve the speed and efficiency of IoT applications by processing data locally, they also enlarge the attack surface available to unauthorized UAVs. These UAV-related threats are discussed in Section 5.

4. UAV on Smart Farming

UAVs have become another essential part of smart farming, offering advanced capabilities for monitoring, managing, and optimizing agricultural operations. UAVs help farmers enhance productivity, reduce costs, and adopt more sustainable farming practices. Over the past few decades, satellite imagery has effectively addressed agricultural challenges such as land-cover and crop-type classification [42]. However, recent smart farming research, including the monitoring of soil fertility loss, has revealed the limitations of satellite imaging due to its resolution constraints [43]. In response, modern agricultural practices are increasingly turning to automated robots to enhance operational efficiency. UAVs and UGVs (Unmanned Ground Vehicles) offer cost-effective and efficient solutions for various tasks. Additionally, UAVs integrated into IoT networks facilitate the collection and analysis of data from IoT sensors, improving decision-making and operational performance. Recently, a novel application of wireless charging has been proposed in which UAVs charge underground IoT devices. This advancement further highlights the growing interest in, and potential of, UAV technologies in smart farming research.

The content of this section is summarized in Figure 4, which illustrates the various applications of UAV technology in smart farming, highlighting its role in improving agricultural operations through precise monitoring, data collection, automation and IoT support. In detail, the first part delves into the use of UAVs as monitoring tools, highlighting their capacity to evaluate crop conditions and generate actionable insights through advanced sensing technologies, with key applications in water stress estimation, weed detection, and disease prediction.

The second part addresses UAV-based yield estimation, showcasing their ability to acquire high-resolution imagery and derive spectral indices for predicting crop yields at various growth stages, offering greater efficiency, accuracy, and cost-effectiveness than traditional methods. The discussion then shifts to precision spraying, where UAVs are demonstrated to improve seeding, fertilization, and pesticide application through adaptive flight path optimization and versatile operational capabilities. Furthermore, UAV-supported harvesting is explored as a transformative innovation in agricultural automation, significantly boosting efficiency, minimizing labor dependency, and maximizing yield through advanced crop identification, strategic harvesting planning, and precision cutting techniques. Finally, the section explores UAV-supported IoT systems, emphasizing their integration with IoT technologies to enhance data collection and communication efficiency in modern agriculture.

4.1. UAVs for Monitoring

Unlike IoT-based monitoring systems, UAVs are not typically used for direct measurement of plant environmental conditions. Instead, they focus on assessing crop status and deriving insights into plants’ needs and environmental factors through crop data analysis. Popular UAV applications include water stress estimation, weed detection, and disease prediction, all of which can be implemented with sensing technologies such as optical, multispectral, hyperspectral, thermal, and fluorescence sensors. With these sensors, UAVs offer significantly finer resolution in detecting plant conditions than satellite systems [43]. They also provide flexible data collection by operating at different altitudes, producing detailed imagery for identifying early signs of problems such as plant stress, weed infestations, or disease outbreaks. This ability supports timely, targeted interventions that enhance crop management. Additionally, UAVs contribute to creating forecasting models applicable beyond individual fields, offering insights into regional and even global agricultural trends.

The following subsections explore the sensing technologies used in these UAV monitoring applications in greater detail.

4.1.1. Water Stress Estimation

Numerous studies have utilized UAV-mounted sensors to assess water stress in crops, leveraging advanced imaging and sensing technologies. For instance, ref. [44] investigated the use of UAV RGB imagery for evaluating drought stress in wheat. A Sony NEX7 camera (Sony Corporation, Chonburi, Thailand) mounted on a DJI-S1000 (SZ DJI Technology Co., Ltd., Shenzhen, China) UAV captured imagery, which was analyzed using the normalized excess green index to segment wheat pixels from the soil. From this segmentation, 21 features were extracted, including statistical and spectral indices, which were then processed through classification models to differentiate between wet and dry wheat.
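The excess green index is commonly computed from normalized chromatic coordinates as ExG = 2g − r − b, where r, g, and b are each channel divided by R + G + B. The sketch below assumes that formulation and a fixed threshold of 0.1 (a hypothetical value; Otsu thresholding is a common alternative) to produce a vegetation mask. It covers only the segmentation step, not the 21-feature extraction or classification used in [44], and the function names are illustrative.

```python
def excess_green(pixel):
    """ExG = 2g - r - b on normalized chromatic coordinates (one common formulation)."""
    red, green, blue = pixel
    total = red + green + blue
    if total == 0:          # black pixel: no chromatic information
        return 0.0
    r, g, b = red / total, green / total, blue / total
    return 2 * g - r - b


def vegetation_mask(image, threshold=0.1):
    """Return a boolean mask: True where ExG exceeds the (hypothetical) threshold."""
    return [[excess_green(px) > threshold for px in row] for row in image]


if __name__ == "__main__":
    tile = [[(60, 150, 40), (120, 100, 80)],   # leaf-like pixel, soil-like pixel
            [(0, 0, 0), (90, 180, 70)]]
    print(vegetation_mask(tile))  # [[True, False], [False, True]]
```

Normalizing by total brightness makes the index less sensitive to illumination changes between flights than raw green values would be.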

Similarly, ref. [45] explored thermal imaging to detect water stress in sorghum using two thermal sensors. The study correlated sensor-derived surface temperature values with phenotypic parameters such as leaf area index, crop height, and soil moisture. Additionally, it examined how UAV flight altitudes influenced sensor accuracy and data interpretation.

In another study, ref. [46] employed the DJI M-300 (SZ DJI Technology Co., Ltd., Shenzhen, China) equipped with a MicaSense Altum camera and downwelling light sensor to capture optical and thermal imagery of smallholder maize fields. The data were analyzed to predict maize temperature and stomatal conductance, offering insights into crop water stress and moisture levels across different growth stages. These findings demonstrate the potential of UAV-mounted sensors in advancing water stress monitoring and irrigation management in agriculture.

4.1.2. Weed Detection

Beyond monitoring water stress, UAVs have proven highly effective in the early detection of weeds, facilitating targeted and efficient weed management. A major breakthrough in this area is the creation of high-resolution RGB image datasets collected by UAV-mounted optical cameras [47]. These datasets have played a crucial role in training and evaluating advanced computer vision models, enabling precise identification of weeds at various growth stages, even when mixed with crops. By harnessing these cutting-edge technologies, farmers can implement precision weed management strategies that minimize herbicide usage, promote sustainable farming practices, and support real-time decision-making, ultimately improving crop yield and quality.

For real-time applications, ref. [48] introduced a weed detection model based on the YOLOv7-tiny object detection architecture, capable of distinguishing weeds from crops in aerial imagery. Optimized for deployment on resource-constrained edge devices, the model was tested on a Jetson AGX Xavier (Nvidia Corporation, Santa Clara, CA, USA) equipped with optical cameras capturing 720p video at 30 FPS. This system can be mounted on ground robots or UAVs, enabling site-specific weed management with low-latency decision-making and providing real-time, adaptive solutions for agricultural fields.
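A 720p stream at 30 FPS leaves roughly 1000/30 ≈ 33.3 ms to process each frame if no frames are to be dropped. The small Python check below expresses that budget, assuming frames are processed one at a time without pipelining; the latency values in the usage are placeholders, not measurements reported in [48].

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def sustainable_fps(latency_ms, camera_fps=30.0):
    """Frames per second actually processed when each frame takes latency_ms (serial processing)."""
    return min(camera_fps, 1000.0 / latency_ms)


if __name__ == "__main__":
    print(round(frame_budget_ms(30), 1))        # 33.3
    print(sustainable_fps(25.0))                # 30.0 (keeps up with the camera)
    print(round(sustainable_fps(50.0), 1))      # 20.0 (a third of frames dropped)
```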

4.1.3. Disease Prediction

UAVs play a vital role in disease prediction by capturing high-resolution imagery, enabling early and precise detection of plant health issues for timely agricultural interventions. For instance, UAV-based solutions have been employed to detect and classify sheath blight (ShB) in rice cultivation, a major global concern. A study utilized a Phantom 2 Vision+ (SZ DJI Technology Co., Ltd., Shenzhen, China) UAV equipped with a digital camera and a MicaSense RedEdge™ multispectral camera (MicaSense, Inc., Seattle, WA, USA) to collect RGB and multispectral imagery. Although the RGB and multispectral images were of limited use for quantifying ShB severity at early stages, the Normalized Difference Vegetation Index (NDVI) derived from the multispectral imagery proved more effective in distinguishing infection levels. The research suggested that hyperspectral sensors could enhance early-stage detection of ShB [49].
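NDVI is defined as (NIR − Red)/(NIR + Red), computed per pixel from near-infrared and red reflectance. A minimal sketch, assuming the two bands are already calibrated to reflectance and supplied as flat lists of pixel values for one plot; the function names and sample values are illustrative.

```python
import math


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red); returns NaN when both reflectances are zero."""
    denom = nir + red
    if denom == 0:
        return math.nan
    return (nir - red) / denom


def plot_mean_ndvi(nir_band, red_band):
    """Mean NDVI over the valid pixels of one plot (bands given as equal-length lists)."""
    values = [ndvi(n, r) for n, r in zip(nir_band, red_band)]
    valid = [v for v in values if not math.isnan(v)]
    return sum(valid) / len(valid) if valid else math.nan


if __name__ == "__main__":
    # Hypothetical reflectances: dense canopy vs. sparser, stressed canopy
    print(round(plot_mean_ndvi([0.50, 0.48], [0.05, 0.06]), 3))  # 0.798
    print(round(plot_mean_ndvi([0.30, 0.28], [0.12, 0.14]), 3))  # 0.381
```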

Similarly, hyperspectral imaging has shown significant promise in detecting diseases at earlier stages. In [50], a hyperspectral sensor mounted on a six-rotor DJI Innovations UAV was used to monitor wheat for yellow rust. The sensor, covering a spectral range of 450–950 nm with a 4 nm resolution, captured hyperspectral imagery that was analyzed to develop monitoring models using vegetation indices (VIs) and texture features (TFs). The combined VI-TF model achieved the highest accuracy across all infection stages, with particularly strong performance during late infection stages. These findings underscore the potential of advanced UAV-mounted sensors to enhance disease monitoring and management in smart farming.

These discussions on water stress estimation, weed detection, and disease prediction using UAVs highlight the increasing significance of UAV-based sensing technologies in smart farming. By offering precise and early detection capabilities, these technologies enhance crop management practices and contribute to improved yield outcomes.

4.2. UAV for Yield Estimation

Traditional yield estimation methods, which rely on manual field surveys, are time-consuming, labor-intensive, and susceptible to human error, making them inefficient for large-scale or rapidly changing agricultural environments. In contrast, UAV-based crop yield estimation is transforming agriculture by offering high-resolution, timely, and flexible data acquisition while remaining cost-effective. It enables continuous monitoring across various growth stages and enhances accuracy through advanced analytics, providing deeper insights into crop performance.

The most common applications of UAV-based yield prediction rely on RGB cameras and multispectral sensors. For instance, in [51], yield prediction was assessed using spectral vegetation indices (SVIs) derived from RGB photos and near-infrared images captured by UAVs at various crop growth stages, including heading, anthesis, milk, and dough stages. They used different UAV platforms in their experiments, including the fixed-wing SwingletCam and the multicopter Hexa Y, equipped with RGB and modified near-infrared cameras. Their findings indicated that the Green Normalized Difference Vegetation Index (GNDVI) was significantly correlated with grain yield when recorded at booting and anthesis stages, showing better yield prediction accuracy compared to the Normalized Difference Vegetation Index (NDVI) recorded at booting.
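GNDVI replaces the red band in NDVI with the green band: (NIR − Green)/(NIR + Green). The sketch below computes GNDVI and then fits an ordinary least-squares line and a Pearson correlation between per-plot index values and yield, which is one simple way to quantify an index-yield relationship of the kind reported in [51]. The plot values are synthetic and the helper names are hypothetical; the cited study's statistical procedure may differ.

```python
import math


def gndvi(nir, green):
    """GNDVI = (NIR - Green) / (NIR + Green)."""
    return (nir - green) / (nir + green)


def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Synthetic per-plot reflectances (NIR, Green) at one growth stage and yields (t/ha)
    plots = [(0.40, 0.10), (0.45, 0.09), (0.50, 0.08), (0.42, 0.11)]
    yields = [5.1, 6.0, 6.9, 5.0]
    index = [gndvi(nir, green) for nir, green in plots]
    slope, intercept = fit_line(index, yields)
    print(f"r = {pearson_r(index, yields):.2f}, yield = {slope:.1f} * GNDVI + {intercept:.1f}")
```

In practice the regression would be fitted separately for each growth stage, since the cited work found the index-yield relationship to depend on when the imagery was recorded.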

Similarly, ref. [52] explored various vegetation indices (VIs) derived from UAV-captured RGB and multispectral imagery for corn yield prediction during different growth stages. Their study utilized a DJI Phantom 3 Pro (SZ DJI Technology Co., Ltd., Shenzhen, China) UAV equipped with a multispectral camera, capturing images across multiple spectral bands, including RGB, green, red, red edge, and near-infrared (NIR). The research identified that specific spectral bands and VIs, particularly those related to red edge and chlorophyll content, showed moderate to good correlation with corn yield at key growth stages such as V6 (vegetative stage) and R5 (reproductive stage).

These studies underscore the potential of UAV-based remote sensing in providing timely and accurate crop yield predictions at different stages, which are vital for optimizing agricultural practices and decision-making.

4.3. Precision Spraying

For control applications, UAVs have been increasingly used in various aspects of planting management, including seeding, fertilization, and pesticide application. Research and development in this area have primarily focused on two key aspects: the design of UAVs and sprayers, and the optimization of flight paths to ensure efficient and precise application.

The authors of [53] designed a malfunction-controlled quadcopter drone specifically for carrying and spraying liquid pesticides and fertilizers. The drone was equipped with WiFi and GPS modules connected to an Arduino Mega board, allowing for manual control via a developed Android app interfaced with the board. This setup provided farmers with a simple yet effective tool for managing pesticide and fertilizer applications.

Another significant development was presented in [54], where an adaptive UAV control system was designed to autonomously adjust its flight path based on real-time weather conditions. This system, implemented on a Raspberry Pi Model B SBC, consisted of two subsystems: one for monitoring crop field weather conditions and updating the UAV’s control parameters, and another for optimizing these parameters to adjust the UAV’s flight path. The system’s ability to make real-time adjustments allowed for more precise pesticide deposition on target fields, reducing environmental impact and improving application efficiency.

Additionally, a multifunctional unmanned aerial vehicle (mUAV) was developed in [55] for rice planting management, encompassing seeding, fertilization, and pesticide application. This mUAV featured an intelligent operation platform with three main components: a flight control platform, a spreading system for sowing and fertilization, and a spraying system for pesticide applications. The systems were designed with a quick-release buckle, allowing the UAV to switch between different agricultural tasks seamlessly. A comparative study conducted throughout the rice cultivation process demonstrated that the mUAV was more efficient in sowing seeds compared to traditional mechanical direct seeding and transplanting methods.

These advancements highlight the potential of UAVs to revolutionize planting management practices, offering precision, efficiency, and adaptability that traditional methods cannot match.

4.4. UAV-Aided Crop Harvesting

Crop harvesting is a labor-intensive and time-consuming process, with manual picking employing over 60% of the agricultural workforce [56]. Moreover, growing labor shortages are expected to drive up harvesting costs significantly in the near future. To address these challenges, researchers have explored automated picking solutions, with machine vision-based picking robots being widely studied due to their low hardware costs and rich visual information. However, most existing picking robots rely on ground-based mobile platforms, limiting their effectiveness in harvesting high-altitude crops such as tree-grown fruits in inter-mountain orchards. As a promising alternative, UAVs have been introduced as mobile carriers for harvesting end-effectors, offering high efficiency and flexibility in agricultural harvesting [57]. Current research on UAV-assisted harvesting primarily focuses on three key areas: UAV-aided harvesting planning, crop identification and positioning using UAV imagery, and UAV-based picking mechanisms.

One major challenge in precision harvesting is determining the optimal picking time, especially for crops with short harvesting windows, such as strawberries. Strawberry growers must frequently monitor growth conditions, as mature strawberries remain harvestable for only 1–3 days, requiring batch harvesting within large-scale farmland. To support efficient harvesting, ref. [58] proposed a UAV-based system for formulating harvesting plans. A lightweight deep learning model based on YOLOv8n was developed to accurately identify strawberries at various growth stages using UAV imagery. A region segmentation algorithm leveraging environmental stability was introduced to isolate strawberry plant regions, while an edge-extraction-based plant counting method was implemented to estimate the number of plants in a given area. Using these techniques, a growth information map was generated to provide a visual representation of growth distribution across fields, assisting farmers in making informed harvesting decisions.
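Conceptually, a growth information map aggregates per-detection growth stages over space. The following sketch, assuming detections arrive as (x, y, stage) tuples in field coordinates, bins them into square grid cells and reports the fraction of ripe fruit per cell. The cell size, stage labels, and function names are hypothetical and do not reproduce the region segmentation or plant counting methods of [58].

```python
from collections import Counter, defaultdict


def growth_map(detections, cell_size_m):
    """Count detections per growth stage in each square grid cell.

    detections: iterable of (x_m, y_m, stage) tuples in field coordinates (assumed format).
    """
    cells = defaultdict(Counter)
    for x, y, stage in detections:
        cell = (int(x // cell_size_m), int(y // cell_size_m))
        cells[cell][stage] += 1
    return cells


def ripe_fraction(cells, ripe_label="ripe"):
    """Share of detections in each cell labelled as ripe (hypothetical label)."""
    return {cell: counts[ripe_label] / sum(counts.values()) for cell, counts in cells.items()}


if __name__ == "__main__":
    dets = [(1.0, 1.0, "ripe"), (2.5, 3.0, "unripe"), (12.0, 1.0, "ripe"), (14.0, 4.0, "ripe")]
    print(ripe_fraction(growth_map(dets, cell_size_m=10.0)))  # {(0, 0): 0.5, (1, 0): 1.0}
```

Cells with a high ripe fraction would then be prioritized in the harvesting plan.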

In large-scale apple orchards, precise apple detection is crucial for yield estimation and harvesting automation. Deep learning detectors such as YOLOv7 achieve high accuracy; however, occlusion, wires, branches, and overlapping fruit still hinder accurate detection. To address these limitations, ref. [59] introduced an improved apple detection approach using UAV imagery and a multi-head attention-enhanced YOLOv7 model. This method significantly improved object detection performance against complex backgrounds by enhancing depth estimation, enabling precise data collection on apple distribution and density. The proposed approach facilitates agricultural automation by improving yield estimation accuracy and crop health assessment for better harvesting strategies.

Harvesting fruit from high-altitude trees, such as coconut and palm trees, presents another major challenge, particularly in hilly and mountainous regions. Additionally, the shortage of skilled labor exacerbates the issue, as fruit-picking jobs are often considered undesirable despite high wages. To address this, ref. [60] proposed a UAV-based fruit harvesting system equipped with a vision system and a slider-crank mechanism for cutting. The quadcopter UAV, powered by brushless DC (BLDC) motors, is capable of lifting payloads of up to 1 kg. The cutting mechanism operates using a DC motor-driven stainless steel (SS 304) cutting tool connected to a reciprocating slider link. A camera integrated into the UAV provides real-time fruit identification, enabling manual cutting via remote control. This UAV-assisted system enhances harvesting efficiency and accessibility in challenging terrains.
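For an in-line slider-crank with crank radius r and connecting-rod length l, the slider position measured from the crank axis is x(θ) = r cos θ + √(l² − r² sin² θ), so the cutting tool reciprocates with a stroke of 2r once per crank revolution. The sketch below evaluates this standard kinematic relation, assuming the DC motor drives the crank directly; the dimensions and motor speed in the usage are hypothetical, as the link lengths used in [60] are not given here.

```python
import math


def slider_position(crank_r, rod_l, theta_rad):
    """Distance from the crank axis to the slider pin for an in-line slider-crank."""
    if rod_l <= crank_r:
        raise ValueError("connecting rod must be longer than the crank")
    return crank_r * math.cos(theta_rad) + math.sqrt(rod_l ** 2 - (crank_r * math.sin(theta_rad)) ** 2)


def cutting_strokes_per_second(motor_rpm):
    """One forward-and-back cutting stroke per crank revolution (direct drive assumed)."""
    return motor_rpm / 60.0


if __name__ == "__main__":
    r, l = 0.02, 0.08   # hypothetical 20 mm crank, 80 mm rod
    travel = slider_position(r, l, 0.0) - slider_position(r, l, math.pi)
    print(round(travel, 4))                    # 0.04 -> stroke equals 2r
    print(cutting_strokes_per_second(300))     # 5.0
```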

Beyond fruit harvesting, UAVs also offer innovative solutions for honey collection, a traditionally labor-intensive and hazardous process. Extracting honey from high-altitude locations, such as hills and tall trees, poses significant risks to human workers. To mitigate these challenges, ref. [61] proposed an autonomous honey-harvesting drone equipped with a wireless cutting tool and a honey collection unit. The drone features a high-speed motor-driven cutting mechanism controlled via an Arduino-based RF transmitter–receiver system with a range of up to 3 km. By remotely operating the cutting tool and honey collection system, the proposed UAV-based solution ensures safe and efficient honey harvesting, reducing the risks associated with manual extraction.

In summary, UAVs are revolutionizing agricultural automation by tackling critical challenges in crop harvesting. Advancements in deep learning-based crop identification, UAV-assisted harvesting planning, and precision cutting mechanisms have demonstrated the potential of UAVs to enhance efficiency, minimize labor dependence, and optimize yield management. However, research in this field is still in its early stages, and the development of a fully integrated smart picking UAV system remains a long-term goal, requiring further innovation in autonomous control, sensor fusion, and real-time decision-making.

4.5. UAV-Supported IoT

The integration of UAVs with IoT systems is a rapidly growing area of research, especially in the context of smart farming. This integrated UAV-IoT system is crucial for enhancing data collection and communication, particularly in large-scale monitoring and remote sensing applications where traditional infrastructure might be costly. In this system, UAVs serve multiple roles: they can relay data between sensors and edge nodes, between edge nodes and cloud servers, or directly between sensors and cloud servers, making them versatile tools in smart agriculture.

For instance, ref. [62] presented a cost-effective platform for environmental monitoring on farms using UAV-integrated IoT technology. This platform was especially useful in areas lacking network deployment, where the installation of a LoRa gateway could have been prohibitively expensive. The platform included underground sensors for soil moisture and an aboveground weather station for measuring temperature, humidity, rain, and solar radiation. A UAV equipped with a LoRa module collected and transmitted data to the cloud server every 12 h, thereby reducing the reliance on continuous network infrastructure.

In another study, ref. [63] discussed a crop monitoring system that leveraged collaboration between UAVs and federated wireless sensor networks (WSNs) through a hierarchical structure. Sensor data were processed at the ground level by cluster head nodes, which detected sensor faults and extracted relevant information using edge computing algorithms. UAVs were then deployed to collect these compressed data from the cluster head nodes and upload them to a cloud server for further analysis and decision-making. The study also proposed an algorithm to optimize UAV trajectories for efficient data collection and resource utilization.
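The trajectory optimization algorithm of [63] is not reproduced here. As a simpler stand-in, the following nearest-neighbor heuristic builds a closed UAV tour from a launch point through all cluster heads; it illustrates the problem structure (a traveling-salesman-style route) but is generally not optimal. Coordinates and node names are hypothetical.

```python
import math


def nearest_neighbor_tour(base, heads):
    """Greedy closed tour: base -> nearest unvisited head -> ... -> base.

    base: (x, y); heads: dict mapping head name to (x, y). Returns (order, length).
    """
    remaining = dict(heads)
    order, length, current = [], 0.0, base
    while remaining:
        name = min(remaining, key=lambda k: math.dist(current, remaining[k]))
        length += math.dist(current, remaining[name])
        current = remaining.pop(name)
        order.append(name)
    length += math.dist(current, base)  # return to the launch point
    return order, length


if __name__ == "__main__":
    heads = {"H1": (0, 40), "H2": (100, 100), "H3": (100, 0)}
    order, length = nearest_neighbor_tour((0, 0), heads)
    print(order, round(length, 1))  # ['H1', 'H3', 'H2'] 389.1
```

A greedy tour is cheap to compute on board; optimized planners additionally account for hover time at each head, battery limits, and data volume.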

The authors of [64] introduced a fog computing-based smart farming framework where UAVs gathered data from IoT sensors and offloaded them to fog sites or edge nodes. In this framework, fog brokers were used to manage resources and control UAVs, enabling efficient and secure data collection even in latency-sensitive applications. The simulation results from this study showed that the proposed framework enhanced data collection rates, energy efficiency, and security.

Moreover, UAVs can also function as mobile charging stations for IoT devices, addressing the limited energy availability of these devices; this remains a relatively new research topic. In [65], a UAV-based system was proposed for wirelessly charging underground IoT (UIoT) devices used for soil monitoring. These UIoT nodes, which did not require batteries or aboveground attachments, harvested radio energy from the UAV and transmitted data back using the ZigBee protocol. The collected data were then uploaded to a cloud server for soil quality analysis, ensuring continuous and sustainable field operations.

Overall, the integration of UAVs with IoT systems offers significant benefits in smart farming by enabling more efficient, reliable, and scalable monitoring and management solutions.

4.6. Summary

This section emphasizes the crucial role that UAV technology plays in advancing smart farming by offering enhanced monitoring, operational efficiency, and integration with IoT-based systems. Key UAV applications in smart farming include crop monitoring, soil and field analysis, yield estimation, precision spraying, harvesting, and supporting IoT networks. A summary of these applications is provided in Table 2.

However, despite these benefits, unauthorized UAVs pose significant security threats by exploiting the same technologies that improve farming practices, which highlights the need for advanced security solutions to protect the integrity and functionality of UAV systems in smart farming. The next section discusses these unauthorized UAV threats to smart farms in more detail.

5. UAV Threats on Smart Farming

The integration of state-of-the-art IoT and UAV technologies in smart farming, as discussed in Section 3 and Section 4, has revolutionized agricultural practices by enhancing efficiency, optimizing resource management, and reducing labor costs. However, while these advancements make farms “smart”, they also introduce new security vulnerabilities that traditional farming does not face. Despite the growing adoption of IoT- and UAV-based solutions, it is observed that many studies fail to consider the associated risks and security measures in their designs. The interconnected nature of these technologies increases the attack surface, making smart farms more susceptible to cyber and physical threats.

At the same time, the global rise in UAV adoption and technological sophistication has escalated security concerns across multiple sectors, including agriculture. While commercially available UAVs are often compact with limited payload capacity and restricted travel distances, their agility and covert nature make them well suited for targeted attacks. Unauthorized UAVs pose a significant threat to smart farms by exploiting vulnerabilities in critical infrastructure such as IoT gateways, edge computing nodes, farm UAVs, etc. These attack vectors create opportunities for malicious actors to disrupt operations, steal sensitive data, or interfere with automated farming processes. In the following section, we examine the security threats of unauthorized UAVs to smart farming, discussing both farm-specific threats and broader smart agricultural security concerns.

5.1. Physical Attacks

UAVs present a substantial threat when weaponized for direct physical attacks on agricultural assets, smart farming systems, and individuals. These devices can be exploited by malicious actors, including terrorist groups or individuals, to deliver explosives, radioactive materials, or firearms, enabling precise and potentially devastating assaults on people and critical infrastructure [66]. Within the realm of smart farming, such UAVs could target crops, livestock, essential IoT nodes, or other farm UAVs, causing widespread damage, disrupting operations, and endangering both safety and security across the agricultural ecosystem.

Moreover, unauthorized UAVs can harm farm animals and plants, potentially causing environmental damage. For instance, drones flying over farms with large numbers of animals may collide with vulnerable animals. Unauthorized UAVs can also deliver hazardous materials to a smart farm, targeting plants or animals; for example, competitors might use UAVs to spray disease-carrying liquids on specific crops to reduce the target farm’s production.

Furthermore, unauthorized UAVs can be used to attack smart farm devices, especially essential network nodes. If an attacker identifies these key nodes, they could use a UAV to spray corrosive liquids on the nodes or their antennas, effectively disabling the entire network. In another scenario, if attackers know the behavior patterns of smart farm spraying UAVs, they could deploy a small, inexpensive UAV to collide with them. This not only interrupts the farm UAVs’ missions but can also cause environmental damage from spilled fertilizers or pesticides, leading to additional cleanup costs.

5.2. Information Leakage

Smart farms leverage a diverse array of sensors, devices, and automated systems to collect vast amounts of complex, dynamic, and spatial data. These data are critical for optimizing agricultural processes, enhancing productivity, and ensuring efficient resource utilization. However, leakage of such information poses significant security and economic risks, potentially undermining the integrity of smart farming operations.

One major threat arises from the unauthorized collection of soil and crop data. UAVs equipped with RGB, thermal, or multispectral cameras can be deployed by competitors or malicious actors to remotely gather crucial agricultural insights [13]. By analyzing soil fertility levels, crop health indicators, and yield predictions, adversaries can infer a farm’s strategic plans, such as crop rotation schedules, fertilization patterns, and harvesting timelines. This intelligence enables competitors to gain an unfair market advantage, manipulate commodity prices, or even preemptively adjust their own agricultural practices to outcompete targeted farmers. Furthermore, exposure of proprietary farm data compromises business confidentiality, disrupting strategic decision-making and reducing the competitiveness of affected farms.

Beyond the risk of soil and crop information leakage, the exposure of networked sensors and devices presents additional security vulnerabilities. Attackers gaining access to device metadata can exploit this information to bypass security measures or identify key network nodes, laying the groundwork for future cyber or physical attacks [67]. For instance, knowledge of sensor deployment locations, along with real-time tracking of movable devices such as autonomous tractors or UAVs, can lead to targeted attacks, device theft, or operational sabotage. The ability to predict movement patterns of farm machinery or UAVs increases the risk of interception or hijacking, further exacerbating security concerns.

A particularly insidious method of information leakage involves UAV-based network reconnaissance. Small UAVs, such as a DJI Phantom, equipped with WiFi Pineapples or similar radio transmission penetration platforms, can be flown over a smart farm at different times to capture network traffic data. These rogue UAVs can intercept and log critical wireless communication footprints, including MAC addresses, received signal strength indicators (RSSIs), frequency bands in use, and device manufacturer details. With this intelligence, attackers can map the network architecture, pinpoint the locations of key devices, and track the behavioral patterns of farm UAVs. Such reconnaissance not only facilitates targeted cyberattacks, such as man-in-the-middle attacks or signal jamming, but also introduces severe privacy risks, as attackers can use the collected data to reconstruct the farm’s operational routines and vulnerabilities.

In summary, unauthorized UAVs can be used to facilitate information leakage in smart farming, posing a multifaceted threat that goes beyond simple data breaches. This risk carries significant economic, operational, and security consequences, potentially compromising farm productivity, market competitiveness, and overall data integrity.

5.3. RF Signal Jamming

As reviewed in Section 3 and Section 4, RF-based wireless communication technologies, such as wireless sensor networks and WiFi-enabled UAVs, are widely used in smart farms. An effective way to disrupt smart farming services is to interfere with these RF signals. RF signal jamming refers to deliberately transmitting an interference signal, typically at a higher power level, to prevent the receiver from tracking and obtaining the signal of interest [68], thereby degrading the smart farm’s performance. We consider three implementations: signal propagation jamming, protocol-based jamming, and Global Navigation Satellite System (GNSS) signal jamming. RF jamming is a classical attack on wireless networks; UAVs can make it more efficient and covert by targeting key network nodes at close range.

5.3.1. Signal Propagation Jamming

RF signal propagation jamming is a disruptive technique used to degrade, interfere with, or completely interrupt communication between field devices in smart farms. The core idea is to inject strong interference signals into the communication spectrum to reduce the signal-to-interference-plus-noise ratio (SINR) at the target receiver, making it harder to receive information sent by remote devices such as smart farm UAVs, UAV controllers, sensors, or gateways. If the network topology is known to an attacker, key network nodes, for instance gateways or edge computing nodes, can be easily identified and located; a small UAV equipped with an RF signal generator can then be flown close to those nodes and jam them with low transmit power.

Common RF interference waveforms include wideband noise, tone interference, and frequency-sweeping signals [68]. Wideband noise jamming disrupts communications by transmitting a powerful noise signal across a broad frequency range. Unlike narrowband jamming, which interferes with specific frequencies, wideband jamming affects multiple channels simultaneously, making it significantly harder for legitimate signals to be transmitted or received. This interference raises the noise floor, reducing the signal-to-noise ratio (SNR) and degrading the signal. As a result, IoT networks or UAVs may experience dropped connections, decreased data rates, or even complete communication failure. Even networks using frequency hopping or spread-spectrum techniques remain susceptible, because the jamming signal spans multiple frequencies at once. The increased interference forces smart farm devices to repeatedly retransmit data, leading to network congestion, higher latency, and reduced overall efficiency. Moreover, the additional retransmissions accelerate sensor battery depletion, further compromising the reliability and longevity of the devices.
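The trade-off behind wideband noise jamming can be sketched numerically: a jammer that spreads a fixed total power uniformly over a wide band overlaps several channels at once, but each channel receives only a fraction of that power. The sketch below assumes a flat (uniform) noise spectrum and uses the 2.4 GHz IEEE 802.11 channel plan (channel k centred at 2407 + 5k MHz for k = 1 to 13) with a nominal 20 MHz channel width; the 20 dBm jammer power and the jamming bands are hypothetical.

```python
import math


def wifi_24ghz_channels(width_mhz=20.0):
    """Return {channel: (low_MHz, high_MHz)} for 2.4 GHz channels 1-13 (centre 2407 + 5k MHz)."""
    return {
        k: (2407 + 5 * k - width_mhz / 2, 2407 + 5 * k + width_mhz / 2)
        for k in range(1, 14)
    }


def jammer_power_per_channel(total_dbm, jam_low_mhz, jam_high_mhz, channels):
    """Power (dBm) that a flat-spectrum jammer places inside each channel it overlaps."""
    total_mw = 10 ** (total_dbm / 10)
    density_mw_per_mhz = total_mw / (jam_high_mhz - jam_low_mhz)
    result = {}
    for ch, (lo, hi) in channels.items():
        overlap = max(0.0, min(hi, jam_high_mhz) - max(lo, jam_low_mhz))
        if overlap > 0:
            result[ch] = 10 * math.log10(density_mw_per_mhz * overlap)
    return result


channels = wifi_24ghz_channels()
narrow = jammer_power_per_channel(20.0, 2427.0, 2447.0, channels)  # 20 MHz band on channel 6
wide = jammer_power_per_channel(20.0, 2402.0, 2482.0, channels)    # 80 MHz band over channels 1-13
print(len(wide), round(wide[1], 2), round(narrow[6], 2))  # 13 13.98 20.0
```

Under these assumptions, the wideband jammer covers all 13 channels, but each one receives about 6 dB less power than a jammer that concentrates the same 20 dBm on a single channel; this is why wideband jamming typically calls for higher total power.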

A tone interference signal utilizes a narrowband transmission at a specific frequency to disrupt legitimate communications in smart farming systems. Depending on its characteristics, it can appear as a single-tone signal or multiple tones. In smart farming environments, where UAVs and IoT-based devices depend on stable wireless connections for real-time data exchange, tone interference can cause significant operational disruptions. For example, interference at critical control frequencies may block remote commands from reaching automated machinery, affecting precision agriculture tasks such as autonomous spraying. The effectiveness of this type of attack depends on the positioning of the tones and the transmitted power.
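The spectral signature of tone interference can be shown with a short Python sketch that synthesizes a two-tone complex baseband jamming signal and inspects its discrete Fourier transform. All of the energy lands in the two chosen frequency bins, which is why the attack is effective only when the tones sit on frequencies the victim actually uses. The sample rate, tone positions and amplitude are illustrative assumptions, and a plain O(N^2) DFT is used to keep the example dependency-free.

```python
import cmath
import math


def multitone(tone_bins, n_samples, amplitude=1.0):
    """Complex baseband sum of tones; a tone at index k sits exactly on DFT bin k."""
    return [
        sum(amplitude * cmath.exp(2j * math.pi * k * t / n_samples) for k in tone_bins)
        for t in range(n_samples)
    ]


def dft_magnitude(x):
    """Normalized magnitude of the discrete Fourier transform (O(N^2) reference version)."""
    n = len(x)
    return [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * m * t / n) for t in range(n))) / n
        for m in range(n)
    ]


fs_hz = 1_000_000   # assumed sample rate
n = 64              # bin spacing = fs / n = 15.625 kHz
jammer = multitone([5, 20], n)
spectrum = dft_magnitude(jammer)
occupied = [m for m, mag in enumerate(spectrum) if mag > 0.5]
print(occupied, [m * fs_hz / n for m in occupied])  # [5, 20] [78125.0, 312500.0]
```

Every other bin is essentially empty, so a victim whose carrier lies away from 78.125 kHz and 312.5 kHz in this baseband model would be largely unaffected; shifting the tones or adding more of them is how an attacker would retarget the interference.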

Frequency-sweeping interference, or sweep jamming, disrupts smart farm communications by continuously shifting the frequency of an interference signal across the operational spectrum instead of targeting a single fixed frequency. As the jamming signal sweeps through different frequency bands, it intermittently overlaps with active communication channels, causing periodic disruptions to UAVs, IoT sensors, and automated machinery. These systems depend on stable wireless connections for real-time monitoring and control, making them particularly vulnerable to this type of interference. The impact of frequency-sweeping interference depends on the sweep rate and bandwidth. A fast sweep rate results in short but frequent disruptions across multiple channels, making it difficult for devices to maintain stable connections. In contrast, a slow sweep rate can dwell on specific frequencies long enough to block critical transmissions, such as remote commands for precision spraying or automated irrigation.
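The dependence on sweep rate can be made concrete with a simple linear (sawtooth) sweep model. If the jammer sweeps a band of width B at a constant rate R, it overlaps a victim channel of width W for about W/R seconds and returns every B/R seconds, so the fraction of time the channel is jammed is W/B regardless of sweep speed. The sketch below neglects the jammer's own instantaneous bandwidth, and the 80 MHz band, 20 MHz channel, sweep rates and 2 ms packet airtime are assumed values.

```python
from dataclasses import dataclass


@dataclass
class SweepTiming:
    dwell_s: float     # time the jammer overlaps one victim channel per sweep
    revisit_s: float   # time between successive hits on the same channel
    duty_cycle: float  # fraction of time the channel is jammed


def sweep_timing(sweep_bw_hz, sweep_rate_hz_per_s, channel_bw_hz):
    """Timing of a linear sawtooth sweep jammer over a single victim channel."""
    dwell = channel_bw_hz / sweep_rate_hz_per_s
    revisit = sweep_bw_hz / sweep_rate_hz_per_s
    return SweepTiming(dwell, revisit, dwell / revisit)


def blocks_whole_packet(timing, packet_airtime_s):
    """True if a single dwell is long enough to cover an entire packet."""
    return timing.dwell_s >= packet_airtime_s


fast = sweep_timing(80e6, 80e9, 20e6)  # full band swept every 1 ms
slow = sweep_timing(80e6, 80e6, 20e6)  # full band swept every 1 s
packet = 2e-3                          # assumed 2 ms command packet
print(fast.dwell_s, fast.revisit_s, blocks_whole_packet(fast, packet))
print(slow.dwell_s, slow.revisit_s, blocks_whole_packet(slow, packet))
```

With the fast sweep, every 2 ms packet overlaps at least one 0.25 ms burst, so transmissions are corrupted intermittently; with the slow sweep, a 0.25 s dwell can swallow entire command exchanges. In both cases the channel is jammed 25% of the time.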

All of the above signals are easy to generate with lightweight devices that can be mounted on commercial UAVs. Although effective jamming typically requires high-power noise signals, UAVs equipped with RF signal generators can relax this requirement by flying close to the target, achieving effective jamming with lower transmit power.
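The power saving from flying close to the target follows from free-space propagation. Assuming free-space path loss, equal antenna gains, and the same carrier frequency for the legitimate link and the jammer, the jammer power needed to reach a target jamming-to-signal ratio (JSR) at the receiver is P_J = P_S + JSR + 20 log10(d_J / d_S). Here d_S and d_J are the transmitter-to-receiver and jammer-to-receiver distances, and the carrier frequency cancels out. The sensor power, distances and 10 dB target below are hypothetical.

```python
import math


def required_jammer_power_dbm(signal_tx_dbm, signal_dist_m, jammer_dist_m, target_jsr_db):
    """Jammer transmit power needed to reach a target JSR at the victim receiver.

    Assumes free-space path loss (received power ~ 1/d^2), identical antenna
    gains and the same frequency for both links, so only the distance ratio matters.
    """
    return signal_tx_dbm + target_jsr_db + 20 * math.log10(jammer_dist_m / signal_dist_m)


def dbm_to_mw(p_dbm):
    """Convert dBm to milliwatts."""
    return 10 ** (p_dbm / 10)


# Hypothetical link: a 20 dBm sensor 500 m from its gateway; the attacker wants JSR = 10 dB.
for jammer_distance in (500.0, 100.0, 10.0):
    p = required_jammer_power_dbm(20.0, 500.0, jammer_distance, 10.0)
    print(f"jammer at {jammer_distance:>5.0f} m -> {p:6.1f} dBm ({dbm_to_mw(p):8.2f} mW)")
```

Under these idealized assumptions, moving the jammer from 500 m to 10 m lowers the required power by about 34 dB, from roughly 1 W to about 0.4 mW.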

5.3.2. GNSS Jamming

GNSS jamming is a particular form of RF signal interference: it targets signals that are not generated by the smart farm itself but on which its key technologies depend. Smart farm operations, including IoT sensors and UAVs, rely heavily on GNSS for accurate positioning, navigation, and timing services. By overwhelming GNSS receivers with high-power noise signals in the GNSS frequency bands, attackers can prevent accurate positioning, causing autonomous tractors, drones, and irrigation systems to lose their spatial awareness. This can lead to inefficient field operations, misaligned seeding or spraying, and even collisions between unmanned systems. In worst-case scenarios, prolonged GNSS jamming can completely disable automated machinery, forcing farms to revert to manual operation, which reduces efficiency and increases operational costs. When UAVs carry interference signal generators with limited transmit power, the artificial interference can affect only a restricted number of devices, and for fixed devices such as gateways or edge nodes, precise location information may not be of paramount importance. However, GNSS jamming poses a greater threat to the UAVs used in smart farming [69]. In such contexts, competitors could deploy high-speed UAVs equipped with signal generators to track farm UAVs and disrupt the GNSS signals they receive, thereby interfering with their flight paths. This form of targeted jamming could significantly impede the operational efficiency and reliability of UAVs in smart farming applications.
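The vulnerability of GNSS receivers stems from the very low power of satellite signals at the ground. The sketch below estimates the jamming-to-signal ratio (J/S) produced by a low-power jammer at several distances. It assumes free-space path loss at the GPS L1 frequency (1575.42 MHz), isotropic antennas, and an authentic signal power of about -128.5 dBm, the minimum received power commonly cited for GPS L1 C/A; the 1 mW jammer power is an assumption, and terrain, antenna patterns and receiver filtering would change the numbers.

```python
import math

C_M_PER_S = 299_792_458.0
GPS_L1_HZ = 1575.42e6
AUTHENTIC_GNSS_DBM = -128.5  # assumed authentic signal power at the receiver


def fspl_db(distance_m, freq_hz):
    """Free-space path loss, 20*log10(4*pi*d*f/c), in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C_M_PER_S)


def gnss_jsr_db(jammer_tx_dbm, distance_m, authentic_dbm=AUTHENTIC_GNSS_DBM):
    """Jamming-to-signal ratio at a GNSS receiver under free-space propagation."""
    jammer_rx_dbm = jammer_tx_dbm - fspl_db(distance_m, GPS_L1_HZ)
    return jammer_rx_dbm - authentic_dbm


for d in (10.0, 100.0, 1000.0):
    print(f"{d:6.0f} m: J/S = {gnss_jsr_db(0.0, d):5.1f} dB")  # 0 dBm = 1 mW jammer
```

Each tenfold increase in distance costs 20 dB, yet under these assumptions even a 1 mW jammer 1 km away would exceed the authentic signal by more than 30 dB.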

5.3.3. Protocol-Based Jamming

Due to resource limitations and the rapid development of new technologies, the protocols used in smart farm communication networks may contain vulnerabilities. Attackers can exploit these weaknesses to disable communication links, using a UAV as a relay node positioned close to a key network node. Three classical types of protocol-based attacks require particular attention: denial-of-service (DoS) attacks, deauthentication attacks, and flooding attacks [10]. DoS attacks disable a key network node, rendering it inaccessible to smart farm field devices. Deauthentication attacks disconnect field devices from their access points. Flooding attacks send a large number of fake requests to a key network node, exhausting its computing resources and disabling its services. In the experimental WiFi deauthentication attack described in [70], an ESP8266 microcontroller development board combined with the WiFi Deauther Monster test module targeted a smart farm architecture by exploiting IEEE 802.11 vulnerabilities, specifically the unprotected management frames. In this experiment, a gateway based on a Raspberry Pi single-board computer (SBC) was forced to detach from the network, preventing it from forwarding sensing results to the cloud.
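The resource-exhaustion mechanism of a flooding attack can be approximated with a simple fluid model: if a gateway can process C requests per second and cannot distinguish fake requests from legitimate ones, then once it is overloaded it serves each class of traffic in proportion to its arrival rate. The capacity and request rates below are hypothetical, and real gateways may prioritize, rate-limit, or queue traffic differently.

```python
def served_legitimate_rate(capacity_rps, legit_rps, fake_rps):
    """Legitimate requests served per second when fake and real requests share capacity.

    Fluid approximation: below capacity everything is served; above capacity the
    gateway serves each class in proportion to its share of the offered load.
    """
    offered = legit_rps + fake_rps
    if offered <= capacity_rps:
        return legit_rps
    return capacity_rps * legit_rps / offered


# Hypothetical gateway handling 100 requests/s, with 20 requests/s of genuine sensor traffic.
for fake in (0, 80, 1000, 10000):
    served = served_legitimate_rate(100.0, 20.0, fake)
    print(f"fake={fake:>5} req/s -> legitimate served {served:6.2f} req/s ({served / 20.0:.0%})")
```

In this model the gateway is unaffected until the offered load reaches its capacity; beyond that point, a flood of 1000 fake requests per second leaves only about 10% of the genuine sensor traffic served.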

5.4. Spoofing

In smart farms, UAVs and IoT devices rely on standardized wireless communication protocols (e.g., WiFi, Bluetooth, Zigbee, LoRa, and proprietary RF protocols) for remote monitoring, automated decision-making, and real-time control. It is therefore essential to ensure that messages are sent by a trusted, authorized entity rather than by a malicious adversary. Spoofing attacks involve the deliberate creation of fraudulent RF signals that mimic legitimate communication protocols to deceive smart farm devices [68]. These signals can target various components of the smart farming ecosystem, including sensors, edge nodes, GNSS signals, gateways, UAV remote control commands, and feedback transmissions. Typically, spoofing signals are crafted to match specific communication protocol stacks, so the attacker needs a thorough understanding of these protocols before launching the attack. To identify the protocol stacks, UAVs can be used to collect RF signals over the target smart farm, and the recordings can be analyzed to determine the waveforms in use and their parameters. To carry out the attacks, UAVs equipped with spoofers can be deployed close to the target nodes, either performing spoofing autonomously or acting as remotely controlled relay nodes.
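Spoofing succeeds when a receiver accepts any frame that is well formed for its protocol. One common way to bind a command to a trusted, authorized entity is to append a message authentication code computed with a key shared by the controller and the device. The Python sketch below uses HMAC-SHA256 from the standard library; the frame layout (command bytes followed by a 32-byte tag), the command strings, and the key handling are simplifying assumptions, and a deployable scheme would also need a counter or timestamp to reject replayed frames.

```python
import hashlib
import hmac
import os

TAG_LEN = 32  # bytes in an HMAC-SHA256 tag


def sign_command(key: bytes, command: bytes) -> bytes:
    """Append an HMAC-SHA256 tag so the receiver can check that the sender holds `key`."""
    return command + hmac.new(key, command, hashlib.sha256).digest()


def verify_command(key: bytes, frame: bytes):
    """Return the command if the tag is valid, otherwise None (frame rejected)."""
    if len(frame) < TAG_LEN:
        return None
    command, tag = frame[:-TAG_LEN], frame[-TAG_LEN:]
    expected = hmac.new(key, command, hashlib.sha256).digest()
    return command if hmac.compare_digest(tag, expected) else None


shared_key = os.urandom(32)    # provisioned on both the controller and the UAV/device
attacker_key = os.urandom(32)  # the spoofer does not know shared_key

genuine = sign_command(shared_key, b"SPRAY:ZONE=3;RATE=2.0")      # hypothetical command format
spoofed = sign_command(attacker_key, b"SPRAY:ZONE=3;RATE=9.9")
unauthenticated = b"SPRAY:ZONE=3;RATE=9.9"

print(verify_command(shared_key, genuine))          # b'SPRAY:ZONE=3;RATE=2.0'
print(verify_command(shared_key, spoofed))          # None
print(verify_command(shared_key, unauthenticated))  # None
```

Knowing the protocol stack lets an attacker reproduce the frame format but not the tag, so a receiver that checks the tag rejects both the forged and the untagged frame.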

A typical class of spoofing technology is GNSS signal spoofing. Experimental trials in [71] indicate that when the ratio of the counterfeit signal power to the legitimate signal power is greater than or equal to 10 dB, the automatic gain control (AGC) module of the GNSS receiver attenuates the authentic GNSS signals below the required threshold; the receiver then loses lock and fails to reacquire the legitimate signal, and the counterfeit signal takes control of the receiver. The received signal power is proportional to the transmit power and, in free space, inversely proportional to the square of the propagation distance. Because UAVs can fly close to the target nodes, the propagation distance is significantly shortened, so the power ratio requirement is easily met.
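The 10 dB condition reported in [71] can be turned into a rough range estimate. Assuming free-space propagation at GPS L1 (1575.42 MHz), isotropic antennas, and an authentic signal power of about -128.5 dBm, the sketch below computes the farthest distance at which a spoofer with a given transmit power still exceeds the authentic signal by the required margin. The transmit powers are illustrative assumptions.

```python
import math

C_M_PER_S = 299_792_458.0
GPS_L1_HZ = 1575.42e6
AUTHENTIC_GNSS_DBM = -128.5  # assumed authentic signal power at the receiver


def max_spoofing_range_m(spoofer_tx_dbm, margin_db=10.0, freq_hz=GPS_L1_HZ,
                         authentic_dbm=AUTHENTIC_GNSS_DBM):
    """Largest distance at which the spoofer exceeds the authentic signal by margin_db.

    Inverts free-space path loss, FSPL = 20*log10(4*pi*d*f/c).
    """
    allowed_loss_db = spoofer_tx_dbm - (authentic_dbm + margin_db)
    return (C_M_PER_S / (4 * math.pi * freq_hz)) * 10 ** (allowed_loss_db / 20)


for p_dbm in (-50.0, -30.0, 0.0):  # 10 nW, 1 uW, 1 mW
    print(f"{p_dbm:6.1f} dBm -> up to {max_spoofing_range_m(p_dbm):10.1f} m")
```

Every additional 20 dB of transmit power multiplies the range by ten. Under these idealized assumptions, a UAV within about 40 m of the target needs only around 10 nW to satisfy the 10 dB condition.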

5.5. Summary

The development of UAVs brings significant security risks to smart farms due to the expanded attack surface and limited built-in security of IoT devices and UAVs. Physical attacks using compact UAVs can target crops, livestock, or critical IoT nodes, disrupting operations and threatening safety. Unauthorized UAVs can sabotage farms by spreading diseases or harmful materials. Information leakage is another major concern, as UAVs can collect sensitive data such as network structures, sensor locations, and crop information, enabling attackers to plan targeted disruptions. RF signal jamming, including GNSS jamming, can interrupt communications and navigation, crippling smart farm operations. Protocol-based jamming exploits vulnerabilities in communication protocols to disable networks through denial-of-service or deauthentication attacks. Spoofing attacks deceive smart farm systems by imitating legitimate signals. Although UAVs are not essential for executing the aforementioned attacks, they significantly enhance their efficiency and stealth. These threats underscore the critical need for advanced security measures to protect smart farming systems from unauthorized UAVs.

6. Unauthorized UAV Countermeasures

Given the threats posed by unauthorized UAVs, as discussed in Section 5, implementing robust security solutions is crucial for protecting smart farms. These solutions can be broadly categorized into two types: passive and active measures. Passive solutions focus on enhancing the resilience of smart farming equipment by integrating security algorithms that can detect and reject cyber or physical threats. In contrast, active solutions are designed to detect and remove unauthorized UAVs from the farm’s airspace, providing a more proactive defense. As highlighted in Section 1, various security issues and mitigation strategies have been explored in previous works [9,10,11,12]. However, these studies primarily focus on general cybersecurity concerns in smart farming and do not specifically address the challenges posed by unauthorized UAVs. To bridge this gap, Table 3 compares the effectiveness of existing security solutions in mitigating UAV-related threats, providing insight into their applicability for countering UAV intrusions. Building on this foundation, the rest of this section focuses on active countermeasures, which are particularly relevant for mitigating unauthorized UAV incursions, an essential aspect of this study.

Several review articles have addressed counter-UAV measures in various contexts. For instance, the authors of [66] explored anti-drone systems designed to detect, localize, and neutralize intruding drones using a combination of passive surveillance technologies. Their system, ADS-ZJU, integrated drone detection, localization, and radio frequency jamming, offering a comprehensive solution for mitigating threats to public security and privacy.

Similarly, ref. [67] provided a detailed overview of UAV detection methods, including those based on ambient radio frequency signals, radar, acoustic sensors, and computer vision techniques, as well as common interdiction strategies for neutralizing malicious drones. To safeguard critical infrastructure, ref. [68] reviewed counter-UAV technologies and presented a survey of drone incidents near airports. The authors proposed risk management plans and effective counter-drone strategies tailored to realistic attack scenarios. Furthermore, ref. [14] evaluated a multiplatform counter-UAV system involving cooperative mini drones, outlining the sensing, mitigation, and command subsystems required for implementation.

These studies emphasize that the first critical step in any anti-UAV system is collecting and processing relevant data to identify, classify, and track unauthorized drones. This can be achieved using various sensors, such as electromagnetic (e.g., radar or RF), acoustic, or imaging sensors. However, the reviewed counter-UAV technologies largely do not account for the specific challenges of smart farming environments.

In this section, the state-of-the-art developments in various UAV detection technologies are explored. We analyze their strengths and limitations while addressing the unique challenges posed by smart farming. A comparative analysis of these technologies, considering their applicability to smart farms, is presented in Table 4.

6.1. Radar-Based UAV Countermeasure

Radars are active sensors that transmit electromagnetic waves, typically as pulses or modulated waveforms, with carrier frequencies ranging from 3 MHz to 300 GHz. When these waves encounter a target, they are reflected back and captured by the radar’s receiving modules. The system uses the time delay between transmission and reception to estimate the target’s distance, while the Doppler shift of the received waves is used to estimate its radial speed. By analyzing the Doppler spectrum in detail, including the micro-Doppler components produced by rotating propellers, radar systems can classify the type of drone and distinguish it from other flying objects or animals, such as birds.
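The two basic radar measurements described above reduce to short formulas. The range is R = c·Δt/2, because the wave travels to the target and back, and the radial velocity is v = f_D·λ/2 = f_D·c/(2f_c), where f_D is the measured Doppler shift and f_c is the carrier frequency. The 24 GHz carrier, 2 µs delay and 1.6 kHz Doppler shift in the example are assumed values chosen for illustration.

```python
C_M_PER_S = 299_792_458.0


def radar_range_m(round_trip_delay_s):
    """Target range from the round-trip delay (the wave travels out and back)."""
    return C_M_PER_S * round_trip_delay_s / 2


def radial_velocity_mps(doppler_shift_hz, carrier_hz):
    """Radial velocity from the Doppler shift; a positive shift means the target is approaching."""
    wavelength_m = C_M_PER_S / carrier_hz
    return doppler_shift_hz * wavelength_m / 2


print(f"{radar_range_m(2e-6):.1f} m")                  # 299.8 m
print(f"{radial_velocity_mps(1600.0, 24e9):.2f} m/s")  # 9.99 m/s
```

Drone classification builds on the same relationship: each rotating propeller blade produces its own time-varying Doppler shift, and these micro-Doppler components are what separate a multirotor from a bird flying at a similar body speed.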