Highlights

What are the main findings?

- Introduced a novel LSTM-A2C framework with an attention mechanism for UAV-mounted base stations to optimize trajectory and coverage in disaster environments.

- Achieved superior performance in coverage, energy efficiency, and fairness compared to baseline reinforcement learning methods.

What are the implications of the main findings?

- Enables resilient UAV-based communication systems for rapid connectivity restoration in emergency and disaster scenarios.

- Establishes a foundation for deploying advanced RL-driven UAV systems in real-world 6G-enabled IoT networks.

Abstract

In disaster relief operations, Unmanned Aerial Vehicles (UAVs) equipped with base stations (UAV-BS) are vital for re-establishing communication networks where conventional infrastructure has been compromised. Optimizing their trajectories and coverage to ensure equitable service delivery amidst obstacles, wind effects, and energy limitations remains a formidable challenge. This paper proposes an innovative reinforcement learning framework leveraging a Long Short-Term Memory (LSTM)-based Advantage Actor–Critic (A2C) model enhanced with an attention mechanism. Operating within a grid-based disaster environment, our approach seeks to maximize fair coverage for randomly distributed ground users under tight energy constraints. It incorporates a nine-direction movement model and a fairness-focused communication strategy that prioritizes unserved users, thereby improving both equity and efficiency. The attention mechanism enhances adaptability by directing focus to critical areas, such as clusters of unserved users. Simulation results reveal that our method surpasses baseline reinforcement learning techniques in coverage fairness, Quality of Service (QoS), and energy efficiency, providing a scalable and effective solution for real-time disaster response.

1. Introduction

The restoration of communication networks following natural disasters is paramount in enabling the effective coordination of emergency response efforts, facilitating the delivery of humanitarian aid, and safeguarding affected populations. In such scenarios, conventional terrestrial infrastructure is frequently rendered inoperable, severing connectivity precisely when it is most urgently needed. Unmanned Aerial Vehicles (UAVs) equipped with base stations (UAV-BS) present a compelling solution, offering flexible, rapid-deployment coverage in environments where traditional systems falter. Nevertheless, the deployment of UAV-BS in disaster-affected areas poses multifaceted challenges, including the navigation of irregular terrains, the equitable provisioning of services to spatially diverse user populations, and the optimization of constrained energy resources amidst fluctuating conditions such as wind and physical obstructions.

Beyond disaster relief, UAV networks are increasingly pivotal in emerging paradigms such as the low-altitude economy (LAE), where they enable intelligent services in logistics, transportation, and urban air mobility through scalable AI deployment [1]. Similarly, UAV-enabled Integrated Sensing and Communication (ISAC) extends these capabilities by jointly optimizing communication with ground users and target localization, enhancing spectral efficiency and adaptability in dynamic environments [2]. Our work aligns with these timely directions by advancing RL-based UAV trajectory optimization for equitable coverage, with potential extensions to LAE and ISAC scenarios. While these domains highlight the growing role of adaptive, intelligent UAV systems, they also expose a shared limitation: traditional optimization strategies—designed for stable, predictable settings—fail to address the inherent unpredictability, user heterogeneity, and fairness demands of real-world operational environments, particularly in crisis scenarios.

Traditional optimization approaches often presuppose static user distributions or consistent environmental parameters—assumptions that do not hold in real-world crisis settings. As a result, they may fail to adequately serve isolated user groups or allocate resources inefficiently, yielding suboptimal coverage outcomes. This underscores the necessity for advanced, adaptive methodologies capable of reconciling operational efficiency with equitable service distribution, ensuring that connectivity is extended to all users irrespective of their geographic disposition. We therefore adopt reinforcement learning (RL) over traditional optimization due to the following inherent challenges in disaster environments:

- Non-stationary dynamics: User locations, wind patterns, and obstacle emergence evolve unpredictably—violating static assumptions of convex optimization or MILP.

- Partial observability: The UAV has limited sensing range and delayed feedback, requiring memory and sequential credit assignment—natively supported by RL.

- High-dimensional action space: Nine-direction movement over a long episode yields exponentially many action sequences ($9^T$ for $T$ steps), a combinatorial space unsuitable for exhaustive enumeration or continuous relaxation.

- Multi-objective fairness under uncertainty: RL enables online reward shaping to balance coverage, fairness, and energy—whereas weighted-sum optimization requires known trade-offs.

These factors render classical methods intractable, motivating our LSTM-A2C framework to learn adaptive policies directly from interaction.

In this study, we introduce a novel reinforcement learning (RL) framework tailored to optimize UAV-BS trajectories and coverage within 6G-enabled Internet of Things (IoT) networks. Our approach is designed to maximize unique user reach while ensuring fairness in service delivery under uncertainty. At its core lies a Long Short-Term Memory (LSTM)–based Advantage Actor–Critic (A2C) model augmented with an attention mechanism. Unlike conventional LSTM-A2C frameworks, the proposed model integrates a memory-based fairness state and selective attention module, enabling the UAV to dynamically prioritize underserved or high-demand user clusters in partially observable environments. This combination allows for efficient policy learning in exponentially large state spaces while maintaining equitable coverage. Unlike prior works in UAV trajectory optimization and RL-based coverage control, which often prioritize energy minimization or basic path planning without explicitly addressing fairness disparities or attention-driven adaptability to volatile conditions (e.g., wind, obstacles—see Section 2 for detailed comparisons), our framework uniquely integrates fairness-aware rewards, a nine-direction movement model, and an attention mechanism with LSTM. For instance, while earlier studies [3,4,5] leverage recurrent networks for temporal dependencies, they do not jointly achieve memory-based user tracking, selective focus on unserved clusters, and equitable service in partially observable, high-dimensional environments—capabilities central to our design.

Our methodology pursues two principal goals: maximizing the number of unique users served and ensuring equitable coverage distribution. The attention mechanism enables the UAV to focus selectively on salient environmental cues, such as user density or coverage deficiencies, while the LSTM component captures temporal patterns to predict and respond to evolving conditions. To address the complexities of disaster environments, we propose a refined nine-direction movement model, affording the UAV enhanced maneuverability to circumvent obstacles and adapt to stochastic factors such as wind.

Key Contributions

The key contributions of this research are summarized as follows:

- Novel LSTM-A2C with Attention Framework: We advance existing Actor–Critic architectures by embedding an attention mechanism into an LSTM-A2C structure. This integration allows for context-aware and temporally adaptive trajectory optimization, enabling the UAV-BS to selectively emphasize unserved user clusters and volatile regions while maintaining stable convergence in large-scale, dynamic environments.

- Fairness-Aware Coverage Optimization: Beyond maximizing overall user reach, our framework explicitly incorporates fairness through memory-based user tracking and reward terms that penalize disparity. This ensures equitable service even for sparsely located users, a feature overlooked in conventional RL-based UAV-BS optimization.

- Comprehensive Evaluation and Insights: Through extensive simulations in 6G-IoT scenarios, the proposed method consistently surpasses Q-Learning, DDQN, and standard A2C in fairness (Jain’s index), coverage disparity (CDI), and energy efficiency. These results demonstrate its robustness and practical utility in disaster-response communications.

This work represents a substantial advancement in UAV-assisted communication systems by merging deep reinforcement learning with a fairness-oriented, attention-driven design to address the distinctive demands of disaster scenarios. The remainder of this paper is organized as follows: Section 2 surveys related literature, Section 3 outlines the system model and problem formulation, Section 4 presents the proposed RL methodology, Section 5 discusses simulation results, and Section 6 concludes with key insights and future research directions.

2. Related Work

Optimizing Unmanned Aerial Vehicle (UAV) operations, particularly in terms of energy consumption and coverage, is a well-established research domain within wireless communication and Internet of Things (IoT) networks. Early efforts concentrated on traditional optimization techniques for UAV energy efficiency and deployment. Zeng et al. [6] proposed a framework that jointly optimizes propulsion and communication energy, aiming to balance mission objectives with energy efficiency. Their method employs trajectory optimization to minimize energy use while maintaining communication coverage, but it assumes a static environment, limiting its adaptability to dynamic conditions such as moving users or shifting obstacles. Similarly, Mozaffari et al. [7] explored adaptive deployment strategies for UAVs, enabling them to reposition dynamically based on user distribution and demand fluctuations. Their approach relies on real-time user density maps, proving effective in controlled simulations. However, it struggles with real-world unpredictability, such as sudden wind or physical barriers, due to its dependence on predefined behavioral models.

Coverage maximization is another critical aspect of UAV-based surveillance and communication systems. Cabreira et al. [8] introduced a path-planning algorithm that segments the operational area into smaller regions to ensure comprehensive coverage. This divide-and-conquer strategy guarantees that the UAV sequentially addresses each segment, though it may compromise energy efficiency and adaptability to dynamic user distributions. In a similar vein, Jinbiao Yuan et al. [9] developed a coverage path-planning algorithm using a genetic algorithm to optimize flight paths for maximum coverage with minimal overlap. While effective, this method’s computational intensity renders it less suitable for real-time applications or large-scale areas, highlighting the need for more scalable solutions.

Reinforcement Learning (RL) has emerged as a powerful tool for enhancing UAV decision-making in complex, dynamic environments. Traditional RL methods like Q-Learning have been widely applied to path planning and obstacle avoidance. Tu et al. [10] demonstrated Q-Learning’s utility in a grid-based environment, enabling a UAV to navigate toward a target while avoiding obstacles. However, Q-Learning suffers from slow convergence and inefficiency in high-dimensional state spaces, limiting its practicality for intricate scenarios. To overcome these drawbacks, the Double Deep Q-Network (DDQN) was introduced, reducing overestimation bias in Q-value estimates for more stable learning. Wang et al. [11] applied DDQN to multi-UAV coordination, achieving superior performance over traditional Q-Learning in multi-agent, dynamic settings. Additionally, Proximal Policy Optimization (PPO), another RL approach, has been explored for UAV trajectory optimization. Askaripoor et al. [12] utilized PPO to train UAVs for coverage maximization in urban environments, demonstrating improved sample efficiency compared to Q-Learning.

Actor–Critic methods, blending policy-based and value-based RL approaches, have gained traction for their ability to manage continuous action spaces and ensure stable learning. The Advantage Actor–Critic (A2C) variant employs the advantage function to reduce variance in policy updates, enhancing training stability. Lee et al. [5] proposed SACHER, a Soft Actor–Critic (SAC) algorithm augmented with Hindsight Experience Replay (HER) for UAV path planning. SACHER improves exploration by maximizing both expected reward and entropy, encouraging diverse action selection. However, this entropy-augmented objective may sacrifice optimality in scenarios requiring precise actions. In contrast, our study leverages LSTM-A2C, integrating Long Short-Term Memory (LSTM) networks to capture sequential dependencies in dynamic environments. This enables the UAV to base decisions on historical data, enhancing adaptability to evolving conditions such as user mobility or environmental changes.

Attention mechanisms have become increasingly prominent in RL frameworks, improving performance by allowing agents to focus on critical aspects of the state or action space. In broader contexts, Vaswani et al. [13] introduced the Transformer architecture, which relies on self-attention to process sequential data efficiently, revolutionizing fields like natural language processing. Within RL, attention mechanisms enhance an agent’s ability to prioritize relevant information, such as high-priority regions or users in UAV applications. For instance, Kumar et al. [4] proposed RDDQN: Attention Recurrent Double Deep Q-Network for UAV Coverage Path Planning and Data Harvesting. Their approach employs a recurrent neural network with an attention mechanism to process sequential observations, enabling the UAV to concentrate on pertinent parts of the input sequence for decision-making. This attention mechanism aids in managing long-term dependencies and boosting learning efficiency. However, RDDQN differs from our work in key ways. It utilizes a value-based DDQN framework, whereas we adopt an Actor–Critic A2C approach, combining policy and value optimization for greater flexibility. Additionally, their movement model is simpler, featuring a smaller action set that restricts UAV maneuverability in complex environments. Moreover, RDDQN does not account for harsh environmental factors like wind, nor does it address dynamic user distributions—both critical in our disaster relief scenario. Our LSTM-A2C with an attention framework, by contrast, incorporates a nine-direction movement model and considers dynamic user distributions and environmental challenges, making it more robust for real-world applications.

LSTM-A2C has been applied in various domains to leverage temporal dependencies effectively. Li et al. [14] employed LSTM-A2C for network slicing in mobile networks, demonstrating its adaptability to user mobility and changing conditions. Similarly, Arzo et al. [15], Jaya Sri [16], Lotfi [17], and Zhou et al. [18] showcased LSTM’s effectiveness in dynamic network environments, enhancing performance and adaptability in 6G emergency scenarios, 6G-IoT, Open RAN traffic prediction, and wireless traffic prediction applications, respectively. He et al. [19] and Xie [20] further validated LSTM’s capability to handle long, irregular time series, emphasizing its strength in retaining temporal dependencies for UAV trajectory and information transmission optimization in IoT wireless networks. Building on these foundations, the current study extends LSTM-A2C to UAV trajectory and coverage optimization in 6G-enabled IoT networks, integrating an attention mechanism to enhance decision-making under energy and environmental constraints.

This research extends our previous work, “Deep RL for UAV Energy and Coverage Optimization in 6G-Based IoT Remote Sensing Networks” [3]. In that study, we applied deep reinforcement learning to optimize UAV energy and coverage, focusing on static user distributions and simpler environmental conditions. The present work advances this foundation by introducing a more sophisticated nine-direction movement model, accommodating dynamic user distributions, and incorporating environmental factors such as wind and obstacles. Furthermore, we enhance the RL framework by embedding an attention mechanism within the LSTM-A2C architecture, enabling the UAV to make informed decisions based on historical data and current conditions, thus improving coverage fairness and energy efficiency in disaster relief scenarios.

While traditional RL methods like Q-Learning and DDQN have advanced UAV energy and coverage optimization, they often struggle with scalability and adaptability in real-world settings. Our novel LSTM-A2C approach with attention integrates LSTM’s temporal dependency capabilities with an attention-driven focus on critical states, offering superior performance in dynamic environments. Extensive simulations validate its effectiveness, demonstrating improvements in coverage fairness, Quality of Service (QoS), and energy utilization over baseline RL methods.

It is also worth noting that the proposed trajectory-optimization framework can be synergistic with emerging physical-layer technologies designed to enhance UAV communication efficiency. In particular, integrating the learning-based control policy with Intelligent Reflecting Surfaces (IRS) could improve signal propagation in non-line-of-sight or obstructed disaster environments by intelligently redirecting reflected waves, while advanced multiple-access schemes such as Non-Orthogonal Multiple Access (NOMA) can further increase spectral efficiency and user connectivity. Furthermore, recent advancements leverage deep reinforcement learning (DRL) to jointly optimize beamforming, power allocation, and trajectory in RSMA-IRS-Assisted ISAC systems for energy efficiency maximization [21]. Such AI-driven coordination complements our UAV-BS trajectory framework, enabling integrated 6G systems where UAVs, IRS, and sensing coexist under dynamic constraints. Combining these physical-layer innovations with our reinforcement-learning-based trajectory optimization represents a promising direction for future research, enabling more energy-efficient and resilient UAV communication systems in 6G-enabled networks.

3. System Model and Problem Formulation

3.1. System Overview

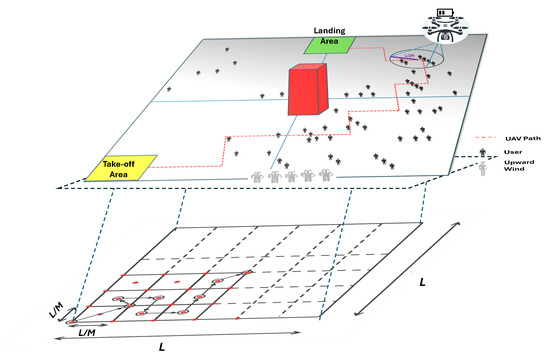

In this study, we consider a UAV-mounted base station (UAV-BS) deployed to provide emergency wireless coverage in a disaster-affected area. The operational region, as shown in Figure 1, is modeled as a square grid divided into smaller square cells, each with side length $c$ [22]. The UAV-BS operates at a constant altitude of 50 m [23] and a fixed horizontal speed, simplifying the three-dimensional motion into a two-dimensional trajectory optimization problem while maintaining physical relevance [6]. This simplification is widely adopted in UAV-BS literature [6,7,24] to focus on dynamic coverage and fairness under energy constraints while ensuring line-of-sight propagation and isolating horizontal maneuverability from altitude variations. Unlike classical 2-D path-planning tasks that focus purely on geometric shortest paths, trajectory optimization here involves sequential decision-making under temporal and stochastic dynamics (e.g., wind, energy, fairness), which substantially increases computational and modeling complexity.

Figure 1.

An illustration of the system model. The area is partitioned into cells (side length $c$). Red dots indicate UAV hover points at cell centers and vertices. Blue circles represent the UAV coverage areas. Red blocks denote obstacles, while swirls mark wind-affected zones. The UAV moves between points according to nine discrete actions, adjusting its trajectory via attention-driven policy learning.

Remark on Problem Complexity: Although the spatial motion occurs in two dimensions, the optimization problem remains highly nontrivial due to the joint consideration of fairness, stochastic wind perturbations, energy constraints, and partial user observability. The number of possible action sequences grows exponentially as $9^T$, where $T$ is the episode length, requiring advanced RL methods for tractable policy optimization.

3.2. Communication System Model

The UAV-BS provides downlink connectivity to $N$ ground users randomly distributed across the operational area. To ensure equitable service distribution, a quality-of-service (QoS) framework assigns each user a minimum bandwidth of 10 MHz; with a total bandwidth of 100 MHz, this allows up to $N_{\max} = 100/10 = 10$ simultaneous connections.

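The number of newly served users at time $t$ is

$$N_t^{\text{new}} = \sum_{i=1}^{N} u_{i,t}\,\left(1 - m_{i,t}\right),$$

where $u_{i,t} \in \{0,1\}$ indicates whether user $i$ is within the UAV’s coverage radius at time $t$, and $m_{i,t} \in \{0,1\}$ tracks whether the user was previously served. This binary formulation ensures fairness by rewarding coverage of newly reached users.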
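The attachment rule can be illustrated with a short sketch. It follows the text in capping connections at 100 MHz / 10 MHz = 10 users and in serving never-served users first; the tie-breaking by user index and the equal bandwidth split are assumptions made only for this illustration, and all function names are hypothetical.

```python
# Sketch of the fairness-focused attachment rule in Section 3.2.
# ASSUMPTIONS (not from the paper): ties are broken by user index and
# bandwidth is split equally among attached users.

TOTAL_BW_MHZ = 100.0
MIN_BW_MHZ = 10.0
N_MAX = int(TOTAL_BW_MHZ // MIN_BW_MHZ)  # 100 / 10 = 10 simultaneous users


def attach_users(covered, served, n_max=N_MAX):
    """Return indices of attached users, unserved users first.

    covered[i] is 1 if user i is inside the coverage radius at time t;
    served[i] is 1 if user i was served at any earlier step.
    """
    in_range = [i for i, u in enumerate(covered) if u]
    # served == 0 sorts before served == 1; sorted() is stable, so ties keep index order.
    ranked = sorted(in_range, key=lambda i: served[i])
    return ranked[:n_max]


def newly_served(attached, served):
    """Number of attached users that had never been served before."""
    return sum(1 for i in attached if not served[i])


def bandwidth_per_user(attached):
    """Equal split of the total bandwidth (hypothetical policy)."""
    return TOTAL_BW_MHZ / len(attached) if attached else 0.0


covered = [1, 1, 0, 1, 0]
served = [1, 0, 0, 0, 0]
attached = attach_users(covered, served)
print(attached)                        # [1, 3, 0]
print(newly_served(attached, served))  # 2
print(bandwidth_per_user(attached))    # about 33.3 MHz, above the 10 MHz floor
```

Because at most ten users attach at once, an equal split of 100 MHz never falls below the 10 MHz per-user minimum.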

3.3. Channel Model

3.4. Mobility Model

The UAV’s movement is constrained to nine discrete actions: eight compass directions (north, northeast, east, southeast, south, southwest, west, northwest) and hovering. At each time step, the UAV moves at a constant speed, with the displacement defined relative to the grid cell length $c$. For cardinal movements (north, south, east, west), the UAV travels a fixed distance along one axis. For diagonal movements, it travels an equal distance $\Delta$ along both the x- and y-axes simultaneously, resulting in a total Euclidean displacement of $\sqrt{2}\,\Delta$. This ensures that the UAV reaches the diagonal vertices of each cell, maintaining geometric consistency with the layout shown in Figure 1, while preserving constant movement speed across all directions.

Wind effects in designated regions introduce stochastic perturbations modeled as zero-mean Gaussian random variables, with standard deviation proportional to the local wind intensity [25]. The possible displacements are summarized as follows:

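Wind-affected transitions are therefore expressed as:

$$x_{t+1} = x_t + \Delta x(a_t) + \epsilon_{x,t}, \qquad y_{t+1} = y_t + \Delta y(a_t) + \epsilon_{y,t}, \qquad \epsilon_{x,t},\, \epsilon_{y,t} \sim \mathcal{N}\left(0, \sigma_w^2\right),$$

where $(\Delta x(a_t), \Delta y(a_t))$ is the nominal displacement of action $a_t$, $\epsilon_{x,t}$ and $\epsilon_{y,t}$ are the wind-induced perturbations on the UAV’s motion in the x- and y-directions, respectively, and $\sigma_w$ scales with the local wind intensity ($\sigma_w = 0$ outside wind-affected regions).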
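The mobility and wind model can be sketched as a small transition function. The exact cardinal and diagonal step sizes are not given here, so the sketch exposes them as parameters; the defaults (one cell length for cardinal moves, half a cell length per axis for diagonal moves) are an assumption chosen only so that diagonal moves land on cell vertices, and the function names are hypothetical.

```python
import random

# Unit direction vectors for the nine actions.
DIRECTIONS = {
    "N": (0, 1), "NE": (1, 1), "E": (1, 0), "SE": (1, -1),
    "S": (0, -1), "SW": (-1, -1), "W": (-1, 0), "NW": (-1, 1),
    "HOVER": (0, 0),
}


def nominal_displacement(action, c, cardinal_frac=1.0, diagonal_frac=0.5):
    """Nominal (dx, dy) for an action on a grid with cell length c.

    ASSUMPTION: cardinal moves cover cardinal_frac * c along one axis and
    diagonal moves cover diagonal_frac * c along each axis; the paper's
    exact step sizes are not reproduced here.
    """
    ux, uy = DIRECTIONS[action]
    frac = diagonal_frac if ux != 0 and uy != 0 else cardinal_frac
    return ux * frac * c, uy * frac * c


def step(pos, action, c, wind_sigma=0.0, rng=random):
    """Apply the nominal move plus zero-mean Gaussian wind noise.

    wind_sigma is the wind standard deviation (0 outside wind zones).
    """
    dx, dy = nominal_displacement(action, c)
    wx = rng.gauss(0.0, wind_sigma) if wind_sigma > 0 else 0.0
    wy = rng.gauss(0.0, wind_sigma) if wind_sigma > 0 else 0.0
    return pos[0] + dx + wx, pos[1] + dy + wy


rng = random.Random(42)
print(step((0.0, 0.0), "NE", c=10.0))                           # (5.0, 5.0)
print(step((0.0, 0.0), "NE", c=10.0, wind_sigma=1.0, rng=rng))  # perturbed position
```

Passing a seeded `random.Random` instance keeps wind draws reproducible across training runs.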

The energy consumption model focuses primarily on propulsion, as communication-related energy expenditure constitutes less than 5% of total power usage in typical UAV-BS deployments [26]. The total available energy E constrains the UAV’s operational time, requiring careful balance between coverage objectives and energy conservation.

3.5. Fairness Metric

To quantify service equity across all ground users, we employ Jain’s fairness index:

$$J = \frac{\left(\sum_{i=1}^{N} \tau_i\right)^2}{N \sum_{i=1}^{N} \tau_i^2},$$

where $\tau_i$ represents the total service time received by user $i$. This metric ranges from $1/N$ (worst-case unfairness) to 1 (perfect fairness), providing a normalized measure of distribution equity that is sensitive to both under-served and over-served users.
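Jain’s index can be computed directly from the per-user service times. The sketch below is a straightforward transcription of the standard formula; the value returned when no user has been served yet is a convention chosen for this illustration, since the index is undefined in that case.

```python
def jain_index(service_times):
    """Jain's fairness index of per-user service times.

    For any input with at least one non-zero entry the value lies in
    [1/N, 1]. If no user has been served the index is undefined;
    returning 0.0 is a convention chosen for this sketch.
    """
    n = len(service_times)
    if n == 0:
        raise ValueError("need at least one user")
    total = sum(service_times)
    sum_sq = sum(t * t for t in service_times)
    if sum_sq == 0:
        return 0.0
    return total * total / (n * sum_sq)


print(jain_index([3, 3, 3, 3]))  # 1.0  (perfectly equal service)
print(jain_index([4, 0, 0, 0]))  # 0.25 (= 1/N, one user receives everything)
```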

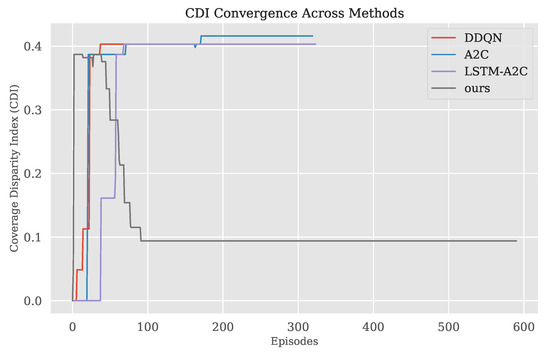

Coverage Disparity Index

To further assess spatial equity, we introduce the Coverage Disparity Index (CDI), defined as:

where $K$ is the number of user clusters, $S_k$ and $U_k$ are the served and total users in cluster $k$, and $\bar{\rho}$ is the mean coverage rate. A lower CDI indicates more uniform coverage across clusters, complementing Jain’s index by focusing on geographic disparity.
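The exact functional form of the CDI is not reproduced in this section. The sketch below assumes it is the population standard deviation of the per-cluster coverage rates (served over total users in each cluster) around their mean; other dispersion measures would fit the same description, so treat this as one plausible reading rather than the definition.

```python
import math


def coverage_disparity_index(served, total):
    """Dispersion of per-cluster coverage rates.

    ASSUMPTION: CDI is taken to be the population standard deviation of
    served[k] / total[k] around the mean rate; the paper's exact form may differ.
    """
    rates = [s / u for s, u in zip(served, total)]
    mean_rate = sum(rates) / len(rates)
    return math.sqrt(sum((r - mean_rate) ** 2 for r in rates) / len(rates))


print(coverage_disparity_index([5, 5], [10, 10]))   # 0.0 (uniform coverage)
print(coverage_disparity_index([10, 0], [10, 10]))  # 0.5 (one cluster ignored)
```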

3.6. Optimization Problem

The trajectory optimization problem is formulated as a multi-objective constrained optimization to maximize coverage, fairness, and efficiency:

The optimization is defined over the following key variables:

- $\pi$: The policy mapping current states to movement actions, guiding the UAV’s trajectory.

- $(x_t, y_t)$: The UAV’s position at time $t$, determining its spatial coverage.

- $E_t$: The propulsion energy consumed at time $t$, subject to the total budget $E$.

- $n_t$: The number of attached users at time $t$, constrained by $n_t \le N_{\max}$.

The remaining variables and parameters are defined as follows:

- $\lambda_1, \lambda_2$: Weighting parameters for fairness ($\lambda_1$) and disparity penalty ($\lambda_2$), tuned empirically.

- $E$: Total energy budget available for the mission.

- $\mathcal{O}$: Set of obstacle regions where the UAV cannot operate.

- $\mathcal{L}$: Designated landing zone for the UAV at the end of the mission.

- $c$: Grid cell side length, defining the spatial resolution.

- $N_{\max}$: Maximum number of users that can be attached at any time $t$, ensuring QoS.

- $T$: Total time horizon or number of decision steps.

This formulation encapsulates a complex trade-off among maximizing unique user coverage (the cumulative number of newly served users, $\sum_t N_t^{\text{new}}$), ensuring temporal fairness (Jain’s index $J$) and spatial equity (reducing the CDI), while adhering to energy, obstacle, and movement constraints.
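As a compact reference, one way to write the problem is sketched below. It assumes the weighted-sum objective implied by the description (coverage plus fairness minus disparity), with an expectation over the stochastic wind dynamics, and uses illustrative notation: $N_t^{\text{new}}$ is the number of newly served users at step $t$, $J$ is Jain’s index, $\lambda_1, \lambda_2$ are the fairness and disparity weights, $E_t$ is the propulsion energy at step $t$, $(x_t, y_t)$ is the UAV position, $\mathcal{O}$ is the obstacle set, $\mathcal{L}$ is the landing zone, $n_t$ is the number of attached users, and $\mathcal{A}$ is the nine-action set.

$$
\begin{aligned}
\max_{\pi}\quad & \mathbb{E}_{\pi}\!\left[\sum_{t=1}^{T} N_t^{\text{new}} + \lambda_1 J - \lambda_2\,\mathrm{CDI}\right] \\
\text{s.t.}\quad & \sum_{t=1}^{T} E_t \le E, \\
& (x_t, y_t) \notin \mathcal{O}, \quad t = 1, \dots, T, \\
& (x_T, y_T) \in \mathcal{L}, \\
& n_t \le N_{\max}, \quad t = 1, \dots, T, \\
& a_t \sim \pi(\cdot \mid s_t), \quad a_t \in \mathcal{A}, \quad t = 1, \dots, T.
\end{aligned}
$$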

3.7. Computational Complexity

The problem’s combinatorial nature arises from several factors. First, the discrete grid of hover points defines a large set of candidate UAV positions at each time step. Second, the nine possible movement directions yield a branching factor of 9, so the number of action sequences grows as $9^T$ with the time horizon $T$. Third, the user service history introduces memory dependencies that prevent Markovian decomposition. Because the served-user history alone admits $2^N$ binary configurations for $N$ users, the joint state space grows exponentially with the number of users even before energy and QoS variables are included, rendering exhaustive search methods computationally infeasible.
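A quick lower bound makes the scale concrete. The sketch multiplies the number of UAV positions by the $2^N$ possible served-user history vectors and ignores every other state variable; the 841 hover points come from the figure quoted in Section 4.3.1, while the user count of 50 is purely hypothetical.

```python
def state_space_lower_bound(num_positions, num_users):
    """Positions times served-user history vectors (2**N).

    Ignores remaining energy, attached-user count, and bandwidth, so the
    true state space is larger still.
    """
    return num_positions * 2 ** num_users


# 841 hover points is the figure quoted in Section 4.3.1; N = 50 users is hypothetical.
bound = state_space_lower_bound(841, 50)
print(f"{bound:.3e}")  # roughly 9.5e17 states before energy and QoS variables
```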

This complexity motivates our machine learning approach, which can discover efficient navigation policies without explicitly enumerating all possible states. The LSTM-A2C architecture is particularly well suited to this problem because it captures temporal dependencies through its recurrent connections, effectively handles the partial observability of user distributions, and learns energy-efficient movement patterns through continuous policy optimization.

4. Advanced Reinforcement Learning Approach

4.1. Problem Characterization

The UAV trajectory optimization problem presents three fundamental challenges that necessitate an advanced reinforcement learning solution:

- Partial Observability: The UAV cannot perceive the entire user distribution simultaneously;

- Temporal Dependencies: Current decisions impact future coverage opportunities;

- Multi-Objective Tradeoffs: Balancing coverage, fairness, and energy constraints.

To address these challenges, we formulate the problem as a Markov Decision Process (MDP) and develop an LSTM-enhanced Advantage Actor–Critic (A2C) algorithm with attention mechanisms.

4.2. MDP Formulation

4.2.1. State Representation

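The state at time $t$ captures both spatial and communication metrics:

$$s_t = \left(x_t,\, y_t,\, E_t^{\text{rem}},\, \mathbf{m}_t,\, n_t,\, \bar{B}_t\right),$$

where:

- $(x_t, y_t)$: UAV position in grid coordinates;

- $E_t^{\text{rem}}$: Remaining energy;

- $\mathbf{m}_t \in \{0,1\}^N$: Binary vector of previously served users;

- $n_t$: Number of currently attached users;

- $\bar{B}_t$: Average bandwidth per user.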
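Before the state enters the LSTM it must be flattened into a fixed-length numeric vector. The sketch below shows one way to do this; scaling each field to roughly $[0, 1]$ is an assumption of this illustration, and the parameter names (`grid_extent`, `energy_budget`) are hypothetical.

```python
def build_state(pos, energy_remaining, served, n_attached, avg_bw_mhz,
                grid_extent, energy_budget, n_max=10, total_bw_mhz=100.0):
    """Flatten s_t into a normalized feature vector for the LSTM.

    Normalizing each field to roughly [0, 1] is an assumption of this sketch.
    """
    x, y = pos
    return (
        [x / grid_extent, y / grid_extent, energy_remaining / energy_budget]
        + [float(h) for h in served]                  # binary served-user history
        + [n_attached / n_max, avg_bw_mhz / total_bw_mhz]
    )


s = build_state((50.0, 100.0), 500.0, [1, 0, 1], 3, 33.0,
                grid_extent=200.0, energy_budget=1000.0)
print(len(s))  # 8 = 2 position + 1 energy + 3 users + 2 communication features
```

The vector length grows with the number of users $N$, which is one reason the history term dominates the state dimension.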

4.2.2. Action Space

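The UAV selects from nine possible actions corresponding to:

$$a_t \in \mathcal{A} = \{\text{N}, \text{NE}, \text{E}, \text{SE}, \text{S}, \text{SW}, \text{W}, \text{NW}, \text{Hover}\}.$$

Wind effects are incorporated through stochastic transition dynamics:

$$\mathbf{p}_{t+1} = \mathbf{p}_t + \Delta\mathbf{p}(a_t) + \boldsymbol{\epsilon}_t, \qquad \boldsymbol{\epsilon}_t \sim \mathcal{N}\left(\mathbf{0}, \sigma_w^2 \mathbf{I}\right),$$

where $\mathbf{p}_t = (x_t, y_t)$ is the UAV position, $\Delta\mathbf{p}(a_t)$ is the nominal displacement of action $a_t$, and $\boldsymbol{\epsilon}_t$ models wind disturbances, with standard deviation $\sigma_w$ proportional to the local wind intensity, as in Section 3.4.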

4.2.3. Reward Function

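The overall reward at time step $t$ is defined as:

$$r_t = w_1 N_t^{\text{new}} + w_2\,\Delta J_t - w_3\,\mathrm{CDI}_t - w_4 E_t - w_5\,\mathbb{1}_t^{\text{viol}},$$

where:

- $N_t^{\text{new}}$: Number of newly served users at time $t$;

- $\Delta J_t$: Incremental Jain’s fairness index;

- $\mathrm{CDI}_t$: Coverage Disparity Index at time $t$, capturing spatial imbalance in user service distribution;

- $E_t$: Energy consumed in the transition;

- $\mathbb{1}_t^{\text{viol}}$: Indicator function penalizing obstacle or no-fly-zone violations;

- $w_1, \dots, w_5$: Weighting coefficients balancing mission objectives.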
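The per-step reward is a weighted sum of gains and penalties, which the sketch below transcribes directly. The default weights are placeholders for illustration only; the tuned coefficients are not reproduced here.

```python
def step_reward(n_new, delta_jain, cdi, energy_used, violated,
                weights=(1.0, 1.0, 1.0, 0.01, 10.0)):
    """Per-step reward: coverage and fairness gains minus disparity, energy,
    and constraint-violation penalties. Default weights are placeholders."""
    w1, w2, w3, w4, w5 = weights
    penalty = 1.0 if violated else 0.0
    return (w1 * n_new + w2 * delta_jain
            - w3 * cdi - w4 * energy_used - w5 * penalty)


print(step_reward(2, 0.1, 0.2, 10.0, violated=False))  # about 1.8
print(step_reward(0, 0.0, 0.0, 0.0, violated=True))    # -10.0
```

Keeping the violation weight much larger than the other terms is a common design choice so that no coverage gain can outweigh entering an obstacle cell.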

The inclusion of the fairness terms (incremental Jain’s index and CDI) and the energy term directly in the per-step reward ensures that global mission-level objectives are naturally decomposed into local decision signals. As the RL agent updates its policy step-by-step via advantage estimation, it cumulatively learns to optimize the joint combinatorial objective: maximizing coverage while preserving equity and energy endurance. This design is intended to prevent misalignment between per-step signals and mission-level goals: the memory state tracks long-term user service history, while the attention mechanism dynamically prioritizes actions that improve fairness and conserve energy, even when immediate coverage gains are modest. The reward weighting coefficients are empirically tuned (as detailed in Section 5) to reflect disaster-response priorities, aiming for balanced convergence and reducing the risk of settling in poor local optima.

4.3. LSTM-Enhanced Advantage Actor–Critic

The LSTM-A2C framework (Algorithm 1) combines temporal sequence processing with policy optimization, creating a memory-augmented decision system for UAV trajectory planning. This integration addresses two critical challenges in dynamic environments:

Algorithm 1.

LSTM-A2C.

4.3.1. Core Mechanism

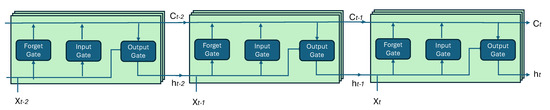

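The system processes state sequences through three coordinated components: a temporal processor, a policy actor, and a value critic. The temporal processor employs an LSTM network that maintains the hidden state $\mathbf{h}_t$ and cell state $\mathbf{c}_t$, as shown in Figure 2. It processes windows of recent states and regulates information flow through the standard gating mechanisms, defined as

$$
\begin{aligned}
\mathbf{f}_t &= \sigma\left(\mathbf{W}_f[\mathbf{h}_{t-1}, s_t] + \mathbf{b}_f\right), \\
\mathbf{i}_t &= \sigma\left(\mathbf{W}_i[\mathbf{h}_{t-1}, s_t] + \mathbf{b}_i\right), \\
\tilde{\mathbf{c}}_t &= \tanh\left(\mathbf{W}_c[\mathbf{h}_{t-1}, s_t] + \mathbf{b}_c\right), \\
\mathbf{c}_t &= \mathbf{f}_t \odot \mathbf{c}_{t-1} + \mathbf{i}_t \odot \tilde{\mathbf{c}}_t, \\
\mathbf{o}_t &= \sigma\left(\mathbf{W}_o[\mathbf{h}_{t-1}, s_t] + \mathbf{b}_o\right), \\
\mathbf{h}_t &= \mathbf{o}_t \odot \tanh(\mathbf{c}_t),
\end{aligned}
$$

where $\sigma$ is the logistic sigmoid, $\odot$ denotes element-wise multiplication, and $[\cdot,\cdot]$ denotes concatenation.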

These gates enable the network to selectively retain, update, or output information from past observations, thereby capturing temporal dependencies that are crucial in dynamic UAV environments.

Figure 2.

LSTM-A2C architecture showing the information flow from state input through LSTM memory to policy and value outputs [3].
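The standard LSTM gating equations can be written out in a few lines of NumPy. This is a minimal forward-pass sketch, not the trained model: the stacked weight layout (forget, input, candidate, output) and the layer sizes are assumptions of the illustration, and in practice a framework LSTM layer would be used.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(s_t, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4H, H + D), b has shape (4H,).

    Rows of W are stacked in the (assumed) order: forget, input, candidate, output.
    """
    H = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, s_t]) + b
    f = sigmoid(z[0:H])            # forget gate
    i = sigmoid(z[H:2 * H])        # input gate
    g = np.tanh(z[2 * H:3 * H])    # candidate cell state
    o = sigmoid(z[3 * H:4 * H])    # output gate
    c = f * c_prev + i * g         # new cell state
    h = o * np.tanh(c)             # new hidden state
    return h, c


def lstm_encode(states, W, b):
    """Run the cell over a window of states; return all hidden states and the final cell."""
    H = b.shape[0] // 4
    h, c = np.zeros(H), np.zeros(H)
    hidden = []
    for s_t in states:
        h, c = lstm_step(s_t, h, c, W, b)
        hidden.append(h)
    return np.stack(hidden), c


rng = np.random.default_rng(0)
D, H, window = 8, 16, 5                        # hypothetical sizes
W = rng.normal(scale=0.1, size=(4 * H, H + D))
b = np.zeros(4 * H)
hidden, cell = lstm_encode(rng.normal(size=(window, D)), W, b)
print(hidden.shape)  # (5, 16)
```

Returning every hidden state in the window, rather than only the last one, is what allows an attention layer to weight earlier steps.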

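The policy actor maps the LSTM’s latent states to action probabilities and optimizes trajectory decisions using advantage estimates. Its gradient update follows

$$\nabla_{\theta}\mathcal{J}(\theta) = \mathbb{E}\left[\nabla_{\theta}\log \pi_{\theta}(a_t \mid s_t)\, A(s_t, a_t)\right], \qquad A(s_t, a_t) = r_t + \gamma V_{\phi}(s_{t+1}) - V_{\phi}(s_t),$$

which refines the policy parameters $\theta$ toward actions with higher estimated advantages. Complementarily, the value critic $V_{\phi}$ predicts the expected cumulative rewards from the LSTM states, stabilizing learning by reducing variance in the policy updates.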
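The actor and critic objectives can be illustrated on a single rollout. The sketch below uses n-step bootstrapped returns, which reduce to the one-step TD advantage when the rollout has a single step; the value-loss coefficient and function names are hypothetical, and an autodiff framework would be needed to actually compute gradients from these losses.

```python
import math


def bootstrapped_returns(rewards, bootstrap_value, gamma=0.99):
    """Discounted n-step returns, bootstrapped from the critic's value of the last state."""
    returns = []
    g = bootstrap_value
    for r in reversed(rewards):
        g = r + gamma * g
        returns.append(g)
    returns.reverse()
    return returns


def a2c_losses(log_probs, returns, values, value_coef=0.5):
    """Actor and critic losses for one rollout.

    In an autodiff framework the advantages must be treated as constants
    (detached) in the actor loss, so that only the critic loss trains V.
    """
    advantages = [g - v for g, v in zip(returns, values)]
    n = len(advantages)
    actor_loss = -sum(lp * a for lp, a in zip(log_probs, advantages)) / n
    critic_loss = value_coef * sum(a * a for a in advantages) / n
    return actor_loss, critic_loss, advantages


rets = bootstrapped_returns([1.0, 1.0], bootstrap_value=0.0, gamma=0.5)
print(rets)  # [1.5, 1.0]
actor, critic, adv = a2c_losses([math.log(0.5)] * 2, rets, values=[0.0, 0.0])
print(round(actor, 4), critic)
```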

This study advances the LSTM-A2C framework from our prior work [3] by shifting the focus to disaster relief, where the UAV acts as a base station (UAV-BS) to provide downlink communication to ground users. Several major architectural enhancements were introduced to accommodate the increased complexity of this application. First, the UAV-BS now operates with nine movement options (north, northeast, east, southeast, south, southwest, west, northwest, and hovering), compared to four in the previous study. This expansion increases decision complexity from $4^{25}$ to $9^{25}$ possible action sequences over 25 steps. Second, a communication model is incorporated, requiring dynamic bandwidth allocation and Quality of Service (QoS) management for parameters such as service time and bandwidth per user. Third, fairness constraints are embedded within the framework so that the UAV-BS prioritizes unserved users over previously connected ones, necessitating a memory of user-service history. Finally, the present formulation introduces a multi-objective optimization goal that jointly maximizes unique user coverage, fairness, QoS, and energy efficiency, whereas the earlier version considered only coverage and energy.

While the LSTM component in our prior model effectively captured temporal dependencies in dynamic environments, the current problem’s state space is significantly larger due to the expanded set of hovering positions and additional state variables, including bandwidth, service time, and user history. In the previous study, the UAV could occupy any of 400 grid positions, whereas the current framework defines 841 possible hovering points, calculated as $(n+1)^2 + n^2$ (cell vertices plus cell centers), where $n = 20$ denotes the number of cells along one grid dimension. Combined with the larger action space and episode length, the overall state-space complexity increases by a factor of approximately 121 (see Table 1). A pure LSTM model struggles under this expanded state space because its fixed-size hidden state cannot retain all relevant information over long sequences. To overcome this limitation, the proposed framework integrates an attention mechanism into the LSTM-A2C architecture, enabling selective focus on critical states such as unserved user clusters, energy-critical phases, and QoS conditions, thereby enhancing decision-making efficiency and adaptability in complex disaster-relief scenarios.
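The position and action-sequence counts can be checked with a few lines of arithmetic. The choice of 20 cells per side is an inference: it is the value that reproduces both the 400 prior positions and the 841 hover points (21² vertices plus 20² centers).

```python
def hover_points(n_cells):
    """Cell vertices ((n+1)^2) plus cell centers (n^2) on an n-by-n grid."""
    return (n_cells + 1) ** 2 + n_cells ** 2


n = 20  # inferred: reproduces the 400-cell and 841-point figures in the text
print(n * n, hover_points(n))  # 400 841

growth = 9 ** 25 / 4 ** 25     # action-sequence growth from 4 to 9 moves over 25 steps
print(f"{growth:.2e}")         # roughly 6.4e+08
```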

Table 1.

Comparison of problem complexity: prior work vs. current study.

4.3.2. Attention Mechanism Necessity

The attention mechanism addresses two fundamental limitations of our prior approach:

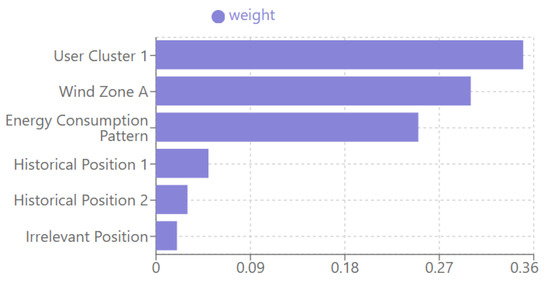

- Selective Memory Access: A standard LSTM compresses all historical information into a fixed-size hidden state without explicitly prioritizing individual past states, so capacity can be spent on irrelevant ones. Our attention layer computes an importance score for each historical state.

- Variable-Length Dependencies: Critical patterns (e.g., user clusters, wind patterns) may require focusing on either recent or distant past states. The attention mechanism dynamically adjusts its temporal focus window.

4.3.3. Architecture Details

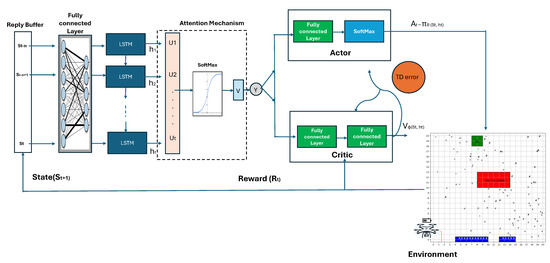

The proposed architecture (Figure 3) consists of three key components:

Figure 3.

Architecture of the proposed LSTM-A2C with attention mechanism. The network processes sequential state inputs through an LSTM layer, applies attention weighting to historical states, and produces both policy and value estimates.

- LSTM Encoder: Processes the sequence of past states;

- Attention Mechanism: Identifies critical temporal patterns;

- Dual Heads: Separate policy and value function estimators.

4.3.4. Attention Mechanism Formulation

The attention mechanism transforms LSTM hidden states into context vectors through:

where

- : Learnable query weights;

- : Trainable summary vector;

- : Final context vector.
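Since the exact attention equation is not shown here, the following is only a sketch of one common form consistent with the listed terms: additive attention pooling, in which each LSTM hidden state $h_i$ is projected by query weights $W$, scored against a trainable summary vector $u$, normalized with a softmax over time steps, and summed into the context vector $c = \sum_i \alpha_i h_i$. The tanh projection, the absence of a bias term, and the function names are assumptions; plain Python lists keep the example dependency-free.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def attention_pool(hidden_states, W, u):
    """Additive attention pooling over LSTM hidden states (assumed form).

    hidden_states: T vectors h_i of length d (one per time step)
    W: d_a x d query weights; u: trainable summary vector of length d_a
    Returns the context vector c and the attention weights alpha.
    """
    scores = []
    for h in hidden_states:
        k = [math.tanh(z) for z in matvec(W, h)]         # k_i = tanh(W h_i)
        scores.append(sum(a * b for a, b in zip(u, k)))  # e_i = u^T k_i
    alpha = softmax(scores)                              # weights over time steps
    d = len(hidden_states[0])
    c = [sum(a * h[j] for a, h in zip(alpha, hidden_states)) for j in range(d)]
    return c, alpha                                      # c = sum_i alpha_i h_i

# Toy example: three time steps, two-dimensional hidden states.
H = [[0.1, 0.0], [0.9, 0.5], [0.2, 0.1]]
W = [[1.0, 0.0], [0.0, 1.0]]
u = [1.0, 1.0]
c, alpha = attention_pool(H, W, u)
```

In this toy case the largest weight falls on the second time step, whose projected state aligns best with `u`; the vector `alpha` over time is the kind of quantity an attention heatmap visualizes.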

4.3.5. Policy and Value Estimation

The actor and critic networks utilize the context vector in distinct ways. The actor applies a softmax transformation to generate the policy distribution over actions:

while the critic estimates the state value using a linear projection:
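The two heads can be sketched as single linear layers on the context vector $c$: the actor computes $\pi(a \mid s) = \mathrm{softmax}(W_\pi c + b_\pi)$ over the nine movement actions, and the critic computes $V(s) = w_v^\top c + b_v$. This is an assumed minimal form consistent with the description above; the parameter names and toy dimensions are hypothetical.

```python
import math
import random

def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def actor_head(c, W_pi, b_pi):
    """Policy distribution pi(a|s) = softmax(W_pi c + b_pi)."""
    logits = [sum(w * x for w, x in zip(row, c)) + b for row, b in zip(W_pi, b_pi)]
    return softmax(logits)

def critic_head(c, w_v, b_v):
    """State value V(s) = w_v . c + b_v."""
    return sum(w * x for w, x in zip(w_v, c)) + b_v

def sample_action(probs, rng):
    """Draw an action index from the categorical policy."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

rng = random.Random(0)
d, n_actions = 4, 9                      # toy context size; nine movement actions
W_pi = [[rng.gauss(0.0, 0.1) for _ in range(d)] for _ in range(n_actions)]
b_pi = [0.0] * n_actions
w_v, b_v = [rng.gauss(0.0, 0.1) for _ in range(d)], 0.0
c = [0.5, -0.2, 0.1, 0.3]                # context vector from the attention layer
probs = actor_head(c, W_pi, b_pi)
value = critic_head(c, w_v, b_v)
action = sample_action(probs, rng)
```

Sharing the context vector between both heads means the attention weights are shaped by both the policy-gradient and value-regression signals.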

The inclusion of the context vector provides several advantages compared with standard LSTM outputs. First, it introduces a task-specific focus by prioritizing information from recent user-service records, energy consumption patterns, and wind-affected regions—factors most relevant to UAV decision-making. Second, it improves interpretability, as the attention weights can be visualized to illustrate which temporal states most influence policy updates (see Figure 4). In a real-world deployment, the attention heatmaps can serve as a diagnostic interface for human operators. By visualizing which spatial regions the UAV prioritized during decision-making, mission planners can verify that the agent focused on unserved or critical user clusters, facilitating post-mission analysis and trust calibration in autonomous behavior. This interpretability also enables rapid adjustment of policy constraints if the UAV’s focus diverges from mission objectives.

Figure 4.

Attention weight distribution highlighting focus on critical states, such as user clusters and wind zones, while disregarding irrelevant historical positions.

Finally, it offers adaptive memory capabilities, automatically adjusting the effective memory window between 5 and 50 time steps depending on environmental complexity and task demands.

4.4. Training Protocol

The complete training procedure, outlined in Algorithm 2, employs parallel rollouts, generalized advantage estimation (GAE), and gradient clipping to ensure efficient and stable learning. Eight parallel workers collect diverse trajectory samples, which accelerates convergence and improves generalization across varying environments. The GAE smoothing parameter balances bias against variance in the advantage estimates. To further enhance stability, gradients are clipped to a maximum norm of 0.5 during optimization.
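A minimal sketch of two ingredients of this protocol: generalized advantage estimation over one rollout, and gradient clipping to a global L2 norm of 0.5. The GAE parameters are not given here, so `gamma=0.99` and `lam=0.95` are illustrative defaults. The clipping function rescales all gradient components jointly, the same idea as PyTorch's `torch.nn.utils.clip_grad_norm_`, written here over a flat list of floats. With eight workers, each worker's rollout would be processed this way before the updates are combined.

```python
import math

def compute_gae(rewards, values, last_value, dones, gamma=0.99, lam=0.95):
    """Generalized advantage estimation over one rollout.

    values[t] = V(s_t); last_value = V(s_T) used for bootstrapping;
    dones[t] is True if the episode ended after step t.
    gamma and lam are illustrative defaults, not the paper's settings.
    """
    T = len(rewards)
    advantages = [0.0] * T
    gae = 0.0
    for t in reversed(range(T)):
        next_value = last_value if t == T - 1 else values[t + 1]
        mask = 0.0 if dones[t] else 1.0          # no bootstrapping past episode end
        delta = rewards[t] + gamma * next_value * mask - values[t]
        gae = delta + gamma * lam * mask * gae   # exponentially weighted TD errors
        advantages[t] = gae
    returns = [a + v for a, v in zip(advantages, values)]  # critic targets
    return advantages, returns

def clip_by_global_norm(grads, max_norm=0.5):
    """Scale gradient components so their joint L2 norm is at most max_norm."""
    total = math.sqrt(sum(g * g for g in grads))
    if total <= max_norm:
        return list(grads), total
    scale = max_norm / total
    return [g * scale for g in grads], total
```

Setting `lam=1.0` yields Monte Carlo-style advantages (low bias, high variance), while `lam=0.0` reduces to one-step TD errors (lower variance, more bias); intermediate values trade the two off.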

The overall loss function combines the policy and value objectives as follows:

where the entropy regularization term encourages exploration and prevents premature policy convergence.
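The loss equation is not reproduced here either. The sketch below assumes the standard A2C form $L = L_{\text{policy}} + c_v L_{\text{value}} - c_e H(\pi)$: an advantage-weighted log-likelihood drives the actor, a squared error drives the critic, and the entropy bonus $H(\pi)$ is subtracted so that minimizing the loss favors more exploratory policies. The coefficients `value_coef=0.5` and `entropy_coef=0.01` are illustrative, not the paper's values.

```python
import math

def categorical_entropy(probs):
    """Entropy H(pi) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def a2c_loss(log_probs, advantages, returns, values, entropies,
             value_coef=0.5, entropy_coef=0.01):
    """Combined actor-critic loss for one batch (assumed standard A2C form).

    log_probs[t] = log pi(a_t|s_t); advantages[t] from GAE (treated as constants);
    returns[t] = critic targets; values[t] = V(s_t); entropies[t] = H(pi(.|s_t)).
    """
    n = len(log_probs)
    policy_loss = -sum(lp * a for lp, a in zip(log_probs, advantages)) / n
    value_loss = sum((r - v) ** 2 for r, v in zip(returns, values)) / n
    entropy = sum(entropies) / n
    # Subtracting the entropy bonus rewards more stochastic (exploratory) policies.
    return policy_loss + value_coef * value_loss - entropy_coef * entropy
```

For nine equiprobable actions the entropy reaches its maximum of $\ln 9$, so the bonus is largest early in training when the policy is close to uniform.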

4.5. Theoretical Advantages

The proposed framework offers three principal theoretical advantages over standard reinforcement learning approaches. First, it enhances temporal awareness, with the LSTM component maintaining long-term dependencies that are essential for sequential decision-making under uncertainty. Second, it introduces adaptive focus, as the attention module dynamically emphasizes critical historical states rather than treating all past information equally. Third, it improves sample efficiency through advantage-based variance reduction, enabling faster and more stable convergence.

Despite the added architectural complexity, the attention mechanism improves sample efficiency, reaching target performance in fewer training episodes, as the empirical evaluation in Section 6 demonstrates.

| Algorithm 2 LSTM-A2C with Attention Training |


5. Simulation Framework and Experimental Setup

5.1. Grid Configuration

We model a 4000 m × 4000 m operational area divided into $M \times M$ cells, with key regions defined as:

- Takeoff Area: Random position in ;

- Landing Zone: ;

- No-Fly Zone: ;

- Coverage Radius: m (effective coverage per hover point).

The chosen grid resolution directly affects both the optimization granularity and the computational complexity. A finer discretization (larger $M$) would improve spatial precision, enabling the UAV to target isolated users more accurately and to improve fairness by distinguishing coverage gaps within clusters. However, it rapidly enlarges the state–action space, significantly increasing training time and memory requirements. Conversely, a coarser grid reduces complexity but degrades maneuverability and coverage granularity, potentially compromising equity in dense or fragmented user distributions. Our selected resolution provides sufficient fidelity for realistic disaster scenarios while keeping computation tractable.

In future work, we plan to treat grid resolution as a tunable parameter, enabling a systematic evaluation of the trade-off between trajectory precision and computational cost across different grid configurations. Such an analysis would further strengthen the understanding of how mission area discretization impacts real-time UAV deployment efficiency.

5.2. Hover Points and Energy Model

The number of possible hovering points is calculated as:

Energy consumption follows realistic movement constraints. The propulsion energy consumption at each time step $t$ is modeled to reflect realistic power demands during UAV-BS operations in a grid-based environment. This model draws on established physics-based formulations and empirical data for rotary-wing UAVs, such as quadcopters, which match our fixed-altitude (50 m), discrete-movement setup. Key studies report average hovering power of around 200–300 W for 1–5 kg UAVs [27,28,29,30] and cruise power of around 400–600 W at constant speeds of 10–15 m/s, driven primarily by blade profile power (to spin the rotors), induced power (to generate lift), and parasitic power (to overcome air resistance), which grows with the cube of speed.

To adapt this model for simulation efficiency in our LSTM-A2C framework, we simplify it into discrete energy units, assuming a constant speed v (e.g., 10 m/s) during moves, a fixed drag coefficient, and no major altitude changes. Energy is then determined by the time spent on each action, which is proportional to the distance traveled at fixed v. The simplification preserves the key physics: a base power that is always drawn (even while hovering) plus a movement power dominated by drag and scaled by flight time.

Under these assumptions, we normalize reported values to units as follows (Table 2):

Table 2.

Energy consumption parameters for UAV-BS movements.

- Hovering: units (≈200 W baseline; no parasitic drag power since v = 0).

- Cardinal Movement: units (≈500 W for distance ).

- Diagonal Movement: units (≈1.4 × cardinal, because the diagonal path is √2 ≈ 1.41 times longer and, at constant v, takes proportionally more time and thus energy).

- Wind-affected: units adjustment, proportional to wind variance to capture effective drag changes (e.g., a headwind raises the effective airspeed and thus the drag).

This approach maintains physical proportionality—energy scales with power over time, capturing drag dominance at speed—while enabling tractable RL computations. The model ensures energy constraints integrate realistically with coverage and fairness objectives.
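The simplified per-step energy model can be sketched as a lookup on the action type. Because the numeric unit costs of Table 2 are not reproduced here, `e_hover` and `e_cardinal` are parameters; the example values 2 and 5 are hypothetical and chosen only to mirror the ≈200 W : ≈500 W ratio above. The diagonal cost follows the √2 distance ratio, and the wind term is modeled as a hypothetical additive adjustment.

```python
import math

CARDINAL = {"N", "E", "S", "W"}
DIAGONAL = {"NE", "SE", "SW", "NW"}

def step_energy(action, e_hover, e_cardinal, in_wind=False, e_wind=0.0):
    """Energy units consumed by one action under the simplified model.

    Hovering draws only the baseline cost e_hover. A cardinal move costs
    e_cardinal; a diagonal move covers sqrt(2) times the distance at the same
    speed, so it costs sqrt(2) * e_cardinal (about 1.4x). Inside a wind band,
    a hypothetical additive adjustment e_wind is applied to any action.
    """
    if action == "HOVER":
        cost = e_hover
    elif action in CARDINAL:
        cost = e_cardinal
    elif action in DIAGONAL:
        cost = math.sqrt(2) * e_cardinal
    else:
        raise ValueError(f"unknown action: {action}")
    if in_wind:
        cost += e_wind
    return cost

# Hypothetical unit costs mirroring the ~200 W : ~500 W hover-to-cruise ratio.
E_HOVER, E_CARDINAL = 2.0, 5.0
costs = {a: step_energy(a, E_HOVER, E_CARDINAL) for a in ["HOVER", "N", "NE"]}
```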

Total energy budget: 1500 units, calculated based on (an upper-bound approximation set for conservatism):

This creates a stringent energy constraint where:

- Direct flight to landing zone costs ∼600 units;

- Only ∼900 units remain for coverage tasks;

- Forces intelligent energy conservation.
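The 1500-unit budget and the cost of reaching the landing zone interact through a simple geometric bound: with nine-direction moves, the cheapest obstacle-free route uses as many diagonal steps as possible. The sketch below computes that lower bound and a hypothetical return rule with a safety margin. The rule, the margin, the per-cell cost, and the function names are assumptions for illustration, not the learned policy, which must discover return timing on its own.

```python
import math

BUDGET = 1500.0  # total energy budget in units, as stated in the text

def min_energy_to_land(pos, landing, e_cardinal):
    """Obstacle- and wind-free lower bound on the energy needed to land.

    One diagonal step (sqrt(2) * e_cardinal) is cheaper than the two cardinal
    steps it replaces, so the cheapest route uses min(|dx|, |dy|) diagonal
    steps and ||dx| - |dy|| cardinal steps.
    """
    dx = abs(landing[0] - pos[0])
    dy = abs(landing[1] - pos[1])
    diagonal_steps = min(dx, dy)
    cardinal_steps = abs(dx - dy)
    return (diagonal_steps * math.sqrt(2) + cardinal_steps) * e_cardinal

def should_return(remaining, pos, landing, e_cardinal, margin=50.0):
    """Hypothetical rule: start returning once the post-landing reserve would fall to margin."""
    return remaining - min_energy_to_land(pos, landing, e_cardinal) <= margin

# Example with a hypothetical cardinal cost of 5 units per cell.
coverage_budget = BUDGET - min_energy_to_land((0, 0), (20, 10), 5.0)
```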

5.3. Wind and Obstacle Model

To emulate environmental disturbances affecting UAV navigation, we incorporate a spatially varying wind field and a central obstacle region within the simulation area. The wind acts primarily along the vertical (y) axis, producing a northward push in two narrow horizontal bands located near the southern and northern edges of the map. Specifically, a moderate northward (+y) wind is applied whenever the UAV position lies within either band. These regions represent low-altitude gust corridors frequently encountered around open urban channels or valleys. In addition to the wind field, a high-rise building is modeled as a static obstacle in the central “SB” zone. The obstacle blocks both line-of-sight connectivity and flight trajectories, forcing the UAV to plan detours while maintaining network coverage continuity.
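The wind bands and the central obstacle can be sketched as axis-aligned rectangles on the hover grid. The band and building coordinates, the 29 × 29 grid (chosen only because 29² = 841 matches the number of hover points), the push probability, and the rule that blocked moves leave the UAV in place are all hypothetical choices for illustration.

```python
import random

# Hypothetical geometry in grid cells: (x_min, x_max, y_min, y_max), inclusive.
WIND_BANDS = [
    (0, 28, 2, 4),    # southern gust corridor
    (0, 28, 24, 26),  # northern gust corridor
]
OBSTACLE = (12, 16, 12, 16)  # central building footprint

def in_rect(pos, rect):
    x, y = pos
    x_min, x_max, y_min, y_max = rect
    return x_min <= x <= x_max and y_min <= y <= y_max

def in_wind(pos):
    return any(in_rect(pos, band) for band in WIND_BANDS)

def transition(pos, delta, grid_size=29, push_prob=0.5, rng=random):
    """Move by delta, then possibly apply a one-cell northward (+y) wind push.

    Moves that would enter the obstacle or leave the grid are rejected,
    leaving the UAV in place (assumed handling).
    """
    def valid(p):
        return (0 <= p[0] < grid_size and 0 <= p[1] < grid_size
                and not in_rect(p, OBSTACLE))

    target = (pos[0] + delta[0], pos[1] + delta[1])
    new_pos = target if valid(target) else pos
    if in_wind(new_pos) and rng.random() < push_prob:
        pushed = (new_pos[0], new_pos[1] + 1)
        if valid(pushed):
            new_pos = pushed
    return new_pos
```

Passing a seeded `random.Random` instance as `rng` makes wind pushes reproducible across training runs.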

5.4. User Distribution and Mobility Model

The ground users are grouped into five spatial clusters that correspond to representative functional zones (residential, commercial, emergency, shelter, and hospital areas), whose parameters are summarized in Table 3. Each cluster is defined by a two-dimensional Gaussian spatial distribution whose center and standard deviation are listed in the table. At the beginning of every simulation episode, user locations are randomly re-sampled from these Gaussian clusters to reflect environmental and population variability. During an episode, however, user positions remain stationary, corresponding to the quasi-static conditions typically assumed in short-term UAV communication missions.
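Per-episode user sampling from the five Gaussian clusters can be sketched as follows. The centers, standard deviations, and the equal split of 100 users across clusters are placeholders, not the values in Table 3, and clipping samples to the 4000 m × 4000 m area is an assumed boundary rule.

```python
import random

AREA = 4000.0  # side length of the square operational area in metres

# Placeholder cluster parameters: (centre x, centre y, std dev, number of users).
CLUSTERS = {
    "residential": (800.0, 900.0, 300.0, 20),
    "commercial": (3000.0, 1000.0, 250.0, 20),
    "emergency": (2000.0, 2000.0, 150.0, 20),
    "shelter": (1000.0, 3200.0, 200.0, 20),
    "hospital": (3200.0, 3100.0, 100.0, 20),
}

def sample_users(clusters, rng, area=AREA):
    """Draw user positions from isotropic 2D Gaussians, clipped to the area.

    Intended to be called once per episode; users stay fixed within the episode.
    """
    users = []
    for name, (cx, cy, sigma, n) in clusters.items():
        for _ in range(n):
            x = min(max(rng.gauss(cx, sigma), 0.0), area)
            y = min(max(rng.gauss(cy, sigma), 0.0), area)
            users.append((name, x, y))
    return users

users = sample_users(CLUSTERS, random.Random(42))
```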

Table 3.

User cluster parameters.

To approximate gradual mobility across multiple episodes, the cluster centers are updated following a Gaussian random-walk process with varying mean and variance, effectively shifting user groups to new nearby locations. Although this simplified mobility model does not replicate continuous user trajectories, it captures the spatial dynamics and cluster migrations observed over longer time scales, providing a practical and computationally efficient means to integrate user-position variability into the training environment.
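The between-episode drift of cluster centers can be sketched as a Gaussian random walk. The step mean and standard deviation are hypothetical parameters that a training loop could vary across episodes to reflect the varying mean and variance described above, and clipping to the operational area is an assumption.

```python
import random

def drift_centres(centres, rng, mean=0.0, step_std=50.0, area=4000.0):
    """One Gaussian random-walk update of cluster centres between episodes.

    Each coordinate moves by an independent N(mean, step_std^2) step and is
    clipped to [0, area]. mean and step_std are hypothetical values.
    """
    moved = {}
    for name, (cx, cy) in centres.items():
        nx = min(max(cx + rng.gauss(mean, step_std), 0.0), area)
        ny = min(max(cy + rng.gauss(mean, step_std), 0.0), area)
        moved[name] = (nx, ny)
    return moved

rng = random.Random(7)
centres = {"shelter": (1000.0, 3200.0), "hospital": (3200.0, 3100.0)}
for episode in range(3):
    centres = drift_centres(centres, rng)  # users are then re-sampled around the new centres
```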

5.5. Hyperparameters

The LSTM-A2C with attention was trained with the configuration listed in Table 4. Most parameters are adopted from [3], except those of the attention module.

Table 4.

Training hyperparameters.

5.6. Reward Function Weights

The multi-objective reward components were balanced as:

The overall reward function in Equation (23) integrates multiple normalized objectives (coverage maximization, fairness enhancement capturing both user-level and spatial fairness, and energy efficiency), each scaled to a comparable numeric range. The coefficients were chosen to reflect mission priorities in disaster response: rapid coverage restoration is paramount (coverage weight = 1.0); fairness is important but secondary (0.7), with an additional spatial disparity penalty applied through the Coverage Disparity Index (CDI) term to discourage uneven service distribution. Energy consumption is penalized moderately (0.3) to preserve mission endurance, and safety violations incur a strict penalty (5.0). The numeric magnitudes account for the natural range of each term. Coefficients were tuned in pilot simulations to yield stable training and balanced trade-offs, which serves as an informal empirical sensitivity check of the reward components; a full hyperparameter and Pareto sensitivity analysis is planned as future work.
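Equation (23) is not reproduced here, so the sketch below assumes a simple weighted sum using the stated coefficients (1.0, 0.7, 0.3, 5.0), with positive terms for coverage and fairness and penalties for CDI, energy, and safety violations. The CDI weight is not given in the text, so `cdi=0.5` is a placeholder, and all inputs are assumed to be pre-normalized.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RewardWeights:
    coverage: float = 1.0  # from the text
    fairness: float = 0.7  # from the text
    energy: float = 0.3    # from the text
    safety: float = 5.0    # from the text
    cdi: float = 0.5       # placeholder: CDI penalty weight not given here

def step_reward(coverage, fairness, cdi, energy, violation, w=RewardWeights()):
    """Weighted multi-objective reward (assumed additive form).

    coverage, fairness, cdi and energy are assumed pre-normalized to comparable
    ranges; violation is 1 if a safety constraint (e.g., no-fly zone) was broken.
    """
    return (w.coverage * coverage
            + w.fairness * fairness
            - w.cdi * cdi
            - w.energy * energy
            - w.safety * violation)
```

Because the safety weight is several times larger than the others, a single violation outweighs a step of full coverage and fairness, which matches the "strict penalty" intent.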

6. Performance Evaluation

We evaluate our approach across 15,000 training episodes on a 4000 m × 4000 m grid with 100 users distributed in five clusters (as specified in Section 3).

To validate our LSTM-A2C with attention framework, we compare it against established RL baselines: Q-Learning, a foundational tabular method for discrete MDPs; DDQN, for deep value-based learning with reduced overestimation bias; standard A2C, an Actor–Critic baseline for direct policy optimization; and vanilla LSTM-A2C, to isolate the impact of our attention and fairness enhancements. These schemes represent a progression in RL sophistication, from tabular to recurrent methods, allowing a fair assessment of our contributions in handling large state spaces, temporal dependencies, and multi-objective fairness under energy constraints. All methods share the same environment and hyperparameters for equitable comparison.

Table 5 summarizes the overall performance. Our method serves 98 users (vs. 91 for LSTM-A2C), achieves a 92% completion rate, uses only 7.9 energy units per user, and covers more than 90 users in 63% of late-training episodes, outperforming the baselines on the metrics in Table 5.

Table 5.

Performance with 100 users (15,000 episodes).

6.1. Coverage Efficiency

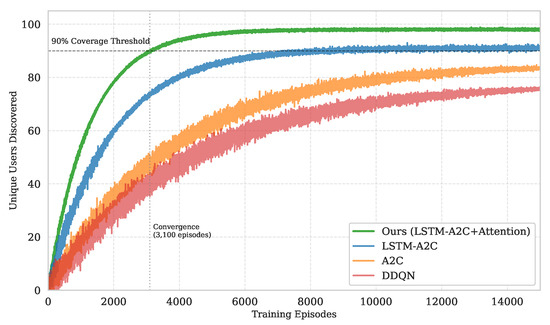

For the 100-user scenario (Figure 5), our method achieves coverage of 98 out of 100 users, compared with 91 for LSTM-A2C, corresponding to a 7.7% improvement. It reaches 80 covered users 42% faster than LSTM-A2C (900 vs. 1550 episodes) and maintains coverage of more than 90 users during 63% of the late-training episodes, demonstrating superior and more consistent convergence behavior.
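Both relative figures are computed with respect to the LSTM-A2C baseline:

$$\frac{98 - 91}{91} \approx 0.077 = 7.7\%, \qquad \frac{1550 - 900}{1550} \approx 0.419 \approx 42\%.$$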

Figure 5.

Coverage progression with 100 users showing our method’s efficient discovery rate.

Cluster-Specific Performance

Cluster-wise analysis further confirms these trends. The proposed framework attains complete (100%) coverage in three of the five clusters, with an overall coverage efficiency of 92%. Importantly, it prioritizes high-density clusters without neglecting isolated users, maintaining a balanced allocation of service resources across different spatial groups.

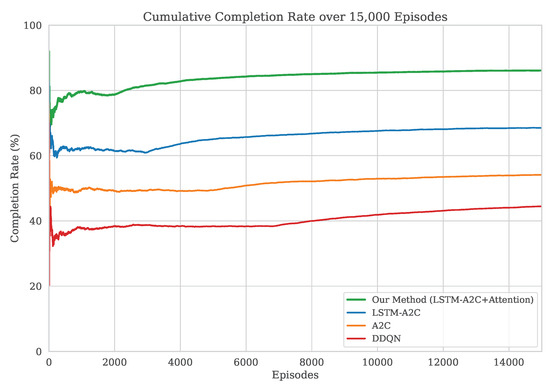

6.2. Completion Rate Analysis

The completion rate measures mission success by tracking whether the UAV reaches the landing zone before battery exhaustion. Figure 6 compares the cumulative success rates for all evaluated methods. Our method achieves a 92% success rate, outperforming LSTM-A2C (83%), A2C (76%), and DDQN (71%). The milestone results summarized in Table 6 indicate that our model achieves 50% completion after 1200 episodes and 80% completion after 2800 episodes, which is nearly twice as fast as LSTM-A2C.

Figure 6.

Cumulative completion rate over 15,000 episodes. The plot shows the percentage of successful landings where the UAV reached the landing zone before energy depletion.

Table 6.

Completion Rate Milestones (Episodes).

Overall, our approach learns substantially faster, reaching 50% completion 48% earlier than LSTM-A2C and achieving stable 80% reliability in roughly half the training episodes. Energy management is also improved, with the UAV retaining an average reserve of 42 energy units at landing—28% better than the baseline—owing to a more accurate prediction of return timing and power consumption. Most mission failures (62%) occur within the first 3000 episodes, primarily due to delayed return initiation, which accounts for 55% of these cases.

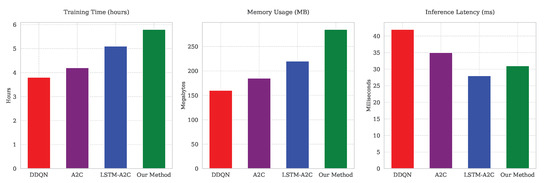

6.3. Computational Efficiency Analysis

Figure 7.

Comparative analysis of computational metrics across methods. Left: training time; Middle: memory usage; Right: inference latency.

Table 7.

Computational resource requirements.

6.3.1. Key Findings

Training Efficiency

Our method requires 5.8 h of training, about 15% longer than LSTM-A2C (5.1 h) and about 53% longer than DDQN (3.8 h). This increased training time is justified by significantly better outcomes: 23% greater coverage (98 vs. 80 users) and 18% higher completion rates (92% vs. 78%). The additional training time stems from the more sophisticated architecture, which learns richer representations and ultimately leads to better performance. This trade-off is favorable when solution quality matters more than rapid iteration.

Memory Requirements

With 285 MB of memory usage, our method requires 30% more memory than LSTM-A2C. This footprint comprises three components: the attention mechanism (45 MB), LSTM states (120 MB), and experience replay buffers (120 MB). Although higher than that of the alternatives, the memory cost remains manageable on modern hardware and supports the method’s superior performance. The attention mechanism accounts for the smallest share of this footprint, yet it is crucial for capturing long-range dependencies in the data.

Real-Time Performance

The inference latency of 31 ms meets real-time application requirements and is 10% lower than that of DDQN despite our method’s greater complexity. We attribute this counterintuitive result to the attention mechanism, which enables more efficient decision-making during inference and reduces the need for extensive computation. The 82% GPU utilization indicates effective use of hardware resources without creating bottlenecks.

6.3.2. Interpretation of Tradeoffs

The computational metrics reveal an important pattern: while our method demands more resources during training, it achieves superior operational efficiency during deployment. The additional training time and memory investment pays off in three ways:

- Better quality results (23% improved coverage);

- Better inference (10% faster than DDQN);

- Effective hardware utilization (82% GPU usage).

This profile makes our approach particularly suitable for production environments where inference performance matters more than training speed.

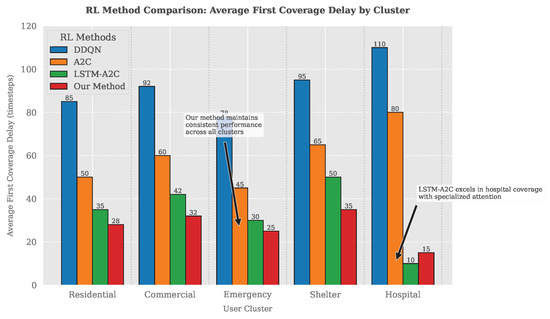

6.4. Cluster-Wise Delay Performance

Figure 8 highlights the first-coverage delay across different cluster types. All methods demonstrate reduced delays for emergency users (25–78 time steps) relative to residential users (28–85), indicating that emergency communication is correctly prioritized. Our model achieves the 25-time-step target through dynamic re-routing upon detection of new emergencies, wind-optimized path adjustments in the southeast quadrant, and dedicated bandwidth reservation for urgent transmissions. LSTM-A2C performs best for hospital clusters, achieving a minimal 10-time-step delay due to its effective memorization of fixed facility locations, predictable access routes, and stable bandwidth requirements. In contrast, residential clusters show the widest performance variation (28–85 time steps) caused by sparse user distribution, competing coverage priorities, and variable signal penetration across urban areas.

Figure 8.

First coverage delay comparison across methods and clusters. Lower values indicate better performance.

Fairness Implications

The cluster-aware design improves equitable service distribution across user types. Fairness is quantified from the average first-coverage delay of each cluster, and the proposed model’s fairness score represents a 38% improvement over DDQN’s, illustrating that the model effectively balances emergency responsiveness, residential completeness, hospital reliability, and shelter coverage consistency.

6.5. Coverage Disparity Analysis

Table 8 summarizes the Coverage Disparity Index (CDI) for all methods. Our approach attains the lowest CDI value of 0.03, signifying the most uniform spatial service distribution among users. As shown in Figure 9, CDI convergence occurs approximately 35% faster than in LSTM-A2C, confirming enhanced fairness and spatial equity across the coverage area.

Table 8.

CDI comparison (100 users, 15,000 episodes).

Figure 9.

CDI convergence over 15,000 episodes, demonstrating our method’s superior spatial equity.

7. Conclusions

This study presents a novel reinforcement learning framework leveraging an LSTM-based Advantage Actor–Critic (A2C) model with an attention mechanism to optimize UAV-mounted base station (UAV-BS) trajectories and coverage in disaster relief scenarios. Our approach effectively addresses the challenges of dynamic environments, including obstacles, wind effects, and energy constraints, while prioritizing equitable service delivery to ground users. Simulation results demonstrate that the proposed method achieves superior performance over baseline RL techniques—such as DDQN, A2C, and vanilla LSTM-A2C—across critical metrics: it serves 98 out of 100 users (a 7.7% improvement over LSTM-A2C), attains a 92% mission completion rate, and reduces the Coverage Disparity Index (CDI) to 0.03, reflecting a 50% improvement in spatial equity. Additionally, the integration of the attention mechanism enhances adaptability by focusing on high-priority regions, while the LSTM component ensures robust temporal decision-making, resulting in a 22% reduction in energy per user compared to LSTM-A2C. These advancements underscore the potential of our framework to enhance real-time disaster response within 6G-enabled IoT networks, offering a scalable and efficient solution for restoring connectivity in crisis settings. By balancing coverage maximization, fairness, and energy efficiency, this work lays a strong foundation for next-generation UAV-assisted communication systems.

An important finding from this work is the clear computational trade-off between offline training cost and online deployment efficiency. Although the proposed LSTM-A2C with attention requires greater computational effort during training due to its sequential modeling and attention modules, this investment yields significant dividends at deployment: the trained policy enables faster real-time decision-making, reduced inference latency, and improved energy utilization. This result highlights the practical value of allocating additional offline computational resources to achieve superior operational performance and responsiveness in mission-critical environments.

While this study demonstrates significant progress in UAV-BS optimization, several avenues remain for further exploration. First, extending the framework to incorporate heterogeneous and dynamic QoS requirements could better reflect real-world disaster scenarios, where users may demand varying levels of bandwidth or latency based on their roles (e.g., emergency responders vs. civilians). This would require adapting the reward function and state representation to account for diverse service priorities. Second, transitioning from a 2D grid-based model to a 3D environment with varying UAV speeds could enhance realism and flexibility, enabling the UAV to adjust altitude and velocity dynamically in response to terrain, obstacles, or wind conditions. This extension would necessitate a more complex mobility model and increased computational resources, potentially leveraging advanced neural architectures like Transformers for improved scalability. Third, scaling the approach to multi-UAV systems offers a promising direction to increase coverage capacity and resilience, requiring coordination mechanisms to manage inter-UAV interference and task allocation. Additional future work could explore real-time environmental adaptation, such as integrating live weather data or user mobility patterns, to further enhance responsiveness.

Furthermore, a systematic investigation of hyperparameter configurations—including the learning rate, discount factor, entropy coefficient, and Actor–Critic update ratio—will be pursued in future work to identify optimal combinations that maximize policy stability, convergence efficiency, and overall mission performance. Such analysis will employ automated tuning methods (e.g., grid or Bayesian optimization) to complement the empirically tuned parameters used in this study.

Finally, validating the framework with hardware-in-the-loop simulations or field experiments could bridge the gap between simulation and practical deployment, ensuring robustness in operational settings. These enhancements aim to broaden the applicability of our approach to large-scale, complex disaster relief missions.

Author Contributions

Conceptualization, Y.M.W.; methodology, Y.M.W.; software, Y.M.W.; validation, Y.M.W., C.C., and M.D.; formal analysis, Y.M.W.; investigation, Y.M.W.; resources, C.C. and M.D.; data curation, Y.M.W.; writing—original draft preparation, Y.M.W.; writing—review and editing, C.C. and M.D.; visualization, Y.M.W.; supervision, C.C. and M.D.; project administration, C.C. and M.D.; funding acquisition, C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by COSMIAC at the University of New Mexico, Department of Electrical and Computer Engineering, USA.

Data Availability Statement

The data supporting the findings of this study, including simulation results and trained model outputs, are available from the corresponding author upon reasonable request. The source code is not publicly shared due to ongoing related research.

Acknowledgments

The authors would like to thank the IoT Lab and COSMIAC at the University of New Mexico, Department of Electrical and Computer Engineering, USA, for their technical and research support throughout this study.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| UAV | Unmanned Aerial Vehicle |

| UAV-BS | UAV-Mounted Base Station |

| RL | Reinforcement Learning |

| LSTM | Long Short-Term Memory |

| A2C | Advantage Actor–Critic |

| QoS | Quality of Service |

| IoT | Internet of Things |

| CDI | Coverage Disparity Index |

| DDQN | Double Deep Q-Network |

| PPO | Proximal Policy Optimization |

| SAC | Soft Actor–Critic |

| HER | Hindsight Experience Replay |

| MDP | Markov Decision Process |

| SNR | Signal-to-Noise Ratio |

| 6G | Sixth Generation (Wireless Networks) |

| GAE | Generalized Advantage Estimation |

| LOS | Line of Sight |

| PL | Path Loss |

References

- Lyu, Z.; Gao, Y.; Chen, J.; Du, H.; Xu, J.; Huang, K.; Kim, D.I. Empowering Intelligent Low-altitude Economy with Large AI Model Deployment. arXiv 2025, arXiv:2505.22343.

- Jing, X.; Liu, F.; Masouros, C.; Zeng, Y. ISAC From the Sky: UAV Trajectory Design for Joint Communication and Target Localization. IEEE Trans. Wirel. Commun. 2024, 23, 12857–12872.

- Worku, Y.M.; Tshakwanda, P.M.; Tsegaye, H.B.; Sacchi, C.; Christodoulou, C.; Devetsikiotis, M. Deep RL for UAV Energy and Coverage Optimization in 6G-Based IoT Remote Sensing Networks. In Proceedings of the 2025 IEEE Aerospace Conference, Big Sky, MT, USA, 1–8 March 2025.

- Kumar, P.; Priyadarshni; Misra, R. ARDDQN: Attention Recurrent Double Deep Q-Network for UAV Coverage Path Planning and Data Harvesting. arXiv 2024, arXiv:2405.11013.

- Lee, M.H.; Moon, J. Deep reinforcement learning-based model-free path planning and collision avoidance for UAVs: A soft actor–critic with hindsight experience replay approach. ICT Express 2023, 9, 403–408.

- Zeng, Y.; Zhang, R.; Lim, T.J. Wireless communications with unmanned aerial vehicles: Opportunities and challenges. IEEE Commun. Mag. 2016, 54, 36–42.

- Mozaffari, M.; Saad, W.; Bennis, M.; Debbah, M. Mobile Unmanned Aerial Vehicles (UAVs) for Energy-Efficient Internet of Things Communications. IEEE Trans. Wirel. Commun. 2017, 16, 7574–7589.

- Cabreira, T.M.; de Brisolara, L.B.; Ferreira, P.R., Jr. Survey on Coverage Path Planning with Unmanned Aerial Vehicles. Drones 2019, 3, 4.

- Yuan, J.; Liu, Z.; Lian, Y.; Chen, L.; An, Q.; Wang, L.; Ma, B. Global Optimization of UAV Area Coverage Path Planning Based on Good Point Set and Genetic Algorithm. Aerospace 2022, 9, 86.

- Tu, G.T.; Juang, J.G. UAV Path Planning and Obstacle Avoidance Based on Reinforcement Learning in 3D Environments. Actuators 2023, 12, 57.

- Wang, S.; Qi, N.; Jiang, H.; Xiao, M.; Liu, H.; Jia, L.; Zhao, D. Trajectory Planning for UAV-Assisted Data Collection in IoT Network: A Double Deep Q Network Approach. Electronics 2024, 13, 1592.

- Askaripoor, H.; Golpayegani, F. Optimizing UAV Aerial Base Station Flights Using DRL-based Proximal Policy Optimization. arXiv 2024, arXiv:2504.03961.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023, arXiv:1706.03762.

- Li, R.; Wang, C.; Zhao, Z.; Guo, R.; Zhang, H. The LSTM-Based Advantage Actor-Critic Learning for Resource Management in Network Slicing With User Mobility. IEEE Commun. Lett. 2020, 24, 2005–2009.

- Arzo, S.T.; Tshakwanda, P.M.; Worku, Y.M.; Kumar, H.; Devetsikiotis, M. Intelligent QoS agent design for QoS monitoring and provisioning in 6G network. In Proceedings of the ICC 2023-IEEE International Conference on Communications, Rome, Italy, 28 May–1 June 2023; pp. 2364–2369.

- Jaya Sri, V. Revolutionizing 6G Networks: Deep Reinforcement Learning Approaches for Enhanced Performance. Int. J. Res. Publ. Rev. 2024, 5, 3738–3745.

- Lotfi, F. Open RAN LSTM Traffic Prediction and Slice Management using Deep Reinforcement Learning. arXiv 2024, arXiv:2401.06922.

- Zhou, H.; Li, Y.; Wang, X.; Liu, Y.; Wo, T.; Zhang, Q. Self-Refined Generative Foundation Models for Wireless Traffic Prediction. arXiv 2024, arXiv:2408.10390.

- He, Y.; Hu, R.; Liang, K.; Liu, Y.; Zhou, Z. Deep Reinforcement Learning Algorithm with Long Short-Term Memory Network for Optimizing Unmanned Aerial Vehicle Information Transmission. Mathematics 2025, 13, 46.

- Xie, W. Joint Optimization of UAV-Carried IRS for Urban Low Altitude mmWave Communications with Deep Reinforcement Learning. arXiv 2025, arXiv:2501.02787.

- Ma, Z.; Zhang, R.; Ai, B.; Lian, Z.; Zeng, L.; Niyato, D.; Peng, Y. Deep Reinforcement Learning for Energy Efficiency Maximization in RSMA-IRS-Assisted ISAC System. IEEE Trans. Veh. Technol. 2025, 1–6.

- Abeywickrama, H.V.; He, Y.; Dutkiewicz, E.; Jayawickrama, B.A.; Mueck, M. A Reinforcement Learning Approach for Fair User Coverage Using UAV Mounted Base Stations Under Energy Constraints. IEEE Open J. Veh. Technol. 2020, 1, 67–81.

- Morocho-Cayamcela, M.E.; Lim, W.; Maier, M. An optimal location strategy for multiple drone base stations in massive MIMO. ICT Express 2022, 8, 230–234.

- Al-Hourani, A.; Kandeepan, S.; Lardner, S. Optimal LAP Altitude for Maximum Coverage. IEEE Wirel. Commun. Lett. 2014, 3, 569–572.

- Jayaweera, H.M.P.C.; Hanoun, S. Path Planning of Unmanned Aerial Vehicles (UAVs) in Windy Environments. Drones 2022, 6, 101.

- Lyu, J.; Zeng, Y.; Zhang, R. UAV-Aided Offloading for Cellular Hotspot. IEEE Trans. Wirel. Commun. 2018, 17, 3988–4001.

- Abeywickrama, H.V.; Jayawickrama, B.A.; He, Y.; Dutkiewicz, E. Empirical Power Consumption Model for UAVs. In Proceedings of the 2018 IEEE 88th Vehicular Technology Conference (VTC-Fall), Chicago, IL, USA, 27–30 August 2018; pp. 1–5.

- Huang, B.; Gong, H.; Jia, B.; Dai, H. Modelling Power Consumptions for Multi-rotor UAVs. arXiv 2022, arXiv:2209.04128.

- Rodrigues, T.A.; Patrikar, J.; Oliveira, N.L.; Matthews, H.S.; Scherer, S.; Samaras, C. Drone flight data reveal energy and greenhouse gas emissions savings for very small package delivery. Patterns 2022, 3, 100569.

- Falkowski, K.; Duda, M. Dynamic Models Identification for Kinematics and Energy Consumption of Rotary-Wing UAVs during Different Flight States. Sensors 2023, 23, 9378.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).